Analysis for the ATLAS experiment

- Slides: 13

Analysis for the ATLAS experiment

"A person actively involved as a user in the experiment's analysis describes how they carry out the analysis step by step, and explains which problems they ran into, which have been solved and which have not, and the difficulties and strengths of their way of working."

Leonardo Carminati, Università degli Studi and INFN Sezione di Milano

13/05/2009, Workshop CCR e INFN-Grid 2009

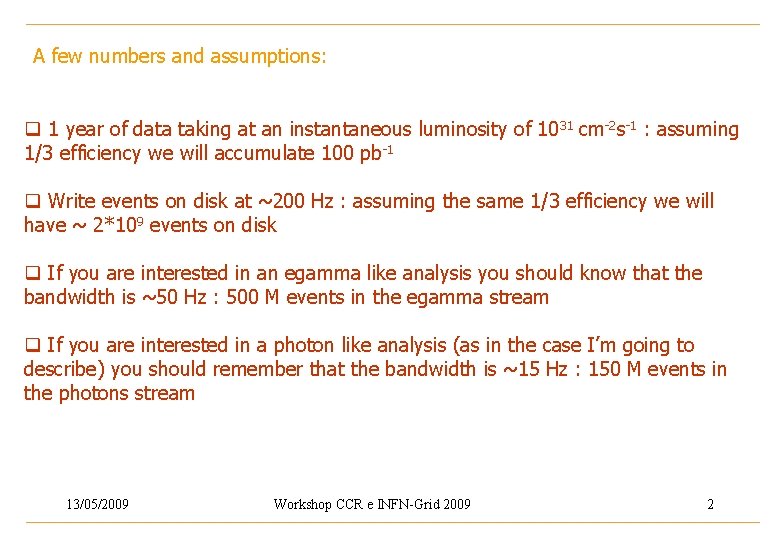

A few numbers and assumptions:

- 1 year of data taking at an instantaneous luminosity of $10^{31}$ cm$^{-2}$ s$^{-1}$: assuming 1/3 efficiency, we will accumulate about 100 pb$^{-1}$.
- Events are written to disk at ~200 Hz: assuming the same 1/3 efficiency, we will have ~$2 \times 10^{9}$ events on disk.
- If you are interested in an egamma-like analysis, you should know that the bandwidth is ~50 Hz: 500 M events in the egamma stream.
- If you are interested in a photon-like analysis (as in the case I am going to describe), you should remember that the bandwidth is ~15 Hz: 150 M events in the photon stream.
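The numbers above can be cross-checked with a few lines of arithmetic. This is only a sketch: it assumes a calendar year of 365 days and the conversion 1 pb$^{-1}$ = $10^{36}$ cm$^{-2}$, and the function names are illustrative.

```python
# Back-of-the-envelope check of the numbers quoted on the slide.
SECONDS_PER_YEAR = 365 * 24 * 3600   # assumption: one calendar year of running
EFFICIENCY = 1 / 3                   # data-taking efficiency (from the slide)
LUMINOSITY = 1e31                    # instantaneous luminosity, cm^-2 s^-1 (from the slide)
CM2_PER_INVERSE_PB = 1e36            # 1 pb^-1 corresponds to 1e36 cm^-2

live_seconds = SECONDS_PER_YEAR * EFFICIENCY


def integrated_luminosity_pb(luminosity, seconds):
    """Integrated luminosity in pb^-1 for a constant instantaneous luminosity."""
    return luminosity * seconds / CM2_PER_INVERSE_PB


def events_on_disk(rate_hz, seconds):
    """Number of events recorded at a constant rate."""
    return rate_hz * seconds


int_lumi = integrated_luminosity_pb(LUMINOSITY, live_seconds)
total_events = events_on_disk(200, live_seconds)    # all streams
egamma_events = events_on_disk(50, live_seconds)    # egamma stream
photon_events = events_on_disk(15, live_seconds)    # photon stream

print(f"integrated luminosity: {int_lumi:.0f} pb^-1")
print(f"events on disk:        {total_events:.2e}")
print(f"egamma stream:         {egamma_events:.2e}")
print(f"photon stream:         {photon_events:.2e}")
```

With these inputs the results land within about 5% of the rounded values quoted on the slide.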

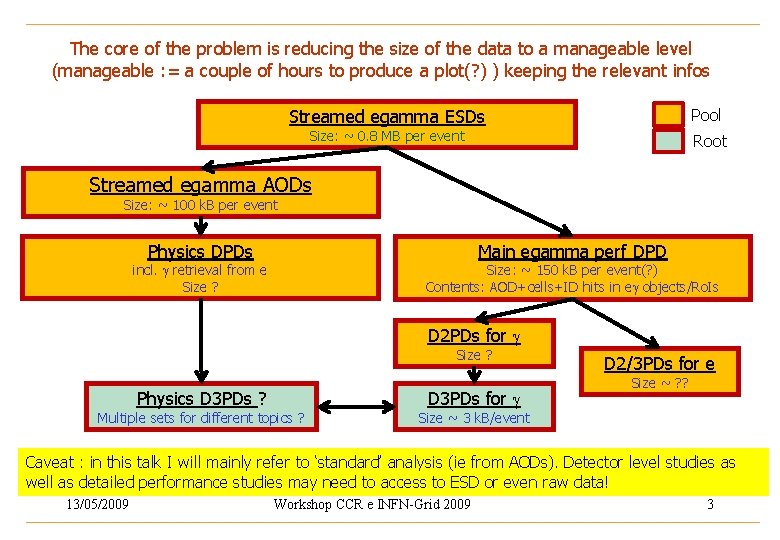

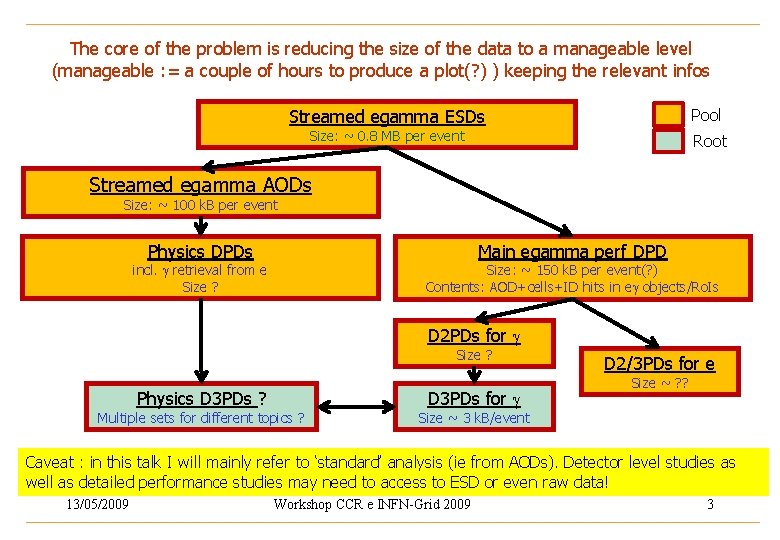

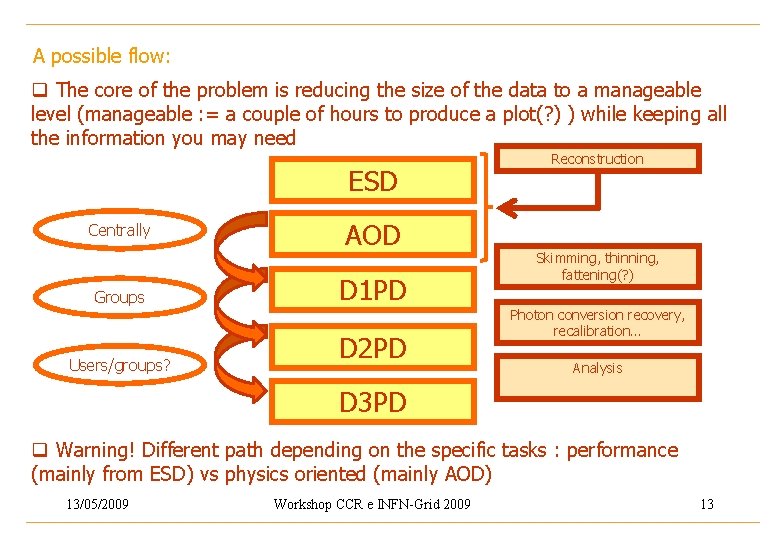

The core of the problem is reducing the size of the data to a manageable level (manageable := a couple of hours to produce a plot(?)) while keeping the relevant information.

Data formats shown in the diagram (POOL and ROOT formats):

- Streamed egamma ESDs. Size: ~0.8 MB per event.
- Streamed egamma AODs. Size: ~100 kB per event.
- Physics DPDs. Size: ?
- Main egamma performance DPD, including γ retrieval from e. Size: ~150 kB per event(?). Contents: AOD + cells + ID hits in eg objects/RoIs.
- D2PDs for γ. Size: ?
- Physics D3PDs (?)
- D3PDs for γ. Size: ~3 kB/event. Multiple sets for different topics?
- D2/3PDs for e. Size: ~??

Caveat: in this talk I will mainly refer to "standard" analysis (i.e. from AODs). Detector-level studies, as well as detailed performance studies, may need access to ESDs or even raw data!
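To get a feeling for what "manageable" means, the per-event sizes above can be turned into yearly volumes. The sketch below assumes the 500 M egamma-stream events quoted on the earlier slide and decimal units (1 kB = $10^{3}$ bytes); the dictionary keys are just labels.

```python
# Rough yearly data volume for the egamma stream, per data format.
EGAMMA_EVENTS = 500e6  # events per year in the egamma stream (earlier slide)

# Per-event sizes in bytes, as quoted on the slide (decimal units assumed).
size_per_event = {
    "ESD": 0.8e6,       # ~0.8 MB per event
    "AOD": 100e3,       # ~100 kB per event
    "perf DPD": 150e3,  # ~150 kB per event(?)
}


def volume_tb(bytes_per_event, n_events):
    """Total volume in terabytes (1 TB = 1e12 bytes)."""
    return bytes_per_event * n_events / 1e12


volumes_tb = {name: volume_tb(size, EGAMMA_EVENTS)
              for name, size in size_per_event.items()}

for name, volume in volumes_tb.items():
    print(f"{name:>8}: {volume:6.0f} TB per year")
```

Under these assumptions the ESDs are several hundred TB per year and the AODs several tens of TB, which is why the final analysis step works on much smaller ntuples.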

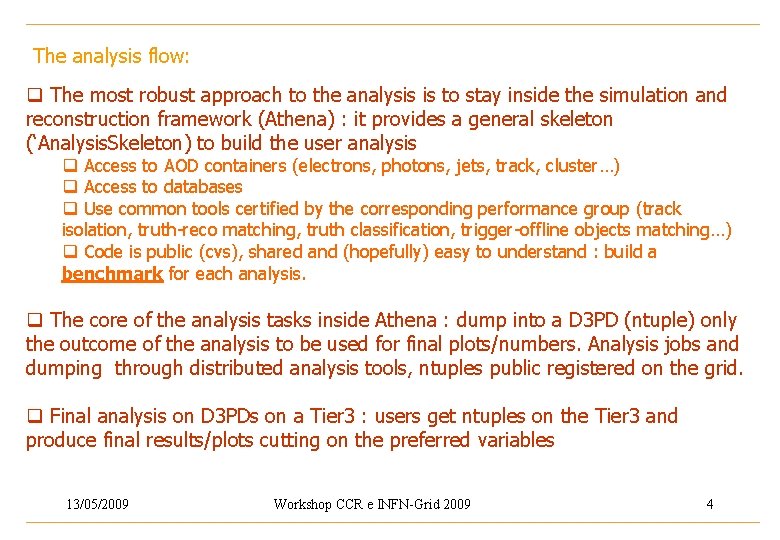

The analysis flow:

- The most robust approach to the analysis is to stay inside the simulation and reconstruction framework (Athena): it provides a general skeleton (AnalysisSkeleton) on which to build the user analysis.
- Access to AOD containers (electrons, photons, jets, tracks, clusters…).
- Access to databases.
- Use of common tools certified by the corresponding performance group (track isolation, truth-reco matching, truth classification, trigger-offline object matching…).
- Code is public (CVS), shared and (hopefully) easy to understand: build a benchmark for each analysis.
- The core of the analysis tasks runs inside Athena: dump into a D3PD (ntuple) only the outcome of the analysis, to be used for final plots and numbers. Analysis jobs and dumping run through distributed analysis tools, and the ntuples are publicly registered on the grid.
- Final analysis on D3PDs at a Tier 3: users fetch the ntuples to the Tier 3 and produce final results and plots by cutting on their preferred variables.

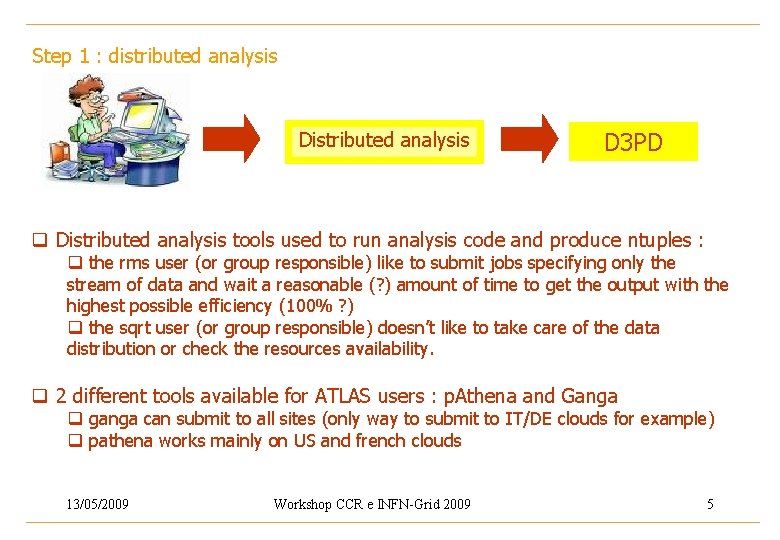

Step 1: distributed analysis (D3PD)

- Distributed analysis tools are used to run the analysis code and produce ntuples:
  - The "rms" user (or group responsible) would like to submit jobs specifying only the data stream, then wait a reasonable(?) amount of time to get the output with the highest possible efficiency (100%?).
  - The "sqrt" user (or group responsible) does not want to take care of the data distribution or check the availability of resources.
- Two different tools are available to ATLAS users: pAthena and Ganga.
  - Ganga can submit to all sites (for example, it is the only way to submit to the IT/DE clouds).
  - pAthena works mainly on the US and French clouds.

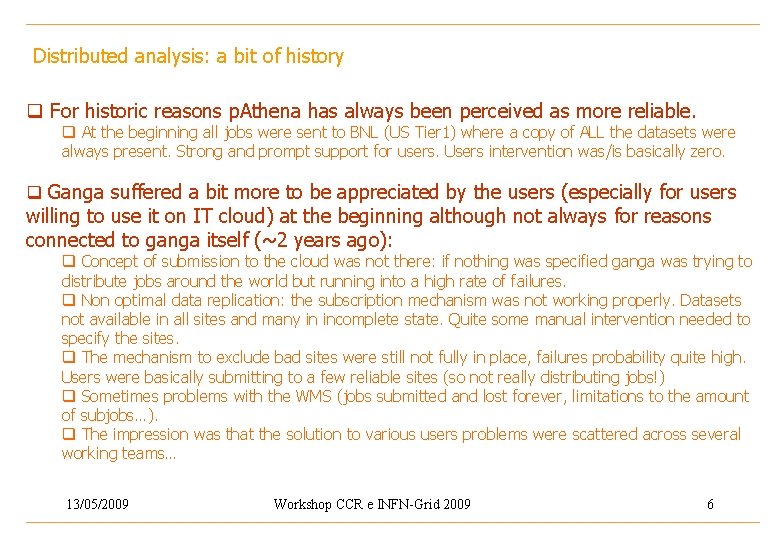

Distributed analysis: a bit of history

- For historical reasons, pAthena has always been perceived as more reliable.
  - At the beginning all jobs were sent to BNL (US Tier 1), where a copy of ALL the datasets was always present. Strong and prompt support for users. User intervention was, and still is, basically zero.
- At the beginning (~2 years ago) Ganga had a harder time winning over users, especially those wanting to use it on the IT cloud, although not always for reasons connected to Ganga itself:
  - The concept of submission to a cloud was not there: if nothing was specified, Ganga tried to distribute jobs around the world and ran into a high failure rate.
  - Non-optimal data replication: the subscription mechanism was not working properly. Datasets were not available at all sites and many were in an incomplete state. Quite a lot of manual intervention was needed to specify the sites.
  - The mechanism to exclude bad sites was not yet fully in place, so the failure probability was quite high. Users were basically submitting to a few reliable sites (so not really distributing jobs!).
  - Occasional problems with the WMS (jobs submitted and lost forever, limits on the number of subjobs…).
  - The impression was that the solutions to the various user problems were scattered across several working teams…

Distributed analysis: some recent tests

- Some more recent tests (last week): three rounds of tests on a 2.5 M event dijet sample. Analysis jobs were sent through pAthena and Ganga (IT cloud):
  - A replica of the datasets was correctly found, spread over the IT cloud (see slide 9).
  - Jobs were correctly distributed to Napoli, Milano and CNAF (no replica at Roma 1 or Frascati in this specific case).
  - Execution times and failure rates were very similar between Ganga and pAthena (~10% failures, mainly due to ATLAS software, to be investigated): a few hours to run the analysis jobs on the 2.5 M event sample.

Distributed analysis: a bit more on the IT cloud

- One of the reasons for such good performance on the IT cloud is the high number of jobs running at the same time at CNAF (up to 150), although in principle CNAF is not supposed to be an analysis site.
- Ensure the correct replication of AODs to the Italian Tier 2s.
- Napoli also performed well: up to 40 jobs running at the same time.
- Heavy load on the Milano Tier 2 due to a special MC production.
- What will happen to users when heavy MC production is running on our Tier 2?
- Being able to tune the priorities carefully, in order to optimize the sharing of resources, will be a key issue.
- Will the number of CPUs and the disk space in our Tier 2 be enough when users start running analysis jobs heavily?

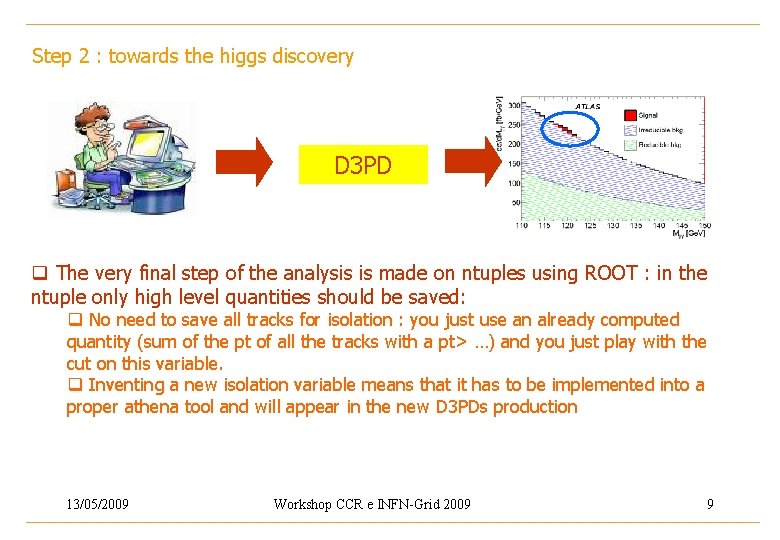

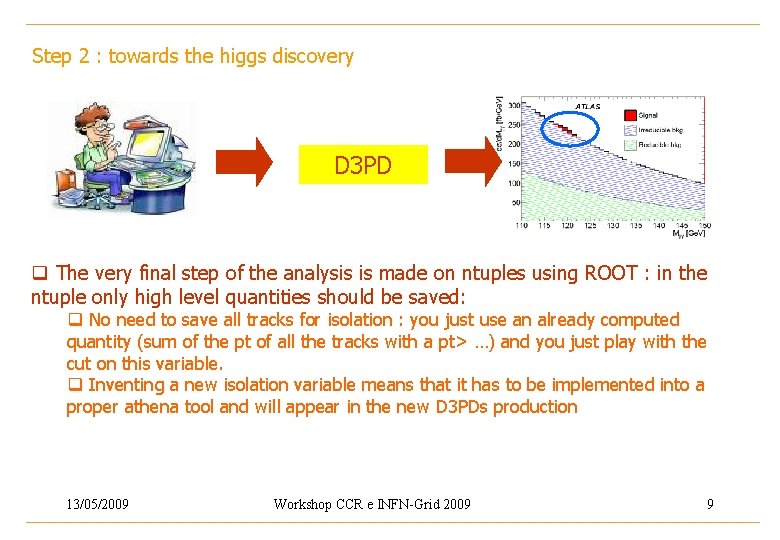

Step 2: towards the Higgs discovery (D3PD)

- The very last step of the analysis is done on ntuples using ROOT. Only high-level quantities should be saved in the ntuple:
  - There is no need to save all tracks for isolation: you use an already computed quantity (the sum of the pt of all the tracks with pt > …) and simply play with the cut on this variable.
  - Inventing a new isolation variable means that it has to be implemented in a proper Athena tool, and it will then appear in the next D3PD production.
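The idea of "playing with the cut" on a precomputed variable can be illustrated without ROOT. This is a minimal sketch in plain Python: the rows stand in for ntuple entries, and the branch names `photon_pt` and `track_iso_sumpt`, the values, and the cut thresholds are all hypothetical.

```python
# Hypothetical D3PD rows: one dict per photon candidate. The branch names
# and values are illustrative only, not real D3PD content.
candidates = [
    {"photon_pt": 42.0, "track_iso_sumpt": 0.7},
    {"photon_pt": 35.5, "track_iso_sumpt": 2.4},
    {"photon_pt": 58.1, "track_iso_sumpt": 4.1},
    {"photon_pt": 27.3, "track_iso_sumpt": 6.8},
]


def select_isolated(rows, max_sum_pt):
    """Keep candidates whose precomputed track isolation is below the cut (GeV)."""
    return [row for row in rows if row["track_iso_sumpt"] < max_sum_pt]


def efficiency(rows, max_sum_pt):
    """Fraction of candidates passing the isolation cut."""
    return len(select_isolated(rows, max_sum_pt)) / len(rows)


# Varying the cut only needs the stored sum, never the individual tracks.
for cut in (2.0, 3.0, 5.0):
    print(f"Sumpt < {cut} GeV: efficiency = {efficiency(candidates, cut):.2f}")
```

Because only the summed quantity is stored, the cut value can be changed freely at this stage, but a different cone size or track selection would require a new D3PD production.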

D3PD and Tier 3

- Do we really need a Tier 3? If yes, what is it? A few assumptions:
  - The current photon D3PD size is 3 kB/event: about 300 GB in one year, which is affordable. Note, however, that specific performance studies could need a factor of 10 more.
  - Running my analysis macros on the largest sample we have (10 M dijet events) takes about 2 hours on my laptop.
  - Scaling to the number of events in the photon stream for the first year of data taking, that means ~30 hours to produce a plot.
  - But this analysis will be repeated several times: for example, I would like to see how the result changes when varying the cut on the track isolation (Sumpt < 2, 3, 5 GeV…).
  - Clearly 30 hours for each Sumpt cut (times all the other combinations of selection cuts) is not affordable: PROOF?
  - Assuming perfect scaling on a standard commercial 16-core machine -> ~2 hours: excellent!

For my specific case, a Tier 3 could be a multi-core machine with a moderate amount of disk space.
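The timing estimate above is a simple linear scaling, sketched below. It assumes that processing time grows linearly with the number of events and, as the slide does, that it divides perfectly by the number of cores; the function name is illustrative.

```python
# Linear scaling of the laptop benchmark to the first-year photon stream.
REF_EVENTS = 10e6      # largest available sample: 10 M dijet events
REF_HOURS = 2.0        # time to process it on a laptop
PHOTON_EVENTS = 150e6  # photon stream, first year of data taking
N_CORES = 16           # standard commercial multi-core machine
SUMPT_CUTS = (2, 3, 5) # GeV, the isolation cuts to be scanned


def scaled_hours(ref_hours, ref_events, target_events, n_cores=1):
    """Processing time assuming linear scaling in events and perfect
    scaling in cores (an idealization)."""
    return ref_hours * target_events / ref_events / n_cores


single_core_hours = scaled_hours(REF_HOURS, REF_EVENTS, PHOTON_EVENTS)
proof_hours = scaled_hours(REF_HOURS, REF_EVENTS, PHOTON_EVENTS, N_CORES)

print(f"one pass, single core: {single_core_hours:.1f} h")
print(f"one pass, {N_CORES} cores:     {proof_hours:.2f} h")
print(f"scan of {len(SUMPT_CUTS)} cuts, single core: "
      f"{single_core_hours * len(SUMPT_CUTS):.0f} h")
```

Real PROOF scaling is unlikely to be perfect (I/O and merging add overhead), so the 16-core figure should be read as a lower bound.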

Conclusions and outlook

- A two-stage analysis model (AOD -> D3PD through distributed analysis tools, and D3PD -> plots on a Tier 3) seems to be the most robust:
  - Fully supported by the ATLAS computing/software experts.
  - Code is public and easy to understand. Use of common and certified tools.
  - Produces small and compact ntuples for the final analysis.
- Step 1 through distributed analysis tools: Ganga and the IT cloud are becoming more and more reliable.
  - Ensure the availability of ALL the data in the cloud (backup solutions?).
  - Optimize the priorities among users and with respect to central production (MC).
  - Fast feedback to users when problems occur.
- Step 2 at the Tier 3: multi-core machines with PROOF could be a promising solution. Disk space is probably not an issue (but this may vary depending on the specific task).

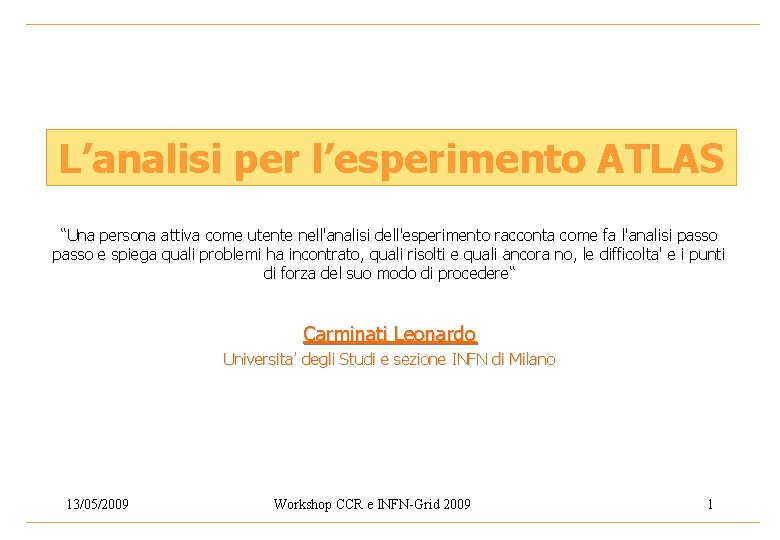

Towards a complete photon-like analysis: the Athena side

- These tasks are better performed inside the framework, because common dedicated tools are already available:
  - Event selection: identify photon candidates, apply kinematic criteria. Selectors for exclusive analyses. All these tasks go into Athena tools, so there is no misunderstanding about cuts and definitions.
  - Compute the relevant quantities for the analysis (track isolation, $m_{\gamma\gamma}$, $m_{\gamma\gamma j}$ for exclusive analyses…).
  - Also truth matching and truth classification (on Monte Carlo).
  - Trigger to offline object matching.
  - Access to efficiency/purity databases as well as Data Quality information.
- Dump into the D3PD (ntuple) only the outcome of the analysis:
  - Flags that tell you whether the event passed the selection criteria.
  - The sum of the pt of your preferred tracks inside a cone (not all the tracks!).
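As an illustration of "dump only the outcome", the sketch below computes a track isolation sum and a selection flag for one photon candidate and keeps only those two numbers. It is plain Python rather than an Athena tool, and the cone size, pt thresholds, eta range and field names are hypothetical placeholders, not the certified ATLAS definitions.

```python
import math


def delta_phi(phi1, phi2):
    """Azimuthal difference wrapped into (-pi, pi]."""
    dphi = phi1 - phi2
    while dphi > math.pi:
        dphi -= 2 * math.pi
    while dphi <= -math.pi:
        dphi += 2 * math.pi
    return dphi


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance in the (eta, phi) plane."""
    return math.hypot(eta1 - eta2, delta_phi(phi1, phi2))


def track_isolation(photon, tracks, cone=0.3, min_track_pt=1.0):
    """Sum of the pt (GeV) of tracks above threshold inside the cone.
    Cone size and threshold are illustrative assumptions."""
    return sum(
        t["pt"] for t in tracks
        if t["pt"] > min_track_pt
        and delta_r(photon["eta"], photon["phi"], t["eta"], t["phi"]) < cone
    )


def summarize(photon, tracks, min_photon_pt=25.0, max_abs_eta=2.37):
    """Outcome to be written to the ntuple: a flag and one number per candidate."""
    return {
        "pass_kinematics": photon["pt"] > min_photon_pt
        and abs(photon["eta"]) < max_abs_eta,
        "track_iso_sumpt": track_isolation(photon, tracks),
    }


photon = {"pt": 40.0, "eta": 0.5, "phi": 3.0}
tracks = [
    {"pt": 2.0, "eta": 0.55, "phi": -3.1},  # close in phi across the +-pi boundary
    {"pt": 0.5, "eta": 0.50, "phi": 3.05},  # inside the cone, below pt threshold
    {"pt": 10.0, "eta": 1.50, "phi": 3.0},  # outside the cone
    {"pt": 1.5, "eta": 0.40, "phi": 2.9},   # inside the cone
]
summary = summarize(photon, tracks)
print(summary)
```

Only `summary` would go to the ntuple; the track list itself stays in the AOD.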

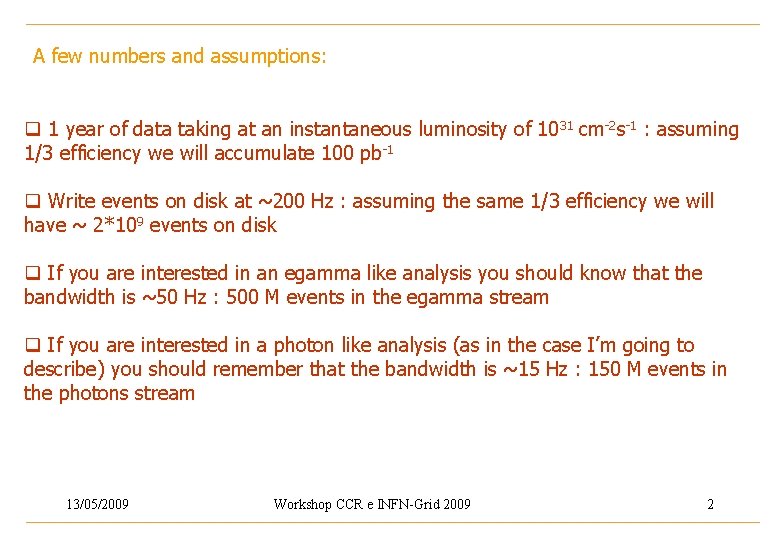

A possible flow:

- The core of the problem is reducing the size of the data to a manageable level (manageable := a couple of hours to produce a plot(?)) while keeping all the information you may need.
- Chain shown in the diagram: ESD -> AOD -> D1PD -> D2PD -> D3PD.
- Steps along the chain: reconstruction; skimming, thinning, fattening(?); photon conversion recovery, recalibration…; analysis.
- Who runs each stage: centrally, groups, users/groups(?).
- Warning! The path differs depending on the specific task: performance studies (mainly from ESD) vs. physics-oriented analysis (mainly from AOD).
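The reduction steps named in the chain can be sketched on toy events. This assumes the usual reading of the terms: skimming drops whole events, thinning drops objects inside an event, and "fattening" (marked with a question mark on the slide) is taken here to mean adding derived quantities. The event layout, the helper names and the 25 GeV threshold are hypothetical.

```python
def skim(events, keep_event):
    """Skimming: keep only the events that satisfy a selection."""
    return [event for event in events if keep_event(event)]


def thin(event, keep_photon):
    """Thinning: keep the event but drop the objects that fail a selection."""
    thinned = dict(event)
    thinned["photons"] = [p for p in event["photons"] if keep_photon(p)]
    return thinned


def fatten(event):
    """'Fattening' (assumed meaning): attach a derived quantity to the event."""
    fattened = dict(event)
    fattened["n_photons"] = len(event["photons"])
    return fattened


def has_two_hard_photons(event, min_pt=25.0):
    """Toy event selection: at least two photons above the pt threshold."""
    return sum(1 for p in event["photons"] if p["pt"] > min_pt) >= 2


events = [
    {"event_number": 1, "photons": [{"pt": 45.0}, {"pt": 12.0}, {"pt": 31.0}]},
    {"event_number": 2, "photons": [{"pt": 8.0}]},
    {"event_number": 3, "photons": [{"pt": 60.0}, {"pt": 28.0}]},
]

derived = [
    fatten(thin(event, lambda p: p["pt"] > 25.0))
    for event in skim(events, has_two_hard_photons)
]

for event in derived:
    print(event["event_number"], event["n_photons"], event["photons"])
```

Each step shrinks or enriches the sample independently, which is what lets different groups own different stages of the chain.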

Identifica cual es el pronombre personal

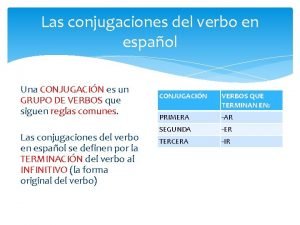

Identifica cual es el pronombre personal Las conjugaciones

Las conjugaciones Primera persona segunda y tercera

Primera persona segunda y tercera Tercera persona narrador

Tercera persona narrador Come rico come sano come pescado

Come rico come sano come pescado Descripcion de una persona ejemplos

Descripcion de una persona ejemplos Un proyecto de vida cristiana

Un proyecto de vida cristiana Teche per insetti

Teche per insetti Perifrastica passiva

Perifrastica passiva Perifrastiche latino

Perifrastiche latino Ricerca attiva del lavoro slide

Ricerca attiva del lavoro slide Variazione numeraria attiva

Variazione numeraria attiva Cos è il volontariato

Cos è il volontariato Quali sono i verbi servili

Quali sono i verbi servili