Judgment and Decision Making in Information Systems Confidence

- Slides: 25

Judgment and Decision Making in Information Systems: Confidence, Forecasting, Knowledge, and Calibration

Yuval Shahar, M.D., Ph.D.

Forecasting

• Experts often try to forecast an event
• Forecasting does not need to be absolute; it often includes a certainty measure (e.g., 80% confidence)
• Over time and multiple forecasts, it is possible to evaluate not only absolute (100%) forecasts but also probabilistic ones

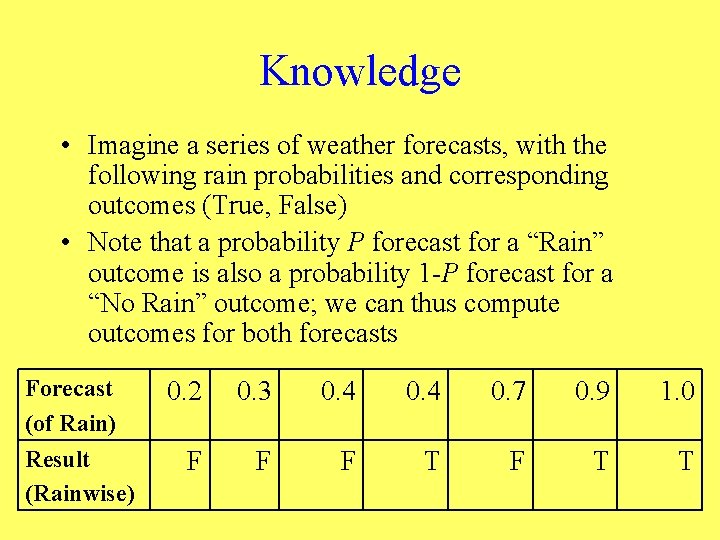

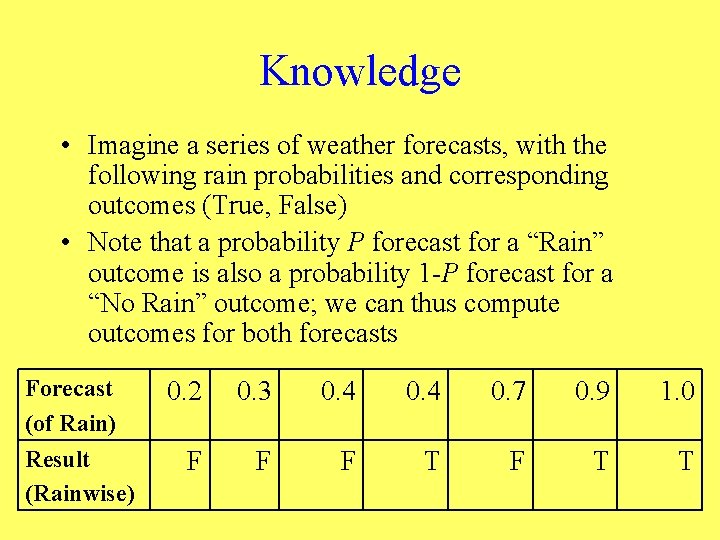

Knowledge

• Imagine a series of weather forecasts, with the following rain probabilities and corresponding outcomes (True, False)
• Note that a probability P forecast for a “Rain” outcome is also a probability 1 − P forecast for a “No Rain” outcome; we can thus compute outcomes for both forecasts

Forecast (of Rain): 0.2, 0.3, 0.4, 0.7, 0.9, 1.0

Result (Rainwise): F, F, F, T, T

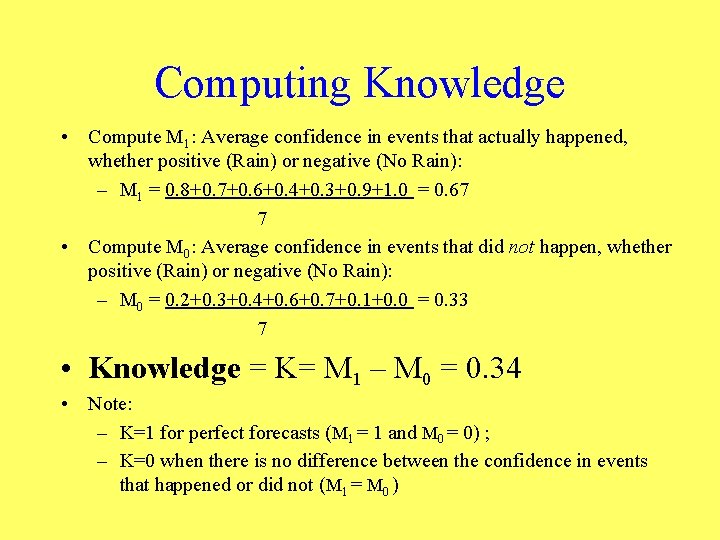

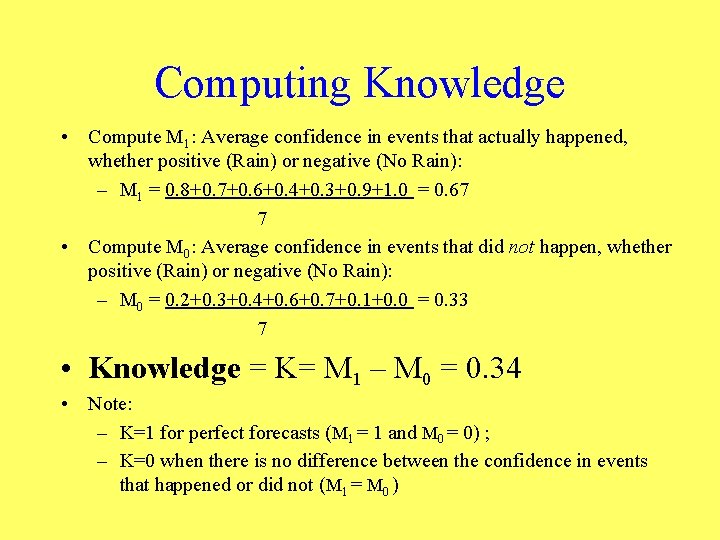

Computing Knowledge

• Compute M1: average confidence in events that actually happened, whether positive (Rain) or negative (No Rain):
  – M1 = (0.8 + 0.7 + 0.6 + 0.4 + 0.3 + 0.9 + 1.0) / 7 = 0.67
• Compute M0: average confidence in events that did not happen, whether positive (Rain) or negative (No Rain):
  – M0 = (0.2 + 0.3 + 0.4 + 0.6 + 0.7 + 0.1 + 0.0) / 7 = 0.33
• Knowledge = K = M1 − M0 = 0.34
• Note:
  – K = 1 for perfect forecasts (M1 = 1 and M0 = 0);
  – K = 0 when there is no difference between the confidence in events that happened and in events that did not (M1 = M0)
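The M1, M0, and K arithmetic on this slide can be reproduced with a few lines of Python. This is only a minimal sketch: the function name `knowledge_score` is a hypothetical helper, and the input list is simply the seven M1 terms quoted on the slide (the confidence assigned to whichever outcome actually occurred).

```python
def knowledge_score(confidence_in_what_happened):
    """Return (M1, M0, K) for a list of confidences in the outcomes that occurred.

    Each entry is the probability the forecaster gave to the outcome that
    actually happened (Rain or No Rain); 1 - entry is therefore the
    probability given to the outcome that did not happen.
    """
    n = len(confidence_in_what_happened)
    m1 = sum(confidence_in_what_happened) / n
    m0 = sum(1.0 - c for c in confidence_in_what_happened) / n
    return m1, m0, m1 - m0


# The seven M1 terms listed on the slide.
slide_confidences = [0.8, 0.7, 0.6, 0.4, 0.3, 0.9, 1.0]

m1, m0, k = knowledge_score(slide_confidences)
print(f"M1 = {m1:.2f}, M0 = {m0:.2f}, K = {k:.2f}")
# prints: M1 = 0.67, M0 = 0.33, K = 0.34
```

Because M0 is always 1 − M1 under this definition, K can equivalently be written as 2·M1 − 1.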

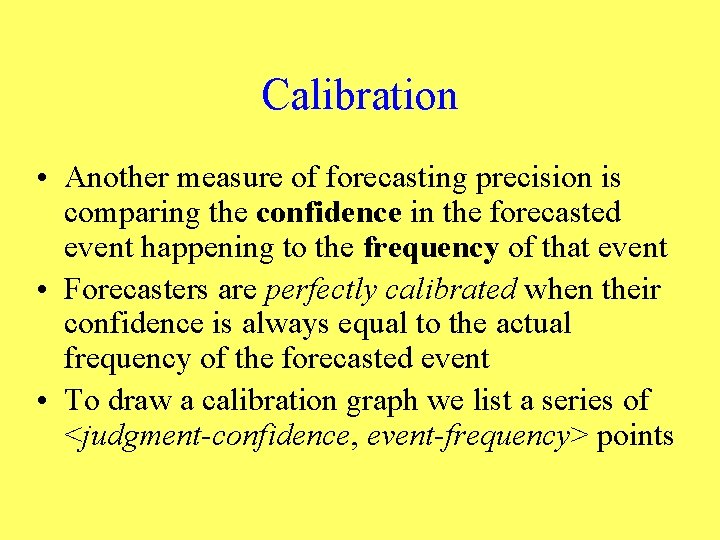

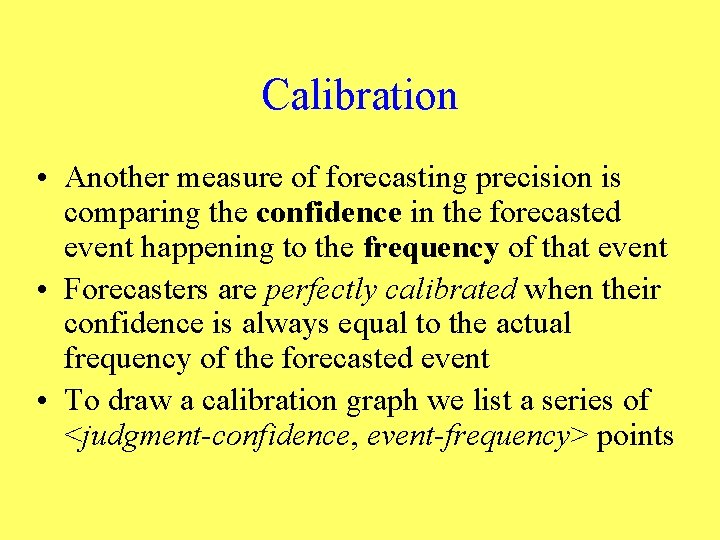

Calibration

• Another measure of forecasting precision is comparing the confidence in the forecasted event happening to the frequency of that event
• Forecasters are perfectly calibrated when their confidence is always equal to the actual frequency of the forecasted event
• To draw a calibration graph we list a series of <judgment-confidence, event-frequency> points
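One possible way to build the <judgment-confidence, event-frequency> points is to group forecasts by their stated confidence and count how often the event occurred in each group. The sketch below assumes exactly that grouping; the function name `calibration_points` and the example data are illustrative and do not come from the slides.

```python
from collections import defaultdict


def calibration_points(judgments, outcomes):
    """Group forecasts by stated confidence and return sorted
    (judgment confidence, observed event frequency) pairs."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for confidence, happened in zip(judgments, outcomes):
        totals[confidence] += 1
        hits[confidence] += int(happened)
    return [(c, hits[c] / totals[c]) for c in sorted(totals)]


# Illustrative data only (not from the slides): two forecasts at 50%
# confidence and five at 80% confidence.
example_judgments = [0.5, 0.5, 0.8, 0.8, 0.8, 0.8, 0.8]
example_outcomes = [True, False, True, True, True, True, False]

points = calibration_points(example_judgments, example_outcomes)
print(points)  # [(0.5, 0.5), (0.8, 0.8)]
```

In this made-up example every point lies on the diagonal, which is what perfect calibration looks like on the graph.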

A Calibration Graph

[Figure: calibration graph. x-axis: Judgment Probability (0 to 1); y-axis: Success Frequency (0 to 1)]

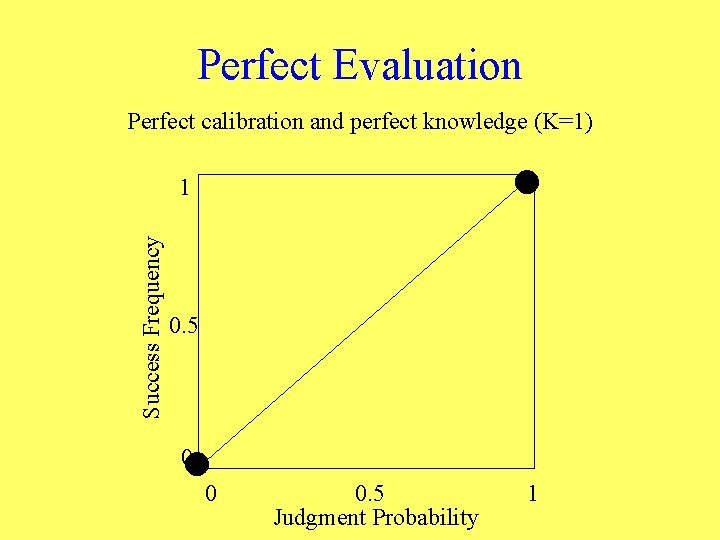

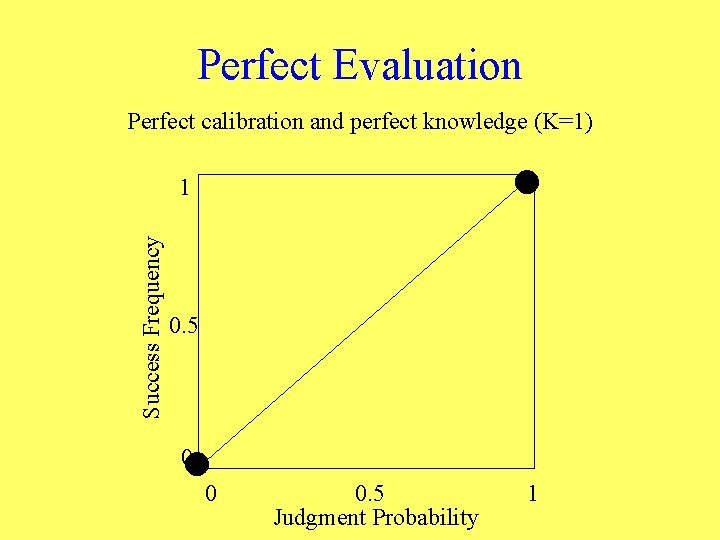

Perfect Evaluation

Perfect calibration and perfect knowledge (K=1)

[Figure: calibration graph. x-axis: Judgment Probability (0 to 1); y-axis: Success Frequency (0 to 1)]

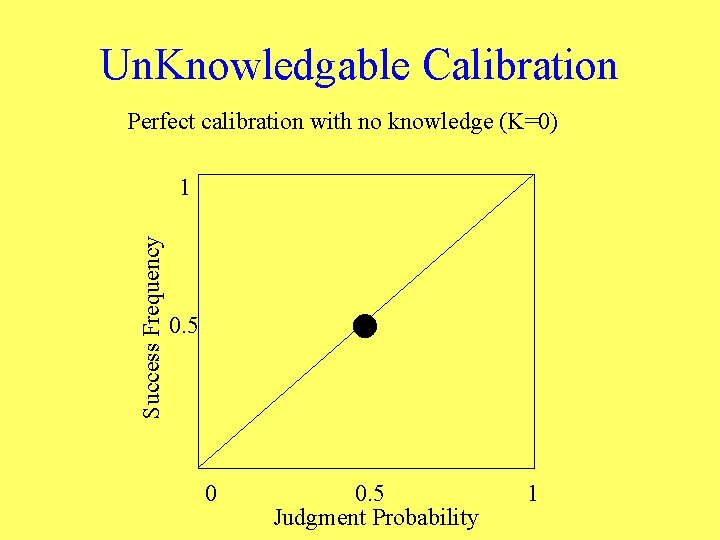

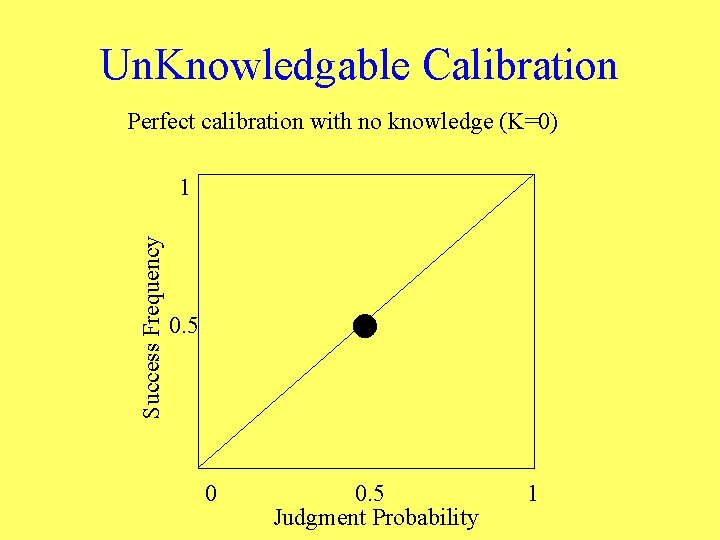

Unknowledgeable Calibration

Perfect calibration with no knowledge (K=0)

[Figure: calibration graph. x-axis: Judgment Probability (0 to 1); y-axis: Success Frequency (0 to 1)]

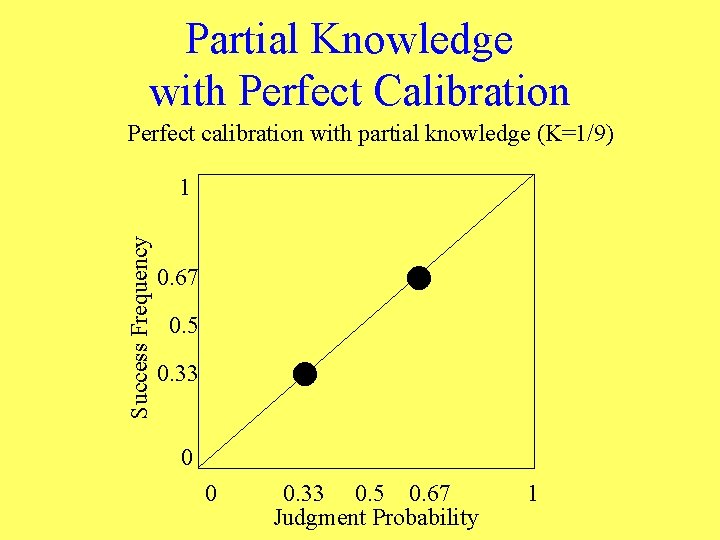

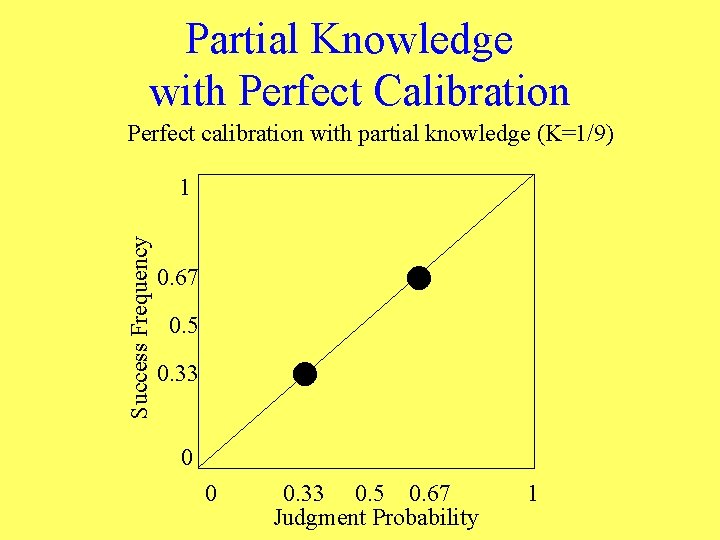

Partial Knowledge with Perfect Calibration

Perfect calibration with partial knowledge (K=1/9)

[Figure: calibration graph. x-axis: Judgment Probability (ticks at 0, 0.33, 0.5, 0.67, 1); y-axis: Success Frequency (ticks at 0, 0.33, 0.5, 0.67, 1)]

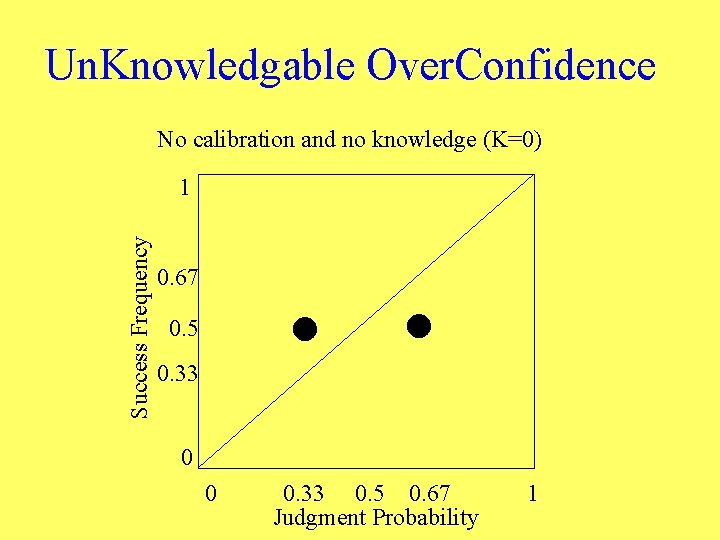

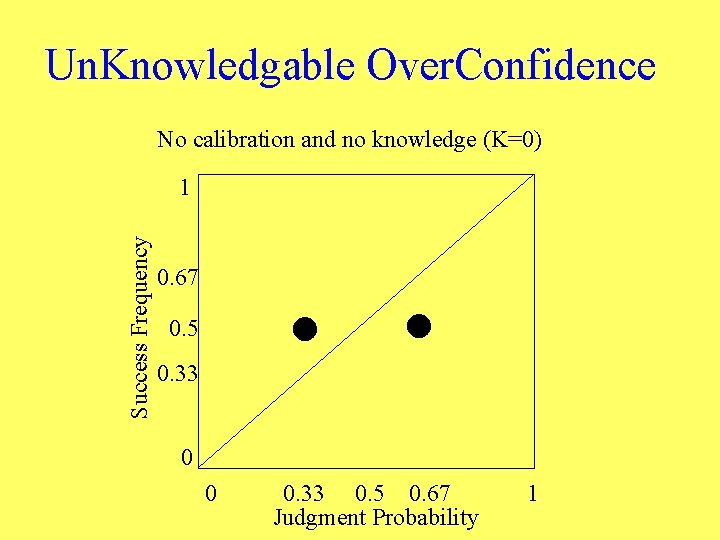

Unknowledgeable Overconfidence

No calibration and no knowledge (K=0)

[Figure: calibration graph. x-axis: Judgment Probability (ticks at 0, 0.33, 0.5, 0.67, 1); y-axis: Success Frequency (ticks at 0, 0.33, 0.5, 0.67, 1)]

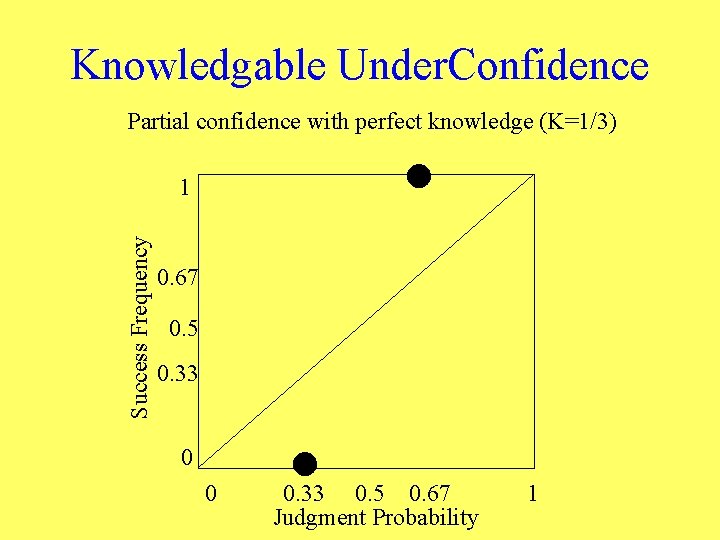

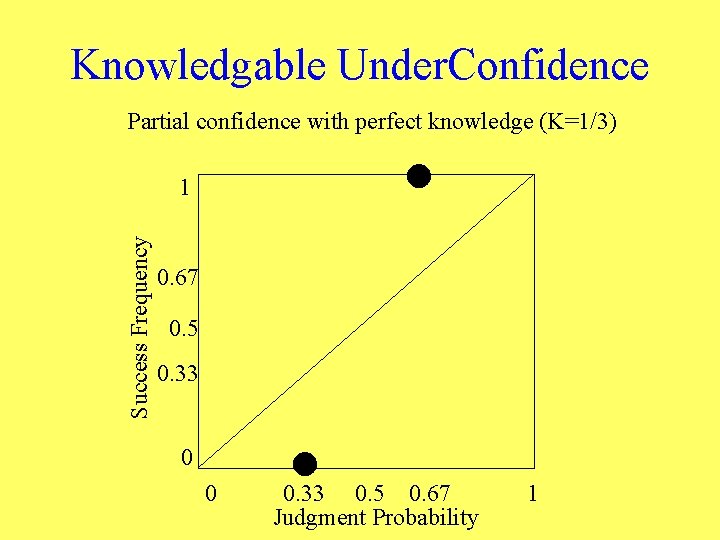

Knowledgeable Underconfidence

Partial confidence with perfect knowledge (K=1/3)

[Figure: calibration graph. x-axis: Judgment Probability (ticks at 0, 0.33, 0.5, 0.67, 1); y-axis: Success Frequency (ticks at 0, 0.33, 0.5, 0.67, 1)]
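The K values quoted for the five calibration graphs can be checked with a short computation. This is a sketch under stated assumptions: the plotted points themselves are not recoverable from the slides, so the point sets below are guesses based on the axis ticks (judgments of 1/3 and 2/3, each used equally often), and `knowledge_from_points` is a hypothetical helper. Each point is (judgment probability, success frequency, share of forecasts).

```python
from fractions import Fraction as F


def knowledge_from_points(points):
    """Compute K = M1 - M0 from (judgment, frequency, share) triples.

    For a group judged at probability p whose event occurs with frequency f,
    the confidence in what happened is p when the event occurs and 1 - p
    when it does not.
    """
    m1 = sum(w * (f * p + (1 - f) * (1 - p)) for p, f, w in points)
    m0 = sum(w * (f * (1 - p) + (1 - f) * p) for p, f, w in points)
    return m1 - m0


half = F(1, 2)

# Assumed point sets (illustrative guesses, not read off the original figures).
perfect = [(F(0), F(0), half), (F(1), F(1), half)]
unknowledgeable_calibrated = [(half, half, F(1))]
partial_calibrated = [(F(1, 3), F(1, 3), half), (F(2, 3), F(2, 3), half)]
unknowledgeable_overconfident = [(F(1, 3), half, half), (F(2, 3), half, half)]
knowledgeable_underconfident = [(F(1, 3), F(0), half), (F(2, 3), F(1), half)]

print(knowledge_from_points(perfect))                        # 1
print(knowledge_from_points(unknowledgeable_calibrated))     # 0
print(knowledge_from_points(partial_calibrated))             # 1/9
print(knowledge_from_points(unknowledgeable_overconfident))  # 0
print(knowledge_from_points(knowledgeable_underconfident))   # 1/3
```

Under these assumed points the results agree with the captions: calibration and knowledge are separate properties, so a forecaster can be perfectly calibrated with K anywhere between 0 and 1.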

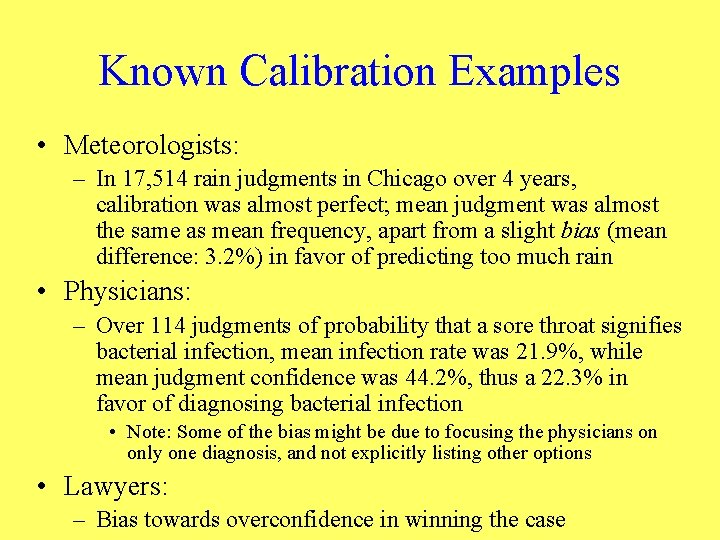

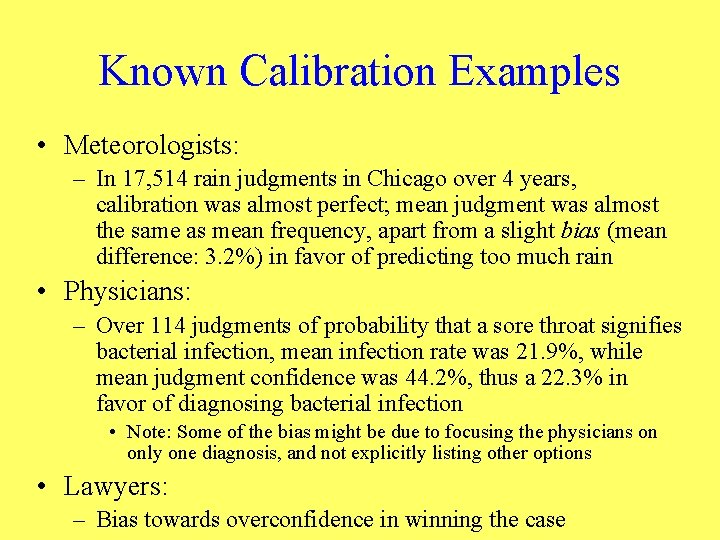

Known Calibration Examples

• Meteorologists:
  – In 17,514 rain judgments in Chicago over 4 years, calibration was almost perfect; mean judgment was almost the same as mean frequency, apart from a slight bias (mean difference: 3.2%) in favor of predicting too much rain
• Physicians:
  – Over 114 judgments of the probability that a sore throat signifies bacterial infection, mean infection rate was 21.9%, while mean judgment confidence was 44.2%, thus a bias of 22.3 percentage points in favor of diagnosing bacterial infection
  – Note: Some of the bias might be due to focusing the physicians on only one diagnosis, and not explicitly listing other options
• Lawyers:
  – Bias towards overconfidence in winning the case

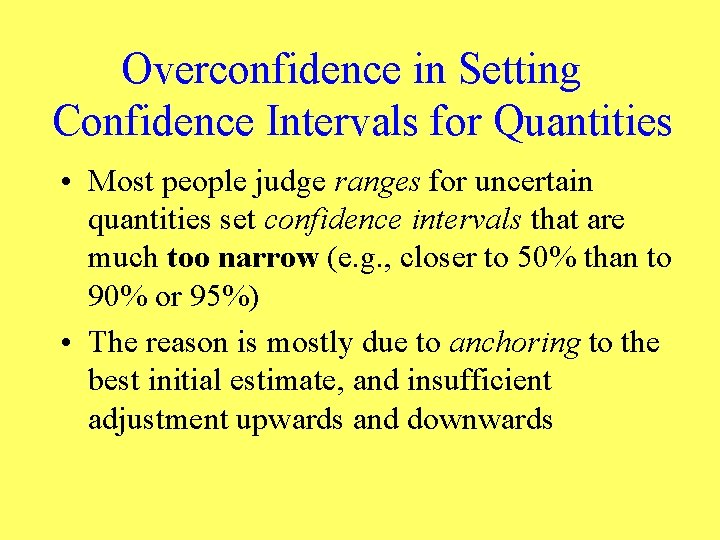

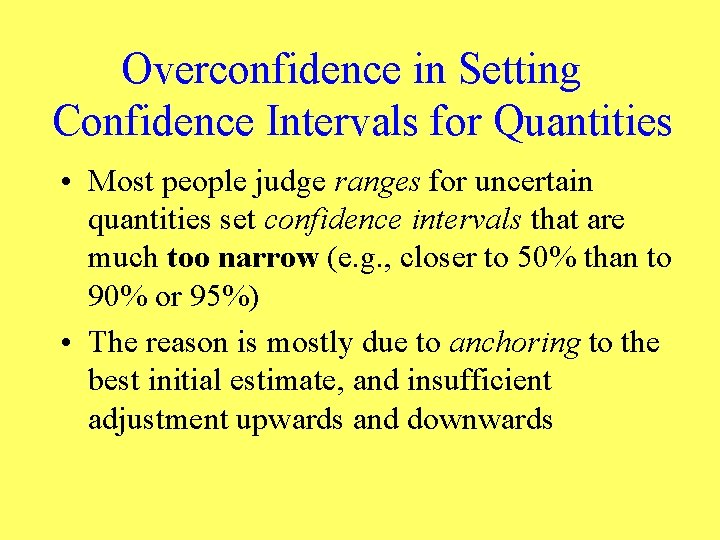

Overconfidence in Setting Confidence Intervals for Quantities

• Most people who judge ranges for uncertain quantities set confidence intervals that are much too narrow (e.g., the intervals behave closer to 50% intervals than to the intended 90% or 95%)
• The reason is mostly anchoring to the best initial estimate, followed by insufficient adjustment upwards and downwards

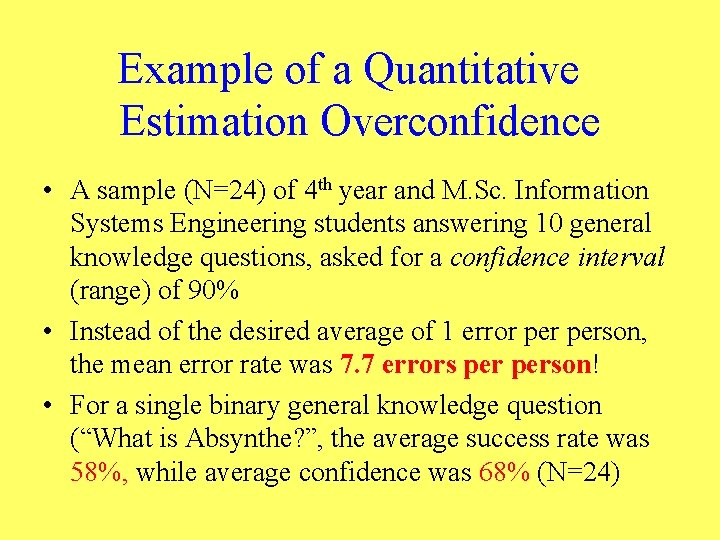

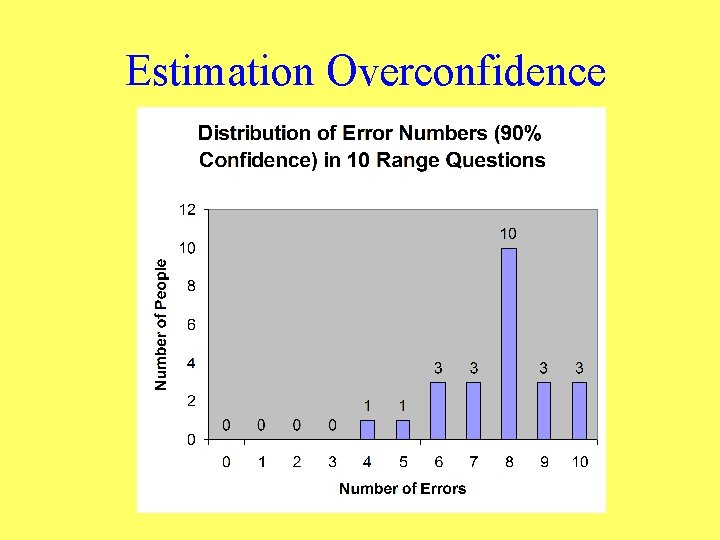

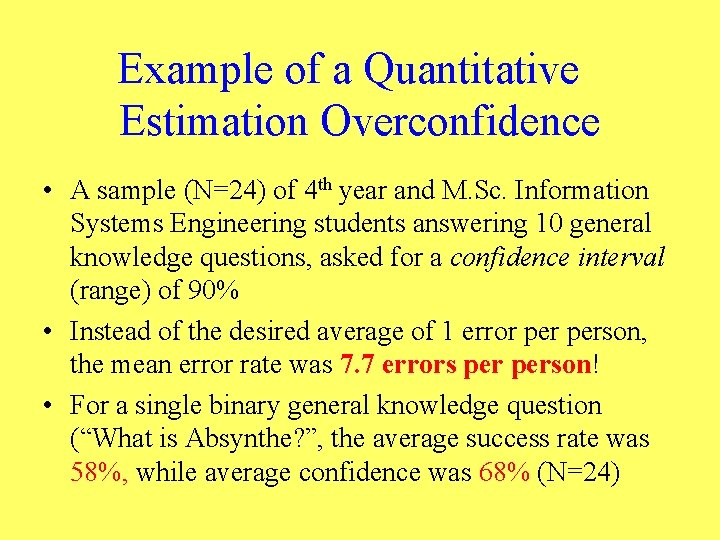

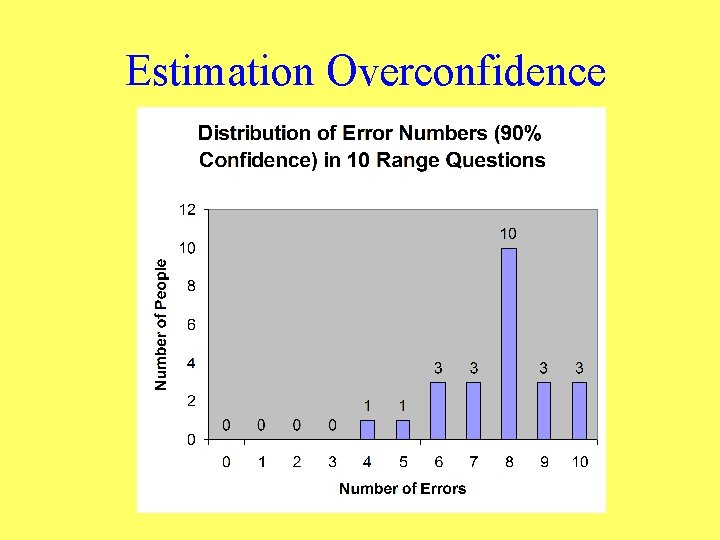

Example of a Quantitative Estimation Overconfidence

• A sample (N=24) of 4th-year and M.Sc. Information Systems Engineering students answered 10 general knowledge questions and were asked for a 90% confidence interval (range) for each
• Instead of the desired average of 1 error per person, the mean error rate was 7.7 errors per person!
• For a single binary general knowledge question (“What is Absynthe?”), the average success rate was 58%, while average confidence was 68% (N=24)
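The arithmetic behind this example can be spelled out as follows. This is a small sketch using only the figures quoted on the slide; the variable names are illustrative.

```python
# Figures quoted on the slide.
questions = 10            # general knowledge questions per person
stated_coverage = 0.90    # requested confidence level of each interval
observed_misses = 7.7     # mean number of intervals that missed the true value

# With well-calibrated 90% intervals, about 10% of the 10 intervals should miss.
expected_misses = questions * (1 - stated_coverage)

# Share of intervals that actually contained the true value.
observed_coverage = 1 - observed_misses / questions

# Binary question: mean confidence minus mean success rate.
binary_gap = 0.68 - 0.58

print(f"expected misses per person: {expected_misses:.1f}")  # 1.0
print(f"observed coverage: {observed_coverage:.0%}")          # 23%
print(f"binary-question overconfidence: {binary_gap:.0%}")    # 10%
```

In other words, intervals that were meant to contain the true value 90% of the time contained it only about 23% of the time.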

Estimation Overconfidence

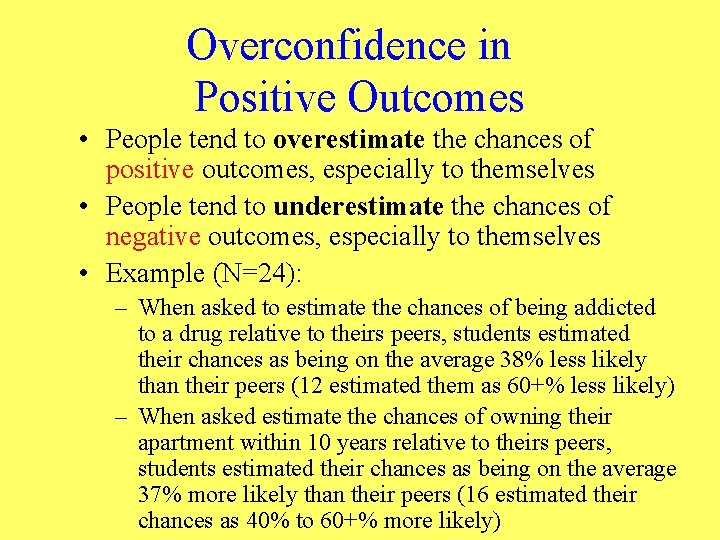

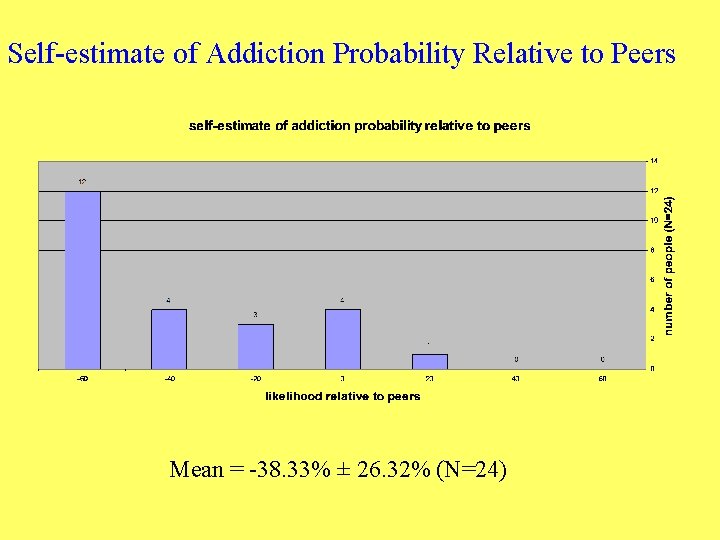

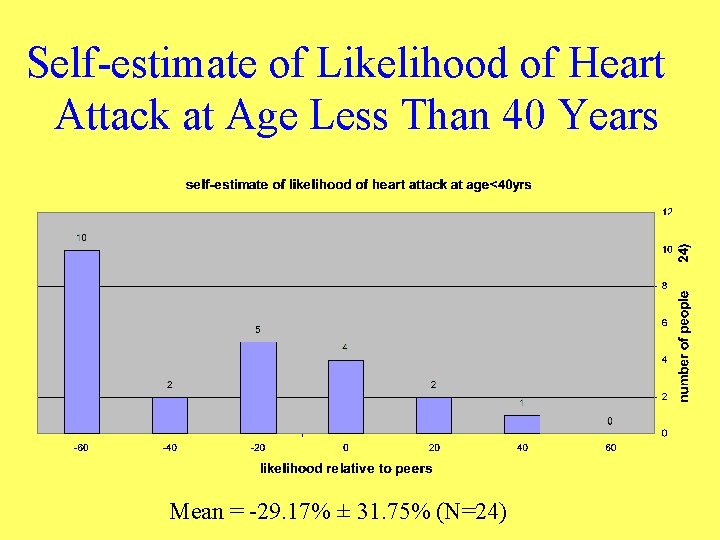

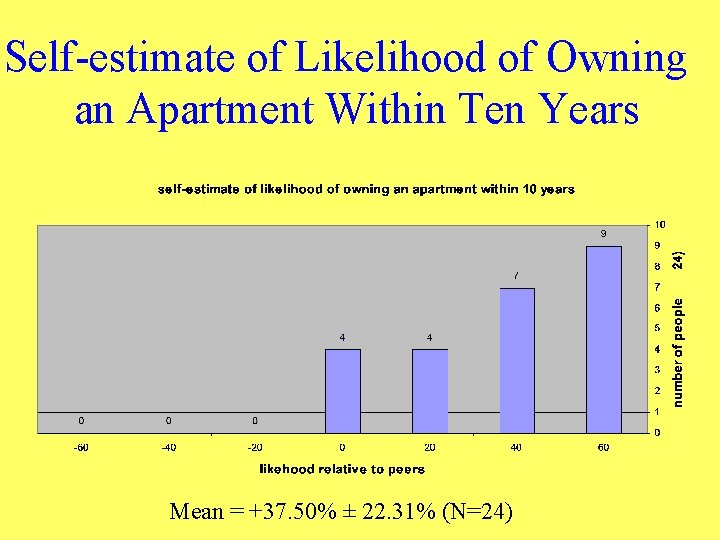

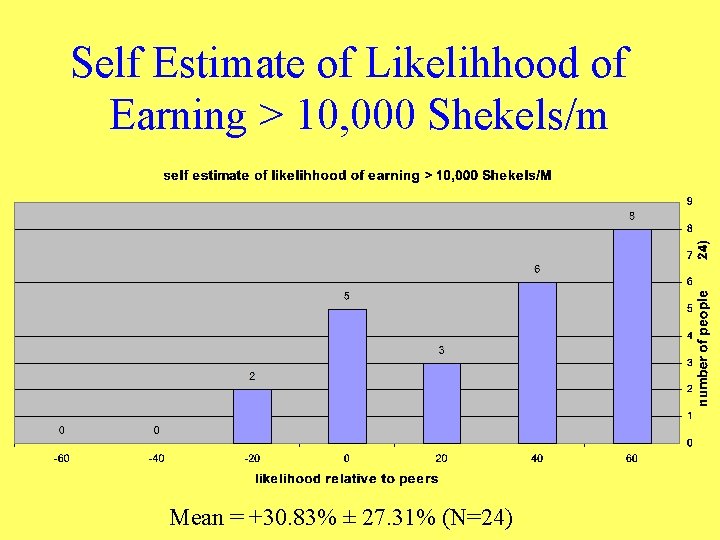

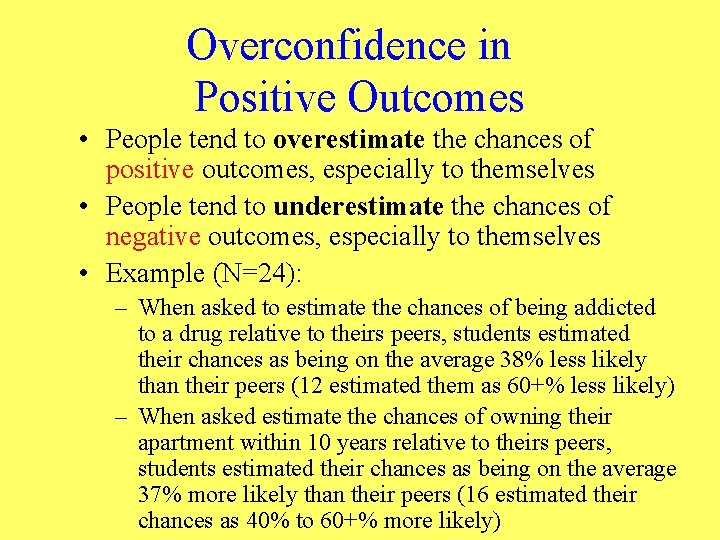

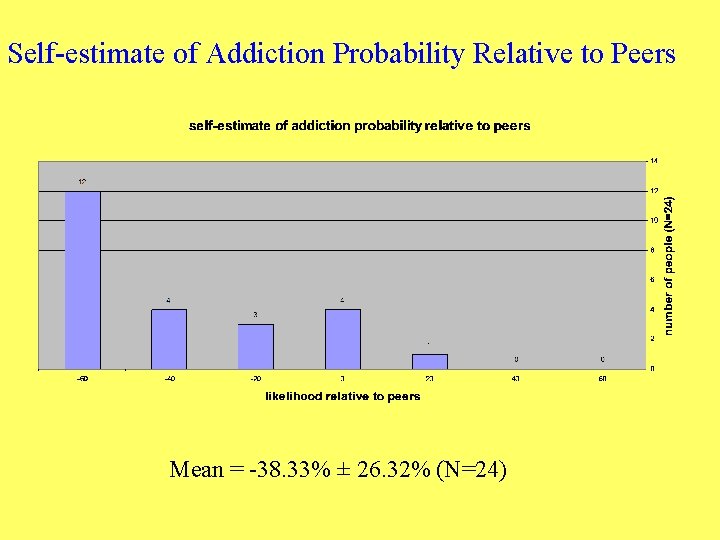

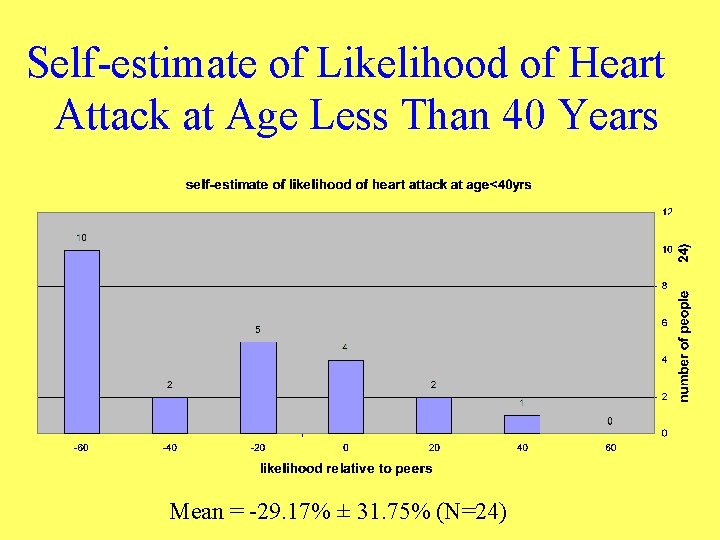

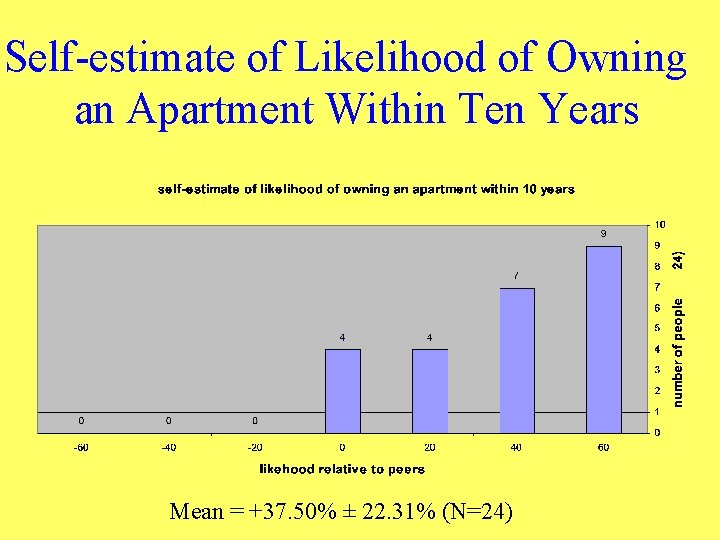

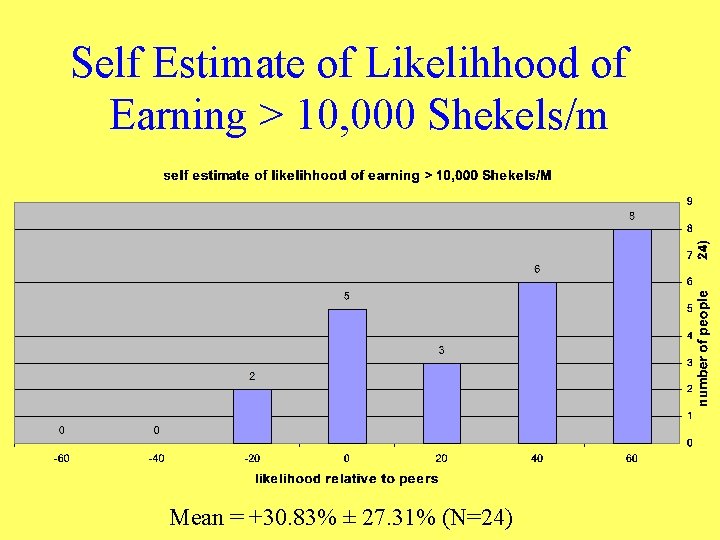

Overconfidence in Positive Outcomes

• People tend to overestimate the chances of positive outcomes, especially for themselves
• People tend to underestimate the chances of negative outcomes, especially for themselves
• Example (N=24):
  – When asked to estimate their chances of becoming addicted to a drug relative to their peers, students estimated themselves as being on average 38% less likely than their peers (12 estimated themselves as 60+% less likely)
  – When asked to estimate their chances of owning their apartment within 10 years relative to their peers, students estimated themselves as being on average 37% more likely than their peers (16 estimated their chances as 40% to 60+% more likely)

Self-estimate of Addiction Probability Relative to Peers: Mean = −38.33% ± 26.32% (N=24)

Self-estimate of Likelihood of Heart Attack at Age Less Than 40 Years: Mean = −29.17% ± 31.75% (N=24)

Self-estimate of Likelihood of Owning an Apartment Within Ten Years: Mean = +37.50% ± 22.31% (N=24)

Self-estimate of Likelihood of Earning > 10,000 Shekels/m: Mean = +30.83% ± 27.31% (N=24)

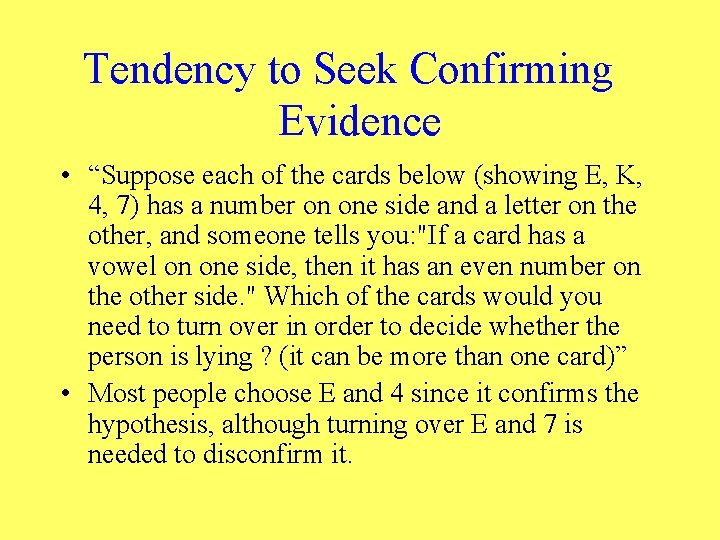

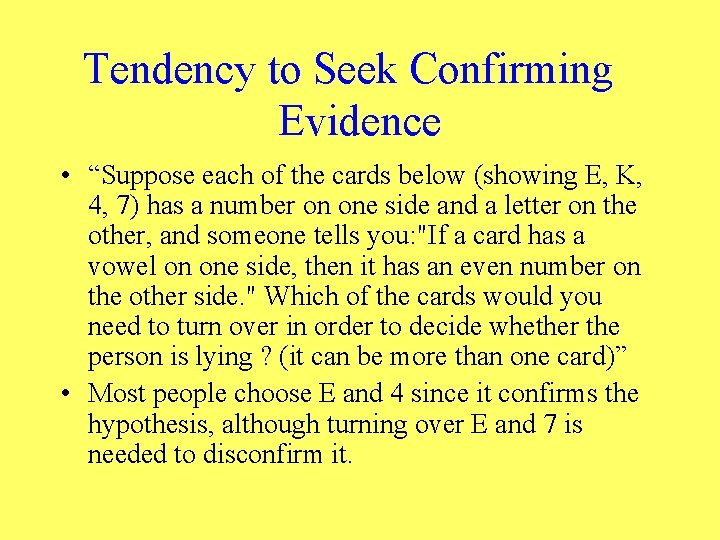

Tendency to Seek Confirming Evidence

• “Suppose each of the cards below (showing E, K, 4, 7) has a number on one side and a letter on the other, and someone tells you: ‘If a card has a vowel on one side, then it has an even number on the other side.’ Which of the cards would you need to turn over in order to decide whether the person is lying? (It can be more than one card.)”
• Most people choose E and 4, since these cards can confirm the hypothesis, although it is E and 7 that must be turned over, because only they can disconfirm it.
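The logic of the card task can be checked by brute force: a card needs to be turned over only if some possible hidden face would make the rule false. The following is a minimal sketch; the function names and the representative hidden faces are illustrative choices, not part of the original task description.

```python
VOWELS = set("AEIOU")

# Representative hidden faces: one vowel, one consonant, one even, one odd number.
POSSIBLE_LETTERS = ["A", "K"]
POSSIBLE_NUMBERS = [4, 7]


def rule_holds(letter, number):
    """'If a card has a vowel on one side, it has an even number on the other.'"""
    return letter not in VOWELS or number % 2 == 0


def must_turn(visible):
    """A card must be turned only if some hidden face could violate the rule."""
    if isinstance(visible, str):
        return any(not rule_holds(visible, n) for n in POSSIBLE_NUMBERS)
    return any(not rule_holds(letter, visible) for letter in POSSIBLE_LETTERS)


cards = ["E", "K", 4, 7]
to_turn = [card for card in cards if must_turn(card)]
print(to_turn)  # ['E', 7]
```

The 4 card never appears in the result because neither a vowel nor a consonant on its back can contradict the rule; turning it over can only confirm, never refute.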

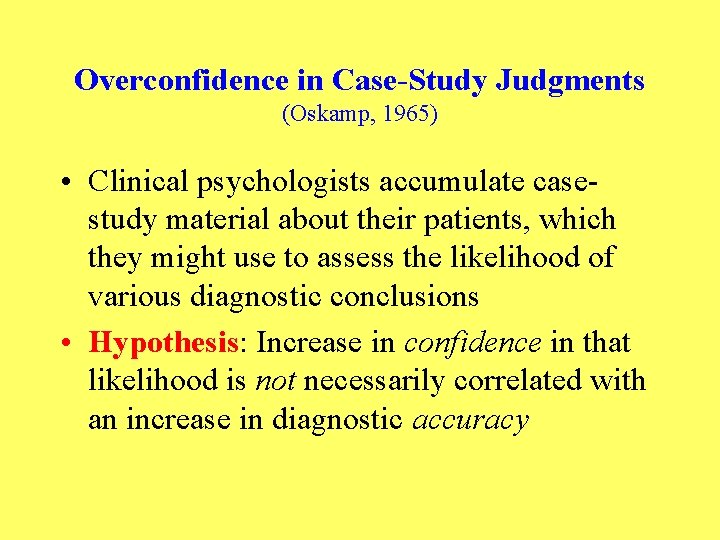

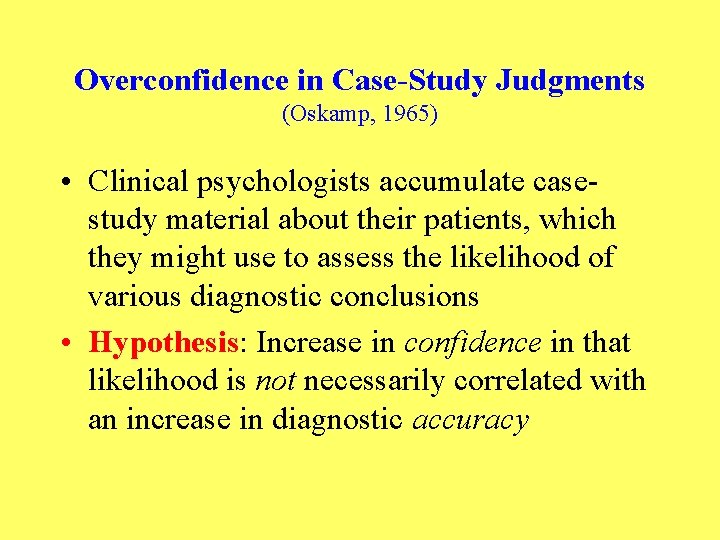

Overconfidence in Case-Study Judgments (Oskamp, 1965)

• Clinical psychologists accumulate case-study material about their patients, which they might use to assess the likelihood of various diagnostic conclusions
• Hypothesis: Increase in confidence in that likelihood is not necessarily correlated with an increase in diagnostic accuracy

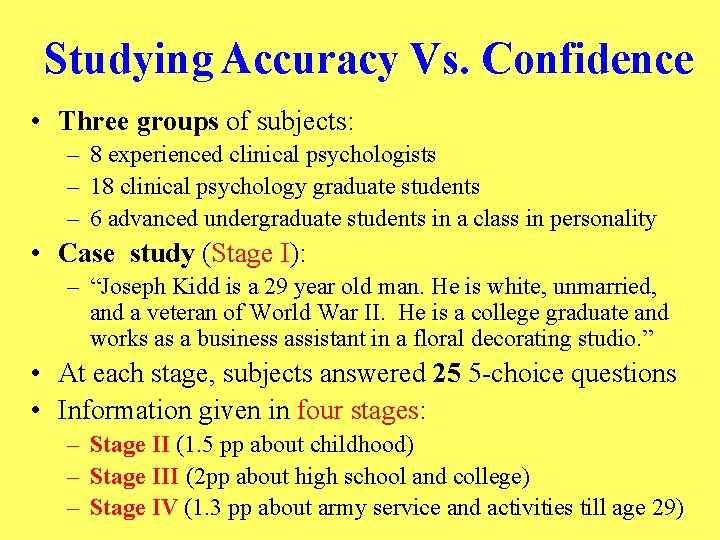

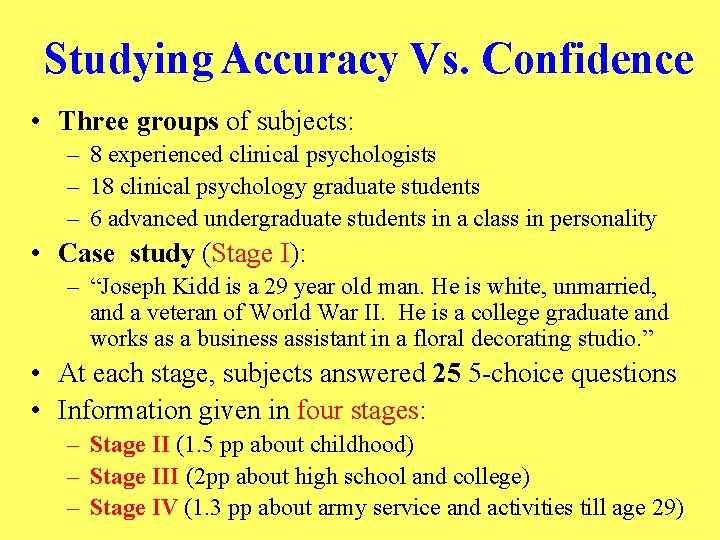

Studying Accuracy vs. Confidence

• Three groups of subjects:
  – 8 experienced clinical psychologists
  – 18 clinical psychology graduate students
  – 6 advanced undergraduate students in a class in personality
• Case study (Stage I):
  – “Joseph Kidd is a 29 year old man. He is white, unmarried, and a veteran of World War II. He is a college graduate and works as a business assistant in a floral decorating studio.”
• At each stage, subjects answered 25 five-choice questions
• Information given in four stages; after Stage I above:
  – Stage II (1.5 pp. about childhood)
  – Stage III (2 pp. about high school and college)
  – Stage IV (1.3 pp. about army service and activities till age 29)

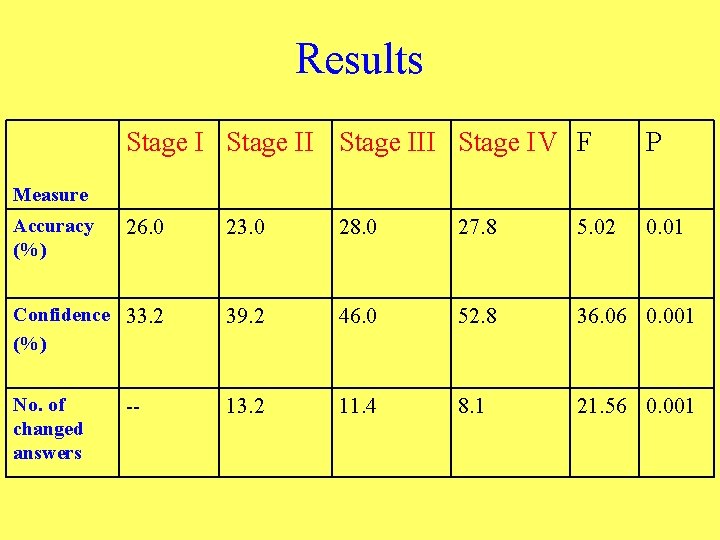

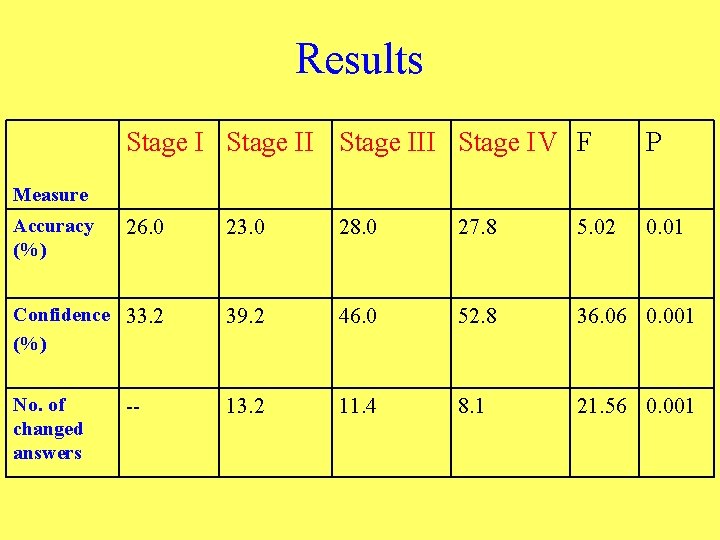

Results

| Measure | Stage I | Stage II | Stage III | Stage IV | F | P |
|---|---|---|---|---|---|---|
| Accuracy (%) | 26.0 | 23.0 | 28.0 | 27.8 | 5.02 | 0.01 |
| Confidence (%) | 33.2 | 39.2 | 46.0 | 52.8 | 36.06 | 0.001 |
| No. of changed answers | -- | 13.2 | 11.4 | 8.1 | 21.56 | 0.001 |

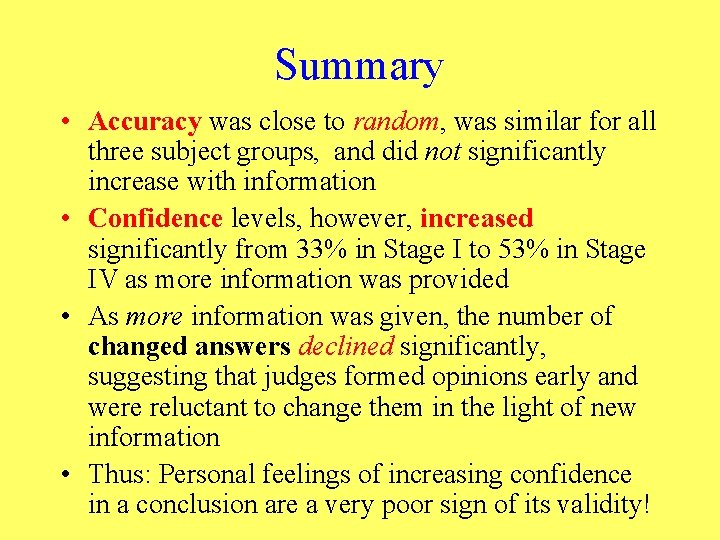

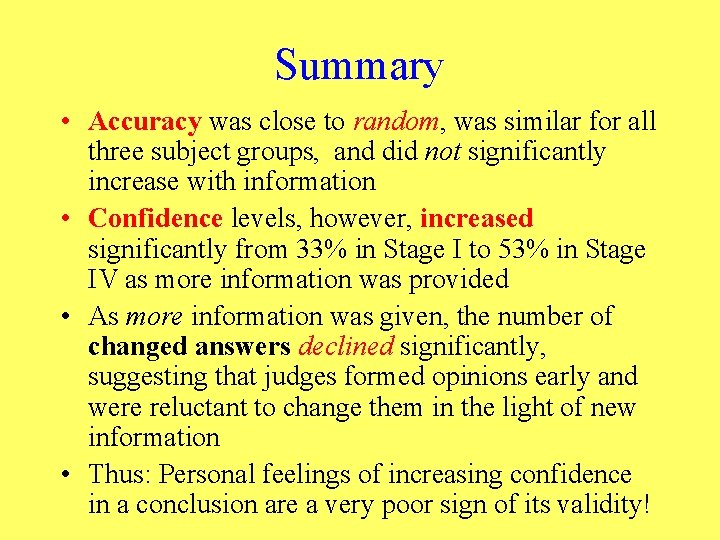

Summary

• Accuracy was close to random, was similar for all three subject groups, and did not significantly increase with information
• Confidence levels, however, increased significantly from 33% in Stage I to 53% in Stage IV as more information was provided
• As more information was given, the number of changed answers declined significantly, suggesting that judges formed opinions early and were reluctant to change them in the light of new information
• Thus: Personal feelings of increasing confidence in a conclusion are a very poor sign of its validity!
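The widening gap between confidence and accuracy can be made explicit from the accuracy and confidence figures reported in the Results slide. This is a minimal sketch; the variable names are illustrative, and the 20% chance level is an inference from the questions being five-choice.

```python
stages = ["I", "II", "III", "IV"]
accuracy = [26.0, 23.0, 28.0, 27.8]      # % correct, per stage
confidence = [33.2, 39.2, 46.0, 52.8]    # mean stated confidence (%), per stage

# Assumption: with five answer choices, guessing at random yields 20% correct.
chance_level = 100 / 5

# Overconfidence gap: stated confidence minus actual accuracy, per stage.
gaps = [round(c - a, 1) for a, c in zip(accuracy, confidence)]

for stage, a, c, gap in zip(stages, accuracy, confidence, gaps):
    print(f"Stage {stage}: accuracy {a}%, confidence {c}%, gap {gap} points")

print(f"chance level: {chance_level}%")
```

The gap grows from 7.2 points at Stage I to 25.0 points at Stage IV, while accuracy stays within a few points of the 20% chance level throughout.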