IPA Lentedagen 2006: Testing

- Slides: 25

Testing for Dummies

Judi Romijn, jromijn@win.tue.nl, OAS, TU/e

Outline

- Terminology: what is an error/bug/fault/failure/testing?
- Overview of the testing process
  - concept map
  - dimensions
  - topics of the Lentedagen presentations

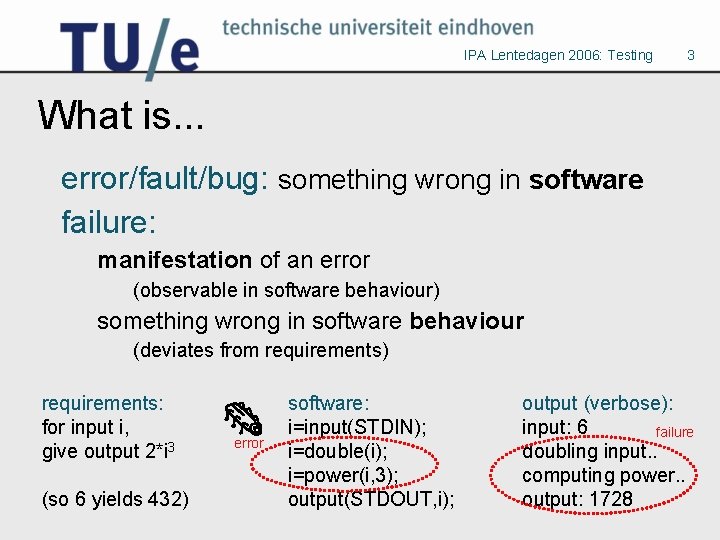

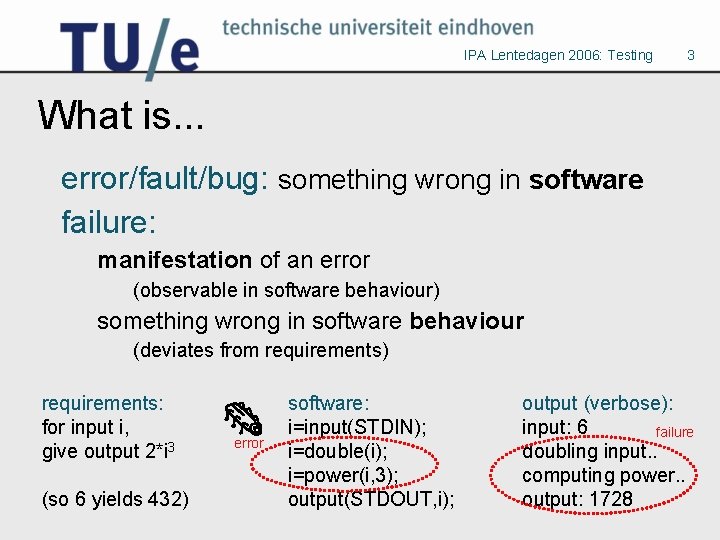

What is...

- error/fault/bug: something wrong in the software
- failure: manifestation of an error, observable in software behaviour; something wrong in the software's behaviour (it deviates from the requirements)

Example:

- requirements: for input i, give output 2*i^3 (so 6 yields 432)
- software, containing the error (it cubes the doubled value, so it computes (2*i)^3): `i=input(STDIN); i=double(i); i=power(i, 3); output(STDOUT, i);`
- output (verbose), showing the failure:
  - `input: 6`
  - `doubling input..`
  - `computing power..`
  - `output: 1728`
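The requirement and the program on this slide can be written out as a minimal Python sketch. The function names `buggy` and `intended` are hypothetical, and the pseudocode's `double` and `power` are replaced by ordinary arithmetic.

```python
def buggy(i: int) -> int:
    """Mirrors the slide's program: double first, then cube, giving (2*i)**3."""
    i = 2 * i    # double(i)
    i = i ** 3   # power(i, 3): the error, the cube is applied to 2*i
    return i


def intended(i: int) -> int:
    """What the requirement asks for: 2 * i**3."""
    return 2 * i ** 3


if __name__ == "__main__":
    print(buggy(6))     # 1728, the observable failure
    print(intended(6))  # 432, the required output
```

Input 0 gives 0 in both versions, so the error is present but no failure is observed for that input.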

What is...

- testing: by experiment,
  - find errors in software (Myers, 1979): test-to-fail
  - establish quality of software (Hetzel, 1988): test-to-pass
- a successful test:
  - finds at least one error (test-to-fail)
  - passes, i.e. the software works correctly (test-to-pass)

What's been said?

- Dijkstra: Testing can show the presence of bugs, but not the absence
- Beizer:
  - 1st law (pesticide paradox): Every method you use to prevent or find bugs leaves a residue of subtler bugs, for which other methods are needed
  - 2nd law: Software complexity grows to the limits of our ability to manage it
- Beizer: Testers are not better at test design than programmers are at code design
- Humphreys: Coders introduce bugs at the rate of 4.2 defects per hour of programming. If you crack the whip and force people to move more quickly, things get even worse.
- ...

Developing software and testing are truly difficult jobs! Let's see what goes on in the testing process.

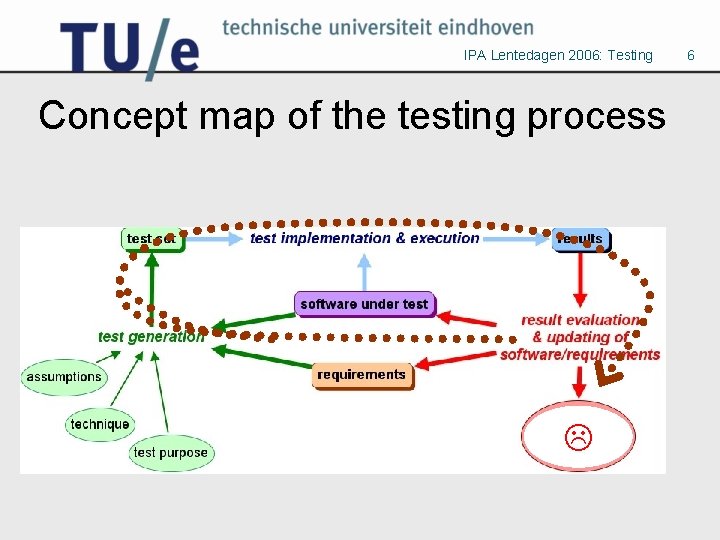

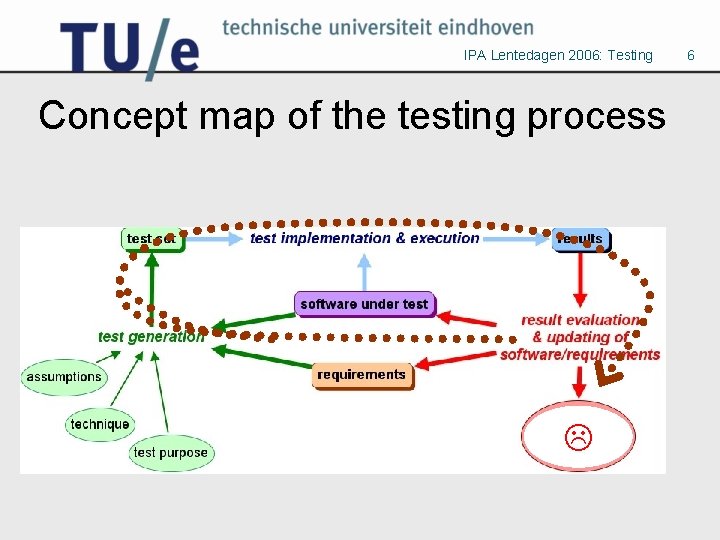

Concept map of the testing process

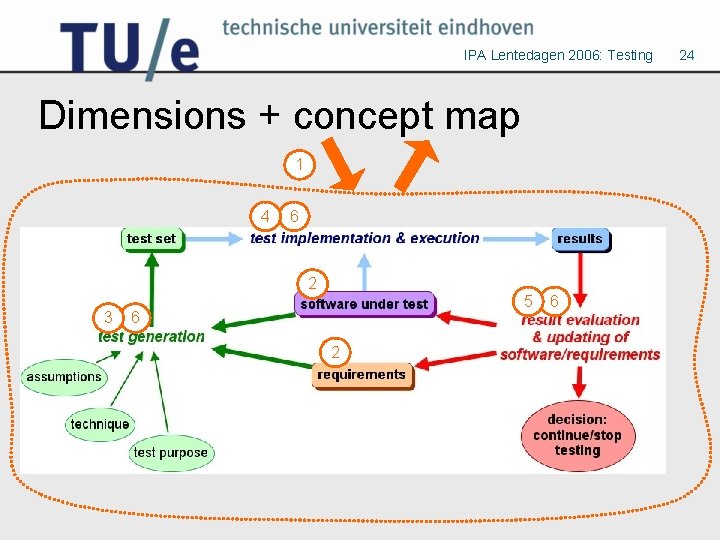

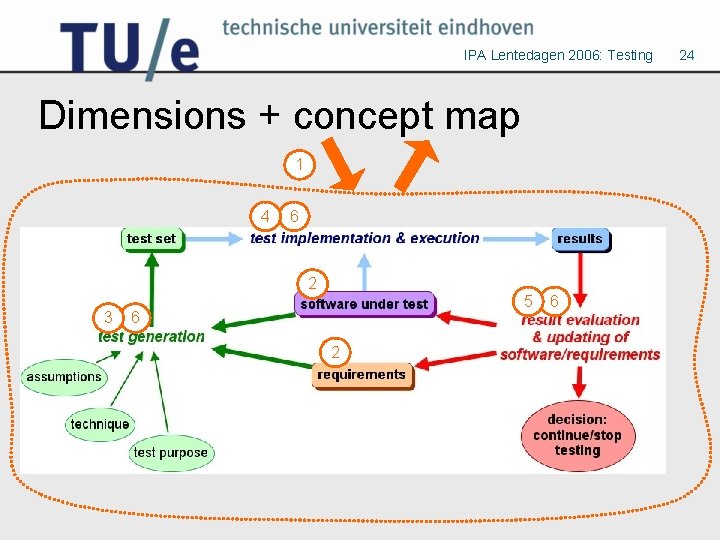

Dimensions of software testing

1. What is the surrounding software development process? (V-model/agile, unit/system/user level, planning, documentation, ...)
2. What is tested?
   - Software characteristics (design/code/binary, embedded?, language, ...)
   - Requirements (functional/performance/reliability/..., behaviour/data oriented, precision)
3. Which tests?
   - Purpose (kind of coding errors, missing/additional requirements, development/regression)
   - Technique (adequacy criterion: how to generate how many tests)
   - Assumptions (limitations, simplifications, heuristics)
4. How to test? (manual/automated, platform, reproducible)
5. How are the results evaluated? (quality model, priorities, risks)
6. Who performs which task? (programmer, tester, user, third party)
   - Test generation, implementation, execution, evaluation

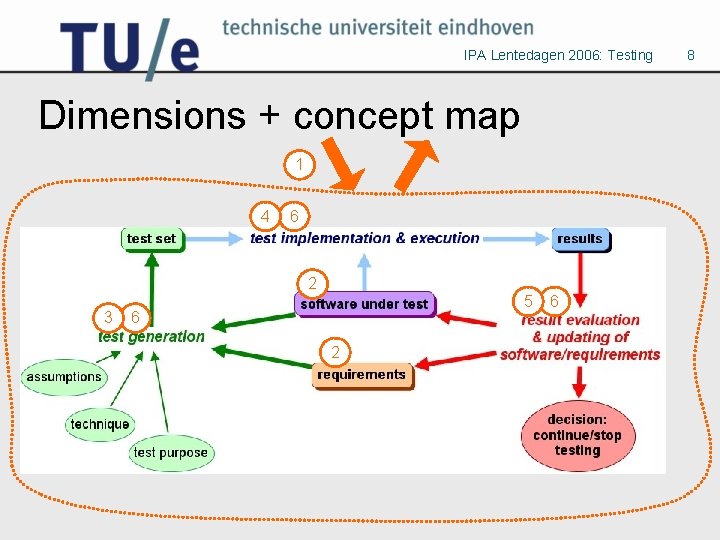

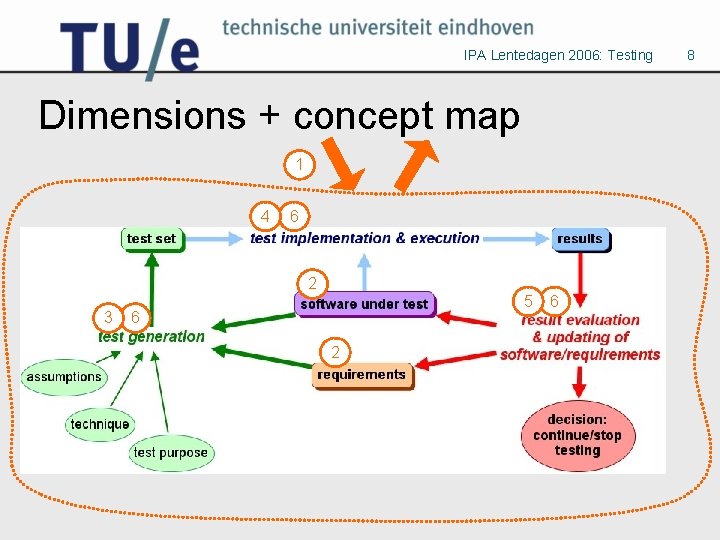

Dimensions + concept map (figure: the concept map annotated with dimension numbers 1 to 6)

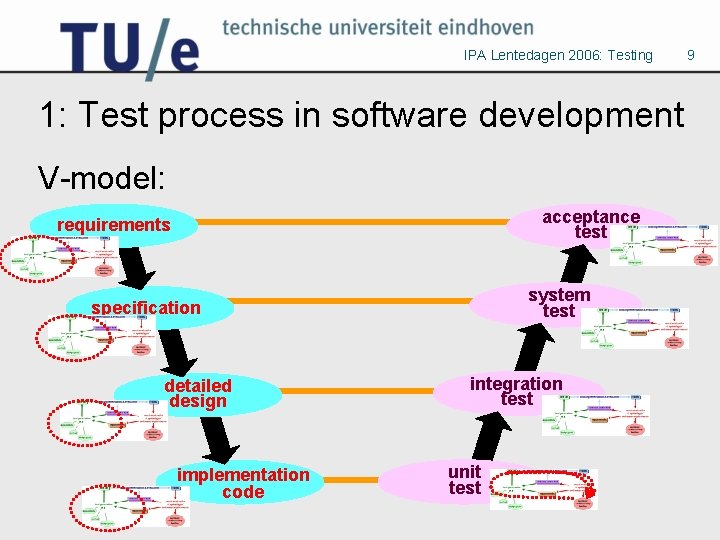

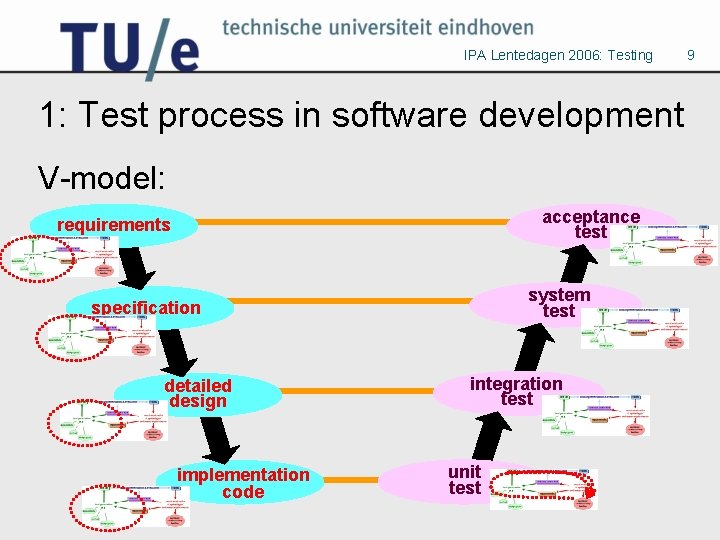

1: Test process in software development

V-model (development phase and its matching test level):

- requirements ↔ acceptance test
- specification ↔ system test
- detailed design ↔ integration test
- implementation code ↔ unit test

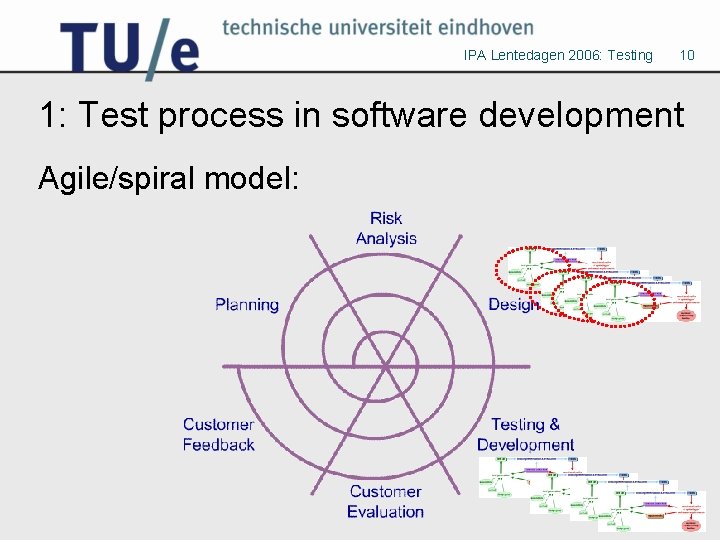

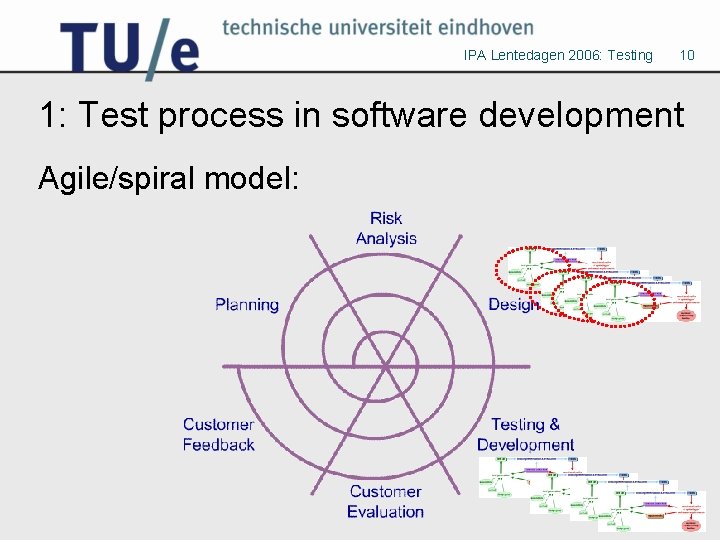

1: Test process in software development

Agile/spiral model

1: Test process in software development

Topics in the Lentedagen presentations:

- Integration of testing in the entire development process with TTCN-3
  - standardized language
  - different representation formats
  - architecture allowing for tool plugins
- Test process management for manufacturing systems (ASML)
  - integration approach
  - test strategy

2: Software

- (phase) unit vs. integrated system
- (language) imperative/object-oriented/hardware design/binary/…
- (interface) data-oriented/interactive/embedded/distributed/…

2: Requirements

- functional:
  - the behaviour of the system should be correct
  - requirements can be precise, but often are not
- non-functional:
  - performance, reliability, compatibility, robustness (stress/volume/recovery), usability, ...
  - requirements are possibly quantifiable, and always vague

2: Requirements

Topics in the Lentedagen presentations:

- models:
  - process algebra, automaton, labelled transition system, Spec#
- coverage:
  - semantical:
    - by formal argument (see test generation)
    - by estimating potential errors, assigning weights
  - syntactical
  - risk-based (likelihood/impact)
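Risk-based coverage can be sketched as follows. This is only an illustration under stated assumptions: risk is taken to be likelihood times impact (a common convention, not stated on the slide), and the requirement names and numbers in `REQS` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    name: str
    likelihood: float  # assumed estimate in [0, 1] that the requirement is violated
    impact: float      # assumed cost of a violation, in arbitrary units

    @property
    def risk(self) -> float:
        # Assumption: risk = likelihood * impact
        return self.likelihood * self.impact


def prioritise(reqs):
    """Order requirements so that the riskiest ones are tested first."""
    return sorted(reqs, key=lambda r: r.risk, reverse=True)


def risk_coverage(reqs, tested_names):
    """Fraction of the total risk that is addressed by the tested requirements."""
    total = sum(r.risk for r in reqs)
    covered = sum(r.risk for r in reqs if r.name in tested_names)
    return covered / total if total else 1.0


# Hypothetical example data
REQS = [
    Requirement("report", likelihood=0.5, impact=2.0),
    Requirement("login", likelihood=0.2, impact=10.0),
    Requirement("export", likelihood=0.1, impact=5.0),
]

if __name__ == "__main__":
    print([r.name for r in prioritise(REQS)])
    print(risk_coverage(REQS, {"login"}))
```

Testing only `login` here already addresses more than half of the total estimated risk, which is the kind of argument a risk-based coverage measure is meant to support.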

3: Test generation: purpose

What errors to find? Related to software development phase:

- unit phase: typical typos, functional mistakes
- integration: interface errors
- system/acceptance: errors w.r.t. requirements
  - unimplemented required features: 'software does not do all it should do'
  - implemented non-required features: 'software does things it should not do'
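The two kinds of system/acceptance errors can be pictured as set differences between what is required and what is implemented. The feature names below are hypothetical.

```python
# Hypothetical feature sets, for illustration only
required = {"login", "export", "search"}
implemented = {"login", "search", "debug_console"}

# 'software does not do all it should do'
missing = required - implemented

# 'software does things it should not do'
additional = implemented - required

if __name__ == "__main__":
    print(sorted(missing))
    print(sorted(additional))
```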

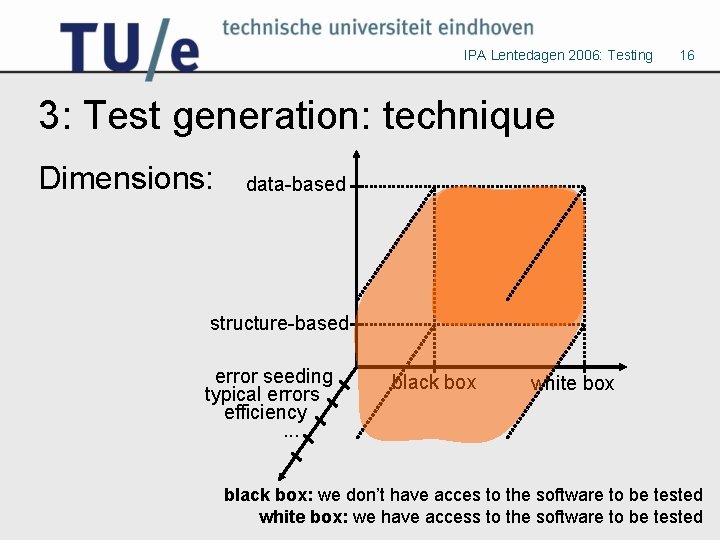

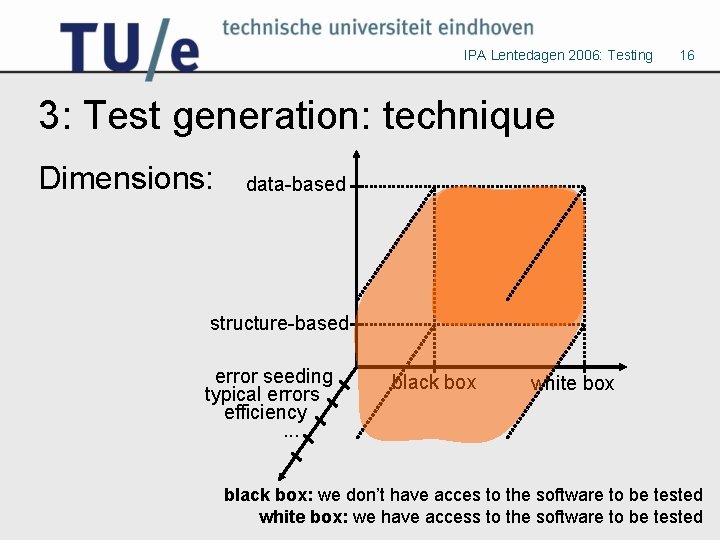

3: Test generation: technique

Dimensions: data-based, structure-based, error seeding, typical errors, efficiency, ...

- black box: we don't have access to the software to be tested
- white box: we have access to the software to be tested
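The difference between a data-based black-box selection and a structure-based white-box selection can be sketched on a small example. The unit under test, `shipping_cost`, and its pricing rule are hypothetical.

```python
def shipping_cost(weight_kg: float) -> float:
    """Hypothetical unit under test: flat fee up to 5 kg, per-kg fee above."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if weight_kg <= 5:
        return 4.0
    return 4.0 + 1.5 * (weight_kg - 5)


# Black box (data-based): inputs chosen from the stated requirement only,
# here values around the boundaries of the input classes.
BLACK_BOX_CASES = [(0.1, 4.0), (5.0, 4.0), (5.5, 4.75), (10.0, 11.5)]

# White box (structure-based): inputs chosen by reading the code so that
# every branch is taken at least once.
WHITE_BOX_CASES = [(-1.0, ValueError), (3.0, 4.0), (7.0, 7.0)]


def run(cases):
    """Run (input, expected) pairs and return the inputs whose result differs."""
    failures = []
    for arg, expected in cases:
        try:
            actual = shipping_cost(arg)
        except Exception as exc:  # an expected exception is given as its class
            actual = type(exc)
        if actual != expected:
            failures.append(arg)
    return failures


if __name__ == "__main__":
    print(run(BLACK_BOX_CASES), run(WHITE_BOX_CASES))
```

The black-box cases would stay valid if the implementation were replaced, while the white-box cases are tied to the branch structure of this particular code.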

3: Test generation

Assumptions, limitations:

- single/multiple fault: clustering/dependency of errors
- perfect repair
- heuristics:
  - knowledge about usual programming mistakes
  - history of the software
  - pesticide paradox
- ...

3: Test generation

Topics in the Lentedagen presentations:

- Mostly black box, based on behavioural requirements:
  - process algebra, automaton, labelled transition system, Spec#
- Techniques:
  - assume model of software is possible
  - scientific basis: formal relation between requirements and model of software
- Data values: constraint solving
- Synchronous vs. asynchronous communication
- Timing/hybrid aspects
- On-the-fly generation
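On-the-fly generation from a behavioural model can be sketched as a random walk: the next test step is chosen from the model while the test runs, and each observed output is checked immediately. This is a simplified illustration, not the method of any particular presentation: the model is a small deterministic transition system rather than a general labelled transition system, and `MODEL`, `CoffeeMachine` and `on_the_fly_test` are hypothetical names.

```python
import random

# Hypothetical model: state -> {input: (expected_output, next_state)}
MODEL = {
    "idle": {"coin": ("ok", "paid")},
    "paid": {"button": ("coffee", "idle"), "coin": ("refund", "paid")},
}


class CoffeeMachine:
    """Hypothetical implementation under test."""

    def __init__(self):
        self.paid = False

    def step(self, action):
        if action == "coin":
            if self.paid:
                return "refund"
            self.paid = True
            return "ok"
        if action == "button" and self.paid:
            self.paid = False
            return "coffee"
        return "error"


def on_the_fly_test(impl, model, start, steps, seed=0):
    """Generate and execute one test step at a time; stop at the first mismatch."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    state = start
    trace = []
    for _ in range(steps):
        action = rng.choice(sorted(model[state]))  # pick an input the model allows
        expected, next_state = model[state][action]
        actual = impl.step(action)
        trace.append((action, actual))
        if actual != expected:
            return "fail", trace
        state = next_state
    return "pass", trace


if __name__ == "__main__":
    verdict, trace = on_the_fly_test(CoffeeMachine(), MODEL, "idle", 50)
    print(verdict, len(trace))
```

No test suite is stored in advance; the trace returned with a `fail` verdict is the test case that exposed the failure.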

4: Test implementation & execution

- Implementation
  - platform
  - batch?
  - inputs, outputs, coordination, ...
- Execution
  - actual duration
  - manual/interactive or automated
  - in parallel on several systems
  - reproducible?

4: Test implementation & execution

Topics in the Lentedagen presentations:

- Intermediate language: TTCN-3
- Timing coordination
- From abstract tests to concrete executable tests:
  - automatic refinement
  - data parameter constraint solving
- On-the-fly:
  - automated, iterative

5: Who performs which task

- Software producer
  - programmer
  - testing department
- Software consumer
  - end user
  - management
- Third party
  - testers hired externally
  - certification organization

6: Result evaluation

- Per test:
  - pass/fail result
  - diagnostic output
  - which requirement was (not) met
- Statistical information:
  - coverage (program code, requirements, input domain, output domain)
  - progress of testing (#errors found per test-time unit: decreasing?)
- Decide to:
  - stop (satisfied)
  - create/run more tests (not yet enough confidence)
  - adjust software and/or requirements, create/run more tests (errors to be repaired)
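The three-way decision can be sketched as a small rule. The thresholds (`min_coverage`, `window`) and the exact stopping condition are assumptions chosen for illustration; a real project would derive them from its own quality model.

```python
def decide(open_errors, errors_per_unit, coverage, min_coverage=0.9, window=3):
    """Return 'repair', 'more tests' or 'stop'.

    open_errors:     number of found errors not yet repaired
    errors_per_unit: errors found in each successive test-time unit
    coverage:        achieved coverage in [0, 1], by whatever measure is in use
    """
    if open_errors > 0:
        # errors to be repaired: adjust software and/or requirements first
        return "repair"
    recent = errors_per_unit[-window:]
    # Assumed stopping rule: the last `window` units show a non-increasing
    # error count that has reached zero.
    settled = (
        len(recent) == window
        and all(a >= b for a, b in zip(recent, recent[1:]))
        and recent[-1] == 0
    )
    if coverage >= min_coverage and settled:
        return "stop"
    return "more tests"  # not yet enough confidence


if __name__ == "__main__":
    print(decide(0, [5, 3, 1, 0], 0.95))
```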

6: Result evaluation

Topics in the Lentedagen presentations:

- Translate output back to abstract requirements
  - possibly on-the-fly
- Statistical information:
  - cumulative times at which failures were observed
  - fit statistical curve
  - quality judgement: X% of errors found
  - predict how many errors left, how long to continue
  - assumptions: total #errors, perfect repair, single fault
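The curve-fitting step can be illustrated with one common reliability growth model, the Goel-Okumoto model. The slide does not name a model, so this choice and the symbols $a$, $b$, $\mu$ and $p$ are assumptions made for illustration. With $a$ the total number of errors and $b$ the detection rate per error, the expected cumulative number of failures observed by test time $t$ is

$$
\mu(t) = a\,\bigl(1 - e^{-bt}\bigr)
$$

Once $a$ and $b$ have been fitted to the observed cumulative failure times, the quantities mentioned above follow directly:

$$
\frac{\mu(t)}{a} = 1 - e^{-bt}, \qquad a - \mu(t) = a\,e^{-bt}, \qquad t_p = -\frac{\ln(1-p)}{b}
$$

These are the fraction of errors found so far, the expected number of errors left, and the test time needed to have found a fraction $p$ of all errors. The model relies on a fixed total number of errors and on each found error being repaired without introducing new ones, which matches the listed assumptions.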

Dimensions + concept map (figure: the concept map annotated with dimension numbers 1 to 6)

Hope this helps... Enjoy the Lentedagen!