INTRODUCTION TO ARTIFICIAL INTELLIGENCE Unit 14 Sumaira Saeed

- Slides: 19


Sumaira Saeed, Fall 2019

Outline
• Decision Tree Induction
• Decision Tree Rule Extraction
• Accuracy and Error Rates

Inducing a decision tree
• There are many possible trees. How do we find the most compact one that is consistent with the data?
• The key to building a decision tree is deciding which attribute to branch on at each node.
• The heuristic is to choose the attribute whose split gives the minimum (size-weighted) GINI index or entropy over the resulting partitions.
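A minimal Python sketch of this selection heuristic, assuming "minimum GINI/Entropy" means the impurity of the child partitions weighted by their sizes (for entropy this is the same as maximum information gain). The helper names (`entropy`, `gini`, `weighted_impurity`, `best_attribute`) and the toy records are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Entropy in bits of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def gini(labels):
    """GINI index: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def weighted_impurity(rows, labels, attr, impurity=entropy):
    """Impurity after splitting on `attr`, each branch weighted by its size."""
    branches = {}
    for row, label in zip(rows, labels):
        branches.setdefault(row[attr], []).append(label)
    n = len(labels)
    return sum(len(b) / n * impurity(b) for b in branches.values())


def best_attribute(rows, labels, attrs, impurity=entropy):
    """Greedy choice: the attribute whose split has the minimum weighted impurity."""
    return min(attrs, key=lambda a: weighted_impurity(rows, labels, a, impurity))


# Hypothetical toy data: "outlook" separates the classes perfectly, "windy" does not.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rain", "windy": "no"},
    {"outlook": "rain", "windy": "yes"},
]
labels = ["no", "no", "yes", "yes"]
print(best_attribute(rows, labels, ["windy", "outlook"]))        # outlook
print(best_attribute(rows, labels, ["windy", "outlook"], gini))  # outlook
```

Both measures pick the same attribute here; on real data they usually agree but are not guaranteed to.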

Algorithm for Decision Tree Induction
• Basic algorithm (a greedy algorithm)
  • The tree is constructed in a top-down, recursive manner
  • At the start, all the training examples are at the root
  • Attributes are categorical
  • Examples are partitioned recursively based on selected attributes
  • Test attributes are selected on the basis of a heuristic or statistical measure (e.g., GINI/entropy)
• Conditions for stopping partitioning
  • All examples for a given node belong to the same class
  • There are no remaining attributes for further partitioning; majority voting is employed for classifying the leaf
  • There are no examples left
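The greedy, top-down procedure and its three stopping conditions can be sketched in Python as follows. This is an ID3-style illustration under stated assumptions, not the course's reference implementation: entropy is used as the selection measure, a tree is a nested dict, `domains` lists every value an attribute can take (so a branch can end up with no examples, in which case the parent's majority class is used), and all names and data are hypothetical.

```python
from collections import Counter
from math import log2


def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def majority(labels):
    """Majority voting: the most common class label."""
    return Counter(labels).most_common(1)[0][0]


def split_entropy(rows, labels, attr):
    """Weighted entropy of the partitions produced by splitting on `attr`."""
    groups = {}
    for row, y in zip(rows, labels):
        groups.setdefault(row[attr], []).append(y)
    return sum(len(g) / len(labels) * entropy(g) for g in groups.values())


def build_tree(rows, labels, attrs, domains, parent_labels=()):
    """Return a class label (leaf) or {"attr": name, "branches": {value: subtree}}."""
    if not labels:                   # no examples left: parent's majority class
        return majority(parent_labels)
    if len(set(labels)) == 1:        # all examples belong to the same class
        return labels[0]
    if not attrs:                    # no attributes left: majority voting
        return majority(labels)
    best = min(attrs, key=lambda a: split_entropy(rows, labels, a))
    remaining = [a for a in attrs if a != best]
    branches = {}
    for value in domains[best]:      # partition the examples on the chosen attribute
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        branches[value] = build_tree([rows[i] for i in idx], [labels[i] for i in idx],
                                     remaining, domains, labels)
    return {"attr": best, "branches": branches}


def classify(tree, row):
    """Follow the branches from the root until a leaf is reached."""
    while isinstance(tree, dict):
        tree = tree["branches"][row[tree["attr"]]]
    return tree


# Hypothetical training data
rows = [
    {"age": "<=30", "student": "no"},
    {"age": "<=30", "student": "yes"},
    {"age": "31..40", "student": "no"},
    {"age": "31..40", "student": "yes"},
    {"age": ">40", "student": "no"},
    {"age": ">40", "student": "yes"},
]
labels = ["no", "yes", "yes", "yes", "no", "yes"]
attrs = ["age", "student"]
domains = {a: sorted({r[a] for r in rows}) for a in attrs}
tree = build_tree(rows, labels, attrs, domains)
print(tree)
```

Here `student` is chosen at the root because its split has lower weighted entropy than `age`; the sketch assumes a non-empty training set at the root call.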

Extracting Classification Rules from Trees
• Represent the knowledge in the form of IF-THEN rules
• One rule is created for each path from the root to a leaf
• Each attribute-value pair along a path forms a conjunction; the leaf node holds the class prediction
• Rules are easier for humans to understand
• Example:
  IF age = “<=30” AND student = “no” THEN buys_computer = “no”
  IF age = “<=30” AND student = “yes” THEN buys_computer = “yes”
  IF age = “31…40” THEN buys_computer = “yes”
  IF age = “>40” AND credit_rating = “excellent” THEN buys_computer = “yes”
  IF age = “>40” AND credit_rating = “fair” THEN buys_computer = “no”
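A short Python sketch of rule extraction, assuming the tree is stored as nested dicts (internal node: `{"attr": ..., "branches": {...}}`, leaf: a class label). The tree below is a hand-coded version of the buys_computer example, assumed from the example rules with credit_rating tested under age = ">40"; `extract_rules` is a hypothetical helper.

```python
def extract_rules(tree, target="buys_computer", conditions=()):
    """Return one IF-THEN rule per root-to-leaf path of a nested-dict tree."""
    if not isinstance(tree, dict):
        # Leaf: the attribute-value pairs collected on the path form a conjunction.
        lhs = " AND ".join(f'{attr} = "{value}"' for attr, value in conditions)
        return [f'IF {lhs} THEN {target} = "{tree}"']
    rules = []
    for value, subtree in tree["branches"].items():
        rules += extract_rules(subtree, target, conditions + ((tree["attr"], value),))
    return rules


# Hand-coded tree (an assumption: the slide shows only the extracted rules).
tree = {"attr": "age", "branches": {
    "<=30": {"attr": "student", "branches": {"no": "no", "yes": "yes"}},
    "31...40": "yes",
    ">40": {"attr": "credit_rating", "branches": {"excellent": "yes", "fair": "no"}},
}}

for rule in extract_rules(tree):
    print(rule)
```

Because the tree has five leaves, exactly five rules come out, one per path.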

Decision Tree Based Classification
• Advantages:
  • Extremely fast at classifying unknown records
  • Easy to interpret for small trees
  • Accuracy is comparable to other classification techniques for many simple data sets
  • One of the nicest things about decision trees is their ability to handle missing values in either numeric or categorical input fields by simply treating null as a possible value with its own branch.

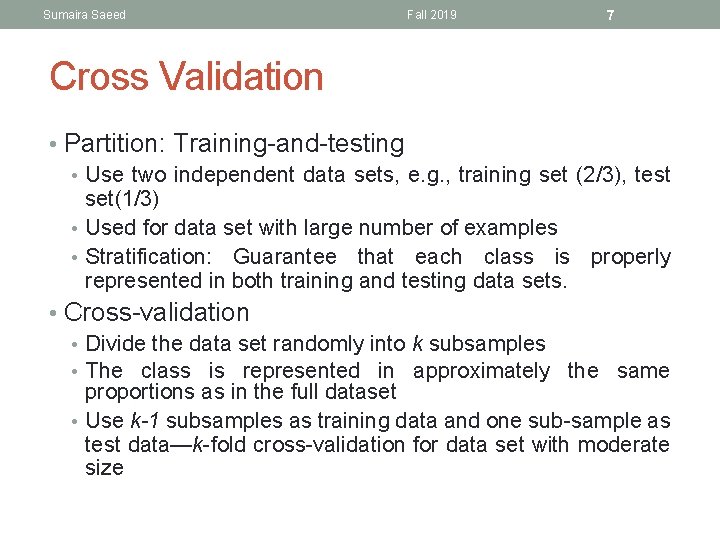

Cross Validation
• Partition: training-and-testing
  • Use two independent data sets, e.g., a training set (2/3) and a test set (1/3)
  • Used for data sets with a large number of examples
  • Stratification: guarantee that each class is properly represented in both the training and testing data sets
• Cross-validation
  • Divide the data set randomly into k subsamples
  • With stratification, each class is represented in every subsample in approximately the same proportions as in the full dataset
  • Use k-1 subsamples as training data and the remaining subsample as test data, repeating k times so that each subsample serves as the test set exactly once (k-fold cross-validation); used for data sets of moderate size

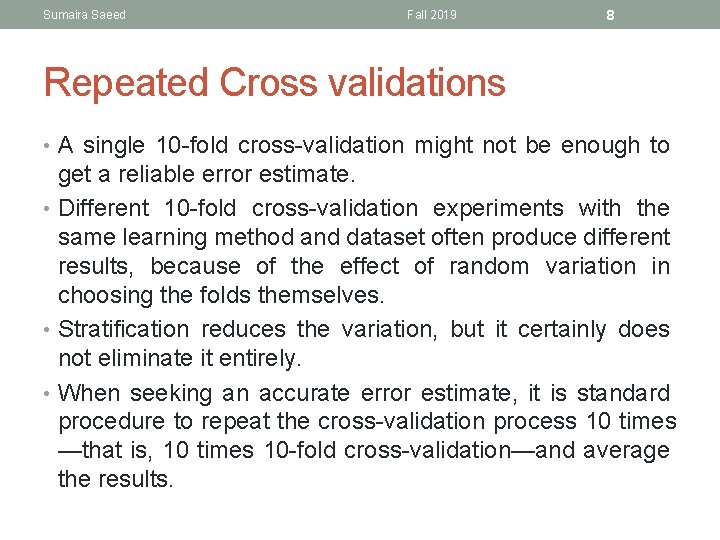
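A minimal Python sketch of stratified k-fold splitting, assuming labels are comparable values (e.g., strings). Each class is shuffled and dealt round-robin into the k folds so every fold keeps roughly the full dataset's class proportions; the function names are hypothetical.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k, seed=0):
    """Randomly assign example indices to k folds, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    pos = 0
    for y in sorted(by_class):  # sorted only to make runs reproducible
        idx = by_class[y]
        rng.shuffle(idx)
        for i in idx:           # continue the round-robin across classes
            folds[pos % k].append(i)
            pos += 1
    return folds


def k_fold_splits(labels, k, seed=0):
    """Yield (train, test) index lists; each fold is the test set exactly once."""
    folds = stratified_folds(labels, k, seed)
    for t in range(k):
        train = [i for f, fold in enumerate(folds) if f != t for i in fold]
        yield train, folds[t]


labels = ["a"] * 10 + ["b"] * 5
for train, test in k_fold_splits(labels, k=5):
    print(len(train), len(test), [labels[i] for i in test].count("b"))  # 12 3 1
```

With 10 "a" and 5 "b" examples and k = 5, every test fold holds two "a" and one "b", mirroring the 2:1 ratio of the full set.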

Repeated Cross-Validation
• A single 10-fold cross-validation might not be enough to get a reliable error estimate.
• Different 10-fold cross-validation experiments with the same learning method and dataset often produce different results because of random variation in choosing the folds themselves.
• Stratification reduces the variation, but it certainly does not eliminate it entirely.
• When seeking an accurate error estimate, the standard procedure is to repeat the cross-validation process 10 times (that is, 10 times 10-fold cross-validation) and average the results.

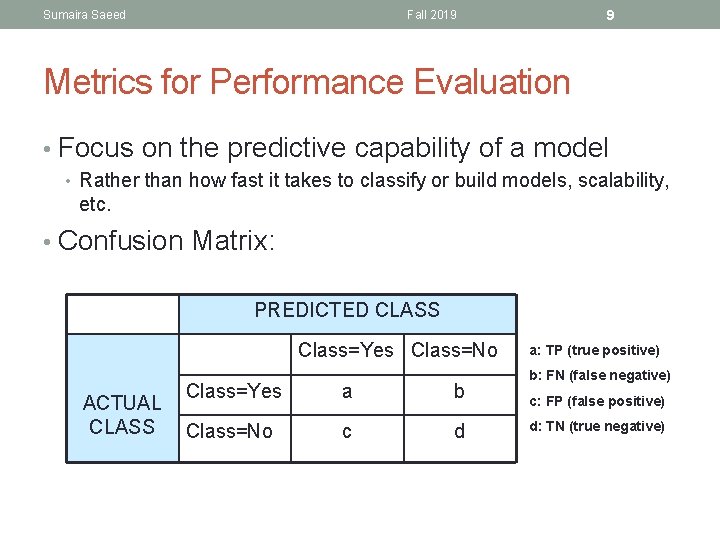

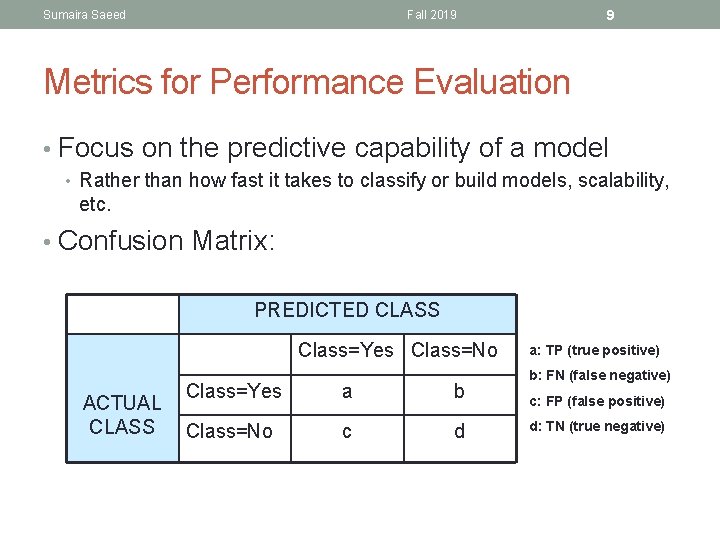
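A Python sketch of 10 times 10-fold cross-validation, assuming a 1-nearest-neighbour classifier on a single numeric feature as a stand-in learner (a hypothetical choice; any classifier could be plugged in). Each repetition reshuffles the folds, and the per-run error rates are averaged; the data are synthetic.

```python
import random
from statistics import mean


def stratified_folds(labels, k, rng):
    """Shuffle each class and deal its examples round-robin into k folds."""
    folds = [[] for _ in range(k)]
    pos = 0
    for y in sorted(set(labels)):
        idx = [i for i, lab in enumerate(labels) if lab == y]
        rng.shuffle(idx)
        for i in idx:
            folds[pos % k].append(i)
            pos += 1
    return folds


def nearest_neighbour(train_x, train_y, x):
    """Stand-in learner (hypothetical choice): 1-nearest neighbour on one feature."""
    j = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[j]


def cv_error(xs, ys, k, rng):
    """Error rate of a single stratified k-fold cross-validation run."""
    errors = 0
    for test in stratified_folds(ys, k, rng):
        held_out = set(test)
        train = [i for i in range(len(xs)) if i not in held_out]
        tx, ty = [xs[i] for i in train], [ys[i] for i in train]
        errors += sum(nearest_neighbour(tx, ty, xs[i]) != ys[i] for i in test)
    return errors / len(xs)


def repeated_cv_error(xs, ys, k=10, repeats=10, seed=0):
    """k-fold CV repeated with fresh folds each time; returns (mean, per-run errors)."""
    rng = random.Random(seed)
    runs = [cv_error(xs, ys, k, rng) for _ in range(repeats)]
    return mean(runs), runs


# Hypothetical data: two overlapping classes on a single numeric feature.
gen = random.Random(1)
xs = [gen.gauss(0, 1) for _ in range(50)] + [gen.gauss(1.5, 1) for _ in range(50)]
ys = ["a"] * 50 + ["b"] * 50
avg, runs = repeated_cv_error(xs, ys)
print(f"10 x 10-fold error: {avg:.3f}, per-run: {[round(r, 3) for r in runs]}")
```

The per-run values typically differ from one another even with stratification, which is exactly the variation the averaging is meant to smooth out.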

Metrics for Performance Evaluation
• Focus on the predictive capability of a model
  • Rather than how long it takes to classify or build models, scalability, etc.
• Confusion Matrix:

                       PREDICTED CLASS
                       Class=Yes   Class=No
ACTUAL   Class=Yes     a           b
CLASS    Class=No      c           d

  a: TP (true positive)
  b: FN (false negative)
  c: FP (false positive)
  d: TN (true negative)

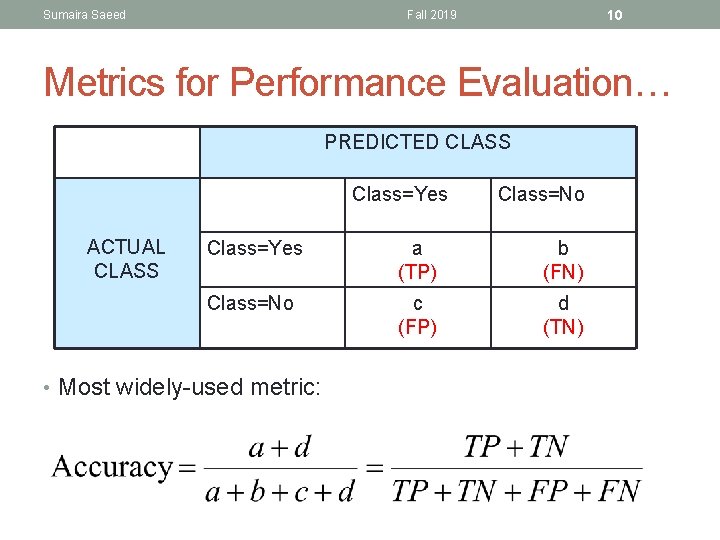

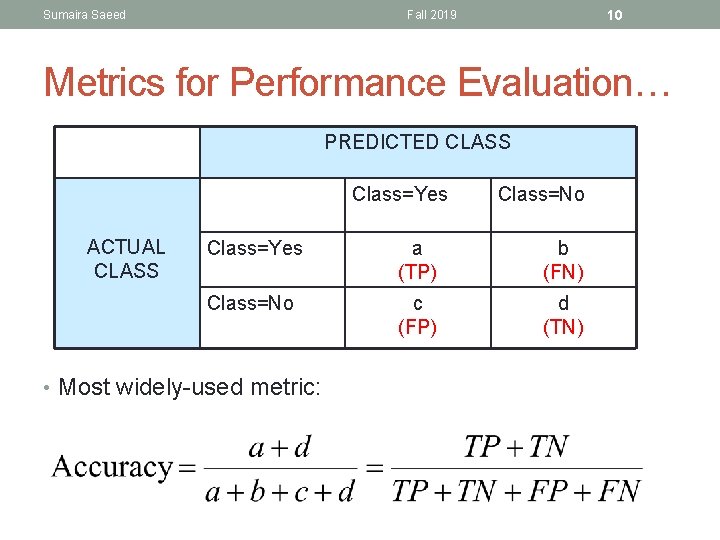
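A small Python sketch that fills the four cells of the confusion matrix from lists of actual and predicted labels, assuming a two-class problem with "Yes" as the positive class. The function name and sample labels are hypothetical.

```python
def confusion_counts(actual, predicted, positive="Yes"):
    """Return (a, b, c, d) = (TP, FN, FP, TN) for a two-class problem."""
    a = b = c = d = 0
    for y, p in zip(actual, predicted):
        if y == positive and p == positive:
            a += 1  # true positive
        elif y == positive:
            b += 1  # false negative: actual Yes, predicted No
        elif p == positive:
            c += 1  # false positive: actual No, predicted Yes
        else:
            d += 1  # true negative
    return a, b, c, d


actual = ["Yes", "Yes", "No", "No", "Yes"]
predicted = ["Yes", "No", "Yes", "No", "Yes"]
print(confusion_counts(actual, predicted))  # (2, 1, 1, 1)
```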

Metrics for Performance Evaluation (Cont’d)

                       PREDICTED CLASS
                       Class=Yes   Class=No
ACTUAL   Class=Yes     a (TP)      b (FN)
CLASS    Class=No      c (FP)      d (TN)

• Most widely used metric: Accuracy = (a + d) / (a + b + c + d) = (TP + TN) / (TP + TN + FP + FN)

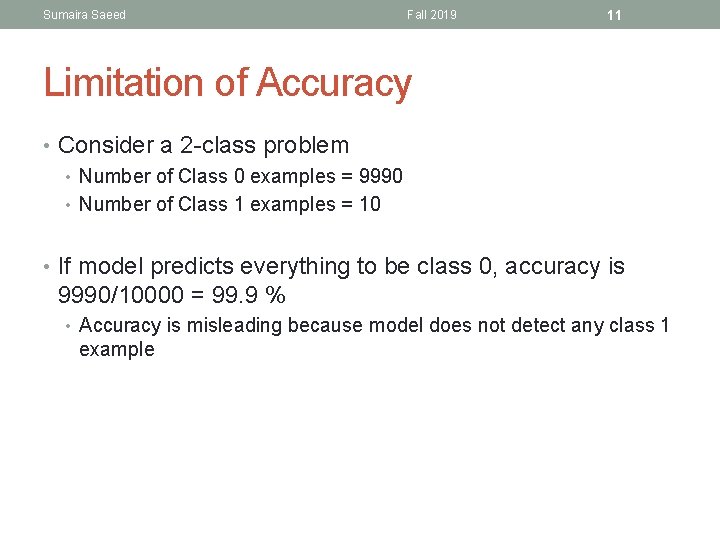

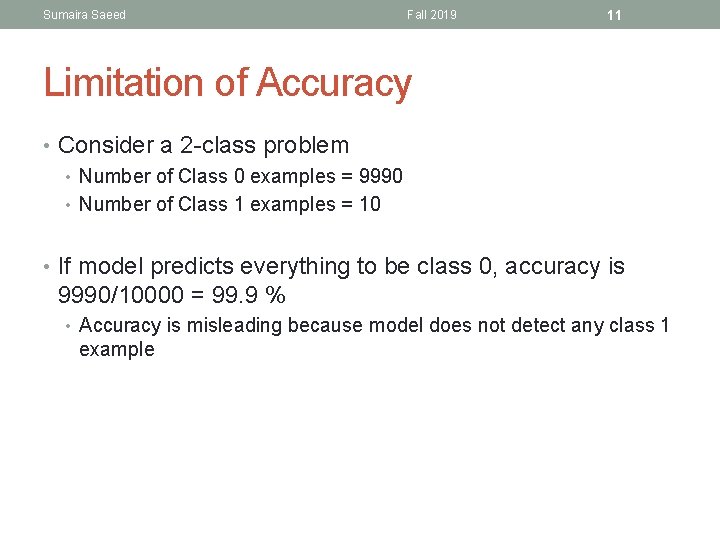
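The accuracy formula, and the error rate as its complement, written as a minimal Python sketch. The function names are hypothetical; the cell names a, b, c, d follow the confusion matrix above, and the sample counts are illustrative.

```python
def accuracy(a, b, c, d):
    """(TP + TN) / (TP + FN + FP + TN): fraction of records classified correctly."""
    return (a + d) / (a + b + c + d)


def error_rate(a, b, c, d):
    """(FN + FP) / total, i.e. 1 - accuracy."""
    return (b + c) / (a + b + c + d)


# Hypothetical counts: a = TP, b = FN, c = FP, d = TN
print(accuracy(10, 5, 1, 14), error_rate(10, 5, 1, 14))  # 0.8 0.2
```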

Limitation of Accuracy
• Consider a 2-class problem
  • Number of Class 0 examples = 9990
  • Number of Class 1 examples = 10
• If the model predicts everything to be class 0, accuracy is 9990/10000 = 99.9%
  • Accuracy is misleading because the model does not detect any class 1 example

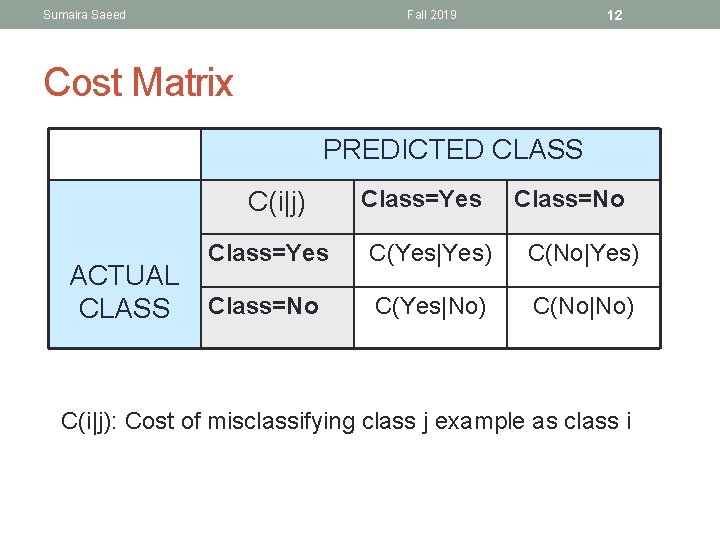

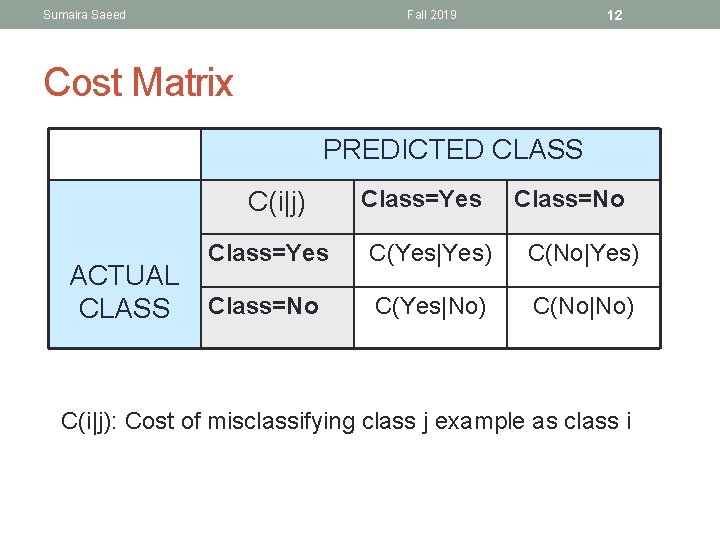
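The arithmetic of this example can be reproduced in a few lines of Python. The "always predict class 0" model is the slide's own scenario; the variable names are illustrative.

```python
# Slide's example: 9990 class-0 records and 10 class-1 records.
actual = [0] * 9990 + [1] * 10
predicted = [0] * len(actual)  # a trivial model that always predicts class 0

accuracy = sum(p == y for p, y in zip(predicted, actual)) / len(actual)
class1_detected = sum(p == 1 and y == 1 for p, y in zip(predicted, actual))
class1_recall = class1_detected / actual.count(1)

print(f"accuracy = {accuracy:.1%}, class-1 examples detected = {class1_detected} of 10")
# accuracy = 99.9%, class-1 examples detected = 0 of 10
```

A near-perfect accuracy coexists with a recall of 0 for the rare class, which is why the later slides turn to cost-sensitive measures.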

Cost Matrix

                       PREDICTED CLASS
C(i|j)                 Class=Yes     Class=No
ACTUAL   Class=Yes     C(Yes|Yes)    C(No|Yes)
CLASS    Class=No      C(Yes|No)     C(No|No)

C(i|j): cost of misclassifying a class j example as class i

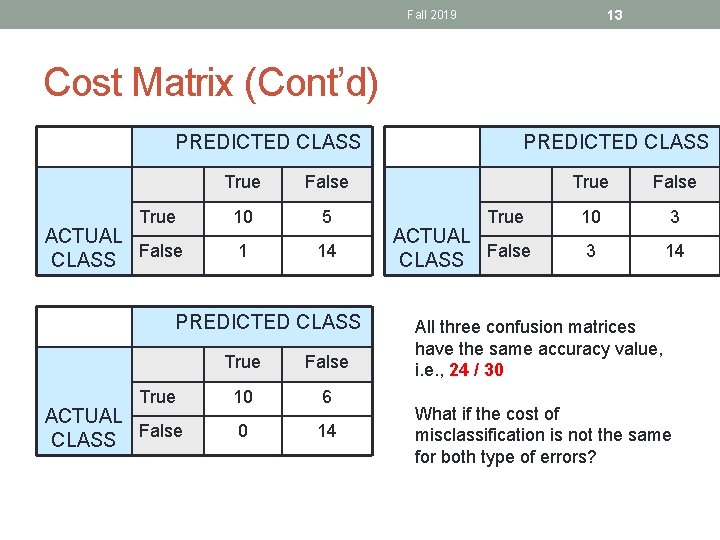

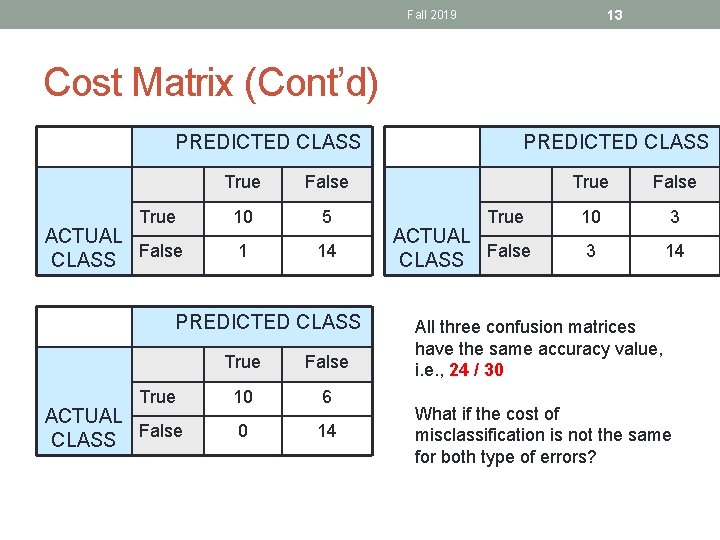
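A Python sketch of how a cost matrix turns confusion-matrix counts into a single total cost: each cell's count is multiplied by its C(i|j) and the products are summed. Both dictionaries are keyed by (predicted class i, actual class j); the cost values and counts below are hypothetical.

```python
# C(i|j): cost of predicting class i for an example whose actual class is j.
# Keys are (predicted i, actual j); the cost values are hypothetical.
COST = {("Yes", "Yes"): 0, ("No", "Yes"): 5,
        ("Yes", "No"): 1, ("No", "No"): 0}


def total_cost(counts, cost=COST):
    """Sum over all cells of (number of examples) x C(i|j)."""
    return sum(n * cost[cell] for cell, n in counts.items())


# counts[(i, j)] = number of actual-class-j examples predicted as class i
counts = {("Yes", "Yes"): 10, ("No", "Yes"): 5, ("Yes", "No"): 1, ("No", "No"): 14}
print(total_cost(counts))  # 26
```

Setting both off-diagonal costs to 1 and the diagonal to 0 makes the total cost equal to the number of errors.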

Cost Matrix (Cont’d)

                  Matrix 1          Matrix 2          Matrix 3
                  PREDICTED CLASS   PREDICTED CLASS   PREDICTED CLASS
                  True    False     True    False     True    False
ACTUAL   True     10      5         10      6         10      3
CLASS    False    1       14        0       14        3       14

All three confusion matrices have the same accuracy value, i.e., 24/30.
What if the cost of misclassification is not the same for both types of errors?

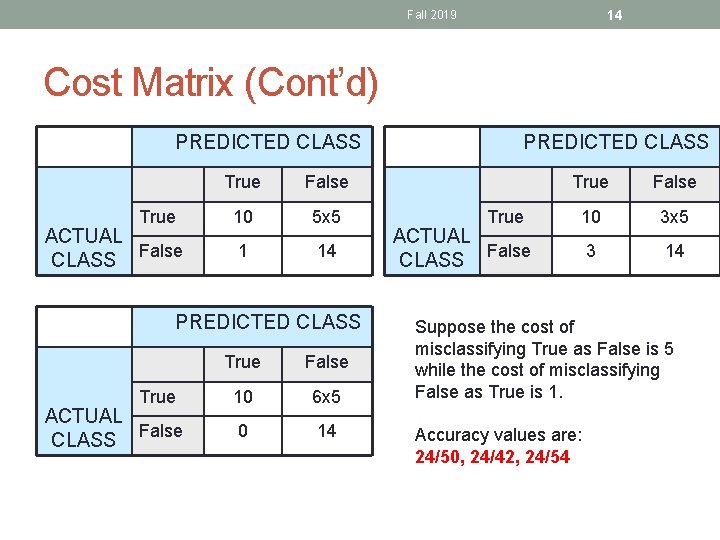

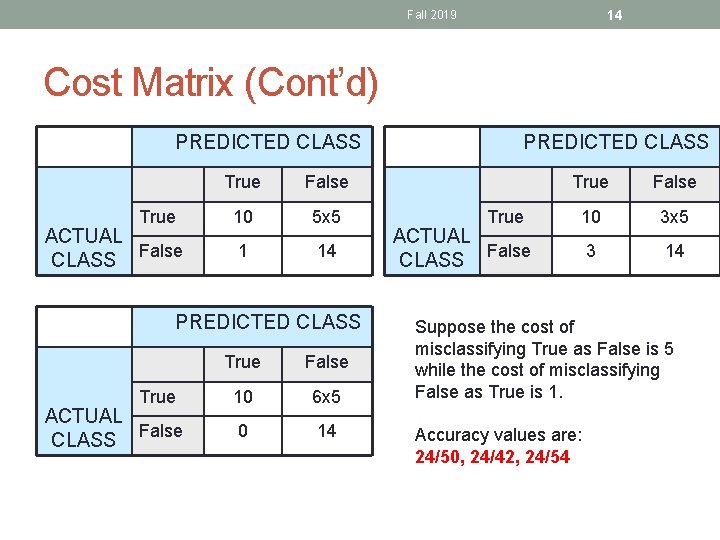
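A Python check of the slide's point: the three matrices share the same accuracy but differ once the two error types carry different costs. The labels M1, M2, M3 are added here, correct predictions are assumed to cost nothing, and the 5:1 cost ratio is the one used on the next slide.

```python
from fractions import Fraction

# (TP, FN, FP, TN) read off the three confusion matrices; "M1".."M3" are labels added here.
matrices = {"M1": (10, 5, 1, 14), "M2": (10, 6, 0, 14), "M3": (10, 3, 3, 14)}


def accuracy(tp, fn, fp, tn):
    return Fraction(tp + tn, tp + fn + fp + tn)


def misclassification_cost(tp, fn, fp, tn, cost_fn, cost_fp):
    """Total cost, assuming correct predictions cost nothing."""
    return fn * cost_fn + fp * cost_fp


for name, m in matrices.items():
    # Equal costs vs. a 5:1 penalty on misclassifying True as False
    print(name, accuracy(*m), misclassification_cost(*m, 1, 1), misclassification_cost(*m, 5, 1))
```

With equal costs all three matrices tie at 6 errors; with the 5:1 costs the totals become 26, 30 and 18.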

Cost Matrix (Cont’d)

                  Matrix 1          Matrix 2          Matrix 3
                  PREDICTED CLASS   PREDICTED CLASS   PREDICTED CLASS
                  True    False     True    False     True    False
ACTUAL   True     10      5 x 5     10      6 x 5     10      3 x 5
CLASS    False    1       14        0       14        3       14

Suppose the cost of misclassifying True as False is 5 while the cost of misclassifying False as True is 1. The accuracy values (correct predictions divided by the total, with each True misclassified as False counted 5 times) are: 24/50 (Matrix 1), 24/54 (Matrix 2), 24/42 (Matrix 3).

Cost Matrix (Cont’d)

                  Matrix 1          Matrix 2          Matrix 3
                  PREDICTED CLASS   PREDICTED CLASS   PREDICTED CLASS
                  True    False     True    False     True    False
ACTUAL   True     10      5 x 4     10      6 x 4     10      3 x 4
CLASS    False    1       14        0       14        3       14

Suppose the cost of misclassifying True as False is 4 while the cost of misclassifying False as True is 1. The accuracy values (correct predictions divided by the total, with each True misclassified as False counted 4 times) are: 24/45 (Matrix 1), 24/48 (Matrix 2), 24/39 (Matrix 3).

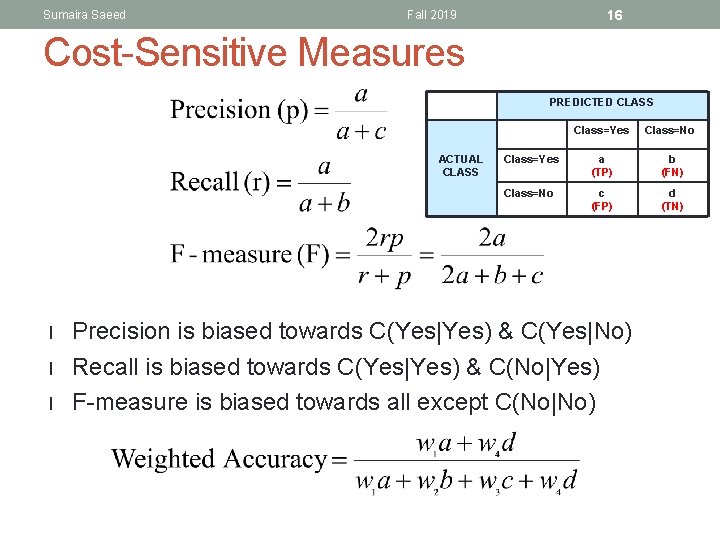

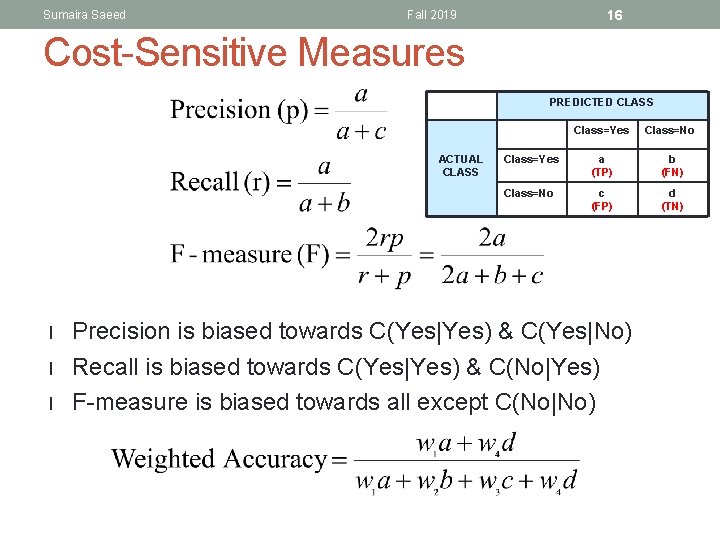
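The cost-weighted accuracy values on these two slides can be recomputed with a short Python sketch, assuming the slides' definition: correct predictions over a total in which each false negative (True misclassified as False) is counted with its cost. The function names and the M1, M2, M3 labels are hypothetical.

```python
from fractions import Fraction

# (TP, FN, FP, TN) for the three matrices; "M1".."M3" are labels added here.
matrices = {"M1": (10, 5, 1, 14), "M2": (10, 6, 0, 14), "M3": (10, 3, 3, 14)}


def weighted_total(tp, fn, fp, tn, fn_weight, fp_weight=1):
    """Total count with each false negative counted fn_weight times."""
    return tp + fn_weight * fn + fp_weight * fp + tn


def cost_weighted_accuracy(tp, fn, fp, tn, fn_weight, fp_weight=1):
    """Correct predictions divided by the weighted total."""
    return Fraction(tp + tn, weighted_total(tp, fn, fp, tn, fn_weight, fp_weight))


for weight in (5, 4):
    for name, m in matrices.items():
        # Print the unreduced fraction so it matches the slide's notation.
        print(weight, name, f"{m[0] + m[3]}/{weighted_total(*m, weight)}")
```

Under either weighting, Matrix 3 (the fewest false negatives) scores best and Matrix 2 worst, even though plain accuracy could not separate them.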

Cost-Sensitive Measures

                       PREDICTED CLASS
                       Class=Yes   Class=No
ACTUAL   Class=Yes     a (TP)      b (FN)
CLASS    Class=No      c (FP)      d (TN)

• Precision (p) = a / (a + c)
• Recall (r) = a / (a + b)
• F-measure (F) = 2rp / (r + p) = 2a / (2a + b + c)
• Precision is biased towards C(Yes|Yes) and C(Yes|No)
• Recall is biased towards C(Yes|Yes) and C(No|Yes)
• F-measure is biased towards all except C(No|No)

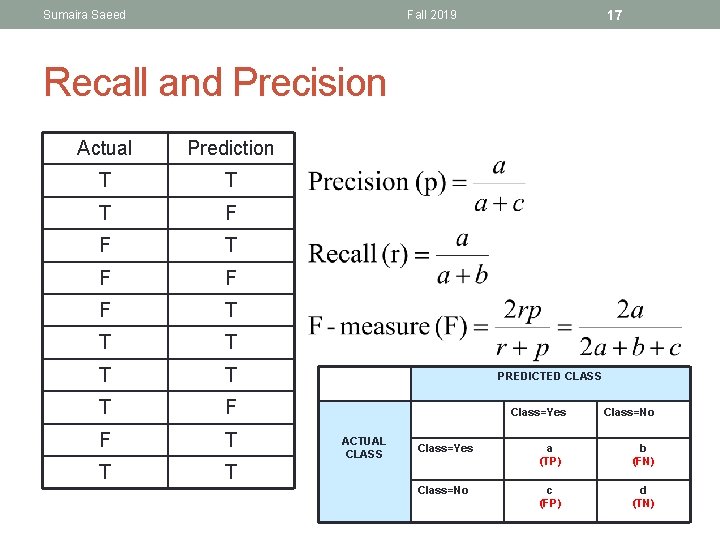

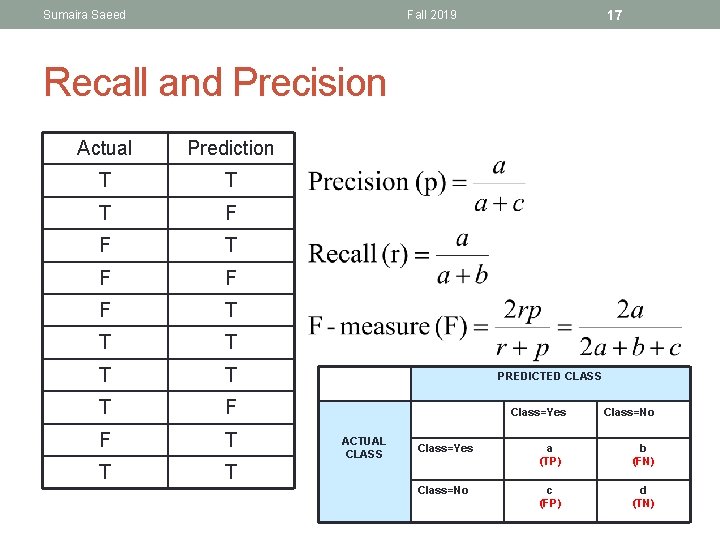
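Precision, recall and F-measure expressed in terms of the confusion-matrix cells, as a Python sketch using exact fractions. The function names are hypothetical, and a > 0 is assumed so no denominator is zero. The final line illustrates why these measures ignore C(No|No): d never enters any of them.

```python
from fractions import Fraction


def precision(a, b, c, d):
    """a / (a + c): share of predicted-Yes records that are actually Yes."""
    return Fraction(a, a + c)


def recall(a, b, c, d):
    """a / (a + b): share of actual-Yes records that are predicted Yes."""
    return Fraction(a, a + b)


def f_measure(a, b, c, d):
    """Harmonic mean 2rp / (r + p), which simplifies to 2a / (2a + b + c)."""
    p, r = precision(a, b, c, d), recall(a, b, c, d)
    return 2 * r * p / (r + p)


# d (true negatives) does not affect any of the three measures:
print(f_measure(4, 2, 3, 0) == f_measure(4, 2, 3, 1000))  # True
```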

Recall and Precision
[Table: Actual vs. Prediction (T/F)]

                       PREDICTED CLASS
                       Class=Yes   Class=No
ACTUAL   Class=Yes     a (TP)      b (FN)
CLASS    Class=No      c (FP)      d (TN)

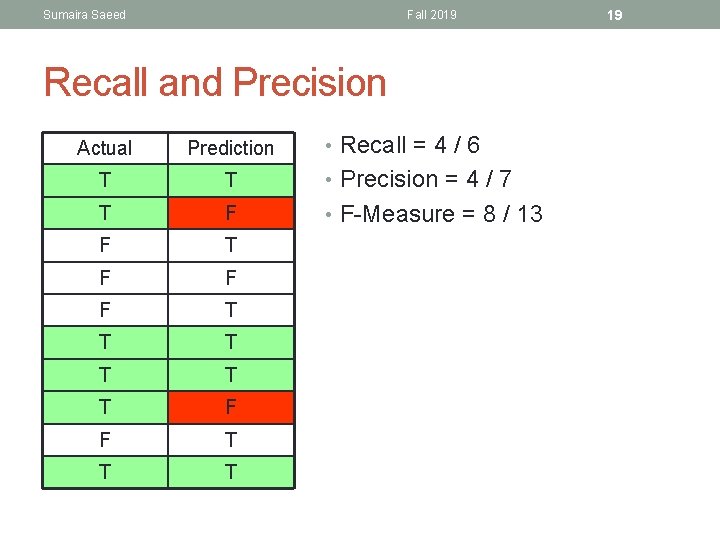

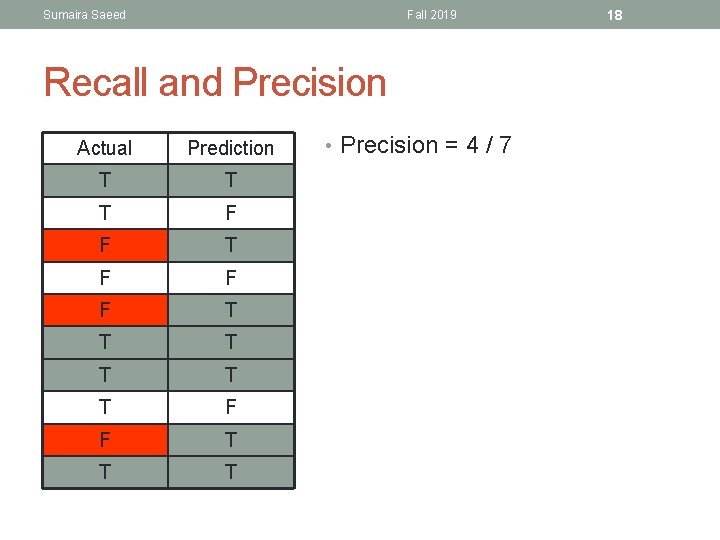

Recall and Precision
[Table: Actual vs. Prediction (T/F)]
• Precision = 4 / 7

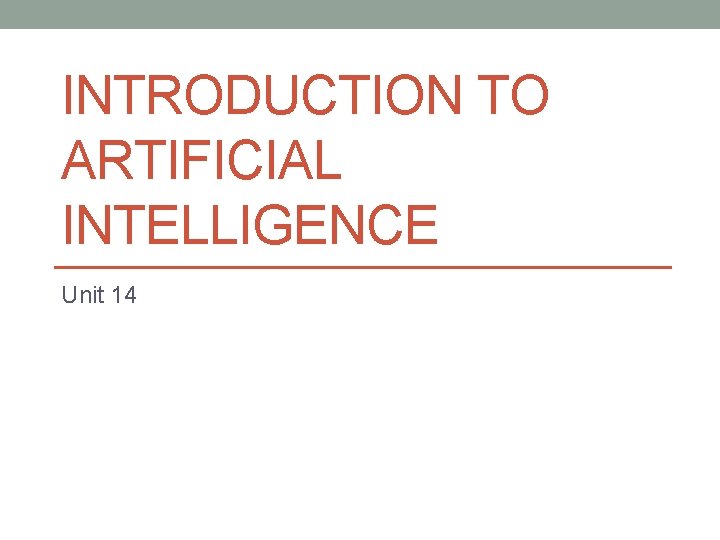

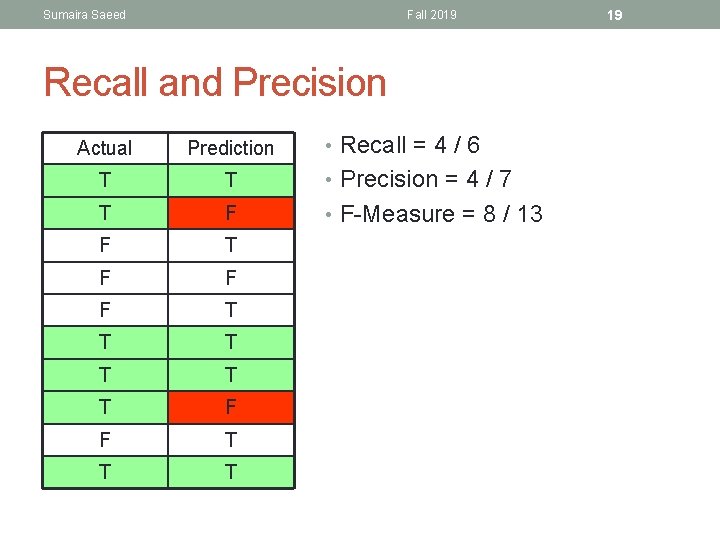

Recall and Precision
[Table: Actual vs. Prediction (T/F)]
• Recall = 4 / 6
• Precision = 4 / 7
• F-Measure = 2rp / (r + p) = 8 / 13
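A Python sketch of this worked example. The original Actual/Prediction table is not recoverable here, so the label lists below are hypothetical, chosen only to reproduce the counts implied by the slide's fractions (TP = 4, FN = 2, FP = 3; the single true negative is arbitrary and does not affect the results).

```python
from fractions import Fraction

# Hypothetical label lists matching the implied counts TP = 4, FN = 2, FP = 3.
actual     = list("TTTTTTFFFF")
prediction = list("TTTTFFTTTF")

tp = sum(a == "T" and p == "T" for a, p in zip(actual, prediction))
fn = sum(a == "T" and p == "F" for a, p in zip(actual, prediction))
fp = sum(a == "F" and p == "T" for a, p in zip(actual, prediction))

recall = Fraction(tp, tp + fn)                  # 4/6 (stored reduced as 2/3)
precision = Fraction(tp, tp + fp)               # 4/7
f_measure = Fraction(2 * tp, 2 * tp + fn + fp)  # 8/13
print(recall, precision, f_measure)             # 2/3 4/7 8/13
```

The shortcut 2a / (2a + b + c) gives the same 8/13 as the harmonic mean 2rp / (r + p).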