Introduction to ANNIE


Diana Maynard, University of Sheffield, March 2004 (http://gate.ac.uk/, http://nlp.shef.ac.uk/)

What is ANNIE?

- ANNIE is a vanilla information extraction system comprising a set of core processing resources (PRs):
  - Tokeniser
  - Sentence Splitter
  - POS tagger
  - Gazetteers
  - Semantic tagger (JAPE transducer)
  - Orthomatcher (orthographic coreference)

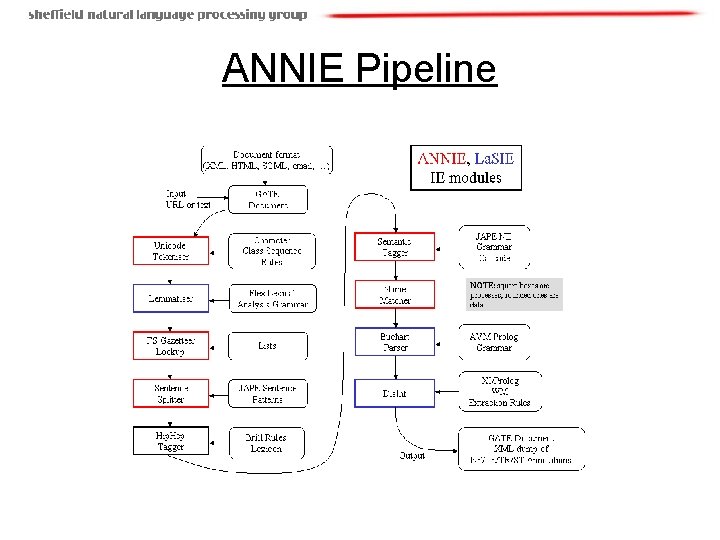

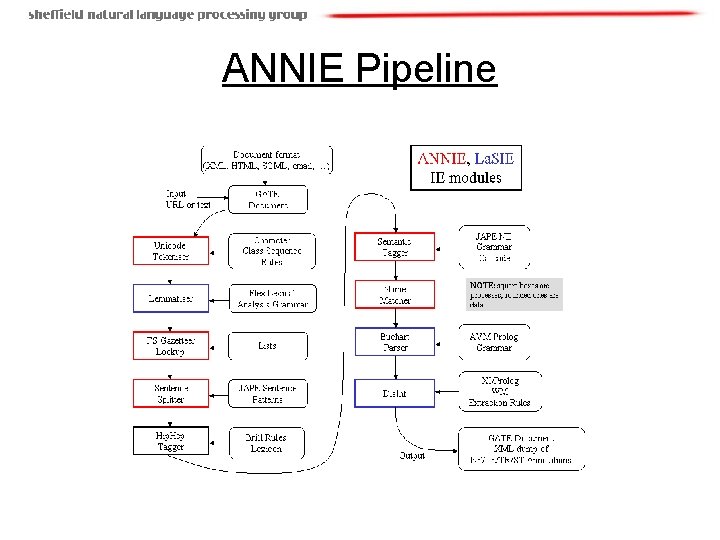

ANNIE Pipeline
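To make the pipeline idea concrete, here is a minimal plain-Java sketch. It is not GATE's API: the `ProcessingResource` interface, the `Annotation` record, `Doc` and the two toy PRs are hypothetical names invented for this illustration. Each PR runs in turn over the same document, and later PRs read the annotations that earlier ones added. In this example, the sentence splitter relies on the tokeniser's `Token` annotations.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for GATE concepts; not the real gate.* classes.
record Annotation(String type, int start, int end) {}

class Doc {
    final String text;
    final List<Annotation> annotations = new ArrayList<>();
    Doc(String text) { this.text = text; }
}

interface ProcessingResource {
    void execute(Doc doc);
}

// Toy tokeniser: one Token annotation per run of non-whitespace characters.
class ToyTokeniser implements ProcessingResource {
    public void execute(Doc doc) {
        int i = 0, n = doc.text.length();
        while (i < n) {
            if (Character.isWhitespace(doc.text.charAt(i))) { i++; continue; }
            int start = i;
            while (i < n && !Character.isWhitespace(doc.text.charAt(i))) i++;
            doc.annotations.add(new Annotation("Token", start, i));
        }
    }
}

// Toy sentence splitter: uses the Token annotations created by the earlier PR and
// closes a Sentence after any token ending in '.', '!' or '?'.
// A trailing sentence without final punctuation is ignored in this toy.
class ToySentenceSplitter implements ProcessingResource {
    public void execute(Doc doc) {
        List<Annotation> tokens = doc.annotations.stream()
                .filter(a -> a.type().equals("Token")).toList();
        int sentenceStart = -1;
        for (Annotation t : tokens) {
            if (sentenceStart < 0) sentenceStart = t.start();
            char last = doc.text.charAt(t.end() - 1);
            if (last == '.' || last == '!' || last == '?') {
                doc.annotations.add(new Annotation("Sentence", sentenceStart, t.end()));
                sentenceStart = -1;
            }
        }
    }
}

class PipelineDemo {
    // Run the PRs in order over the same document, as a pipeline does.
    static Doc run(String text, List<ProcessingResource> prs) {
        Doc doc = new Doc(text);
        for (ProcessingResource pr : prs) pr.execute(doc);
        return doc;
    }

    public static void main(String[] args) {
        Doc doc = run("GATE is free. ANNIE uses it.",
                List.of(new ToyTokeniser(), new ToySentenceSplitter()));
        // prints 6 Token annotations, then 2 Sentence annotations
        doc.annotations.forEach(System.out::println);
    }
}
```

Changing the order of the list would break the splitter, because it relies on Tokens already being present.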

Other Processing Resources

- There are also many additional processing resources that are not part of ANNIE itself but come with the default installation of GATE, e.g.:
  - Gazetteer collector
  - PRs for machine learning
  - Various exporters
  - Annotation set transfer

Creating a new application from ANNIE

- Typically a new application will use most of the core components from ANNIE
- The tokeniser, sentence splitter and orthomatcher are basically language-, domain- and application-independent
- The POS tagger is language-dependent but domain- and application-independent
- The gazetteer lists and JAPE grammars may act as a starting point but will almost certainly need to be modified
- You may also require additional PRs (either existing or new ones)

Modifying gazetteers

- Gazetteers are plain text files containing lists of names
- Each gazetteer set has an index file listing all the lists, plus the features of each list (majorType, minorType and language)
- Lists can be modified either internally using Gaze, or externally in your favourite editor
- Gazetteers can also be mapped to ontologies
- To use Gaze and the ontology editor, you need to download the relevant CREOLE files
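As a rough illustration of how the index file ties each list to its features, the plain-Java sketch below parses index lines of the colon-separated form `file.lst:majorType:minorType`. This is assumed to match the layout of ANNIE's default gazetteer index, which allows an optional fourth language field that this sketch ignores. It then looks up list entries in a token sequence, preferring the longest entry at each position. The lists are held in memory rather than read from disk. The class, method and example list names are hypothetical, and the real GATE gazetteer is implemented differently.

```java
import java.util.*;

class GazetteerSketch {
    // Features attached to every entry of one list, taken from its index line.
    record ListInfo(String majorType, String minorType) {}

    // A match over tokens [startToken, endToken) plus the list's features.
    record Lookup(int startToken, int endToken, String majorType, String minorType) {}

    // Parse "file.lst:majorType:minorType[:language]"; the language field is ignored here.
    static Map<String, ListInfo> parseIndex(List<String> indexLines) {
        Map<String, ListInfo> index = new HashMap<>();
        for (String line : indexLines) {
            String[] parts = line.trim().split(":");
            if (parts.length < 2) continue; // skip blank or malformed lines
            String minor = parts.length > 2 ? parts[2] : "";
            index.put(parts[0], new ListInfo(parts[1], minor));
        }
        return index;
    }

    // Build a phrase -> features table; if a phrase is in two lists, the last one wins.
    static Map<String, ListInfo> buildTable(Map<String, ListInfo> index,
                                            Map<String, List<String>> lists) {
        Map<String, ListInfo> table = new HashMap<>();
        index.forEach((file, info) ->
                lists.getOrDefault(file, List.of()).forEach(entry -> table.put(entry, info)));
        return table;
    }

    // Scan left to right, taking the longest entry (up to maxLen tokens) starting at each token.
    static List<Lookup> annotate(List<String> tokens, Map<String, ListInfo> table, int maxLen) {
        List<Lookup> result = new ArrayList<>();
        int i = 0;
        while (i < tokens.size()) {
            int matchedEnd = -1;
            ListInfo matchedInfo = null;
            for (int j = Math.min(tokens.size(), i + maxLen); j > i; j--) {
                ListInfo info = table.get(String.join(" ", tokens.subList(i, j)));
                if (info != null) { matchedEnd = j; matchedInfo = info; break; }
            }
            if (matchedInfo != null) {
                result.add(new Lookup(i, matchedEnd, matchedInfo.majorType(), matchedInfo.minorType()));
                i = matchedEnd;
            } else {
                i++;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Illustrative list names and contents only.
        Map<String, ListInfo> index = parseIndex(List.of(
                "city.lst:location:city",
                "company_designator.lst:organization:designator"));
        Map<String, List<String>> lists = Map.of(
                "city.lst", List.of("Sheffield", "New York"),
                "company_designator.lst", List.of("Ltd", "Inc"));
        Map<String, ListInfo> table = buildTable(index, lists);
        List<String> tokens = List.of("Acme", "Ltd", "moved", "to", "New", "York");
        // Lookups over "Ltd" (tokens 1-2) and "New York" (tokens 4-6)
        annotate(tokens, table, 3).forEach(System.out::println);
    }
}
```

Editing a list file or its index line changes the features every match receives, which is why the index is usually the first thing to adapt for a new domain.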

JAPE grammars

- A semantic tagger consists of a set of rule-based JAPE grammars run sequentially
- JAPE is a pattern-matching language
- The left-hand side (LHS) of each rule contains the patterns to be matched
- The right-hand side (RHS) contains details of the annotations (and optionally features) to be created
- More complex rules can also be created

Input specifications

- The head of each grammar phase needs to contain certain information:
  - Phase name
  - Inputs
  - Matching style

e.g.

```
Phase: location
Input: Token Lookup Number
Control: appelt
```

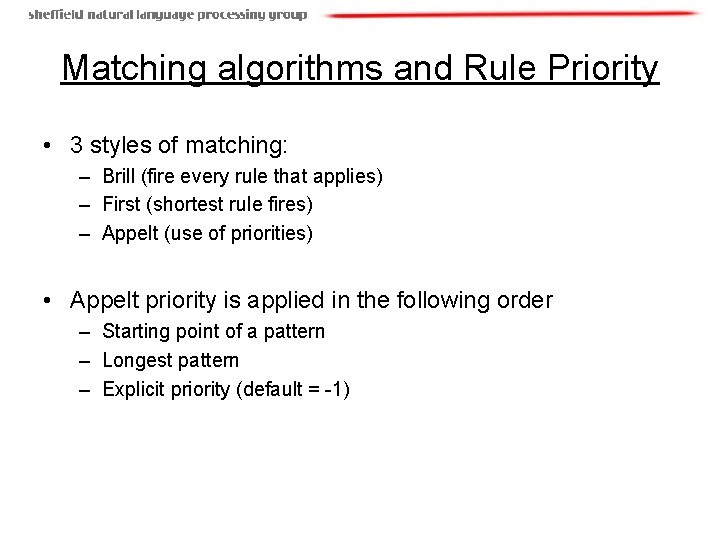

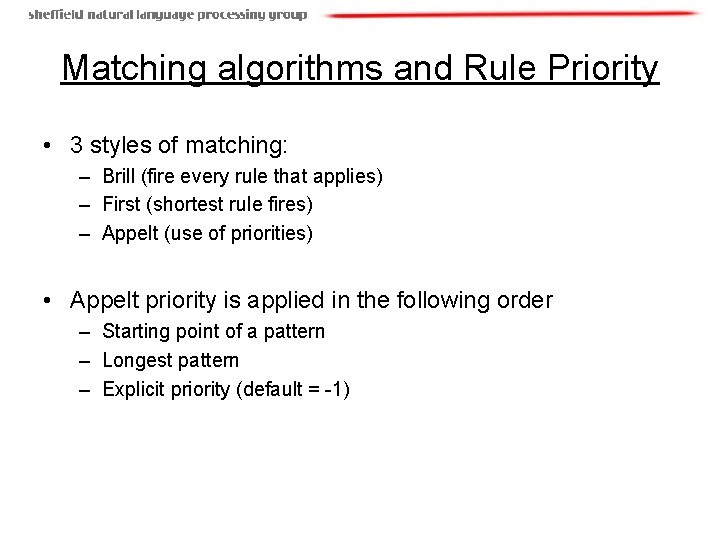

Matching algorithms and Rule Priority

- 3 styles of matching:
  - Brill (fire every rule that applies)
  - First (the first match found fires, so a pattern ending in + or * stops at its shortest match)
  - Appelt (use of priorities)
- Appelt priority is applied in the following order:
  - Starting point of a pattern (earliest wins)
  - Longest pattern
  - Explicit priority (default = -1)
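The Appelt ordering can be expressed as a comparator. The sketch below is a simplification, not GATE's actual matcher. It assumes the candidate matches have already been found, then picks the one that starts earliest, then the longest, then the one with the highest explicit priority. In this sketch, any remaining tie goes to the rule written first in the grammar. The `Match` record and its fields are hypothetical.

```java
import java.util.*;

class AppeltSketch {
    // A candidate match: rule name, position of the rule in the grammar, token span, priority.
    record Match(String rule, int ruleOrder, int start, int end, int priority) {
        int length() { return end - start; }
    }

    static final int DEFAULT_PRIORITY = -1; // used when a rule declares no Priority

    // Earliest start, then longest, then highest priority, then earliest rule in the grammar.
    static final Comparator<Match> APPELT = Comparator
            .comparingInt(Match::start)
            .thenComparing(Comparator.comparingInt(Match::length).reversed())
            .thenComparing(Comparator.comparingInt(Match::priority).reversed())
            .thenComparingInt(Match::ruleOrder);

    // The "best" candidate is the minimum under the Appelt ordering.
    static Match select(List<Match> candidates) {
        return candidates.stream().min(APPELT).orElseThrow();
    }

    public static void main(String[] args) {
        List<Match> candidates = List.of(
                new Match("Location1", 0, 3, 5, DEFAULT_PRIORITY),
                new Match("Company1", 1, 3, 5, 25),
                new Match("Person1", 2, 3, 4, 50));
        // Person1 has the highest priority but is shorter, so length decides first.
        System.out.println(select(candidates).rule()); // prints Company1
    }
}
```

The example shows why priority is only a tie-breaker. A high-priority rule still loses to a longer match that starts at the same point.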

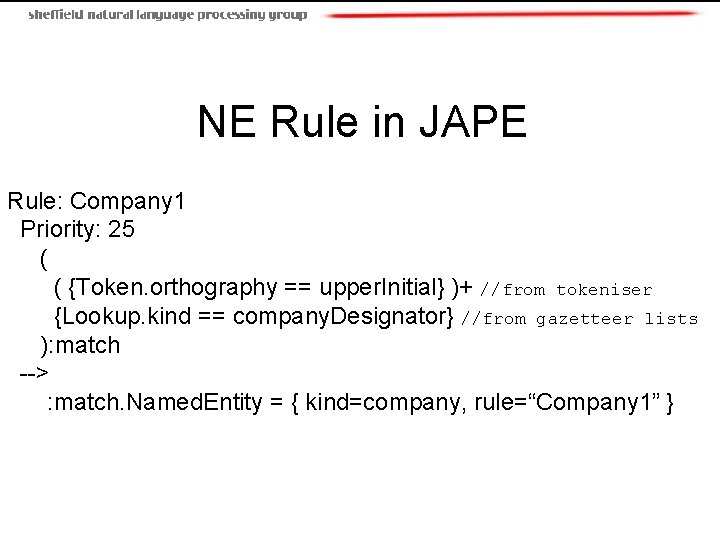

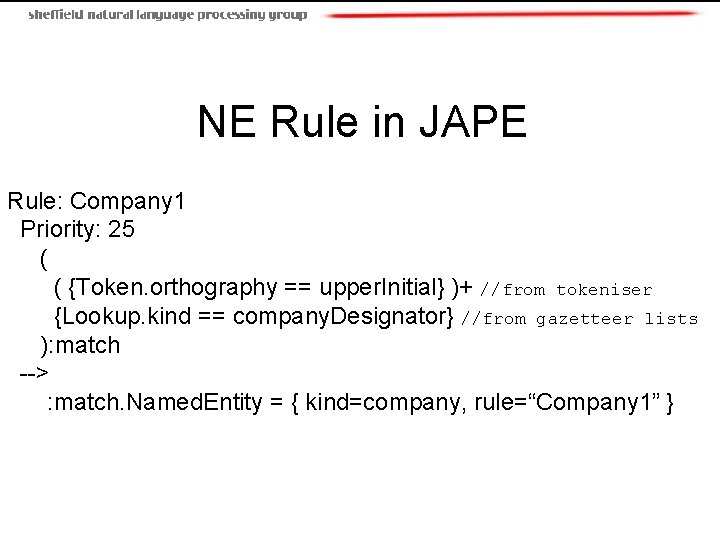

NE Rule in JAPE

```
Rule: Company1
Priority: 25
(
  ( {Token.orthography == upperInitial} )+   // from tokeniser
  {Lookup.kind == companyDesignator}         // from gazetteer lists
):match
-->
:match.NamedEntity = { kind = company, rule = "Company1" }
```
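To show what this rule matches, here is a plain-Java sketch of the same logic (not code generated by JAPE). It looks for one or more tokens with `orthography == upperInitial` followed by a token carrying a `companyDesignator` lookup, and records a `NamedEntity` with `kind=company` over the whole span. The `Tok` record stores the lookup kind directly on the token. This is a simplifying assumption: in GATE, Token and Lookup are separate annotations.

```java
import java.util.*;

class CompanyRuleSketch {
    // Simplified token: string, orthography feature, and the gazetteer lookup kind
    // covering it (null if none). Merging Token and Lookup is an assumption of this sketch.
    record Tok(String string, String orthography, String lookupKind) {}

    record NamedEntity(int start, int end, Map<String, String> features) {}

    static boolean upperInitial(Tok t) { return "upperInitial".equals(t.orthography()); }
    static boolean designator(Tok t) { return "companyDesignator".equals(t.lookupKind()); }

    // ( {upperInitial} )+ {companyDesignator} : match, scanning left to right.
    static List<NamedEntity> apply(List<Tok> toks) {
        List<NamedEntity> out = new ArrayList<>();
        int i = 0;
        while (i < toks.size()) {
            // Extend over the run of upperInitial tokens starting at i.
            int runEnd = i;
            while (runEnd < toks.size() && upperInitial(toks.get(runEnd))) runEnd++;
            // Longest match: the rightmost designator preceded only by upperInitial tokens
            // (at least one), so a capitalised designator like "Ltd" can end the match.
            int designatorAt = -1;
            for (int j = Math.min(runEnd, toks.size() - 1); j > i; j--) {
                if (designator(toks.get(j))) { designatorAt = j; break; }
            }
            if (designatorAt >= 0) {
                out.add(new NamedEntity(i, designatorAt + 1,
                        Map.of("kind", "company", "rule", "Company1")));
                i = designatorAt + 1; // matched tokens are consumed
            } else {
                i++;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Tok> toks = List.of(
                new Tok("shares", "lowercase", null),
                new Tok("in", "lowercase", null),
                new Tok("Acme", "upperInitial", null),
                new Tok("Ltd", "upperInitial", "companyDesignator"),
                new Tok("rose", "lowercase", null));
        for (NamedEntity ne : apply(toks)) {
            System.out.println(ne.start() + "-" + ne.end()); // prints 2-4 ("Acme Ltd")
        }
    }
}
```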

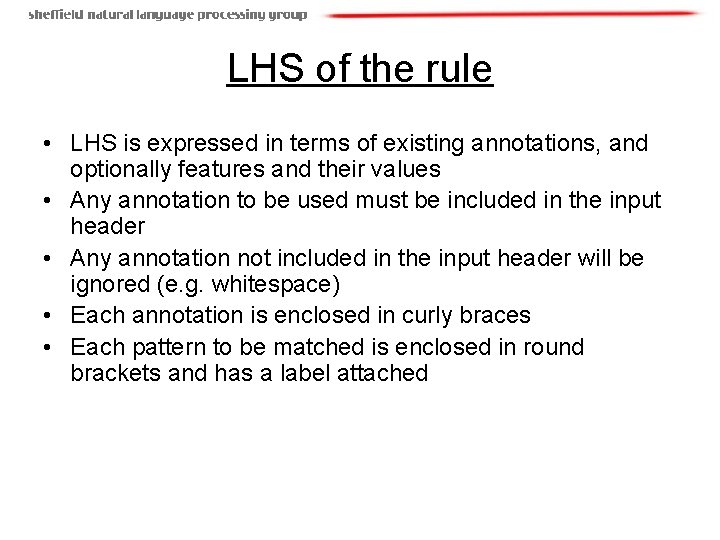

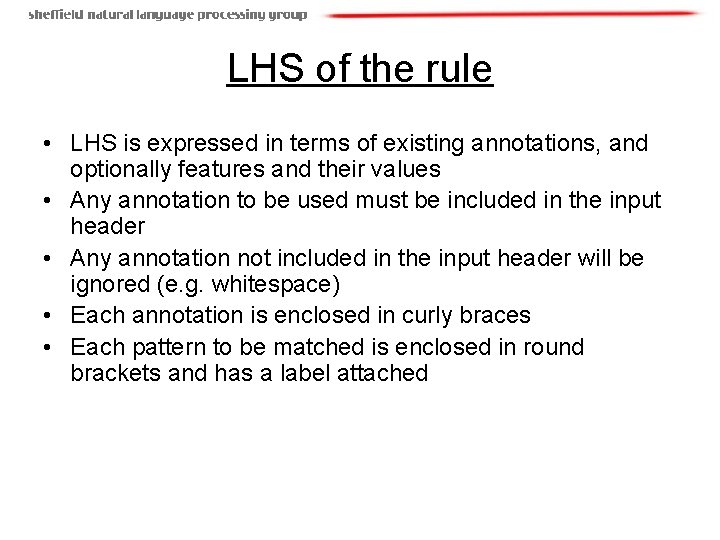

LHS of the rule

- The LHS is expressed in terms of existing annotations, and optionally features and their values
- Any annotation to be used must be included in the input header
- Any annotation not included in the input header will be ignored (e.g. whitespace)
- Each annotation is enclosed in curly braces
- Each pattern to be matched is enclosed in round brackets and has a label attached
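One way to picture the Input header is as a filter applied before matching. In this hypothetical plain-Java sketch, the pattern `{Token} {Token}` is tested against the annotation sequence that remains after filtering. With `Input: Token`, the intervening `SpaceToken` is invisible, so the two words count as adjacent. `SpaceToken` is the whitespace annotation type produced by ANNIE's tokeniser. The class and method names are illustrative, not GATE's API.

```java
import java.util.*;
import java.util.stream.Collectors;

class InputFilterSketch {
    record Ann(String type, int start, int end) {}

    // Keep only annotations whose type is listed in the phase's Input header, in text order.
    static List<Ann> visible(List<Ann> all, Set<String> inputTypes) {
        return all.stream()
                .filter(a -> inputTypes.contains(a.type()))
                .sorted(Comparator.comparingInt(Ann::start))
                .collect(Collectors.toList());
    }

    // Does the pattern {Token} {Token} match at the first visible annotation?
    static boolean twoTokensAtStart(List<Ann> all, Set<String> inputTypes) {
        List<Ann> seq = visible(all, inputTypes);
        return seq.size() >= 2
                && seq.get(0).type().equals("Token")
                && seq.get(1).type().equals("Token");
    }

    public static void main(String[] args) {
        // "New York" as Token, SpaceToken, Token
        List<Ann> anns = List.of(
                new Ann("Token", 0, 3), new Ann("SpaceToken", 3, 4), new Ann("Token", 4, 8));
        System.out.println(twoTokensAtStart(anns, Set.of("Token")));               // true
        System.out.println(twoTokensAtStart(anns, Set.of("Token", "SpaceToken"))); // false
    }
}
```

Adding a type to the Input header can therefore stop an existing rule from matching, because the new annotations now sit between elements the rule expects to be adjacent.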

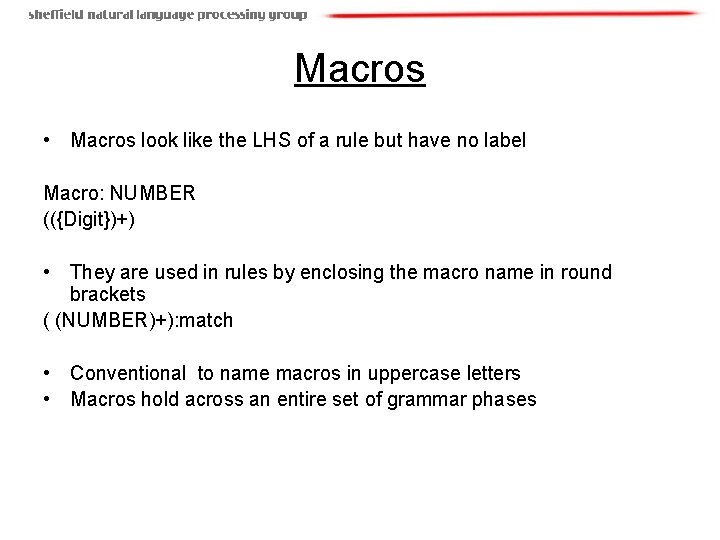

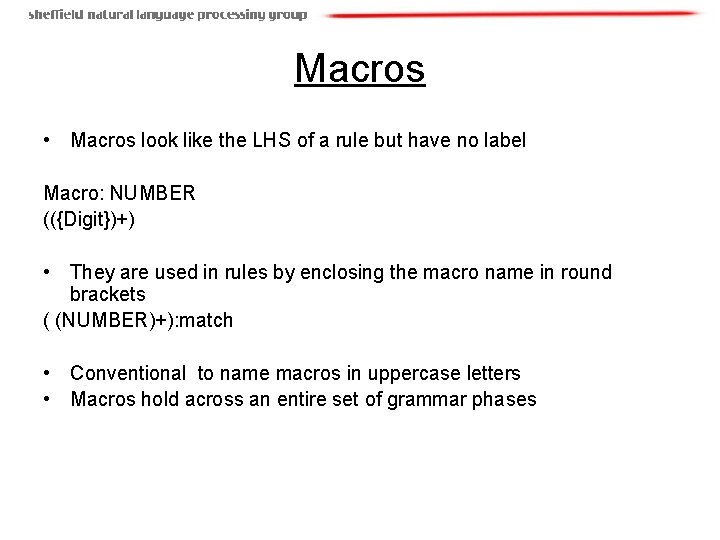

Macros

- Macros look like the LHS of a rule but have no label:

```
Macro: NUMBER
(({Digit})+)
```

- They are used in rules by enclosing the macro name in round brackets:

```
((NUMBER)+):match
```

- It is conventional to name macros in uppercase letters
- Macros hold across an entire set of grammar phases
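Conceptually, a macro is a named fragment substituted wherever its name appears, so changing the macro changes every rule that uses it. The hypothetical Java sketch below shows that idea by analogy, using regular expressions over plain text rather than JAPE patterns over annotations. The `expand` helper and the macro table are illustrative only.

```java
import java.util.*;
import java.util.regex.*;

class MacroSketch {
    // Replace each "(NAME)" whose NAME is a defined macro with the macro body,
    // wrapped in a non-capturing group; unknown names are left unchanged.
    static String expand(String pattern, Map<String, String> macros) {
        Matcher m = Pattern.compile("\\(([A-Z_]+)\\)").matcher(pattern);
        StringBuilder sb = new StringBuilder();
        while (m.find()) {
            String body = macros.get(m.group(1));
            String replacement = body != null ? "(?:" + body + ")" : m.group();
            m.appendReplacement(sb, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(sb);
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> macros = Map.of("NUMBER", "[0-9]+");
        // A "decimal" rule reusing NUMBER twice.
        String decimal = expand("(NUMBER)\\.(NUMBER)", macros);
        System.out.println(decimal);                          // (?:[0-9]+)\.(?:[0-9]+)
        System.out.println(Pattern.matches(decimal, "3.14")); // true
    }
}
```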

Contextual information

- Contextual information can be specified in the same way, but has no label
- Contextual information will be consumed by the rule

```
({Annotation1})
({Annotation2}):match
({Annotation3})
```
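The effect of consuming context shows up in a small hypothetical sketch over a sequence of annotation types. The rule `({A}) ({B}):match ({C})` labels only the `B`, but matching resumes after the `C`. As a result, that `C` cannot take part in another match in the same phase. The letters and the `applyRule` helper are illustrative, not JAPE internals.

```java
import java.util.*;

class ContextSketch {
    // Apply ({a}) ({b}):match ({c}) left to right over a sequence of annotation types.
    // Returns the indices bound to the "match" label.
    static List<Integer> applyRule(List<String> types, String a, String b, String c) {
        List<Integer> labelled = new ArrayList<>();
        int i = 0;
        while (i + 2 < types.size()) {
            if (types.get(i).equals(a) && types.get(i + 1).equals(b) && types.get(i + 2).equals(c)) {
                labelled.add(i + 1); // only the labelled part gets an annotation
                i += 3;              // but the left and right context are consumed too
            } else {
                i++;
            }
        }
        return labelled;
    }

    public static void main(String[] args) {
        // A B A B A: the middle A is the right context of the first match,
        // so it cannot also be the left context of a second match.
        System.out.println(applyRule(List.of("A", "B", "A", "B", "A"), "A", "B", "A")); // [1]
    }
}
```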

RHS of the rule

- The LHS and RHS are separated by `-->`
- The label must match the one used on the LHS
- The annotation to be created follows the label

```
(Annotation1):match
-->
:match.NE = {feature1 = value1, feature2 = value2}
```

Using phases

- Grammars usually consist of several phases, run sequentially
- Within a single phase, only one rule can fire over a given stretch of text (unless the Brill style is used)
- Temporary annotations may be created in early phases and used as input for later phases
- Annotations from earlier phases may need to be combined or modified
- A definition phase (conventionally called main.jape) lists the phases to be used, in order
- Only the definition phase needs to be loaded
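The flow between phases can be sketched in plain Java, with hypothetical phase functions standing in for grammar files. In this sketch:

- A first phase creates temporary `TempNumber` annotations.
- A second phase turns a `$` token followed by a `TempNumber` into `Money`.
- A final phase deletes the temporary annotations.

The ordered `PHASES` list plays the role of the definition phase. None of these names are GATE's API.

```java
import java.util.*;
import java.util.function.Consumer;

class PhasesSketch {
    record Ann(String type, int start, int end) {}

    static class Doc {
        final List<String> tokens;
        final List<Ann> anns = new ArrayList<>();
        Doc(List<String> tokens) { this.tokens = tokens; }
        boolean has(String type, int start) {
            return anns.stream().anyMatch(a -> a.type().equals(type) && a.start() == start);
        }
    }

    // Phase 1: mark every all-digit token with a temporary annotation.
    static final Consumer<Doc> NUMBERS = doc -> {
        for (int i = 0; i < doc.tokens.size(); i++)
            if (doc.tokens.get(i).matches("[0-9]+")) doc.anns.add(new Ann("TempNumber", i, i + 1));
    };

    // Phase 2: uses the output of phase 1 as its input.
    static final Consumer<Doc> MONEY = doc -> {
        for (int i = 0; i + 1 < doc.tokens.size(); i++)
            if (doc.tokens.get(i).equals("$") && doc.has("TempNumber", i + 1))
                doc.anns.add(new Ann("Money", i, i + 2));
    };

    // Phase 3: clean up the temporary annotations.
    static final Consumer<Doc> CLEANUP = doc -> doc.anns.removeIf(a -> a.type().startsWith("Temp"));

    // The ordered list plays the role of the definition phase (main.jape).
    static final List<Consumer<Doc>> PHASES = List.of(NUMBERS, MONEY, CLEANUP);

    static Doc run(List<String> tokens) {
        Doc doc = new Doc(tokens);
        PHASES.forEach(phase -> phase.accept(doc));
        return doc;
    }

    public static void main(String[] args) {
        // A single Money annotation over tokens 1-3; the TempNumber on "30" is removed.
        System.out.println(run(List.of("costs", "$", "25", "or", "30")).anns);
    }
}
```

Because MONEY depends on annotations that only NUMBERS creates, reordering the list would silently produce no Money annotations. The order in the definition phase is part of the grammar's logic.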

More complex JAPE rules

- Any Java code can be used on the RHS of a rule
- This is useful for e.g. feature percolation, ontology population, accessing information not readily available, comparing feature values, deleting existing annotations, etc.
- There are examples of these in the user guide and in the ANNIE NE grammars
- Most JAPE rules end up being complex!

Using JAPE for other tasks

- JAPE grammars are not just useful for NE annotation
- They can be a quick and easy way of performing any kind of task where patterns can be easily recognised and a finite-state approach is possible, e.g. transforming one style of markup into another, or deriving features for the learning algorithms

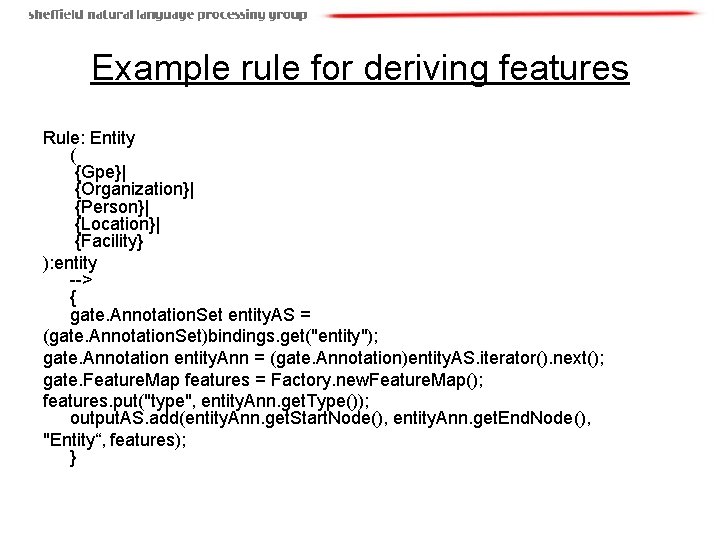

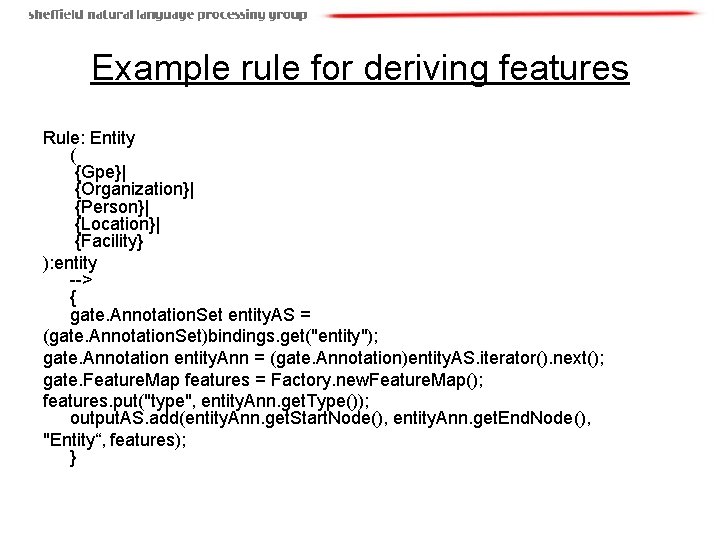

Example rule for deriving features

```
Rule: Entity
(
  {Gpe} | {Organization} | {Person} | {Location} | {Facility}
):entity
-->
{
  // the annotations bound to the "entity" label (exactly one for this disjunction)
  gate.AnnotationSet entityAS = (gate.AnnotationSet) bindings.get("entity");
  gate.Annotation entityAnn = (gate.Annotation) entityAS.iterator().next();
  // record the original annotation type as a feature
  gate.FeatureMap features = Factory.newFeatureMap();
  features.put("type", entityAnn.getType());
  // create a new Entity annotation over the same span in the output set
  outputAS.add(entityAnn.getStartNode(), entityAnn.getEndNode(), "Entity", features);
}
```

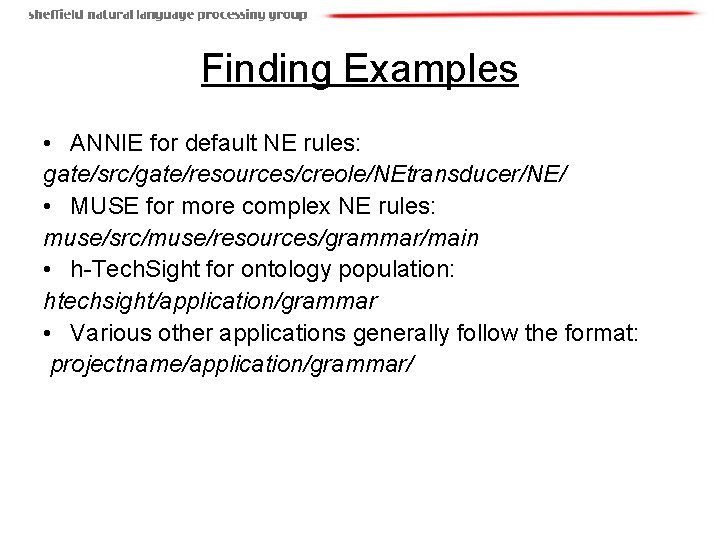

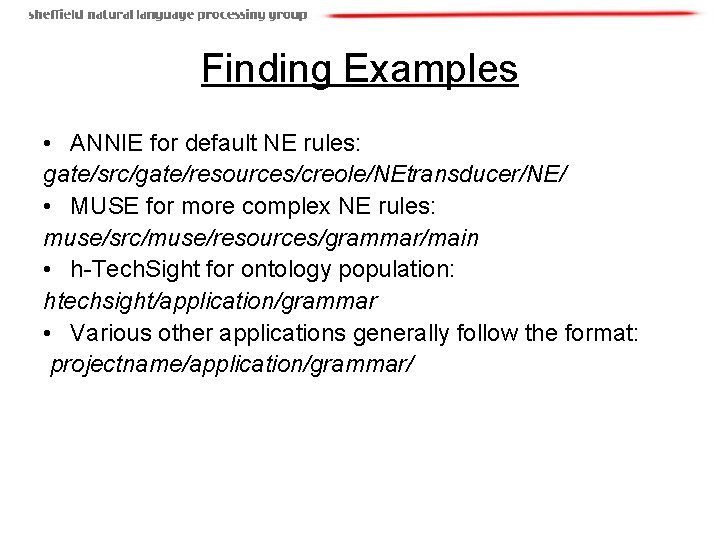

Finding Examples

- ANNIE for default NE rules: gate/src/gate/resources/creole/NEtransducer/NE/
- MUSE for more complex NE rules: muse/src/muse/resources/grammar/main
- h-TechSight for ontology population: htechsight/application/grammar
- Various other applications generally follow the format: projectname/application/grammar/

Conclusion

- This talk: http://gate.ac.uk/sale/talks/annie-tutorial.ppt
- More information: http://gate.ac.uk/