GATE, Human Language and Machine Learning


http://gate.ac.uk/, http://nlp.shef.ac.uk/

Hamish Cunningham, Valentin Tablan, Kalina Bontcheva, Diana Maynard, 9th July 2003

1. The Knowledge Economy and Human Language Technology
2. GATE: a General Architecture for Text Engineering
3. GATE, Information Extraction and Machine Learning

1. The Knowledge Economy and Human Language

Gartner, December 2002:

- taxonomic and hierarchical knowledge mapping and indexing will be prevalent in almost all information-rich applications
- through 2012, more than 95% of human-to-computer information input will involve textual language

A contradiction: formal knowledge in semantics-based systems vs. ambiguous, informal natural language.

The challenge: to reconcile these two opposing tendencies.

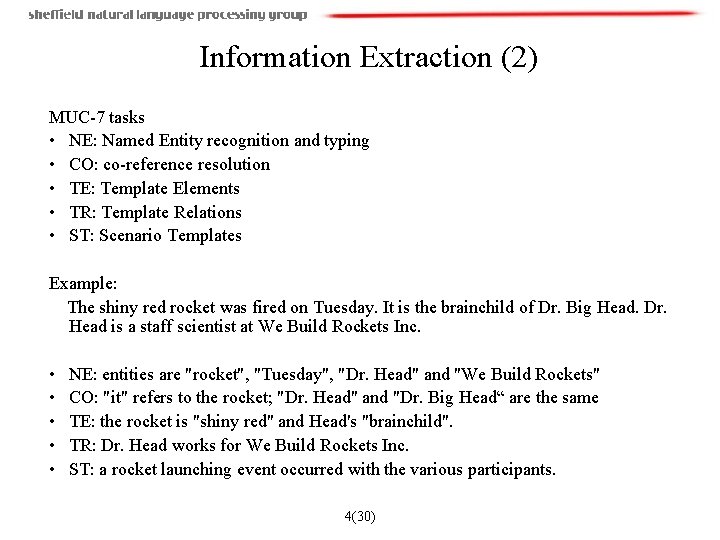

Information Extraction (1): from text to structured data

Two trends in the early 1990s:

- NLU: too difficult! Restrict the task and increase the performance
- Quantitative measurement (MUC, the Message Understanding Conference; ACE, Automatic Content Extraction; TREC, the Text REtrieval Conference; ...) allows good estimation of accuracy

Types of extraction:

- Identify named entities (domain independent):
  - Persons
  - Dates
  - Numbers
  - Organizations
- Identify domain-specific events and terms; e.g., if we're processing football:
  - Relations: which team a player plays for
  - Events: goal, foul, etc.

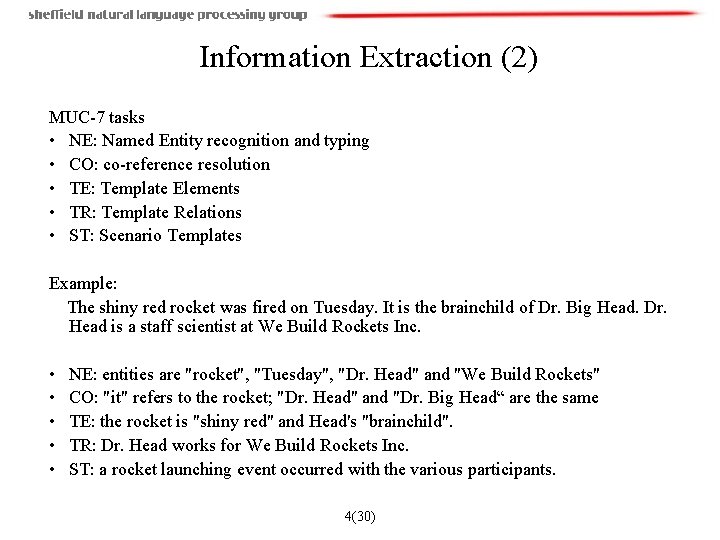

Information Extraction (2)

MUC-7 tasks:

- NE: Named Entity recognition and typing
- CO: co-reference resolution
- TE: Template Elements
- TR: Template Relations
- ST: Scenario Templates

Example: "The shiny red rocket was fired on Tuesday. It is the brainchild of Dr. Big Head. Dr. Head is a staff scientist at We Build Rockets Inc."

- NE: the entities are "rocket", "Tuesday", "Dr. Head" and "We Build Rockets"
- CO: "it" refers to the rocket; "Dr. Head" and "Dr. Big Head" are the same person
- TE: the rocket is "shiny red" and is Head's "brainchild"
- TR: Dr. Head works for We Build Rockets Inc.
- ST: a rocket-launching event occurred with the various participants
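At its crudest, the NE task above can be approximated by looking names up in a list (a gazetteer). The Java sketch below is a toy that assumes a hand-written `GAZETTEER` map and longest-match scanning with a word-boundary check; `GazetteerDemo` and `Entity` are hypothetical names, and real NE recognisers (GATE's included) add tokenisation, grammar rules and disambiguation on top of list lookup.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// One extracted entity: character offsets into the text plus a type label.
record Entity(int start, int end, String type, String text) {}

final class GazetteerDemo {
    // Hypothetical hand-written gazetteer: surface string -> entity type.
    static final Map<String, String> GAZETTEER = Map.of(
            "Tuesday", "Date",
            "Dr. Big Head", "Person",
            "Dr. Head", "Person",
            "We Build Rockets Inc.", "Organization");

    // True if [start, end) is not glued to letters or digits on either side.
    static boolean atWordBoundary(String text, int start, int end) {
        boolean left = start == 0 || !Character.isLetterOrDigit(text.charAt(start - 1));
        boolean right = end == text.length() || !Character.isLetterOrDigit(text.charAt(end));
        return left && right;
    }

    // Scan left to right, taking the longest gazetteer entry that matches at each position.
    static List<Entity> tag(String text) {
        List<Entity> found = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            String best = null;
            for (String entry : GAZETTEER.keySet()) {
                boolean matches = text.startsWith(entry, i)
                        && atWordBoundary(text, i, i + entry.length());
                if (matches && (best == null || entry.length() > best.length())) {
                    best = entry;
                }
            }
            if (best != null) {
                found.add(new Entity(i, i + best.length(), GAZETTEER.get(best), best));
                i += best.length();
            } else {
                i++;
            }
        }
        return found;
    }

    public static void main(String[] args) {
        String text = "The shiny red rocket was fired on Tuesday. It is the brainchild of Dr. Big Head. "
                + "Dr. Head is a staff scientist at We Build Rockets Inc.";
        for (Entity e : tag(text)) {
            System.out.println(e.type() + ": " + e.text());
        }
    }
}
```

On the example text it finds "Tuesday", "Dr. Big Head", "Dr. Head" and "We Build Rockets Inc.". It cannot find "rocket", and it cannot tell that the two "Dr. ... Head" mentions co-refer, which is why CO, TE, TR and ST need more than list lookup.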

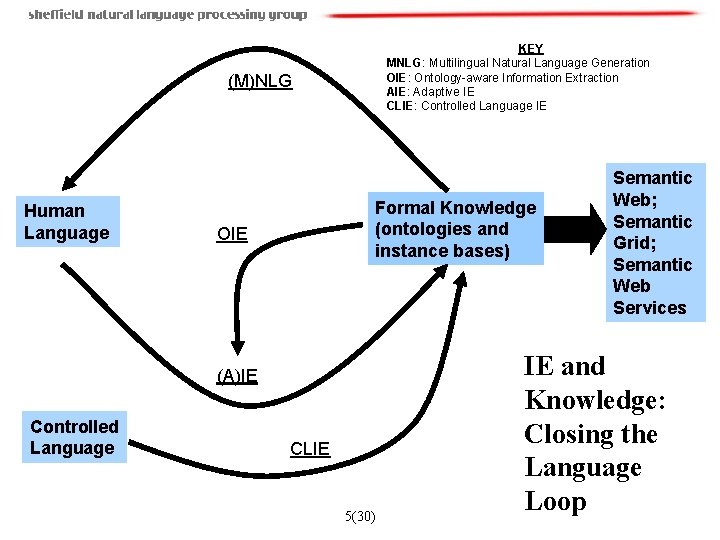

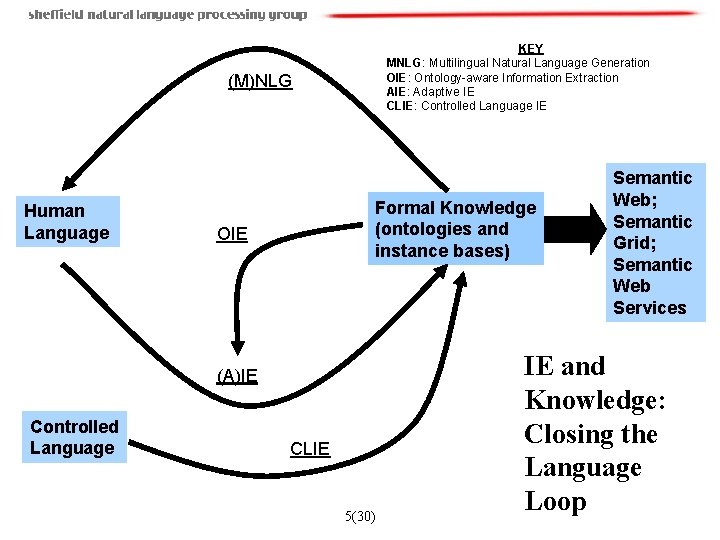

IE and Knowledge: Closing the Language Loop

Diagram elements: Human Language; Formal Knowledge (ontologies and instance bases); Controlled Language; (M)NLG; OIE; (A)IE; CLIE; Semantic Web, Semantic Grid, Semantic Web Services.

Key:

- MNLG: Multilingual Natural Language Generation
- OIE: Ontology-aware Information Extraction
- AIE: Adaptive IE
- CLIE: Controlled Language IE

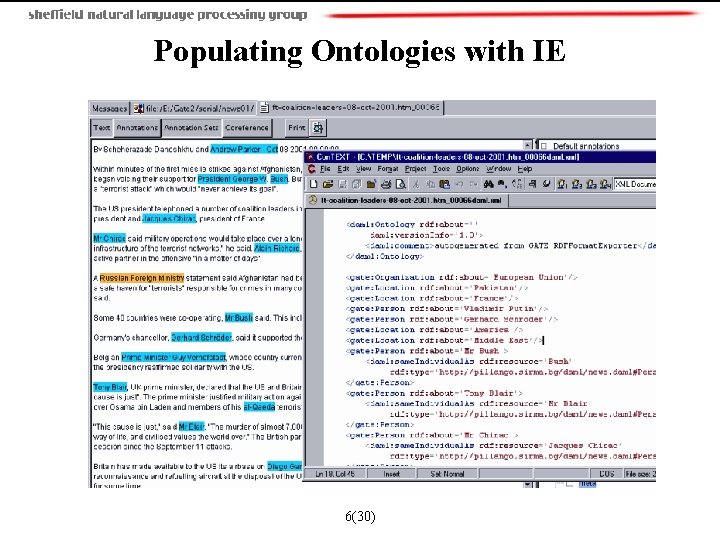

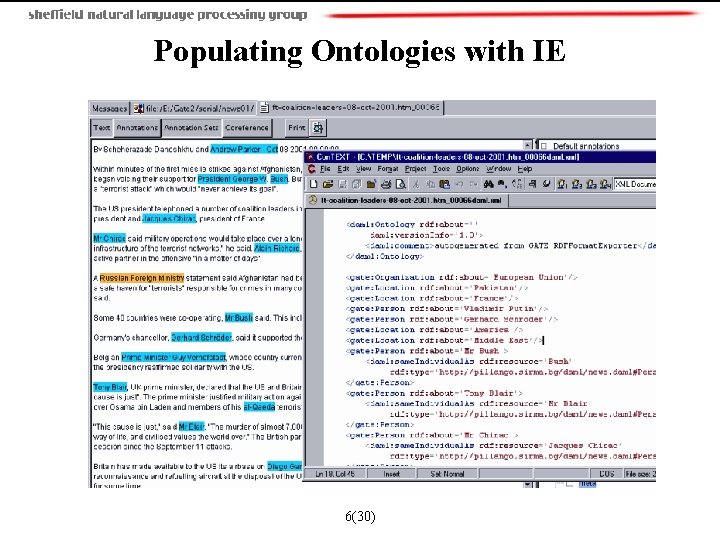

Populating Ontologies with IE

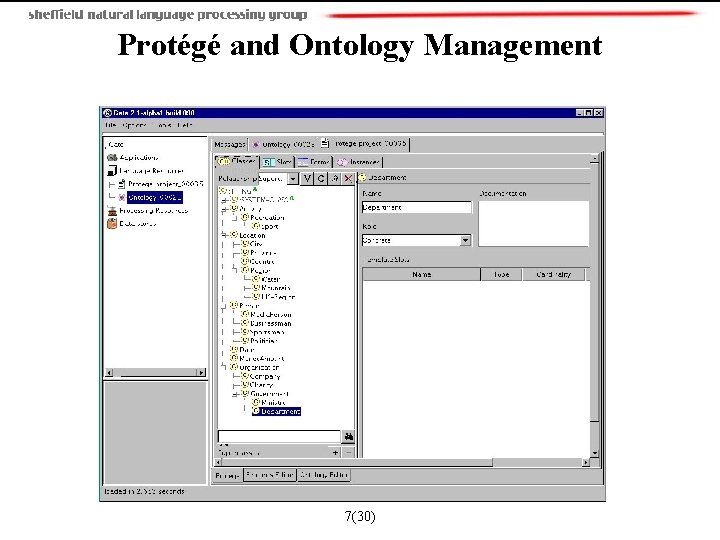

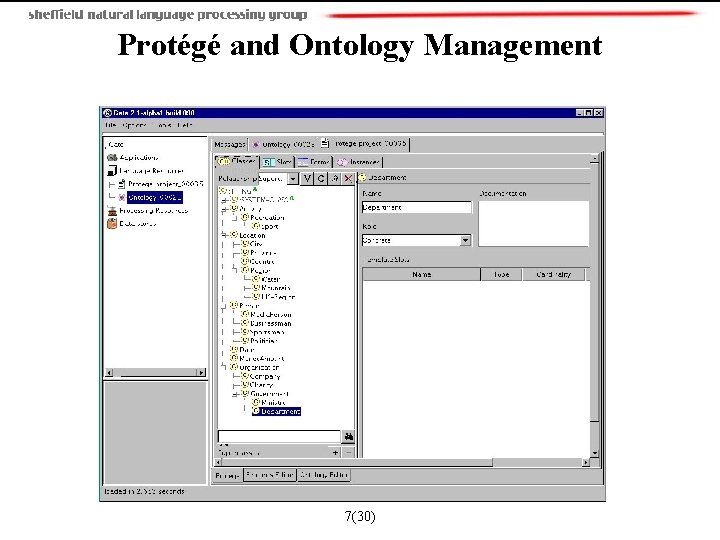

Protégé and Ontology Management

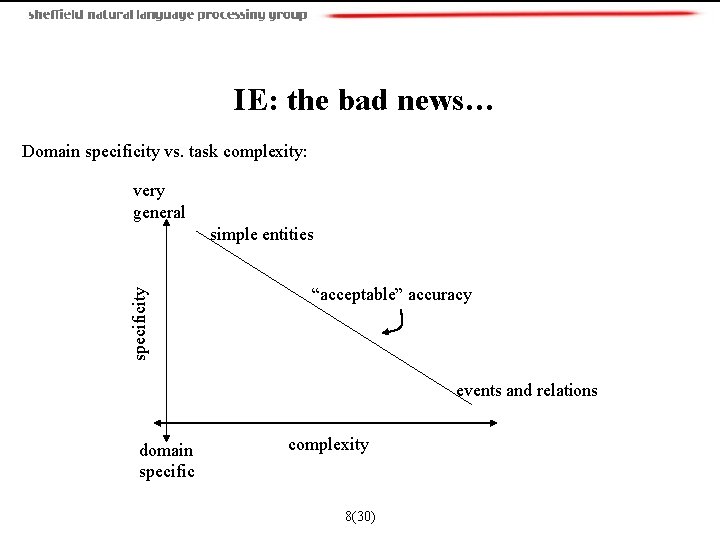

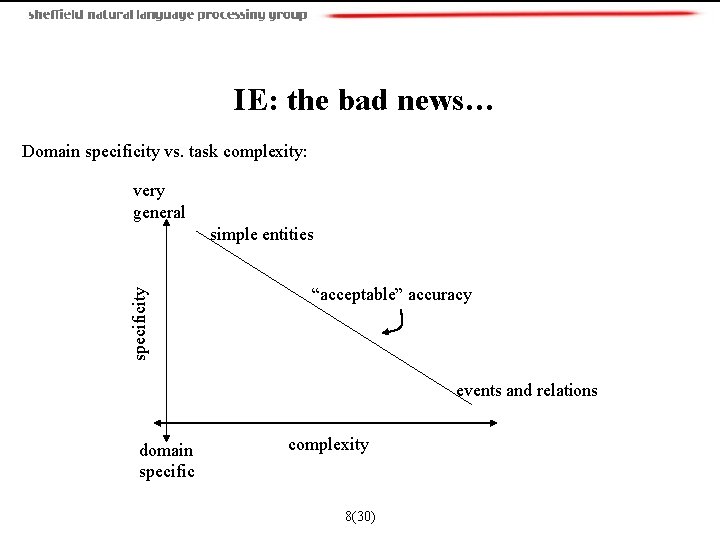

IE: the bad news…

Domain specificity vs. task complexity (diagram): specificity runs from very general to domain-specific, complexity from simple entities to events and relations, with a region marked "acceptable" accuracy.

2. GATE: Software Architecture for HLT

Software lifecycle in collaborative research:

- Project Proposal: We love each other. We can work so well together. We can hold workshops on Santorini together. We will solve all the problems of AI that our predecessors were too stupid to.
- Analysis and Design: Stop work entirely, for a period of reflection and recuperation following the stress of attending the kick-off meeting in Luxembourg.
- Implementation: Each developer partner tries to convince the others that program X, which they just happen to have lying around on a dusty disk drive, meets the project objectives exactly and should form the centrepiece of the demonstrator.
- Integration and Testing: The lead partner gets desperate and decides to hardcode the results for a small set of examples into the demonstrator, and have a failsafe crash facility for unknown input ("well, you know, it's still a prototype...").
- Evaluation: Everyone says how nice it is, how it solves all sorts of terribly hard problems, and how if we had another grant we could go on to transform information processing the world over (or at least the European business travel industry).

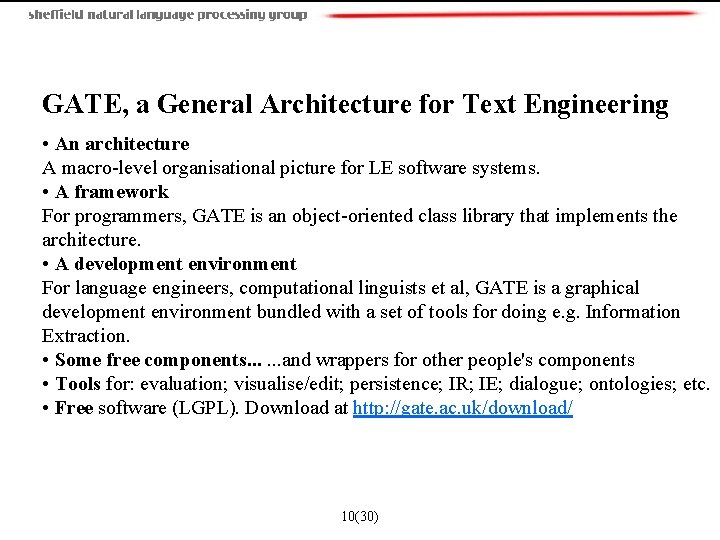

GATE, a General Architecture for Text Engineering

- An architecture: a macro-level organisational picture for LE software systems.
- A framework: for programmers, GATE is an object-oriented class library that implements the architecture.
- A development environment: for language engineers, computational linguists et al., GATE is a graphical development environment bundled with a set of tools for doing, e.g., Information Extraction.
- Some free components... and wrappers for other people's components.
- Tools for: evaluation; visualisation/editing; persistence; IR; IE; dialogue; ontologies; etc.
- Free software (LGPL). Download at http://gate.ac.uk/download/

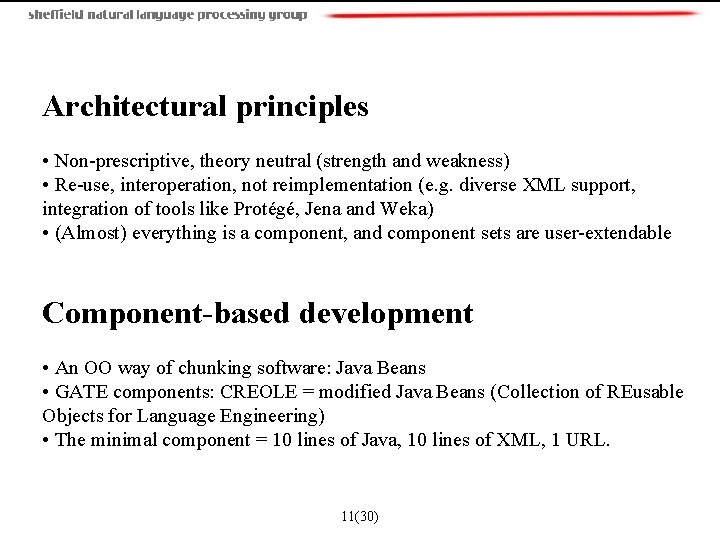

Architectural principles

- Non-prescriptive, theory-neutral (a strength and a weakness)
- Re-use and interoperation, not reimplementation (e.g. diverse XML support, integration of tools like Protégé, Jena and Weka)
- (Almost) everything is a component, and component sets are user-extendable

Component-based development

- An OO way of chunking software: JavaBeans
- GATE components: CREOLE (Collection of REusable Objects for Language Engineering) = modified JavaBeans
- The minimal component = 10 lines of Java, 10 lines of XML, 1 URL

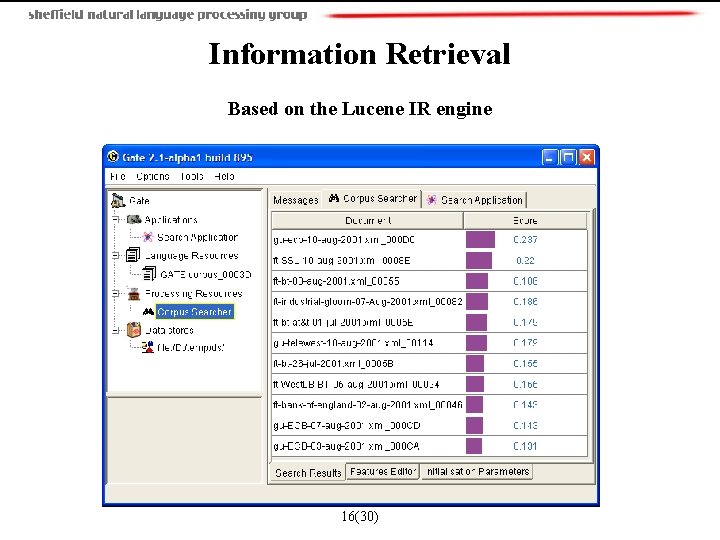

GATE Language Resources

GATE LRs are documents, ontologies, corpora, lexicons, …

Documents / corpora:

- GATE documents are loaded from local files or the web...
- Diverse document formats: text, HTML, XML, email, RTF, SGML.

Processing Resources

Algorithmic components are known as PRs: beans with execute methods.

- All PRs can handle Unicode data by default.
- Clear distinction between code and data (simple repurposing).
- 20-30 freebies with GATE, e.g. Named Entity recognition; WordNet; Protégé; Ontology; OntoGazetteer; DAML+OIL export; Information Retrieval based on Lucene
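The LR/PR split can be illustrated with a small sketch: a document that only holds data, and a bean-style PR that is configured through a setter and then does its work in `execute()`. All names here (`Doc`, `Annotation`, `ProcessingResource`, `SimpleTokeniser`, `PipelineDemo`) are hypothetical stand-ins chosen for illustration; GATE's own classes and CREOLE registration are richer and are not reproduced.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-ins for GATE's concepts, not GATE's own classes.
record Annotation(int start, int end, String type) {}

// A language resource: data only (text plus annotations), no processing logic.
final class Doc {
    final String text;
    final List<Annotation> annotations = new ArrayList<>();

    Doc(String text) {
        this.text = text;
    }
}

// A processing resource: an algorithmic component exposing execute().
interface ProcessingResource {
    void execute();
}

// Bean-style PR: configured through a setter, then executed.
final class SimpleTokeniser implements ProcessingResource {
    // Runs of Unicode letters/marks, runs of digits, or any other single non-space character.
    private static final Pattern TOKEN = Pattern.compile("[\\p{L}\\p{M}]+|\\p{Nd}+|\\S");
    private Doc document;

    public void setDocument(Doc document) {
        this.document = document;
    }

    public Doc getDocument() {
        return document;
    }

    @Override
    public void execute() {
        Matcher m = TOKEN.matcher(document.text);
        while (m.find()) {
            document.annotations.add(new Annotation(m.start(), m.end(), "Token"));
        }
    }
}

final class PipelineDemo {
    public static void main(String[] args) {
        Doc doc = new Doc("GATE is free software.");
        SimpleTokeniser tokeniser = new SimpleTokeniser();
        tokeniser.setDocument(doc);
        // A pipeline here is just PRs run in order over the same document.
        List<ProcessingResource> pipeline = List.of(tokeniser);
        pipeline.forEach(ProcessingResource::execute);
        for (Annotation a : doc.annotations) {
            System.out.println(a.type() + " " + doc.text.substring(a.start(), a.end()));
        }
    }
}
```

Keeping the text and annotations in the LR and the algorithm in the PR is what lets the same tokeniser be re-run on any document without touching its code.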

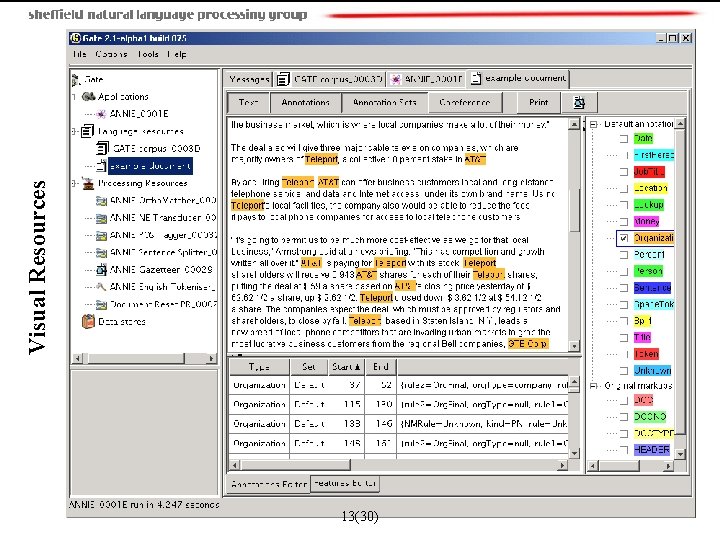

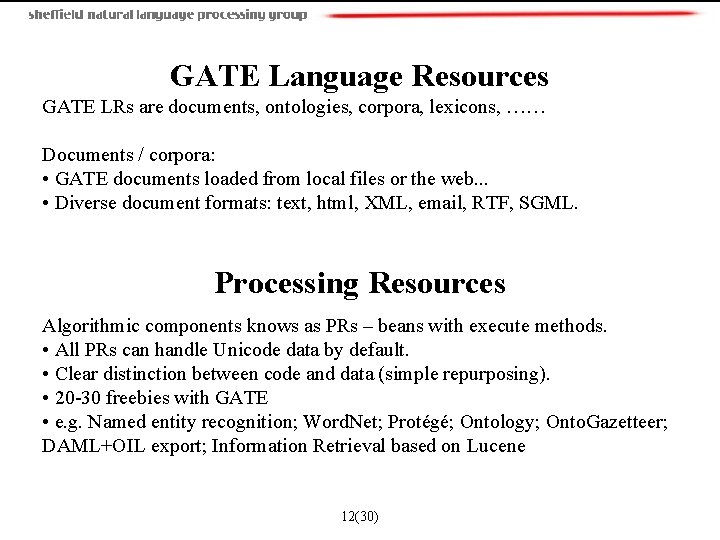

Visual Resources

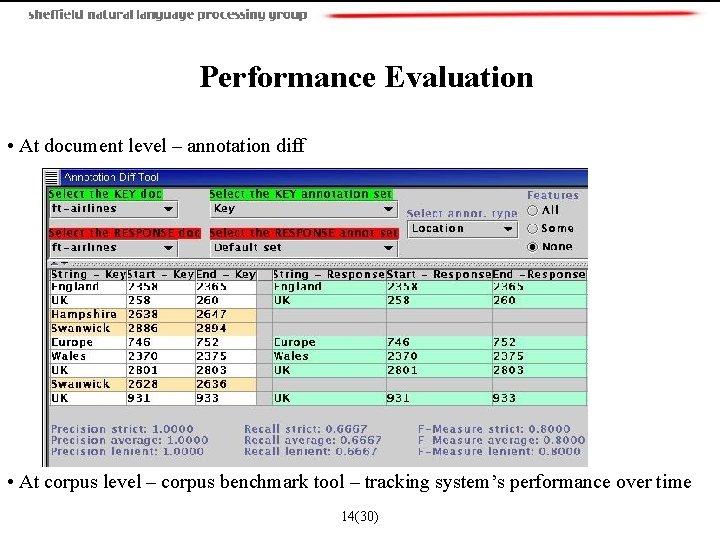

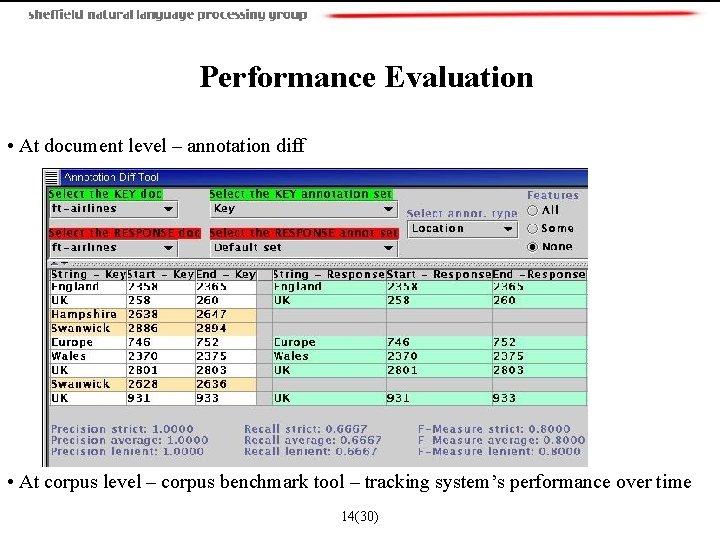

Performance Evaluation

- At document level: annotation diff
- At corpus level: corpus benchmark tool, tracking the system's performance over time
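Document-level comparison boils down to matching the system's annotations (the response) against a manually annotated key and computing precision, recall and F1. A minimal sketch, assuming strict matching (same start, end and type); `Ann`, `Scores` and `AnnotationDiffDemo` are hypothetical names, and the sketch gives no credit for partially overlapping spans.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// An annotation reduced to what strict matching compares: span and type.
record Ann(int start, int end, String type) {}

record Scores(double precision, double recall, double f1) {}

final class AnnotationDiffDemo {
    // Compare a system's output (response) with the manually annotated key.
    static Scores score(List<Ann> key, List<Ann> response) {
        Set<Ann> keySet = new HashSet<>(key);
        Set<Ann> responseSet = new HashSet<>(response);
        Set<Ann> correct = new HashSet<>(responseSet);
        correct.retainAll(keySet); // exact span and type match only
        double p = responseSet.isEmpty() ? 0.0 : (double) correct.size() / responseSet.size();
        double r = keySet.isEmpty() ? 0.0 : (double) correct.size() / keySet.size();
        double f1 = (p + r) == 0.0 ? 0.0 : 2 * p * r / (p + r);
        return new Scores(p, r, f1);
    }

    public static void main(String[] args) {
        List<Ann> key = List.of(
                new Ann(0, 4, "Person"), new Ann(10, 17, "Date"), new Ann(20, 30, "Organization"));
        List<Ann> response = List.of(
                new Ann(0, 4, "Person"), new Ann(10, 17, "Location"));
        System.out.println(score(key, response));
    }
}
```

In `main`, one of the two responses is correct (precision 0.5) and one of the three key annotations is found (recall 1/3), so F1 = 2 × 0.5 × (1/3) / (0.5 + 1/3) = 0.4. Running the same computation over a corpus and keeping the scores of each run is one way to track performance over time.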

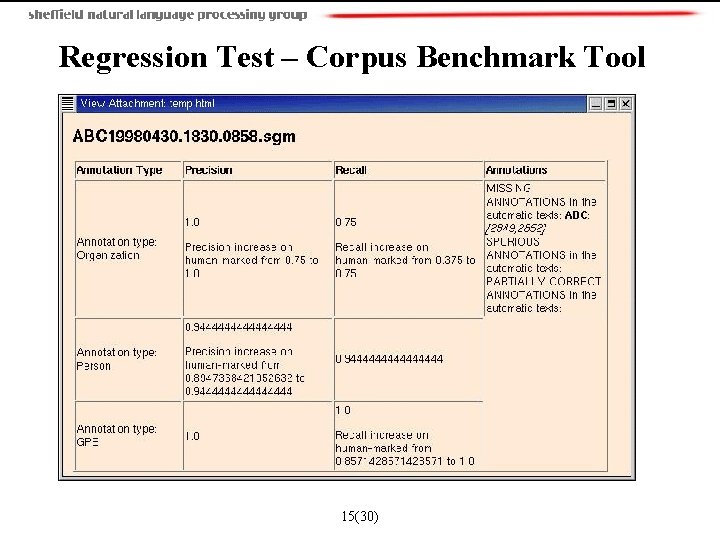

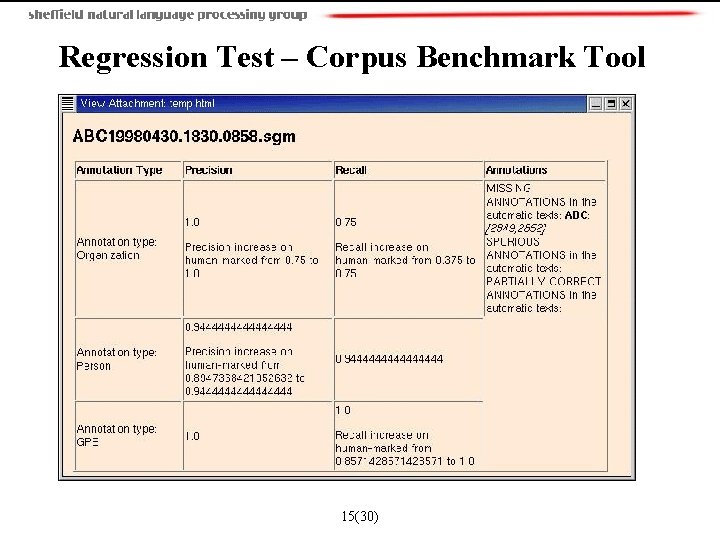

Regression Test – Corpus Benchmark Tool

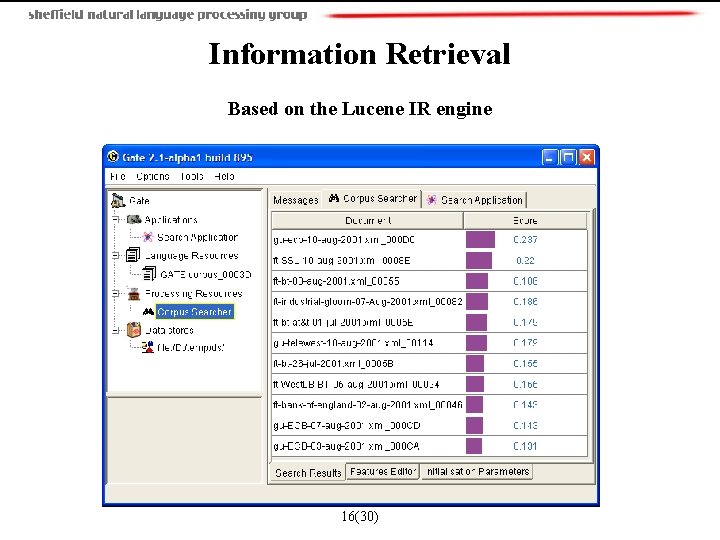

Information Retrieval: based on the Lucene IR engine
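The slide only names Lucene. As a rough picture of the core data structure behind such engines, here is a toy inverted index with Boolean AND queries; `InvertedIndex` is a hypothetical class, not Lucene's API, and it omits everything Lucene adds (analysers, stemming, ranking, persistent indexes).

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.SortedSet;
import java.util.TreeSet;

final class InvertedIndex {
    // term -> ids of the documents containing it
    private final Map<String, SortedSet<Integer>> postings = new HashMap<>();

    // Lower-case and split on anything that is not a letter or digit.
    private static String[] terms(String text) {
        return text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{Nd}]+");
    }

    void add(int docId, String text) {
        for (String term : terms(text)) {
            if (!term.isEmpty()) {
                postings.computeIfAbsent(term, t -> new TreeSet<>()).add(docId);
            }
        }
    }

    // Boolean AND: ids of documents that contain every query term.
    SortedSet<Integer> search(String query) {
        SortedSet<Integer> result = null;
        for (String term : terms(query)) {
            if (term.isEmpty()) {
                continue;
            }
            SortedSet<Integer> docs = postings.getOrDefault(term, new TreeSet<>());
            if (result == null) {
                result = new TreeSet<>(docs);
            } else {
                result.retainAll(docs);
            }
        }
        return result == null ? new TreeSet<>() : result;
    }

    public static void main(String[] args) {
        InvertedIndex index = new InvertedIndex();
        index.add(1, "The rocket was fired on Tuesday.");
        index.add(2, "Dr. Head works for We Build Rockets Inc.");
        index.add(3, "The rocket is the brainchild of Dr. Big Head.");
        System.out.println(index.search("rocket head")); // prints [3]
    }
}
```

Because there is no stemming, "rocket" does not match "Rockets" in document 2, so the query returns only document 3.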

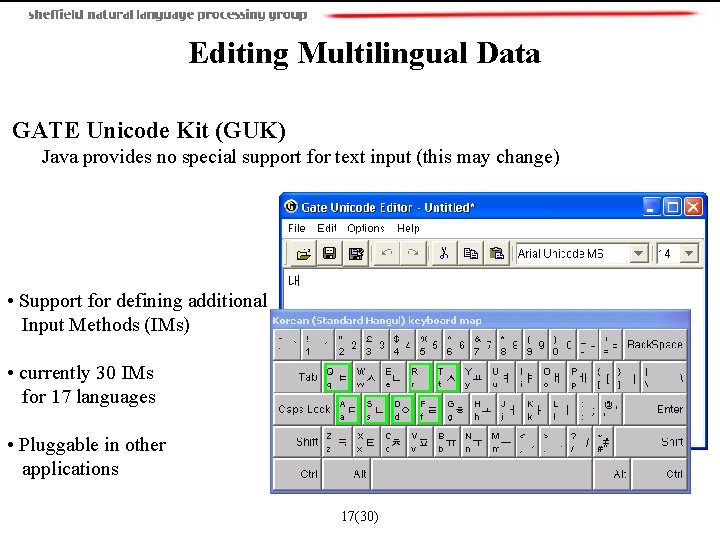

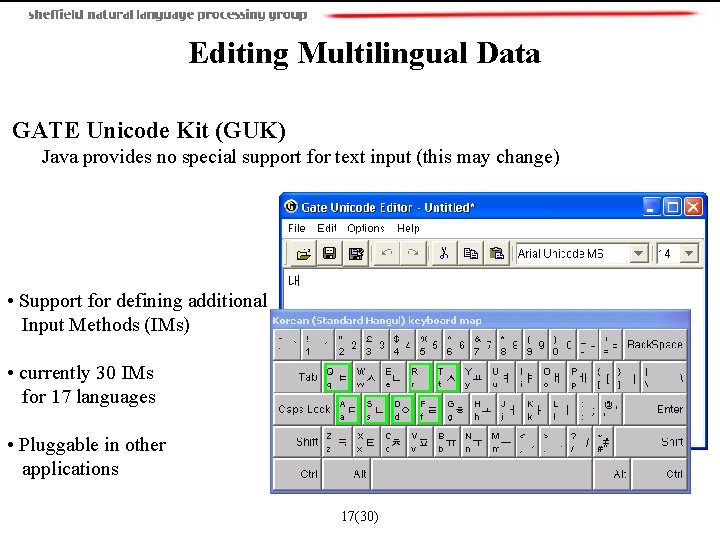

Editing Multilingual Data: GATE Unicode Kit (GUK)

Java provides no special support for text input (this may change).

- Support for defining additional Input Methods (IMs)
- currently 30 IMs for 17 languages
- Pluggable in other applications

Processing Multilingual Data

All the visualisation and editing tools for multilingual LRs use enhanced Java facilities:

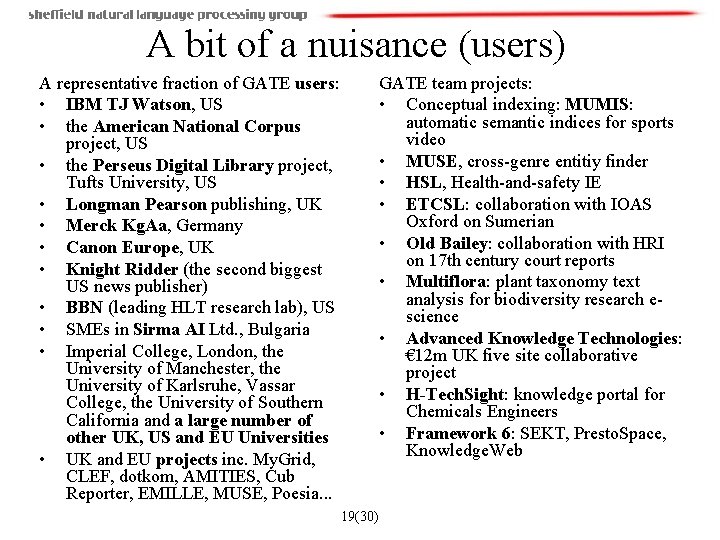

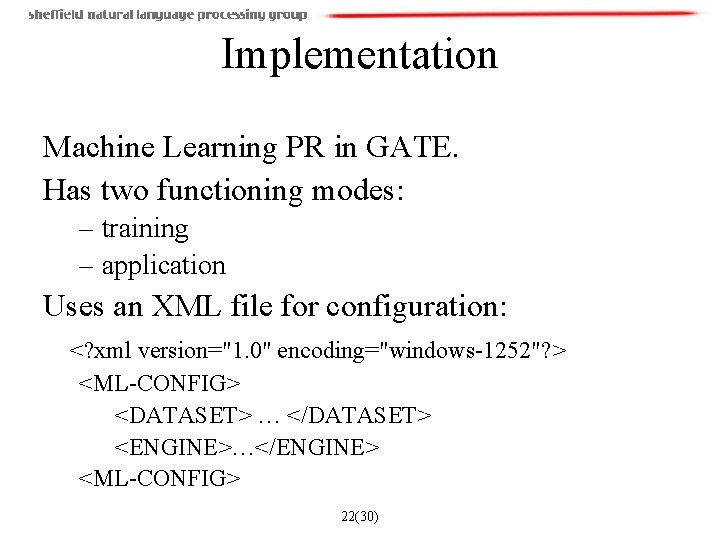

A bit of a nuisance (users)

A representative fraction of GATE users:

- IBM TJ Watson, US
- the American National Corpus project, US
- the Perseus Digital Library project, Tufts University, US
- Longman Pearson publishing, UK
- Merck KGaA, Germany
- Canon Europe, UK
- Knight Ridder (the second biggest US news publisher)
- BBN (leading HLT research lab), US
- SMEs including Sirma AI Ltd., Bulgaria
- Imperial College London, the University of Manchester, the University of Karlsruhe, Vassar College, the University of Southern California and a large number of other UK, US and EU universities
- UK and EU projects including myGrid, CLEF, dotkom, AMITIES, Cub Reporter, EMILLE, MUSE, Poesia...

GATE team projects:

- Conceptual indexing: MUMIS, automatic semantic indices for sports video
- MUSE: cross-genre entity finder
- HSL: health-and-safety IE
- ETCSL: collaboration with IOAS Oxford on Sumerian
- Old Bailey: collaboration with HRI on 17th-century court reports
- Multiflora: plant taxonomy text analysis for biodiversity research (e-science)
- Advanced Knowledge Technologies: €12m UK five-site collaborative project
- H-TechSight: knowledge portal for chemical engineers
- Framework 6: SEKT, PrestoSpace, KnowledgeWeb

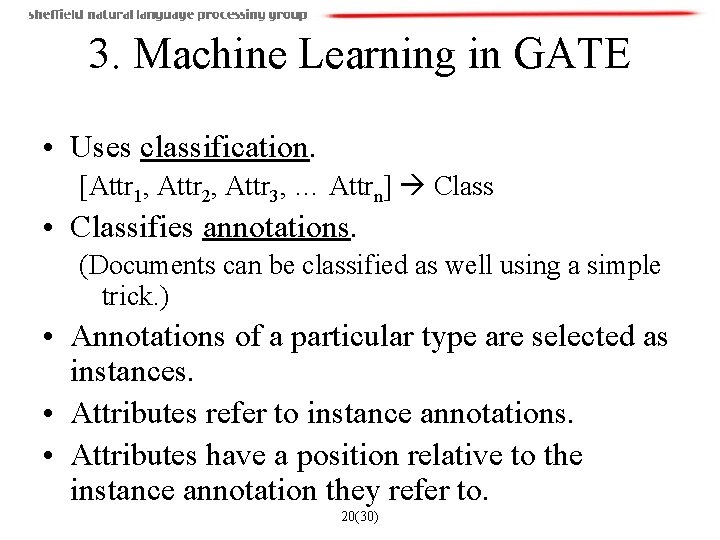

3. Machine Learning in GATE

- Uses classification: [Attr1, Attr2, Attr3, … Attrn] → Class
- Classifies annotations. (Documents can be classified as well, using a simple trick.)
- Annotations of a particular type are selected as instances.
- Attributes refer to instance annotations.
- Attributes have a position relative to the instance annotation they refer to.
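Instance and attribute selection can be sketched as follows: every Token is an instance, and each attribute reads the `category` feature of the token at a relative position (-1 is the previous token, +1 the next). The names (`Token`, `InstanceBuilder`) and the `?` marker for positions outside the document are assumptions for illustration, not the ML PR's internals.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// A Token annotation with its features (e.g. "string", "category").
record Token(Map<String, String> features) {}

final class InstanceBuilder {
    static final String MISSING = "?";

    // Value of `feature` on the token at `offset` relative to instance index i,
    // or MISSING when that position falls outside the document.
    static String attribute(List<Token> tokens, int i, int offset, String feature) {
        int j = i + offset;
        if (j < 0 || j >= tokens.size()) {
            return MISSING;
        }
        return tokens.get(j).features().getOrDefault(feature, MISSING);
    }

    // One row per instance: the attributes at the given positions, then the class value.
    static List<List<String>> build(List<Token> tokens, int[] positions, String feature, int classPosition) {
        List<List<String>> rows = new ArrayList<>();
        for (int i = 0; i < tokens.size(); i++) {
            List<String> row = new ArrayList<>();
            for (int p : positions) {
                row.add(attribute(tokens, i, p, feature));
            }
            row.add(attribute(tokens, i, classPosition, feature));
            rows.add(row);
        }
        return rows;
    }

    public static void main(String[] args) {
        List<Token> tokens = List.of(
                new Token(Map.of("string", "The", "category", "DT")),
                new Token(Map.of("string", "rocket", "category", "NN")),
                new Token(Map.of("string", "was", "category", "VBD")));
        // Context attributes at positions -1 and +1; the class is the category at position 0.
        build(tokens, new int[] {-1, 1}, "category", 0).forEach(System.out::println);
    }
}
```

For "The rocket was", the rows are `[?, NN, DT]`, `[DT, VBD, NN]` and `[NN, ?, VBD]`: previous category, next category, then the class.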

![Attributes can be: Boolean, Nominal or Numeric](https://slidetodoc.com/presentation_image/aed91ea447142628e3d3b177f50a3544/image-21.jpg)

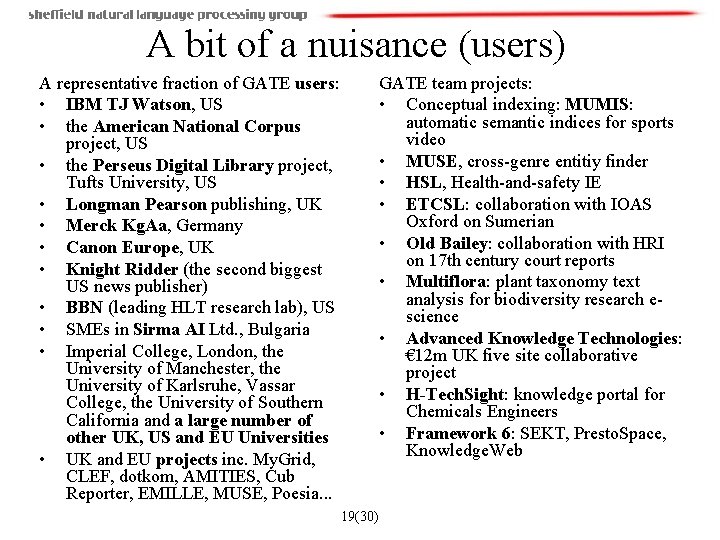

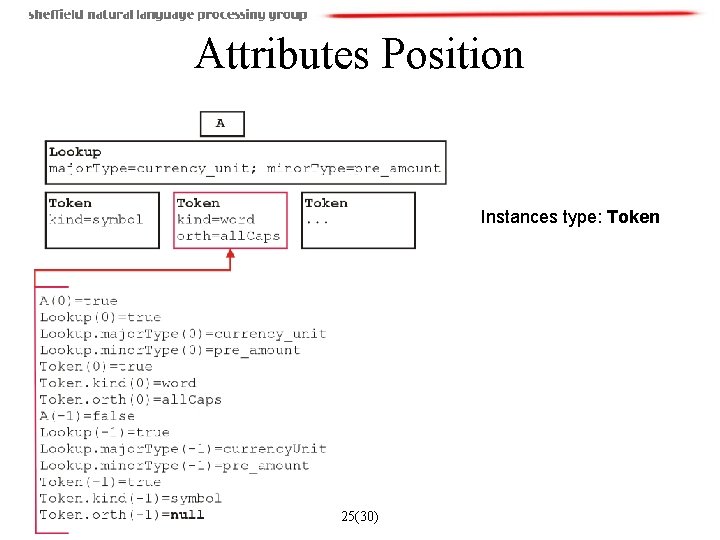

Attributes can be:

- Boolean: the [lack of] presence of an annotation of a particular type [partially] overlapping the referred instance annotation.
- Nominal: the value of a particular feature of the referred instance annotation. The complete set of acceptable values must be specified a priori.
- Numeric: the numeric value (converted from String) of a particular feature of the referred instance annotation.
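The three attribute kinds can be sketched as three small functions over a hypothetical `Ann` record. Treating undeclared nominal values as missing (`?`) and unparsable numbers as `NaN` are assumptions of this sketch; the slides do not say how the ML PR handles them.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;

// An annotation with a [start, end) span, a type and string-valued features.
record Ann(int start, int end, String type, Map<String, String> features) {
    // Partial overlap counts: the spans only have to share at least one character.
    boolean overlaps(Ann other) {
        return start < other.end() && other.start() < end;
    }
}

final class AttributeTypes {
    static final String MISSING = "?";

    // Boolean: is an annotation of the given type [partially] overlapping the instance?
    static boolean booleanAttr(Ann instance, List<Ann> annotations, String type) {
        return annotations.stream()
                .anyMatch(a -> a.type().equals(type) && a.overlaps(instance));
    }

    // Nominal: the feature value, kept only if it is one of the values declared a priori.
    static String nominalAttr(Ann instance, String feature, Set<String> declaredValues) {
        String value = instance.features().get(feature);
        return value != null && declaredValues.contains(value) ? value : MISSING;
    }

    // Numeric: the feature value converted from String; NaN stands for "missing".
    static double numericAttr(Ann instance, String feature) {
        String value = instance.features().get(feature);
        if (value == null) {
            return Double.NaN;
        }
        try {
            return Double.parseDouble(value);
        } catch (NumberFormatException e) {
            return Double.NaN;
        }
    }

    public static void main(String[] args) {
        Ann token = new Ann(14, 20, "Token", Map.of("category", "NN", "length", "6"));
        Ann lookup = new Ann(14, 20, "Lookup", Map.of());
        List<Ann> all = List.of(token, lookup);
        System.out.println(booleanAttr(token, all, "Lookup"));                    // true
        System.out.println(nominalAttr(token, "category", Set.of("NN", "NNPS"))); // NN
        System.out.println(numericAttr(token, "length"));                         // 6.0
    }
}
```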

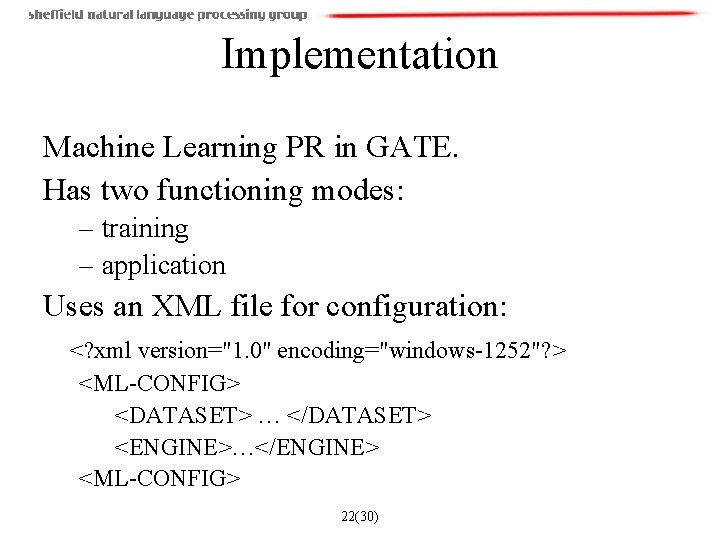

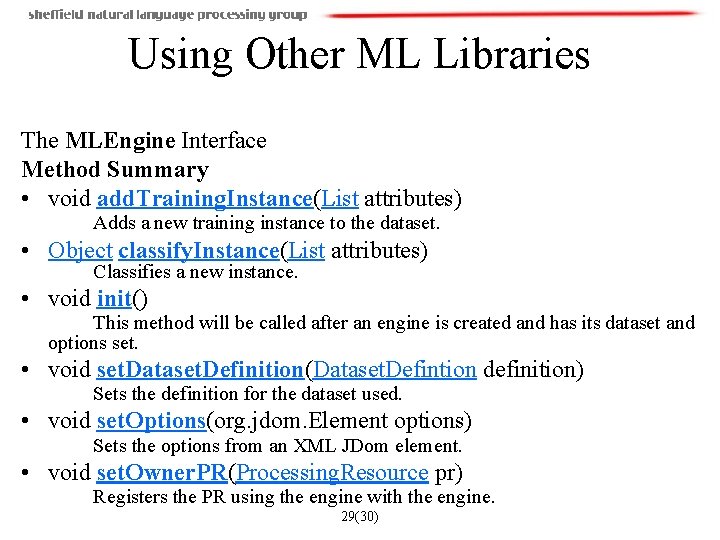

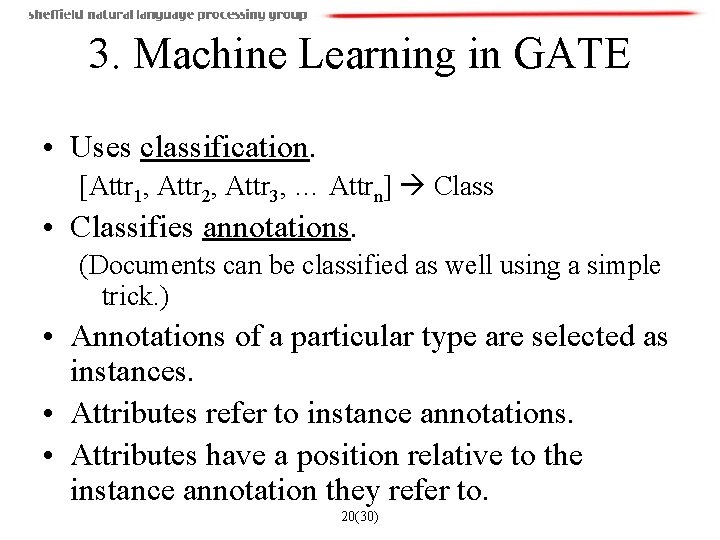

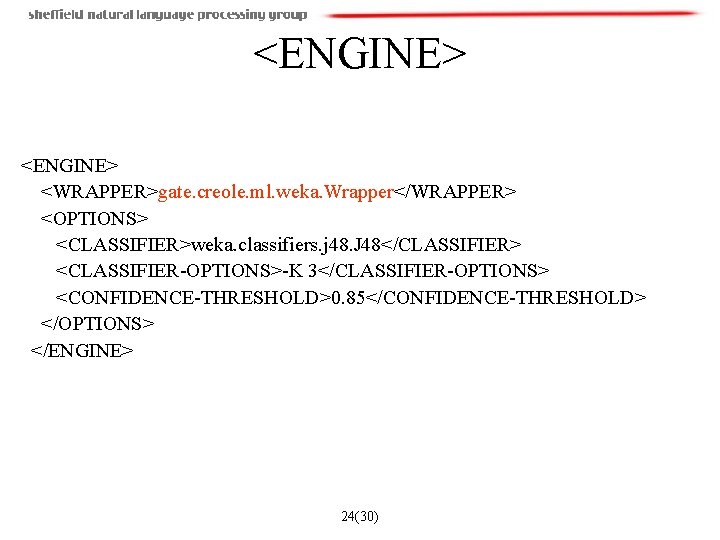

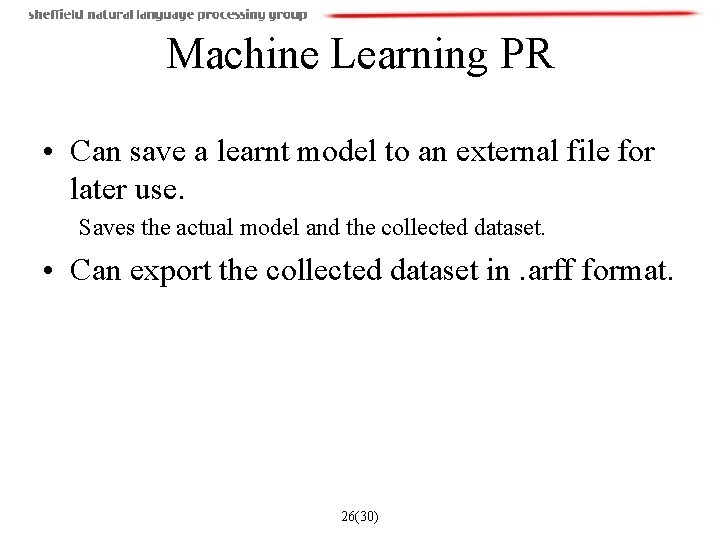

Implementation: Machine Learning PR in GATE.

Has two functioning modes:

- training
- application

Uses an XML file for configuration:

`<?xml version="1.0" encoding="windows-1252"?> <ML-CONFIG> <DATASET> … </DATASET> <ENGINE>…</ENGINE> </ML-CONFIG>`

![DATASET configuration example](https://slidetodoc.com/presentation_image/aed91ea447142628e3d3b177f50a3544/image-23.jpg)

`<DATASET> <INSTANCE-TYPE>Token</INSTANCE-TYPE> <ATTRIBUTE> <NAME>POS_category(0)</NAME> <TYPE>Token</TYPE> <FEATURE>category</FEATURE> <POSITION>0</POSITION> <VALUES> <VALUE>NN</VALUE> <VALUE>NNPS</VALUE> … </VALUES> [<CLASS/>] </ATTRIBUTE> … </DATASET>`

(Square brackets mark the optional `<CLASS/>` element.)

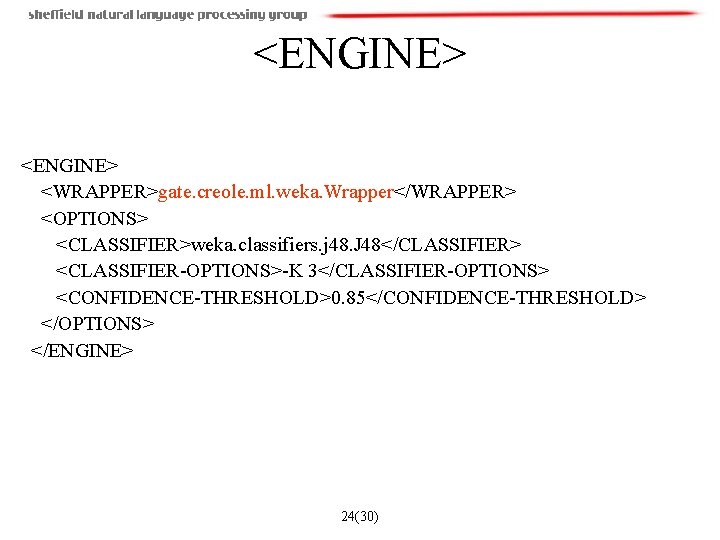

`<ENGINE> <WRAPPER>gate.creole.ml.weka.Wrapper</WRAPPER> <OPTIONS> <CLASSIFIER>weka.classifiers.j48.J48</CLASSIFIER> <CLASSIFIER-OPTIONS>-K 3</CLASSIFIER-OPTIONS> <CONFIDENCE-THRESHOLD>0.85</CONFIDENCE-THRESHOLD> </OPTIONS> </ENGINE>`
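To make the configuration layout concrete, the sketch below reads a config assembled from the two fragments above using the JDK's DOM parser (`javax.xml.parsers`). It is an illustration, not the ML PR's loader: `MlConfigReader` and `Config` are hypothetical, the extra `POS_category(-1)` attribute is made up, `<CLASSIFIER-OPTIONS>` is left out for brevity, and treating `<CLASS/>` as marking the attribute to predict is our reading of the slides.

```java
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

final class MlConfigReader {
    record Config(String instanceType, List<String> attributeNames, String classAttribute,
                  String classifier, double confidenceThreshold) {}

    // Sample assembled from the slide fragments; POS_category(-1) is a made-up addition.
    static final String SAMPLE = """
            <ML-CONFIG>
              <DATASET>
                <INSTANCE-TYPE>Token</INSTANCE-TYPE>
                <ATTRIBUTE>
                  <NAME>POS_category(-1)</NAME><TYPE>Token</TYPE>
                  <FEATURE>category</FEATURE><POSITION>-1</POSITION>
                  <VALUES><VALUE>NN</VALUE><VALUE>NNPS</VALUE></VALUES>
                </ATTRIBUTE>
                <ATTRIBUTE>
                  <NAME>POS_category(0)</NAME><TYPE>Token</TYPE>
                  <FEATURE>category</FEATURE><POSITION>0</POSITION>
                  <VALUES><VALUE>NN</VALUE><VALUE>NNPS</VALUE></VALUES>
                  <CLASS/>
                </ATTRIBUTE>
              </DATASET>
              <ENGINE>
                <WRAPPER>gate.creole.ml.weka.Wrapper</WRAPPER>
                <OPTIONS>
                  <CLASSIFIER>weka.classifiers.j48.J48</CLASSIFIER>
                  <CONFIDENCE-THRESHOLD>0.85</CONFIDENCE-THRESHOLD>
                </OPTIONS>
              </ENGINE>
            </ML-CONFIG>
            """;

    static Config read(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new InputSource(new StringReader(xml)));
            Element root = doc.getDocumentElement();
            List<String> names = new ArrayList<>();
            String classAttribute = null;
            NodeList attributes = root.getElementsByTagName("ATTRIBUTE");
            for (int i = 0; i < attributes.getLength(); i++) {
                Element attribute = (Element) attributes.item(i);
                String name = text(attribute, "NAME");
                names.add(name);
                // Assumed meaning: an empty <CLASS/> child marks the attribute to predict.
                if (attribute.getElementsByTagName("CLASS").getLength() > 0) {
                    classAttribute = name;
                }
            }
            return new Config(text(root, "INSTANCE-TYPE"), names, classAttribute,
                    text(root, "CLASSIFIER"),
                    Double.parseDouble(text(root, "CONFIDENCE-THRESHOLD")));
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot read ML configuration", e);
        }
    }

    // Trimmed text content of the first descendant element with this tag name.
    private static String text(Element parent, String tag) {
        return parent.getElementsByTagName(tag).item(0).getTextContent().trim();
    }

    public static void main(String[] args) {
        System.out.println(read(SAMPLE));
    }
}
```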

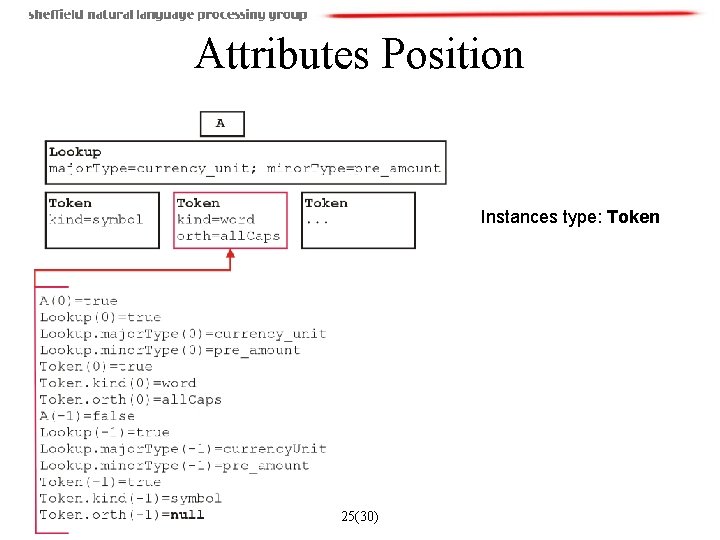

Attributes, positions and instances (instance type: Token)

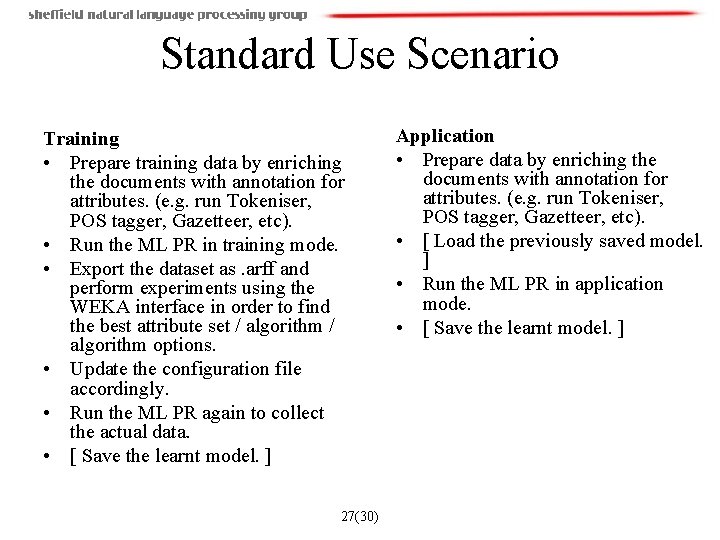

Machine Learning PR

- Can save a learnt model to an external file for later use. Saves the actual model and the collected dataset.
- Can export the collected dataset in .arff format.
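ARFF is WEKA's plain-text dataset format: a `@relation` line, one `@attribute` line per attribute (nominal values in braces, or `numeric`), then `@data` with one comma-separated row per instance and `?` for missing values. A minimal writer, assuming the dataset is already a list of string rows; `ArffWriter` and `AttrDef` are hypothetical, and quoting every name and nominal value is a simplification chosen so that names like `POS_category(-1)` are safe.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

final class ArffWriter {
    // A declared attribute: nominal if values are given, numeric if nominalValues is null.
    record AttrDef(String name, List<String> nominalValues) {}

    // Single-quote a name or value, escaping backslashes and quotes.
    static String quote(String s) {
        return "'" + s.replace("\\", "\\\\").replace("'", "\\'") + "'";
    }

    static String toArff(String relation, List<AttrDef> attrs, List<List<String>> rows) {
        StringBuilder sb = new StringBuilder();
        sb.append("@relation ").append(quote(relation)).append('\n');
        for (AttrDef a : attrs) {
            String type = a.nominalValues() == null
                    ? "numeric"
                    : a.nominalValues().stream().map(ArffWriter::quote)
                            .collect(Collectors.joining(",", "{", "}"));
            sb.append("@attribute ").append(quote(a.name())).append(' ').append(type).append('\n');
        }
        sb.append("@data\n");
        for (List<String> row : rows) {
            List<String> cells = new ArrayList<>();
            for (int i = 0; i < row.size(); i++) {
                String v = row.get(i);
                boolean nominal = attrs.get(i).nominalValues() != null;
                // "?" is ARFF's missing-value marker and must stay unquoted; numbers stay bare.
                cells.add(v.equals("?") || !nominal ? v : quote(v));
            }
            sb.append(String.join(",", cells)).append('\n');
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        List<AttrDef> attrs = List.of(
                new AttrDef("POS_category(-1)", List.of("DT", "NN", "VBD")),
                new AttrDef("length", null),
                new AttrDef("POS_category(0)", List.of("DT", "NN", "VBD")));
        List<List<String>> rows = List.of(
                List.of("?", "3", "DT"),
                List.of("DT", "6", "NN"));
        System.out.print(toArff("pos", attrs, rows));
    }
}
```

A file written this way can be opened in the WEKA interface for the attribute and algorithm experiments described in the use scenario.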

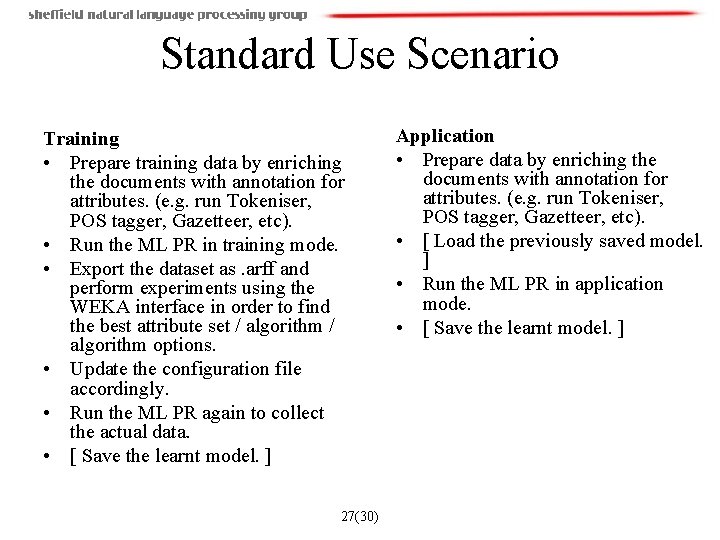

Standard Use Scenario

Training:

- Prepare training data by enriching the documents with annotations for the attributes (e.g. run the Tokeniser, POS tagger, Gazetteer, etc.).
- Run the ML PR in training mode.
- Export the dataset as .arff and perform experiments using the WEKA interface in order to find the best attribute set / algorithm options.
- Update the configuration file accordingly.
- Run the ML PR again to collect the actual data.
- [Save the learnt model.]

Application:

- Prepare data by enriching the documents with annotations for the attributes (e.g. run the Tokeniser, POS tagger, Gazetteer, etc.).
- [Load the previously saved model.]
- Run the ML PR in application mode.
- [Save the learnt model.]
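The training/application split, with the optional save and load steps, amounts to a model round trip. A minimal sketch, assuming Java serialization as the storage format and a trivial majority-vote model keyed on the exact attribute vector; `MajorityModel` and `roundTrip` are hypothetical and do not describe how the ML PR stores WEKA models or the collected dataset.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model: for each attribute vector, how often each class was seen with it.
final class MajorityModel implements Serializable {
    private static final long serialVersionUID = 1L;
    private final HashMap<List<String>, HashMap<String, Integer>> counts = new HashMap<>();

    // Training mode: record one instance (attribute values plus its class).
    void train(List<String> attributes, String cls) {
        counts.computeIfAbsent(new ArrayList<>(attributes), k -> new HashMap<>())
              .merge(cls, 1, Integer::sum);
    }

    // Application mode: most frequent class for this vector, or null if never seen.
    String classify(List<String> attributes) {
        Map<String, Integer> seen = counts.get(attributes);
        if (seen == null) {
            return null;
        }
        return seen.entrySet().stream().max(Map.Entry.comparingByValue()).get().getKey();
    }

    void save(Path file) {
        try (ObjectOutputStream out = new ObjectOutputStream(Files.newOutputStream(file))) {
            out.writeObject(this);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    static MajorityModel load(Path file) {
        try (ObjectInputStream in = new ObjectInputStream(Files.newInputStream(file))) {
            return (MajorityModel) in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    // Save to a temporary file, load it back and clean up: the train/apply hand-over in miniature.
    static MajorityModel roundTrip(MajorityModel model) {
        try {
            Path file = Files.createTempFile("ml-model", ".ser");
            try {
                model.save(file);
                return load(file);
            } finally {
                Files.deleteIfExists(file);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        MajorityModel model = new MajorityModel();
        model.train(List.of("DT", "VBD"), "NN");
        model.train(List.of("DT", "VBD"), "NN");
        model.train(List.of("DT", "VBD"), "JJ");
        MajorityModel loaded = roundTrip(model);
        System.out.println(loaded.classify(List.of("DT", "VBD"))); // NN
    }
}
```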

An Example: learn POS category from POS context.

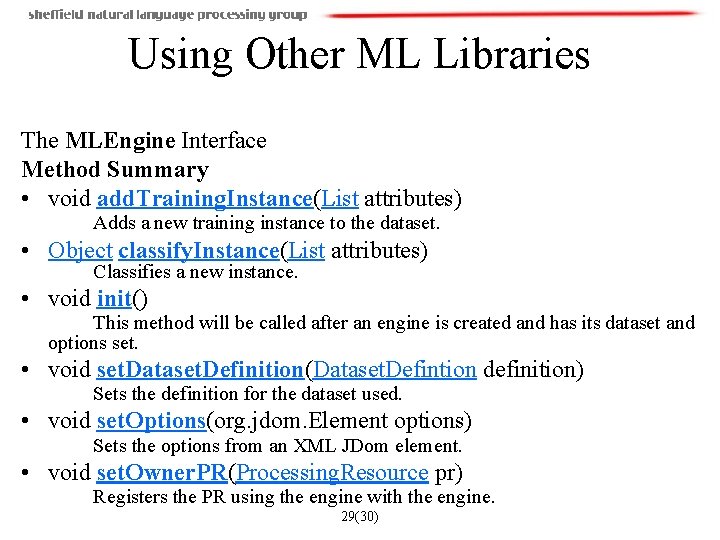

Using Other ML Libraries: the MLEngine Interface

Method summary:

- `void addTrainingInstance(List attributes)`: adds a new training instance to the dataset.
- `Object classifyInstance(List attributes)`: classifies a new instance.
- `void init()`: called after an engine is created and has its dataset and options set.
- `void setDatasetDefinition(DatasetDefinition definition)`: sets the definition for the dataset used.
- `void setOptions(org.jdom.Element options)`: sets the options from an XML JDOM element.
- `void setOwnerPR(ProcessingResource pr)`: registers the PR using the engine with the engine.
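Plugging in another library means implementing this interface. The sketch below uses a simplified, hypothetical `SimpleMLEngine` with only `init`, `addTrainingInstance` and `classifyInstance`, dropping the JDOM options and GATE types so it compiles on its own, and implements it with 1-nearest-neighbour over nominal values (Hamming distance). Treating the last list element as the class during training is an assumption. The usage in `main` follows the POS-from-context example: the attributes are the previous and next categories.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified, hypothetical version of the MLEngine interface: the JDOM options,
// dataset definition and owner PR are omitted so the sketch needs no GATE or JDOM.
interface SimpleMLEngine {
    void init();

    void addTrainingInstance(List<String> attributes); // assumed: last element = class value

    Object classifyInstance(List<String> attributes);  // attributes without the class
}

// 1-nearest-neighbour over nominal attributes, using Hamming distance.
final class NearestNeighbourEngine implements SimpleMLEngine {
    private final List<List<String>> instances = new ArrayList<>();

    @Override
    public void init() {
        instances.clear();
    }

    @Override
    public void addTrainingInstance(List<String> attributes) {
        instances.add(List.copyOf(attributes));
    }

    @Override
    public Object classifyInstance(List<String> attributes) {
        List<String> best = null;
        int bestDistance = Integer.MAX_VALUE;
        for (List<String> instance : instances) {
            int distance = 0;
            for (int i = 0; i < attributes.size(); i++) {
                if (!attributes.get(i).equals(instance.get(i))) {
                    distance++;
                }
            }
            if (distance < bestDistance) { // ties keep the earliest training instance
                bestDistance = distance;
                best = instance;
            }
        }
        return best == null ? null : best.get(best.size() - 1);
    }

    public static void main(String[] args) {
        SimpleMLEngine engine = new NearestNeighbourEngine();
        engine.init();
        // [previous category, next category, class = category of the token itself]
        engine.addTrainingInstance(List.of("DT", "VBD", "NN"));
        engine.addTrainingInstance(List.of("DT", "VBZ", "NN"));
        engine.addTrainingInstance(List.of("MD", "DT", "VB"));
        System.out.println(engine.classifyInstance(List.of("DT", "VBD"))); // NN
        System.out.println(engine.classifyInstance(List.of("MD", "NN")));  // VB
    }
}
```

Keeping the engine behind an interface is what lets the same PR, configuration and dataset collection be reused whether the classifier underneath is WEKA or something else.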

Conclusion

GATE is:

- Addressing the need for scalable, reusable, and portable HLT solutions
- Supporting large data, in multiple media, languages, formats, and locations
- Lowering the cost of creating new language processing components
- Promoting quantitative evaluation metrics via tools and a level playing field
- Promoting experimental repeatability by developing and supporting free software

Perhaps it may become:

- A vehicle for the spread of collaborative experiments in ML and HLT?