Improving GPU Performance via Large Warps and Two-Level Warp Scheduling

- Slides: 18

Improving GPU Performance via Large Warps and Two-Level Warp Scheduling

- Veynu Narasiman, The University of Texas at Austin
- Rustam Miftakhutdinov, The University of Texas at Austin
- Michael Shebanow, NVIDIA
- Onur Mutlu, Carnegie Mellon University
- Chang Joo Lee, Intel
- Yale N. Patt, The University of Texas at Austin

MICRO-44, December 6th, 2011, Porto Alegre, Brazil

Rise of GPU Computing

- GPUs have become a popular platform for general-purpose applications
- New programming models
  - CUDA
  - ATI Stream Technology
  - OpenCL
- Order-of-magnitude speedup over single-threaded CPU

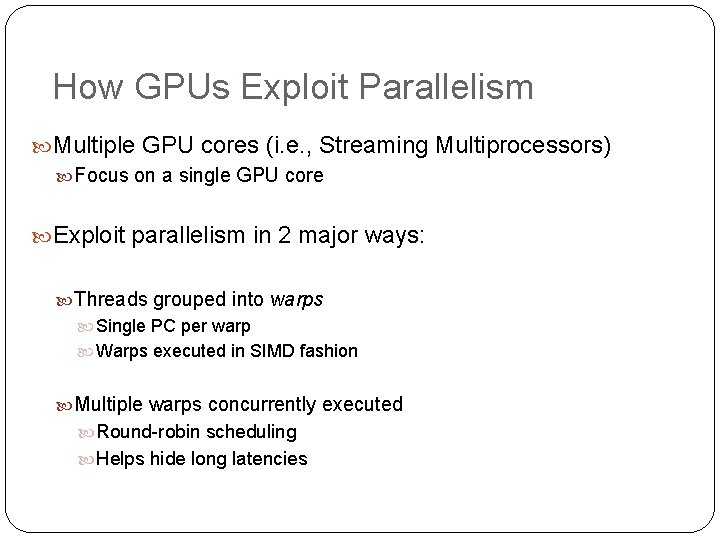

How GPUs Exploit Parallelism

- Multiple GPU cores (i.e., Streaming Multiprocessors)
- Focus on a single GPU core
- Exploit parallelism in 2 major ways:
  - Threads grouped into warps
    - Single PC per warp
    - Warps executed in SIMD fashion
  - Multiple warps concurrently executed
    - Round-robin scheduling
    - Helps hide long latencies

The Problem

- Despite these techniques, computational resources can still be underutilized
- Two reasons for this:
  - Branch divergence
  - Long latency operations

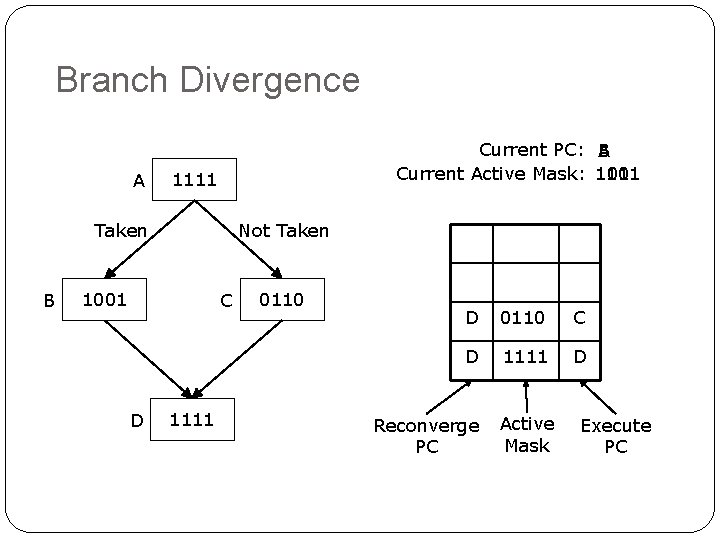

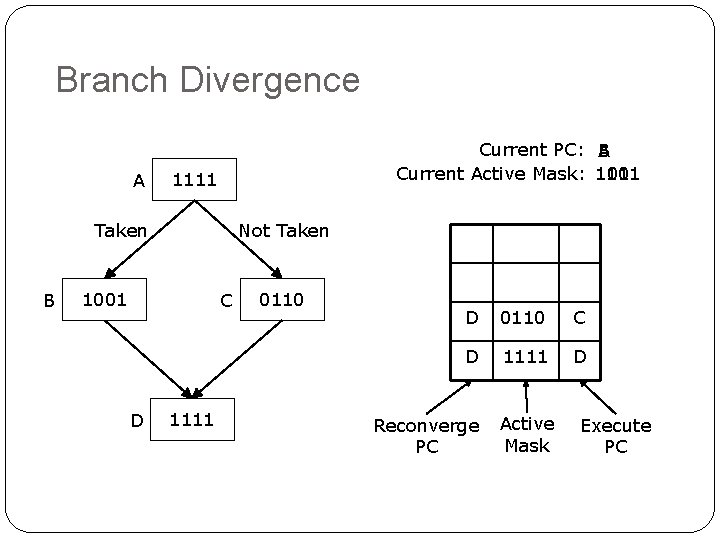

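Branch Divergence

[Figure: control-flow graph in which block A branches to B (taken) or C (not taken), and both paths reconverge at D. Active masks shown: A 1111, B 1001, C 0110, D 1111. A divergence stack with columns Reconverge PC, Active Mask, and Execute PC is shown alongside the current PC and current active mask as the warp steps through the blocks.]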
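To make the cost of divergence concrete, here is a minimal arithmetic sketch. It assumes a 4-wide SIMD unit, one instruction per basic block, and the mask-to-block mapping A=1111, B=1001, C=0110, D=1111 as read from the figure; the function name `simd_utilization` is hypothetical and not from the talk.

```python
def simd_utilization(active_masks):
    """Fraction of SIMD lane slots that do useful work.

    Each mask is a string of '0'/'1' characters, one per SIMD lane,
    for one executed instruction.
    """
    used = sum(mask.count("1") for mask in active_masks)
    total = sum(len(mask) for mask in active_masks)
    return used / total


# Assumed mapping from the figure: blocks A, B, C, D in execution order.
masks = ["1111", "1001", "0110", "1111"]
utilization = simd_utilization(masks)
print(f"SIMD utilization: {utilization:.0%}")  # 12 of 16 lane slots are active
```

Under these assumptions the two divergent blocks each leave half of the lanes idle, so only 12 of the 16 lane slots do useful work.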

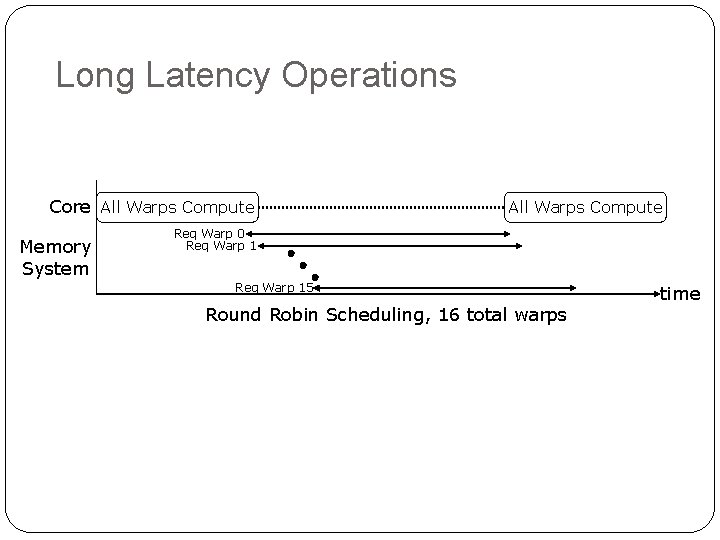

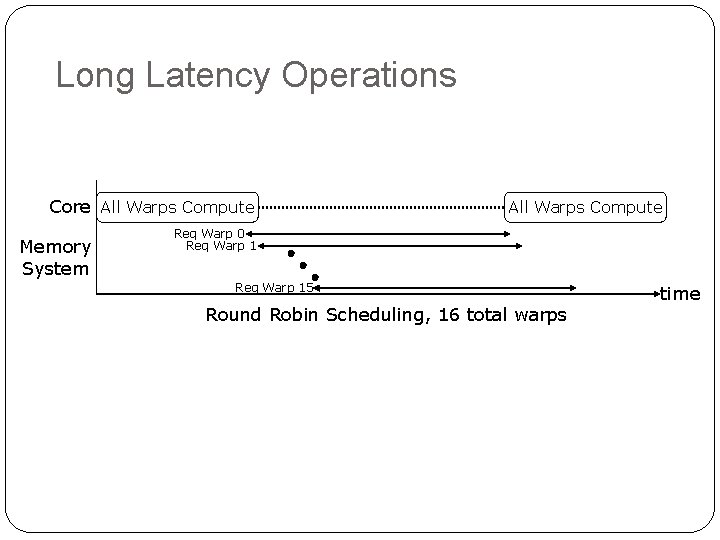

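Long Latency Operations

[Figure: timeline for round-robin scheduling with 16 total warps. Core: all warps compute, then all warps compute again later. Memory system: requests Req Warp 0 through Req Warp 15 are serviced between the two compute phases.]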

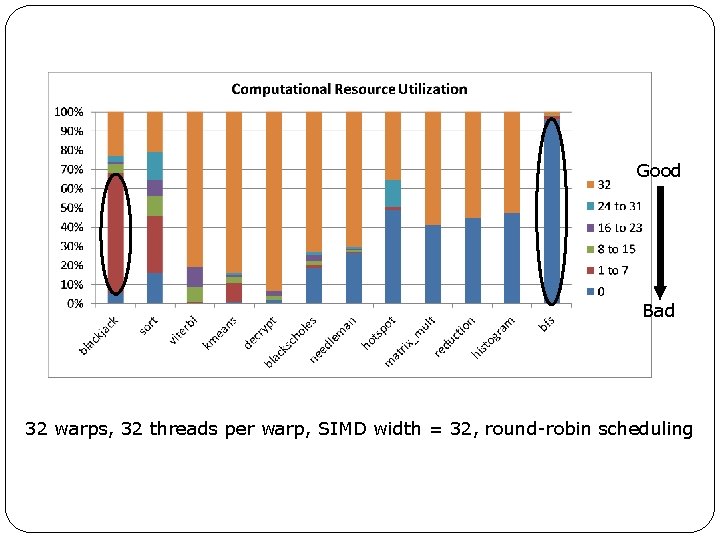

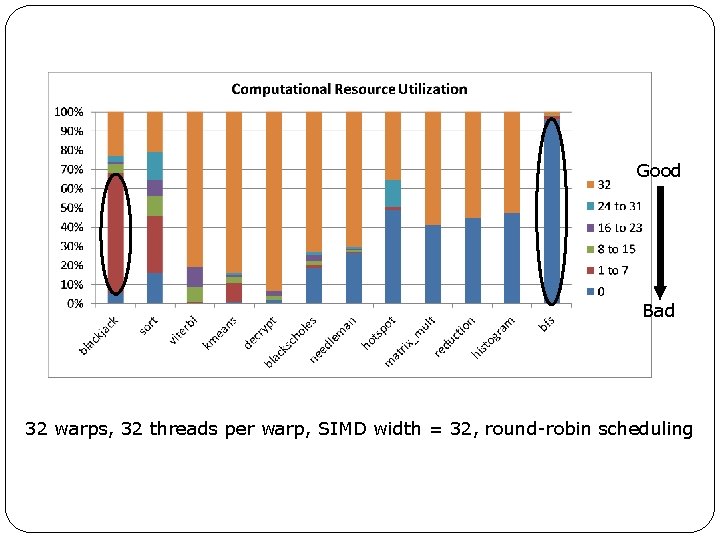

[Figure: chart annotated with "Good" and "Bad". Configuration: 32 warps, 32 threads per warp, SIMD width = 32, round-robin scheduling.]

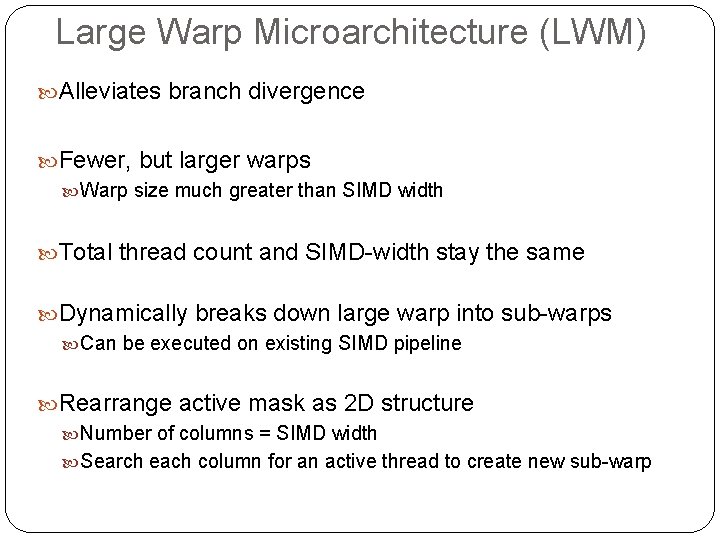

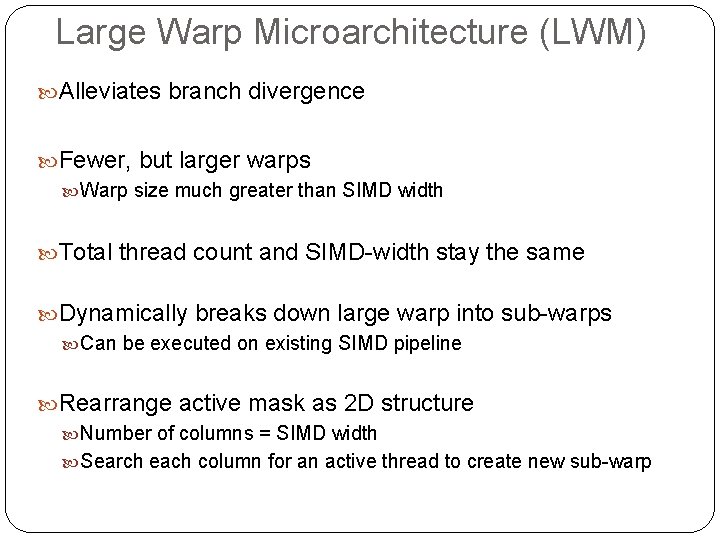

Large Warp Microarchitecture (LWM)

- Alleviates branch divergence
- Fewer, but larger warps
  - Warp size much greater than SIMD width
  - Total thread count and SIMD width stay the same
- Dynamically breaks down large warp into sub-warps
  - Can be executed on existing SIMD pipeline
- Rearrange active mask as 2D structure
  - Number of columns = SIMD width
  - Search each column for an active thread to create new sub-warp
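The column search described above can be sketched in software as follows. This is an illustration only, not the hardware design from the talk: it assumes the active mask is stored row-major, that a thread in column c may only use SIMD lane c, and that each column is searched top to bottom. The name `create_sub_warps` is hypothetical.

```python
def create_sub_warps(active_mask, simd_width):
    """Pack the active threads of one large warp into sub-warps.

    active_mask: flat list of 0/1 bits, one per thread, viewed row-major
    as a 2D structure with simd_width columns.
    Returns a list of sub-warps; each sub-warp has simd_width entries,
    holding a thread id for an occupied lane or None for an idle lane.
    """
    if len(active_mask) % simd_width != 0:
        raise ValueError("warp size must be a multiple of the SIMD width")
    rows = len(active_mask) // simd_width

    # For every column, the rows that still hold an unscheduled active thread.
    pending = [
        [r for r in range(rows) if active_mask[r * simd_width + c]]
        for c in range(simd_width)
    ]

    sub_warps = []
    while any(pending):  # some column still has an active thread
        sub_warp = []
        for c in range(simd_width):
            if pending[c]:
                r = pending[c].pop(0)  # first active thread in this column
                sub_warp.append(r * simd_width + c)
            else:
                sub_warp.append(None)  # no active thread left for this lane
        sub_warps.append(sub_warp)
    return sub_warps


# 16-thread large warp, SIMD width 4 (values chosen for illustration).
mask = [1, 0, 0, 1,
        0, 1, 0, 0,
        1, 1, 0, 1,
        0, 0, 1, 0]
sub_warps = create_sub_warps(mask, simd_width=4)
print(sub_warps)  # [[0, 5, 14, 3], [8, 9, None, 11]]
```

In this example every row contains at least one active thread, so four separate 4-thread warps would each need an issue slot, while the large warp is covered by two sub-warps.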

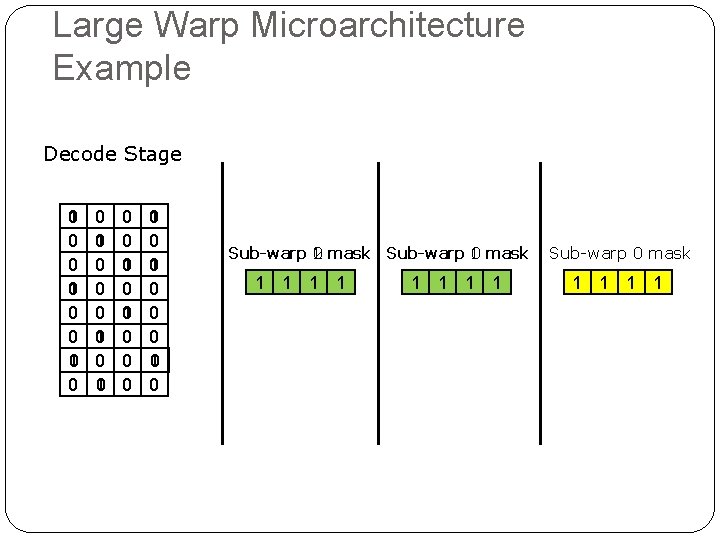

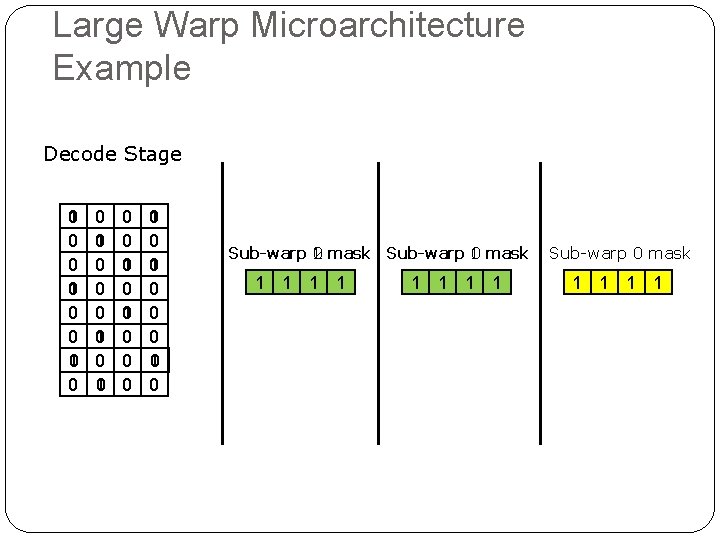

Large Warp Microarchitecture Example

[Figure: at the decode stage, the large warp's 2D active mask is packed step by step into sub-warp 0, sub-warp 1, and sub-warp 2, each shown with its own sub-warp mask.]

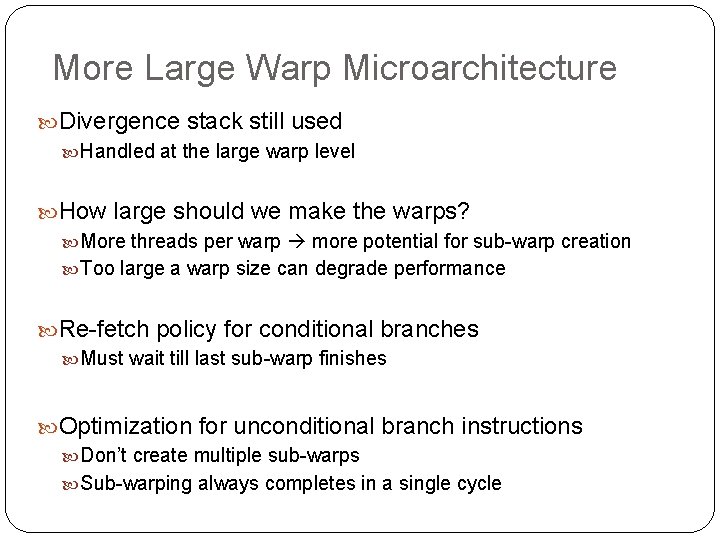

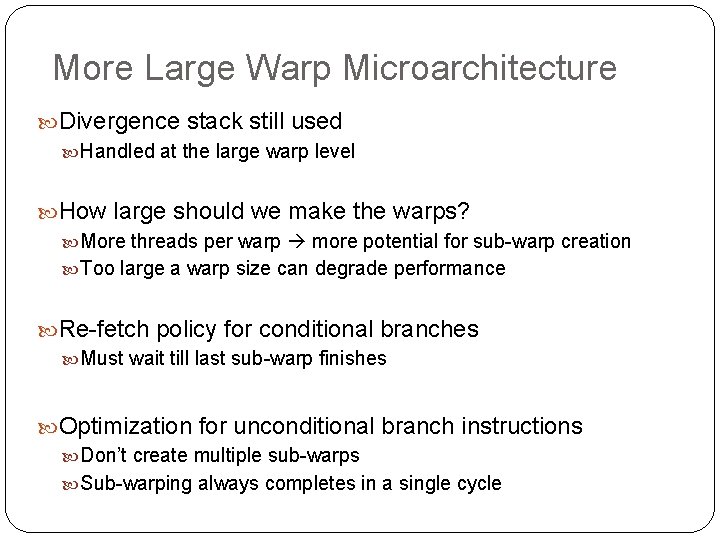

More Large Warp Microarchitecture

- Divergence stack still used
  - Handled at the large warp level
- How large should we make the warps?
  - More threads per warp → more potential for sub-warp creation
  - Too large a warp size can degrade performance
- Re-fetch policy for conditional branches
  - Must wait till last sub-warp finishes
- Optimization for unconditional branch instructions
  - Don't create multiple sub-warps
  - Sub-warping always completes in a single cycle

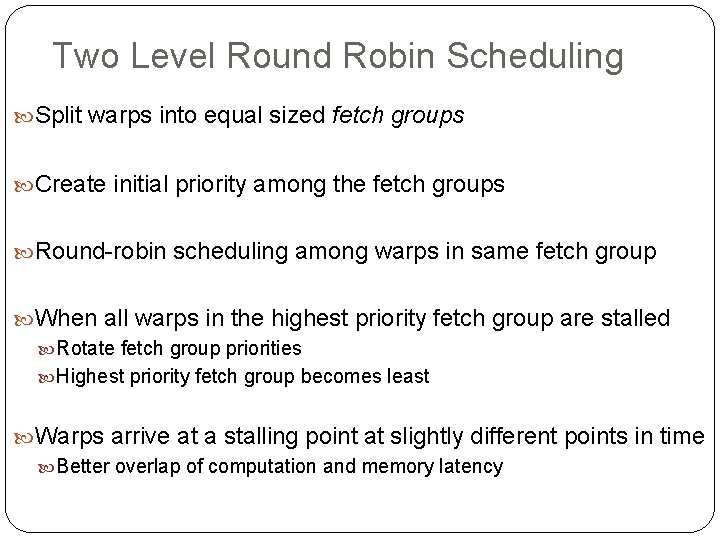

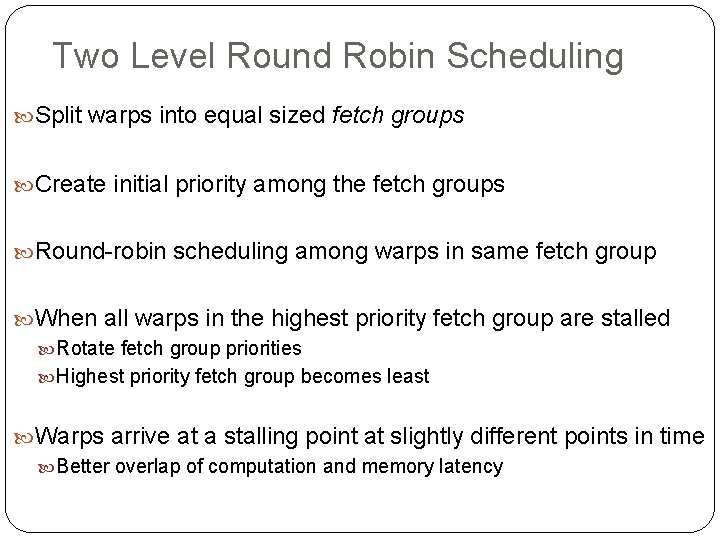

Two Level Round Robin Scheduling

- Split warps into equal-sized fetch groups
- Create initial priority among the fetch groups
- Round-robin scheduling among warps in same fetch group
- When all warps in the highest priority fetch group are stalled
  - Rotate fetch group priorities
  - Highest priority fetch group becomes least
- Warps arrive at a stalling point at slightly different points in time
  - Better overlap of computation and memory latency
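The policy above can be sketched as a small software model. This is a simplified illustration, not the hardware scheduler: the class name `TwoLevelRoundRobin`, the `pick` method, and passing the stalled warps in as a set are all assumptions made for the example.

```python
from collections import deque


class TwoLevelRoundRobin:
    """Toy model of two-level round-robin warp scheduling."""

    def __init__(self, num_warps, group_size):
        if num_warps % group_size != 0:
            raise ValueError("fetch groups must be equal sized")
        # groups[0] is the highest-priority fetch group.
        self.groups = deque(
            deque(range(start, start + group_size))
            for start in range(0, num_warps, group_size)
        )

    def pick(self, stalled):
        """Return the next warp to fetch for, or None if every warp is stalled."""
        for _ in range(len(self.groups)):
            group = self.groups[0]
            for _ in range(len(group)):
                warp = group[0]
                group.rotate(-1)  # round-robin inside the fetch group
                if warp not in stalled:
                    return warp
            # Every warp in the highest-priority group is stalled:
            # it becomes the lowest-priority group.
            self.groups.rotate(-1)
        return None


scheduler = TwoLevelRoundRobin(num_warps=4, group_size=2)
order = [
    scheduler.pick(set()),         # group 0 runs first
    scheduler.pick(set()),
    scheduler.pick({0, 1}),        # group 0 stalls, priorities rotate
    scheduler.pick({0, 1}),
    scheduler.pick({2, 3}),        # group 1 stalls, group 0 is ready again
    scheduler.pick({0, 1, 2, 3}),  # nothing can be scheduled
]
print(order)  # [0, 1, 2, 3, 0, None]
```

Because group 1 only starts issuing once group 0 has stalled, the two groups reach their long-latency operations at different times, which is the overlap the slide describes.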

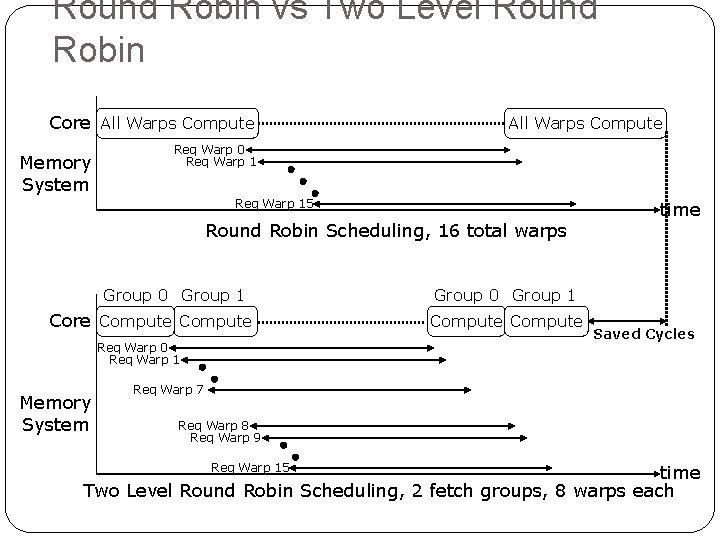

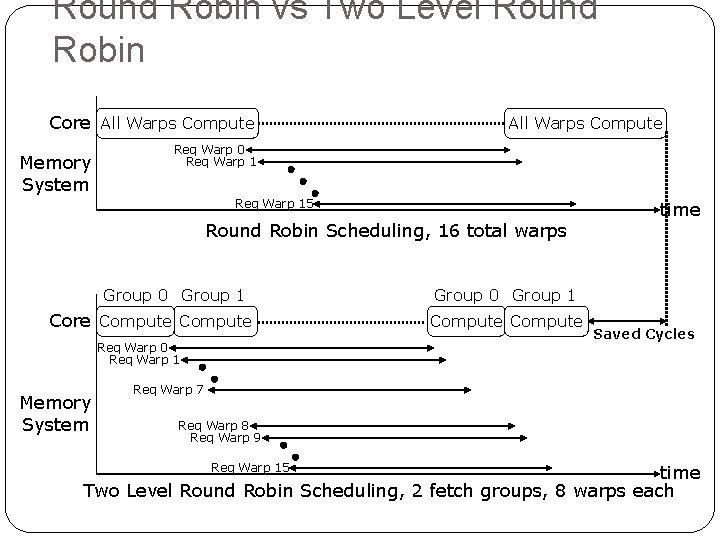

Round Robin vs Two Level Round Robin

[Figure: two timelines. Top, round-robin scheduling, 16 total warps: all warps compute, the memory system services Req Warp 0 through Req Warp 15, then all warps compute again. Bottom, two-level round-robin scheduling, 2 fetch groups of 8 warps each: Group 0 computes and issues Req Warp 0 through Req Warp 7, Group 1 computes and issues Req Warp 8 through Req Warp 15, then Group 0 and Group 1 compute again. The gap between the two end times is labeled "Saved Cycles".]
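A back-of-the-envelope model can reproduce the shape of this comparison. It is a deliberately idealized sketch: each warp is assumed to run one compute phase, issue one memory request when that phase ends, and then run a second compute phase of the same length; the core is assumed to execute one warp at a time; and the values of 10 compute cycles per warp and a 100-cycle memory latency are made up for illustration. The function name `total_cycles` is hypothetical.

```python
def total_cycles(num_warps, num_groups, compute, mem_latency):
    """Cycles to finish compute -> memory request -> compute for all warps.

    Fetch groups run their first compute phase one after another; a group's
    requests are assumed to be issued when that phase ends. num_groups=1
    models plain round-robin, where all warps stall at about the same time.
    """
    warps_per_group = num_warps // num_groups
    phase = warps_per_group * compute  # core cycles for one group's phase

    # Time at which each group's memory data comes back.
    data_ready = [(k + 1) * phase + mem_latency for k in range(num_groups)]

    t = num_groups * phase  # all first compute phases are done
    for ready in data_ready:
        # The second phase needs both a free core and the returned data.
        t = max(t, ready) + phase
    return t


round_robin = total_cycles(num_warps=16, num_groups=1, compute=10, mem_latency=100)
two_level = total_cycles(num_warps=16, num_groups=2, compute=10, mem_latency=100)
print(round_robin, two_level, round_robin - two_level)  # 420 340 80
```

With these assumed numbers, Group 1's first compute phase overlaps with Group 0's outstanding requests, so 80 of the 100 stall cycles are hidden.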

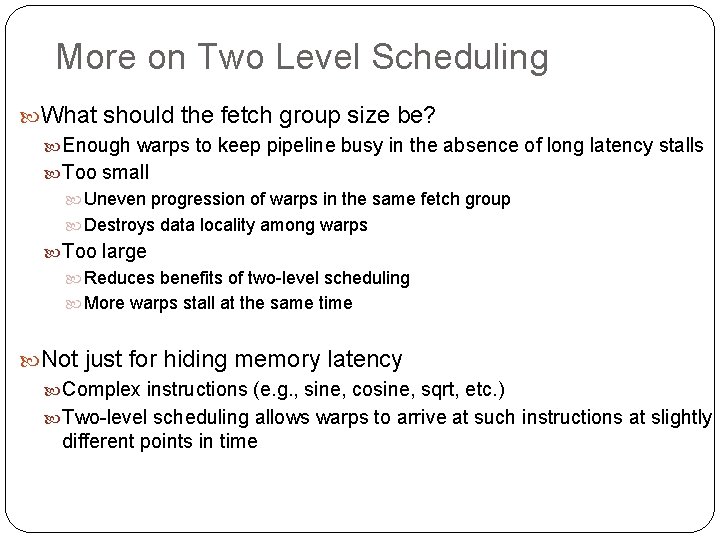

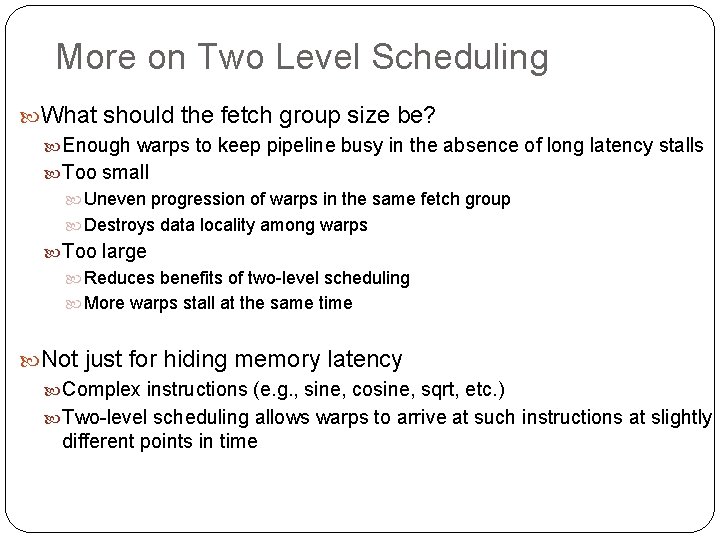

More on Two Level Scheduling

- What should the fetch group size be?
  - Enough warps to keep pipeline busy in the absence of long latency stalls
  - Too small
    - Uneven progression of warps in the same fetch group
    - Destroys data locality among warps
  - Too large
    - Reduces benefits of two-level scheduling
    - More warps stall at the same time
- Not just for hiding memory latency
  - Complex instructions (e.g., sine, cosine, sqrt, etc.)
  - Two-level scheduling allows warps to arrive at such instructions at slightly different points in time

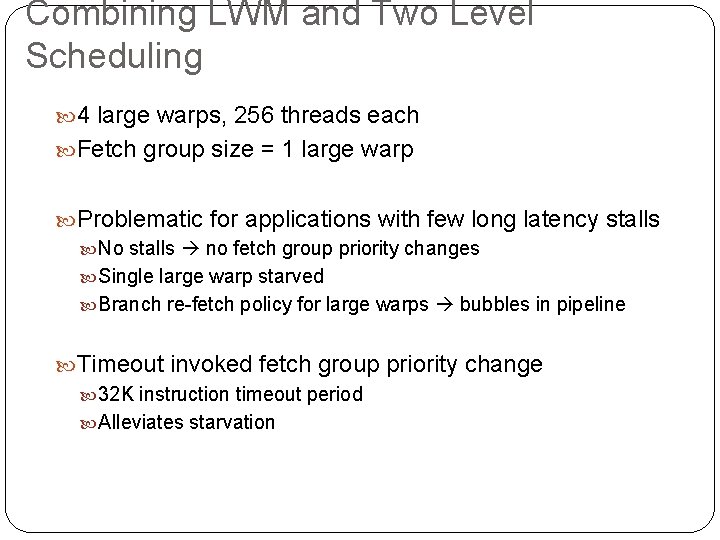

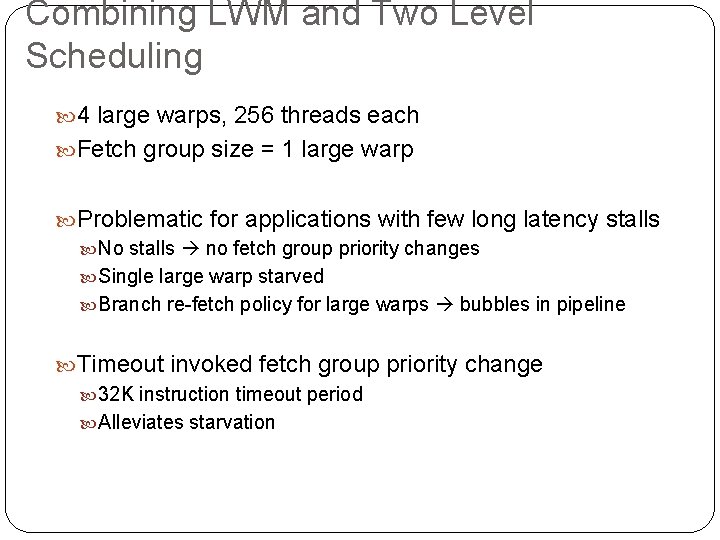

Combining LWM and Two Level Scheduling

- 4 large warps, 256 threads each
  - Fetch group size = 1 large warp
- Problematic for applications with few long latency stalls
  - No stalls → no fetch group priority changes
  - Single large warp starved
  - Branch re-fetch policy for large warps → bubbles in pipeline
- Timeout-invoked fetch group priority change
  - 32K-instruction timeout period
  - Alleviates starvation

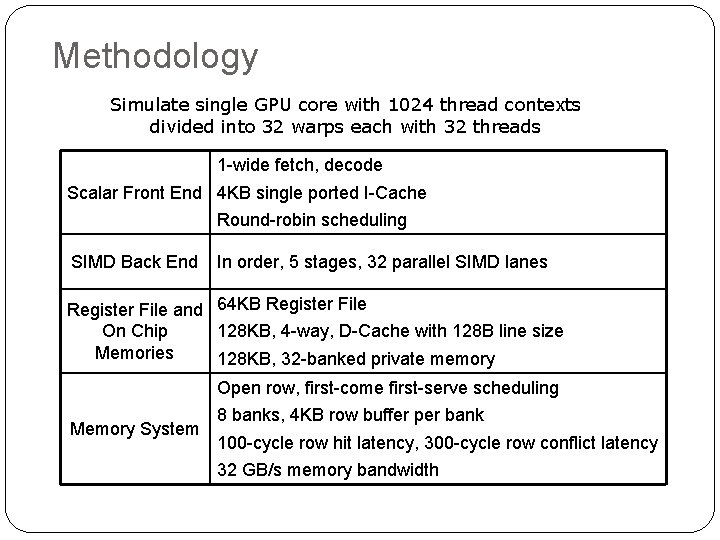

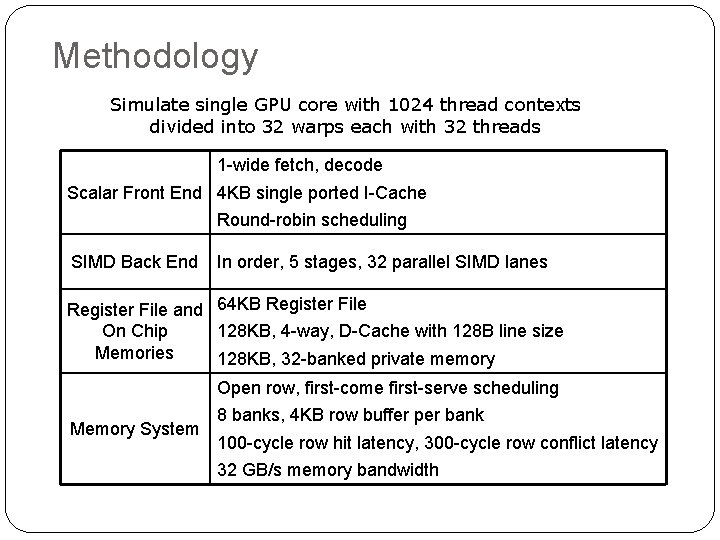

Methodology

Simulate single GPU core with 1024 thread contexts, divided into 32 warps each with 32 threads

- Scalar Front End
  - 1-wide fetch, decode
  - 4 KB single-ported I-Cache
  - Round-robin scheduling
- SIMD Back End
  - In order, 5 stages, 32 parallel SIMD lanes
- Register File and On-Chip Memories
  - 64 KB register file
  - 128 KB, 4-way D-Cache with 128 B line size
  - 128 KB, 32-banked private memory
- Memory System
  - Open row, first-come first-serve scheduling
  - 8 banks, 4 KB row buffer per bank
  - 100-cycle row hit latency, 300-cycle row conflict latency
  - 32 GB/s memory bandwidth

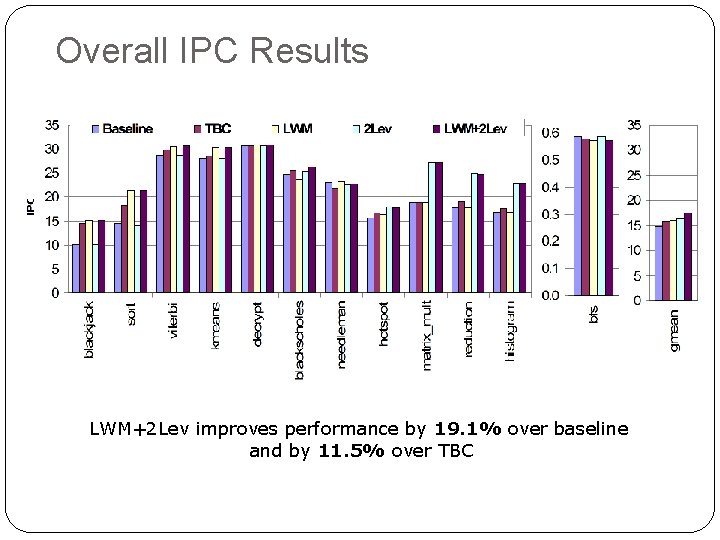

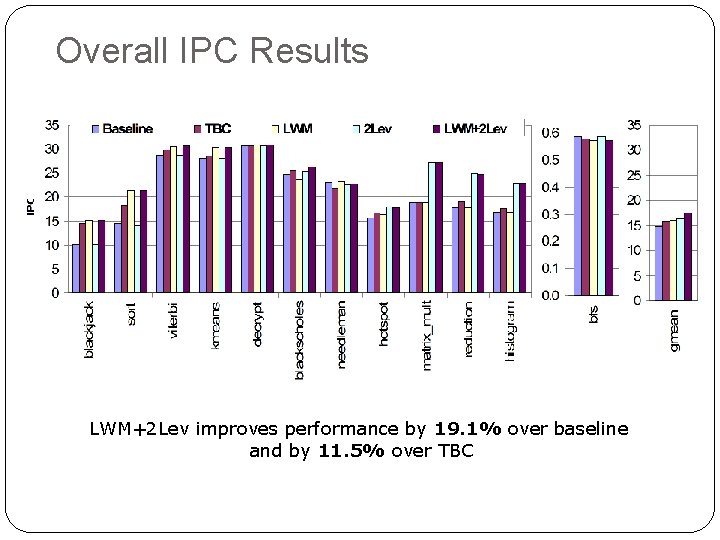

Overall IPC Results

- LWM+2Lev improves performance by 19.1% over baseline and by 11.5% over TBC

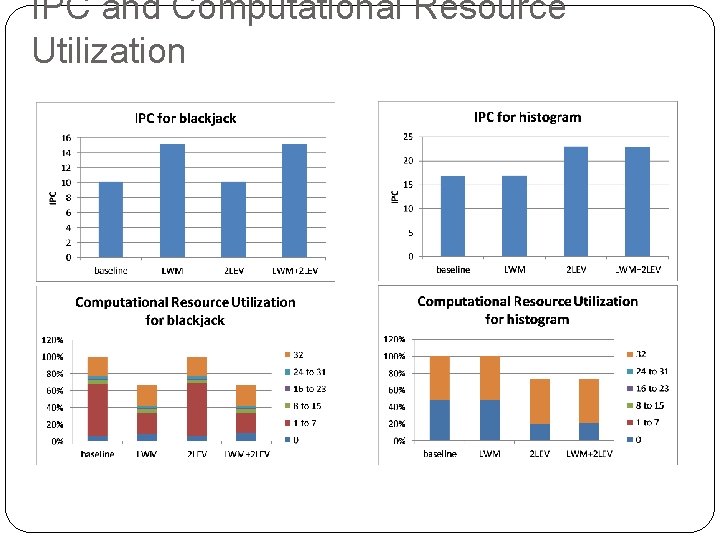

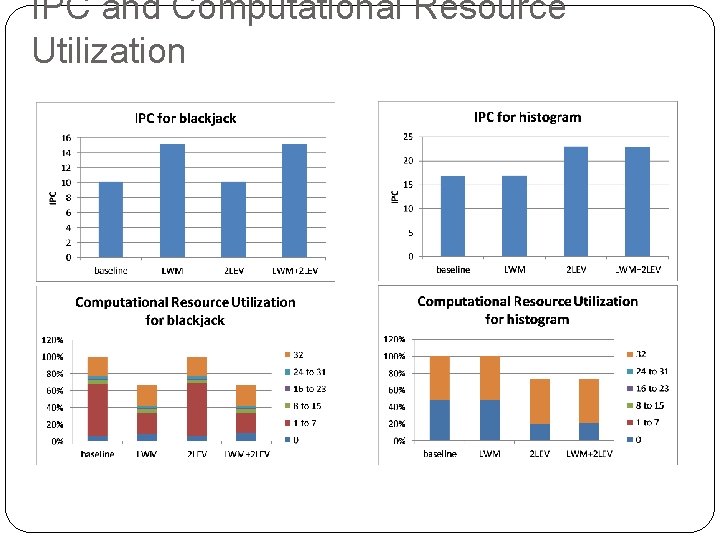

IPC and Computational Resource Utilization

Conclusion

- For maximum performance, the computational resources on GPUs must be effectively utilized
- Branch divergence and long latency operations cause them to be underutilized or unused
- We proposed two mechanisms to alleviate this
  - Large Warp Microarchitecture for branch divergence
  - Two-level scheduling for long latency operations
  - Improves performance by 19.1% over traditional GPU cores
- Increases scope of applications that can run efficiently on a GPU

Questions