Improving GPU Performance Through Resource Sharing Vishwesh Jatala


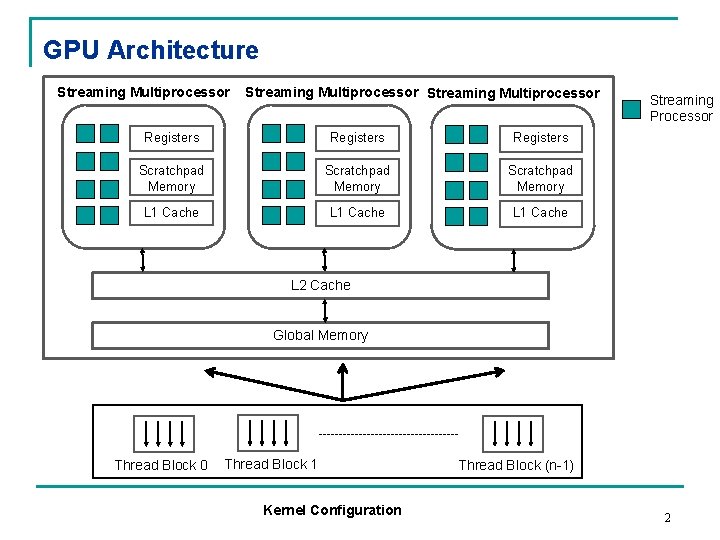

Improving GPU Performance Through Resource Sharing

Vishwesh Jatala¹, Jayvant Anantpur², and Amey Karkare¹

¹ Department of Computer Science and Engineering, Indian Institute of Technology Kanpur, India

² Department of Computational and Data Sciences, Indian Institute of Science, Bangalore, India

ACM Symposium on High-Performance Parallel and Distributed Computing (HPDC-2016)

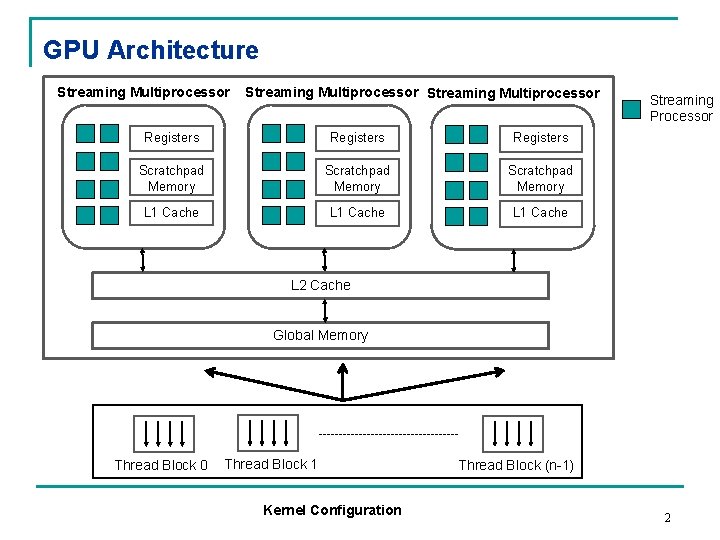

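GPU Architecture

Figure: the thread blocks of a kernel configuration (Thread Block 0 to Thread Block (n-1)) are distributed across the streaming multiprocessors (SMs). Each SM contains streaming processors, registers, scratchpad memory, and an L1 cache; the SMs share an L2 cache and global memory.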

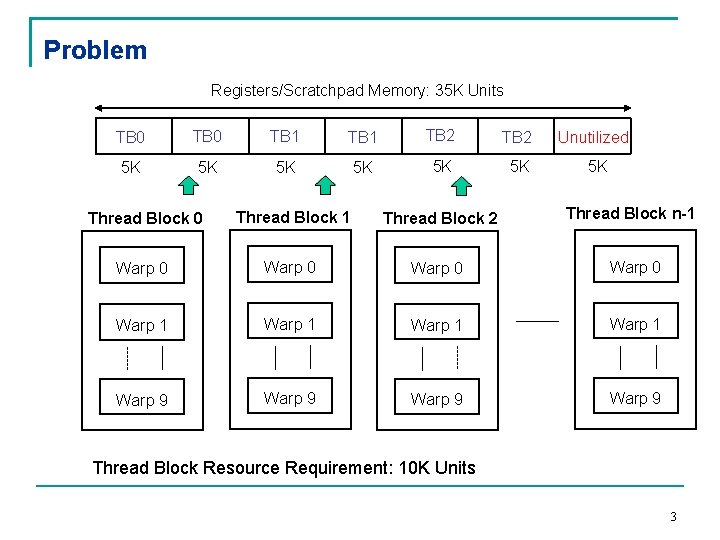

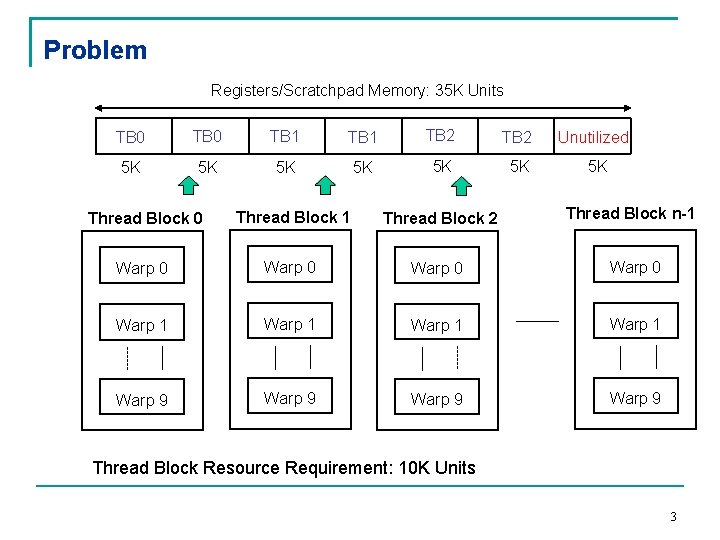

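Problem

A kernel has thread blocks 0 to n-1. Each thread block (Warp 0 to Warp 9) requires 10K units of registers/scratchpad memory, and an SM has 35K units. Because a thread block is launched only when all of its resources are available, only three thread blocks (TB 0, TB 1, TB 2) become resident, using 30K units; the remaining 5K units stay unutilized.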
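The arithmetic behind this slide fits in a few lines. This is a minimal sketch, assuming a thread block is admitted only when its whole requirement fits and that resources are counted in the slide's abstract units; the function name `resident_blocks` is hypothetical, not from the slides.

```python
def resident_blocks(units_per_sm: int, units_per_block: int) -> tuple[int, int]:
    """Return (resident thread blocks, unutilized units) without sharing."""
    # A thread block is launched only if its full requirement still fits.
    blocks = units_per_sm // units_per_block
    unutilized = units_per_sm - blocks * units_per_block
    return blocks, unutilized


# Slide example in thousands of units: 35K per SM, 10K per thread block.
print(resident_blocks(35, 10))  # (3, 5)
```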

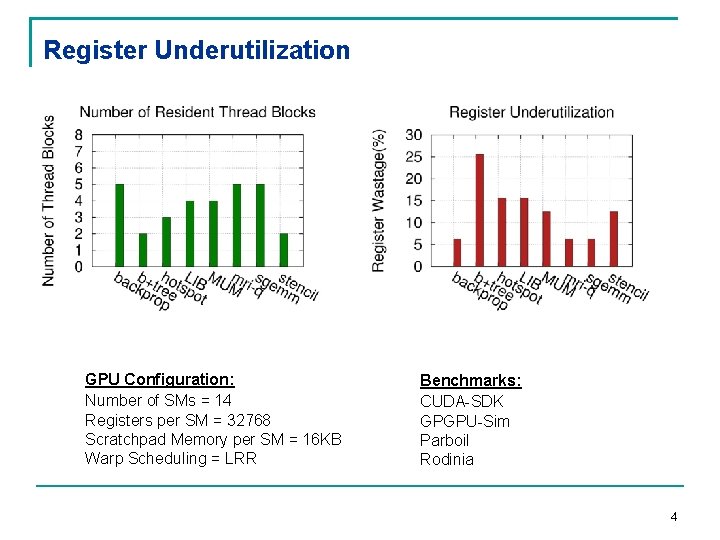

Register Underutilization

GPU configuration:
- Number of SMs: 14
- Registers per SM: 32768
- Scratchpad memory per SM: 16 KB
- Warp scheduling: LRR (loose round-robin)

Benchmarks: CUDA-SDK, GPGPU-Sim, Parboil, Rodinia

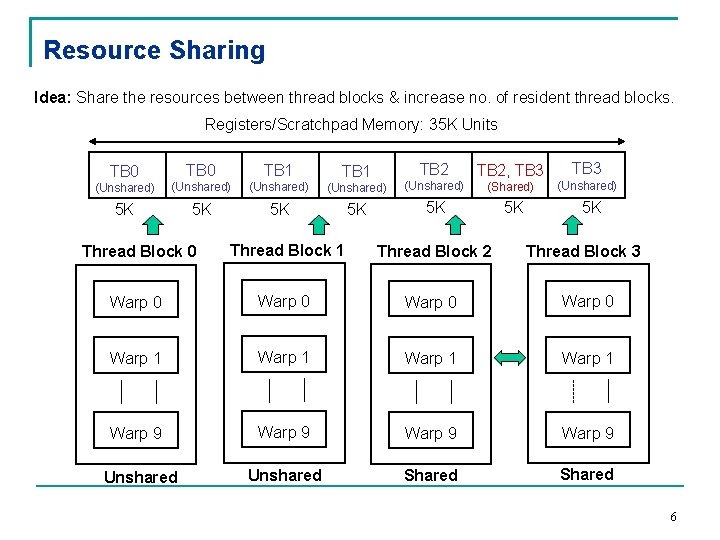

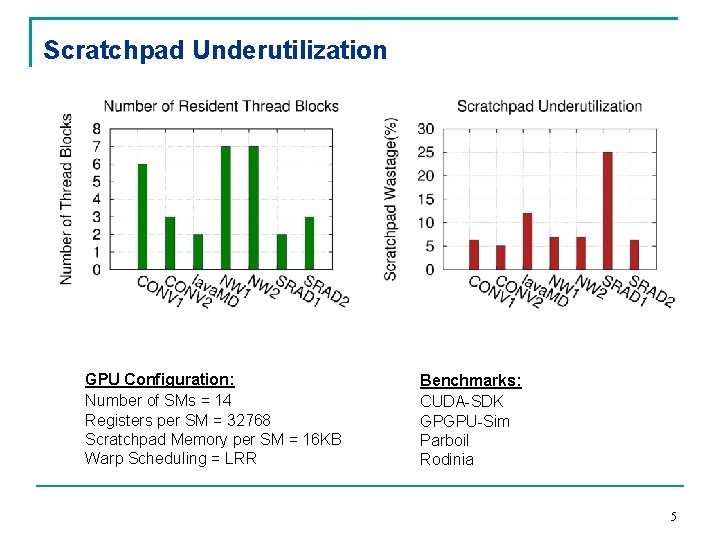

Scratchpad Underutilization

GPU configuration:
- Number of SMs: 14
- Registers per SM: 32768
- Scratchpad memory per SM: 16 KB
- Warp scheduling: LRR (loose round-robin)

Benchmarks: CUDA-SDK, GPGPU-Sim, Parboil, Rodinia
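To see where this underutilization comes from, the sketch below computes per-SM occupancy for one kernel from the configuration above plus the per-core limits listed under Experimental Setup (at most 8 thread blocks and 1536 threads per core). It is a simplified model that assumes no allocation granularity or rounding (real hardware and GPGPU-Sim may round allocations up); `SMConfig` and `occupancy` are hypothetical names. The block sizes and per-block requirements in the usage lines come from the Benchmarks table (hotspot and SRAD 1).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SMConfig:
    registers: int = 32768             # registers per SM
    scratchpad_bytes: int = 16 * 1024  # scratchpad memory per SM
    max_blocks: int = 8                # max resident thread blocks per core
    max_threads: int = 1536            # max resident threads per core


def occupancy(cfg: SMConfig, block_size: int, regs_per_thread: int = 0,
              scratchpad_per_block: int = 0) -> dict:
    """Resident blocks per SM, the limiting factor, and leftover resources.

    A requirement of 0 means "not modeled" and imposes no limit.
    """
    regs_per_block = block_size * regs_per_thread
    limits = {"blocks": cfg.max_blocks, "threads": cfg.max_threads // block_size}
    if regs_per_block:
        limits["registers"] = cfg.registers // regs_per_block
    if scratchpad_per_block:
        limits["scratchpad"] = cfg.scratchpad_bytes // scratchpad_per_block
    resident = min(limits.values())
    return {
        "resident_blocks": resident,
        "limited_by": min(limits, key=limits.get),
        "unused_registers": cfg.registers - resident * regs_per_block,
        "unused_scratchpad": cfg.scratchpad_bytes - resident * scratchpad_per_block,
    }


cfg = SMConfig()
# hotspot: 256 threads x 36 registers = 9216 registers per block.
print(occupancy(cfg, 256, regs_per_thread=36))
# SRAD 1: 256 threads, 6144 bytes of scratchpad per block.
print(occupancy(cfg, 256, scratchpad_per_block=6144))
```

Under this model, hotspot gets 3 resident blocks limited by registers, leaving 5120 of 32768 registers (about 16%) unused, and SRAD 1 gets 2 blocks limited by scratchpad, leaving 4096 of 16384 bytes (25%) unused.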

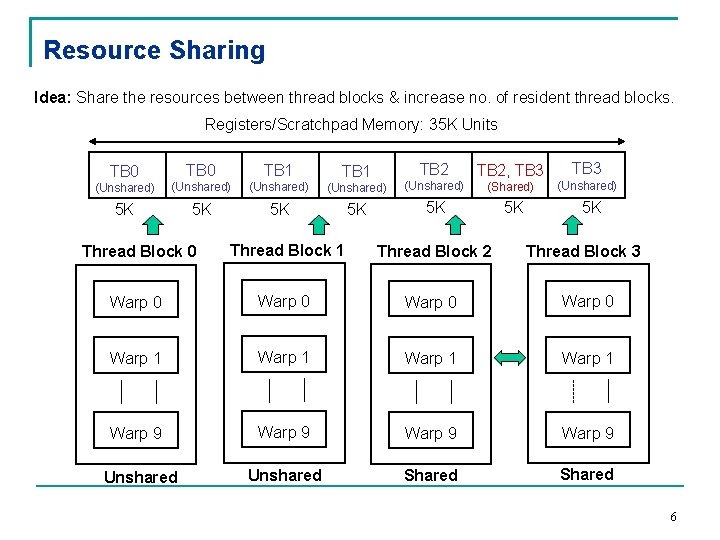

Resource Sharing

Idea: share resources between thread blocks to increase the number of resident thread blocks.

With the same 35K units per SM and 10K units per thread block, TB 0 and TB 1 run unshared (10K each). TB 2 and TB 3 each get 5K unshared units and share a further 5K region, so each still sees 10K units. The resources of each thread block (Warp 0 to Warp 9) are thus split into an unshared part and a shared part, and four thread blocks become resident instead of three.
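A sketch of how the 35K units could be laid out once TB 2 and TB 3 share. It assumes t is the unshared fraction of each shared block (t = 0.5 here, matching the 5K/5K split) and places regions in a simple left-to-right order; the function `shared_layout` and the region order are illustrative, not taken from the slides.

```python
import math


def shared_layout(total: int, per_block: int, t: float,
                  unshared_blocks: int, shared_pairs: int) -> list[tuple[str, int, int]]:
    """Return (label, start, end) regions; raise if the plan does not fit."""
    private = math.floor(t * per_block)  # own part of a block in sharing mode
    common = per_block - private         # part shared with the partner block
    regions, cursor, tb = [], 0, 0

    def take(label: str, size: int) -> None:
        nonlocal cursor
        regions.append((label, cursor, cursor + size))
        cursor += size

    for _ in range(unshared_blocks):
        take(f"TB {tb} (unshared)", per_block)
        tb += 1
    for _ in range(shared_pairs):
        take(f"TB {tb} (unshared part)", private)
        take(f"TB {tb + 1} (unshared part)", private)
        take(f"TB {tb}, TB {tb + 1} (shared)", common)
        tb += 2
    if cursor > total:
        raise ValueError(f"needs {cursor} units, only {total} available")
    return regions


for region in shared_layout(35, 10, 0.5, unshared_blocks=2, shared_pairs=1):
    print(region)
```

The pair TB 2/TB 3 occupies only 15K units, yet each block can address its full 10K (5K private plus the 5K shared region).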

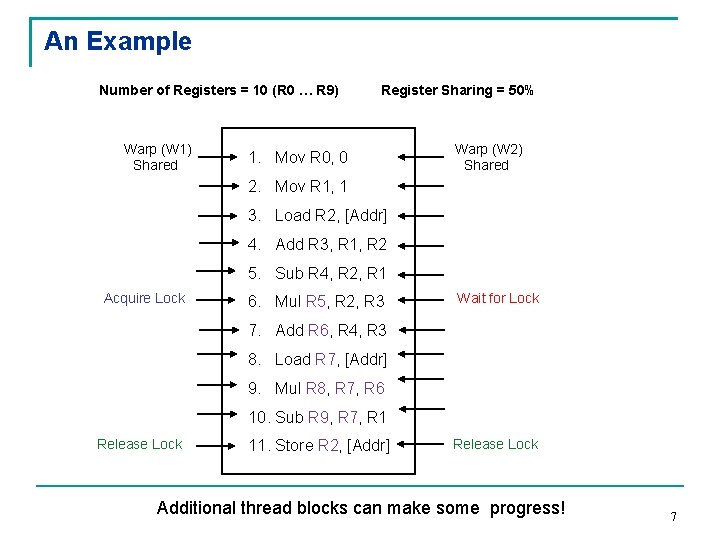

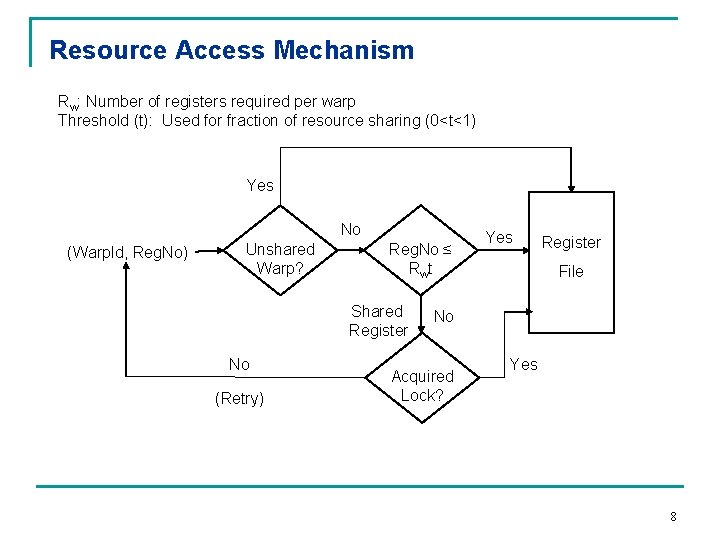

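An Example

Warps W1 and W2 are shared warps. Each needs 10 registers (R0 to R9), and register sharing is 50%, so R0 to R4 are unshared and R5 to R9 are shared.

1. Mov R0, 0
2. Mov R1, 1
3. Load R2, [Addr]
4. Add R3, R1, R2
5. Sub R4, R2, R1
6. Mul R5, R2, R3 (W1 acquires the lock; W2 waits for the lock)
7. Add R6, R4, R3
8. Load R7, [Addr]
9. Mul R8, R7, R6
10. Sub R9, R7, R1
11. Store R2, [Addr]

Both warps execute instructions 1 to 5 using only unshared registers. At instruction 6, the first access to a shared register, W1 acquires the lock and W2 waits until W1 releases it. Additional thread blocks can therefore make some progress!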
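The point of the example is that a shared warp can run every instruction before its first shared-register access without the lock. The sketch below counts that lock-free prefix for the example's instructions. It assumes Ri has register number i + 1 and that a register is unshared when its number is at most Rw × t (the rule on the Resource Access Mechanism slide); `EXAMPLE` and `lock_free_prefix` are hypothetical names.

```python
# (instruction, indices i of the Ri operands) for the example program.
EXAMPLE = [
    ("Mov R0, 0", [0]),
    ("Mov R1, 1", [1]),
    ("Load R2, [Addr]", [2]),
    ("Add R3, R1, R2", [3, 1, 2]),
    ("Sub R4, R2, R1", [4, 2, 1]),
    ("Mul R5, R2, R3", [5, 2, 3]),
    ("Add R6, R4, R3", [6, 4, 3]),
    ("Load R7, [Addr]", [7]),
    ("Mul R8, R7, R6", [8, 7, 6]),
    ("Sub R9, R7, R1", [9, 7, 1]),
    ("Store R2, [Addr]", [2]),
]


def lock_free_prefix(program, regs_per_warp: int, t: float) -> int:
    """Count leading instructions that touch only unshared registers."""
    for count, (_, regs) in enumerate(program):
        # Ri has register number i + 1; it is shared when i + 1 > Rw * t.
        if any(i + 1 > regs_per_warp * t for i in regs):
            return count
    return len(program)


print(lock_free_prefix(EXAMPLE, regs_per_warp=10, t=0.5))  # 5
```

Five instructions run without the lock; instruction 6 (Mul R5, R2, R3) is where W2 has to wait.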

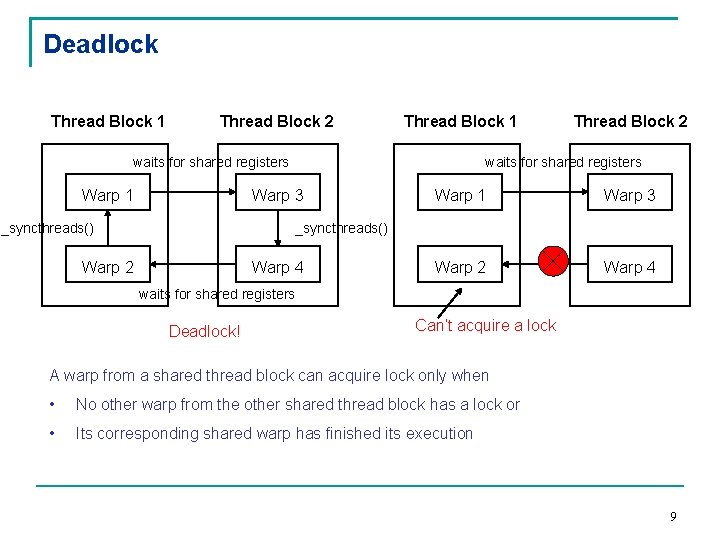

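Resource Access Mechanism

- Rw: number of registers required per warp
- Threshold t: fraction of each warp's registers that stays unshared (0 < t < 1)

For an access (WarpId, RegNo):
1. If the warp is unshared, access the register file.
2. Otherwise, if RegNo ≤ Rw × t, the register is unshared: access the register file.
3. Otherwise the register is shared: if the warp has acquired the lock, access the register file; if not, retry.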
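The flowchart translates into a small decision function. This is a sketch, assuming register numbers start at 1 (as in the Reg No annotations on the register-declaration slide) and modelling lock ownership as a plain set; how a warp obtains the lock is left out, and `RegisterAccessUnit` is a hypothetical name.

```python
from dataclasses import dataclass, field


@dataclass
class RegisterAccessUnit:
    regs_per_warp: int                                  # Rw
    t: float                                            # unshared fraction, 0 < t < 1
    unshared_warps: set[int] = field(default_factory=set)
    lock_holders: set[int] = field(default_factory=set)

    def access(self, warp_id: int, reg_no: int) -> bool:
        """True: use the register file now. False: stall and retry."""
        if warp_id in self.unshared_warps:
            return True                       # warp of an unshared thread block
        if reg_no <= self.regs_per_warp * self.t:
            return True                       # register in the unshared portion
        return warp_id in self.lock_holders   # shared register needs the lock


unit = RegisterAccessUnit(regs_per_warp=10, t=0.5, unshared_warps={0})
print(unit.access(1, 5), unit.access(1, 6))  # True False
unit.lock_holders.add(1)
print(unit.access(1, 6))                      # True
```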

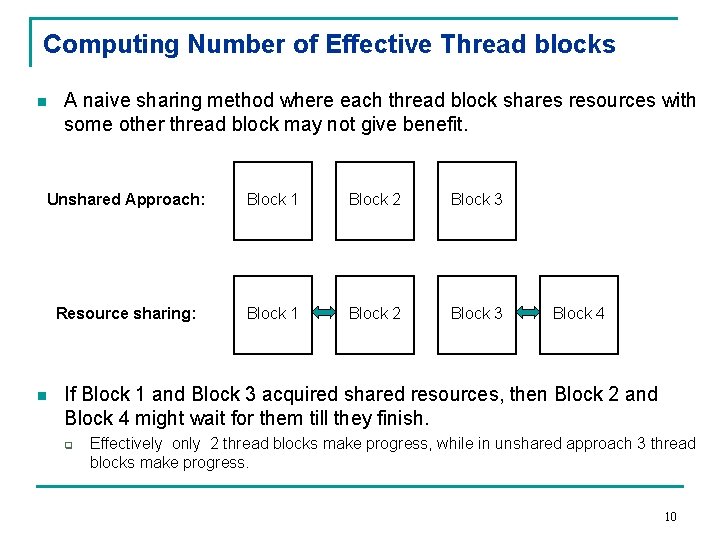

Deadlock

Warps 1 to 4 belong to Thread Block 1 and Thread Block 2, which share registers. In each thread block, one warp waits at __syncthreads() while holding a lock on shared registers, and another warp waits for shared registers locked by a warp of the other thread block. No waiting warp can acquire a lock, so neither block gets past its barrier: deadlock!

To avoid this, a warp from a shared thread block can acquire a lock only when:
- no warp from the other shared thread block holds a lock, or
- its corresponding shared warp has finished its execution.
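One way to encode this acquisition rule, as a hedged sketch: it assumes thread blocks are shared in pairs (numbered 0 and 1) and that warp i of one block shares its registers with warp i of the partner block; `PairLockManager`, `try_acquire`, and `finish` are hypothetical names. A block can take a lock while the partner block holds one only for warps whose partner warp has already finished, which is intended to rule out the circular wait described above.

```python
class PairLockManager:
    """Locks for two thread blocks (0 and 1) that share registers warp by warp."""

    def __init__(self, warps_per_block: int) -> None:
        self.owner = [None] * warps_per_block  # block owning pair i's registers, or None
        self.finished = set()                  # (block, warp) pairs that completed

    def try_acquire(self, block: int, warp: int) -> bool:
        if self.owner[warp] == block:
            return True                  # already the owner warp
        if self.owner[warp] is not None:
            return False                 # partner warp holds these registers
        other = 1 - block
        partner_finished = (other, warp) in self.finished
        other_holds_lock = other in self.owner
        # Rule from the slide: no warp of the other block holds a lock,
        # or this warp's partner warp has finished its execution.
        if other_holds_lock and not partner_finished:
            return False
        self.owner[warp] = block
        return True

    def finish(self, block: int, warp: int) -> None:
        """Mark a warp as finished and release its lock, if it holds one."""
        self.finished.add((block, warp))
        if self.owner[warp] == block:
            self.owner[warp] = None


locks = PairLockManager(warps_per_block=2)
print(locks.try_acquire(0, 0))  # True: block 1 holds no lock
print(locks.try_acquire(1, 1))  # False: block 0 already holds a lock
locks.finish(0, 0)
print(locks.try_acquire(1, 1))  # True: block 0 holds no lock any more
```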

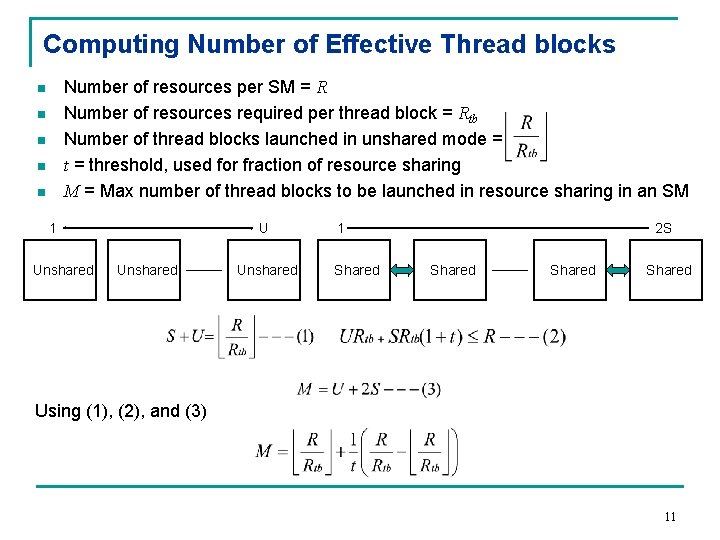

Computing the Number of Effective Thread Blocks

A naive sharing method, in which each thread block shares resources with some other thread block, may not give any benefit.

- Unshared approach: Block 1, Block 2, and Block 3 are resident.
- Resource sharing: Block 1, Block 2, Block 3, and Block 4 are resident.

If Block 1 and Block 3 acquire the shared resources, Block 2 and Block 4 may wait for them until they finish. Effectively only 2 thread blocks make progress, whereas 3 thread blocks make progress in the unshared approach.

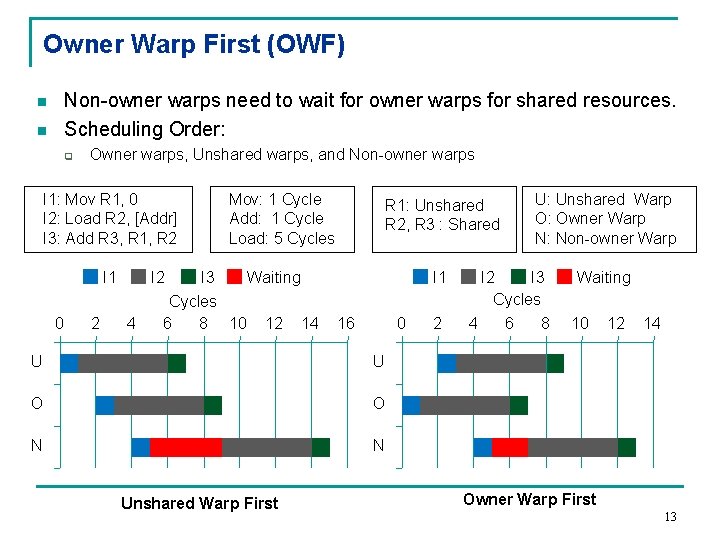

Computing the Number of Effective Thread Blocks

- R: number of resources per SM
- Rtb: number of resources required per thread block
- Number of thread blocks launched in unshared mode
- t: threshold, used for the fraction of resource sharing
- M: maximum number of thread blocks to be launched with resource sharing in an SM

Equations (1) to (3), relating the unshared and shared thread blocks to these quantities, appear only in the slide image; M follows from combining (1), (2), and (3).
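The equations themselves are only in the slide image, so the following is not a reconstruction of them. It is a brute-force sketch of the same trade-off under stated assumptions: each shared pair of thread blocks needs Rtb + ⌊t · Rtb⌋ resources (two private parts of ⌊t · Rtb⌋ plus one shared part of Rtb − ⌊t · Rtb⌋), and, following the worst case on the previous slide, only one block per pair is counted as making progress, so a plan with U unshared blocks and S pairs is accepted only if U + S is at least the unshared count ⌊R / Rtb⌋. `plan_sharing` and its `max_blocks` cap are hypothetical.

```python
import math


def plan_sharing(R: int, Rtb: int, t: float, max_blocks: int = 8) -> tuple[int, int, int]:
    """Return (U, S, M): unshared blocks, shared pairs, and M = U + 2S."""
    baseline = min(R // Rtb, max_blocks)    # resident blocks without sharing
    pair_cost = Rtb + math.floor(t * Rtb)   # 2 private parts + 1 shared part
    best = (baseline, 0, baseline)
    for S in range(max_blocks // 2 + 1):
        left = R - S * pair_cost
        if left < 0:
            break
        U = min(left // Rtb, max_blocks - 2 * S)
        # Worst case: one block per shared pair progresses at a time.
        if U + S < baseline:
            continue
        if U + 2 * S > best[2]:
            best = (U, S, U + 2 * S)
    return best


print(plan_sharing(35, 10, 0.5))                     # (2, 1, 4)
print(plan_sharing(32768, 9216, 0.1, max_blocks=6))  # (0, 3, 6)
```

The first call reproduces the Resource Sharing slide (four resident blocks instead of three; the all-pairs plan with two pairs is rejected as on the previous slide). The second applies the model to hotspot's 9216 registers per block with t = 0.1 and the 1536-thread limit (at most 6 blocks of 256 threads).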

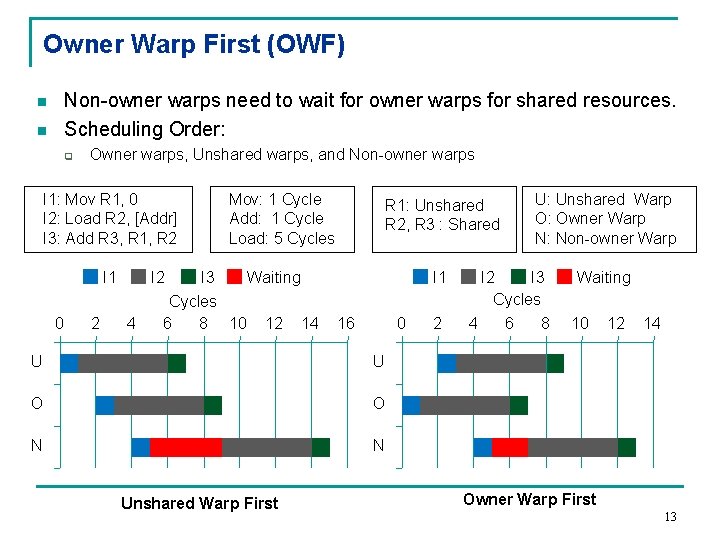

Optimizations

Types of warps in the SM:
- Unshared warps: warps from an unshared thread block
- Owner warps: warps that hold an exclusive lock
- Non-owner warps: warps that do not hold the lock

Optimizations:
- Owner Warp First (OWF)
- Unrolling and Reordering of Register Declarations
- Dynamic Warp Execution

Owner Warp First (OWF)

Non-owner warps must wait for owner warps to release the shared resources. OWF therefore schedules warps in the order:
1. Owner warps
2. Unshared warps
3. Non-owner warps

Example: each warp executes

- I1: Mov R1, 0
- I2: Load R2, [Addr]
- I3: Add R3, R1, R2

with latencies Mov = 1 cycle, Add = 1 cycle, and Load = 5 cycles. R1 is unshared; R2 and R3 are shared. The figure compares the timelines (cycles 0 to 16) of unshared (U), owner (O), and non-owner (N) warps under Unshared Warp First and Owner Warp First scheduling. A non-owner warp can execute I1 but must wait for the lock before I2, so issuing owner warps first lets them finish and release the lock sooner.
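The OWF priority can be sketched as a stable sort over the warps in the base scheduler's order, so that order (for example LRR) is preserved within each class. This illustrates only the ordering; a real scheduler also checks whether each warp is ready to issue, and the names `WarpKind` and `owf_order` are hypothetical.

```python
from enum import IntEnum


class WarpKind(IntEnum):
    OWNER = 0       # holds the lock on its shared resources
    UNSHARED = 1    # belongs to an unshared thread block
    NON_OWNER = 2   # shares resources but does not hold the lock


def owf_order(warps: list[tuple[int, WarpKind]]) -> list[int]:
    """Return warp ids in OWF priority; ties keep the base scheduler's order."""
    return [warp_id for warp_id, _ in sorted(warps, key=lambda w: w[1])]


warps = [(0, WarpKind.UNSHARED), (1, WarpKind.NON_OWNER), (2, WarpKind.OWNER),
         (3, WarpKind.UNSHARED), (4, WarpKind.OWNER)]
print(owf_order(warps))  # [2, 4, 0, 3, 1]
```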

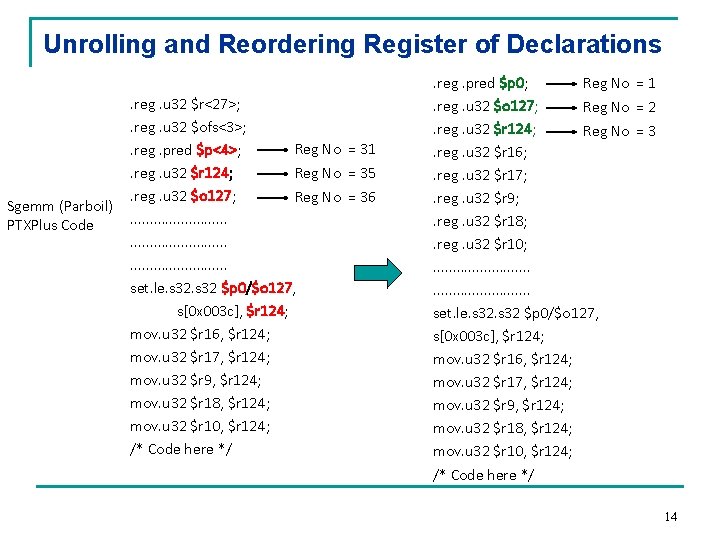

Unrolling and Reordering of Register Declarations

Sgemm (Parboil), PTXPlus code. Original declarations:

```
.reg .u32 $r<27>;
.reg .u32 $ofs<3>;
.reg .pred $p<4>;    // Reg No = 31
.reg .u32 $r124;     // Reg No = 35
.reg .u32 $o127;     // Reg No = 36
...
set.le.s32 $p0/$o127, s[0x003c], $r124;
mov.u32 $r16, $r124;
mov.u32 $r17, $r124;
mov.u32 $r9, $r124;
mov.u32 $r18, $r124;
mov.u32 $r10, $r124;
/* Code here */
```

After unrolling and reordering:

```
.reg .pred $p0;      // Reg No = 1
.reg .u32 $o127;     // Reg No = 2
.reg .u32 $r124;     // Reg No = 3
.reg .u32 $r16;
.reg .u32 $r17;
.reg .u32 $r9;
.reg .u32 $r18;
.reg .u32 $r10;
...
set.le.s32 $p0/$o127, s[0x003c], $r124;
mov.u32 $r16, $r124;
mov.u32 $r17, $r124;
mov.u32 $r9, $r124;
mov.u32 $r18, $r124;
mov.u32 $r10, $r124;
/* Code here */
```

Vector declarations such as $r<27> are unrolled into individual registers, and the registers used first receive the lowest register numbers. Since a register is unshared when RegNo ≤ Rw × t, the early-used registers fall into the unshared portion, so a warp can execute more instructions before it needs the lock.
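The transformation can be sketched as a small text pass over PTXPlus register names. It is illustrative only: it assumes vector declarations have the form `$name<count>`, treats any `$`-prefixed token in an instruction as a register use, and ignores register types; `unroll` and `reorder` are hypothetical helpers, not part of GPGPU-Sim.

```python
import re


def unroll(decl: str) -> list[str]:
    """Expand a vector declaration such as '$r<27>' into '$r0' ... '$r26'."""
    m = re.fullmatch(r"(\$[A-Za-z_]+)<(\d+)>", decl)
    if m is None:
        return [decl]
    prefix, count = m.group(1), int(m.group(2))
    return [f"{prefix}{i}" for i in range(count)]


def reorder(decls: list[str], instructions: list[str]) -> list[str]:
    """Registers in order of first use, then the unused rest in declared order."""
    declared = [r for d in decls for r in unroll(d)]
    known, seen, order = set(declared), set(), []
    for ins in instructions:
        for name in re.findall(r"\$\w+", ins):
            if name in known and name not in seen:
                seen.add(name)
                order.append(name)
    return order + [r for r in declared if r not in seen]


decls = ["$r<27>", "$ofs<3>", "$p<4>", "$r124", "$o127"]
code = ["set.le.s32 $p0/$o127, s[0x003c], $r124;",
        "mov.u32 $r16, $r124;", "mov.u32 $r17, $r124;", "mov.u32 $r9, $r124;",
        "mov.u32 $r18, $r124;", "mov.u32 $r10, $r124;"]
new_order = reorder(decls, code)
print(new_order[:8])
# ['$p0', '$o127', '$r124', '$r16', '$r17', '$r9', '$r18', '$r10']
```

If Reg No is taken as the 1-based position in the list, $p0, $o127, and $r124 move from 31, 35, and 36 to 1, 2, and 3, as on the slide.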

![Dynamic Warp Execution](https://slidetodoc.com/presentation_image_h/dc56c69f985fa4c66270ff0a29a6b4ef/image-15.jpg)

Dynamic Warp Execution

- Kayiran et al. [1] show that increasing the number of thread blocks may degrade the performance of memory-bound applications.
- Approach: control the execution of memory instructions from non-owner warps.

Strategy:
- Periodically monitor the stall cycles of each SM.
- Decrease or increase the probability of executing memory instructions from non-owner warps.

Steps:
- Consider SM[1..N]: SM[1] runs in unshared mode, and SM[2]..SM[N] run in sharing mode.
- Periodically compare the stall cycles of SM[2]..SM[N] with those of SM[1], and decrease or increase the probability of executing memory instructions of non-owner warps accordingly.
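A minimal sketch of the feedback loop in the steps above. The fixed step size, clamping to [0, 1], leaving SM[1] untouched, and using 0-based list positions (index 0 is SM[1]) are assumptions, not details from the slides; `update_probabilities` and `may_issue_memory` are hypothetical names.

```python
import random


def update_probabilities(stall_cycles: list[int], probs: list[float],
                         step: float = 0.1) -> list[float]:
    """Adjust, per sharing-mode SM, the probability of issuing memory
    instructions from non-owner warps. Index 0 is the unshared reference SM."""
    ref = stall_cycles[0]
    new = list(probs)
    for i in range(1, len(stall_cycles)):
        if stall_cycles[i] > ref:      # more stalls than unshared: throttle
            new[i] = max(0.0, probs[i] - step)
        elif stall_cycles[i] < ref:    # fewer stalls than unshared: allow more
            new[i] = min(1.0, probs[i] + step)
    return new


def may_issue_memory(prob: float, rng: random.Random) -> bool:
    """Gate a non-owner warp's memory instruction with probability prob."""
    return rng.random() < prob


print(update_probabilities([100, 150, 80, 100], [0.5, 0.5, 0.5, 0.5], step=0.25))
# [0.5, 0.25, 0.75, 0.5]
```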

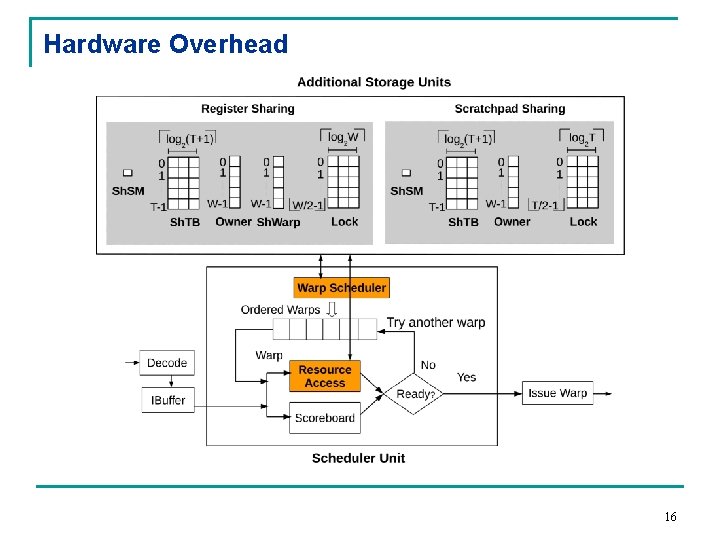

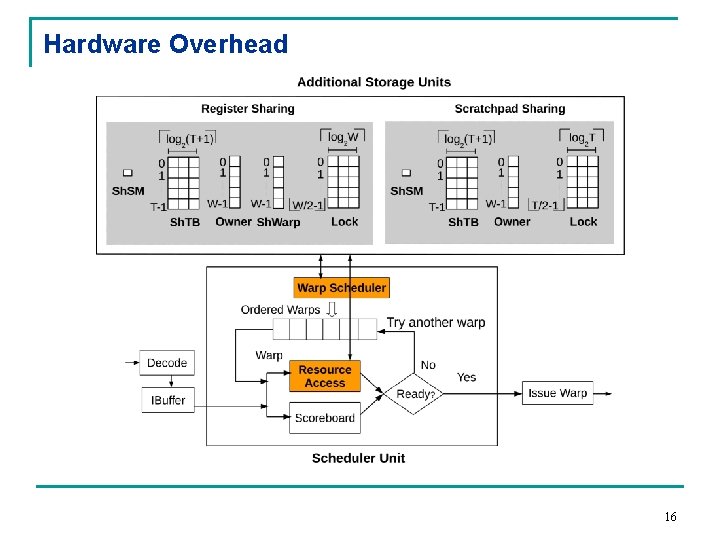

Hardware Overhead

![Experimental Setup](https://slidetodoc.com/presentation_image_h/dc56c69f985fa4c66270ff0a29a6b4ef/image-17.jpg)

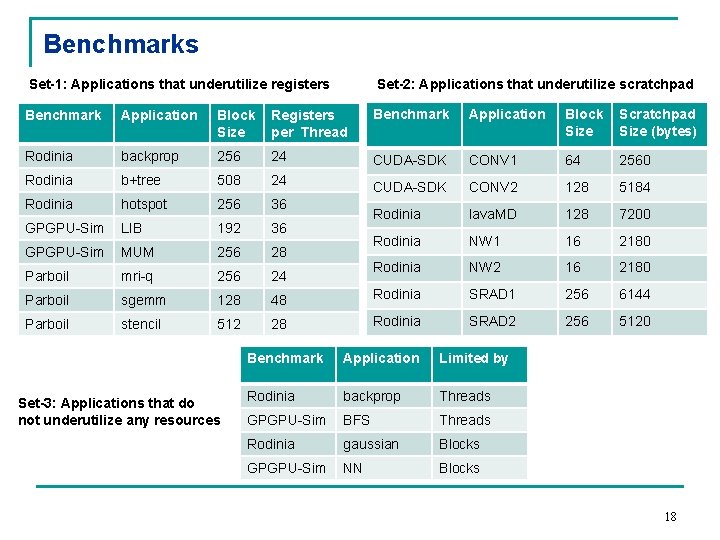

Experimental Setup

Implemented in GPGPU-Sim v3.x [2], with the following configuration:

| Resource | Value |
|---|---|
| Number of SMs | 14 |
| Registers per SM | 32768 |
| Scratchpad memory per SM | 16 KB |
| Warp scheduling | LRR |
| Max number of TBs per core | 8 |
| Max number of threads per core | 1536 |

- Benchmark suites: GPGPU-Sim [3], Rodinia [4], Parboil [5], CUDA-SDK [6]
- Benchmark applications simulated with PTXPlus assembly code
- Threshold t = 0.1 (90% resource sharing)

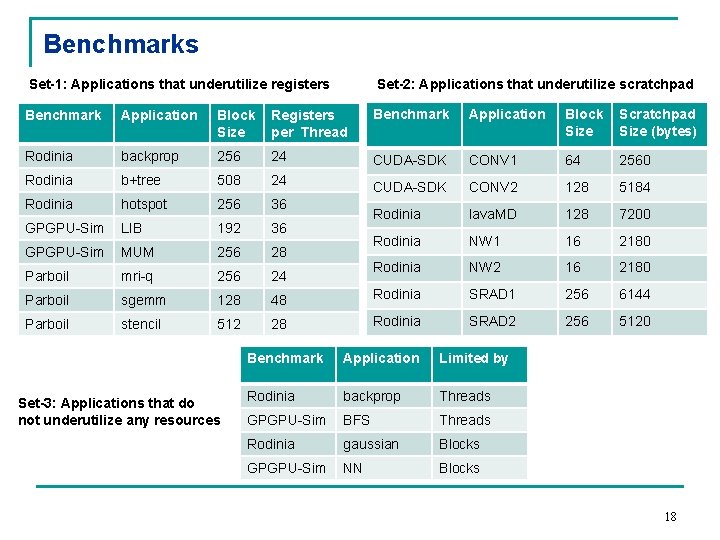

Benchmarks

Set-1: Applications that underutilize registers

| Benchmark | Application | Block Size | Registers per Thread |
|---|---|---|---|
| Rodinia | backprop | 256 | 24 |
| Rodinia | b+tree | 508 | 24 |
| Rodinia | hotspot | 256 | 36 |
| GPGPU-Sim | LIB | 192 | 36 |
| GPGPU-Sim | MUM | 256 | 28 |
| Parboil | mri-q | 256 | 24 |
| Parboil | sgemm | 128 | 48 |
| Parboil | stencil | 512 | 28 |

Set-2: Applications that underutilize scratchpad memory

| Benchmark | Application | Block Size | Scratchpad Size (bytes) |
|---|---|---|---|
| CUDA-SDK | CONV 1 | 64 | 2560 |
| CUDA-SDK | CONV 2 | 128 | 5184 |
| Rodinia | lavaMD | 128 | 7200 |
| Rodinia | NW 1 | 16 | 2180 |
| Rodinia | NW 2 | 16 | 2180 |
| Rodinia | SRAD 1 | 256 | 6144 |
| Rodinia | SRAD 2 | 256 | 5120 |

Set-3: Applications that do not underutilize any resources

| Benchmark | Application | Limited by |
|---|---|---|
| Rodinia | backprop | Threads |
| GPGPU-Sim | BFS | Threads |
| Rodinia | gaussian | Blocks |
| GPGPU-Sim | NN | Blocks |

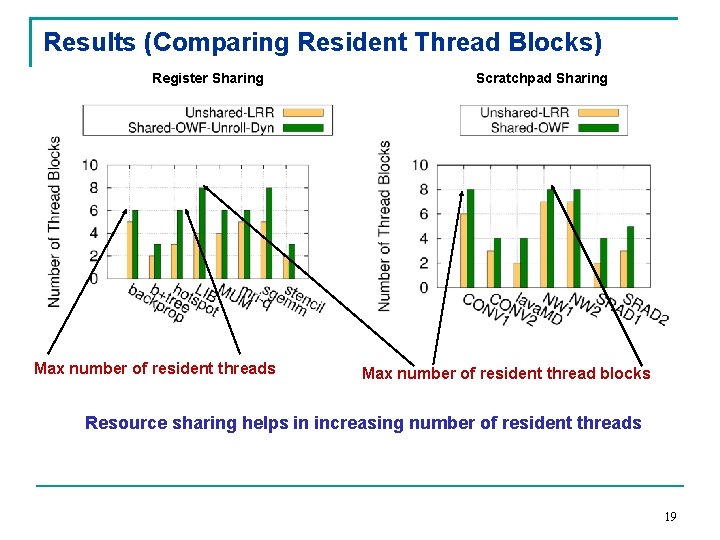

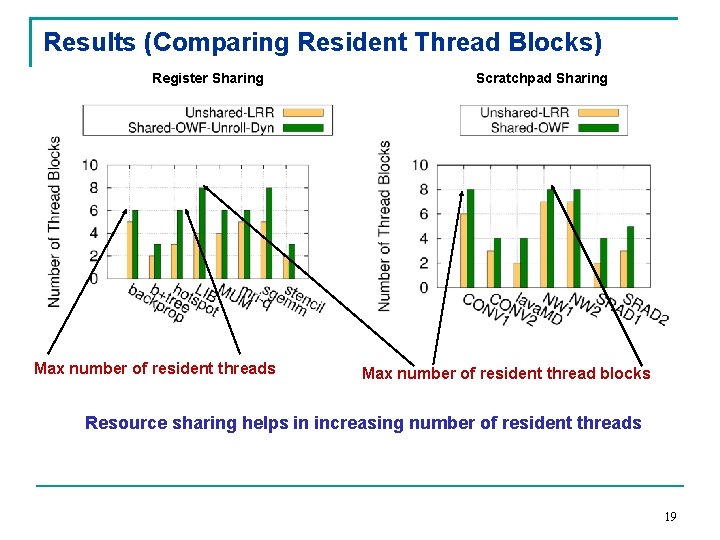

Results (Comparing Resident Thread Blocks)

Figures: maximum number of resident threads with register sharing; maximum number of resident thread blocks with scratchpad sharing.

Resource sharing increases the number of resident threads.

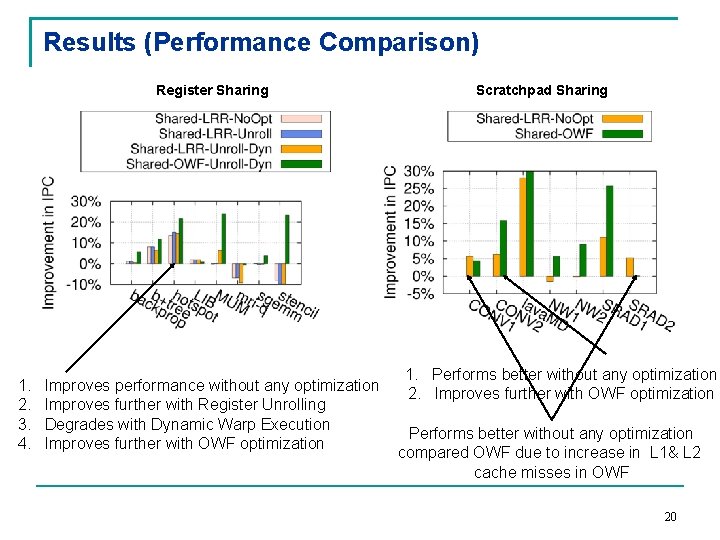

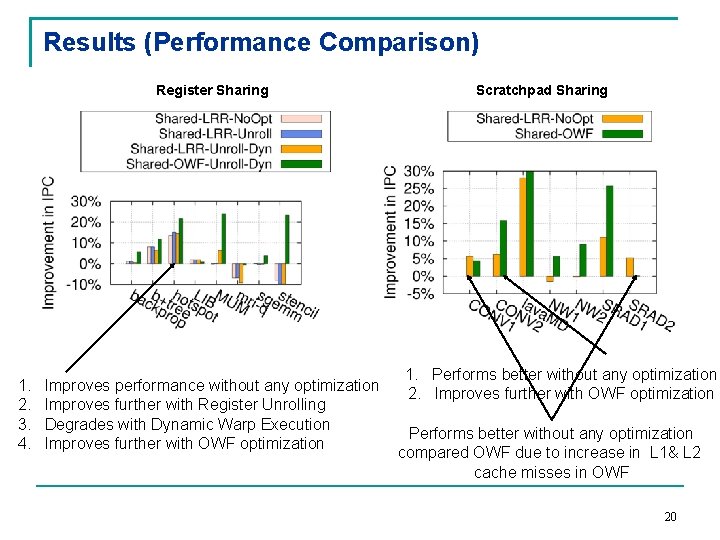

Results (Performance Comparison)

Register sharing:
1. Improves performance without any optimization.
2. Improves further with register unrolling.
3. Degrades with dynamic warp execution.
4. Improves further with the OWF optimization.

Scratchpad sharing:
1. Performs better without any optimization.
2. Improves further with the OWF optimization.

For some applications, scratchpad sharing without optimization performs better than with OWF, because OWF increases L1 and L2 cache misses.

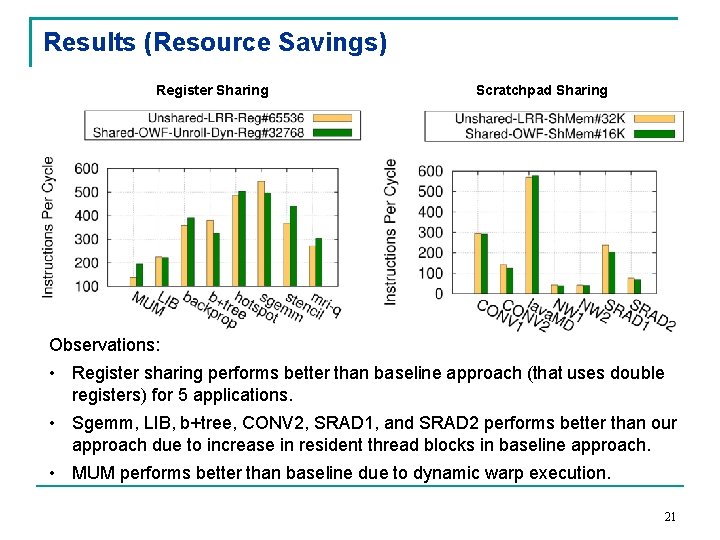

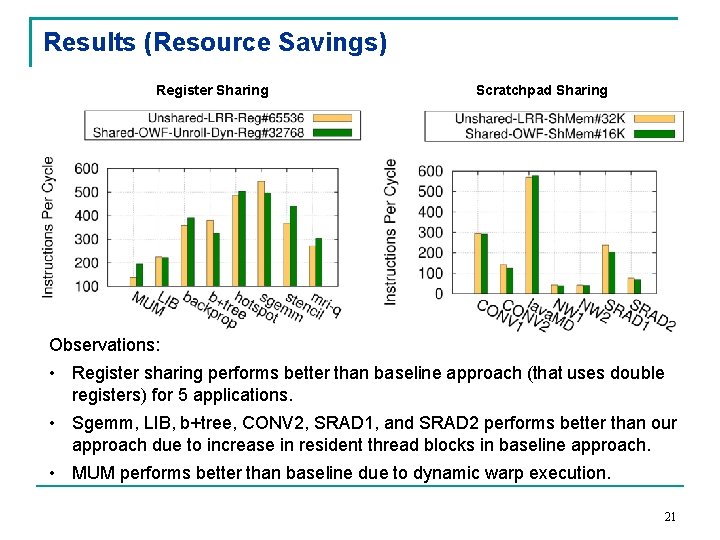

Results (Resource Savings)

Figures: register sharing and scratchpad sharing.

Observations:
- Register sharing performs better than the baseline approach (which uses double the registers) for 5 applications.
- Sgemm, LIB, b+tree, CONV 2, SRAD 1, and SRAD 2 perform better with the baseline than with our approach, because the baseline has more resident thread blocks.
- MUM performs better than the baseline because of dynamic warp execution.

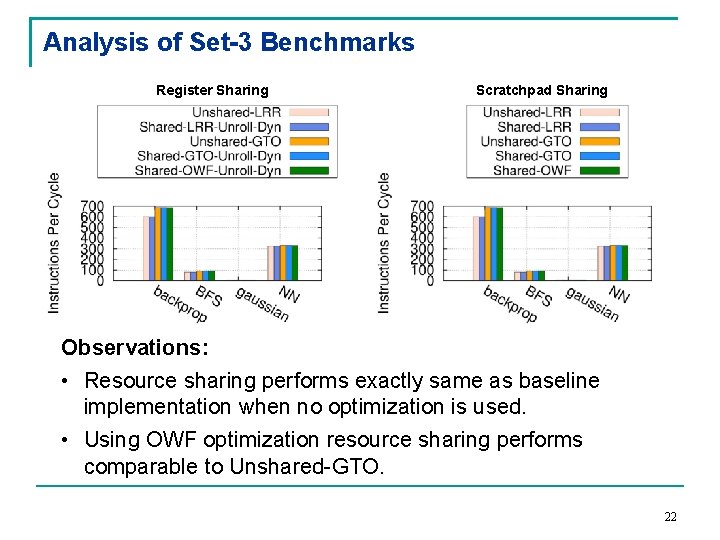

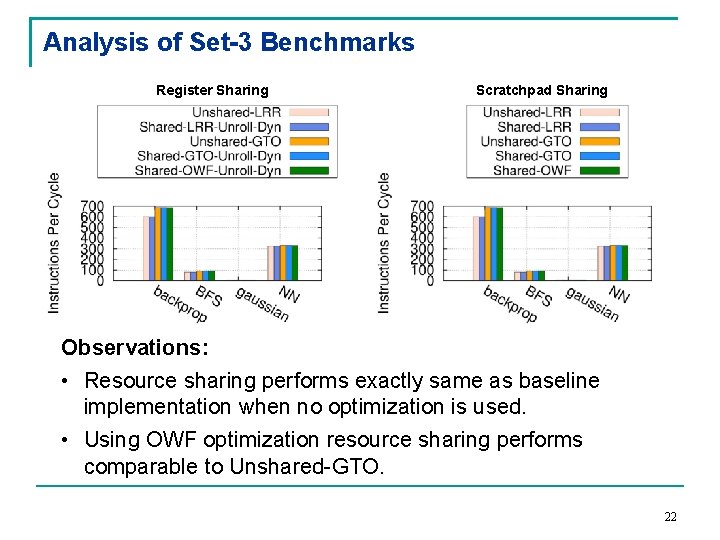

Analysis of Set-3 Benchmarks

Figures: register sharing and scratchpad sharing.

Observations:
- Without optimizations, resource sharing performs exactly the same as the baseline implementation, since these applications are limited by threads or blocks rather than by registers or scratchpad memory.
- With the OWF optimization, resource sharing performs comparably to Unshared-GTO.

Conclusion
- Problems with default resource allocation
- Resource sharing
- Optimizations
- Performance gains of up to 24% with register sharing and up to 30% with scratchpad sharing

Future Work
- Increase the availability of shared resources
- Introduce compiler optimizations
- Mechanism to release the shared resources early

![References](https://slidetodoc.com/presentation_image_h/dc56c69f985fa4c66270ff0a29a6b4ef/image-24.jpg)

References

[1] O. Kayiran, A. Jog, M. Kandemir, and C. Das. Neither more nor less: Optimizing thread-level parallelism for GPGPUs. In PACT, 2013.

[2] GPGPU-Sim. http://www.gpgpu-sim.org

[3] A. Bakhoda, G. Yuan, W. Fung, H. Wong, and T. Aamodt. Analyzing CUDA workloads using a detailed GPU simulator. In ISPASS, 2009.

[4] S. Che, M. Boyer, J. Meng, D. Tarjan, J. Sheaffer, S.-H. Lee, and K. Skadron. Rodinia: A benchmark suite for heterogeneous computing. In IISWC, 2009.

[5] Parboil Benchmarks. http://impact.crhc.illinois.edu/Parboil/parboil.aspx

[6] CUDA C Programming Guide. https://docs.nvidia.com/cuda-cprogramming-guide/

[7] P. Xiang, Y. Yang, and H. Zhou. Warp-level divergence in GPUs: Characterization, impact, and mitigation. In HPCA, 2014.

[8] Y. Yang, P. Xiang, M. Mantor, N. Rubin, and H. Zhou. Shared Memory Multiplexing: A Novel Way to Improve GPGPU Throughput. In PACT, 2012.

Thank You