Human Language Technology: Part of Speech (POS) Tagging


Human Language Technology: Part of Speech (POS) Tagging II

- Rule-based Tagging

Acknowledgment

- Most slides taken from Bonnie Dorr’s course notes: www.umiacs.umd.edu/~bonnie/courses/cmsc723-03
- Jurafsky & Martin, Chapter 5

April 2005, CLINT Lecture IV

Bibliography

- A. Voutilainen, Morphological disambiguation, in Karlsson, Voutilainen, Heikkila, Anttila (eds), Constraint Grammar, pp. 165-284, Mouton de Gruyter, 1995. See [e-book]

ENGCG Rule-Based Tagger (Voutilainen 1995)

- Rules based on English Constraint Grammar
- Two-stage design
- Uses the ENGTWOL lexicon
- Hand-written disambiguation rules

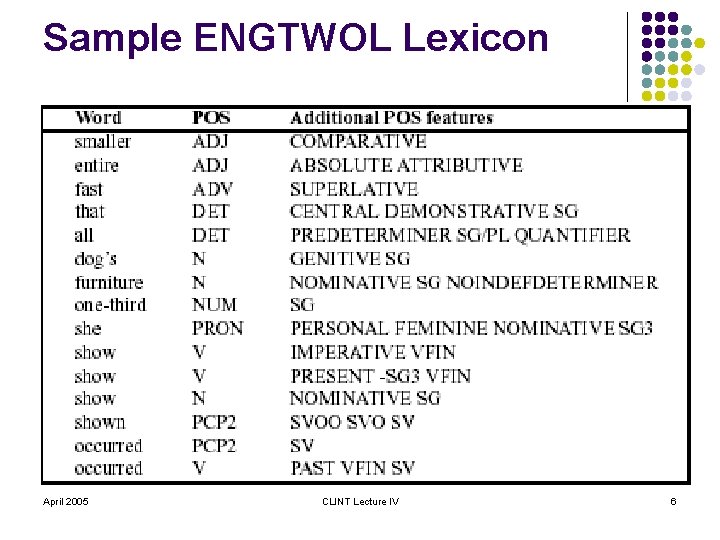

ENGTWOL Lexicon

- Based on two-level morphology of English (hence the name)
- 56,000 entries for English word stems
- Each entry annotated with morphological and syntactic features

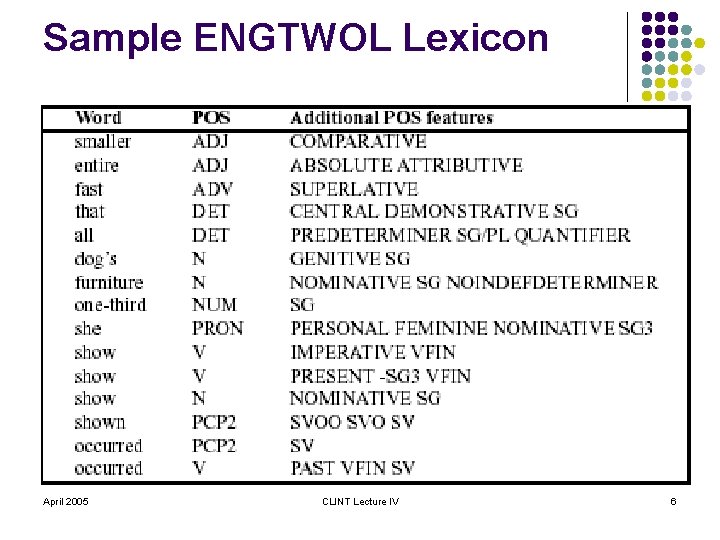

Sample ENGTWOL Lexicon

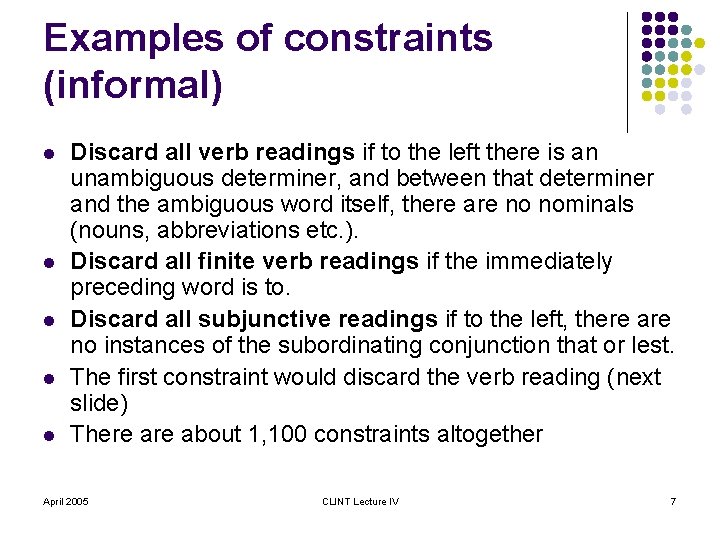

Examples of constraints (informal)

- Discard all verb readings if to the left there is an unambiguous determiner, and between that determiner and the ambiguous word itself there are no nominals (nouns, abbreviations, etc.).
- Discard all finite verb readings if the immediately preceding word is “to”.
- Discard all subjunctive readings if to the left there are no instances of the subordinating conjunction “that” or “lest”.

The first constraint would discard the verb reading (next slide). There are about 1,100 constraints altogether.
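As a rough illustration, the second informal constraint (no finite verb readings directly after “to”) can be sketched in Python. This is not ENGCG's actual rule formalism: the `Token` class, the function name, and the simplified tag tuples are all hypothetical, and the guard that never removes a word's last remaining reading is an assumption added here.

```python
from dataclasses import dataclass


@dataclass
class Token:
    word: str
    readings: list  # each reading is a tuple of tag strings (simplified, hypothetical tags)


def discard_finite_verbs_after_to(tokens):
    """Drop readings tagged VFIN when the immediately preceding word is 'to'."""
    for prev, tok in zip(tokens, tokens[1:]):
        if prev.word.lower() == "to":
            kept = [r for r in tok.readings if "VFIN" not in r]
            if kept:  # assumption: never delete the last remaining reading
                tok.readings = kept
    return tokens


# Toy input: "to walk", where "walk" is ambiguous between three readings.
sentence = [
    Token("to", [("INFMARK>",), ("PREP",)]),
    Token("walk", [("V", "INF"), ("V", "PRES", "VFIN"), ("N", "NOM", "SG")]),
]
discard_finite_verbs_after_to(sentence)
print(sentence[1].readings)
```

Note that the constraint only removes readings. The word “walk” stays ambiguous between infinitive and noun until some other constraint applies.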

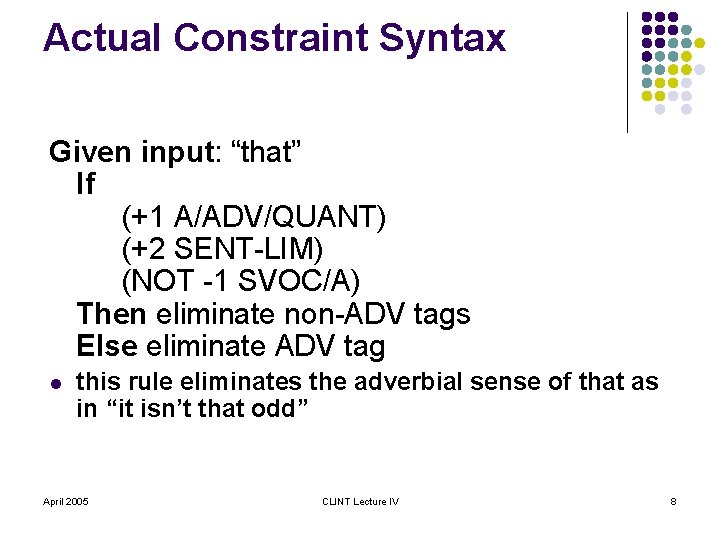

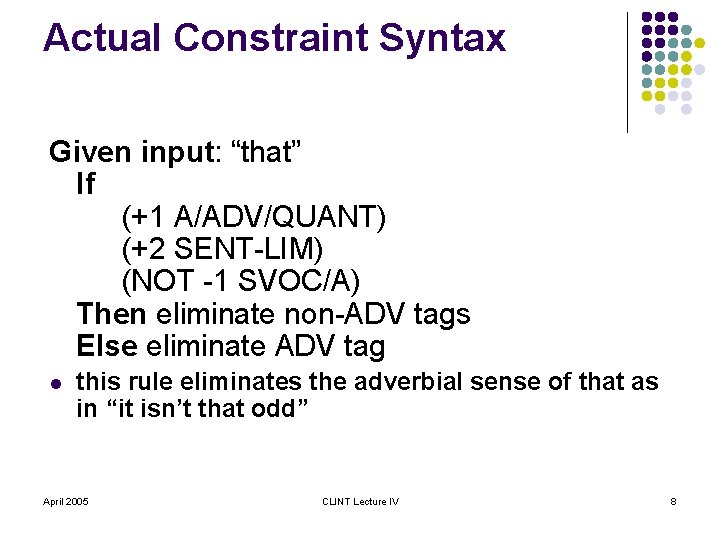

Actual Constraint Syntax

    Given input: “that”
    If
        (+1 A/ADV/QUANT)
        (+2 SENT-LIM)
        (NOT -1 SVOC/A)
    Then eliminate non-ADV tags
    Else eliminate ADV tag

- This rule keeps only the adverbial reading of “that” in contexts such as “it isn’t that odd”, and eliminates the adverbial reading in all other contexts.
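The logic of this rule can be sketched in Python as follows. This is an illustrative reading of the rule, not ENGCG code. The dictionary-based token format, the helper names, and the tags on words other than “that” are hypothetical. The sketch also assumes that a positional test succeeds if any reading of the neighbouring word carries the tag, and that `SENT-LIM` means either sentence-final punctuation or the end of the token list.

```python
SENT_LIM = {".", "!", "?"}  # assumption: what counts as a sentence limit


def tok(word, *readings):
    """Build a token; each reading is a space-separated tag string."""
    return {"word": word, "readings": [set(r.split()) for r in readings]}


def has_tag(token, tags):
    """True if any reading of the token carries one of the given tags."""
    return any(tags & reading for reading in token["readings"])


def adverbial_that_rule(sent, i):
    """Apply the adverbial-that rule to position i of the sentence."""
    token = sent[i]
    if token["word"].lower() != "that":
        return
    nxt = sent[i + 1] if i + 1 < len(sent) else None
    next_ok = nxt is not None and has_tag(nxt, {"A", "ADV", "QUANT"})   # (+1 A/ADV/QUANT)
    limit_ok = i + 2 >= len(sent) or sent[i + 2]["word"] in SENT_LIM    # (+2 SENT-LIM)
    prev_ok = i == 0 or not has_tag(sent[i - 1], {"SVOC/A"})            # (NOT -1 SVOC/A)
    if next_ok and limit_ok and prev_ok:
        kept = [r for r in token["readings"] if "ADV" in r]      # eliminate non-ADV tags
    else:
        kept = [r for r in token["readings"] if "ADV" not in r]  # eliminate ADV tag
    if kept:  # assumption: never delete the last remaining reading
        token["readings"] = kept


THAT = ("ADV", "PRON DEM SG", "DET CENTRAL DEM SG", "CS")

s1 = [tok("it", "PRON"), tok("isn't", "V PRES VFIN"), tok("that", *THAT),
      tok("odd", "A"), tok(".", "PUNCT")]
s2 = [tok("I", "PRON"), tok("consider", "V PRES VFIN SVOC/A"), tok("that", *THAT),
      tok("odd", "A"), tok(".", "PUNCT")]
adverbial_that_rule(s1, 2)
adverbial_that_rule(s2, 2)
print(s1[2]["readings"])  # only the ADV reading is left
print(s2[2]["readings"])  # the ADV reading has been removed
```

The second sentence shows why the `NOT -1 SVOC/A` clause is there: after a verb like “consider”, which takes an adjective as object complement, “that” should not be read as an adverb.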

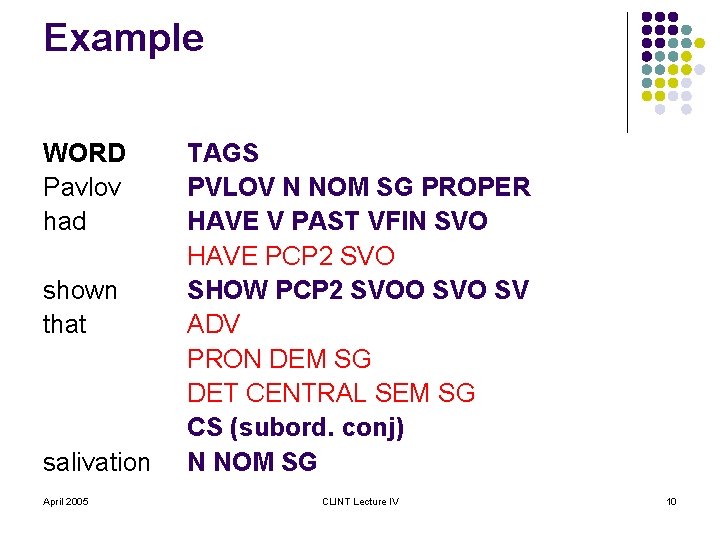

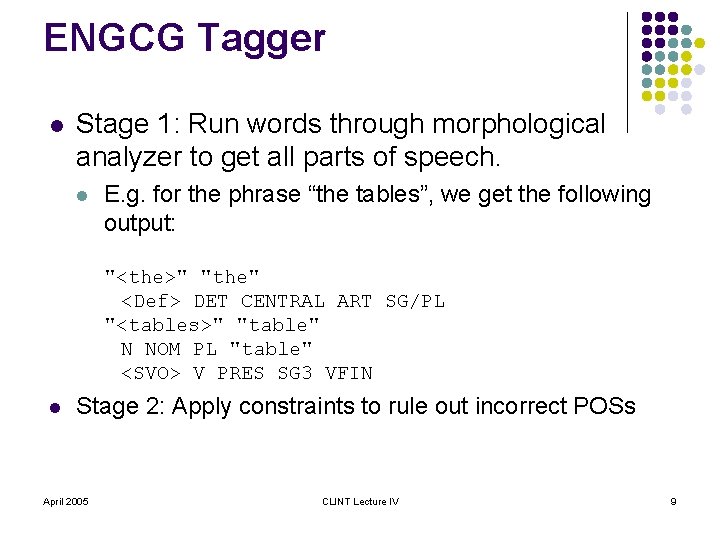

ENGCG Tagger

- Stage 1: Run words through a morphological analyzer to get all possible parts of speech. E.g., for the phrase “the tables”, we get the following output:

        "<the>"
            "the" <Def> DET CENTRAL ART SG/PL
        "<tables>"
            "table" N NOM PL
            "table" <SVO> V PRES SG3 VFIN

- Stage 2: Apply constraints to rule out incorrect POSs.
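The two stages can be sketched in Python on the “the tables” example. This is a toy illustration under stated assumptions: `TOY_LEXICON` holds only the two entries shown on the slide and stands in for ENGTWOL, the function names are hypothetical, “nominal” is approximated by the tag `N`, and stage 2 implements just one informal constraint (discard verb readings after an unambiguous determiner with no intervening nominal).

```python
# Stage 1 resource: a two-entry stand-in for the ENGTWOL lexicon (hypothetical).
TOY_LEXICON = {
    "the": [("the", ("<Def>", "DET", "CENTRAL", "ART", "SG/PL"))],
    "tables": [
        ("table", ("N", "NOM", "PL")),
        ("table", ("<SVO>", "V", "PRES", "SG3", "VFIN")),
    ],
}


def analyze(words):
    """Stage 1: attach every reading the lexicon lists for each word."""
    return [(w, list(TOY_LEXICON.get(w.lower(), []))) for w in words]


def drop_verbs_after_determiner(cohorts):
    """Stage 2 (one constraint only): discard verb readings when an
    unambiguous determiner lies to the left with no nominal in between."""
    result = []
    det_open = False  # True while such a determiner is "in scope"
    for word, readings in cohorts:
        if det_open:
            kept = [r for r in readings if "V" not in r[1]]
            if kept:  # assumption: never delete the last remaining reading
                readings = kept
        if len(readings) == 1 and "DET" in readings[0][1]:
            det_open = True
        elif any("N" in tags for _, tags in readings):
            det_open = False  # a nominal closes the determiner's scope
        result.append((word, readings))
    return result


cohorts = analyze(["the", "tables"])
tagged = drop_verbs_after_determiner(cohorts)
for word, readings in tagged:
    print(word, readings)
```

In the real system, stage 2 applies the full set of hand-written constraints rather than a single one, so this sketch only shows the shape of the pipeline.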

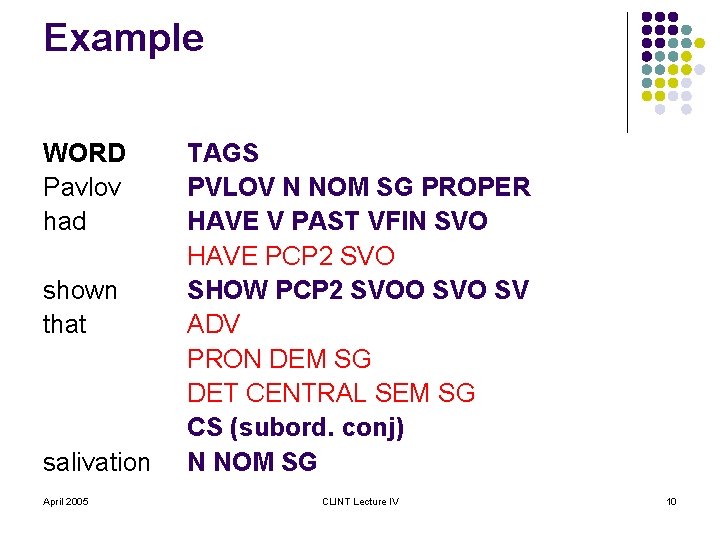

Example

| WORD | TAGS |
|---|---|
| Pavlov | PAVLOV N NOM SG PROPER |
| had | HAVE V PAST VFIN SVO |
| | HAVE PCP2 SVO |
| shown | SHOW PCP2 SVOO SV |
| that | ADV |
| | PRON DEM SG |
| | DET CENTRAL DEM SG |
| | CS (subord. conj) |
| salivation | N NOM SG |

Performance

- Tested on examples from the Wall Street Journal, the Brown Corpus, and the Lancaster-Oslo-Bergen Corpus.
- After application of the rules, 93-97% of all words are fully disambiguated, and 99.7% of all words retain the correct reading.
- At the time, this was superior to the performance of other taggers.
- However, one should not discount the amount of effort needed to create this system.
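The two figures measure different things, which a small Python sketch may make concrete. The function name and the four-word toy data below are hypothetical and unrelated to the reported results. The sketch assumes “fully disambiguated” means exactly one reading remains, and “retains the correct reading” means the gold tag is still among the remaining readings.

```python
def evaluate(outputs, gold):
    """Return (share of words with exactly one reading left,
               share of words whose gold reading survived)."""
    total = len(outputs)
    unambiguous = sum(1 for readings in outputs if len(readings) == 1)
    correct_kept = sum(1 for readings, g in zip(outputs, gold) if g in readings)
    return unambiguous / total, correct_kept / total


# Toy tagger output: the second word is still ambiguous, but no gold tag was lost.
outputs = [["DET"], ["N", "V"], ["V"], ["ADV"]]
gold = ["DET", "N", "V", "ADV"]
disambiguated, retained = evaluate(outputs, gold)
print(disambiguated, retained)  # 0.75 1.0
```

A tagger that leaves some words ambiguous can therefore score very high on the second measure while scoring lower on the first.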