
- Slides: 12

POS Tagging Markov Models

POS Tagging

- Purpose: to give us explicit information about the structure of a text, and of the language itself, without necessarily having a complete understanding of the text
- To feed other NLP applications/processes:
  - Chunking (feeds IE tasks)
  - Speech Recognition
  - IR
    - Stemming (to more accurately stem)
  - QA
  - Adding more structure (parsing, in all its flavors)

Tags

- Most common: the Penn Treebank (PTB) tagset, ~45 tags
- Another common one: CLAWS 7 (BNC), ~140 tags (up from a historic 62 tags)

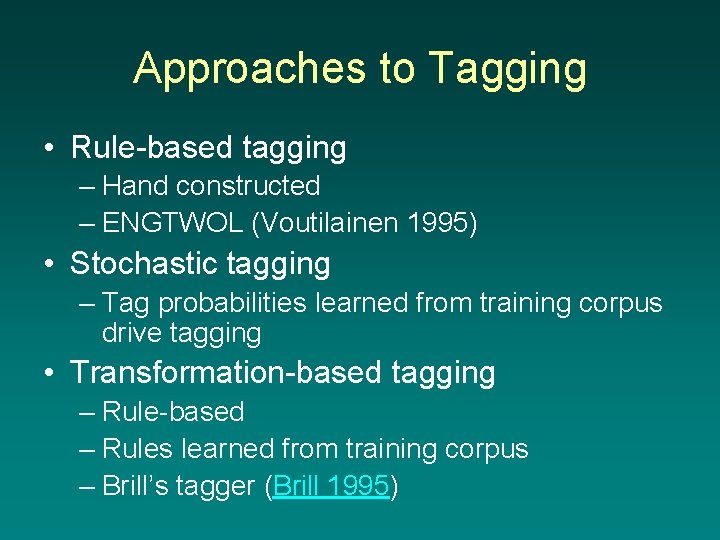

Approaches to Tagging

- Rule-based tagging
  - Hand-constructed rules
  - ENGTWOL (Voutilainen 1995)
- Stochastic tagging
  - Tag probabilities learned from a training corpus drive tagging
- Transformation-based tagging
  - Rule-based
  - Rules learned from a training corpus
  - Brill's tagger (Brill 1995)

A Really Stupid Tagger

- Read the words and tags from a POS-tagged corpus
- Count how many times each tag occurs with each word
- Calculate the frequency of each tag-word pair
- Ignore all but the most frequent tag for each word
- Use the frequencies thus learned to tag a text
- Sound familiar?
  - HW#3! (All but the last 2 steps.)
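The counting procedure above amounts to a most-frequent-tag baseline. A minimal Python sketch, assuming a hypothetical three-sentence toy corpus, hypothetical function names `train_baseline` and `tag_baseline`, and an assumed fallback tag `NN` for words never seen in training:

```python
from collections import Counter, defaultdict

def train_baseline(tagged_sents):
    """Map each word to the tag it occurs with most often in training."""
    counts = defaultdict(Counter)  # word -> Counter of tags seen with it
    for sent in tagged_sents:
        for word, tag in sent:
            counts[word][tag] += 1
    # Relative frequencies rank tags the same way as raw counts,
    # so keeping the top count keeps the most frequent tag.
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}

def tag_baseline(words, model, default="NN"):
    """Tag each word with its most frequent tag; unseen words get `default` (an assumption)."""
    return [(w, model.get(w, default)) for w in words]

# Hypothetical toy corpus: "play" is NN twice and VBP once
toy_corpus = [
    [("the", "DT"), ("play", "NN"), ("ended", "VBD")],
    [("they", "PRP"), ("play", "VBP"), ("chess", "NN")],
    [("a", "DT"), ("fabulous", "JJ"), ("play", "NN")],
]
model = train_baseline(toy_corpus)
print(tag_baseline(["a", "fabulous", "play"], model))
# → [('a', 'DT'), ('fabulous', 'JJ'), ('play', 'NN')]
```

The tagger looks at each word in isolation, so "play" always gets `NN` here, whatever its context.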

A Really Stupid Tagger

- But Charniak 1993 showed:
  - Such a tagger has an accuracy of 90%
- An early rule-based tagger (Greene and Rubin 1971), using hand-coded rules and patterns, got 77% right
- The best stochastic taggers around hit about 95% (controlled experiments approach 99%)
- Let's just give up and go home!

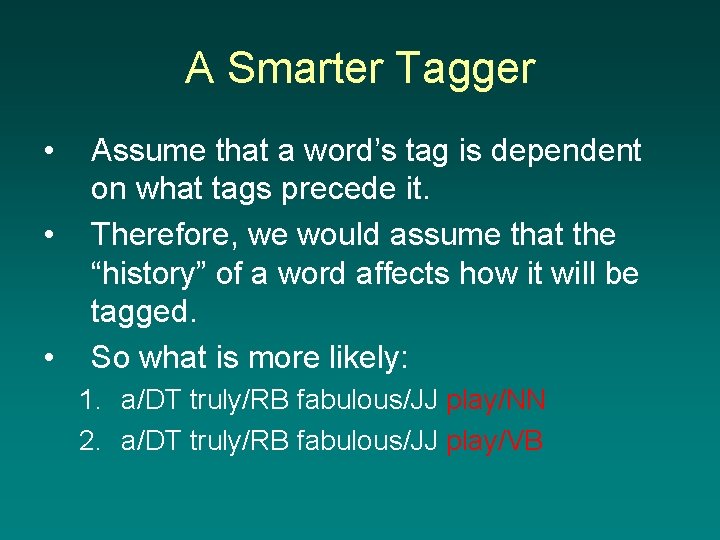

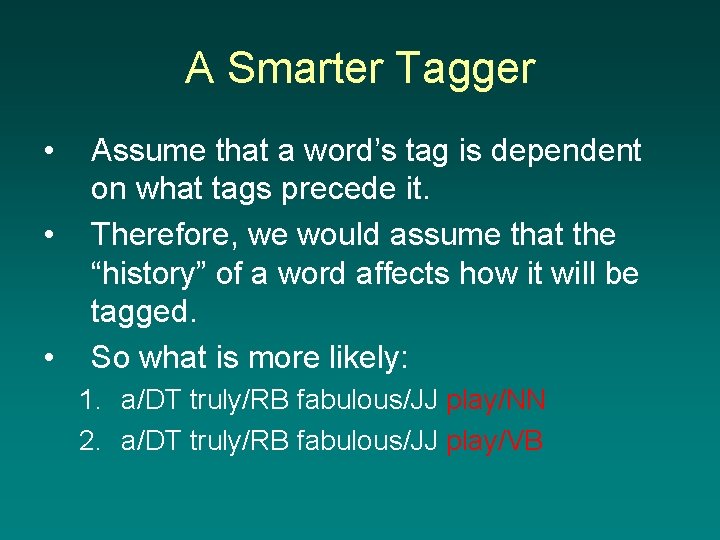

A Smarter Tagger

- Assume that a word's tag depends on the tags that precede it.
- That is, the "history" of a word affects how it will be tagged.
- So which is more likely?
  1. a/DT truly/RB fabulous/JJ play/NN
  2. a/DT truly/RB fabulous/JJ play/VB

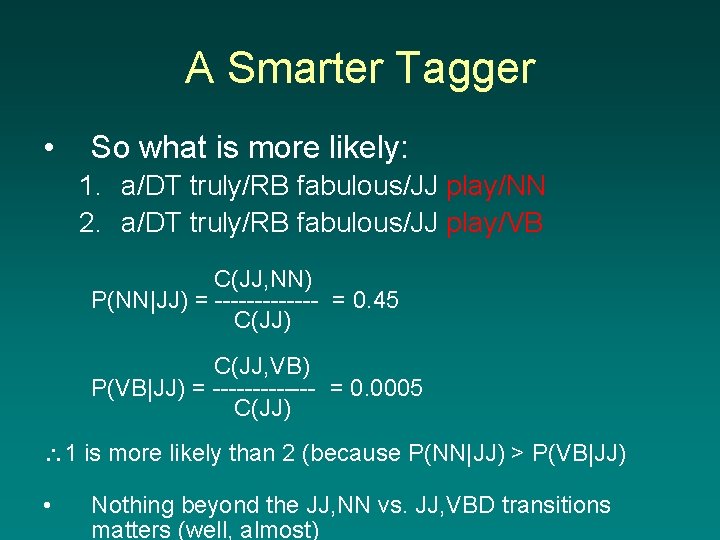

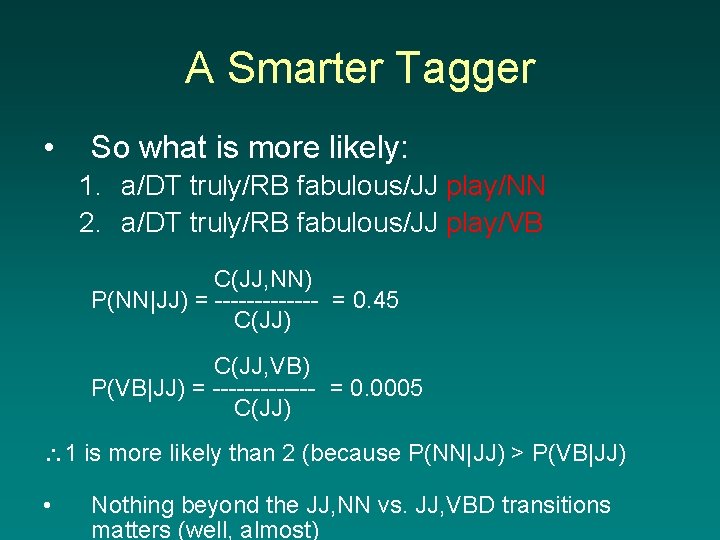

A Smarter Tagger

Compare the tag transition probabilities estimated from corpus counts:

$$P(\mathrm{NN} \mid \mathrm{JJ}) = \frac{C(\mathrm{JJ}, \mathrm{NN})}{C(\mathrm{JJ})} = 0.45$$

$$P(\mathrm{VB} \mid \mathrm{JJ}) = \frac{C(\mathrm{JJ}, \mathrm{VB})}{C(\mathrm{JJ})} = 0.0005$$

- Tagging 1 (play/NN) is more likely than tagging 2 (play/VB), because P(NN|JJ) > P(VB|JJ).
- Nothing beyond the JJ→NN vs. JJ→VB transitions matters (well, almost).
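The two estimates above are maximum-likelihood ratios of tag bigram counts. A minimal Python sketch, assuming a hypothetical toy corpus of tag sequences (not the corpus behind 0.45 and 0.0005), hypothetical names `transition_probs` and `sequence_score`, and C(JJ) counted only where JJ is followed by another tag, so each row of estimates sums to 1. Like the comparison above, it ignores the word probabilities:

```python
from collections import Counter

def transition_probs(tag_seqs):
    """MLE estimates P(b | a) = C(a, b) / C(a) from lists of tags."""
    bigram_counts = Counter()
    left_counts = Counter()  # C(a): occurrences of a that have a following tag
    for tags in tag_seqs:
        for a, b in zip(tags, tags[1:]):
            bigram_counts[(a, b)] += 1
            left_counts[a] += 1
    return {(a, b): n / left_counts[a] for (a, b), n in bigram_counts.items()}

def sequence_score(tags, probs):
    """Product of tag-to-tag transition probabilities; unseen transitions score 0."""
    score = 1.0
    for a, b in zip(tags, tags[1:]):
        score *= probs.get((a, b), 0.0)
    return score

# Hypothetical toy corpus of tag sequences
toy_tag_seqs = [
    ["DT", "RB", "JJ", "NN"],
    ["DT", "JJ", "NN", "VBZ", "JJ"],
    ["PRP", "VBP", "DT", "JJ", "NNS"],
]
probs = transition_probs(toy_tag_seqs)
print(probs[("JJ", "NN")])           # 2 of the 3 JJ->X bigrams are JJ->NN
print(probs.get(("JJ", "VB"), 0.0))  # JJ->VB never occurs in the toy data
tagging_1 = ["DT", "RB", "JJ", "NN"]
tagging_2 = ["DT", "RB", "JJ", "VB"]
print(sequence_score(tagging_1, probs) > sequence_score(tagging_2, probs))  # True
```

The shared prefix DT, RB, JJ contributes the same factors to both scores, so the comparison reduces to P(NN|JJ) versus P(VB|JJ). The zero for the unseen JJ→VB bigram also shows why practical taggers smooth these estimates.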

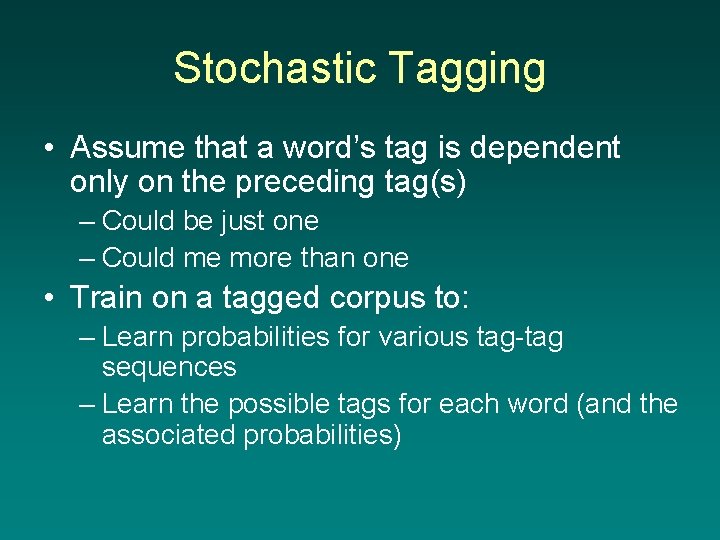

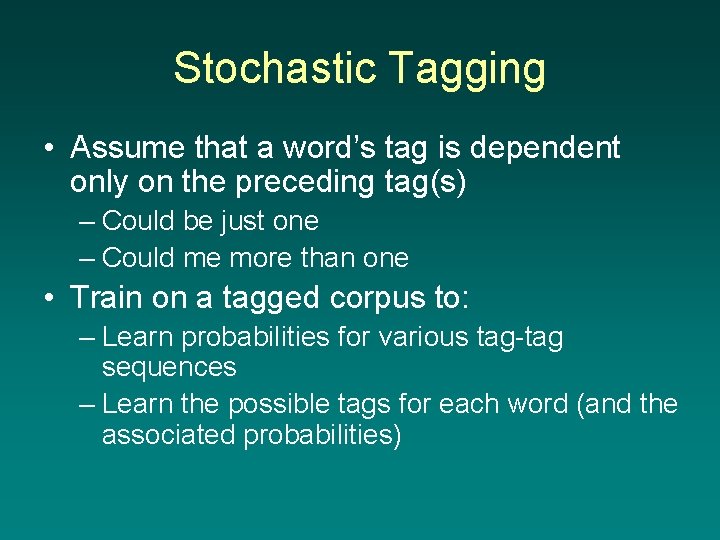

Stochastic Tagging

- Assume that a word's tag depends only on the preceding tag(s)
  - Could be just one
  - Could be more than one
- Train on a tagged corpus to:
  - Learn probabilities for various tag-tag sequences
  - Learn the possible tags for each word (and the associated probabilities)
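Both training steps can be sketched in Python for the "just one preceding tag" case. This is a minimal sketch under stated assumptions: a hypothetical start pseudo-tag `<s>`, hypothetical names `train`, `normalize`, and `possible_tags`, unsmoothed maximum-likelihood estimates, and word probabilities stored as P(word | tag), the form used in the Markov tagger's objective. A model conditioning on more than one preceding tag would key the transition counts on tuples of tags instead.

```python
from collections import Counter, defaultdict

START = "<s>"  # hypothetical sentence-start pseudo-tag

def normalize(table):
    """Turn nested counts {key: Counter} into MLE probabilities {key: {item: p}}."""
    probs = {}
    for key, counter in table.items():
        total = sum(counter.values())
        probs[key] = {item: n / total for item, n in counter.items()}
    return probs

def train(tagged_sents):
    """Learn P(tag | previous tag) and P(word | tag) from a tagged corpus."""
    trans_counts = defaultdict(Counter)  # previous tag -> counts of next tag
    emit_counts = defaultdict(Counter)   # tag -> counts of words
    for sent in tagged_sents:
        prev = START
        for word, tag in sent:
            trans_counts[prev][tag] += 1
            emit_counts[tag][word] += 1
            prev = tag
    return normalize(trans_counts), normalize(emit_counts)

def possible_tags(word, emissions):
    """Tags observed with `word` in training."""
    return {tag for tag, words in emissions.items() if word in words}

# Hypothetical toy corpus
toy_corpus = [
    [("a", "DT"), ("fabulous", "JJ"), ("play", "NN")],
    [("they", "PRP"), ("play", "VBP"), ("chess", "NN")],
]
transitions, emissions = train(toy_corpus)
print(transitions[START])                         # {'DT': 0.5, 'PRP': 0.5}
print(emissions["NN"])                            # {'play': 0.5, 'chess': 0.5}
print(sorted(possible_tags("play", emissions)))   # ['NN', 'VBP']
```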

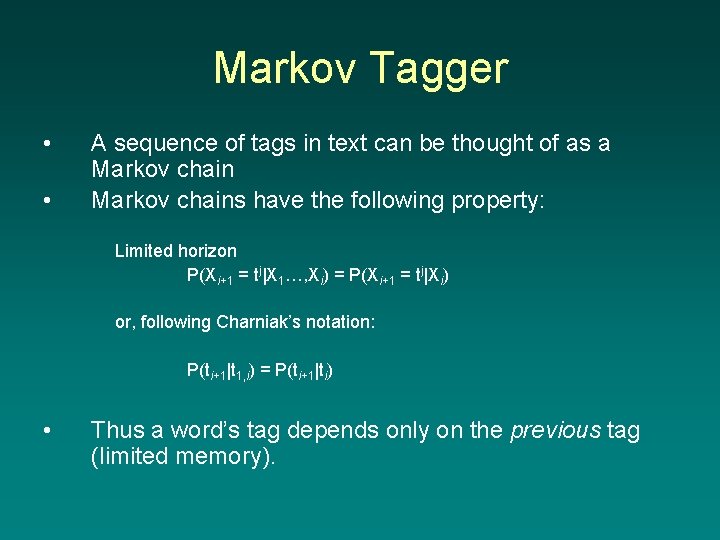

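Markov Tagger

- What is the goal of a Markov tagger?
- For a sentence $w_{1,n}$, to find the tag sequence $t_{1,n}$ that maximizes:

$$\hat{t}_{1,n} = \arg\max_{t_{1,n}} \prod_{i=1}^{n} P(w_i \mid t_i)\, P(t_i \mid t_{1,i-1})$$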
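To make the objective concrete, here is a brute-force Python sketch that scores every possible tag sequence and keeps the best one. It assumes the one-preceding-tag approximation, so P(t_i | t_{1,i-1}) becomes P(t_i | t_{i-1}), with a hypothetical start pseudo-tag `<s>` as t_0. The probability tables are made up for illustration (rows are partial and need not sum to 1), except that P(NN|JJ) = 0.45 and P(VB|JJ) = 0.0005 come from the earlier slide; all names are hypothetical.

```python
from itertools import product

START = "<s>"  # hypothetical sentence-start pseudo-tag (t_0)

# Made-up toy probabilities; only JJ->NN and JJ->VB come from the slides.
TRANS = {  # TRANS[prev][tag] = P(tag | prev)
    START: {"DT": 1.0},
    "DT": {"RB": 0.1, "JJ": 0.4, "NN": 0.5},
    "RB": {"JJ": 0.6, "VB": 0.4},
    "JJ": {"NN": 0.45, "VB": 0.0005, "JJ": 0.2},
}
EMIT = {  # EMIT[tag][word] = P(word | tag)
    "DT": {"a": 0.3},
    "RB": {"truly": 0.01},
    "JJ": {"fabulous": 0.001},
    "NN": {"play": 0.002},
    "VB": {"play": 0.003},
}
TAGSET = ["DT", "RB", "JJ", "NN", "VB"]

def sequence_prob(words, tags):
    """Product over i of P(w_i | t_i) * P(t_i | t_{i-1}), with t_0 = START."""
    p = 1.0
    prev = START
    for w, t in zip(words, tags):
        p *= EMIT.get(t, {}).get(w, 0.0) * TRANS.get(prev, {}).get(t, 0.0)
        prev = t
    return p

def brute_force_tag(words):
    """Score all |TAGSET|^n tag sequences and return the most probable one."""
    best = max(product(TAGSET, repeat=len(words)),
               key=lambda tags: sequence_prob(words, tags))
    return list(best)

print(brute_force_tag(["a", "truly", "fabulous", "play"]))  # ['DT', 'RB', 'JJ', 'NN']
```

Even though the made-up P(play|VB) is larger than P(play|NN), the tiny JJ→VB transition makes the play/VB reading far less probable. Enumeration costs |tagset|^n scores (625 here), which is why practical taggers use dynamic programming instead.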

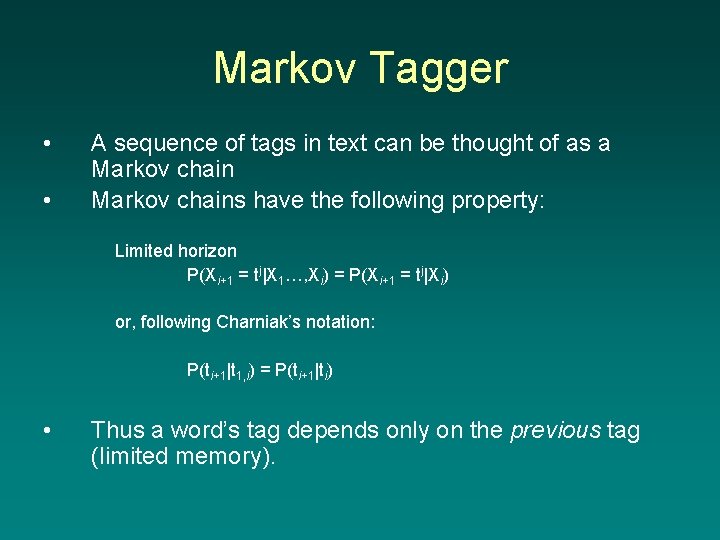

Markov Tagger

- A sequence of tags in text can be thought of as a Markov chain.
- Markov chains have the following property, limited horizon:

$$P(X_{i+1} = t^j \mid X_1, \ldots, X_i) = P(X_{i+1} = t^j \mid X_i)$$

or, following Charniak's notation:

$$P(t_{i+1} \mid t_{1,i}) = P(t_{i+1} \mid t_i)$$

- Thus a word's tag depends only on the previous tag (limited memory).
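The limited-horizon property is what makes efficient decoding possible: the best tag sequence ending in tag t at position i only needs the best score for each possible previous tag. The standard dynamic-programming method for this is the Viterbi algorithm. Below is a minimal Python sketch of it for a bigram model, assuming a hypothetical start pseudo-tag `<s>` and made-up toy tables (except P(NN|JJ) = 0.45 and P(VB|JJ) = 0.0005 from the earlier slide); function and table names are hypothetical.

```python
START = "<s>"  # hypothetical sentence-start pseudo-tag

# Made-up toy probabilities; only JJ->NN and JJ->VB come from the slides.
TRANS = {  # TRANS[prev][tag] = P(tag | prev)
    START: {"DT": 1.0},
    "DT": {"RB": 0.1, "JJ": 0.4, "NN": 0.5},
    "RB": {"JJ": 0.6, "VB": 0.4},
    "JJ": {"NN": 0.45, "VB": 0.0005, "JJ": 0.2},
}
EMIT = {  # EMIT[tag][word] = P(word | tag)
    "DT": {"a": 0.3},
    "RB": {"truly": 0.01},
    "JJ": {"fabulous": 0.001},
    "NN": {"play": 0.002},
    "VB": {"play": 0.003},
}
TAGSET = ["DT", "RB", "JJ", "NN", "VB"]

def viterbi(words, tagset=TAGSET, trans=TRANS, emit=EMIT):
    """Return (best tag sequence, its probability) under a bigram Markov model."""
    if not words:
        return [], 1.0
    # best[t] = (probability, path) of the best tag sequence so far ending in t
    best = {
        t: (trans.get(START, {}).get(t, 0.0) * emit.get(t, {}).get(words[0], 0.0), [t])
        for t in tagset
    }
    for w in words[1:]:
        new_best = {}
        for t in tagset:
            e = emit.get(t, {}).get(w, 0.0)
            # Limited horizon: extend only the best path ending in each previous tag
            p, path = max(
                ((best[prev][0] * trans.get(prev, {}).get(t, 0.0) * e, best[prev][1])
                 for prev in tagset),
                key=lambda cand: cand[0],
            )
            new_best[t] = (p, path + [t])
        best = new_best
    prob, path = max(best.values(), key=lambda cand: cand[0])
    return path, prob

path, prob = viterbi(["a", "truly", "fabulous", "play"])
print(path)  # ['DT', 'RB', 'JJ', 'NN']
```

Each step compares |tagset| predecessors for each of |tagset| tags, so decoding takes O(n·|tagset|²) time rather than |tagset|^n. Implementations usually work with log probabilities to avoid floating-point underflow on long sentences.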

Next Time

- For next time, bring M&S (Manning and Schütze) and Charniak 1993.
- Read the relevant sections of chapters 9 and 10. Focus on chapter 10 over chapter 9 (for now).