

- Slides: 27

GridPP: UK Computing for Particle Physics, Tony Doyle

Outline (The Icemen Cometh)

- Context
- A Brief History of GridPP
- UK Computing Centres
- The Grid & its Challenges
- Resource Accounting
- Performance Monitoring
- Outlook
- Conclusions

11 May 2007, R-ECFA Meeting, Tony Doyle - University of Glasgow

Context (2000)

- To create a UK Particle Physics Grid and the computing technologies required for the Large Hadron Collider (LHC) at CERN
- To place the UK in a leadership position in the international development of an EU Grid infrastructure

A Brief History of GridPP

- 1999: *The Grid: Blueprint for a New Computing Infrastructure* by Ian Foster and Carl Kesselman published.
- February 2000: A Joint Infrastructure Fund (JIF) bid is submitted for £6.2m to fund a prototype Tier-1 centre at RAL for the EU-funded DataGrid project. At the time of the JIF bid the LHC was expected to produce 4 PB of data a year for 10 years; by 2005 the expected figures had risen to 15 PB a year for 15 years. RAL was chosen as the location of the Tier-1 centre because it already hosted the UK BaBar computing centre.
- May 2000: Last R-ECFA meeting in the UK.
- October 2000: PPARC signs up to the EU DataGrid project, contributing 20 people and a Tier-1 centre.
- November 2000: Trade and Industry Secretary Stephen Byers announces £98m for e-Science in Spending Review 2000. This includes £26m for PPARC to develop HEP and astronomy Grid projects.
- December 2000: GridPP plan created at a meeting at RAL. Initially the £26m was to help fund UK posts to coordinate the UK arm of LCG, as part of that organisation.
- April 2001: A shadow Project Management Board, referred to as "DataGrid-UK", is established. First GridPP proposal submitted.
- 30/31 May 2001: PPARC's e-Science Committee meets to consider the proposal and approves the GridPP project, allocating £17m.
- 1 September 2001: GridPP officially starts, with funding for 3 years.
- January 2002: DataGrid releases the first production version of the testbed middleware.
- February 2002: First international file transfers using X.509 digital certificates.
- 1 March 2002: RAL takes part in a test of DataGrid by creating a small 5-site testbed Grid with CERN, IN2P3-Lyon, CNAF-Bologna and NIKHEF.
- 11 March 2002: LHC Computing Grid Project launched.
- 23 March 2002: First prototype Tier-1/A hardware delivered to RAL, consisting of 156 dual-CPU PCs with 30 GB of storage each.
- 25 April 2002: UK National e-Science Centre (NeSC) opened in Edinburgh by Gordon Brown.
- June 2002: ScotGrid, one of the four Tier-2s in GridPP, goes into production.
- August 2002: GridPP makes its first visit to the All Hands e-Science meeting.

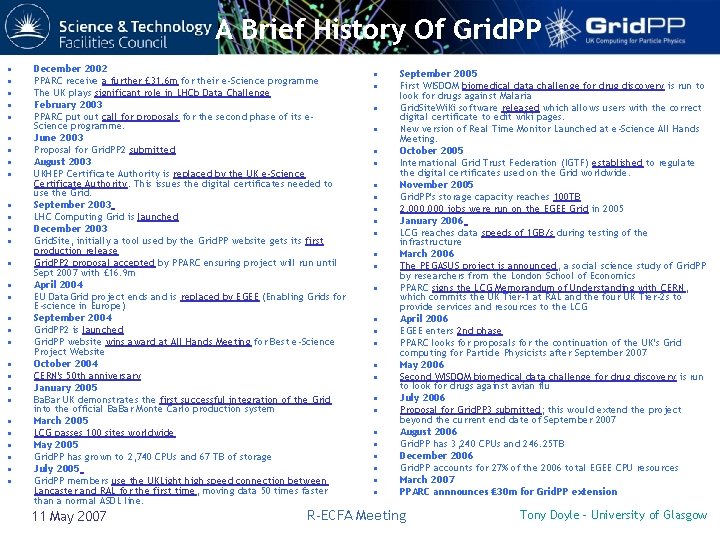

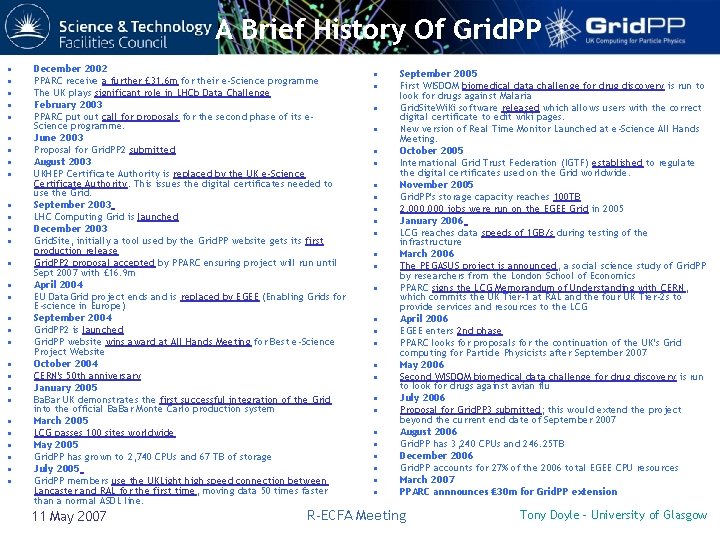

A Brief History of GridPP (continued)

- December 2002: PPARC receives a further £31.6m for its e-Science programme. The UK plays a significant role in the LHCb Data Challenge.
- February 2003: PPARC puts out a call for proposals for the second phase of its e-Science programme.
- June 2003: Proposal for GridPP2 submitted.
- August 2003: The UKHEP Certificate Authority is replaced by the UK e-Science Certificate Authority, which issues the digital certificates needed to use the Grid.
- September 2003: LHC Computing Grid is launched.
- December 2003: GridSite, initially a tool used by the GridPP website, gets its first production release. GridPP2 proposal accepted by PPARC, ensuring the project will run until September 2007 with £16.9m.
- April 2004: EU DataGrid project ends and is replaced by EGEE (Enabling Grids for E-science in Europe).
- September 2004: GridPP2 is launched. The GridPP website wins the award for Best e-Science Project Website at the All Hands Meeting.
- October 2004: CERN's 50th anniversary.
- January 2005: BaBar UK demonstrates the first successful integration of the Grid into the official BaBar Monte Carlo production system.
- March 2005: LCG passes 100 sites worldwide.
- May 2005: GridPP has grown to 2,740 CPUs and 67 TB of storage.
- July 2005: GridPP members use the UKLight high-speed connection between Lancaster and RAL for the first time, moving data 50 times faster than a normal ADSL line.
- September 2005: First WISDOM biomedical data challenge for drug discovery is run, looking for drugs against malaria. GridSite WiKi software released, allowing users with the correct digital certificate to edit wiki pages. New version of the Real Time Monitor launched at the e-Science All Hands Meeting.
- October 2005: International Grid Trust Federation (IGTF) established to regulate the digital certificates used on the Grid worldwide.
- November 2005: GridPP's storage capacity reaches 100 TB. 2,000 jobs were run on the EGEE Grid in 2005.
- January 2006: LCG reaches data speeds of 1 GB/s during testing of the infrastructure.
- March 2006: The PEGASUS project, a social science study of GridPP by researchers from the London School of Economics, is announced. PPARC signs the LCG Memorandum of Understanding with CERN, which commits the UK Tier-1 at RAL and the four UK Tier-2s to provide services and resources to the LCG.
- April 2006: EGEE enters its 2nd phase. PPARC looks for proposals for the continuation of UK Grid computing for particle physicists after September 2007.
- May 2006: Second WISDOM biomedical data challenge for drug discovery is run, looking for drugs against avian flu.
- July 2006: Proposal for GridPP3 submitted; this would extend the project beyond the current end date of September 2007.
- August 2006: GridPP has 3,240 CPUs and 246.25 TB of storage.
- December 2006: GridPP accounts for 27% of the total EGEE CPU resources in 2006.
- March 2007: PPARC announces £30m for the GridPP extension.

Context (2007)

- 2006 was the second full year for the UK Production Grid
- More than 5,000 CPUs and more than half a petabyte of disk storage
- The UK is the largest CPU provider on the EGEE Grid, with a total of 15 GSI2k-hours of CPU used in 2006
- The GridPP2 project has met 69% of its original targets, with 92% of the metrics within specification
- The initial LCG Grid Service is now underway and will run for the first 6 months of 2007
- The aim is to continue to improve reliability and performance, ready for startup of the full Grid service on 1 July 2007
- The GridPP2 project has been extended by 7 months to April 2008
- The GridPP3 proposal was recently accepted by PPARC (£30m) to extend the project to March 2011
- We anticipate a challenging period ahead

Real Time Monitor

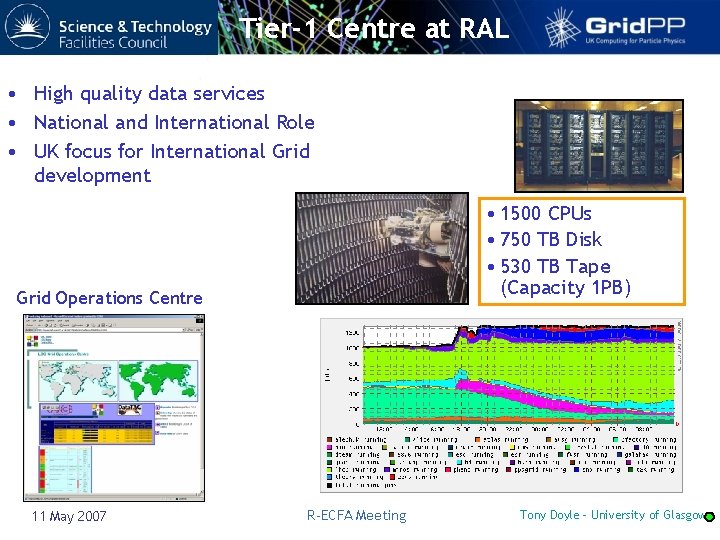

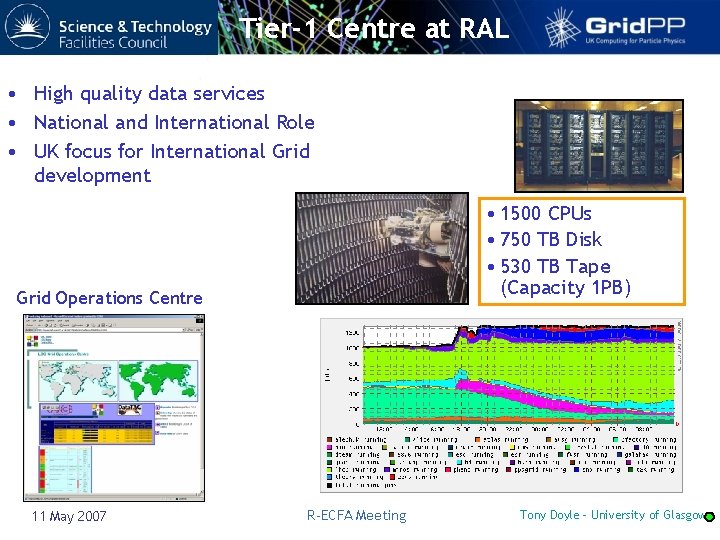

Tier-1 Centre at RAL

- High quality data services
- National and international role
- UK focus for international Grid development
- 1,500 CPUs
- 750 TB disk
- 530 TB tape (capacity 1 PB)
- Grid Operations Centre

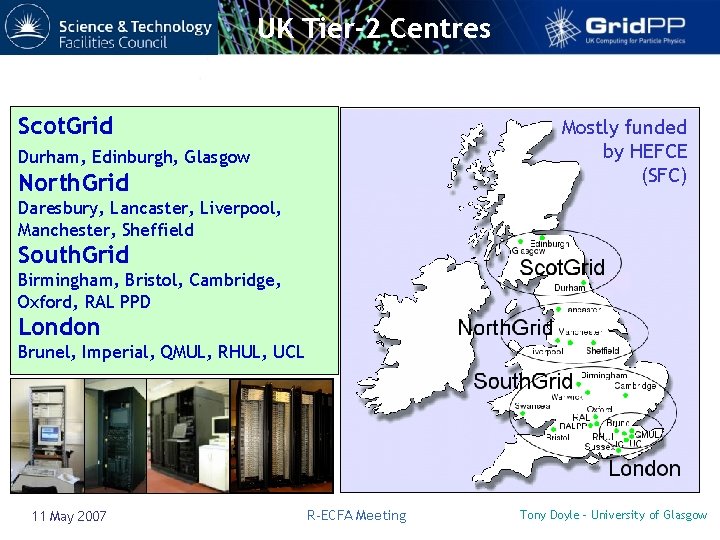

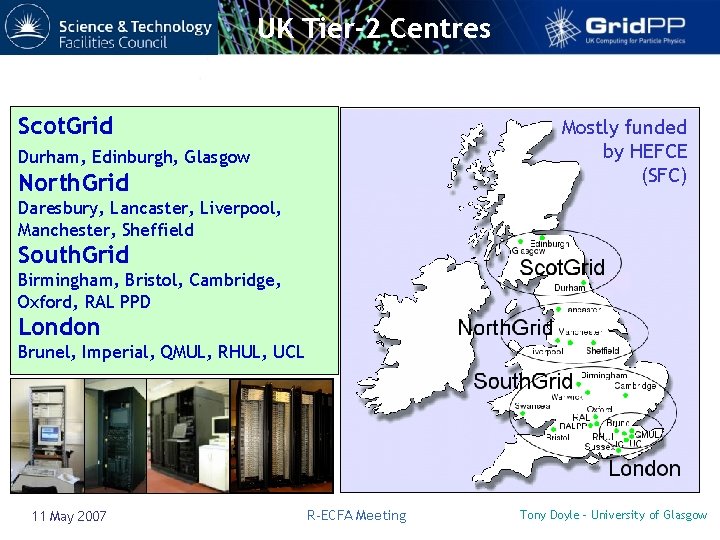

UK Tier-2 Centres (mostly funded by HEFCE (SFC))

- ScotGrid: Durham, Edinburgh, Glasgow
- NorthGrid: Daresbury, Lancaster, Liverpool, Manchester, Sheffield
- SouthGrid: Birmingham, Bristol, Cambridge, Oxford, RAL PPD
- London: Brunel, Imperial, QMUL, RHUL, UCL

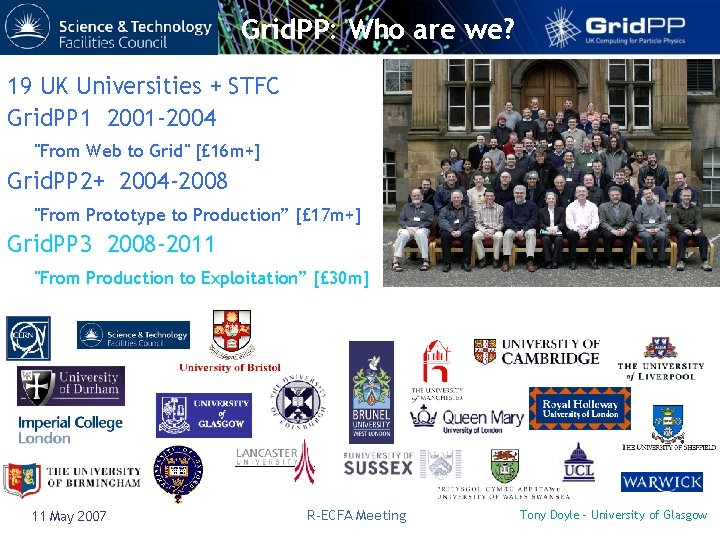

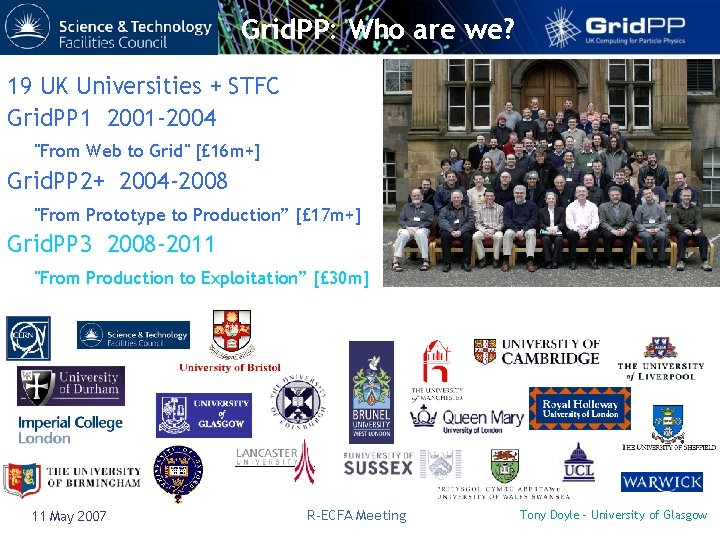

GridPP: Who are we?

19 UK universities + STFC

- GridPP1, 2001-2004: "From Web to Grid" [£16m+]
- GridPP2+, 2004-2008: "From Prototype to Production" [£17m+]
- GridPP3, 2008-2011: "From Production to Exploitation" [£30m]

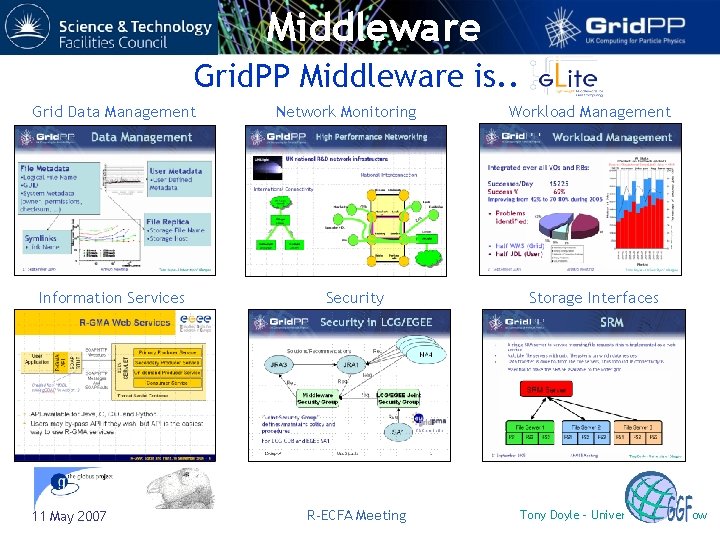

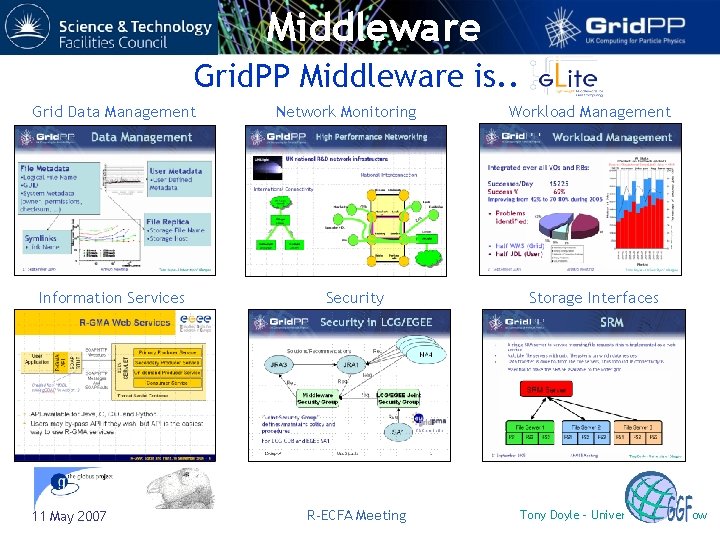

Middleware

GridPP middleware is:

- Workload Management
- Grid Data Management
- Information Services
- Network Monitoring
- Security
- Storage Interfaces

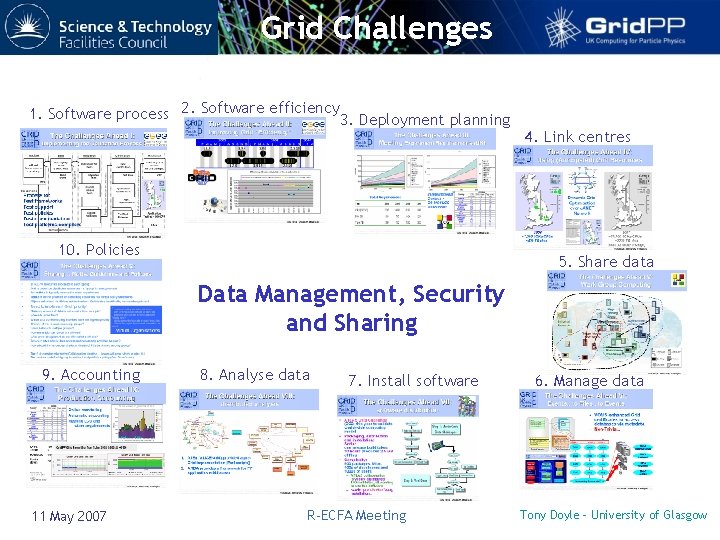

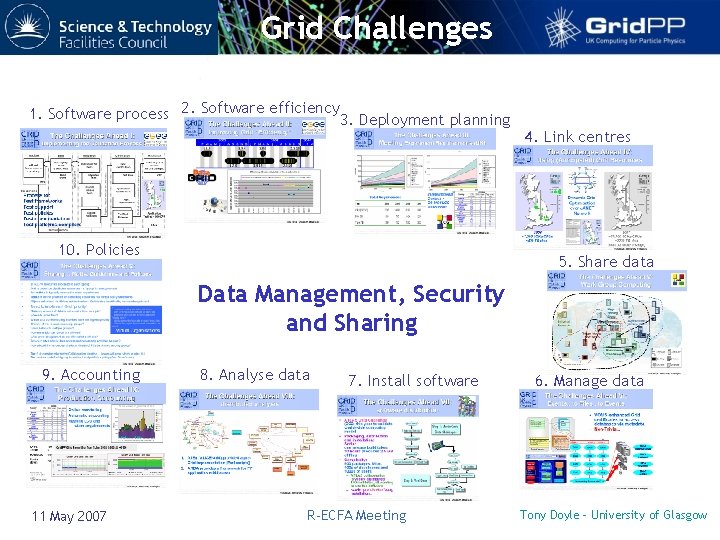

Grid Challenges: Data Management, Security and Sharing

1. Software process
2. Software efficiency
3. Deployment planning
4. Link centres
5. Share data
6. Manage data
7. Install software
8. Analyse data
9. Accounting
10. Policies

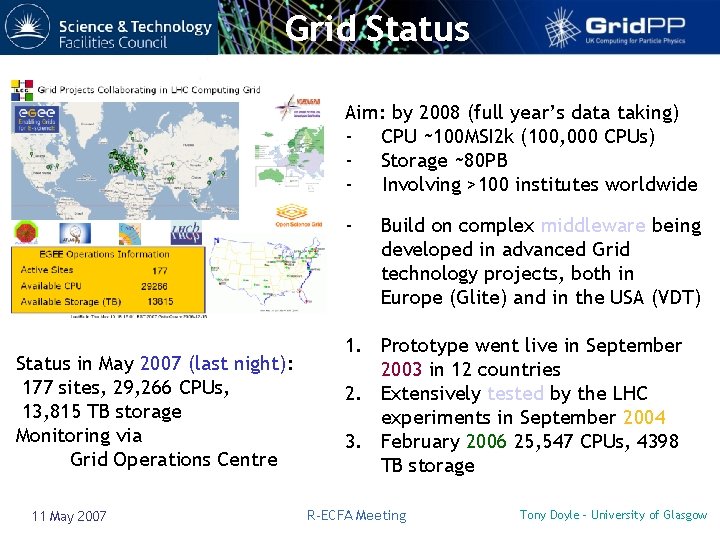

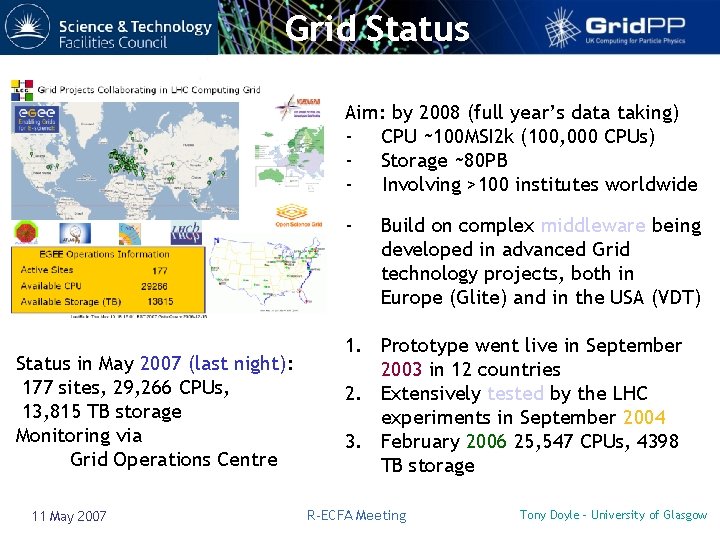

Grid Status

Aim: by 2008 (full year's data taking)

- CPU ~100 MSI2k (100,000 CPUs)
- Storage ~80 PB
- Involving >100 institutes worldwide
- Build on complex middleware being developed in advanced Grid technology projects, both in Europe (gLite) and in the USA (VDT)

1. Prototype went live in September 2003 in 12 countries
2. Extensively tested by the LHC experiments in September 2004
3. February 2006: 25,547 CPUs, 4,398 TB storage

Status in May 2007 (last night): 177 sites, 29,266 CPUs, 13,815 TB storage. Monitoring via the Grid Operations Centre.

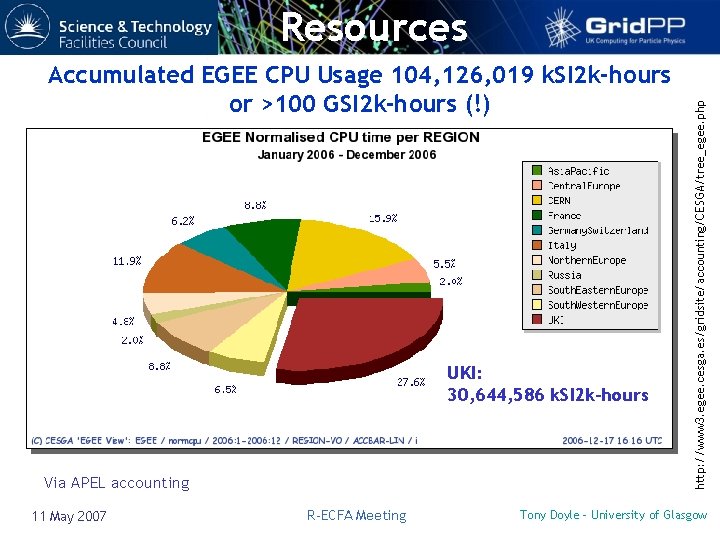

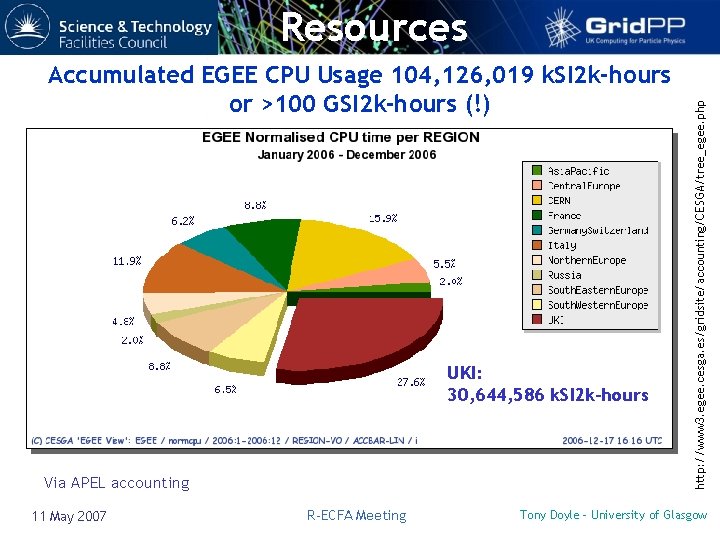
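To make the Grid Status numbers easier to compare, the sketch below expresses the February 2006 and May 2007 snapshots as fractions of the 2008 targets and as growth factors. It only uses figures quoted on the slide; the one assumption is decimal units (80 PB = 80,000 TB), and the function and variable names are illustrative.

```python
# Progress of the worldwide grid towards the 2008 targets, using the
# figures quoted on the "Grid Status" slide.
# Assumption: decimal units, so 80 PB = 80,000 TB.

TARGET_CPUS = 100_000        # ~100 MSI2k, i.e. roughly 1 kSI2k per CPU
TARGET_STORAGE_TB = 80_000   # ~80 PB

SNAPSHOTS = {
    "Feb 2006": {"cpus": 25_547, "storage_tb": 4_398},
    "May 2007": {"cpus": 29_266, "storage_tb": 13_815},
}


def fraction_of_target(snapshot):
    """Return (CPU fraction, storage fraction) of the 2008 targets."""
    return (snapshot["cpus"] / TARGET_CPUS,
            snapshot["storage_tb"] / TARGET_STORAGE_TB)


def growth(before, after):
    """Return the (CPU, storage) growth factors between two snapshots."""
    return (after["cpus"] / before["cpus"],
            after["storage_tb"] / before["storage_tb"])


if __name__ == "__main__":
    for label, snap in SNAPSHOTS.items():
        cpu_frac, disk_frac = fraction_of_target(snap)
        print(f"{label}: {cpu_frac:.1%} of CPU target, "
              f"{disk_frac:.1%} of storage target")
    cpu_g, disk_g = growth(SNAPSHOTS["Feb 2006"], SNAPSHOTS["May 2007"])
    print(f"Feb 2006 -> May 2007: CPUs x{cpu_g:.2f}, storage x{disk_g:.2f}")
```

On these figures, May 2007 stood at about 29% of the CPU target and about 17% of the storage target; over the 15 months from February 2006 storage roughly tripled while the CPU count grew by about 15%.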

Accumulated EGEE CPU Usage

- 104,126,019 kSI2k-hours, or >100 GSI2k-hours (!)
- UKI: 30,644,586 kSI2k-hours
- Via APEL accounting: http://www3.egee.cesga.es/gridsite/accounting/CESGA/tree_egee.php

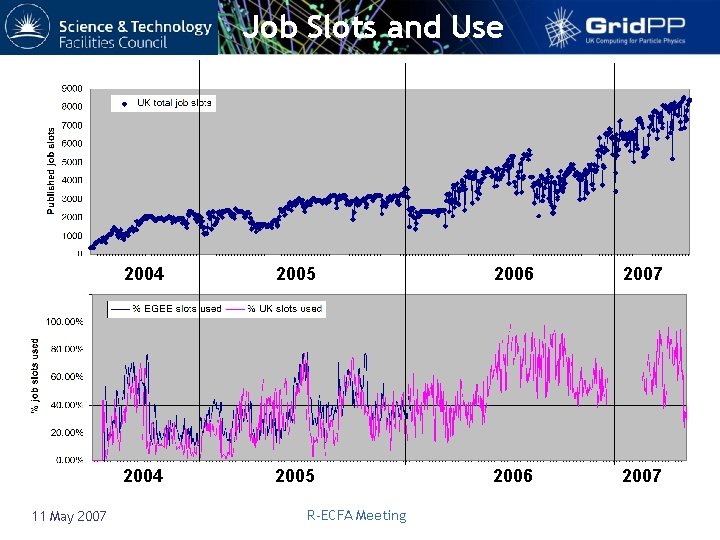

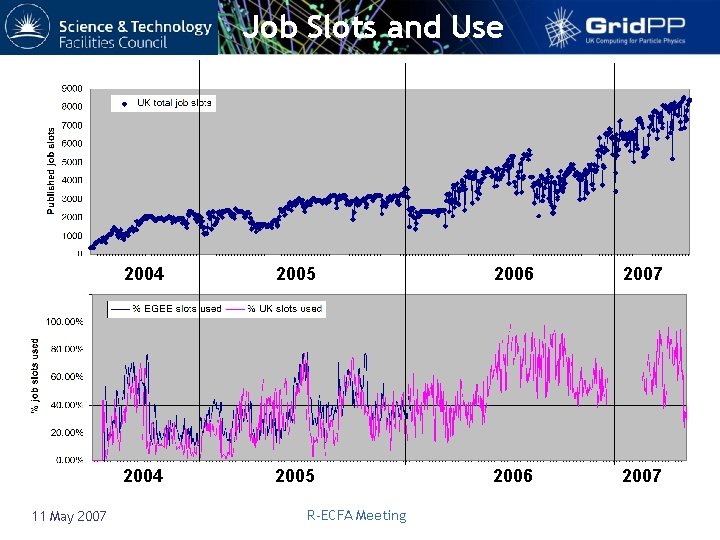
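The ">100 GSI2k-hours" remark follows from a unit conversion: 1 GSI2k is 10^9 SI2k, i.e. 10^6 kSI2k. The sketch below applies that conversion to the two APEL totals on the slide and computes the UKI (UK and Ireland region) share of the accumulated EGEE usage; the helper names are illustrative.

```python
# Unit conversion and regional share for the accumulated EGEE CPU usage
# quoted on the slide (APEL accounting figures).

KSI2K_PER_GSI2K = 1_000_000  # 1 GSI2k = 10**9 SI2k = 10**6 kSI2k

EGEE_TOTAL_KSI2K_HOURS = 104_126_019
UKI_KSI2K_HOURS = 30_644_586


def to_gsi2k_hours(ksi2k_hours):
    """Convert kSI2k-hours to GSI2k-hours."""
    return ksi2k_hours / KSI2K_PER_GSI2K


def share(part, total):
    """Fraction of the total delivered by one region."""
    return part / total


if __name__ == "__main__":
    print(f"EGEE total: {to_gsi2k_hours(EGEE_TOTAL_KSI2K_HOURS):.1f} GSI2k-hours")
    print(f"UKI:        {to_gsi2k_hours(UKI_KSI2K_HOURS):.1f} GSI2k-hours")
    print(f"UKI share:  {share(UKI_KSI2K_HOURS, EGEE_TOTAL_KSI2K_HOURS):.1%}")
```

This gives about 104.1 GSI2k-hours in total, of which UKI delivered about 30.6 GSI2k-hours, roughly 29% of the accumulated EGEE figure.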

Job Slots and Use, 2004-2007

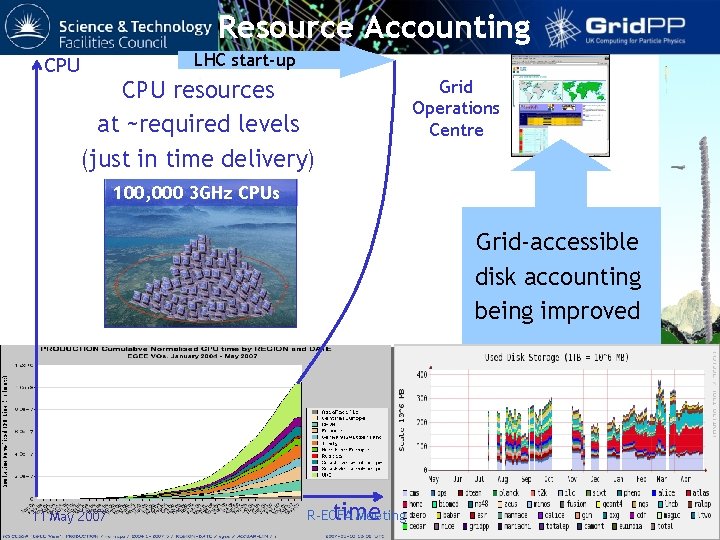

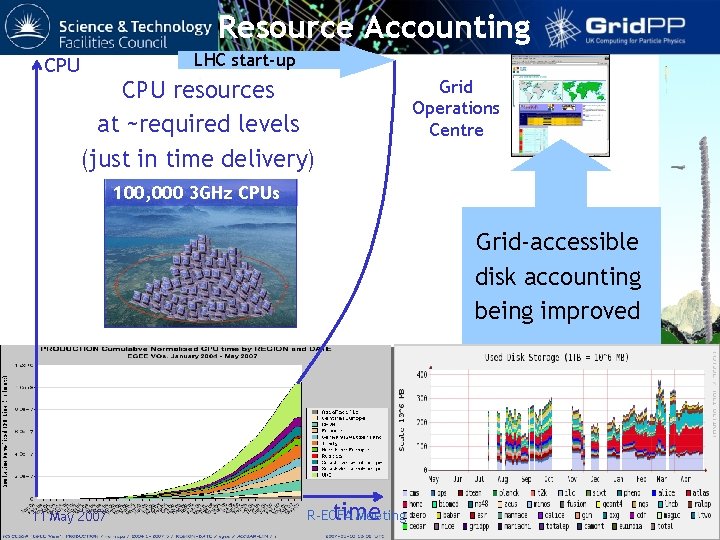

Resource Accounting

[Plot: CPU resources vs time, from the Grid Operations Centre, marking LHC start-up and a level of 100,000 3 GHz CPUs]

- CPU resources at ~required levels (just-in-time delivery)
- Grid-accessible disk accounting being improved

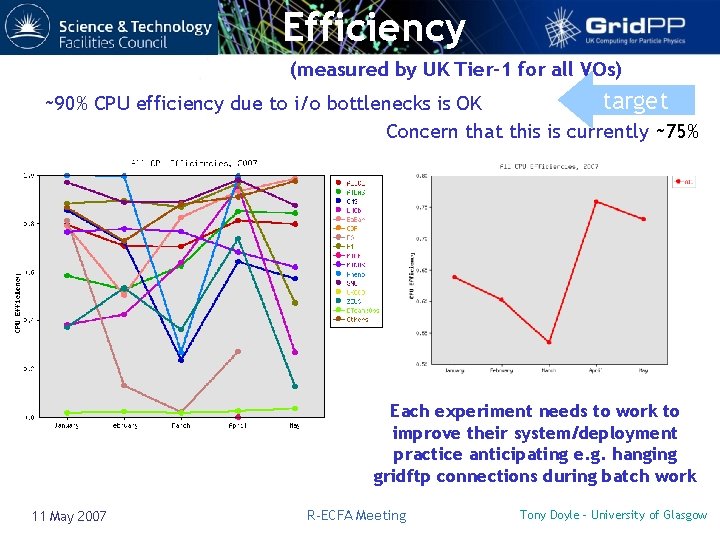

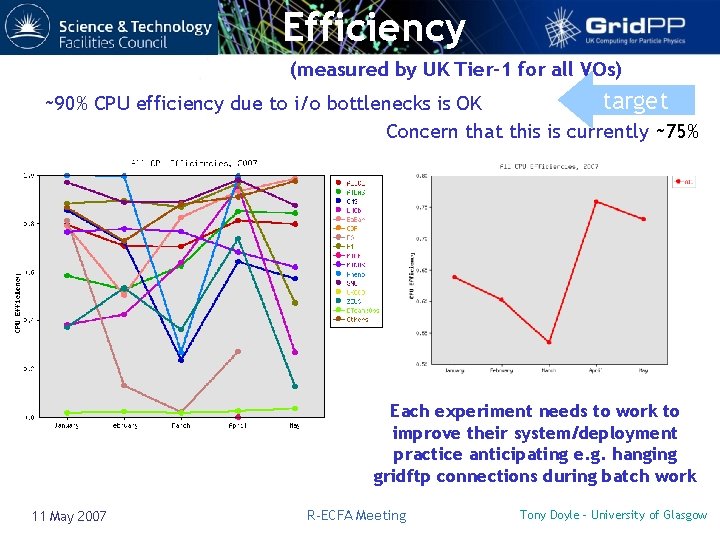

Efficiency (measured by the UK Tier-1 for all VOs)

- Target: ~90% CPU efficiency (losing the remaining ~10% to I/O bottlenecks is acceptable)
- Concern that this is currently ~75%
- Each experiment needs to work to improve its system/deployment practice, anticipating e.g. hanging gridftp connections during batch work

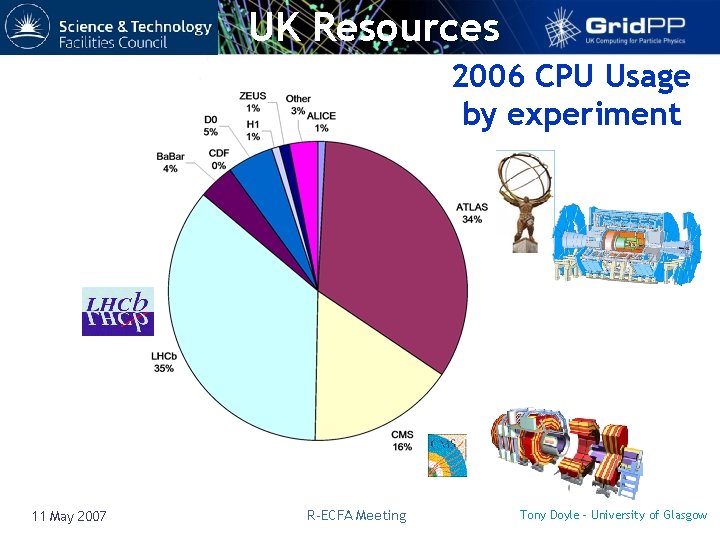

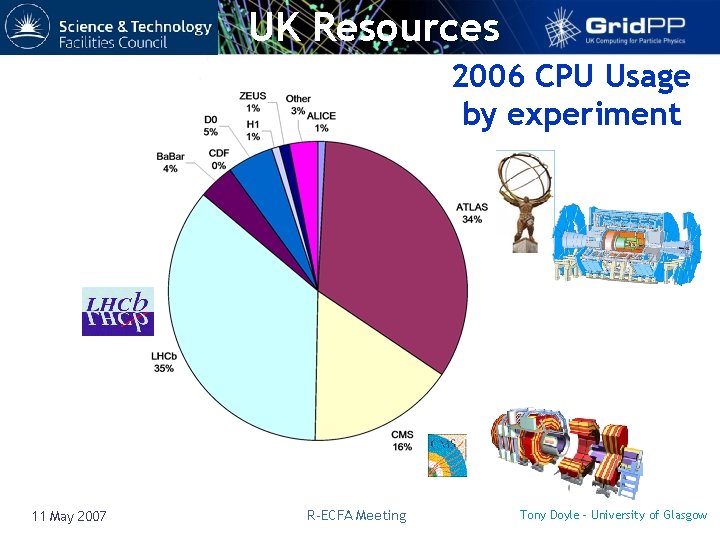
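CPU efficiency here is taken to mean total CPU time divided by total wall-clock time. The sketch below aggregates that ratio per VO and flags VOs below the ~90% target. The VO names and job records are hypothetical; the point is that a job stalled on a hanging transfer accrues wall-clock time with almost no CPU time, which drags the whole VO's efficiency down.

```python
# CPU efficiency per VO = total CPU time / total wall-clock time.
# The VO names and job records below are hypothetical.
from collections import defaultdict

TARGET_EFFICIENCY = 0.90  # ~90% target quoted on the slide


def efficiency_by_vo(jobs):
    """jobs: iterable of (vo, cpu_seconds, wall_seconds) tuples."""
    cpu = defaultdict(float)
    wall = defaultdict(float)
    for vo, cpu_s, wall_s in jobs:
        cpu[vo] += cpu_s
        wall[vo] += wall_s
    # Skip VOs with no recorded wall-clock time to avoid dividing by zero.
    return {vo: cpu[vo] / wall[vo] for vo in wall if wall[vo] > 0}


if __name__ == "__main__":
    jobs = [
        ("vo_a", 3_400, 3_600),  # CPU-bound job
        ("vo_a", 3_300, 3_600),  # CPU-bound job
        ("vo_b", 3_400, 3_600),  # CPU-bound job
        ("vo_b", 200, 3_600),    # stuck on a hanging transfer: wall time, little CPU
    ]
    for vo, eff in sorted(efficiency_by_vo(jobs).items()):
        status = "OK" if eff >= TARGET_EFFICIENCY else "below target"
        print(f"{vo}: {eff:.0%} ({status})")
```

In this toy example a single stalled job halves vo_b's efficiency to 50%, even though its other job runs at about 94%.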

UK Resources: 2006 CPU Usage by Experiment

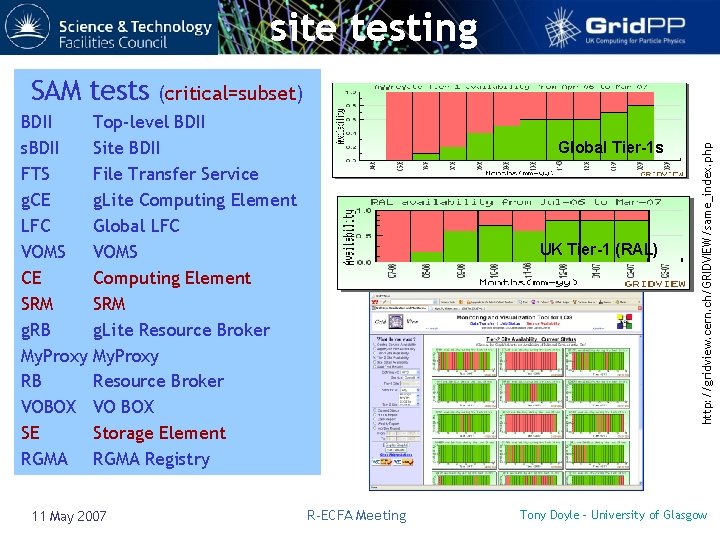

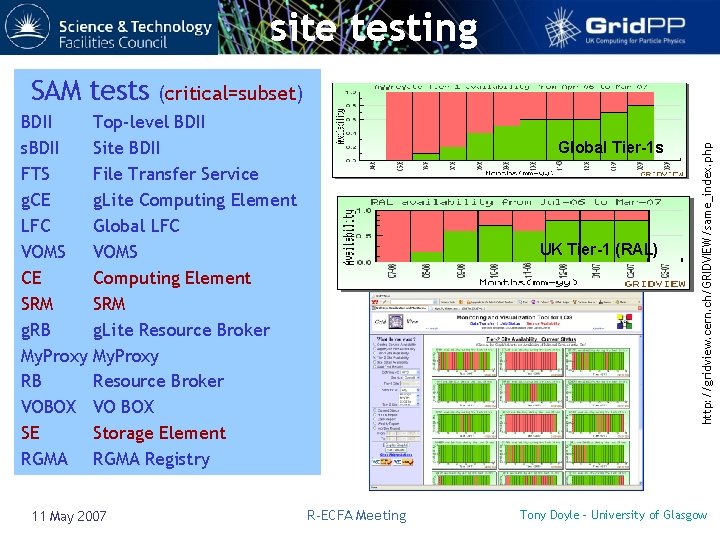

Site Testing: SAM Tests

Services tested at the global Tier-1s and at the UK Tier-1 (RAL); the critical tests are a subset:

| Abbreviation | Service |
|---|---|
| BDII | Top-level BDII |
| sBDII | Site BDII |
| FTS | File Transfer Service |
| gCE | gLite Computing Element |
| LFC | Global LFC |
| VOMS | VOMS |
| CE | Computing Element |
| SRM | SRM |
| gRB | gLite Resource Broker |
| MyProxy | MyProxy |
| RB | Resource Broker |
| VOBOX | VO BOX |
| SE | Storage Element |
| RGMA | RGMA Registry |

Source: http://gridview.cern.ch/GRIDVIEW/same_index.php

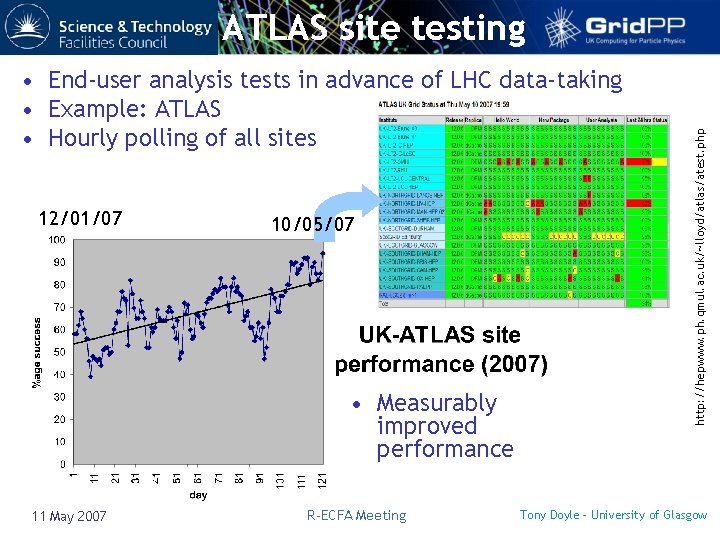

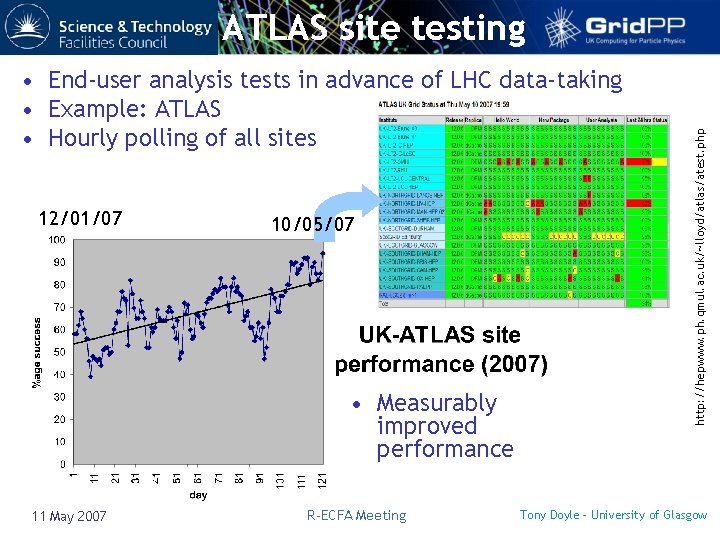
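One way per-service test results can be rolled up into a single site figure is sketched below: a test round counts as "up" only if every critical service passed, and availability is the fraction of rounds that were up. This rule and the choice of critical services are assumptions for illustration (the slide only says the critical tests are a subset); it is not presented as the SAM algorithm itself.

```python
# Roll per-service test results up into a site availability fraction.
# Assumption: a test round counts as "up" only if every critical service passed;
# the set of critical services below is hypothetical.
CRITICAL_SERVICES = {"CE", "SE", "SRM"}


def round_is_up(results, critical=CRITICAL_SERVICES):
    """results maps service name -> True if that service's test passed.
    A critical service with no result is treated as a failure."""
    return all(results.get(service, False) for service in critical)


def availability(rounds, critical=CRITICAL_SERVICES):
    """Fraction of test rounds in which all critical services passed."""
    if not rounds:
        return 0.0
    return sum(round_is_up(r, critical) for r in rounds) / len(rounds)


if __name__ == "__main__":
    rounds = [
        {"CE": True, "SE": True, "SRM": True, "RGMA": False},  # non-critical failure only
        {"CE": True, "SE": False, "SRM": True, "RGMA": True},  # critical SE failure
        {"CE": True, "SE": True, "SRM": True, "RGMA": True},
        {"CE": True, "SE": True, "SRM": True, "RGMA": True},
    ]
    print(f"availability: {availability(rounds):.0%}")
```

Treating a missing critical result as a failure is a deliberately conservative choice: a site cannot look healthy simply because a test did not run.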

ATLAS Site Testing

- End-user analysis tests in advance of LHC data-taking
- Example: ATLAS
- Hourly polling of all sites, 12/01/07 to 10/05/07
- Measurably improved performance

http://hepwww.ph.qmul.ac.uk/~lloyd/atlas/atest.php

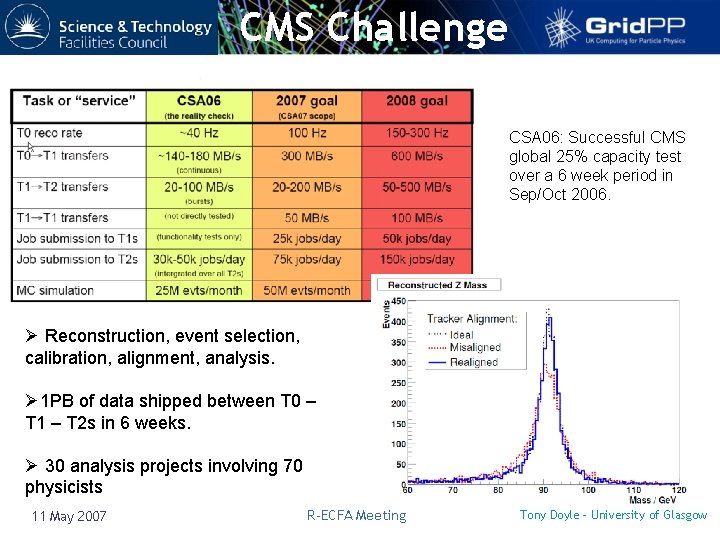

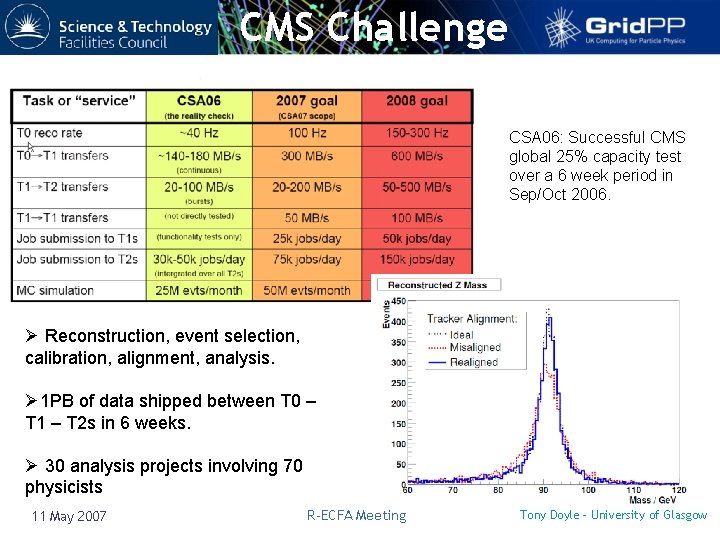

CMS Challenge CSA06

Successful CMS global 25% capacity test over a 6-week period in Sep/Oct 2006:

- Reconstruction, event selection, calibration, alignment, analysis
- 1 PB of data shipped between T0, T1s and T2s in 6 weeks
- 30 analysis projects involving 70 physicists

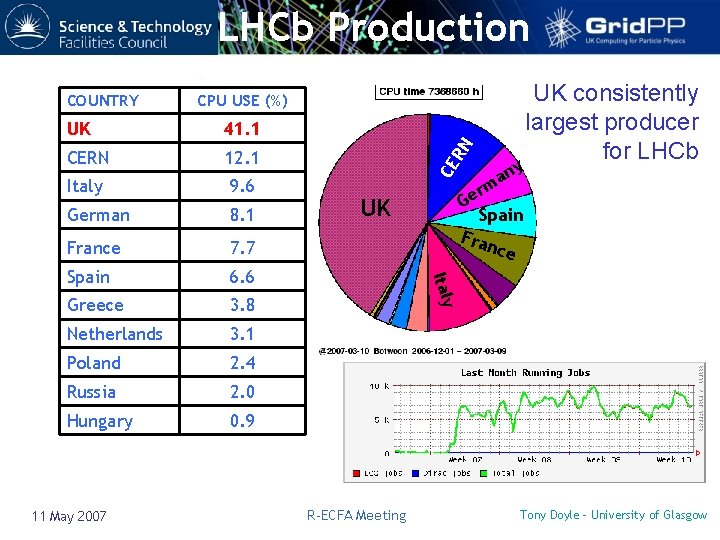

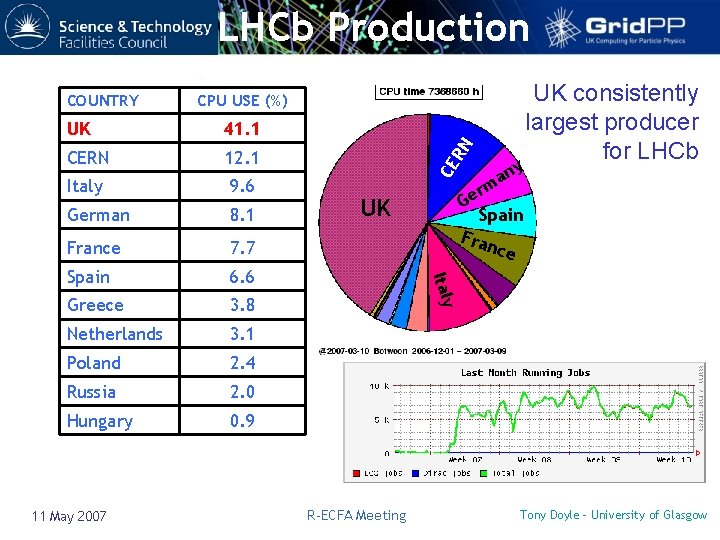
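Shipping 1 PB in 6 weeks implies a sustained average rate, which the short calculation below makes explicit. It assumes decimal units (1 PB = 10^15 bytes, 1 MB = 10^6 bytes) and averages over the whole period and all T0-T1-T2 links combined, so actual rates varied above and below this figure.

```python
# Average transfer rate needed to ship 1 PB in 6 weeks (CSA06 figures).
# Assumption: decimal units, 1 PB = 10**15 bytes, 1 MB = 10**6 bytes.
SECONDS_PER_WEEK = 7 * 24 * 3600


def average_rate_mb_per_s(volume_bytes, weeks):
    """Mean data rate in MB/s for a given volume over a given number of weeks."""
    return volume_bytes / (weeks * SECONDS_PER_WEEK) / 1e6


if __name__ == "__main__":
    rate = average_rate_mb_per_s(1e15, 6)
    print(f"1 PB in 6 weeks ~ {rate:.0f} MB/s sustained on average")
```

The result is roughly 276 MB/s averaged around the clock for six weeks.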

LHCb Production: CPU use (%) by country

UK consistently largest producer for LHCb

| Country/site | CPU use (%) |
|---|---|
| UK | 41.1 |
| CERN | 12.1 |
| Italy | 9.6 |
| Germany | 8.1 |
| France | 7.7 |
| Spain | 6.6 |
| Greece | 3.8 |
| Netherlands | 3.1 |
| Poland | 2.4 |
| Russia | 2.0 |
| Hungary | 0.9 |

![Forward Look: Scenario Planning, Resource Requirements (TB, kSI2k), GridPP](https://slidetodoc.com/presentation_image_h2/ebcc6af3e8317ed51efcd310a987ca2e/image-23.jpg)

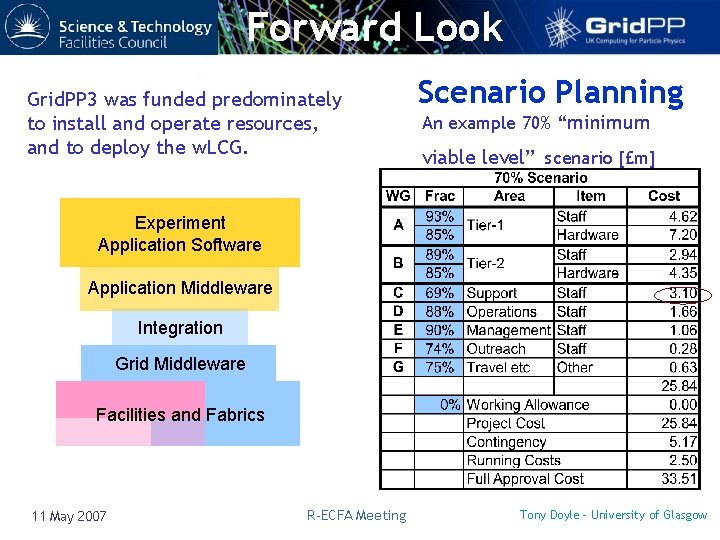

Forward Look: Scenario Planning – Resource Requirements [TB, kSI2k]

- GridPP requested a fair share of global requirements, according to experiment requirements
- Changes in the LHC schedule prompted a(nother) round of resource planning, presented to the CRRB on 24 October
- New UK resource requirements have been derived and incorporated in the scenario planning, e.g. for the Tier-1

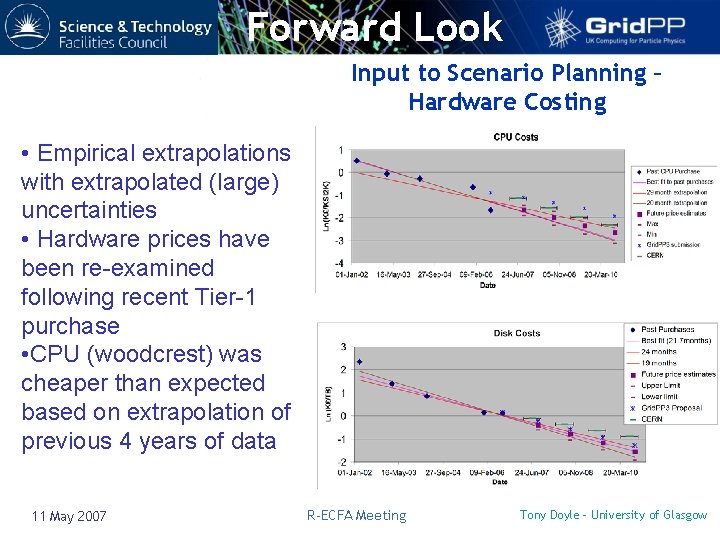

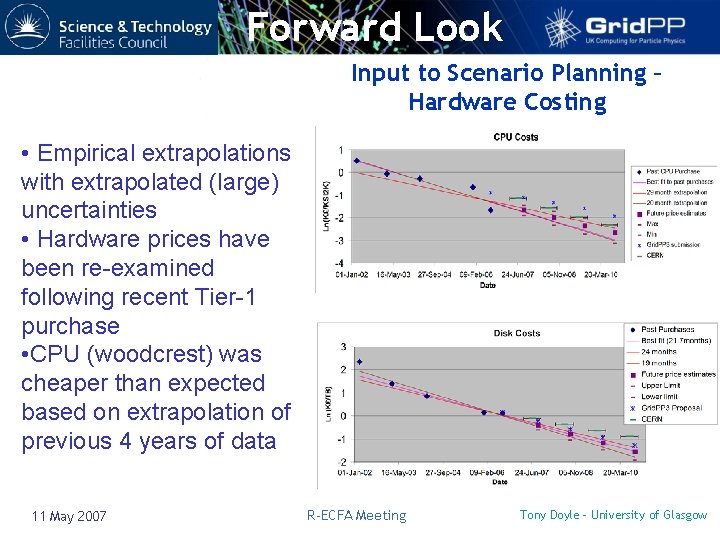

Forward Look: Input to Scenario Planning – Hardware Costing

- Empirical extrapolations with extrapolated (large) uncertainties
- Hardware prices have been re-examined following the recent Tier-1 purchase
- CPU (Woodcrest) was cheaper than expected based on extrapolation of the previous 4 years of data

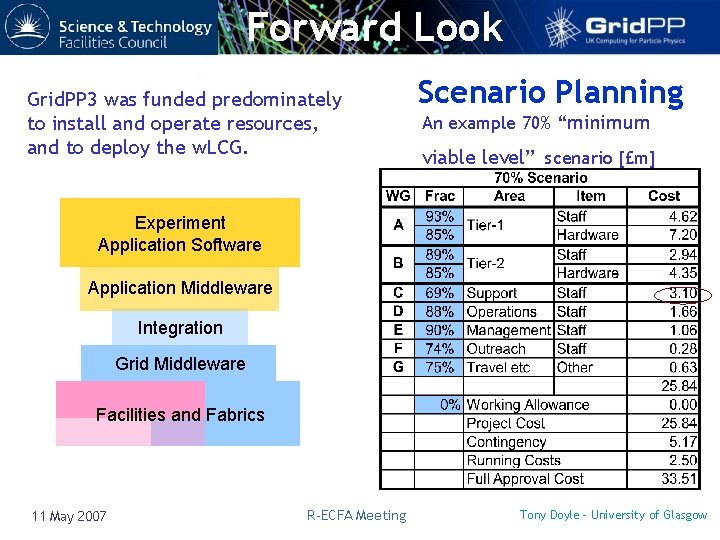
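A common form of empirical price extrapolation assumes the cost per unit of capacity falls exponentially with time, i.e. a straight line in log(cost). The sketch below fits that model by ordinary least squares and extrapolates one year ahead. The model is an assumption, not GridPP's documented costing method, and the four synthetic points (cost halving every year, in arbitrary units) are made up purely to mirror the "previous 4 years of data" on the slide. The slide's Woodcrest experience is a reminder that such fits carry large uncertainties.

```python
# Empirical extrapolation of hardware price/performance, assuming cost per
# unit of capacity falls exponentially with time (a straight line in log space).
# The fit is ordinary least squares on log(cost); the inputs below are synthetic.
import math


def fit_exponential(years, costs):
    """Fit cost = a * exp(b * year) by least squares on log(cost); return (a, b)."""
    n = len(years)
    logs = [math.log(c) for c in costs]
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    b = sxy / sxx
    a = math.exp(mean_y - b * mean_x)
    return a, b


def predict(a, b, year):
    """Extrapolated cost in a given year under the fitted exponential model."""
    return a * math.exp(b * year)


if __name__ == "__main__":
    # Synthetic cost per kSI2k (arbitrary units) over four years, halving yearly.
    years = [0, 1, 2, 3]
    costs = [8.0, 4.0, 2.0, 1.0]
    a, b = fit_exponential(years, costs)
    print(f"halving time: {math.log(2) / -b:.2f} years")
    print(f"extrapolated cost in year 4: {predict(a, b, 4):.3f}")
```

Years are expressed as offsets (0, 1, 2, ...) rather than calendar years to keep the exponent small and the fit numerically well conditioned.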

Forward Look: Scenario Planning

GridPP3 was funded predominantly to install and operate resources, and to deploy the wLCG.

An example 70% "minimum viable level" scenario [£m], split across Experiment Application Software, Application Middleware Integration, Grid Middleware, and Facilities and Fabrics.

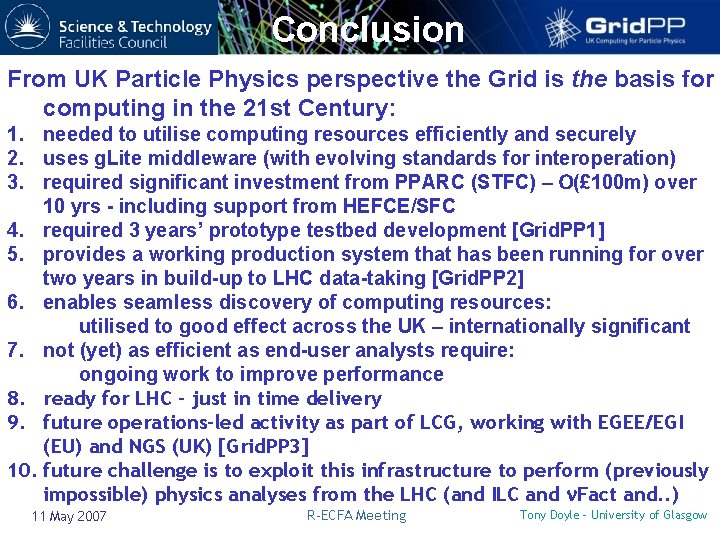

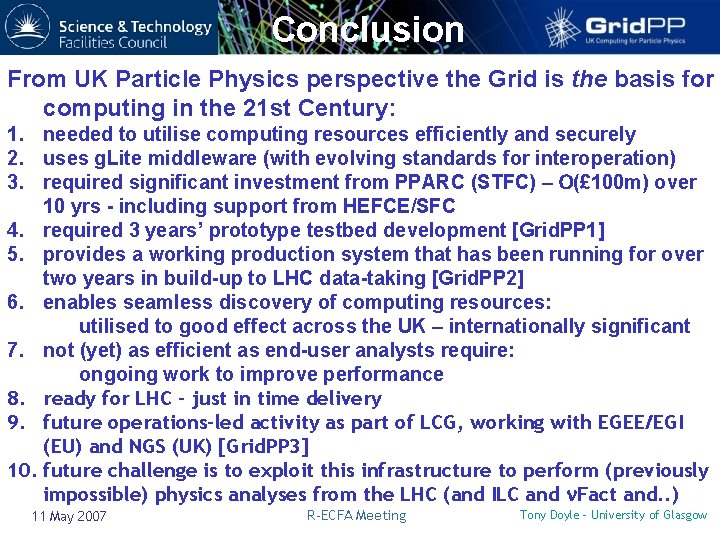

Conclusion

From the UK particle physics perspective, the Grid is the basis for computing in the 21st century:

1. needed to utilise computing resources efficiently and securely
2. uses gLite middleware (with evolving standards for interoperation)
3. required significant investment from PPARC (STFC): O(£100m) over 10 years, including support from HEFCE/SFC
4. required 3 years' prototype testbed development [GridPP1]
5. provides a working production system that has been running for over two years in the build-up to LHC data-taking [GridPP2]
6. enables seamless discovery of computing resources: utilised to good effect across the UK, and internationally significant
7. not (yet) as efficient as end-user analysts require: ongoing work to improve performance
8. ready for the LHC: just-in-time delivery
9. future operations-led activity as part of LCG, working with EGEE/EGI (EU) and NGS (UK) [GridPP3]
10. the future challenge is to exploit this infrastructure to perform (previously impossible) physics analyses from the LHC (and ILC and nFact and ...)

Further info: http://www.gridpp.ac.uk/