GPU Accelerated Top-K Selection With Efficient Early Stopping

- Slides: 21

GPU Accelerated Top-K Selection With Efficient Early Stopping
Vasileios Zois, Vassilis J. Tsotras, and Walid A. Najjar
Department of Computer Science & Engineering, University of California, Riverside
Presented by Pritom Ahmed, Department of Computer Science & Engineering, University of California, Riverside
ADMS 2019 – Monday, August 26th

Outline
1. Overview of Top-K Selection Operator
2. GPU Processing Challenges & Top-K Selection For GPUs
3. GPU Accelerated Top-K Selection With Support For Early Termination
4. Experimental Evaluation of Proposed Solutions

Top-K Queries Motivation
• Information Retrieval
  - Data object: an information entity characterized by a set of features/attributes.
  - Examples: documents, images, videos, time series, objects, tweets.
  - Users are interested in objects that may only partially match their query specification.
  - Ranking based on relevance is pivotal to multi-criteria decision-making applications.
• Top-K Selection Problem
  - Enable support for Top-K queries in database management systems.
  - Use indexes to retrieve and evaluate only a few tuples.
  - Terminate processing early and still return the exact solution.
  - Support different monotone aggregation functions and query weights.

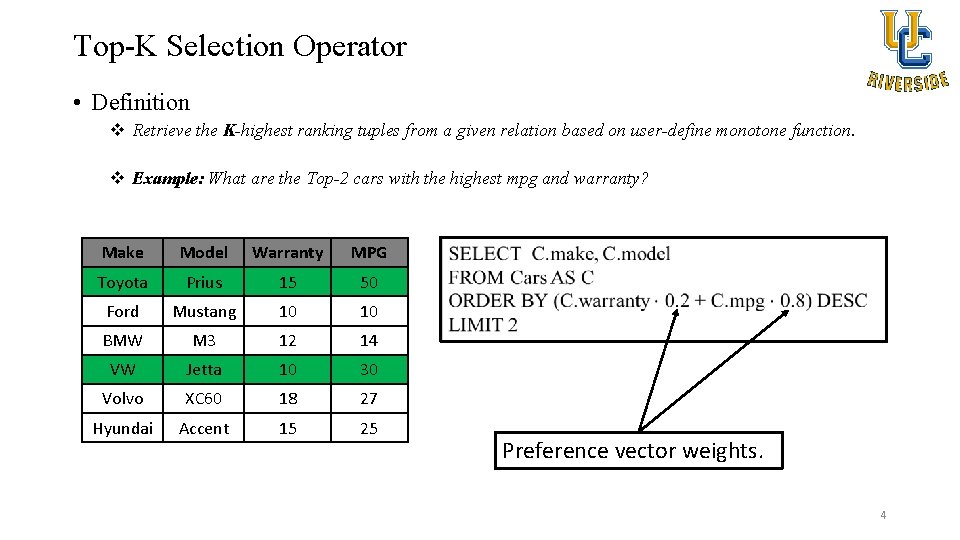

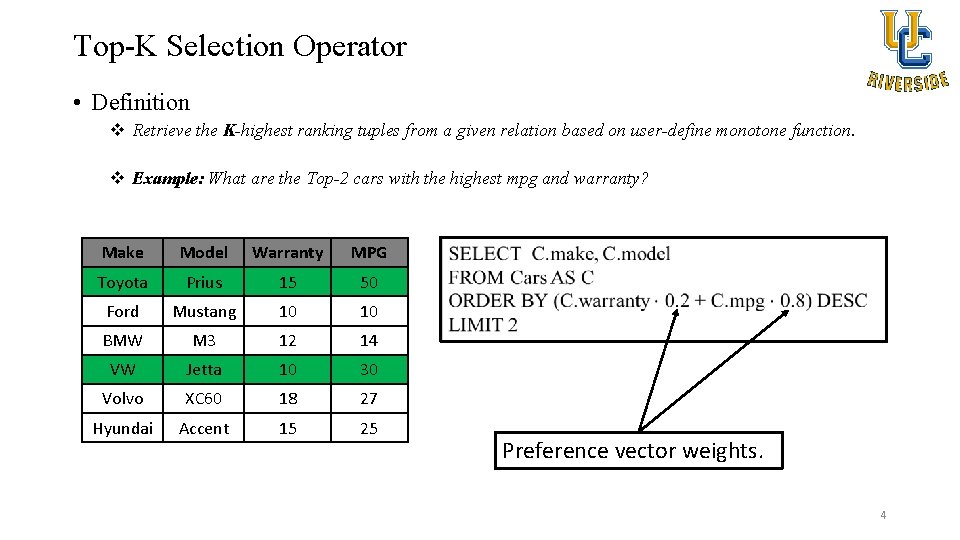

Top-K Selection Operator
• Definition
  - Retrieve the K highest-ranking tuples from a given relation based on a user-defined monotone function.
  - Example: What are the Top-2 cars with the highest MPG and warranty?

| Make    | Model   | Warranty | MPG |
|---------|---------|----------|-----|
| Toyota  | Prius   | 15       | 50  |
| Ford    | Mustang | 10       | 10  |
| BMW     | M3      | 12       | 14  |
| VW      | Jetta   | 10       | 30  |
| Volvo   | XC60    | 18       | 27  |
| Hyundai | Accent  | 15       | 25  |

(Figure: preference vector weights.)
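The definition can be made concrete with a small sketch of the naive evaluation: score every tuple with a weighted sum f(t) = w_warranty · warranty + w_mpg · mpg, then keep the two best. The slide's actual preference vector weights appear only in the figure, so the equal weights `(1, 1)` below are a hypothetical choice, and `heapq.nlargest` stands in for a full sort.

```python
import heapq

# Rows of the example table: (make, model, warranty, mpg).
CARS = [
    ("Toyota", "Prius", 15, 50),
    ("Ford", "Mustang", 10, 10),
    ("BMW", "M3", 12, 14),
    ("VW", "Jetta", 10, 30),
    ("Volvo", "XC60", 18, 27),
    ("Hyundai", "Accent", 15, 25),
]

def score(row, weights):
    """Monotone weighted-sum aggregation over the numeric attributes."""
    return sum(w * a for w, a in zip(weights, row[2:]))

def top_k(rows, weights, k):
    """Naive evaluation: score every tuple, then keep the k highest."""
    return heapq.nlargest(k, rows, key=lambda r: score(r, weights))

# Hypothetical preference vector: equal weights for warranty and MPG.
WEIGHTS = (1, 1)
best = top_k(CARS, WEIGHTS, k=2)
for make, model, *_ in best:
    print(make, model)
```

With these assumed weights the sketch returns the Toyota Prius (score 65) and the Volvo XC60 (score 45). A different preference vector can change the ranking, which is why the weights are part of the query.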

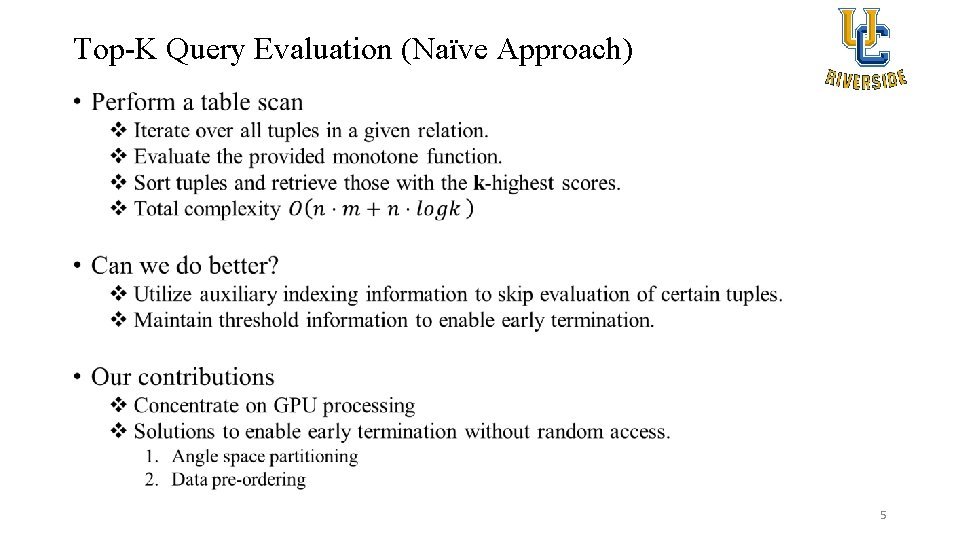

Top-K Query Evaluation (Naïve Approach)

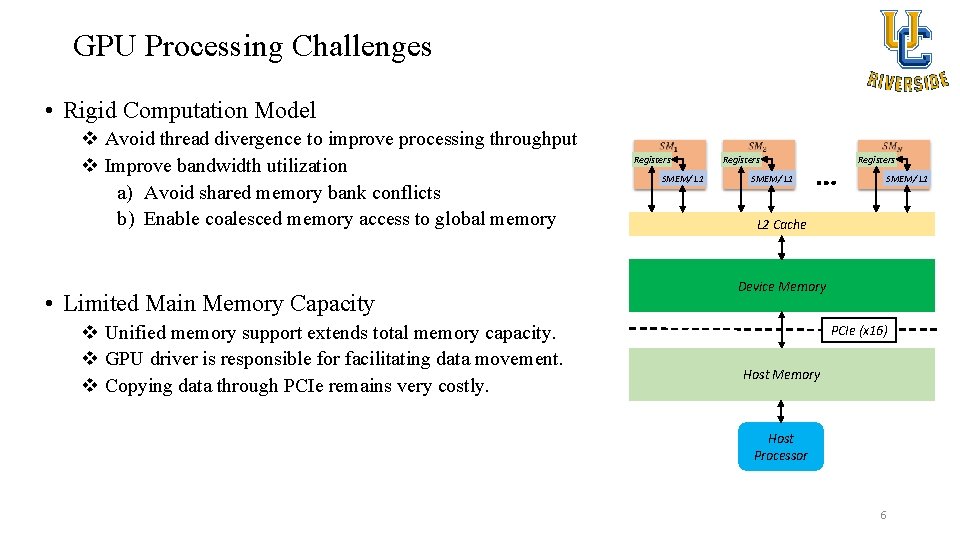

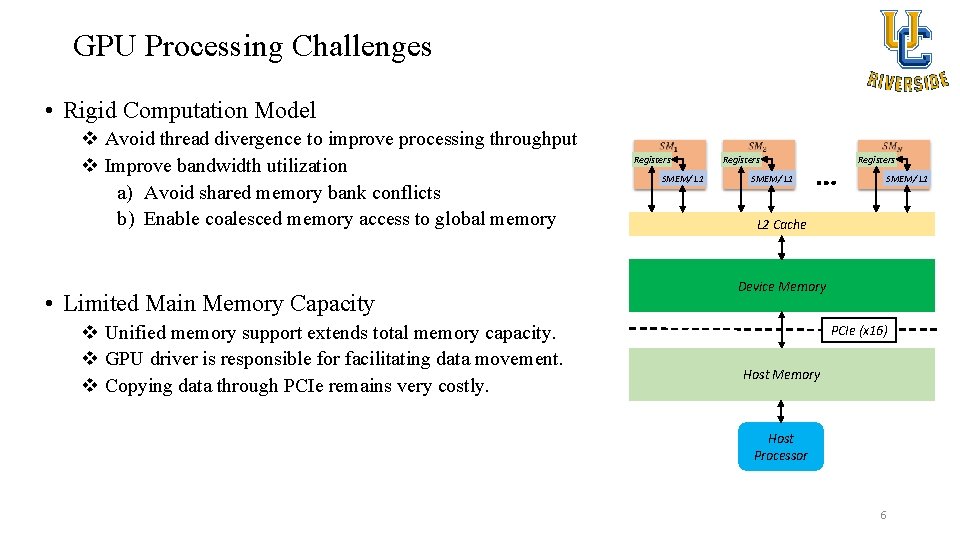

GPU Processing Challenges
• Rigid Computation Model
  - Avoid thread divergence to improve processing throughput.
  - Improve bandwidth utilization:
    a) Avoid shared memory bank conflicts.
    b) Enable coalesced access to global memory.
• Limited Main Memory Capacity
  - Unified memory support extends the total memory capacity.
  - The GPU driver is responsible for facilitating data movement.
  - Copying data through PCIe remains very costly.
(Figure: GPU memory hierarchy: per-SM registers and SMEM/L1, a shared L2 cache, device memory, and a PCIe (x16) link to host memory and the host processor.)
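To make the bank-conflict bullet concrete, the sketch below models one warp's shared-memory access. It assumes 32 banks of 4-byte words (a common configuration on NVIDIA GPUs) and that threads reading the very same word are served by a broadcast; the conflict degree is the largest number of distinct words that land in one bank, i.e. how many serialized accesses the warp needs. The helper names are hypothetical.

```python
from collections import defaultdict
from math import gcd

NUM_BANKS = 32   # assumption: 32 banks, one 4-byte word per bank per access
WARP_SIZE = 32

def conflict_degree(word_indices):
    """Max number of distinct words mapped to the same bank in one warp access.
    Threads reading the same word are broadcast, so they count only once."""
    words_per_bank = defaultdict(set)
    for w in word_indices:
        words_per_bank[w % NUM_BANKS].add(w)
    return max(len(ws) for ws in words_per_bank.values())

def strided_access(stride):
    """Word index read by each thread of a warp for a strided pattern."""
    return [tid * stride for tid in range(WARP_SIZE)]

for stride in (1, 2, 4, 32, 33):
    d = conflict_degree(strided_access(stride))
    print(f"stride {stride:2d}: {d}-way (gcd rule predicts {gcd(stride, NUM_BANKS)})")
```

Under this model a stride of s words causes a gcd(s, 32)-way conflict, which is why padding a 32-word row to 33 words is the usual way to make column-wise access conflict-free.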

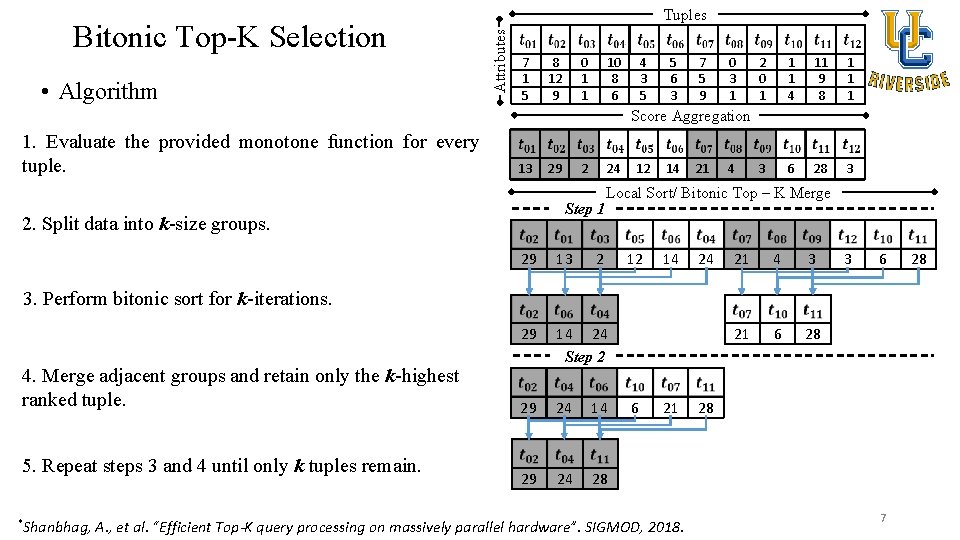

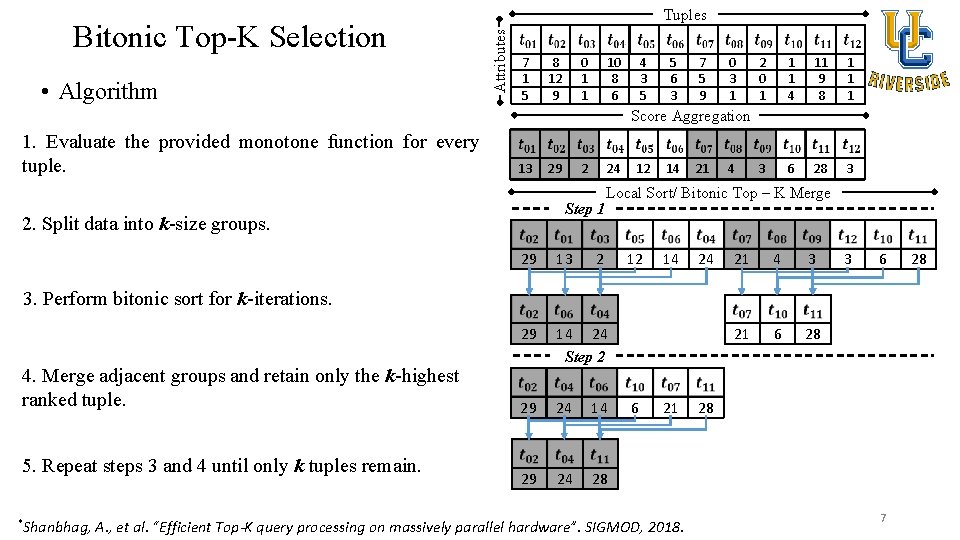

Bitonic Top-K Selection*
• Algorithm
  1. Evaluate the provided monotone function for every tuple (score aggregation).
  2. Split the data into k-size groups.
  3. Perform a bitonic sort on each k-size group.
  4. Merge adjacent groups and retain only the k highest-ranked tuples.
  5. Repeat steps 3 and 4 until only k tuples remain.
(Figure: worked example on a small relation: attributes, Step 1 score aggregation, Step 2 local sort / bitonic Top-K merge.)
*Shanbhag, A., et al. “Efficient Top-K query processing on massively parallel hardware”. SIGMOD, 2018.
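Steps 2 to 5 can be sketched sequentially in Python, assuming the scores from step 1 are already computed and k is a power of two. The sketch follows the general bitonic Top-K idea (sort k-size groups in alternating directions, keep the element-wise maximum of each ascending/descending pair, then re-sort that bitonic half with a bitonic merge); it is an illustration, not the authors' GPU kernel, where each compare-exchange loop would run across parallel threads. Padding with `-inf` is an assumption for inputs whose size is not a suitable multiple of k.

```python
NEG_INF = float("-inf")

def bitonic_merge(seq, ascending):
    """Sort a bitonic sequence whose length is a power of two."""
    n = len(seq)
    if n <= 1:
        return list(seq)
    half = n // 2
    seq = list(seq)
    for i in range(half):  # half-cleaner: one compare-exchange per pair
        if (seq[i] > seq[i + half]) == ascending:
            seq[i], seq[i + half] = seq[i + half], seq[i]
    return bitonic_merge(seq[:half], ascending) + bitonic_merge(seq[half:], ascending)

def bitonic_sort(seq, ascending):
    """Bitonic sort: sort halves in opposite directions, then merge."""
    if len(seq) <= 1:
        return list(seq)
    half = len(seq) // 2
    bitonic = bitonic_sort(seq[:half], True) + bitonic_sort(seq[half:], False)
    return bitonic_merge(bitonic, ascending)

def bitonic_top_k(scores, k):
    """Return the k largest scores in descending order; k must be a power of two."""
    if k <= 0 or k & (k - 1):
        raise ValueError("k must be a positive power of two")
    # Pad with -inf so the input splits into a power-of-two number of k-size groups.
    num_groups = 1
    while num_groups * k < len(scores):
        num_groups *= 2
    padded = list(scores) + [NEG_INF] * (num_groups * k - len(scores))
    # Steps 2-3: sort each k-size group, alternating ascending/descending so
    # every adjacent (ascending, descending) pair forms a bitonic sequence.
    groups = [bitonic_sort(padded[g * k:(g + 1) * k], ascending=(g % 2 == 0))
              for g in range(num_groups)]
    # Steps 4-5: merge adjacent pairs, keeping only the top k, until one group is left.
    while len(groups) > 1:
        merged = []
        for a, b in zip(groups[0::2], groups[1::2]):
            top = [max(x, y) for x, y in zip(a, b)]  # k largest of a + b, still bitonic
            merged.append(bitonic_merge(top, ascending=(len(merged) % 2 == 0)))
        groups = merged
    # The last group is ascending: reverse it and drop any padding.
    return groups[0][::-1][:min(k, len(scores))]
```

For example, `bitonic_top_k([5, 1, 9, 3, 7, 8, 2, 6], 4)` returns `[9, 8, 7, 6]`. Each merge round halves the number of groups while never writing more than k values per pair, which is what lets the approach avoid the scatter writes of a full sort.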

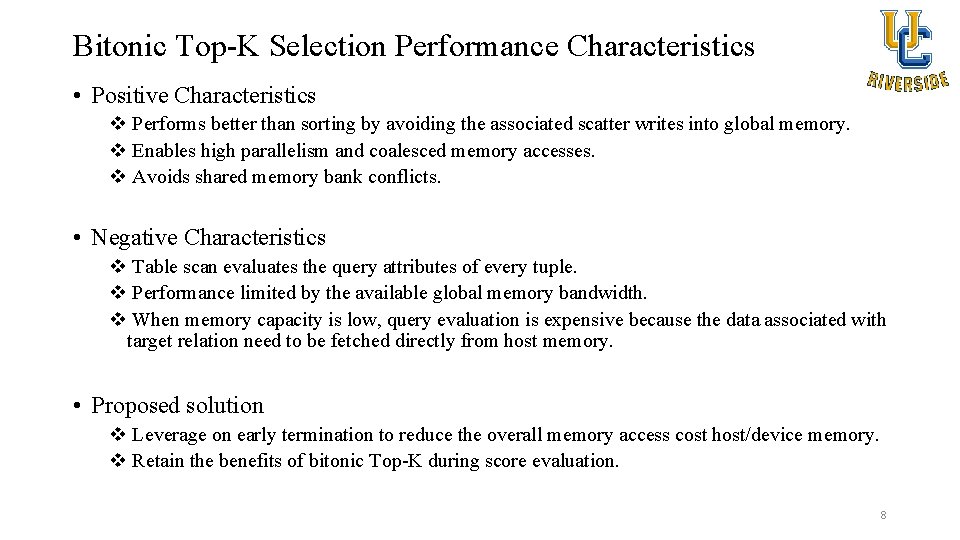

Bitonic Top-K Selection Performance Characteristics
• Positive Characteristics
  - Performs better than sorting by avoiding the associated scatter writes to global memory.
  - Enables high parallelism and coalesced memory accesses.
  - Avoids shared memory bank conflicts.
• Negative Characteristics
  - A table scan evaluates the query attributes of every tuple.
  - Performance is limited by the available global memory bandwidth.
  - When device memory capacity is insufficient, query evaluation is expensive because the data of the target relation must be fetched directly from host memory.
• Proposed Solution
  - Leverage early termination to reduce the overall memory access cost to host/device memory.
  - Retain the benefits of bitonic Top-K during score evaluation.
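The bandwidth argument can be checked with back-of-envelope arithmetic: a full table scan must read at least n · d · b bytes (n tuples, d query attributes, b bytes per attribute), so its time is bounded below by that volume divided by the bandwidth of wherever the data resides. The dataset size and both bandwidth figures below are hypothetical round numbers, not measurements from the paper.

```python
def scan_time_ms(n_tuples, n_attrs, bytes_per_attr, bandwidth_gb_s):
    """Lower bound (ms) on a bandwidth-bound scan of n_tuples x n_attrs values."""
    total_bytes = n_tuples * n_attrs * bytes_per_attr
    return total_bytes / (bandwidth_gb_s * 1e9) * 1e3

# Hypothetical setting: 2^28 tuples with 8 float32 query attributes.
N, D, B = 1 << 28, 8, 4
DEVICE_BW = 800.0  # GB/s, assumed device-memory bandwidth
PCIE_BW = 12.0     # GB/s, assumed effective PCIe x16 bandwidth

device = scan_time_ms(N, D, B, DEVICE_BW)
host = scan_time_ms(N, D, B, PCIE_BW)
print(f"device-resident: {device:.1f} ms, host-resident: {host:.1f} ms")
# Early termination that reads only 10% of the tuples shrinks the bound proportionally.
print(f"host-resident, 10% of tuples: {scan_time_ms(N // 10, D, B, PCIE_BW):.1f} ms")
```

Because the bound is linear in the number of tuples read, reducing the tuples touched is the main lever once data must cross PCIe.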

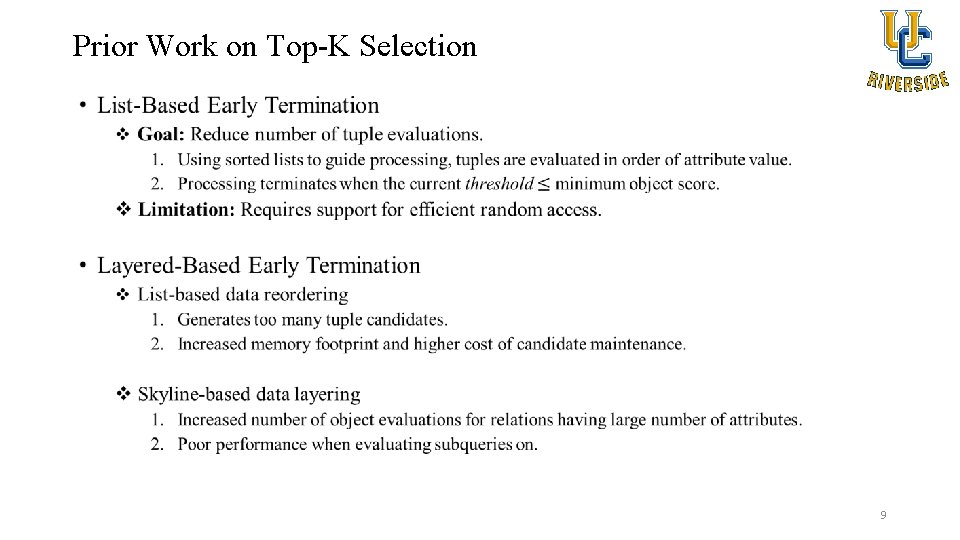

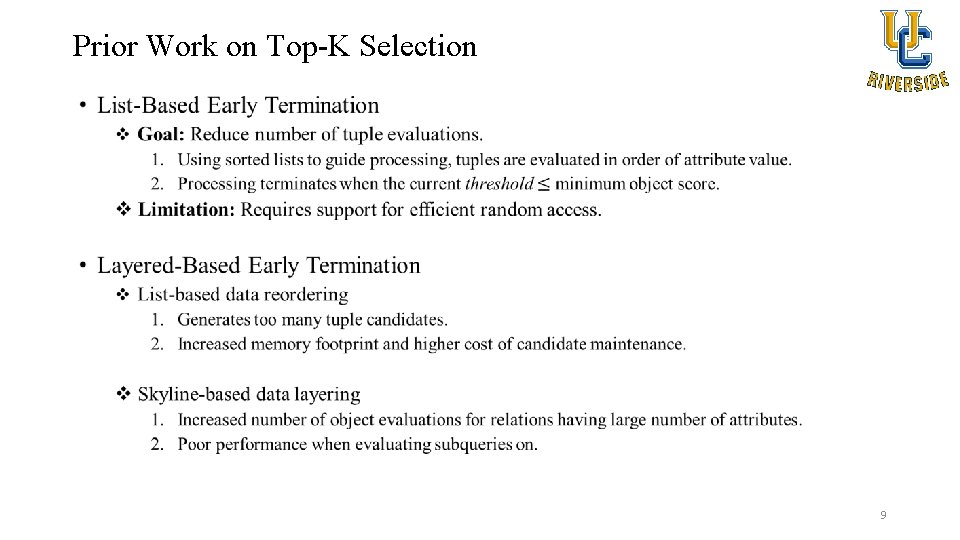

Prior Work on Top-K Selection

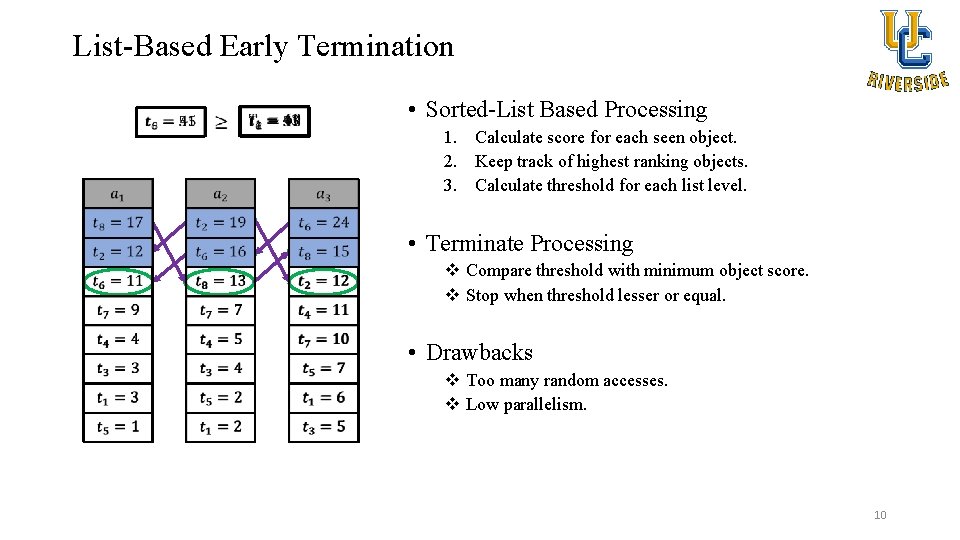

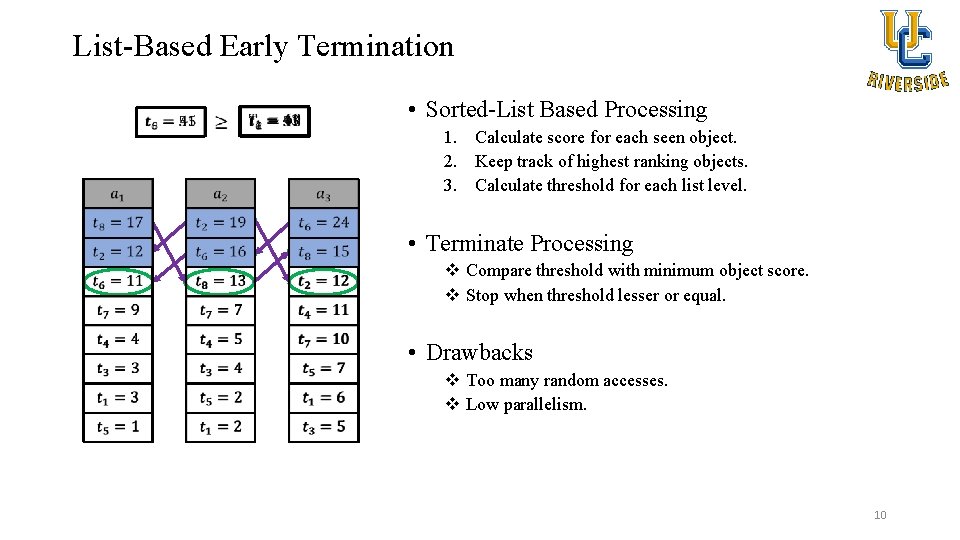

List-Based Early Termination
• Sorted-List Based Processing
  1. Calculate the score for each seen object.
  2. Keep track of the highest-ranking objects.
  3. Calculate the threshold for each list level.
• Terminate Processing
  - Compare the threshold with the minimum score among the current top-k objects.
  - Stop when the threshold is less than or equal to that score.
• Drawbacks
  - Too many random accesses.
  - Low parallelism.
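The sorted-list procedure above is essentially Fagin's Threshold Algorithm (TA). Below is a minimal sequential sketch, assuming one list per attribute sorted in descending order, a weighted-sum scoring function with non-negative weights (so it is monotone), and random access to any tuple's attributes through the in-memory `table`; the function name and return shape are hypothetical.

```python
import heapq

def threshold_algorithm(table, weights, k):
    """Threshold Algorithm sketch over per-attribute sorted lists.

    Returns (top-k as [(score, tuple_id)] in descending order, rounds of sorted access).
    Assumes non-negative weights so the weighted sum is monotone.
    """
    if k <= 0:
        return [], 0
    n, d = len(table), len(weights)

    def score(tid):
        # Random access: fetch every attribute of the tuple and aggregate.
        return sum(w * a for w, a in zip(weights, table[tid]))

    # One list per attribute: tuple ids sorted by that attribute, descending.
    lists = [sorted(range(n), key=lambda t, j=j: table[t][j], reverse=True)
             for j in range(d)]
    heap, seen = [], set()  # min-heap of (score, tid): the current top-k
    for depth in range(n):
        for j in range(d):
            tid = lists[j][depth]
            if tid in seen:
                continue
            seen.add(tid)
            s = score(tid)
            if len(heap) < k:
                heapq.heappush(heap, (s, tid))
            elif s > heap[0][0]:
                heapq.heapreplace(heap, (s, tid))
        # Threshold: the best score any still-unseen tuple could reach.
        threshold = sum(w * table[lists[j][depth]][j] for j, w in enumerate(weights))
        if len(heap) == k and threshold <= heap[0][0]:
            return sorted(heap, reverse=True), depth + 1
    return sorted(heap, reverse=True), n
```

On the car table with weights (1, 1) and k = 2, for example, it returns the Prius and XC60 rows after two rounds of sorted access, having scored only three of the six tuples. The per-tuple random accesses and the round-by-round dependency are exactly the drawbacks listed on the slide.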

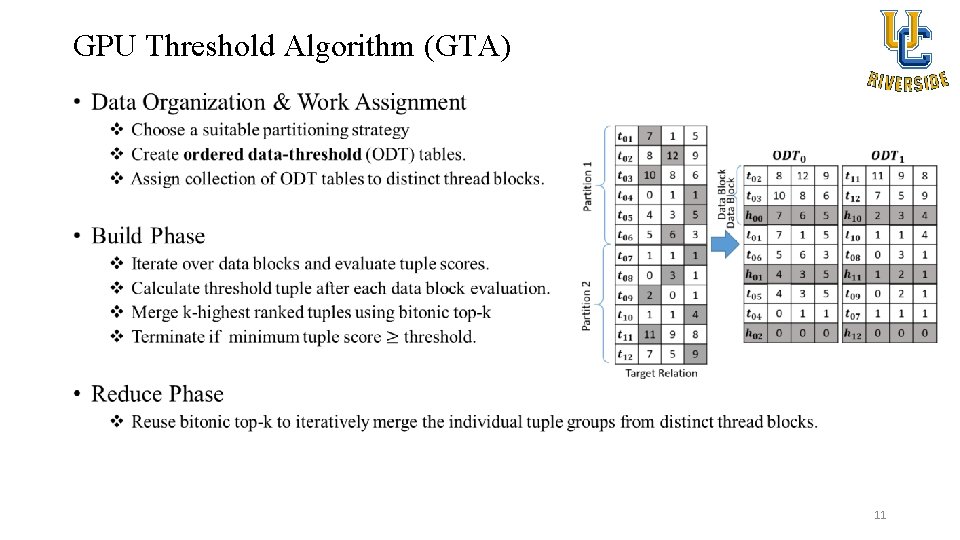

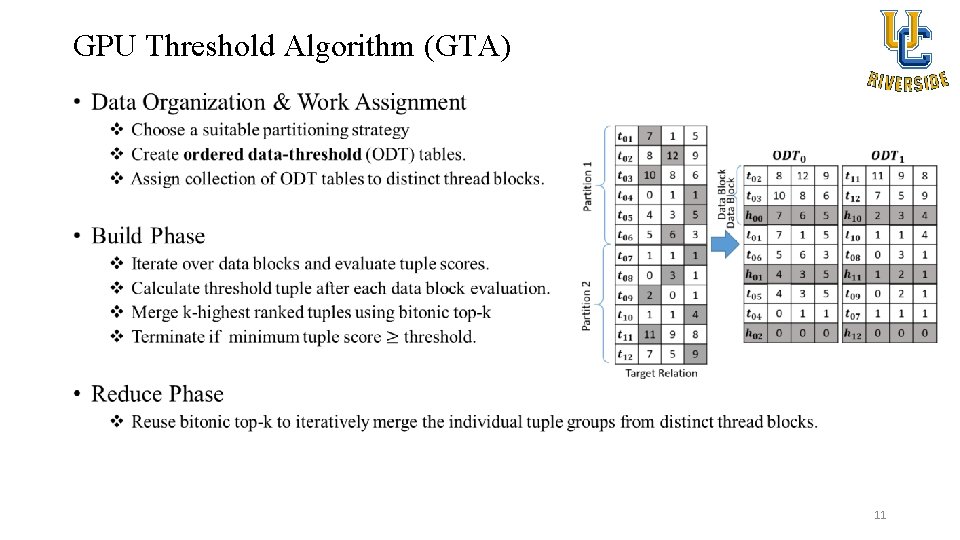

GPU Threshold Algorithm (GTA)

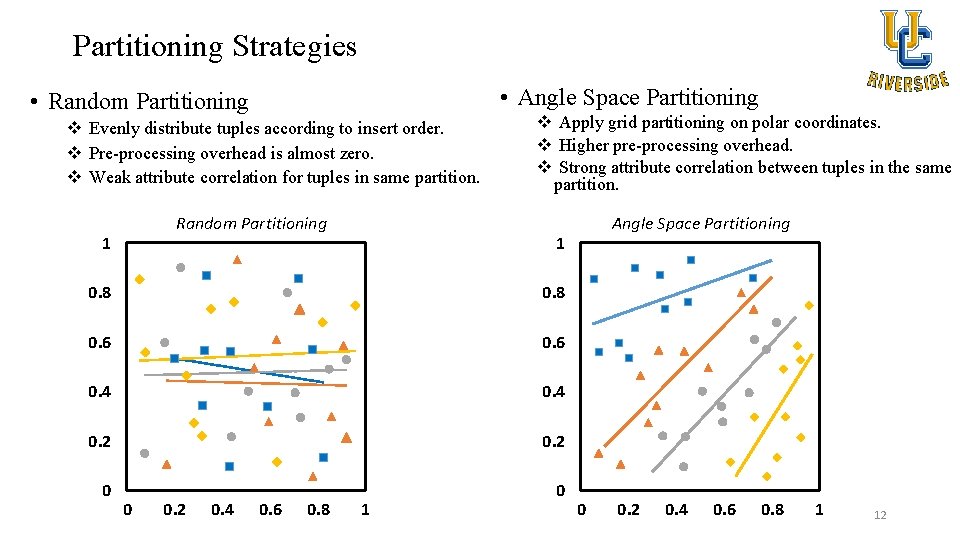

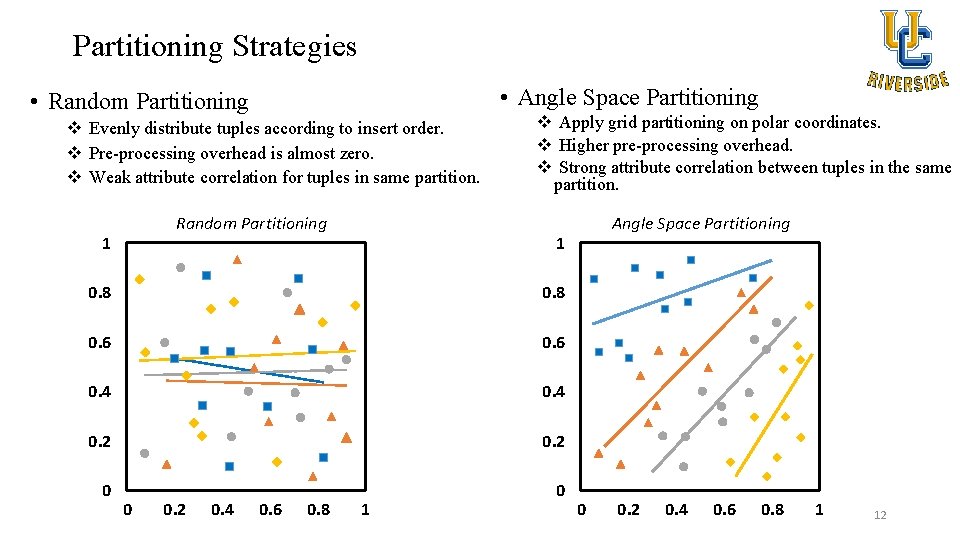

Partitioning Strategies
• Random Partitioning
  - Evenly distribute tuples according to insert order.
  - Pre-processing overhead is almost zero.
  - Weak attribute correlation between tuples in the same partition.
• Angle Space Partitioning
  - Apply grid partitioning on polar coordinates.
  - Higher pre-processing overhead.
  - Strong attribute correlation between tuples in the same partition.
(Figure: 2-D scatter plots over the unit square, panels "Random Partitioning" and "Angle Space Partitioning".)
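The two strategies can be sketched as functions that map a tuple to a partition id. The angle-space version assumes non-negative attributes, converts each d-dimensional tuple to hyperspherical angles φ_i = atan2(sqrt(x_{i+1}² + … + x_d²), x_i), each in [0, π/2], and applies an equal-width grid with `splits` cells per angle; the random version assigns tuples round-robin by insert position. The function names and parameters are hypothetical, and the exact grid used in the paper may differ.

```python
import math

def random_partition(index, num_parts):
    """Random partitioning sketch: spread tuples evenly by insert position."""
    return index % num_parts

def hyperspherical_angles(x):
    """Angles phi_1..phi_{d-1} of a non-negative d-dimensional point, each in [0, pi/2]."""
    angles = []
    for i in range(len(x) - 1):
        tail = math.sqrt(sum(v * v for v in x[i + 1:]))
        angles.append(math.atan2(tail, x[i]))
    return angles

def angle_partition(x, splits):
    """Angle-space partitioning sketch: equal-width grid over each angle."""
    cell = 0
    for phi in hyperspherical_angles(x):
        bucket = min(int(phi / (math.pi / 2) * splits), splits - 1)
        cell = cell * splits + bucket  # row-major cell id over the (d-1)-D grid
    return cell
```

Because the cell depends only on the angles, points on the same ray from the origin, such as (2, 1) and (0.4, 0.2), always share a partition, so each partition groups tuples with similar attribute ratios; this is one way to read the "strong attribute correlation" bullet.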

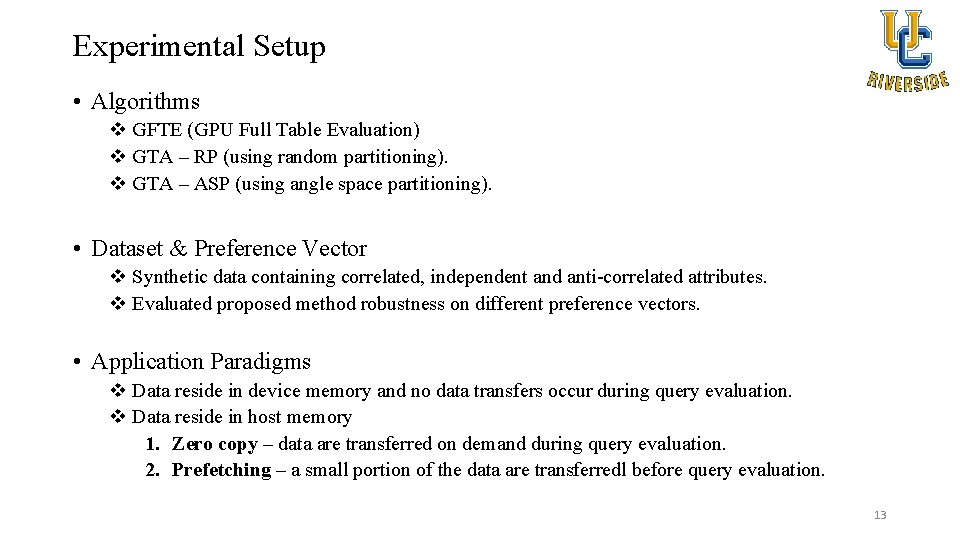

Experimental Setup
• Algorithms
  - GFTE (GPU Full Table Evaluation).
  - GTA-RP (using random partitioning).
  - GTA-ASP (using angle space partitioning).
• Dataset & Preference Vector
  - Synthetic data containing correlated, independent, and anti-correlated attributes.
  - Evaluate the robustness of the proposed methods on different preference vectors.
• Application Paradigms
  - Data reside in device memory, and no data transfers occur during query evaluation.
  - Data reside in host memory:
    1. Zero copy: data are transferred on demand during query evaluation.
    2. Prefetching: a small portion of the data is transferred before query evaluation.

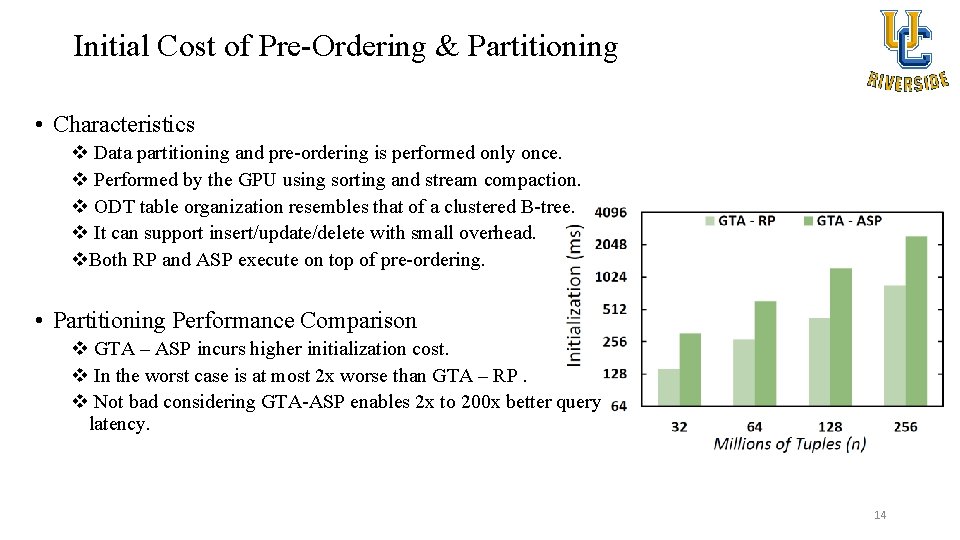

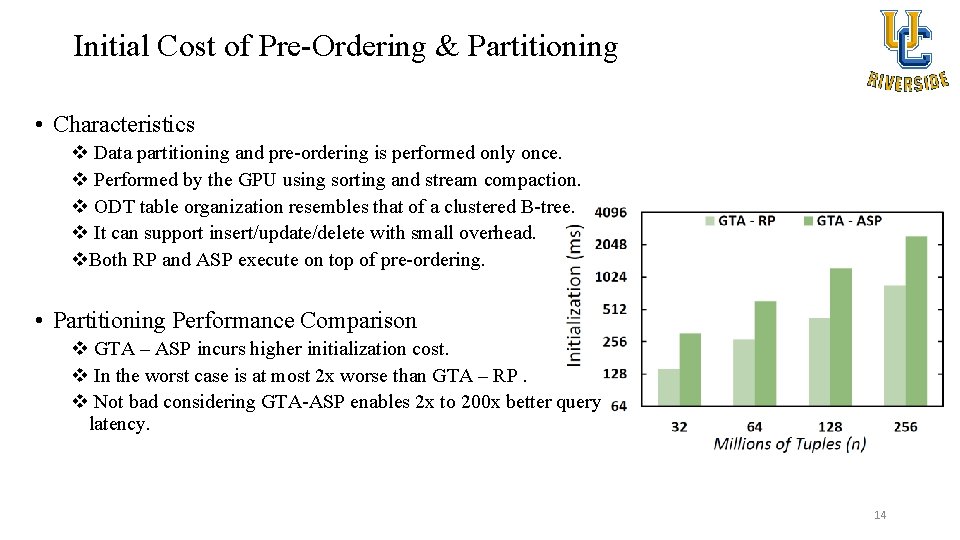

Initial Cost of Pre-Ordering & Partitioning
• Characteristics
  - Data partitioning and pre-ordering are performed only once.
  - They are performed by the GPU using sorting and stream compaction.
  - The ODT table organization resembles that of a clustered B-tree.
  - It can support inserts/updates/deletes with small overhead.
  - Both RP and ASP execute on top of pre-ordering.
• Partitioning Performance Comparison
  - GTA-ASP incurs a higher initialization cost.
  - In the worst case, its initialization cost is at most 2x that of GTA-RP.
  - This is acceptable, considering that GTA-ASP enables 2x to 200x better query latency.

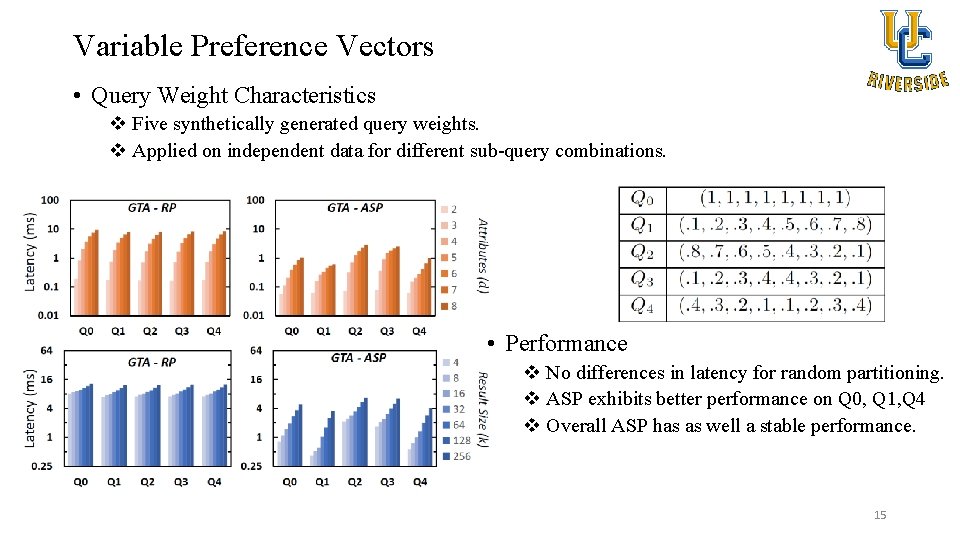

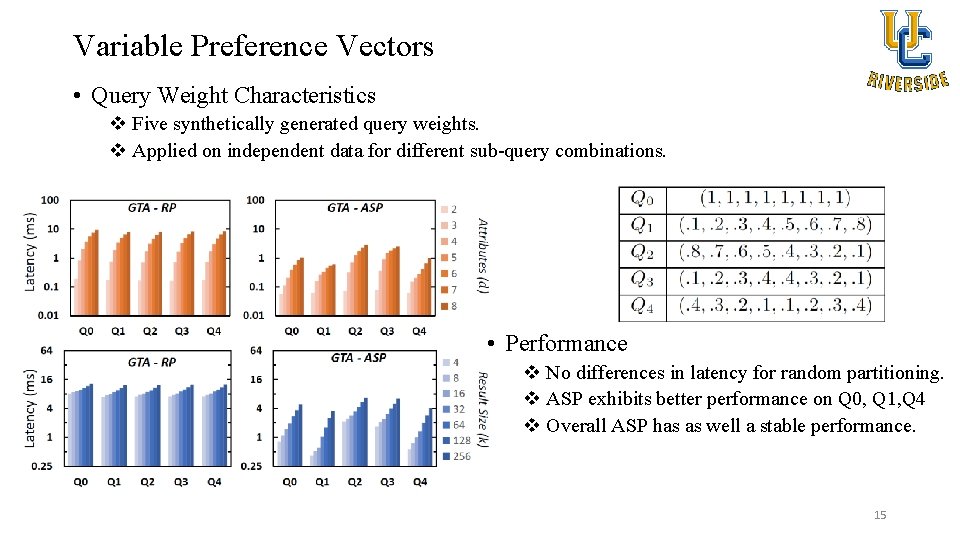

Variable Preference Vectors
• Query Weight Characteristics
  - Five synthetically generated query weights.
  - Applied on independent data for different sub-query combinations.
• Performance
  - No differences in latency for random partitioning.
  - ASP exhibits better performance on Q0, Q1, and Q4.
  - Overall, ASP also exhibits stable performance.

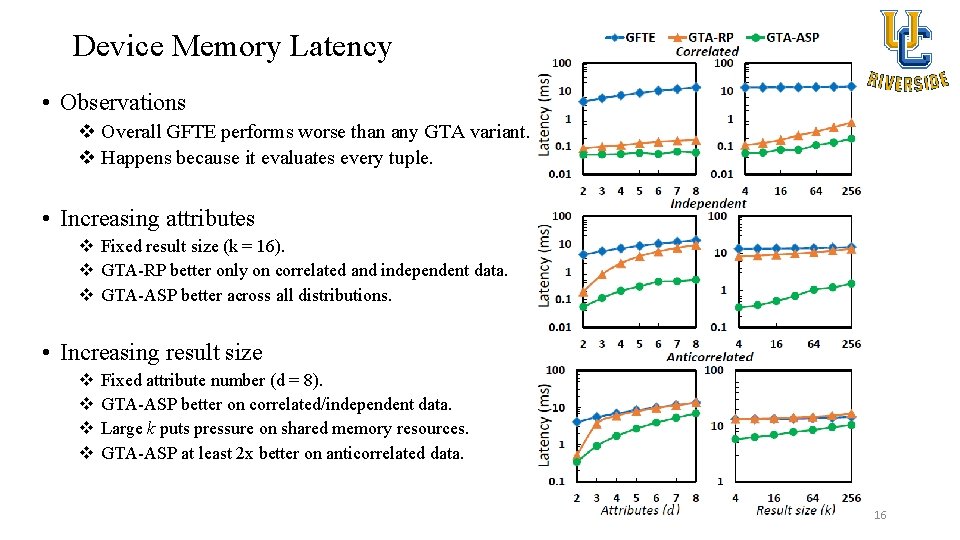

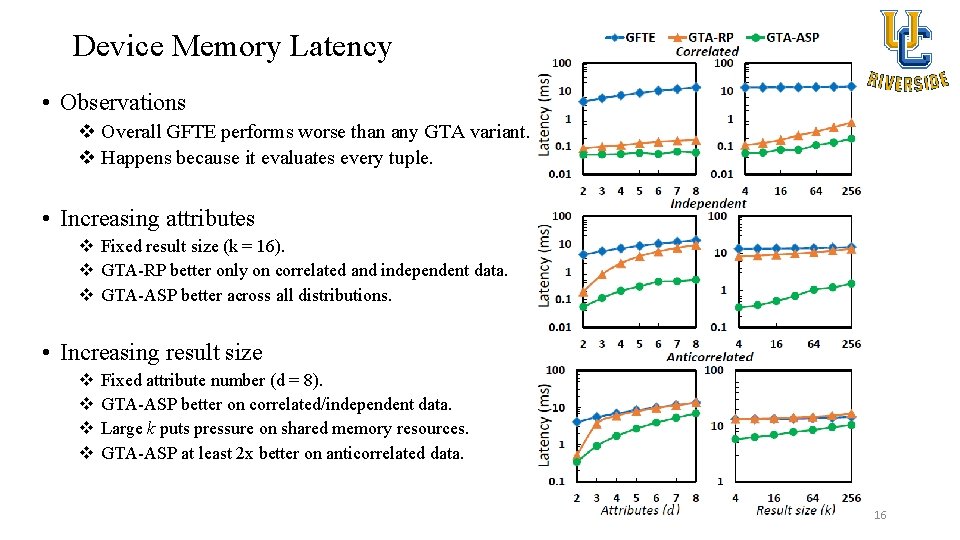

Device Memory Latency
• Observations
  - Overall, GFTE performs worse than any GTA variant.
  - This happens because it evaluates every tuple.
• Increasing attributes
  - Fixed result size (k = 16).
  - GTA-RP is better only on correlated and independent data.
  - GTA-ASP is better across all distributions.
• Increasing result size
  - Fixed number of attributes (d = 8).
  - GTA-ASP is better on correlated/independent data.
  - Large k puts pressure on shared memory resources.
  - GTA-ASP is at least 2x better on anti-correlated data.

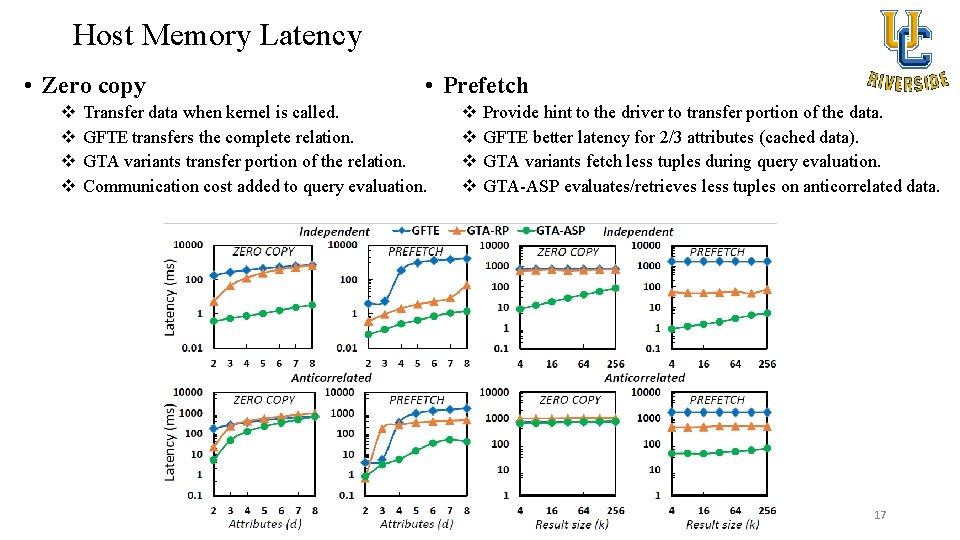

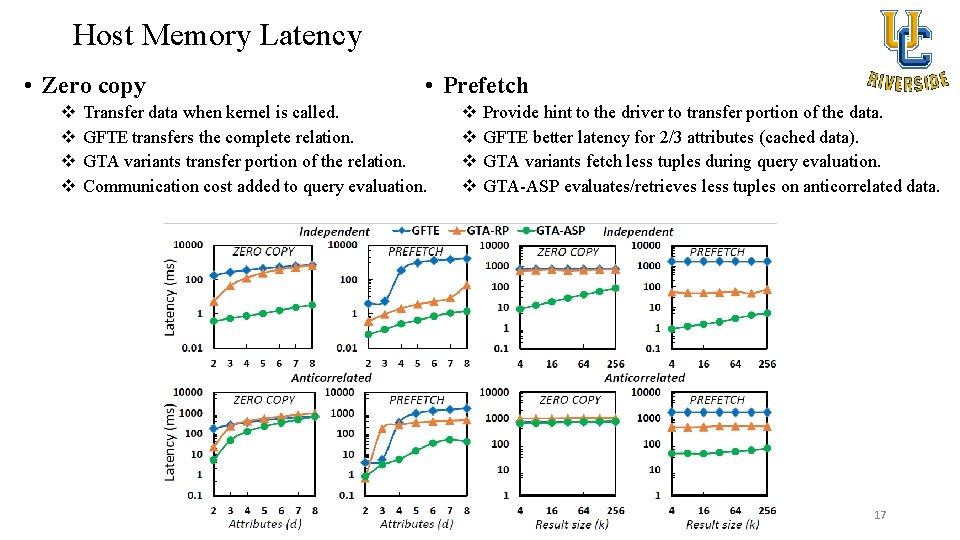

Host Memory Latency
• Zero copy
  - Data are transferred when the kernel is called.
  - GFTE transfers the complete relation.
  - GTA variants transfer only a portion of the relation.
  - Communication cost is added to query evaluation.
• Prefetch
  - Provide a hint to the driver to transfer a portion of the data.
  - GFTE achieves better latency for 2 or 3 attributes (cached data).
  - GTA variants fetch fewer tuples during query evaluation.
  - GTA-ASP evaluates/retrieves fewer tuples on anti-correlated data.

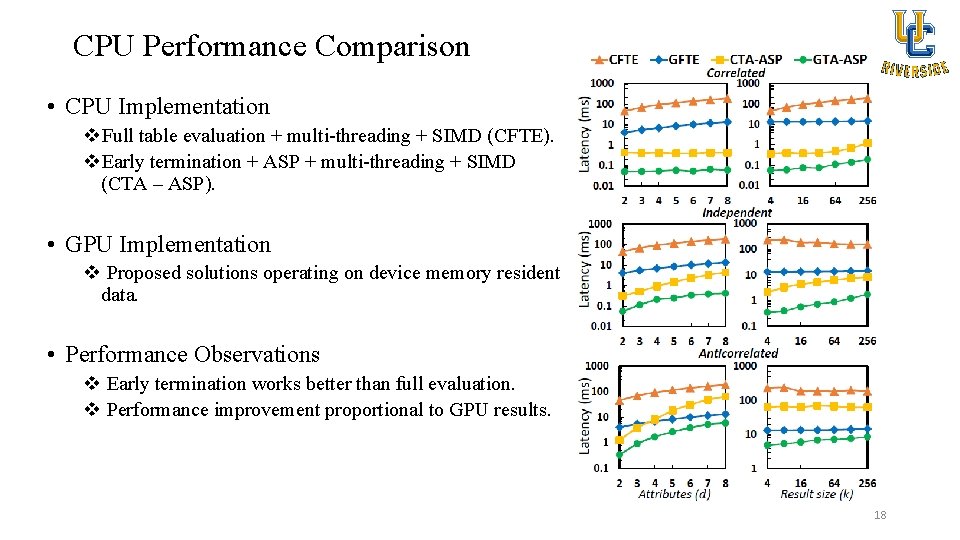

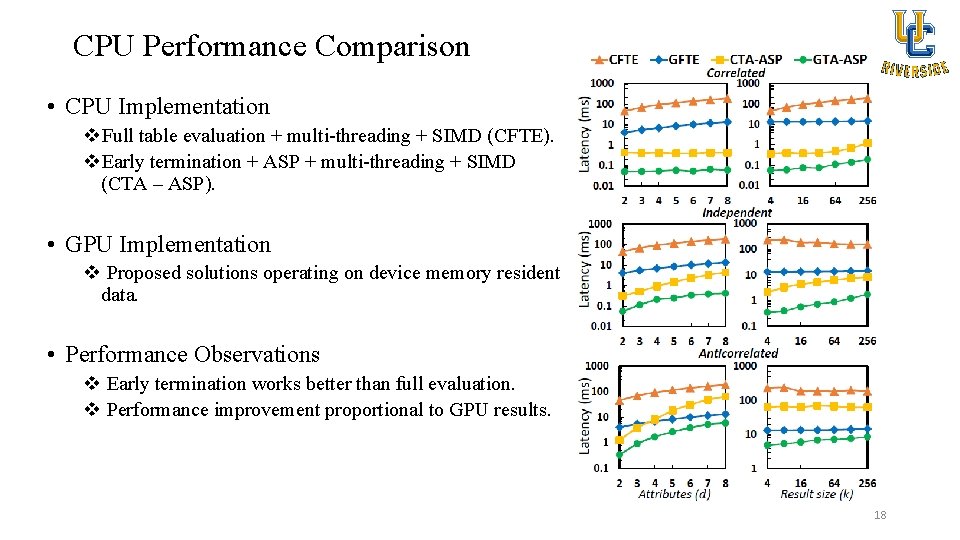

CPU Performance Comparison
• CPU Implementation
  - Full table evaluation + multi-threading + SIMD (CFTE).
  - Early termination + ASP + multi-threading + SIMD (CTA-ASP).
• GPU Implementation
  - Proposed solutions operating on device-memory-resident data.
• Performance Observations
  - Early termination works better than full evaluation.
  - The performance improvement is proportional to the GPU results.

Questions?

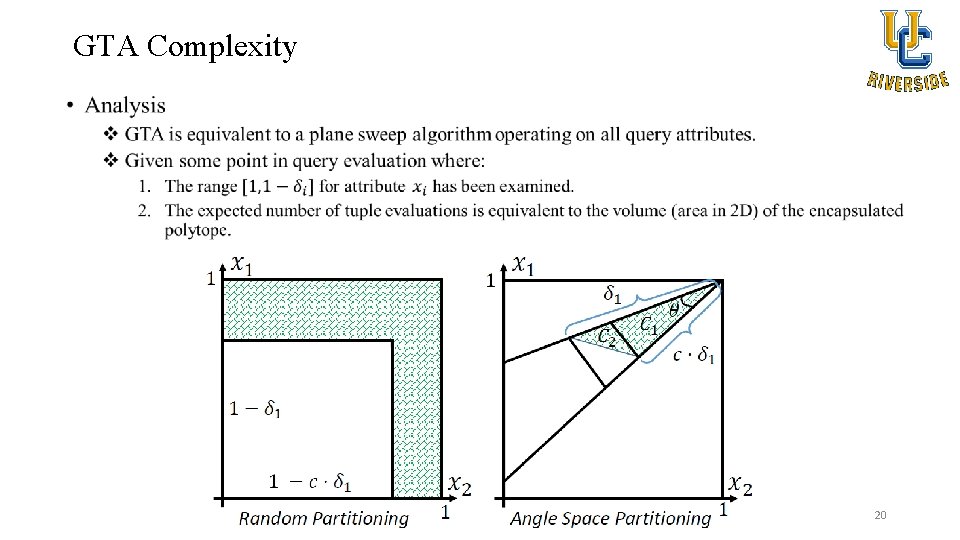

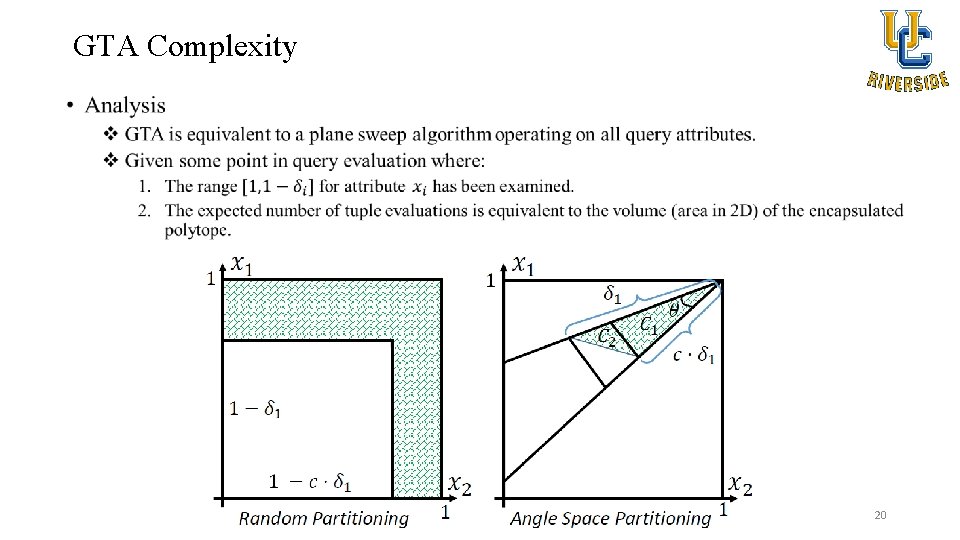

GTA Complexity

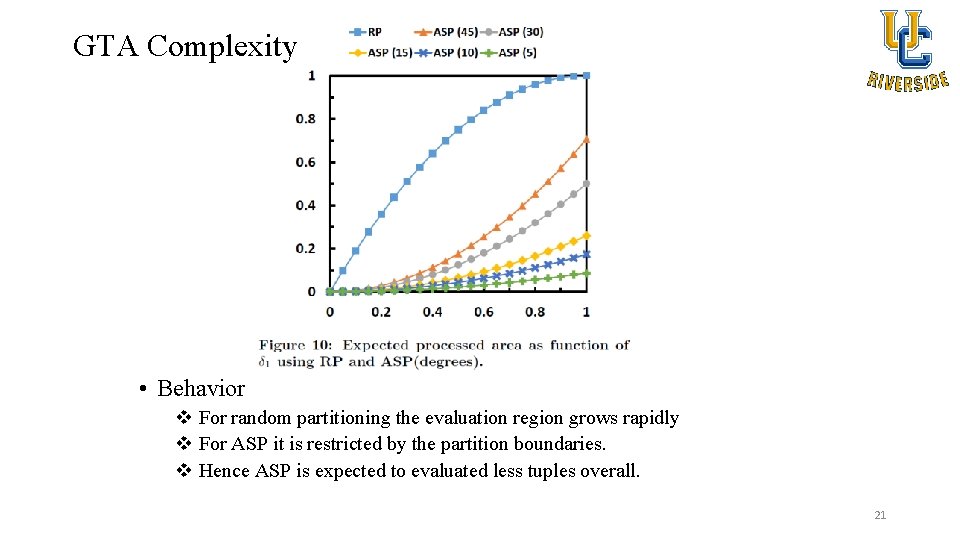

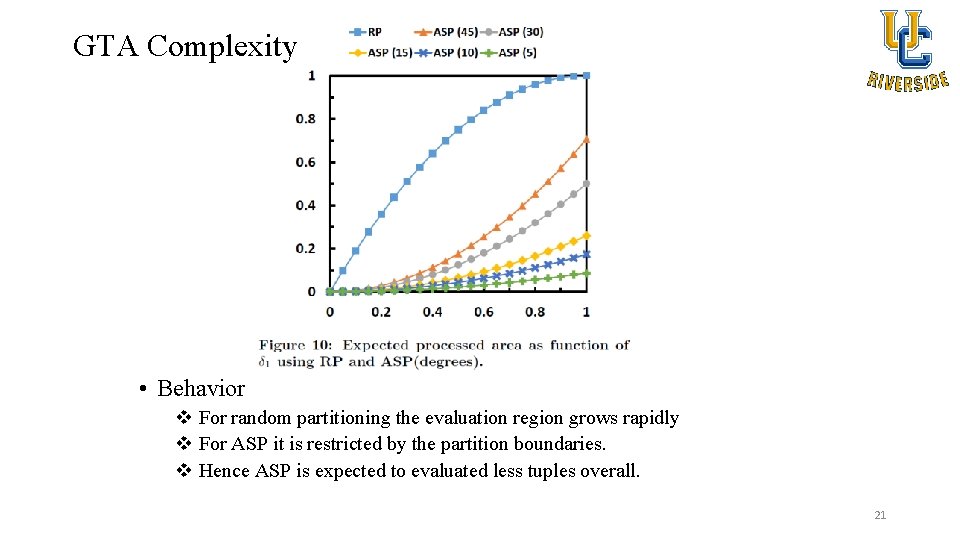

GTA Complexity
• Behavior
  - For random partitioning, the evaluation region grows rapidly.
  - For ASP, it is restricted by the partition boundaries.
  - Hence, ASP is expected to evaluate fewer tuples overall.