TopX: Efficient and Versatile Top-k Query Processing


TopX: Efficient and Versatile Top-k Query Processing for Text, Structured, and Semistructured Data. Ph.D. Defense, May 16th 2006. Martin Theobald, Max Planck Institute for Informatics. VLDB ’05

![An XML-IR Scenario (INEX IEEE)](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-2.jpg)

An XML-IR Scenario (INEX IEEE)

Query: //article[.//bib[about(.//item, “W3C”)]]//sec[about(.//, “XML retrieval”)]//par[about(.//, “native XML databases”)]

Figure: two example IEEE articles (“Current Approaches to XML Data Management” and “The Ontology Game”) with their title, sec, par, bib, and item elements, e.g., a bib item “XML-QL: A Query Language for XML.” (Proc. Query Languages Workshop, W3C, 1998) and a par “Native XML data base systems can store schemaless data…”. The figure highlights three challenges: RANKING, VAGUENESS, and PRUNING.

Outline
- Data & relevance scoring model
- Database schema & indexing
- TopX query processing
- Index access scheduling & probabilistic candidate pruning
- Dynamic query relaxation & expansion
- Experiments & conclusions


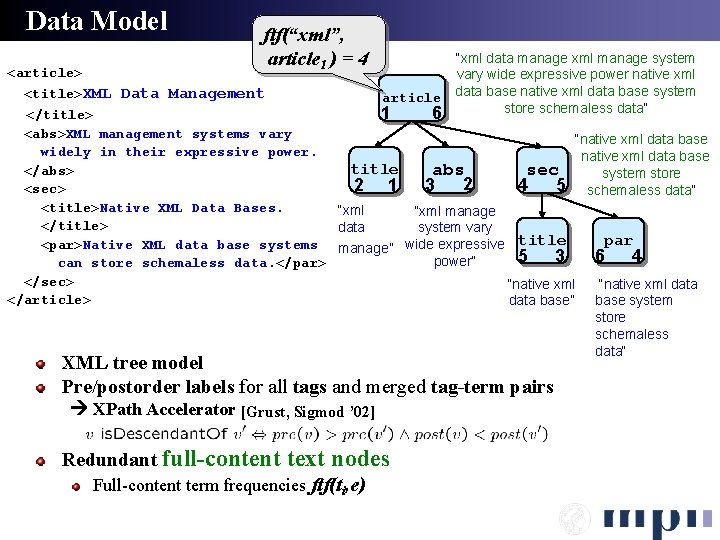

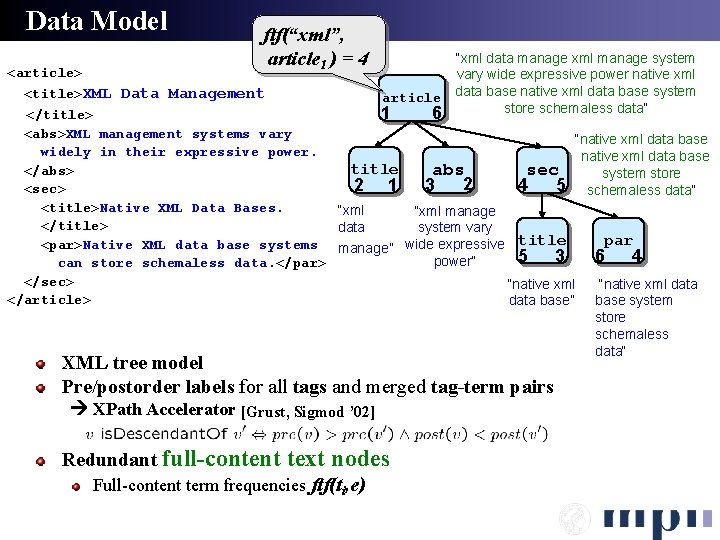

Data Model
- XML tree model
- Pre/postorder labels for all tags and merged tag-term pairs (XPath Accelerator [Grust, SIGMOD ’02])
- Redundant full-content text nodes
- Full-content term frequencies ftf(t_i, e), e.g., ftf(“xml”, article_1) = 4

Example document: `<article><title>XML Data Management</title><abs>XML management systems vary widely in their expressive power.</abs><sec><title>Native XML Data Bases.</title><par>Native XML data base systems can store schemaless data.</par></sec></article>`

Element tree with [pre, post] labels and (stemmed, stopword-free) full contents:
- article [1, 6]: “xml data manage xml manage system vary wide expressive power native xml data base native xml data base system store schemaless data”
- title [2, 1]: “xml data manage”
- abs [3, 2]: “xml manage system vary wide expressive power”
- sec [4, 5]: “native xml data base native xml data base system store schemaless data”
- title [5, 3]: “native xml data base”
- par [6, 4]: “native xml data base system store schemaless data”
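The labeling and counting described above can be illustrated with a short Python sketch. It is a simplification, not TopX code: it assigns pre/post ranks in one depth-first pass, uses the XPath Accelerator containment test, and computes full-content term frequencies over lowercase word tokens only (no stemming or stopword removal, which the slide applies). The function names are hypothetical.

```python
import re
import xml.etree.ElementTree as ET
from collections import Counter

# The example article from the slide (raw text, before stemming/stopword removal).
DOC = """<article>
  <title>XML Data Management</title>
  <abs>XML management systems vary widely in their expressive power.</abs>
  <sec>
    <title>Native XML Data Bases.</title>
    <par>Native XML data base systems can store schemaless data.</par>
  </sec>
</article>"""


def pre_post_labels(root):
    """Assign (pre, post) ranks to every element in one depth-first traversal."""
    labels = {}
    pre_counter = 0
    post_counter = 0

    def visit(node):
        nonlocal pre_counter, post_counter
        pre_counter += 1
        pre = pre_counter
        for child in node:
            visit(child)
        post_counter += 1
        labels[node] = (pre, post_counter)

    visit(root)
    return labels


def is_descendant(desc, anc, labels):
    """desc lies in anc's subtree iff pre(anc) < pre(desc) and post(desc) < post(anc)."""
    (pre_a, post_a), (pre_d, post_d) = labels[anc], labels[desc]
    return pre_a < pre_d and post_d < post_a


def ftf(term, element):
    """Full-content term frequency: occurrences of term anywhere below element."""
    tokens = re.findall(r"[a-z0-9]+", " ".join(element.itertext()).lower())
    return Counter(tokens)[term]


root = ET.fromstring(DOC)
labels = pre_post_labels(root)
for elem in root.iter():
    print(elem.tag, labels[elem], ftf("xml", elem))
```

The loop prints the same [pre, post] labels as the tree above and ftf(“xml”, ·) = 4 for the article, 2 for the sec, and 1 for each of the remaining elements.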

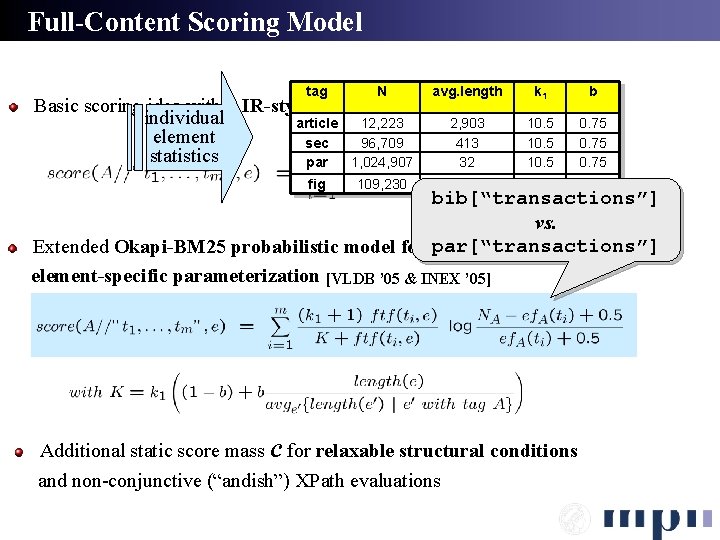

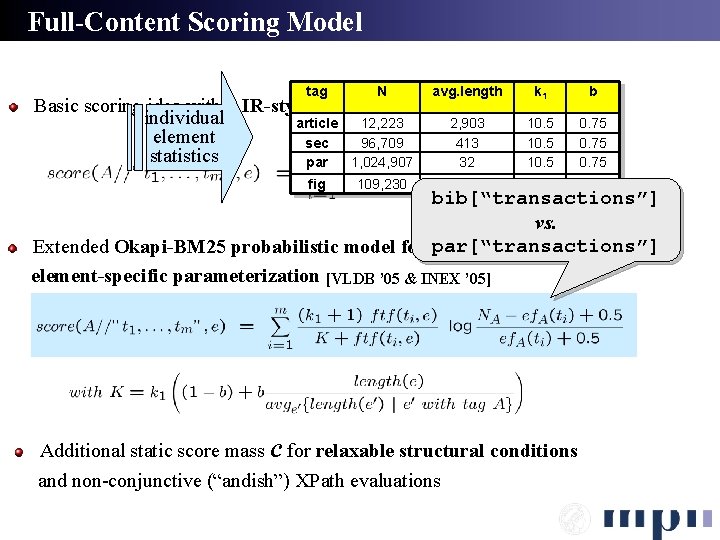

Full-Content Scoring Model
- Basic scoring idea within the IR-style family of TF*IDF ranking functions
- Individual element statistics per tag, e.g., bib[“transactions”] vs. par[“transactions”]
- Extended Okapi BM25 probabilistic model for XML with element-specific parameterization [VLDB ’05 & INEX ’05]
- Additional static score mass c for relaxable structural conditions and non-conjunctive (“andish”) XPath evaluations

| tag | N | avg. length | k1 | b |
|---|---|---|---|---|
| fig | 109,230 | 13 | 10.5 | 0.75 |
| article | 12,223 | 2,903 | 10.5 | 0.75 |
| sec | 96,709 | 413 | 10.5 | 0.75 |
| par | 1,024,907 | 32 | 10.5 | 0.75 |
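To show what element-specific parameterization means in practice, here is a minimal sketch that plugs the per-tag statistics from the table into a standard Okapi BM25 term weight. The exact extended formula of the cited paper is not on the slide, so this is an assumption: `ef` (the number of elements of that tag containing the term) stands in for document frequency, and scores are not normalized to [0, 1] as in the index lists.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TagStats:
    n: int              # N: number of elements with this tag in the collection
    avg_length: float   # average full-content length of those elements
    k1: float = 10.5
    b: float = 0.75


# Per-tag statistics from the slide (INEX IEEE collection).
STATS = {
    "fig": TagStats(109_230, 13),
    "article": TagStats(12_223, 2_903),
    "sec": TagStats(96_709, 413),
    "par": TagStats(1_024_907, 32),
}


def element_bm25(tag, ftf, length, ef):
    """BM25-style weight of one tag-term pair for one element (hypothetical helper).

    ftf    -- full-content term frequency of the term in the element
    length -- full-content length of the element
    ef     -- number of elements with this tag that contain the term
    """
    s = STATS[tag]
    # Length normalization relative to the average length of this tag only.
    norm = s.k1 * ((1 - s.b) + s.b * length / s.avg_length)
    tf_part = (s.k1 + 1) * ftf / (norm + ftf)
    idf = math.log((s.n - ef + 0.5) / (ef + 0.5))
    return tf_part * idf


# The same raw evidence is weighted differently under different tags.
print(element_bm25("par", ftf=2, length=32, ef=1_000))
print(element_bm25("sec", ftf=2, length=32, ef=1_000))
```

Keeping N and the average length per tag is what lets a 32-term par and a 413-term sec be judged against their own peers rather than against whole documents.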

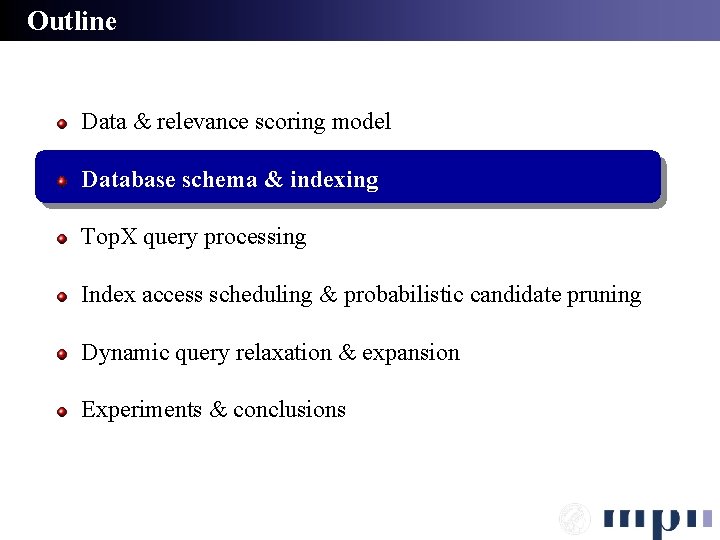


![Inverted Block-Index for Content & Structure](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-8.jpg)

Inverted Block-Index for Content & Structure
- Combined inverted index over merged tag-term pairs (on redundant element full-contents)
- Sequential block-scans (sorted access, SA)
  - Group elements in descending order of (maxscore, docid) per list
  - Block-scan all elements per doc for a given (tag, term) key
- Stored as inverted files or database tables (two B+-tree indexes over the full range of attributes)

sec[“xml”]:

| eid | docid | score | pre | post | maxscore |
|---|---|---|---|---|---|
| 46 | 2 | 0.9 | 2 | 15 | 0.9 |
| 9 | 2 | 0.5 | 10 | 8 | 0.9 |
| 171 | 5 | 0.85 | 1 | 20 | 0.85 |
| 84 | 3 | 0.1 | 1 | 12 | 0.1 |

title[“native”]:

| eid | docid | score | pre | post | maxscore |
|---|---|---|---|---|---|
| 216 | 17 | 0.9 | 2 | 15 | 0.9 |
| 72 | 3 | 0.8 | 14 | 10 | 0.8 |
| 51 | 2 | 0.5 | 4 | 12 | 0.5 |
| 671 | 31 | 0.4 | 12 | 23 | 0.4 |

par[“retrieval”]:

| eid | docid | score | pre | post | maxscore |
|---|---|---|---|---|---|
| 3 | 1 | 1.0 | 1 | 21 | 1.0 |
| 28 | 2 | 0.8 | 8 | 14 | 0.8 |
| 182 | 5 | 0.75 | 3 | 7 | 0.75 |
| 96 | 4 | 0.75 | 6 | 4 | 0.75 |
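The block ordering can be reproduced with a few lines of Python. This is an in-memory sketch under stated assumptions, not the B+-tree layout TopX uses: a document block's maxscore is the best element score in it, blocks are sorted by descending (maxscore, docid), and elements inside a block are assumed to be kept by descending score. `build_block_list` and `sorted_access` are hypothetical names.

```python
from collections import defaultdict
from typing import NamedTuple


class Posting(NamedTuple):
    eid: int
    docid: int
    score: float
    pre: int
    post: int


def build_block_list(postings):
    """Group one (tag, term) list into per-document blocks ordered by
    descending (maxscore, docid)."""
    blocks = defaultdict(list)
    for p in postings:
        blocks[p.docid].append(p)
    ordered = []
    for docid, elems in blocks.items():
        elems.sort(key=lambda p: p.score, reverse=True)   # assumption: best element first
        ordered.append((elems[0].score, docid, elems))     # (maxscore, docid, block)
    ordered.sort(key=lambda blk: (blk[0], blk[1]), reverse=True)
    return ordered


def sorted_access(block_list):
    """Sequential block-scan: yield one whole document block per step."""
    for maxscore, docid, elems in block_list:
        yield docid, maxscore, elems


# sec["xml"] postings from the slide.
sec_xml = [
    Posting(46, 2, 0.9, 2, 15),
    Posting(9, 2, 0.5, 10, 8),
    Posting(171, 5, 0.85, 1, 20),
    Posting(84, 3, 0.1, 1, 12),
]
for docid, maxscore, elems in sorted_access(build_block_list(sec_xml)):
    print(docid, maxscore, [p.eid for p in elems])
```

Scanning whole blocks means one sorted access delivers every sec[“xml”] element of document 2 at once (eids 46 and 9), which is what the per-doc evaluation of structural conditions needs.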

![Navigational Index](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-9.jpg)

Navigational Index
- Additional element directory
  - Random accesses (RA) on a B+-tree index using (docid, tag) as key
  - Carefully scheduled probes
- Schema-oblivious indexing & querying
  - Non-schematic, heterogeneous data sources (no DTD required)
  - Supports full NEXI syntax
  - Supports all 13 XPath axes (+ level)

Element directory for the structural condition sec (static score C = 1.0):

| eid | docid | pre | post |
|---|---|---|---|
| 46 | 2 | 2 | 15 |
| 9 | 2 | 10 | 8 |
| 171 | 5 | 1 | 20 |
| 84 | 3 | 1 | 12 |

The content lists title[“native”] and par[“retrieval”] are the same as in the inverted block-index above and are read by sorted access (SA).
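A dictionary keyed by (docid, tag) is enough to sketch how a random access on the element directory answers a structural question. This toy class is an assumption standing in for the B+-tree; `ElementDirectory`, `lookup`, and `descendants` are hypothetical names. The par row (eid 28, doc 2, [8, 14]) is taken from par[“retrieval”] above.

```python
from bisect import bisect_left
from collections import defaultdict


class ElementDirectory:
    """Toy element directory: (docid, tag) -> elements sorted by pre rank."""

    def __init__(self, rows):
        self._index = defaultdict(list)
        for eid, docid, tag, pre, post in rows:
            self._index[(docid, tag)].append((pre, post, eid))
        for entries in self._index.values():
            entries.sort()

    def lookup(self, docid, tag):
        """Random access: all elements with this tag in this document."""
        return self._index.get((docid, tag), [])

    def descendants(self, docid, tag, anc_pre, anc_post):
        """eids of tag elements inside the subtree labeled (anc_pre, anc_post)."""
        entries = self.lookup(docid, tag)
        start = bisect_left(entries, (anc_pre + 1,))      # first entry with pre > anc_pre
        return [eid for pre, post, eid in entries[start:] if post < anc_post]


directory = ElementDirectory([
    (46, 2, "sec", 2, 15), (9, 2, "sec", 10, 8),
    (171, 5, "sec", 1, 20), (84, 3, "sec", 1, 12),
    (28, 2, "par", 8, 14),
])
print(directory.lookup(2, "sec"))
print(directory.descendants(2, "par", 2, 15))   # par elements below sec 46
```

Because entries are sorted by pre, the scan can skip everything preceding the ancestor; the post filter then removes elements that follow it.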

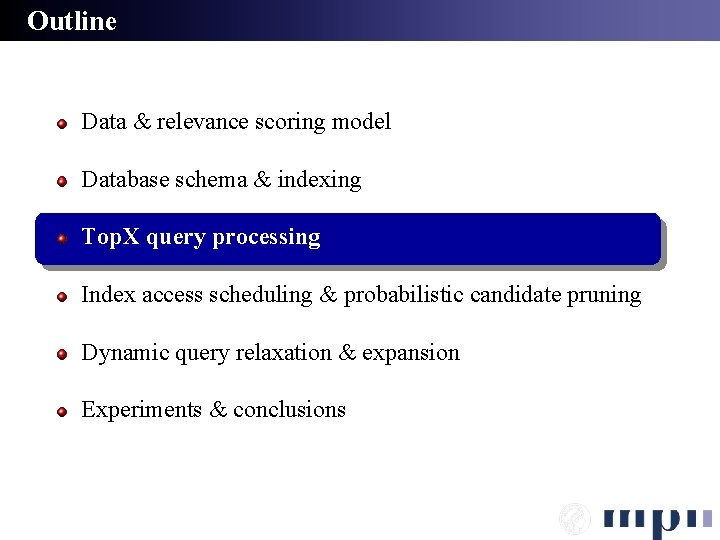


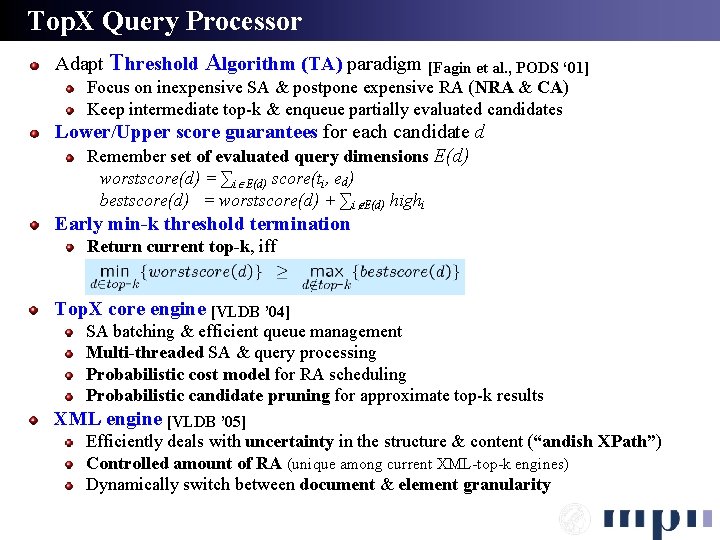

TopX Query Processor
- Adapt the Threshold Algorithm (TA) paradigm [Fagin et al., PODS ’01]
  - Focus on inexpensive sorted accesses (SA) and postpone expensive random accesses (RA) (NRA & CA)
  - Keep an intermediate top-k and enqueue partially evaluated candidates
  - Lower/upper score guarantees for each candidate d, remembering the set of evaluated query dimensions E(d):
    - $\mathrm{worstscore}(d) = \sum_{i \in E(d)} \mathrm{score}(t_i, e_d)$
    - $\mathrm{bestscore}(d) = \mathrm{worstscore}(d) + \sum_{i \notin E(d)} \mathrm{high}_i$, where $\mathrm{high}_i$ is the score at the current scan position of list i
  - Early min-k threshold termination: with min-k the worstscore of the current rank-k result, return the current top-k iff $\mathrm{bestscore}(d) \le \text{min-}k$ for every candidate d outside the top-k
- TopX core engine [VLDB ’04]
  - SA batching & efficient queue management
  - Multi-threaded SA & query processing
  - Probabilistic cost model for RA scheduling
  - Probabilistic candidate pruning for approximate top-k results
- XML engine [VLDB ’05]
  - Efficiently deals with uncertainty in the structure & content (“andish XPath”)
  - Controlled amount of RA (unique among current XML top-k engines)
  - Dynamically switches between document & element granularity

![TopX Query Processing By Example (NRA)](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-12.jpg)

TopX Query Processing By Example (NRA)

Figure: an NRA run computing the top-2 results over the block-index lists sec[“xml”], title[“native”], and par[“retrieval”]. Each batch of sorted accesses updates the worstscore/bestscore bounds of the candidates in the queue (doc 1, doc 2, doc 3, doc 5, doc 17, and a pseudo-document with worstscore 0.0 for not-yet-seen documents), while the min-2 threshold rises from 0.0 to 1.6. Final top-2: doc 2 (worstscore 2.2) and doc 5 (worstscore 1.6).
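The NRA loop with worstscore/bestscore bounds and min-k termination from the query-processor slide can be sketched in Python and replayed on this example. It is a minimal sketch, not the TopX engine: it assumes sum aggregation, round-robin sorted access with batch size 1, and one score per document and list (the document's best-matching element); `nra_top_k` is a hypothetical name.

```python
from collections import defaultdict


def nra_top_k(lists, k):
    """No-Random-Access top-k with sum aggregation over score-sorted lists.

    lists -- one list per query dimension, each [(doc, score), ...] sorted by
             descending score
    Returns the top-k (doc, worstscore) pairs once no other candidate,
    seen or unseen, can still exceed min-k.
    """
    m = len(lists)
    seen = defaultdict(dict)                  # doc -> {dimension i: score}, i.e. E(d)
    high = [lst[0][1] if lst else 0.0 for lst in lists]
    pos = [0] * m
    while True:
        progressed = False
        for i, lst in enumerate(lists):       # one round-robin batch of sorted accesses
            if pos[i] < len(lst):
                doc, score = lst[pos[i]]
                pos[i] += 1
                seen[doc][i] = score
                high[i] = score               # unseen entries of list i score at most this
                progressed = True
            else:
                high[i] = 0.0                 # list exhausted
        worst = {d: sum(s.values()) for d, s in seen.items()}
        best = {d: worst[d] + sum(high[i] for i in range(m) if i not in seen[d])
                for d in seen}
        ranked = sorted(seen, key=lambda d: (-worst[d], d))
        top = ranked[:k]
        min_k = worst[top[-1]] if len(top) == k else 0.0
        # Best score any candidate outside the top-k (or any unseen doc) could still reach.
        threshold = max([best[d] for d in ranked[k:]] + [sum(high)])
        if not progressed or (len(top) == k and threshold <= min_k):
            return [(d, worst[d]) for d in top]


# Per-document best scores of the three example lists.
sec_xml = [("doc2", 0.9), ("doc5", 0.85), ("doc3", 0.1)]
title_native = [("doc17", 0.9), ("doc3", 0.8), ("doc2", 0.5), ("doc31", 0.4)]
par_retrieval = [("doc1", 1.0), ("doc2", 0.8), ("doc5", 0.75), ("doc4", 0.75)]
print(nra_top_k([sec_xml, title_native, par_retrieval], k=2))
```

Under these simplifications the run returns doc 2 (2.2) and doc 5 (1.6), the same top-2 as the example; the real engine additionally interleaves scheduled random accesses and probabilistic pruning.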

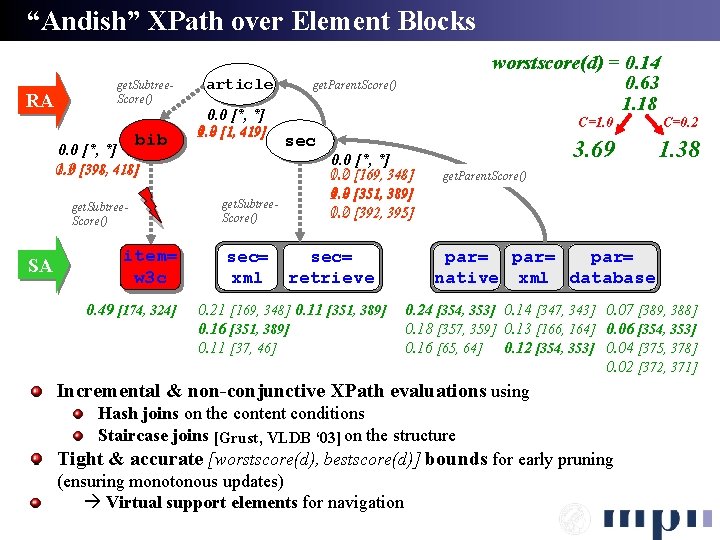

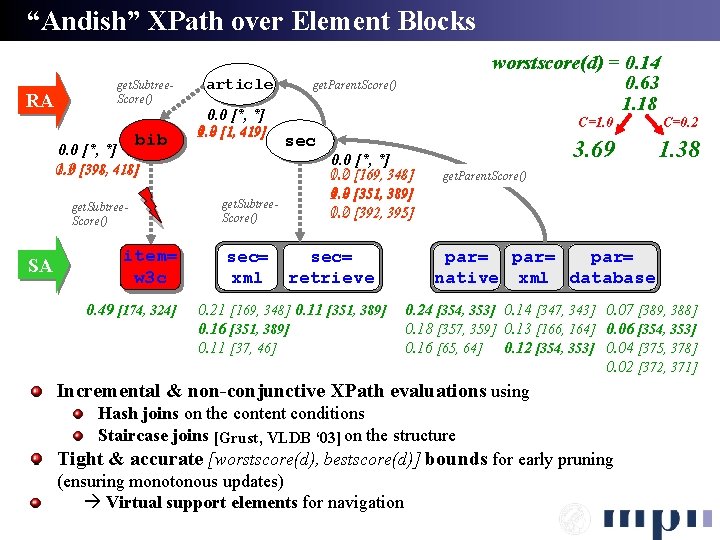

“Andish” XPath over Element Blocks
- Incremental & non-conjunctive XPath evaluations using
  - hash joins on the content conditions
  - staircase joins [Grust, VLDB ’03] on the structure
- Tight & accurate [worstscore(d), bestscore(d)] bounds for early pruning (ensuring monotonic updates)
- Virtual support elements for navigation

Figure: element blocks of one candidate document for the conditions article, bib, item=“w3c”, sec=“xml retrieve”, and par=“native xml database”, each element shown with its score and [pre, post] labels. Sorted accesses (SA) deliver content blocks, random accesses (RA) resolve structure via getSubtreeScore() and getParentScore(), and static structural scores (C = 1.0, C = 0.2) are added as conditions get resolved, so worstscore(d) grows incrementally.
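The “andish” idea, that an unmatched condition lowers a score instead of eliminating the element, can be shown with a small Python sketch. It is an assumption-laden simplification: a nested-loop containment check via pre/post labels stands in for the staircase and hash joins named above, the data is made up, and `andish_score` is a hypothetical name.

```python
from typing import NamedTuple


class Elem(NamedTuple):
    pre: int
    post: int
    score: float   # content score of the element for its own tag-term condition


def contains(anc, desc):
    """Pre/post containment: desc lies in the subtree of anc."""
    return anc.pre < desc.pre and desc.post < anc.post


def andish_score(targets, descendant_conditions):
    """Best score of a document for a target step with descendant conditions.

    Each target element earns its own score plus, per condition, the score of
    its best-matching descendant; an unmatched condition adds 0.0 instead of
    discarding the target, which makes the evaluation non-conjunctive.
    """
    best = 0.0
    for t in targets:
        total = t.score
        for matches in descendant_conditions:
            total += max((m.score for m in matches if contains(t, m)), default=0.0)
        best = max(best, total)
    return best


# Hypothetical sec="xml retrieve" targets and par="native xml database" matches.
secs = [Elem(2, 10, 0.5), Elem(11, 20, 0.2)]
pars = [Elem(3, 4, 0.3), Elem(12, 13, 0.7)]
print(andish_score(secs, [pars]))
```

A strictly conjunctive evaluator would drop a sec without a matching par; here such a sec still contributes its own 0.5.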

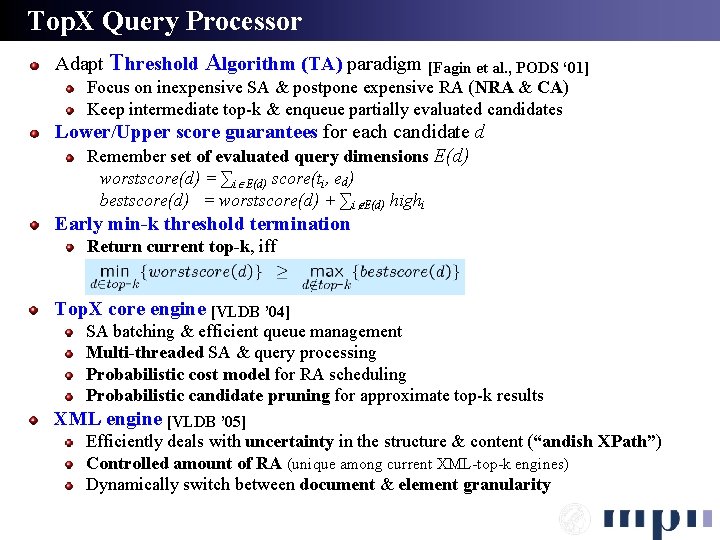


![Random Access Scheduling – Minimal Probing](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-15.jpg)

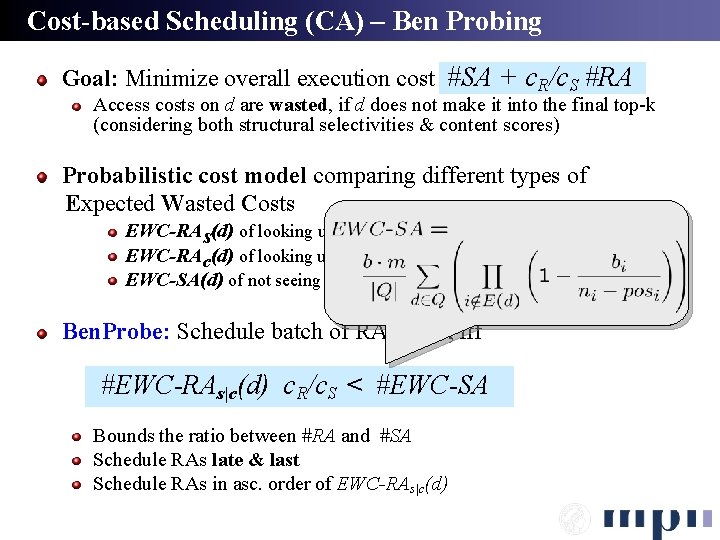

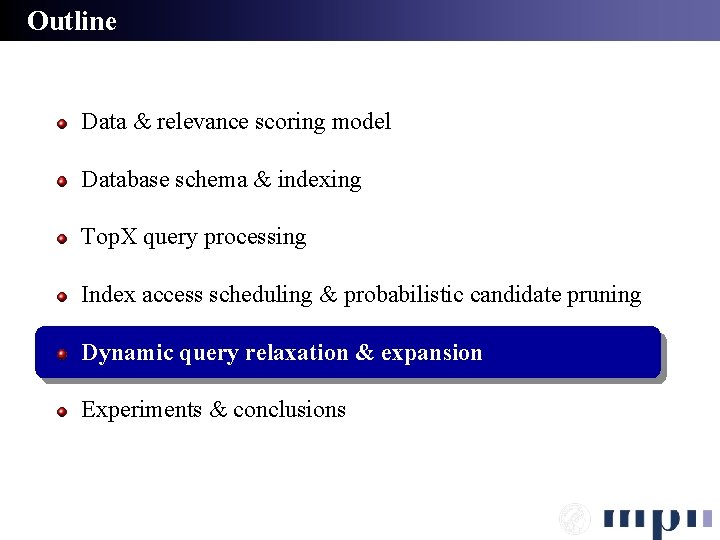

Random Access Scheduling – Minimal Probing
- MinProbe: schedule RAs only for the most promising candidates
- Extends “Expensive Predicates & Minimal Probing” [Chang & Hwang, SIGMOD ’02]
- Schedule a batch of RAs on d only iff $\mathrm{worstscore}(d) + r_d \cdot c > \text{min-}k$, where worstscore(d) is the evaluated content- and structure-related score, $r_d \cdot c$ is the static score mass of d's unresolved structural conditions, and min-k is the worstscore of the rank-k result

Figure: element blocks of one candidate (article 1.0 [1, 419], bib 1.0 [398, 418], sec 1.0 [169, 348], item=“w3c” 0.49 [174, 324], sec=“xml retrieve”, par=“native xml database”), showing which structural conditions are resolved by RA and which content conditions by SA.
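The MinProbe test translates directly into a filter over the candidate queue. This Python sketch uses made-up candidates, assumes r_d counts d's unresolved structural conditions, and additionally orders the chosen candidates by their optimistic score, which is an assumption rather than something the slide states. `min_probe` is a hypothetical name.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    doc: str
    worstscore: float   # evaluated content- and structure-related score
    unresolved: int     # r_d: number of still unresolved structural conditions


def min_probe(candidates, min_k, c):
    """Candidates worth a batch of random accesses, most promising first.

    d qualifies only if worstscore(d) plus the static score mass r_d * c of
    its unresolved structural conditions exceeds the current min-k.
    """
    chosen = [d for d in candidates if d.worstscore + d.unresolved * c > min_k]
    return sorted(chosen, key=lambda d: d.worstscore + d.unresolved * c, reverse=True)


queue = [Candidate("A", 1.2, 1), Candidate("B", 0.9, 0),
         Candidate("C", 0.7, 1), Candidate("D", 0.3, 1)]
print([d.doc for d in min_probe(queue, min_k=1.6, c=1.0)])
```

Candidates B and D are skipped: even if every pending structural condition were satisfied, they could not pass min-k, so probing them would be wasted work.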

Cost-based Scheduling (CA) – BenProbe
- Goal: minimize the overall execution cost $\#SA + \frac{c_R}{c_S} \cdot \#RA$, where $c_R$ and $c_S$ are the costs of a single random and sorted access
- Access costs on d are wasted if d does not make it into the final top-k (considering both structural selectivities & content scores)
- Probabilistic cost model comparing different types of Expected Wasted Costs (EWC):
  - EWC-RA_s(d) of looking up d in the remaining structure
  - EWC-RA_c(d) of looking up d in the remaining content
  - EWC-SA(d) of not seeing d in the next batch of b SAs
- BenProbe: schedule a batch of RAs on d iff $\#\text{EWC-RA}_{s|c}(d) \cdot \frac{c_R}{c_S} < \#\text{EWC-SA}$
  - Bounds the ratio between #RA and #SA
  - Schedules RAs late & last
  - Schedules RAs in ascending order of EWC-RA_{s|c}(d)
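The BenProbe decision rule and cost objective can be sketched as below. The slide does not spell out how the expected wasted costs are computed, so `ewc_ra` uses a hypothetical placeholder: the pending RAs on d are wasted with probability 1 − P[d reaches the top-k]. EWC-SA is passed in as a number, and all names and values are illustrative.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    doc: str
    p_topk: float       # estimated probability that d makes it into the final top-k
    pending_ras: int    # random accesses still needed to resolve d


def total_cost(n_sa, n_ra, c_r, c_s):
    """Objective from the slide: #SA + (c_R / c_S) * #RA."""
    return n_sa + (c_r / c_s) * n_ra


def ewc_ra(d):
    """Hypothetical expected wasted cost of d's pending RAs."""
    return (1.0 - d.p_topk) * d.pending_ras


def ben_probe(candidates, ewc_sa, c_r, c_s):
    """Schedule RAs on d iff EWC-RA(d) * c_R / c_S < EWC-SA, ascending EWC-RA."""
    ratio = c_r / c_s
    chosen = [d for d in candidates if ewc_ra(d) * ratio < ewc_sa]
    return sorted(chosen, key=ewc_ra)


queue = [Candidate("A", 0.9, 2), Candidate("B", 0.5, 1), Candidate("C", 0.7, 1)]
print([d.doc for d in ben_probe(queue, ewc_sa=50.0, c_r=100.0, c_s=1.0)])
```

With random accesses assumed 100 times as expensive as sorted ones, only candidates whose RAs are unlikely to be wasted (A and C) beat the expected waste of continuing with sorted accesses.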

![Selectivity Estimator [VLDB ’05]](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-17.jpg)

Selectivity Estimator [VLDB ’05]
- Split the query into a set of basic, characteristic XML patterns (twigs, paths & tag-term pairs), e.g., for //sec[//figure=“java”][//par=“xml”][//bib=“vldb”]:
  - twigs: //sec[//figure]//par (p1 = 0.682), //sec[//figure]//bib (p2 = 0.001), //sec[//par]//bib (p3 = 0.002)
  - paths: //sec//figure (p4 = 0.688), //sec//par (p5 = 0.968), //sec//bib (p6 = 0.002)
  - tag-term pairs: //bib=“vldb” (p7 = 0.023), //par=“xml” (p8 = 0.067), //figure=“java” (p9 = 0.011)
- Consider the structural selectivities of unresolved & non-redundant patterns Y to estimate P_S[d satisfies all structural conditions Y] (conjunctive evaluation) and P_S[d satisfies a subset Y’ of structural conditions Y] (“andish” evaluation)
- Consider binary correlations between structural patterns and/or tag-term pairs (data sampling, query logs, etc.)
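Under an independence assumption, both probabilities above reduce to products of pattern selectivities. The Python sketch below illustrates only that baseline: it ignores the binary correlations the slide mentions, and it assigns p4 to p6 to the three paths in the order listed. `p_all` and `p_subset` are hypothetical names.

```python
from math import prod


def p_all(selectivities):
    """P_S[d satisfies all remaining structural conditions], assuming independence."""
    return prod(selectivities.values())


def p_subset(selectivities, satisfied):
    """P_S[d satisfies exactly the patterns in `satisfied` and fails the others]."""
    return prod(p if pattern in satisfied else 1.0 - p
                for pattern, p in selectivities.items())


# Path selectivities p4..p6 for //sec[//figure="java"][//par="xml"][//bib="vldb"].
paths = {"//sec//figure": 0.688, "//sec//par": 0.968, "//sec//bib": 0.002}
print(p_all(paths))                                        # conjunctive evaluation
print(p_subset(paths, {"//sec//figure", "//sec//par"}))    # "andish": bib missing
```

The tiny //sec//bib selectivity makes the conjunctive estimate very small, which is why the “andish” subset probabilities matter for predicting a candidate's chances.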

![Score Predictor [VLDB ’04]](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-18.jpg)

Score Predictor [VLDB ’04]
- Consider the score distributions of the content-related inverted lists, e.g., histograms f1 of title[“native”] and f2 of par[“retrieval”] over [0, high_i], obtained by sampling
- Estimate P_C[d gets in the final top-k] via convolutions of score histograms (assuming independence): with $\delta(d) = \text{min-}k - \mathrm{worstscore}(d)$, this is the probability that d's still unseen scores add up to more than δ(d)
- Probabilistic candidate pruning: drop d from the candidate queue iff P_C[d gets in the final top-k] < ε (with probabilistic guarantees for relative precision & recall)
- Closed-form convolutions, e.g., truncated Poisson
- Moment-generating functions & Chernoff-Hoeffding bounds
- Combined score predictor & selectivity estimator
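The histogram-convolution estimate and the ε pruning test can be sketched with plain lists. Assumptions: each list's score distribution is a discrete histogram whose bin j stands for score j · width (including the probability mass for “d is not in this list” at score 0), the unseen dimensions are independent, and the numbers are made up. All function names are hypothetical.

```python
def convolve(f, g):
    """Distribution of the sum of two independent discretized scores."""
    out = [0.0] * (len(f) + len(g) - 1)
    for i, a in enumerate(f):
        for j, b in enumerate(g):
            out[i + j] += a * b
    return out


def prob_exceeds(histograms, delta, width):
    """P[S_1 + ... + S_m > delta]; bin j of each histogram stands for score j * width."""
    dist = [1.0]
    for h in histograms:
        dist = convolve(dist, h)
    return sum(p for j, p in enumerate(dist) if j * width > delta)


def keep_candidate(worstscore, min_k, missing_histograms, width, eps):
    """Probabilistic pruning: keep d unless P[d gets into the top-k] < eps."""
    delta = min_k - worstscore          # score mass d still needs from unseen lists
    if delta < 0:
        return True                     # evaluated score alone already beats min-k
    return prob_exceeds(missing_histograms, delta, width) >= eps


# Two unevaluated lists, each giving score 0.0 or 0.5 with probability 1/2.
hists = [[0.5, 0.5], [0.5, 0.5]]
print(prob_exceeds(hists, delta=0.7, width=0.5))   # only the 1.0 bin exceeds 0.7
```

A candidate with worstscore 0.6 against min-k 1.3 needs more than 0.7 from the two missing lists, which happens with probability 0.25 here, so it is dropped for ε = 0.3 and kept for ε = 0.2.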


![Dynamic and Self-tuning Query Expansion [SIGIR ’05]](https://slidetodoc.com/presentation_image_h2/9667faab22095cd386a17f4f61ab4a69/image-20.jpg)

Dynamic and Self-tuning Query Expansion [SIGIR ’05]
- Incrementally merge inverted lists for a set of active expansions exp(t_1) … exp(t_m) in descending order of scores s(t_i, d)
- Dynamically expand the set of active expansions only when beneficial for finding the final top-k results
- Max-score aggregation for fending off topic drifts
- Specialized expansion operators:
  - Incremental Merge operator
  - Nested Top-k operator (phrase matching)
- Supports text, structured records & XML
- Boolean (but ranked) retrieval mode

Figure: TREC Robust topic no. 363, evaluated as Top-k(transport, tunnel, ~disaster). An Incremental Merge operator feeds ~disaster from the lists of its expansions (e.g., disaster, accident, fire), alongside sorted accesses on the transport and tunnel lists.
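An Incremental Merge operator can be sketched as a lazy heap-based merge. Assumptions: each expansion's list is weighted by a similarity factor to the original term (the weights, lists, and `incremental_merge` name are hypothetical), and a document is emitted only at its first, and therefore highest, occurrence, which implements max-score aggregation.

```python
import heapq


def incremental_merge(expansions):
    """Lazily merge expansion lists into one list of descending scores.

    expansions -- [(sim, postings)], postings = [(doc, score), ...] sorted by
                  descending score; sim weights the expansion (assumption).
    Yields (doc, sim * score); each doc appears once, with its maximum score.
    """
    heap = []
    for idx, (sim, postings) in enumerate(expansions):
        if postings:
            doc, score = postings[0]
            heapq.heappush(heap, (-sim * score, idx, 0, doc))
    emitted = set()
    while heap:
        neg_score, idx, pos, doc = heapq.heappop(heap)
        sim, postings = expansions[idx]
        if pos + 1 < len(postings):           # advance only the list just consumed
            next_doc, next_score = postings[pos + 1]
            heapq.heappush(heap, (-sim * next_score, idx, pos + 1, next_doc))
        if doc not in emitted:                # max-score aggregation across expansions
            emitted.add(doc)
            yield doc, -neg_score


disaster = [
    (1.0, [("d42", 0.9), ("d11", 0.5)]),     # disaster
    (0.8, [("d11", 0.9), ("d92", 0.4)]),     # accident
    (0.6, [("d78", 0.9)]),                   # fire
]
print(list(incremental_merge(disaster)))
```

Because the operator is a generator, a top-k consumer can stop pulling as soon as it terminates, so entries of weakly related expansions beyond their first one are only read when their weighted scores become competitive.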


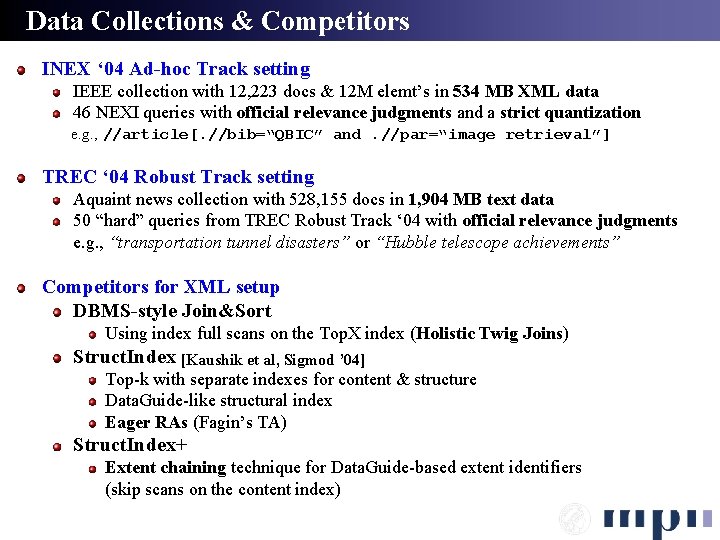

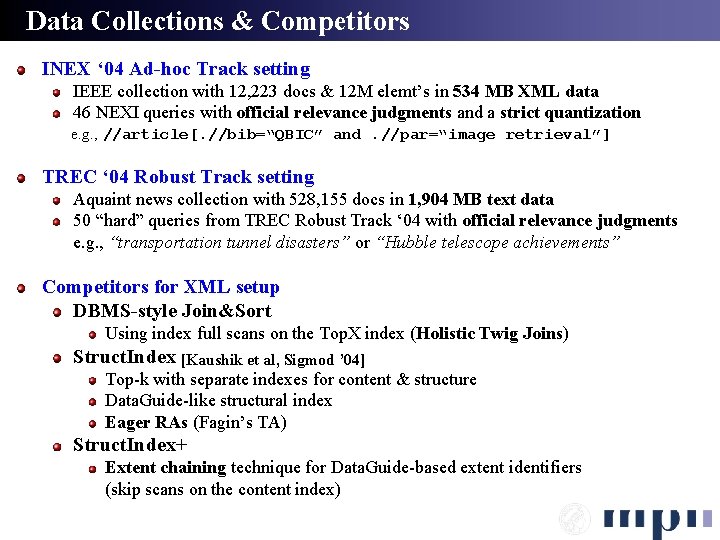

Data Collections & Competitors
- INEX ’04 Ad-hoc Track setting
  - IEEE collection with 12,223 docs & 12M elements in 534 MB XML data
  - 46 NEXI queries with official relevance judgments and a strict quantization, e.g., //article[.//bib=“QBIC” and .//par=“image retrieval”]
- TREC ’04 Robust Track setting
  - Aquaint news collection with 528,155 docs in 1,904 MB text data
  - 50 “hard” queries from TREC Robust Track ’04 with official relevance judgments, e.g., “transportation tunnel disasters” or “Hubble telescope achievements”
- Competitors for the XML setup
  - DBMS-style Join&Sort: index full scans on the TopX index (holistic twig joins)
  - StructIndex [Kaushik et al., SIGMOD ’04]: top-k with separate indexes for content & structure, a DataGuide-like structural index, and eager RAs (Fagin’s TA)
  - StructIndex+: extent chaining technique for DataGuide-based extent identifiers (skip scans on the content index)

INEX: TopX vs. Join&Sort & StructIndex (46 NEXI queries)

| Method | k | ε | #SA | #RA | CPU sec | P@k | MAP@k | rel. Prec |
|---|---|---|---|---|---|---|---|---|
| Join&Sort | 10 | n/a | 9,122,318 | 0 | 12.01 | | | |
| StructIndex | 10 | n/a | 761,970 | 3,25,068 | 17.02 | | | |
| StructIndex+ | 10 | n/a | 77,482 | 5,074,384 | 80.02 | | | |
| TopX – MinProbe | 10 | 0.0 | 635,507 | 64,807 | 1.38 | 0.34 | 0.09 | 1.00 |
| TopX – BenProbe | 10 | 0.0 | 723,169 | 84,424 | 3.22 | | | |
| TopX – BenProbe | 1,000 | 0.0 | 882,929 | 1,902,427 | 16.10 | 0.03 | 0.17 | 1.00 |

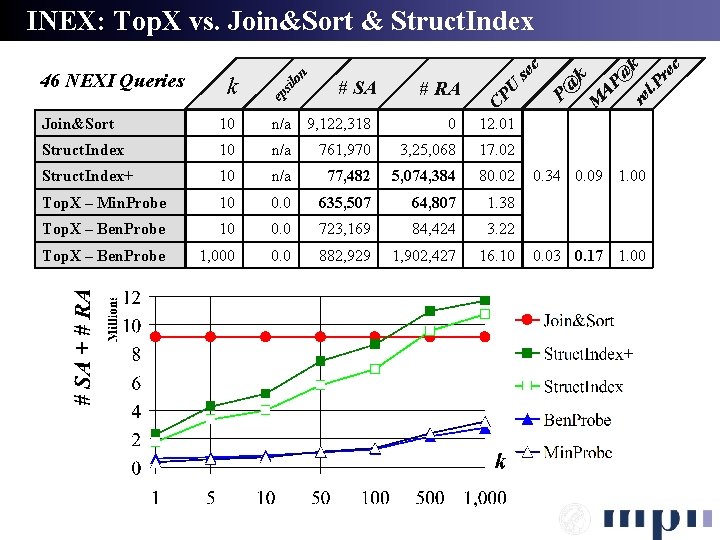

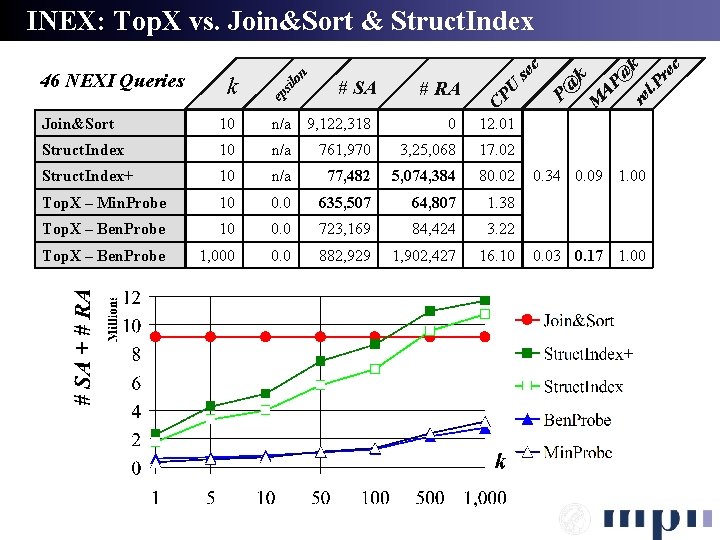

INEX: TopX with Probabilistic Pruning (TopX – MinProbe, 46 NEXI queries)

| k | ε | #SA | #RA | CPU sec | P@k | MAP@k | rel. Prec |
|---|---|---|---|---|---|---|---|
| 10 | 0.00 | 635,507 | 64,807 | 1.38 | 0.34 | 0.09 | 1.00 |
| 10 | 0.25 | 392,395 | 56,952 | 2.31 | 0.34 | 0.08 | 0.77 |
| 10 | 0.50 | 231,109 | 48,963 | 0.92 | 0.31 | 0.08 | 0.65 |
| 10 | 0.75 | 102,118 | 42,174 | 0.46 | 0.33 | 0.08 | 0.51 |
| 10 | 1.00 | 36,936 | 35,327 | 0.46 | 0.30 | 0.07 | 0.38 |

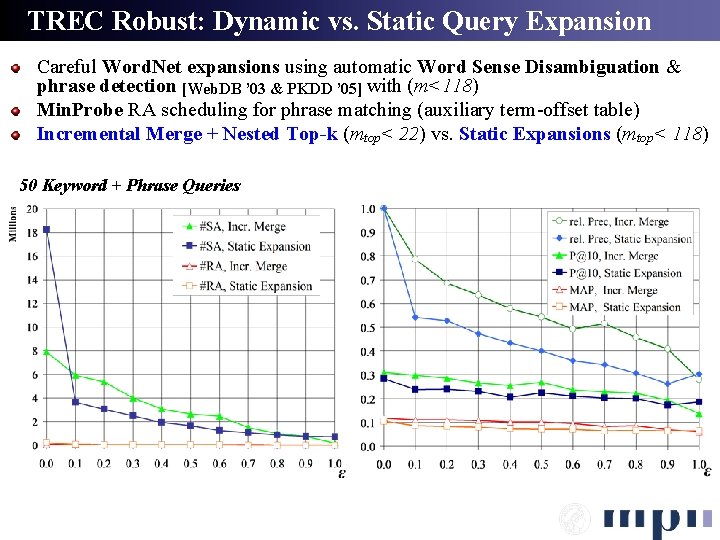

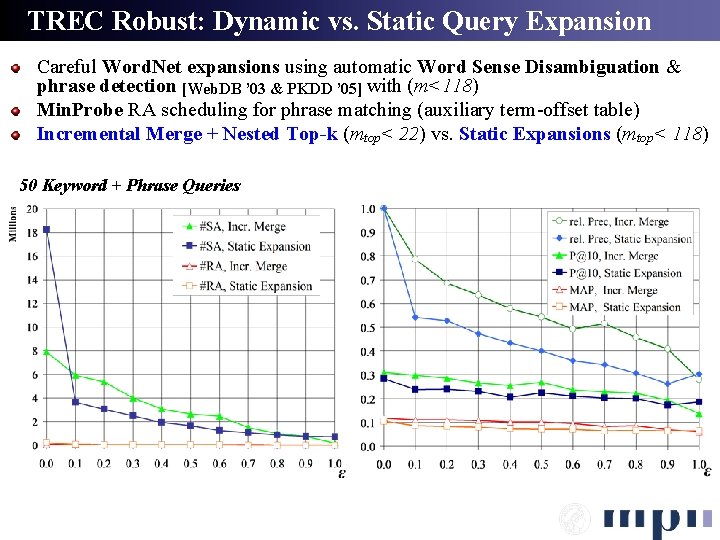

TREC Robust: Dynamic vs. Static Query Expansion
- Careful WordNet expansions using automatic word sense disambiguation & phrase detection [WebDB ’03 & PKDD ’05], with m < 118 expansions
- MinProbe RA scheduling for phrase matching (auxiliary term-offset table)
- Incremental Merge + Nested Top-k (m_top < 22) vs. static expansions (m_top < 118)
- 50 keyword + phrase queries

Conclusions
- Efficient and versatile TopX query processor
  - Extensible framework for XML-IR & full-text search
- Very good precision/runtime ratio for probabilistic candidate pruning
- Self-tuning solution for robust query expansions & IR-style vague search
- Combined SA and RA scheduling close to the lower bound for CA access cost [Submitted for VLDB ’06]
- Scalability
  - Optimized for query processing IO
  - Exploits cheap disk space for redundant index structures (constant redundancy factor of 4-5 for INEX IEEE)
  - Extensive TREC Terabyte runs with 25,000,000 text documents (426 GB)
- INEX 2006
  - New Wikipedia XML collection with 660,000 documents & 120,000 elements (~6 GB raw XML)
  - Official host for the Topic Development and Interactive Track (69 groups registered worldwide)
- TopX WebService available (SOAP connector)

That’s it. Thank you!

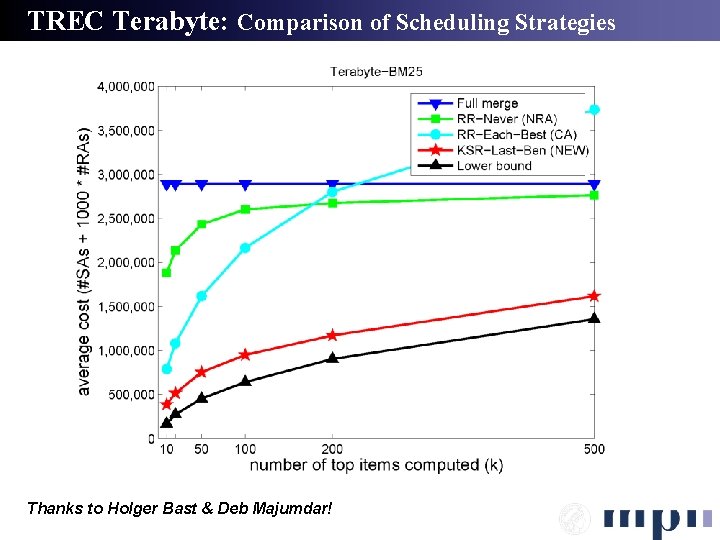

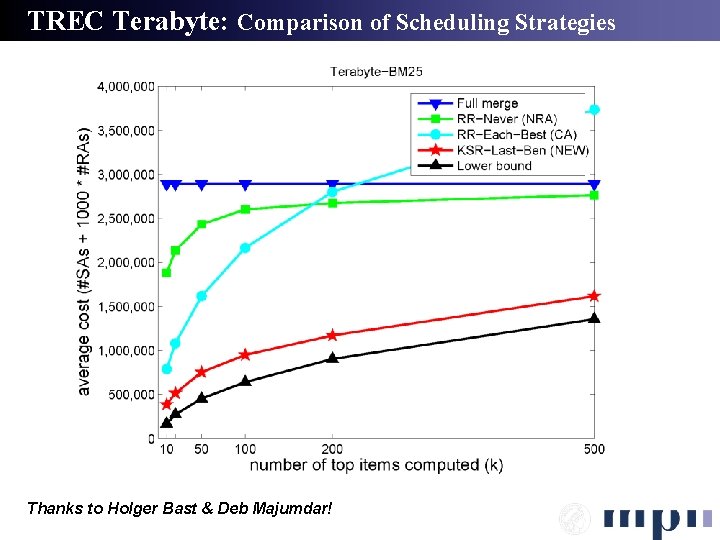

TREC Terabyte: Comparison of Scheduling Strategies (thanks to Holger Bast & Deb Majumdar!)