First-order Learning

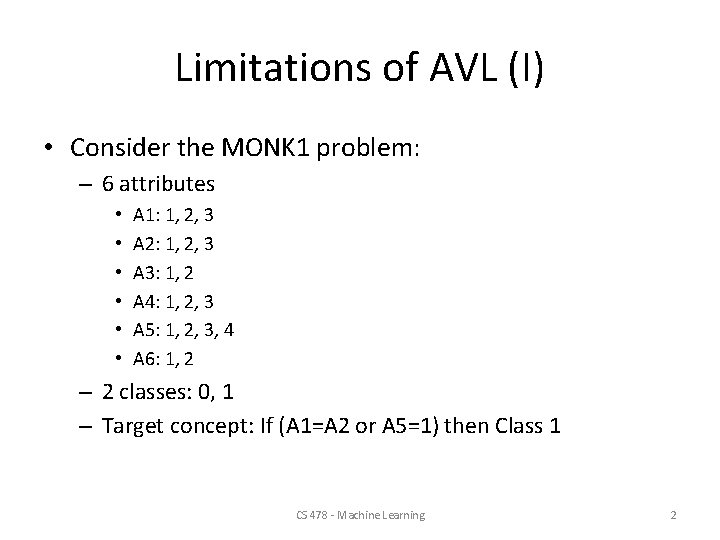

CS 478 - Machine Learning

Limitations of attribute-value languages (AVL) (I)

- Consider the MONK-1 problem:
  - 6 attributes:
    - A1: 1, 2, 3
    - A2: 1, 2, 3
    - A3: 1, 2
    - A4: 1, 2, 3
    - A5: 1, 2, 3, 4
    - A6: 1, 2
  - 2 classes: 0, 1
  - Target concept: if (A1 = A2 or A5 = 1) then Class 1
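
The following minimal Python sketch enumerates the full MONK-1 instance space and labels each instance with the target concept. Representing an instance as a dict keyed by attribute name is our own choice for illustration.

```python
from itertools import product

# Attribute domains of the MONK-1 problem, as listed on the slide.
DOMAINS = {
    "A1": (1, 2, 3),
    "A2": (1, 2, 3),
    "A3": (1, 2),
    "A4": (1, 2, 3),
    "A5": (1, 2, 3, 4),
    "A6": (1, 2),
}

def monk1_class(x: dict) -> int:
    """Target concept: Class 1 iff A1 = A2 or A5 = 1, otherwise Class 0."""
    return 1 if x["A1"] == x["A2"] or x["A5"] == 1 else 0

def instance_space():
    """Yield every combination of attribute values as a dict."""
    names = list(DOMAINS)
    for values in product(*(DOMAINS[n] for n in names)):
        yield dict(zip(names, values))

instances = list(instance_space())
positives = sum(monk1_class(x) for x in instances)
print(len(instances), positives)  # 432 216
```

A3, A4 and A6 never affect the label, and exactly half of the 3·3·2·3·4·2 = 432 instances are positive.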

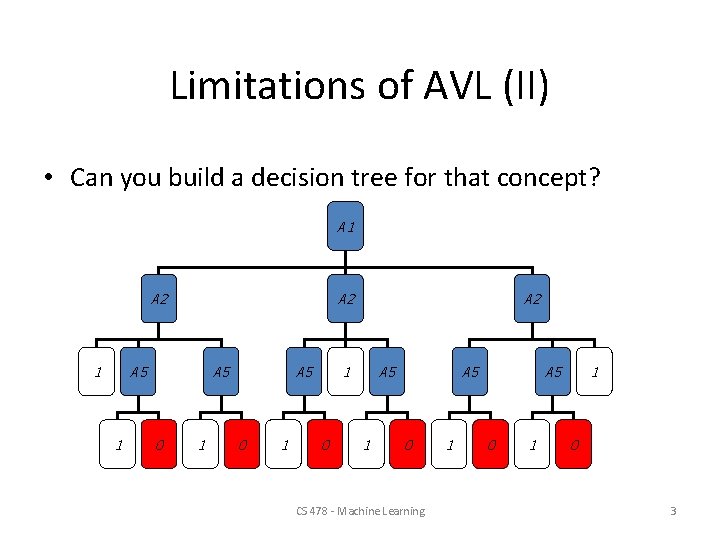

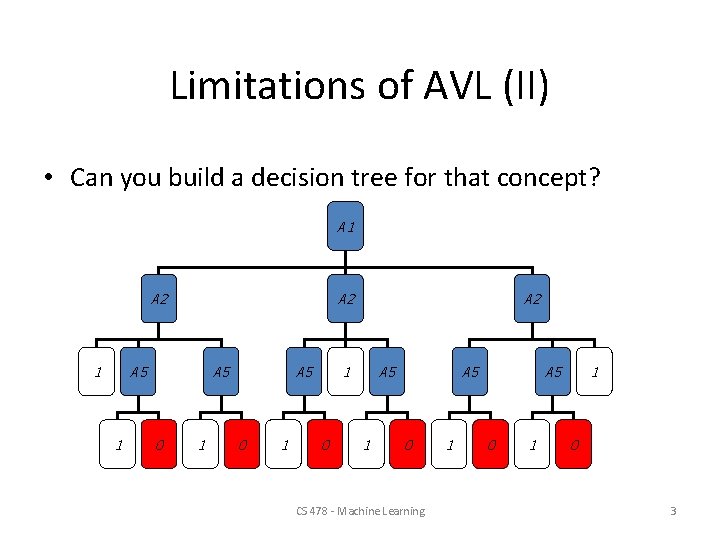

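Limitations of AVL (II)

- Can you build a decision tree for that concept? The tree in the figure tests A1 at the root and A2 below it. Wherever A1 ≠ A2, it needs its own copy of the A5 test (A5 = 1 → 1, otherwise 0):
  - A1 = 1: A2 = 1 → 1; A2 = 2 → test A5; A2 = 3 → test A5
  - A1 = 2: A2 = 2 → 1; A2 = 1 → test A5; A2 = 3 → test A5
  - A1 = 3: A2 = 3 → 1; A2 = 1 → test A5; A2 = 2 → test A5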
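
The following Python sketch builds one such tree and checks it against the concept. The tuple encoding and the helper names (`a5_test`, `build_tree`, `count_tests`) are hypothetical, and the slide's figure may order the tests differently. The point is that the A5 test is duplicated once for every (A1, A2) branch with A1 ≠ A2.

```python
from itertools import product

# Illustrative encoding (not from the slides): a tree is either a leaf
# (an int class label) or a pair (attribute, {value: subtree}).

def a5_test():
    """The A5 subtree: Class 1 if A5 = 1, otherwise Class 0."""
    return ("A5", {1: 1, 2: 0, 3: 0, 4: 0})

def build_tree():
    """Root tests A1, each branch tests A2, and each A2 != A1 branch gets an A5 test."""
    return ("A1", {
        a1: ("A2", {a2: (1 if a2 == a1 else a5_test()) for a2 in (1, 2, 3)})
        for a1 in (1, 2, 3)
    })

def classify(tree, x):
    """Follow the branch selected by x until a leaf is reached."""
    while not isinstance(tree, int):
        attr, children = tree
        tree = children[x[attr]]
    return tree

def count_tests(tree, attr):
    """Number of internal nodes that test the given attribute."""
    if isinstance(tree, int):
        return 0
    name, children = tree
    return (name == attr) + sum(count_tests(t, attr) for t in children.values())

tree = build_tree()
# A3, A4 and A6 are irrelevant to MONK-1, so checking A1, A2, A5 is enough.
agrees = all(
    classify(tree, {"A1": a1, "A2": a2, "A5": a5}) == int(a1 == a2 or a5 == 1)
    for a1, a2, a5 in product((1, 2, 3), (1, 2, 3), (1, 2, 3, 4))
)
print(agrees, count_tests(tree, "A5"))  # True 6
```

The tree needs six copies of the same A5 test because it cannot express "A1 = A2" directly.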

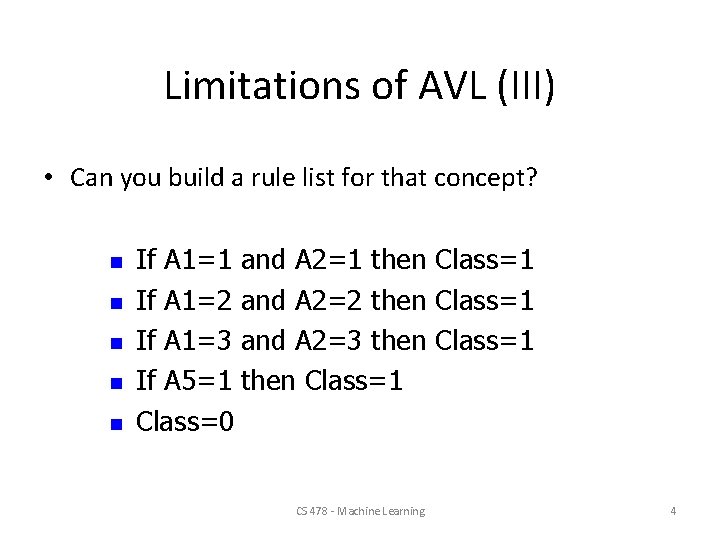

Limitations of AVL (III)

- Can you build a rule list for that concept?
  - If A1 = 1 and A2 = 1 then Class = 1
  - If A1 = 2 and A2 = 2 then Class = 1
  - If A1 = 3 and A2 = 3 then Class = 1
  - If A5 = 1 then Class = 1
  - Otherwise, Class = 0
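
The rule list can be encoded as an ordered list of (condition, class) pairs. The encoding and the names `equality_rules` and `rule_list` in this Python sketch are ours. The sketch shows that the propositional rules needed for A1 = A2 grow with the number of values A1 and A2 can take. A first-order language could state the same test once, as a single equality between two attributes.

```python
from itertools import product

def equality_rules(values):
    """One propositional rule per value v: If A1 = v and A2 = v then Class = 1."""
    # v=v freezes the current value; a plain closure would only see the last v.
    return [(lambda x, v=v: x["A1"] == v and x["A2"] == v, 1) for v in values]

def rule_list(values):
    """The slide's rule list: the equality rules, then If A5 = 1 then Class = 1."""
    return equality_rules(values) + [(lambda x: x["A5"] == 1, 1)]

def classify(rules, x, default=0):
    """Return the class of the first rule that fires, else the default class."""
    for condition, label in rules:
        if condition(x):
            return label
    return default

values = (1, 2, 3)
rules = rule_list(values)
agree = all(
    classify(rules, {"A1": a1, "A2": a2, "A5": a5}) == int(a1 == a2 or a5 == 1)
    for a1, a2, a5 in product(values, values, (1, 2, 3, 4))
)
print(agree, len(rules))              # True 4
print(len(rule_list(range(1, 101))))  # 101 rules if A1 and A2 took 100 values
```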

First-order Language

- What we really want is a generalization language that supports first-order concepts, so that relations between attributes (such as A1 = A2) can be expressed naturally
- For simplicity, we restrict ourselves to Horn clauses

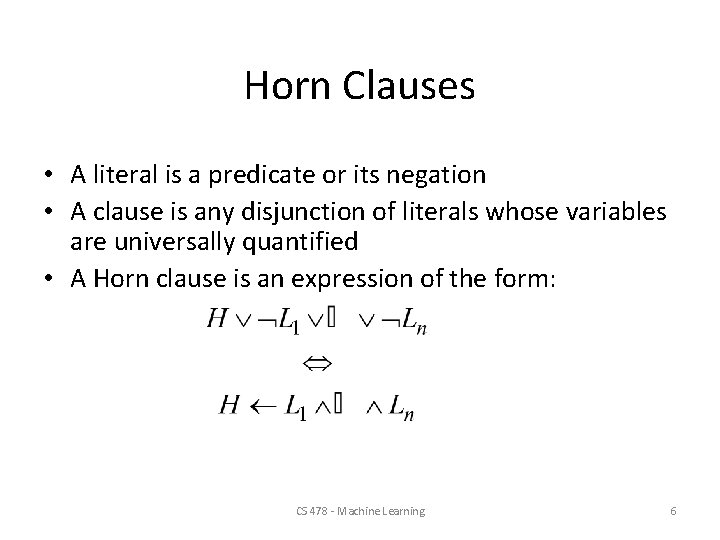

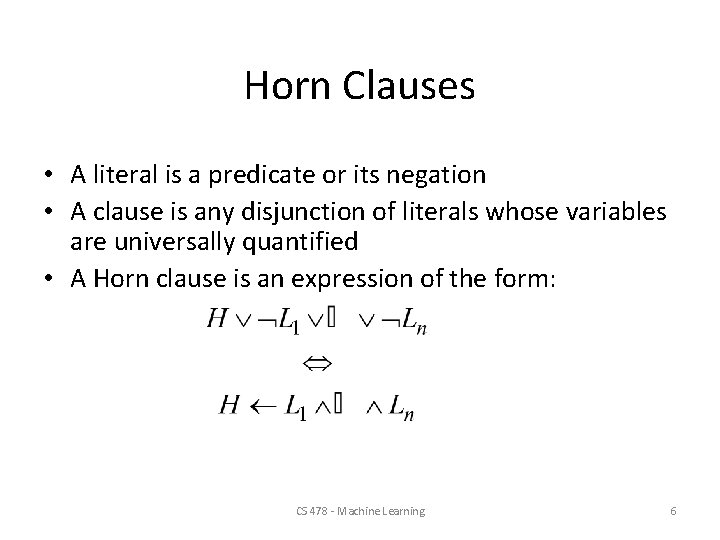

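Horn Clauses

- A literal is a predicate applied to its arguments, or the negation of one
- A clause is any disjunction of literals whose variables are universally quantified
- A Horn clause is an expression of the form $H \leftarrow L_1 \wedge \dots \wedge L_n$, where $H$ (the head, or postcondition) and $L_1, \dots, L_n$ (the body, or preconditions) are positive literals. Equivalently, it is the clause $H \vee \neg L_1 \vee \dots \vee \neg L_n$ (a Horn clause has at most one positive literal)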
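
To make "a clause covers an example" concrete, here is a small Python sketch. It uses our own encoding and is not FOIL's published code. The sketch checks whether a Horn clause covers a ground example by searching for a binding of its body variables against a set of ground facts. It borrows the family facts and the final GrandDaughter rule from the illustration slides and handles positive body literals only.

```python
# Illustrative encoding (ours): a literal is (predicate, args); argument names
# starting with a lowercase letter are variables, capitalized ones are constants.
FACTS = {
    ("Father", ("Sharon", "Bob")),
    ("Father", ("Tom", "Bob")),
    ("Father", ("Bob", "Victor")),
    ("Female", ("Sharon",)),
}

def is_var(term):
    return term[:1].islower()

def match(args, fact_args, binding):
    """Extend binding so that args equals fact_args, or return None."""
    new = dict(binding)
    for a, c in zip(args, fact_args):
        if is_var(a):
            if new.setdefault(a, c) != c:
                return None
        elif a != c:
            return None
    return new

def solve(body, facts, binding):
    """Yield every binding that turns all (positive) body literals into facts."""
    if not body:
        yield binding
        return
    (pred, args), rest = body[0], body[1:]
    for fpred, fargs in facts:
        if fpred == pred and len(fargs) == len(args):
            extended = match(args, fargs, binding)
            if extended is not None:
                yield from solve(rest, facts, extended)

def covers(clause, example, facts):
    """H <- L1 ^ ... ^ Ln covers a ground instance of H if some binding of
    the body-only variables makes every Li a fact."""
    (_, head_args), body = clause
    binding = match(head_args, example, {})
    return binding is not None and next(solve(body, facts, binding), None) is not None

rule = (("GrandDaughter", ("x", "y")),
        [("Father", ("y", "z")), ("Father", ("z", "x")), ("Female", ("y",))])
print(covers(rule, ("Victor", "Sharon"), FACTS))  # True
print(covers(rule, ("Victor", "Tom"), FACTS))     # False
```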

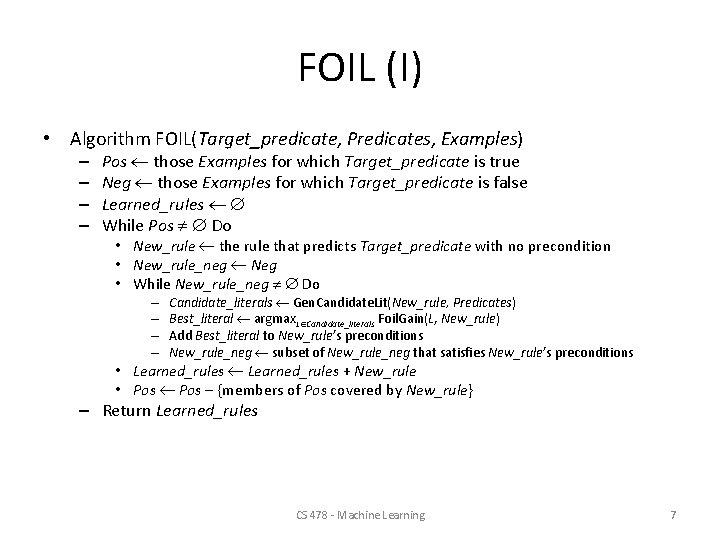

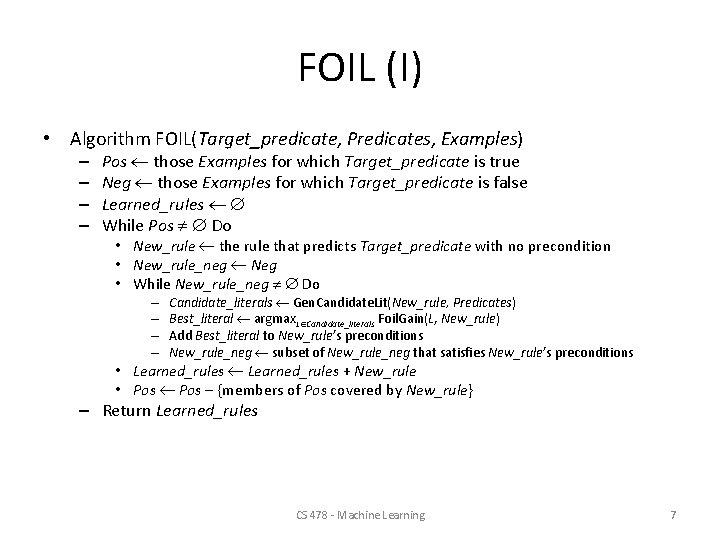

FOIL (I)

- Algorithm FOIL(Target_predicate, Predicates, Examples)
  - Pos ← those Examples for which Target_predicate is true
  - Neg ← those Examples for which Target_predicate is false
  - Learned_rules ← {}
  - While Pos is not empty, do:
    - New_rule ← the rule that predicts Target_predicate with no preconditions
    - New_rule_neg ← Neg
    - While New_rule_neg is not empty, do:
      - Candidate_literals ← GenCandidateLit(New_rule, Predicates)
      - Best_literal ← argmax over L ∈ Candidate_literals of FoilGain(L, New_rule)
      - Add Best_literal to New_rule's preconditions
      - New_rule_neg ← subset of New_rule_neg that satisfies New_rule's preconditions
    - Learned_rules ← Learned_rules + New_rule
    - Pos ← Pos − {members of Pos covered by New_rule}
  - Return Learned_rules
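
The following Python sketch follows the structure of this pseudocode on the toy GrandDaughter data from the illustration slides. It is a simplified instance, not the reference FOIL implementation:

- The candidate generator only proposes positive literals (no Equal, no negations).
- Variables range over the listed constants.
- The `gain <= 0` guard is our addition, so the loop stops instead of running forever when no literal helps.

```python
from itertools import product
from math import log2

# Toy data from the illustration slides; literals are (predicate, args).
CONSTANTS = ["Victor", "Sharon", "Bob", "Tom"]
FACTS = {
    ("Father", ("Sharon", "Bob")),
    ("Father", ("Tom", "Bob")),
    ("Father", ("Bob", "Victor")),
    ("Female", ("Sharon",)),
}
PREDICATES = {"Father": 2, "Female": 1}  # background predicates and their arities
FRESH = "zwuvabcdefghijklmnopqrst"       # hypothetical pool of new variable names

def candidate_literals(variables):
    """Simplified GenCandidateLit: positive literals only, each using at
    least one variable already present in the rule."""
    new = [v for v in FRESH if v not in variables][:max(PREDICATES.values()) - 1]
    for pred, arity in PREDICATES.items():
        for args in product(variables + new, repeat=arity):
            if any(a in variables for a in args):
                yield pred, args

def extend(bindings, literal):
    """Extend each binding (a dict) over the literal's unbound variables,
    keeping only the extensions that make the literal a known fact."""
    pred, args = literal
    out = []
    for b in bindings:
        free = [a for a in dict.fromkeys(args) if a not in b]
        for values in product(CONSTANTS, repeat=len(free)):
            nb = {**b, **dict(zip(free, values))}
            if (pred, tuple(nb[a] for a in args)) in FACTS:
                out.append(nb)
    return out

def foil_gain(pos, neg, literal):
    """Return (gain, extended positive bindings, extended negative bindings)."""
    pos1, neg1 = extend(pos, literal), extend(neg, literal)
    t = sum(1 for b in pos if extend([b], literal))  # positives still covered
    if t == 0:
        return 0.0, pos1, neg1
    p0, n0, p1, n1 = len(pos), len(neg), len(pos1), len(neg1)
    return t * (log2(p1 / (p1 + n1)) - log2(p0 / (p0 + n0))), pos1, neg1

def foil(target_vars, pos_examples, neg_examples):
    """Sequential covering: learn one rule body at a time until Pos is empty."""
    learned, pos_left = [], list(pos_examples)
    while pos_left:
        body, variables = [], list(target_vars)
        pos = [dict(zip(target_vars, e)) for e in pos_left]
        neg = [dict(zip(target_vars, e)) for e in neg_examples]
        while neg:  # specialize until no negative binding is left
            scored = [(foil_gain(pos, neg, lit), lit) for lit in candidate_literals(variables)]
            # max() keeps the first of several equally scored literals
            (gain, pos, neg), best = max(scored, key=lambda s: s[0][0])
            if gain <= 0:  # guard (ours): no literal makes progress
                return learned
            body.append(best)
            variables += [a for a in dict.fromkeys(best[1]) if a not in variables]
        covered = {tuple(b[v] for v in target_vars) for b in pos}
        learned.append(body)
        pos_left = [e for e in pos_left if e not in covered]
    return learned

pos_examples = [("Victor", "Sharon")]
neg_examples = [(a, b) for a in CONSTANTS for b in CONSTANTS
                if (a, b) != ("Victor", "Sharon")]
rules = foil(["x", "y"], pos_examples, neg_examples)
print(rules)
```

On this data the sketch returns one rule whose body is Female(y), Father(z, x), Father(y, z). These are the same literals as in the slides' final rule. The greedy choices differ from the walkthrough, which only assumes that Father(y, z) is picked first: here Female(y) scores higher in the first round (gain 2 versus about 0.415).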

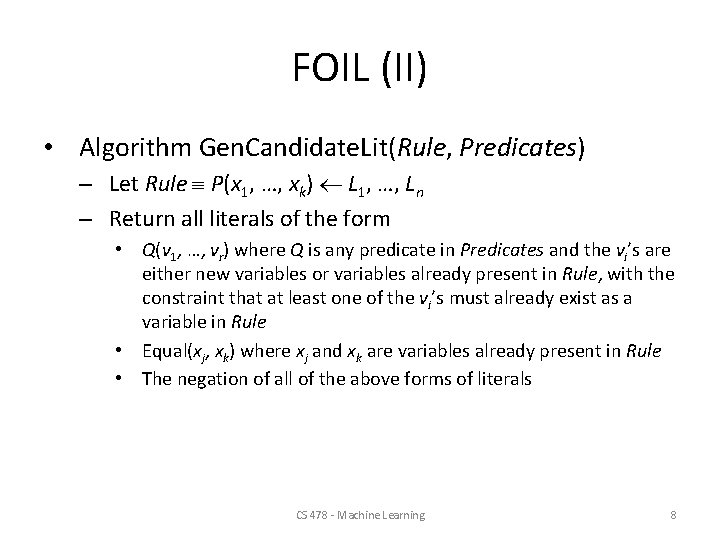

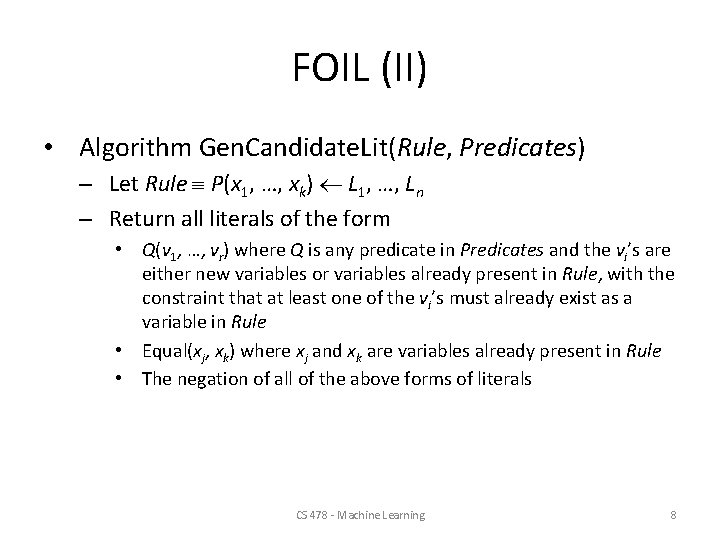

FOIL (II)

- Algorithm GenCandidateLit(Rule, Predicates)
  - Let Rule be P(x1, …, xk) ← L1, …, Ln
  - Return all literals of the form:
    - Q(v1, …, vr), where Q is any predicate in Predicates and the vi's are either new variables or variables already present in Rule, with the constraint that at least one of the vi's must already exist as a variable in Rule
    - Equal(xj, xk), where xj and xk are variables already present in Rule
    - The negation of each of the above forms of literals
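
Here is a Python sketch of GenCandidateLit, read literally from the definition above. The pool of fresh variable names and the `(negated, name, args)` tuple encoding are our assumptions. We also allow at most arity minus 1 new variables per literal and generate Equal only for pairs of distinct existing variables.

```python
from itertools import product

FRESH = "zwuvabcdefghijklmnopqrst"  # hypothetical pool of new variable names

def gen_candidate_literals(rule_vars, predicates):
    """Candidate literals for a rule whose variables are rule_vars.

    predicates maps each predicate name to its arity. Each literal is
    returned as (negated, name, args).
    """
    rule_vars = list(rule_vars)
    max_new = max(predicates.values()) - 1  # at least one old variable per literal
    new = [v for v in FRESH if v not in rule_vars][:max_new]
    positive = []
    for name, arity in predicates.items():
        for args in product(rule_vars + new, repeat=arity):
            if not any(a in rule_vars for a in args):
                continue  # must share at least one variable with the rule
            used_new = [a for a in dict.fromkeys(args) if a in new]
            if used_new != new[:len(used_new)]:
                continue  # keep one naming of new variables: Q(x, z, w), not Q(x, w, z)
            positive.append((name, args))
    # Equal(xj, xk) for each pair of distinct variables already in the rule
    for i, xj in enumerate(rule_vars):
        for xk in rule_vars[i + 1:]:
            positive.append(("Equal", (xj, xk)))
    # ...plus the negation of every literal above
    return [(False, n, a) for n, a in positive] + [(True, n, a) for n, a in positive]

cands = gen_candidate_literals(["x", "y"], {"Father": 2, "Female": 1})
print(len(cands))  # 22
```

For GrandDaughter(x, y) with Father/2 and Female/1, this yields 11 literals plus their 11 negations. Reading the definition literally also produces Father(x, x), Father(y, y) and Female(x), which the list in Illustration (III) leaves out.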

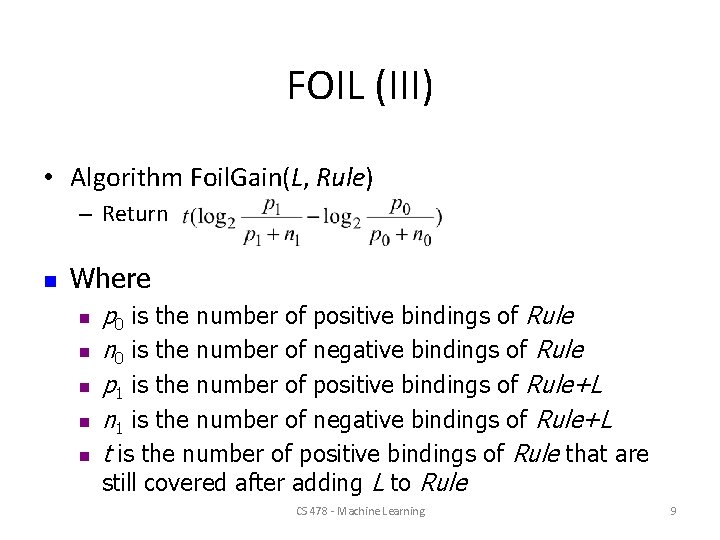

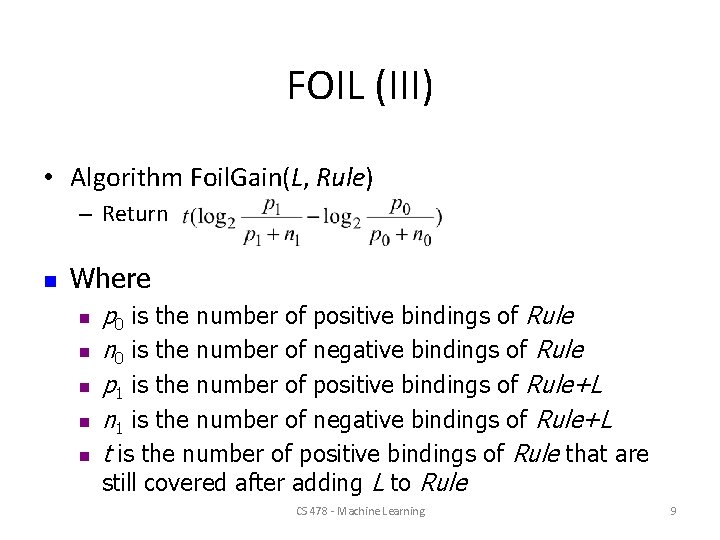

FOIL (III)

- Algorithm FoilGain(L, Rule)
  - Return $t \left( \log_2 \frac{p_1}{p_1 + n_1} - \log_2 \frac{p_0}{p_0 + n_0} \right)$, where:
    - $p_0$ is the number of positive bindings of Rule
    - $n_0$ is the number of negative bindings of Rule
    - $p_1$ is the number of positive bindings of Rule + L
    - $n_1$ is the number of negative bindings of Rule + L
    - $t$ is the number of positive bindings of Rule that are still covered after adding L to Rule
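
This is a direct Python transcription of the gain $t \left( \log_2 \frac{p_1}{p_1 + n_1} - \log_2 \frac{p_0}{p_0 + n_0} \right)$, checked against the numbers worked out in the illustration slides. The name `foil_gain` and the convention of returning 0 when t = 0 (when $p_1$ may be 0 and the logarithm undefined) are our own.

```python
from math import log2

def foil_gain(p0, n0, p1, n1, t):
    """FoilGain = t * (log2(p1 / (p1 + n1)) - log2(p0 / (p0 + n0)))."""
    if t == 0:
        return 0.0  # no positive binding survives; scored as 0
    return t * (log2(p1 / (p1 + n1)) - log2(p0 / (p0 + n0)))

print(round(foil_gain(1, 15, 1, 11, 1), 3))  # 0.415  (Illustration V)
print(round(foil_gain(1, 11, 1, 1, 1), 3))   # 2.585  (Illustration VIII)
print(foil_gain(1, 1, 1, 0, 1))              # 1.0    (Illustration X)
print(foil_gain(1, 11, 0, 3, 0))             # 0.0    (Illustration VII)
```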

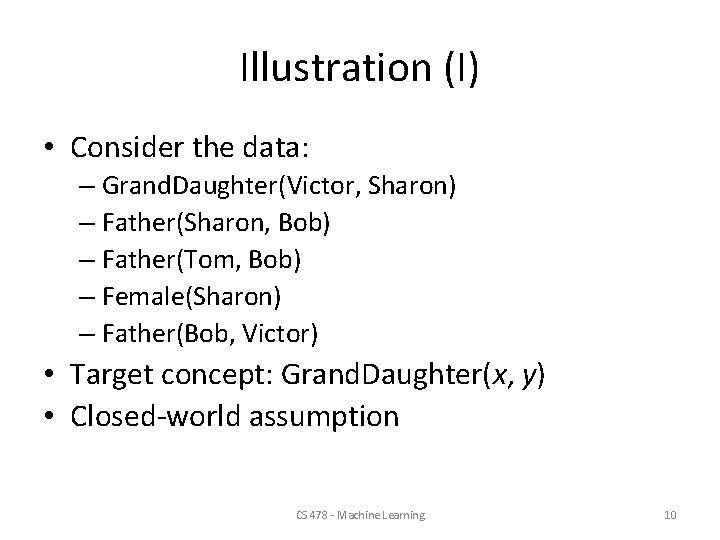

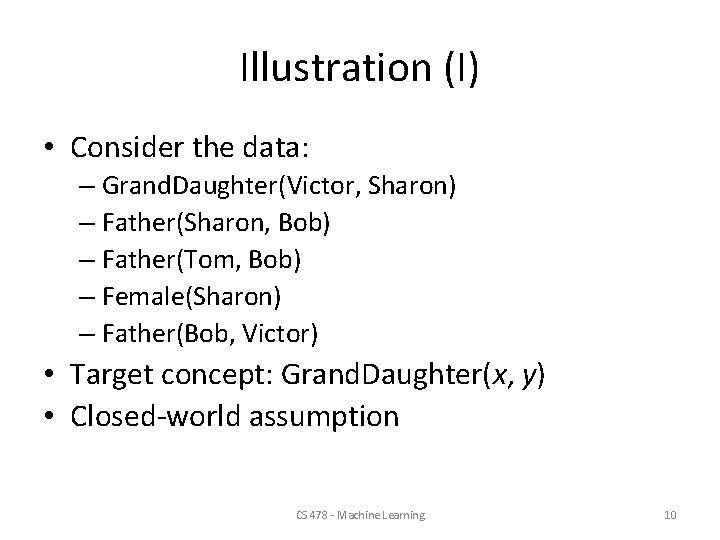

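Illustration (I)

- Consider the data:
  - GrandDaughter(Victor, Sharon)
  - Father(Sharon, Bob)
  - Father(Tom, Bob)
  - Female(Sharon)
  - Father(Bob, Victor)
- Here Father(x, y) means that the father of x is y, and GrandDaughter(x, y) means that y is a granddaughter of x
- Target concept: GrandDaughter(x, y)
- Closed-world assumption: any ground atom not listed above is false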

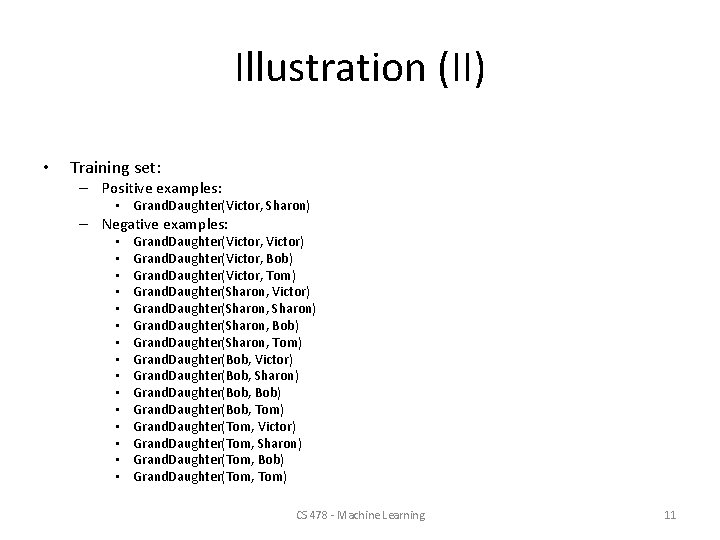

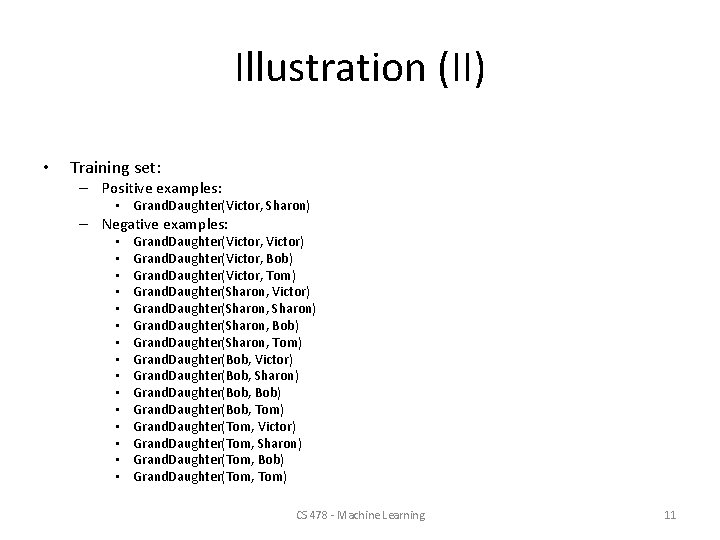

Illustration (II)

- Training set:
  - Positive examples:
    - GrandDaughter(Victor, Sharon)
  - Negative examples:
    - GrandDaughter(Victor, Victor), GrandDaughter(Victor, Bob), GrandDaughter(Victor, Tom)
    - GrandDaughter(Sharon, Victor), GrandDaughter(Sharon, Sharon), GrandDaughter(Sharon, Bob), GrandDaughter(Sharon, Tom)
    - GrandDaughter(Bob, Victor), GrandDaughter(Bob, Sharon), GrandDaughter(Bob, Bob), GrandDaughter(Bob, Tom)
    - GrandDaughter(Tom, Victor), GrandDaughter(Tom, Sharon), GrandDaughter(Tom, Bob), GrandDaughter(Tom, Tom)
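
Under the closed-world assumption, the negative examples are all remaining ground instances of the target predicate. Here is a Python sketch of that construction; the function name `training_set` is hypothetical.

```python
from itertools import product

CONSTANTS = ["Victor", "Sharon", "Bob", "Tom"]
KNOWN = {("GrandDaughter", ("Victor", "Sharon"))}

def training_set(target, arity, constants, known):
    """Closed world: a ground target atom is positive iff it is listed as known."""
    pos, neg = [], []
    for args in product(constants, repeat=arity):
        (pos if (target, args) in known else neg).append(args)
    return pos, neg

pos, neg = training_set("GrandDaughter", 2, CONSTANTS, KNOWN)
print(len(pos), len(neg))  # 1 15
```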

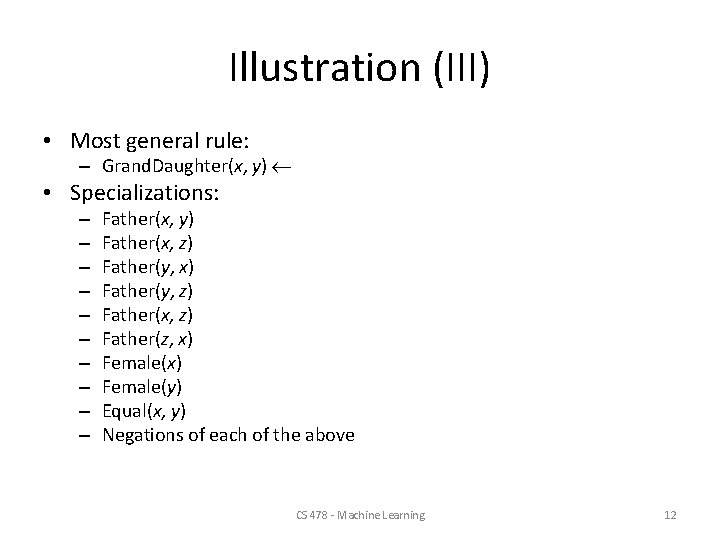

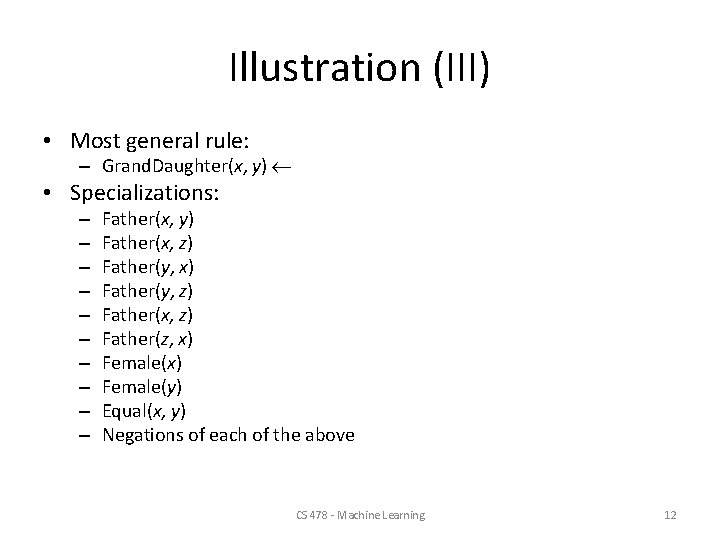

Illustration (III)

- Most general rule:
  - GrandDaughter(x, y) ←
- Specializations:
  - Father(x, y)
  - Father(x, z)
  - Father(y, x)
  - Father(y, z)
  - Father(z, y)
  - Father(z, x)
  - Female(y)
  - Equal(x, y)
  - Negations of each of the above

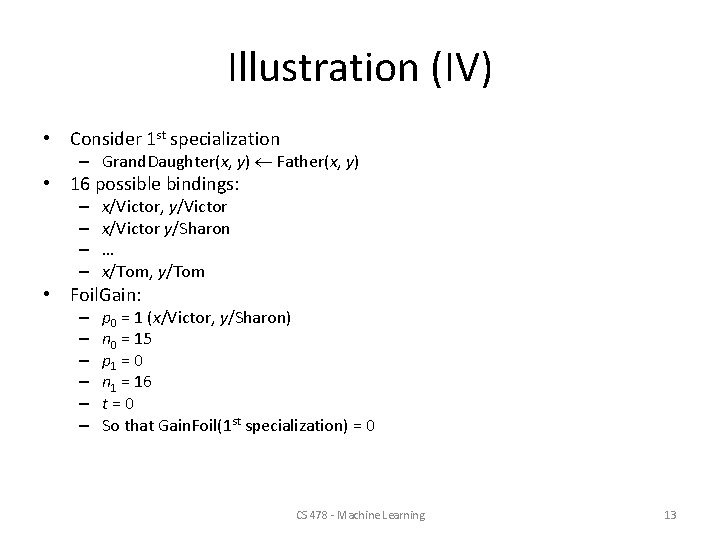

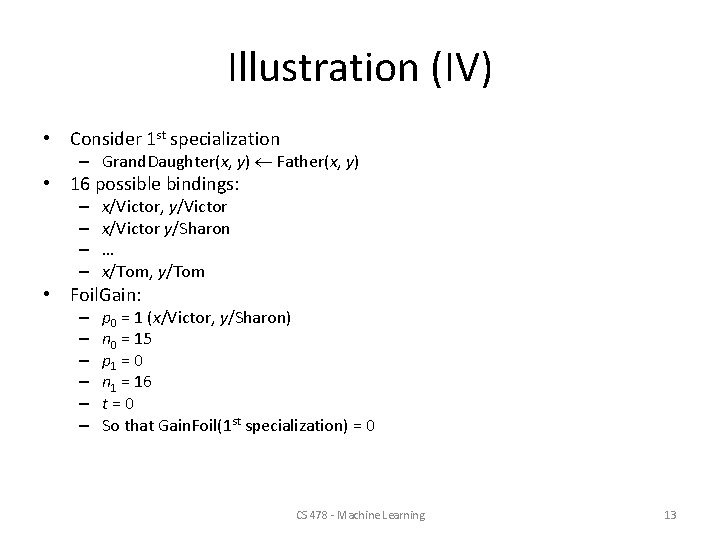

Illustration (IV)

- Consider the 1st specialization:
  - GrandDaughter(x, y) ← Father(x, y)
- 16 possible bindings:
  - x/Victor, y/Victor
  - x/Victor, y/Sharon
  - …
  - x/Tom, y/Tom
- FoilGain:
  - $p_0 = 1$ (x/Victor, y/Sharon)
  - $n_0 = 15$
  - $p_1 = 0$
  - $n_1 = 3$: (x/Sharon, y/Bob), (x/Tom, y/Bob), (x/Bob, y/Victor)
  - $t = 0$
  - So FoilGain(1st specialization) = 0

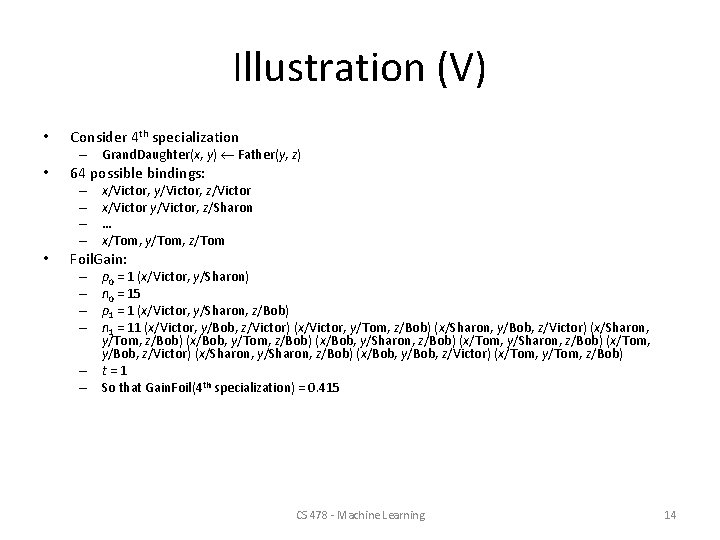

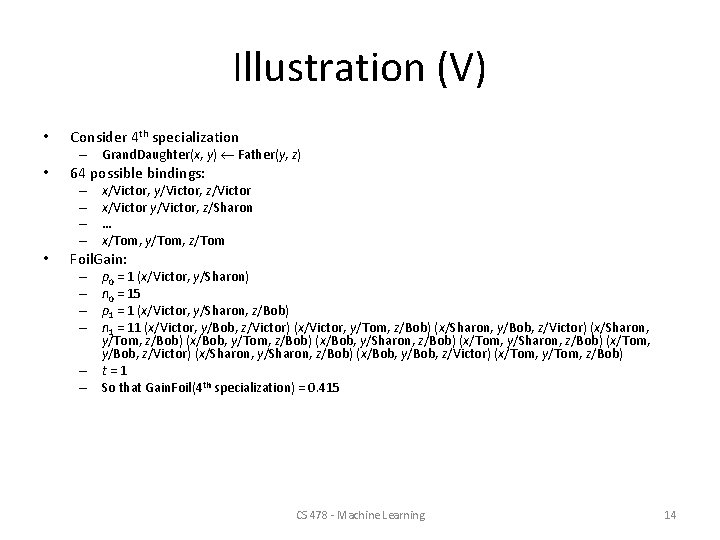

Illustration (V)

- Consider the 4th specialization:
  - GrandDaughter(x, y) ← Father(y, z)
- 64 possible bindings:
  - x/Victor, y/Victor, z/Victor
  - x/Victor, y/Victor, z/Sharon
  - …
  - x/Tom, y/Tom, z/Tom
- FoilGain:
  - $p_0 = 1$ (x/Victor, y/Sharon)
  - $n_0 = 15$
  - $p_1 = 1$ (x/Victor, y/Sharon, z/Bob)
  - $n_1 = 11$: (x/Victor, y/Bob, z/Victor), (x/Victor, y/Tom, z/Bob), (x/Sharon, y/Bob, z/Victor), (x/Sharon, y/Tom, z/Bob), (x/Bob, y/Sharon, z/Bob), (x/Tom, y/Bob, z/Victor), (x/Sharon, y/Sharon, z/Bob), (x/Bob, y/Bob, z/Victor), (x/Tom, y/Tom, z/Bob), (x/Bob, y/Tom, z/Bob), (x/Tom, y/Sharon, z/Bob)
  - $t = 1$
  - So FoilGain(4th specialization) = $1 \cdot \left( \log_2 \frac{1}{12} - \log_2 \frac{1}{16} \right) = \log_2 \frac{4}{3} \approx 0.415$
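
These binding counts can be reproduced by brute force. This Python sketch (the names are ours) assigns the constants to the rule's variables in every possible way and keeps the bindings that satisfy the body. It labels each binding by whether its (x, y) part is the positive example. Equal is treated as a built-in equality test rather than a stored fact.

```python
from itertools import product

CONSTANTS = ["Victor", "Sharon", "Bob", "Tom"]
FACTS = {
    ("Father", ("Sharon", "Bob")),
    ("Father", ("Tom", "Bob")),
    ("Father", ("Bob", "Victor")),
    ("Female", ("Sharon",)),
}
POSITIVE = {("Victor", "Sharon")}  # every other (x, y) pair is negative (closed world)

def holds(pred, args):
    """Equal is built in; any other literal must be a listed fact."""
    if pred == "Equal":
        return args[0] == args[1]
    return (pred, args) in FACTS

def count_bindings(body):
    """(positive, negative) bindings of GrandDaughter(x, y) <- body."""
    extra = sorted({a for _, args in body for a in args} - {"x", "y"})
    variables = ["x", "y"] + extra
    p = n = 0
    for values in product(CONSTANTS, repeat=len(variables)):
        b = dict(zip(variables, values))
        if all(holds(pred, tuple(b[a] for a in args)) for pred, args in body):
            if (b["x"], b["y"]) in POSITIVE:
                p += 1
            else:
                n += 1
    return p, n

print(count_bindings([]))                        # (1, 15)
print(count_bindings([("Father", ("y", "z"))]))  # (1, 11)
```

Passing longer bodies, such as `[("Father", ("y", "z")), ("Father", ("z", "x"))]`, gives the counts used in the later illustration slides.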

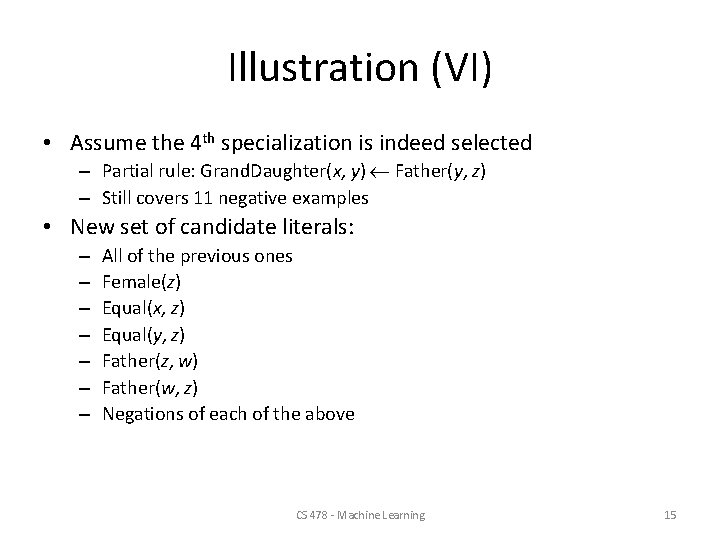

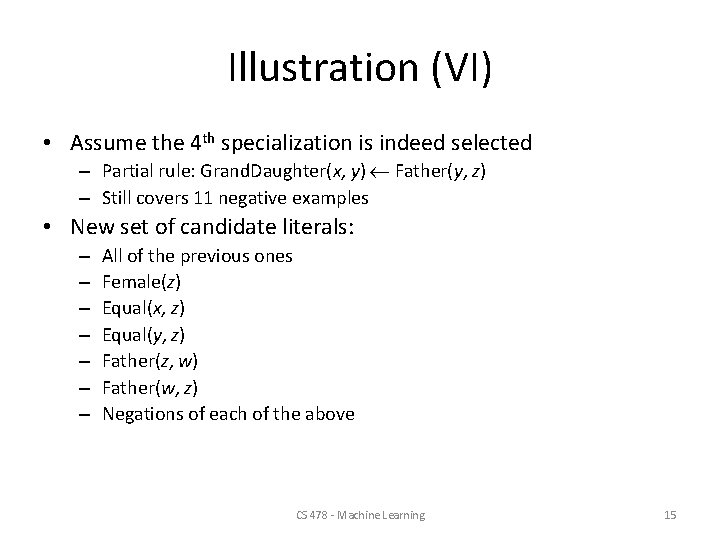

Illustration (VI)

- Assume the 4th specialization is indeed selected
  - Partial rule: GrandDaughter(x, y) ← Father(y, z)
  - Still covers 11 negative examples
- New set of candidate literals:
  - All of the previous ones
  - Female(z)
  - Equal(x, z), Equal(y, z)
  - Father(z, w), Father(w, z)
  - Negations of each of the above

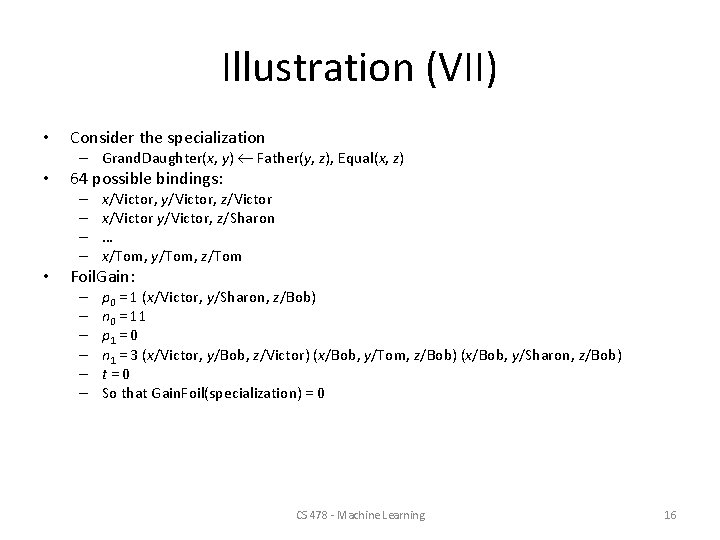

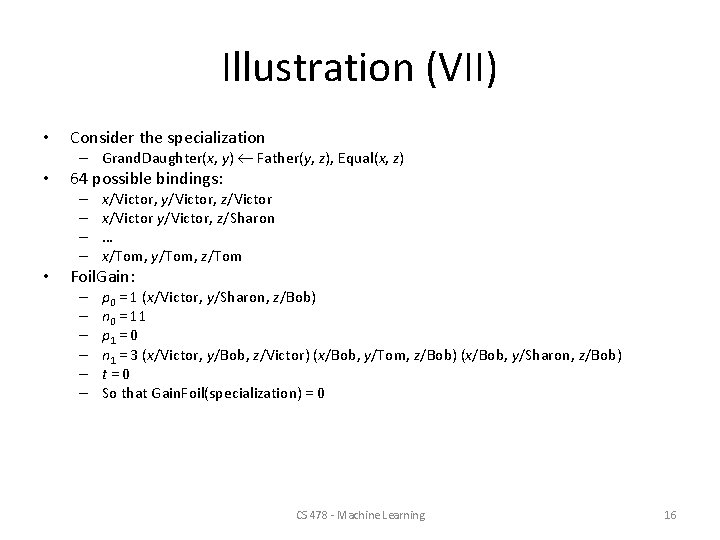

Illustration (VII)

- Consider the specialization:
  - GrandDaughter(x, y) ← Father(y, z), Equal(x, z)
- 64 possible bindings:
  - x/Victor, y/Victor, z/Victor
  - x/Victor, y/Victor, z/Sharon
  - …
  - x/Tom, y/Tom, z/Tom
- FoilGain:
  - $p_0 = 1$ (x/Victor, y/Sharon, z/Bob)
  - $n_0 = 11$
  - $p_1 = 0$
  - $n_1 = 3$: (x/Victor, y/Bob, z/Victor), (x/Bob, y/Tom, z/Bob), (x/Bob, y/Sharon, z/Bob)
  - $t = 0$
  - So FoilGain(specialization) = 0

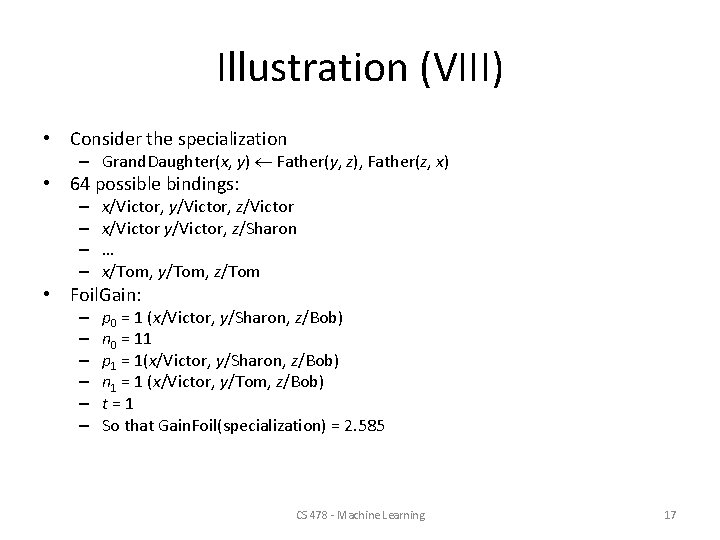

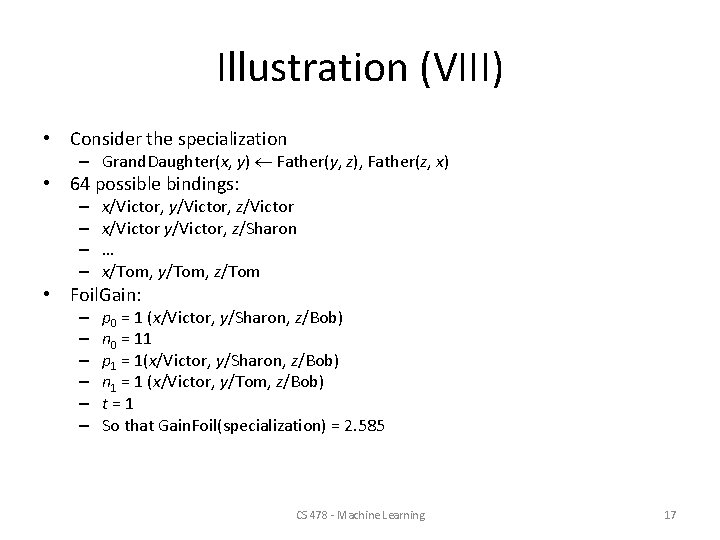

Illustration (VIII)

- Consider the specialization:
  - GrandDaughter(x, y) ← Father(y, z), Father(z, x)
- 64 possible bindings:
  - x/Victor, y/Victor, z/Victor
  - x/Victor, y/Victor, z/Sharon
  - …
  - x/Tom, y/Tom, z/Tom
- FoilGain:
  - $p_0 = 1$ (x/Victor, y/Sharon, z/Bob)
  - $n_0 = 11$
  - $p_1 = 1$ (x/Victor, y/Sharon, z/Bob)
  - $n_1 = 1$ (x/Victor, y/Tom, z/Bob)
  - $t = 1$
  - So FoilGain(specialization) = $1 \cdot \left( \log_2 \frac{1}{2} - \log_2 \frac{1}{12} \right) = \log_2 6 \approx 2.585$

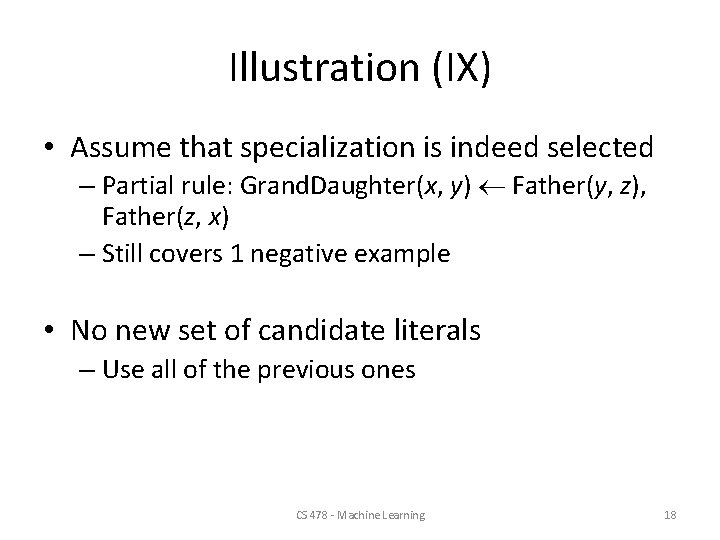

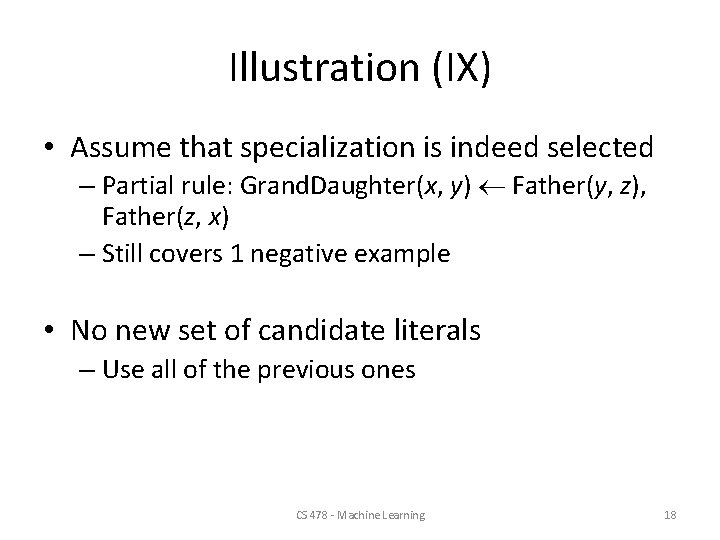

Illustration (IX)

- Assume that specialization is indeed selected
  - Partial rule: GrandDaughter(x, y) ← Father(y, z), Father(z, x)
  - Still covers 1 negative example
- No new candidate literals, since Father(z, x) introduces no new variable
  - Use all of the previous ones

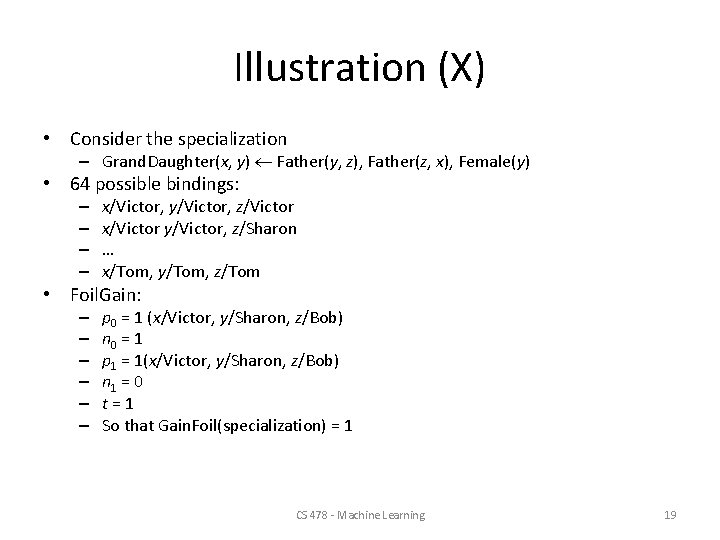

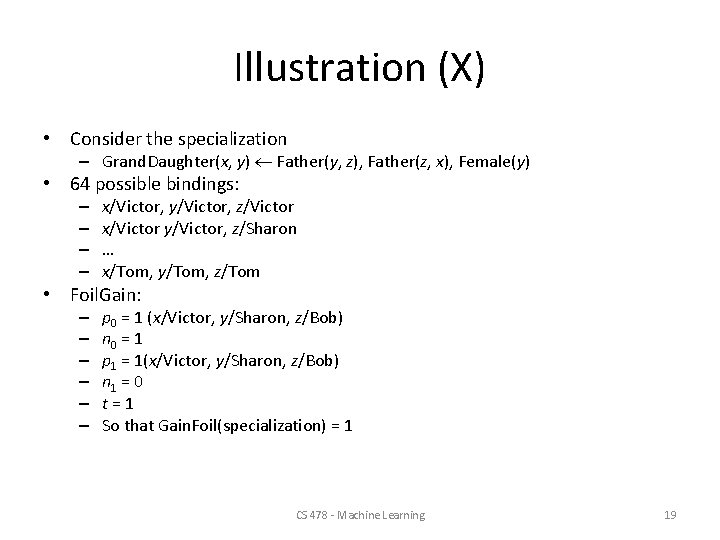

Illustration (X)

- Consider the specialization:
  - GrandDaughter(x, y) ← Father(y, z), Father(z, x), Female(y)
- 64 possible bindings:
  - x/Victor, y/Victor, z/Victor
  - x/Victor, y/Victor, z/Sharon
  - …
  - x/Tom, y/Tom, z/Tom
- FoilGain:
  - $p_0 = 1$ (x/Victor, y/Sharon, z/Bob)
  - $n_0 = 1$
  - $p_1 = 1$ (x/Victor, y/Sharon, z/Bob)
  - $n_1 = 0$
  - $t = 1$
  - So FoilGain(specialization) = $1 \cdot \left( \log_2 \frac{1}{1} - \log_2 \frac{1}{2} \right) = 1$

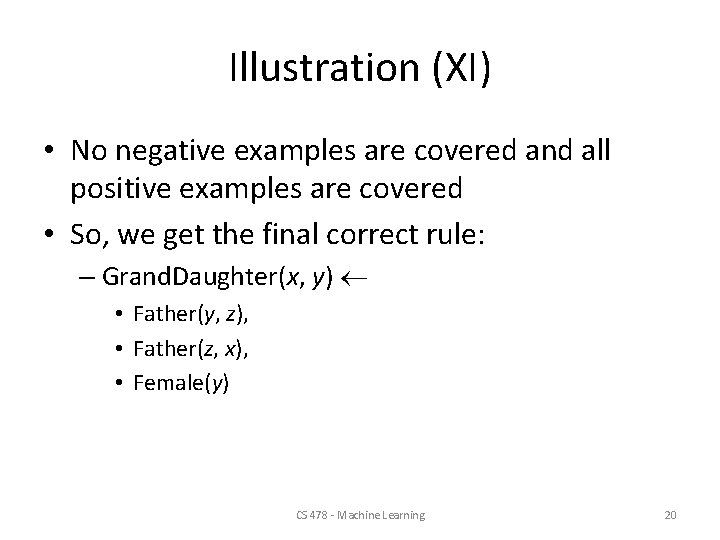

Illustration (XI)

- No negative examples are covered and all positive examples are covered
- So we get the final, correct rule:
  - GrandDaughter(x, y) ← Father(y, z), Father(z, x), Female(y)

Recursive Predicates

- If the target predicate is included in the list Predicates, then FOIL can learn recursive definitions such as:
  - Ancestor(x, y) ← Parent(x, y)
  - Ancestor(x, y) ← Parent(x, z), Ancestor(z, y)
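
To show what such a recursive definition computes, here is a Python sketch that evaluates the two Ancestor clauses bottom-up until nothing new is derived. The Parent facts are made up for illustration, with Parent(x, y) read as "x is a parent of y".

```python
def ancestor_closure(parent):
    """Least fixpoint of
        Ancestor(x, y) <- Parent(x, y)
        Ancestor(x, y) <- Parent(x, z), Ancestor(z, y)
    computed by naive bottom-up evaluation over sets of (x, y) pairs."""
    ancestor = set(parent)                       # first clause
    while True:
        derived = {(x, y) for (x, z) in parent   # second clause
                   for (z2, y) in ancestor if z == z2}
        if derived <= ancestor:                  # nothing new: fixpoint reached
            return ancestor
        ancestor |= derived

# Hypothetical Parent facts: Parent(x, y) means "x is a parent of y".
parent = {("Ann", "Bea"), ("Bea", "Cal"), ("Cal", "Dan")}
ancestors = ancestor_closure(parent)
print(len(ancestors), ("Ann", "Dan") in ancestors)  # 6 True
```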

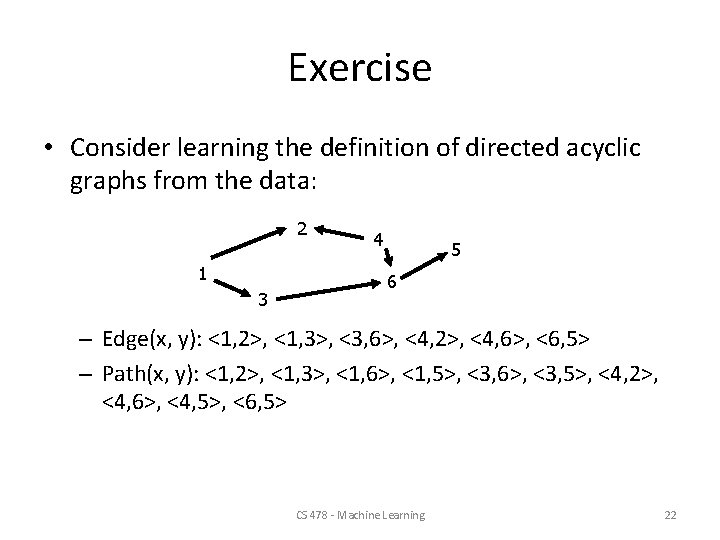

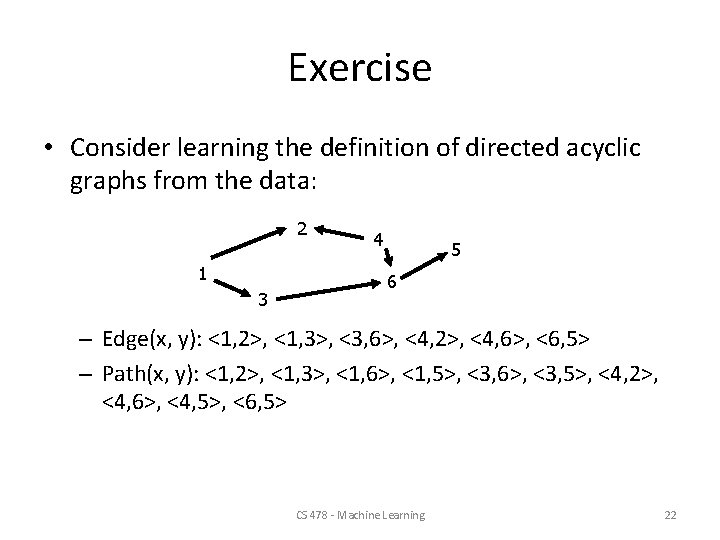

Exercise

- Consider learning a definition of paths in a directed acyclic graph from the following data (the slide's figure draws the graph on nodes 1 to 6):
  - Edge(x, y): <1, 2>, <1, 3>, <3, 6>, <4, 2>, <4, 6>, <6, 5>
  - Path(x, y): <1, 2>, <1, 3>, <1, 6>, <1, 5>, <3, 6>, <3, 5>, <4, 2>, <4, 6>, <4, 5>, <6, 5>
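
One way to set up the exercise is to treat Path as the target predicate and Edge as background knowledge. The Python sketch below only builds the training set under the closed-world assumption used in the illustration; it does not learn anything by itself.

```python
from itertools import product

NODES = range(1, 7)
EDGE = {(1, 2), (1, 3), (3, 6), (4, 2), (4, 6), (6, 5)}
PATH = {(1, 2), (1, 3), (1, 6), (1, 5), (3, 6), (3, 5),
        (4, 2), (4, 6), (4, 5), (6, 5)}

# Target: Path(x, y). Positives are the listed pairs; under the closed-world
# assumption every other ordered pair of nodes is a negative example.
positives = sorted(PATH)
negatives = [(x, y) for x, y in product(NODES, repeat=2) if (x, y) not in PATH]

print(len(positives), len(negatives))  # 10 26
print(EDGE <= PATH)                    # True: every edge is a path of length 1
```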

Going Further…

- What if the domain calls for richer structure and/or expressiveness?
  - In principle, we can always flatten the representation
  - BUT:
    - From a knowledge acquisition point of view, structure may be essential to induce good concepts
    - From a knowledge representation point of view, it seems desirable to capture physical structures in the data with corresponding abstract structures in its representation

Proposal

- Use a highly expressive representation language (based on higher-order logic):
  - Sets, multisets, graphs, etc.
  - Functions as well as predicates
  - In principle, arbitrary data structures
  - Functions/predicates as arguments
- Three algorithms:
  - Decision-tree learner
  - Rule-based learner
  - Strongly-typed evolutionary programming

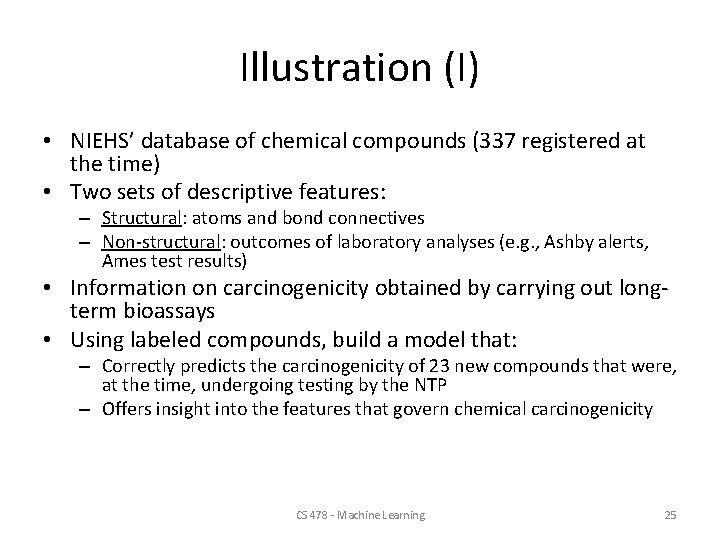

Illustration (I)

- The NIEHS database of chemical compounds (337 compounds registered at the time)
- Two sets of descriptive features:
  - Structural: atoms and their bond connectivity
  - Non-structural: outcomes of laboratory analyses (e.g., Ashby alerts, Ames test results)
- Information on carcinogenicity was obtained by carrying out long-term bioassays
- Using labeled compounds, build a model that:
  - Correctly predicts the carcinogenicity of 23 new compounds that were, at the time, undergoing testing by the NTP
  - Offers insight into the features that govern chemical carcinogenicity

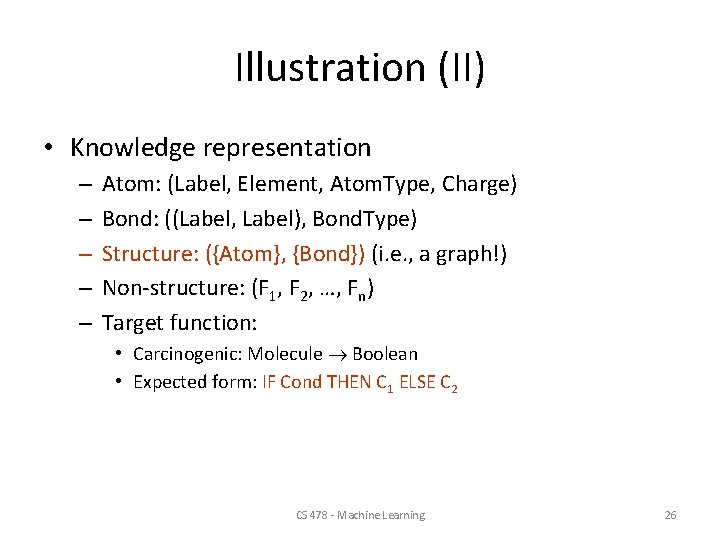

Illustration (II)

- Knowledge representation:
  - Atom: (Label, Element, AtomType, Charge)
  - Bond: ((Label, Label), BondType)
  - Structure: ({Atom}, {Bond}) (i.e., a graph!)
  - Non-structure: (F1, F2, …, Fn)
- Target function:
  - Carcinogenic: Molecule → Boolean
- Expected form: IF Cond THEN C1 ELSE C2
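
Here is a Python sketch of this typed representation using frozen dataclasses. The field types (string labels, integer atom and bond types, float charges) and the class names are assumptions for illustration, since the slides do not fix them. The example molecule's type codes are made up.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Tuple

@dataclass(frozen=True)
class Atom:
    label: str
    element: str
    atom_type: int
    charge: float

@dataclass(frozen=True)
class Bond:
    ends: Tuple[str, str]  # labels of the two bonded atoms
    bond_type: int

@dataclass(frozen=True)
class Molecule:
    atoms: FrozenSet[Atom]             # structure ({Atom}, {Bond}): a graph
    bonds: FrozenSet[Bond]
    features: Tuple[float, ...] = ()   # optional non-structural F1, ..., Fn

def if_then_else(cond: Callable[[Molecule], bool], c1: str, c2: str) -> Callable[[Molecule], str]:
    """A hypothesis of the expected form: IF Cond THEN C1 ELSE C2."""
    return lambda m: c1 if cond(m) else c2

def has_oxygen(m: Molecule) -> bool:
    return any(a.element == "O" for a in m.atoms)

toy = Molecule(
    atoms=frozenset({Atom("a1", "O", 0, -0.8), Atom("a2", "H", 0, 0.4), Atom("a3", "H", 0, 0.4)}),
    bonds=frozenset({Bond(("a1", "a2"), 1), Bond(("a1", "a3"), 1)}),
)
h = if_then_else(has_oxygen, "Inactive", "Active")
print(h(toy))  # Inactive
```

Frozen dataclasses are hashable, so atoms and bonds can live in sets. This mirrors the set-valued components of the representation.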

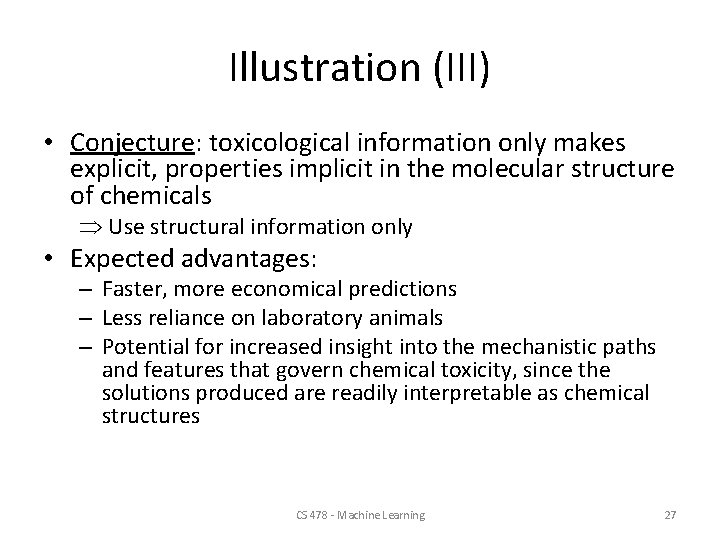

Illustration (III)

- Conjecture: toxicological information only makes explicit properties that are implicit in the molecular structure of chemicals
  - Hence, use structural information only
- Expected advantages:
  - Faster, more economical predictions
  - Less reliance on laboratory animals
  - Potential for increased insight into the mechanistic paths and features that govern chemical toxicity, since the solutions produced are readily interpretable as chemical structures

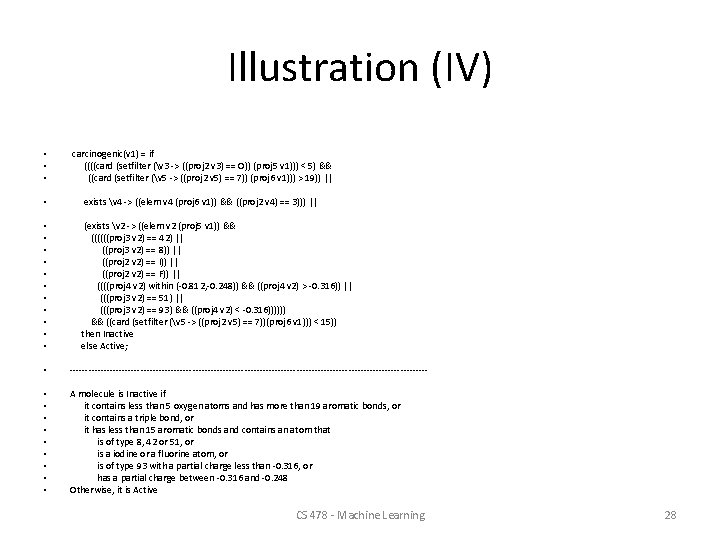

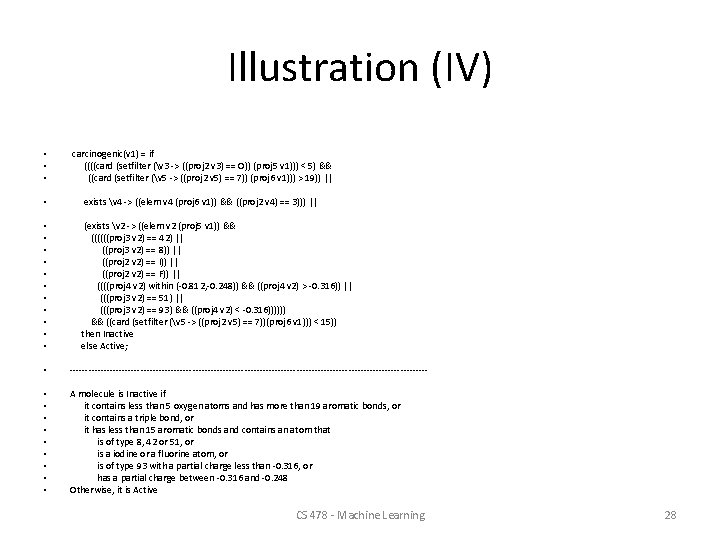

Illustration (IV)

carcinogenic(v1) = if ((((card (setfilter (v3 -> ((proj2 v3) == O)) (proj5 v1))) < 5) && ((card (setfilter (v5 -> ((proj2 v5) == 7)) (proj6 v1))) > 19)) ||
exists v4 -> ((elem v4 (proj6 v1)) && ((proj2 v4) == 3))) ||
(exists v2 -> ((elem v2 (proj5 v1)) && ((((((proj3 v2) == 42) || ((proj3 v2) == 8)) || ((proj2 v2) == I)) || ((proj2 v2) == F)) || ((((proj4 v2) within (-0.812, -0.248)) && ((proj4 v2) > -0.316)) || (((proj3 v2) == 51) || (((proj3 v2) == 93) && ((proj4 v2) < -0.316)))))) && ((card (setfilter (v5 -> ((proj2 v5) == 7)) (proj6 v1))) < 15)) then Inactive else Active;

In words: a molecule is Inactive if any of the following holds:

- It contains fewer than 5 oxygen atoms and has more than 19 aromatic bonds.
- It contains a triple bond.
- It has fewer than 15 aromatic bonds and contains an atom that either:
  - is of type 8, 42 or 51,
  - is an iodine or a fluorine atom,
  - is of type 93 with a partial charge less than -0.316, or
  - has a partial charge between -0.316 and -0.248.

Otherwise, it is Active.
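
The following Python transcription of the English reading makes it possible to try the rule on toy inputs. It makes these assumptions:

- Atoms are (label, element, atom_type, charge) tuples and bonds are ((label, label), bond_type) tuples, matching the proj indices in the learned program.
- Bond type 7 means aromatic and bond type 3 means triple, as in the English paraphrase.
- `within` is an open interval (our own assumption).

The atom type codes in the usage lines are arbitrary.

```python
def is_inactive(atoms, bonds):
    """Transcription of the learned rule (structural information only).

    atoms: iterable of (label, element, atom_type, charge) tuples
    bonds: iterable of ((label, label), bond_type) tuples
    """
    atoms, bonds = list(atoms), list(bonds)
    n_oxygen = sum(1 for _, element, _, _ in atoms if element == "O")
    n_aromatic = sum(1 for _, bond_type in bonds if bond_type == 7)

    def special(atom):
        _, element, atom_type, charge = atom
        return (atom_type in (8, 42, 51)
                or element in ("I", "F")
                or (atom_type == 93 and charge < -0.316)
                or -0.316 < charge < -0.248)  # open interval assumed

    return ((n_oxygen < 5 and n_aromatic > 19)
            or any(bond_type == 3 for _, bond_type in bonds)
            or (n_aromatic < 15 and any(special(a) for a in atoms)))

def classify(atoms, bonds):
    return "Inactive" if is_inactive(atoms, bonds) else "Active"

print(classify([], [(("a1", "a2"), 3)]))                       # Inactive (triple bond)
print(classify([("a1", "C", 22, 0.1)], [(("a1", "a2"), 1)]))   # Active
```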

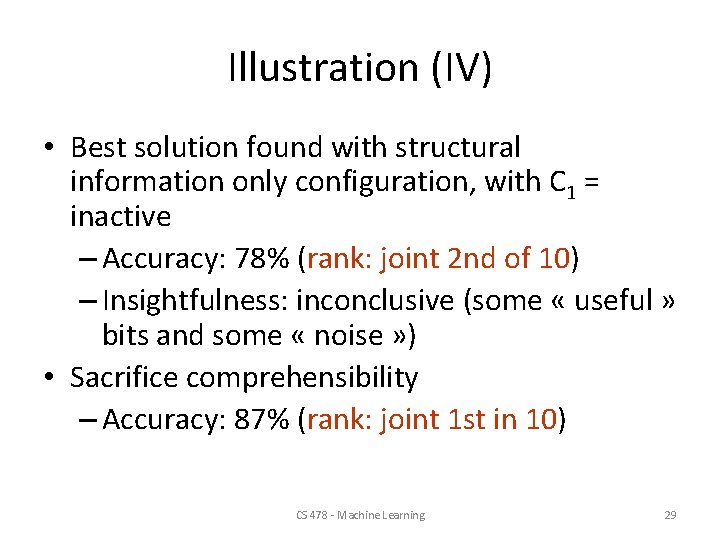

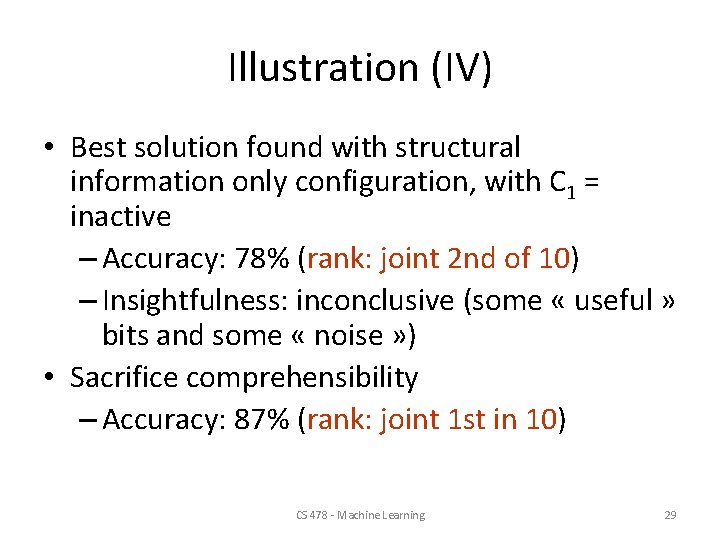

Illustration (V)

- Best solution found with the structural-information-only configuration, with C1 = Inactive:
  - Accuracy: 78% (rank: joint 2nd of 10)
  - Insightfulness: inconclusive (some "useful" bits and some "noise")
- Sacrificing comprehensibility:
  - Accuracy: 87% (rank: joint 1st of 10)