Evaluating TCP Congestion Control Doug Leith Hamilton Institute

- Slides: 34

Evaluating TCP Congestion Control. Doug Leith, Hamilton Institute, Ireland. Thanks: Robert Shorten, Yee Ting Lee, Baruch Even.

TCP (Transmission Control Protocol): reliable data transfer, congestion control.

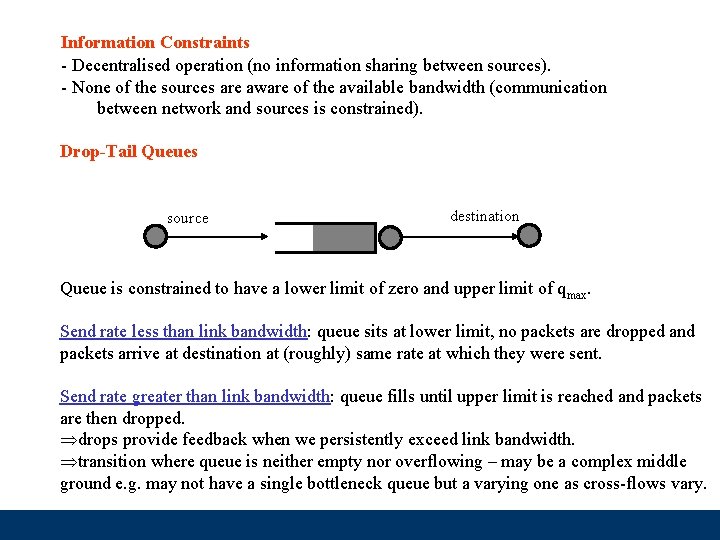

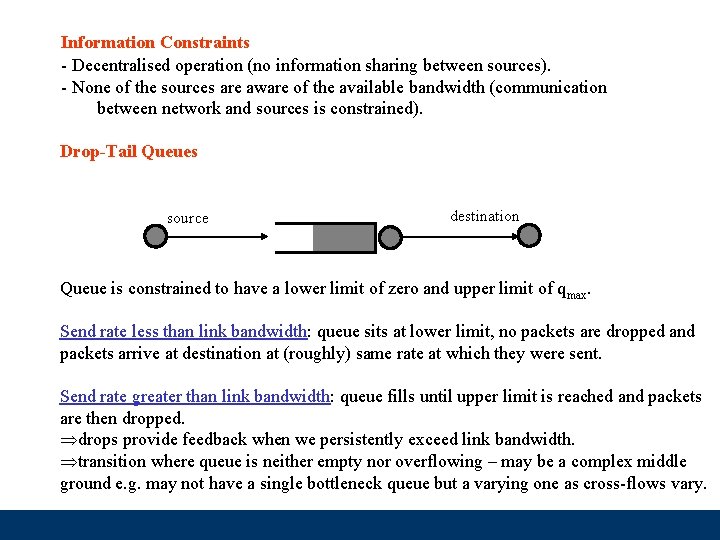

Information Constraints

- Decentralised operation (no information sharing between sources).
- None of the sources are aware of the available bandwidth (communication between network and sources is constrained).

Drop-Tail Queues

The queue between source and destination is constrained to have a lower limit of zero and an upper limit of qmax.

- Send rate less than link bandwidth: the queue sits at its lower limit, no packets are dropped, and packets arrive at the destination at (roughly) the same rate at which they were sent.
- Send rate greater than link bandwidth: the queue fills until the upper limit is reached and packets are then dropped.

⇒ Drops provide feedback when we persistently exceed link bandwidth.

⇒ The transition where the queue is neither empty nor overflowing may be a complex middle ground, e.g. there may not be a single bottleneck queue but a varying one as cross-flows vary.
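The two drop-tail regimes can be illustrated with a minimal fluid-style sketch. The function name `drop_tail`, the discrete time step and the numbers used are assumptions made for illustration; they are not taken from the slides.

```python
def drop_tail(send_rate, link_rate, qmax, steps, q=0.0):
    """Evolve a drop-tail queue for `steps` time units.

    Rates are in packets per time unit (an illustrative discretisation).
    Returns the final queue occupancy and the total packets dropped.
    """
    dropped = 0.0
    for _ in range(steps):
        q += send_rate - link_rate   # net arrivals in this time unit
        if q < 0.0:                  # lower limit: queue cannot go negative
            q = 0.0
        if q > qmax:                 # upper limit: the excess is dropped
            dropped += q - qmax
            q = float(qmax)
    return q, dropped


# Sending below the link rate: queue stays empty, nothing is dropped.
under = drop_tail(send_rate=90, link_rate=100, qmax=50, steps=100)

# Sending above the link rate: queue fills to qmax, then drops begin.
over = drop_tail(send_rate=110, link_rate=100, qmax=50, steps=10)
```

In the second call the source gets no feedback for the first five time units while the queue fills; drops only start once qmax is reached, which is the delayed feedback the slide describes.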

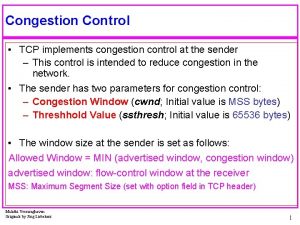

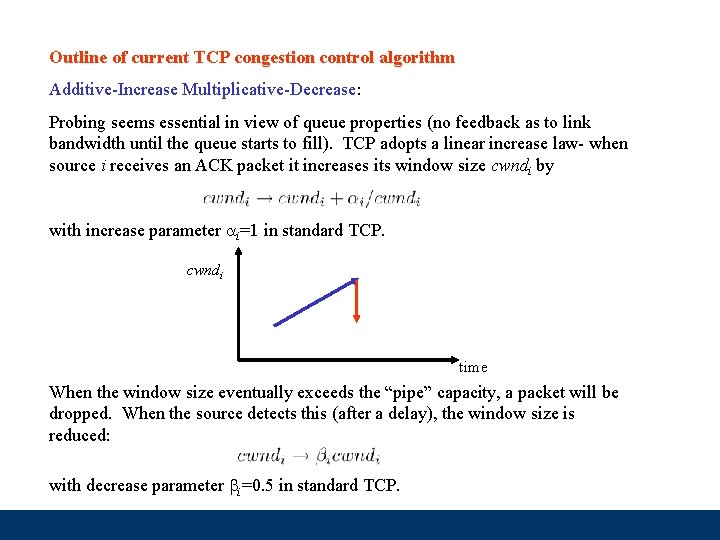

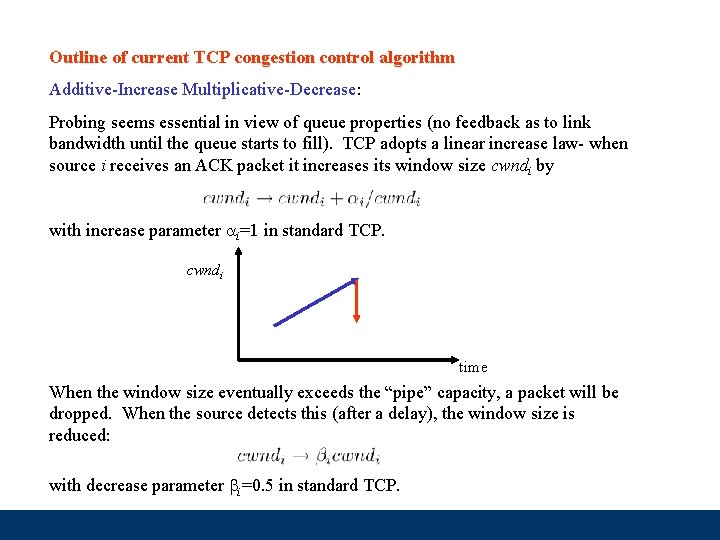

Outline of current TCP congestion control algorithm

Additive-Increase Multiplicative-Decrease (AIMD): Probing seems essential in view of queue properties (no feedback as to link bandwidth until the queue starts to fill). TCP adopts a linear increase law: when source $i$ receives an ACK packet it updates its window size $\mathrm{cwnd}_i$ according to

$$\mathrm{cwnd}_i \rightarrow \mathrm{cwnd}_i + \alpha_i / \mathrm{cwnd}_i$$

with increase parameter $\alpha_i = 1$ in standard TCP. (Figure: $\mathrm{cwnd}_i$ against time.)

When the window size eventually exceeds the "pipe" capacity, a packet will be dropped. When the source detects this (after a delay), the window size is reduced:

$$\mathrm{cwnd}_i \rightarrow \beta_i \, \mathrm{cwnd}_i$$

with decrease parameter $\beta_i = 0.5$ in standard TCP.
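A minimal per-RTT sketch of the AIMD sawtooth follows. It assumes one window of ACKs arrives per RTT, so the per-ACK increase of 1/cwnd adds up to roughly one packet per RTT, and it ignores slow-start, timeouts and detection delay. The names `aimd_step` and `simulate` and the pipe size of 100 packets are hypothetical.

```python
def aimd_step(cwnd, dropped, alpha=1.0, beta=0.5):
    """Advance the window by one RTT (window measured in packets)."""
    if dropped:
        return beta * cwnd      # multiplicative decrease on a drop
    # alpha/cwnd per ACK, about cwnd ACKs per RTT: roughly +alpha per RTT
    return cwnd + alpha


def simulate(pipe_size, rtts, cwnd=1.0):
    """Return the window trace, dropping whenever cwnd exceeds the pipe."""
    trace = []
    for _ in range(rtts):
        dropped = cwnd > pipe_size
        cwnd = aimd_step(cwnd, dropped)
        trace.append(cwnd)
    return trace


trace = simulate(pipe_size=100, rtts=400)
```

Under these assumptions the window climbs linearly to just above the pipe size, halves, and then oscillates between roughly half the pipe size and the pipe size.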

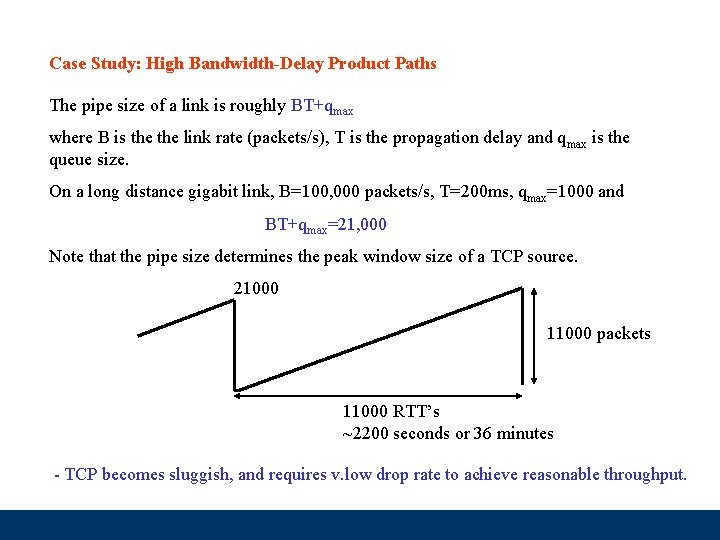

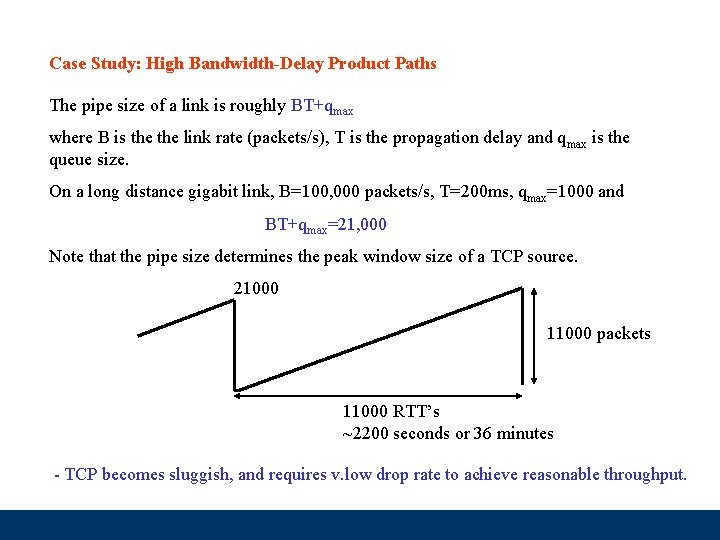

Case Study: High Bandwidth-Delay Product Paths

The pipe size of a link is roughly BT + qmax, where B is the link rate (packets/s), T is the propagation delay and qmax is the queue size. On a long distance gigabit link, B = 100,000 packets/s, T = 200 ms, qmax = 1000 and BT + qmax = 21,000 packets. Note that the pipe size determines the peak window size of a TCP source.

After a drop the window is halved from 21,000 to roughly 11,000 packets. Growing by one packet per RTT, it then takes roughly 11,000 RTTs, i.e. about 2200 seconds or 36 minutes, to return to the peak.

- TCP becomes sluggish, and requires a very low drop rate to achieve reasonable throughput.
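The arithmetic can be checked with a short sketch. It assumes the RTT equals the propagation delay T (queueing delay is ignored) and uses the standard TCP parameters of one packet per RTT increase and a backoff factor of 0.5. The function name `recovery_time` is hypothetical.

```python
def recovery_time(bandwidth_pps, prop_delay_s, qmax_pkts, alpha=1.0, beta=0.5):
    """Return (pipe size in packets, RTTs to recover, seconds to recover)."""
    pipe = bandwidth_pps * prop_delay_s + qmax_pkts   # BT + qmax
    rtts = (1.0 - beta) * pipe / alpha                # packets to regain / packets per RTT
    return pipe, rtts, rtts * prop_delay_s            # RTT approximated by T


pipe, rtts, seconds = recovery_time(100_000, 0.2, 1000)
minutes = seconds / 60.0
```

The exact figures from this sketch are 10,500 RTTs and 2100 seconds (35 minutes); the slide rounds the halved window up to 11,000 packets, which gives the quoted 2200 seconds.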

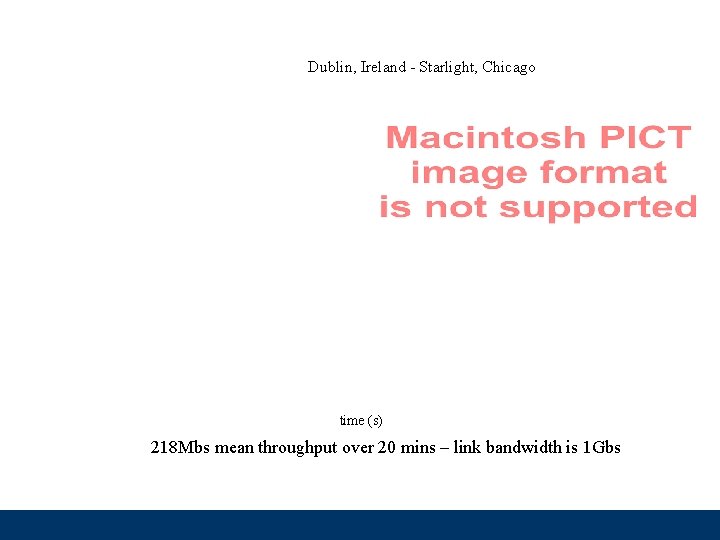

Figure: throughput against time (s), Dublin, Ireland to Starlight, Chicago. Mean throughput is 218 Mb/s over 20 minutes; link bandwidth is 1 Gb/s.

Case Study: High Bandwidth-Delay Product Paths

The problem is not confined to long distance networks: at gigabit speeds, a delay greater than 10 ms is enough to degrade performance.

Solutions?

Simply making the increase parameter α larger is inadmissible: on low-speed networks we require backward compatibility with current sources.

Large α in high-speed regimes and α = 1 in low-speed regimes suggests some sort of mode switch.

A Motivating Case Study … Scalable TCP

Scalable TCP has convergence issues …

A Motivating Case Study … Scalable TCP

What's going on here?

Scalable is MIMD (multiplicative-increase, multiplicative-decrease); this is easily shown. MIMD congestion control algorithms with drop-tail queueing do not converge to fairness in general.

D. Chiu and R. Jain, "Analysis of the Increase/Decrease Algorithms for Congestion Avoidance in Computer Networks," Journal of Computer Networks and ISDN, Vol. 17, No. 1, June 1989, pp. 1-14.

More precisely, MIMD algorithms do not converge to fairness when drop synchronisation occurs, and may converge arbitrarily slowly otherwise.

We can therefore dismiss MIMD algorithms as candidates for congestion control.
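The synchronised-drop argument can be illustrated with a small sketch that compares AIMD and MIMD for two flows sharing one bottleneck. It assumes a per-RTT model in which both flows back off whenever their combined window exceeds the capacity. The MIMD constants (1% growth per RTT, backoff factor 0.875) approximate Scalable TCP, and the function name `run`, the starting windows and the capacity are hypothetical.

```python
def run(increase, decrease, w1, w2, capacity, rtts):
    """Evolve two windows with fully synchronised drops; return final windows."""
    for _ in range(rtts):
        if w1 + w2 > capacity:
            # synchronised drop: both flows see the loss and back off
            w1, w2 = decrease(w1), decrease(w2)
        else:
            w1, w2 = increase(w1), increase(w2)
    return w1, w2


# AIMD: add one packet per RTT, halve on a drop.
aimd = run(lambda w: w + 1.0, lambda w: 0.5 * w, 10.0, 90.0, 200.0, 2000)

# MIMD: grow by 1% per RTT, multiply by 0.875 on a drop.
mimd = run(lambda w: 1.01 * w, lambda w: 0.875 * w, 10.0, 90.0, 200.0, 2000)

aimd_ratio = aimd[1] / aimd[0]
mimd_ratio = mimd[1] / mimd[0]
```

With AIMD the difference between the windows halves at every drop, so the ratio tends to 1. With MIMD both windows are always scaled by the same factor, so the initial 9:1 ratio never changes.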

Another Example … High-Speed-TCP

Example of two HS-TCP flows: the second flow experiences a drop early in slow-start, focussing attention on the responsiveness of the congestion avoidance algorithm. (NS simulation: 500 Mb/s bottleneck link, 100 ms delay, queue 500 packets.)

Two questions …

1. How can we measure the performance of proposed changes to TCP in a systematic and meaningful way (one that relates to issues of practical interest and supports fair comparisons)?
2. Live experimental testing is time consuming, difficult and expensive. Can we screen for known issues and gotchas at an early stage (e.g. via simulation or lab testing) prior to full scale experimental testing?

The questions are related, of course. Also, no screening or measurements can be exhaustive: we cannot prove the correctness of a protocol, but we can demonstrate incorrectness, and tests can improve confidence.

Two practical issues

- Need to control for different network stack implementations.
- Need to ensure that congestion control action is exercised.

Network stack implementation: Linux 2.6.6, 250 Mb/s, 200 ms RTT.

Network stack implementation

- O(loss) walk of the packets-in-flight linked list rather than an O(cwnd) walk
- SACK coalescing cf delayed acking
- Throttle disabled: netdev queue modified to be pure drop-tail

Patch available at ww. hamilton/ie/net/

Two practical issues

- Need to control for different network stack implementations.
- Need to ensure that congestion control action is exercised.

Congestion control action not exercised

Initial tests, CERN-Chicago. Bottleneck in the NIC and with web100: throughput maxes out regardless of the congestion avoidance algorithm used.

Putative Performance Measures

Most of the issues with existing TCP proposals have been associated with the behaviour of competing flows. Suggest using the behaviour of standard TCP as a baseline against which to compare the performance of new proposals.

Focus on long-lived flows initially. This suggests consideration of the following characteristics:

- Fairness (between like flows)
- Friendliness (with legacy TCP)
- Efficiency (use of available network capacity)
- Responsiveness (how rapidly the network responds to changes in network conditions, e.g. flows starting/stopping)

Putative Performance Measures (cont)

Important not to focus on a single network condition.

- We know that current TCP behaviour depends on bandwidth, RTT, queue size, number of users etc. We therefore expect to have to measure the performance of proposed changes over a range of conditions also.

Suggest taking measurements for a grid of data points …

- we consider bandwidths of 10 Mb/s, 100 Mb/s and 250 Mb/s
- two-way propagation delays of 16 ms - 324 ms
- range of queue sizes from 5% - 100% BDP.
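A grid of this kind can be enumerated with a short sketch that also converts each queue size into packets. The bandwidths and the end points of the delay and queue ranges come from the slide. The intermediate delays (42 ms and 162 ms), the 20% queue fraction and the 1500-byte packet size are illustrative assumptions, and the names `bdp_packets` and `grid` are hypothetical.

```python
from itertools import product

BANDWIDTHS_MBPS = [10, 100, 250]      # from the slide
RTTS_MS = [16, 42, 162, 324]          # 16 and 324 from the slide; others assumed
QUEUE_FRACTIONS = [0.05, 0.2, 1.0]    # 5% and 100% from the slide; 20% assumed
PACKET_BYTES = 1500                   # assumed packet size


def bdp_packets(bandwidth_mbps, rtt_ms, packet_bytes=PACKET_BYTES):
    """Bandwidth-delay product expressed in packets."""
    bits_in_flight = bandwidth_mbps * 1e6 * rtt_ms / 1e3
    return bits_in_flight / (8 * packet_bytes)


# One test condition per (bandwidth, RTT, queue fraction) combination.
grid = [
    {
        "bandwidth_mbps": bw,
        "rtt_ms": rtt,
        "queue_packets": frac * bdp_packets(bw, rtt),
    }
    for bw, rtt, frac in product(BANDWIDTHS_MBPS, RTTS_MS, QUEUE_FRACTIONS)
]
```

Expressing the queue as a fraction of the BDP keeps the conditions comparable across bandwidths and delays, since the same fraction means a very different number of packets at 10 Mb/s and 16 ms than at 250 Mb/s and 324 ms.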

Putative Performance Measures (cont)

- Rather than defining a single metric (problematic to say the least), suggest using measurements of current TCP as a baseline against which to make comparisons.

1. Symmetric conditions: flows use the same congestion control algorithm, have the same RTT and share a common network bottleneck.

- Fairness should be largely insensitive to bandwidth, number of users and queue size.
- Competing flows with the same RTT should have the same long-term throughput.

Symmetric conditions (2 flows): Fairness

"Scalable, FAST have unfairness issues"

- Common network stack implementation used
- Averages over 5 test runs
- Queue 20% BDP

Symmetric conditions (2 flows): Fairness. Figure panels: 250 Mb/s, 42 ms RTT; 250 Mb/s, 162 ms RTT.

Figure panels: 250 Mb/s, 42 ms RTT; 250 Mb/s, 162 ms RTT; 250 Mb/s, 324 ms RTT.

RTT Unfairness

- Competing flows with different RTTs may be unfair.
- Unfairness is no worse than throughputs being roughly proportional to $1/\mathrm{RTT}^2$ (cwnd proportional to $1/\mathrm{RTT}$).
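To give a sense of scale for this bound, the sketch below computes the throughput ratio it implies for two flows. It assumes throughput is cwnd/RTT, so that cwnd proportional to 1/RTT gives throughput proportional to 1/RTT². The function name `throughput_ratio_bound` and the choice of 42 ms and 162 ms as the two RTTs are illustrative.

```python
def throughput_ratio_bound(rtt_short_ms, rtt_long_ms):
    """Ratio of short-RTT to long-RTT throughput when throughput ~ 1/RTT^2."""
    return (rtt_long_ms / rtt_short_ms) ** 2


# A 42 ms flow competing with a 162 ms flow.
ratio = throughput_ratio_bound(42, 162)
```

Under this assumption the shorter-RTT flow may obtain between 14 and 15 times the throughput of the longer-RTT flow, which is the baseline against which the RTT unfairness of new proposals is judged.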

RTT Unfairness

"Scalable is v. RTT unfair at high-speeds"

"FAST, BIC, HS-TCP similarly"

Summary

- Proposed use of standard TCP as a baseline for evaluating performance.
- Demonstrated that even simple tests can be surprisingly revealing. This suggests that some screening is indeed worthwhile.
- Careful experiment design is important, however, e.g. controlling for network stack implementation.

Leith hamilton

Leith hamilton In2140

In2140 Tcp congestion control

Tcp congestion control New reno tcp

New reno tcp Tcp segment len

Tcp segment len Pincuegula

Pincuegula General principles of congestion control

General principles of congestion control Network provisioning in congestion control

Network provisioning in congestion control Principles of congestion control

Principles of congestion control General principles of congestion control

General principles of congestion control Congestion control in virtual circuit

Congestion control in virtual circuit Udp congestion control

Udp congestion control Principles of congestion control

Principles of congestion control Congestion control in network layer

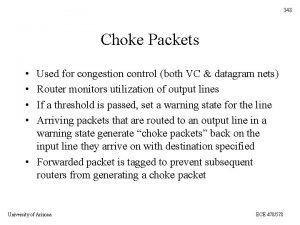

Congestion control in network layer Packet choke

Packet choke General principles of congestion control

General principles of congestion control Karns algorithm

Karns algorithm Tcp flow control

Tcp flow control Cpe 426

Cpe 426 Tcp flow control

Tcp flow control Tcp flow control diagram

Tcp flow control diagram Error control in tcp

Error control in tcp Tcp (transmission control protocol) to protokół

Tcp (transmission control protocol) to protokół Tcp sliding window

Tcp sliding window Tcp flow control sliding window

Tcp flow control sliding window Tcp sliding window mechanism

Tcp sliding window mechanism Network congestion causes

Network congestion causes Cause and effect introduction

Cause and effect introduction Conclusion of traffic congestion

Conclusion of traffic congestion Cvc lung

Cvc lung Eneritis

Eneritis Pathology

Pathology Essential oils for pelvic congestion syndrome

Essential oils for pelvic congestion syndrome Heart failure cells are seen in lungs

Heart failure cells are seen in lungs Congestion

Congestion