TCP Congestion Control


- Slides: 28


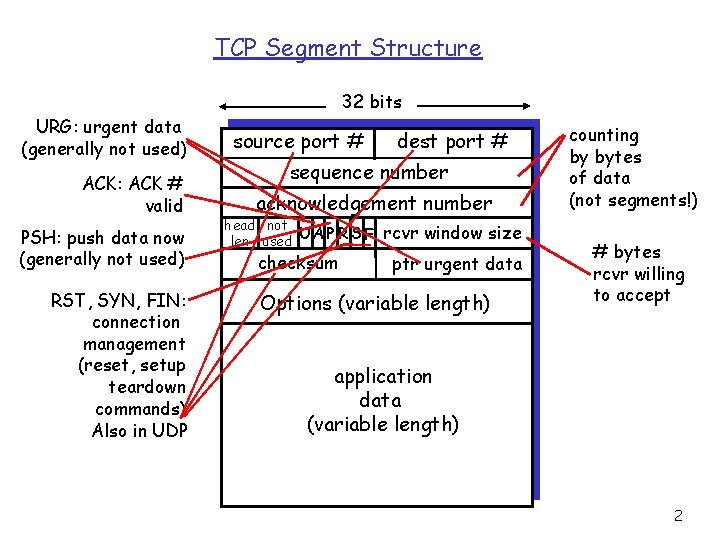

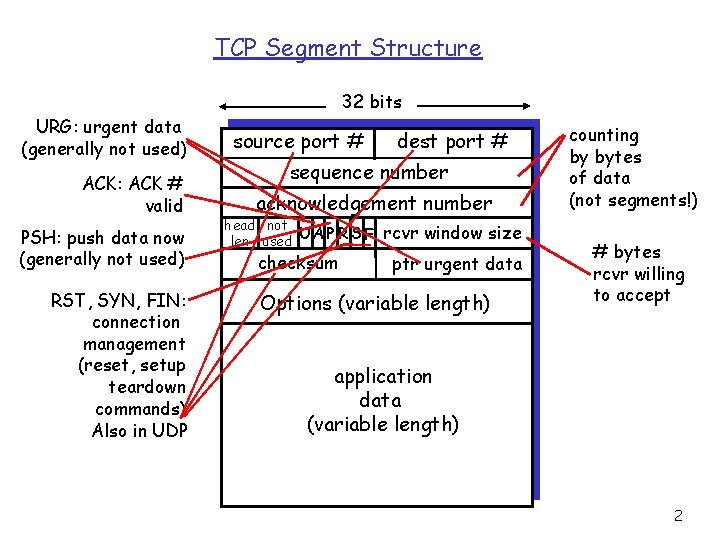

TCP Segment Structure

Header fields, laid out in 32-bit rows:

- source port # | dest port # (also in UDP)
- sequence number
- acknowledgement number
- head len | not used | U A P R S F | rcvr window size
- checksum | ptr urgent data
- Options (variable length)
- application data (variable length)

Annotations:

- URG: urgent data (generally not used)
- ACK: ACK # valid
- PSH: push data now (generally not used)
- RST, SYN, FIN: connection management (reset, setup, teardown commands)
- sequence and acknowledgement numbers: counting by bytes of data (not segments!)
- rcvr window size: # bytes rcvr willing to accept

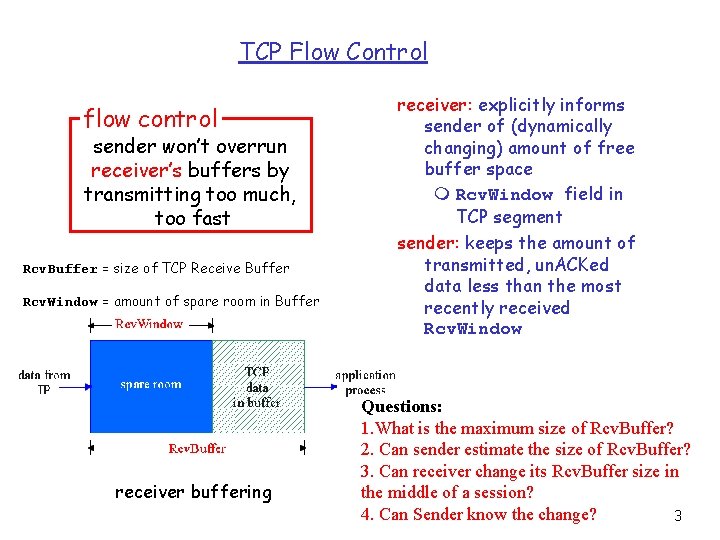

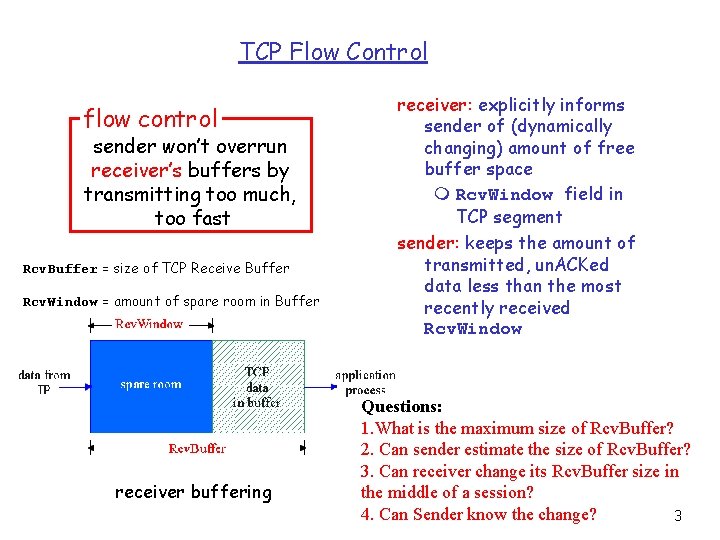

TCP Flow Control

Flow control: the sender won't overrun the receiver's buffers by transmitting too much, too fast.

- RcvBuffer = size of the TCP receive buffer
- RcvWindow = amount of spare room in the buffer
- receiver: explicitly informs the sender of the (dynamically changing) amount of free buffer space
  - RcvWindow field in the TCP segment
- sender: keeps the amount of transmitted, unACKed data less than the most recently received RcvWindow

Questions:

1. What is the maximum size of RcvBuffer?
2. Can the sender estimate the size of RcvBuffer?
3. Can the receiver change its RcvBuffer size in the middle of a session?
4. Can the sender know about the change?
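The sender-side rule on this slide can be sketched as a small check. This is a minimal illustration under stated assumptions, not code from any TCP stack: the function name `bytes_allowed` and its parameter names are hypothetical, and the sketch lets in-flight data grow up to (and including) the advertised window.

```python
def bytes_allowed(last_byte_sent: int, last_byte_acked: int, rcv_window: int) -> int:
    """Return how many more bytes the sender may transmit right now.

    All names are hypothetical; rcv_window is the most recently
    received RcvWindow value, in bytes.
    """
    # Data that has been transmitted but not yet ACKed.
    in_flight = last_byte_sent - last_byte_acked
    # Never report a negative allowance if the window has shrunk.
    return max(0, rcv_window - in_flight)


# 2000 bytes are in flight against a 4096-byte window.
print(bytes_allowed(last_byte_sent=5000, last_byte_acked=3000, rcv_window=4096))
```

When the function returns 0, the sender has to wait for an ACK (or a larger advertised window) before sending more data.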

Outline

- Principle of congestion control (current topic)
- TCP/Reno congestion control

Principles of Congestion Control

Big picture:

- How to determine a flow's sending rate?

Congestion:

- informally: "too many sources sending too much data too fast for the network to handle"
- different from flow control!
- manifestations:
  - lost packets (buffer overflow at routers)
  - wasted bandwidth
  - long delays (queueing in router buffers)
- a top-10 problem!

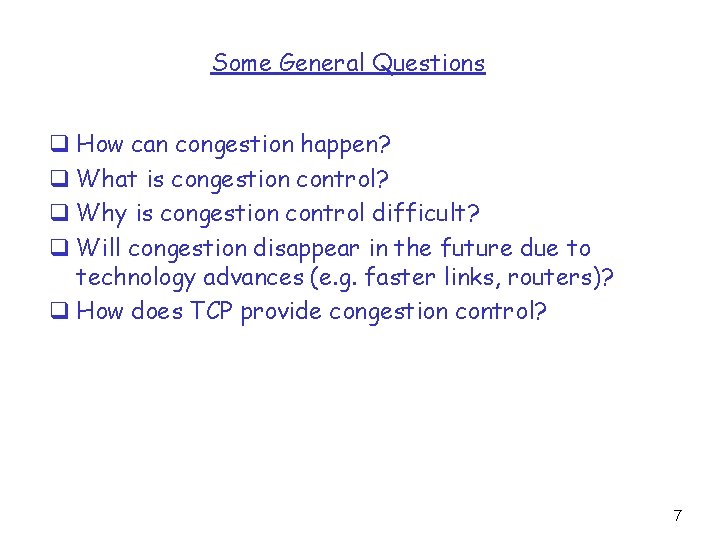

History

- TCP congestion control in the mid-1980s
  - fixed window size w
  - timeout value = 2 RTT
- Congestion collapse in the mid-1980s
  - throughput between UCB and LBL dropped by 1000X!

Some General Questions

- How can congestion happen?
- What is congestion control?
- Why is congestion control difficult?
- Will congestion disappear in the future due to technology advances (e.g. faster links, routers)?
- How does TCP provide congestion control?

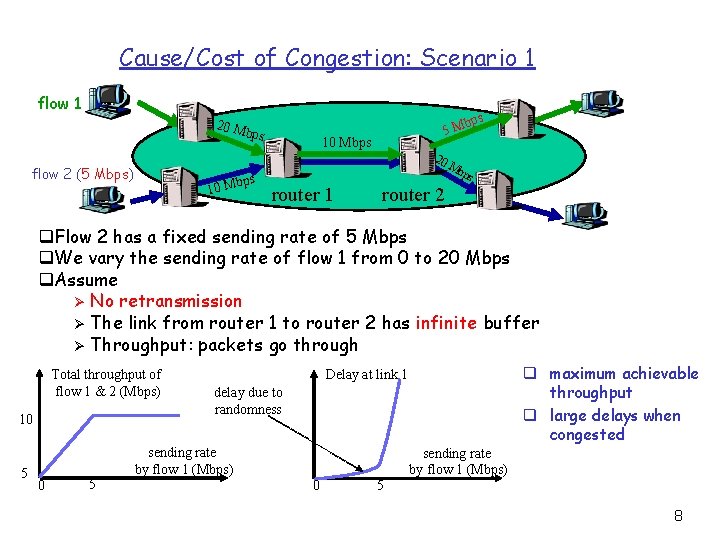

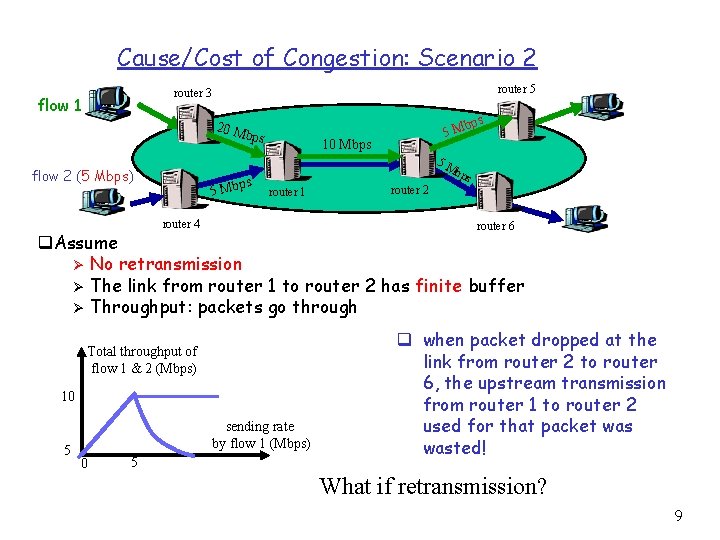

Cause/Cost of Congestion: Scenario 1

[Figure: topology with flow 1, flow 2 (5 Mbps), router 1, and router 2; link labels of 5, 10, and 20 Mbps]

- Flow 2 has a fixed sending rate of 5 Mbps
- We vary the sending rate of flow 1 from 0 to 20 Mbps
- Assume:
  - No retransmission
  - The link from router 1 to router 2 has infinite buffer
  - Throughput: packets that go through

[Plot: total throughput of flow 1 & 2 (Mbps) vs. sending rate by flow 1 (Mbps); throughput axis marked at 5 and 10]

- maximum achievable throughput
- large delays when congested

[Plot: delay at link 1 vs. sending rate by flow 1 (Mbps); annotation: delay due to randomness]
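The shape of the throughput curve in this scenario can be reproduced with a one-line model. This is only a sketch under assumptions that are not stated explicitly in the slide text: the shared link from router 1 to router 2 is taken to carry at most 10 Mbps (the value at which the throughput axis tops out), and the function name `total_throughput_mbps` is hypothetical. With an infinite buffer and no retransmission, nothing is lost, so the link delivers whatever is offered up to its capacity.

```python
def total_throughput_mbps(flow1_rate: float,
                          flow2_rate: float = 5.0,
                          link_capacity: float = 10.0) -> float:
    """Total throughput of both flows over the shared link, in Mbps.

    link_capacity = 10.0 is an assumption read off the plot axis.
    """
    offered_load = flow1_rate + flow2_rate
    # The link cannot deliver more than its capacity; excess traffic queues.
    return min(offered_load, link_capacity)


for rate in (0, 5, 10, 20):
    print(f"flow 1 at {rate} Mbps -> total {total_throughput_mbps(rate)} Mbps")
```

Beyond the point where the offered load reaches the assumed capacity, extra sending rate adds no throughput; it only lengthens the queue, which is the "large delays when congested" cost noted on the slide.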

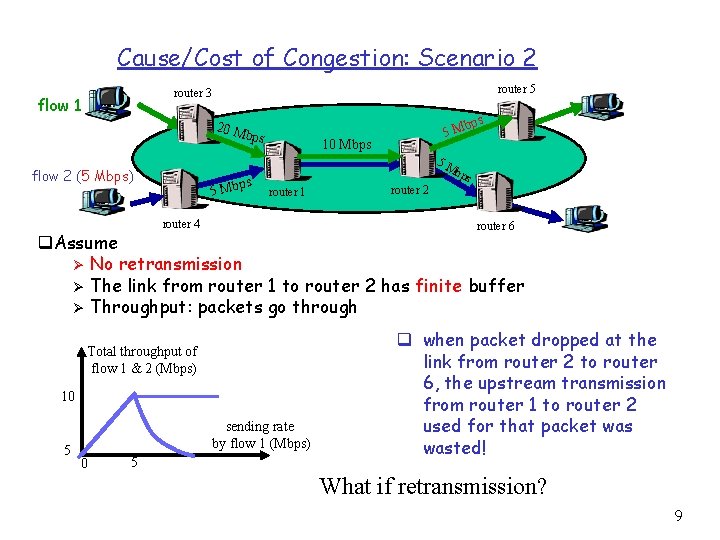

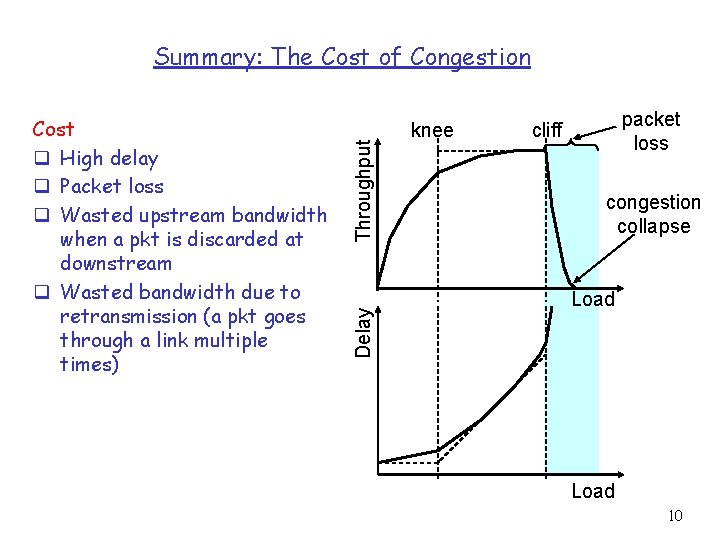

Cause/Cost of Congestion: Scenario 2

[Figure: topology with routers 1 to 6, flow 1, and flow 2 (5 Mbps); link labels of 5, 10, and 20 Mbps]

- Assume:
  - No retransmission
  - The link from router 1 to router 2 has finite buffer
  - Throughput: packets that go through

[Plot: total throughput of flow 1 & 2 (Mbps) vs. sending rate by flow 1 (Mbps)]

- When a packet is dropped at the link from router 2 to router 6, the upstream transmission from router 1 to router 2 used for that packet is wasted!

What if retransmission?

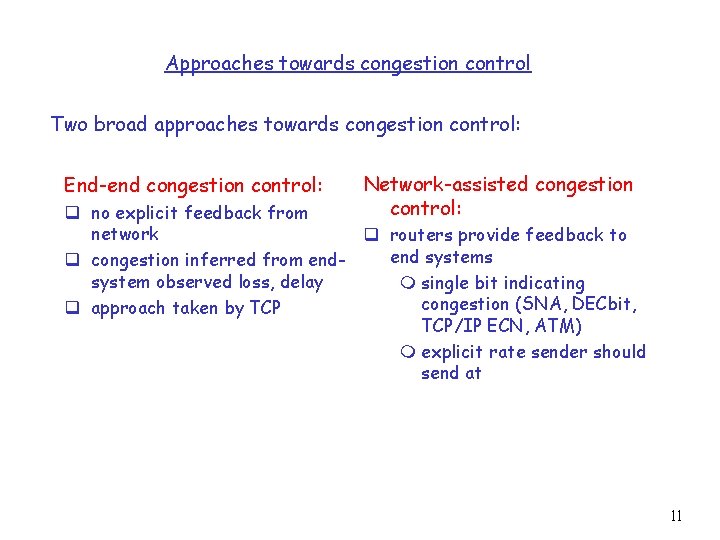

Summary: The Cost of Congestion

Cost:

- High delay
- Packet loss
- Wasted upstream bandwidth when a pkt is discarded downstream
- Wasted bandwidth due to retransmission (a pkt goes through a link multiple times)

[Figure: throughput and delay plotted against load; labels: knee, cliff, packet loss, congestion collapse]

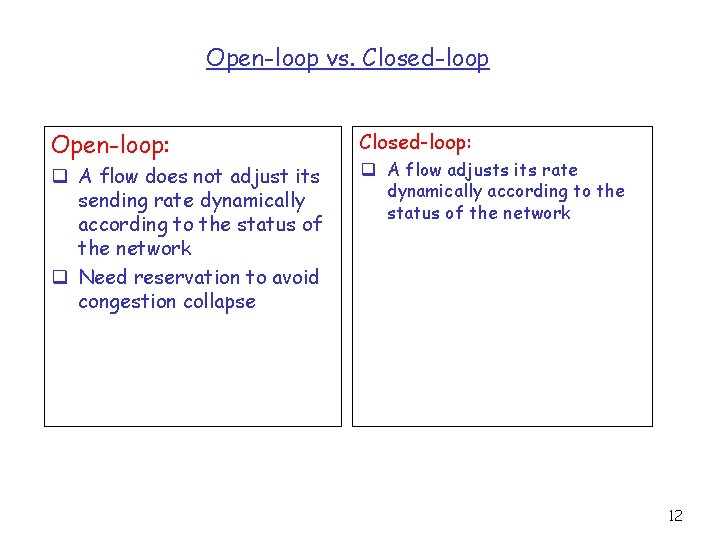

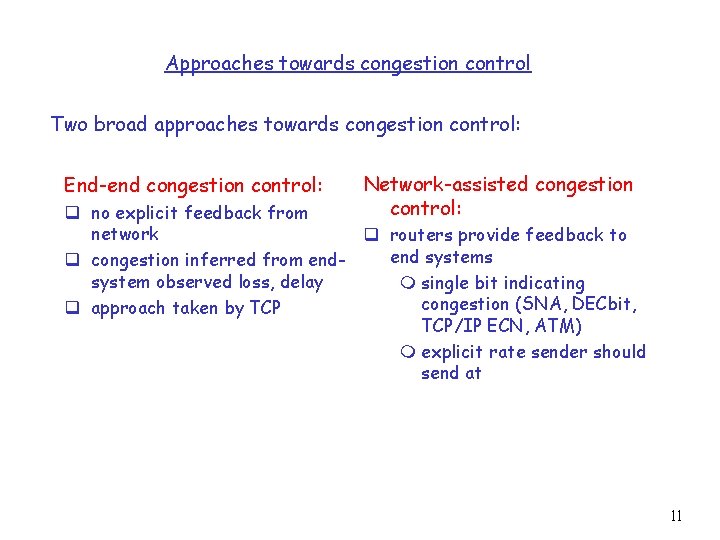

Approaches towards congestion control

Two broad approaches towards congestion control:

End-end congestion control:

- no explicit feedback from network
- congestion inferred from end-system observed loss, delay
- approach taken by TCP

Network-assisted congestion control:

- routers provide feedback to end systems
  - single bit indicating congestion (SNA, DECbit, TCP/IP ECN, ATM)
  - explicit rate sender should send at

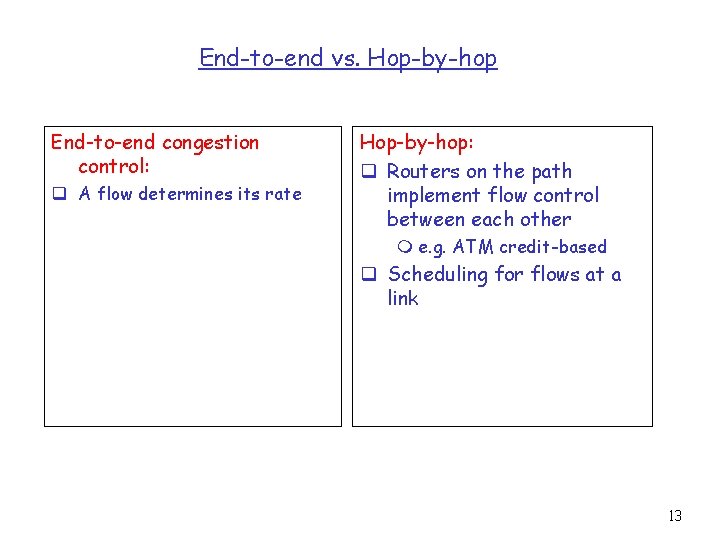

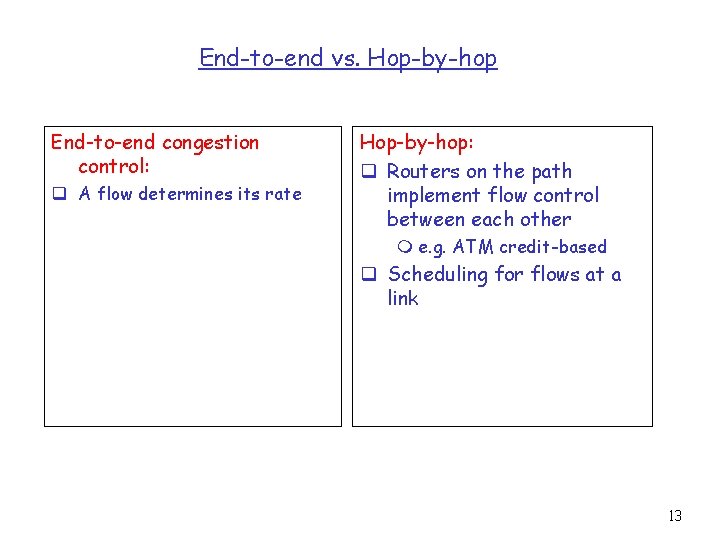

Open-loop vs. Closed-loop

Open-loop:

- A flow does not adjust its sending rate dynamically according to the status of the network
- Need reservation to avoid congestion collapse

Closed-loop:

- A flow adjusts its rate dynamically according to the status of the network

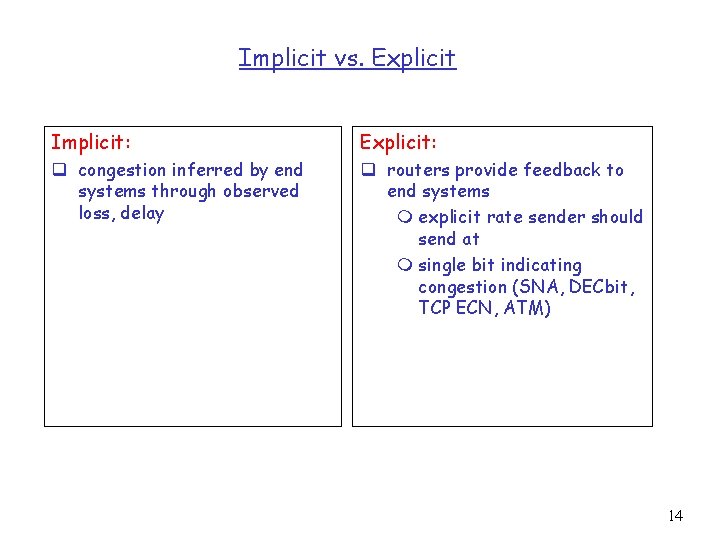

End-to-end vs. Hop-by-hop

End-to-end congestion control:

- A flow determines its rate

Hop-by-hop:

- Routers on the path implement flow control between each other
  - e.g. ATM credit-based
- Scheduling for flows at a link

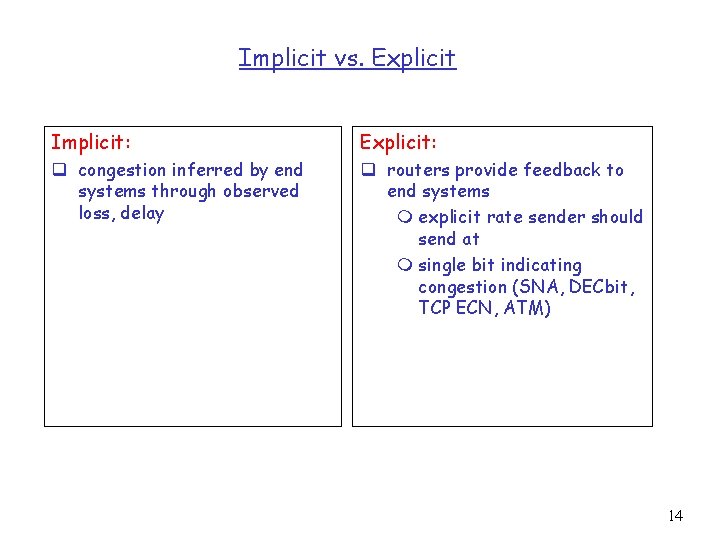

Implicit vs. Explicit

Implicit:

- congestion inferred by end systems through observed loss, delay

Explicit:

- routers provide feedback to end systems
  - explicit rate sender should send at
  - single bit indicating congestion (SNA, DECbit, TCP ECN, ATM)

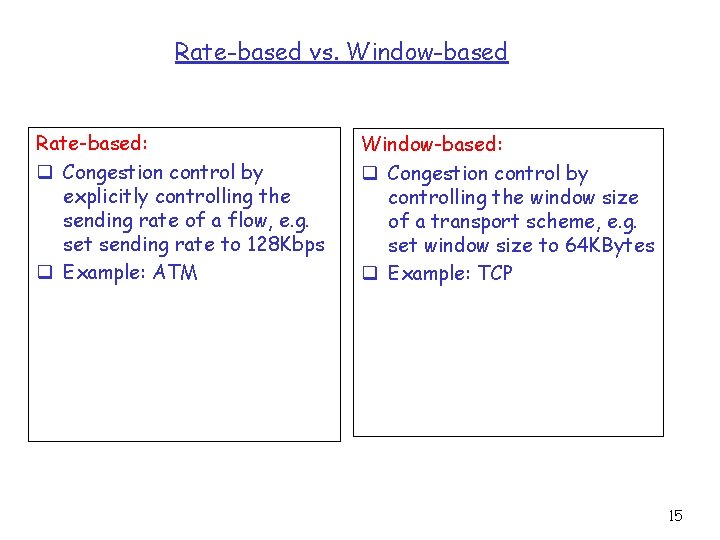

Rate-based vs. Window-based

Rate-based:

- Congestion control by explicitly controlling the sending rate of a flow, e.g. set sending rate to 128 Kbps
- Example: ATM

Window-based:

- Congestion control by controlling the window size of a transport scheme, e.g. set window size to 64 KBytes
- Example: TCP

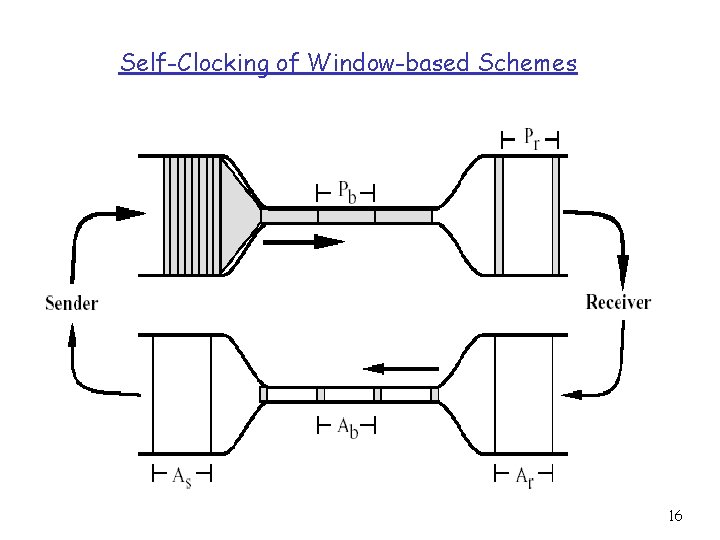

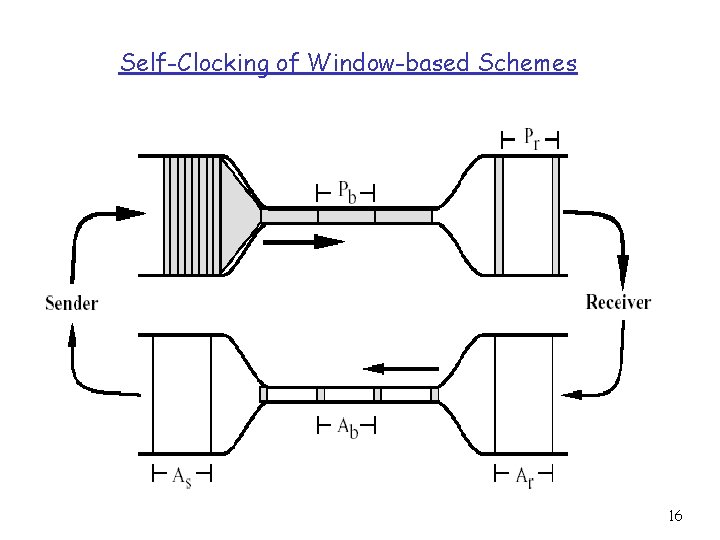

Self-Clocking of Window-based Schemes

Outline

- TCP Overview
- Principle of congestion control
- TCP/Reno congestion control (current topic)

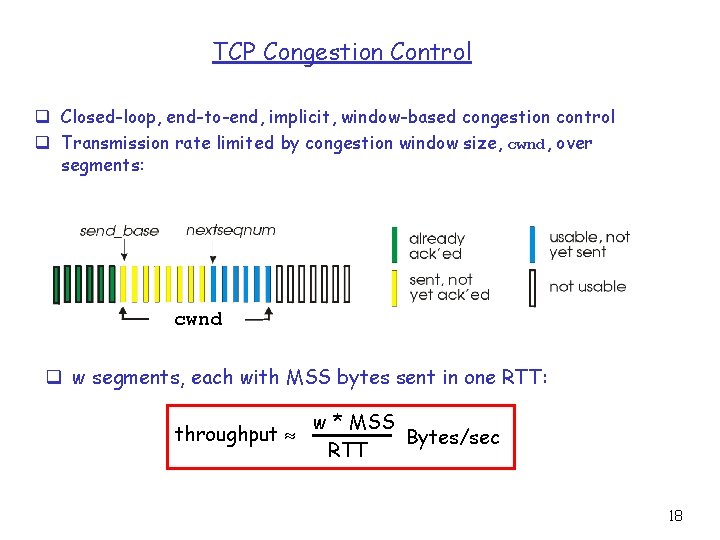

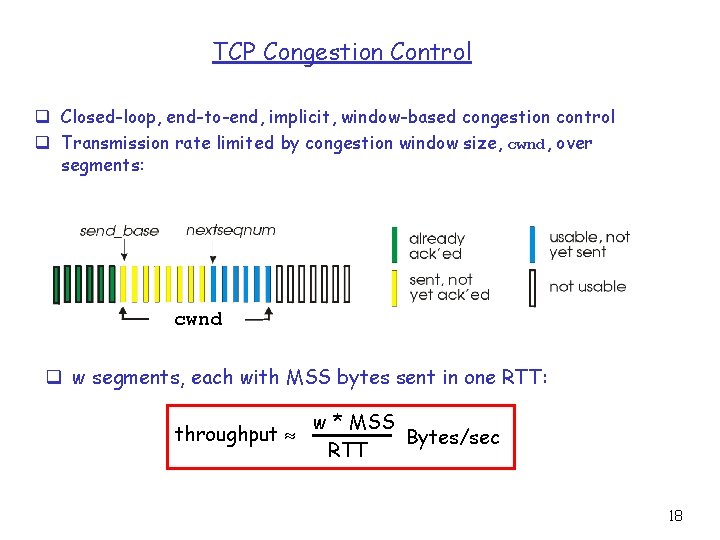

TCP Congestion Control

- Closed-loop, end-to-end, implicit, window-based congestion control
- Transmission rate limited by congestion window size, cwnd, over segments

[Figure: window of size cwnd over the segment sequence]

- w segments, each with MSS bytes, sent in one RTT:

$$\text{throughput} = \frac{w \cdot \text{MSS}}{\text{RTT}} \ \text{bytes/sec}$$
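The throughput relation on this slide (w segments of MSS bytes per RTT) is easy to evaluate directly. The sketch below is illustrative only; the function name and the sample numbers (8 segments, 1000-byte MSS, 0.5 s RTT) are assumptions chosen for easy arithmetic, not values from the slides.

```python
def throughput_bytes_per_sec(w_segments: float, mss_bytes: int, rtt_sec: float) -> float:
    """Throughput of a window-limited sender: w * MSS / RTT, in bytes/sec."""
    return w_segments * mss_bytes / rtt_sec


# Hypothetical numbers: 8 segments of 1000 bytes every 0.5 seconds.
rate = throughput_bytes_per_sec(w_segments=8, mss_bytes=1000, rtt_sec=0.5)
print(f"{rate:.0f} bytes/sec")
```

The formula makes the control knob explicit: with MSS and RTT fixed by the path, the only way the sender changes its rate is by changing the window w.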

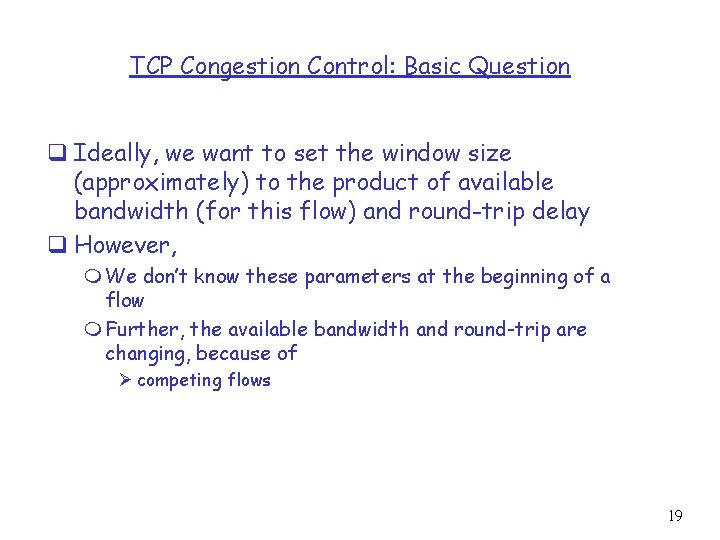

TCP Congestion Control: Basic Question

- Ideally, we want to set the window size (approximately) to the product of the available bandwidth (for this flow) and the round-trip delay
- However,
  - We don't know these parameters at the beginning of a flow
  - Further, the available bandwidth and round-trip delay keep changing, because of competing flows
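As a worked example of the bandwidth-delay product, the sketch below converts an available bandwidth and a round-trip delay into a target window. The function name, the choice to pass the RTT in milliseconds, and the sample path (10 Mbps, 100 ms, 1250-byte MSS) are all assumptions made for illustration.

```python
def ideal_window_segments(bandwidth_bps: int, rtt_ms: int, mss_bytes: int) -> float:
    """Window size, in segments, that matches the bandwidth-delay product."""
    # bits/sec * ms / 8000 = bytes in flight during one round trip.
    bdp_bytes = bandwidth_bps * rtt_ms / 8000
    return bdp_bytes / mss_bytes


# Hypothetical path: 10 Mbps available, 100 ms round trip, 1250-byte MSS.
print(ideal_window_segments(bandwidth_bps=10_000_000, rtt_ms=100, mss_bytes=1250))
```

A real sender cannot call a function like this, because neither input is known in advance and both change over time; that is why TCP probes for the right window instead of computing it.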

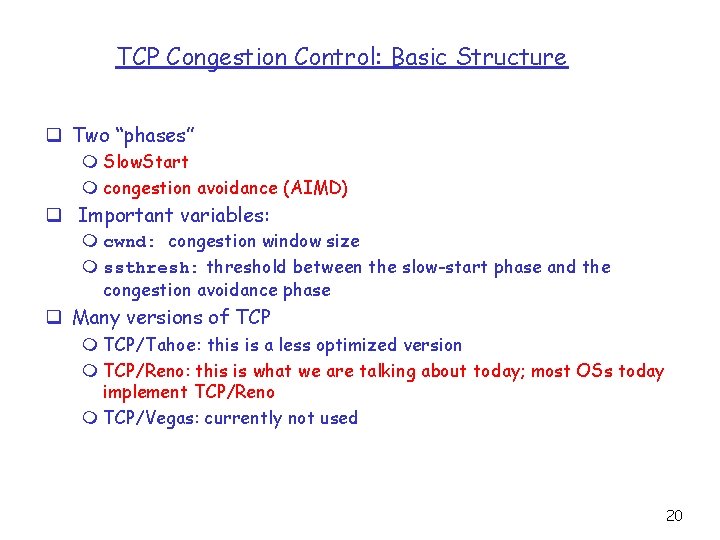

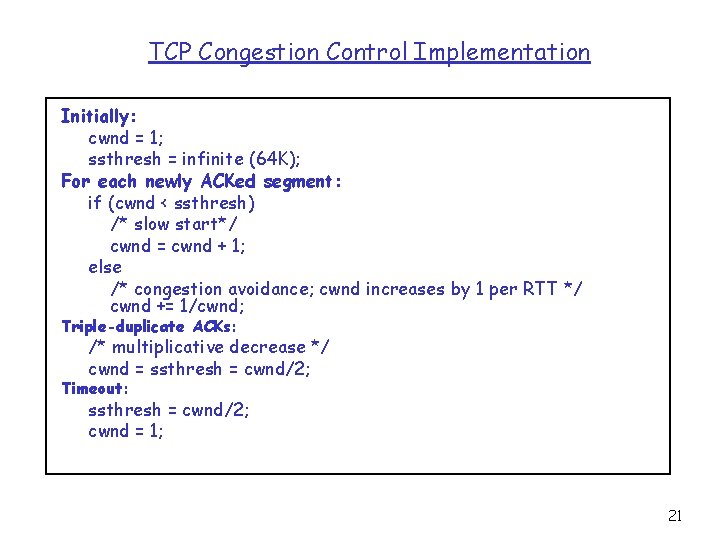

TCP Congestion Control: Basic Structure

- Two "phases"
  - slow start
  - congestion avoidance (AIMD)
- Important variables:
  - cwnd: congestion window size
  - ssthresh: threshold between the slow-start phase and the congestion avoidance phase
- Many versions of TCP
  - TCP/Tahoe: this is a less optimized version
  - TCP/Reno: this is what we are talking about today; most OSs today implement TCP/Reno
  - TCP/Vegas: currently not used

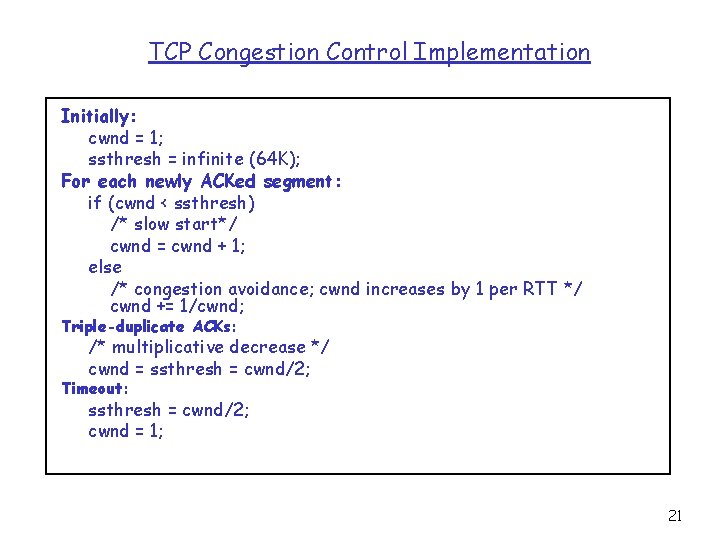

TCP Congestion Control Implementation

    Initially:
        cwnd = 1;
        ssthresh = infinite (64 K);

    For each newly ACKed segment:
        if (cwnd < ssthresh)
            /* slow start */
            cwnd = cwnd + 1;
        else
            /* congestion avoidance; cwnd increases by 1 per RTT */
            cwnd += 1/cwnd;

    Triple-duplicate ACKs:
        /* multiplicative decrease */
        cwnd = ssthresh = cwnd/2;

    Timeout:
        ssthresh = cwnd/2;
        cwnd = 1;
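The pseudocode on this slide translates almost line for line into a small runnable class. This is a sketch of the slide's rules only, with cwnd counted in segments; the class name `RenoWindow` and its method names are hypothetical, and details of real stacks (such as a minimum ssthresh or window inflation during recovery) are deliberately not modeled.

```python
class RenoWindow:
    """Tracks cwnd and ssthresh (in segments) following the slide's rules."""

    def __init__(self, ssthresh: float = float("inf")):
        # The slide starts with cwnd = 1 and an effectively infinite ssthresh.
        self.cwnd = 1.0
        self.ssthresh = ssthresh

    def on_new_ack(self) -> None:
        if self.cwnd < self.ssthresh:
            # Slow start: +1 per ACK, which doubles cwnd every RTT.
            self.cwnd += 1.0
        else:
            # Congestion avoidance: +1/cwnd per ACK, about +1 per RTT.
            self.cwnd += 1.0 / self.cwnd

    def on_triple_dup_ack(self) -> None:
        # Multiplicative decrease: halve the window and continue from there.
        self.ssthresh = self.cwnd / 2
        self.cwnd = self.ssthresh

    def on_timeout(self) -> None:
        # Remember half the window, then restart from one segment.
        self.ssthresh = self.cwnd / 2
        self.cwnd = 1.0


w = RenoWindow(ssthresh=4)
for _ in range(4):
    w.on_new_ack()
print(w.cwnd, w.ssthresh)
```

Keeping cwnd as a float is a design choice that lets the 1/cwnd increments accumulate, so that roughly one full segment is added per round trip in congestion avoidance.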

![TCP AIMD Sender Data Packets Network Receiver TCP Acknowledgment Packets q AIMD Jacobson 1988 TCP AIMD Sender Data Packets Network Receiver TCP Acknowledgment Packets q AIMD [Jacobson 1988]:](https://slidetodoc.com/presentation_image_h/13bbfcb13d4710bd148a78b745196ddd/image-22.jpg)

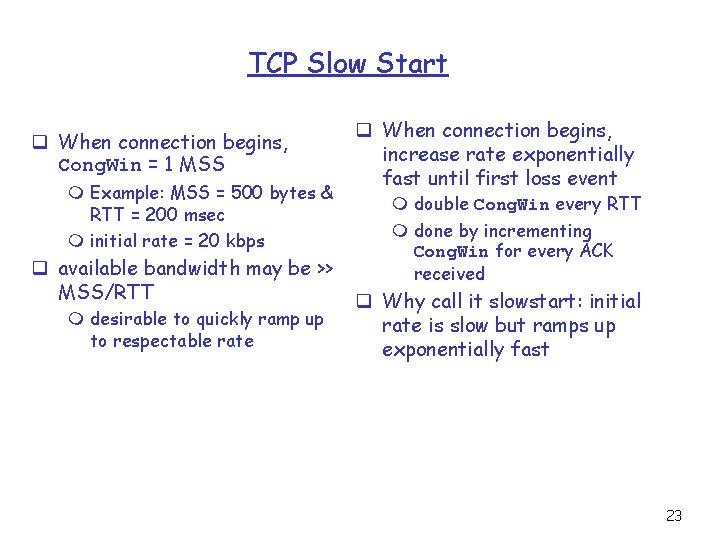

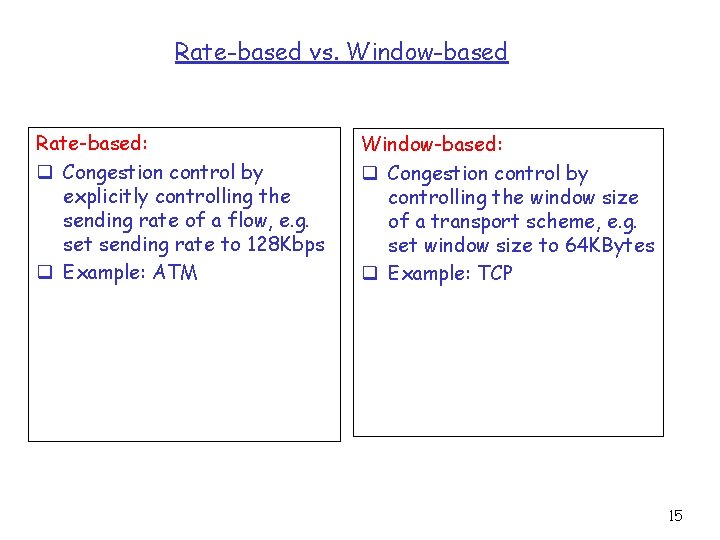

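TCP AIMD

[Figure: the sender transmits TCP data packets through the network to the receiver; the receiver returns TCP acknowledgment packets]

AIMD [Jacobson 1988]:

- Additive Increase: in every RTT, W = W + 1*MSS
- Multiplicative Decrease: upon a congestion event, W = W/2

[Plot: congestion window size vs. time; sawtooth with rising segments labeled AI and drops labeled MD; time axis starts at 0 and is marked in units of 1 RTT]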
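The sawtooth produced by these two rules can be generated with a few lines of code. The sketch below is a toy model, not a network simulation: it assumes, purely for illustration, that a congestion event happens whenever the window exceeds a fixed `capacity` (a hypothetical parameter), and it measures W in units of MSS.

```python
def aimd_trace(initial_w: float, capacity: float, rtts: int) -> list[float]:
    """Window size (in MSS) at the start of each RTT under AIMD."""
    w = initial_w
    trace = []
    for _ in range(rtts):
        trace.append(w)
        if w > capacity:
            # Multiplicative decrease on the (assumed) congestion event.
            w = w / 2
        else:
            # Additive increase: one MSS per RTT.
            w = w + 1
    return trace


print(aimd_trace(initial_w=4, capacity=8, rtts=10))
```

The trace climbs by one each round, overshoots the assumed capacity, halves, and climbs again, which is the AI/MD pattern sketched in the slide's plot.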

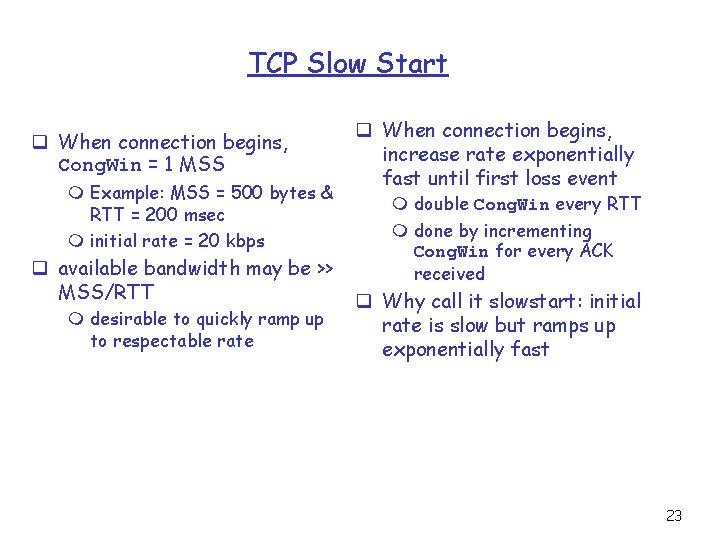

TCP Slow Start

- When connection begins, CongWin = 1 MSS
  - Example: MSS = 500 bytes & RTT = 200 msec
  - initial rate = 20 kbps
- available bandwidth may be >> MSS/RTT
  - desirable to quickly ramp up to respectable rate
- When connection begins, increase rate exponentially fast until first loss event
  - double CongWin every RTT
  - done by incrementing CongWin for every ACK received
- Why call it slow start: initial rate is slow but ramps up exponentially fast
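The slide's numbers can be checked with a short calculation: one 500-byte segment per 200 msec round trip is 20 kbps. The sketch below also counts how many doublings are needed to reach a target rate; the function names and the 1 Mbps target are assumptions for illustration, and the model ignores loss and the ssthresh limit.

```python
def initial_rate_bps(mss_bytes: int, rtt_ms: int) -> float:
    """Sending rate with a window of exactly one MSS per RTT."""
    return mss_bytes * 8 * 1000 / rtt_ms


def rtts_to_reach(target_bps: float, mss_bytes: int, rtt_ms: int) -> int:
    """Round trips of doubling before the rate reaches target_bps (no loss)."""
    window = 1  # CongWin in segments
    rtts = 0
    while window * initial_rate_bps(mss_bytes, rtt_ms) < target_bps:
        window *= 2  # slow start doubles CongWin every RTT
        rtts += 1
    return rtts


print(initial_rate_bps(500, 200))          # 20000.0, i.e. 20 kbps
print(rtts_to_reach(1_000_000, 500, 200))  # 6
```

Six round trips at 200 msec each is 1.2 seconds to go from 20 kbps past 1 Mbps, which illustrates why the start is "slow" only in its first step.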

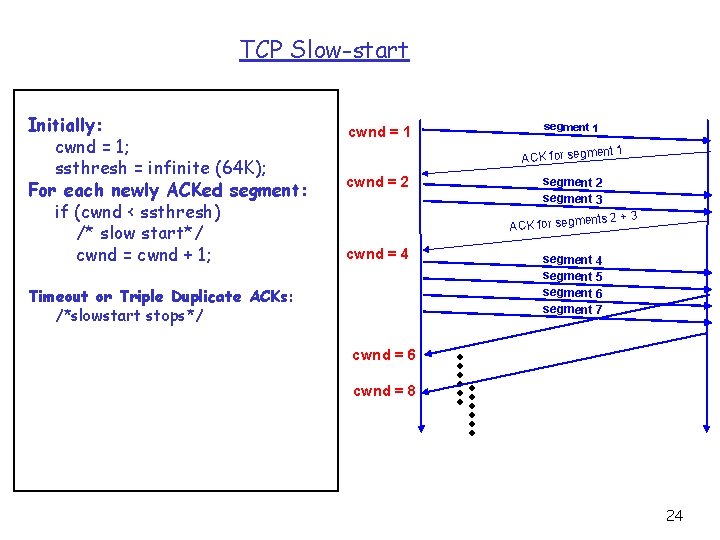

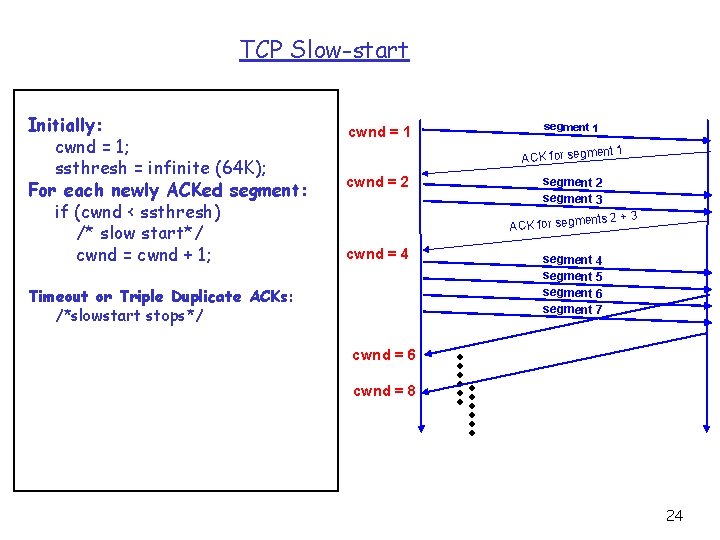

TCP Slow-start

    Initially:
        cwnd = 1;
        ssthresh = infinite (64 K);

    For each newly ACKed segment:
        if (cwnd < ssthresh)
            /* slow start */
            cwnd = cwnd + 1;

    Timeout or Triple Duplicate ACKs:
        /* slow start stops */

[Figure: sender/receiver timeline. With cwnd = 1, segment 1 is sent. The ACK for segment 1 gives cwnd = 2, and segments 2 and 3 are sent. The ACK for segments 2 + 3 gives cwnd = 4, and segments 4, 5, 6, and 7 are sent. The window is then shown at cwnd = 6 and cwnd = 8.]

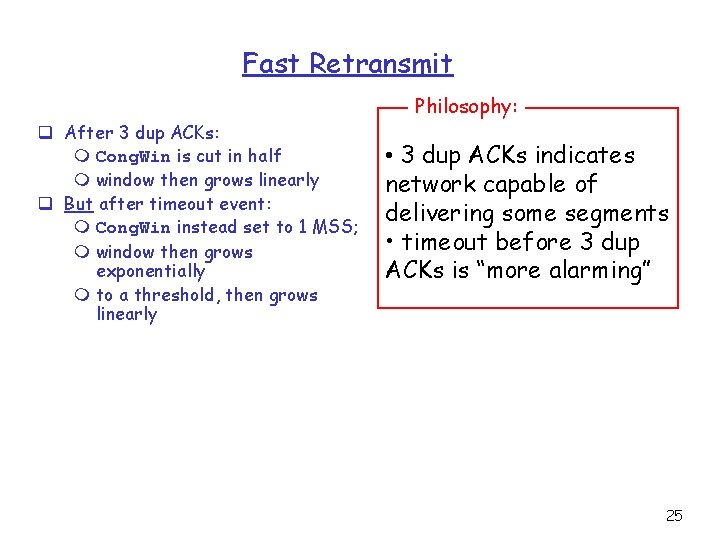

Fast Retransmit

- After 3 dup ACKs:
  - CongWin is cut in half
  - window then grows linearly
- But after timeout event:
  - CongWin instead set to 1 MSS
  - window then grows exponentially
  - to a threshold, then grows linearly

Philosophy:

- 3 dup ACKs indicates network capable of delivering some segments
- timeout before 3 dup ACKs is "more alarming"

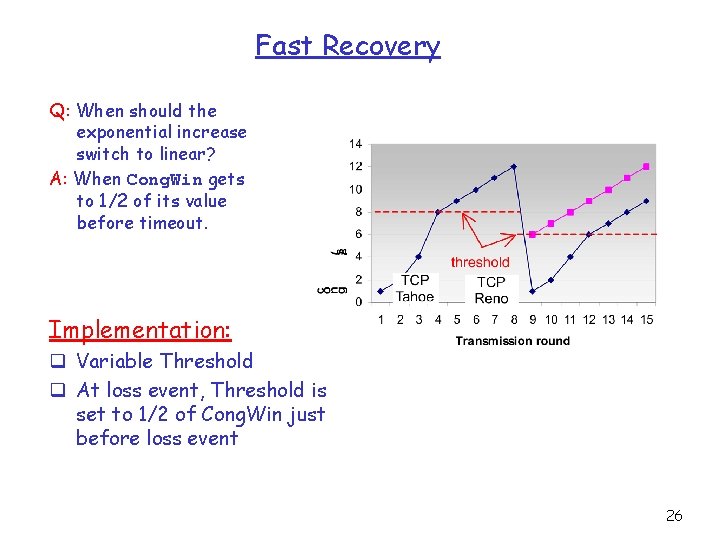

Fast Recovery

Q: When should the exponential increase switch to linear?

A: When CongWin gets to 1/2 of its value before timeout.

Implementation:

- Variable Threshold
- At loss event, Threshold is set to 1/2 of CongWin just before loss event

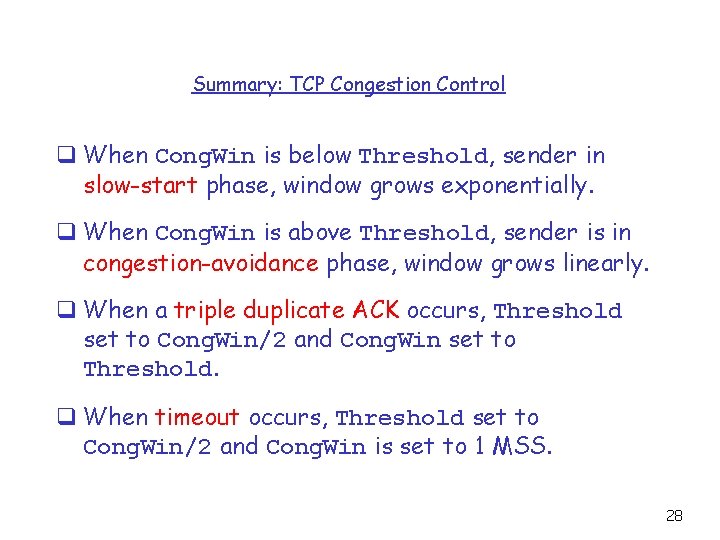

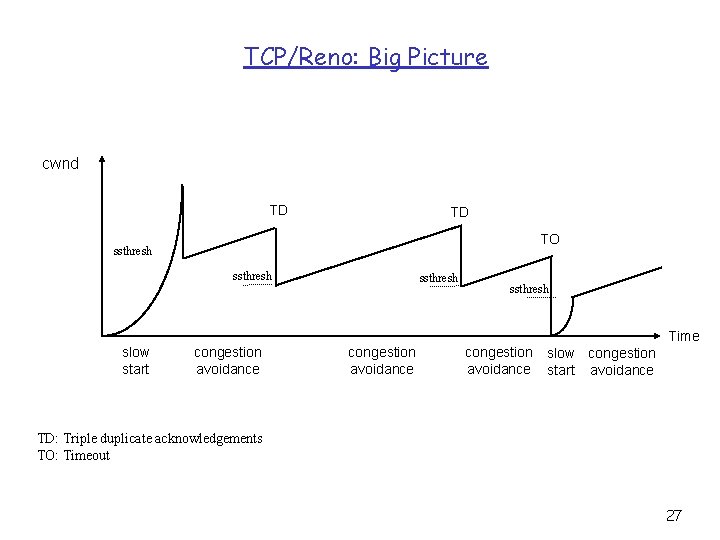

TCP/Reno: Big Picture

[Plot: cwnd vs. time, with ssthresh marked; phases in order: slow start, congestion avoidance, slow start, congestion avoidance; loss events labeled TD, TD, TO]

- TD: Triple duplicate acknowledgements
- TO: Timeout

Summary: TCP Congestion Control

- When CongWin is below Threshold, sender is in slow-start phase, window grows exponentially.
- When CongWin is above Threshold, sender is in congestion-avoidance phase, window grows linearly.
- When a triple duplicate ACK occurs, Threshold is set to CongWin/2 and CongWin is set to Threshold.
- When timeout occurs, Threshold is set to CongWin/2 and CongWin is set to 1 MSS.