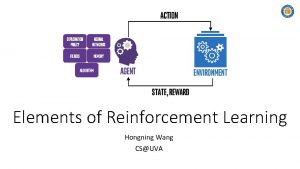

Elements of Reinforcement Learning, Hongning Wang, CS@UVA

- Slides: 19

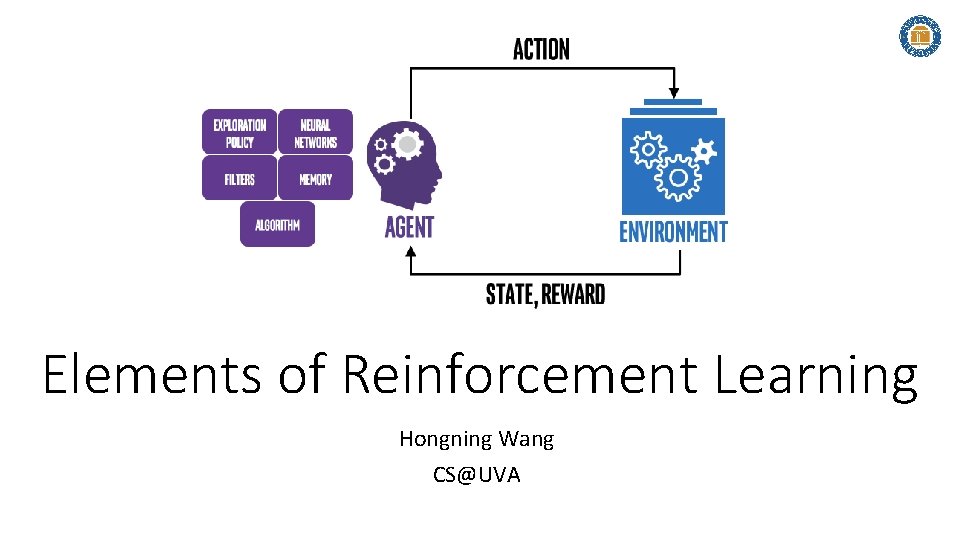

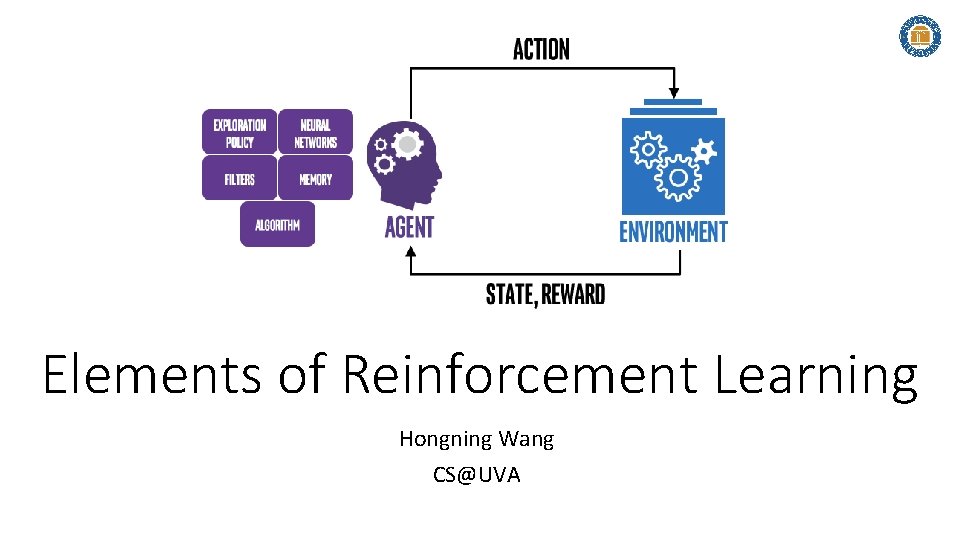


Outline

- Action vs. reward
- State vs. value
- Policy
- Model

CS@UVA RL 2020 Fall

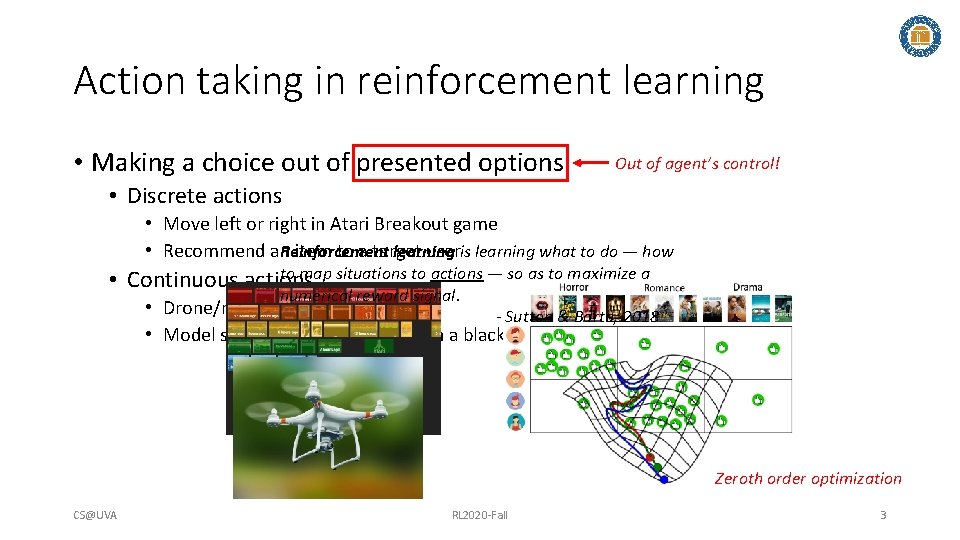

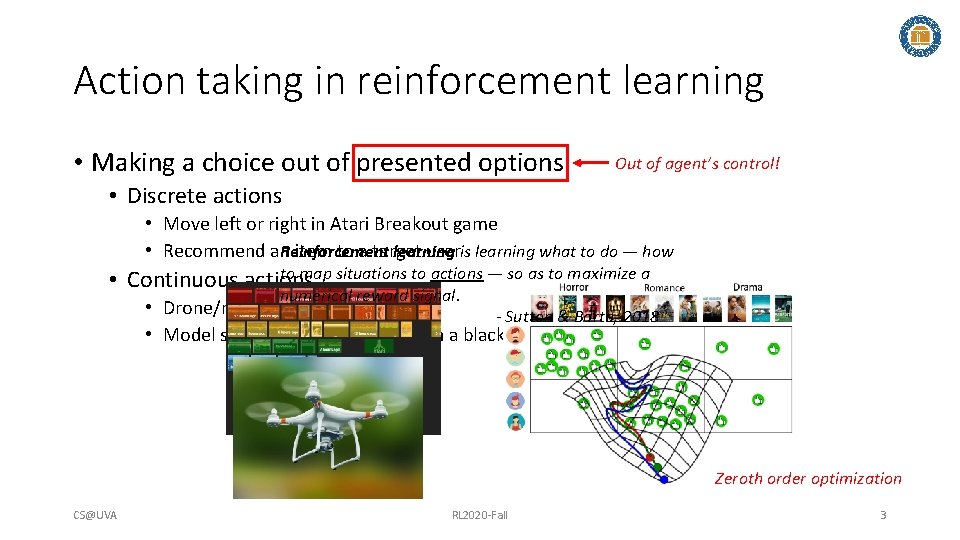

Action taking in reinforcement learning

"Reinforcement learning is learning what to do, how to map situations to actions, so as to maximize a numerical reward signal." (Sutton & Barto, 2018)

- Making a choice out of presented options (out of the agent's control!)
- Discrete actions
  - Move left or right in the Atari Breakout game
  - Recommend an item to a target user
- Continuous actions
  - Drone/robotics control
  - Model selection/optimization with a black-box function (zeroth-order optimization)

Reward in reinforcement learning

- A scalar feedback signal about the taken action
- Suggests a good/bad immediate consequence of the action
  - Score in an Atari game
  - User clicks/purchases in a recommender system
  - Change of black-box function value
- Delayed feedback
  - Go game
  - Generating a sentence in a chatbot
- Goal of learning: maximize cumulative rewards
  - Reward hypothesis: "All goals can be described by the maximization of expected cumulative reward."
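The goal "maximize cumulative rewards" can be made concrete with a small sketch. The function name `cumulative_reward` and the discount factor `gamma` are assumptions for illustration; the slide only speaks of cumulative reward, which corresponds to `gamma=1.0` here.

```python
from typing import Sequence


def cumulative_reward(rewards: Sequence[float], gamma: float = 1.0) -> float:
    """Return sum over t of gamma**t * rewards[t]; gamma=1.0 is the plain sum."""
    total = 0.0
    # Accumulate backwards: G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


# Delayed feedback: nothing until the final move, as in a game of Go.
delayed = [0.0, 0.0, 0.0, 1.0]
# Immediate feedback: a score change after each step, as in an Atari game.
immediate = [1.0, 0.0, 2.0, 0.0]

print(cumulative_reward(delayed), cumulative_reward(immediate))
```

With `gamma < 1`, the same final reward counts for less the later it arrives, which is one common way to express a preference for earlier rewards.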

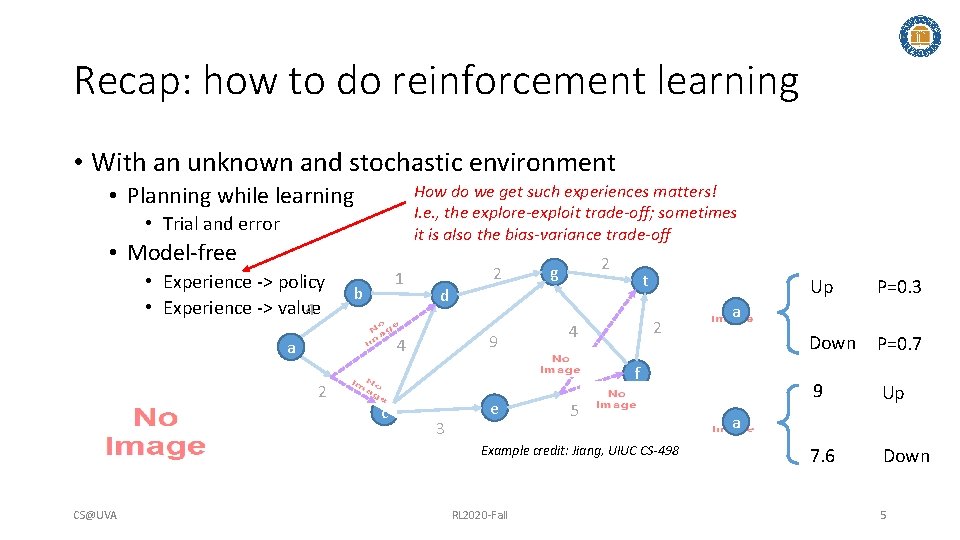

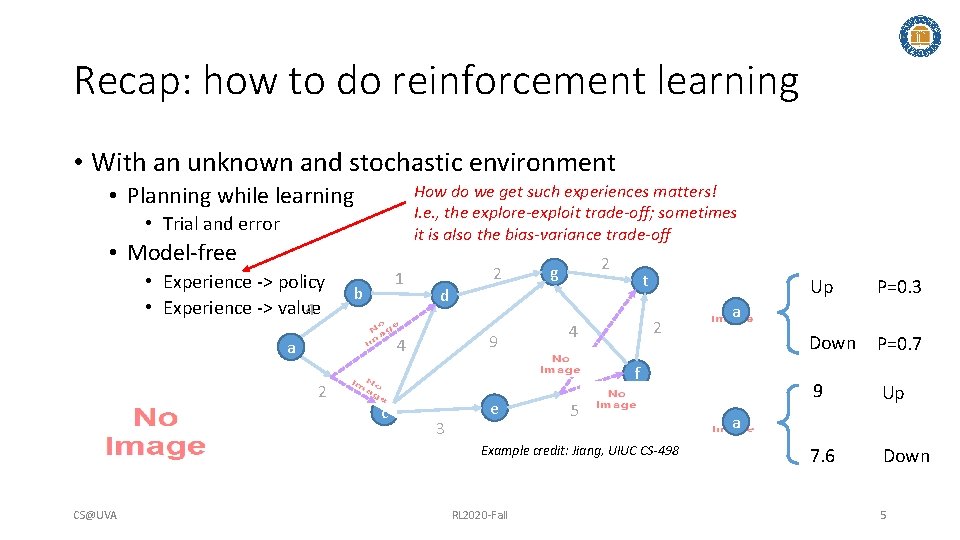

Recap: how to do reinforcement learning

- With an unknown and stochastic environment
  - Planning while learning
  - Trial and error
  - Model-free
    - Experience -> policy
    - Experience -> value

How we get such experiences matters! I.e., the explore-exploit trade-off; sometimes it is also the bias-variance trade-off.

[Figure: weighted graph example with stochastic Up/Down transitions (probabilities 0.3 and 0.7 shown). Example credit: Jiang, UIUC CS-498]
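The explore-exploit trade-off in trial-and-error learning can be sketched with a tiny multi-armed bandit. Epsilon-greedy is used here only as a familiar illustration; it is not named on the slide, and the names `epsilon_greedy` and `run_bandit` as well as the Gaussian reward noise are assumptions.

```python
import random
from typing import List


def epsilon_greedy(estimates: List[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon pick a random action, else the best-looking one."""
    if rng.random() < epsilon:
        return rng.randrange(len(estimates))  # explore
    return max(range(len(estimates)), key=lambda a: estimates[a])  # exploit


def run_bandit(true_means: List[float], steps: int, epsilon: float, seed: int = 0) -> List[float]:
    """Experience -> value: estimate each action's mean reward by trial and error."""
    rng = random.Random(seed)
    counts = [0] * len(true_means)
    estimates = [0.0] * len(true_means)
    for _ in range(steps):
        a = epsilon_greedy(estimates, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)  # unknown, stochastic environment
        counts[a] += 1
        estimates[a] += (reward - estimates[a]) / counts[a]  # running average
    return estimates


print(run_bandit([0.2, 1.0, 0.5], steps=2000, epsilon=0.1))
```

With `epsilon=0.0` the agent only exploits its current estimates and may never try a better action; with `epsilon=1.0` it only explores and never uses what it has learned.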

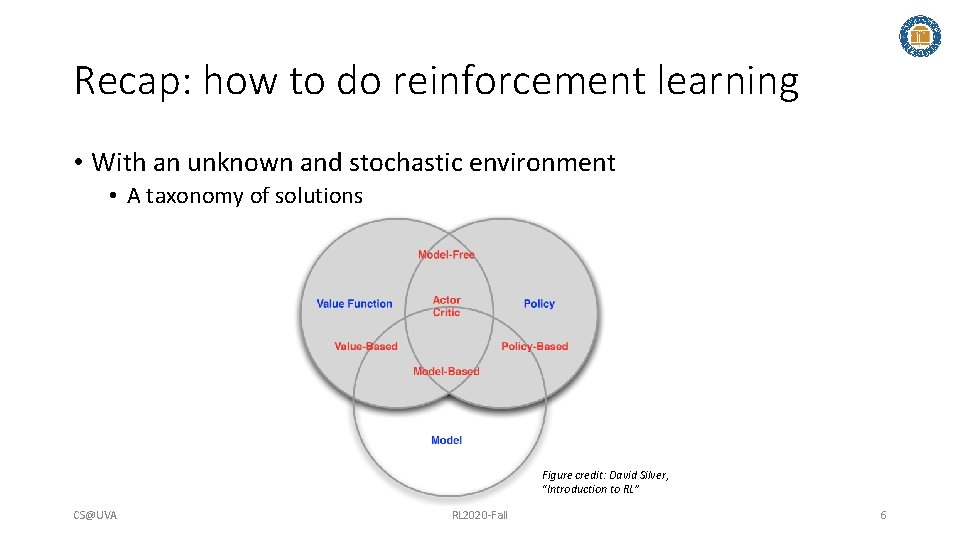

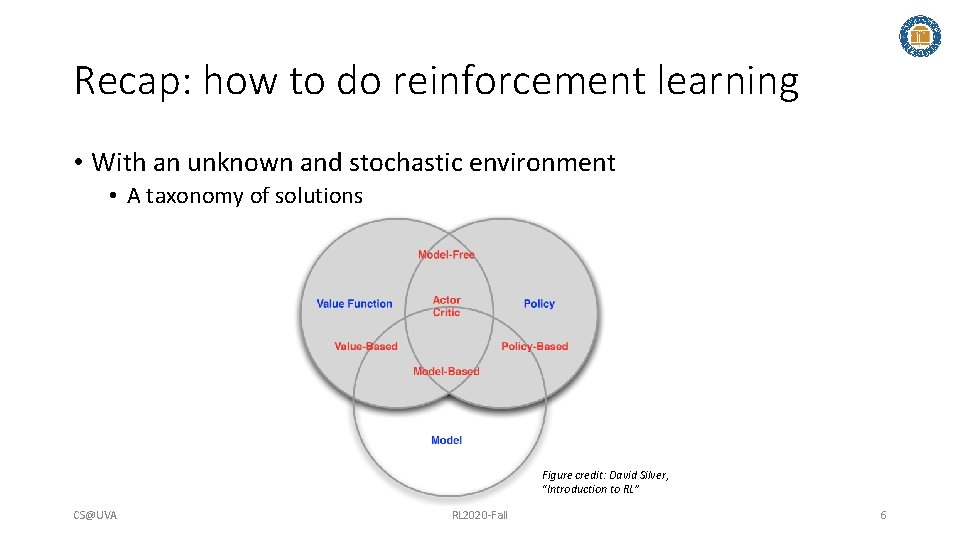

Recap: how to do reinforcement learning

- With an unknown and stochastic environment
- A taxonomy of solutions

Figure credit: David Silver, “Introduction to RL”

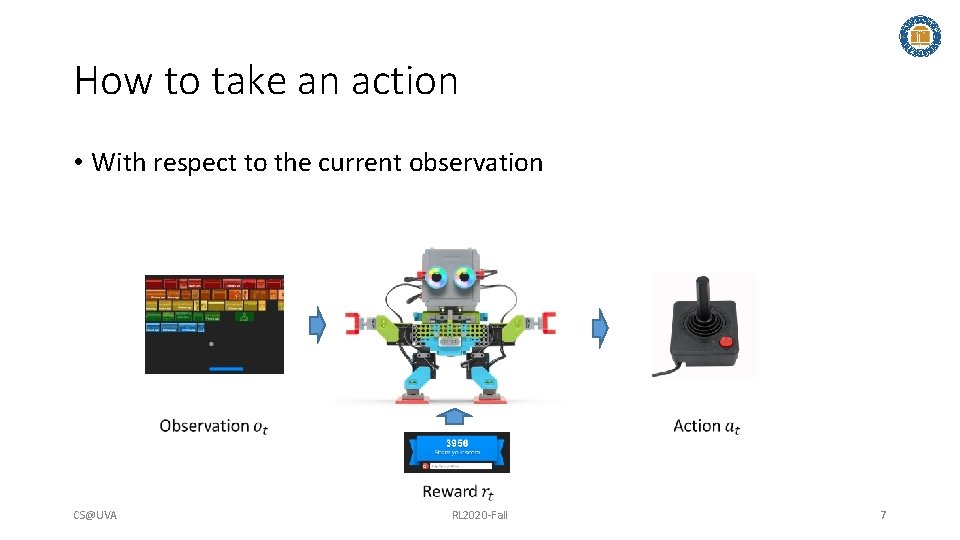

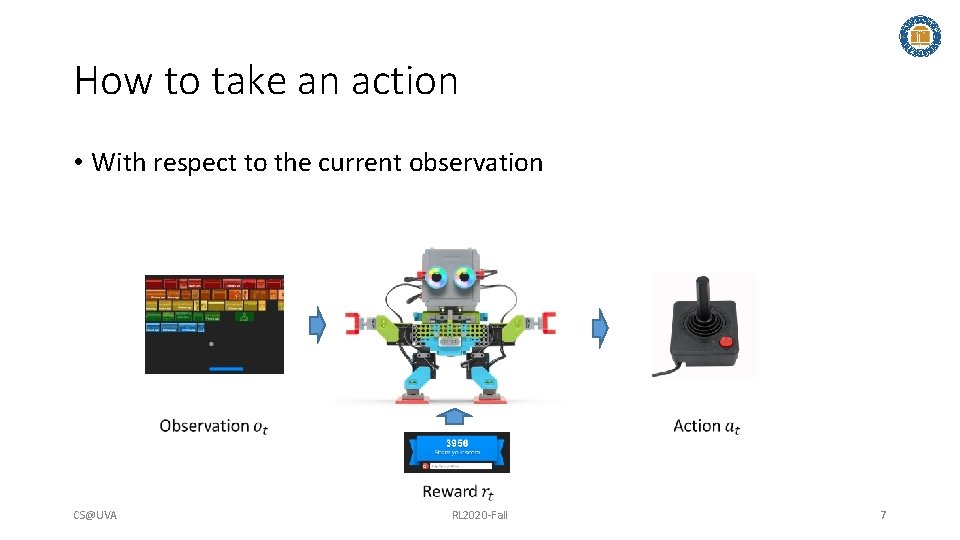

How to take an action

- With respect to the current observation

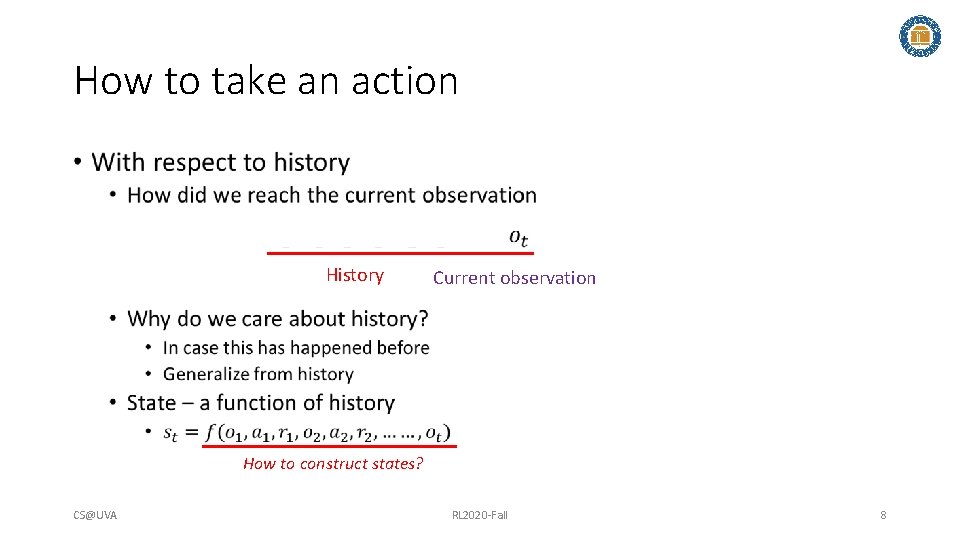

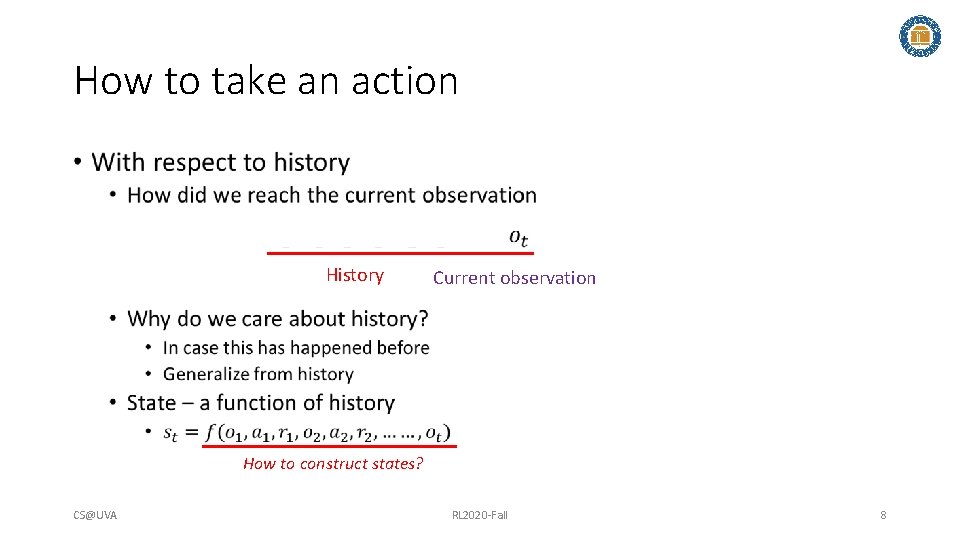

How to take an action

- History
  - Current observation
  - How to construct states?

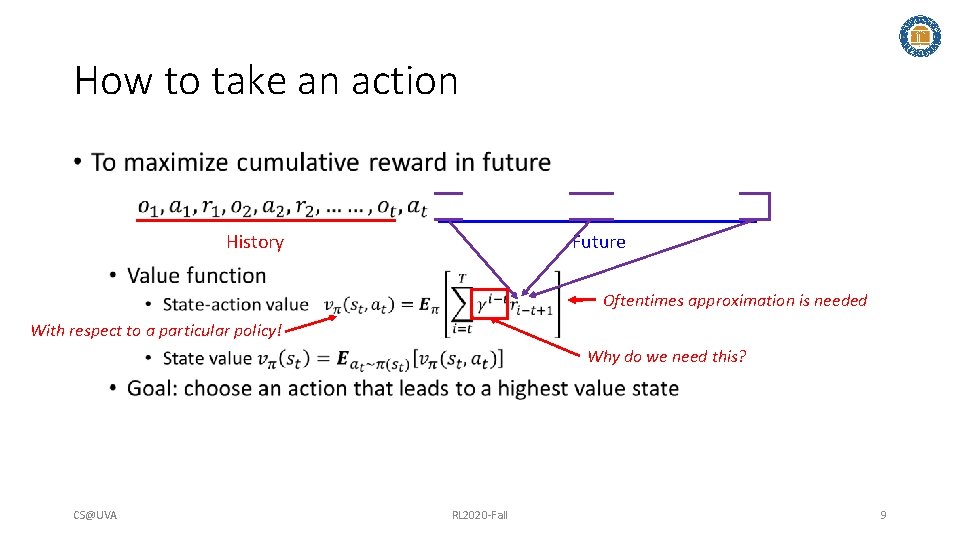

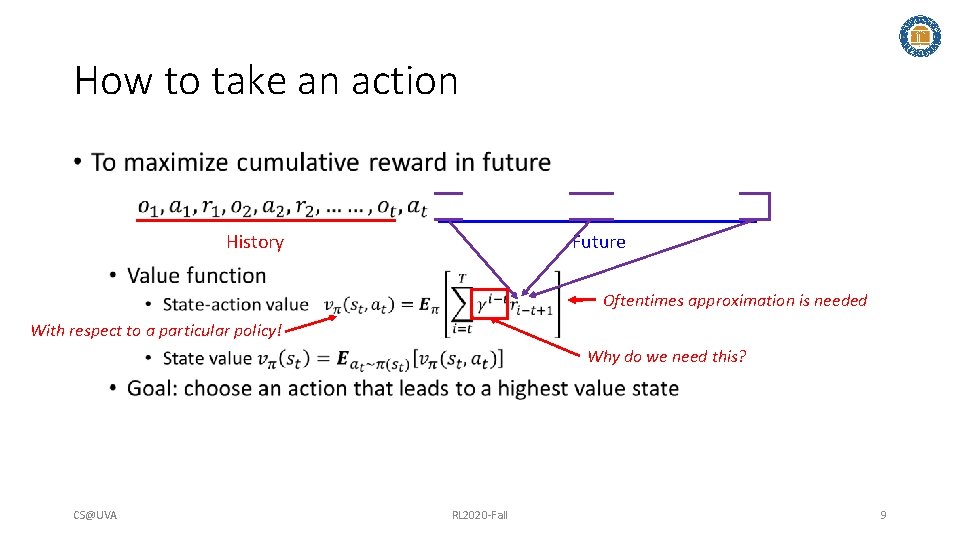

How to take an action

- History
- Future
  - Oftentimes approximation is needed
  - With respect to a particular policy!
  - Why do we need this?
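One common way to formalize a quantity that summarizes the future with respect to a particular policy is the expected discounted return. The notation here ($\pi$ for the policy, $\gamma$ for a discount factor, $R_t$ and $S_t$ for reward and state at time $t$) follows the usual textbook convention and is an assumption, not necessarily the slide's own formula:

$$
v_\pi(s) = \mathbb{E}_\pi\left[\, \sum_{k=0}^{\infty} \gamma^{k} R_{t+k+1} \;\middle|\; S_t = s \right]
$$

The expectation ranges over all futures that the policy and the environment can generate, which is one reading of why approximation is often needed.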

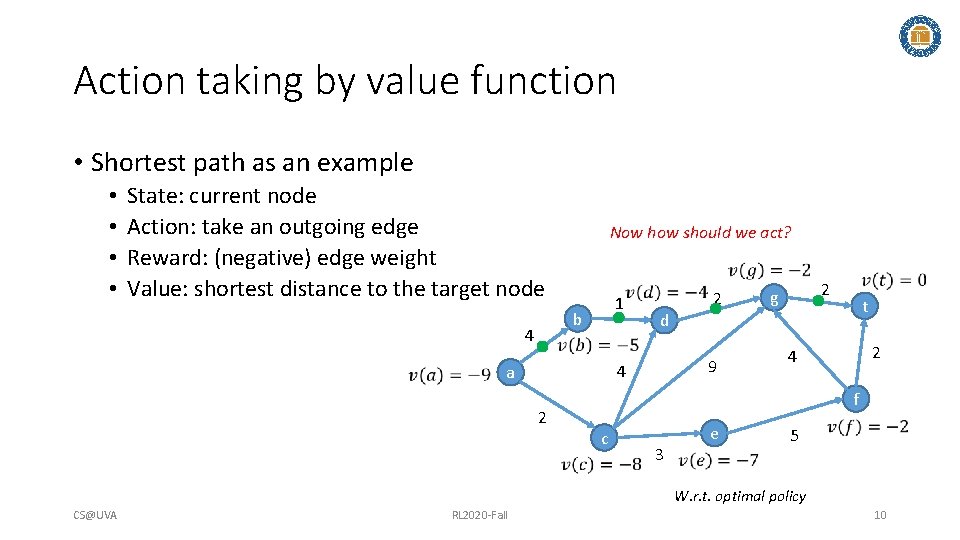

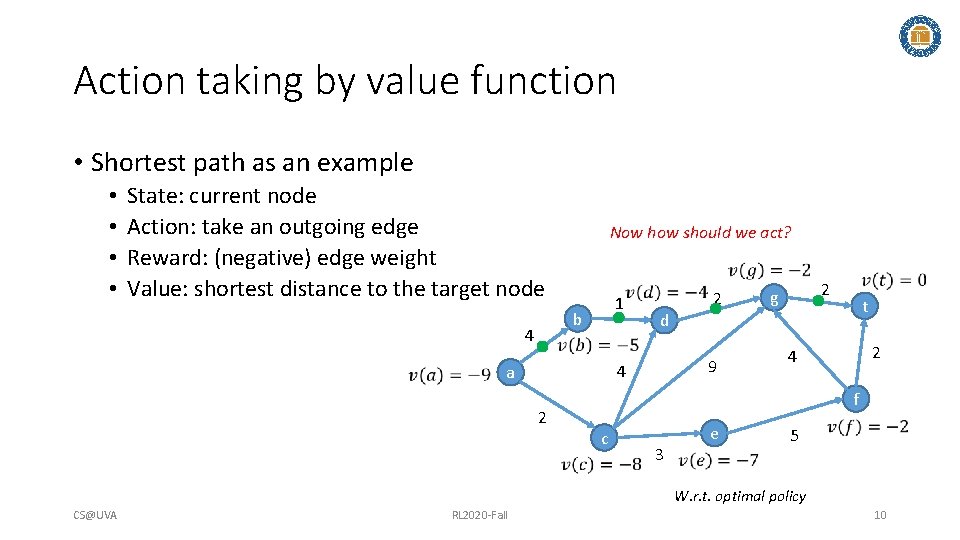

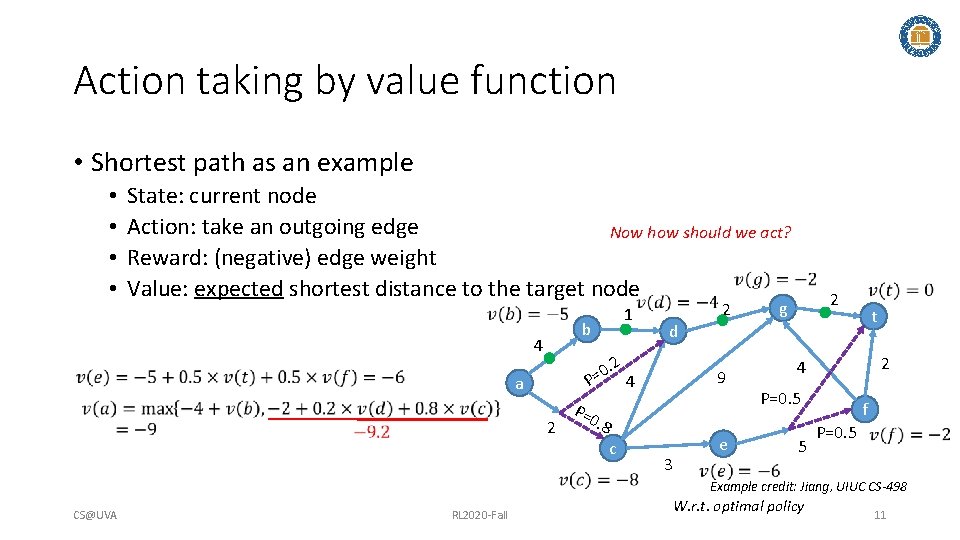

Action taking by value function

- Shortest path as an example
  - State: current node
  - Action: take an outgoing edge
  - Reward: (negative) edge weight
  - Value: shortest distance to the target node (w.r.t. the optimal policy)
- Now how should we act?

[Figure: weighted graph over nodes a, b, c, d, e, f, g, t]
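The shortest-path example can be sketched in code: compute each node's value (shortest distance to the target), then act by picking the outgoing edge that minimizes edge weight plus the value of the next node. The graph `GRAPH` is hypothetical, since the edges of the slide's figure are not recoverable, and the function names are assumptions.

```python
import heapq
from typing import Dict

# Hypothetical directed graph (not the slide's figure): GRAPH[u][v] = edge weight.
GRAPH: Dict[str, Dict[str, float]] = {
    "a": {"b": 1, "c": 4},
    "b": {"c": 2, "t": 6},
    "c": {"t": 3},
    "t": {},
}


def shortest_distances(graph, target):
    """Value of each state: shortest distance to `target` (Dijkstra on reversed edges)."""
    reverse = {u: {} for u in graph}
    for u, edges in graph.items():
        for v, w in edges.items():
            reverse[v][u] = w
    dist = {target: 0.0}
    heap = [(0.0, target)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist.get(v, float("inf")):
            continue  # stale queue entry
        for u, w in reverse[v].items():
            if d + w < dist.get(u, float("inf")):
                dist[u] = d + w
                heapq.heappush(heap, (d + w, u))
    return dist


def greedy_action(graph, dist, state):
    """Act by value: take the edge minimizing edge weight + value of the next node.

    Assumes every successor of `state` can reach the target (true for GRAPH).
    """
    return min(graph[state], key=lambda v: graph[state][v] + dist[v])


dist = shortest_distances(GRAPH, "t")
path = ["a"]
while path[-1] != "t":
    path.append(greedy_action(GRAPH, dist, path[-1]))
print(dist, path)
```

Once the values are known, acting well needs only a one-step lookahead at each node; no further search is required.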

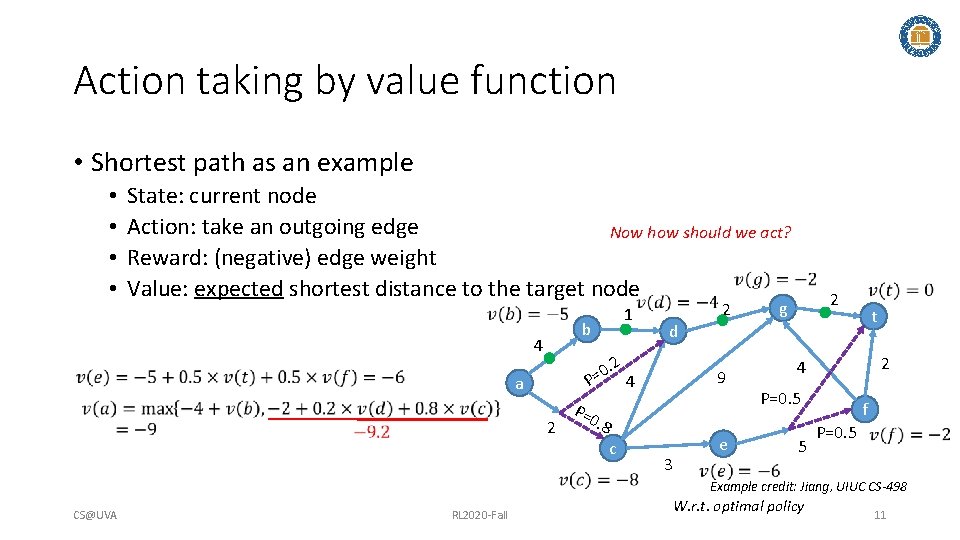

Action taking by value function

- Shortest path as an example
  - State: current node
  - Action: take an outgoing edge
  - Reward: (negative) edge weight
  - Value: expected shortest distance to the target node (w.r.t. the optimal policy)
- Now how should we act?

[Figure: weighted graph over nodes a, b, c, d, e, f, g, t with stochastic edges (P=0.8/P=0.2 and P=0.5/P=0.5). Example credit: Jiang, UIUC CS-498]
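When edges are stochastic, the value becomes an expected distance. A minimal sketch, assuming a hypothetical three-node problem (`MDP` is not the slide's figure) and using value iteration as one standard way to compute such values:

```python
from typing import Dict, List, Tuple

# Hypothetical stochastic graph (not the slide's figure).
# MDP[state][action] = (cost, [(probability, next_state), ...])
MDP: Dict[str, Dict[str, Tuple[float, List[Tuple[float, str]]]]] = {
    "a": {"up": (2.0, [(0.8, "b"), (0.2, "c")]),
          "down": (1.0, [(1.0, "c")])},
    "b": {"go": (1.0, [(1.0, "t")])},
    "c": {"go": (5.0, [(0.5, "t"), (0.5, "b")])},
    "t": {},  # target: no outgoing actions, value 0
}


def expected_cost(value, cost, outcomes):
    """Cost of an action plus the expected value of where it may land."""
    return cost + sum(p * value[n] for p, n in outcomes)


def value_iteration(mdp, tol=1e-9, max_sweeps=1000):
    """Expected shortest distance to the target for every state."""
    value = {s: 0.0 for s in mdp}
    for _ in range(max_sweeps):
        delta = 0.0
        for s, actions in mdp.items():
            if not actions:
                continue  # the target keeps value 0
            best = min(expected_cost(value, c, o) for c, o in actions.values())
            delta = max(delta, abs(best - value[s]))
            value[s] = best
        if delta < tol:
            break
    return value


def greedy_policy(mdp, value):
    """For each non-target state, the action with the lowest expected cost."""
    policy = {}
    for s, actions in mdp.items():
        if actions:
            policy[s] = min(actions, key=lambda a: expected_cost(value, *actions[a]))
    return policy


value = value_iteration(MDP)
policy = greedy_policy(MDP, value)
print(value, policy)
```

In this toy problem the cheaper first step (`down`) is not the better choice, because it leads to a node with a high expected remaining distance.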

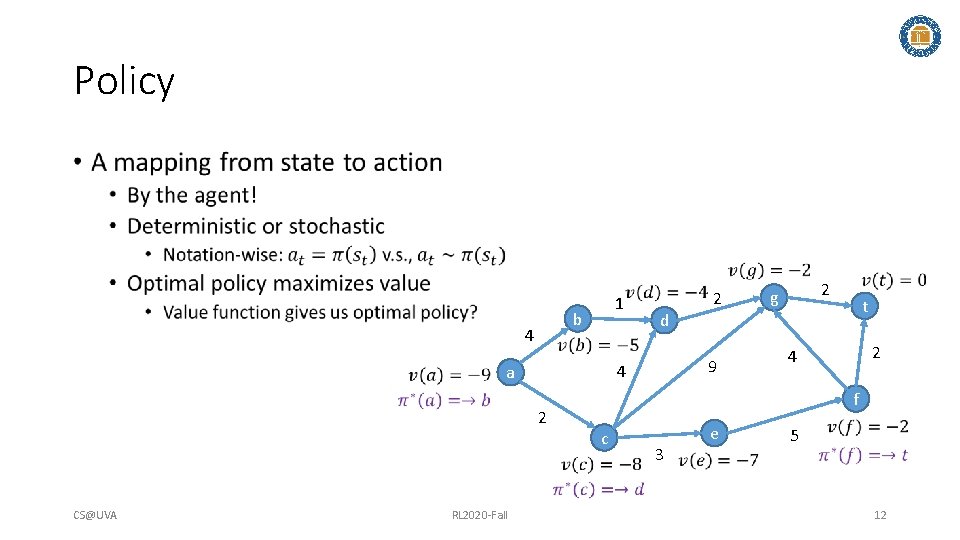

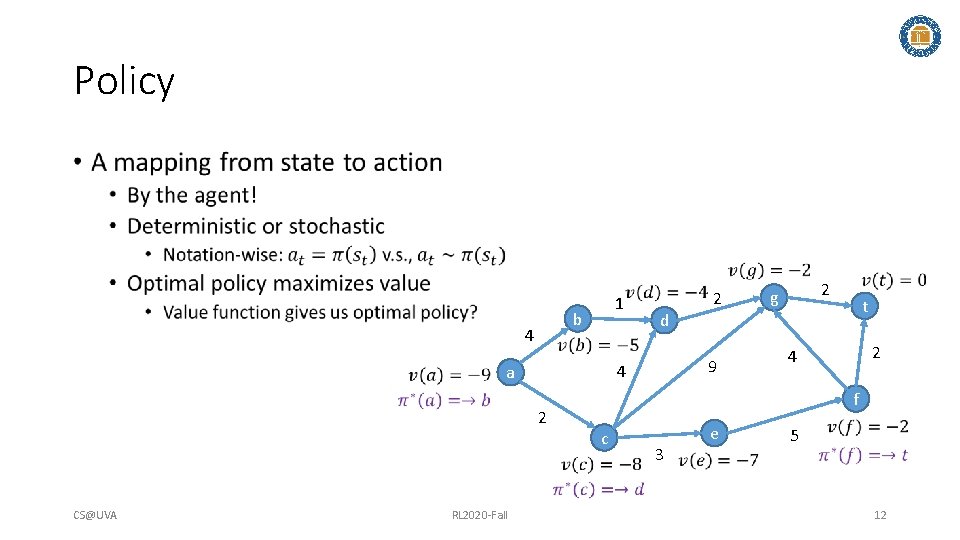

Policy

[Figure: weighted graph over nodes a, b, c, d, e, f, g, t]

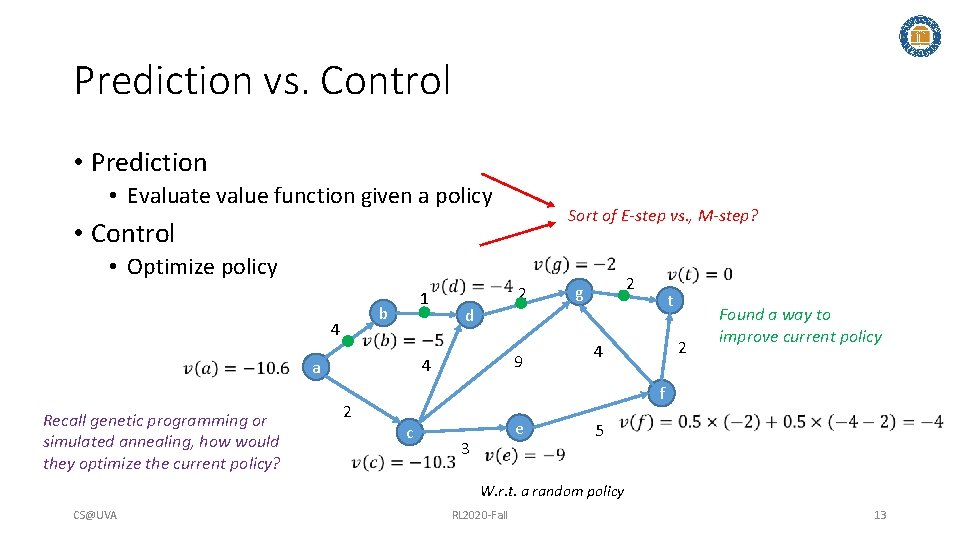

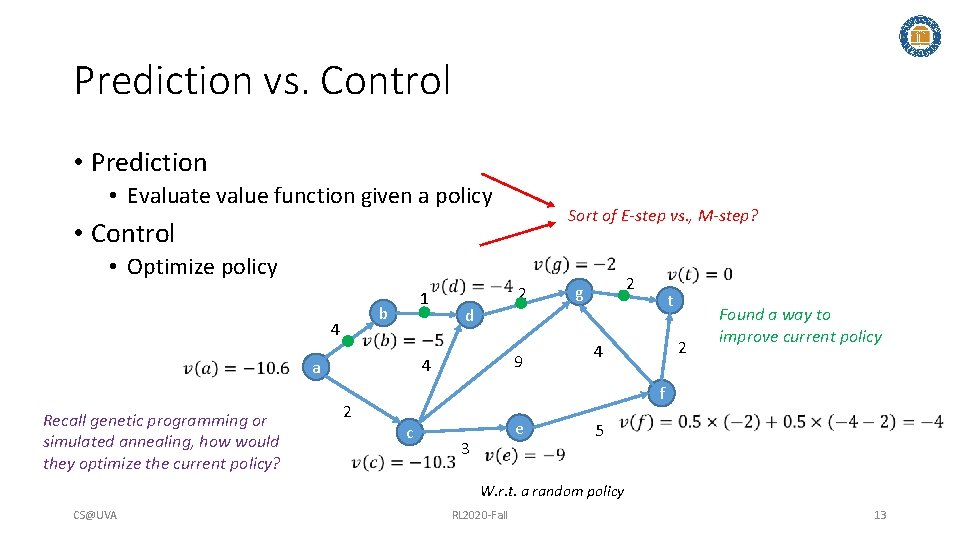

Prediction vs. Control

- Prediction
  - Evaluate the value function given a policy
- Control
  - Optimize the policy

Sort of E-step vs. M-step?

Recall genetic programming or simulated annealing: how would they optimize the current policy?

[Figure: weighted graph over nodes a, b, c, d, e, f, g, t, with values w.r.t. a random policy; annotation: found a way to improve the current policy]
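The two tasks can be contrasted on a small example: first evaluate a uniformly random policy (prediction), then use the resulting values to find a better policy (one control step). The graph `GRAPH` is hypothetical, not the slide's figure, and the function names `evaluate` and `improve` are assumptions.

```python
from typing import Dict

# Hypothetical directed acyclic graph: GRAPH[u][v] = edge weight, "t" is the target.
GRAPH: Dict[str, Dict[str, float]] = {
    "a": {"b": 1, "c": 4},
    "b": {"c": 2, "t": 6},
    "c": {"t": 3},
    "t": {},
}


def evaluate(graph, policy, sweeps=100):
    """Prediction: expected distance to the target when following `policy`.

    policy[s][v] is the probability of taking edge s -> v.
    """
    value = {s: 0.0 for s in graph}
    for _ in range(sweeps):
        for s, edges in graph.items():
            if edges:
                value[s] = sum(policy[s][v] * (w + value[v]) for v, w in edges.items())
    return value


def improve(graph, value):
    """Control step: act greedily with respect to the evaluated values."""
    policy = {}
    for s, edges in graph.items():
        if edges:
            best = min(edges, key=lambda v: edges[v] + value[v])
            policy[s] = {v: 1.0 if v == best else 0.0 for v in edges}
    return policy


random_policy = {s: {v: 1.0 / len(e) for v in e} for s, e in GRAPH.items() if e}
v_random = evaluate(GRAPH, random_policy)
better_policy = improve(GRAPH, v_random)
v_better = evaluate(GRAPH, better_policy)
print(v_random["a"], v_better["a"])
```

Alternating the two steps, evaluating the current policy and then improving on it, is the basic loop that many control methods build on.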

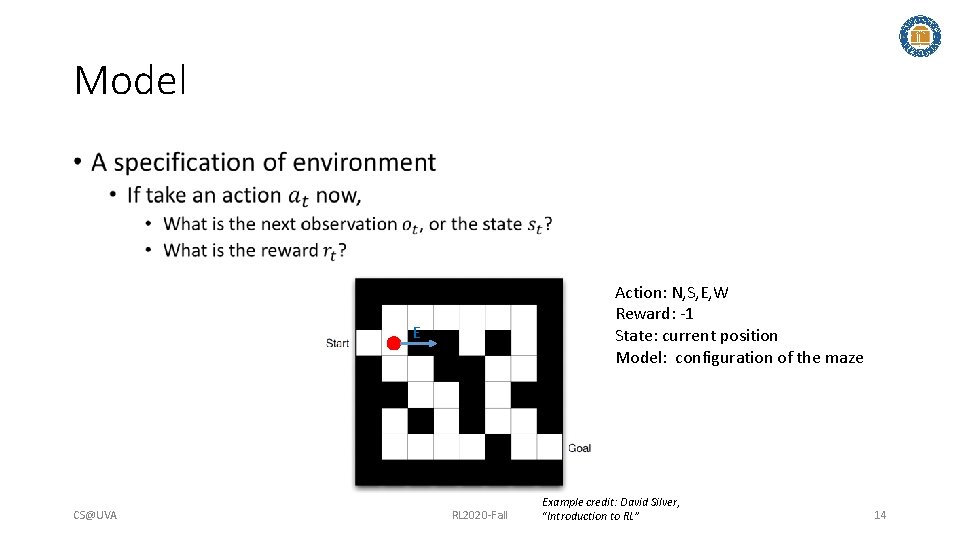

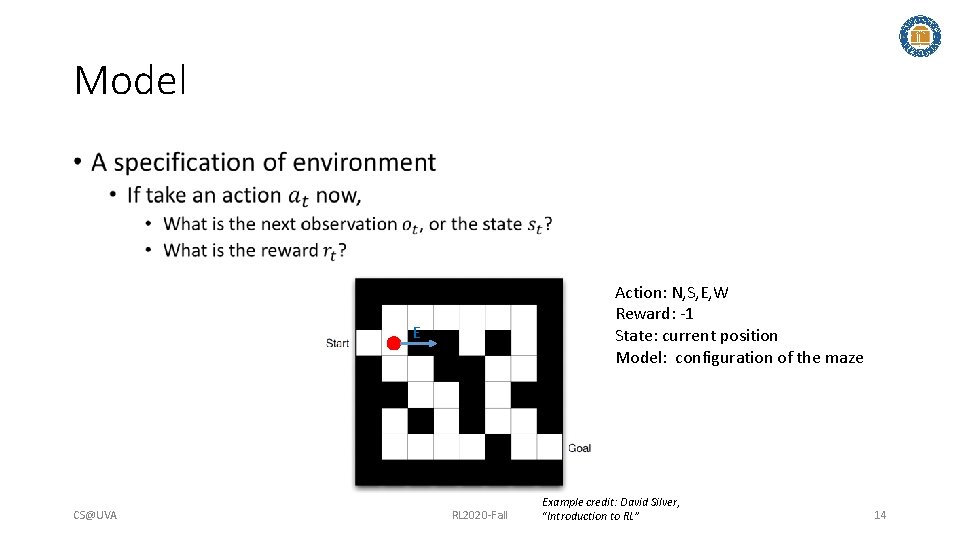

Model

- Action: N, S, E, W
- Reward: -1
- State: current position
- Model: configuration of the maze

Example credit: David Silver, “Introduction to RL”

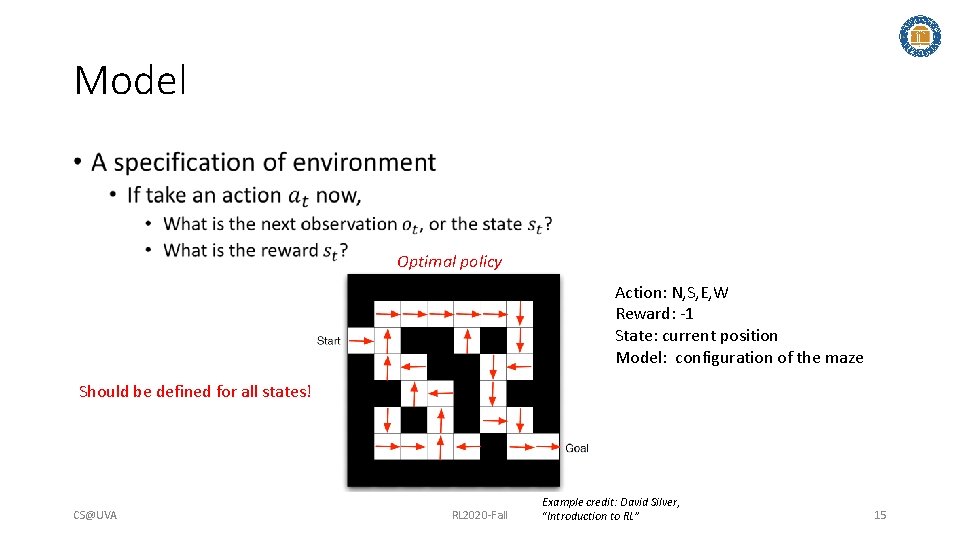

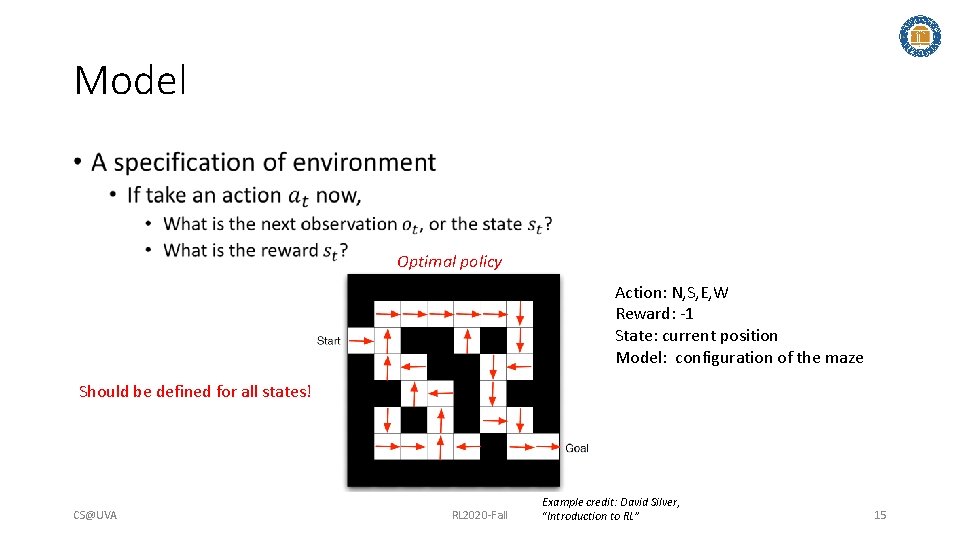

Model

- Optimal policy
  - Should be defined for all states!
- Action: N, S, E, W
- Reward: -1
- State: current position
- Model: configuration of the maze

Example credit: David Silver, “Introduction to RL”

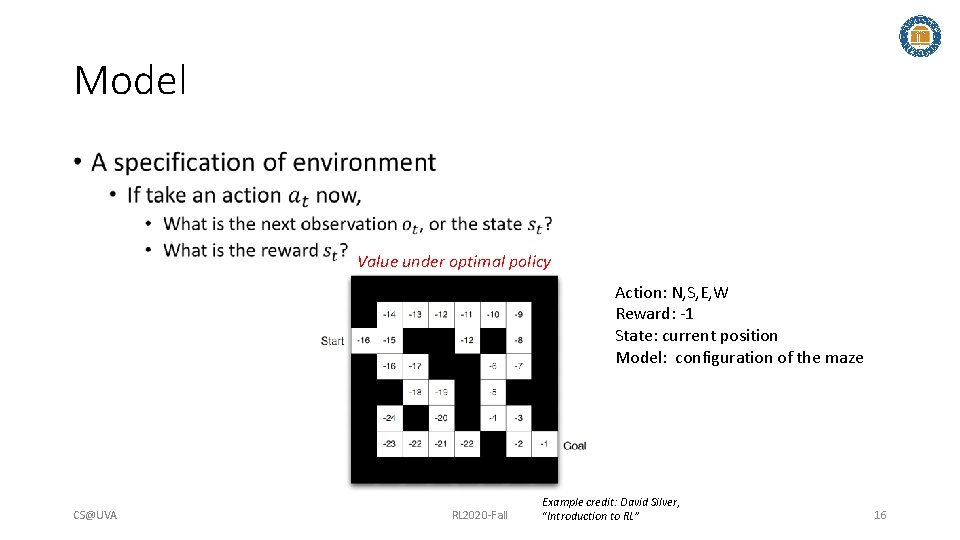

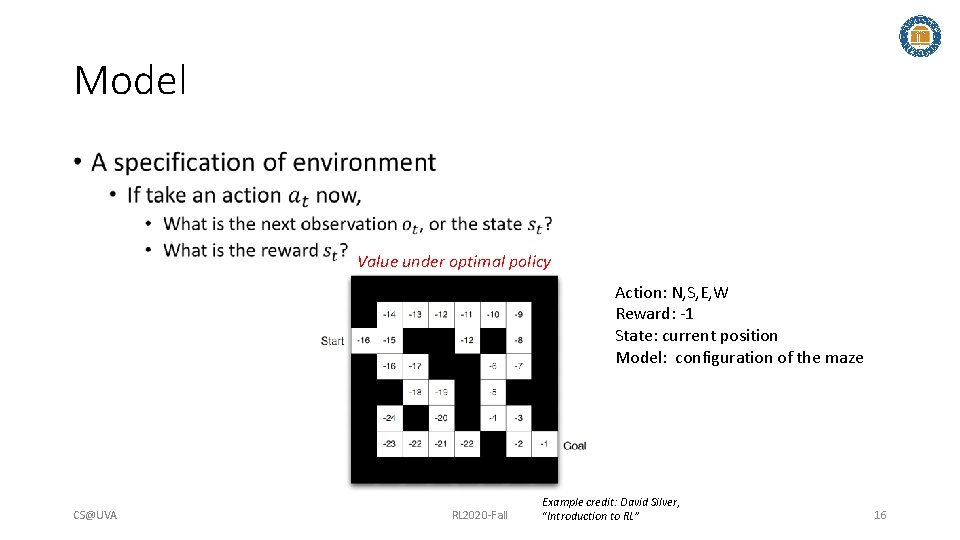

Model

- Value under optimal policy
- Action: N, S, E, W
- Reward: -1
- State: current position
- Model: configuration of the maze

Example credit: David Silver, “Introduction to RL”
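The maze example can be sketched end to end: a model (the maze configuration), the value of every state under the optimal policy, and an optimal policy defined for all states. The layout `MAZE` is hypothetical, not the slide's figure, and the reward of -1 is assumed to be received on every step.

```python
from collections import deque

# Hypothetical 3x4 maze (not the slide's figure): '#' wall, 'G' goal, '.' free cell.
MAZE = ["..#G",
        ".#..",
        "...."]
MOVES = {"N": (-1, 0), "S": (1, 0), "E": (0, 1), "W": (0, -1)}


def step(state, action):
    """Model: next state and reward for taking `action` in `state`."""
    r, c = state
    dr, dc = MOVES[action]
    nr, nc = r + dr, c + dc
    inside = 0 <= nr < len(MAZE) and 0 <= nc < len(MAZE[0])
    if inside and MAZE[nr][nc] != "#":
        state = (nr, nc)
    return state, -1  # bumping into a wall or border keeps the agent in place


def optimal_values():
    """Value under the optimal policy: minus the number of steps to the goal."""
    goal = next((r, c) for r, row in enumerate(MAZE)
                for c, ch in enumerate(row) if ch == "G")
    value = {goal: 0}
    queue = deque([goal])
    while queue:
        s = queue.popleft()
        for a in MOVES:
            n, reward = step(s, a)  # moves are reversible, so search back from the goal
            if n not in value:
                value[n] = value[s] + reward
                queue.append(n)
    return value


def optimal_policy(value):
    """Defined for all non-goal states: maximize reward + value of the next state."""
    policy = {}
    for s in value:
        if MAZE[s[0]][s[1]] != "G":
            policy[s] = max(MOVES, key=lambda a: step(s, a)[1] + value[step(s, a)[0]])
    return policy


value = optimal_values()
policy = optimal_policy(value)
print(value[(0, 0)], policy[(0, 0)])
```

Because the policy is a lookup table over every reachable cell, the agent knows what to do wherever it ends up, not only along one path from the start.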

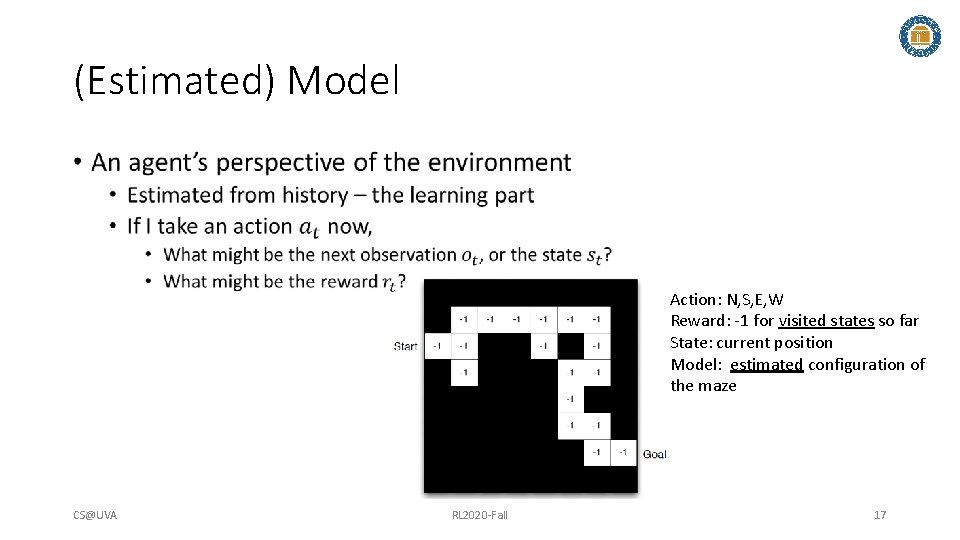

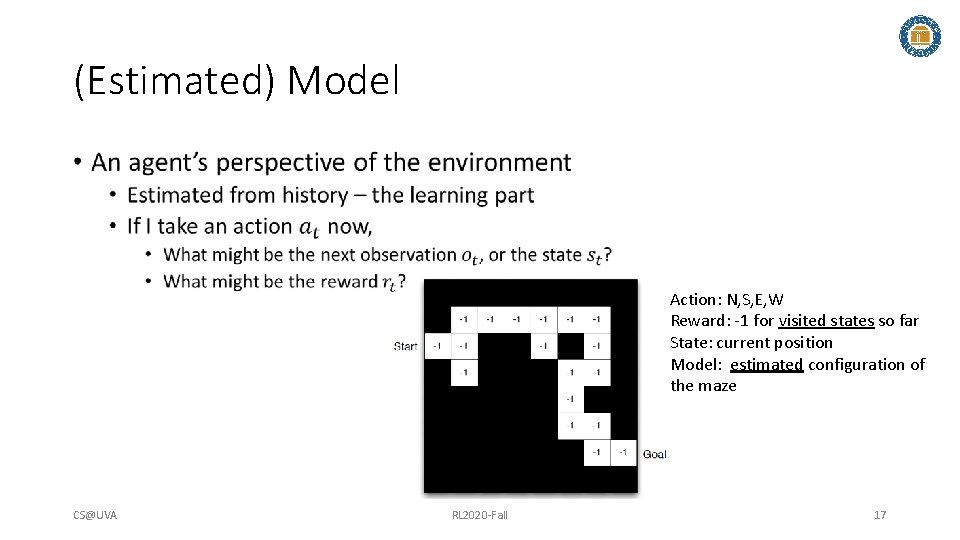

(Estimated) Model

- Action: N, S, E, W
- Reward: -1 for visited states so far
- State: current position
- Model: estimated configuration of the maze
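An estimated model can be as simple as a table of the transitions the agent has experienced so far. This is a minimal sketch under that assumption; the class name `EstimatedModel` and its methods are hypothetical.

```python
from typing import Dict, Optional, Set, Tuple

State = Tuple[int, int]


class EstimatedModel:
    """Agent's belief about the maze, built only from experienced transitions."""

    def __init__(self) -> None:
        self.transitions: Dict[Tuple[State, str], State] = {}
        self.rewards: Dict[Tuple[State, str], float] = {}

    def update(self, state: State, action: str, reward: float, next_state: State) -> None:
        """Record one observed (state, action, reward, next_state) experience."""
        self.transitions[(state, action)] = next_state
        self.rewards[(state, action)] = reward

    def predict(self, state: State, action: str) -> Optional[Tuple[State, float]]:
        """Return (next_state, reward), or None if this pair was never tried."""
        key = (state, action)
        if key not in self.transitions:
            return None
        return self.transitions[key], self.rewards[key]

    def visited_states(self) -> Set[State]:
        """All positions seen so far, as a source or a destination."""
        return {s for s, _ in self.transitions} | set(self.transitions.values())


model = EstimatedModel()
model.update((0, 0), "E", -1, (0, 1))
model.update((0, 1), "E", -1, (0, 1))  # hit a wall: position unchanged
print(model.predict((0, 0), "E"), model.predict((0, 0), "S"))
```

The table says nothing about parts of the maze the agent has not visited, so the estimate can be incomplete or differ from the true configuration.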

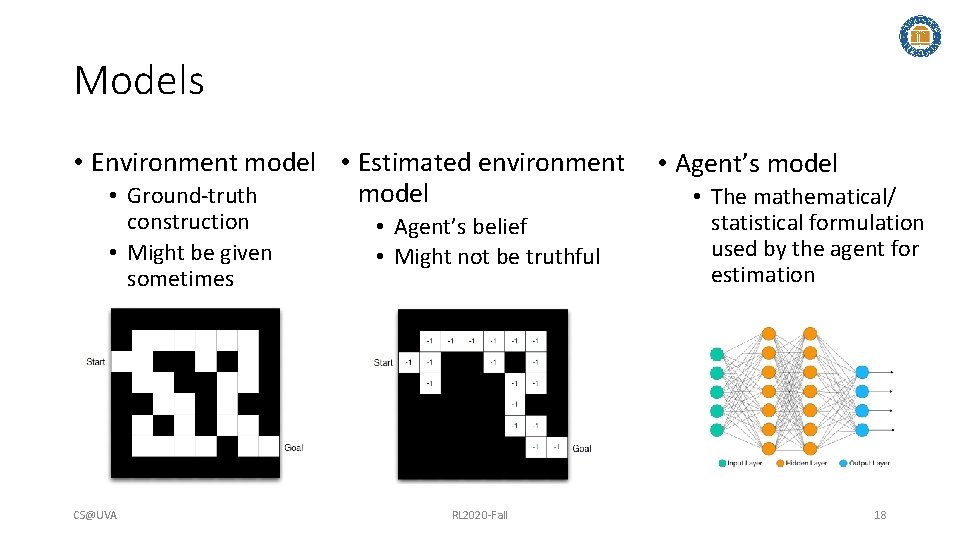

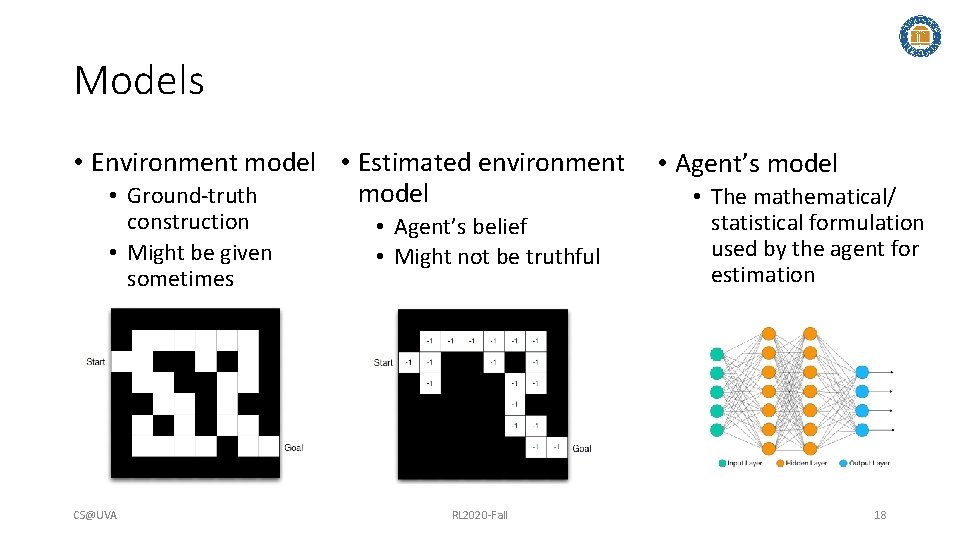

Models

- Environment model
  - Ground-truth construction
  - Might be given sometimes
- Estimated environment model
  - Agent's belief
  - Might not be truthful
- Agent's model
  - The mathematical/statistical formulation used by the agent for estimation

Takeaways

- RL agents take actions with respect to history/state
- Their goal is to find the highest-value states
- The model is about the environment, and can be estimated by the agent
