Edge-to-edge Control: Congestion Avoidance and Service Differentiation for the Internet

- Slides: 44

Edge-to-edge Control: Congestion Avoidance and Service Differentiation for the Internet
David Harrison, Rensselaer Polytechnic Institute
harrisod@cs.rpi.edu
http://networks.ecse.rpi.edu/~harrisod

Outline
- QoS for Multi-Provider Private Networks
- Edge-to-Edge Control Architecture
- Riviera Congestion Avoidance
- Trunk Service Building Blocks
  - Weighted Sharing
  - Guaranteed Bandwidth
  - Assured Bandwidth

QoS for Multi-Provider Private Networks
- Principal problems:
  - Coordination: scheduled upgrades, cross-provider agreements.
  - Scale: thousands to millions of connections, Gbps.
  - Heterogeneity: many datalink layers, 48 kbps to >10 Gbps.

Single vs. Multi-Provider Solutions
- ATM and frame relay operate on a single datalink layer.
  - All intermediate providers must agree on a common infrastructure. Requires upgrades throughout the network. Coordination to eliminate heterogeneity.
  - Or operate at lowest common denominator.
- Overprovision: operate at single-digit utilization.
  - More bandwidth than sum of access points.
  - 1700 DSL (at 1.5 Mbps) or 60 T3 (at 45 Mbps) DDoS swamps an OC-48 (2.4 Gbps).
  - Peering points often last upgraded in each upgrade cycle. Performance between MY customers more important.
  - Hard for multi-provider scenarios.
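The DDoS figures on this slide can be checked with a few lines of arithmetic. This is only a sanity check; the function name and the rounded 2400 Mbps figure for an OC-48 (the slide's own "2.4 Gbps") are assumptions made for illustration.

```python
# Sanity check of the slide's overprovisioning example.
# OC48_MBPS uses the slide's rounded 2.4 Gbps figure (an assumption here).
OC48_MBPS = 2400.0


def aggregate_mbps(link_count, per_link_mbps):
    """Total offered load if every access link sends at full rate."""
    return link_count * per_link_mbps


dsl_flood = aggregate_mbps(1700, 1.5)   # 1700 DSL lines at 1.5 Mbps
t3_flood = aggregate_mbps(60, 45.0)     # 60 T3 lines at 45 Mbps

for name, load in (("DSL", dsl_flood), ("T3", t3_flood)):
    print(f"{name}: {load:.0f} Mbps, exceeds OC-48: {load > OC48_MBPS}")
```

Both aggregates come out above 2400 Mbps, which is the slide's point: a modest number of access links can exceed the capacity of a large core link.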

Scalability Issues
- Traditional solutions:
  - Use QoS:
    - ATM, IntServ: per-flow/per-VC scheduling at every hop.
    - Frame Relay: drop preference, per-VC routing at every hop.
    - DiffServ: per-class (e.g., high, low priority) scheduling, drop preference at every hop. Per-flow QoS done only at network boundaries (edges).

Edge-to-Edge Control (EC)
[Diagram labels: over-engineered domain; EC class; Providers 1, 2, and 3; peering points (P); end-to-end flows; EC ingress (I); EC egress (E); edge-to-edge control loop (trunk); best-effort or other DS class(es).]
Use edge-to-edge congestion control to push queuing, packet loss, and per-flow bandwidth sharing issues to the edges (e.g., access routers) of the network.

QoS via Edge-to-Edge Congestion Control
- Benefits:
  - Conquers scale and heterogeneity in the same sense as TCP.
  - Allows QoS without upgrades to either end-systems or intermediate networks.
  - Only incremental upgrade of edges (e.g., customer premise access point).
  - Bottleneck is CoS FIFO.
  - Edge knows congestion state and can apply stateful QoS mechanisms.
- Drawbacks:
  - Congestion control cannot react faster than propagation delay. Loose control of delay and delay variance.
  - Only appropriate for data and streaming (non-live) multimedia.
  - Must configure edges and potential bottlenecks.

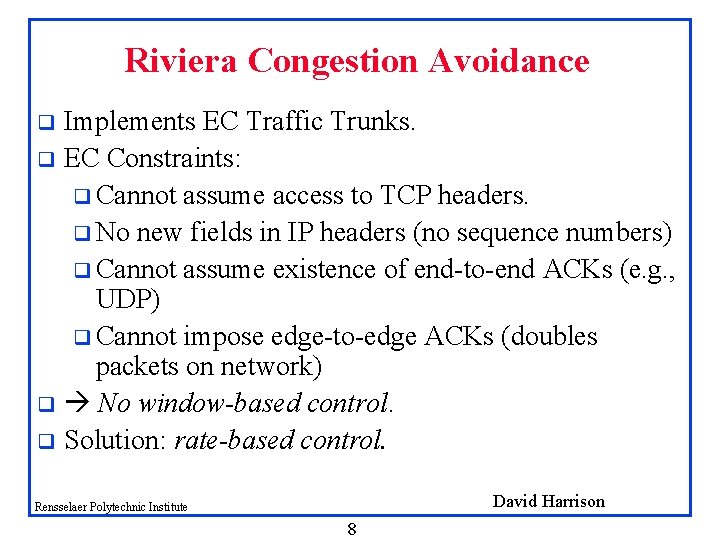

Riviera Congestion Avoidance
- Implements EC traffic trunks.
- EC constraints:
  - Cannot assume access to TCP headers.
  - No new fields in IP headers (no sequence numbers).
  - Cannot assume existence of end-to-end ACKs (e.g., UDP).
  - Cannot impose edge-to-edge ACKs (doubles packets on network).
  - No window-based control.
- Solution: rate-based control.

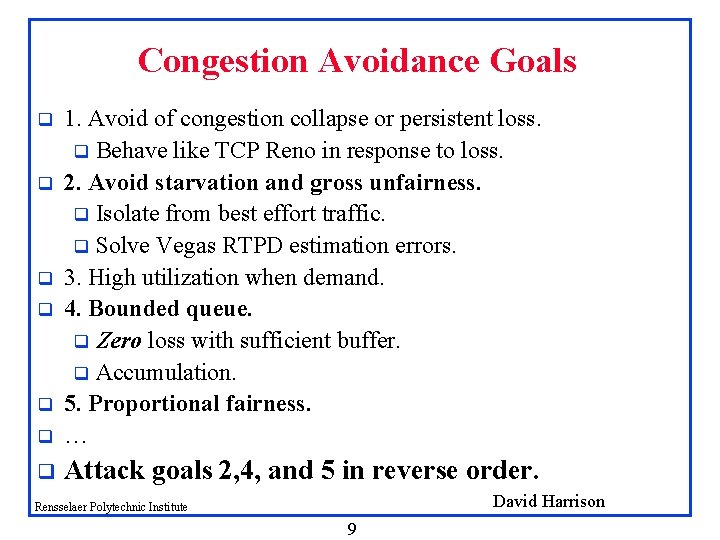

Congestion Avoidance Goals
1. Avoid congestion collapse or persistent loss.
   - Behave like TCP Reno in response to loss.
2. Avoid starvation and gross unfairness.
   - Isolate from best-effort traffic.
   - Solve Vegas RTPD estimation errors.
3. High utilization when there is demand.
4. Bounded queue.
   - Zero loss with sufficient buffer.
   - Accumulation.
5. Proportional fairness.
…
Attack goals 2, 4, and 5 in reverse order.

Mechanisms for Fairness and Bounded Queue
- Estimate this control loop's backlog in path.
    if backlog > max_thresh: congestion = true
    else if backlog <= min_thresh: congestion = false
- All control loops try to maintain between min_thresh and max_thresh backlog in path => bounded queue (Goal 4).
- Each control loop has roughly equal backlog in path => proportional fairness [Low] (Goal 5).
- We'll come back to Goal 5.
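The two-threshold rule on this slide is a hysteresis: the congestion flag only changes when the backlog estimate leaves the band between min_thresh and max_thresh. A minimal sketch of that rule follows; the class and method names are hypothetical, and how the backlog estimate is obtained is left outside the sketch.

```python
class CongestionDetector:
    """Two-threshold (hysteresis) congestion flag for one control loop.

    Names are illustrative; only the threshold rule comes from the slide.
    """

    def __init__(self, min_thresh, max_thresh):
        if min_thresh > max_thresh:
            raise ValueError("min_thresh must not exceed max_thresh")
        self.min_thresh = min_thresh
        self.max_thresh = max_thresh
        self.congestion = False

    def update(self, backlog):
        """Feed one backlog estimate and return the current flag."""
        if backlog > self.max_thresh:
            self.congestion = True
        elif backlog <= self.min_thresh:
            self.congestion = False
        # Between the thresholds the previous state is kept.
        return self.congestion


detector = CongestionDetector(min_thresh=2, max_thresh=10)
states = [detector.update(b) for b in (5, 11, 5, 2)]
print(states)
```

In the usage lines, a backlog of 5 is reported as uncongested before the flag has been set and as congested afterwards, which is the intended hysteresis behaviour.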

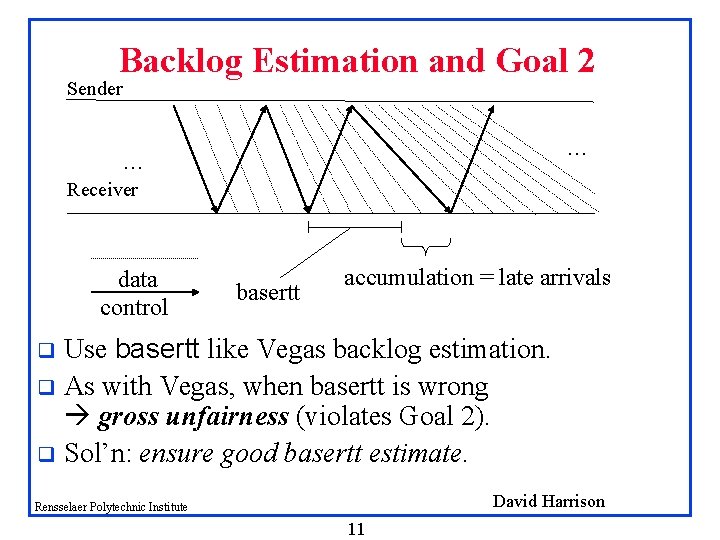

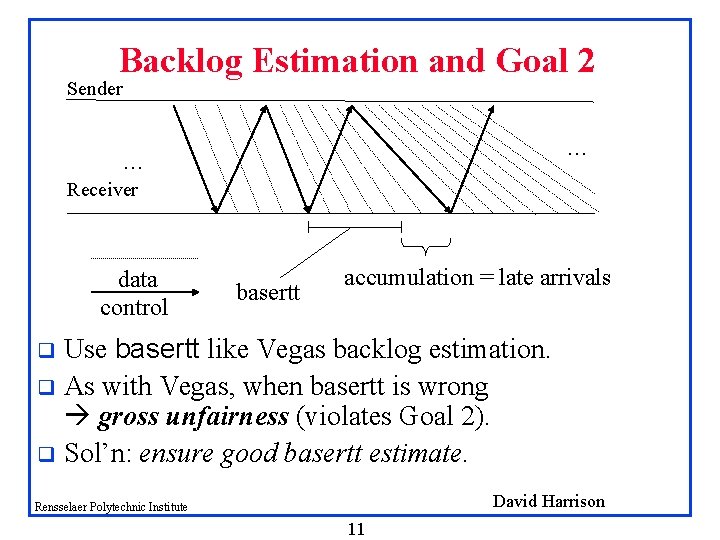

Backlog Estimation and Goal 2
[Diagram: sender and receiver timelines showing data and control packets, basertt, and accumulation = late arrivals.]
- Use basertt, as in Vegas backlog estimation.
- As with Vegas, when basertt is wrong => gross unfairness (violates Goal 2).
- Solution: ensure a good basertt estimate.
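The slide does not give the estimator itself, so the following is only a sketch of one common Vegas-style reading: the backlog a loop keeps in the path is approximated as its sending rate multiplied by the queueing delay (measured RTT minus basertt). The function name, the units, and the formula are assumptions, not the author's stated method.

```python
def estimate_backlog(rate_pps, rtt_s, basertt_s):
    """Approximate packets queued in the path for one control loop.

    Assumption: backlog ~= rate * (rtt - basertt), a Vegas-like estimate.
    rate_pps is in packets per second; rtt_s and basertt_s are in seconds.
    """
    queueing_delay = max(0.0, rtt_s - basertt_s)
    return rate_pps * queueing_delay


# With a correct basertt of 40 ms, a 50 ms RTT at 1000 pkt/s is ~10 packets.
good = estimate_backlog(1000.0, 0.050, 0.040)
# If basertt is overestimated as 50 ms, the same loop sees no backlog at all.
stale = estimate_backlog(1000.0, 0.050, 0.050)
print(good, stale)
```

The second call illustrates why a wrong basertt matters: a loop that believes it has no backlog keeps increasing while loops with a correct estimate back off.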

Vegas & Delay Increase (Goal 2)
- Vegas sets basertt to the minimum RTT seen so far.
- GROSS UNFAIRNESS!

Riviera Round-trip Propagation Delay (RTPD) Estimation (Goal 2)
- Reduce gross unfairness with good RTPD estimation.
- Minimum of last k=30 control packet RTTs.
- Drain queues in path so an RTT in the last k RTTs likely reflects RTPD.
- Set max_thresh high enough to avoid excessive false positives.
- Set min_thresh low enough to ensure queue drain.
- Provision drain capacity with each decrease step.
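A windowed minimum like "minimum of last k=30 control packet RTTs" can be sketched with a bounded deque. The class name and interface below are hypothetical; only the window size k=30 and the use of the minimum come from the slide.

```python
from collections import deque


class RtpdEstimator:
    """Minimum over the last k control-packet RTT samples (illustrative)."""

    def __init__(self, k=30):
        # A deque with maxlen drops the oldest sample automatically.
        self.samples = deque(maxlen=k)

    def add_rtt(self, rtt):
        """Record one RTT sample and return the current RTPD estimate."""
        self.samples.append(rtt)
        return min(self.samples)


est = RtpdEstimator(k=3)          # small window just for the demonstration
history = [est.add_rtt(r) for r in (10, 12, 11, 13)]
print(history)
```

Unlike a minimum over all samples seen so far, the estimate rises once the old low sample leaves the window, so it can follow an increase in propagation delay.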

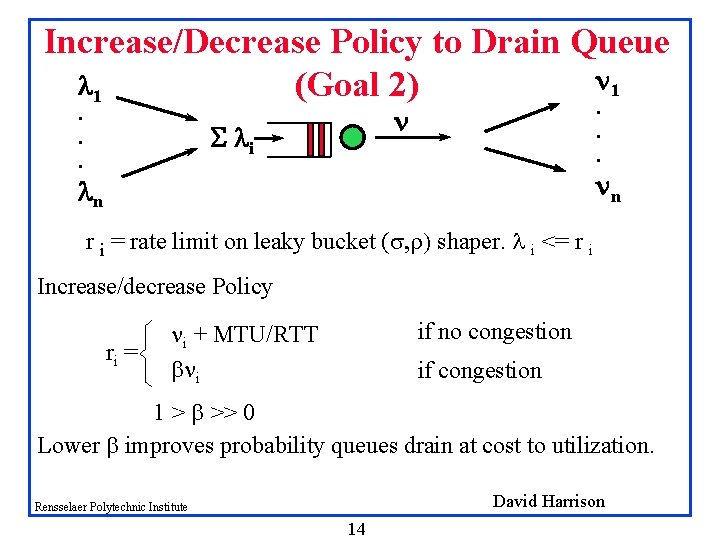

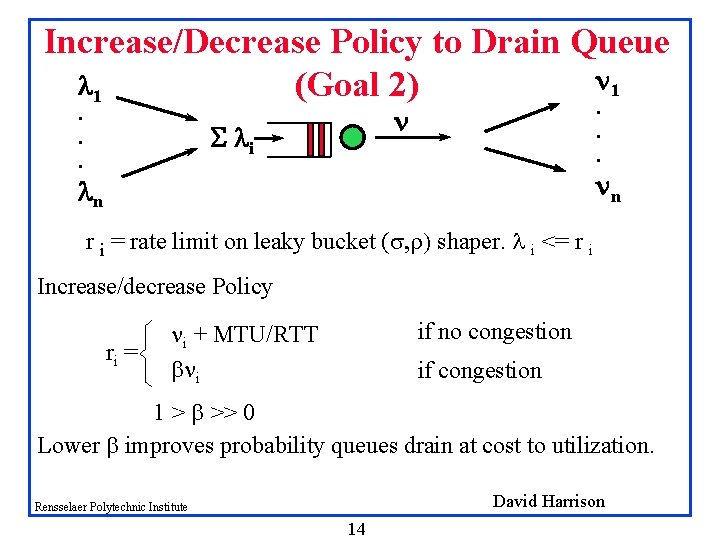

Increase/Decrease Policy to Drain Queue (Goal 2)
[Diagram: trunks 1, …, i, …, n.]
- r_i = rate limit on leaky bucket (s, r) shaper; the shaped rate is <= r_i.
- Increase/decrease policy:

$$
r_i = \begin{cases}
n_i + \mathrm{MTU}/\mathrm{RTT} & \text{if no congestion} \\
b\, n_i & \text{if congestion}
\end{cases}
\qquad 1 > b \gg 0
$$

- Lower b improves the probability that queues drain, at a cost to utilization.
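The policy on this slide is additive increase by MTU/RTT and multiplicative decrease by b. A minimal sketch follows, assuming rates in bytes per second, MTU in bytes, and RTT in seconds; the function name and the default b = 0.5 are illustrative choices, not values fixed by the slide.

```python
def next_rate_limit(n_i, congested, mtu, rtt, b=0.5):
    """Return the new shaper rate limit r_i for one trunk.

    n_i: current rate (bytes/s); mtu in bytes; rtt in seconds.
    b is the multiplicative decrease factor with 1 > b >> 0.
    """
    if not 0.0 < b < 1.0:
        raise ValueError("b must lie strictly between 0 and 1")
    if congested:
        return b * n_i          # multiplicative decrease
    return n_i + mtu / rtt      # additive increase of one MTU per RTT


up = next_rate_limit(1000.0, congested=False, mtu=1500, rtt=0.5)
down = next_rate_limit(1000.0, congested=True, mtu=1500, rtt=0.5)
print(up, down)
```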

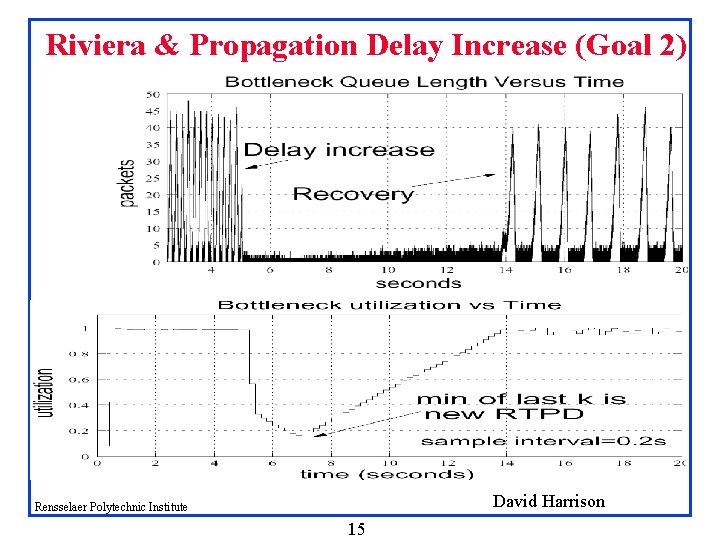

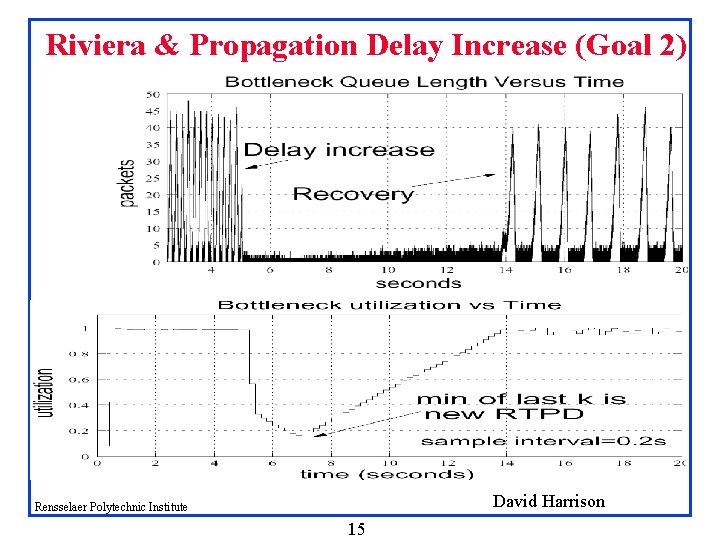

Riviera & Propagation Delay Increase (Goal 2)

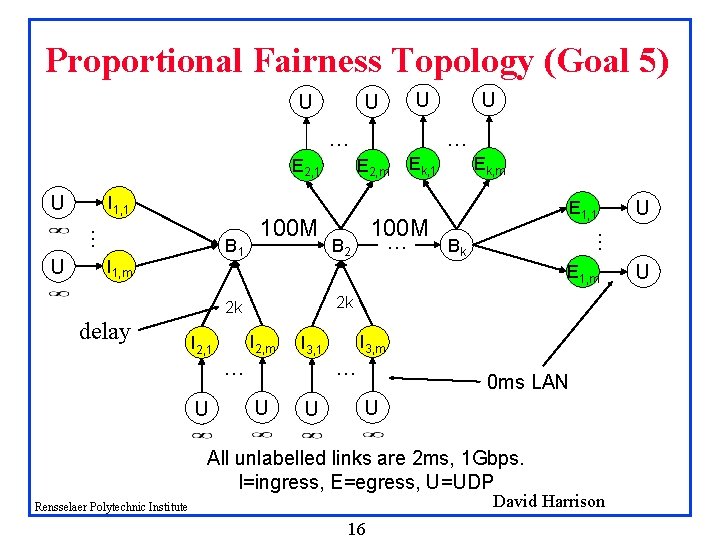

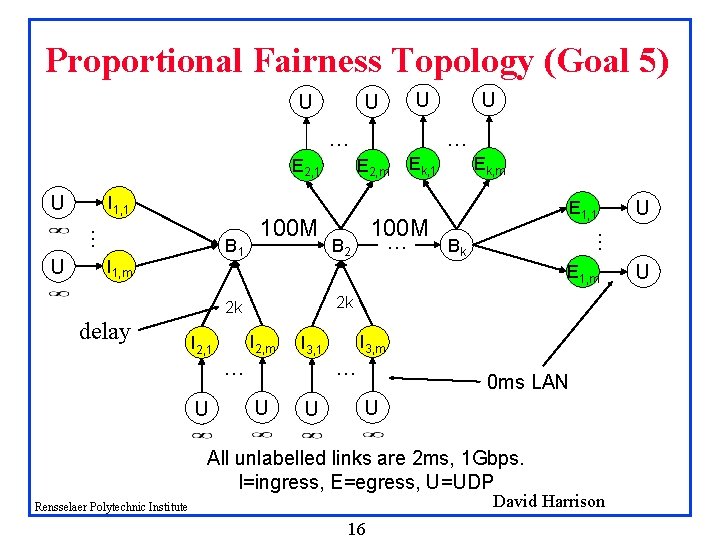

Proportional Fairness Topology (Goal 5)
[Topology diagram labels: ingresses I1,1 … I1,m, I2,1 … I2,m, I3,1 … I3,m; egresses E1,1 … E1,m, E2,1 … E2,m, …, Ek,1 … Ek,m; bottlenecks B1, B2, …, Bk (100 M); UDP sources (U); 0 ms LAN; 2k delay.]
All unlabelled links are 2 ms, 1 Gbps. I=ingress, E=egress, U=UDP.

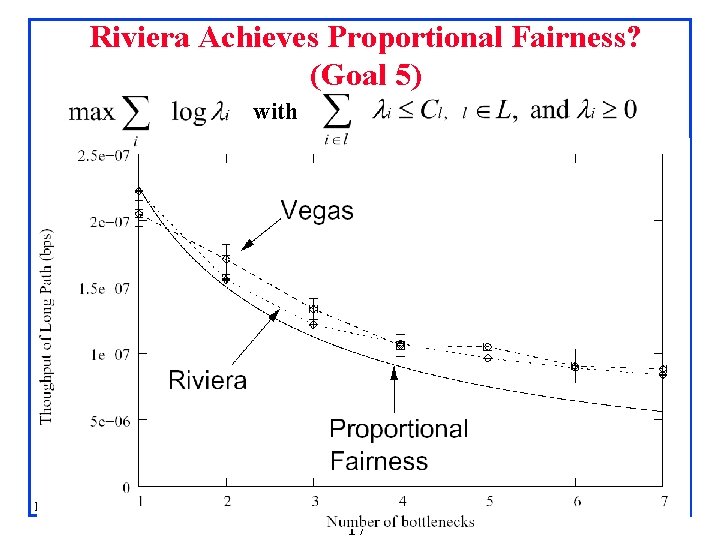

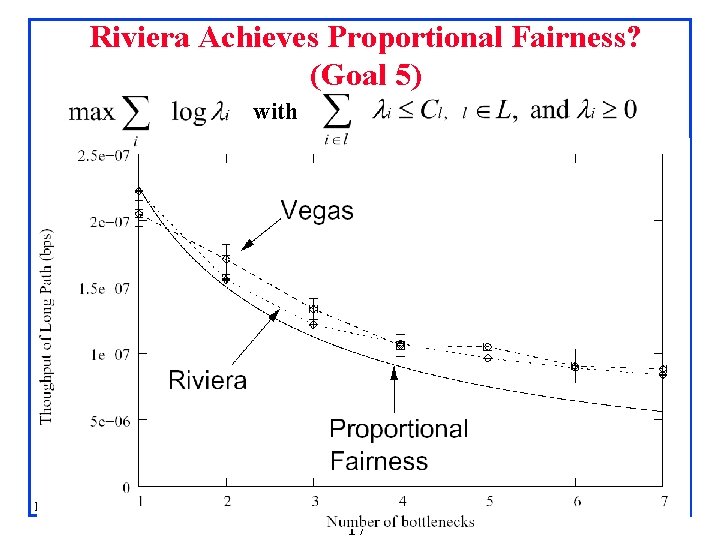

Riviera Achieves Proportional Fairness? (Goal 5)

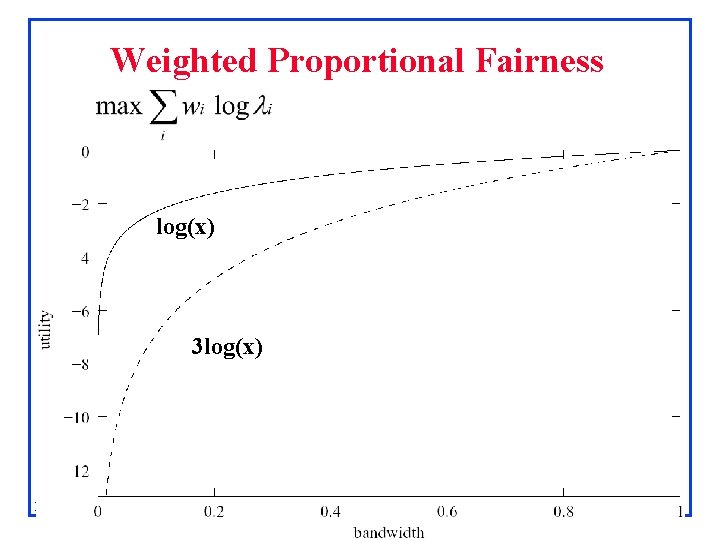

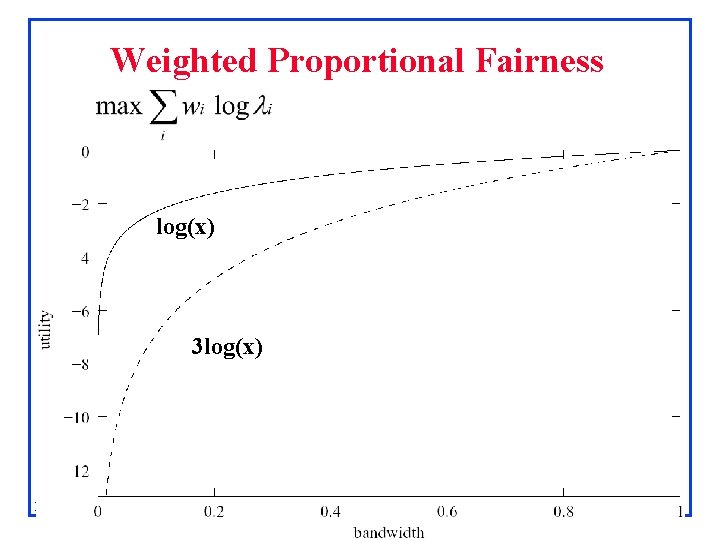

Weighted Proportional Fairness
[Plot: utility curves log(x) and 3 log(x).]

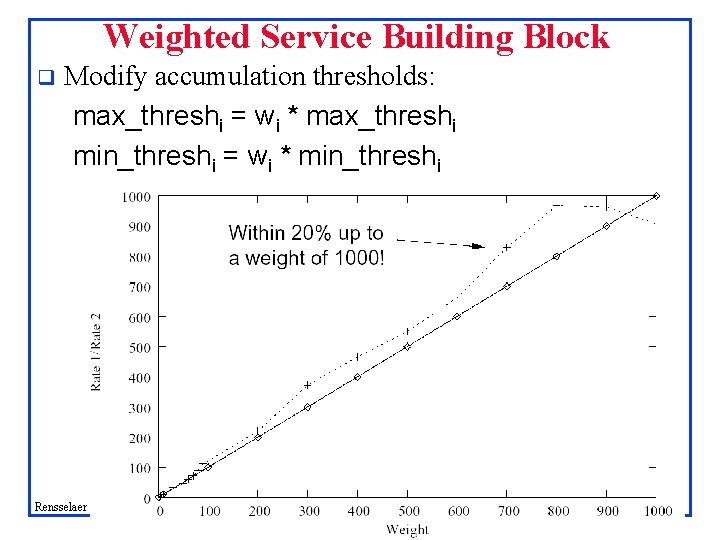

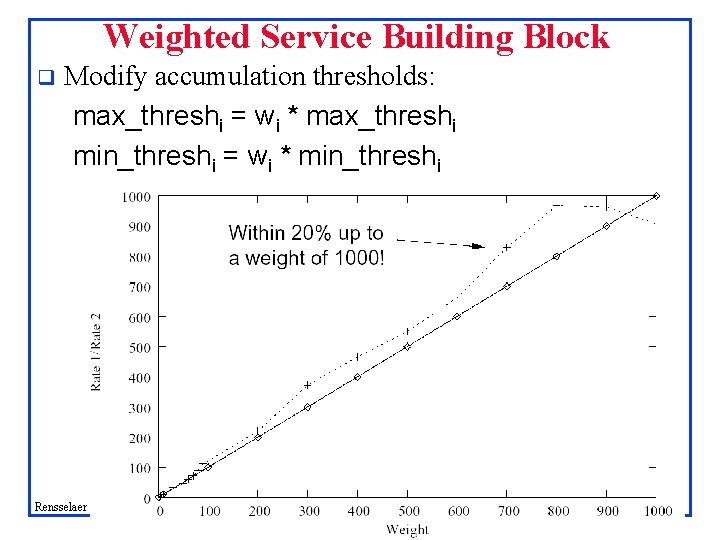

Weighted Service Building Block
- Modify accumulation thresholds:
    max_thresh_i = w_i * max_thresh
    min_thresh_i = w_i * min_thresh
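The weighted building block only rescales the two accumulation thresholds per trunk. A small sketch of that scaling follows; the function name and the example base thresholds are assumptions chosen for illustration.

```python
def weighted_thresholds(w_i, min_thresh, max_thresh):
    """Scale the base accumulation thresholds by trunk weight w_i."""
    if w_i <= 0:
        raise ValueError("weight must be positive")
    return w_i * min_thresh, w_i * max_thresh


# Hypothetical base thresholds of 2 and 10 packets.
base = weighted_thresholds(1, 2, 10)
heavy = weighted_thresholds(3, 2, 10)
print(base, heavy)
```

A trunk with weight 3 is allowed to hold three times the backlog of a weight-1 trunk before it declares congestion, which is how the weight biases the bandwidth split.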

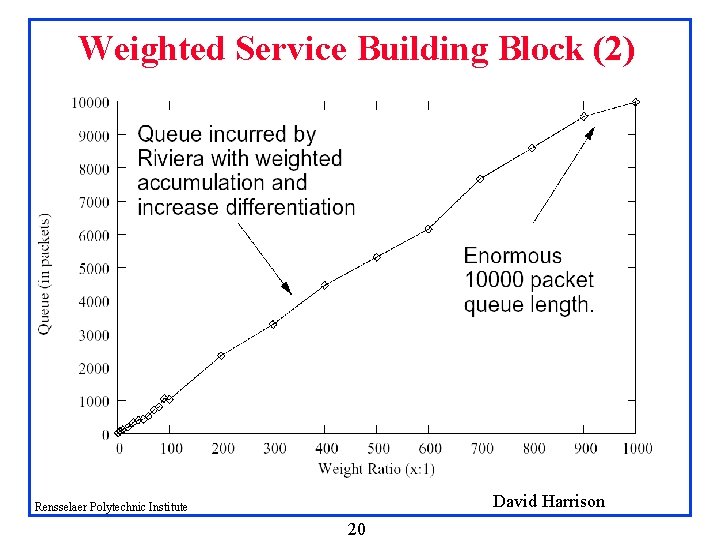

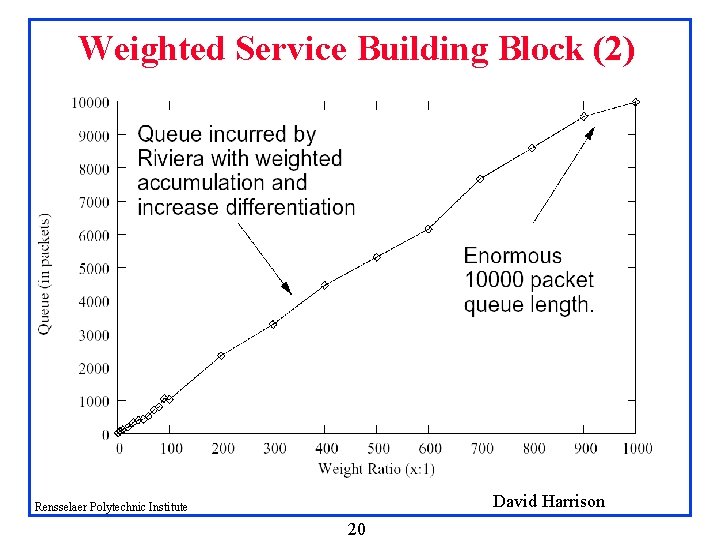

Weighted Service Building Block (2)

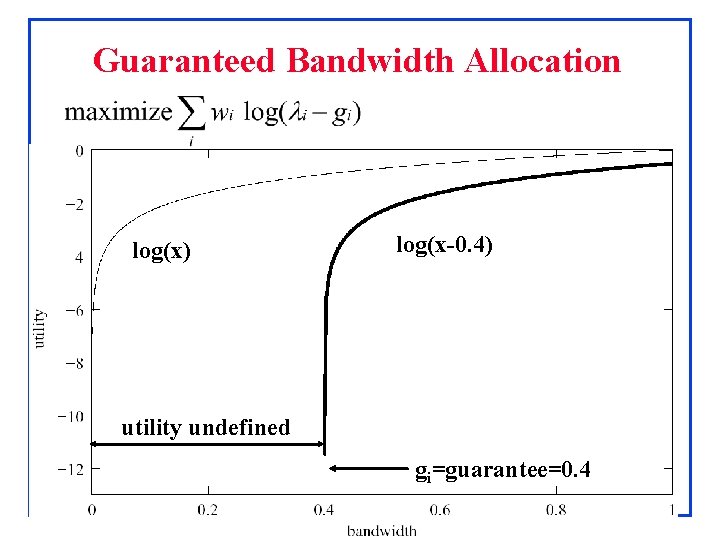

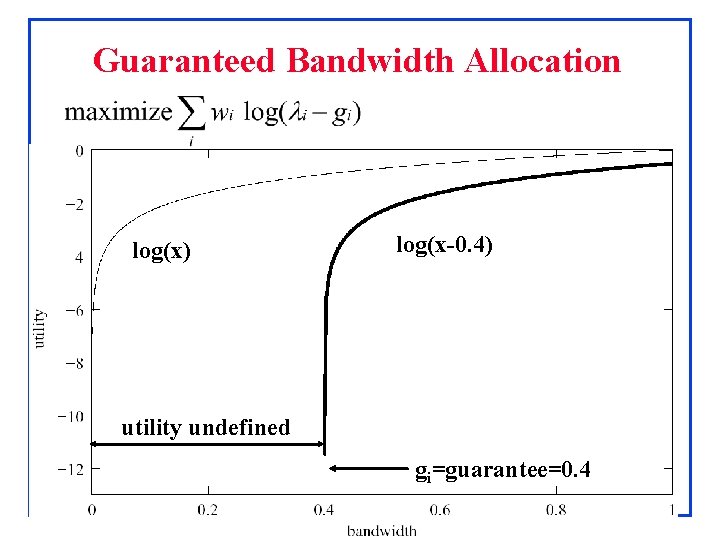

Guaranteed Bandwidth Allocation
[Plot: utility curves log(x) and log(x-0.4); utility is undefined below the guarantee g_i = 0.4.]

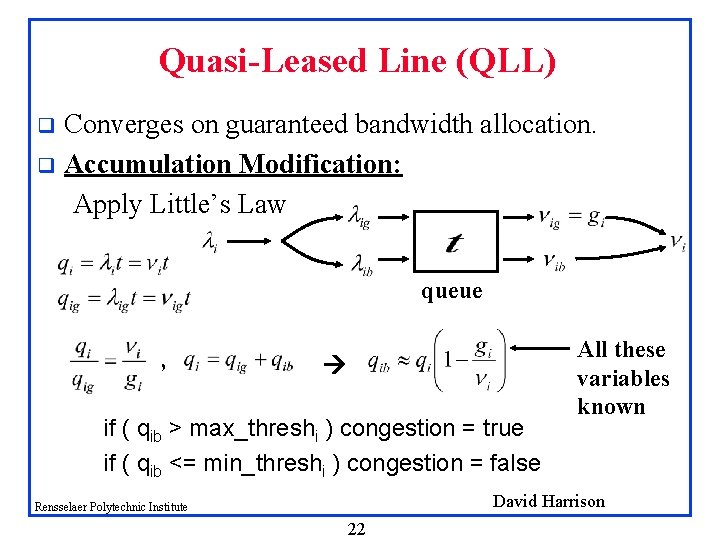

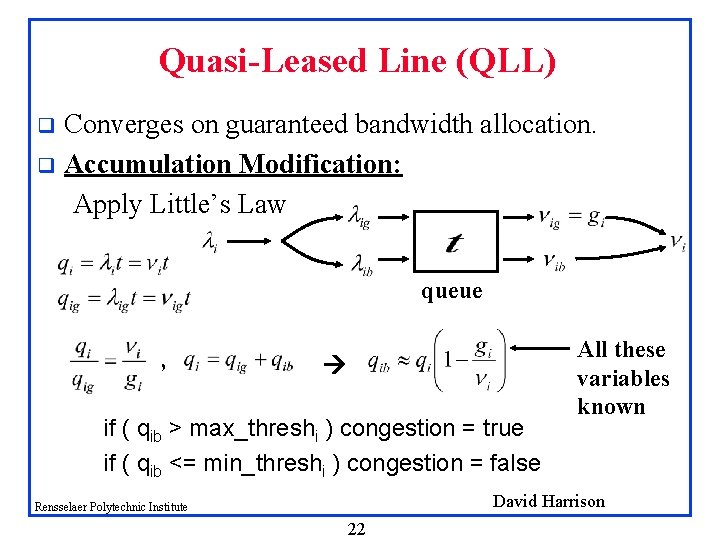

Quasi-Leased Line (QLL)
- Converges on guaranteed bandwidth allocation.
- Accumulation modification: apply Little's Law to the queue.
    if (q_i^b > max_thresh_i) congestion = true
    if (q_i^b <= min_thresh_i) congestion = false
- All these variables are known.

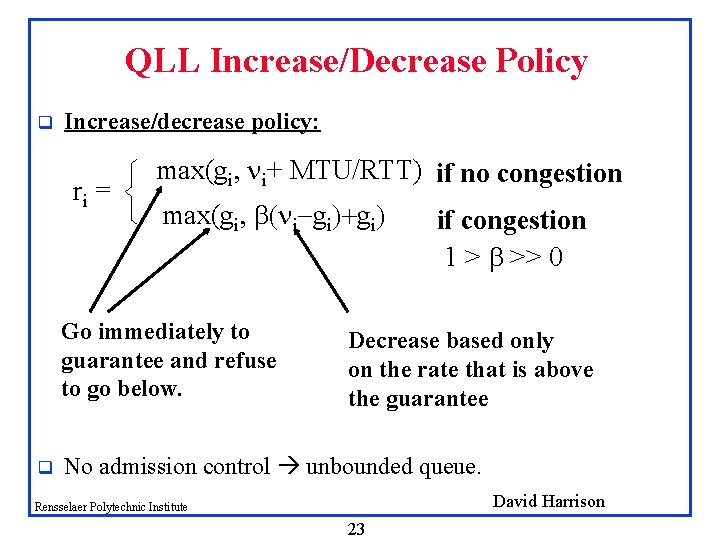

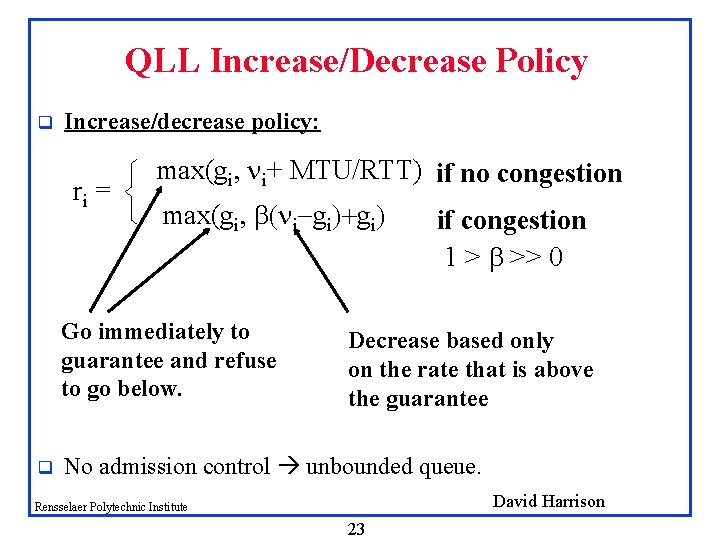

QLL Increase/Decrease Policy
- Increase/decrease policy:

$$
r_i = \begin{cases}
\max\left(g_i,\; n_i + \mathrm{MTU}/\mathrm{RTT}\right) & \text{if no congestion} \\
\max\left(g_i,\; b\,(n_i - g_i) + g_i\right) & \text{if congestion}
\end{cases}
\qquad 1 > b \gg 0
$$

- Go immediately to the guarantee and refuse to go below it.
- Decrease based only on the rate that is above the guarantee.
- No admission control => unbounded queue.
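The QLL policy differs from the best-effort one in two ways: the rate never drops below the guarantee g_i, and the multiplicative decrease applies only to the portion above g_i. A minimal sketch under the same unit assumptions as a plain rate controller (rates in any consistent unit per second, RTT in seconds); the function name and default b are illustrative.

```python
def qll_next_rate(n_i, g_i, congested, mtu, rtt, b=0.5):
    """Return the new rate limit for a quasi-leased-line trunk.

    g_i is the guaranteed rate; b (1 > b >> 0) scales only the
    excess above the guarantee when congestion is signalled.
    """
    if not 0.0 < b < 1.0:
        raise ValueError("b must lie strictly between 0 and 1")
    if congested:
        return max(g_i, b * (n_i - g_i) + g_i)
    return max(g_i, n_i + mtu / rtt)


# Above the guarantee: only the 30 units of excess are halved.
above = qll_next_rate(80.0, 50.0, congested=True, mtu=1.0, rtt=0.5)
# Below the guarantee: the trunk jumps straight to g_i in either state.
start_idle = qll_next_rate(10.0, 50.0, congested=False, mtu=1.0, rtt=0.5)
start_cong = qll_next_rate(10.0, 50.0, congested=True, mtu=1.0, rtt=0.5)
print(above, start_idle, start_cong)
```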

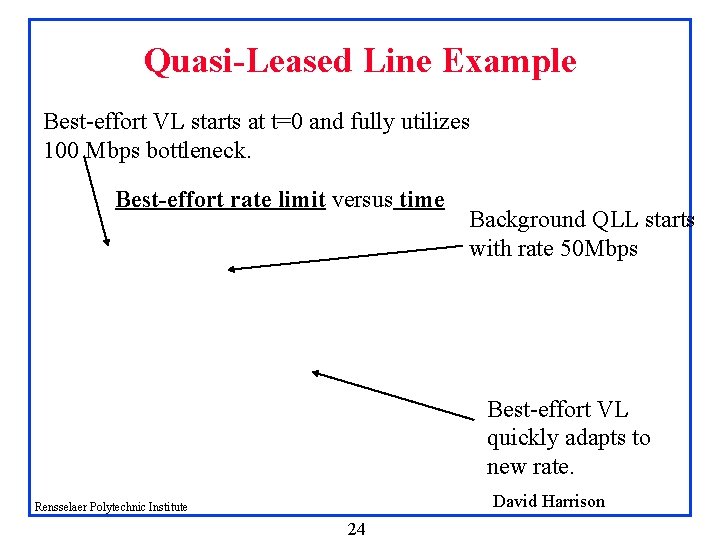

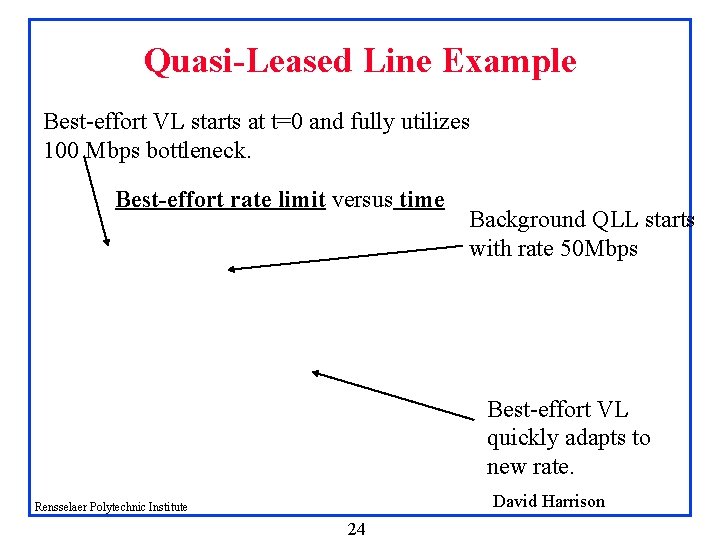

Quasi-Leased Line Example
[Plot: best-effort rate limit versus time.]
- Best-effort VL starts at t=0 and fully utilizes the 100 Mbps bottleneck.
- Background QLL starts with rate 50 Mbps.
- Best-effort VL quickly adapts to the new rate.

Quasi-Leased Line Example (cont.)
[Plot: bottleneck queue versus time.]
- Starting QLL incurs backlog.
- Unlike TCP, VL traffic trunks back off without requiring loss and without bottleneck assistance.
- Requires more buffers: larger max queue.

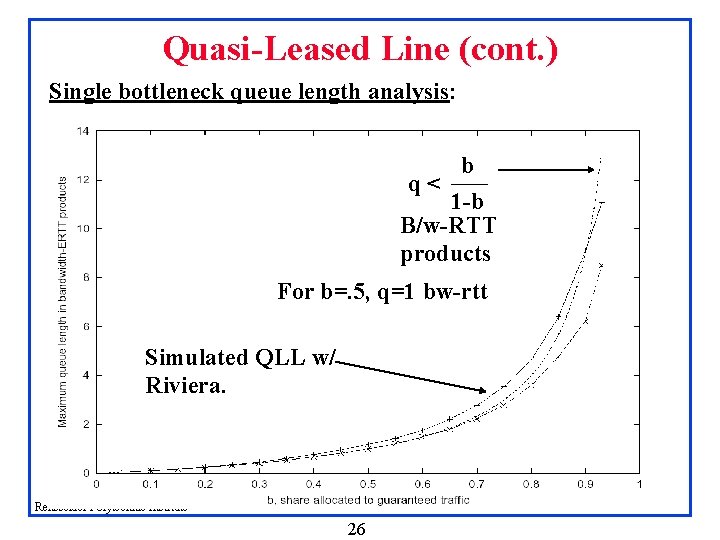

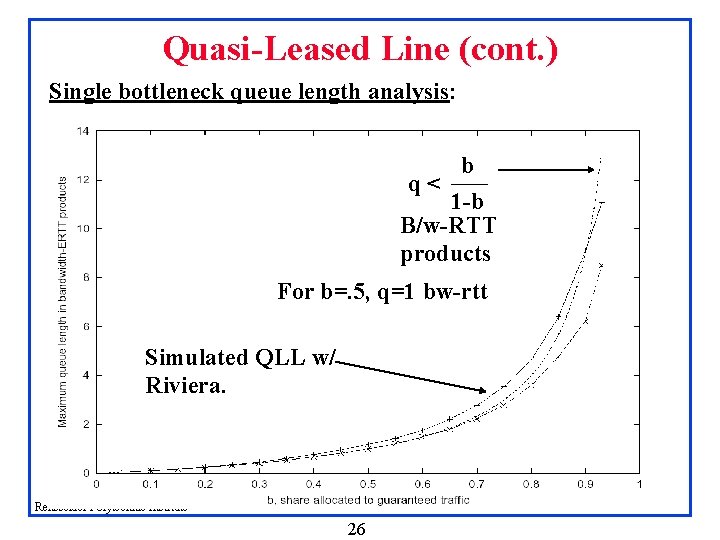

Quasi-Leased Line (cont.)
- Single bottleneck queue length analysis: $q < \frac{b}{1-b}$ bandwidth-RTT products.
- For b = 0.5, q = 1 bandwidth-RTT product.
[Plot: simulated QLL with Riviera.]
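As a worked check of the bound, reading the garbled fraction on this slide as $b/(1-b)$ (an assumption, though it is the reading that matches the slide's own value for b = 0.5 and that grows as back-off becomes gentler):

```latex
% Queue bound in units of bandwidth-RTT products, assuming q < b / (1 - b).
\[
  b = 0.5:\quad q < \frac{0.5}{1 - 0.5} = 1
  \qquad\qquad
  b = 0.75:\quad q < \frac{0.75}{1 - 0.75} = 3
\]
```

Under this reading, a gentler decrease (b closer to 1) raises the buffer needed at the bottleneck, which is consistent with the earlier remark that a lower b helps queues drain at a cost to utilization.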

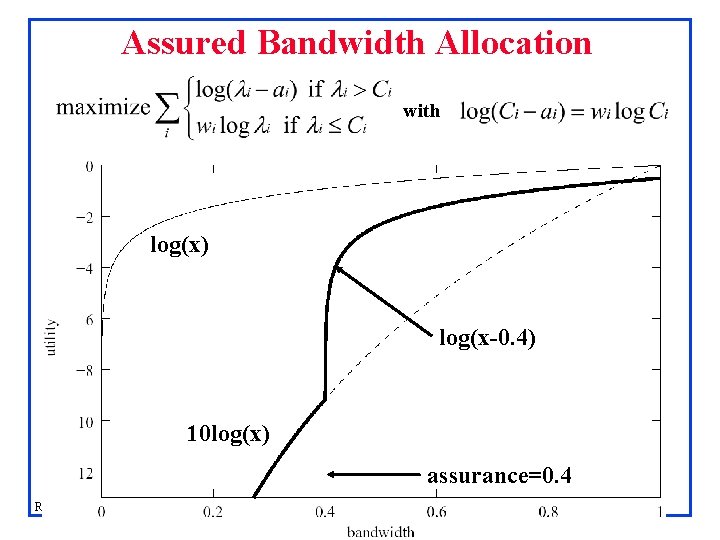

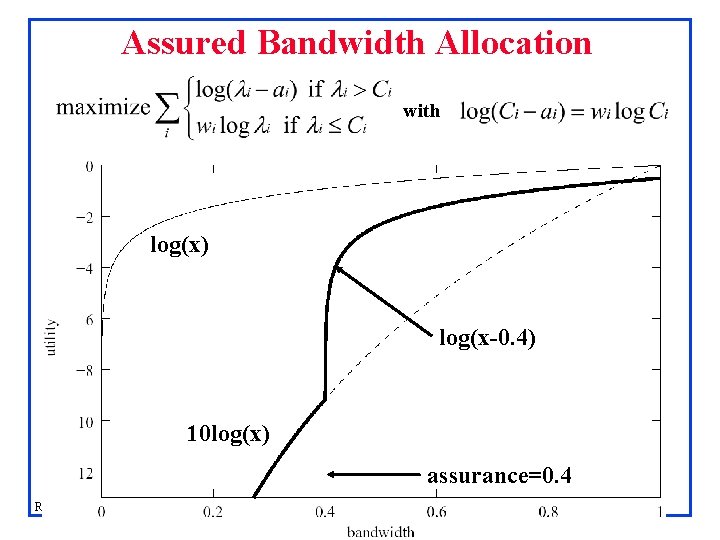

Assured Bandwidth Allocation
[Plot: utility curves log(x), log(x-0.4), and 10 log(x); assurance = 0.4.]

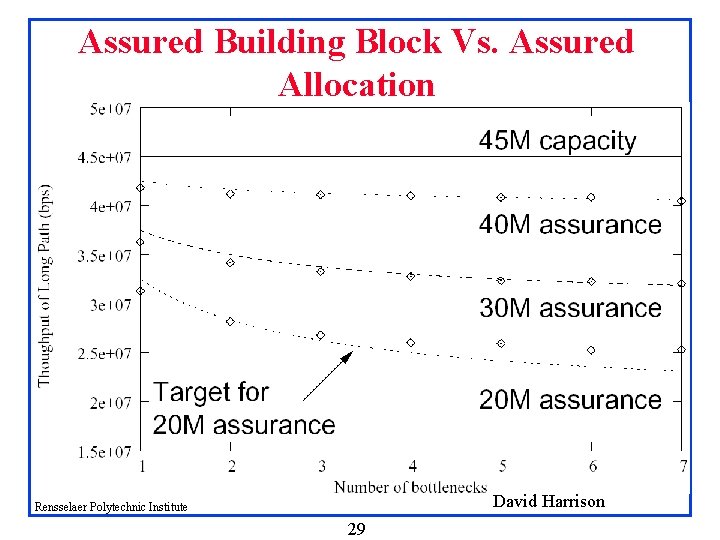

Assured Building Block
- Accumulation:
    if (q_i^b > max_thresh || q_i > w_i * max_thresh) congestion = true
    else if (q_i^b <= min_thresh && q_i <= w_i * max_thresh) congestion = false
- Increase/decrease policy:

$$
r_i = \begin{cases}
n_i + \mathrm{MTU}/\mathrm{RTT} & \text{if no congestion} \\
\min\left(b_{AS}\, n_i,\; b_{BE}\,(n_i - a_i) + a_i\right) & \text{if congestion}
\end{cases}
\qquad 1 > b_{AS} > b_{BE} \gg 0
$$

- Back off a little (b_AS) when below the assurance (a); back off the same as best effort (b_BE) when above the assurance (a).
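The min() in the assured decrease rule selects the gentle factor b_AS when the trunk is below its assurance and the best-effort factor b_BE (applied to the excess) when it is well above it. The sketch below illustrates this; the function name and the example factors 0.75 and 0.5 are assumptions, not values from the slides.

```python
def assured_next_rate(n_i, a_i, congested, mtu, rtt, b_as=0.75, b_be=0.5):
    """Return the new rate limit for an assured-bandwidth trunk.

    a_i is the assured rate; the slide requires 1 > b_as > b_be >> 0.
    """
    if not 0.0 < b_be < b_as < 1.0:
        raise ValueError("need 0 < b_be < b_as < 1")
    if congested:
        return min(b_as * n_i, b_be * (n_i - a_i) + a_i)
    return n_i + mtu / rtt


# Below the assurance (2 < 4): the gentle b_as back-off is chosen.
below = assured_next_rate(2.0, 4.0, congested=True, mtu=1.0, rtt=0.5)
# Well above the assurance (20 > 4): only the excess is cut by b_be.
above = assured_next_rate(20.0, 4.0, congested=True, mtu=1.0, rtt=0.5)
print(below, above)
```

With these example factors the two branches meet at a rate somewhat above a_i, so the transition between the gentle and the best-effort back-off is continuous rather than a jump at the assurance.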

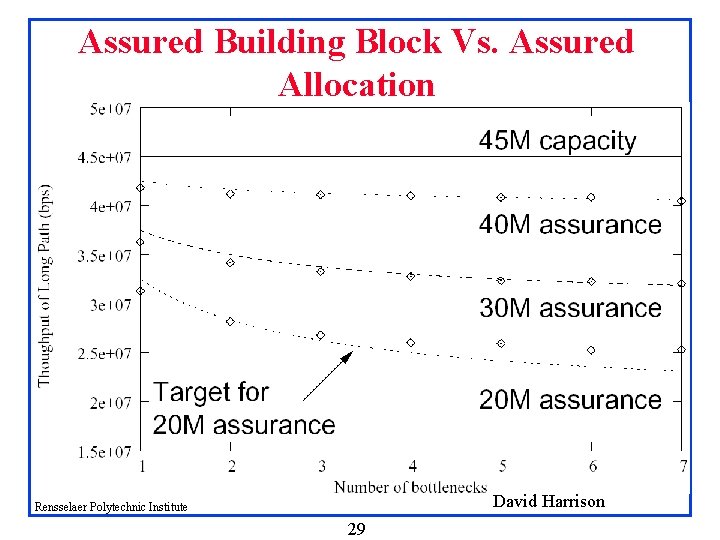

Assured Building Block vs. Assured Allocation

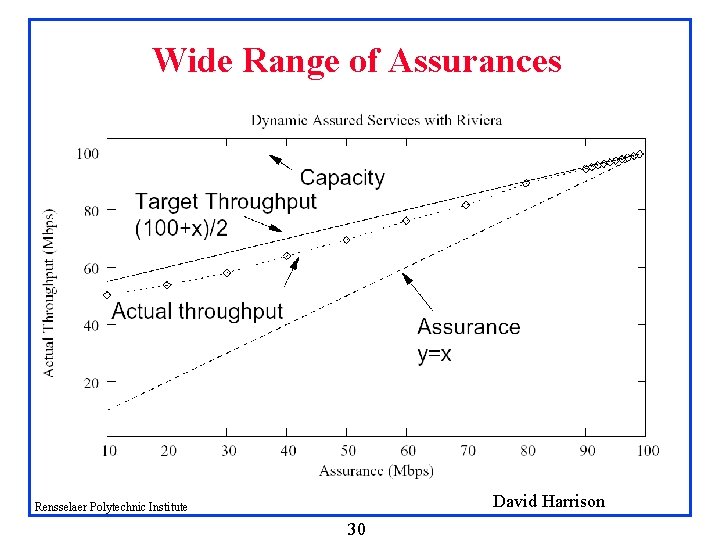

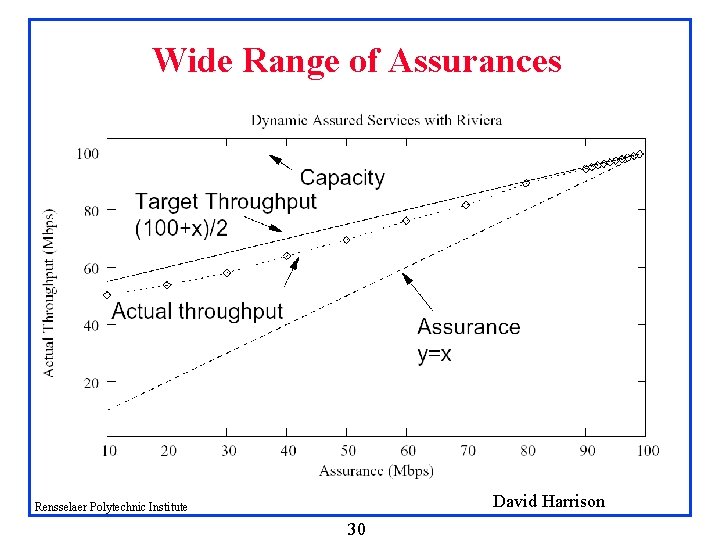

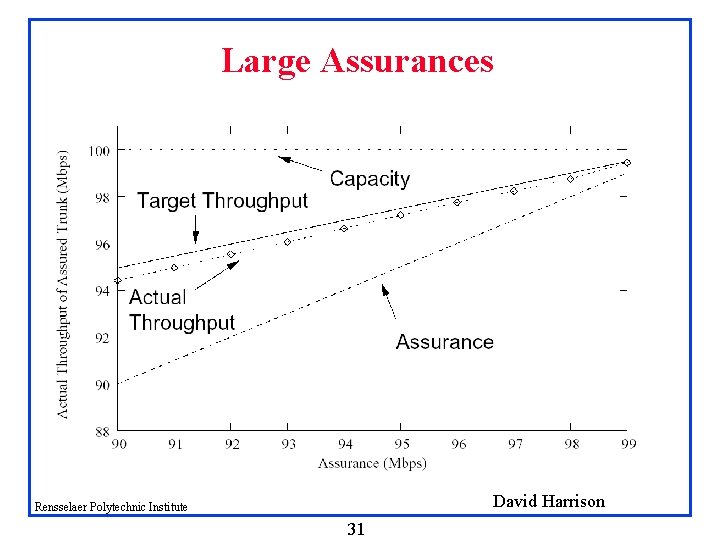

Wide Range of Assurances

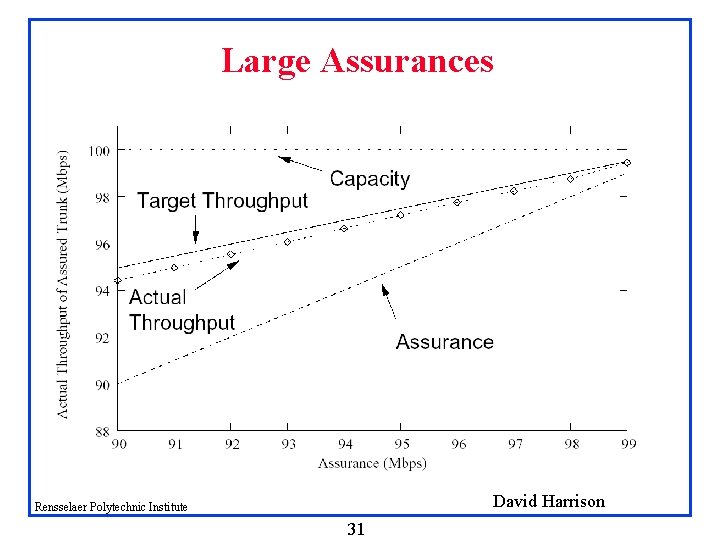

Large Assurances

Summary
- Issues:
  - Simplified overlay QoS architecture
  - Intangibles: deployment, configuration advantages
- Edge-based building blocks & overlay services:
  - A closed-loop QoS building block
  - Weighted services, assured services, quasi-leased lines

Backup Slides

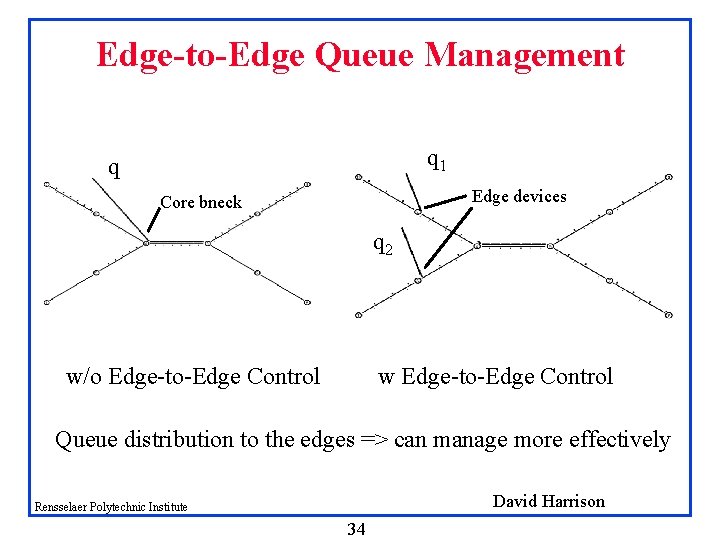

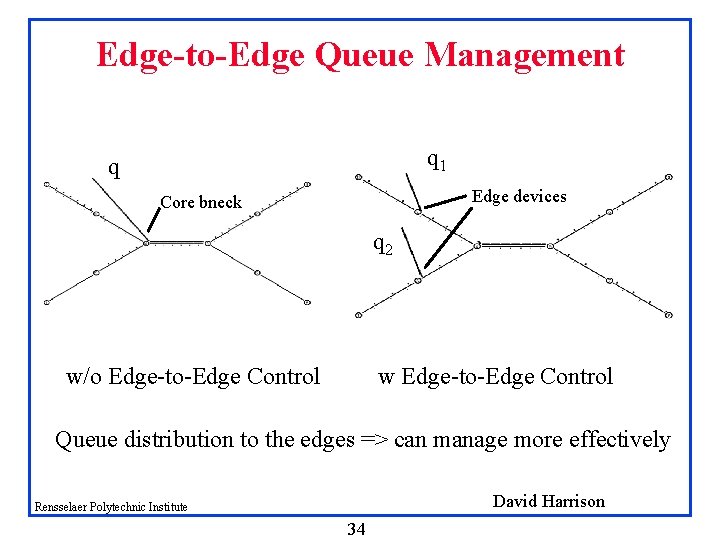

Edge-to-Edge Queue Management
[Diagram: queues q1 and q2 at the edge devices and the core bottleneck, without and with edge-to-edge control.]
Queue distribution to the edges => can manage more effectively.

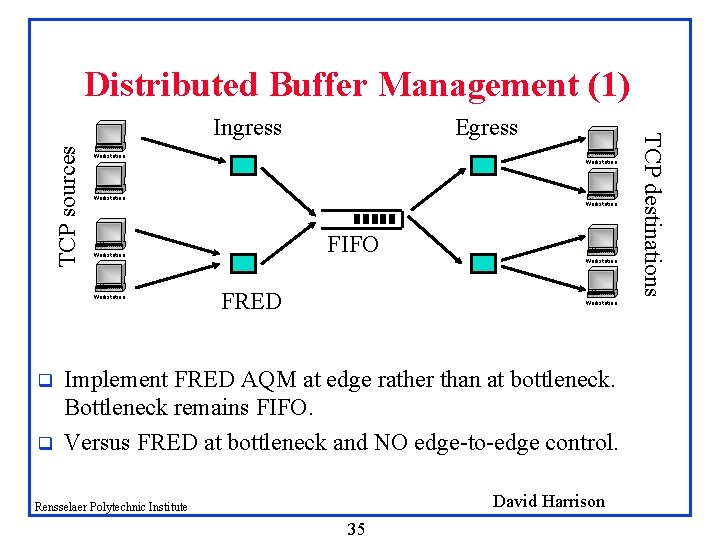

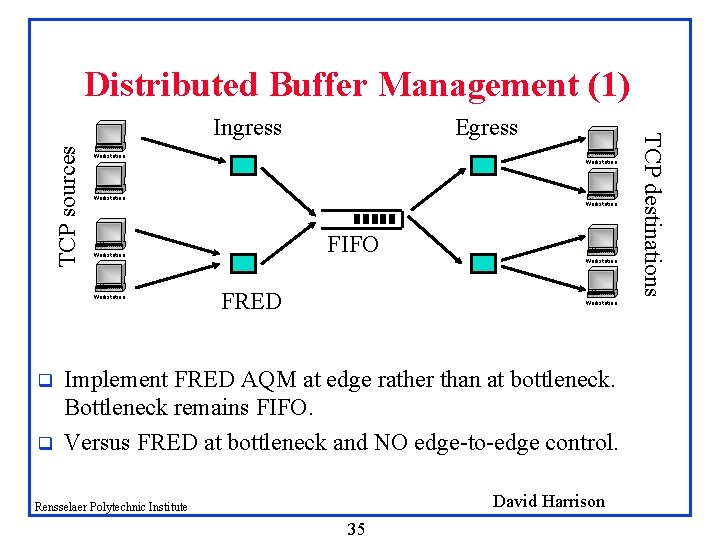

Distributed Buffer Management (1)
[Diagram labels: TCP sources and TCP destinations (workstations), ingress, egress, FRED, FIFO.]
- Implement FRED AQM at the edge rather than at the bottleneck. Bottleneck remains FIFO.
- Versus FRED at bottleneck and NO edge-to-edge control.

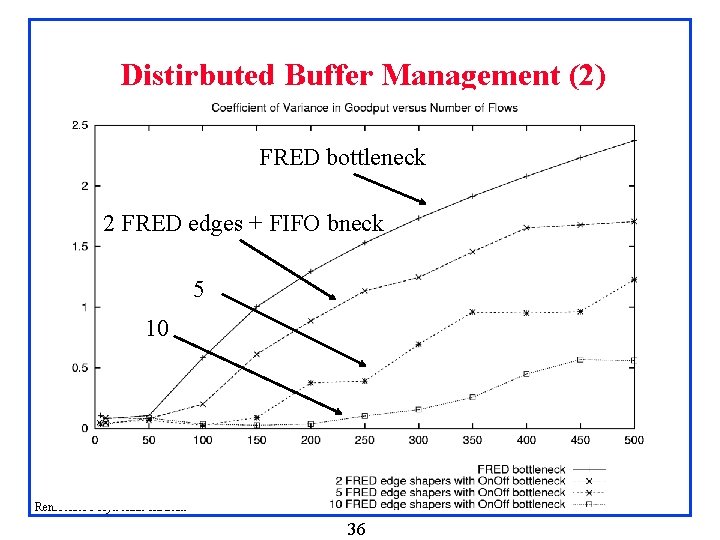

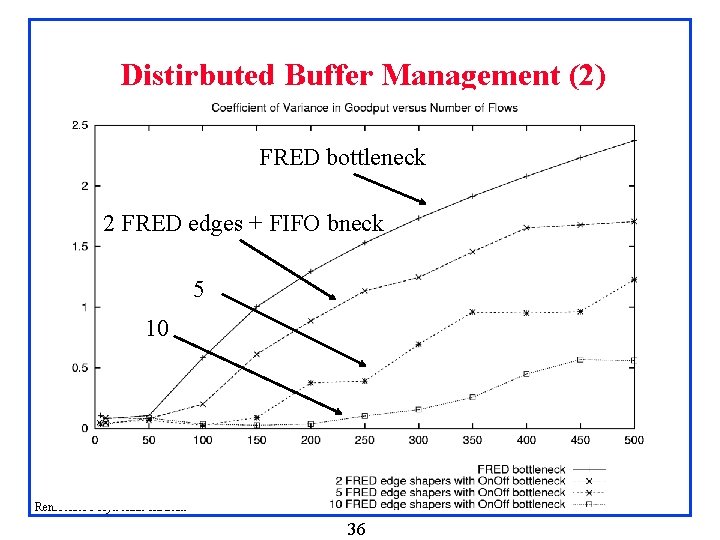

Distributed Buffer Management (2)
[Plot legend: FRED bottleneck; 2, 5, 10 FRED edges + FIFO bneck.]

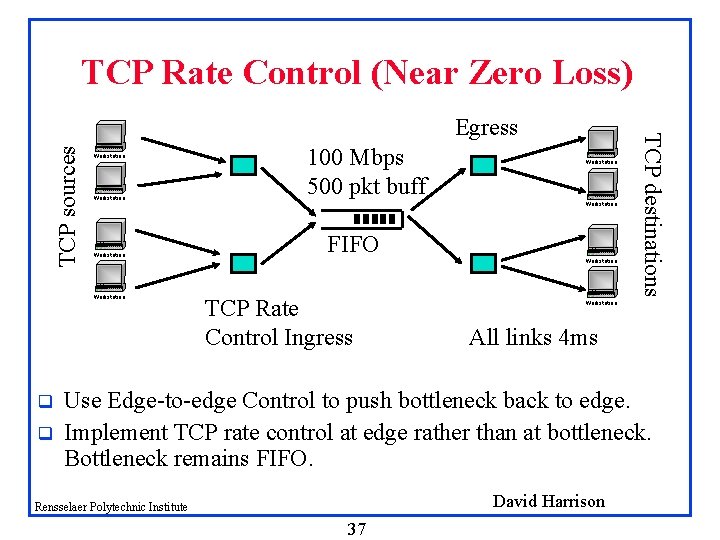

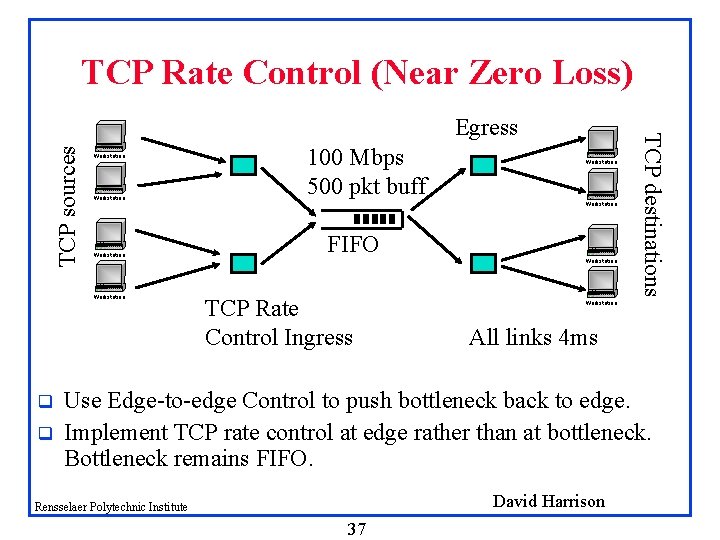

TCP Rate Control (Near Zero Loss)
[Diagram labels: TCP sources and TCP destinations (workstations), ingress, egress, TCP rate control, FIFO, 100 Mbps, 500 pkt buff; all links 4 ms.]
- Use edge-to-edge control to push the bottleneck back to the edge.
- Implement TCP rate control at the edge rather than at the bottleneck. Bottleneck remains FIFO.

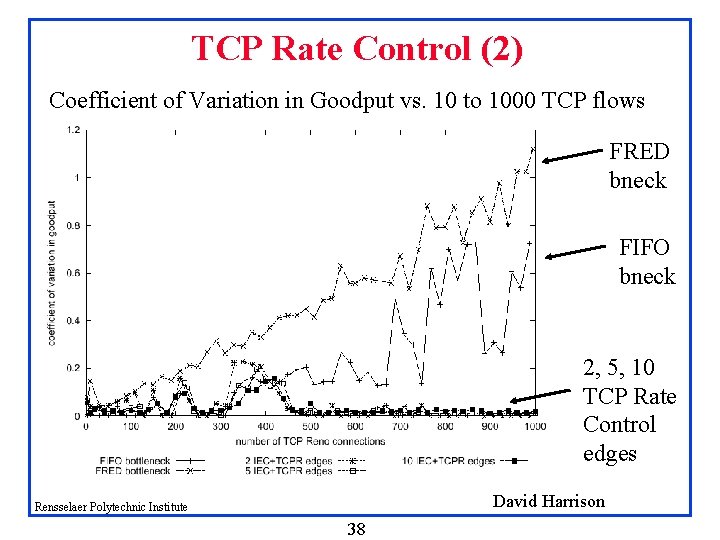

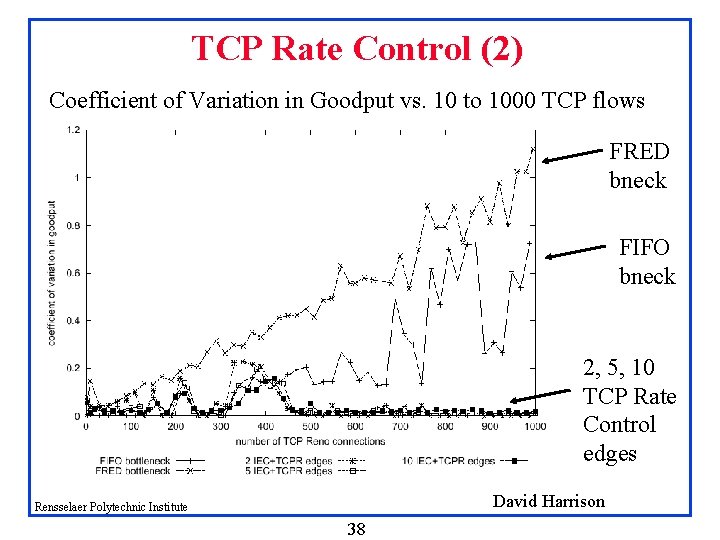

TCP Rate Control (2)
[Plot: coefficient of variation in goodput vs. 10 to 1000 TCP flows; curves: FRED bneck, FIFO bneck, and 2, 5, 10 TCP Rate Control edges.]

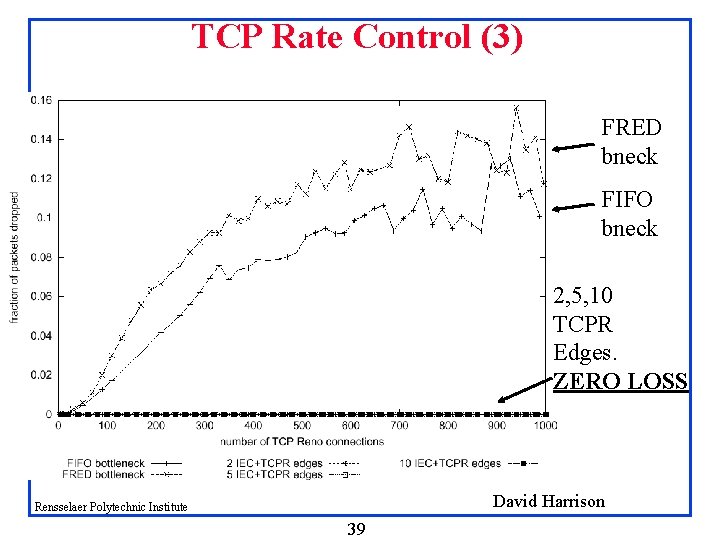

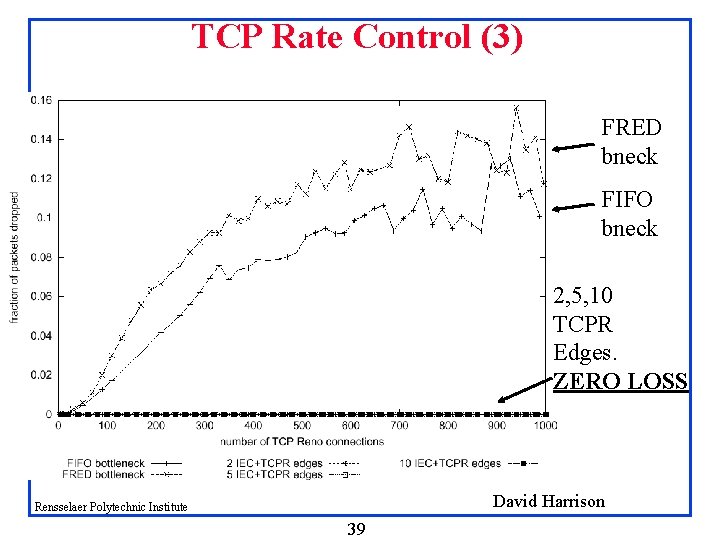

TCP Rate Control (3)
[Plot curves: FRED bneck, FIFO bneck, and 2, 5, 10 TCPR edges.]
2, 5, 10 TCPR edges. ZERO LOSS

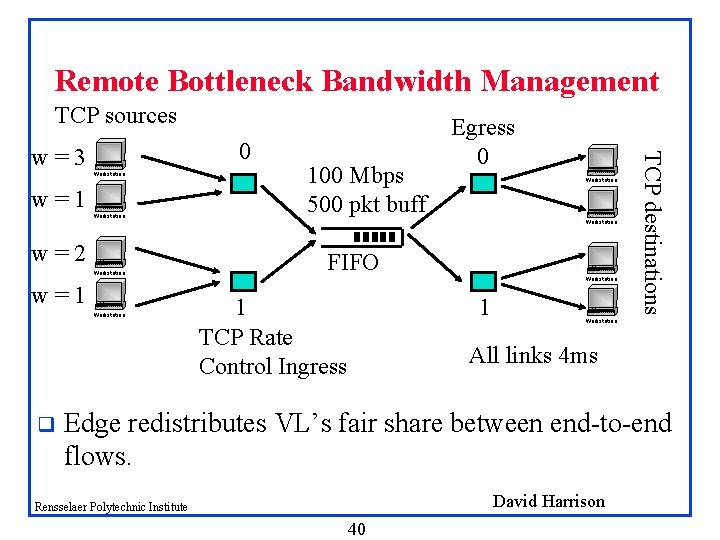

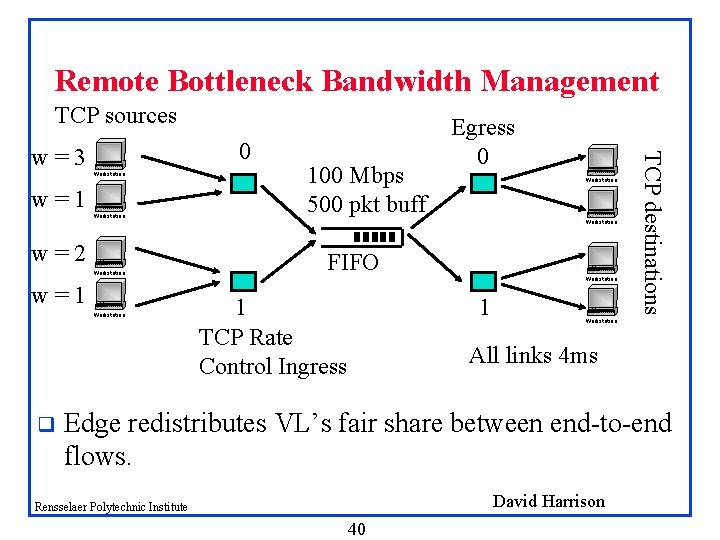

Remote Bottleneck Bandwidth Management
[Diagram labels: TCP sources (workstations) with weights w=1, w=2, w=1, w=3; ingress and egress edges numbered 0 and 1; TCP rate control; FIFO; 100 Mbps, 500 pkt buff; TCP destinations (workstations); all links 4 ms.]
- Edge redistributes the VL's fair share between end-to-end flows.

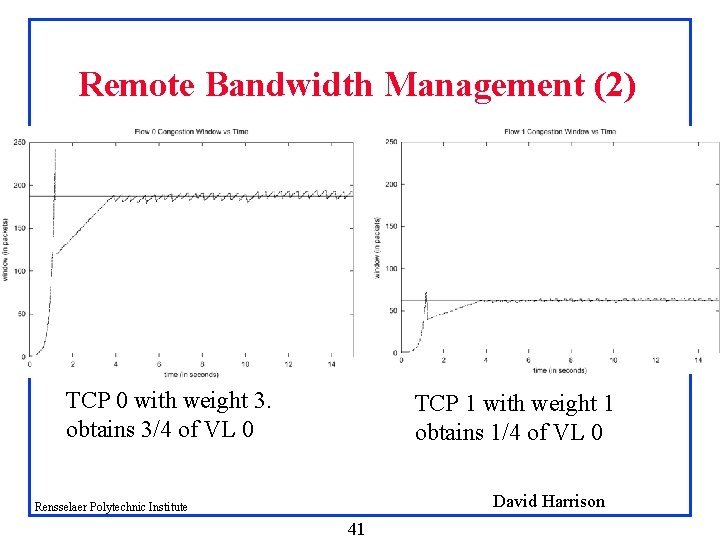

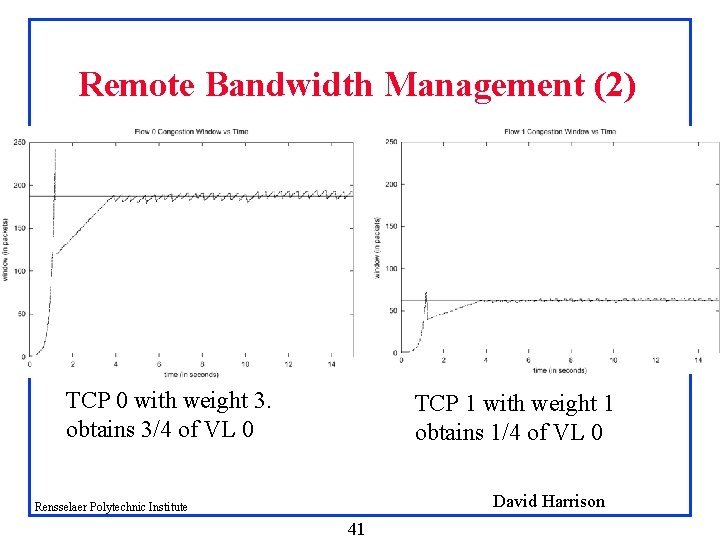

Remote Bandwidth Management (2)
- TCP 0, with weight 3, obtains 3/4 of VL 0.
- TCP 1, with weight 1, obtains 1/4 of VL 0.
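The 3/4 and 1/4 split is what a weight-proportional division of the trunk's rate gives. A small sketch follows; the function name and the example trunk rate of 8 Mbps are hypothetical and only serve to make the fractions visible.

```python
def weighted_shares(trunk_rate, weights):
    """Split a trunk's rate among its flows in proportion to their weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return {flow: trunk_rate * w / total for flow, w in weights.items()}


# Hypothetical: VL 0 currently holds 8 Mbps at the remote bottleneck.
shares = weighted_shares(8.0, {"TCP 0": 3, "TCP 1": 1})
print(shares)
```

Because the edge does this division locally, the split follows the trunk's current rate without any per-flow state at the remote bottleneck.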

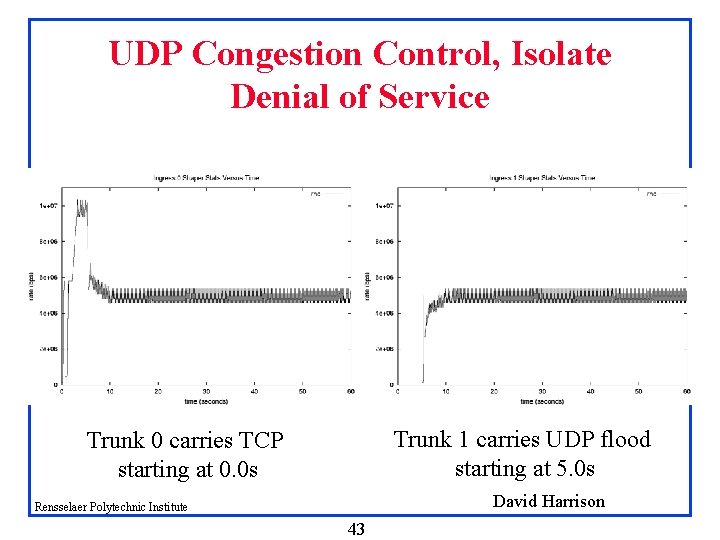

UDP Congestion Control, Isolate Denial of Service
[Diagram labels: TCP source and TCP dest (workstations); UDP source (floods network) and UDP dest (workstations); ingress and egress edges numbered 0 and 1; FIFO; 10 Mbps.]

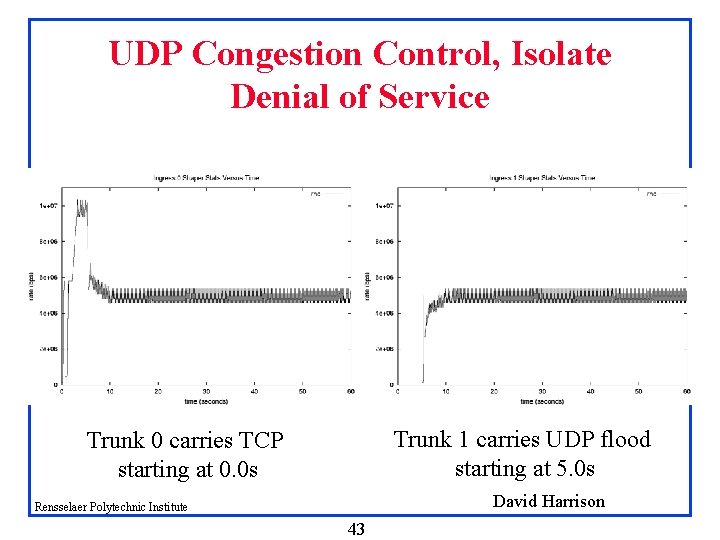

UDP Congestion Control, Isolate Denial of Service
- Trunk 1 carries UDP flood starting at 5.0 s.
- Trunk 0 carries TCP starting at 0.0 s.

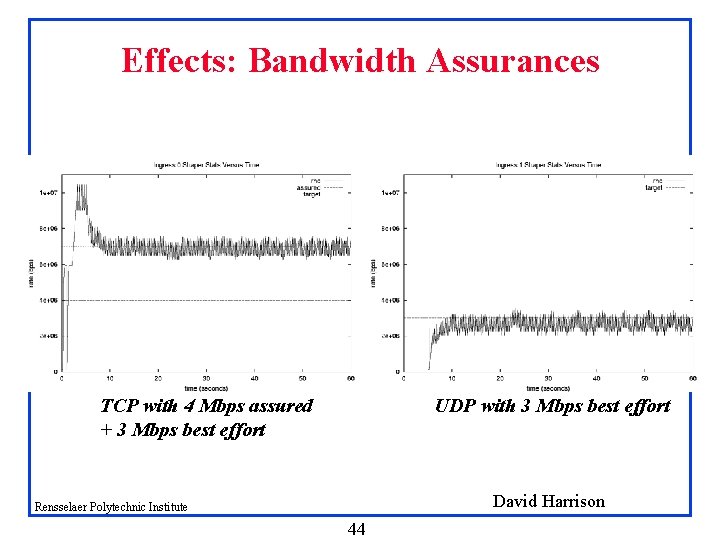

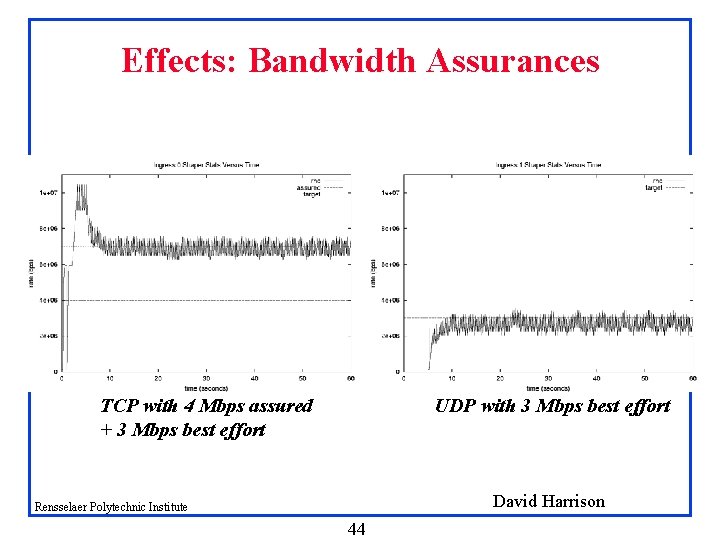

Effects: Bandwidth Assurances
- TCP with 4 Mbps assured + 3 Mbps best effort.
- UDP with 3 Mbps best effort.