Chapter 5 Overfitting and Its Avoidance R 16014101

- Slides: 17

Chapter 5 Overfitting and Its Avoidance 指 導 教 授 : 徐 立 群教 學 生 授 : R 16014101 陳怡齊 R 16011234 吳年鑫

Overfitting & Generalization p A extreme example – p Customer churn or non-churn p Training data & Holdout data

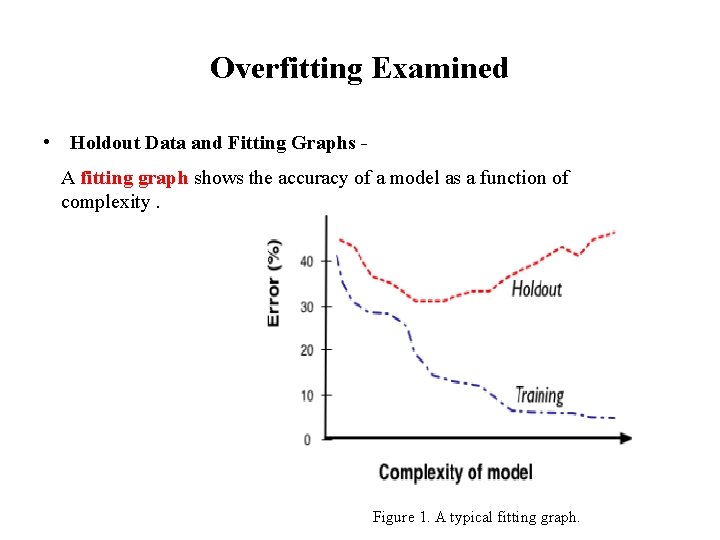

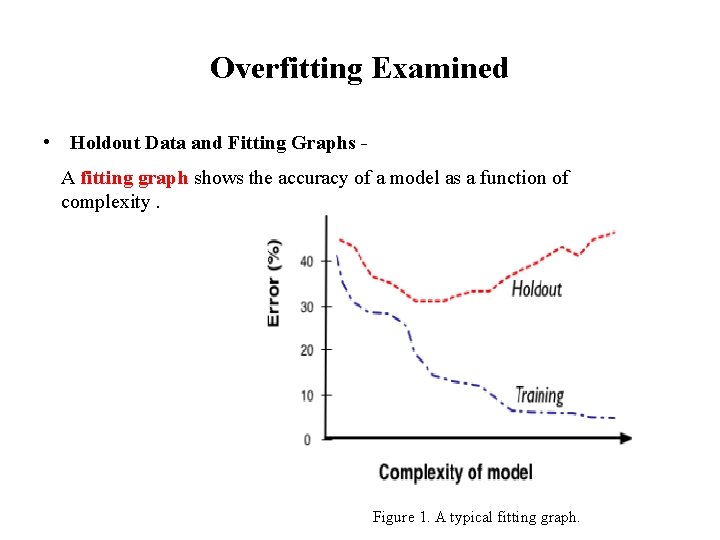

Overfitting Examined • Holdout Data and Fitting Graphs A fitting graph shows the accuracy of a model as a function of complexity. Figure 1. A typical fitting graph.

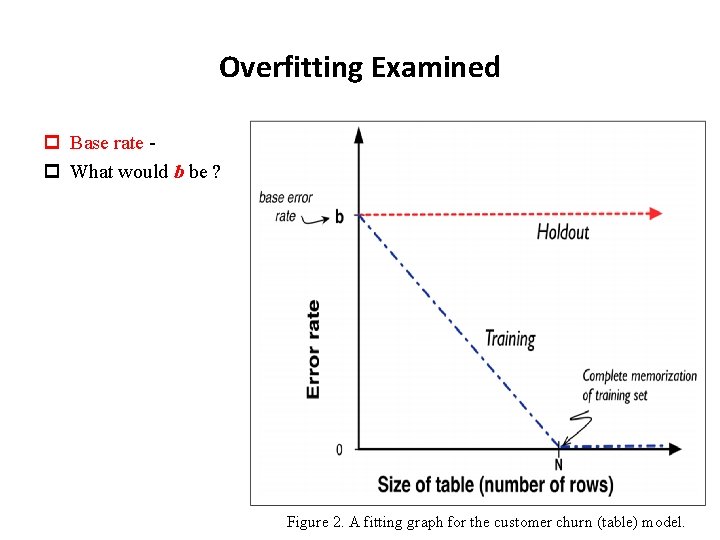

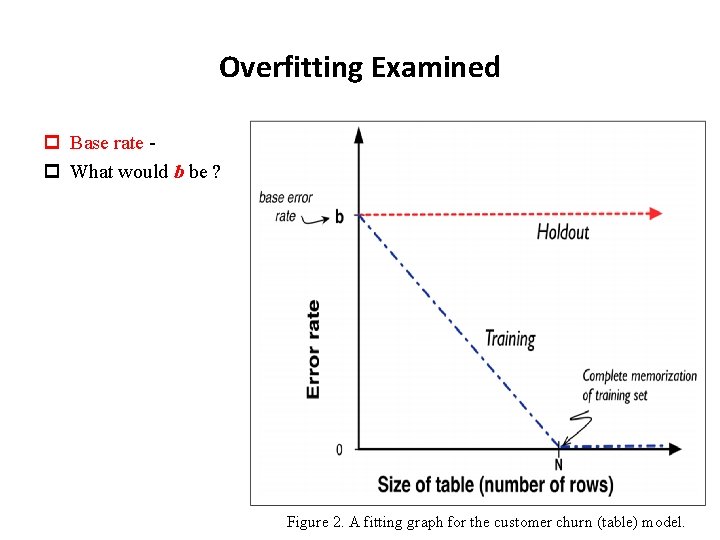

Overfitting Examined p Base rate p What would b be ? Figure 2. A fitting graph for the customer churn (table) model.

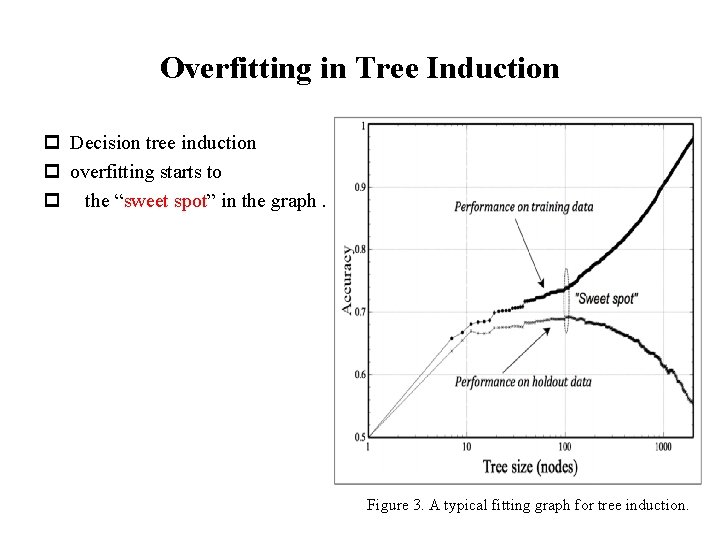

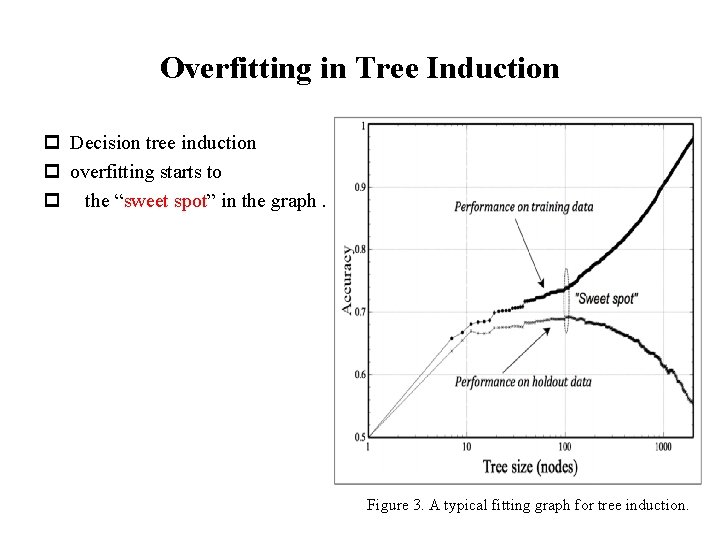

Overfitting in Tree Induction p Decision tree induction p overfitting starts to p the “sweet spot” in the graph. Figure 3. A typical fitting graph for tree induction.

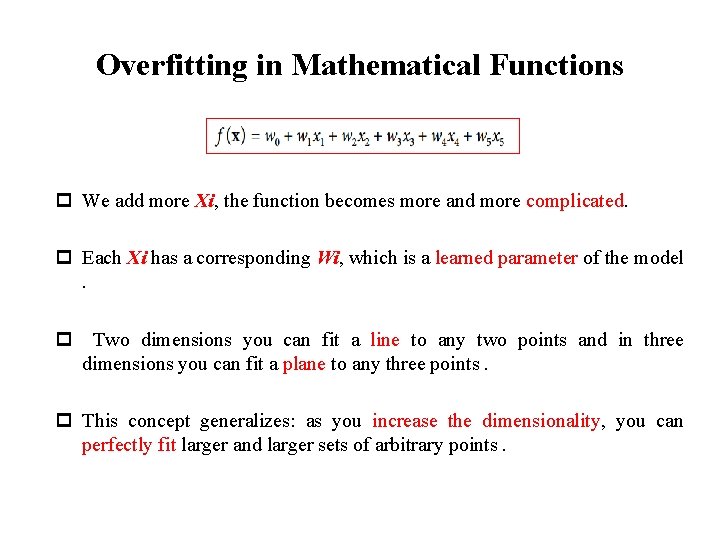

Overfitting in Mathematical Functions p We add more Xi, the function becomes more and more complicated. p Each Xi has a corresponding Wi, which is a learned parameter of the model. p Two dimensions you can fit a line to any two points and in three dimensions you can fit a plane to any three points. p This concept generalizes: as you increase the dimensionality, you can perfectly fit larger and larger sets of arbitrary points.

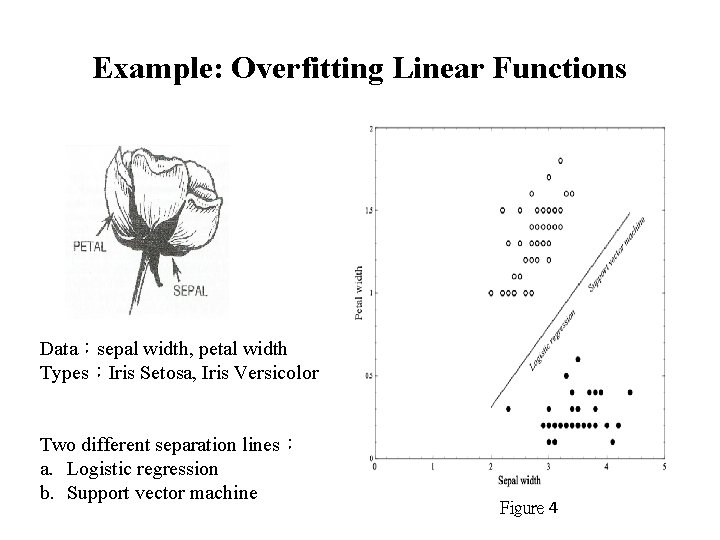

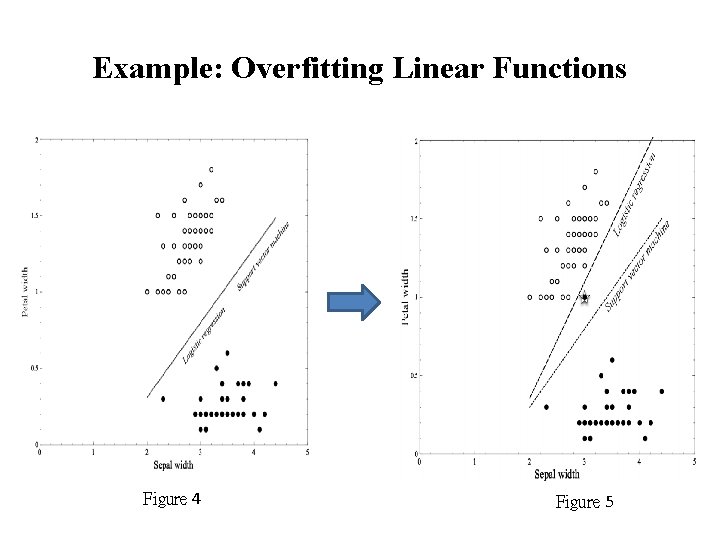

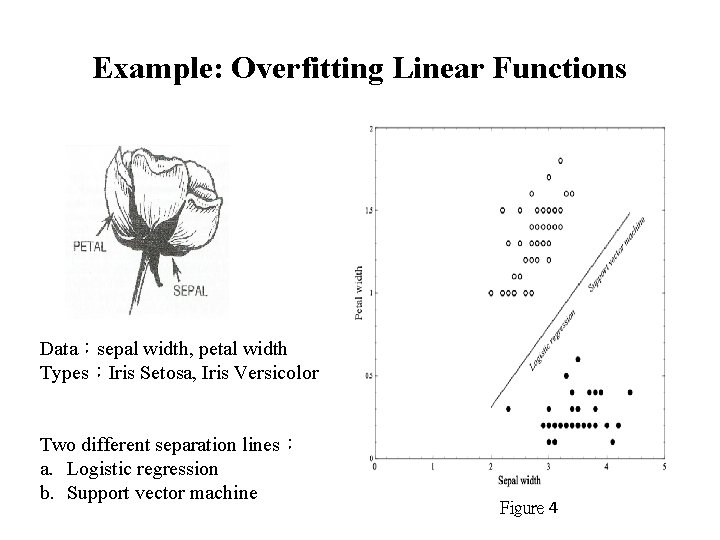

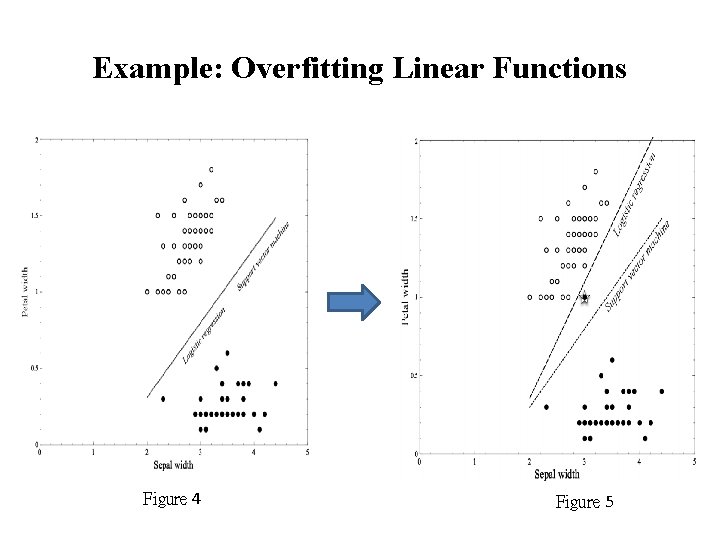

Example: Overfitting Linear Functions Data:sepal width, petal width Types:Iris Setosa, Iris Versicolor Two different separation lines: a. Logistic regression b. Support vector machine Figure 4

Example: Overfitting Linear Functions Figure 4 Figure 5

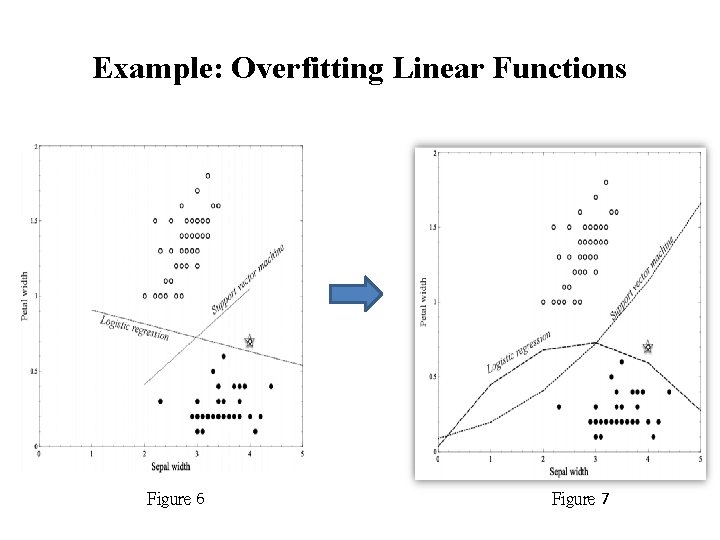

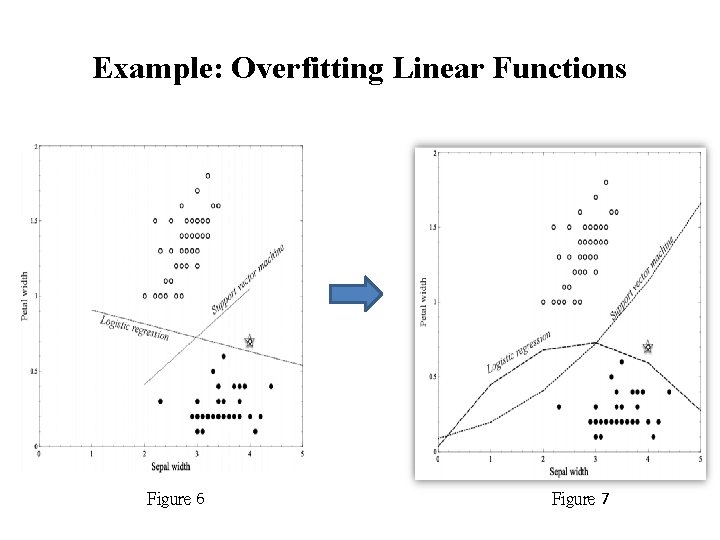

Example: Overfitting Linear Functions Figure 6 Figure 7

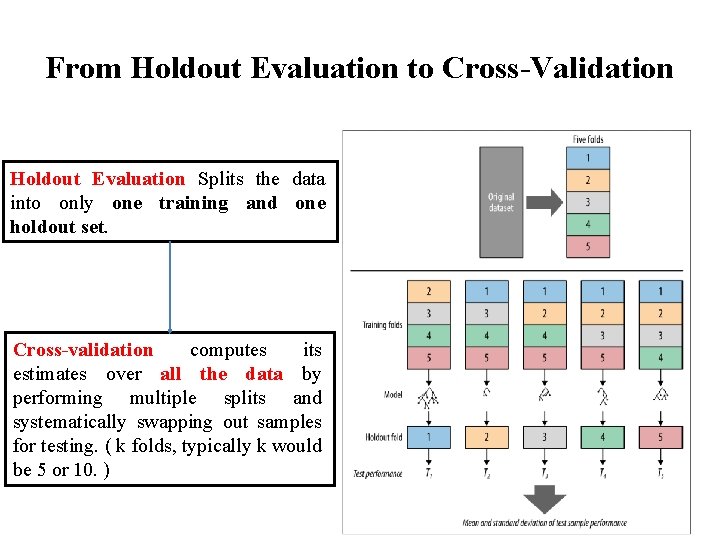

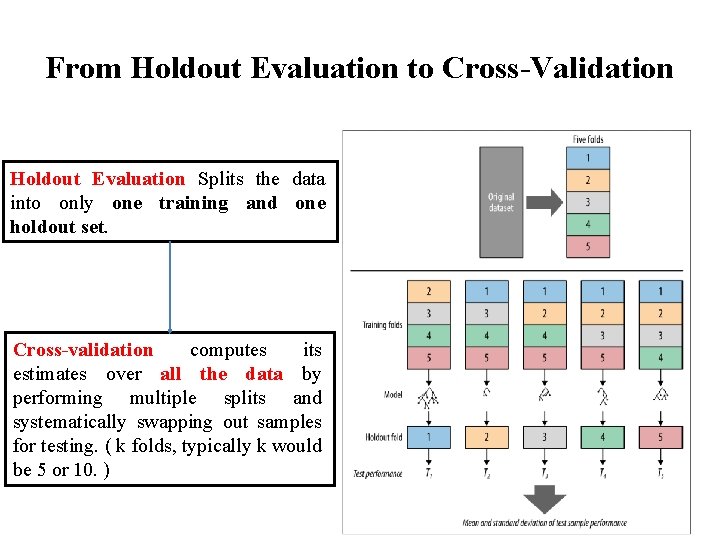

From Holdout Evaluation to Cross-Validation Holdout Evaluation Splits the data into only one training and one holdout set. Cross-validation computes its estimates over all the data by performing multiple splits and systematically swapping out samples for testing. ( k folds, typically k would be 5 or 10. )

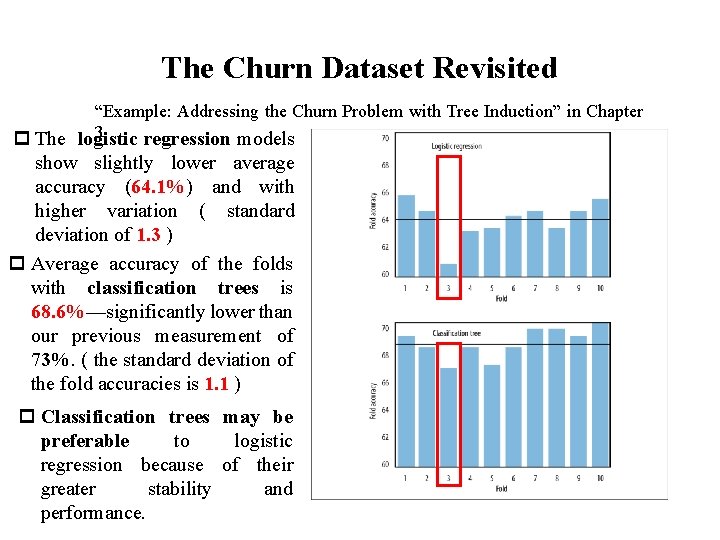

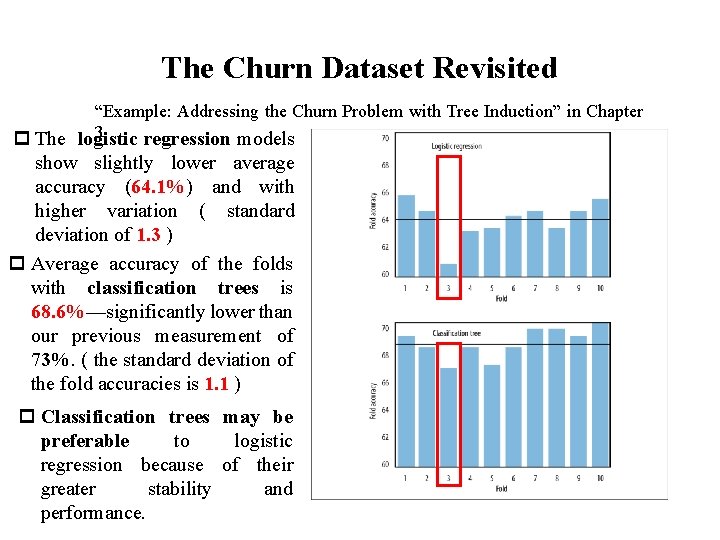

The Churn Dataset Revisited “Example: Addressing the Churn Problem with Tree Induction” in Chapter 3. p The logistic regression models show slightly lower average accuracy (64. 1%) and with higher variation ( standard deviation of 1. 3 ) p Average accuracy of the folds with classification trees is 68. 6%—significantly lower than our previous measurement of 73%. ( the standard deviation of the fold accuracies is 1. 1 ) p Classification trees may be preferable to logistic regression because of their greater stability and performance.

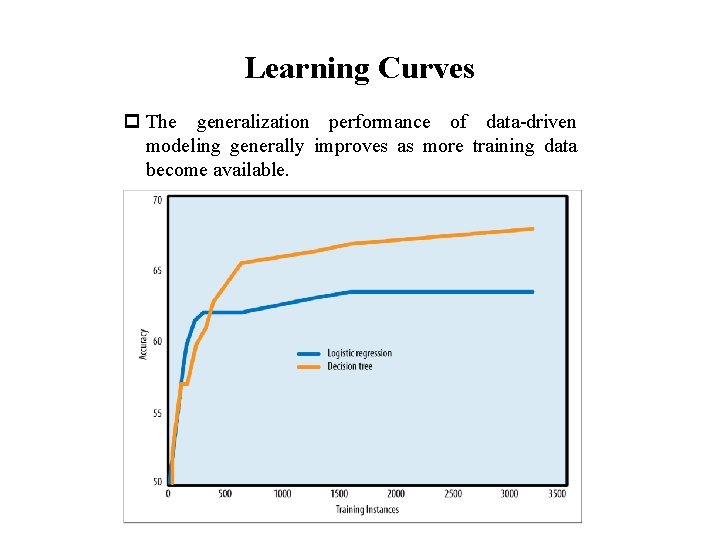

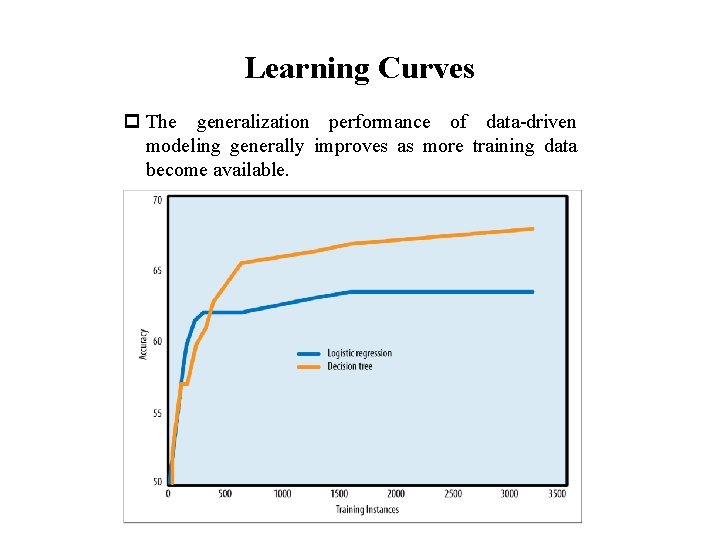

Learning Curves p The generalization performance of data-driven modeling generally improves as more training data become available.

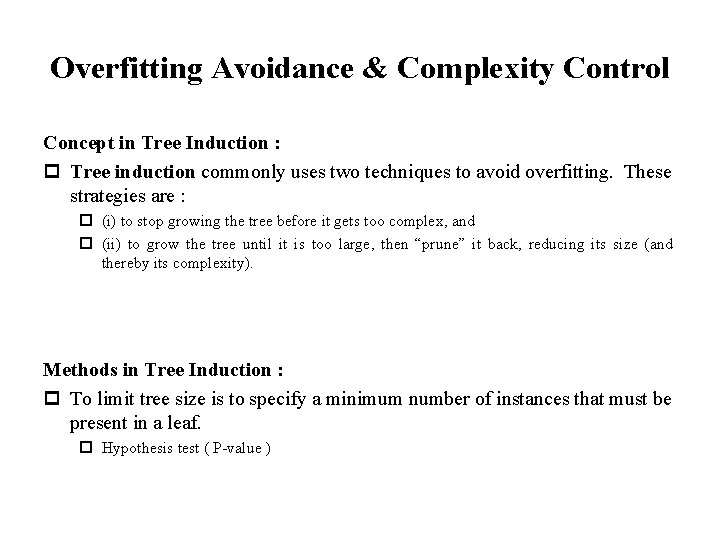

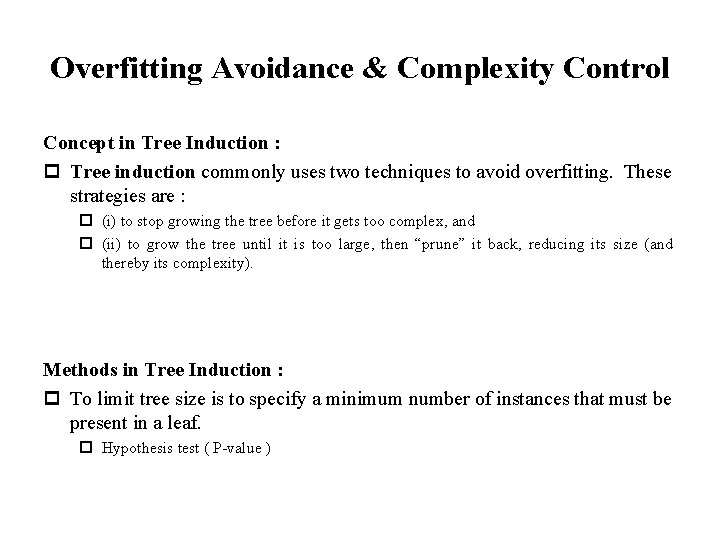

Overfitting Avoidance & Complexity Control Concept in Tree Induction : p Tree induction commonly uses two techniques to avoid overfitting. These strategies are : p (i) to stop growing the tree before it gets too complex, and p (ii) to grow the tree until it is too large, then “prune” it back, reducing its size (and thereby its complexity). Methods in Tree Induction : p To limit tree size is to specify a minimum number of instances that must be present in a leaf. p Hypothesis test ( P-value )

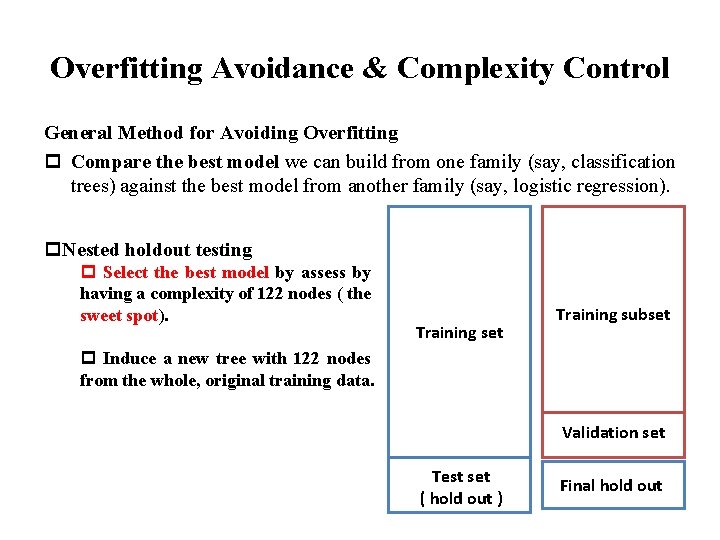

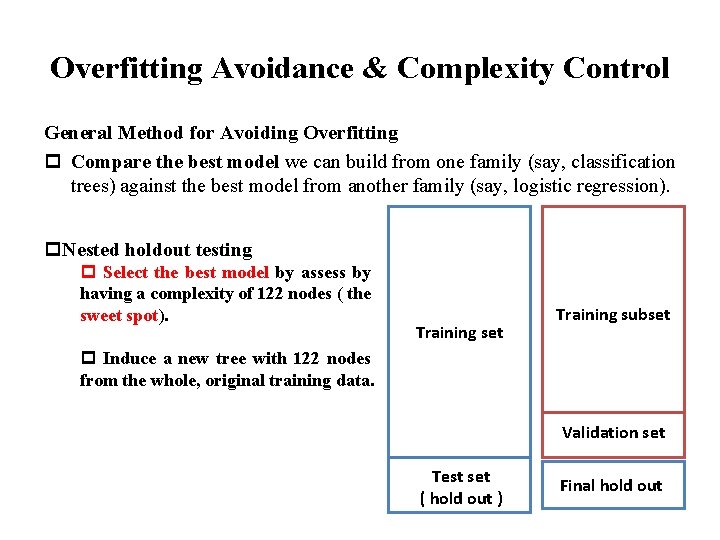

Overfitting Avoidance & Complexity Control General Method for Avoiding Overfitting p Compare the best model we can build from one family (say, classification trees) against the best model from another family (say, logistic regression). p. Nested holdout testing p Select the best model by assess by having a complexity of 122 nodes ( the sweet spot). Training set Training subset p Induce a new tree with 122 nodes from the whole, original training data. Validation set Test set ( hold out ) Final hold out

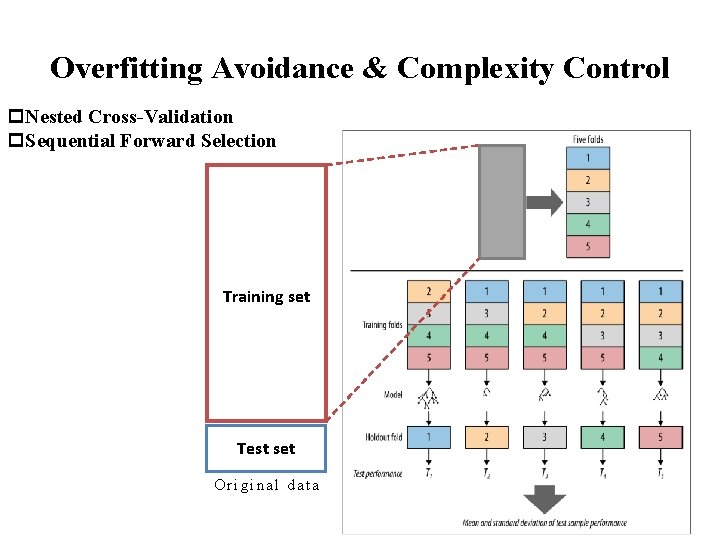

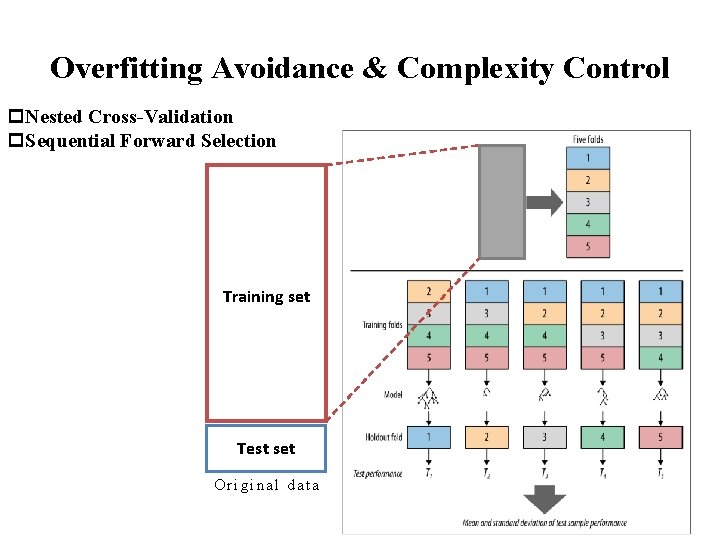

Overfitting Avoidance & Complexity Control p. Nested Cross-Validation p. Sequential Forward Selection Training set Test set Original data