
Demands on next-generation dynamical solvers

Model and Data Hierarchies for Simulating and Understanding Climate

Marco A. Giorgetta

(My) wish list for the dynamical solver

- Conserves mass, tracer mass (and energy)
- Numerical consistency between continuity and transport equation
- Well-behaved dynamics
  - Low numerical noise
  - Low numerical diffusion
  - Physically reasonable dispersion relationship
- Accurate
- Grid refinements (of different kinds)
- Fast

Numerical consistency between continuity and transport equation

Example: ECHAM

- A spectral transform dynamical core solving for:
  - Relative vorticity
  - Divergence
  - Temperature
  - Log(surface pressure)
  - … using a 3-time-level “leapfrog” time integration scheme
- A hybrid “eta” vertical coordinate:
  - Pressure at the interfaces between layers: $p_k(t) = a_k + b_k\,p_s(t)$
  - Mass of air in a layer × $g$: $dp_k(t) = p_{k+1}(t) - p_k(t)$
- A flux form transport scheme for:
  - q, cloud water, cloud ice (and tracers for chemistry or aerosols)
  - … using a 2-time-level scheme
- PROBLEM: tracer mass is not conserved; the air mass (3-time-level spectral dynamics) and the tracers (2-time-level flux form scheme) are not advanced consistently

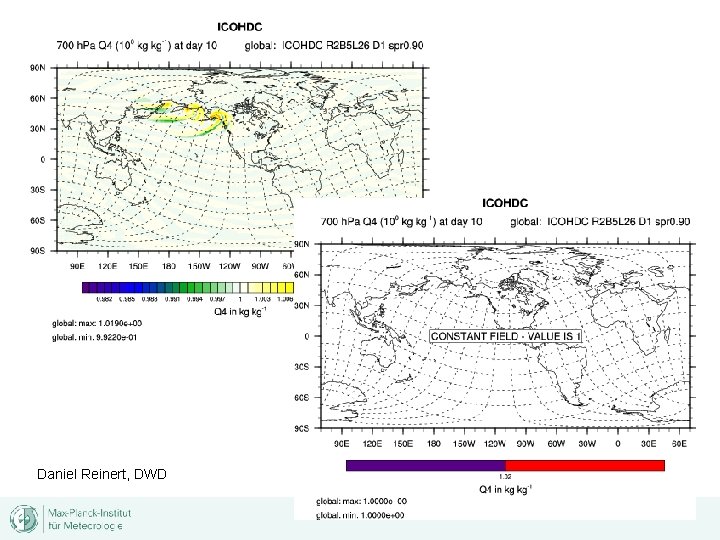

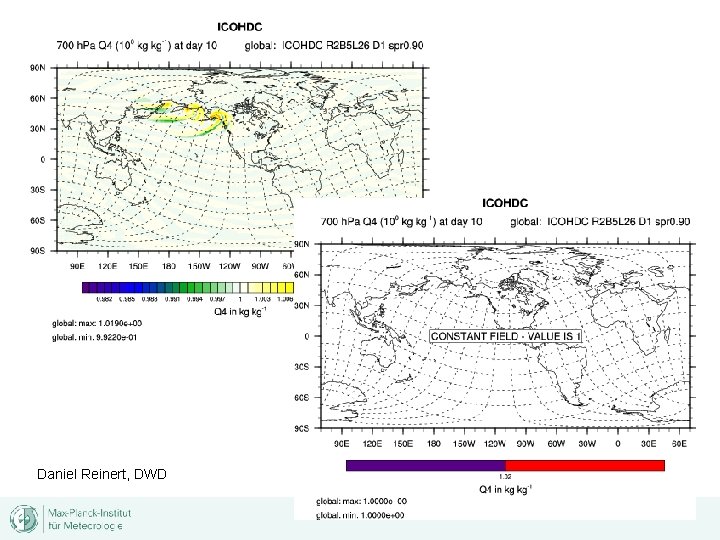
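The hybrid coordinate relations above can be sketched as follows. This is a minimal illustration, assuming coefficients `a` (in Pa) and `b` (dimensionless) given at layer interfaces and indexed from the model top; the 3-layer values and the helper names are hypothetical, not ECHAM's actual coefficient tables.

```python
G = 9.80665  # standard gravity in m s^-2 (assumed constant)

def interface_pressures(a, b, ps):
    """Interface pressures p_k = a_k + b_k * ps (Pa), k = 0 (model top) .. nlev (surface)."""
    if len(a) != len(b):
        raise ValueError("a and b need one entry per layer interface")
    return [ak + bk * ps for ak, bk in zip(a, b)]

def layer_thickness(p_half):
    """dp_k = p_{k+1} - p_k: mass of air per unit area in layer k, times g."""
    return [p_half[k + 1] - p_half[k] for k in range(len(p_half) - 1)]

def column_tracer_mass(q, dp):
    """Tracer mass per unit area (kg m^-2) for mass mixing ratios q: sum_k q_k dp_k / g."""
    return sum(qk * dpk for qk, dpk in zip(q, dp)) / G

# Hypothetical 3-layer coefficients: pure pressure level at the top, terrain-following at the surface
a = [0.0, 20000.0, 10000.0, 0.0]  # Pa
b = [0.0, 0.0, 0.5, 1.0]          # dimensionless
ps = 100000.0                     # surface pressure in Pa

p_half = interface_pressures(a, b, ps)  # [0.0, 20000.0, 60000.0, 100000.0]
dp = layer_thickness(p_half)            # [20000.0, 40000.0, 40000.0]
```

With $a_0 = a_{nlev} = 0$, $b_0 = 0$ and $b_{nlev} = 1$, the layer thicknesses sum to $p_s$, so the column air mass is $p_s/g$. Any tracer mass diagnosed as $\sum_k q_k\,dp_k/g$ therefore depends directly on how $dp$ is advanced by the dynamics.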

Illustration:

- ICON dynamical core + transport scheme
  - Triangular grid
  - Hydrostatic dynamics
  - Hybrid vertical “eta” coordinate
  - 2-time-level semi-implicit time stepping
  - Flux form semi-Lagrangian transport scheme
- Jablonowski-Williamson test
  - Initial state = zonally symmetric, but dynamically unstable flow
  - Initial perturbation
  - Baroclinic wave develops over ~10 days
- 4 tracers, of which $Q_4(x, y, z, t=0) = 1$

Daniel Reinert, DWD
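Why a tracer initialized to 1 is a useful test can be sketched in a 1D toy model. This is a minimal illustration under stated assumptions: a periodic 1D grid, a first-order upwind flux form tracer scheme standing in for ICON's flux form semi-Lagrangian scheme, and a hypothetical "centered" flux standing in for a different dynamics scheme. All function names are hypothetical. $Q_4 = 1$ is preserved exactly only when the continuity equation and the tracer equation use the same mass fluxes.

```python
import math

def upwind_mass_fluxes(m, u, dx):
    """Air mass flux F[i] through the face between cells i and i+1 (periodic), first-order upwind."""
    n = len(m)
    rho = [mi / dx for mi in m]
    return [u[i] * (rho[i] if u[i] >= 0 else rho[(i + 1) % n]) for i in range(n)]

def centered_mass_fluxes(m, u, dx):
    """Hypothetical alternative flux u[i] * (rho[i] + rho[i+1]) / 2, standing in for another scheme."""
    n = len(m)
    rho = [mi / dx for mi in m]
    return [u[i] * 0.5 * (rho[i] + rho[(i + 1) % n]) for i in range(n)]

def step_air_mass(m, F, dt):
    """Continuity equation in flux form: m_i <- m_i + dt * (F_{i-1/2} - F_{i+1/2})."""
    return [m[i] + dt * (F[i - 1] - F[i]) for i in range(len(m))]

def step_tracer(m_old, m_new, q, F, dt):
    """Flux form tracer transport with mass fluxes F; returns the new mixing ratio q."""
    n = len(q)
    qf = [q[i] if F[i] >= 0 else q[(i + 1) % n] for i in range(n)]  # upwind face values
    tracer_mass = [m_old[i] * q[i] + dt * (F[i - 1] * qf[i - 1] - F[i] * qf[i]) for i in range(n)]
    return [tracer_mass[i] / m_new[i] for i in range(n)]

n, dx, dt = 8, 1.0, 0.1
u = [1.0] * n
m0 = [1.0 + 0.5 * math.sin(2.0 * math.pi * i / n) for i in range(n)]
q0 = [1.0] * n  # like Q4: a tracer that is initially 1 everywhere

F_transport = upwind_mass_fluxes(m0, u, dx)

# Consistent: the continuity equation uses the same mass fluxes as the tracer transport
q_consistent = step_tracer(m0, step_air_mass(m0, F_transport, dt), q0, F_transport, dt)

# Inconsistent: the air mass is advanced with different fluxes than the tracer
m_dyn = step_air_mass(m0, centered_mass_fluxes(m0, u, dx), dt)
q_inconsistent = step_tracer(m0, m_dyn, q0, F_transport, dt)

err_consistent = max(abs(x - 1.0) for x in q_consistent)      # 0.0
err_inconsistent = max(abs(x - 1.0) for x in q_inconsistent)  # clearly nonzero
```

In the consistent case the tracer update reduces to exactly the same arithmetic as the air mass update, so $q$ stays 1 to the last bit; in the inconsistent case spurious maxima and minima appear even though the flow is smooth.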

Grid refinements (of different kinds)

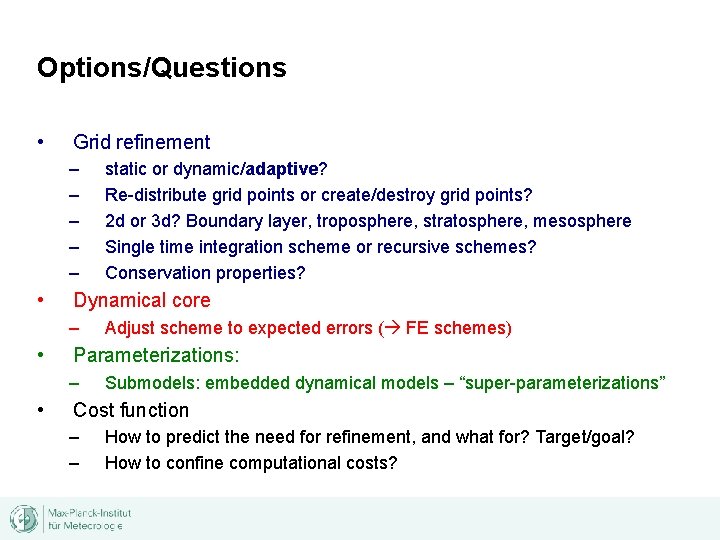

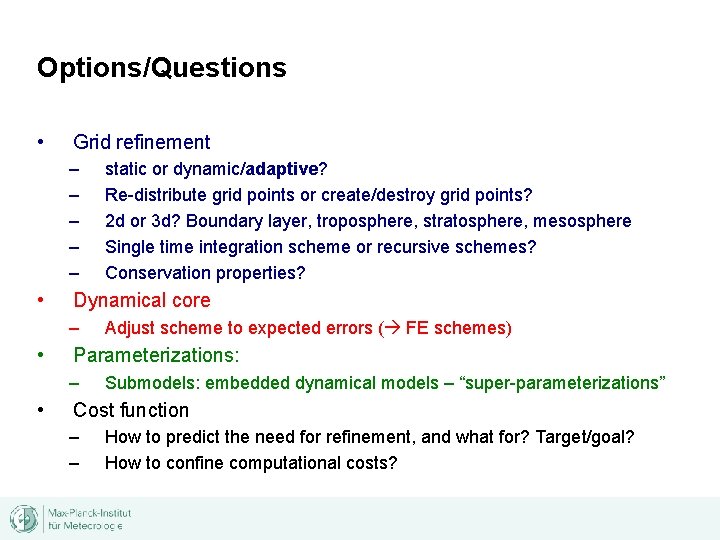

Options/Questions

- Grid refinement
  - Static or dynamic/adaptive?
  - Re-distribute grid points or create/destroy grid points?
  - 2D or 3D? (boundary layer, troposphere, stratosphere, mesosphere)
- Dynamical core
  - Single time integration scheme or recursive schemes?
  - Conservation properties?
  - Adjust the scheme to the expected errors (FE schemes)
- Parameterizations:
  - Submodels: embedded dynamical models, “super-parameterizations”
- Cost function
  - How to predict the need for refinement, and what for? Target/goal?
  - How to confine computational costs?

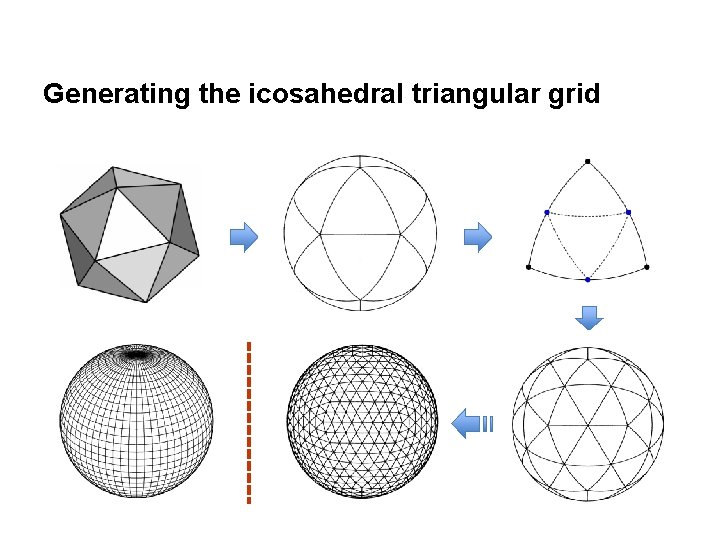

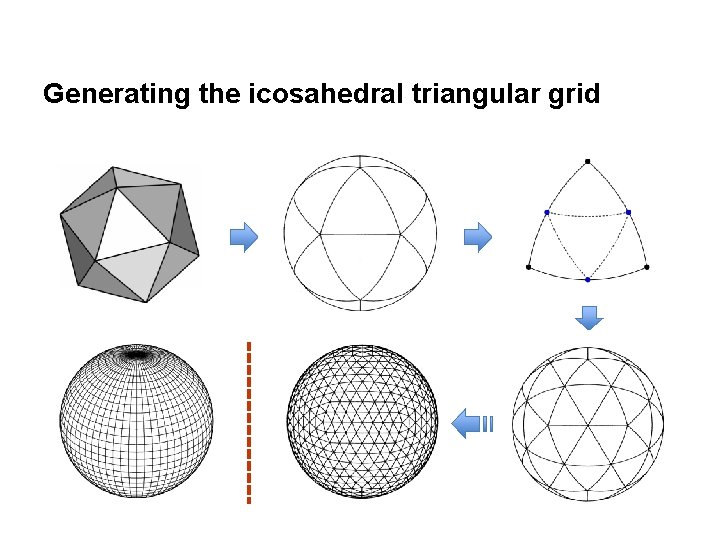

Generating the icosahedral triangular grid

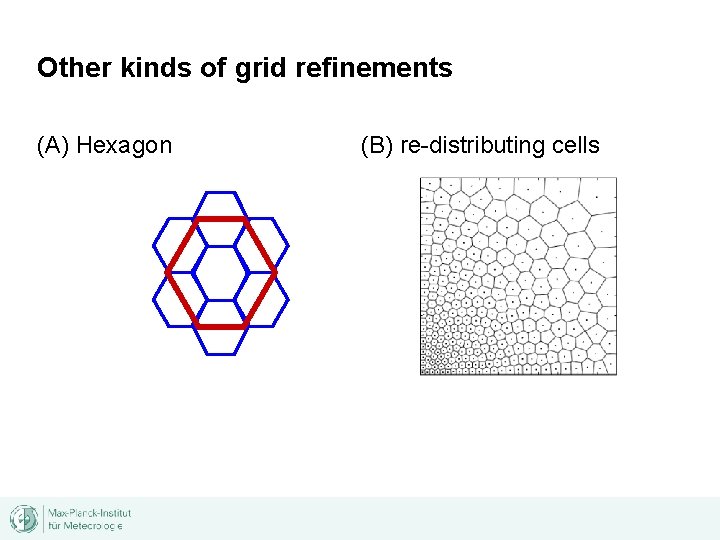
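The generation of an icosahedral triangular grid by repeated edge bisection can be sketched as below. This is a minimal sketch, assuming pure recursive bisection of a regular icosahedron with midpoints projected onto the unit sphere; production ICON grids are commonly labelled RnBk, with an initial root division before the bisections, which is omitted here. The function names are hypothetical.

```python
import math

def normalize(v):
    """Project a 3D point onto the unit sphere."""
    r = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / r, v[1] / r, v[2] / r)

def icosahedron():
    """12 vertices (on the unit sphere) and 20 triangular faces of a regular icosahedron."""
    t = (1.0 + math.sqrt(5.0)) / 2.0
    verts = [(-1, t, 0), (1, t, 0), (-1, -t, 0), (1, -t, 0),
             (0, -1, t), (0, 1, t), (0, -1, -t), (0, 1, -t),
             (t, 0, -1), (t, 0, 1), (-t, 0, -1), (-t, 0, 1)]
    faces = [(0, 11, 5), (0, 5, 1), (0, 1, 7), (0, 7, 10), (0, 10, 11),
             (1, 5, 9), (5, 11, 4), (11, 10, 2), (10, 7, 6), (7, 1, 8),
             (3, 9, 4), (3, 4, 2), (3, 2, 6), (3, 6, 8), (3, 8, 9),
             (4, 9, 5), (2, 4, 11), (6, 2, 10), (8, 6, 7), (9, 8, 1)]
    return [normalize(v) for v in verts], faces

def bisect(verts, faces):
    """Bisect every edge and project the midpoints onto the sphere: 1 triangle -> 4 triangles."""
    verts = list(verts)
    cache = {}  # edge (i, j) -> index of its midpoint, so shared edges get one new vertex

    def midpoint(i, j):
        key = (min(i, j), max(i, j))
        if key not in cache:
            a, b = verts[i], verts[j]
            verts.append(normalize(((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)))
            cache[key] = len(verts) - 1
        return cache[key]

    new_faces = []
    for i, j, k in faces:
        a, b, c = midpoint(i, j), midpoint(j, k), midpoint(k, i)
        new_faces += [(i, a, c), (j, b, a), (k, c, b), (a, b, c)]
    return verts, new_faces

verts, faces = icosahedron()
for _ in range(3):  # 3 bisection steps
    verts, faces = bisect(verts, faces)
```

After $n$ bisection steps the grid has $20 \cdot 4^n$ triangles and $10 \cdot 4^n + 2$ vertices, and each step roughly halves the edge length. The same bisection, applied only inside a selected region, is the basis of the regional refinement discussed next.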

Other kinds of grid refinements (A) Hexagon (B) re-distributing cells

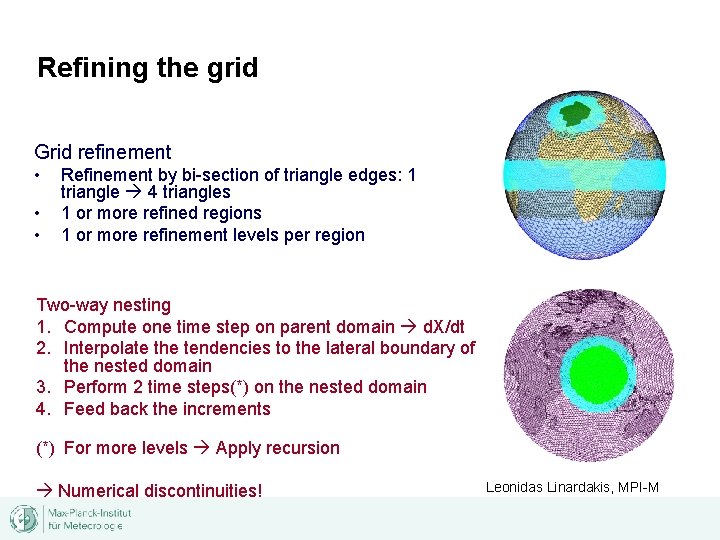

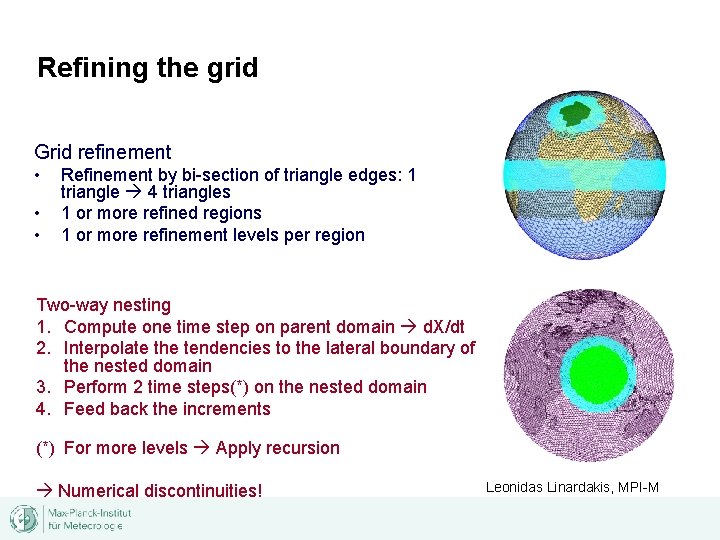

Refining the grid

- Grid refinement
  - Refinement by bisection of triangle edges: 1 triangle → 4 triangles
  - 1 or more refined regions
  - 1 or more refinement levels per region
- Two-way nesting
  1. Compute one time step on the parent domain ($dX/dt$)
  2. Interpolate the tendencies to the lateral boundary of the nested domain
  3. Perform 2 time steps on the nested domain (for more levels: apply recursion)
  4. Feed back the increments
- Numerical discontinuities!

Leonidas Linardakis, MPI-M

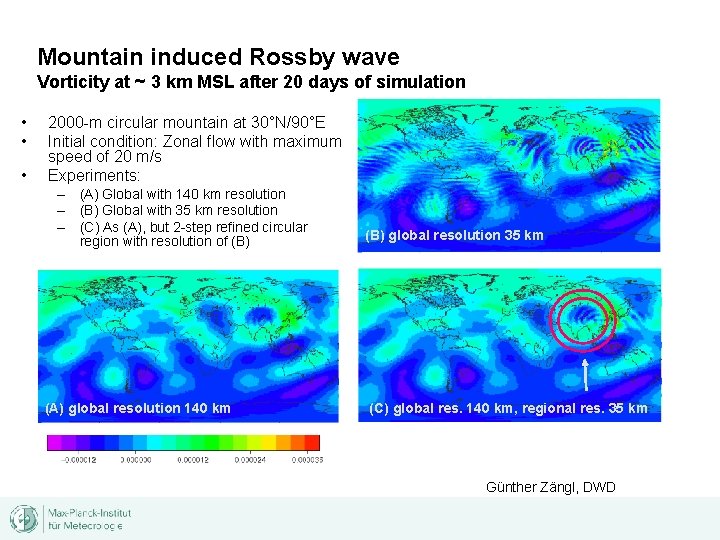

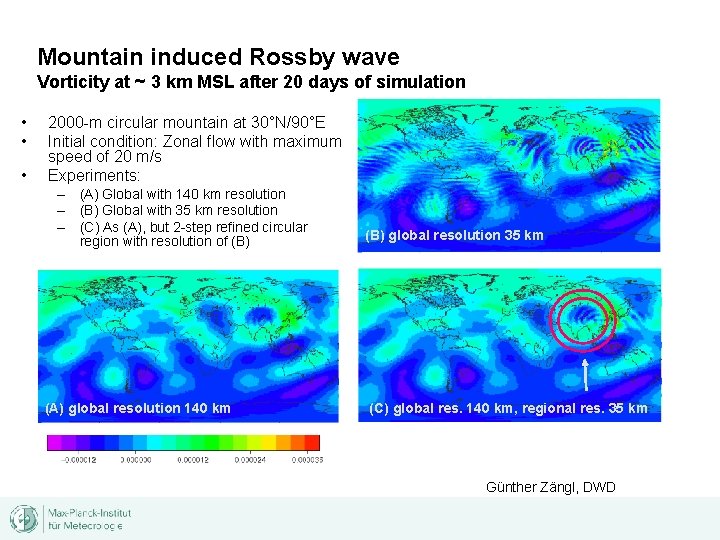
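The control flow of the two-way nesting steps 1 to 4 can be sketched as a recursive function. This is a minimal sketch of the call sequence only: the `Domain` class, the `integrate` function and the 600 s time step are hypothetical, and the actual physics, interpolation and feedback are reduced to log entries. The halved time step on each nest follows from bisection halving the grid spacing, assuming a CFL-limited time step.

```python
from dataclasses import dataclass, field

@dataclass
class Domain:
    """Hypothetical model domain; `children` are nests refined by one bisection step."""
    name: str
    children: list["Domain"] = field(default_factory=list)

def integrate(domain, dt, log):
    """One two-way nested time step of `domain` and, recursively, of all its nests."""
    # 1. Compute one time step on the parent domain (tendencies dX/dt and update)
    log.append(("step", domain.name, dt))
    for child in domain.children:
        # 2. Interpolate the parent tendencies to the lateral boundary of the nest
        log.append(("interpolate", f"{domain.name}->{child.name}", dt))
        # 3. Two time steps of dt/2 on the nest; recursion handles deeper nesting levels
        for _ in range(2):
            integrate(child, dt / 2, log)
        # 4. Feed back the increments of the nest to the parent domain
        log.append(("feedback", f"{child.name}->{domain.name}", dt))

nested = Domain("global", [Domain("nest1", [Domain("nest2")])])
log = []
integrate(nested, 600.0, log)  # hypothetical 600 s time step on the global domain
```

For one global step, the first nest takes 2 steps of 300 s and the second nest 4 steps of 150 s. Each interpolation and feedback is a place where the numerical discontinuities mentioned above can arise.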

Mountain-induced Rossby wave: vorticity at ~3 km MSL after 20 days of simulation

- 2000 m circular mountain at 30°N/90°E
- Initial condition: zonal flow with a maximum speed of 20 m/s
- Experiments:
  - (A) Global with 140 km resolution
  - (B) Global with 35 km resolution
  - (C) As (A), but with a circular region refined in 2 steps to the resolution of (B)

Figure panels: (A) global resolution 140 km; (B) global resolution 35 km; (C) global resolution 140 km, regional resolution 35 km

Günther Zängl, DWD
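Why experiment (C) is attractive can be estimated with a back-of-the-envelope cost model. This is a rough sketch under stated assumptions: cost is taken as proportional to (number of cells) × (number of time steps), one bisection step gives 4× the cells per area and, with a CFL-limited time step, 2× the time steps, and the area fractions covered by the nests in (C) are hypothetical, not taken from the experiment.

```python
def relative_cost(levels):
    """Cost relative to the uniform 140 km run (A).

    levels: (area_fraction, n_bisections) for each grid level.
    Assumes cost ~ cells x time steps; one bisection = 4x cells per area x 2x time steps.
    """
    return sum(frac * 8 ** n for frac, n in levels)

cost_a = relative_cost([(1.0, 0)])  # (A) 140 km everywhere
cost_b = relative_cost([(1.0, 2)])  # (B) 35 km everywhere: 4^2 cells x 2^2 steps = 64
# (C) hypothetical: the 70 km and 35 km nests each cover 5 % of the globe
cost_c = relative_cost([(1.0, 0), (0.05, 1), (0.05, 2)])  # about 4.6
```

Under these assumptions, refining the whole globe from 140 km to 35 km costs about 64 times more, whereas a nest covering a small region costs only a few times the coarse run. The real ratio also depends on interpolation, feedback and load balancing overheads, which this sketch ignores.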

High Performance Computing

- How to get faster:
  - Faster CPUs
  - Faster connections between CPU, memory and disk
  - Parallelization over more CPUs
    - CPUs share memory
    - CPUs have their own memory
  - Modify the code design to account for the architecture of the CPUs
    - Scalar/vector CPUs
    - Sizes of intermediate, fast-access memories (“caches”)
- The past was dominated by improved CPUs
- The future will be dominated by more CPUs

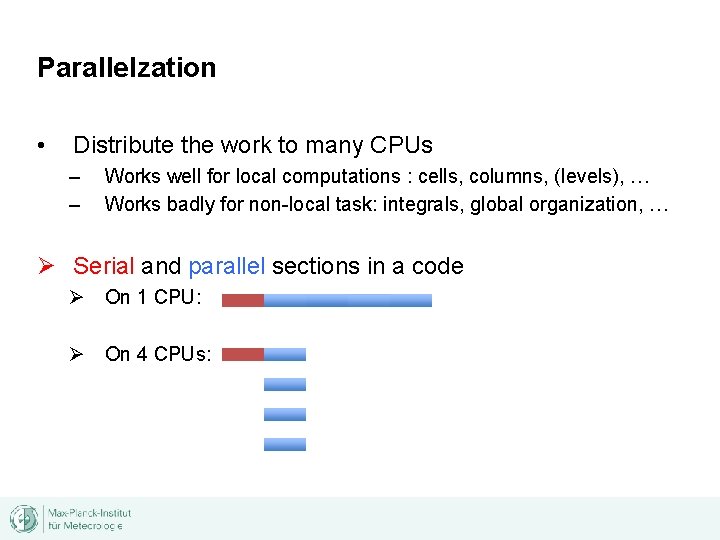

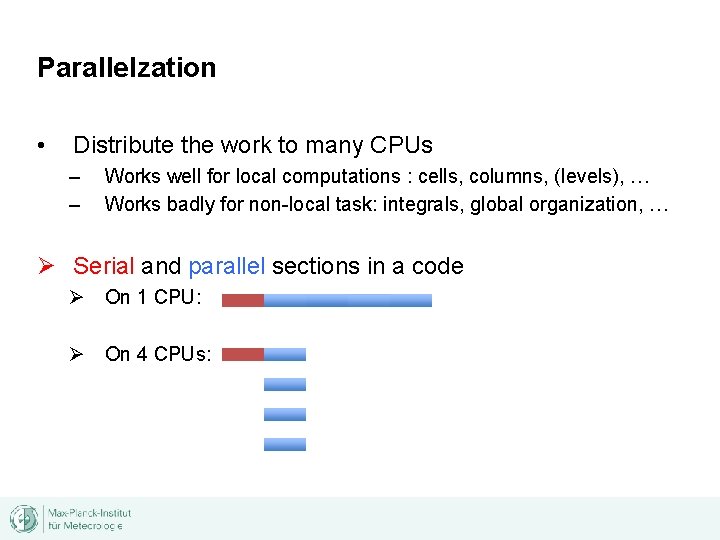

Parallelization

- Distribute the work to many CPUs
  - Works well for local computations: cells, columns, (levels), …
  - Works badly for non-local tasks: integrals, global organization, …
- Serial and parallel sections in a code
  - On 1 CPU:
  - On 4 CPUs:

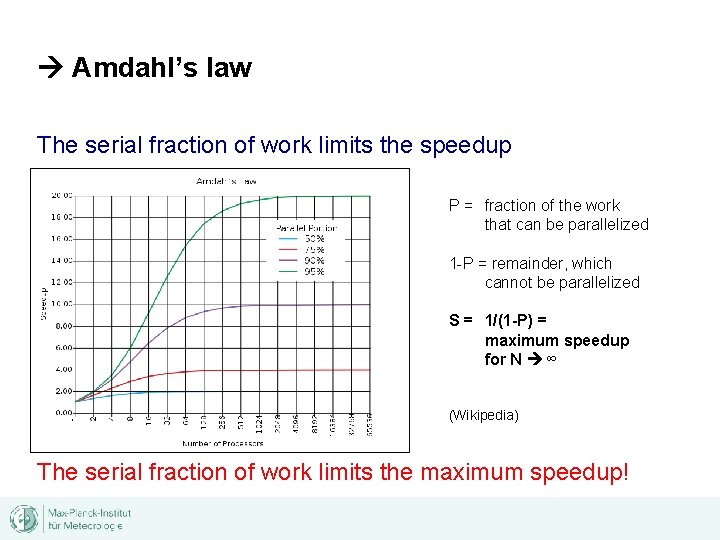

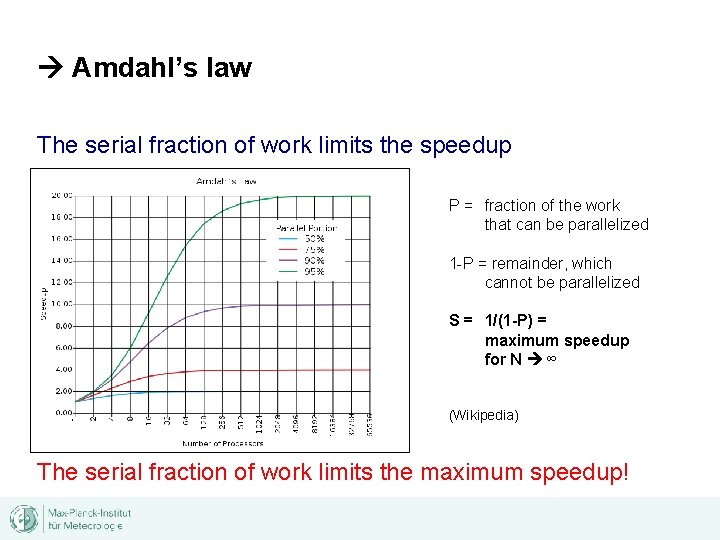
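The difference between local and non-local tasks can be sketched with a block decomposition of model columns. This is a serial emulation under stated assumptions: `split`, `local_physics` and `global_mean` are hypothetical helpers, and the column values are made up. In a real model the local step would run concurrently on each CPU, and the final reduction would use a collective operation such as `MPI_Allreduce`.

```python
def split(columns, n_cpus):
    """Distribute columns over n_cpus as evenly as possible (block decomposition)."""
    size, rest = divmod(len(columns), n_cpus)
    chunks, start = [], 0
    for p in range(n_cpus):
        stop = start + size + (1 if p < rest else 0)
        chunks.append(columns[start:stop])
        start = stop
    return chunks

def local_physics(column):
    """Column-local computation (hypothetical): needs no data from other CPUs."""
    return [0.5 * x for x in column]

def global_mean(chunks):
    """Non-local task: each CPU sums its own part, then the partial sums must be gathered."""
    partial = [(sum(sum(col) for col in chunk), sum(len(col) for col in chunk)) for chunk in chunks]
    total = sum(s for s, _ in partial)  # reduction: requires communication between all CPUs
    count = sum(c for _, c in partial)
    return total / count

columns = [[float(i), float(i + 1)] for i in range(10)]  # hypothetical: 10 columns, 2 levels
chunks = split(columns, 4)                                 # column counts per CPU: 3, 3, 2, 2
chunks = [[local_physics(col) for col in chunk] for chunk in chunks]  # purely local work
mean = global_mean(chunks)
```

The local step scales with the number of CPUs, while the reduction involves every CPU and becomes relatively more expensive as the work per CPU shrinks.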

Amdahl’s law

- $P$ = fraction of the work that can be parallelized
- $1 - P$ = remainder, which cannot be parallelized
- Speedup on $N$ CPUs: $S(N) = 1/\left((1-P) + P/N\right)$
- Maximum speedup for $N \to \infty$: $S = 1/(1-P)$ (Wikipedia)
- The serial fraction of the work limits the maximum speedup!
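Amdahl's law translates directly into a few lines of code. This is a minimal sketch; the function names are hypothetical, and the example value $P = 0.99$ is an illustration, not a measured ECHAM value.

```python
import math

def amdahl_speedup(p, n):
    """Speedup on n CPUs when a fraction p of the work can be parallelized."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 1.0 / ((1.0 - p) + p / n)

def max_speedup(p):
    """Limit n -> infinity: S = 1 / (1 - p)."""
    return math.inf if p == 1.0 else 1.0 / (1.0 - p)

def required_parallel_fraction(s):
    """Smallest p for which the maximum speedup reaches s, i.e. 1 - p <= 1 / s."""
    return 1.0 - 1.0 / s

speedup_640 = amdahl_speedup(0.99, 640)  # hypothetical P = 0.99 on 640 cores: about 87
p_needed = required_parallel_fraction(1e5)  # about 0.99999
```

Even with 99 % of the work parallelized, 640 cores give a speedup below 100, and making use of ~$10^5$ cores requires a serial fraction below about $10^{-5}$.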

For illustration: computer at DKRZ

- IBM Power6 CPUs
  - 250 nodes × 16 CPUs/node: total = 4000 CPUs
  - 2 cores/CPU: total = 8000 cores
  - 2 floating point units/core
  - 64 parallel processes/node
- ECHAM GCM at ~1° resolution
  - Scales “well” up to 20 nodes = 640 cores (with parallel I/O)
  - Problem: the spectral transform method used for the dynamical core requires transformations between spherical harmonics and grid point fields → global data exchange, transpositions
- Future: ~$10^5$ cores → a new model is necessary
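Why the transpositions of the spectral transform method scale badly can be sketched by counting the communication partners. This is a strongly simplified sketch under stated assumptions: a single field of (latitude, zonal wavenumber) data, a block decomposition over latitudes before the transposition and over wavenumbers after it, no vertical levels and no 2D decomposition. The function name and sizes are hypothetical.

```python
def transpose_messages(n_lat, n_wave, n_ranks):
    """(sender, receiver) pairs needed to move (latitude, wavenumber) data from a
    latitude-decomposed layout to a wavenumber-decomposed layout (block decompositions)."""
    def owner(index, n_items):
        # block decomposition: rank that owns item `index` out of n_items
        return index * n_ranks // n_items

    pairs = set()
    for j in range(n_lat):       # before: each rank owns a block of latitudes
        for m in range(n_wave):  # after: each rank owns a block of zonal wavenumbers
            src, dst = owner(j, n_lat), owner(m, n_wave)
            if src != dst:
                pairs.add((src, dst))
    return pairs

pairs_8 = transpose_messages(96, 64, 8)  # hypothetical sizes: every rank talks to every other rank
```

Every rank has to exchange data with every other rank, so the number of messages per transposition grows as $N(N-1)$; with 640 ranks that is 408,960 messages, and on ~$10^5$ cores this all-to-all pattern dominates.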

Strategies

- Select a numerical scheme which is
  - sufficiently accurate with respect to your problem
  - computationally efficient:
    - fast on single CPUs
    - minimizing global data exchange (transformations, “fixers”, I/O)
- Find the optimal way to distribute the work
- Practical issues:
  - Optimize the code for the main computer platform
  - Account for the strengths/weaknesses of the available compilers
  - Avoid “tricks” which would stop the code from working on other platforms
  - Optimize the most expensive parts first

Other problems in HPC

- Data storage:
  - Disk capacities grow more slowly than computing power
  - Bandwidth between computer and storage system
  - ESMs can produce HUGE amounts of data
  - Finite lifetime of disks or tapes → backups or re-computing?
- Data accessibility:
  - Bandwidth between disks/tapes and post-processing computer
  - Post-processing software must be parallelized
- Data description:
  - Documentation of model, experimental setup, formats etc.
- Climate models are no longer a driver for HPC development

END