Decision Making Under Uncertainty CMSC 471 Spring 2014


Class #12, Thursday, March 6

R&N, Chapters 15.1–15.2.1 and 16.1–16.3

Material from Lise Getoor, Jean-Claude Latombe, and Daphne Koller

MODELING UNCERTAINTY OVER TIME

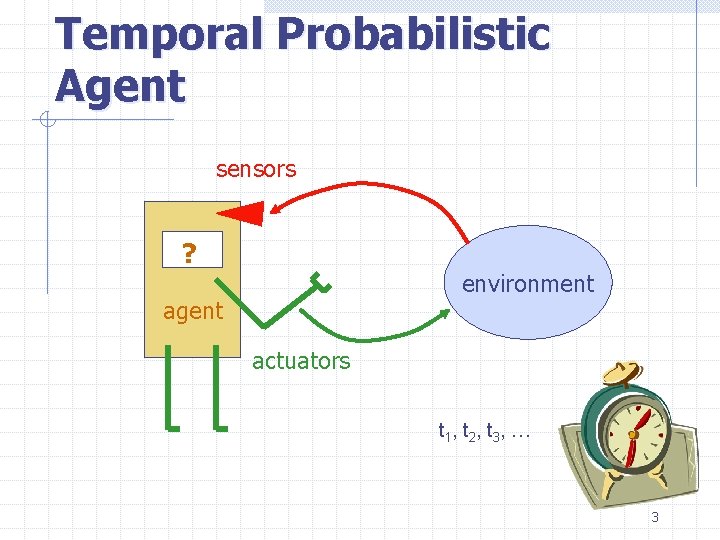

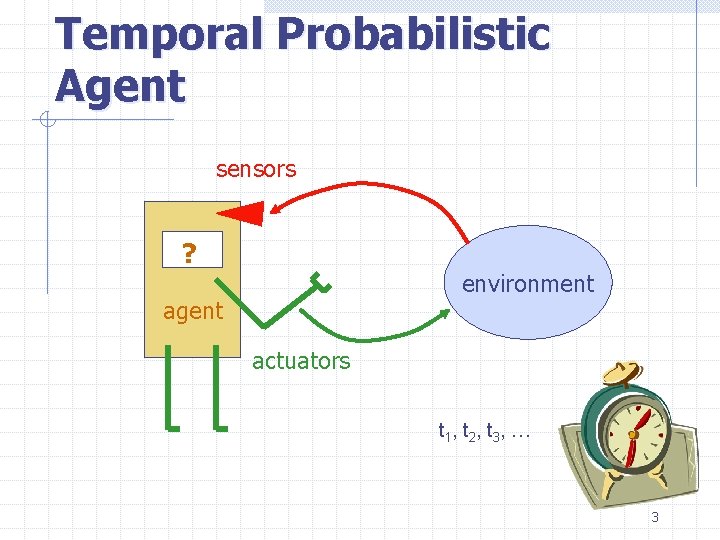

Temporal Probabilistic Agent

[Figure: an agent connected to an uncertain environment through sensors and actuators, over time steps $t_1, t_2, t_3, \ldots$]

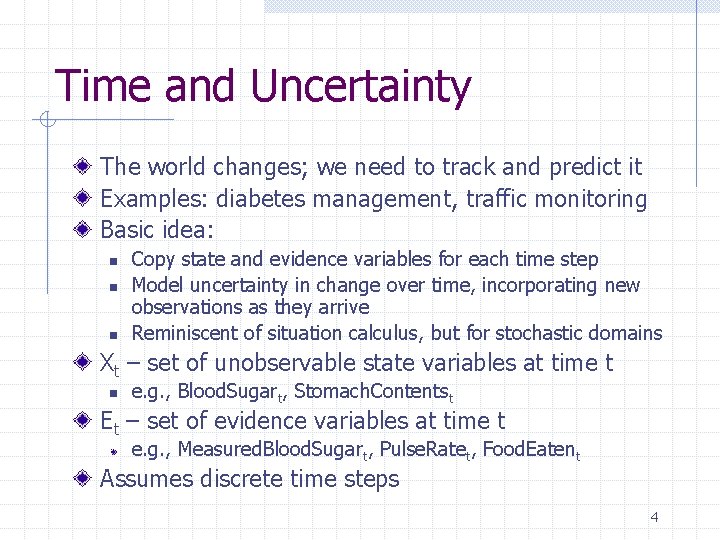

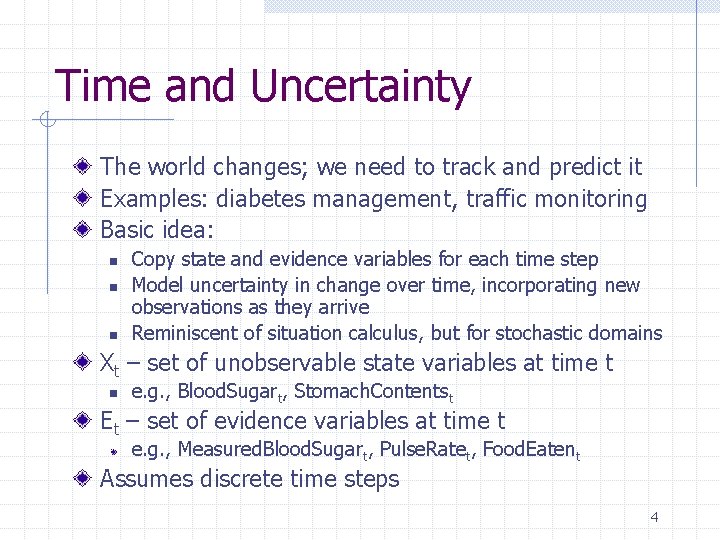

Time and Uncertainty

The world changes; we need to track and predict it.

- Examples: diabetes management, traffic monitoring
- Basic idea:
  - Copy the state and evidence variables for each time step
  - Model uncertainty in change over time, incorporating new observations as they arrive
  - Reminiscent of the situation calculus, but for stochastic domains
- $X_t$: set of unobservable state variables at time $t$, e.g., $BloodSugar_t$, $StomachContents_t$
- $E_t$: set of evidence variables at time $t$, e.g., $MeasuredBloodSugar_t$, $PulseRate_t$, $FoodEaten_t$
- Assumes discrete time steps

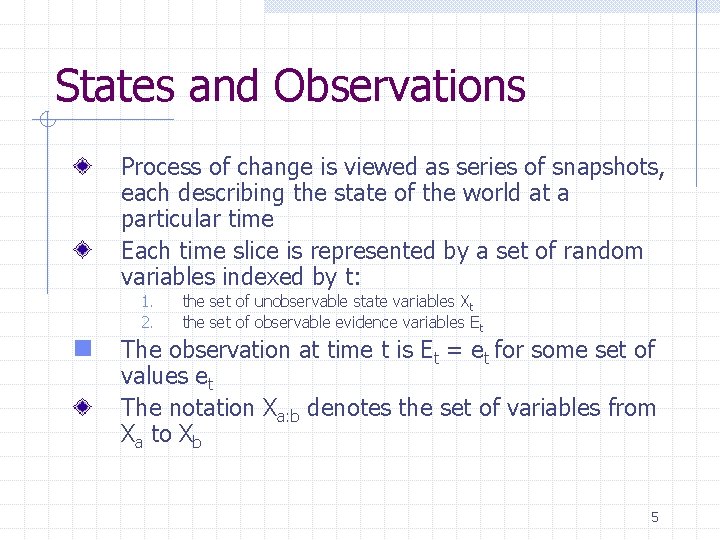

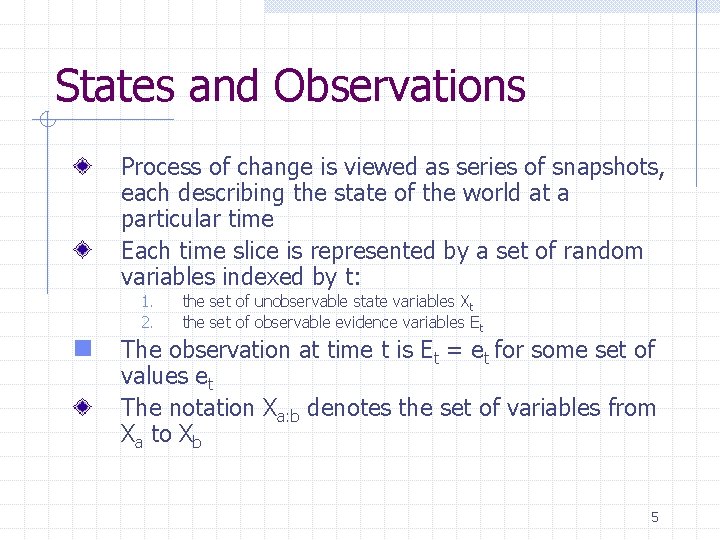

States and Observations

The process of change is viewed as a series of snapshots, each describing the state of the world at a particular time. Each time slice is represented by a set of random variables indexed by $t$:

1. the set of unobservable state variables $X_t$
2. the set of observable evidence variables $E_t$

The observation at time $t$ is $E_t = e_t$ for some set of values $e_t$. The notation $X_{a:b}$ denotes the set of variables from $X_a$ to $X_b$.

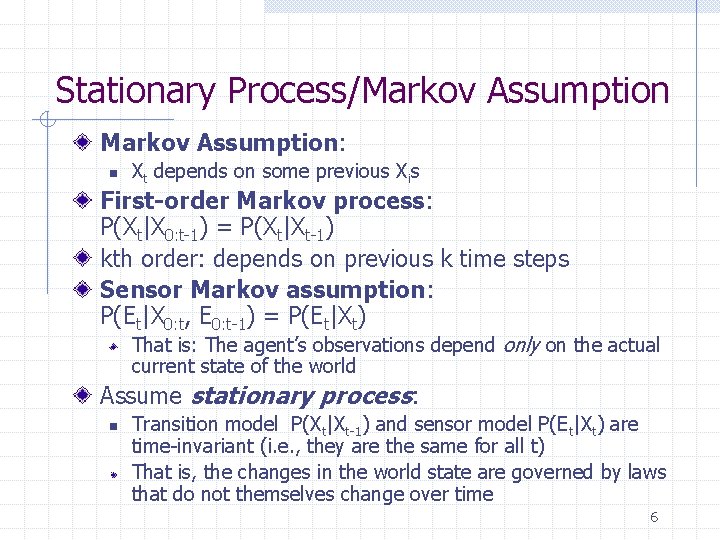

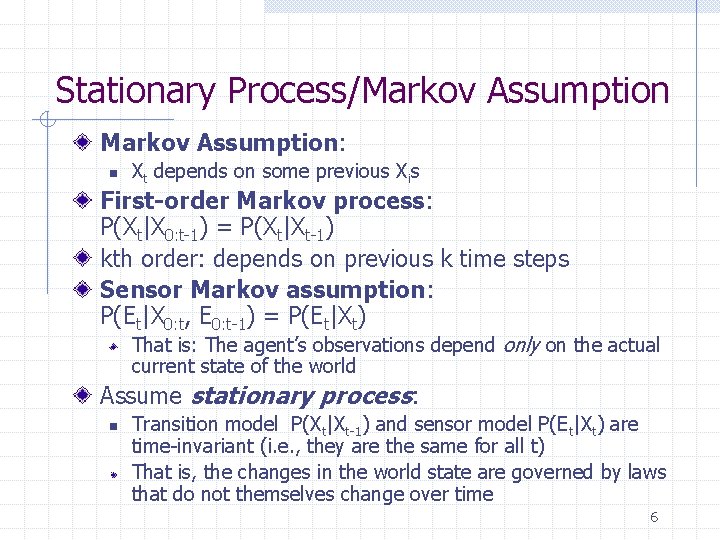

Stationary Process/Markov Assumption

- Markov assumption: $X_t$ depends only on a bounded number of previous $X_i$s
  - First-order Markov process: $P(X_t \mid X_{0:t-1}) = P(X_t \mid X_{t-1})$
  - $k$th order: depends on the previous $k$ time steps
- Sensor Markov assumption: $P(E_t \mid X_{0:t}, E_{0:t-1}) = P(E_t \mid X_t)$. That is, the agent's observations depend only on the actual current state of the world.
- Assume a stationary process: the transition model $P(X_t \mid X_{t-1})$ and the sensor model $P(E_t \mid X_t)$ are time-invariant (i.e., they are the same for all $t$). That is, the changes in the world state are governed by laws that do not themselves change over time.

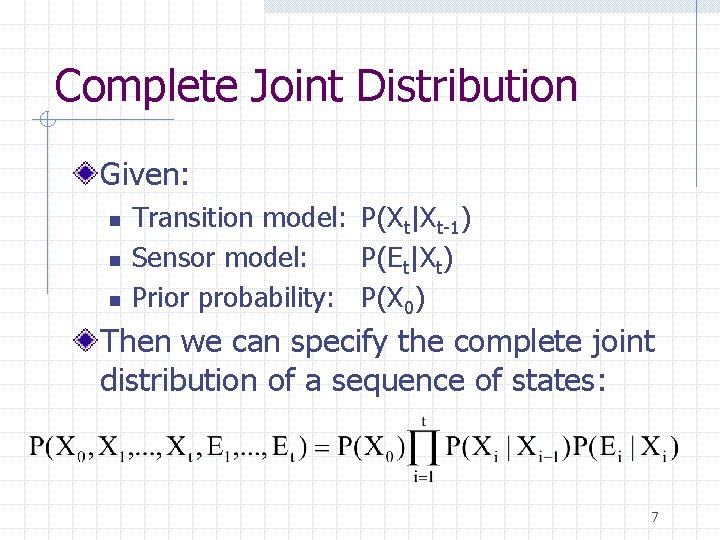

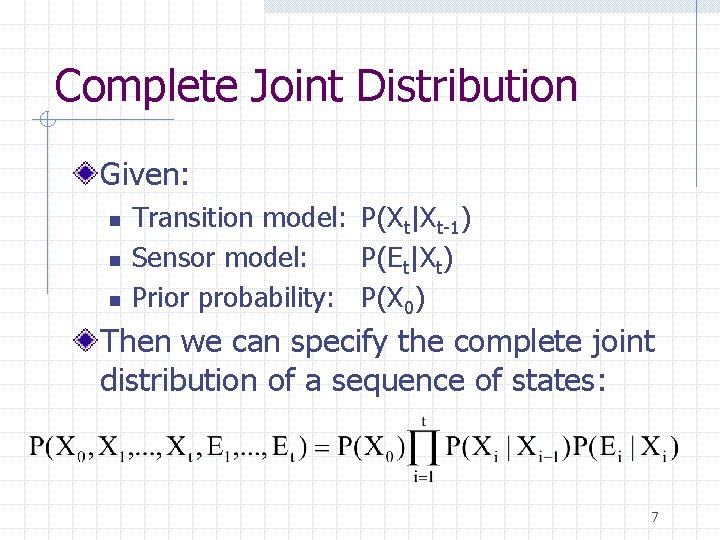

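Complete Joint Distribution

Given:
- Transition model: $P(X_t \mid X_{t-1})$
- Sensor model: $P(E_t \mid X_t)$
- Prior probability: $P(X_0)$

Then we can specify the complete joint distribution of a sequence of states:

$$P(X_{0:t}, E_{1:t}) = P(X_0) \prod_{i=1}^{t} P(X_i \mid X_{i-1})\, P(E_i \mid X_i)$$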

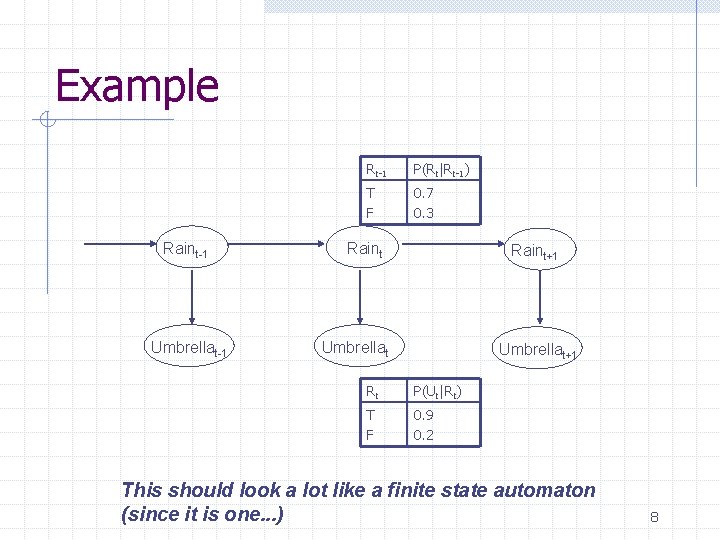

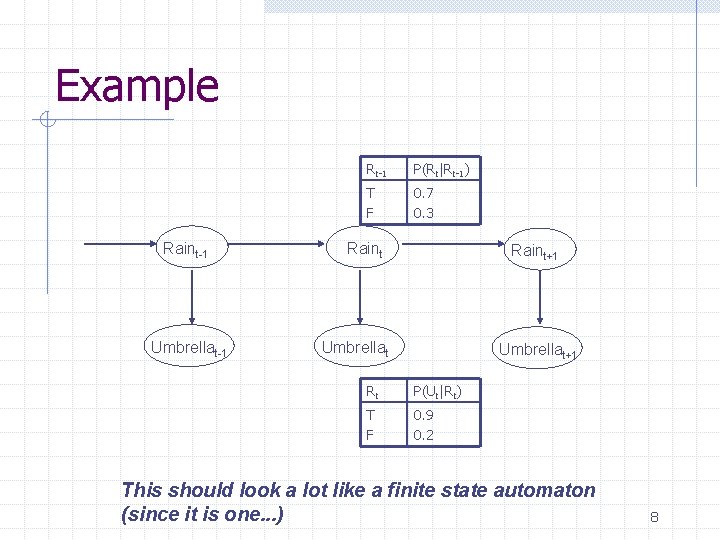
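A minimal sketch of the complete joint distribution for the umbrella model in the example that follows: the joint probability of a rain sequence and an umbrella sequence is the prior on the initial state times, at each step, a transition term and a sensor term. The uniform prior $P(R_0)$, the dictionary encoding, and the function names are illustrative assumptions, not part of the slides.

```python
# Umbrella world; states and observations are booleans (True = rain / umbrella seen).
PRIOR = {True: 0.5, False: 0.5}       # assumed uniform P(R_0)
TRANSITION = {True: 0.7, False: 0.3}  # P(R_t = True | R_{t-1})
SENSOR = {True: 0.9, False: 0.2}      # P(U_t = True | R_t)


def p_transition(prev: bool, cur: bool) -> float:
    """P(R_t = cur | R_{t-1} = prev)."""
    p_rain = TRANSITION[prev]
    return p_rain if cur else 1.0 - p_rain


def p_sensor(state: bool, umbrella: bool) -> float:
    """P(U_t = umbrella | R_t = state)."""
    p_umb = SENSOR[state]
    return p_umb if umbrella else 1.0 - p_umb


def joint_probability(states: list[bool], evidence: list[bool]) -> float:
    """P(x_0, ..., x_t, e_1, ..., e_t); states[0] is x_0 and evidence[i - 1] is e_i."""
    assert len(states) == len(evidence) + 1
    prob = PRIOR[states[0]]
    for i in range(1, len(states)):
        # One transition factor and one sensor factor per time step.
        prob *= p_transition(states[i - 1], states[i]) * p_sensor(states[i], evidence[i - 1])
    return prob


# Rain on days 0, 1, 2 and an umbrella seen on days 1 and 2.
print(joint_probability([True, True, True], [True, True]))
```

Because every factor is a normalized conditional distribution, summing this product over all state and evidence sequences of a fixed length gives 1.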

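Example

[Figure: dynamic Bayesian network over slices $t-1$, $t$, $t+1$; each $Rain_t$ node has parent $Rain_{t-1}$ and child $Umbrella_t$]

| $R_{t-1}$ | $P(R_t \mid R_{t-1})$ |
|---|---|
| T | 0.7 |
| F | 0.3 |

| $R_t$ | $P(U_t \mid R_t)$ |
|---|---|
| T | 0.9 |
| F | 0.2 |

This should look a lot like a finite state automaton (since it is one...).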

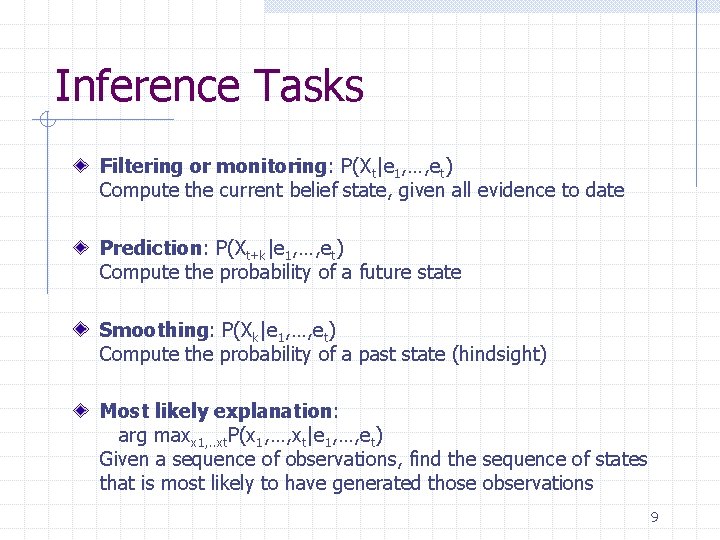

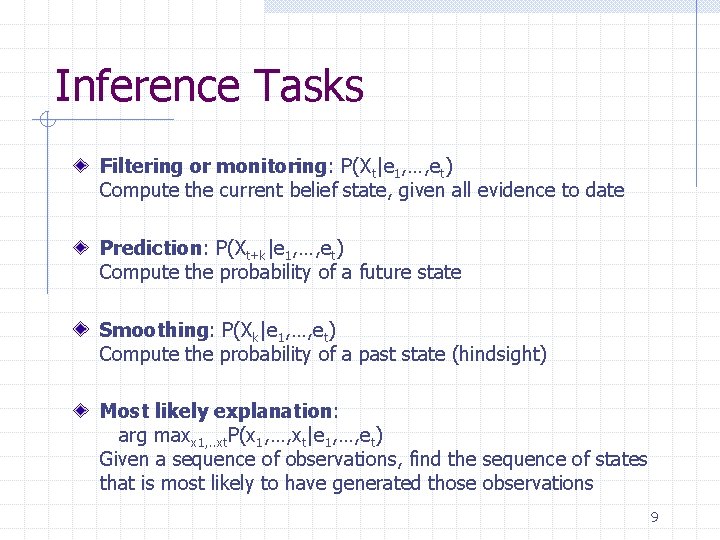

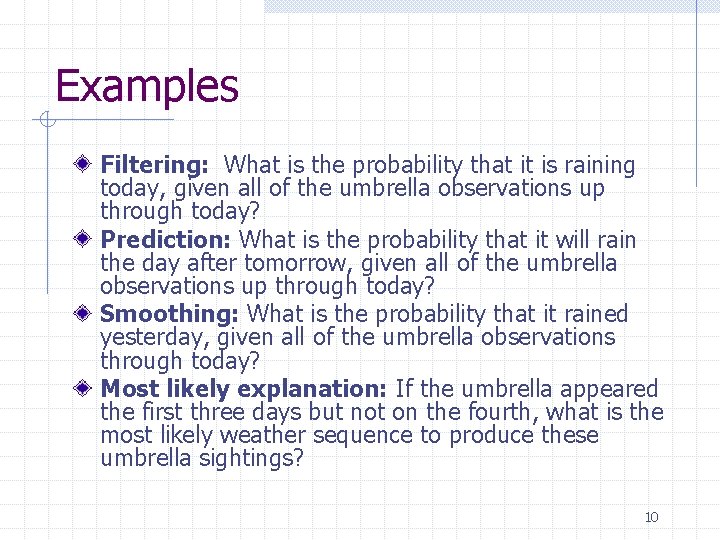
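Read as a generative process, the umbrella model is a probabilistic finite-state machine: each day's weather is drawn using only the previous day's weather, and each umbrella observation using only the current weather, with the same two tables every day. The sketch below samples such a trajectory; the function name `sample_umbrella_world` and the choice to pass in the initial state `rain0` are illustrative assumptions.

```python
import random

# Umbrella-world tables from the example (True = rain / umbrella seen).
P_RAIN_GIVEN_PREV = {True: 0.7, False: 0.3}      # P(R_t = True | R_{t-1})
P_UMBRELLA_GIVEN_RAIN = {True: 0.9, False: 0.2}  # P(U_t = True | R_t)


def sample_umbrella_world(
    steps: int, rain0: bool, rng: random.Random
) -> tuple[list[bool], list[bool]]:
    """Sample rain states R_1..R_steps and umbrella observations U_1..U_steps.

    Each state depends only on the previous state (first-order Markov), each
    observation only on the current state (sensor Markov), and the same tables
    are used at every step (stationary process).
    """
    rain, umbrellas = [], []
    prev = rain0
    for _ in range(steps):
        cur = rng.random() < P_RAIN_GIVEN_PREV[prev]
        rain.append(cur)
        umbrellas.append(rng.random() < P_UMBRELLA_GIVEN_RAIN[cur])
        prev = cur
    return rain, umbrellas


rng = random.Random(0)  # fixed seed so runs are reproducible
states, observations = sample_umbrella_world(5, rain0=True, rng=rng)
print(states, observations)
```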

Inference Tasks

- Filtering or monitoring: $P(X_t \mid e_1, \ldots, e_t)$. Compute the current belief state, given all evidence to date.
- Prediction: $P(X_{t+k} \mid e_1, \ldots, e_t)$ for $k > 0$. Compute the probability of a future state.
- Smoothing: $P(X_k \mid e_1, \ldots, e_t)$ for $0 \le k < t$. Compute the probability of a past state (hindsight).
- Most likely explanation: $\arg\max_{x_1, \ldots, x_t} P(x_1, \ldots, x_t \mid e_1, \ldots, e_t)$. Given a sequence of observations, find the sequence of states that is most likely to have generated those observations.

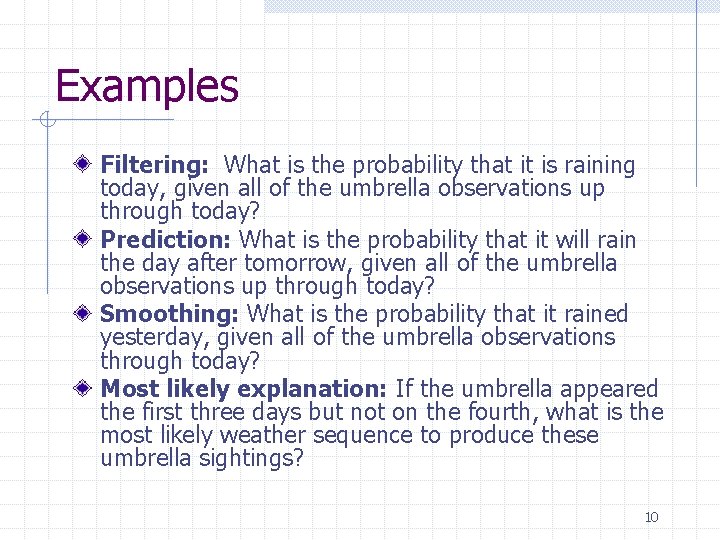
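Prediction is the simplest of these tasks to sketch: once the filtered belief $P(X_t \mid e_{1:t})$ is known, each further step with no new evidence just pushes the belief through the transition model. A minimal sketch for the umbrella model's transition table, assuming the belief is stored as a single number $P(R_t = \text{true})$ (the function name `predict` is illustrative):

```python
# Umbrella-world transition model (True = rain), from the example tables.
P_RAIN_GIVEN_RAIN = 0.7  # P(R_{t+1} = True | R_t = True)
P_RAIN_GIVEN_DRY = 0.3   # P(R_{t+1} = True | R_t = False)


def predict(p_rain: float, k: int) -> float:
    """Return P(R_{t+k} = True | e_{1:t}) from the filtered P(R_t = True | e_{1:t}).

    With no new evidence, prediction applies only the transition model, k times:
    P(r_{t+1}) = sum over r_t of P(r_{t+1} | r_t) * P(r_t).
    """
    for _ in range(k):
        p_rain = P_RAIN_GIVEN_RAIN * p_rain + P_RAIN_GIVEN_DRY * (1.0 - p_rain)
    return p_rain


for k in (1, 2, 5, 20):
    print(k, round(predict(1.0, k), 4))
```

Starting from certain rain, the predictions drift toward 0.5, the stationary probability of rain for this chain, so long-range predictions carry little information about today's evidence.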

Examples

- Filtering: What is the probability that it is raining today, given all of the umbrella observations up through today?
- Prediction: What is the probability that it will rain the day after tomorrow, given all of the umbrella observations up through today?
- Smoothing: What is the probability that it rained yesterday, given all of the umbrella observations through today?
- Most likely explanation: If the umbrella appeared on the first three days but not on the fourth, what is the most likely weather sequence to have produced these umbrella sightings?
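The most-likely-explanation question can be answered with the Viterbi algorithm, a dynamic program that keeps, for each state, the probability of the best path ending there plus a backpointer. A minimal sketch for the umbrella model, assuming a uniform prior $P(R_0)$ (the question itself does not state one) and illustrative helper names:

```python
# Umbrella-world model (True = rain / umbrella seen).
TRANSITION = {True: 0.7, False: 0.3}  # P(R_t = True | R_{t-1})
SENSOR = {True: 0.9, False: 0.2}      # P(U_t = True | R_t)
PRIOR = {True: 0.5, False: 0.5}       # assumed uniform P(R_0)
STATES = (True, False)


def p_trans(prev: bool, cur: bool) -> float:
    return TRANSITION[prev] if cur else 1.0 - TRANSITION[prev]


def p_obs(state: bool, umbrella: bool) -> float:
    return SENSOR[state] if umbrella else 1.0 - SENSOR[state]


def most_likely_explanation(evidence: list[bool]) -> list[bool]:
    """Viterbi: most likely rain sequence R_1..R_t given umbrella observations."""
    # m[s] = max over x_1..x_{t-1} of P(x_1..x_{t-1}, R_t = s, e_1..e_t); R_0 is summed out.
    m = {
        s: p_obs(s, evidence[0]) * sum(p_trans(r0, s) * PRIOR[r0] for r0 in STATES)
        for s in STATES
    }
    backpointers = []
    for e in evidence[1:]:
        new_m, pointers = {}, {}
        for s in STATES:
            best_prev = max(STATES, key=lambda r: m[r] * p_trans(r, s))
            new_m[s] = p_obs(s, e) * m[best_prev] * p_trans(best_prev, s)
            pointers[s] = best_prev
        m = new_m
        backpointers.append(pointers)
    # Trace back from the best final state.
    state = max(STATES, key=lambda s: m[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return list(reversed(path))


print(most_likely_explanation([True, True, True, False]))  # → [True, True, True, False]
```

Under these assumptions, the most likely explanation is rain on the first three days and no rain on the fourth.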

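Filtering

We use recursive estimation to compute $P(X_{t+1} \mid e_{1:t+1})$ as a function of $e_{t+1}$ and $P(X_t \mid e_{1:t})$. We can write this as follows:

$$P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$

This leads to a recursive definition: $f_{1:t+1} = \mathrm{FORWARD}(f_{1:t}, e_{t+1})$

QUIZLET: What is $\alpha$?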

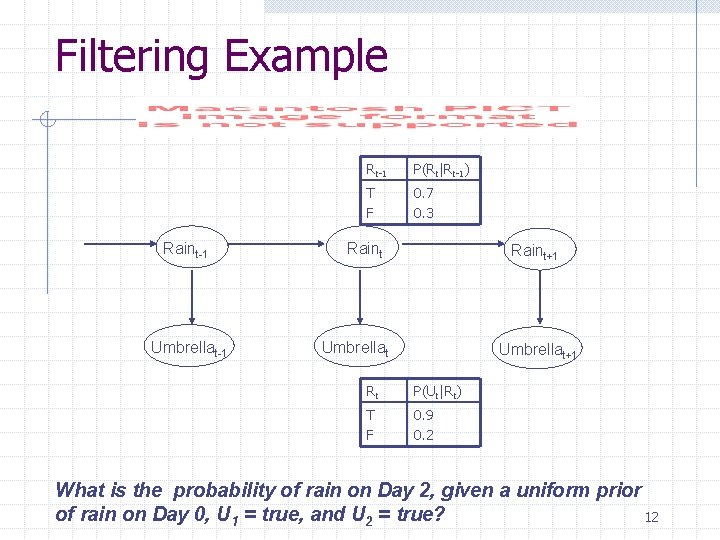

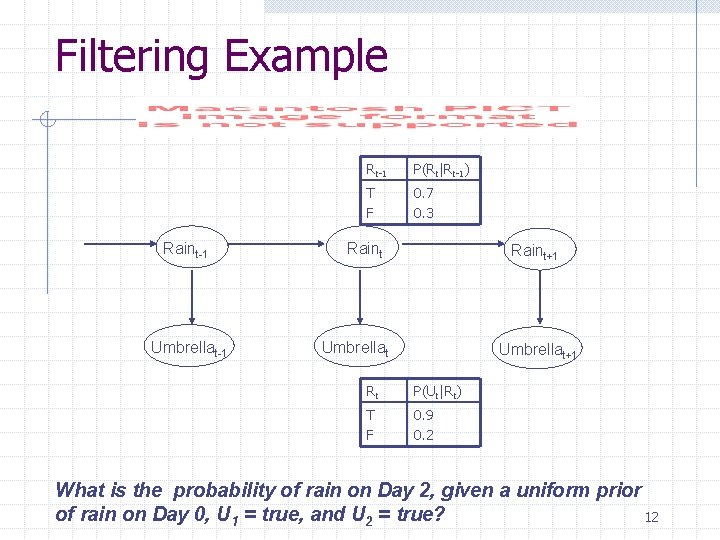
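A minimal sketch of one FORWARD step for the umbrella model, assuming beliefs are stored as dictionaries keyed by state (True = rain): push the previous belief through the transition model, weight each state by the likelihood of the new observation, then rescale so the belief sums to 1. The names `forward` and `filter_sequence` and the dictionary encoding are illustrative, not from the slides.

```python
# Umbrella-world model (True = rain / umbrella seen).
TRANSITION = {True: 0.7, False: 0.3}  # P(R_{t+1} = True | R_t)
SENSOR = {True: 0.9, False: 0.2}      # P(U_t = True | R_t)
STATES = (True, False)


def forward(f: dict[bool, float], umbrella: bool) -> dict[bool, float]:
    """One filtering step: f_{1:t+1} = FORWARD(f_{1:t}, e_{t+1})."""
    # Prediction: P(R_{t+1} | e_{1:t}) = sum over r_t of P(R_{t+1} | r_t) * P(r_t | e_{1:t})
    predicted = {
        s: sum((TRANSITION[r] if s else 1.0 - TRANSITION[r]) * f[r] for r in STATES)
        for s in STATES
    }
    # Update: multiply by the sensor likelihood P(e_{t+1} | R_{t+1})
    unnormalized = {
        s: (SENSOR[s] if umbrella else 1.0 - SENSOR[s]) * predicted[s] for s in STATES
    }
    # Normalize so the belief sums to 1
    total = sum(unnormalized.values())
    return {s: p / total for s, p in unnormalized.items()}


def filter_sequence(prior: dict[bool, float], evidence: list[bool]) -> dict[bool, float]:
    """Apply FORWARD once per observation, starting from the prior P(R_0)."""
    f = dict(prior)
    for e in evidence:
        f = forward(f, e)
    return f


belief = filter_sequence({True: 0.5, False: 0.5}, [True, True])
print(round(belief[True], 3))
```

Running it on the filtering example's evidence (uniform prior, umbrella seen on days 1 and 2) gives a rain probability of roughly 0.883 for Day 2. Each step costs constant time and memory, independent of how much evidence has already been seen.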

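Filtering Example

Using the umbrella model from the example above ($P(R_t = \text{true} \mid R_{t-1}) = 0.7$ if it rained the previous day and $0.3$ otherwise; $P(U_t = \text{true} \mid R_t) = 0.9$ if it is raining and $0.2$ otherwise): what is the probability of rain on Day 2, given a uniform prior over rain on Day 0, $U_1 = \text{true}$, and $U_2 = \text{true}$?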
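Working the filtering recursion through by hand, with beliefs written as $\langle P(\text{rain}), P(\text{no rain}) \rangle$, the uniform prior $P(R_0) = \langle 0.5, 0.5 \rangle$ from the question, $\alpha$ rescaling each vector to sum to 1, and values rounded to three decimals:

$$
\begin{aligned}
P(R_1) &= 0.5\,\langle 0.7, 0.3\rangle + 0.5\,\langle 0.3, 0.7\rangle = \langle 0.5, 0.5\rangle \\
P(R_1 \mid u_1) &= \alpha\,\langle 0.9 \times 0.5,\ 0.2 \times 0.5\rangle = \alpha\,\langle 0.45, 0.1\rangle \approx \langle 0.818, 0.182\rangle \\
P(R_2 \mid u_1) &= 0.818\,\langle 0.7, 0.3\rangle + 0.182\,\langle 0.3, 0.7\rangle \approx \langle 0.627, 0.373\rangle \\
P(R_2 \mid u_1, u_2) &= \alpha\,\langle 0.9 \times 0.627,\ 0.2 \times 0.373\rangle \approx \alpha\,\langle 0.564, 0.075\rangle \approx \langle 0.883, 0.117\rangle
\end{aligned}
$$

So the probability of rain on Day 2 comes out to roughly 0.883.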

DECISION MAKING UNDER UNCERTAINTY

Decision Making Under Uncertainty

- Many environments have multiple possible outcomes
- Some of these outcomes may be good; others may be bad
- Some may be very likely; others unlikely
- What's a poor agent to do?

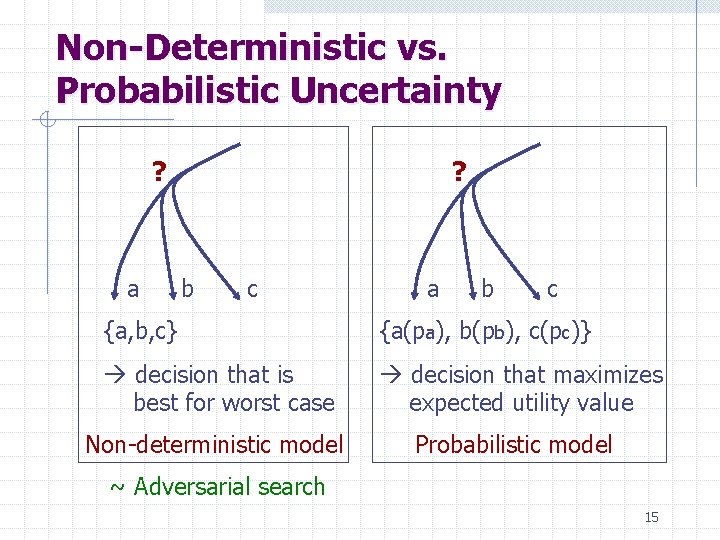

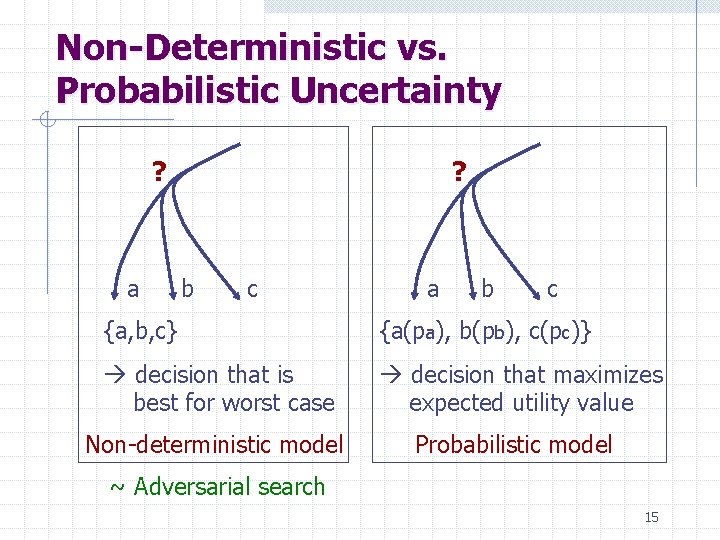

Non-Deterministic vs. Probabilistic Uncertainty

- Non-deterministic model: an action's outcome is one of {a, b, c} → choose the decision that is best for the worst case (~ adversarial search)
- Probabilistic model: the outcomes {a($p_a$), b($p_b$), c($p_c$)} have known probabilities → choose the decision that maximizes expected utility value

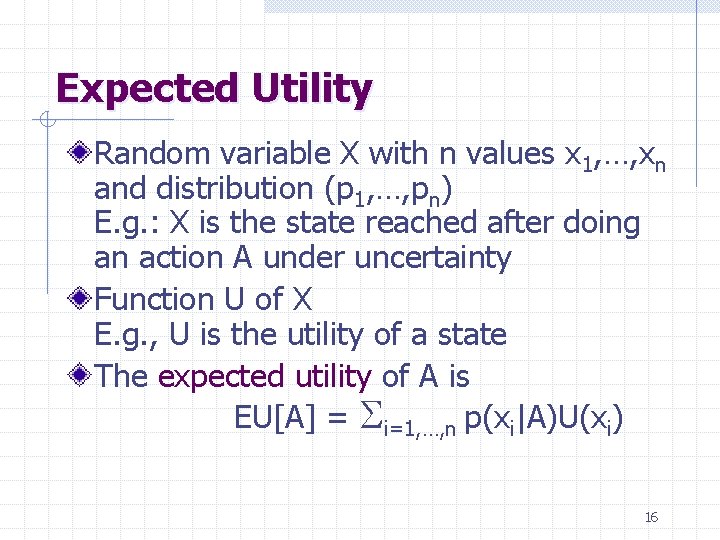

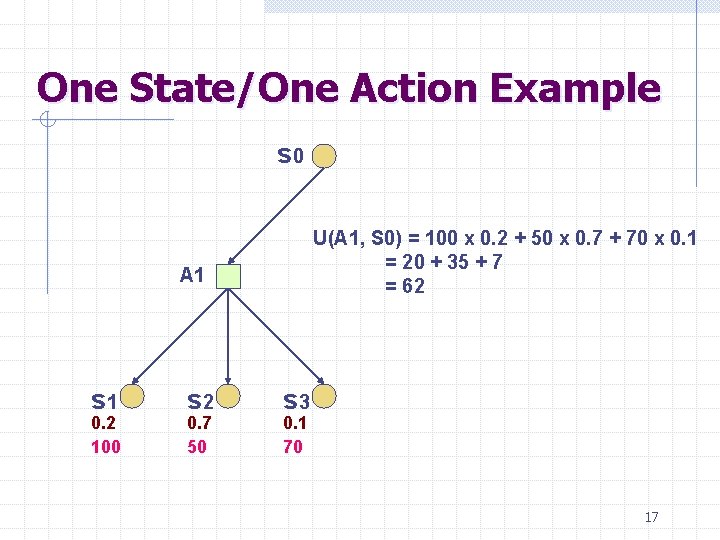

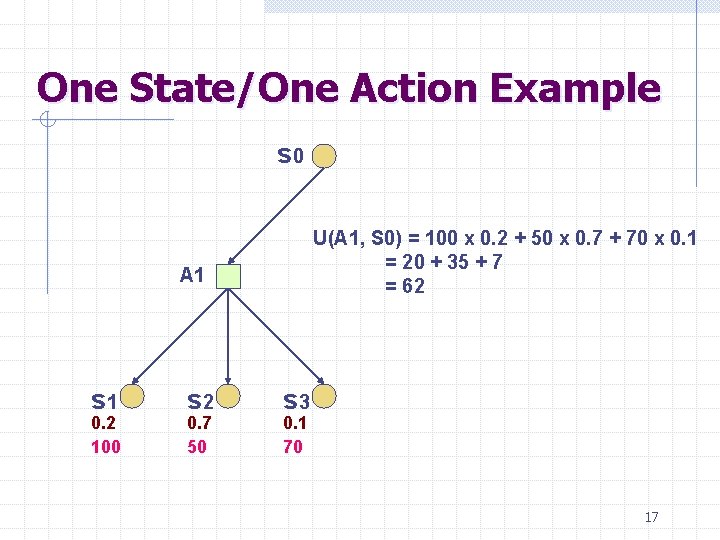
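The two decision rules can be contrasted in a short sketch. The actions `safe` and `risky` and their outcome tables are hypothetical numbers chosen for illustration: the worst-case rule ignores probabilities and picks the action whose worst outcome is best, while the expected-utility rule weights each outcome by its probability.

```python
# Hypothetical outcome tables: action -> list of (probability, utility) pairs.
ACTIONS = {
    "safe": [(0.5, 40), (0.5, 45)],
    "risky": [(0.9, 100), (0.1, 10)],
}


def best_worst_case(actions: dict[str, list[tuple[float, float]]]) -> str:
    """Non-deterministic model: ignore probabilities, maximize the minimum utility."""
    return max(actions, key=lambda a: min(u for _, u in actions[a]))


def best_expected_utility(actions: dict[str, list[tuple[float, float]]]) -> str:
    """Probabilistic model: maximize the sum of probability * utility over outcomes."""
    return max(actions, key=lambda a: sum(p * u for p, u in actions[a]))


print(best_worst_case(ACTIONS), best_expected_utility(ACTIONS))  # → safe risky
```

The rules disagree here because the risky action's bad outcome is unlikely: the worst-case rule cannot use that information, while the expected-utility rule can.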

Expected Utility

- Random variable $X$ with $n$ values $x_1, \ldots, x_n$ and distribution $(p_1, \ldots, p_n)$. E.g., $X$ is the state reached after doing an action $A$ under uncertainty.
- Function $U$ of $X$. E.g., $U$ is the utility of a state.
- The expected utility of $A$ is $EU[A] = \sum_{i=1}^{n} p(x_i \mid A)\, U(x_i)$
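A direct transcription of the formula, as a sketch assuming each action's outcomes are given as (probability, utility) pairs; the function name `expected_utility` and the sanity check on the probabilities are illustrative additions.

```python
def expected_utility(outcomes: list[tuple[float, float]]) -> float:
    """EU[A] = sum over i of p(x_i | A) * U(x_i), given (probability, utility) pairs."""
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_p}, not 1")
    return sum(p * u for p, u in outcomes)


# Action A1 from the one-state/one-action example: utilities 100, 50, 70.
a1 = [(0.2, 100), (0.7, 50), (0.1, 70)]
print(expected_utility(a1))  # approximately 62
```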

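One State/One Action Example

[Figure: from state $s_0$, action $A_1$ leads to $s_1$ with probability 0.2 (utility 100), $s_2$ with probability 0.7 (utility 50), and $s_3$ with probability 0.1 (utility 70)]

$U(A_1, s_0) = 100 \times 0.2 + 50 \times 0.7 + 70 \times 0.1 = 20 + 35 + 7 = 62$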

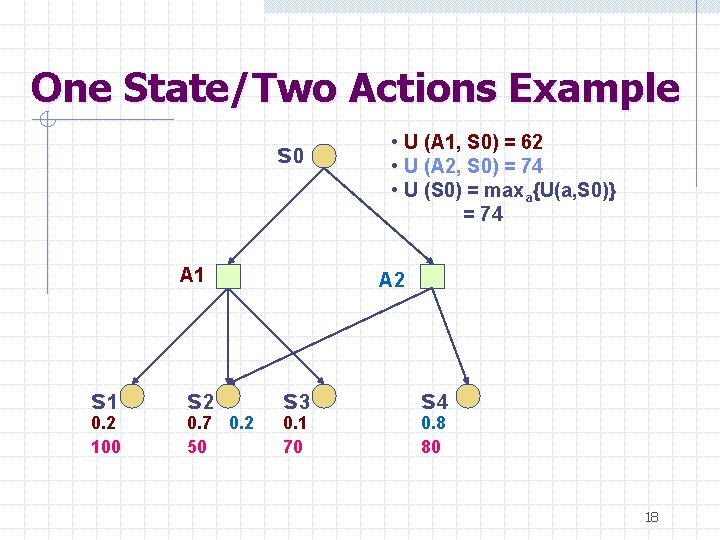

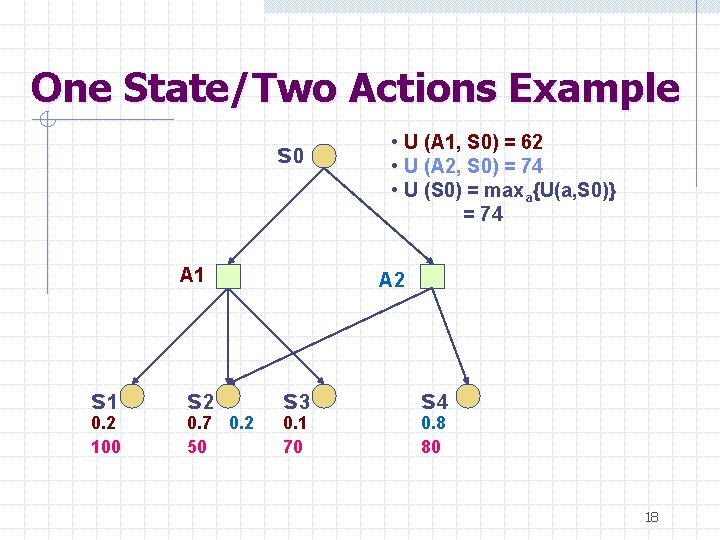

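One State/Two Actions Example

[Figure: from state $s_0$, action $A_1$ leads to $s_1$ (probability 0.2, utility 100), $s_2$ (probability 0.7, utility 50), and $s_3$ (probability 0.1, utility 70); action $A_2$ leads to $s_2$ (probability 0.2, utility 50) and $s_4$ (probability 0.8, utility 80)]

- $U(A_1, s_0) = 62$
- $U(A_2, s_0) = 50 \times 0.2 + 80 \times 0.8 = 10 + 64 = 74$
- $U(s_0) = \max_a U(a, s_0) = 74$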

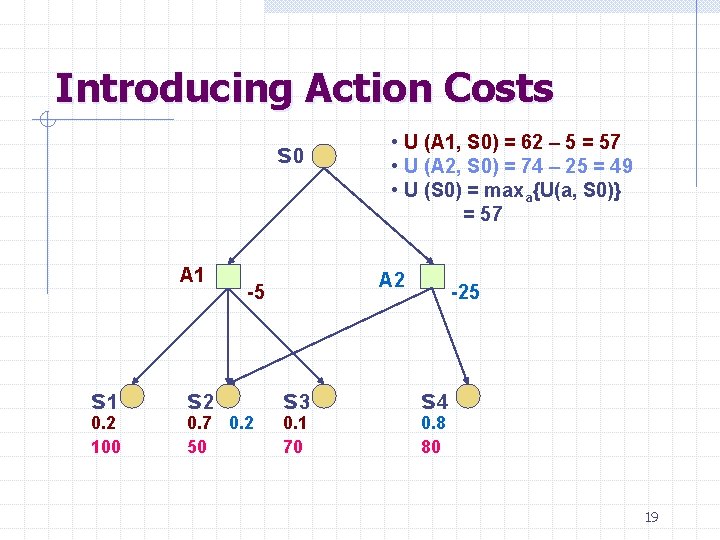

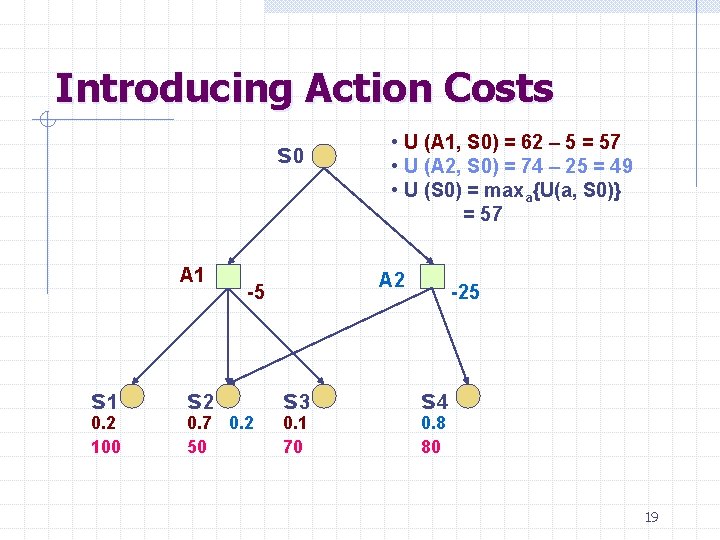

Introducing Action Costs

[Figure: the same tree as in the two-action example, with a cost of 5 for action $A_1$ and a cost of 25 for action $A_2$]

- $U(A_1, s_0) = 62 - 5 = 57$
- $U(A_2, s_0) = 74 - 25 = 49$
- $U(s_0) = \max_a U(a, s_0) = 57$

MEU Principle

- A rational agent should choose the action that maximizes its expected utility
- This is the basis of the field of decision theory
- The MEU principle provides a normative criterion for rational choice of action

Not quite…

- Must have a complete model of:
  - Actions
  - Utilities
  - States
- Even with a complete model, exact decision making is often computationally intractable
- In fact, a truly rational agent takes into account the utility of reasoning as well (bounded rationality)
- Nevertheless, great progress has been made in this area recently, and we are able to solve much more complex decision-theoretic problems than ever before

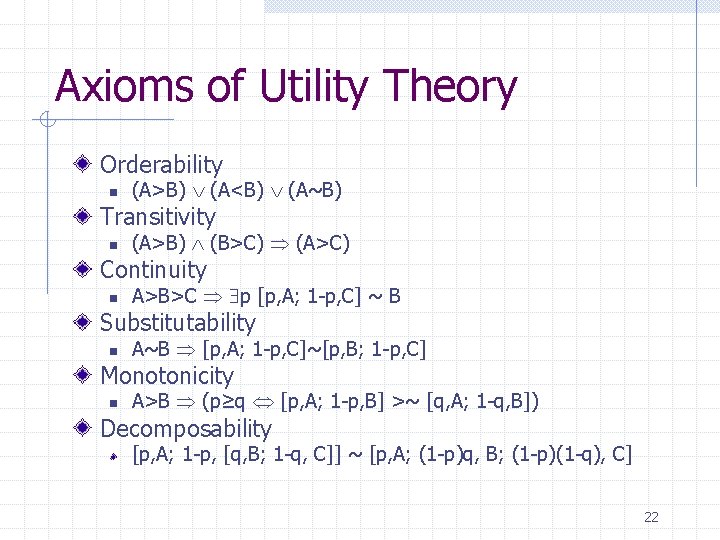

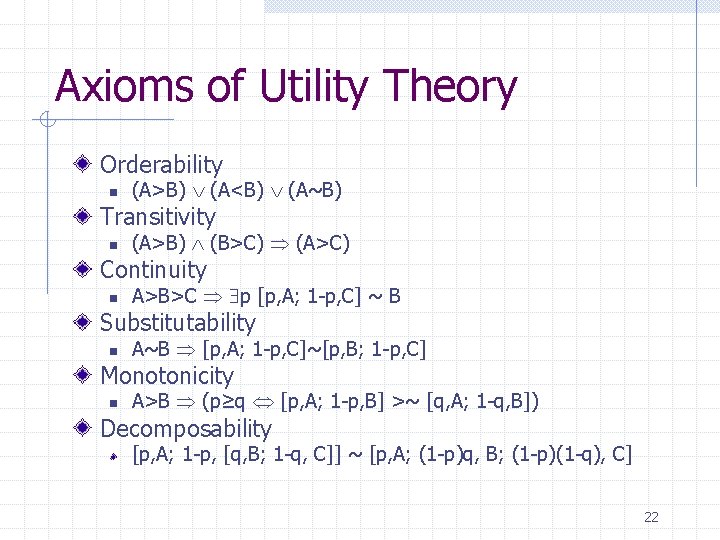

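Axioms of Utility Theory

- Orderability: $(A \succ B) \lor (A \prec B) \lor (A \sim B)$
- Transitivity: $(A \succ B) \land (B \succ C) \Rightarrow (A \succ C)$
- Continuity: $A \succ B \succ C \Rightarrow \exists p\ [p, A;\ 1-p, C] \sim B$
- Substitutability: $A \sim B \Rightarrow [p, A;\ 1-p, C] \sim [p, B;\ 1-p, C]$
- Monotonicity: $A \succ B \Rightarrow \big(p \ge q \Leftrightarrow [p, A;\ 1-p, B] \succsim [q, A;\ 1-q, B]\big)$
- Decomposability: $[p, A;\ 1-p, [q, B;\ 1-q, C]] \sim [p, A;\ (1-p)q, B;\ (1-p)(1-q), C]$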
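Decomposability says a compound lottery is equivalent to the simple lottery obtained by multiplying probabilities along each path to a prize. A minimal sketch of that reduction, assuming a hypothetical representation in which a lottery is a list of (probability, outcome) pairs and an outcome is either a prize label or another lottery; exact fractions avoid rounding noise.

```python
from fractions import Fraction


def flatten(lottery: list) -> dict:
    """Reduce a (possibly compound) lottery to a simple one: prize -> probability.

    A prize nested inside sub-lotteries receives the product of the probabilities
    along the path to it; probabilities of repeated prizes are added.
    """
    result: dict = {}
    for p, outcome in lottery:
        if isinstance(outcome, list):  # a nested lottery
            for prize, q in flatten(outcome).items():
                result[prize] = result.get(prize, 0) + p * q
        else:  # a prize label
            result[outcome] = result.get(outcome, 0) + p
    return result


p, q = Fraction(1, 4), Fraction(2, 3)
compound = [(p, "A"), (1 - p, [(q, "B"), (1 - q, "C")])]  # [p, A; 1-p, [q, B; 1-q, C]]
print(flatten(compound))
```

With $p = 1/4$ and $q = 2/3$ the reduced lottery is $[1/4, A;\ 1/2, B;\ 1/4, C]$, matching $[p, A;\ (1-p)q, B;\ (1-p)(1-q), C]$.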

Money Versus Utility

- Money ≠ Utility: more money is better, but utility is not always linear in the amount of money
- Expected Monetary Value $EMV(L)$ of a lottery $L$; $S_{EMV(L)}$ denotes the state of receiving $EMV(L)$ with certainty
  - Risk-averse: $U(L) < U(S_{EMV(L)})$
  - Risk-seeking: $U(L) > U(S_{EMV(L)})$
  - Risk-neutral: $U(L) = U(S_{EMV(L)})$
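The three attitudes can be checked numerically for a single lottery. This sketch assumes a hypothetical coin flip paying 0 or 100 dollars and three example utility functions on money (square root, square, identity), chosen only for illustration; the function names are also illustrative.

```python
import math


def emv(lottery: list[tuple[float, float]]) -> float:
    """Expected monetary value of a lottery given as (probability, dollars) pairs."""
    return sum(p * x for p, x in lottery)


def lottery_utility(lottery: list[tuple[float, float]], utility) -> float:
    """U(L): the expected utility of the lottery under a utility function on money."""
    return sum(p * utility(x) for p, x in lottery)


def risk_attitude(lottery: list[tuple[float, float]], utility, tol: float = 1e-9) -> str:
    """Compare U(L) with U(S_EMV(L)) for one lottery under one utility function."""
    u_lottery = lottery_utility(lottery, utility)
    u_sure = utility(emv(lottery))  # utility of receiving EMV(L) for certain
    if u_lottery < u_sure - tol:
        return "risk-averse"
    if u_lottery > u_sure + tol:
        return "risk-seeking"
    return "risk-neutral"


coin_flip = [(0.5, 0.0), (0.5, 100.0)]
print(risk_attitude(coin_flip, math.sqrt))         # → risk-averse (concave utility)
print(risk_attitude(coin_flip, lambda x: x ** 2))  # → risk-seeking (convex utility)
print(risk_attitude(coin_flip, lambda x: x))       # → risk-neutral (linear utility)
```

Under the square-root utility, $U(L) = 0.5 \times 0 + 0.5 \times 10 = 5$, while the sure 50 dollars is worth $\sqrt{50} \approx 7.07$, so this agent would accept less than 50 dollars for certain in place of the gamble.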

Value Function

- Provides a ranking of alternatives, but not a meaningful metric scale
- Also known as an "ordinal utility function"
- Sometimes, only relative judgments (value functions) are necessary
- At other times, absolute judgments (utility functions) are required
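One way to see when relative judgments are not enough: any strictly increasing transform of a function preserves the ranking of sure outcomes, but it can change which lottery has the higher expected value. The sketch below uses two hypothetical functions, $u(x) = x$ and $v(x) = x^3$, and a hypothetical gamble; the names are illustrative.

```python
def u(x: float) -> float:
    """A hypothetical utility function on outcomes."""
    return x


def v(x: float) -> float:
    """A strictly increasing transform of u, so it induces the same ordering."""
    return x ** 3


def ranking(outcomes: list[float], value) -> list[float]:
    """Order sure outcomes from best to worst under a value function."""
    return sorted(outcomes, key=value, reverse=True)


def prefers_lottery(lottery: list[tuple[float, float]], sure: float, utility) -> bool:
    """True if the lottery's expected utility exceeds the sure outcome's utility."""
    return sum(p * utility(x) for p, x in lottery) > utility(sure)


outcomes = [0, 6, 10]
gamble = [(0.5, 0), (0.5, 10)]
print(ranking(outcomes, u) == ranking(outcomes, v))                   # → True
print(prefers_lottery(gamble, 6, u), prefers_lottery(gamble, 6, v))  # → False True
```

Both functions rank the sure outcomes identically, which is all an ordinal value function promises, yet they disagree on whether to take the gamble over a sure 6. Choices among lotteries therefore need the stronger, cardinal information carried by a utility function.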