
CSE 5343/7343 Fall 2006: Case Studies Comparing Windows XP and Linux

Windows Operating System Internals - by David A. Solomon and Mark E. Russinovich with Andreas Polze

Copyright Notice: © 2000-2005 David A. Solomon and Mark Russinovich. These materials are part of the Windows Operating System Internals Curriculum Development Kit, developed by David A. Solomon and Mark E. Russinovich with Andreas Polze. Microsoft has licensed these materials from David Solomon Expert Seminars, Inc. for distribution to academic organizations solely for use in academic environments (and not for commercial use).

Background and Architecture

Linus and Linux
- In 1991 Linus Torvalds took a college computer science course that used the Minix operating system.
- Minix is a “toy” UNIX-like OS written by Andrew Tanenbaum as a learning workbench.
- Linus wanted to make Minix more usable, but Tanenbaum wanted to keep it ultra-simple.
- Linus went in his own direction and began working on Linux.
- In October 1991 he announced Linux v0.02.
- In March 1994 he released Linux v1.0.

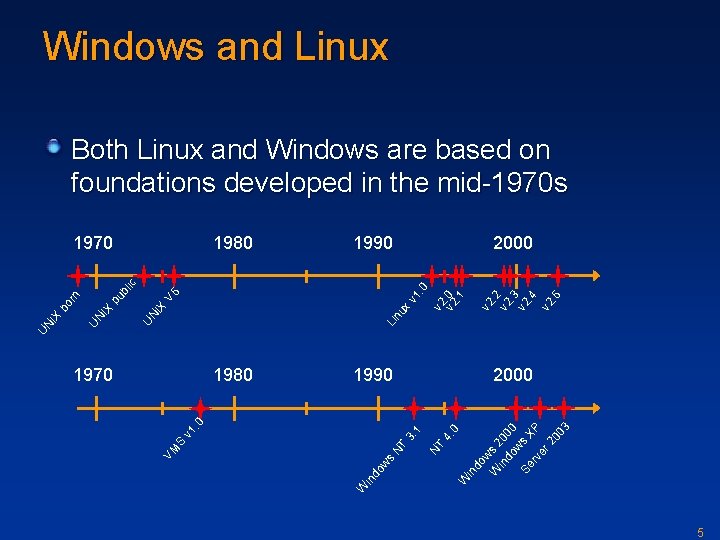

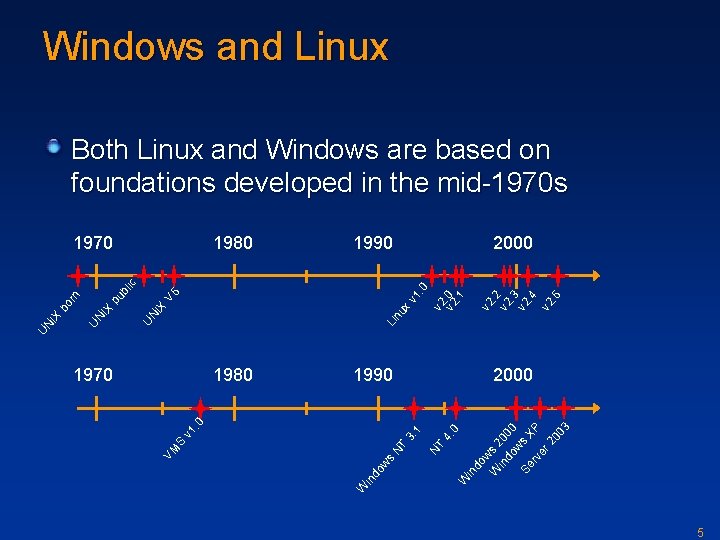

Windows and Linux
Both Linux and Windows are based on foundations developed in the mid-1970s.
[Timeline figure, 1970 to 2000s: UNIX born (1970), UNIX V6 published, VMS v1.0; Windows NT 3.1, NT 4.0, Windows 2000, Windows XP, Windows Server 2003; Linux v1.0, v2.0, v2.2, v2.3, v2.4, v2.6.]

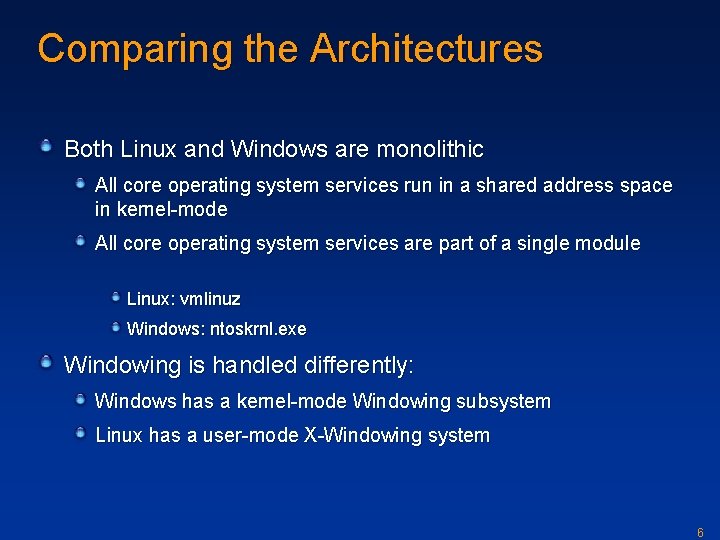

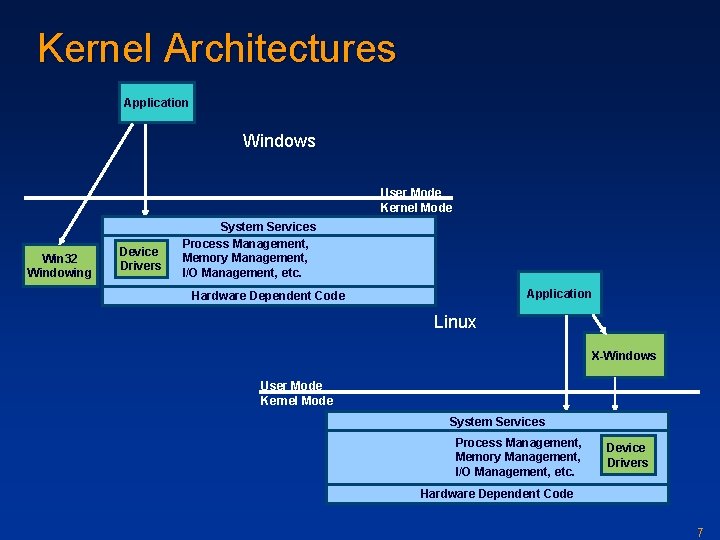

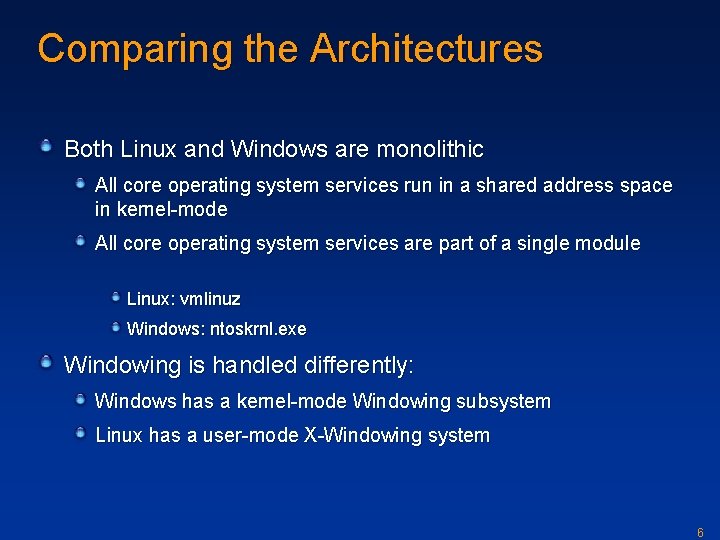

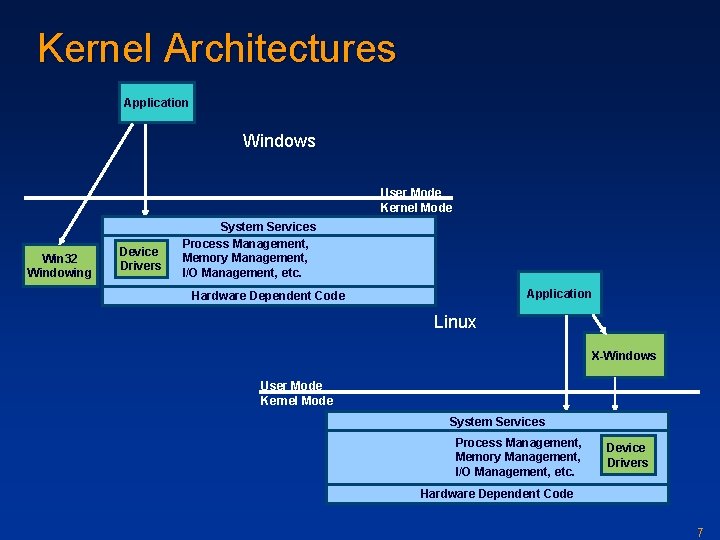

Comparing the Architectures
- Both Linux and Windows are monolithic: all core operating system services run in a shared address space in kernel mode.
- All core operating system services are part of a single module (Linux: vmlinuz; Windows: ntoskrnl.exe).
- Windowing is handled differently: Windows has a kernel-mode windowing subsystem, while Linux has the user-mode X Window System.

Kernel Architectures
[Figure: side-by-side kernel architectures. Windows: applications in user mode; kernel mode holds Win32 windowing, system services, process management, memory management, I/O management, device drivers, and hardware-dependent code. Linux: applications and X Windows in user mode; kernel mode holds system services, process management, memory management, I/O management, device drivers, and hardware-dependent code.]

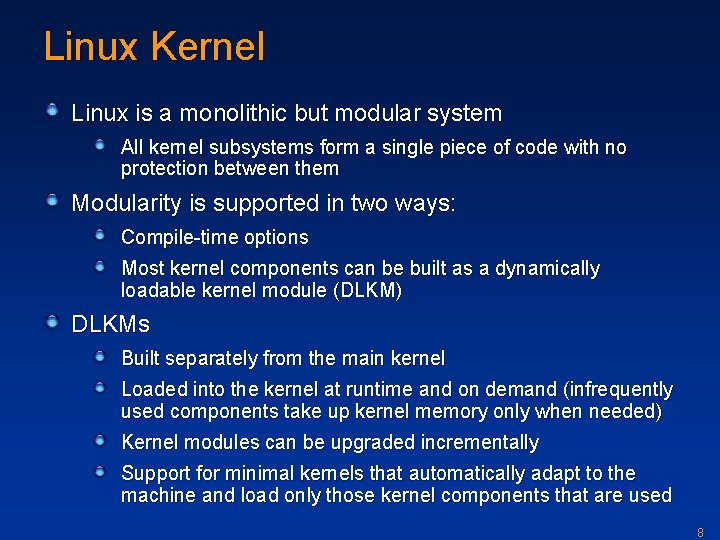

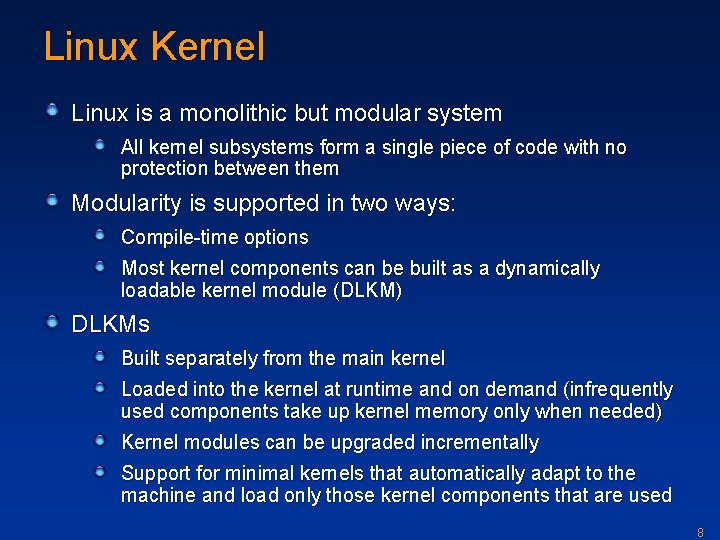

Linux Kernel
Linux is a monolithic but modular system:
- All kernel subsystems form a single piece of code with no protection between them.
- Modularity is supported in two ways: compile-time options, and dynamically loadable kernel modules (DLKMs); most kernel components can be built as a DLKM.

DLKMs:
- Are built separately from the main kernel.
- Are loaded into the kernel at runtime and on demand (infrequently used components take up kernel memory only when needed).
- Can be upgraded incrementally.
- Support minimal kernels that automatically adapt to the machine and load only those kernel components that are used.

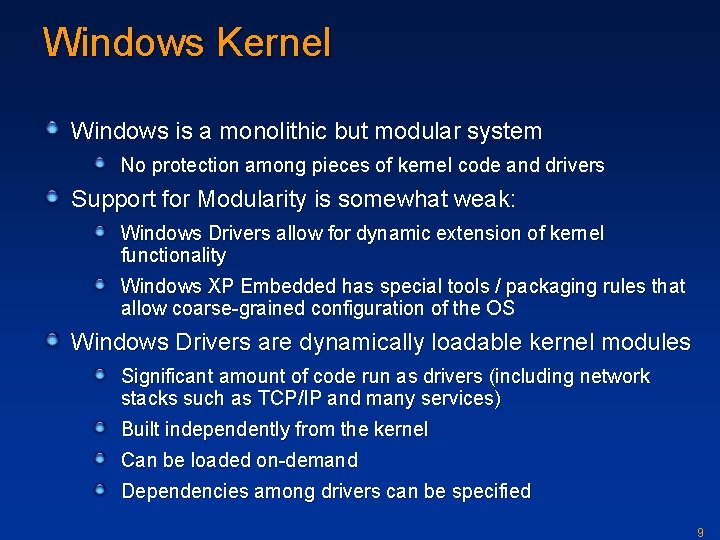

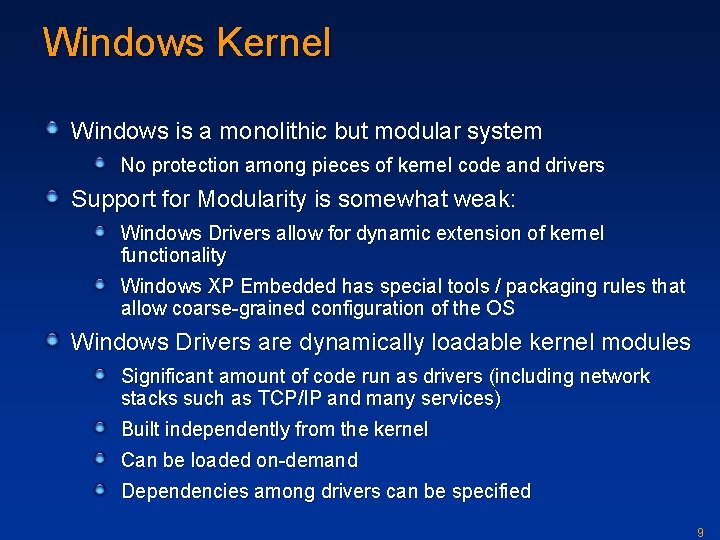

Windows Kernel
Windows is a monolithic but modular system:
- There is no protection among pieces of kernel code and drivers.
- Support for modularity is somewhat weak: Windows drivers allow for dynamic extension of kernel functionality, and Windows XP Embedded has special tools and packaging rules that allow coarse-grained configuration of the OS.

Windows drivers are dynamically loadable kernel modules:
- A significant amount of code runs as drivers (including network stacks such as TCP/IP, and many services).
- They are built independently from the kernel.
- They can be loaded on demand.
- Dependencies among drivers can be specified.

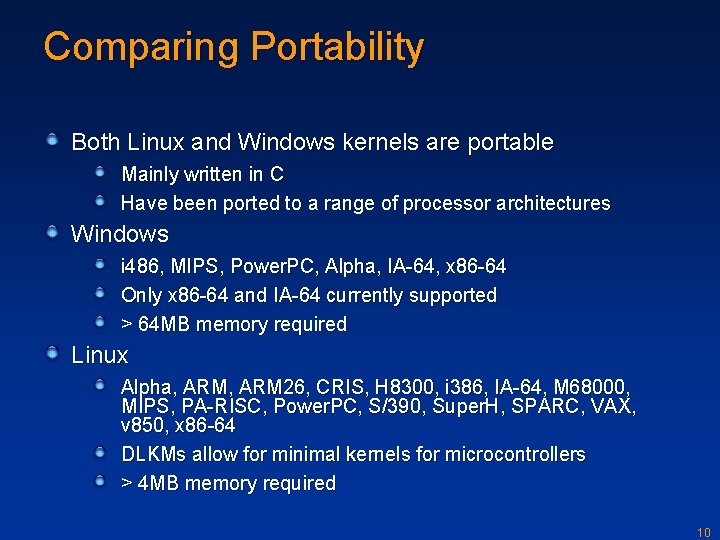

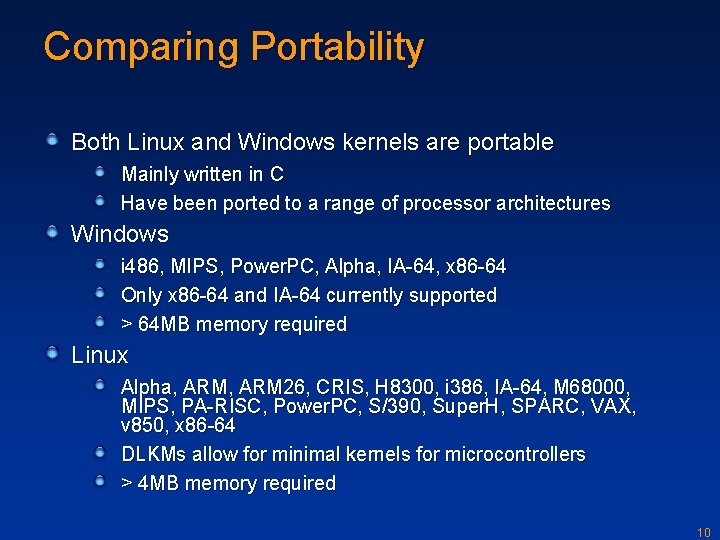

Comparing Portability
Both Linux and Windows kernels are portable: they are written mainly in C and have been ported to a range of processor architectures.
- Windows: i486, MIPS, PowerPC, Alpha, IA-64, x86-64. Only x86, x86-64, and IA-64 are currently supported; more than 64 MB of memory is required.
- Linux: Alpha, ARM26, CRIS, H8300, i386, IA-64, M68000, MIPS, PA-RISC, PowerPC, S/390, SuperH, SPARC, VAX, v850, x86-64. DLKMs allow for minimal kernels for microcontrollers; more than 4 MB of memory is required.

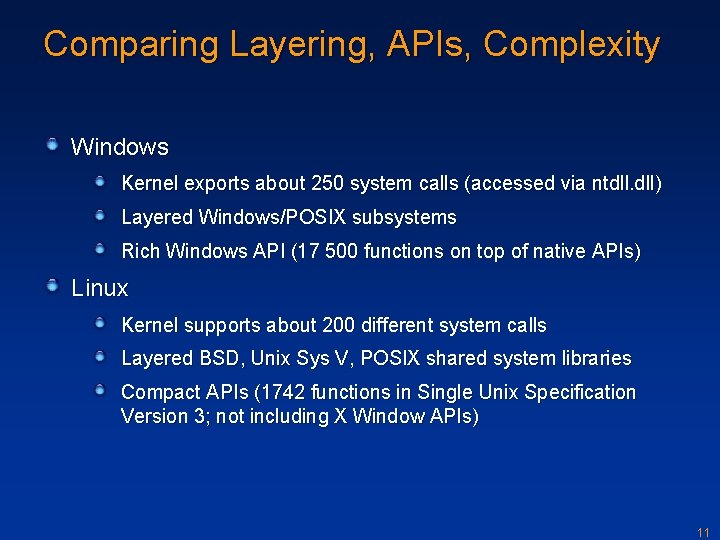

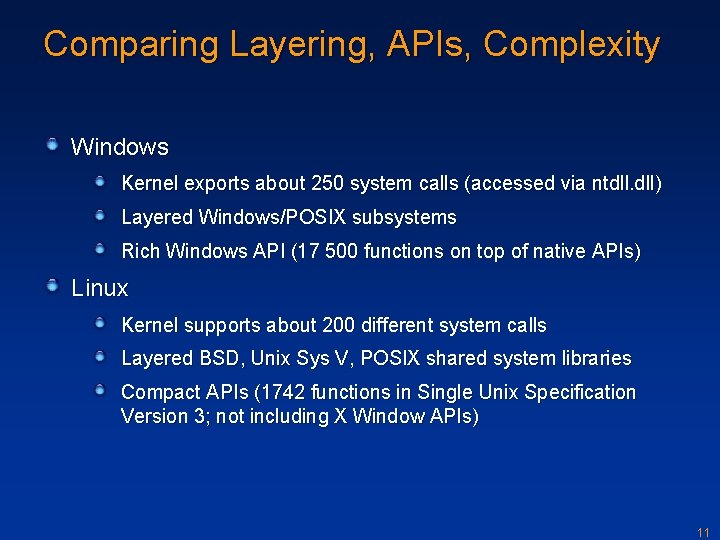

Comparing Layering, APIs, Complexity
- Windows: the kernel exports about 250 system calls (accessed via ntdll.dll); Windows and POSIX subsystems are layered on top; the Windows API is rich (about 17,500 functions on top of the native APIs).
- Linux: the kernel supports about 200 different system calls; BSD, UNIX System V, and POSIX shared system libraries are layered on top; the APIs are compact (1,742 functions in the Single UNIX Specification Version 3, not including X Window APIs).
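On the Linux side, the layering is easy to see from C: a library function such as getpid() is a thin wrapper over a numbered kernel system call, and glibc's syscall() lets a program issue the call directly. A minimal sketch, assuming Linux with glibc; the two helper names are hypothetical, and the Windows path through ntdll.dll is not shown.

```c
#define _GNU_SOURCE
#include <sys/syscall.h>   /* SYS_getpid */
#include <unistd.h>        /* syscall(), getpid() */

/* Hypothetical helper: issue the getpid system call by number,
 * bypassing the C library wrapper. */
long pid_via_raw_syscall(void)
{
    return syscall(SYS_getpid);
}

/* Hypothetical helper: the same request through the libc wrapper. */
long pid_via_libc(void)
{
    return (long)getpid();
}
```

Both paths end in the same kernel entry point, so they return the same value.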

Comparing Architectures
- Processes and scheduling
- SMP support
- Memory management
- I/O
- File caching
- Security

Process Management

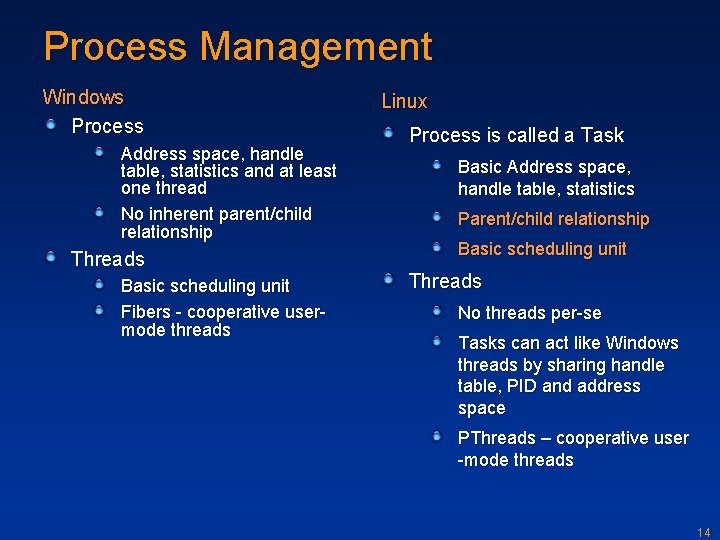

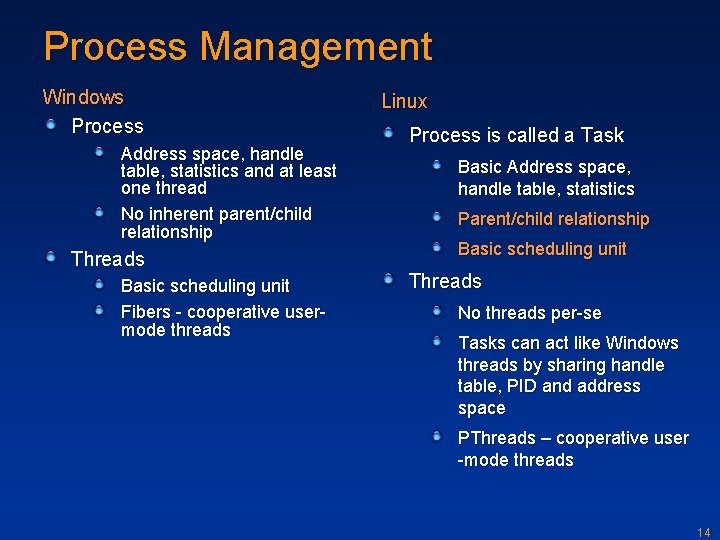

Process Management
Windows:
- Process: address space, handle table, statistics, and at least one thread; no inherent parent/child relationship.
- Threads: the basic scheduling unit.
- Fibers: cooperative user-mode threads.

Linux:
- A process is called a task: address space, handle table (file descriptor table), and statistics; it has a parent/child relationship and is the basic scheduling unit.
- There are no threads per se: tasks can act like Windows threads by sharing the handle table, PID, and address space.
- PThreads: in Linux 2.6 (NPTL) each POSIX thread is backed by its own kernel task rather than being a cooperative user-mode thread.
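To make "tasks can act like threads" concrete, the sketch below uses the Linux clone() call, where flags choose what the new task shares with its creator. It is a minimal illustration assuming Linux and glibc's clone() wrapper; run_task and child_task are hypothetical names, and real thread libraries pass more flags (for example CLONE_THREAD so the tasks share one PID).

```c
#define _GNU_SOURCE
#include <sched.h>      /* clone(), CLONE_* flags */
#include <signal.h>     /* SIGCHLD */
#include <stdlib.h>     /* malloc(), free() */
#include <sys/wait.h>   /* waitpid() */

static int shared_counter = 0;   /* lives in the creating task's address space */

/* Body of the new task: bump the counter by the amount passed in. */
static int child_task(void *arg)
{
    shared_counter += *(int *)arg;
    return 0;
}

/* Start a second task with clone(). With CLONE_VM it runs in the same
 * address space (thread-like); without it the child gets a copy-on-write
 * copy (process-like). Returns the counter as seen by the parent. */
int run_task(int share_memory, int increment)
{
    const size_t stack_size = 64 * 1024;
    char *stack = malloc(stack_size);
    if (stack == NULL)
        return -1;

    int flags = SIGCHLD | CLONE_FILES;   /* always share the fd ("handle") table */
    if (share_memory)
        flags |= CLONE_VM;

    shared_counter = 0;
    /* On common architectures the stack grows downward, so pass its top. */
    pid_t pid = clone(child_task, stack + stack_size, flags, &increment);
    if (pid == -1) {
        free(stack);
        return -1;
    }
    waitpid(pid, NULL, 0);
    free(stack);
    return shared_counter;
}
```

With CLONE_VM the parent sees the child's update; without it the update lands in the child's private copy and the parent still reads 0.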

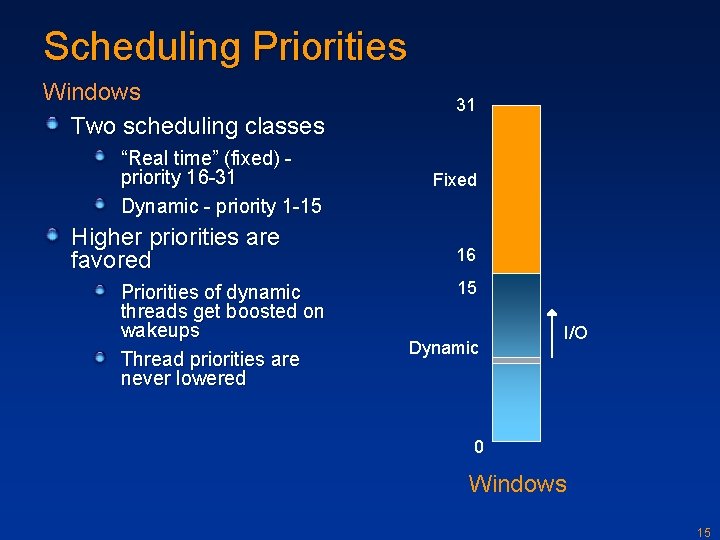

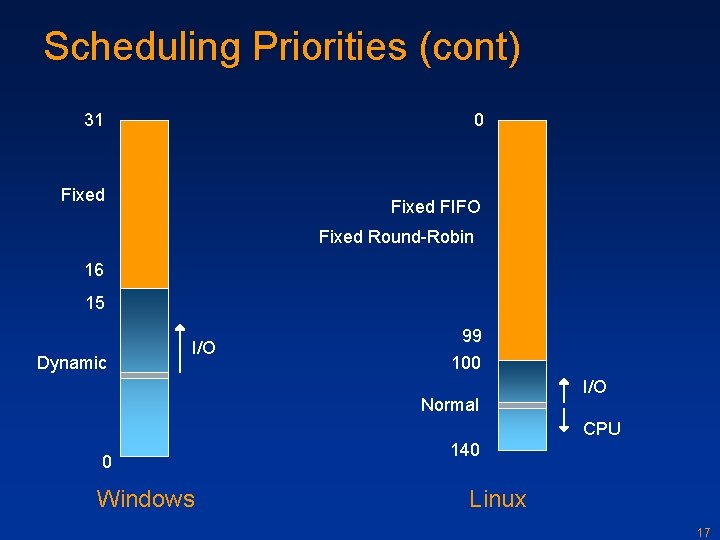

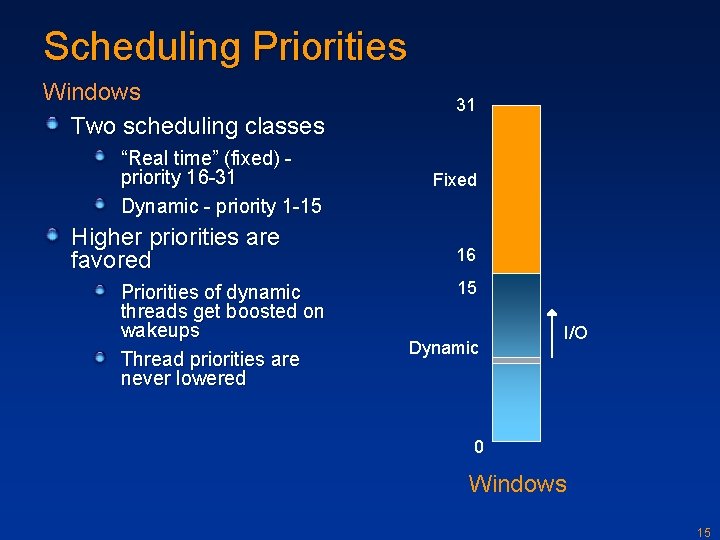

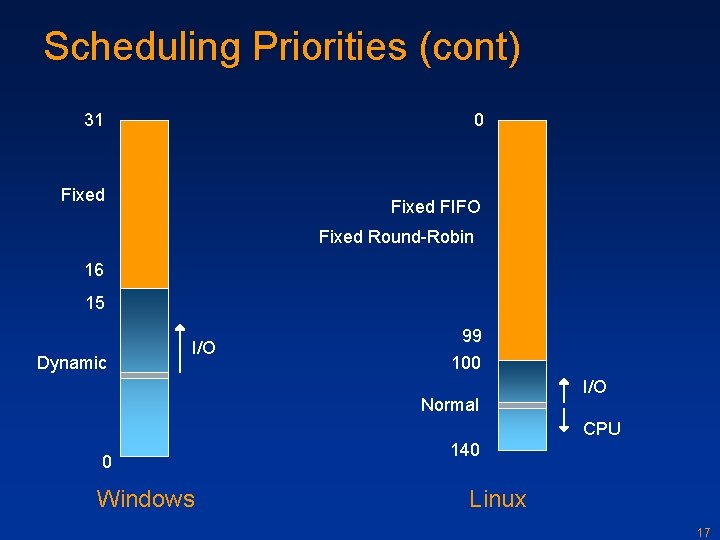


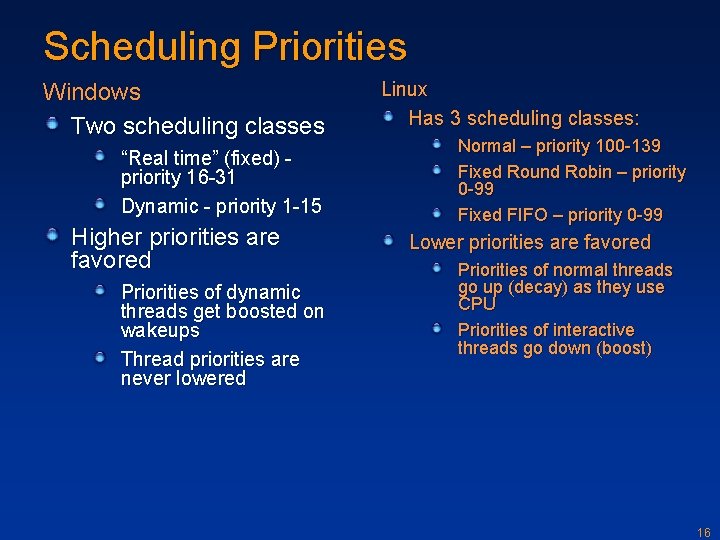

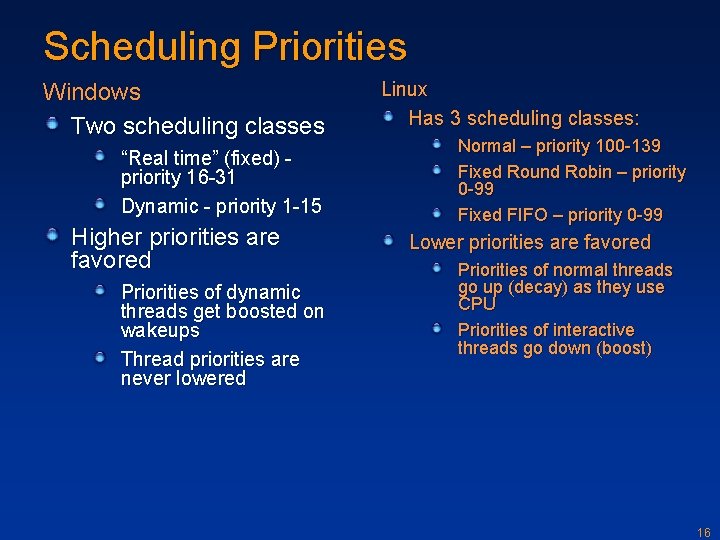

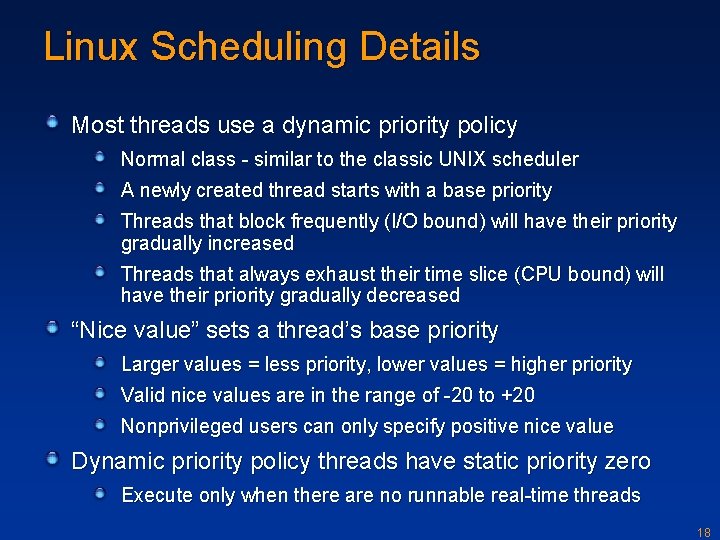

Scheduling Priorities
Windows:
- Two scheduling classes: “real time” (fixed), priority 16-31; dynamic, priority 1-15.
- Higher priorities are favored.
- Priorities of dynamic threads get boosted on wakeups.
- Thread priorities are never lowered below their base priority.

Linux:
- Three scheduling classes: normal, priority 100-139; fixed round robin, priority 0-99; fixed FIFO, priority 0-99.
- Lower priority numbers are favored.
- Priority numbers of normal threads go up (decay) as they use the CPU.
- Priority numbers of interactive threads go down (boost).

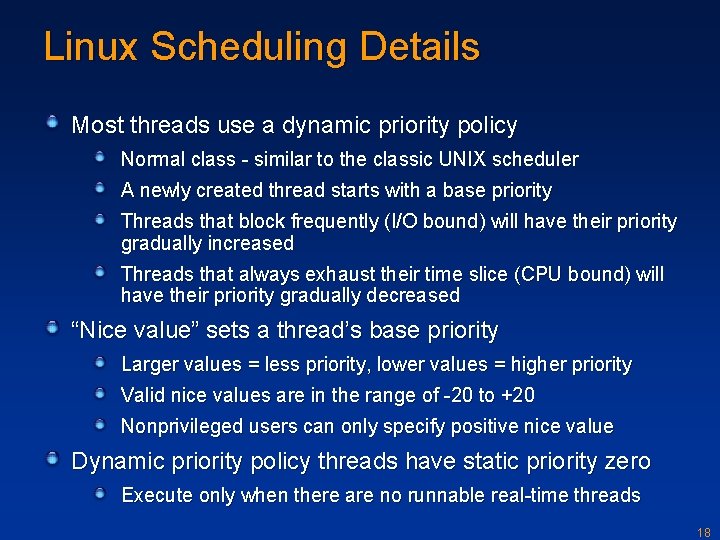

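Scheduling Priorities (cont.)
[Figure: the two priority scales side by side. Windows: 0 to 31, with fixed priorities 16 to 31 and dynamic priorities 1 to 15 that rise with I/O. Linux: 0 to 140, with fixed FIFO and fixed round-robin at 0 to 99 and normal at 100 and above; I/O-bound tasks move toward 100 and CPU-bound tasks toward 140.]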
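The Linux column of the figure is the kernel's internal scale. A small sketch of how user-visible values map onto it, assuming the 2.6 convention of MAX_RT_PRIO = 100 (the constant name mirrors the kernel; the function names are hypothetical):

```c
#include <stdbool.h>

/* Boundary between the real-time range (0..99) and normal tasks (100..139). */
enum { MAX_RT_PRIO = 100 };

/* Normal tasks: nice -20..19 maps onto 100..139 (nice 0 -> 120). */
int nice_to_kernel_prio(int nice)
{
    return MAX_RT_PRIO + 20 + nice;
}

/* Real-time tasks: user-visible rt_priority 1..99 maps onto 98..0,
 * so the most important RT task gets the smallest number. */
int rt_to_kernel_prio(int rt_priority)
{
    return MAX_RT_PRIO - 1 - rt_priority;
}

/* On this scale a smaller number is favored. */
bool runs_before(int prio_a, int prio_b)
{
    return prio_a < prio_b;
}
```

Even the least important real-time task (kernel priority 98) sorts ahead of the most favored normal task (100).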

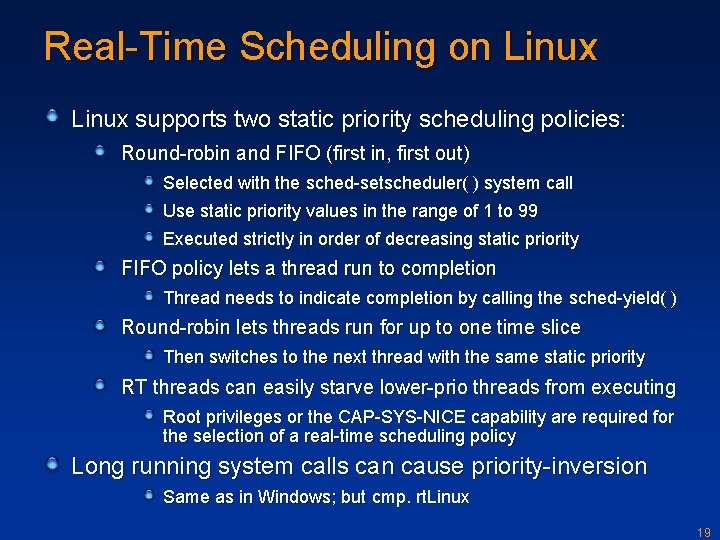

Linux Scheduling Details
Most threads use a dynamic priority policy:
- The normal class is similar to the classic UNIX scheduler.
- A newly created thread starts with a base priority.
- Threads that block frequently (I/O bound) have their priority gradually increased.
- Threads that always exhaust their time slice (CPU bound) have their priority gradually decreased.

The “nice value” sets a thread’s base priority:
- Larger values mean less priority; smaller values mean more priority.
- Valid nice values are in the range -20 to +19.
- Nonprivileged users can only raise the nice value (that is, lower their priority).

Dynamic priority policy threads have static priority zero, so they execute only when there are no runnable real-time threads.
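Nice values are read and set per process with the POSIX getpriority()/setpriority() calls. A minimal sketch, assuming Linux; lower_own_priority is a hypothetical helper that only moves in the direction an unprivileged user is allowed to move:

```c
#include <errno.h>
#include <sys/resource.h>   /* getpriority(), setpriority(), PRIO_PROCESS */

/* Raise the caller's nice value by `delta` (i.e. lower its priority).
 * Returns the new nice value, or -100 on error (-1 is a valid nice value,
 * so errno must be checked after getpriority()). */
int lower_own_priority(int delta)
{
    errno = 0;
    int current = getpriority(PRIO_PROCESS, 0);
    if (current == -1 && errno != 0)
        return -100;

    int target = current + delta;
    if (target > 19)
        target = 19;                 /* largest valid nice value */

    if (setpriority(PRIO_PROCESS, 0, target) == -1)
        return -100;

    errno = 0;
    int updated = getpriority(PRIO_PROCESS, 0);
    return (updated == -1 && errno != 0) ? -100 : updated;
}
```

Calling it with a negative `delta` would normally fail with EPERM for an ordinary user, which is the restriction described above.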

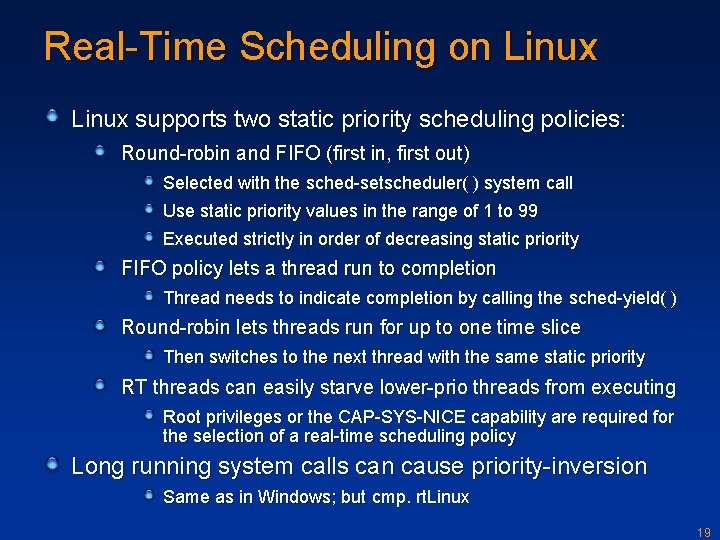

Real-Time Scheduling on Linux
Linux supports two static priority scheduling policies, round-robin and FIFO (first in, first out):
- They are selected with the sched_setscheduler() system call.
- They use static priority values in the range 1 to 99.
- Threads are executed strictly in order of decreasing static priority.
- FIFO lets a thread run until it blocks, gives up the CPU by calling sched_yield(), or is preempted by a higher-priority thread.
- Round-robin lets threads run for up to one time slice, then switches to the next thread with the same static priority.
- RT threads can easily starve lower-priority threads.
- Root privileges or the CAP_SYS_NICE capability are required to select a real-time scheduling policy.
- Long-running system calls can cause priority inversion, the same as in Windows (but compare real-time Linux).
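The static priority range above can be queried at run time. A minimal sketch, assuming Linux and glibc; the helper names are hypothetical, while sched_get_priority_min(), sched_get_priority_max(), and sched_setscheduler() are the standard calls:

```c
#include <errno.h>
#include <sched.h>   /* sched_get_priority_min/max(), sched_setscheduler(), SCHED_* */

/* Report the static priority range the kernel accepts for a policy. */
void rt_priority_range(int policy, int *min, int *max)
{
    *min = sched_get_priority_min(policy);
    *max = sched_get_priority_max(policy);
}

/* Try to switch the calling process to SCHED_FIFO at `priority`.
 * Without root or CAP_SYS_NICE this normally fails with EPERM.
 * Returns 0 on success, otherwise the errno value. */
int try_become_fifo(int priority)
{
    struct sched_param param = { .sched_priority = priority };
    if (sched_setscheduler(0, SCHED_FIFO, &param) == -1)
        return errno;
    return 0;
}
```

On Linux the query reports 1 to 99 for SCHED_FIFO and SCHED_RR, and 0 to 0 for SCHED_OTHER, matching the static priority zero of normal threads.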

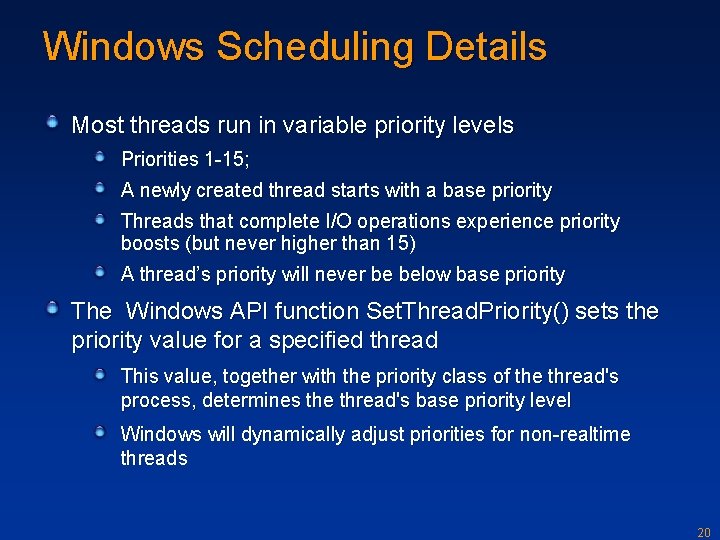

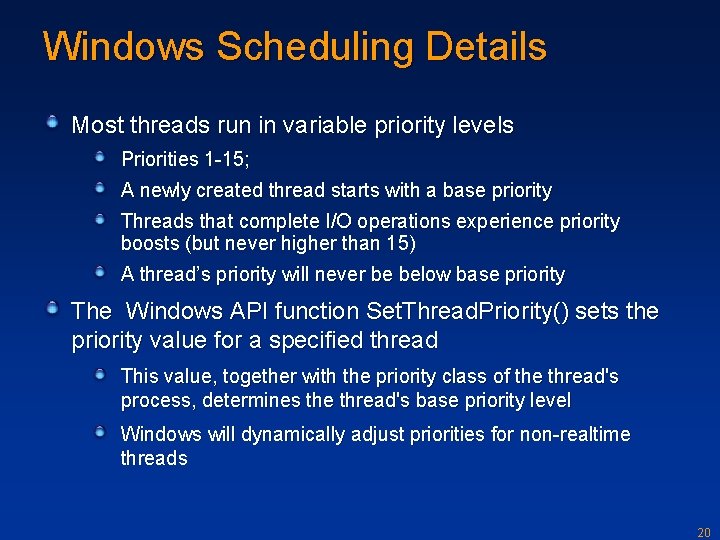

Windows Scheduling Details
Most threads run at variable priority levels (priorities 1-15):
- A newly created thread starts with a base priority.
- Threads that complete I/O operations experience priority boosts (but never higher than 15).
- A thread’s priority never falls below its base priority.

The Windows API function SetThreadPriority() sets the priority value for a specified thread. This value, together with the priority class of the thread's process, determines the thread's base priority level. Windows dynamically adjusts priorities for non-real-time threads.
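How the process priority class and the SetThreadPriority() value combine can be modeled as a table lookup. This is a plain-C model, not Windows code: the enum names are made up, and the base values follow Microsoft's published scheduling-priority table as we understand it (normal class 8, high 13, real-time 24, and so on). The boost helper encodes the two rules on this slide: a boost never goes above 15 and never leaves a thread below its base.

```c
/* Hypothetical stand-ins for the *_PRIORITY_CLASS and THREAD_PRIORITY_* values. */
typedef enum { CLASS_IDLE, CLASS_BELOW_NORMAL, CLASS_NORMAL,
               CLASS_ABOVE_NORMAL, CLASS_HIGH, CLASS_REALTIME } priority_class;

typedef enum { REL_IDLE, REL_LOWEST, REL_BELOW_NORMAL, REL_NORMAL,
               REL_ABOVE_NORMAL, REL_HIGHEST, REL_TIME_CRITICAL } relative_priority;

/* Base priority of each class, indexed by priority_class. */
static const int class_base[] = { 4, 6, 8, 10, 13, 24 };

/* Combine class and relative thread priority into a base priority (1..31). */
int base_priority(priority_class cls, relative_priority rel)
{
    int realtime = (cls == CLASS_REALTIME);
    switch (rel) {
    case REL_IDLE:          return realtime ? 16 : 1;   /* floor of the range */
    case REL_TIME_CRITICAL: return realtime ? 31 : 15;  /* ceiling of the range */
    case REL_LOWEST:        return class_base[cls] - 2;
    case REL_BELOW_NORMAL:  return class_base[cls] - 1;
    case REL_ABOVE_NORMAL:  return class_base[cls] + 1;
    case REL_HIGHEST:       return class_base[cls] + 2;
    default:                return class_base[cls];
    }
}

/* Apply a wakeup boost: real-time threads are never boosted, dynamic
 * threads are capped at 15 and never end up below their base. */
int boosted_priority(int base, int boost)
{
    if (base >= 16)
        return base;
    int p = base + boost;
    if (p < base)
        p = base;
    return p > 15 ? 15 : p;
}
```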

Real-Time Scheduling on Windows
Windows supports a static round-robin scheduling policy for threads with priorities in the real-time range (16-31):
- Threads run for up to one quantum.
- The quantum is reset to a full turn on preemption.
- Priorities never get boosted.
- RT threads can starve important system services, such as CSRSS.EXE.
- SeIncreaseBasePriorityPrivilege is required to elevate a thread’s priority into the real-time range (this privilege is assigned to members of the Administrators group).
- System calls and DPC/APC handling can cause priority inversion.

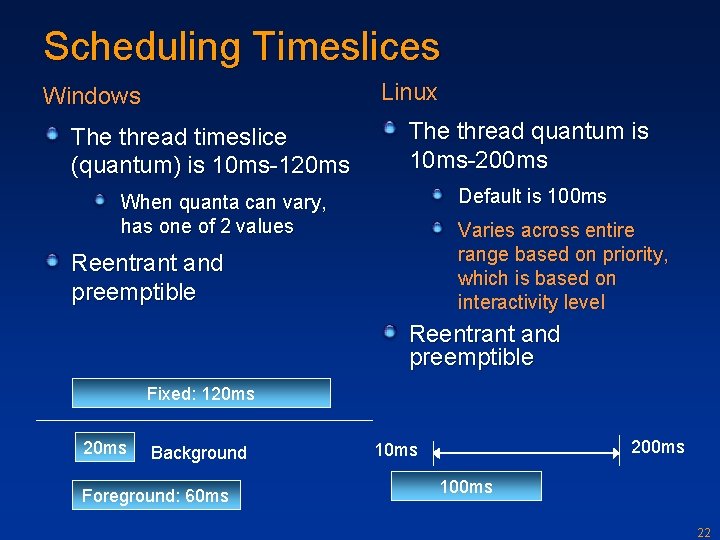

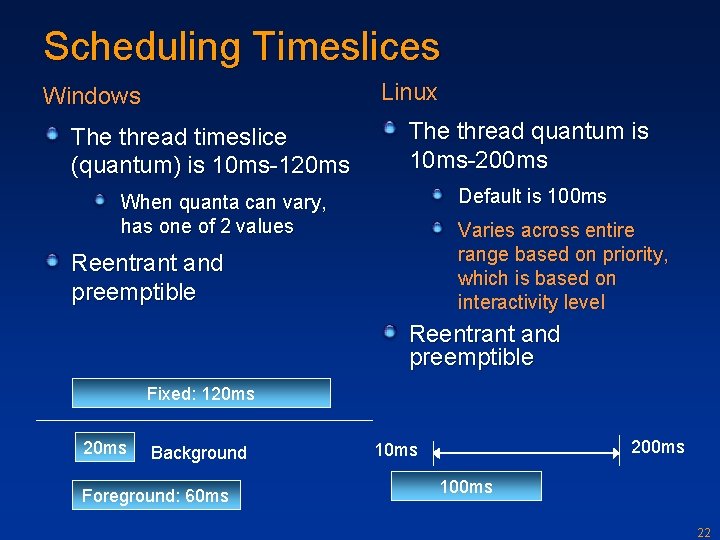

Scheduling Timeslices

| | Windows | Linux |
|---|---|---|
| Quantum range | 10 ms to 120 ms | 10 ms to 200 ms |
| Default | | 100 ms |
| How it varies | When quanta can vary, has one of 2 values (foreground: 60 ms); fixed: 120 ms | Varies across the entire range based on priority, which is based on interactivity level |

Both kernels are reentrant and preemptible.
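The Linux range of 10 ms to 200 ms, with roughly 100 ms for a default-priority task, can be reproduced with a linear formula. A sketch assuming the constants and BASE_TIMESLICE-style formula of early 2.6 O(1) schedulers (later 2.6 releases changed the scaling); the function name is hypothetical and only the static, nice-based priority is modeled:

```c
/* Early 2.6 O(1) scheduler constants, with HZ folded into milliseconds. */
enum { MIN_TIMESLICE_MS = 10, MAX_TIMESLICE_MS = 200,
       MAX_PRIO = 140, MAX_USER_PRIO = 40 };

/* Base timeslice for a normal task from its static priority
 * (100..139, i.e. 120 + nice). A smaller number gets a longer slice. */
int base_timeslice_ms(int static_prio)
{
    return MIN_TIMESLICE_MS +
           (MAX_TIMESLICE_MS - MIN_TIMESLICE_MS) *
           (MAX_PRIO - 1 - static_prio) / (MAX_USER_PRIO - 1);
}
```

nice -20 (static priority 100) gets 200 ms, nice 19 (139) gets 10 ms, and nice 0 (120) gets 102 ms with integer arithmetic.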

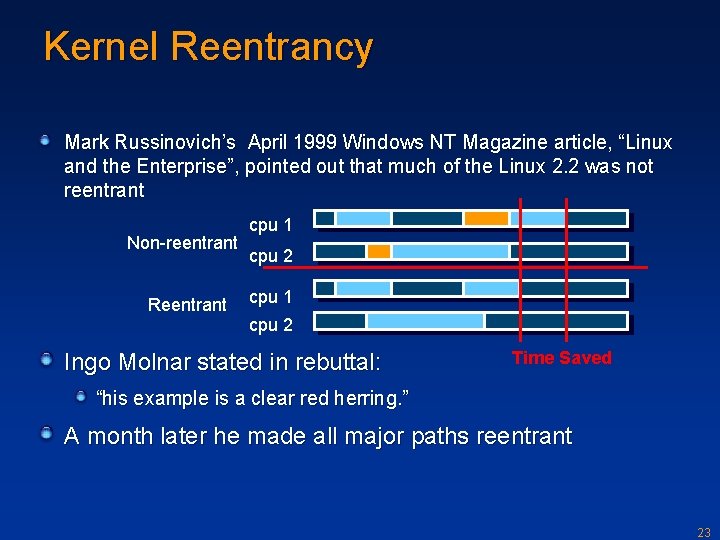

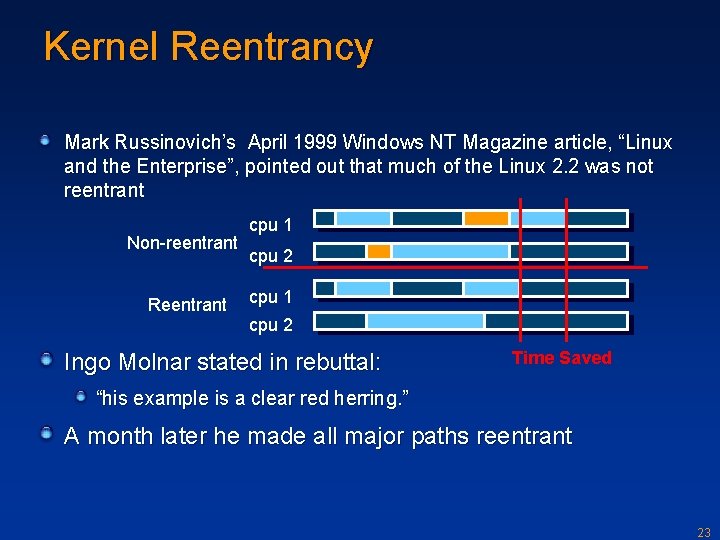

Kernel Reentrancy
Mark Russinovich’s April 1999 Windows NT Magazine article, “Linux and the Enterprise”, pointed out that much of the Linux 2.2 kernel was not reentrant. Ingo Molnar stated in rebuttal: “his example is a clear red herring.” A month later he made all major paths reentrant.
[Figure: kernel execution on cpu 1 and cpu 2 over time, non-reentrant versus reentrant, showing the time saved when both CPUs can run kernel code concurrently.]

Kernel Preemptibility
A preemptible kernel is more responsive to high-priority tasks.
- Through the base release of v2.4, Linux was only cooperatively preemptible: there are well-defined safe places where a thread running in the kernel can be preempted.
- The kernel is preemptible in v2.4 patches and in v2.6.
- Windows NT has always been preemptible.

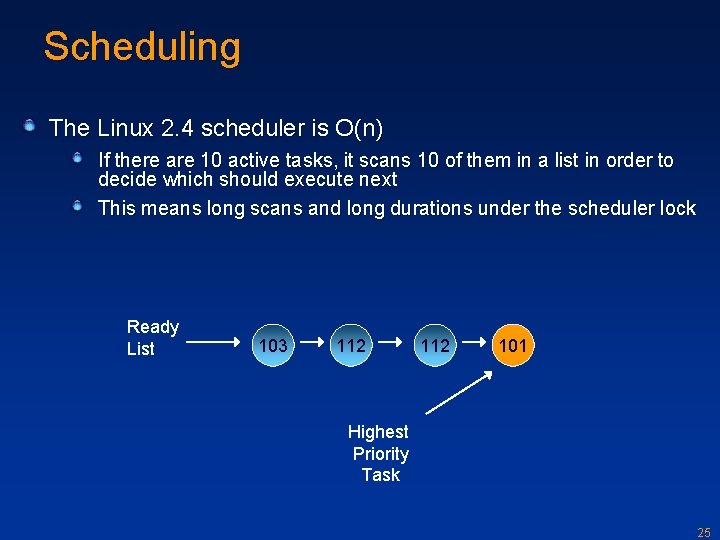

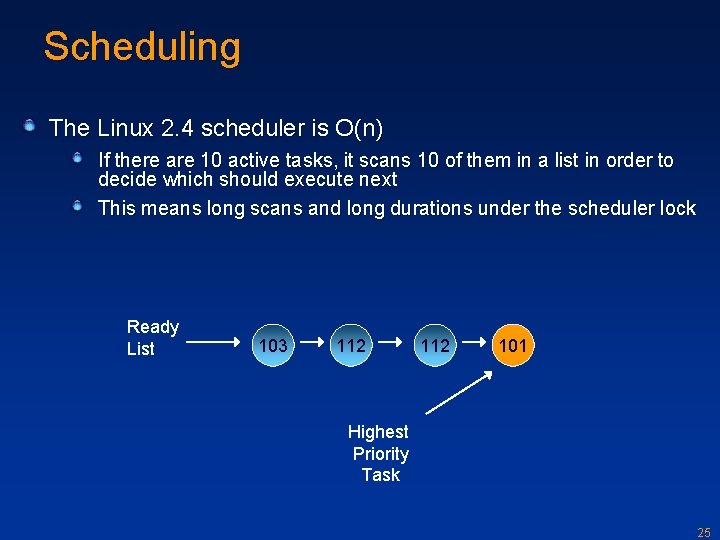

Scheduling
- The Linux 2.4 scheduler is O(n): if there are 10 active tasks, it scans all 10 of them in a list in order to decide which should execute next.
- This means long scans and long durations under the scheduler lock.
[Figure: a single ready list holding tasks with priorities 103, 112, and 101; the whole list is scanned to find the highest-priority task.]
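The O(n) selection amounts to a linear scan of the ready list. A simplified sketch (the real 2.4 code computes a "goodness" value per task rather than comparing a single number; pick_next_linear is a hypothetical name):

```c
#include <stddef.h>

/* Walk every runnable task and remember the best one. Smaller number wins,
 * as in the Linux convention. Returns the index of the chosen task,
 * or -1 if the list is empty. Cost grows with the number of tasks. */
int pick_next_linear(const int *prio, size_t ntasks)
{
    int best = -1;
    for (size_t i = 0; i < ntasks; i++) {
        if (best == -1 || prio[i] < prio[best])
            best = (int)i;
    }
    return best;
}
```

With the ready list from the figure (103, 112, 101), every entry must be examined before 101 is known to be the winner.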

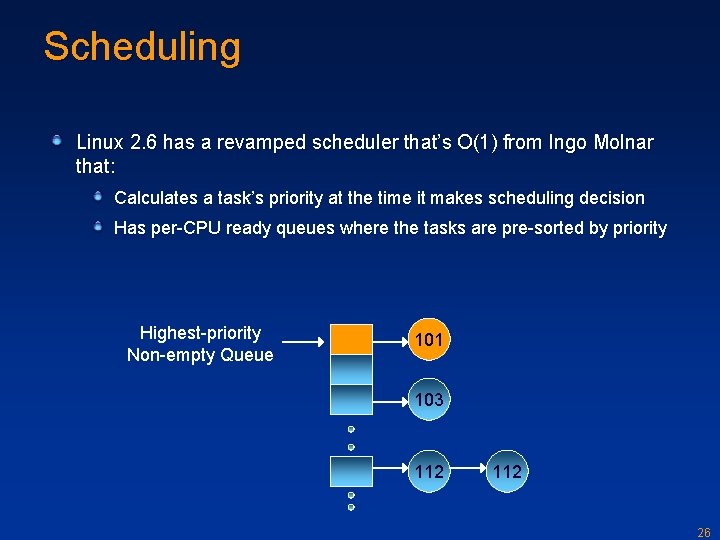

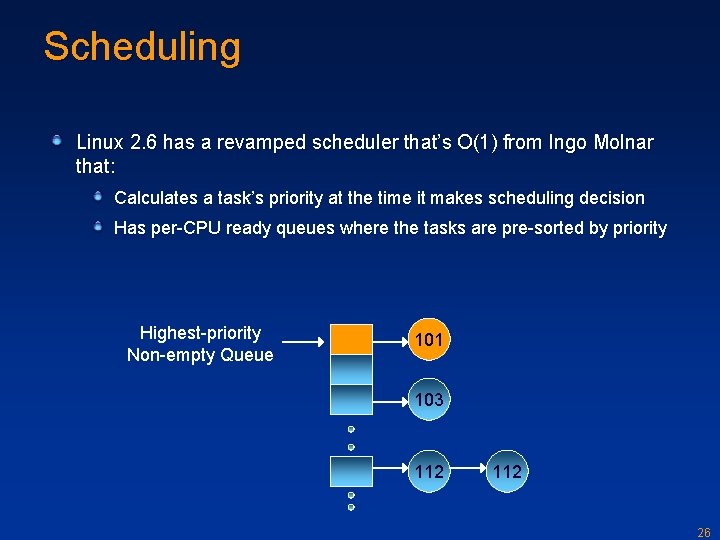

Scheduling
Linux 2.6 has a revamped O(1) scheduler from Ingo Molnar that:
- Calculates a task’s priority at the time it makes a scheduling decision.
- Has per-CPU ready queues where the tasks are pre-sorted by priority.
[Figure: per-priority queues holding tasks 101, 103, and 112; the scheduler picks from the highest-priority non-empty queue.]
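The O(1) idea is a bitmap with one bit per priority level plus one queue per level, so finding the next task costs a fixed number of word scans no matter how many tasks are runnable. A simplified sketch assuming 140 levels; the struct and function names are hypothetical, per-level task lists are reduced to counters, and __builtin_ctzll is a GCC/Clang builtin:

```c
#include <stdint.h>

#define NUM_PRIOS 140
#define WORDS ((NUM_PRIOS + 63) / 64)

/* Bit p set => the queue for priority p is non-empty. A real run queue
 * also keeps a FIFO list of tasks per level; counts stand in for it here. */
struct runqueue {
    uint64_t bitmap[WORDS];
    int count[NUM_PRIOS];
};

void rq_enqueue(struct runqueue *rq, int prio)
{
    rq->count[prio]++;
    rq->bitmap[prio / 64] |= UINT64_C(1) << (prio % 64);
}

void rq_dequeue(struct runqueue *rq, int prio)
{
    if (--rq->count[prio] == 0)
        rq->bitmap[prio / 64] &= ~(UINT64_C(1) << (prio % 64));
}

/* Scan at most WORDS (3) words; the lowest set bit is the
 * highest-priority non-empty queue. Returns -1 if nothing is runnable. */
int rq_highest_prio(const struct runqueue *rq)
{
    for (int w = 0; w < WORDS; w++) {
        if (rq->bitmap[w] != 0)
            return w * 64 + __builtin_ctzll(rq->bitmap[w]);
    }
    return -1;
}
```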

Scheduling
- Windows NT has always had an O(1) scheduler based on pre-sorted thread priority queues.
- Server 2003 introduced per-CPU ready queues.
- Linux load balances queues; Windows does not. This was not seen as an issue in performance testing by Microsoft, and applications where it might be an issue are expected to use affinity.

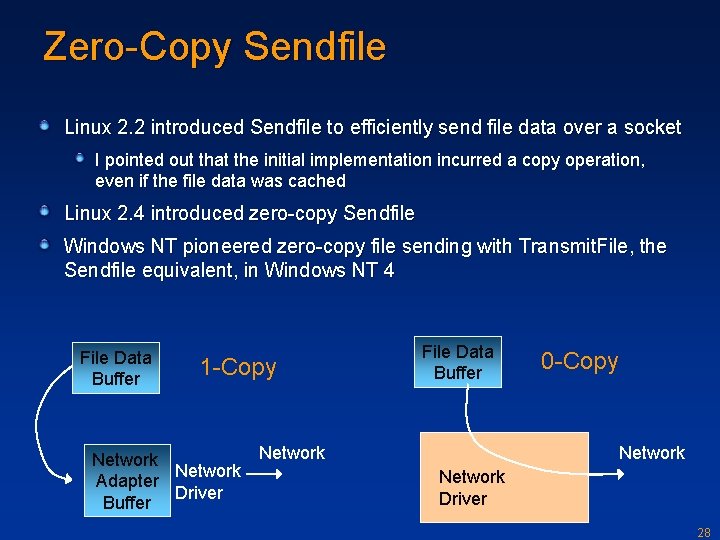

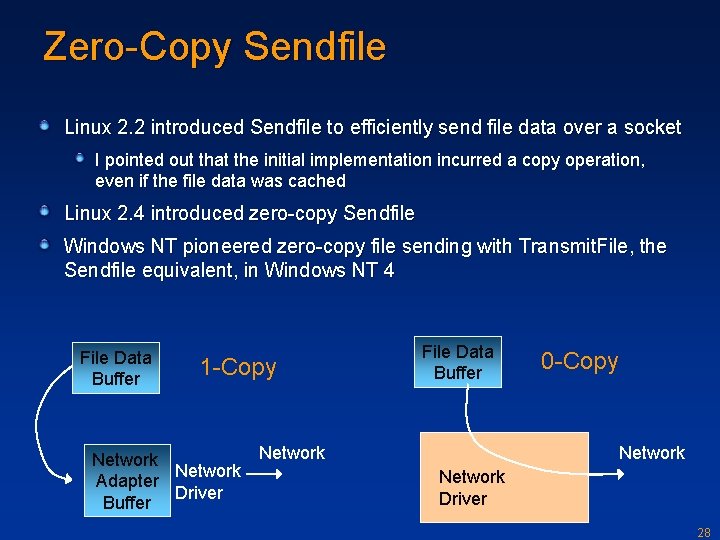

Zero-Copy Sendfile
- Linux 2.2 introduced sendfile to efficiently send file data over a socket.
- I pointed out that the initial implementation incurred a copy operation, even if the file data was cached.
- Linux 2.4 introduced zero-copy sendfile.
- Windows NT pioneered zero-copy file sending with TransmitFile, the sendfile equivalent, in Windows NT 4.
[Figure: 1-copy path, where file data is copied from the file data buffer into a network driver buffer before reaching the network adapter, versus 0-copy path, where the network driver sends directly from the file data buffer.]
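On Linux, sendfile() moves data from a file descriptor to a socket inside the kernel, with no read() into a user buffer. A minimal sketch assuming Linux and glibc; the helper names and the temporary file path are hypothetical, the round trip uses a Unix-domain socket pair instead of a network connection, and whether every copy is avoided depends on the kernel version and the network hardware:

```c
#define _GNU_SOURCE
#include <stdlib.h>         /* mkstemp() */
#include <string.h>         /* strlen(), memcmp() */
#include <sys/sendfile.h>   /* sendfile() */
#include <sys/socket.h>     /* socketpair(), AF_UNIX */
#include <unistd.h>

/* Send `len` bytes of an open file to a socket without user-space buffers. */
ssize_t send_file_to_socket(int sock_fd, int file_fd, size_t len)
{
    off_t offset = 0;   /* sendfile advances this instead of the file position */
    size_t sent = 0;
    while (sent < len) {
        ssize_t n = sendfile(sock_fd, file_fd, &offset, len - sent);
        if (n <= 0)
            return n == 0 ? (ssize_t)sent : -1;
        sent += (size_t)n;
    }
    return (ssize_t)sent;
}

/* Self-check: write a temp file, push it through a socket pair with
 * sendfile, and compare what arrives. Returns 1 when the bytes match.
 * Assumes a short payload (under 256 bytes). */
int sendfile_roundtrip(const char *payload)
{
    char path[] = "/tmp/sendfile-demoXXXXXX";
    int fd = mkstemp(path);
    if (fd == -1)
        return 0;
    unlink(path);       /* file is removed once fd is closed */

    size_t len = strlen(payload);
    int sv[2];
    int ok = write(fd, payload, len) == (ssize_t)len &&
             socketpair(AF_UNIX, SOCK_STREAM, 0, sv) == 0;
    if (ok) {
        char buf[256] = {0};
        ok = send_file_to_socket(sv[0], fd, len) == (ssize_t)len &&
             read(sv[1], buf, sizeof buf - 1) == (ssize_t)len &&
             memcmp(buf, payload, len) == 0;
        close(sv[0]);
        close(sv[1]);
    }
    close(fd);
    return ok;
}
```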

Wake-one Socket Semantics
- The Linux 2.2 kernel had the thundering herd, or overscheduling, problem: in a network server application there are typically several threads waiting for a new connection, and in v2.2 when a new connection came in, all the waiters would race to get it.
- Ingo Molnar’s response: 5/2/99: “here he again forgets to _prove_ that overscheduling happens in Linux.” 5/7/99: “as of 2.3.1 my wake-one implementation and waitqueues rewrite went in”.
- In Linux 2.4 only one thread wakes up to claim the new connection.
- Windows NT has always had wake-one semantics.

Light-Weight Synchronization
- Linux 2.6 introduces futexes: there is only a transition to kernel mode when there is contention.
- Windows has always had CriticalSections, with the same behavior.
- Futexes go further: they allow for prioritization of waits and work interprocess as well.
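The "no kernel transition without contention" property comes from handling the uncontended case with a single atomic instruction and calling the futex system call only to sleep or wake. A sketch of the commonly described three-state futex lock, assuming Linux, C11 atomics, and the raw SYS_futex call (glibc provides no futex() wrapper); the type and function names are hypothetical:

```c
#define _GNU_SOURCE
#include <linux/futex.h>    /* FUTEX_WAIT, FUTEX_WAKE */
#include <stdatomic.h>
#include <stddef.h>         /* NULL */
#include <sys/syscall.h>    /* SYS_futex */
#include <unistd.h>         /* syscall() */

/* Lock word: 0 = unlocked, 1 = locked, 2 = locked with possible waiters. */
typedef struct { atomic_int state; } futex_lock;

static long futex(atomic_int *addr, int op, int val)
{
    return syscall(SYS_futex, (int *)addr, op, val, NULL, NULL, 0);
}

void futex_lock_acquire(futex_lock *l)
{
    int c = 0;
    /* Fast path: 0 -> 1 with one atomic instruction, no system call. */
    if (atomic_compare_exchange_strong(&l->state, &c, 1))
        return;
    /* Slow path: mark the lock contended and sleep in the kernel. */
    if (c != 2)
        c = atomic_exchange(&l->state, 2);
    while (c != 0) {
        futex(&l->state, FUTEX_WAIT, 2);   /* sleeps only while state is still 2 */
        c = atomic_exchange(&l->state, 2);
    }
}

void futex_lock_release(futex_lock *l)
{
    /* Fast path: 1 -> 0 means nobody was waiting, so no system call. */
    if (atomic_fetch_sub(&l->state, 1) != 1) {
        atomic_store(&l->state, 0);
        futex(&l->state, FUTEX_WAKE, 1);   /* wake one waiter */
    }
}
```

In a single-threaded run both calls stay on the fast path and never enter the kernel.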

Memory Management

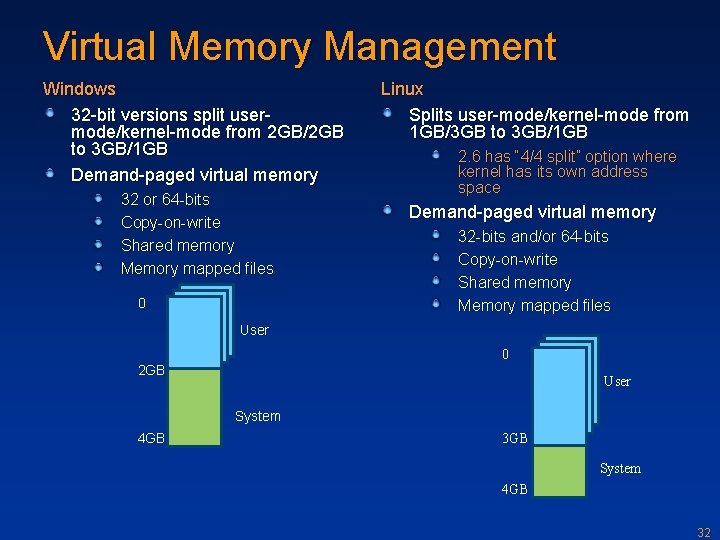

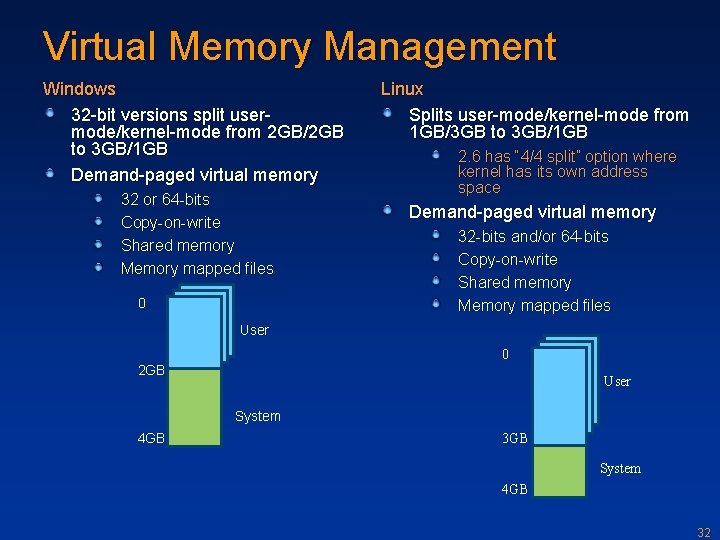

Virtual Memory Management
Windows:
- 32-bit versions split user mode/kernel mode from 2 GB/2 GB to 3 GB/1 GB.
- Demand-paged virtual memory, 32 or 64 bits.
- Copy-on-write, shared memory, and memory-mapped files.

Linux:
- Splits user mode/kernel mode from 1 GB/3 GB to 3 GB/1 GB; 2.6 has a “4/4 split” option where the kernel has its own address space.
- Demand-paged virtual memory, 32 bits and/or 64 bits.
- Copy-on-write, shared memory, and memory-mapped files.
[Figure: 32-bit address space layouts. Windows: user 0 to 2 GB, system 2 GB to 4 GB. Linux: user 0 to 3 GB, system 3 GB to 4 GB.]
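Copy-on-write and memory-mapped files meet in a private file mapping: the first write to a MAP_PRIVATE page gives the process its own copy and leaves the file untouched (a MAP_SHARED mapping would write through). A minimal sketch assuming Linux/POSIX mmap(); the function name and temporary file path are hypothetical:

```c
#define _GNU_SOURCE
#include <stdlib.h>     /* mkstemp() */
#include <sys/mman.h>   /* mmap(), munmap() */
#include <unistd.h>     /* ftruncate(), pread(), pwrite(), sysconf() */

/* Returns 1 if a write through a MAP_PRIVATE mapping changed the mapping
 * but not the file underneath it. */
int private_mapping_is_copy_on_write(void)
{
    char path[] = "/tmp/cow-demoXXXXXX";
    int fd = mkstemp(path);
    if (fd == -1)
        return 0;
    unlink(path);

    long page = sysconf(_SC_PAGESIZE);
    int result = 0;
    if (ftruncate(fd, page) == 0 && pwrite(fd, "A", 1, 0) == 1) {
        char *priv = mmap(NULL, page, PROT_READ | PROT_WRITE, MAP_PRIVATE, fd, 0);
        if (priv != MAP_FAILED) {
            priv[0] = 'B';   /* first write faults in a private copy of the page */
            char on_disk = 0;
            result = pread(fd, &on_disk, 1, 0) == 1 && on_disk == 'A' && priv[0] == 'B';
            munmap(priv, page);
        }
    }
    close(fd);
    return result;
}
```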

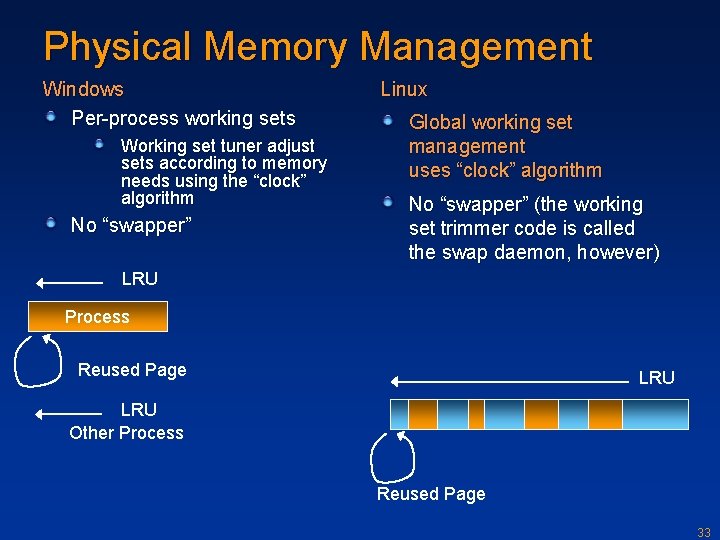

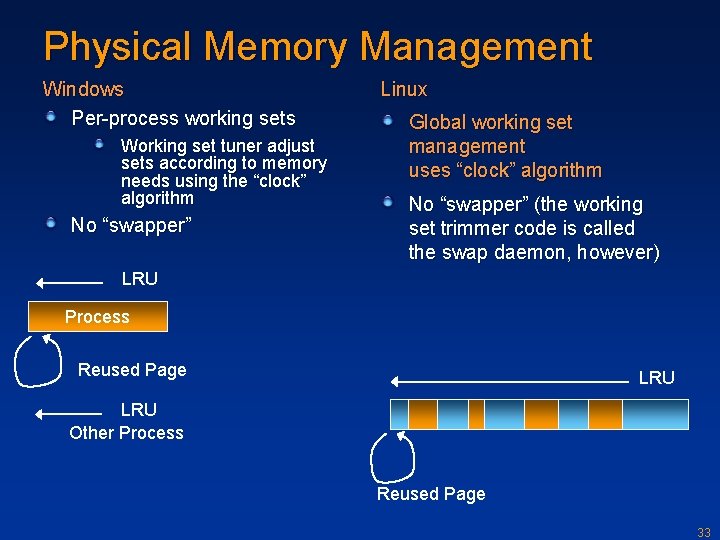

Physical Memory Management
Windows:
- Per-process working sets.
- The working set tuner adjusts sets according to memory needs using the “clock” algorithm.
- No “swapper”.

Linux:
- Global working set management uses the “clock” algorithm.
- No “swapper” (the working set trimmer code is called the swap daemon, however).
[Figure: per-process LRU, where a process reuses its own pages, versus global LRU, where a page may be reused by another process.]
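The clock algorithm approximates LRU with one reference bit per page and a sweeping hand. A self-contained simulation over a single pool of frames (so it resembles Linux's global scheme more than Windows's per-process working sets); the function name is hypothetical:

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_FRAMES 16

/* Count page faults for a reference string under the clock (second-chance)
 * algorithm. On a fault the hand sweeps forward, clearing set reference
 * bits, and evicts the first frame whose bit is already clear.
 * Returns -1 for an invalid frame count. */
int clock_page_faults(const int *refs, size_t nrefs, int nframes)
{
    int page[MAX_FRAMES];
    bool referenced[MAX_FRAMES];
    if (nframes <= 0 || nframes > MAX_FRAMES)
        return -1;
    for (int i = 0; i < nframes; i++) {
        page[i] = -1;                 /* empty frame */
        referenced[i] = false;
    }

    int hand = 0, faults = 0;
    for (size_t r = 0; r < nrefs; r++) {
        bool hit = false;
        for (int i = 0; i < nframes; i++) {
            if (page[i] == refs[r]) {
                referenced[i] = true; /* the MMU would set this on access */
                hit = true;
                break;
            }
        }
        if (hit)
            continue;

        faults++;
        while (referenced[hand]) {    /* give referenced pages a second chance */
            referenced[hand] = false;
            hand = (hand + 1) % nframes;
        }
        page[hand] = refs[r];
        referenced[hand] = true;
        hand = (hand + 1) % nframes;
    }
    return faults;
}
```

For the reference string 1, 2, 3, 4, 1, 2, 5, 1, 2, 3, 4, 5 with three frames the simulation reports 9 faults.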

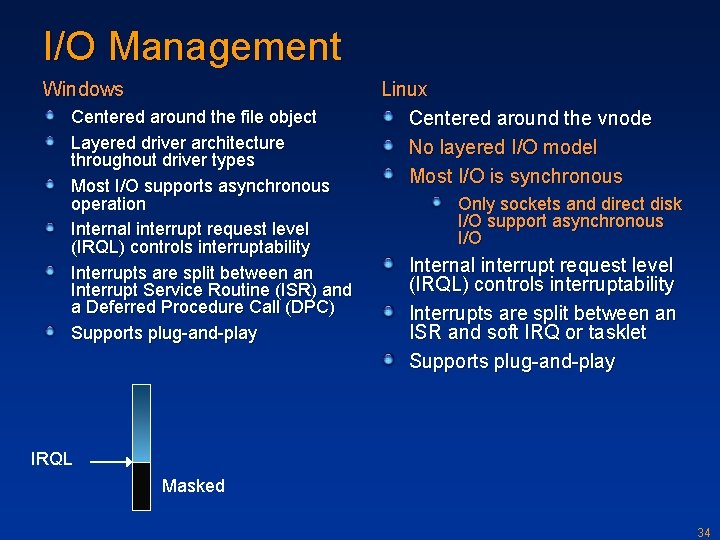

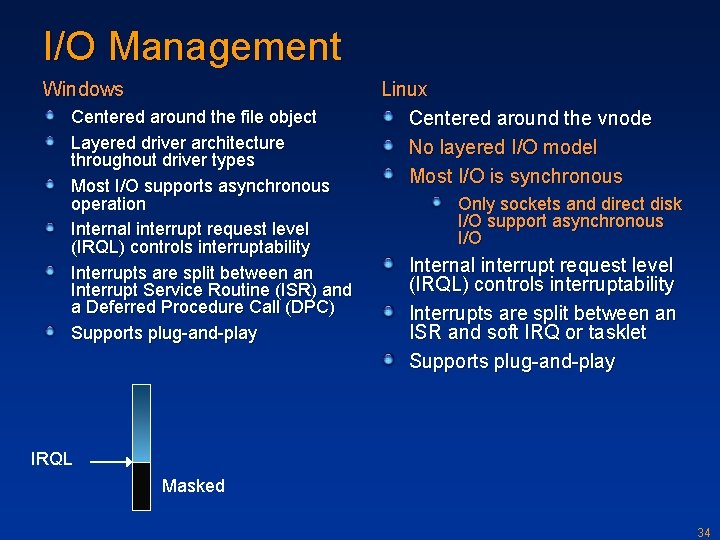

I/O Management
Windows:
- Centered around the file object.
- Layered driver architecture throughout driver types.
- Most I/O supports asynchronous operation.
- Internal interrupt request level (IRQL) controls interruptibility.
- Interrupts are split between an Interrupt Service Routine (ISR) and a Deferred Procedure Call (DPC).
- Supports plug-and-play.

Linux:
- Centered around the vnode.
- No layered I/O model.
- Most I/O is synchronous; only sockets and direct disk I/O support asynchronous I/O.
- Internal interrupt request level (IRQL) controls interruptibility.
- Interrupts are split between an ISR and a soft IRQ or tasklet.
- Supports plug-and-play.
[Figure: IRQL levels, with interrupts at or below the current level masked.]

I/O & File System Management

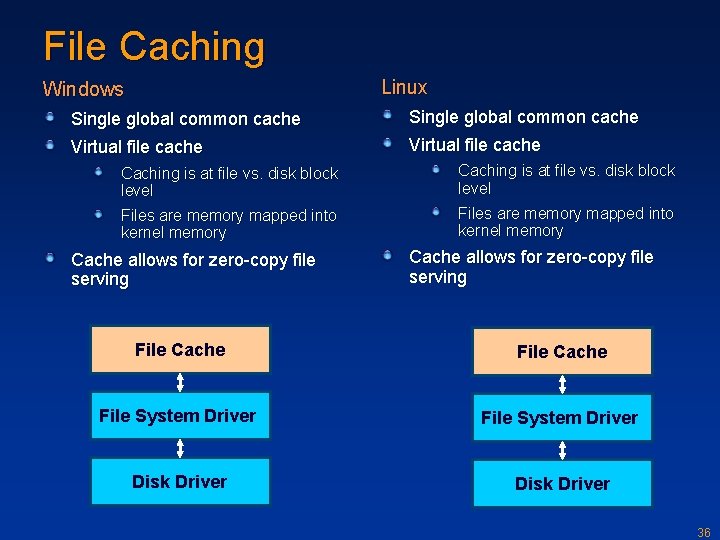

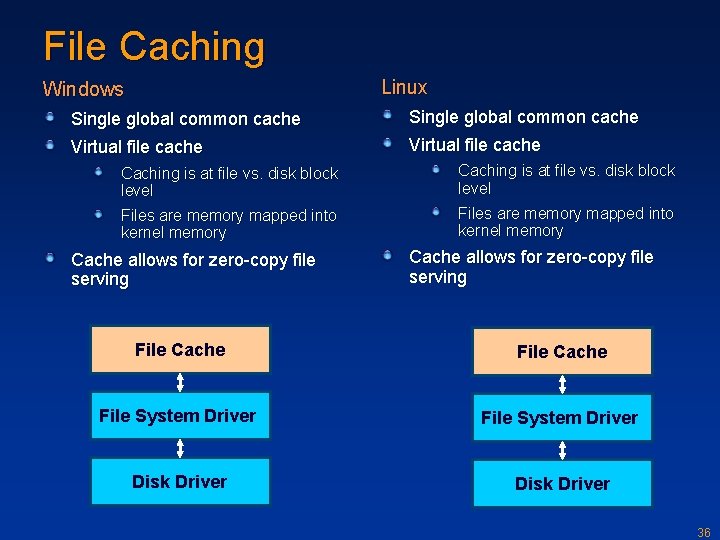

File Caching
- Linux: single global common cache.
- Windows: virtual file cache; caching is at file rather than disk block level; files are memory mapped into kernel memory.
- The cache allows for zero-copy file serving.
[Figure: file cache, file system driver, and disk driver layers.]

Monitoring - Linux procfs
- Linux supports a number of special filesystems. Like special files, they are of a more dynamic nature and tend to have side effects when accessed.
- The prime example is procfs (mounted at /proc), which provides access to and control over various aspects of Linux (e.g., scheduling and memory management).
- /proc/meminfo contains detailed statistics on the current memory usage of Linux; its content changes as memory usage changes over time.
- Services for Unix implements procfs on Windows.
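Because procfs exposes kernel statistics as ordinary text files, reading /proc/meminfo needs nothing beyond standard I/O. A minimal sketch assuming the usual "MemTotal:  <number> kB" line format; the function name is hypothetical:

```c
#include <stdio.h>

/* Return MemTotal from /proc/meminfo in kB, or -1 if it cannot be read. */
long meminfo_total_kb(void)
{
    FILE *f = fopen("/proc/meminfo", "r");
    if (f == NULL)
        return -1;
    char line[256];
    long kb = -1;
    while (fgets(line, sizeof line, f) != NULL) {
        /* Only the MemTotal line matches the literal prefix. */
        if (sscanf(line, "MemTotal: %ld kB", &kb) == 1)
            break;
    }
    fclose(f);
    return kb;
}
```

Calling it twice a few seconds apart on a busy system shows other fields (such as MemFree) changing while MemTotal stays fixed.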

I/O Processing
- Linux 2.2 had the notion of bottom halves (BHs) for low-priority interrupt processing: there was a fixed number of BHs, and only one BH of a given type could be active at a time on an SMP system.
- Linux 2.4 introduced tasklets, which are non-preemptible procedures called with interrupts enabled.
- Tasklets are the equivalent of Windows Deferred Procedure Calls (DPCs).

Asynchronous I/O
- Linux 2.2 only supported asynchronous I/O on socket connect operations and ttys.
- Linux 2.6 adds asynchronous I/O for direct disk access. The AIO model includes efficient management of asynchronous I/O and is useful for database servers managing their database on a dedicated raw partition; database servers that manage a file-based database still suffer from synchronous I/O.
- Linux 2.6 also added the alternate epoll model.
- Windows I/O is inherently asynchronous: Windows has had completion ports, a more advanced form of AIO, since NT 3.5.
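epoll, unlike the direct-disk AIO interface, reports readiness rather than completion: the caller learns that a descriptor can be read without blocking and then performs the read itself. A minimal sketch assuming Linux 2.6.27 or later for epoll_create1(); a pipe stands in for a network socket and the function name is hypothetical:

```c
#include <sys/epoll.h>   /* epoll_create1(), epoll_ctl(), epoll_wait() */
#include <unistd.h>      /* pipe(), write(), close() */

/* Register a pipe's read end with epoll, make it readable, and ask which
 * descriptors are ready. Returns the number of ready descriptors
 * (1 expected), or -1 on error or an unexpected event. */
int epoll_pipe_demo(void)
{
    int fds[2];
    if (pipe(fds) == -1)
        return -1;

    int ep = epoll_create1(0);
    int ready = -1;
    if (ep != -1) {
        struct epoll_event ev = { .events = EPOLLIN, .data.fd = fds[0] };
        if (epoll_ctl(ep, EPOLL_CTL_ADD, fds[0], &ev) == 0 &&
            write(fds[1], "x", 1) == 1) {
            struct epoll_event out[4];
            ready = epoll_wait(ep, out, 4, 1000);   /* wait at most 1 s */
            if (ready == 1 && (out[0].data.fd != fds[0] || !(out[0].events & EPOLLIN)))
                ready = -1;
        }
        close(ep);
    }
    close(fds[0]);
    close(fds[1]);
    return ready;
}
```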

Security

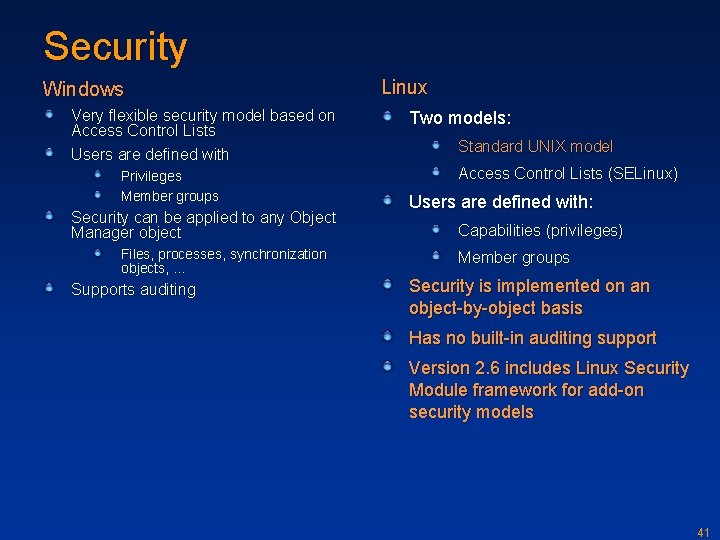

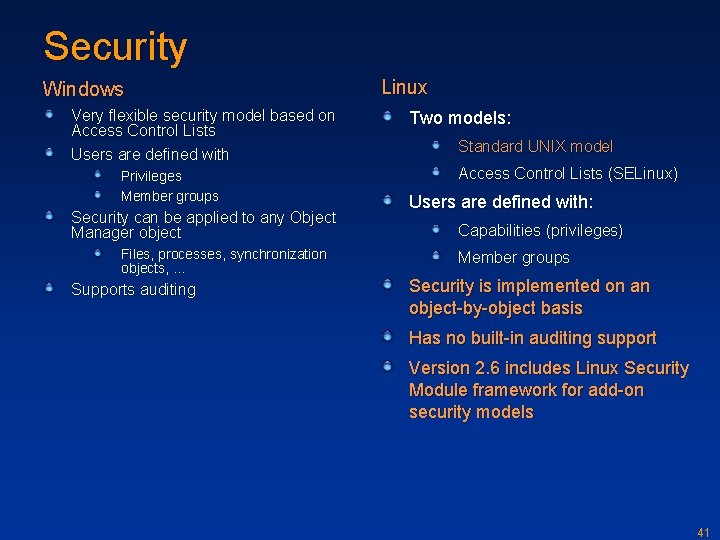

Security
Windows:
- Very flexible security model based on Access Control Lists.
- Users are defined with privileges and member groups.
- Security can be applied to any Object Manager object (files, processes, synchronization objects, …).
- Supports auditing.

Linux:
- Two models: the standard UNIX model and Access Control Lists (SELinux).
- Users are defined with capabilities (privileges) and member groups.
- Security is implemented on an object-by-object basis.
- Has no built-in auditing support.
- Version 2.6 includes the Linux Security Module framework for add-on security models.
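The standard UNIX model selects exactly one permission class (owner, group, or other) and checks only that class's bits. A simplified sketch that ignores root, supplementary groups, ACLs, SELinux, and capabilities; the function name is hypothetical:

```c
#include <stdbool.h>
#include <sys/types.h>   /* mode_t, uid_t, gid_t */

/* Classic owner/group/other check. `want` is a mask of 4 (read),
 * 2 (write), and 1 (execute). Only the first matching class is consulted. */
bool unix_may_access(mode_t mode, uid_t file_uid, gid_t file_gid,
                     uid_t uid, gid_t gid, int want)
{
    int granted;
    if (uid == file_uid)
        granted = (mode >> 6) & 7;   /* owner bits */
    else if (gid == file_gid)
        granted = (mode >> 3) & 7;   /* group bits */
    else
        granted = mode & 7;          /* other bits */
    return (granted & want) == want;
}
```

One consequence: an owner of a file with mode 0077 is denied access even though everyone else is allowed, something an ACL-based model expresses differently.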

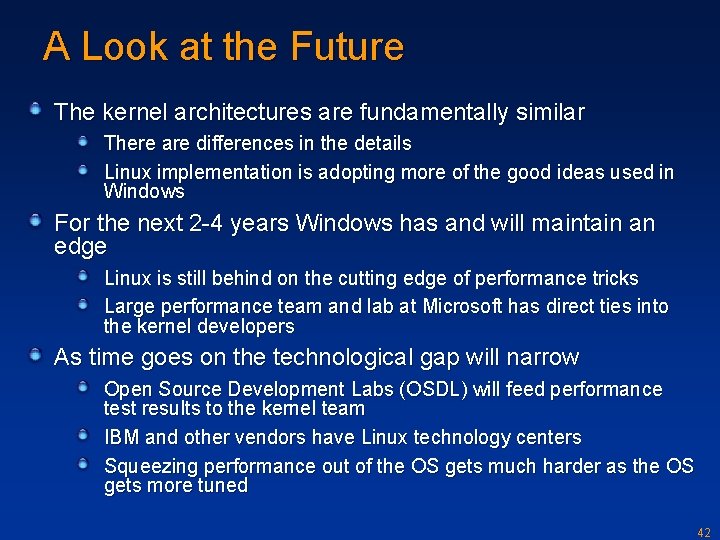

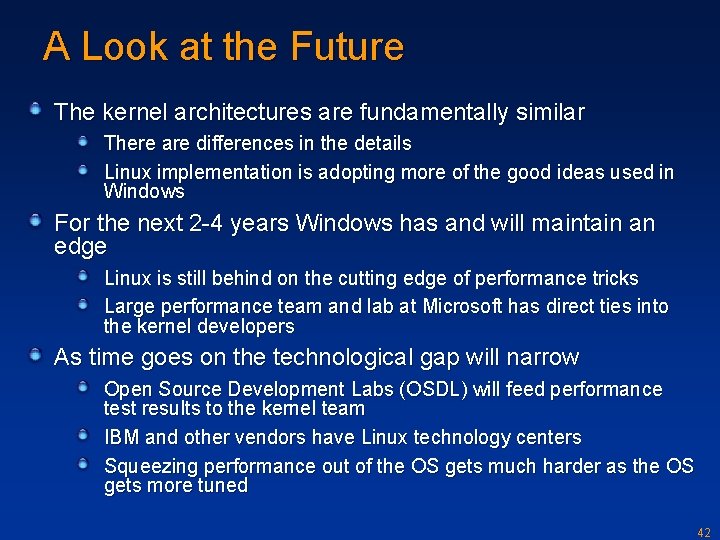

A Look at the Future
- The kernel architectures are fundamentally similar; there are differences in the details.
- The Linux implementation is adopting more of the good ideas used in Windows.
- For the next 2 to 4 years Windows has and will maintain an edge: Linux is still behind on the cutting edge of performance tricks, and a large performance team and lab at Microsoft has direct ties into the kernel developers.
- As time goes on the technological gap will narrow: Open Source Development Labs (OSDL) will feed performance test results to the kernel team, and IBM and other vendors have Linux technology centers.
- Squeezing performance out of the OS gets much harder as the OS gets more tuned.

Linux Technology Unknowns
- Linux kernel forking: Red Hat has already done it; Red Hat Enterprise Server v3.0 is Linux 2.4 with some Linux 2.6 features.
- Backward compatibility philosophy: Linus Torvalds makes decisions on kernel APIs and architecture based on technical reasons, not business reasons.

Further Reading
- Transaction Processing Performance Council: www.tpc.org
- SPEC: www.spec.org
- NT vs Linux benchmarks: www.kegel.com/nt-linuxbenchmarks.html
- The C10K problem: http://www.kegel.com/c10k.html
- Linus Torvalds's home: http://www.osdl.org/
- Linux Kernel Archives: http://www.kernel.org/
- Linux history: http://www.firstmonday.dk/issues/issue5_11/moon/
- Veritest Netbench result: http://www.veritest.com/clients/reports/microsoft/ms_netbench.pdf
- Mark Russinovich’s 1999 article, “Linux and the Enterprise”: http://www.winntmag.com/Articles/Index.cfm?ArticleID=5048
- The Open Group's Single UNIX Specification: http://www.unix.org/version3/