CSC 660: Advanced OS
Distributed Filesystems
CSC 660: Advanced Operating Systems

Topics
1. Filesystem History
2. Distributed Filesystems
3. AFS
4. GoogleFS
5. Common filesystem issues

Filesystem History
• FS (1974)
• Fast Filesystem (FFS) / UFS (1984)
• Log-structured Filesystem (1991)
• ext2 (1993)
• ext3 (2001)
• WAFL (1994)
• XFS (1994)
• ReiserFS (1998)
• ZFS (2004)

FS
• First UNIX filesystem (1974).
• Simple:
  – Layout: superblock, then inodes, then data blocks.
  – Unused blocks stored in a free linked list, not a bitmap.
  – 512-byte blocks, no fragments.
  – Short filenames.
• Slow: 2% of raw disk bandwidth.
  – Disk seeks consume most file access time due to the small block size and high fragmentation.
  – Later doubled performance by using 1 KB blocks.
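To make the free list vs. bitmap contrast concrete, here is a minimal sketch in Python, assuming a toy in-memory disk of numbered blocks; the class names `FreeList` and `Bitmap` are hypothetical. The free list hands out whatever block sits at its head, so after some churn consecutive allocations scatter, while a bitmap can be searched for a free block near a preferred location.

```python
class FreeList:
    """Original-FS style: free blocks kept as a linked list (modeled as a stack)."""

    def __init__(self, nblocks: int) -> None:
        # The head of the list is the end of the Python list.
        self.free = list(range(nblocks - 1, -1, -1))

    def alloc(self) -> int:
        return self.free.pop()          # take whatever block is at the head

    def release(self, block: int) -> None:
        self.free.append(block)         # a freed block becomes the new head


class Bitmap:
    """FFS style: one flag per block, searched for a free block near a goal."""

    def __init__(self, nblocks: int) -> None:
        self.used = [False] * nblocks

    def alloc(self, goal: int = 0) -> int:
        n = len(self.used)
        for i in range(n):
            b = (goal + i) % n          # scan forward from the preferred block
            if not self.used[b]:
                self.used[b] = True
                return b
        raise OSError("disk full")

    def release(self, block: int) -> None:
        self.used[block] = False
```

After allocating blocks 0-3 and freeing 1 and 3, the free list's next three allocations are 3, 1, 4 (scattered), while the bitmap can place the next blocks at 4 and 5, right after the file's previous block.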

FFS
• BSD (1984); basis for System V UFS.
• More complex:
  – Cylinder groups: inodes, bitmaps, data blocks.
  – Larger blocks (4 KB) with 1 KB fragments.
  – Block layout based on physical disk parameters.
  – Long filenames, symlinks, file locks, quotas.
  – 10% of space reserved by default.
• Faster: 14-47% of raw disk bandwidth.
  – Creating a new file requires 5 seeks: 2 file inode, 1 file data, 1 dir data, 1 dir inode.
  – User/kernel memory copies take 40% of disk operation time.
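A small worked example of why 1 KB fragments matter, as a Python sketch. It assumes a simplified model in which every block except the last is full and the tail is stored in just enough fragments; real FFS fragment placement rules and indirect blocks are ignored.

```python
BLOCK = 4096   # FFS block size from the slide (4 KB)
FRAG = 1024    # FFS fragment size from the slide (1 KB)


def ceil_div(a: int, b: int) -> int:
    return -(-a // b)


def ffs_allocation(size: int) -> tuple[int, int, int]:
    """Return (full_blocks, tail_fragments, bytes_allocated) for one file."""
    full_blocks, tail = divmod(size, BLOCK)
    frags = ceil_div(tail, FRAG)          # tail rounded up to whole fragments
    return full_blocks, frags, full_blocks * BLOCK + frags * FRAG


def blocks_only_allocation(size: int) -> int:
    """Bytes allocated if only whole 4 KB blocks were available."""
    return ceil_div(size, BLOCK) * BLOCK
```

For a 9,500-byte file, `ffs_allocation(9500)` gives 2 blocks plus 2 fragments (10,240 bytes), versus 12,288 bytes with whole blocks only: large blocks for throughput, fragments to limit wasted space.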

Log-structured Filesystem (LFS)
• All data stored as sequential log entries.
  – Divided into large log segments.
  – Cleaner defragments: copies live data out of fragmented segments, producing clean segments.
• Fast recovery: checkpoint + roll forward.
• Performance: 70% of raw disk bandwidth for writes.
  – Large sequential writes vs. multiple small writes/seeks.
  – Inode map tracks the dynamic locations of inodes.
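The log + inode map idea can be sketched in a few lines of Python. This is a toy under stated assumptions: the hypothetical `LogFS` stores whole file contents as single log entries and has no segments, segment summaries, or checkpoints. Writes always append, the inode map records where the latest copy lives, and the cleaner keeps only live entries.

```python
class LogFS:
    """Toy log-structured store: every write appends; an inode map finds the latest copy."""

    def __init__(self) -> None:
        self.log: list[tuple[int, bytes]] = []   # sequential log of (inode number, data)
        self.imap: dict[int, int] = {}           # inode number -> log index of latest version

    def write(self, ino: int, data: bytes) -> None:
        self.log.append((ino, data))             # never overwrite in place
        self.imap[ino] = len(self.log) - 1

    def read(self, ino: int) -> bytes:
        return self.log[self.imap[ino]][1]

    def clean(self) -> None:
        """Cleaner: copy only live entries into a fresh log and rebuild the map."""
        live = sorted(self.imap.items(), key=lambda kv: kv[1])
        self.log = [self.log[idx] for _, idx in live]
        self.imap = {ino: i for i, (ino, _) in enumerate(live)}
```

Overwriting inode 1 leaves its old entry in the log as dead space until `clean()` reclaims it, which is the cost LFS pays for turning every write into a sequential append.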

ext2 and ext3
• FFS + performance features.
  – Variable block size (1 KB-4 KB), no fragments.
  – Partitions disk into block groups.
  – Data block preallocation + read-ahead.
  – Fast symlinks (stored in the inode).
  – 5% of space reserved by default.
• Very fast.
• ext3 adds journaling capabilities.
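Two of the ext2 features above reduce to simple arithmetic, sketched here in Python. Inode numbers start at 1, and an inode's block group is `(ino - 1) // inodes_per_group` (the real value comes from the superblock field `s_inodes_per_group`; 2048 below is only an example). A fast symlink is stored in the inode's 60-byte `i_block` area (15 32-bit pointers) when the target plus its NUL terminator fits.

```python
I_BLOCK_BYTES = 15 * 4   # ext2 i_block: 15 32-bit block pointers = 60 bytes


def inode_location(ino: int, inodes_per_group: int) -> tuple[int, int]:
    """Map an ext2 inode number (numbering starts at 1) to (block group, index in group)."""
    return divmod(ino - 1, inodes_per_group)


def is_fast_symlink(target: str) -> bool:
    """True if the target plus its NUL terminator fits in the inode's i_block area."""
    return len(target.encode()) + 1 <= I_BLOCK_BYTES
```

With 2048 inodes per group, inode 5000 lands in group 2 at index 903, so the filesystem reads that group's inode table directly instead of scanning the disk.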

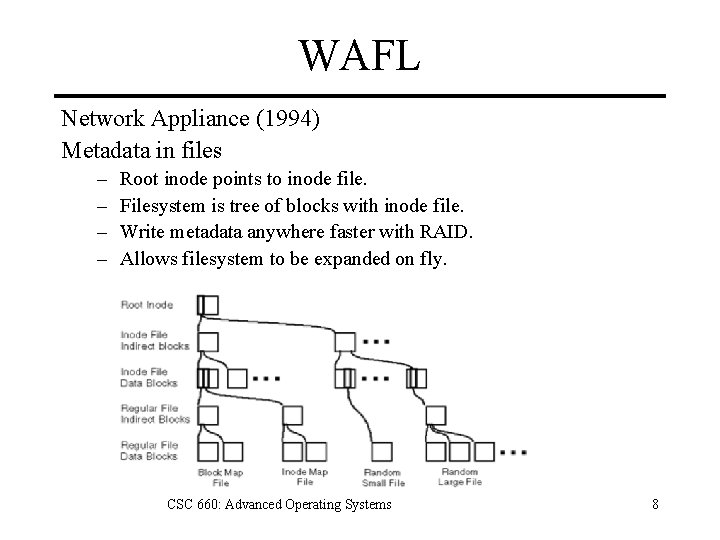

WAFL
• Network Appliance (1994).
• Metadata in files:
  – Root inode points to the inode file.
  – Filesystem is a tree of blocks with the inode file at its root.
  – Metadata can be written anywhere on disk, which is faster with RAID.
  – Allows the filesystem to be expanded on the fly.

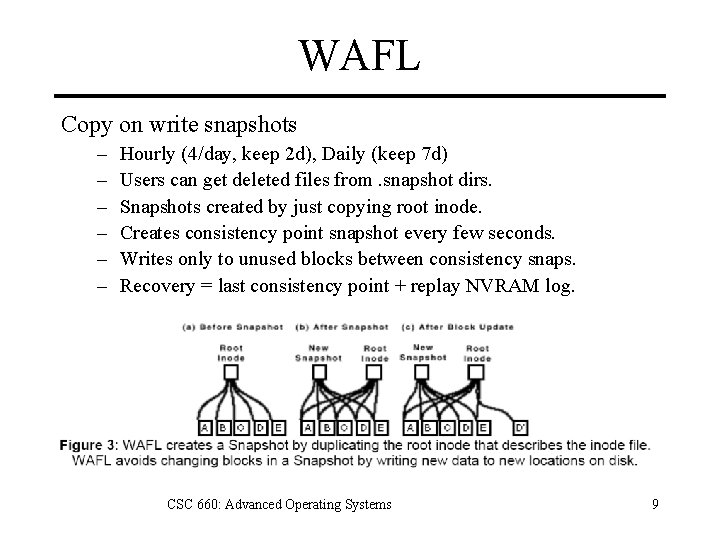

WAFL
• Copy-on-write snapshots:
  – Hourly (4/day, keep 2 days), daily (keep 7 days).
  – Users can get deleted files from snapshot dirs.
  – Snapshots created by just copying the root inode.
• Creates a consistency point snapshot every few seconds.
  – Writes only to unused blocks between consistency points.
  – Recovery = last consistency point + replay of NVRAM log.
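Why is copying the root enough for a snapshot? A minimal Python sketch of a copy-on-write block tree, assuming hypothetical immutable `Block`, `Inode`, and `Root` types: an update builds a new block, a new inode, and a new root, and shares everything else. WAFL's block-map bookkeeping (which blocks are in use by which snapshot) is not modeled; Python's garbage collector plays that role here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    data: bytes


@dataclass(frozen=True)
class Inode:
    blocks: tuple[Block, ...]      # block pointers of one file


@dataclass(frozen=True)
class Root:
    inodes: tuple[Inode, ...]      # the "inode file": one inode per file number


def write_block(root: Root, ino: int, bno: int, data: bytes) -> Root:
    """Copy-on-write update: new block, new inode, new root; unchanged blocks are shared."""
    old = root.inodes[ino]
    new_blocks = old.blocks[:bno] + (Block(data),) + old.blocks[bno + 1:]
    new_inode = Inode(new_blocks)
    return Root(root.inodes[:ino] + (new_inode,) + root.inodes[ino + 1:])
```

Taking a snapshot is just keeping a reference to the current `Root`; later writes never touch the blocks it points to, so the snapshot stays intact while untouched blocks are shared with the live tree.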

XFS
• SGI (1994).
• Complex journaling filesystem:
  – Uses B+ trees to track free space, index dirs, and locate file blocks and inodes.
  – Dynamic inode allocation, metadata journaling, volume manager, multithreaded, allocate on flush.
  – 64-bit filesystem (filesystems up to 2^63 bytes).
  – Fast: 90-95% of raw disk bandwidth.
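Locating file blocks means mapping a file offset to a disk extent. The Python sketch below swaps in a sorted list searched with `bisect` as a stand-in for the B+ tree XFS actually uses; the `Extent` layout is a hypothetical simplification of an extent record.

```python
import bisect
from typing import NamedTuple, Optional


class Extent(NamedTuple):
    file_off: int    # first file block covered
    disk_blk: int    # first disk block
    length: int      # number of contiguous blocks


def lookup(extents: list[Extent], file_blk: int) -> Optional[int]:
    """Map a file block to a disk block; extents must be sorted by file_off.

    Binary search over a sorted list stands in for a B+ tree search.
    """
    starts = [e.file_off for e in extents]
    i = bisect.bisect_right(starts, file_blk) - 1
    if i < 0:
        return None
    e = extents[i]
    if file_blk < e.file_off + e.length:
        return e.disk_blk + (file_blk - e.file_off)
    return None      # a hole: no extent covers this block
```

One extent record describes a whole run of contiguous blocks, so large, contiguously allocated files need very few lookup entries.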

ReiserFS
• Multiple different versions (v1-v4).
• Complex tree-based filesystem:
  – Uses B+ trees (v3) or dancing trees (v4).
  – Journaling, allocate on flush, COW, tail packing.
  – High performance with small files and large directories.
  – Second to ext2 in performance (v3).

ZFS
• Sun (2004).
• Copy-on-write + volume management:
  – Variable block size + compression.
  – Built-in volume manager (striping, pooling).
  – Self-healing with 256-bit checksums + mirroring.
  – COW transactional model (live data never overwritten).
  – Fast snapshots (just don't release old blocks).
  – 128-bit filesystem.
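Self-healing combines the checksum with the mirror: a read returns any copy that verifies and rewrites copies that do not. A minimal Python sketch, assuming SHA-256 as the checksum (one of the algorithms ZFS can be configured to use). In ZFS the expected checksum is stored in the parent block pointer; here it is simply passed in, and `self_healing_read` is a hypothetical name.

```python
import hashlib


def checksum(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()      # 256-bit checksum


def self_healing_read(mirrors: list[bytearray], expected: bytes) -> bytes:
    """Return the first copy whose checksum matches; repair the bad copies from it."""
    good = next((bytes(m) for m in mirrors if checksum(bytes(m)) == expected), None)
    if good is None:
        raise OSError("all copies corrupt")
    for m in mirrors:
        if checksum(bytes(m)) != expected:
            m[:] = good                        # overwrite the damaged mirror in place
    return good
```

Because the checksum is kept separately from the data it covers, a silently corrupted copy is detected instead of being returned to the application.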

Distributed Filesystems
• Use a filesystem to transparently share data between computers.
• Accessing files via a distributed filesystem:
  1. Client mounts network filesystem.
  2. Client makes a request for file access.
  3. Client kernel sends network request to server.
  4. Server performs file ops on physical disk.
  5. Server sends response across network to client.

Naming
• Mapping between logical and physical objects.
  – UNIX filenames are mapped to inodes.
  – Network filenames map to <hostname, vnode> pairs.
• Location-independent names
  – Filename is a dynamic one-to-many mapping.
  – Files can migrate to other servers without renaming.
  – Files can be replicated across multiple servers.

Naming Implementation
• Location-dependent (non-transparent):
  filename -> <system, disk, inode>
• Location-independent (transparent):
  filename -> file_identifier -> <system, disk, inode>
  – Identifiers must be unique.
  – The identifier-to-location mapping must be updated to point to the new physical location when a file is moved.
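The two-level location-independent mapping can be sketched in Python with two dictionaries; the names `names`, `locations`, `resolve`, and `migrate`, and the server and disk names below, are hypothetical. Moving a file touches only the identifier-to-location table, so the filename users see never changes.

```python
from typing import NamedTuple


class Location(NamedTuple):
    system: str
    disk: str
    inode: int


names: dict[str, int] = {}             # filename -> file identifier
locations: dict[int, Location] = {}    # file identifier -> physical location


def resolve(name: str) -> Location:
    return locations[names[name]]


def migrate(fid: int, new_loc: Location) -> None:
    """Moving a file changes only the identifier's mapping, never its name."""
    locations[fid] = new_loc
```

A location-dependent scheme would store `Location` directly under the filename, so migrating the file would force a rename.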

Caching
• Problem: every file access uses the network.
• Solution: store remote data on the local system.
  – Cache can be memory or disk based.
  – Read-ahead can reduce accesses further.

Cache Update Policies
• Write-through
  – Write data to server and cache at once.
  – Return to program when the server write completes.
  – High reliability, poor performance.
• Delayed write
  – Write data to cache, then return to program.
  – Modifications written through to server later.
  – High performance, poor reliability.
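The two update policies differ only in when the server sees the data. A Python sketch with a hypothetical in-memory `Server`: write-through updates the server before returning, while delayed write marks the entry dirty and pushes it in a later `flush()`. Anything still dirty when a client crashes is lost, which is the reliability cost.

```python
class Server:
    def __init__(self) -> None:
        self.store: dict[str, bytes] = {}


class WriteThroughCache:
    def __init__(self, server: Server) -> None:
        self.server = server
        self.cache: dict[str, bytes] = {}

    def write(self, name: str, data: bytes) -> None:
        self.cache[name] = data
        self.server.store[name] = data      # return only after the server has it


class DelayedWriteCache:
    def __init__(self, server: Server) -> None:
        self.server = server
        self.cache: dict[str, bytes] = {}
        self.dirty: set[str] = set()

    def write(self, name: str, data: bytes) -> None:
        self.cache[name] = data             # return immediately
        self.dirty.add(name)

    def flush(self) -> None:
        """Later: push modifications to the server (lost if the client crashes first)."""
        for name in self.dirty:
            self.server.store[name] = self.cache[name]
        self.dirty.clear()
```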

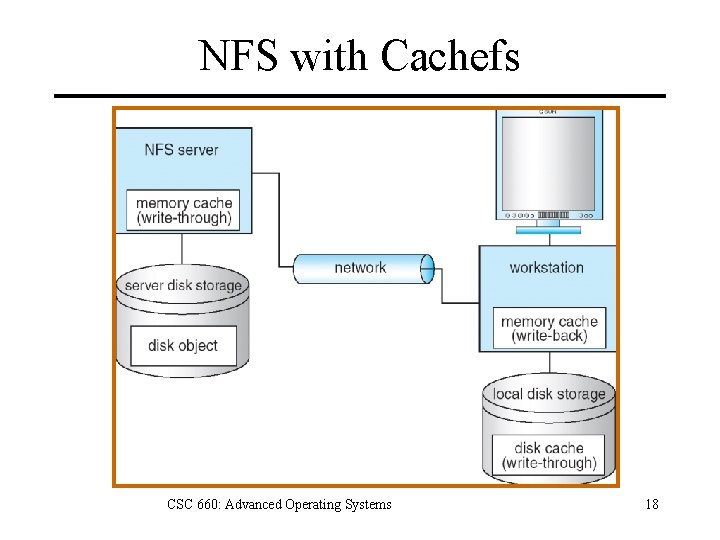

NFS with Cachefs

Cache Consistency
• Problem: keeping cached copies consistent with the server.
  – Consistency overhead can decrease performance if too many writes are done on the same set of files.
• Client-initiated consistency
  – Client asks server whether its cached data is still consistent.
  – When: on every file access, or periodically.
• Server-initiated consistency
  – Server detects conflicts and invalidates client caches.
  – Server has to maintain state about what is cached where.
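Client-initiated consistency checked on every access can be sketched in Python, assuming the server keeps a version number per file (a hypothetical stand-in for modification times). The client sends a cheap version query and refetches the data only when its cached version is stale; `current_version` is an illustrative name, not a real protocol call.

```python
class Server:
    def __init__(self) -> None:
        self.files: dict[str, tuple[int, bytes]] = {}   # name -> (version, data)

    def write(self, name: str, data: bytes) -> None:
        version = self.files.get(name, (0, b""))[0] + 1
        self.files[name] = (version, data)

    def current_version(self, name: str) -> int:
        return self.files[name][0]                      # cheap "is my copy current?" query

    def read(self, name: str) -> tuple[int, bytes]:
        return self.files[name]


class Client:
    def __init__(self, server: Server) -> None:
        self.server = server
        self.cache: dict[str, tuple[int, bytes]] = {}
        self.fetches = 0                                # full data transfers performed

    def read(self, name: str) -> bytes:
        cached = self.cache.get(name)
        # Client-initiated check on every access: compare versions with the server.
        if cached is None or cached[0] != self.server.current_version(name):
            self.cache[name] = self.server.read(name)
            self.fetches += 1
        return self.cache[name][1]
```

The version query still costs a round trip per access; checking only periodically trades that traffic for a window in which stale data may be read.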

Stateful File Access
• Stateful process:
  1. Client sends open request to server.
  2. Server opens file, inserts it into its open file table.
  3. Server returns a file identifier to client.
  4. Client uses the identifier to read/write the file.
  5. Client closes file.
  6. Server removes file from open file table.
• Features
  – High performance, because fewer disk accesses.
  – Problem: clients that crash without closing files.
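Steps 1 to 6 above, as a Python sketch of a hypothetical `StatefulServer`: the server owns the open file table, including each open file's current position, so reads need only the identifier. If a client crashes before `close()`, its entry stays in the table indefinitely.

```python
import itertools


class StatefulServer:
    def __init__(self, files: dict[str, bytes]) -> None:
        self.files = files
        self.open_table: dict[int, list] = {}    # file id -> [name, offset]
        self.next_id = itertools.count(1)

    def open(self, name: str) -> int:
        fid = next(self.next_id)
        self.open_table[fid] = [name, 0]         # server remembers the position
        return fid

    def read(self, fid: int, count: int) -> bytes:
        entry = self.open_table[fid]
        data = self.files[entry[0]][entry[1]:entry[1] + count]
        entry[1] += len(data)
        return data

    def close(self, fid: int) -> None:
        del self.open_table[fid]                 # never runs if the client crashes
```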

Stateless File Service
• Every request is self-contained.
  – Must specify filename and position in every request.
  – Server doesn't know which files are open.
• Server crashes have minimal effect.
  – By contrast, stateful servers must poll clients to recover state.
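The stateless alternative, sketched in Python with hypothetical names: each request carries the filename and offset, and the client, not the server, tracks the position. Because a request means the same thing every time it is sent, a client can simply retry it after a server crash and get the same bytes back.

```python
def stateless_read(files: dict[str, bytes], name: str, offset: int, count: int) -> bytes:
    """Every request names the file and position; the server keeps no per-client state."""
    return files[name][offset:offset + count]


class Client:
    """The client remembers the file position and sends it with each request."""

    def __init__(self, files: dict[str, bytes], name: str) -> None:
        self.files = files
        self.name = name
        self.offset = 0

    def read(self, count: int) -> bytes:
        data = stateless_read(self.files, self.name, self.offset, count)
        self.offset += len(data)
        return data
```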

NFS
• Sun: v2 (1984), v3 (1992): TCP + 64-bit.
• Implementation
  – System calls via Sun RPC calls.
  – Stateless: client obtains filesystem ID on mount, then uses filesystem ID (like a filehandle) in subsequent requests.
  – UNIX-centric (UIDs, GIDs, permissions).
  – Server authenticates by client IP address.
    • Client UIDs mapped to server with root squashing.
    • Danger: client root user can su to any desired UID.
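A simplified Python sketch of root squashing. It assumes 65534 as the anonymous UID/GID (the default `anonuid`/`anongid` in Linux `exports(5)`); `map_credentials` is a hypothetical name, and real servers also squash GID 0 independently.

```python
NOBODY = 65534   # assumed anonymous UID/GID (Linux exports(5) default)


def map_credentials(uid: int, gid: int, root_squash: bool = True) -> tuple[int, int]:
    """Server-side mapping of the UID/GID a client sends with each request."""
    if root_squash and uid == 0:
        return NOBODY, NOBODY      # client root loses its privileges on the server
    return uid, gid                # every other UID is trusted as-is
```

The second branch is the danger the slide mentions: a client root user who runs `su` to UID 1000 is passed through unchanged and gets that user's access.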

CIFS
• Microsoft (1998).
• Derived from the 1980s IBM SMB network filesystem.
• Implementation
  – Originally ran over NetBIOS, not TCP/IP.
  – \\svr\share\path: Universal Naming Convention (UNC).
  – Auth: NTLM (insecure), NTLMv2, Kerberos.
  – MS Windows-centric (filenames, ACLs, EOLs).

AFS
• CMU (1983).
  – Sold by Transarc/IBM, then free as OpenAFS.
• Features
  – Uniform /afs namespace.
  – Location-independent file sharing.
  – Whole-file caching on client.
  – Secure authentication via Kerberos.

AFS
• Global namespace divided into cells.
  – Cells separate authorization domains.
  – Cells included in pathname: /afs/CELL/
  – Ex: cmu.edu, intel.com
• Cells contain multiple servers.
  – Location independence managed via volume database.
  – Files are located on volumes.
  – Volumes can migrate between servers.
  – Volumes can be replicated in read-only fashion.
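How a path like /afs/CELL/... reaches a server can be sketched in Python. The two dictionaries are hypothetical stand-ins: `MOUNTS` for AFS mount points (really special directory entries) and `VLDB` for the volume location database; the volume and server names are invented for illustration.

```python
# Hypothetical volume location database: (cell, volume) -> servers holding it.
VLDB: dict[tuple[str, str], list[str]] = {
    ("cmu.edu", "user.alice"): ["afs1.cmu.edu"],
}
# Hypothetical mount points: path prefix inside a cell -> volume name.
MOUNTS: dict[str, dict[str, str]] = {
    "cmu.edu": {"/usr/alice": "user.alice"},
}


def locate(path: str) -> tuple[str, str, list[str]]:
    """Resolve /afs/CELL/... to (cell, volume, servers) via the volume database."""
    parts = path.split("/")
    if len(parts) < 3 or parts[:2] != ["", "afs"]:
        raise ValueError("not an AFS path")
    cell, rest = parts[2], "/" + "/".join(parts[3:])
    matches = [p for p in MOUNTS[cell] if rest == p or rest.startswith(p + "/")]
    if not matches:
        raise FileNotFoundError(path)
    volume = MOUNTS[cell][max(matches, key=len)]   # longest mount point wins
    return cell, volume, VLDB[(cell, volume)]
```

If the volume migrates, only its VLDB entry changes (for example, to `["afs2.cmu.edu"]`); the pathname /afs/cmu.edu/usr/alice/notes.txt stays the same.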

NFSv4
• IETF (2000), based on 1998 Sun draft.
• New features
  – Only one protocol.
  – Global namespace.
  – Security (ACLs, Kerberos, encryption).
  – Cross-platform + internationalized.
  – Better caching via delegation of files to clients.
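Delegation lets the server hand a client responsibility for a file until someone else needs it. This toy Python model covers read delegations only and uses hypothetical names: several clients may hold one, and a conflicting open for write makes the server send recall callbacks to the holders. Real NFSv4 adds write delegations, the recall/return exchange, and lease timeouts, none of which are modeled here.

```python
class DelegatingServer:
    """Toy model of NFSv4-style read delegations."""

    def __init__(self) -> None:
        self.delegations: dict[str, set[str]] = {}   # file -> clients holding a read delegation
        self.recalls: list[tuple[str, str]] = []     # (client, file) recall callbacks sent

    def open_read(self, client: str, name: str) -> None:
        # Granted: the client may now cache the file without checking back with the server.
        self.delegations.setdefault(name, set()).add(client)

    def open_write(self, client: str, name: str) -> None:
        # A conflicting open forces the server to recall other clients' delegations.
        for other in sorted(self.delegations.pop(name, set()) - {client}):
            self.recalls.append((other, name))
```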

GoogleFS Assumptions
1. High rate of commodity hardware failures.
2. Small number of huge files (multi-GB+).
3. Reads: large streaming + small random.
4. Most modifications are appends.
5. High bandwidth >> low latency.
6. Applications and filesystem co-designed.

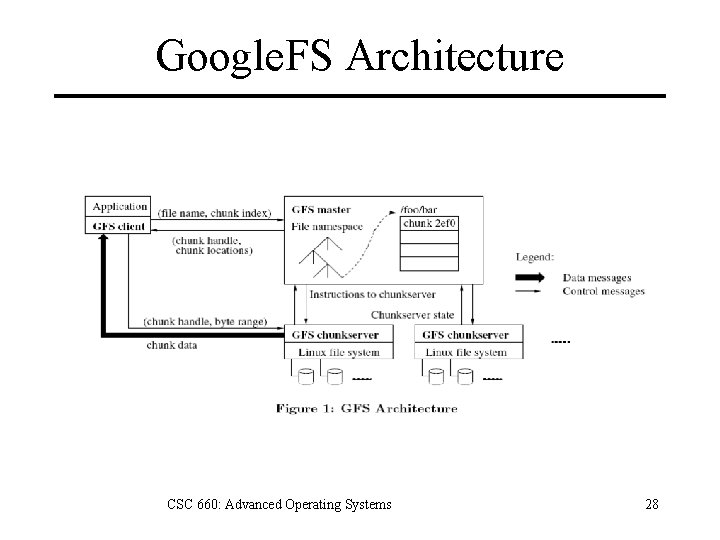

GoogleFS Architecture

GoogleFS Architecture
• Master server
  – Metadata: namespace, ACLs, chunk mapping.
  – Chunk lease management, garbage collection, chunk migration.
• Chunk servers
  – Serve chunks (64 MB + checksum) of files.
  – Chunks replicated on multiple (3) servers.
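Fixed 64 MB chunks let the client do part of the lookup itself: it turns a byte offset into a chunk index and asks the master only for (filename, chunk index). The offset within the chunk goes directly to a chunk server. A Python sketch, with `chunk_request` and the file name as hypothetical examples:

```python
CHUNK_SIZE = 64 * 2**20   # 64 MB chunks, from the slide


def chunk_request(filename: str, offset: int) -> tuple[str, int, int]:
    """Client-side translation of (file, byte offset) into (file, chunk index, offset in chunk)."""
    index, within = divmod(offset, CHUNK_SIZE)
    return filename, index, within
```

Byte 200,000,000 of a file falls in chunk 2 at offset 65,782,272. Because chunks are large, one master lookup covers many megabytes of reads, which keeps the single master off the data path.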

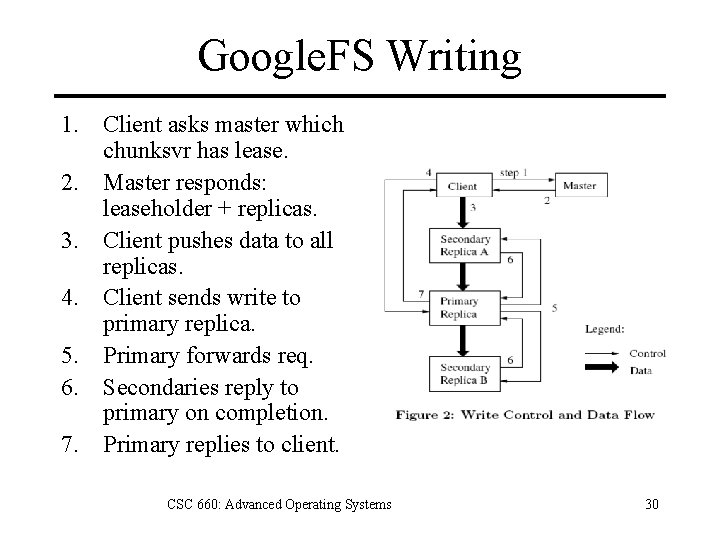

GoogleFS Writing
1. Client asks master which chunkserver holds the lease.
2. Master responds: leaseholder (primary) + replicas.
3. Client pushes data to all replicas.
4. Client sends write request to primary replica.
5. Primary forwards request to secondary replicas.
6. Secondaries reply to primary on completion.
7. Primary replies to client.
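Steps 3 to 7 can be sketched in Python, assuming steps 1-2 (finding the primary) are already done; `Replica` and `write` are hypothetical names. The sketch separates the data flow (push into each replica's buffer) from the control flow (the primary applies first, then the secondaries apply in the same order). The real system pipelines the data push along a chain of chunk servers rather than sending it to each one directly.

```python
class Replica:
    def __init__(self) -> None:
        self.buffer: dict[int, bytes] = {}   # pushed but not yet applied, keyed by data id
        self.chunk: list[bytes] = []         # mutations applied in serial order

    def push(self, data_id: int, data: bytes) -> None:
        self.buffer[data_id] = data

    def apply(self, data_id: int) -> bool:
        self.chunk.append(self.buffer.pop(data_id))
        return True                          # acknowledge completion


def write(primary: Replica, secondaries: list[Replica], data_id: int, data: bytes) -> bool:
    for r in [primary, *secondaries]:
        r.push(data_id, data)                         # 3: data flow, separate from control
    primary.apply(data_id)                            # 4: primary applies in its chosen order
    acks = [s.apply(data_id) for s in secondaries]    # 5-6: forward, secondaries reply
    return all(acks)                                  # 7: primary replies to the client
```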

GoogleFS Consistency
• File regions can be
  – Consistent: all clients see the same data.
  – Defined: consistent + clients will see the entire write.
  – Inconsistent: different clients see different data.
• Files can be modified by
  – Random write: data written at a specified offset.
  – Record append: data is appended atomically at least once.
  – Padding or record duplicates may be inserted as part of an append operation.

GoogleFS Consistency
• Writers deal with consistency issues by
  1. Preferring appends to random writes.
  2. Application-level checkpoints.
  3. Self-identifying records with checksums.
• Readers deal with consistency issues by
  1. Processing a file only up to the last checkpoint.
  2. Ignoring padding.
  3. Discarding records with duplicate checksums.
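The reader-side rules above can be sketched in Python. Assumptions: a file region is modeled as a list where `None` marks padding and every other entry is a self-identifying `(crc32, payload)` record, and the reader is told the checkpoint position. Deduplicating by checksum follows the slide. A production reader would rather use unique record IDs, because two different payloads can share a CRC.

```python
import zlib
from typing import Optional

Record = Optional[tuple[int, bytes]]   # None = padding; otherwise (crc32, payload)


def make_record(payload: bytes) -> tuple[int, bytes]:
    """Writer side: a self-identifying record carrying its own checksum."""
    return zlib.crc32(payload), payload


def read_records(region: list[Record], checkpoint: int) -> list[bytes]:
    """Reader side: stop at the checkpoint; skip padding, corrupt records, and duplicates."""
    seen: set[int] = set()
    out: list[bytes] = []
    for rec in region[:checkpoint]:
        if rec is None:
            continue                               # padding
        crc, payload = rec
        if zlib.crc32(payload) != crc or crc in seen:
            continue                               # corrupt fragment or duplicate append
        seen.add(crc)
        out.append(payload)
    return out
```

A region holding alpha, padding, a retried copy of alpha, beta, and a corrupt record yields just `[b"alpha", b"beta"]` when read up to the checkpoint.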

Chunk Replication
• New chunks
  – Replicate new chunks on servers with below-average disk utilization.
  – Limit the number of recent chunk creations on each server, since heavy writes to new chunks are imminent.
• Re-replication
  – Prioritize chunks by how far each is from its replication goal.
  – Master clones a chunk by choosing a server and telling it to copy the chunk from the closest replica.
  – Master re-balances chunk distribution periodically.
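Both placement rules fit in a short Python sketch with hypothetical names. New chunks go to the least-utilized servers, and under-replicated chunks are queued by how many replicas they are missing relative to the goal of 3. The real master also spreads replicas across racks and limits recent creations per server; neither is modeled.

```python
import heapq

GOAL = 3   # default replication goal from the slides


def pick_servers(utilization: dict[str, float], k: int) -> list[str]:
    """Place a new chunk on the k least-utilized servers."""
    return sorted(utilization, key=utilization.get)[:k]


def rereplication_order(replicas: dict[str, int]) -> list[str]:
    """Order under-replicated chunk handles, those furthest below the goal first."""
    heap = [(-(GOAL - n), handle) for handle, n in replicas.items() if n < GOAL]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]
```

A chunk with no live replicas left is cloned before one that has lost only one of its three copies.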

GoogleFS Reliability
• Chunk-level reliability
  – Incremental checksums on each chunk.
  – Chunks replicated by default across 3 servers.
• Single master server
  – Metadata stored in memory, backed by an operation log.
  – Chunk locations recovered by polling chunk servers.
  – Shadow masters provide read-only access if the primary is down.

Common Problems
1. Consistency after crash.
2. Large contiguous allocations.
3. Metadata allocation.

Consistency
• Detect + repair
  – Use fsck to repair.
  – Journal replay.
• Always consistent
  – Copy on write.

Large Contiguous Allocations
• Pre-allocation.
• Block groups.
• Multiple block sizes.

Metadata Allocation
• Fixed number in one location.
• Fixed number spread across disk.
• Dynamically allocated in files.

