COP 4600 Summer 2012 Introduction To Operating Systems

- Slides: 52

COP 4600 – Summer 2012 Introduction To Operating Systems: Distributed Process Management

Instructor: Dr. Mark Llewellyn, markl@cs.ucf.edu, HEC 236, 407-823-2790

http://www.cs.ucf.edu/courses/cop4600/sum2012

Department of Electrical Engineering and Computer Science Division, University of Central Florida

COP 4600: Intro To OS (Distributed Process Management) Page 1 © Dr. Mark Llewellyn

Distributed Process Management

- In this set of notes we’ll examine some of the key mechanisms used in distributed operating systems:
  - Process migration: the movement of an active process from one machine in the network to another.
  - Distributed global states: how processes on different systems can coordinate their activities when each is governed by a local clock and the network imposes a delay on the exchange of information.
  - Distributed mutual exclusion: how to ensure mutually exclusive access in a distributed environment.
  - Distributed deadlock: how to prevent deadlock, or detect and resolve it, in a distributed environment.

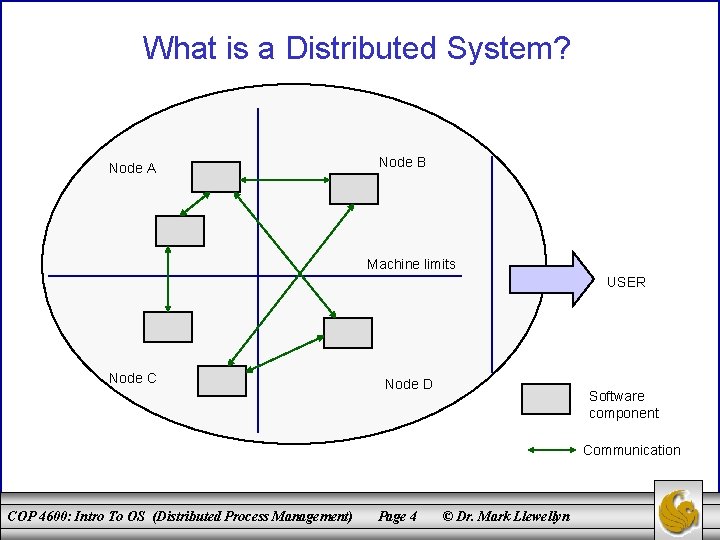

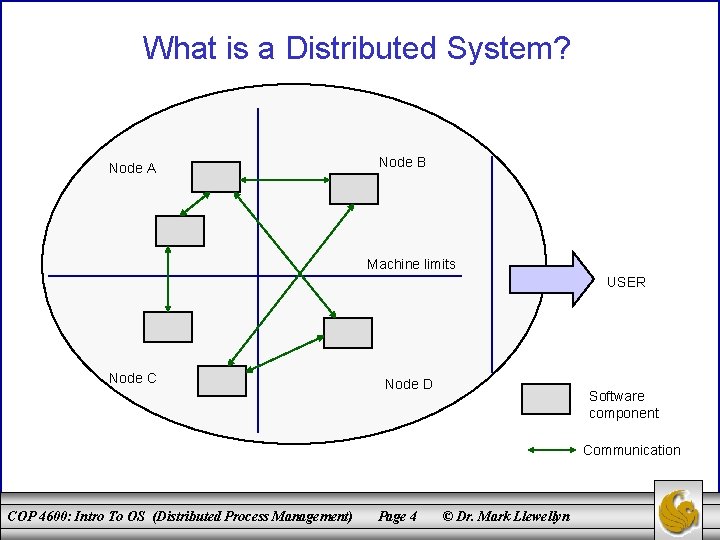

What is a Distributed System?

- While many different definitions of what constitutes a distributed system have been put forth, there is general consensus that a distributed system must contain several central components:
  - A set of autonomous computers.
  - A communication network connecting those computers.
  - Software which integrates these components with a communication system.

What is a Distributed System?

[Figure: Nodes A, B, C, and D, each bounded by its machine limits, joined by a software component and communication, presented as one system to the USER]

Important Characteristics of Distributed Systems

- Based on our simple definition, there are several important characteristics of distributed systems that need a closer look.
- All of these characteristics are based on the concept of transparency.
- In the context of information technology, the concept of transparency literally means that certain things should be invisible to the user. The manner in which the problem is solved is largely irrelevant to the user.
- The following transparency properties play a large role in achieving this result for the user:

Transparency Properties of Distributed Systems

- Location Transparency – users do not need to know exactly where within the system a resource they wish to use is located. Resources are typically identified by name, and the name has no bearing on their location.
- Access Transparency – the way in which a resource is accessed is uniform for all resources. For example, a distributed database system consisting of several databases of different technologies should still offer a common user interface (such as SQL).
- Replication Transparency – the fact that there may be several copies of a resource is not disclosed to the user. The user has no need to know whether they are accessing the original or a copy. Modifications to the resource must also occur transparently.

Transparency Properties of Distributed Systems (cont.)

- Error Transparency – users will not necessarily be informed of all errors occurring in the system. Some errors may be irrelevant, and others may be masked, as in the case of replication.
- Concurrency Transparency – distributed systems are usually used by several users simultaneously, and two or more users often access the same resource, such as a database table, printer, or file, at the same time. Concurrency transparency ensures that simultaneous access is possible without mutual interference or incorrect results.
- Migration Transparency – resources can be moved over the network without the user noticing. A typical example is today’s mobile telephone network, in which a device can be moved around freely without any loss of communication when it leaves the vicinity of one transmitter station.

Transparency Properties of Distributed Systems (cont.)

- Process Transparency – it is irrelevant on which computer a certain task (process) is executed, provided the results are guaranteed to be the same. This form of transparency is an important prerequisite for balancing the workload between computers.
- Performance Transparency – when the system load increases, dynamic reconfiguration may be required. This performance optimization should go unnoticed by other users.
- Scaling Transparency – if a system is expanded to incorporate more computers or applications, this should be feasible without modifying the system structure or the application algorithms.
- Language Transparency – the programming language in which the individual subcomponents of the distributed system or application were written must not matter to the system as a whole. This is a fairly new requirement of distributed systems and is supported only by more recently developed systems.

Process Migration

- Process migration is the transfer of a sufficient amount of the state of a process from one computer to another for the process to execute on the target machine.
- Interest in this concept arose from research into methods of load balancing across multiple networked systems.
- True process migration includes the ability to preempt a process on one machine and reactivate it later on another machine.
- Process migration is desirable in a distributed system for a number of reasons, including those listed on the next page.

Motivation For Process Migration

- Load sharing – by moving processes from heavily loaded to lightly loaded systems, the load can be balanced to improve overall performance. While empirical data suggest that significant improvements are possible, care must be taken in the design of load-balancing algorithms: the more communication the distributed system needs to perform the balancing, the worse the performance becomes.
- Communication performance – processes that interact intensively can be moved to the same node to reduce communication cost for the duration of their interaction. Also, if a process is performing data analysis on a file or set of files larger than the process itself, it may be advantageous to move the process to the data rather than the data to the process.
- Availability – long-running processes may need to move to survive faults for which advance notice is possible, or ahead of scheduled downtime. If the OS provides such notification, a process that wants to continue can either migrate to another system or ensure that it can be restarted on the current system at some later time.
- Utilizing special capabilities – a process can move to take advantage of unique hardware or software capabilities on a particular node.

Process Migration Mechanisms

- A number of issues need to be addressed in designing a process migration facility.
- Among these issues are the following:
  1. Who initiates the migration?
  2. What portion of the process is migrated?
  3. What happens to outstanding messages and signals?

Initiation of Migration

- Who initiates the migration depends on the goal of the migration facility.
- If the goal is load balancing, then some module in the OS that is monitoring system load will generally be responsible for deciding when a migration should take place.
  - The module will be responsible for preempting or signaling a process to be migrated.
  - To determine where to migrate, the module will need to be in communication with peer modules in other systems so that load patterns on other systems can be monitored.
  - In this case, the entire migration function, and even the existence of multiple systems, may be transparent to the process.
- If the goal is to reach a particular resource, then a process may initiate the migration as the need arises.
  - In this case, the process must be aware of the existence of a distributed system.
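To make the load-balancing case concrete, here is a minimal sketch of the decision such a monitoring module might make. The function name, the utilization scale, and the `threshold` and `margin` parameters are hypothetical; the slides do not prescribe a policy. The peer loads are assumed to be the most recent values reported by peer modules, which, like any remote state, may already be out of date.

```python
def choose_migration_target(local_load, peer_loads, threshold=0.8, margin=0.2):
    """Return the node a process should migrate to, or None to stay put.

    Loads are utilizations in [0, 1]. `peer_loads` maps a node name to the
    last load value reported by that node's peer module (hypothetical format).
    """
    if local_load < threshold or not peer_loads:
        return None  # not overloaded, or no peer to migrate to
    target, target_load = min(peer_loads.items(), key=lambda item: item[1])
    # Require a clear gap: migration itself costs communication, so moving
    # between two similarly loaded nodes would not pay off.
    if local_load - target_load >= margin:
        return target
    return None

print(choose_migration_target(0.95, {"B": 0.90, "C": 0.30}))  # C
print(choose_migration_target(0.50, {"B": 0.10}))             # None
```

The `margin` check reflects the caution on the motivation slide: balancing that requires frequent migrations can cost more in communication than it gains.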

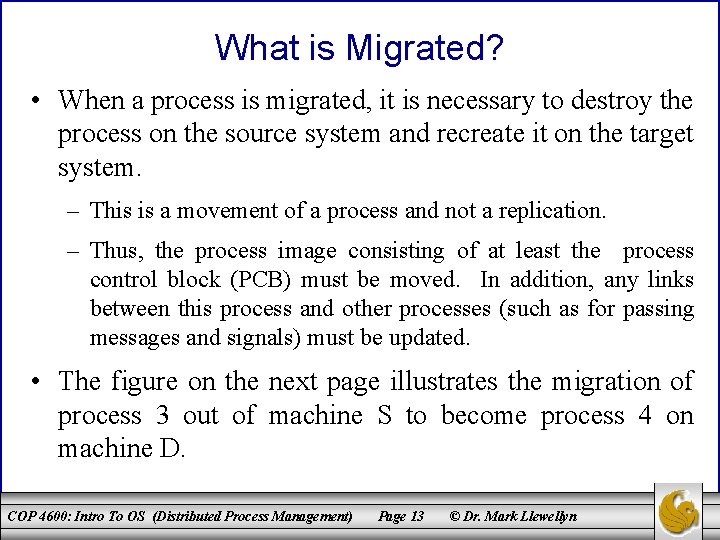

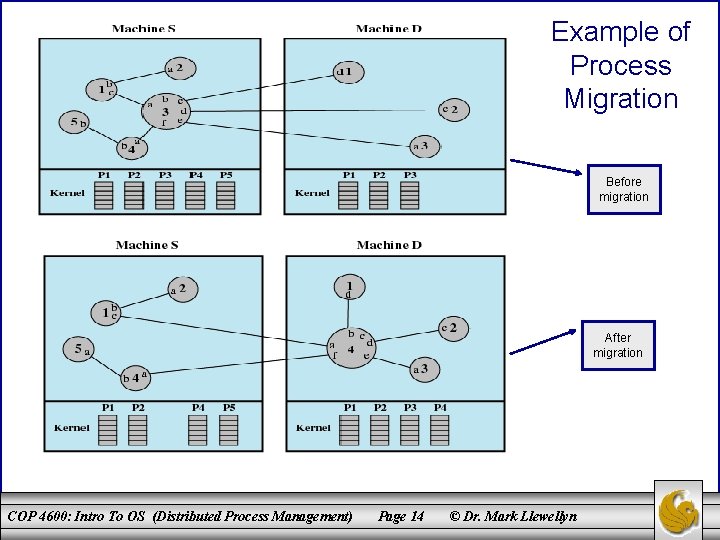

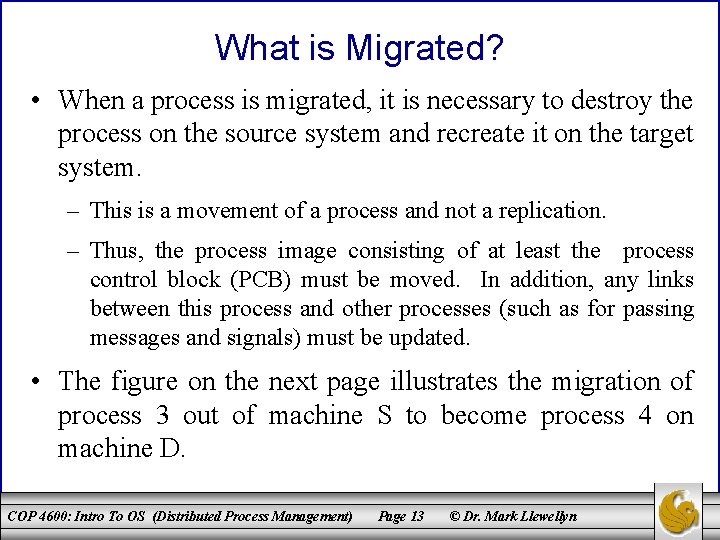

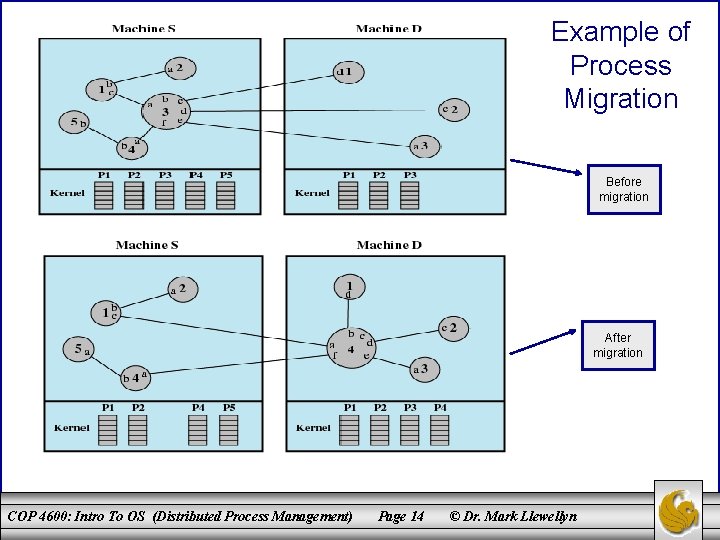

What is Migrated?

- When a process is migrated, it is necessary to destroy the process on the source system and recreate it on the target system.
  - This is a movement of a process and not a replication.
  - Thus, the process image, consisting of at least the process control block (PCB), must be moved. In addition, any links between this process and other processes (such as for passing messages and signals) must be updated.
- The figure on the next page illustrates the migration of process 3 out of machine S to become process 4 on machine D.

Example of Process Migration

[Figure: before migration and after migration]

What is Migrated? (cont.)

- The movement of the PCB is straightforward in process migration. The difficulty, from a performance point of view, concerns the process address space and any open files assigned to the process.
- Let’s first consider only the process address space and assume that a virtual memory scheme based on paging is in use.
- Several different strategies can be employed for what is migrated in this environment:
  - Eager (all) – transfers the entire address space
  - Precopy
  - Eager (dirty) – limited transfer of pages
  - Copy-on-reference
  - Flushing

What is Migrated? (cont.)

- Eager (all): transfer the entire address space at the time of migration.
  - No trace of the process is left behind on the old system.
  - If the address space is large and the process does not need most of it, this approach may be unnecessarily expensive.
- Precopy: the process continues to execute on the source node while the address space is copied to the target node.
  - Pages modified on the source during the precopy operation have to be copied a second time.
  - This reduces the time that a process is frozen and cannot execute during migration.

What is Migrated? (cont.)

- Eager (dirty): transfer only the pages of the address space that are in main memory and have been modified.
  - Any additional blocks of the virtual address space are transferred on demand only.
  - While this approach minimizes the amount of data transferred, it requires the source machine to remain involved throughout the life of the process by maintaining page table entries, which implies that the process requires remote paging support.

What is Migrated? (cont.)

- Copy-on-reference: pages are brought over only when referenced.
  - This has the lowest initial cost of process migration.
- Flushing: pages are cleared from the source’s main memory by flushing dirty pages to disk.
  - The pages are then accessed as needed from disk instead of from main memory on the source node.
  - This strategy relieves the source of holding any pages of the migrated process in main memory, immediately freeing a block of memory for other processes.
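As a rough illustration of how the five strategies differ in what they copy while the process is stopped, the sketch below models pages as hypothetical `Page` records with resident and dirty flags. The names, and the idea of passing in the set of pages re-dirtied during precopy, are assumptions made for this example, not part of any particular system.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Page:
    number: int
    resident: bool  # currently held in the source node's main memory
    dirty: bool     # modified since it was last written to backing store

def pages_copied_while_frozen(pages, strategy, redirtied=frozenset()):
    """Return the page numbers sent to the target while the process is stopped.

    `redirtied` only matters for precopy: it holds the pages the still-running
    process modified on the source while the initial full copy was in progress.
    """
    if strategy == "eager_all":
        # The whole address space moves at migration time.
        return {p.number for p in pages}
    if strategy == "precopy":
        # Everything was already copied while the process kept running;
        # only pages modified during that copy are sent a second time.
        return set(redirtied)
    if strategy == "eager_dirty":
        # Only resident, modified pages move; the rest are fetched on demand,
        # so the source must keep serving page requests (remote paging).
        return {p.number for p in pages if p.resident and p.dirty}
    if strategy in ("copy_on_reference", "flushing"):
        # Nothing is sent up front. Pages arrive on first reference, either
        # from the source's memory or (after flushing) from disk.
        return set()
    raise ValueError(f"unknown strategy: {strategy!r}")

pages = [Page(0, True, True), Page(1, True, False), Page(2, False, False), Page(3, True, True)]
for s in ("eager_all", "precopy", "eager_dirty", "copy_on_reference", "flushing"):
    print(s, sorted(pages_copied_while_frozen(pages, s, redirtied={3})))
```

The returned set only captures the freeze-time cost; the on-demand strategies shift the remaining transfers (and the dependence on the source or the disk) into the rest of the process's life.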

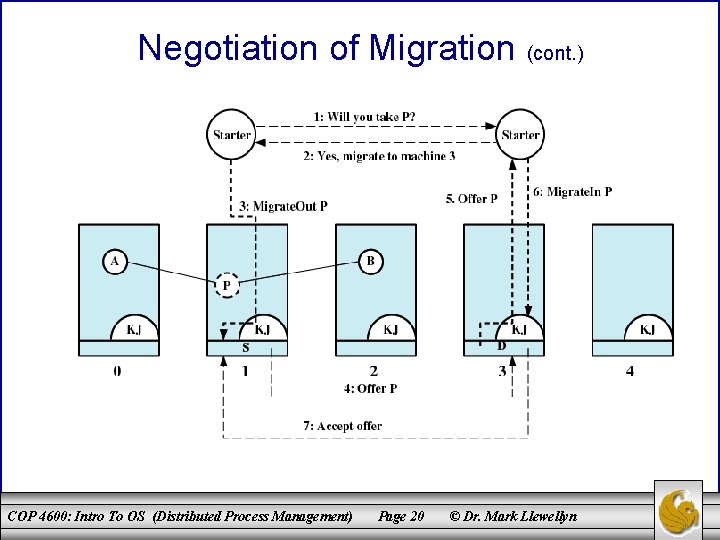

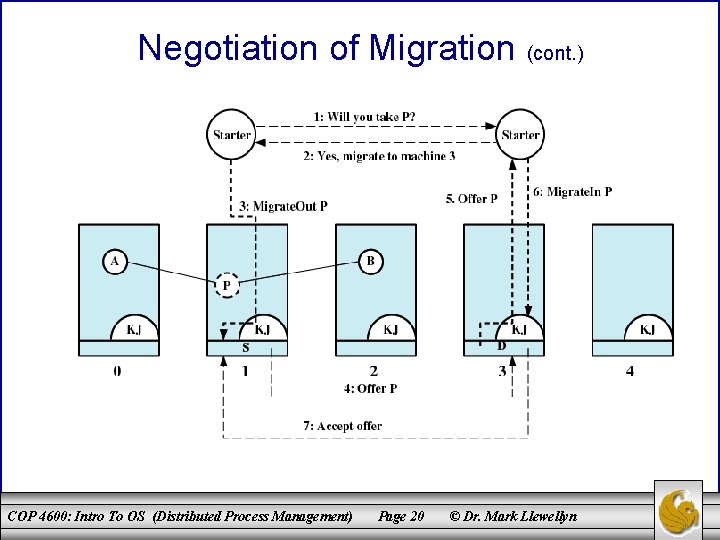

Negotiation of Migration

- Migration policy is the responsibility of the Starter utility (as in the Charlotte distributed operating system).
- The Starter utility is also responsible for long-term scheduling and memory allocation.
- The decision to migrate must be reached jointly by two Starter processes (one on the source and one on the destination).

Negotiation of Migration (cont.)

Eviction

- The destination system may refuse to accept the migration of a process to itself.
- If a workstation is idle, processes may have been migrated to it.
  - Once the workstation becomes active, it may be necessary to evict the migrated processes to provide adequate response time.

Distributed Global States

- The operating system cannot know the current state of all processes in the distributed system.
- A process can know the current state only of the processes on its local system.
- About remote processes, it knows only the state information received in messages.
  - These messages represent the state at some time in the past.
  - This is analogous to the situation in astronomy: our knowledge of an object 5 light-years away from the observation point is 5 years old.

A Running Example

- A bank account is distributed over two branches.
- The total amount in the account is the sum of the amounts at each branch.
- At 3 PM the account balance is to be determined.
- Messages are sent to request the information.

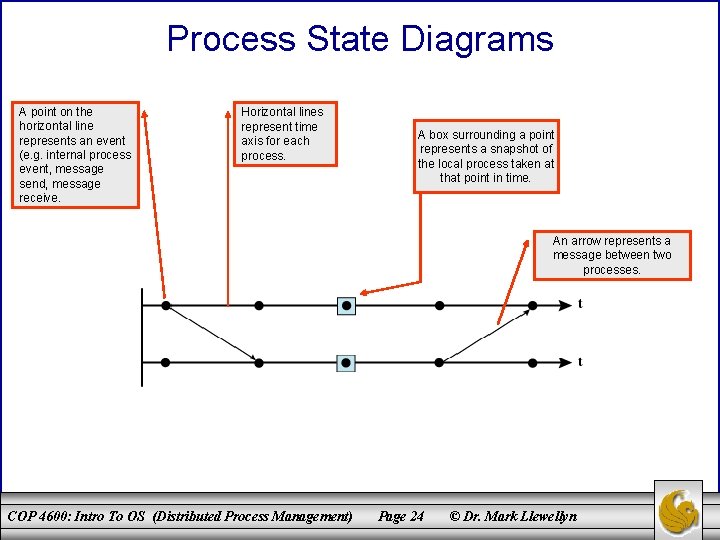

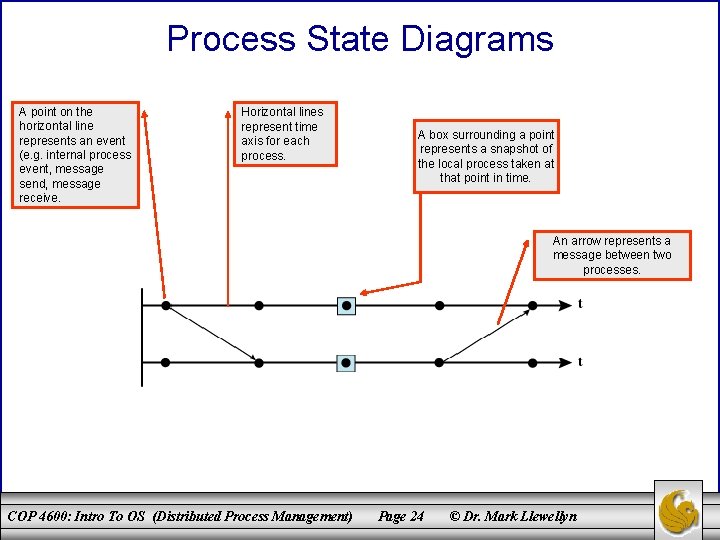

Process State Diagrams

- A point on a horizontal line represents an event (e.g., an internal process event, a message send, or a message receive).
- Horizontal lines represent the time axis for each process.
- A box surrounding a point represents a snapshot of the local process taken at that point in time.
- An arrow represents a message between two processes.

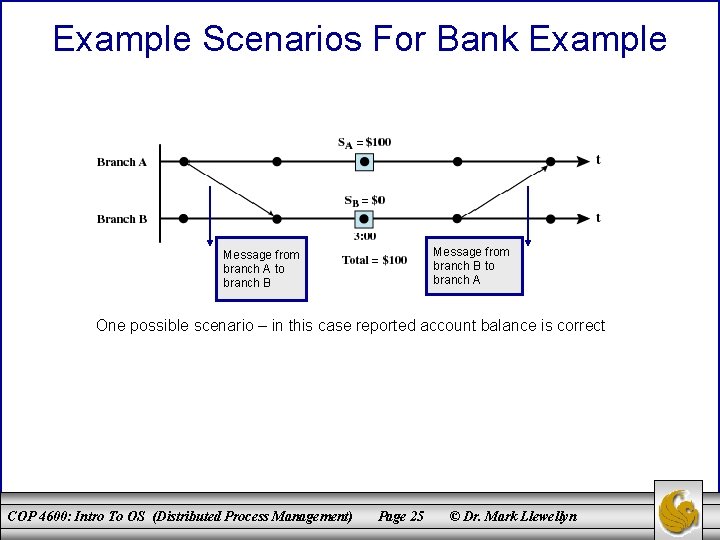

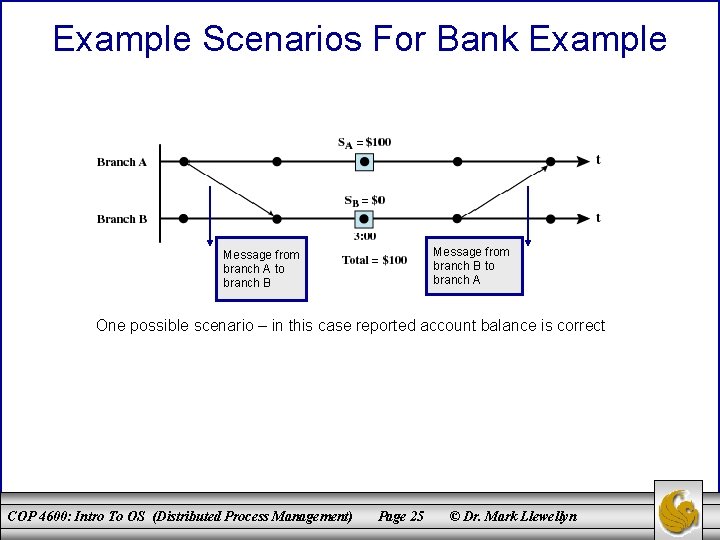

Example Scenarios For Bank Example

[Figure: a message from branch B to branch A and a message from branch A to branch B]

One possible scenario: in this case the reported account balance is correct.

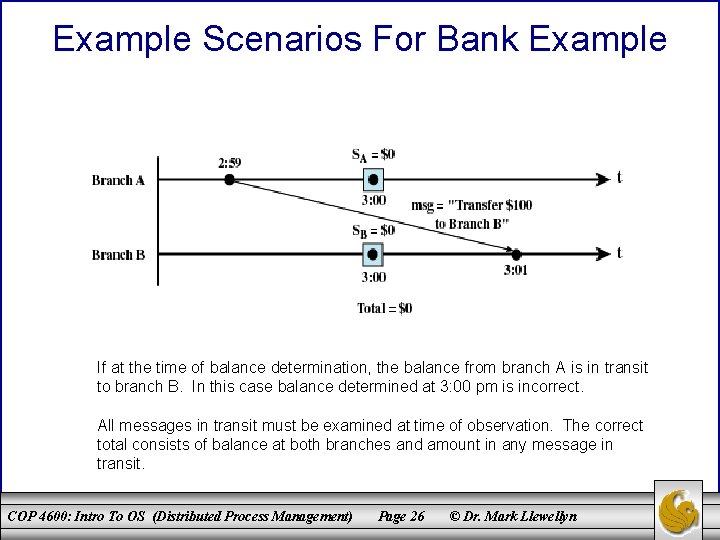

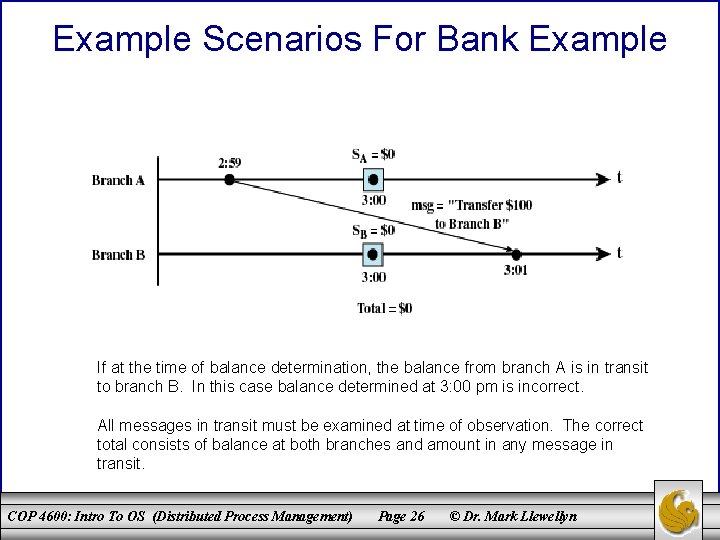

Example Scenarios For Bank Example

Suppose that at the time of balance determination, the balance from branch A is in transit to branch B. In this case the balance determined at 3:00 pm is incorrect. All messages in transit must be examined at the time of observation: the correct total consists of the balances at both branches plus the amount in any message in transit.
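The arithmetic can be written out directly. In this sketch the amounts are hypothetical (a $100 balance caught mid-transfer from branch A to branch B), and `in_transit` is an assumed list of (source, destination, amount) tuples standing in for the channel contents at 3:00 pm.

```python
def naive_total(balances):
    """Sum only the balances reported by the branches."""
    return sum(balances.values())

def consistent_total(balances, in_transit):
    """Sum the branch balances plus every transfer still in a channel."""
    return sum(balances.values()) + sum(amount for _, _, amount in in_transit)

# Hypothetical figures: $100 has left branch A but has not yet reached branch B.
balances = {"A": 0, "B": 0}
in_transit = [("A", "B", 100)]  # (source branch, destination branch, amount)
print(naive_total(balances))                   # 0: the money seems to vanish
print(consistent_total(balances, in_transit))  # 100
```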

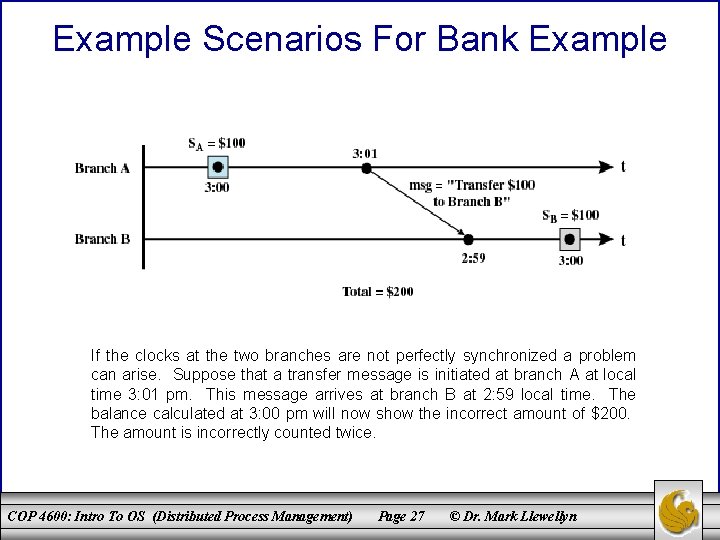

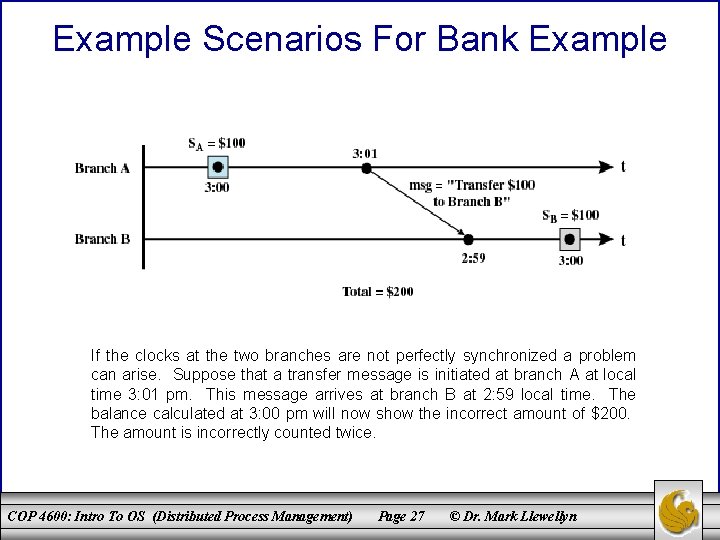

Example Scenarios For Bank Example

If the clocks at the two branches are not perfectly synchronized, a problem can arise. Suppose that a transfer message is initiated at branch A at local time 3:01 pm and arrives at branch B at 2:59 pm local time. The balance calculated at 3:00 pm will now show the incorrect amount of $200: the transferred amount is counted twice, once in branch A’s balance (observed before the send) and once in branch B’s balance (observed after the receipt).

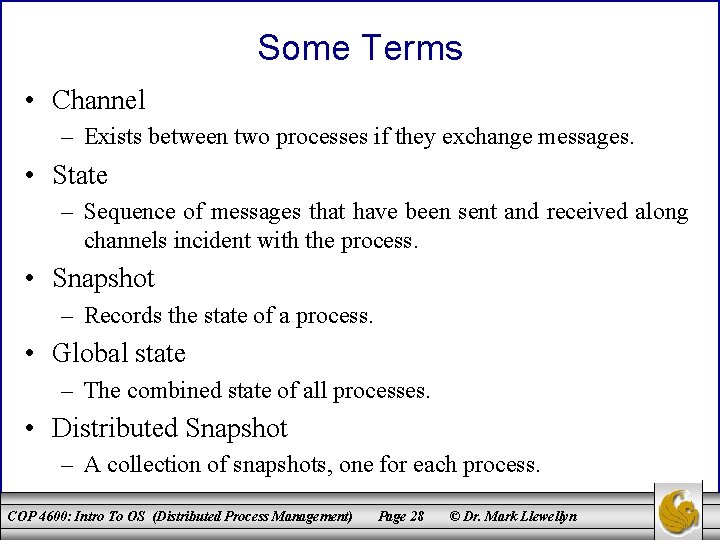

Some Terms

- Channel – exists between two processes if they exchange messages.
- State – the sequence of messages that have been sent and received along channels incident with the process.
- Snapshot – records the state of a process.
- Global state – the combined state of all processes.
- Distributed snapshot – a collection of snapshots, one for each process.

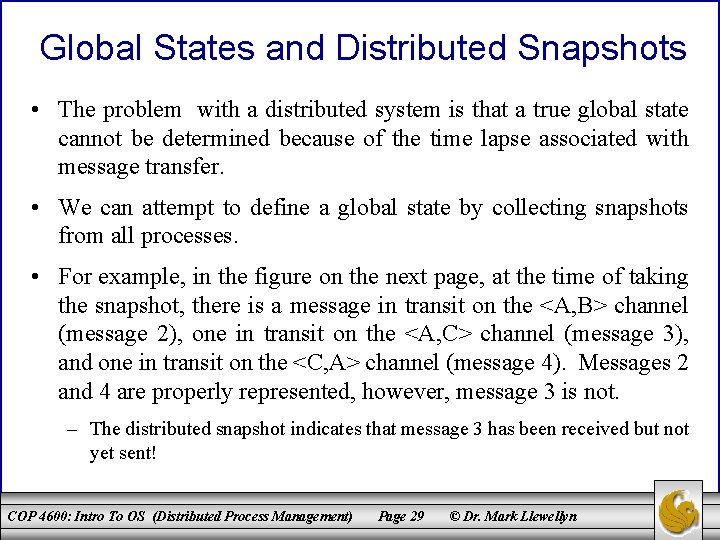

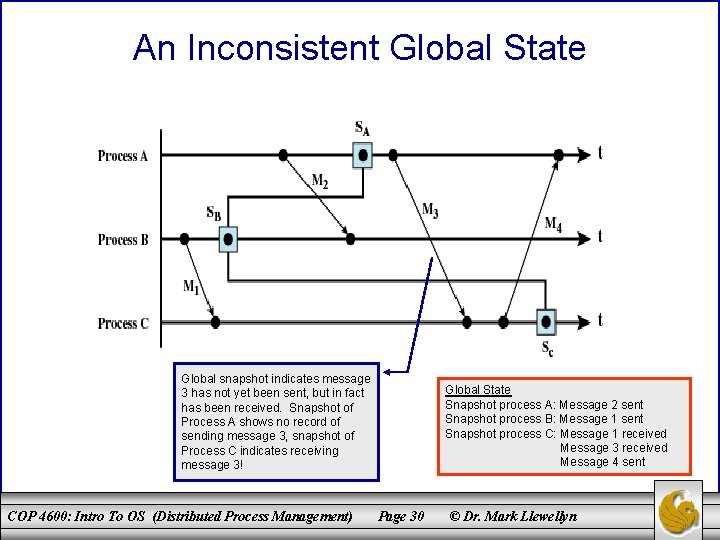

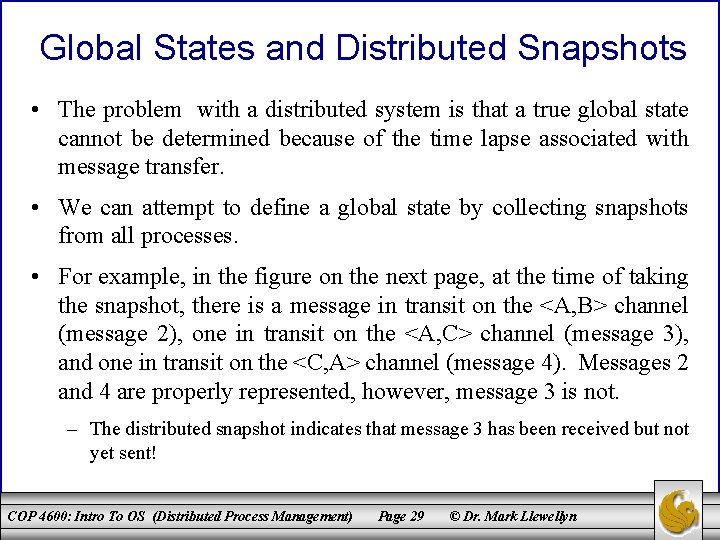

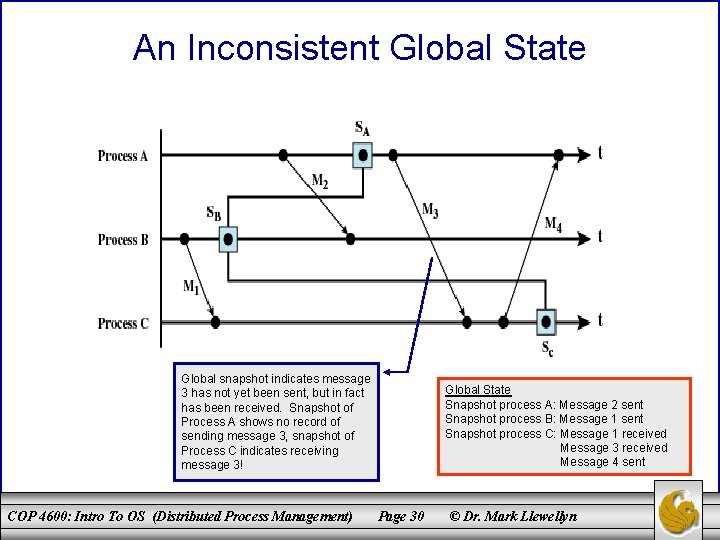

Global States and Distributed Snapshots

- The problem with a distributed system is that a true global state cannot be determined because of the time lapse associated with message transfer.
- We can attempt to define a global state by collecting snapshots from all processes.
- For example, in the figure on the next page, at the time of taking the snapshot there is a message in transit on the <A, B> channel (message 2), one in transit on the <A, C> channel (message 3), and one in transit on the <C, A> channel (message 4). Messages 2 and 4 are properly represented; message 3, however, is not.
  - The distributed snapshot indicates that message 3 has been received but not yet sent!

An Inconsistent Global State

Global state:

- Snapshot of process A: message 2 sent
- Snapshot of process B: message 1 sent
- Snapshot of process C: message 1 received, message 3 received, message 4 sent

The global snapshot indicates that message 3 has not yet been sent, but in fact it has been received: the snapshot of process A shows no record of sending message 3, while the snapshot of process C records receiving it.

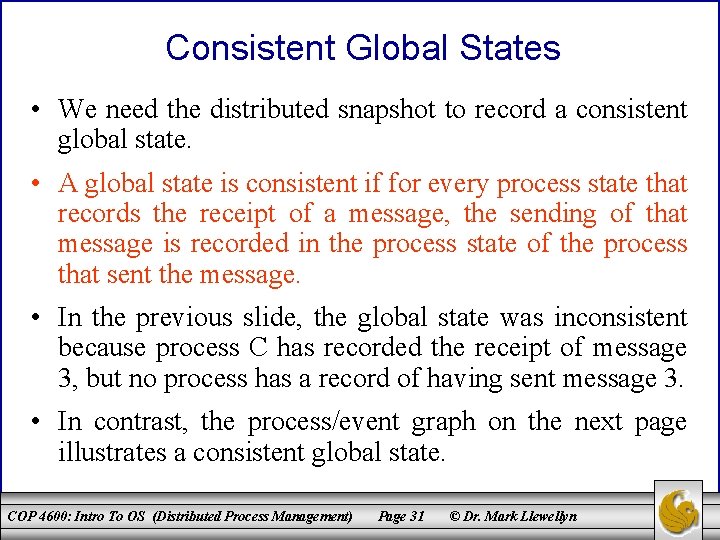

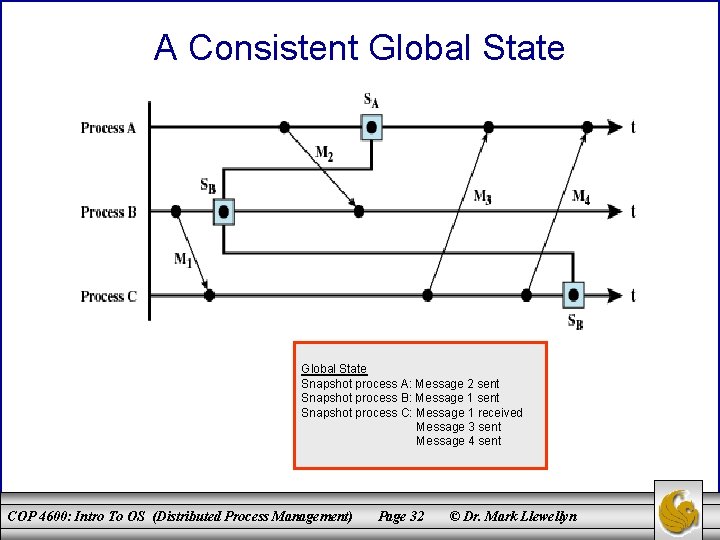

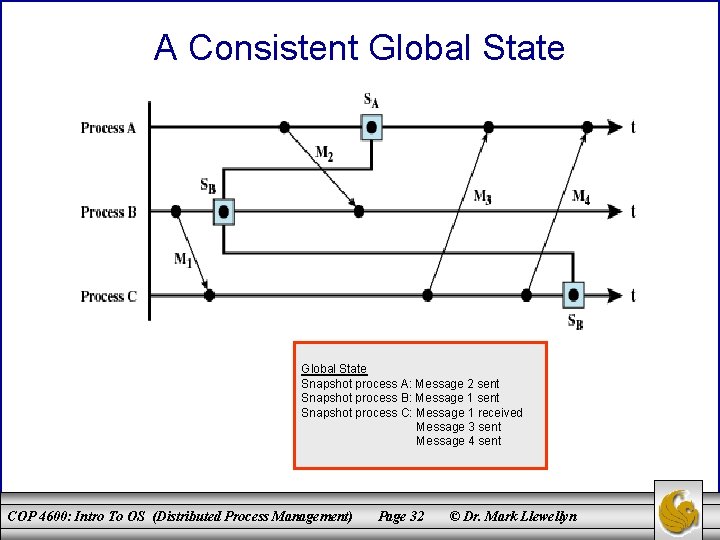

Consistent Global States

- We need the distributed snapshot to record a consistent global state.
- A global state is consistent if, for every process state that records the receipt of a message, the sending of that message is recorded in the state of the process that sent it.
- In the previous slide, the global state was inconsistent because process C has recorded the receipt of message 3, but no process has a record of having sent message 3.
- In contrast, the process/event graph on the next page illustrates a consistent global state.

A Consistent Global State

- Snapshot of process A: message 2 sent
- Snapshot of process B: message 1 sent
- Snapshot of process C: message 1 received, message 3 sent, message 4 sent
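The definition of consistency translates directly into a check over recorded message identifiers. This sketch assumes each process snapshot is summarized as hypothetical `sent` and `received` sets; messages recorded as sent but not received are the ones that must be accounted for as in transit. The data is transcribed from the two example snapshots.

```python
def check_snapshot(snapshot):
    """Check a global snapshot for consistency.

    `snapshot` maps each process to a dict with "sent" and "received" sets of
    message ids. Returns (is_consistent, orphans, in_transit), where orphans
    are receipts that no process has recorded as sent.
    """
    sent = set().union(*(s["sent"] for s in snapshot.values()))
    received = set().union(*(s["received"] for s in snapshot.values()))
    orphans = received - sent     # receipt recorded, send missing
    in_transit = sent - received  # send recorded, receipt not (yet)
    return not orphans, orphans, in_transit

inconsistent = {
    "A": {"sent": {2}, "received": set()},
    "B": {"sent": {1}, "received": set()},
    "C": {"sent": {4}, "received": {1, 3}},
}
consistent = {
    "A": {"sent": {2}, "received": set()},
    "B": {"sent": {1}, "received": set()},
    "C": {"sent": {3, 4}, "received": {1}},
}
print(check_snapshot(inconsistent))
print(check_snapshot(consistent))
```

For the inconsistent snapshot the check flags message 3 as an orphan; for the consistent one it reports no orphans and leaves messages 2, 3, and 4 to be recorded as channel contents.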

Distributed Snapshot Algorithm

- Several different algorithms that record a consistent global state have been developed. We’ll examine a fairly popular one, due to Chandy and Lamport:
- The algorithm assumes that messages are delivered in the order in which they are sent and that no messages are lost. (A reliable transport protocol such as TCP satisfies these requirements.)
- The algorithm uses a special control message, called a marker.
- Some process initiates the algorithm by recording its state and sending a marker on all outgoing channels before any more messages are sent.
- Each process p then proceeds as follows. Upon the first receipt of the marker (say from process q), receiving process p performs the following:
  1. Process p records its local state.
  2. Process p records the state of the incoming channel from q to p as empty.
  3. Process p propagates the marker to all of its neighbors along all outgoing channels.

Distributed Snapshot Algorithm (cont.)

- The previous three steps must be performed atomically; i.e., no messages can be sent or received by process p until all three steps are performed.
- At any time after recording its state, when process p receives a marker from another incoming channel (say from process r), it performs the following action:
  1. Process p records the state of the channel from r to p as the sequence of messages process p has received from process r from the time process p recorded its local state Sp to the time it received the marker from process r.
- The algorithm terminates at a process once the marker has been received along every incoming channel.

Distributed Snapshot Algorithm (cont.)

- The following points can be made about this algorithm:
  - Since any process may start the algorithm by sending out a marker, the algorithm still works properly if several nodes independently decide to record their state and send out the marker.
  - The algorithm will terminate in a finite amount of time if every message is delivered in finite time.
  - Since this is a distributed algorithm, each process is responsible for recording its own state and the state of all of its incoming channels.
  - Once all of the states have been recorded (the algorithm has terminated at all processes), the consistent global state obtained by the algorithm can be assembled by having every process send the state data that it has recorded along every outgoing channel, and having every process forward the state data that it receives along every outgoing channel. Alternatively, the initiating process could poll all processes to acquire the global state.
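The marker rules can be sketched as a single-threaded simulation under the algorithm's own assumptions: each directed channel is a FIFO queue and no message is lost. The bank-style application state (a balance per process, transfers as messages), the class names, and the delivery schedule are all hypothetical. Because money is only moved, never created, the recorded process states plus the recorded channel states should add up to the initial total, which is one way to see that the snapshot is consistent.

```python
from collections import deque

MARKER = object()  # the special control message

class Process:
    def __init__(self, pid, balance, incoming, outgoing):
        self.pid = pid
        self.balance = balance    # application state: money held here
        self.incoming = incoming  # pids with a channel into this process
        self.outgoing = outgoing  # pids this process has a channel to
        self.recorded = None      # local state Sp, once recorded
        self.channel_log = {}     # source pid -> recorded channel state
        self.open = set()         # incoming channels still being recorded

class Network:
    def __init__(self, processes):
        self.procs = {p.pid: p for p in processes}
        # One FIFO queue per directed channel: messages arrive in send order.
        self.chan = {(p.pid, d): deque() for p in processes for d in p.outgoing}

    def transfer(self, src, dst, amount):
        self.procs[src].balance -= amount
        self.chan[(src, dst)].append(amount)

    def record(self, pid, marker_from=None):
        # Steps 1-3 run with no interleaved sends or receives (atomically).
        p = self.procs[pid]
        p.recorded = p.balance                  # 1. record local state
        for src in p.incoming:
            p.channel_log[src] = []             # 2. marker's channel stays empty
            if src != marker_from:
                p.open.add(src)                 # others are recorded until a marker
        for dst in p.outgoing:
            self.chan[(pid, dst)].append(MARKER)  # 3. propagate the marker

    def deliver(self, src, dst):
        msg = self.chan[(src, dst)].popleft()
        p = self.procs[dst]
        if msg is MARKER:
            if p.recorded is None:
                self.record(dst, marker_from=src)  # first marker seen
            else:
                p.open.discard(src)                # channel src->dst is done
        else:
            p.balance += msg
            if src in p.open:
                p.channel_log[src].append(msg)     # in transit at snapshot time

    def snapshot_total(self):
        procs = self.procs.values()
        if not all(p.recorded is not None and not p.open for p in procs):
            raise RuntimeError("snapshot has not terminated everywhere")
        return sum(p.recorded for p in procs) + sum(
            sum(log) for p in procs for log in p.channel_log.values())

A = Process("A", 100, ["B", "C"], ["B", "C"])
B = Process("B", 50, ["A", "C"], ["A", "C"])
C = Process("C", 25, ["A", "B"], ["A", "B"])
net = Network([A, B, C])
net.transfer("A", "B", 10)
net.transfer("B", "C", 5)
net.record("A")             # A initiates the snapshot
net.transfer("C", "A", 7)   # sent before C has recorded its state
# One possible delivery order; each call pops the head of a FIFO channel.
schedule = [("A", "B"), ("A", "B"), ("B", "C"), ("C", "A"), ("A", "C"),
            ("B", "C"), ("B", "A"), ("C", "A"), ("C", "B")]
for src, dst in schedule:
    net.deliver(src, dst)
print(net.snapshot_total())  # 175
```

In this run the $7 sent by C is captured as the state of channel C→A, because A had already recorded its state when the money arrived but had not yet seen C's marker.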

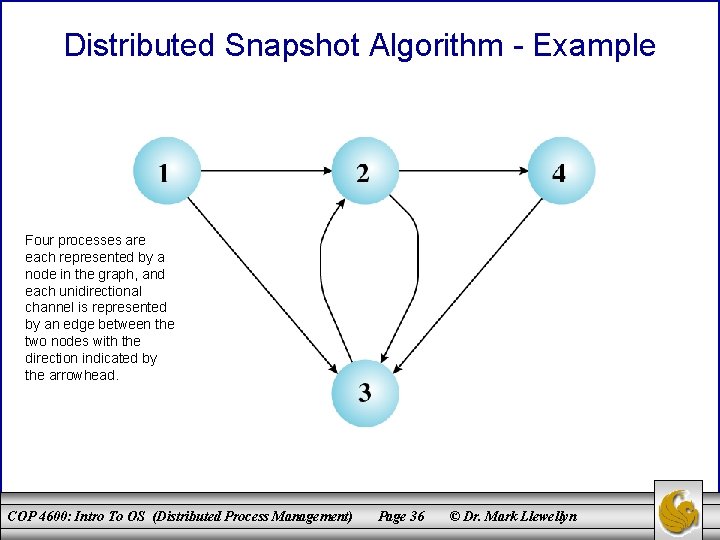

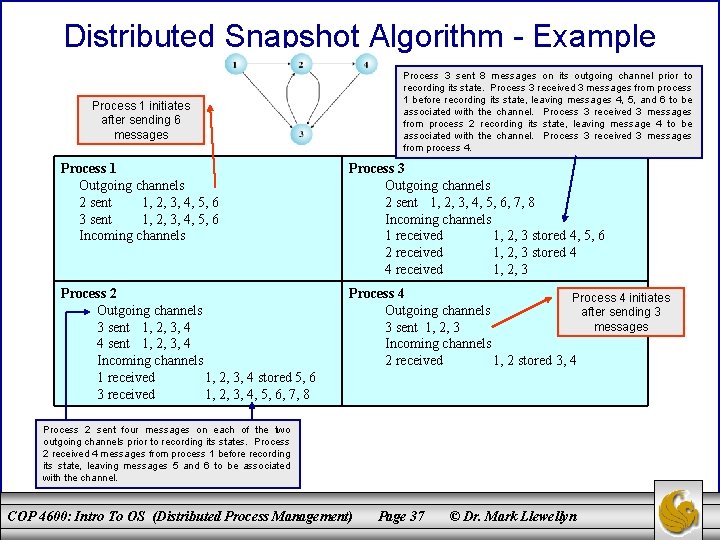

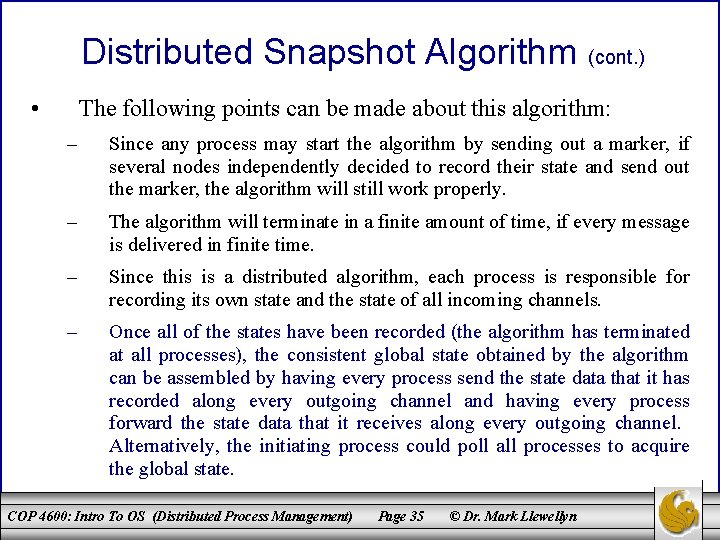

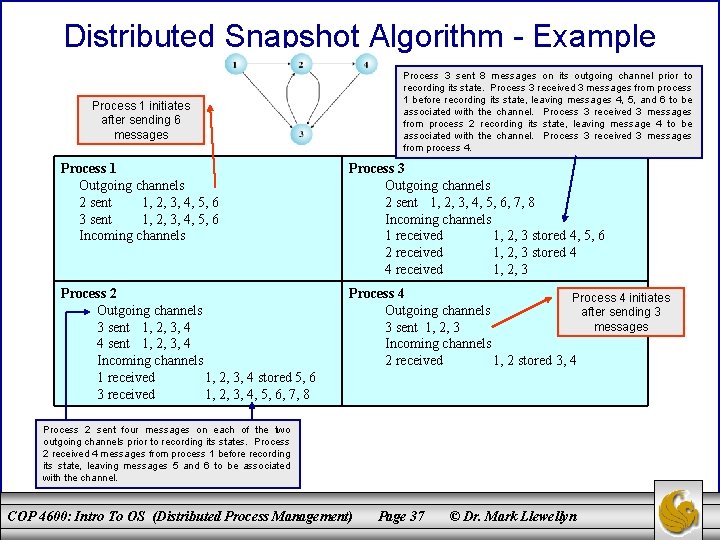

Distributed Snapshot Algorithm - Example

Four processes are each represented by a node in the graph, and each unidirectional channel is represented by an edge between the two nodes, with the direction indicated by the arrowhead.

Distributed Snapshot Algorithm - Example

| Process | Outgoing channels | Incoming channels |
|---|---|---|
| 1 (initiates after sending 6 messages) | to 2: sent 1, 2, 3, 4, 5, 6; to 3: sent 1, 2, 3, 4, 5, 6 | none |
| 2 | to 3: sent 1, 2, 3, 4; to 4: sent 1, 2, 3, 4 | from 1: received 1, 2, 3, 4, stored 5, 6; from 3: received 1, 2, 3, 4, 5, 6, 7, 8 |
| 3 | to 2: sent 1, 2, 3, 4, 5, 6, 7, 8 | from 1: received 1, 2, 3, stored 4, 5, 6; from 2: received 1, 2, 3, stored 4; from 4: received 1, 2, 3 |
| 4 (initiates after sending 3 messages) | to 3: sent 1, 2, 3 | from 2: received 1, 2, stored 3, 4 |

- Process 2 sent four messages on each of its two outgoing channels prior to recording its state.
- Process 2 received 4 messages from process 1 before recording its state, leaving messages 5 and 6 to be associated with the channel.
- Process 3 sent 8 messages on its outgoing channel prior to recording its state.
- Process 3 received 3 messages from process 1 before recording its state, leaving messages 4, 5, and 6 to be associated with the channel.
- Process 3 received 3 messages from process 2 before recording its state, leaving message 4 to be associated with the channel.
- Process 3 received 3 messages from process 4.

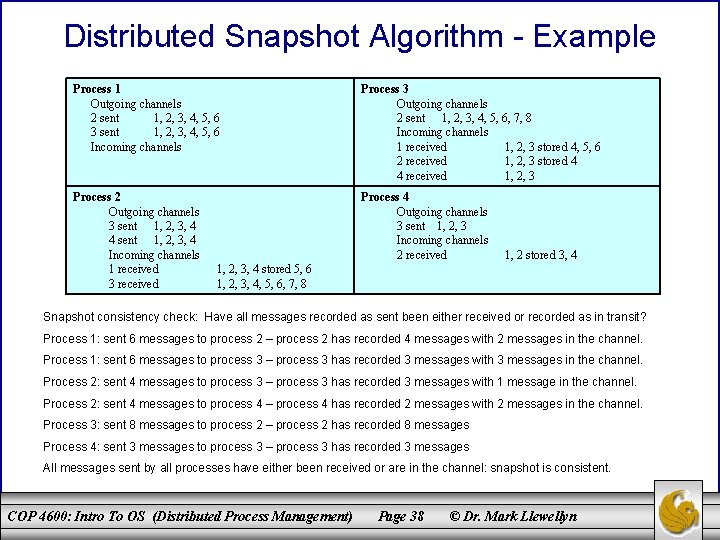

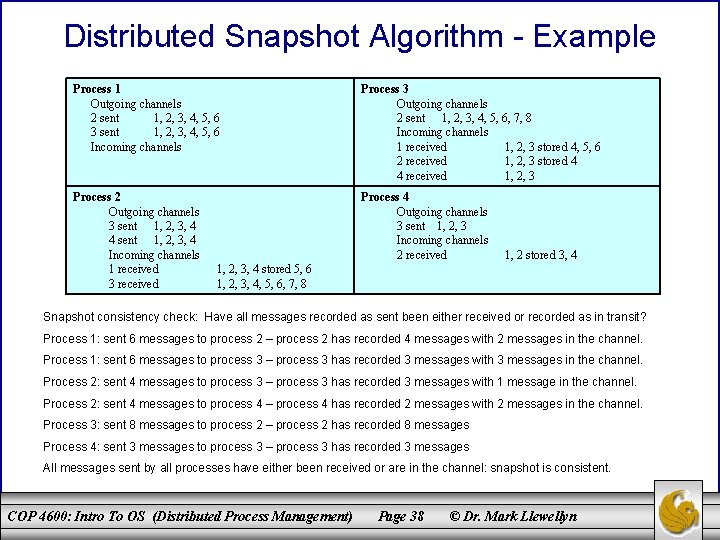

Distributed Snapshot Algorithm - Example

Snapshot consistency check: have all messages recorded as sent been either received or recorded as in transit? (The recorded states are the same as in the previous example.)

- Process 1 sent 6 messages to process 2: process 2 has recorded 4 messages, with 2 messages in the channel.
- Process 1 sent 6 messages to process 3: process 3 has recorded 3 messages, with 3 messages in the channel.
- Process 2 sent 4 messages to process 3: process 3 has recorded 3 messages, with 1 message in the channel.
- Process 2 sent 4 messages to process 4: process 4 has recorded 2 messages, with 2 messages in the channel.
- Process 3 sent 8 messages to process 2: process 2 has recorded all 8 messages.
- Process 4 sent 3 messages to process 3: process 3 has recorded all 3 messages.

All messages sent by all processes have either been received or are in the channel, so the snapshot is consistent.
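The same check can be mechanized for the four-process example. The dictionary below is a transcription of the recorded states (its layout and the name `inconsistent_channels` are illustrative). Because channels are FIFO, a channel is fully accounted for exactly when the messages the receiver recorded, followed by the messages stored as in transit, reproduce the sender's sequence.

```python
# Recorded states from the example: (sender, receiver) -> (sent, received, stored)
EXAMPLE = {
    (1, 2): ([1, 2, 3, 4, 5, 6], [1, 2, 3, 4], [5, 6]),
    (1, 3): ([1, 2, 3, 4, 5, 6], [1, 2, 3], [4, 5, 6]),
    (2, 3): ([1, 2, 3, 4], [1, 2, 3], [4]),
    (2, 4): ([1, 2, 3, 4], [1, 2], [3, 4]),
    (3, 2): ([1, 2, 3, 4, 5, 6, 7, 8], [1, 2, 3, 4, 5, 6, 7, 8], []),
    (4, 3): ([1, 2, 3], [1, 2, 3], []),
}

def inconsistent_channels(channels):
    """Return the channels whose sent messages are not exactly received + stored."""
    return [ch for ch, (sent, received, stored) in channels.items()
            if received + stored != sent]

print(inconsistent_channels(EXAMPLE))  # [] means the snapshot is consistent
```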

Distributed Mutual Exclusion Concepts

- Whenever two or more processes compete for the use of system resources, there is a need for a mechanism to enforce mutual exclusion.
- Any facility that is to provide support for mutual exclusion should meet the following criteria:
  - Mutual exclusion must be enforced: only one process at a time is allowed in its critical section.
  - A process that halts in its noncritical section must do so without interfering with other processes.
  - It must not be possible for a process requiring access to a critical section to be delayed indefinitely: no deadlock or starvation.
  - When no process is in a critical section, any process that requests entry to its critical section must be permitted to enter without delay.
  - No assumptions are made about relative process speeds or the number of processors.
  - A process remains inside its critical section for a finite time only.

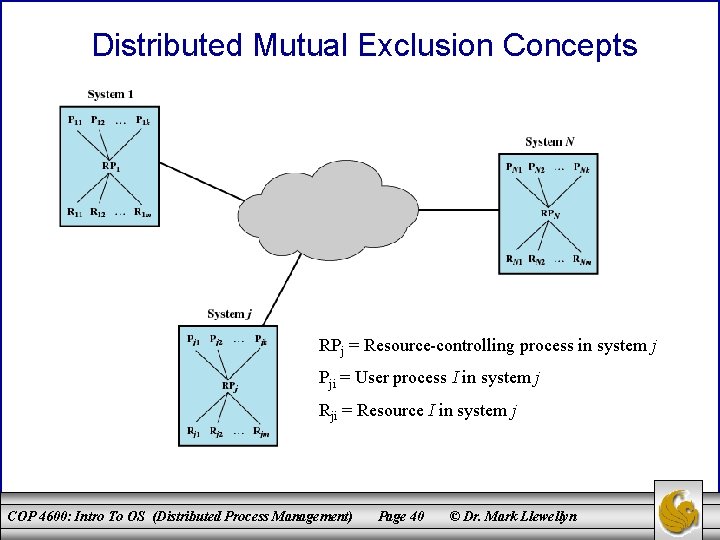

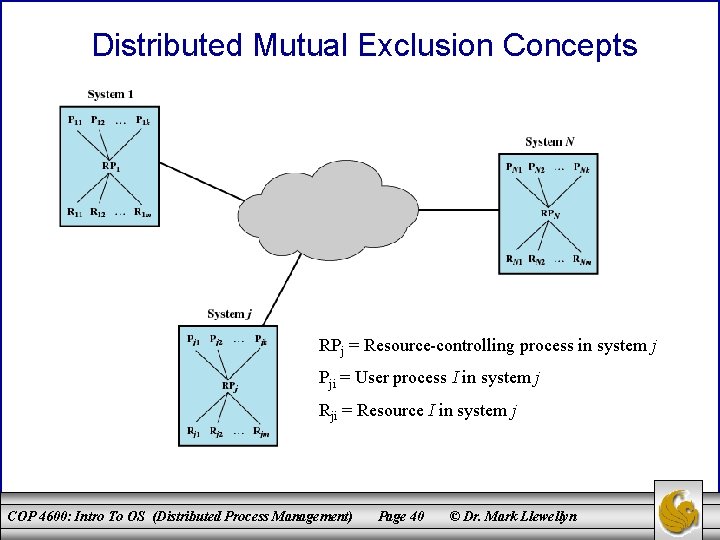

Distributed Mutual Exclusion Concepts

- RPj = resource-controlling process in system j
- Pji = user process i in system j
- Rji = resource i in system j

Centralized Algorithm for Mutual Exclusion

- One node is designated as the control node.
- This node controls access to all shared objects.
- Two key properties of the centralized algorithm are:
  - Only the control node makes resource-allocation decisions.
  - All necessary information is concentrated in the control node, including the identity and location of all resources and the allocation status of each resource.
- The centralized approach is straightforward, and it is easy to see how mutual exclusion is enforced: the control node will not grant a request for a resource until that resource is released by the process currently holding it.
- The centralized approach has severe drawbacks: (1) if the control node fails, mutual exclusion breaks down; (2) every allocation and deallocation requires an exchange of messages with the control node, which becomes a bottleneck.
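A minimal sketch of the control node's bookkeeping, assuming request and release messages can be modeled as method calls on a single hypothetical `ControlNode` object and that waiting requests are served first-come, first-served (the slide does not specify a queueing discipline). Both drawbacks show up in the structure: every request and release passes through this one object, and if it is lost, so is all of the allocation state.

```python
from collections import deque

class ControlNode:
    """Hypothetical central coordinator that grants each resource to one process.

    Each method call stands in for a request/release message from a user
    process; the return value stands in for the control node's reply.
    """
    def __init__(self):
        self.holder = {}   # resource -> process currently holding it
        self.waiting = {}  # resource -> FIFO queue of waiting processes

    def request(self, resource, pid):
        if resource not in self.holder:
            self.holder[resource] = pid
            return "granted"
        self.waiting.setdefault(resource, deque()).append(pid)
        return "queued"

    def release(self, resource, pid):
        if self.holder.get(resource) != pid:
            raise ValueError(f"{pid} does not hold {resource}")
        queue = self.waiting.get(resource)
        if queue:
            self.holder[resource] = queue.popleft()  # grant to next in line
            return self.holder[resource]
        del self.holder[resource]
        return None

ctl = ControlNode()
print(ctl.request("R1", "P11"))  # granted
print(ctl.request("R1", "P21"))  # queued
print(ctl.release("R1", "P11"))  # P21 now holds R1
```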

Distributed Algorithm

- All nodes have an equal amount of information, on average.
- Each node has only a partial picture of the total system and must make decisions based on this information.
- All nodes bear equal responsibility for the final decision.
- All nodes expend equal effort, on average, in effecting a final decision.
- Failure of a node, in general, does not result in a total system collapse.
- There exists no system-wide common clock with which to regulate the time of events.

Ordering of Events

- Events must be ordered to ensure mutual exclusion and avoid deadlock.
- Clocks are not synchronized.
- Communication delays exist.
- We need a consistent way to say that one event occurs before another event.
- Messages are sent when a process wants to enter its critical section and when it leaves its critical section.
- Time-stamping:
  - Orders events on a distributed system.
  - The system clock is not used.

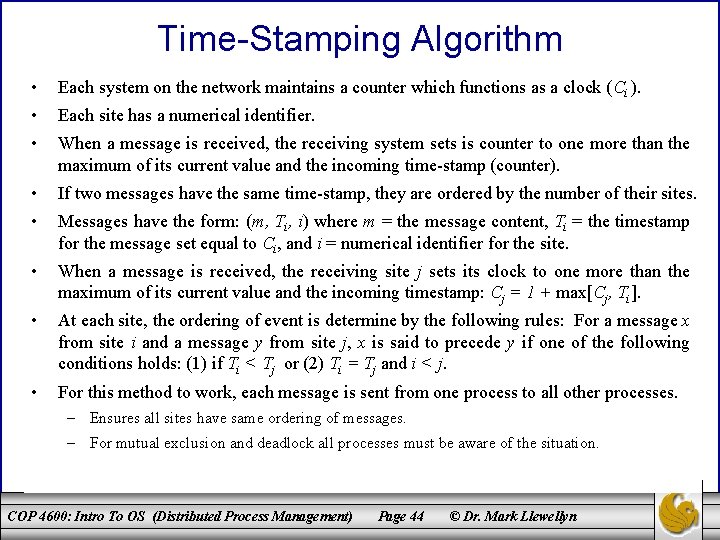

Time-Stamping Algorithm

- Each system on the network maintains a counter Ci, which functions as a clock.
- Each site has a numerical identifier.
- Each time a site transmits a message, it first increments its counter by 1.
- Messages have the form (m, Ti, i), where m = the message content, Ti = the timestamp for the message, set equal to Ci, and i = the numerical identifier of the site.
- When a message is received, the receiving site j sets its clock to one more than the maximum of its current value and the incoming timestamp: Cj = 1 + max[Cj, Ti].
- At each site, the ordering of events is determined by the following rules. For a message x from site i and a message y from site j, x is said to precede y if one of the following conditions holds: (1) Ti < Tj, or (2) Ti = Tj and i < j. In other words, if two messages have the same timestamp, they are ordered by the numbers of their sites.
- For this method to work, each message is sent from one process to all other processes.
  - This ensures that all sites have the same ordering of messages.
  - For mutual exclusion and deadlock, all processes must be aware of the situation.
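The counter rules fit in a few lines. In this sketch the `Site` class and its `log` of known messages are hypothetical names; the increment-before-send convention matches the worked example that follows. The usage replays that example's scenario, in which b and j are sent concurrently, so each is stamped before either site has seen the other's message.

```python
class Site:
    """A site whose counter Ci acts as a logical clock."""
    def __init__(self, site_id):
        self.id = site_id
        self.clock = 0
        self.log = []  # every message this site has sent or received

    def send(self, content):
        self.clock += 1                       # increment before transmitting
        msg = (content, self.clock, self.id)  # (m, Ti, i)
        self.log.append(msg)
        return msg

    def receive(self, msg):
        _, t_i, _ = msg
        self.clock = 1 + max(self.clock, t_i)  # Cj = 1 + max[Cj, Ti]
        self.log.append(msg)

    def ordering(self):
        # x precedes y if Tx < Ty, or Tx == Ty and x's site number is smaller.
        return [m for m, _, _ in sorted(self.log, key=lambda msg: (msg[1], msg[2]))]

p1, p2, p3 = Site(1), Site(2), Site(3)
sites = [p1, p2, p3]
a = p1.send("a")                    # timestamp 1
p2.receive(a); p3.receive(a)        # both clocks become 1 + max[0, 1] = 2
x = p2.send("x")                    # P2's clock goes to 3
p1.receive(x); p3.receive(x)        # both clocks become 4
b = p1.send("b"); j = p3.send("j")  # concurrent sends, both stamped 5
p2.receive(b); p2.receive(j); p3.receive(b); p1.receive(j)
print([s.ordering() for s in sites])
```

Every site prints the same order, with the tie between b and j broken in favor of the lower site number.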

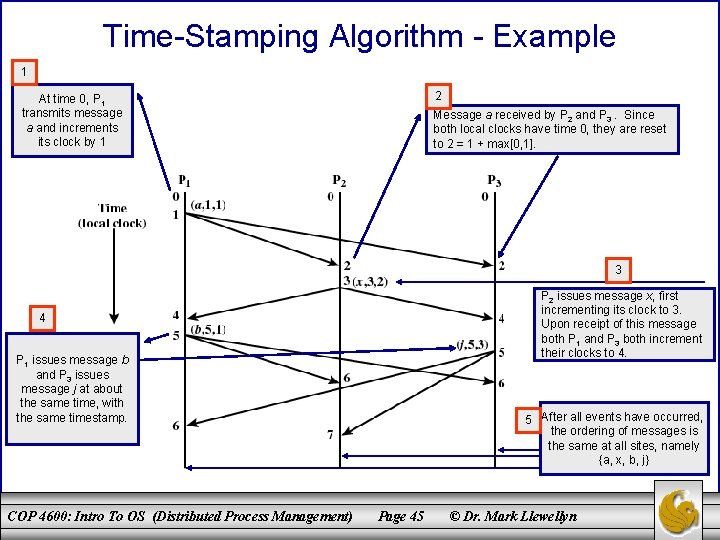

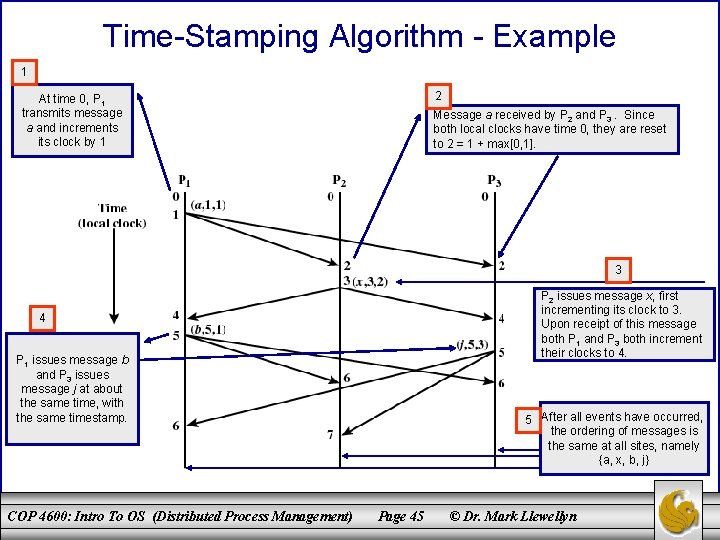

Time-Stamping Algorithm - Example

1. At time 0, P1 increments its clock by 1 and transmits message a (timestamp 1).
2. Message a is received by P2 and P3. Since both local clocks have time 0, they are reset to 2 = 1 + max[0, 1].
3. P2 issues message x, first incrementing its clock to 3. Upon receipt of this message, P1 and P3 both set their clocks to 4.
4. P1 issues message b and P3 issues message j at about the same time, with the same timestamp.
5. After all events have occurred, the ordering of messages is the same at all sites, namely {a, x, b, j}.

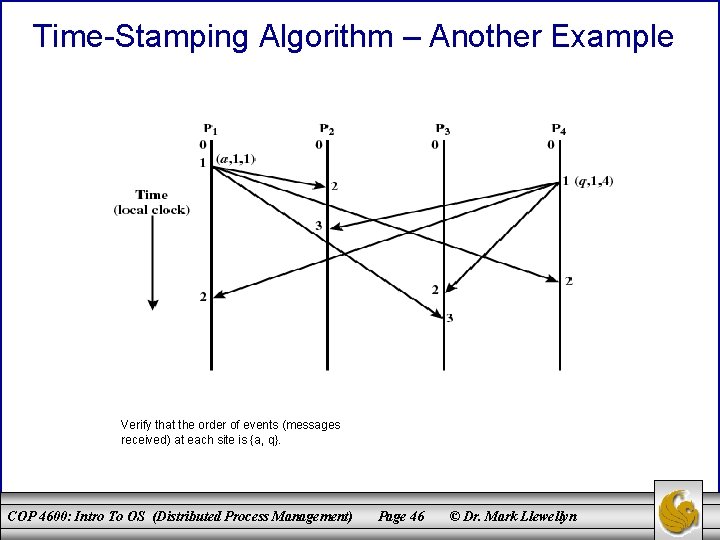

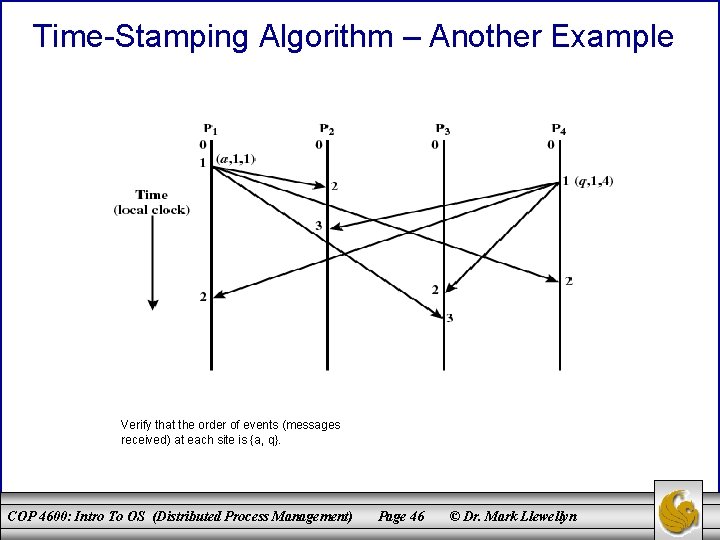

Time-Stamping Algorithm – Another Example

Verify that the order of events (messages received) at each site is {a, q}.

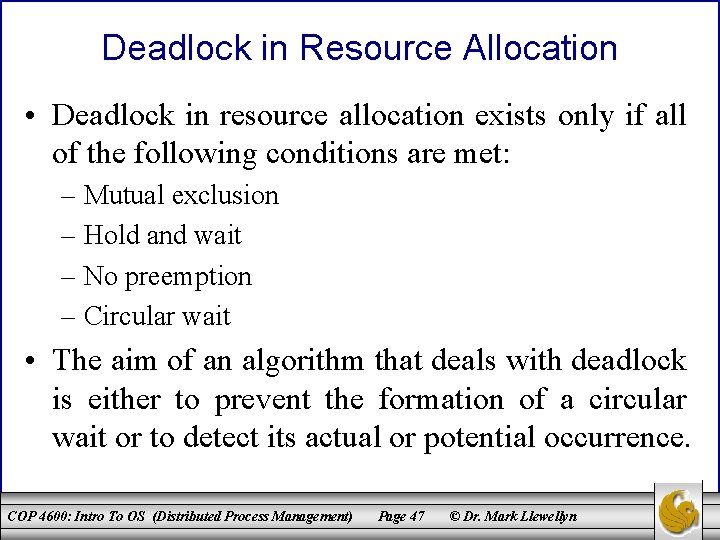

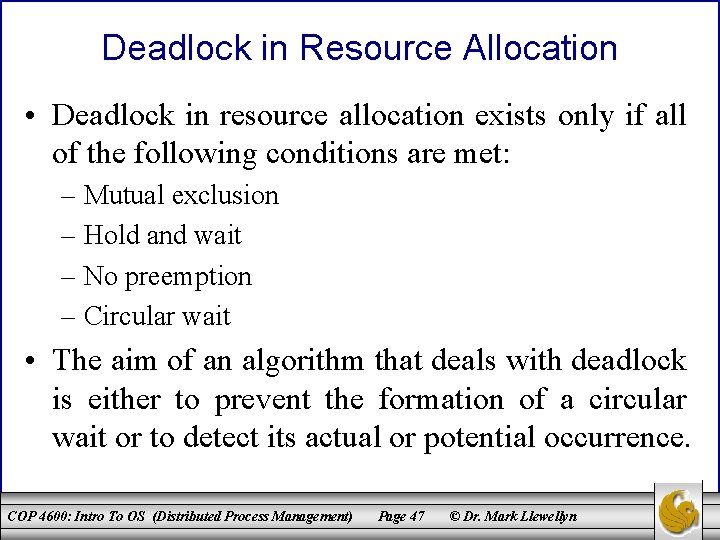

Deadlock in Resource Allocation

- Deadlock in resource allocation exists only if all of the following conditions are met:
  - Mutual exclusion
  - Hold and wait
  - No preemption
  - Circular wait
- The aim of an algorithm that deals with deadlock is either to prevent the formation of a circular wait or to detect its actual or potential occurrence.

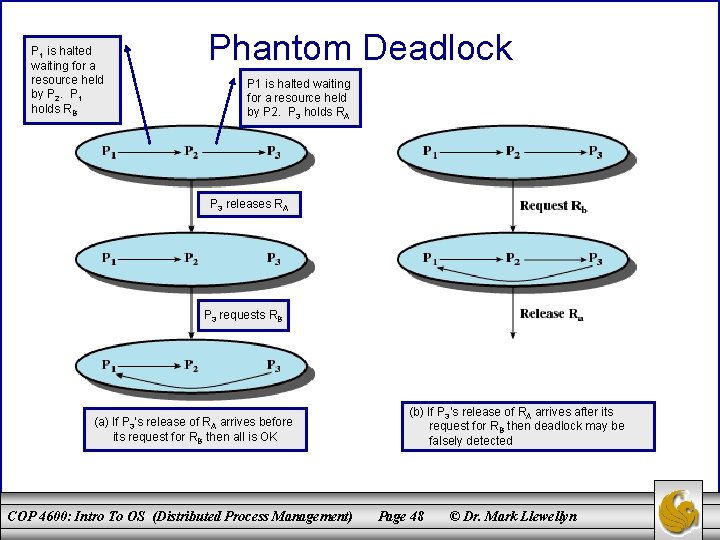

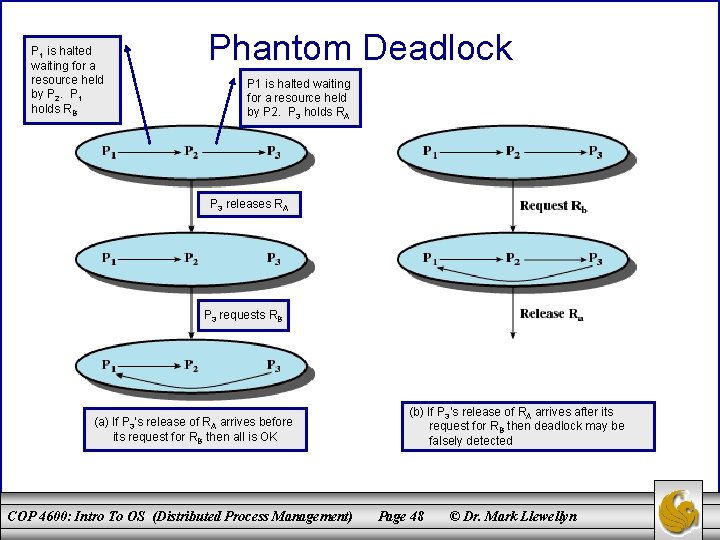

Phantom Deadlock

[Figure: P1 holds RB and is halted waiting for a resource held by P2; P3 holds RA, then releases RA and requests RB]

- (a) If P3’s release of RA arrives before its request for RB, then all is OK.
- (b) If P3’s release of RA arrives after its request for RB, then deadlock may be falsely detected.

Deadlock Prevention

- The circular-wait condition can be prevented by defining a linear ordering of resource types.
- The hold-and-wait condition can be prevented by requiring that a process request all of its required resources at one time, and blocking the process until all requests can be granted simultaneously.
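The linear-ordering rule can be illustrated within a single machine, with threads standing in for processes and in-process locks standing in for resources that would, in a real distributed system, live on different nodes. The resource names and `RESOURCE_RANK` table are hypothetical. Because every request set is acquired in increasing rank, no thread can hold a higher-ranked resource while waiting for a lower-ranked one, so a circular wait cannot form.

```python
import threading
from contextlib import ExitStack

# Hypothetical global ranking of resource types, agreed on by every node.
RESOURCE_RANK = {"tape": 1, "disk": 2, "printer": 3}
LOCKS = {name: threading.Lock() for name in RESOURCE_RANK}

def with_resources(names, work):
    """Acquire every named resource in increasing rank order, run work(), release."""
    with ExitStack() as stack:
        for name in sorted(names, key=RESOURCE_RANK.__getitem__):
            stack.enter_context(LOCKS[name])
        return work()

# Two workers ask for the same pair in opposite orders; both still finish,
# because with_resources always takes "tape" before "printer".
done = []
def worker(names):
    for _ in range(1000):
        with_resources(names, lambda: None)
    done.append(tuple(names))

threads = [threading.Thread(target=worker, args=(["printer", "tape"],)),
           threading.Thread(target=worker, args=(["tape", "printer"],))]
for t in threads:
    t.start()
for t in threads:
    t.join(timeout=10)
print(len(done))
```

Without the `sorted` call, the two workers could each grab their first lock and wait forever for the other's.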

Deadlock Avoidance

- Distributed deadlock avoidance is impractical:
  - Every node must keep track of the global state of the system.
  - The process of checking for a safe global state must be mutually exclusive.
  - Checking for safe states involves considerable processing overhead for a distributed system with a large number of processes and resources.

Distributed Deadlock Detection

- Each site knows only about its own resources.
  - A deadlock may involve distributed resources.
- Centralized control – one site is responsible for deadlock detection.
- Hierarchical control – sites are organized in a tree, and a deadlock is detected by the lowest node that is above all of the nodes involved in it.
- Distributed control – all processes cooperate in the deadlock detection function.
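Under centralized control, the designated site can assemble a global wait-for graph from the edges each site reports and search it for a cycle. The sketch assumes that reporting format (a hypothetical dict from a waiting process to the processes it waits on) and uses a depth-first search; it illustrates the idea and is not a specific published detection algorithm. As the phantom-deadlock slide shows, stale or reordered reports can make such a search find a cycle that no longer exists.

```python
def merge_reports(reports):
    """Union per-site wait-for edges into one global graph at the control site."""
    graph = {}
    for report in reports:
        for waiter, holders in report.items():
            graph.setdefault(waiter, set()).update(holders)
    return graph

def find_cycle(graph):
    """Return one cycle of processes in the wait-for graph, or None."""
    IN_PROGRESS, DONE = 1, 2
    state, path = {}, []

    def visit(p):
        state[p] = IN_PROGRESS
        path.append(p)
        for q in sorted(graph.get(p, ())):
            if state.get(q) == IN_PROGRESS:  # back edge: q is on the current path
                return path[path.index(q):]
            if q not in state:
                cycle = visit(q)
                if cycle:
                    return cycle
        path.pop()
        state[p] = DONE
        return None

    for p in sorted(graph):
        if p not in state:
            cycle = visit(p)
            if cycle:
                return cycle
    return None

site1 = {"P1": {"P2"}}  # P1 waits for a resource held by P2
site2 = {"P2": {"P3"}}
site3 = {"P3": {"P1"}}
print(find_cycle(merge_reports([site1, site2])))         # None
print(find_cycle(merge_reports([site1, site2, site3])))  # ['P1', 'P2', 'P3']
```

No single site sees the deadlock here: each report contains one edge, and the cycle appears only once the control site combines all three.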

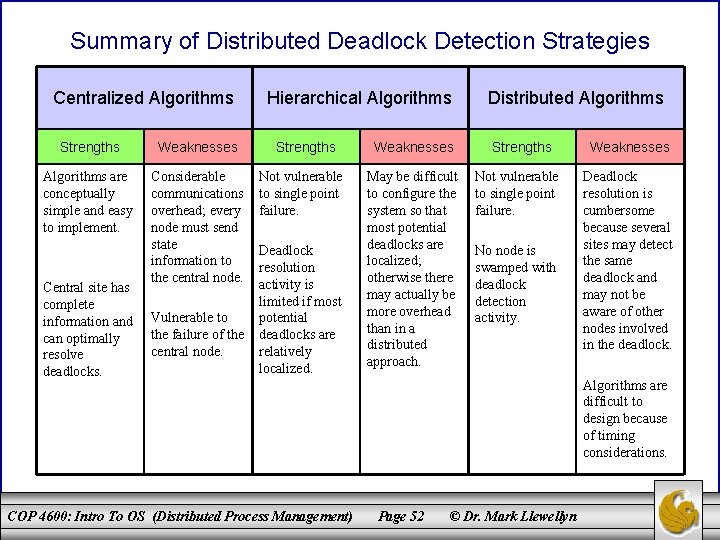

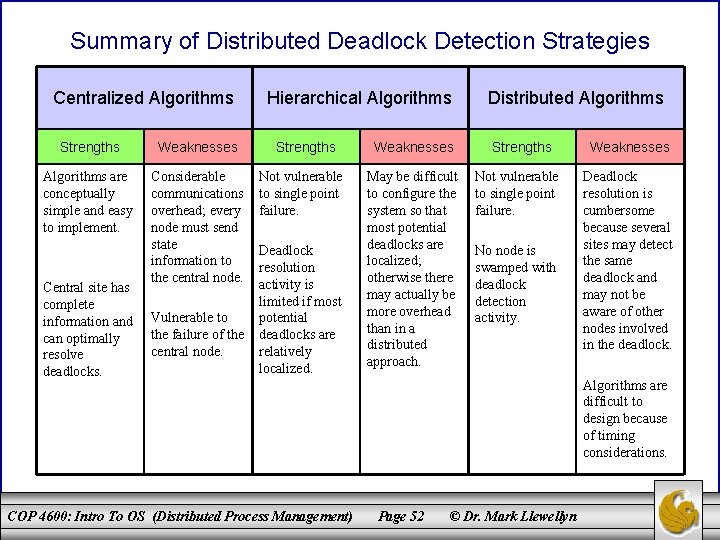

Summary of Distributed Deadlock Detection Strategies

| Approach | Strengths | Weaknesses |
|---|---|---|
| Centralized algorithms | Algorithms are conceptually simple and easy to implement. Central site has complete information and can optimally resolve deadlocks. | Considerable communications overhead; every node must send state information to the central node. Vulnerable to the failure of the central node. |
| Hierarchical algorithms | Not vulnerable to single point failure. Deadlock resolution activity is limited if most potential deadlocks are relatively localized. | May be difficult to configure the system so that most potential deadlocks are localized; otherwise there may actually be more overhead than in a distributed approach. |
| Distributed algorithms | Not vulnerable to single point failure. No node is swamped with deadlock detection activity. | Deadlock resolution is cumbersome because several sites may detect the same deadlock and may not be aware of other nodes involved in the deadlock. Algorithms are difficult to design because of timing considerations. |