Conducting High Quality Surveys Frameworks and Strategies for

- Slides: 45

Conducting High Quality Surveys: Frameworks and Strategies for Reducing Error and Improving Quality

Lija O. Greenseid, Ph.D., Senior Evaluator, Professional Data Analysts
Julie Rainey, Vice President, Professional Data Analysts

Demonstration session for the American Evaluation Association Conference, November 5, 2011

Today

1. Overview of the Survey Quality Framework and Total Survey Error
2. Discuss four strategies for increasing the quality of surveys
3. Time to reflect on survey constructs, critique a survey, and hear case-study examples

Why is survey quality important?

- Poor quality surveys can lead to poor predictions or decisions (e.g., the 1936 presidential election, Landon vs. FDR)
- Poor quality surveys waste time and resources

Survey Quality Framework

- Survey quality is a complex, multidimensional concept (Juran & Gryna, 1980)
- Producers of survey data (e.g., evaluators and survey researchers) and users of survey data (e.g., clients, stakeholders, the public) have different perspectives on survey quality

Evaluator Values in Survey Quality

Survey producers (evaluators and researchers) value data quality attributes:

- Large sample sizes
- High response rates
- Internally consistent responses
- Good coverage of target populations

Client Values in Survey Quality

Survey users (evaluation clients) take data accuracy for granted. Clients prioritize survey implementation factors:

- Timeliness
- Accessibility and interpretability
- Usability of data
- Cost

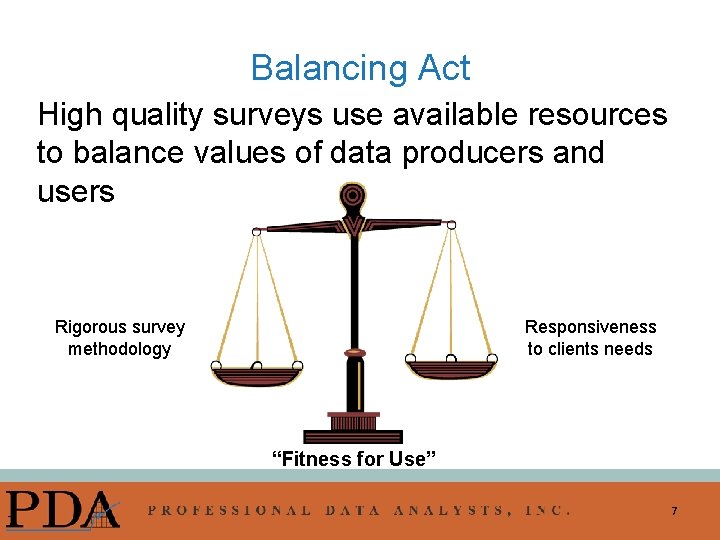

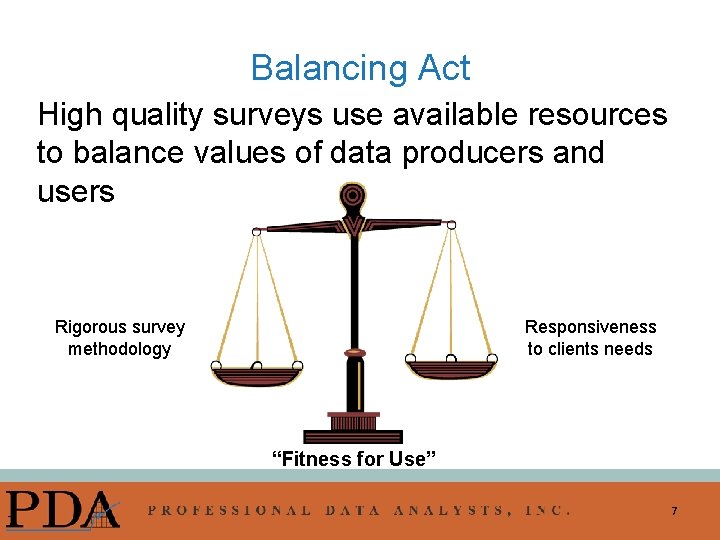

Balancing Act

High quality surveys use available resources to balance the values of data producers and users:

- Rigorous survey methodology
- Responsiveness to clients’ needs

“Fitness for Use”

A Utilization-focused Approach to Methods Decisions

“The primary focus in making evaluation methods decisions should be on getting the best possible data to adequately answer primary users’ evaluation questions given available time and resources.” (Patton, 1997, p. 247)

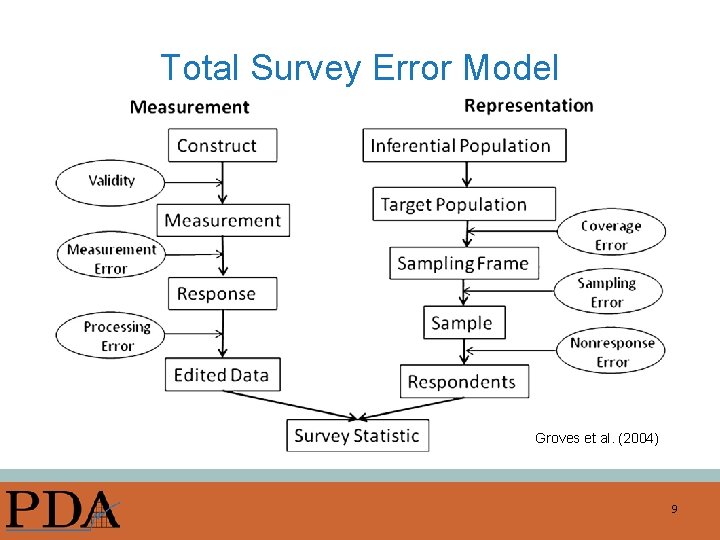

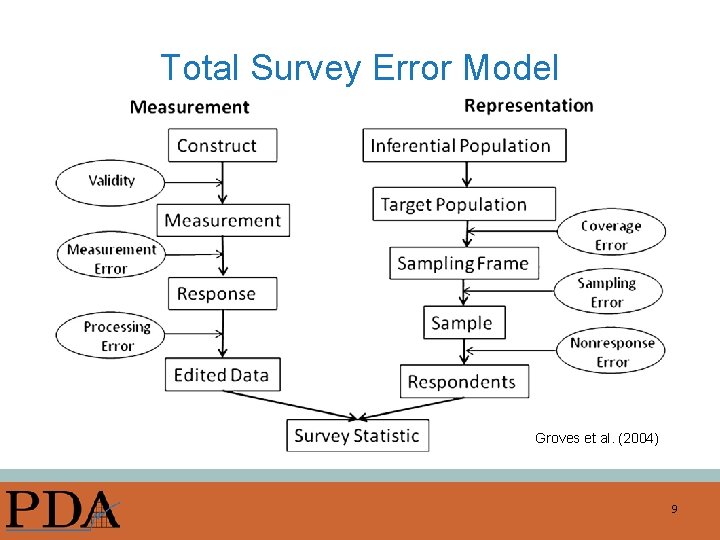

Total Survey Error Model (Groves et al., 2004)

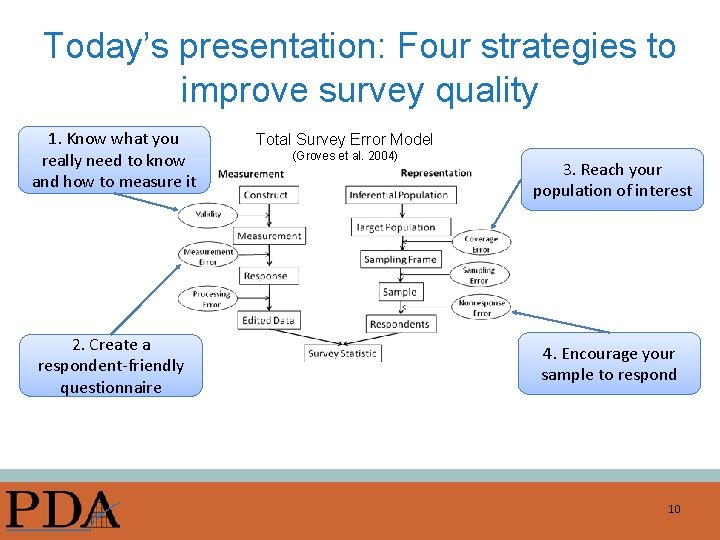

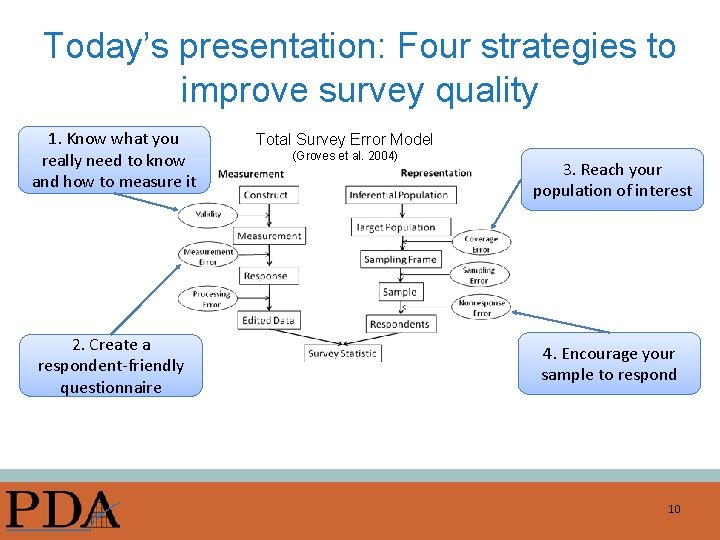

Today’s presentation: Four strategies to improve survey quality

1. Know what you really need to know and how to measure it
2. Create a respondent-friendly questionnaire
3. Reach your population of interest
4. Encourage your sample to respond

Figure: Total Survey Error Model (Groves et al., 2004)

Strategy 1: Know what you really need to know and how to measure it

Construct validity = agreement between a theoretical concept and a specific measurement (“did it measure what it was supposed to measure?”)

- Intelligence vs. IQ test
- Program impact vs. quit rate for a stop-smoking program

Survey question development process

1. Define your constructs of interest
2. Decide how to measure your constructs
   - Review existing validated instruments
   - Modify existing items (with permission)
   - Create your own instrument
3. Craft good questions (see Dillman’s work)
4. Conduct cognitive interviews (see Tourangeau’s work)

Example: Program Satisfaction

Overall, how satisfied were you with the service you received from the stop-smoking program?

- Very satisfied
- Mostly satisfied
- Somewhat satisfied
- Not at all satisfied

Exercise: Think, Pair, Share

Let’s think more deeply about “program satisfaction.”

1. Think: What do you really need to know about “program satisfaction”? (Dig deep)
2. Write: Write one good survey question and appropriate response options related to “program satisfaction.”
3. Pair & Share: Find a partner. Exchange your questions, read, and respond as if you were taking the survey. Then discuss:
   1. What do you believe the question is asking?
   2. What do the specific words mean to you?
   3. What information do you need to be able to recall to answer the question?
   4. Do the response categories match your own internally generated answer?

Strategy 2: Create a respondent-friendly questionnaire

- Understand how participants interact with mail and online survey questionnaires
- Consider the impact of technology on survey experience and responses

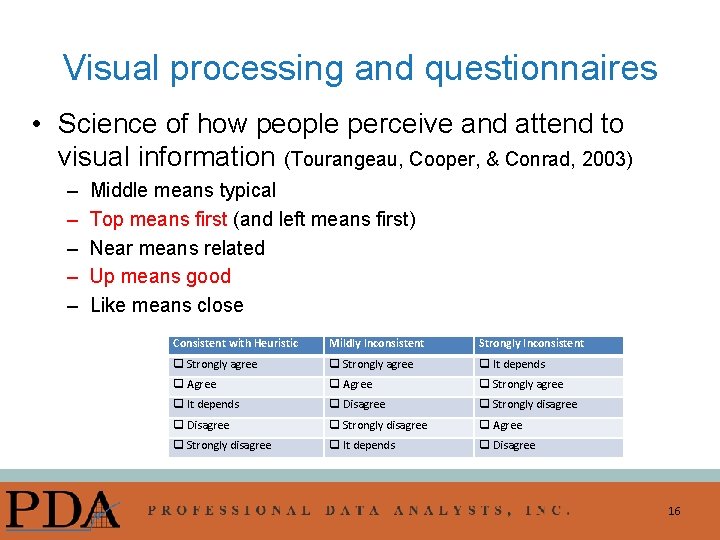

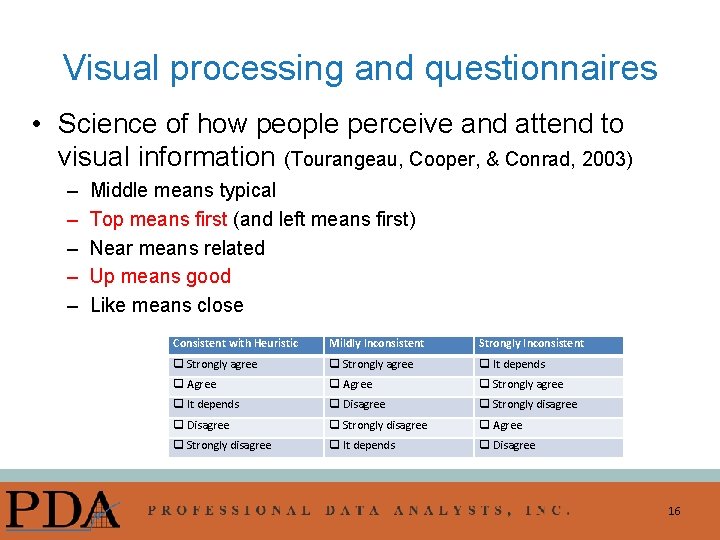

Visual processing and questionnaires

- Science of how people perceive and attend to visual information (Tourangeau, Couper, & Conrad, 2003)
  - Middle means typical
  - Top means first (and left means first)
  - Near means related
  - Up means good
  - Like means close

[Figure: three example orderings of the response options “Strongly agree,” “Agree,” “It depends,” “Disagree,” and “Strongly disagree,” labeled “Consistent with Heuristic,” “Mildly Inconsistent,” and “Strongly Inconsistent”]

Solutions

- Help respondents organize information on the page quickly and accurately
  - Navigational clues
  - Use of graphic design principles: color, font, size, spacing
- Pilot test, including on a variety of platforms for web surveys

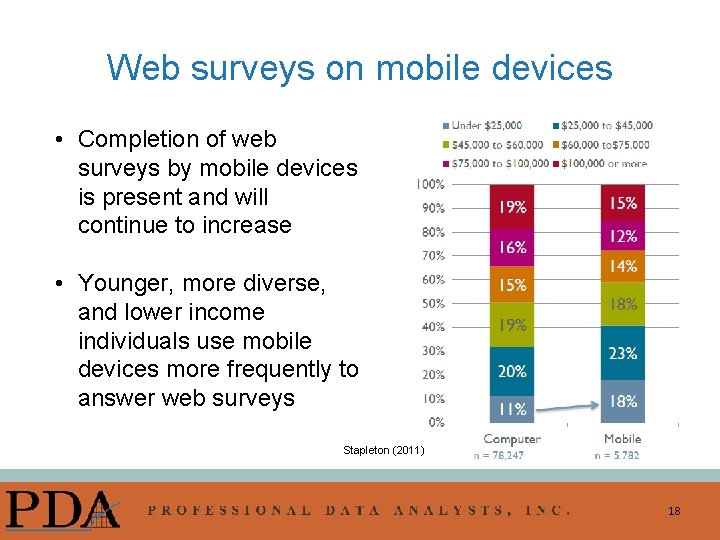

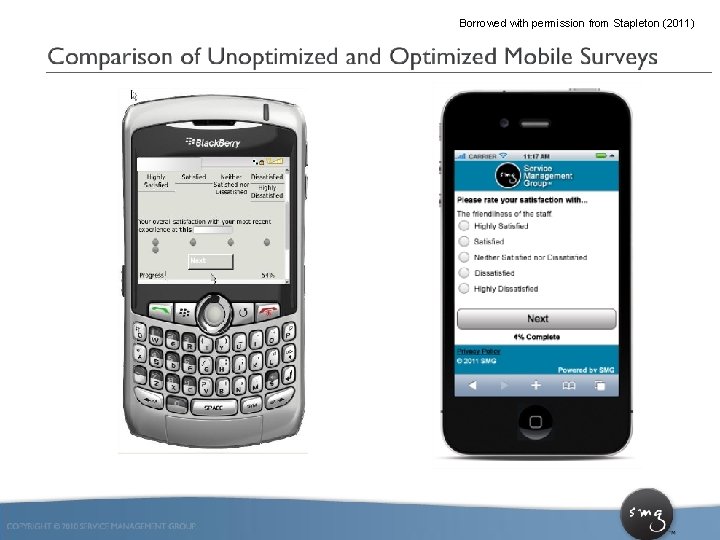

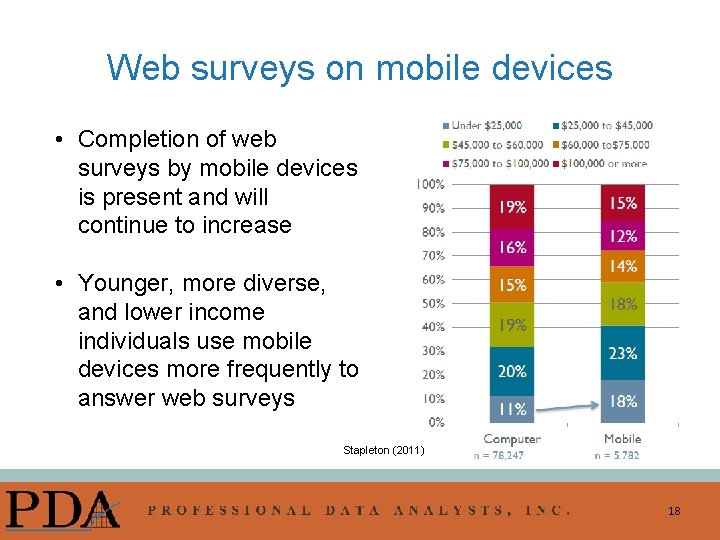

Web surveys on mobile devices

- Respondents already complete web surveys on mobile devices, and this will continue to increase
- Younger, more diverse, and lower income individuals use mobile devices more frequently to answer web surveys

(Stapleton, 2011)

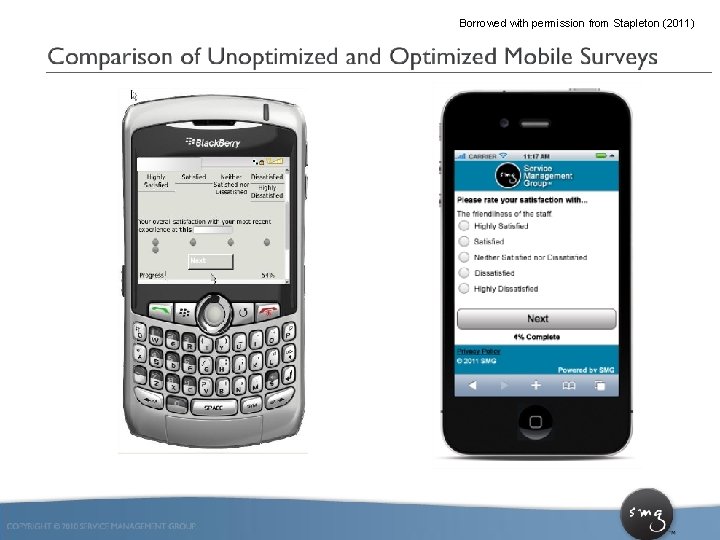

Borrowed with permission from Stapleton (2011)

Internet surveys: Promise and peril

- Web surveys allow for inexpensive, quick access to survey data
- Tools like SurveyMonkey and Zoomerang allow anyone to conduct a professional-looking web survey
- Low barriers to entry can lead to “garbage in, garbage out”

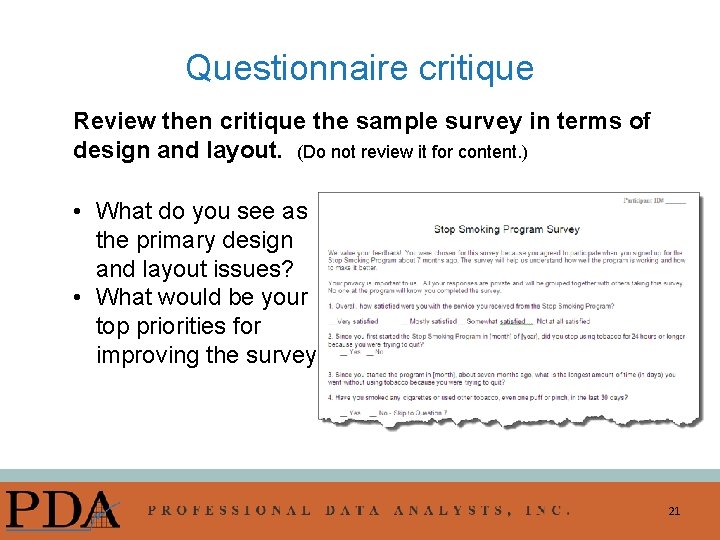

Questionnaire critique

Review then critique the sample survey in terms of design and layout. (Do not review it for content.)

- What do you see as the primary design and layout issues?
- What would be your top priorities for improving the survey?

Strategy 3: Reach your population of interest

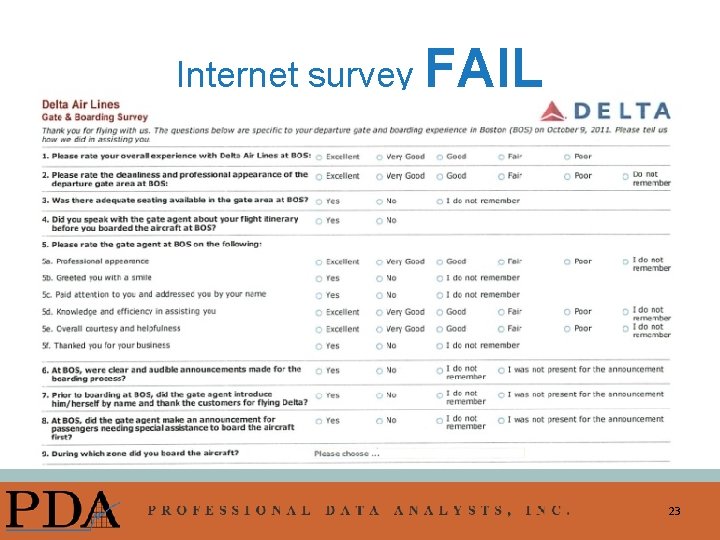

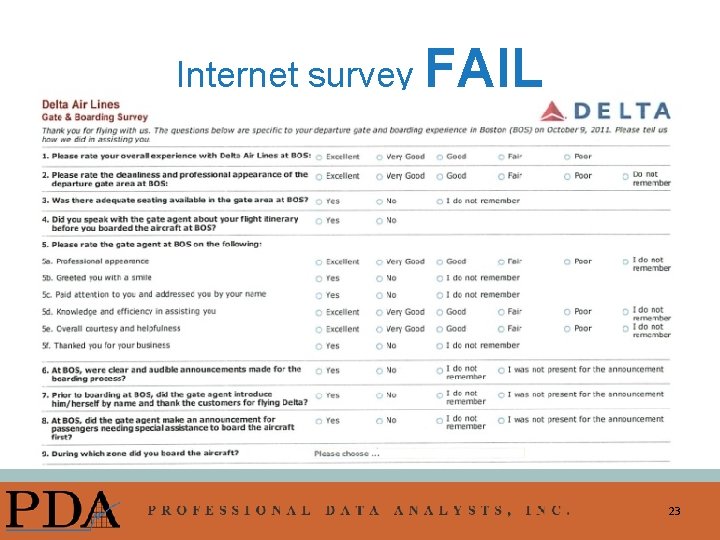

Internet survey FAIL

Program evaluations & sampling

- Often surveying a known population
  - Program participants
  - Organizational staff
  - Parents of school children, etc.
- Sometimes necessary to sample from a population of interest (use a “sampling frame”)
  - Community members
  - Individuals with certain characteristics
  - Program stakeholders

Sample frame selection

Possible population-based sampling frames:

- Landline telephone numbers (RDD)
- Cell phone numbers
- Postal addresses (postal delivery service file)

Sample frame selection depends on time, resources, and population of interest.

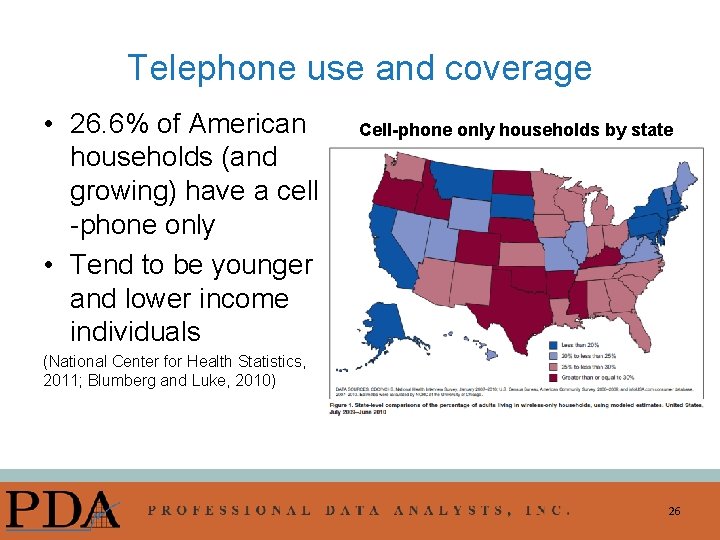

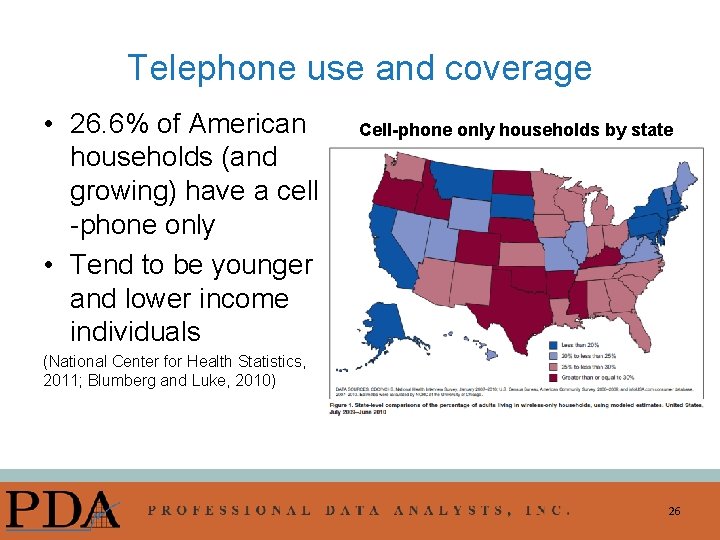

Telephone use and coverage

- 26.6% of American households (and growing) are cell phone only
- Cell-phone-only households tend to include younger and lower income individuals

Figure: Cell-phone-only households by state (National Center for Health Statistics, 2011; Blumberg and Luke, 2010)

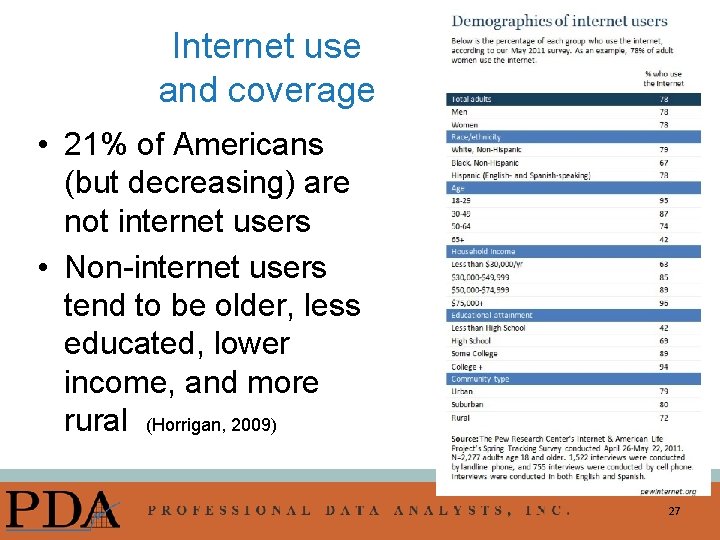

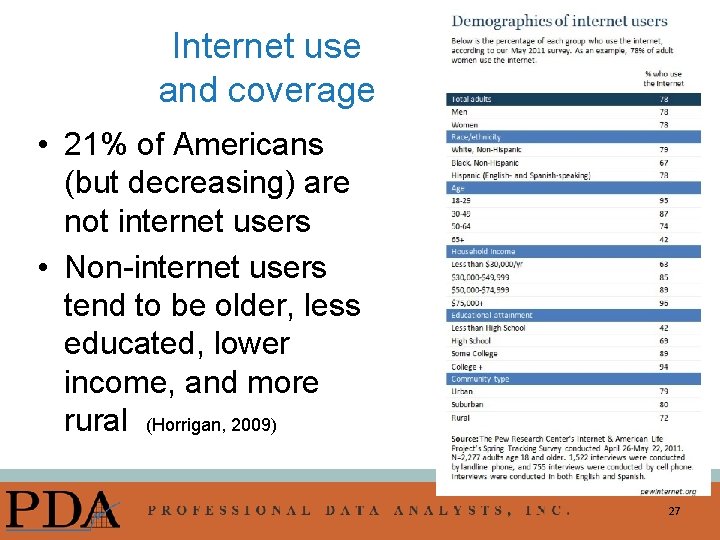

Internet use and coverage

- 21% of Americans (but decreasing) are not internet users
- Non-internet users tend to be older, less educated, lower income, and more rural (Horrigan, 2009)
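The effect of undercoverage on an estimate can be sketched with the standard coverage-bias identity: the bias of a mean computed only from the covered population equals the share of the population not covered multiplied by the difference between the covered and non-covered means. The sketch below is illustrative only. The function names and the two group means are hypothetical; only the 21% non-coverage figure comes from the slide.

```python
def coverage_bias(share_not_covered, mean_covered, mean_not_covered):
    """Bias of the covered-population mean relative to the full-population mean.

    bias = share_not_covered * (mean_covered - mean_not_covered)
    """
    if not 0.0 <= share_not_covered <= 1.0:
        raise ValueError("share_not_covered must be between 0 and 1")
    return share_not_covered * (mean_covered - mean_not_covered)


def full_population_mean(share_not_covered, mean_covered, mean_not_covered):
    """Weighted mean over the covered and non-covered groups."""
    return ((1.0 - share_not_covered) * mean_covered
            + share_not_covered * mean_not_covered)


if __name__ == "__main__":
    # 21% non-internet users is from the slide; 0.70 and 0.50 are made-up
    # proportions (e.g., share satisfied) for internet users and non-users.
    bias = coverage_bias(0.21, mean_covered=0.70, mean_not_covered=0.50)
    print(f"Web-only estimate overstates the true value by {bias:.3f}")
```

The bias shrinks toward zero either when few people are missed or when the people who are missed resemble those who are covered, which is why knowing who is excluded matters as much as knowing how many.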

Postal address coverage

- Best coverage; however, home addresses are not stable for all populations
- Lower coverage in high-density urban areas and in rural areas
- Mail survey administration takes longer than phone or web-based administration

Survey frames: New options

- Landline + cell phone samples
- Address-based sampling
- Opt-in online panels
- Online probability-based panels

How does this relate to your work?

Turn to a partner. Discuss:

- What populations are you trying to reach?
- What options are best for reaching these populations? (e.g., landline phone surveys, cell phone surveys, online panels, mailed surveys)
- How will your choices about how to reach them bias who you reach?

Strategy 4: Encourage your sample to respond

Surveying viewed through social exchange theory (Dillman, Smyth, & Christian, 2009):

- Increase perceived rewards
- Reduce perceived costs
- Establish trust

Strategies to encourage response:

- Mixed-mode surveys
- Incentives

Mixed-mode surveys

- Mixed-mode surveys use more than one survey mode to collect data.
- Examples of survey modes:
  - telephone
  - mail
  - internet
  - face-to-face
  - interactive voice response
  - text messaging
- Different from “mixed methods” studies, in which both qualitative and quantitative methods are used within one study.

Mixed-mode surveys and error

Using more than one survey mode can improve the validity and reliability of survey data:

- Increase response rates (decrease non-response error)
- Improve survey coverage, especially among harder-to-reach populations (decrease coverage error)
- Shorten survey administration timeframes (protects against threats to external validity, provides timely data to clients)

Data Quality

- Measurement effects can be strong across modes
  - Social desirability can play a strong role in interviewer-administered modes (telephone and in-person)
  - Primacy/recency effects are an issue with visual modes (paper and web)
- Unimode construction (same wording and construction across modes) is a best practice (Dillman, Smyth, & Christian, 2009)

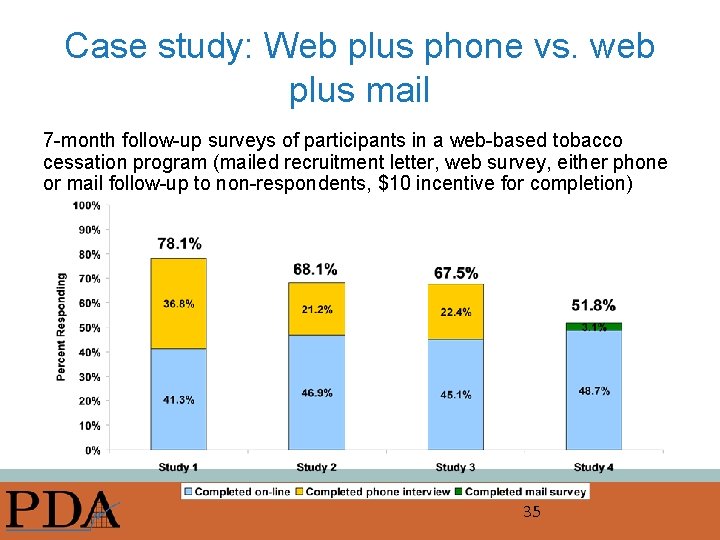

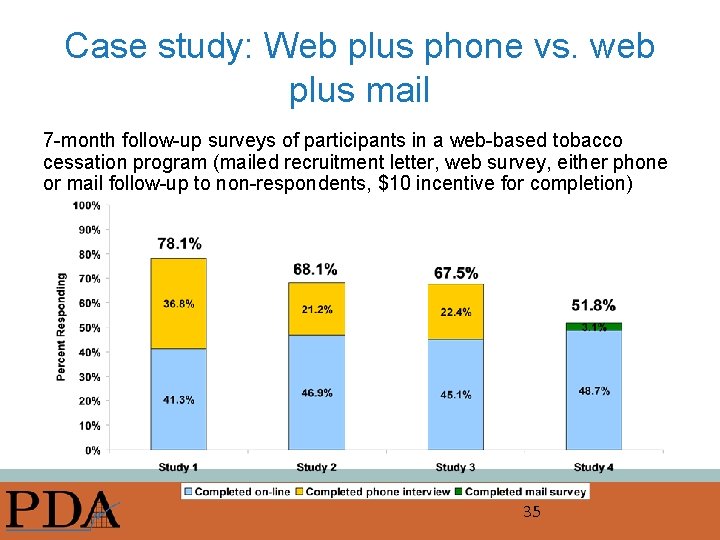

Case study: Web plus phone vs. web plus mail

7-month follow-up surveys of participants in a web-based tobacco cessation program (mailed recruitment letter, web survey, either phone or mail follow-up to non-respondents, $10 incentive for completion)

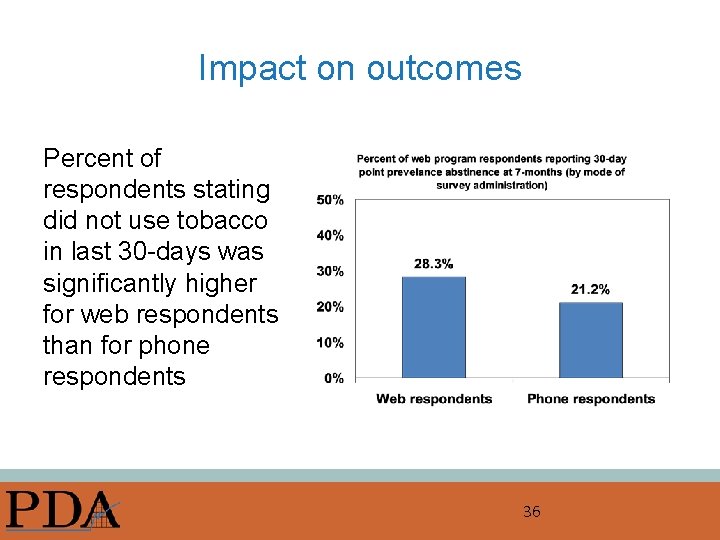

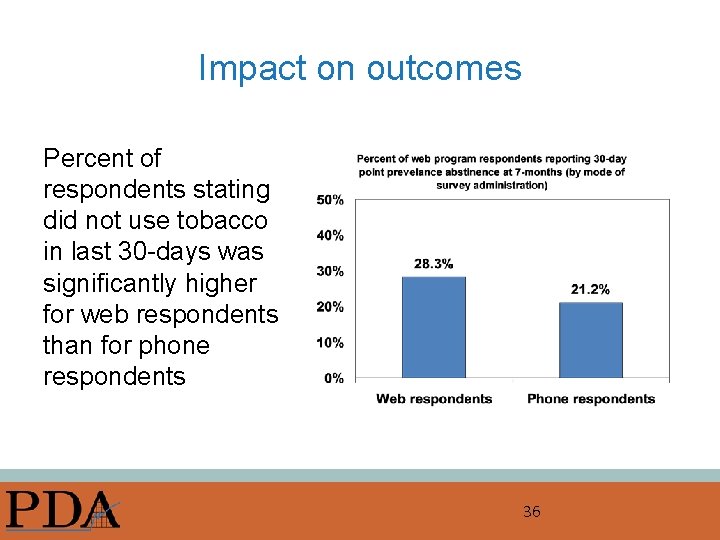

Impact on outcomes

The percentage of respondents stating they did not use tobacco in the last 30 days was significantly higher for web respondents than for phone respondents.
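A difference in a reported outcome between two survey modes can be checked with a two-proportion z-test. The slide does not say which test the presenters used, so the sketch below is one common option rather than their method, and the counts in the usage example are hypothetical, since the slide reports no figures.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two_sided_p) for H0: both groups share the same proportion."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    if se == 0.0:
        raise ValueError("standard error is zero; proportions are all 0 or all 1")
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 60 of 200 web respondents and 40 of 200 phone
    # respondents report no tobacco use in the last 30 days.
    z, p = two_proportion_z_test(60, 200, 40, 200)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

A significant result here does not by itself separate a measurement effect (people answering differently by mode) from a selection effect (different people responding in each mode); both are plausible in a mixed-mode design.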

Incentives

- Small pre-paid cash incentives ($1-$5) work to boost response rates
- Incentives are also cost-effective
- Incentives bring in people who are less interested in or less inclined toward participating
- The effect of incentives on response bias is still being studied, but results appear promising

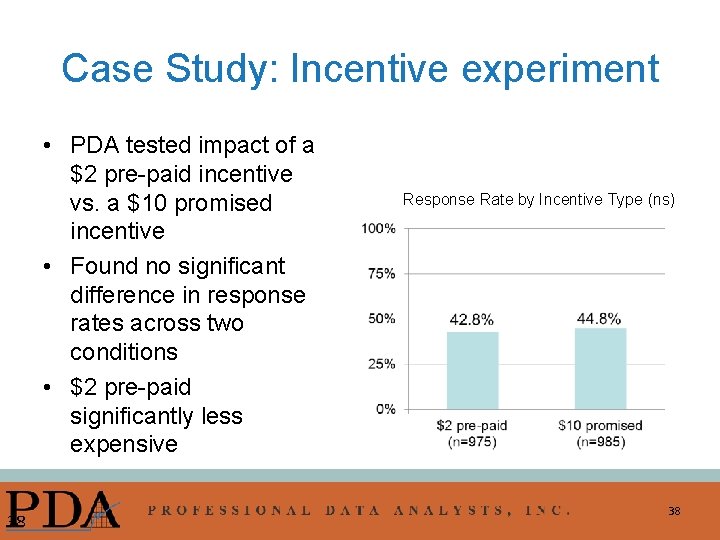

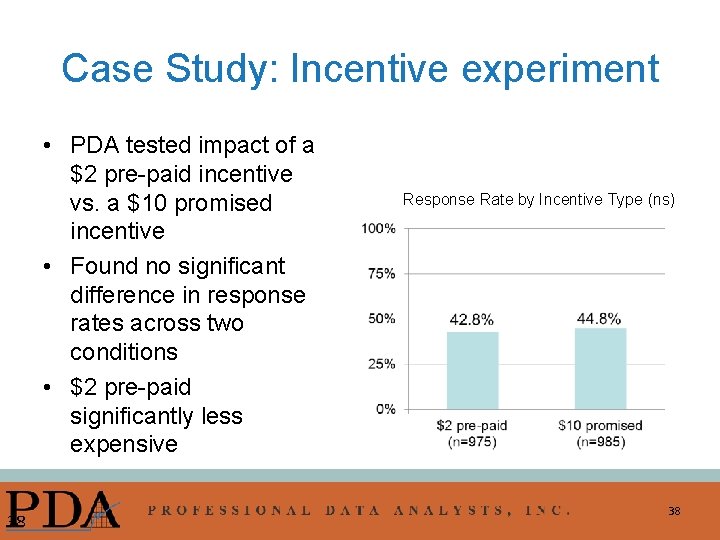

Case Study: Incentive experiment

- PDA tested the impact of a $2 pre-paid incentive vs. a $10 promised incentive
- Found no significant difference in response rates across the two conditions
- $2 pre-paid was significantly less expensive

Figure: Response Rate by Incentive Type (ns)
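The cost comparison can be sketched with simple arithmetic: a pre-paid incentive goes to everyone in the sample, while a promised incentive goes only to those who respond. The sketch below assumes equal response rates in both conditions (consistent with the non-significant difference reported) and ignores mailing and handling costs. The slide does not say how cost was calculated, and the sample size and response rate in the usage example are hypothetical.

```python
def prepaid_cost(sample_size, amount):
    """Every sampled person receives the incentive up front."""
    return sample_size * amount


def promised_cost(sample_size, response_rate, amount):
    """Only respondents receive the incentive after completing the survey."""
    return sample_size * response_rate * amount


def break_even_response_rate(prepaid_amount, promised_amount):
    """Response rate above which the pre-paid design costs less."""
    return prepaid_amount / promised_amount


if __name__ == "__main__":
    sample_size = 1000      # hypothetical
    response_rate = 0.50    # hypothetical, same in both conditions
    print("Pre-paid $2:  ", prepaid_cost(sample_size, 2))
    print("Promised $10: ", promised_cost(sample_size, response_rate, 10))
    print("Break-even response rate:", break_even_response_rate(2, 10))
```

Under these assumptions a $2 pre-paid incentive is cheaper than a $10 promised incentive whenever the response rate exceeds 20%.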

Final thoughts: Understand and respect your respondents

Respect the privilege of asking people to trust you with their honest answers

- Abuse of survey privileges hurts other legitimate needs to gather data
- Always try to minimize respondent burden and maximize respondents’ feelings of contributing to a useful study
- Survey “karma”: answer surveys if you want others to answer yours

General approach to improving quality

- Design survey studies to meet the client’s needs
- Consider the impact of survey choices on sources of error
- Know your population and tailor your survey
- The survey field is changing rapidly:
  - Keep up on the literature
  - Join the American Association for Public Opinion Research
  - Read Survey Practice (surveypractice.org)
  - Contribute to knowledge in the field

AAPOR: American Association for Public Opinion Research

www.aapor.org

Memberships (includes the Public Opinion Quarterly journal):

- Student memberships: first year free; renewals are $25 per year.
- Individual memberships: $55-$130 per year, depending on income.
- Employer paid: $150.

AAPOR T-shirt Slogan Contest Past Winners:

- “I lost my validity at AAPOR”
- “AAPOR: Freqs and Geeks”
- “Public Opinion Research: Fighting the war against error”
- “Trust us – we’re 95% confident”
- “If you don’t like the estimate, just weight”

References & Recommended Reading

American Association for Public Opinion Research. (2010, March). AAPOR Report on Online Panels. Available online from: http://www.aapor.org/AM/Template.cfm?Section=AAPOR_Committee_and_Task_Force_Reports&Template=/CM/ContentDisplay.cfm&ContentID=2223

American Association for Public Opinion Research. (2010, March). AAPOR Cell Phone Task Force Report. Available online from: http://www.aapor.org/Cell_Phone_Task_Force.htm

Biemer, P. P. (2010). Total survey error: Design, implementation, and evaluation. Public Opinion Quarterly, 74(5), pp. 817-848.

Blumberg, S. J. et al. (2011, April). Wireless substitution: State-level estimates from the National Health Interview Survey. National Center for Health Statistics. Available online from: http://www.cdc.gov/nchs/nhis.htm

Dillman, D. et al. (2009, March). Response rate and measurement differences in mixed mode surveys using mail, telephone, interactive voice response, and the internet. Social Science Research, 38(1), pp. 1-18.

Dillman, D., Smyth, J. D., & Christian, L. M. (2009). Internet, mail, and mixed-mode surveys: The tailored design method, 3rd edition. Hoboken, NJ: Wiley.

Fahimi, M. (2010). Address-Based Sampling (ABS): Merits, Design, and Implementation. Presentation to National Conference on Health Statistics. Available online from: www.cdc.gov/nchs/ppt/nchs2010/17_Fahimi.pdf

Groves, R. et al. (2004). Survey Methodology. New York: Wiley.

Horrigan, J. B. (2009, June). Home Broadband Adoption 2009. Pew Internet and American Life Project. Available from: http://www.pewinternet.org/Reports/2009/10-Home-Broadband-Adoption-2009.aspx

Juran, J. & Gryna, F. (1980). Quality planning and analysis, 2nd edition. New York: McGraw Hill.

Stapleton, C. (2011, May). The Smart(Phone) Way to Collect Data. Presentation to the American Association for Public Opinion Research, Phoenix, Arizona.

Tourangeau, R., Couper, M., & Conrad, F. (2003). Spacing, Position, and Order: Interpretive Heuristics for Visual Features of Survey Questions. University of Michigan: Institute for Social Research. Available from: http://www.isr.umich.edu/src/smp/Electronic%20Copies/119.pdf

Tourangeau, R., Rips, L. J., & Rasinski, K. (2000). The Psychology of Survey Response. New York: Cambridge University Press.

Weisberg, H. F., Krosnick, J., & Bowen, B. D. (1996). An introduction to survey research, polling, and data analysis, 3rd edition. Sage Press.

Yeager, D. S., Krosnick, J., Chang, L-C., Javitz, H., Levendusky, M., Simpser, A., & Wang, R. (2009). Comparing the accuracy of RDD telephone surveys and Internet surveys conducted with probability and non-probability samples. Available from: http://www.knowledgenetworks.com/insights/docs/Mode-04_2.pdf

Contact Information

Lija Greenseid, Ph.D., Senior Evaluator, lija@pdastats.com
Julie Rainey, Vice President, julie@pdastats.com

612-623-9110
www.PDAstats.com

What is survey experiment or observation?

What is survey experiment or observation? Living tree of nursing theories

Living tree of nursing theories Nursing informatics theories, models and frameworks

Nursing informatics theories, models and frameworks Food security concepts and frameworks

Food security concepts and frameworks Beneficence

Beneficence Strategic management frameworks

Strategic management frameworks 3 example of paragraph

3 example of paragraph Non traditional art

Non traditional art Parcc model content frameworks

Parcc model content frameworks Java e commerce frameworks

Java e commerce frameworks List of theoretical frameworks

List of theoretical frameworks Architecture frameworks

Architecture frameworks Enterprise agile frameworks

Enterprise agile frameworks Why i hate frameworks

Why i hate frameworks Social studies toolkit

Social studies toolkit Describe trust frameworks.

Describe trust frameworks. Actor frameworks

Actor frameworks Gary ives west yorkshire study

Gary ives west yorkshire study Software architecture frameworks

Software architecture frameworks Interpretive framework

Interpretive framework Regional construction frameworks

Regional construction frameworks Interpretive framework examples

Interpretive framework examples Php frameworks

Php frameworks Net frameworks 4

Net frameworks 4 Local development frameworks

Local development frameworks Knowledge frameworks

Knowledge frameworks Types of executional framework

Types of executional framework Surveys, experiments, and observational studies worksheet

Surveys, experiments, and observational studies worksheet Lesson 3.5 what is wrong with these surveys

Lesson 3.5 what is wrong with these surveys Quality control and quality assurance

Quality control and quality assurance Concepts of quality control

Concepts of quality control Lanham act surveys

Lanham act surveys Snap survey software

Snap survey software Real eyes surveys

Real eyes surveys Survey rebuttals

Survey rebuttals Physical security surveys

Physical security surveys What is literature

What is literature Surveys.panoramaed.com/everett

Surveys.panoramaed.com/everett Highway surveys

Highway surveys Partial discharge survey

Partial discharge survey Syndicated services

Syndicated services Survey.panoramaed.com/ecisd

Survey.panoramaed.com/ecisd A veterinarian surveys 26 of his patrons

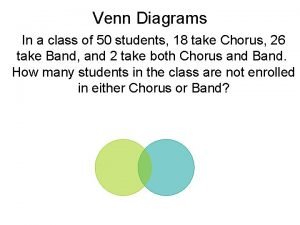

A veterinarian surveys 26 of his patrons Types of land surveys

Types of land surveys Double barreled question

Double barreled question Surveys.pano

Surveys.pano