Actor Frameworks for the JVM Platform Rajesh Karmani



Actor Frameworks for the JVM Platform
Rajesh Karmani*, Amin Shali, Gul Agha
University of Illinois at Urbana-Champaign
08/27/2009

Multi-core, many-core programming

- Erlang
- E
- Axum
- Stackless Python
- Theron (C++)
- RevActor (Ruby)
- … still growing

and on the JVM

- Scala Actors
- ActorFoundry
- SALSA
- Kilim
- Jetlang
- Actor’s Guild
- Clojure
- Fan
- Jsasb
- … still growing

Actor model of programming

- Autonomous, concurrent actors
  - Inherently concurrent model of programming
- No shared state
  - No data races
- Asynchronous message-passing
  - Uniform, high-level primitive for both data exchange and synchronization

“Actors: A Model of Concurrent Computation in Distributed Systems,” Gul Agha. MIT Press, 1986.

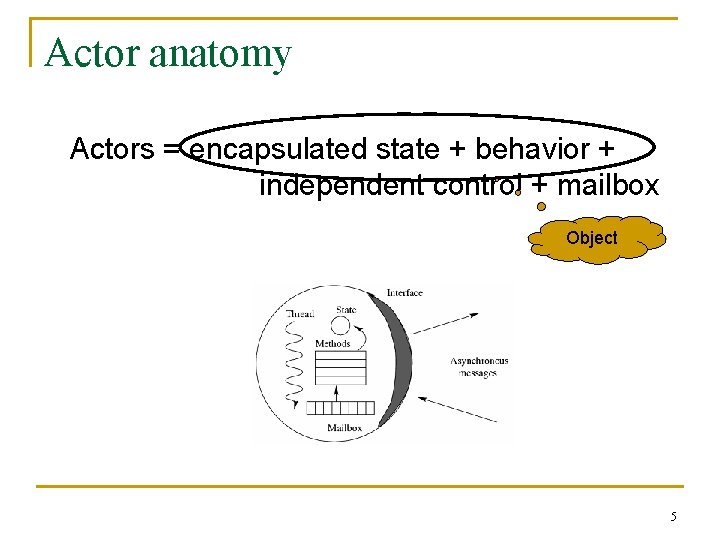

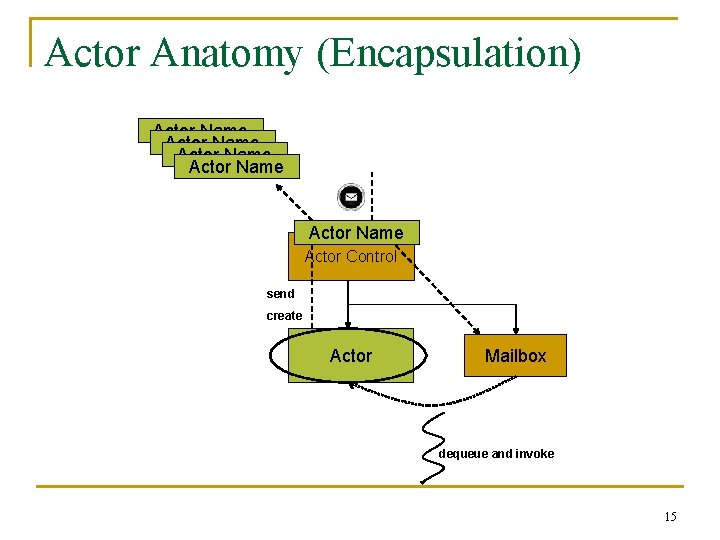

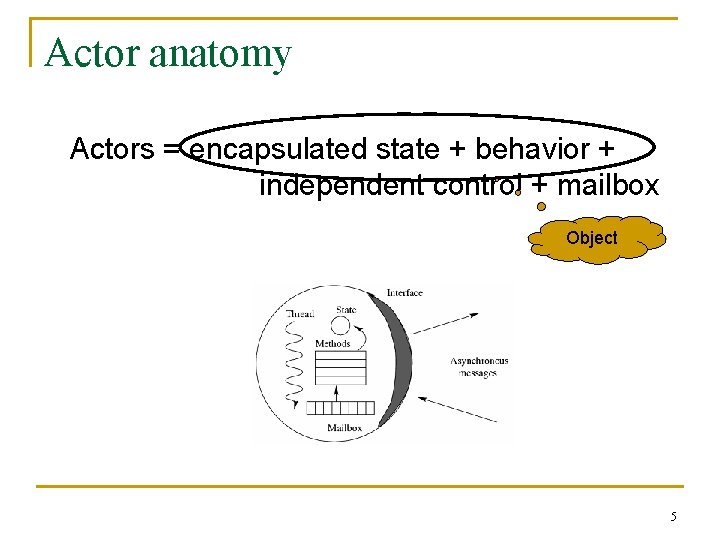

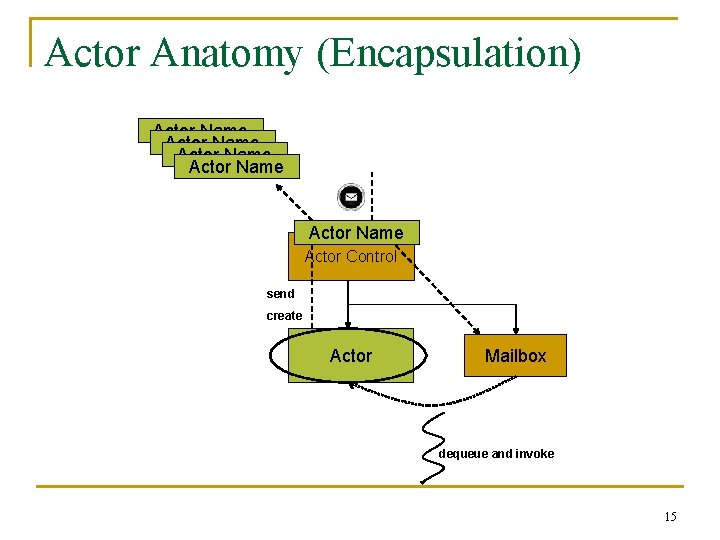

Actor anatomy

Actors = encapsulated state + behavior + independent control + mailbox

[Figure label: Object]
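The four parts of the anatomy can be sketched in plain Java. This is a minimal illustration, not ActorFoundry code: the class `CounterActor`, its message strings, and `awaitResult` are hypothetical names chosen for the example. The private field is the encapsulated state, `run` is the behavior, the thread is the independent control, and the queue is the mailbox.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;

/** Hypothetical minimal actor: private state, a behavior, its own thread, and a mailbox. */
final class CounterActor {
    private final BlockingQueue<String> mailbox = new LinkedBlockingQueue<>();
    private final CountDownLatch done = new CountDownLatch(1);
    private final Thread control = new Thread(this::run);   // independent control
    private int count = 0;   // encapsulated state, touched only by the actor's own thread

    static CounterActor start() {
        CounterActor actor = new CounterActor();
        actor.control.start();
        return actor;
    }

    /** Asynchronous send: enqueue the message and return immediately. */
    void send(String message) {
        mailbox.add(message);
    }

    /** Behavior: take one message at a time and react to it. */
    private void run() {
        try {
            while (true) {
                String message = mailbox.take();
                if (message.equals("stop")) break;
                if (message.equals("increment")) count++;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            done.countDown();
        }
    }

    /** Waits until the actor has stopped, then returns its final count. */
    int awaitResult() {
        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return count;
    }
}
```

Sending `"increment"` three times followed by `"stop"` and then calling `awaitResult()` yields 3; no other thread ever reads or writes `count` while the actor is running.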

Standard Actor Semantics

- Encapsulation
- Fairness
- Location Transparency
- Mobility

Actor Encapsulation

1. There is no shared state among actors
   - Local (private) state
2. Access another actor’s state only by sending messages
   - Messages have send-by-value semantics
   - Implementation can be relaxed on shared memory platforms, if “safe”

Why Encapsulation?

- Reasoning about safety properties becomes “easier”
- JVM memory safety is not sufficient for Actor semantics
- Libraries like Scala Actors and Kilim impose conventions instead of enforcement
  - Makes it easier to make mistakes

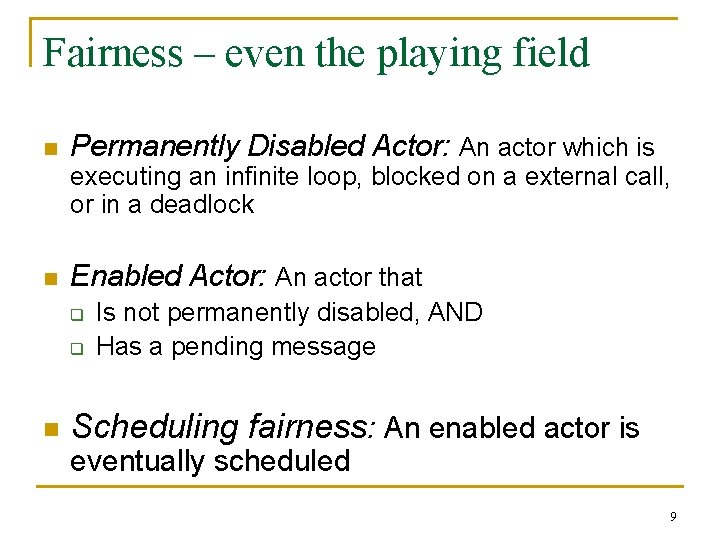

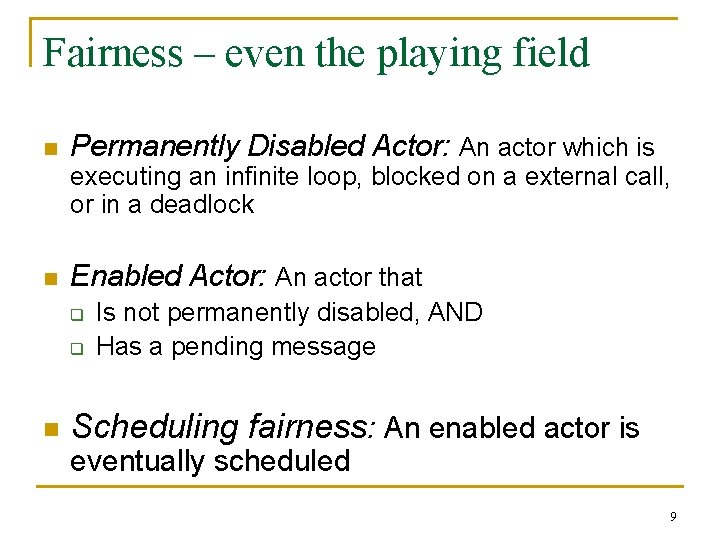

Fairness – even the playing field

- Permanently Disabled Actor: An actor which is executing an infinite loop, blocked on an external call, or in a deadlock
- Enabled Actor: An actor that
  - Is not permanently disabled, AND
  - Has a pending message
- Scheduling fairness: An enabled actor is eventually scheduled

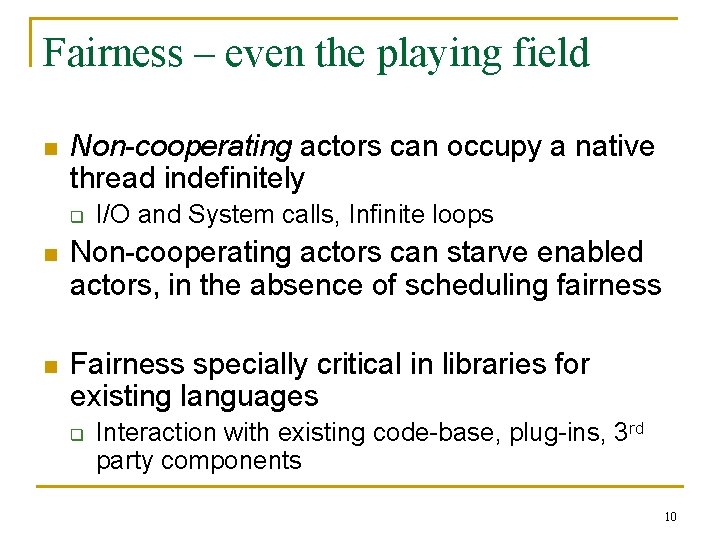

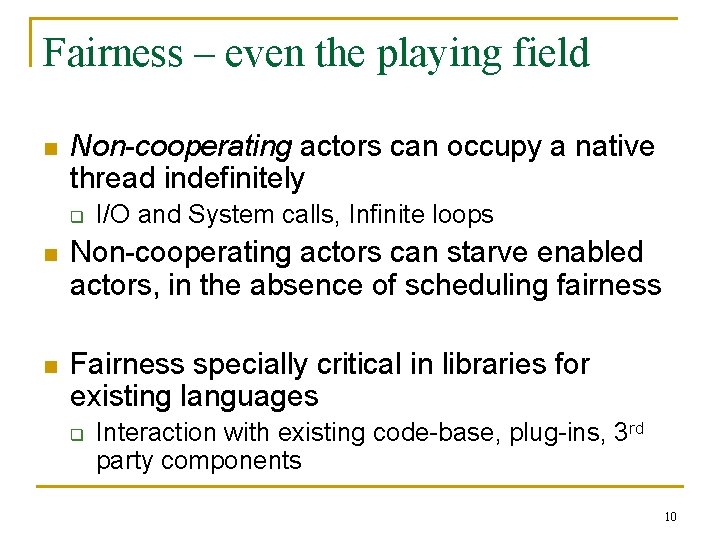

Fairness – even the playing field

- Non-cooperating actors can occupy a native thread indefinitely
  - I/O and system calls, infinite loops
- Non-cooperating actors can starve enabled actors, in the absence of scheduling fairness
- Fairness is especially critical in libraries for existing languages
  - Interaction with existing code-base, plug-ins, 3rd-party components

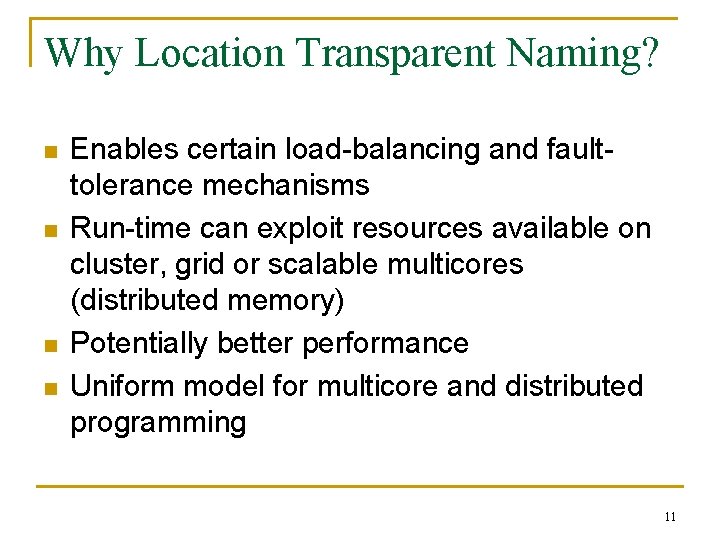

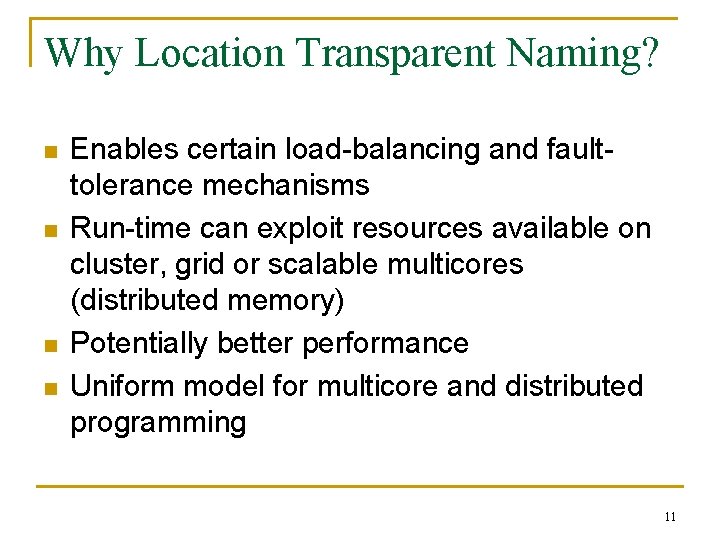

Why Location Transparent Naming?

- Enables certain load-balancing and fault-tolerance mechanisms
- Run-time can exploit resources available on cluster, grid or scalable multicores (distributed memory)
- Potentially better performance
- Uniform model for multicore and distributed programming

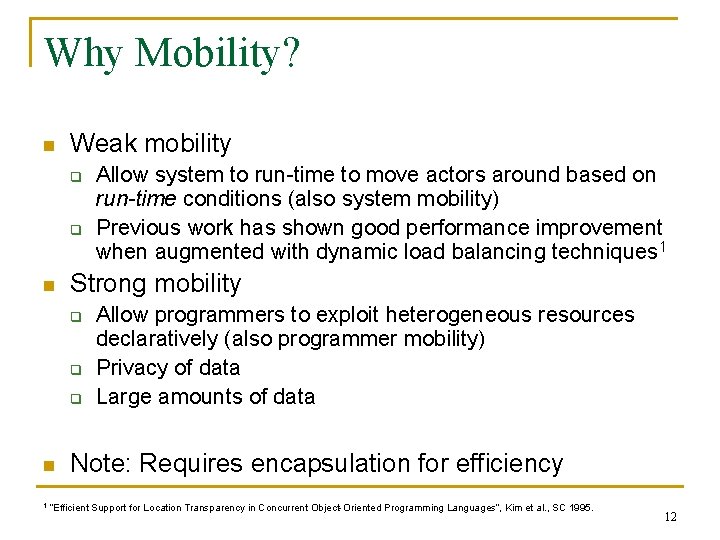

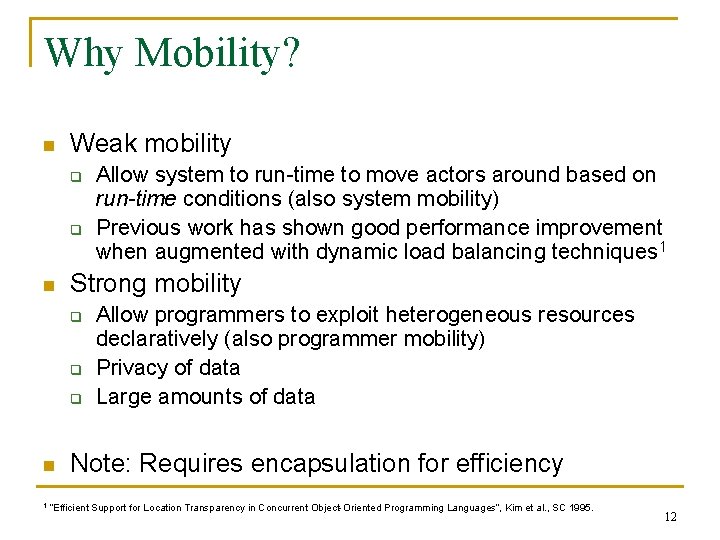

Why Mobility?

- Weak mobility
  - Allow the run-time system to move actors around based on run-time conditions (also system mobility)
  - Previous work has shown good performance improvement when augmented with dynamic load balancing techniques¹
- Strong mobility
  - Allow programmers to exploit heterogeneous resources declaratively (also programmer mobility)
  - Privacy of data
  - Large amounts of data
- Note: Requires encapsulation for efficiency

¹ “Efficient Support for Location Transparency in Concurrent Object-Oriented Programming Languages”, Kim et al., SC 1995.
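Location-transparent names and mobility can be sketched together. The example below is a single-threaded toy, with both "nodes" living in one JVM; `ActorName`, `Node`, and `Directory` are hypothetical types invented for the illustration and are not ActorFoundry classes. The point it shows is that senders hold only a name, so the run-time is free to move the actor without invalidating that name.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical opaque actor name: senders hold this, never a direct object reference. */
final class ActorName {
    final String id;
    ActorName(String id) { this.id = id; }
}

/** Hypothetical node: holds the mailboxes of the actors that currently live on it. */
final class Node {
    final String label;
    final Map<String, List<String>> mailboxes = new HashMap<>();
    Node(String label) { this.label = label; }
}

/** Hypothetical run-time directory mapping each name to the actor's current node. */
final class Directory {
    private final Map<String, Node> location = new HashMap<>();

    ActorName create(Node node, String id) {
        node.mailboxes.put(id, new ArrayList<>());
        location.put(id, node);
        return new ActorName(id);
    }

    /** Same call whether the target is local or remote; the directory resolves the node. */
    void send(ActorName to, String message) {
        location.get(to.id).mailboxes.get(to.id).add(message);
    }

    /** Moves an actor together with its pending messages; existing names stay valid. */
    void migrate(ActorName name, Node target) {
        Node source = location.get(name.id);
        target.mailboxes.put(name.id, source.mailboxes.remove(name.id));
        location.put(name.id, target);
    }

    String whereIs(ActorName name) {
        return location.get(name.id).label;
    }
}
```

A message sent before `migrate` and one sent after it both end up in the same mailbox, now on the target node. A real run-time would additionally have to forward messages that are in flight during the move, which this sketch does not model.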

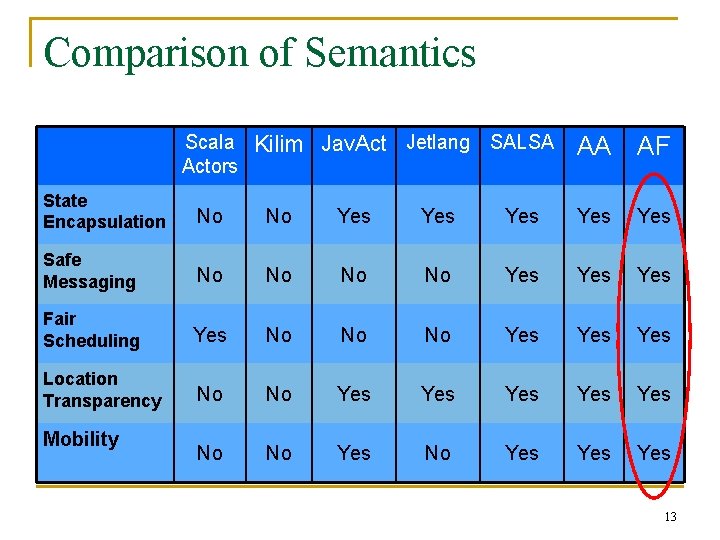

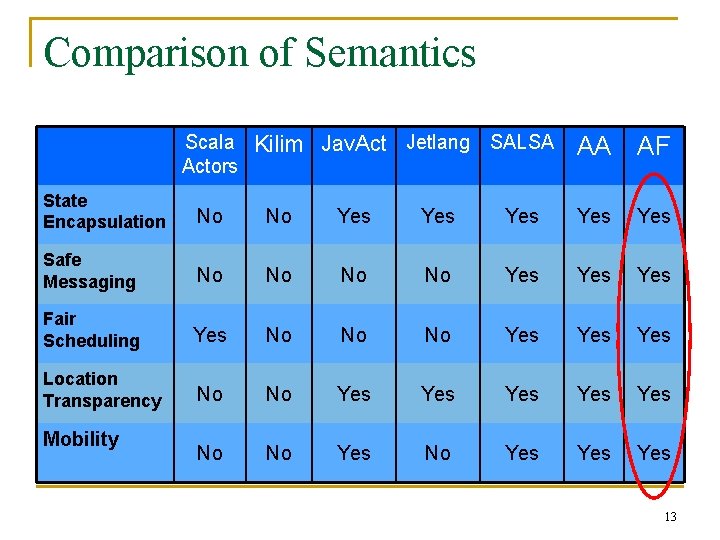

Comparison of Semantics

Frameworks compared: Scala Actors, Kilim, JavAct, Jetlang, SALSA, AA, AF

Entries per property, in extraction order:

- State Encapsulation: No No Yes Yes Yes
- Safe Messaging: No No Yes Yes
- Fair Scheduling: Yes No No No Yes Yes
- Location Transparency: No No Yes Yes Yes
- Mobility: No No Yes Yes

ActorFoundry

- Off-the-web library for Actor programming
- Major goals: Usability and Extensibility
- Other goals: Reasonable performance
- Supports standard Actor semantics

Actor Anatomy (Encapsulation)

[Figure labels: Actor Name; Actor Control; send; create; Actor Mailbox; dequeue and invoke]

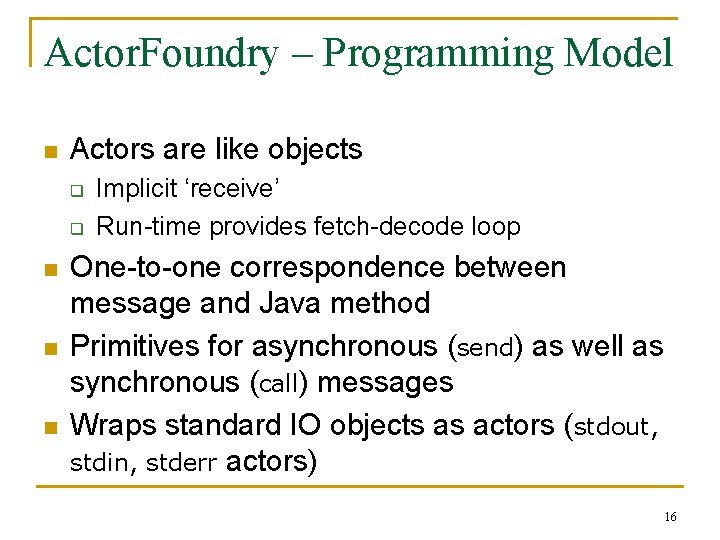

ActorFoundry – Programming Model

- Actors are like objects
  - Implicit ‘receive’
  - Run-time provides fetch-decode loop
- One-to-one correspondence between message and Java method
- Primitives for asynchronous (send) as well as synchronous (call) messages
- Wraps standard IO objects as actors (stdout, stdin, stderr actors)
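The one-to-one correspondence between a message and a Java method suggests a decode step based on reflection. The sketch below is one way such a step could look, assuming messages carry a method name plus arguments; `Greeter` and `Dispatcher` are hypothetical, and nothing here is taken from the ActorFoundry source.

```java
import java.lang.reflect.Method;

/** Hypothetical actor whose public methods double as message handlers. */
final class Greeter {
    final StringBuilder log = new StringBuilder();

    public void hello(String name) {
        log.append("hello ").append(name).append(';');
    }

    public void add(Integer a, Integer b) {
        log.append(a + b).append(';');
    }
}

final class Dispatcher {
    /** Decodes one message: finds the method named by the message and invokes it. */
    static void dispatch(Object actor, String messageName, Object... args) {
        for (Method m : actor.getClass().getMethods()) {
            // Match on name and arity only; overload resolution is left out of this sketch.
            if (m.getName().equals(messageName) && m.getParameterCount() == args.length) {
                try {
                    m.invoke(actor, args);
                    return;
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("handler failed: " + messageName, e);
                }
            }
        }
        throw new IllegalArgumentException("no handler for message: " + messageName);
    }
}
```

With this in place the actor's author never writes an explicit receive: `Dispatcher.dispatch(greeter, "hello", "world")` runs `hello("world")`, and a run-time loop would call `dispatch` once per dequeued message.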

ActorFoundry - basic API

- create(node, class, params)
  - Locally or at remote nodes
- send(actor, method, params)
- call(actor, method, params)
  - Request-reply messages
- destroy(reason)
  - Explicit memory management
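The difference between `send` and `call` can be illustrated without the library itself. In the sketch below, a single-threaded executor stands in for the actor's mailbox and control thread; `EchoActor` and its method signatures are hypothetical and deliberately simpler than the `(actor, method, params)` forms listed above.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

/** Hypothetical actor handle contrasting asynchronous send with request-reply call. */
final class EchoActor {
    // A single-threaded executor stands in for the actor's mailbox and control thread.
    private final ExecutorService mailbox = Executors.newSingleThreadExecutor();
    private final StringBuilder seen = new StringBuilder();   // private state, used only on the actor's thread

    /** Asynchronous send: enqueue the message and return immediately. */
    void send(String text) {
        mailbox.execute(() -> seen.append(text).append(' '));
    }

    /** Synchronous call: enqueue the request, then block the caller until the reply arrives. */
    String call(String text) {
        CompletableFuture<String> reply = new CompletableFuture<>();
        mailbox.execute(() -> reply.complete(seen + text));
        return reply.join();
    }

    /** Explicit tear-down, loosely analogous to the destroy primitive. */
    void destroy() {
        mailbox.shutdown();
    }
}
```

After `send("a")` and `send("b")`, `call("c")` returns `"a b c"`: the two sends returned before they were processed, while the call waited for its own turn in the queue and for the reply.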

ActorFoundry - Implementation

- Maps each actor onto a Java thread
- Actor = Java Object + Java Thread + Mailbox + ActorName
  - ActorName provides encapsulation as well as location transparency
- Message contents are deep copied
- Fairness: Reliable delivery (but unordered messages) and fair scheduling
- Support for distribution and actor migration

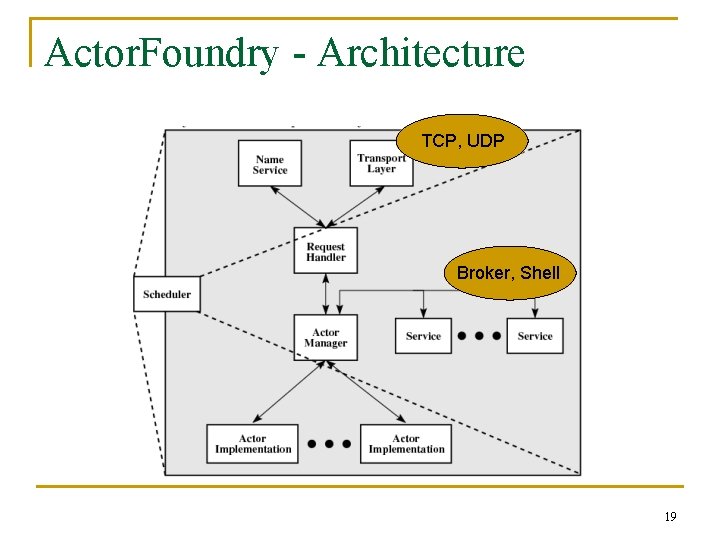

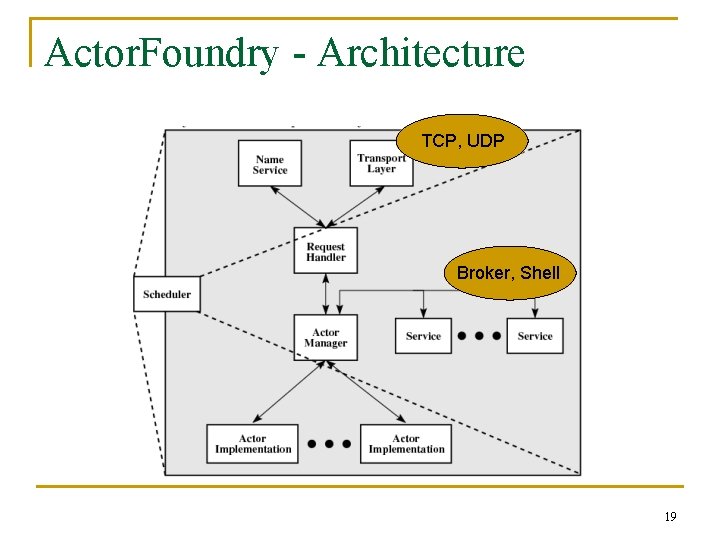

ActorFoundry - Architecture

[Figure labels: TCP, UDP; Broker, Shell]

Motivation Revisited – Why ActorFoundry?

- Extensibility
  - Modular and hence extensible
- Usability
  - Actors as objects + small set of library calls
  - Leverage Java libraries and expertise
- Performance
  - Let’s check…

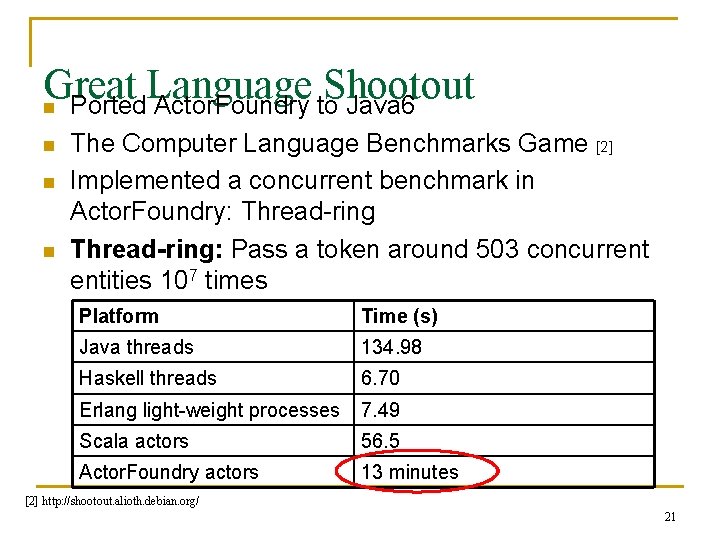

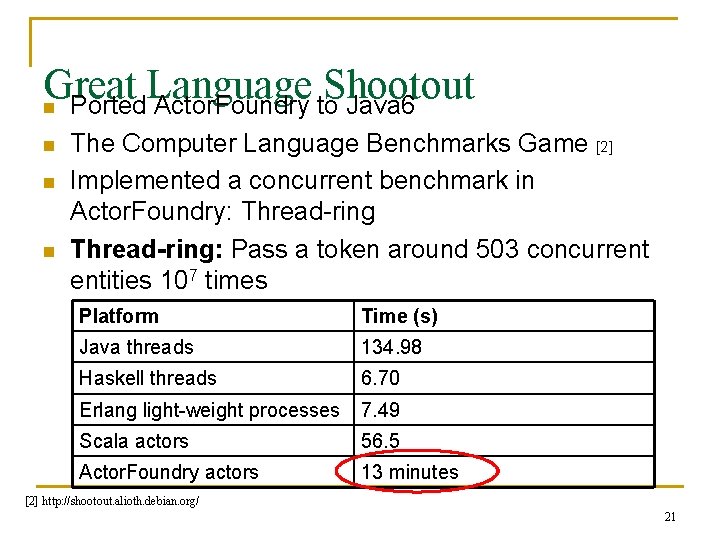

Great Language Shootout

- Ported ActorFoundry to Java 6
- The Computer Language Benchmarks Game [2]
- Implemented a concurrent benchmark in ActorFoundry:
  - Thread-ring: Pass a token around 503 concurrent entities 10^7 times

| Platform | Time (s) |
| --- | --- |
| Java threads | 134.98 |
| Haskell threads | 6.70 |
| Erlang light-weight processes | 7.49 |
| Scala actors | 56.5 |
| ActorFoundry actors | 13 minutes |

[2] http://shootout.alioth.debian.org/
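For readers unfamiliar with thread-ring, the following is a small sketch of the benchmark's structure using one plain Java thread and one blocking queue per ring node. It is not the benchmarked ActorFoundry program; the class `ThreadRing` and its parameters are assumptions made for illustration, and the sketch is meant for small inputs rather than timing runs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.LinkedBlockingQueue;

final class ThreadRing {
    /**
     * Passes a token around ringSize nodes for the given number of hops and
     * returns the 1-based id of the node holding the token when it reaches zero.
     */
    static int run(int ringSize, int hops) {
        List<BlockingQueue<Integer>> mailboxes = new ArrayList<>();
        for (int i = 0; i < ringSize; i++) mailboxes.add(new LinkedBlockingQueue<>());
        CompletableFuture<Integer> winner = new CompletableFuture<>();

        for (int i = 0; i < ringSize; i++) {
            final int id = i;
            Thread node = new Thread(() -> {
                try {
                    while (true) {
                        int token = mailboxes.get(id).take();
                        if (token == 0) {
                            winner.complete(id + 1);
                            return;
                        }
                        // Decrement the token and hand it to the next node in the ring.
                        mailboxes.get((id + 1) % ringSize).put(token - 1);
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            node.setDaemon(true);   // the other nodes stay blocked on take(); let the JVM exit
            node.start();
        }

        mailboxes.get(0).add(hops);   // inject the token at node 1
        return winner.join();
    }
}
```

For example, `ThreadRing.run(5, 12)` returns 3. The benchmark stresses message hand-off and context switching rather than computation, which is why per-actor thread cost dominates the timings in the table.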

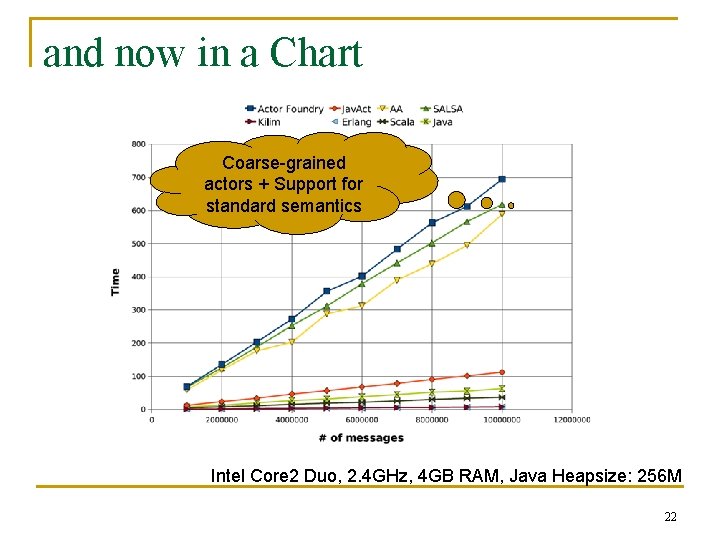

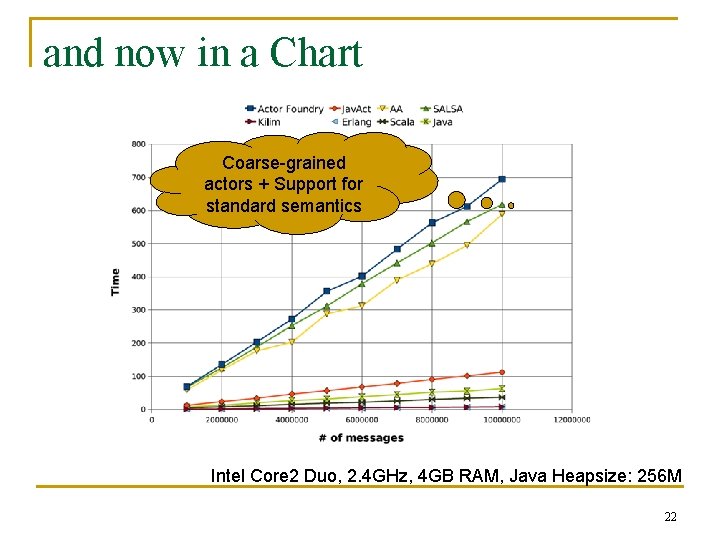

and now in a Chart

[Chart annotation: Coarse-grained actors + Support for standard semantics]

Intel Core 2 Duo, 2.4 GHz, 4 GB RAM, Java Heapsize: 256 M

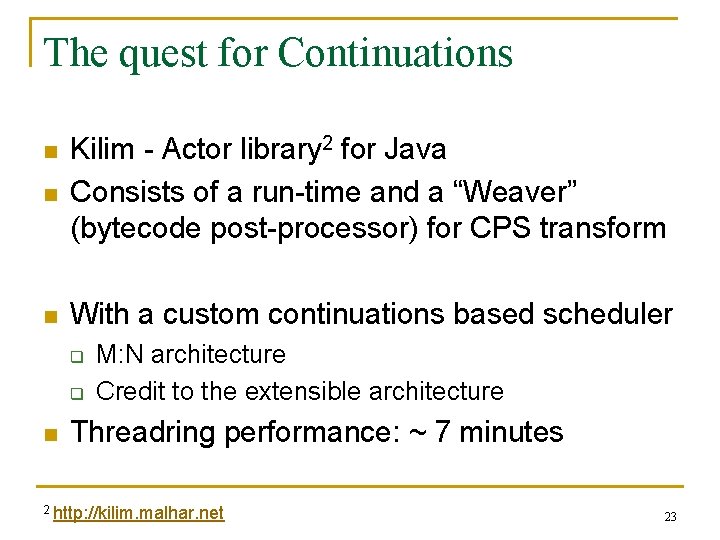

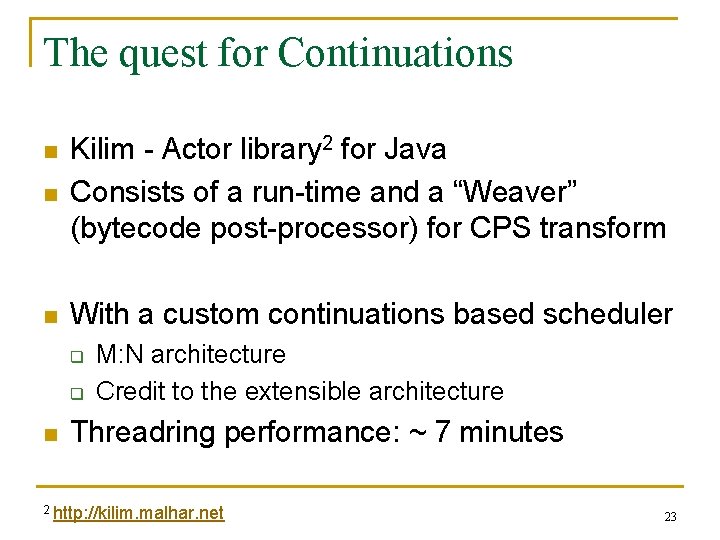

The quest for Continuations

- Kilim² - Actor library for Java
  - Consists of a run-time and a “Weaver” (bytecode post-processor) for CPS transform
- With a custom continuations based scheduler
  - M:N architecture
  - Credit to the extensible architecture
- Threadring performance: ~7 minutes

² http://kilim.malhar.net

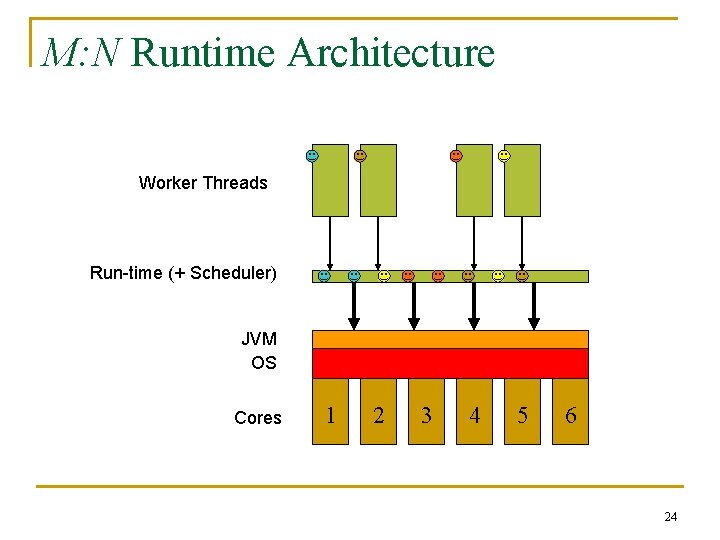

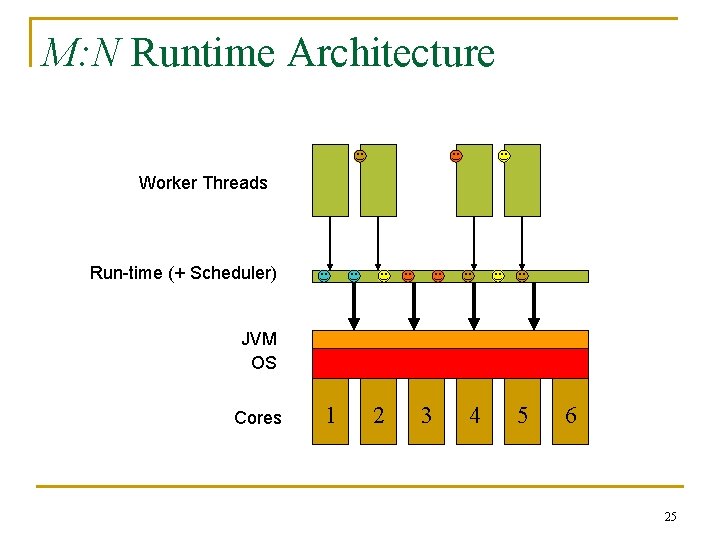

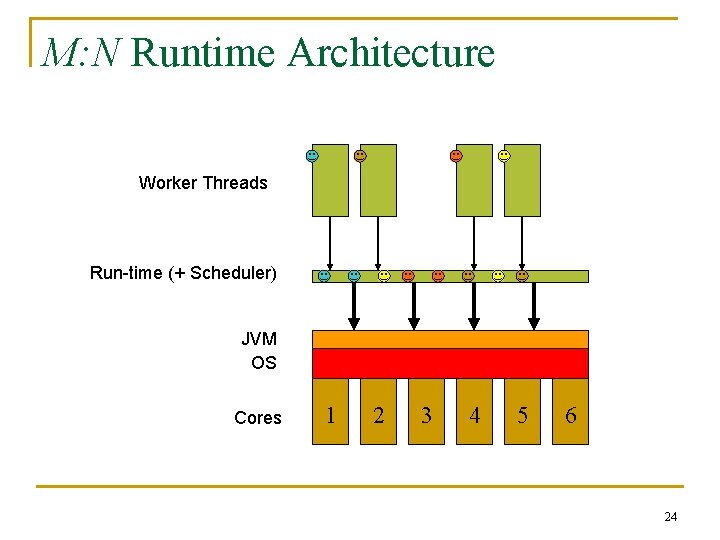

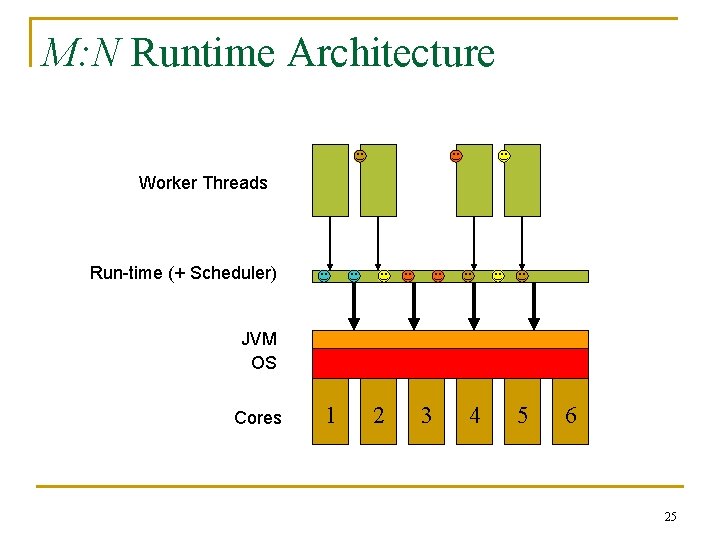

M:N Runtime Architecture

[Figure labels: Worker Threads; Run-time (+ Scheduler); JVM; OS; Cores 1 2 3 4 5 6]
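An M:N arrangement, with many actors multiplexed onto a few worker threads, can be approximated in plain Java without continuations, provided each actor turn handles one message and then yields the worker. The sketch below takes that simpler route; it does not use Kilim's weaver, and `PooledActor` and `MToNDemo` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.function.Consumer;

/** Hypothetical light-weight actor: no thread of its own, scheduled on a shared pool. */
final class PooledActor<M> implements Runnable {
    private final Queue<M> mailbox = new ConcurrentLinkedQueue<>();
    private final AtomicBoolean scheduled = new AtomicBoolean(false);
    private final ExecutorService workers;
    private final Consumer<M> behavior;

    PooledActor(ExecutorService workers, Consumer<M> behavior) {
        this.workers = workers;
        this.behavior = behavior;
    }

    void send(M message) {
        mailbox.add(message);
        trySchedule();
    }

    private void trySchedule() {
        // At most one worker runs this actor at a time, so its state needs no locks.
        if (!mailbox.isEmpty() && scheduled.compareAndSet(false, true)) workers.execute(this);
    }

    @Override
    public void run() {
        try {
            M message = mailbox.poll();
            if (message != null) behavior.accept(message);   // one message per turn, then yield
        } finally {
            scheduled.set(false);
            trySchedule();   // re-queue the actor if more messages are pending
        }
    }
}

final class MToNDemo {
    /** Runs `actors` counting actors on `workerCount` threads; returns each actor's final count. */
    static int[] run(int actors, int messagesEach, int workerCount) {
        ExecutorService workers = Executors.newFixedThreadPool(workerCount);
        CountDownLatch pending = new CountDownLatch(actors * messagesEach);
        int[] counts = new int[actors];
        List<PooledActor<Integer>> all = new ArrayList<>();
        for (int i = 0; i < actors; i++) {
            final int id = i;
            all.add(new PooledActor<Integer>(workers, m -> { counts[id] += m; pending.countDown(); }));
        }
        for (int round = 0; round < messagesEach; round++) {
            for (PooledActor<Integer> actor : all) actor.send(1);
        }
        try {
            pending.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            workers.shutdown();
        }
        return counts;
    }
}
```

`MToNDemo.run(100, 50, 2)` drives 100 actors on 2 threads and every actor ends with a count of 50. What this approach cannot do, and what a CPS transform adds, is suspend an actor in the middle of a method; here an actor that blocks inside its behavior still occupies a worker thread.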


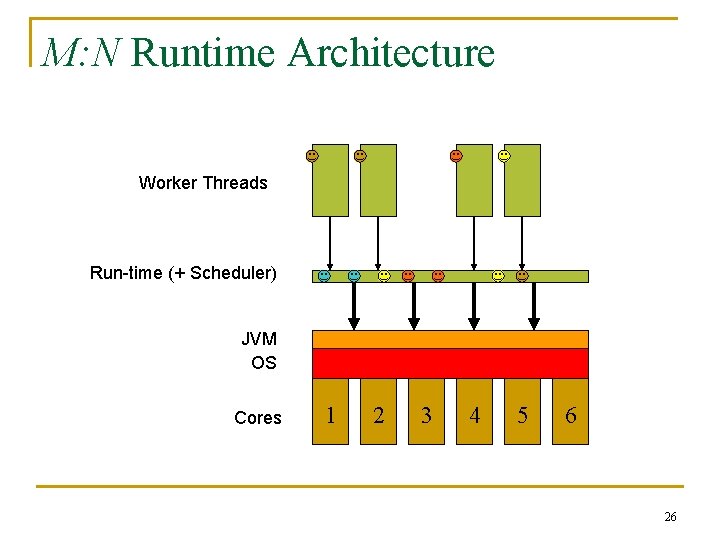

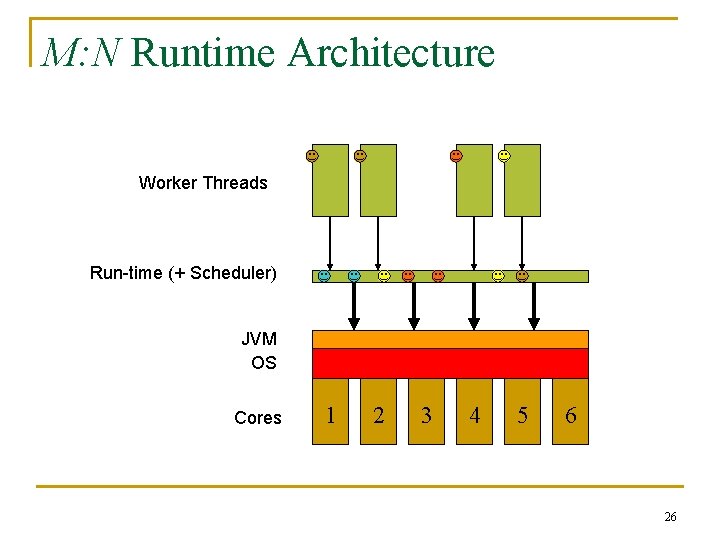


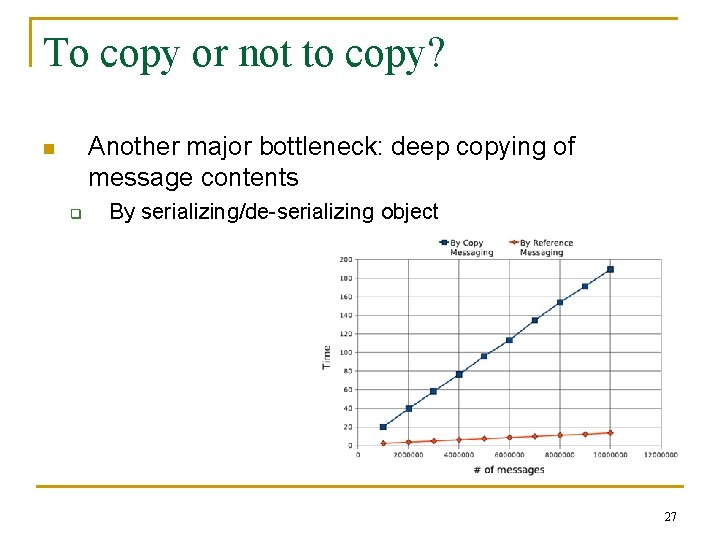

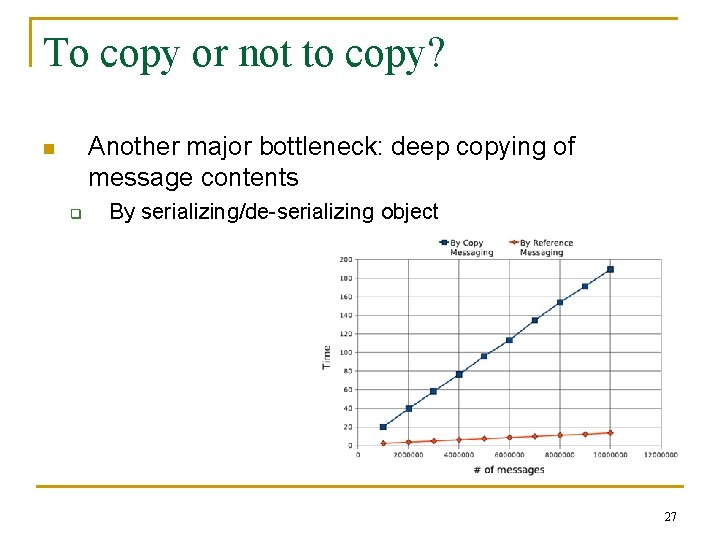

To copy or not to copy?

- Another major bottleneck: deep copying of message contents
  - By serializing/de-serializing object
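Deep copying by serialization is straightforward to express with the standard `java.io` object streams, which also makes the cost visible: every message is written to a byte array and parsed back. The helper below is a generic sketch of the technique (the class name `DeepCopy` is hypothetical), not ActorFoundry's own copier.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;

final class DeepCopy {
    /** Copies a message by serializing it to bytes and reading it back. */
    @SuppressWarnings("unchecked")
    static <T extends Serializable> T copy(T message) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(message);
            }
            try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

The copy shares no mutable structure with the original, so the receiver can modify it freely. The price is paid on every send, which matters most in a benchmark like thread-ring where the message itself is tiny.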

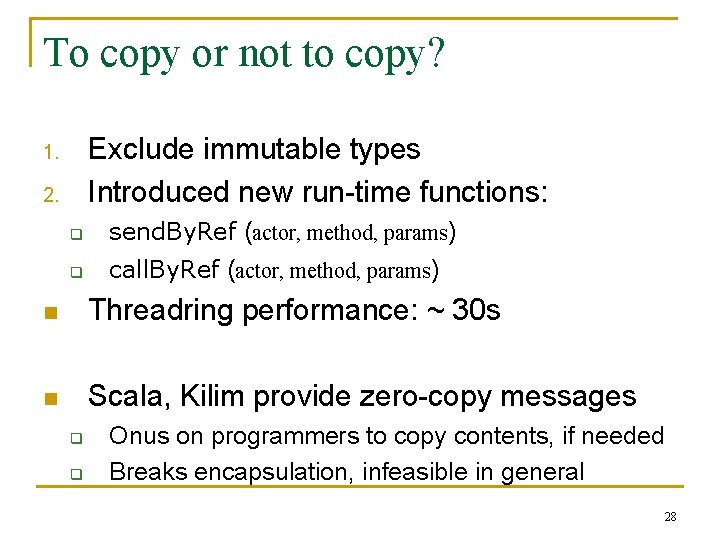

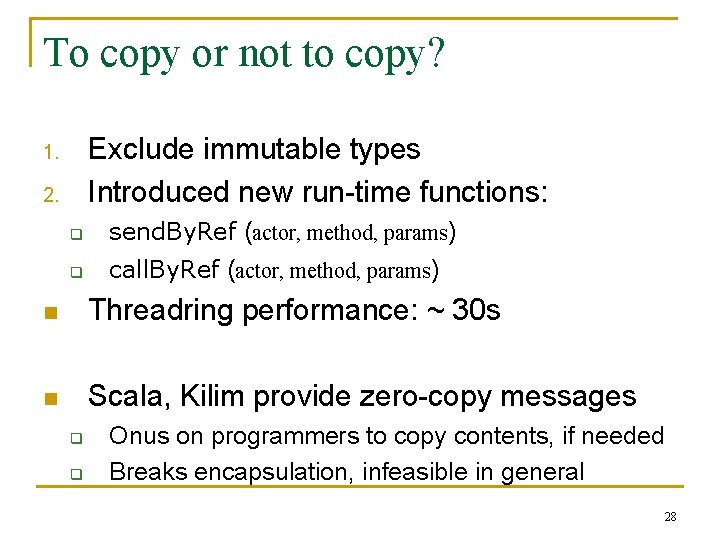

To copy or not to copy?

1. Exclude immutable types
2. Introduced new run-time functions:
   - sendByRef(actor, method, params)
   - callByRef(actor, method, params)

- Threadring performance: ~30 s
- Scala, Kilim provide zero-copy messages
  - Onus on programmers to copy contents, if needed
  - Breaks encapsulation, infeasible in general
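Both optimizations can be sketched as a parameter-preparation step. The code below assumes a conservative whitelist of JDK immutable types and takes the deep-copy routine as a function argument; `MessageCopier`, `byCopy`, and `byRef` are hypothetical names, and the actual list of types ActorFoundry excludes is not given in the slides.

```java
import java.util.function.UnaryOperator;

final class MessageCopier {
    /** Conservative whitelist of JDK types whose instances cannot change after construction. */
    static boolean isKnownImmutable(Object value) {
        return value == null
            || value instanceof String
            || value instanceof Boolean
            || value instanceof Character
            || value instanceof Byte || value instanceof Short
            || value instanceof Integer || value instanceof Long
            || value instanceof Float || value instanceof Double;
    }

    /** By-copy path: immutable arguments are shared, everything else goes through deepCopy. */
    static Object[] byCopy(UnaryOperator<Object> deepCopy, Object... params) {
        Object[] out = new Object[params.length];
        for (int i = 0; i < params.length; i++) {
            out[i] = isKnownImmutable(params[i]) ? params[i] : deepCopy.apply(params[i]);
        }
        return out;
    }

    /** By-reference path: nothing is copied; the sender must not touch params afterwards. */
    static Object[] byRef(Object... params) {
        return params;
    }
}
```

Sharing an immutable value is always safe, so the first optimization preserves encapsulation. The by-reference path is only safe by convention, which is the trade-off the slide attributes to zero-copy messaging.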

Fairness – ActorFoundry approach

- Scheduler thread periodically monitors “progress” of worker threads
  - Progress means executing an actor from schedule queue
- If no progress has been made by any worker thread => possible starvation
  - Launch a new worker thread
  - Conservative but not incorrect
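The monitoring scheme can be sketched with a shared schedule queue, a progress counter, and a monitor thread. Everything below is an illustrative assumption: `FairScheduler`, the counter-based definition of progress, and the polling interval are not taken from the ActorFoundry source.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.atomic.AtomicLong;

final class FairScheduler {
    private final BlockingQueue<Runnable> scheduleQueue = new LinkedBlockingQueue<>();
    private final AtomicLong started = new AtomicLong();        // progress: turns dequeued by any worker
    private final AtomicInteger workerCount = new AtomicInteger();

    FairScheduler(int initialWorkers, long checkEveryMillis) {
        for (int i = 0; i < initialWorkers; i++) launchWorker();
        Thread monitor = new Thread(() -> monitorLoop(checkEveryMillis), "monitor");
        monitor.setDaemon(true);
        monitor.start();
    }

    void schedule(Runnable actorTurn) {
        scheduleQueue.add(actorTurn);
    }

    int workers() {
        return workerCount.get();
    }

    private void launchWorker() {
        Thread worker = new Thread(() -> {
            try {
                while (true) {
                    Runnable turn = scheduleQueue.take();
                    started.incrementAndGet();
                    turn.run();   // may block indefinitely if the actor does not cooperate
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "worker-" + workerCount.incrementAndGet());
        worker.setDaemon(true);
        worker.start();
    }

    private void monitorLoop(long checkEveryMillis) {
        long lastSeen = started.get();
        try {
            while (true) {
                Thread.sleep(checkEveryMillis);
                long now = started.get();
                // No turn started since the last check while work is waiting: possible starvation.
                if (now == lastSeen && !scheduleQueue.isEmpty()) launchWorker();
                lastSeen = now;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

With one initial worker, a turn that blocks forever no longer starves the turn queued behind it: after a quiet interval the monitor adds a second worker, which picks up the waiting turn. The check is conservative in the sense the slide describes, since it may add a thread when the workers were merely slow, but it never leaves an enabled actor waiting indefinitely.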

Performance – crude comparison

Threadring benchmark on Intel Core 2 Duo, 2.4 GHz, 4 GB RAM, Heapsize: 256 M

Performance – crude comparison

Chameneos-redux benchmark on Intel Core 2 Duo, 2.4 GHz, 4 GB RAM, Heapsize: 256 M

ActorFoundry - Discussion

- Elegant, object-like syntax and implicit control
- Leverage existing Java code and libraries
- Zero-copy as well as by-copy message passing
- Reasonably efficient run-time
  - With encapsulation and fair scheduling
  - Support for distributed actors, migration
- Modular and extensible

Using ActorFoundry

- Download binary distribution³
  - Win32, Solaris, Linux, MacOS
  - Bundled with a bunch of example programs
- Ant build.xml file included
- Launch foundry node manager
  - Specify the first actor and first message
- Launch ashell for command-line interaction with foundry node (optional)

³ http://osl.cs.uiuc.edu/af

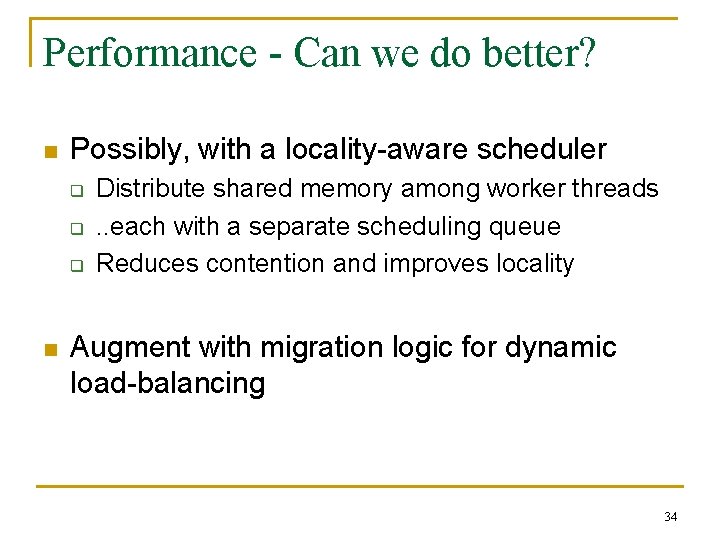

Performance - Can we do better?

- Possibly, with a locality-aware scheduler
  - Distribute shared memory among worker threads, each with a separate scheduling queue
  - Reduces contention and improves locality
  - Augment with migration logic for dynamic load-balancing
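One way to picture separate scheduling queues is to give each worker its own single-threaded executor and pin every actor to a home worker. The sketch below does that with a fixed modulo placement; `ShardedScheduler` and `homeOf` are hypothetical, and the migration logic mentioned above is left out.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class ShardedScheduler {
    private final ExecutorService[] workers;   // each worker owns a private queue

    ShardedScheduler(int workerCount) {
        workers = new ExecutorService[workerCount];
        for (int i = 0; i < workerCount; i++) {
            workers[i] = Executors.newSingleThreadExecutor();
        }
    }

    /** Home worker of an actor; a fuller run-time could reassign this to balance load. */
    int homeOf(int actorId) {
        return Math.floorMod(actorId, workers.length);
    }

    /** Every turn of a given actor lands on the same worker's queue. */
    void schedule(int actorId, Runnable turn) {
        workers[homeOf(actorId)].execute(turn);
    }

    void shutdownAndWait() {
        for (ExecutorService w : workers) w.shutdown();
        try {
            for (ExecutorService w : workers) w.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Because an actor's turns always run on the same thread, workers do not contend on a single shared queue and the actor's state tends to stay in one core's cache. The cost is that a static placement can leave some workers idle, which is where migration for dynamic load balancing would come in.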

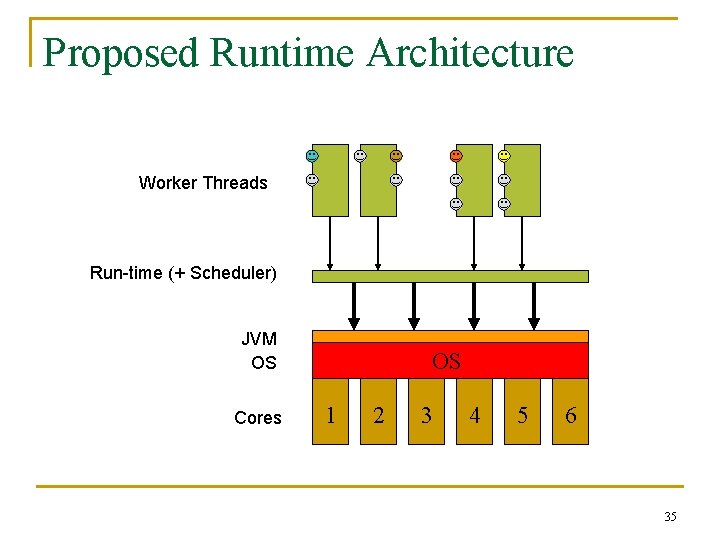

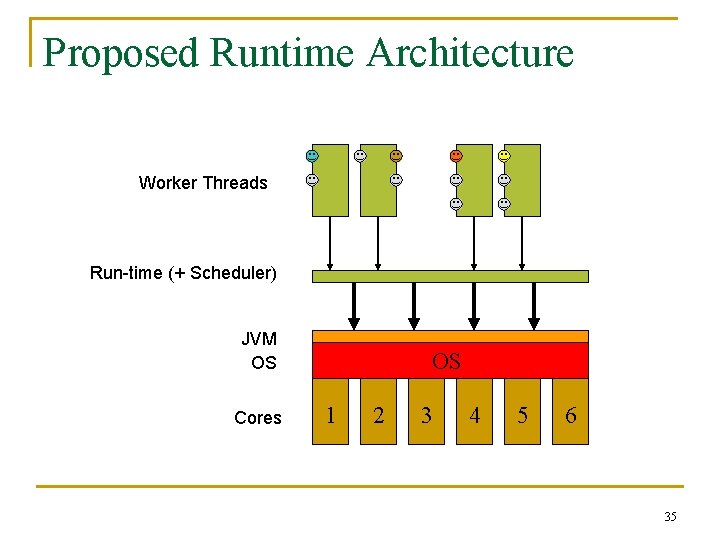

Proposed Runtime Architecture

[Figure labels: Worker Threads; Run-time (+ Scheduler); JVM; OS; Cores; OS; 1 2 3 4 5 6]

Promise for scalable performance?

- Over-decompose application into fine-grained actors
- As the number of cores increases, spread out the actors
- Of course, speed-up is constrained by parallelism in application
  - Parallelism is bounded by # of actors

Other Future Directions

- Garbage collection
- Debugging
- Automated testing
- Model checking
- Type-checking messages
- Support for ad-hoc actor definitions


References

- [1] Gul Agha. Actors: a model of concurrent computation in distributed systems. MIT Press, Cambridge, MA, USA, 1986.
- [2] Rajesh K. Karmani, Amin Shali, and Gul Agha. Actor frameworks for the JVM platform: a comparative analysis. In PPPJ ’09: Proceedings of the 7th International Conference on Principles and Practice of Programming in Java, 2009. ACM.
- [3] Gul Agha, Ian A. Mason, Scott Smith, and Carolyn Talcott. A Foundation for Actor Computation. Journal of Functional Programming, 1997.
- [4] Rajendra Panwar and Gul Agha. A methodology for programming scalable architectures. In Journal of Parallel and Distributed Computing, 1994.