Chapter 5 Large and Fast Exploiting Memory Hierarchy

- Slides: 24

Chapter 5 Large and Fast: Exploiting Memory Hierarchy 1

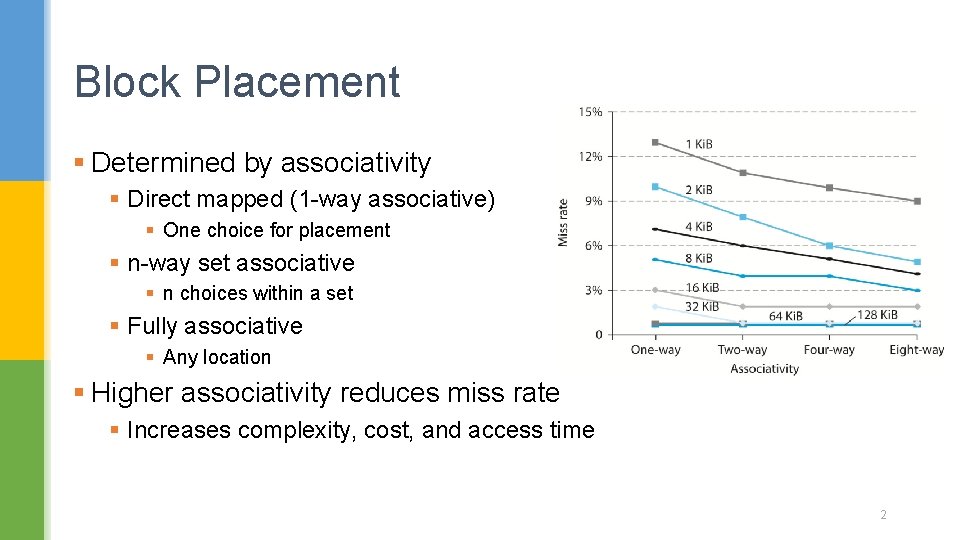

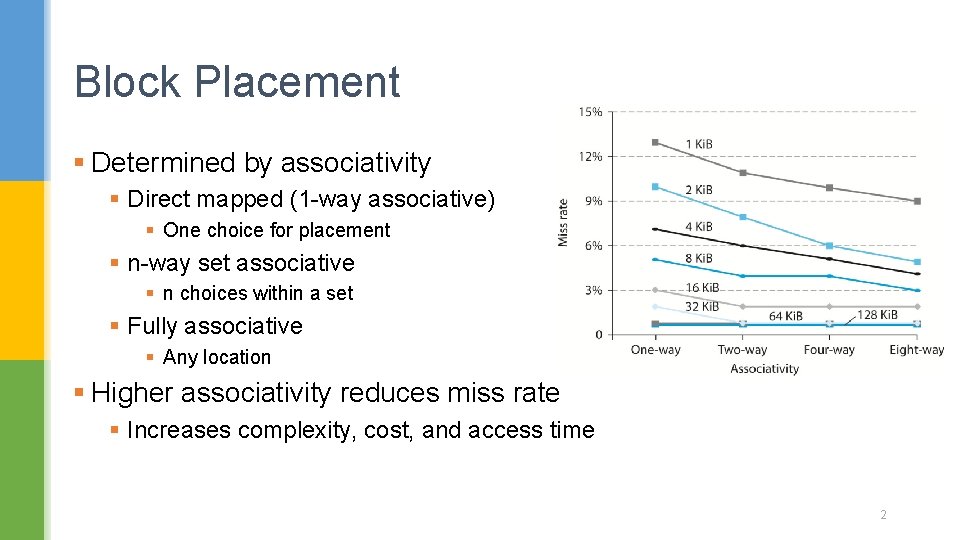

Block Placement § Determined by associativity § Direct mapped (1 -way associative) § One choice for placement § n-way set associative § n choices within a set § Fully associative § Any location § Higher associativity reduces miss rate § Increases complexity, cost, and access time 2

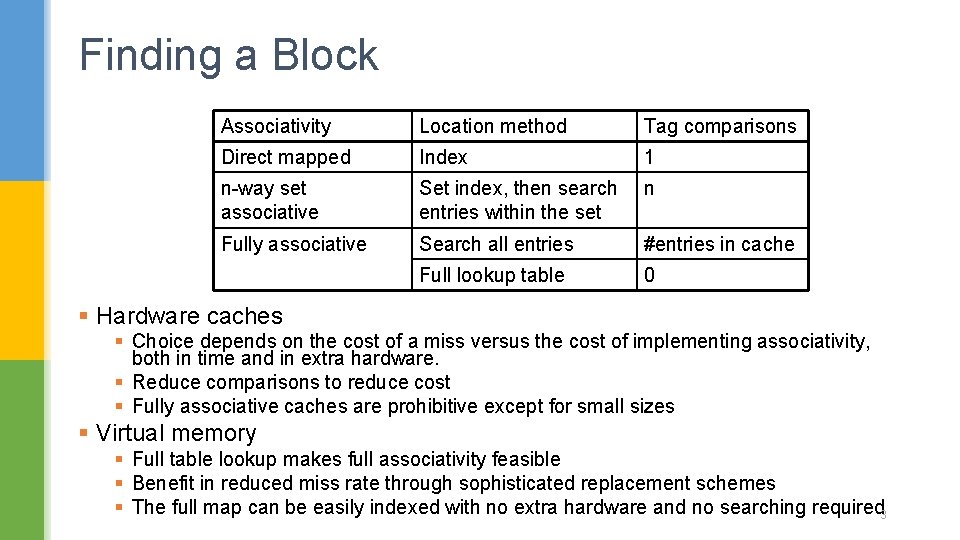

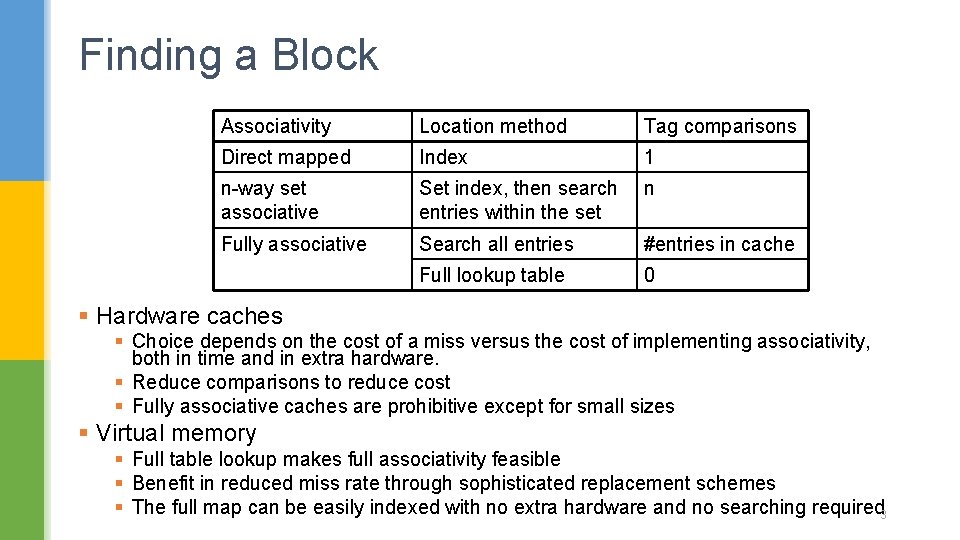

Finding a Block Associativity Location method Tag comparisons Direct mapped Index 1 n-way set associative Set index, then search entries within the set n Fully associative Search all entries #entries in cache Full lookup table 0 § Hardware caches § Choice depends on the cost of a miss versus the cost of implementing associativity, both in time and in extra hardware. § Reduce comparisons to reduce cost § Fully associative caches are prohibitive except for small sizes § Virtual memory § Full table lookup makes full associativity feasible § Benefit in reduced miss rate through sophisticated replacement schemes § The full map can be easily indexed with no extra hardware and no searching required 3

Replacement § Choice of entry to replace on a miss for set associative and fully associative caches § Least recently used (LRU) § Complex and costly hardware for high associativity § For larger associativity, either LRU is approximated or random replacement is used § Random § Close to LRU, easier to implement in hardware § For a two-way set-associative cache, random replacement has a miss rate about 1. 1 times higher than LRU replacement. § Virtual memory § LRU approximation § as tiny reduction in the miss rate can be important when the cost of a miss is enormous § Because misses are so expensive and relatively infrequent, approximating this information primarily in software is acceptable. 4

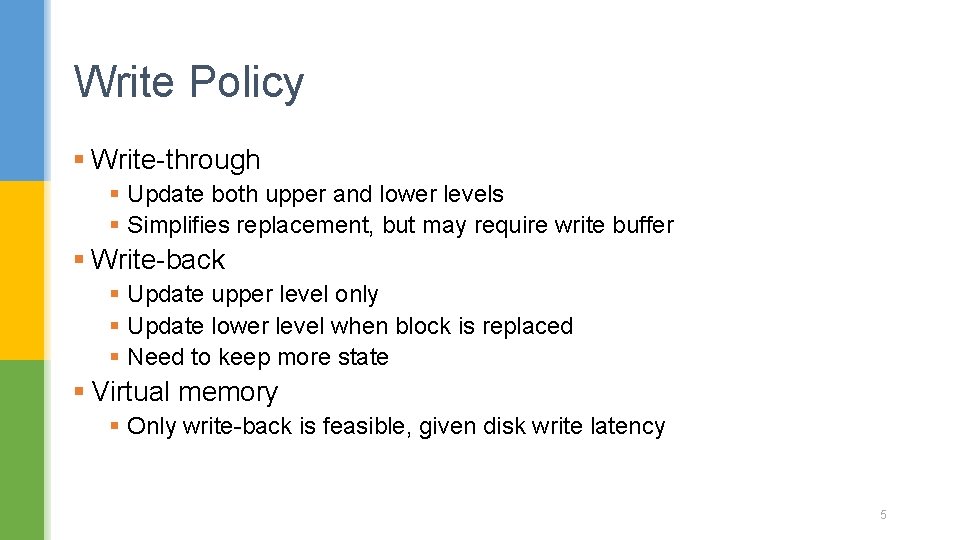

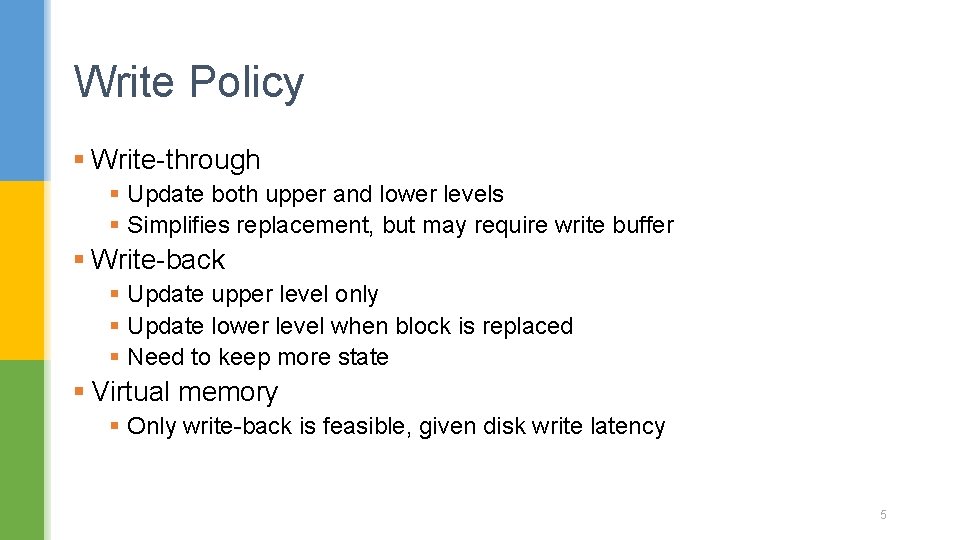

Write Policy § Write-through § Update both upper and lower levels § Simplifies replacement, but may require write buffer § Write-back § Update upper level only § Update lower level when block is replaced § Need to keep more state § Virtual memory § Only write-back is feasible, given disk write latency 5

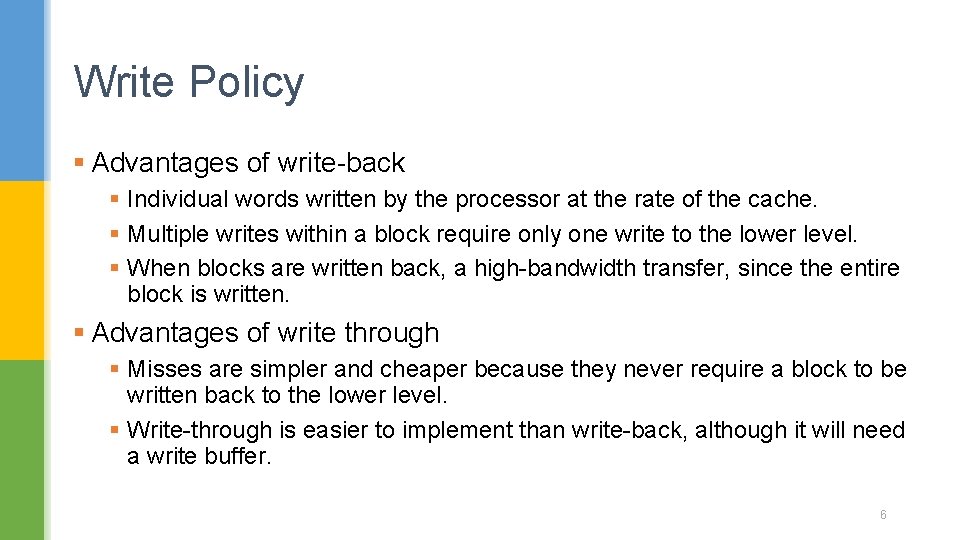

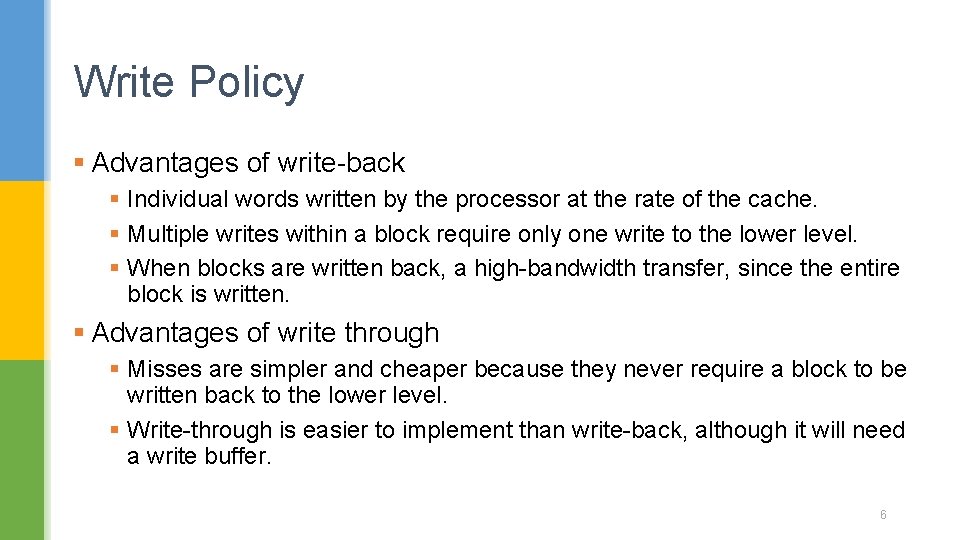

Write Policy § Advantages of write-back § Individual words written by the processor at the rate of the cache. § Multiple writes within a block require only one write to the lower level. § When blocks are written back, a high-bandwidth transfer, since the entire block is written. § Advantages of write through § Misses are simpler and cheaper because they never require a block to be written back to the lower level. § Write-through is easier to implement than write-back, although it will need a write buffer. 6

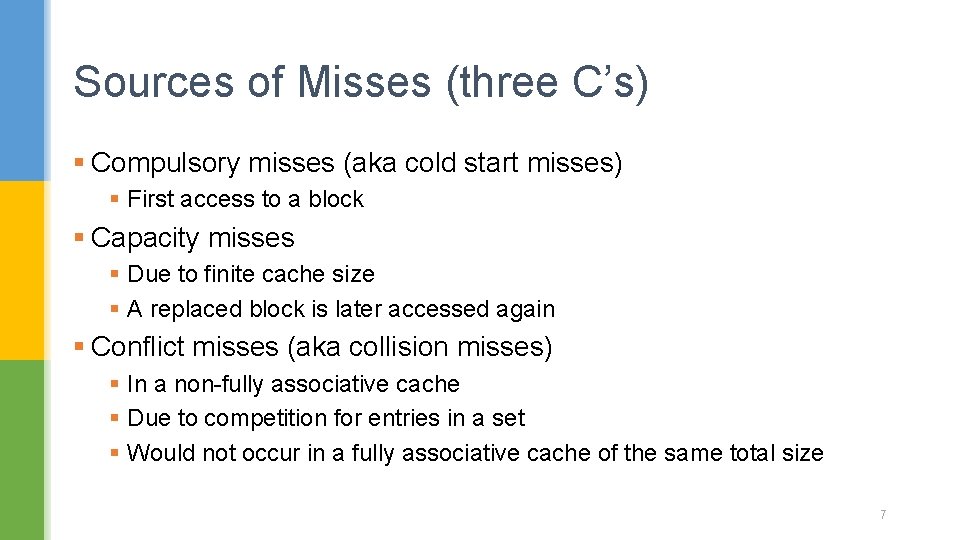

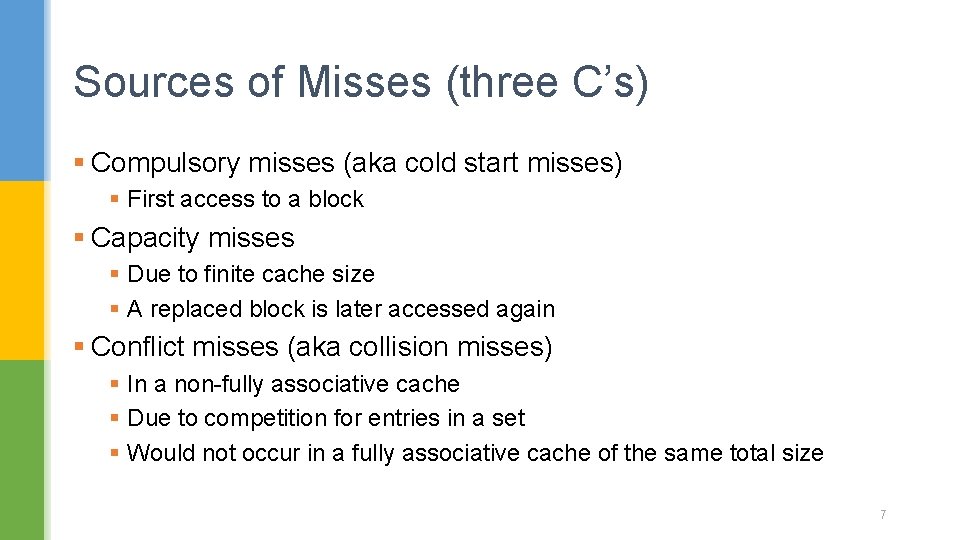

Sources of Misses (three C’s) § Compulsory misses (aka cold start misses) § First access to a block § Capacity misses § Due to finite cache size § A replaced block is later accessed again § Conflict misses (aka collision misses) § In a non-fully associative cache § Due to competition for entries in a set § Would not occur in a fully associative cache of the same total size 7

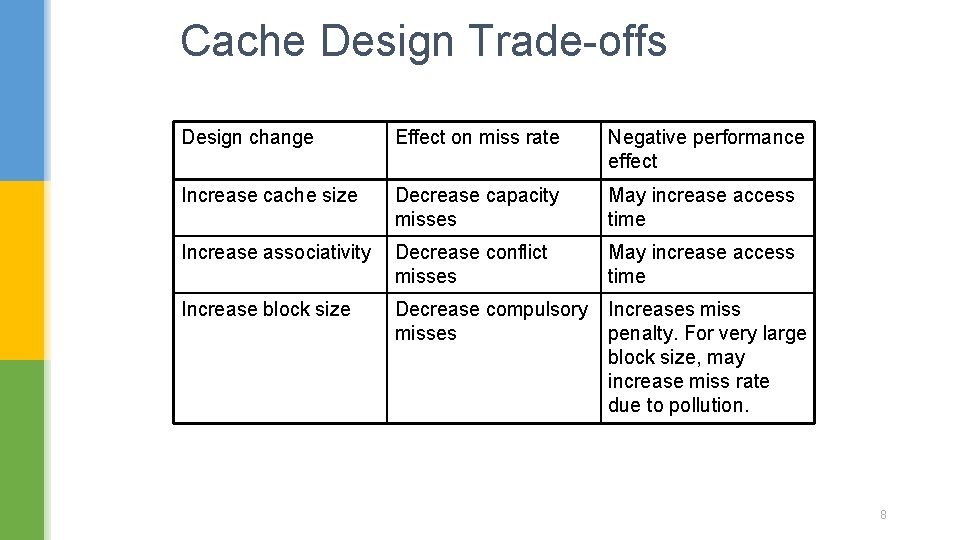

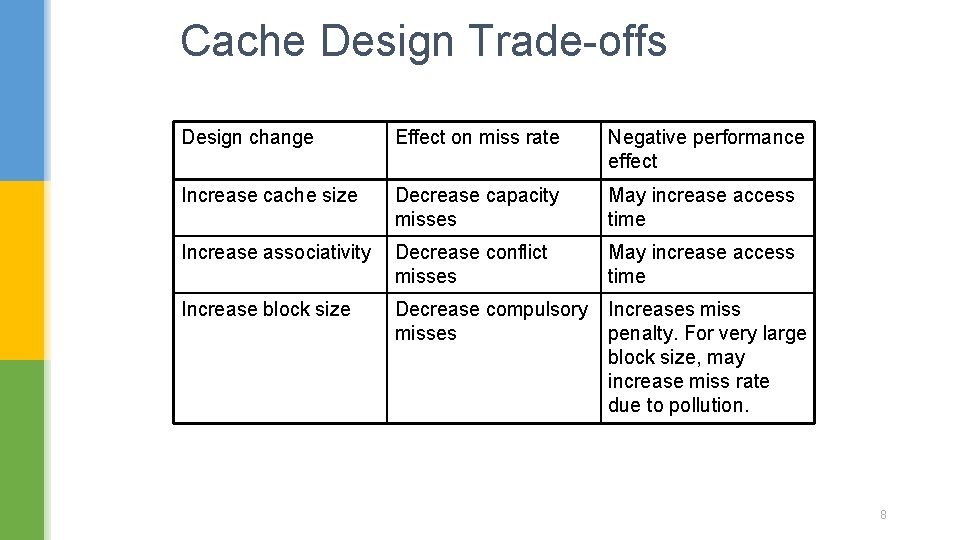

Cache Design Trade-offs Design change Effect on miss rate Negative performance effect Increase cache size Decrease capacity misses May increase access time Increase associativity Decrease conflict misses May increase access time Increase block size Decrease compulsory misses Increases miss penalty. For very large block size, may increase miss rate due to pollution. 8

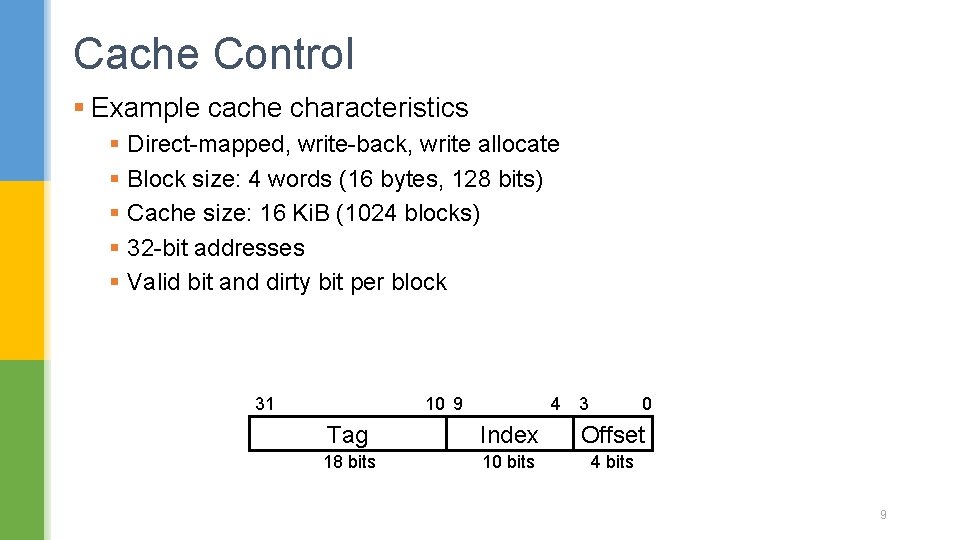

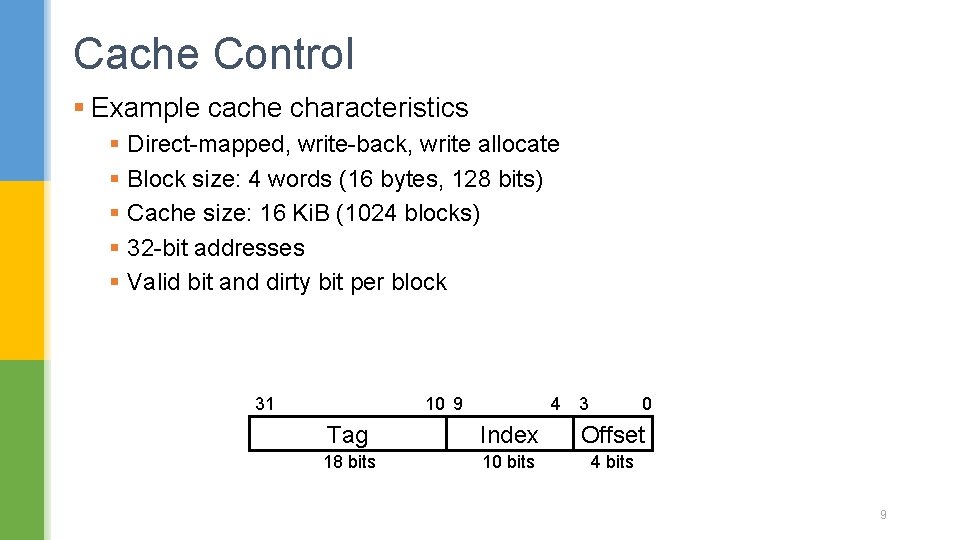

Cache Control § Example cache characteristics § Direct-mapped, write-back, write allocate § Block size: 4 words (16 bytes, 128 bits) § Cache size: 16 Ki. B (1024 blocks) § 32 -bit addresses § Valid bit and dirty bit per block 31 10 9 4 3 0 Tag Index Offset 18 bits 10 bits 4 bits 9

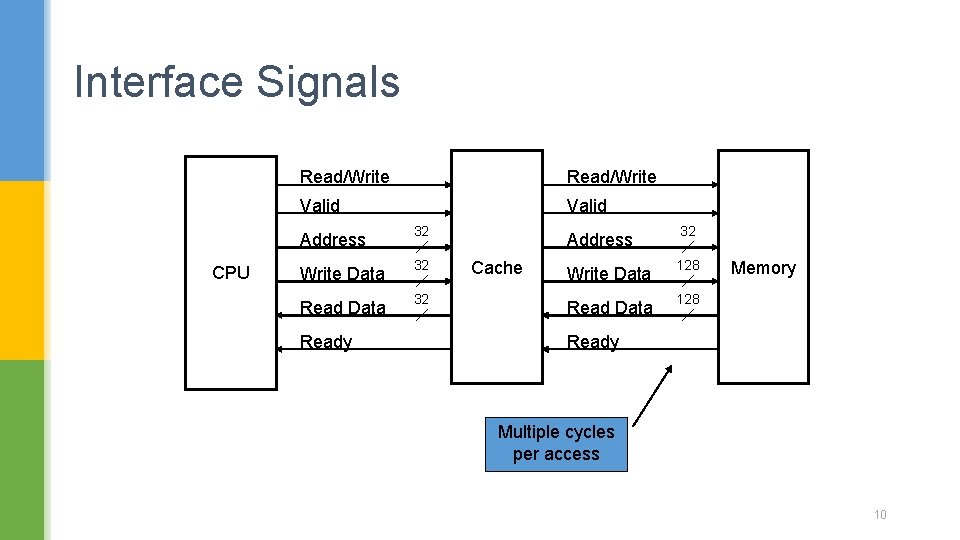

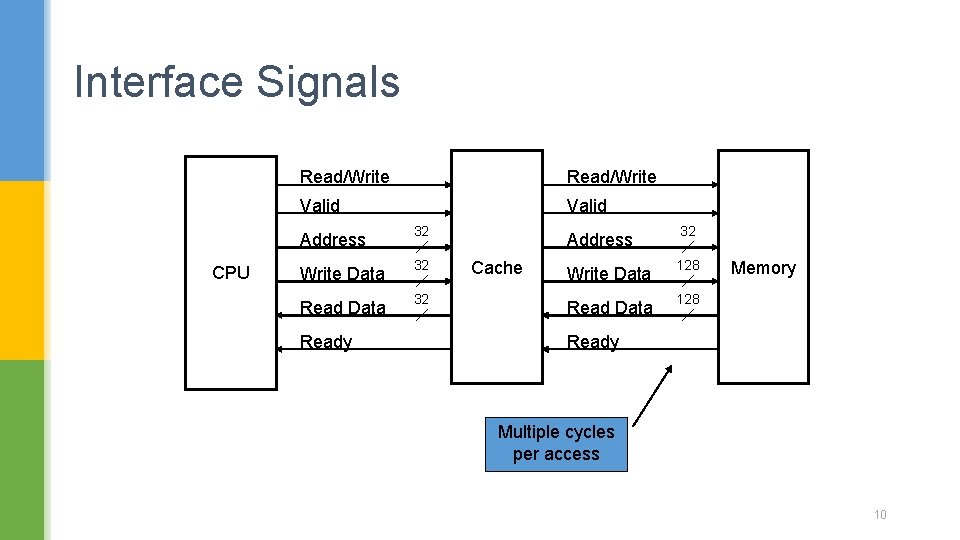

Interface Signals CPU Read/Write Valid Address 32 Write Data 32 Ready Cache Address 32 Write Data 128 Read Data 128 Memory Ready Multiple cycles per access 10

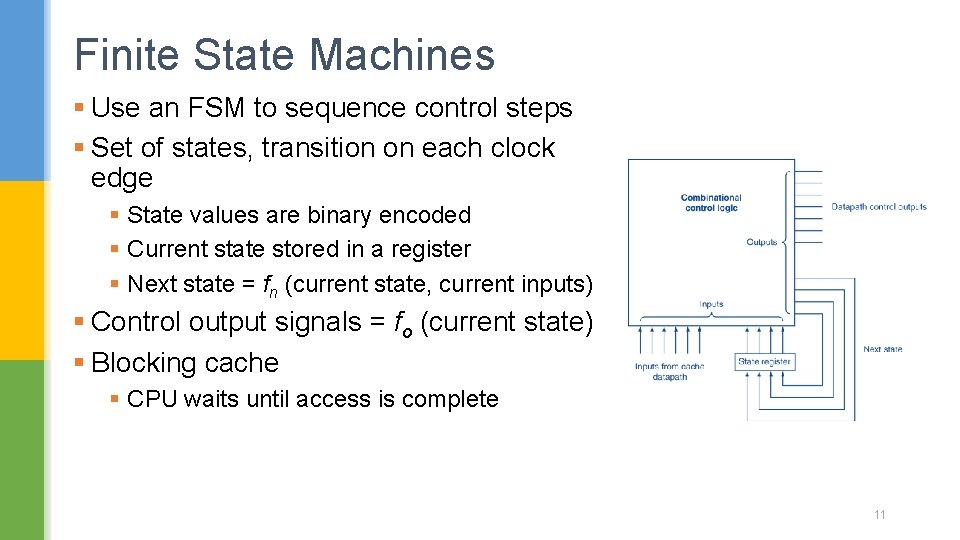

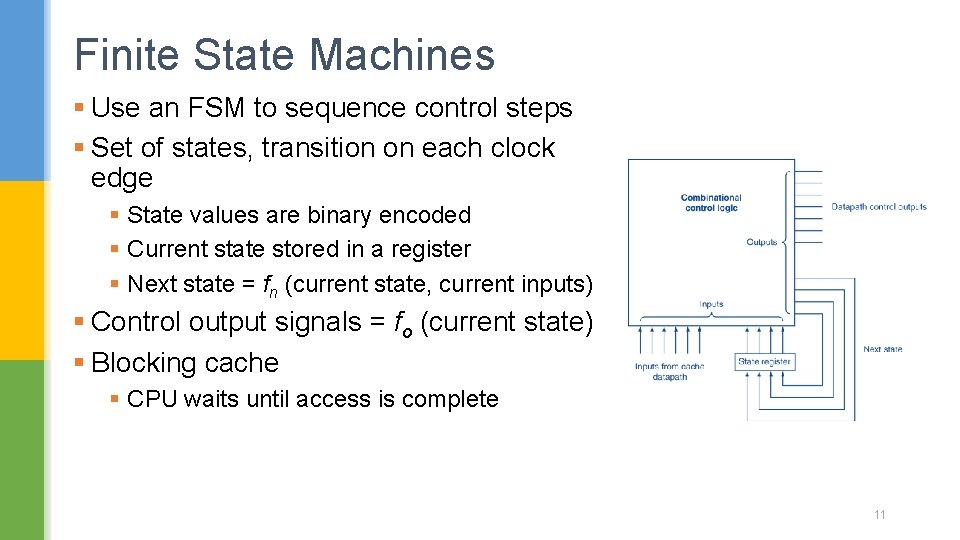

Finite State Machines § Use an FSM to sequence control steps § Set of states, transition on each clock edge § State values are binary encoded § Current state stored in a register § Next state = fn (current state, current inputs) § Control output signals = fo (current state) § Blocking cache § CPU waits until access is complete 11

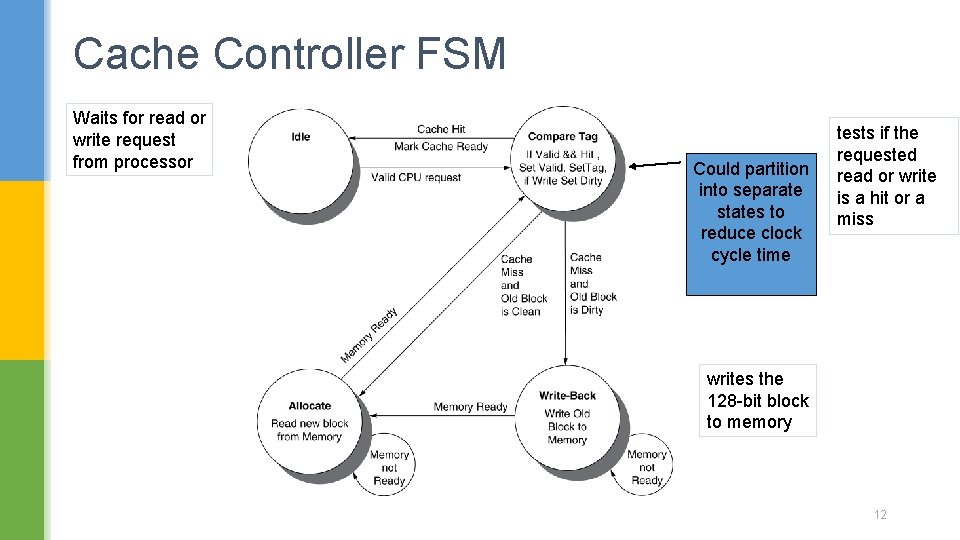

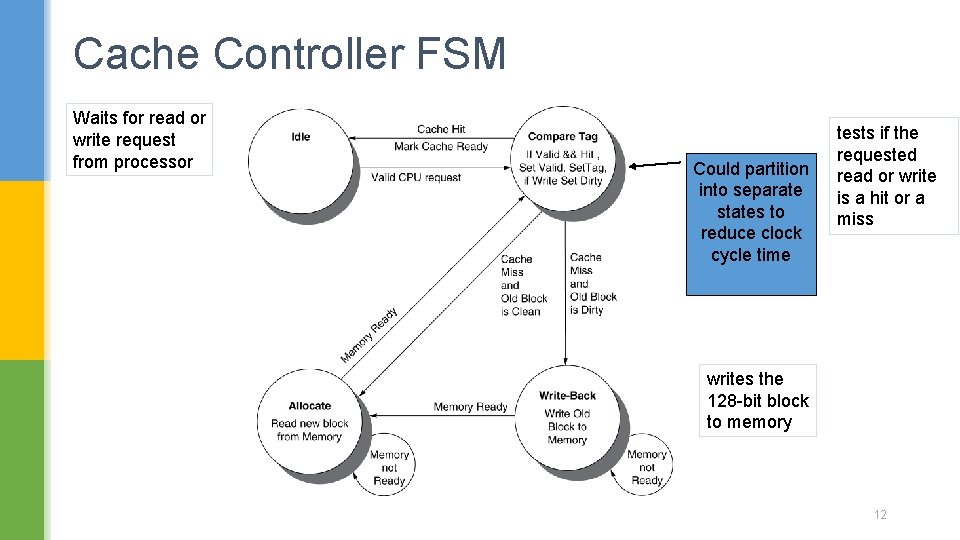

Cache Controller FSM Waits for read or write request from processor Could partition into separate states to reduce clock cycle time tests if the requested read or write is a hit or a miss writes the 128 -bit block to memory 12

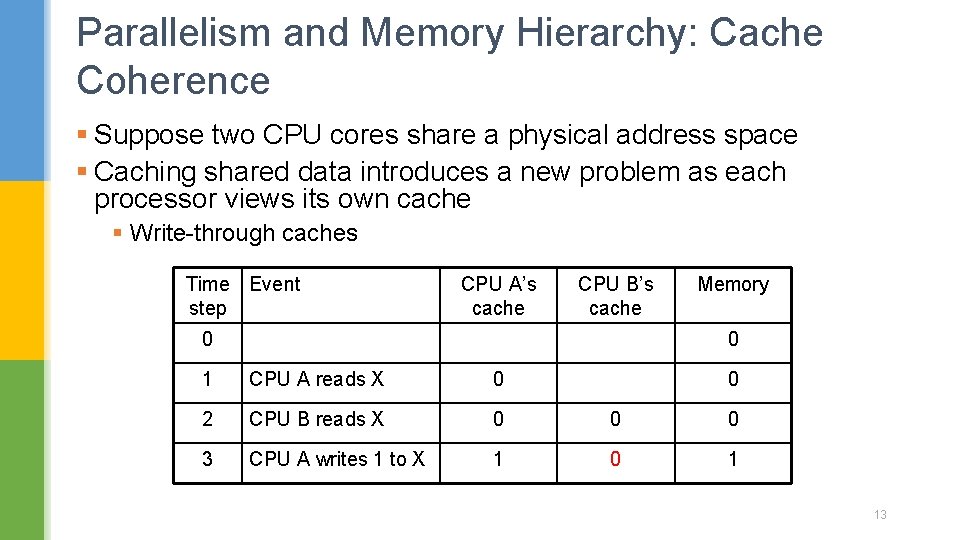

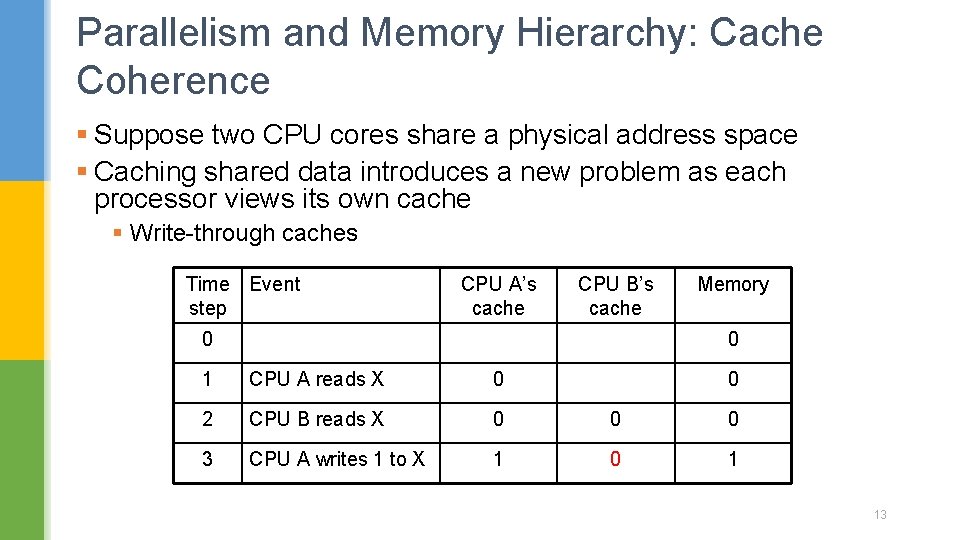

Parallelism and Memory Hierarchy: Cache Coherence § Suppose two CPU cores share a physical address space § Caching shared data introduces a new problem as each processor views its own cache § Write-through caches Time Event step CPU A’s cache CPU B’s cache 0 Memory 0 1 CPU A reads X 0 0 2 CPU B reads X 0 0 0 3 CPU A writes 1 to X 1 0 1 13

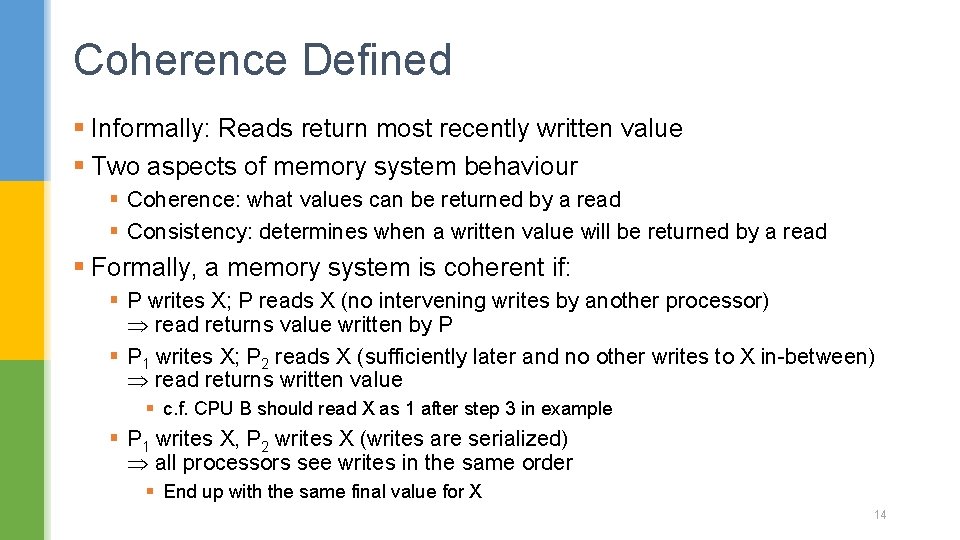

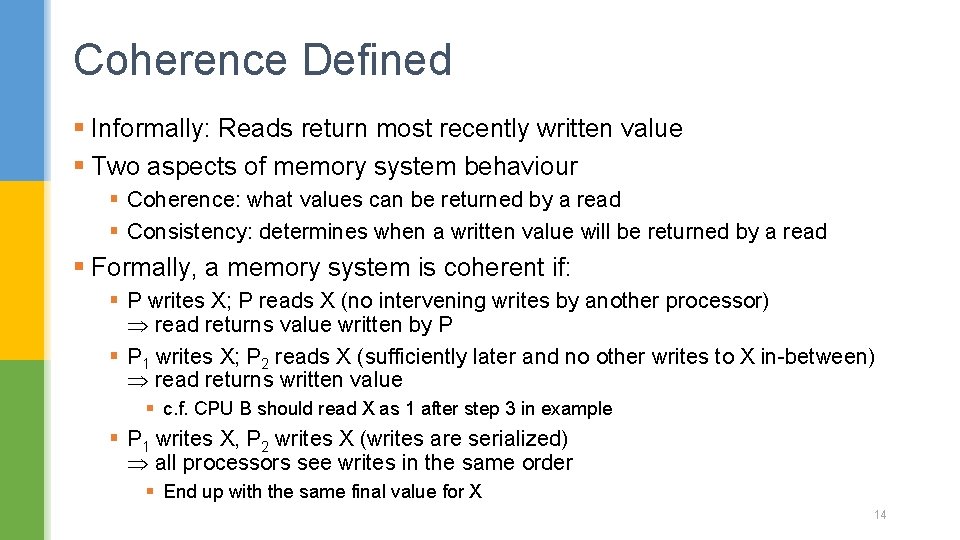

Coherence Defined § Informally: Reads return most recently written value § Two aspects of memory system behaviour § Coherence: what values can be returned by a read § Consistency: determines when a written value will be returned by a read § Formally, a memory system is coherent if: § P writes X; P reads X (no intervening writes by another processor) read returns value written by P § P 1 writes X; P 2 reads X (sufficiently later and no other writes to X in-between) read returns written value § c. f. CPU B should read X as 1 after step 3 in example § P 1 writes X, P 2 writes X (writes are serialized) all processors see writes in the same order § End up with the same final value for X 14

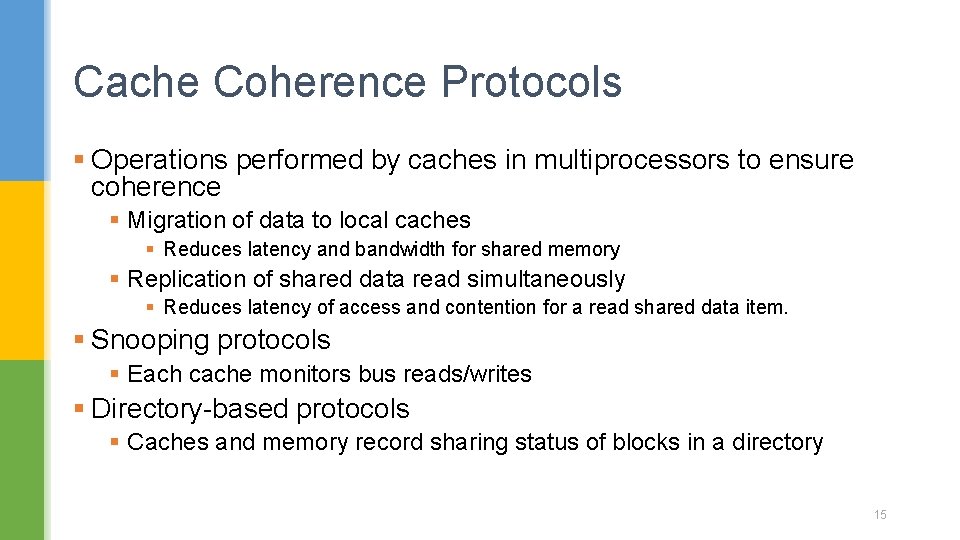

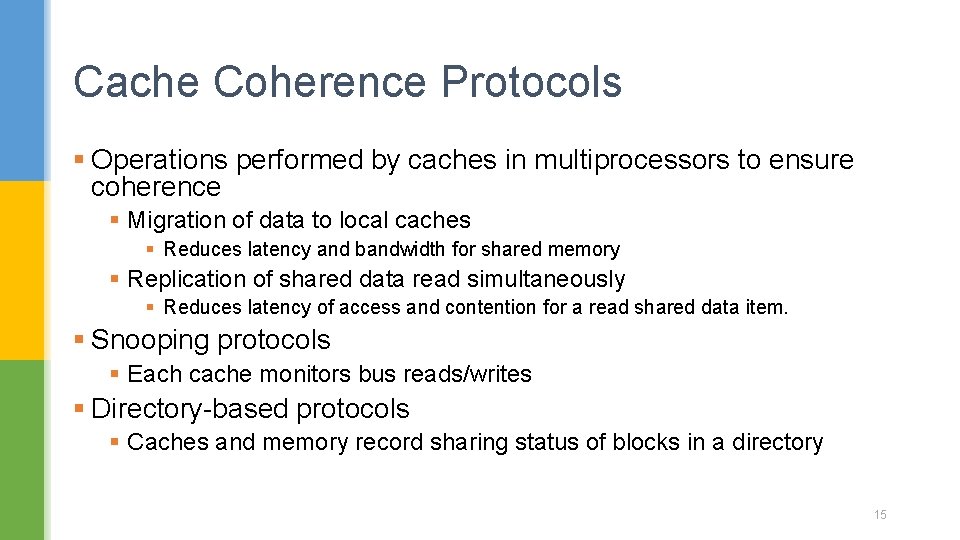

Cache Coherence Protocols § Operations performed by caches in multiprocessors to ensure coherence § Migration of data to local caches § Reduces latency and bandwidth for shared memory § Replication of shared data read simultaneously § Reduces latency of access and contention for a read shared data item. § Snooping protocols § Each cache monitors bus reads/writes § Directory-based protocols § Caches and memory record sharing status of blocks in a directory 15

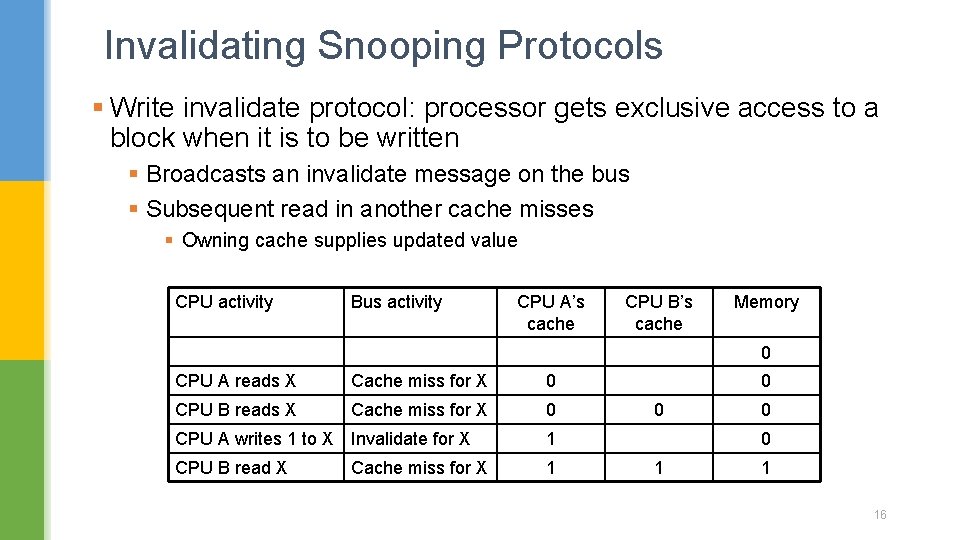

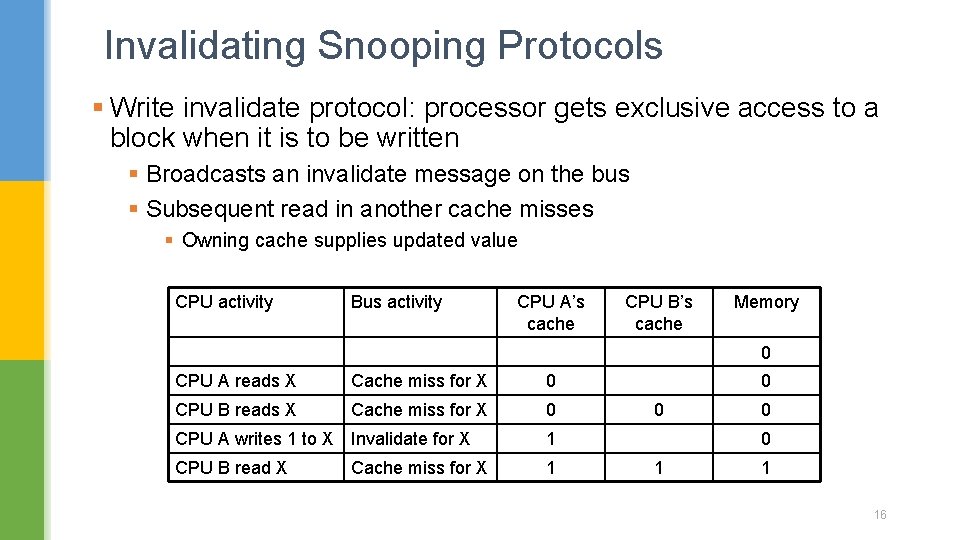

Invalidating Snooping Protocols § Write invalidate protocol: processor gets exclusive access to a block when it is to be written § Broadcasts an invalidate message on the bus § Subsequent read in another cache misses § Owning cache supplies updated value CPU activity Bus activity CPU A’s cache CPU B’s cache Memory 0 CPU A reads X Cache miss for X 0 CPU B reads X Cache miss for X 0 CPU A writes 1 to X Invalidate for X 1 CPU B read X 1 Cache miss for X 0 0 1 1 16

Memory Consistency § When are writes seen by other processors § “Seen” means a read returns the written value § Can’t be instantaneously § Assumptions § A write completes only when all processors have seen it § A processor does not reorder writes with other accesses § Consequence § P writes X then writes Y all processors that see new Y also see new X § Processors can reorder reads, but not writes 17

Redundant Arrays of Inexpensive Disks (RAID) § Impact of I/O on System Performance § elapsed time =100 s, CPU time = 90 s, rest is I/O time § #of processors double every 2 years, speed of processor remains same, I/O time remains same § I/O time = elapsed time – CPU time = 10 s § After 0 years, CPU time = 90 s, I/O time = 10 s, elapsed time= 100 s, %I/O time = 10% § After 2 years, CPU time = 45 s, I/O time = 10 s, elapsed time= 55 s, %I/O time = 18% § After 0 years, CPU time = 23 s, I/O time = 10 s, elapsed time= 33 s, %I/O time = 31% § After 0 years, CPU time = 11 s, I/O time = 10 s, elapsed time= 21 s, %I/O time = 47% § Improvement in CPU = 90/11=8, improvement in elapsed time = 100/21=4. 7, increase in I/O time from 10% to 47% 18

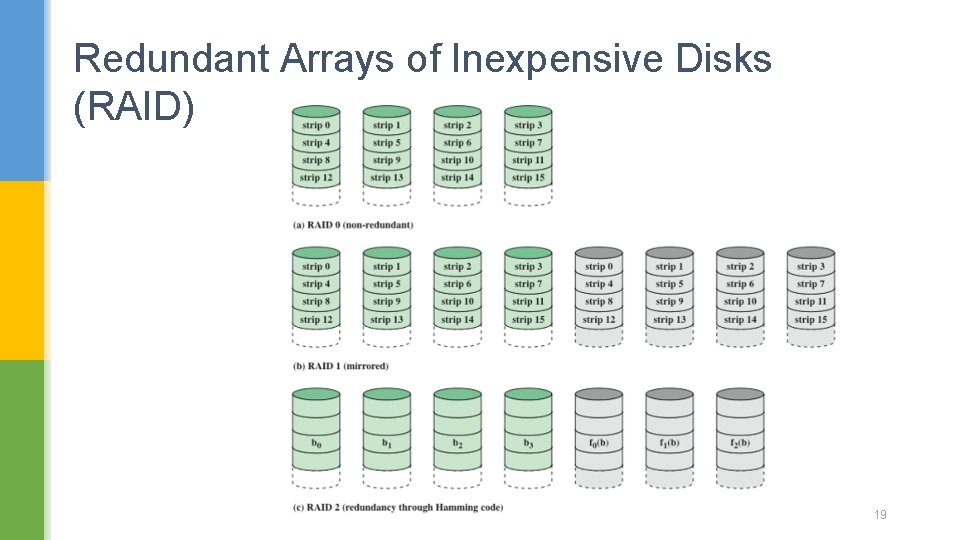

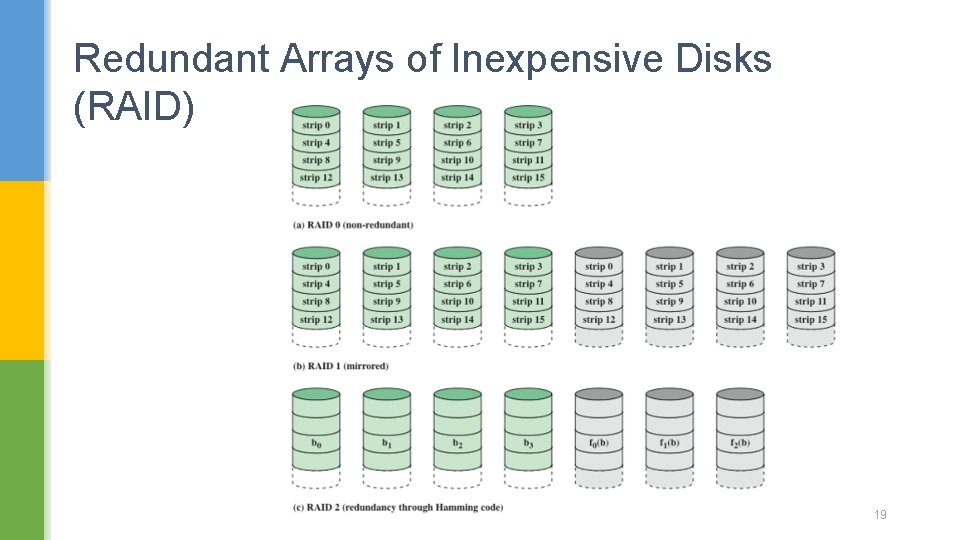

Redundant Arrays of Inexpensive Disks (RAID) 19

Redundant Arrays of Inexpensive Disks (RAID) § RAID 0 § No redundancy § Data striped across all disks § Increase speed § Multiple data requests probably not on same disk § Disks seek in parallel § A set of data is likely to be striped across multiple disks § RAID 1 § Mirroring or shadowing, uses twice as many disks. § On a write, every strip is written twice. § On a read, either copy can be used, distributing the load over more drives. § Most expensive RAID solution, since it requires the most disks. 20

Redundant Arrays of Inexpensive Disks (RAID) § RAID 2 § Each byte is split into a pair of 4 -bit nibbles, and Hamming code added to form a 7 -bit word, of which bits 1, 2 and 4 are parity bits. § all drives have to be rotationally synchronized § Not in use now 21

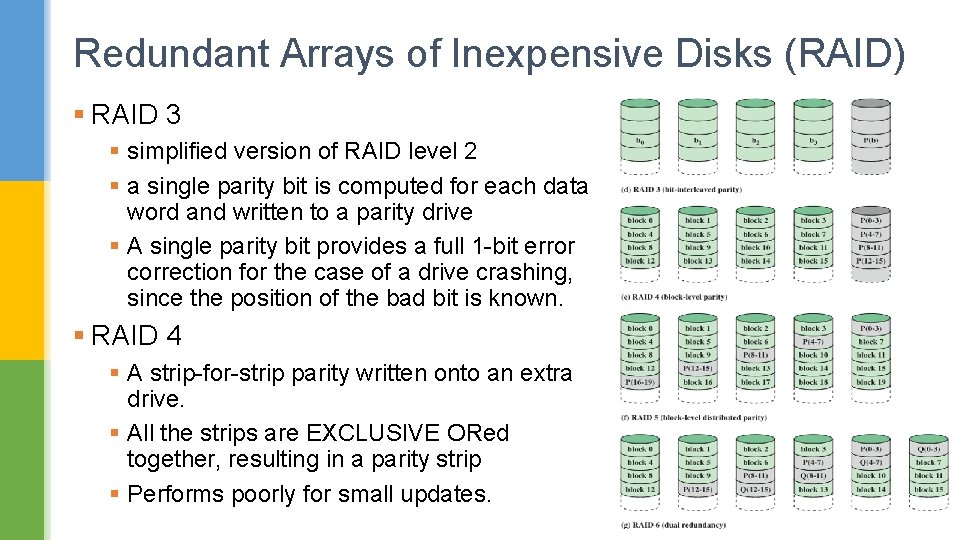

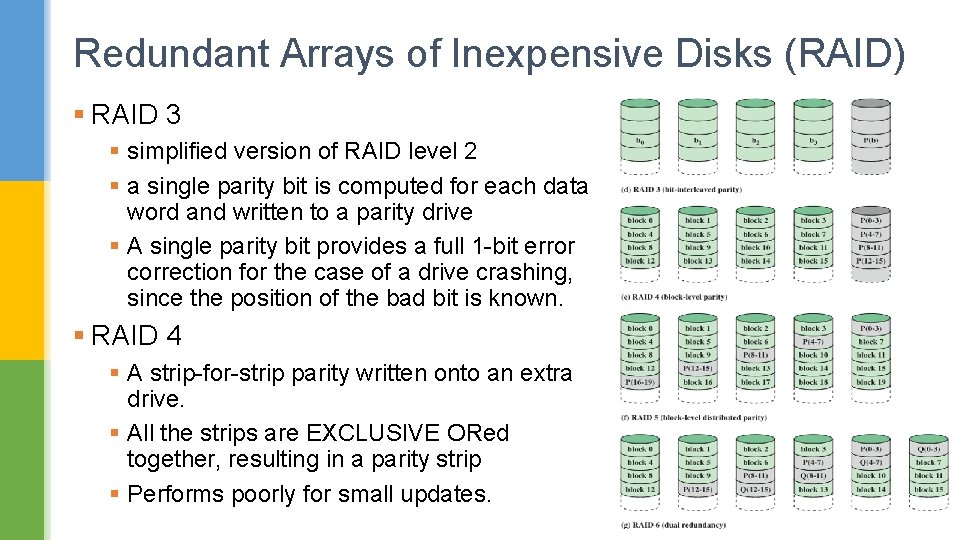

Redundant Arrays of Inexpensive Disks (RAID) § RAID 3 § simplified version of RAID level 2 § a single parity bit is computed for each data word and written to a parity drive § A single parity bit provides a full 1 -bit error correction for the case of a drive crashing, since the position of the bad bit is known. § RAID 4 § A strip-for-strip parity written onto an extra drive. § All the strips are EXCLUSIVE ORed together, resulting in a parity strip § Performs poorly for small updates. 22

Redundant Arrays of Inexpensive Disks (RAID) § RAID 5 § The bottleneck in RAID 4 is eliminated by distributing the parity bits uniformly over all the drives, round robin fashion. § In the event of a drive crash, reconstructing the contents of the failed drive is a complex process. § RAID 6 § Two different parity calculations are carried out and stored in separate blocks on different disks § Advantage: provides extremely high data availability § Three disks would have to fail within the mean time to repair (MTTR) interval to cause data to be lost § Incurs a substantial write penalty because each write affects two parity blocks § Performance benchmarks show a RAID 6 controller can suffer more than a 30% drop in overall write performance compared with a RAID 5 implementation. 23

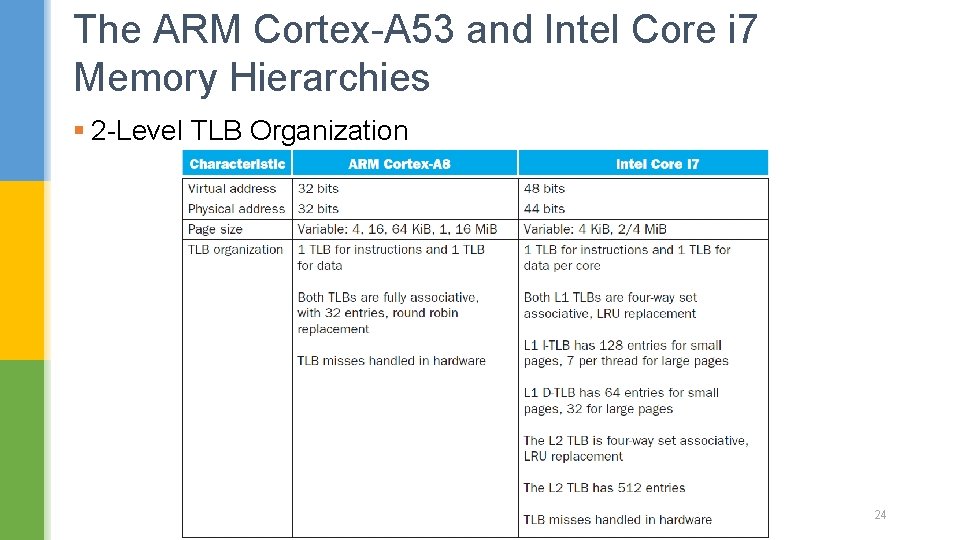

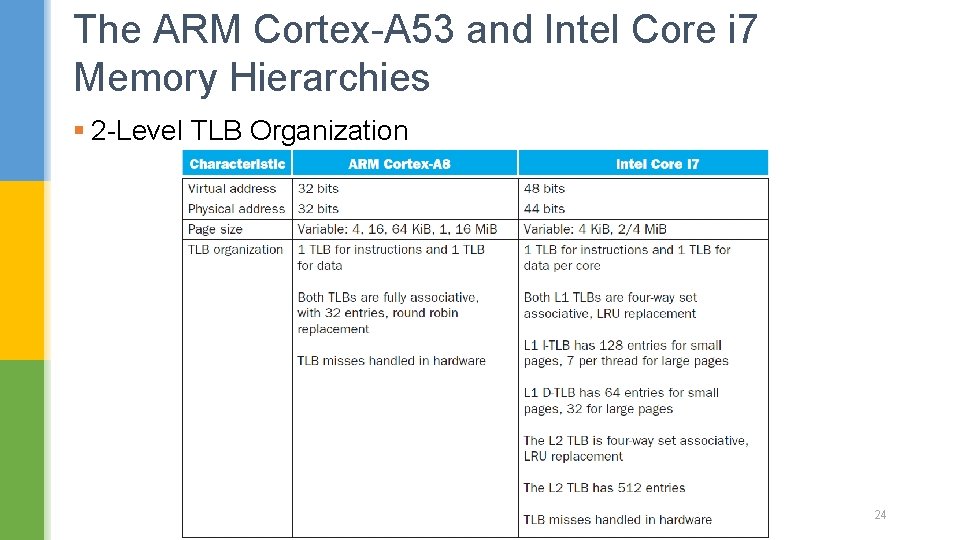

The ARM Cortex-A 53 and Intel Core i 7 Memory Hierarchies § 2 -Level TLB Organization 24