Chapter 5 Large and Fast: Exploiting Memory Hierarchy

- Slides: 12

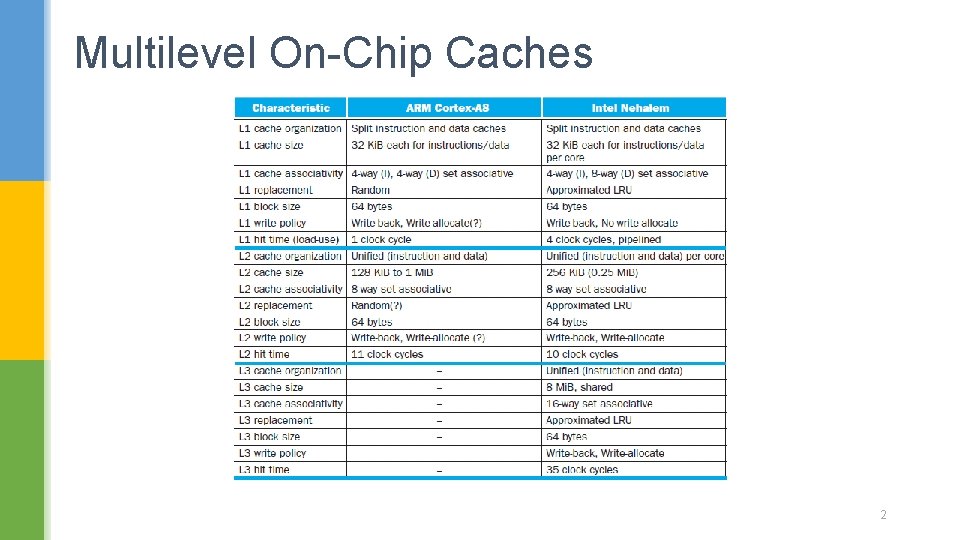

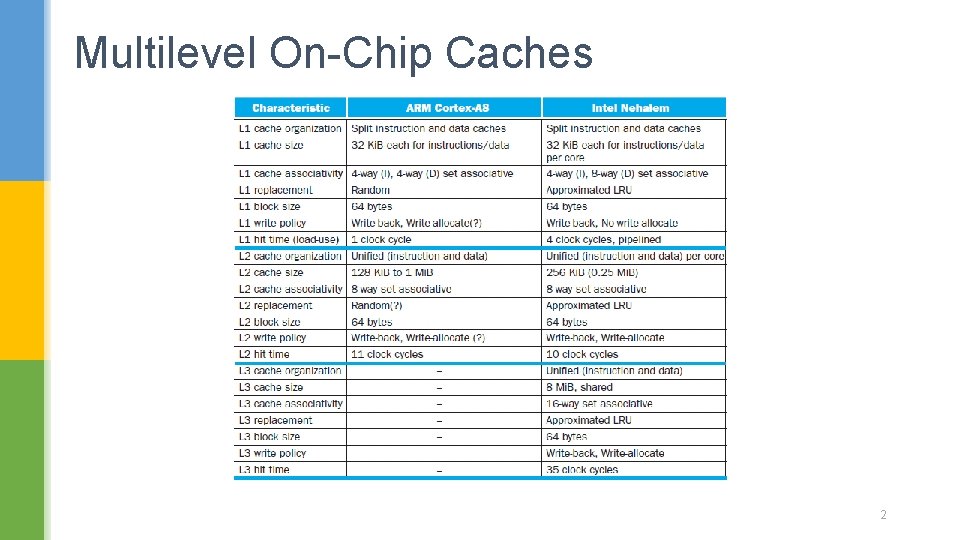

Multilevel On-Chip Caches

Supporting Multiple Issue

- Both the Cortex-A53 and the Core i7 have multi-banked caches that allow multiple accesses per cycle, assuming no bank conflicts
- Cortex-A53 and Core i7 cache optimizations
  - Return requested word first
  - Non-blocking cache
    - Hit under miss allows additional cache hits during a miss
      - Hides some miss latency with other work
    - Miss under miss allows multiple outstanding cache misses
      - Overlaps the latency of two different misses
  - Data prefetching
    - Looks at a pattern of data misses and predicts the next address, so fetching can start before the miss occurs
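The data prefetching idea can be illustrated with a minimal stride predictor. This is only a sketch under stated assumptions: the function name `predict_next_miss` and the policy (predict only when the last two strides between misses are equal and nonzero) are hypothetical and are not claimed to be what the Cortex-A53 or Core i7 implement.

```python
from typing import Optional, Sequence


def predict_next_miss(miss_addresses: Sequence[int]) -> Optional[int]:
    """Return a predicted next miss address, or None if no stable stride is seen.

    Hypothetical policy: predict only when the last two strides between
    consecutive misses are equal and nonzero.
    """
    if len(miss_addresses) < 3:
        return None
    a, b, c = miss_addresses[-3:]
    stride = c - b
    if stride != 0 and b - a == stride:
        return c + stride
    return None


# Misses 64 bytes apart (one block per miss, assuming 64-byte blocks).
print(hex(predict_next_miss([0x1000, 0x1040, 0x1080])))  # 0x10c0
# No stable stride, so no prediction is made.
print(predict_next_miss([0x1000, 0x1040, 0x2000]))  # None
```

A real prefetcher would also have to decide how far ahead to fetch and when to stop; the sketch covers only the pattern-detection step.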

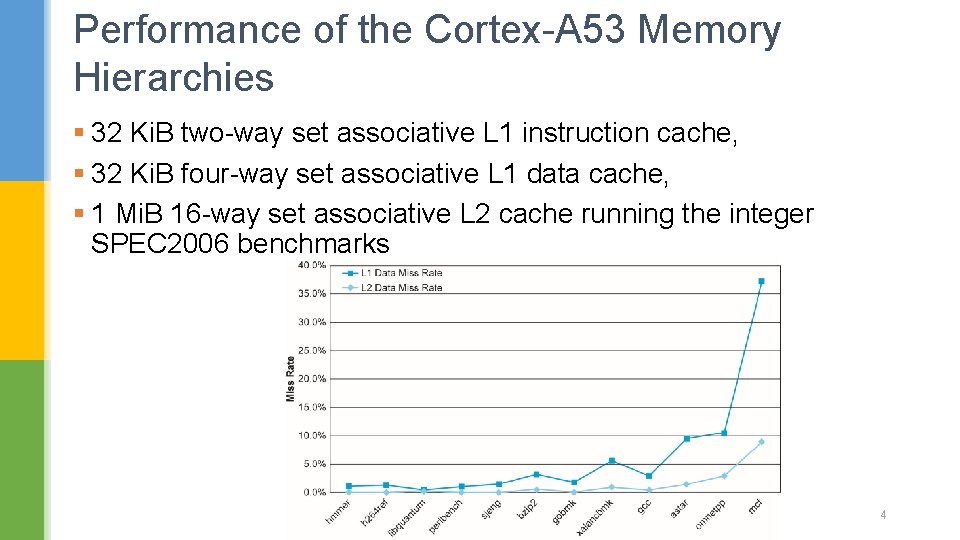

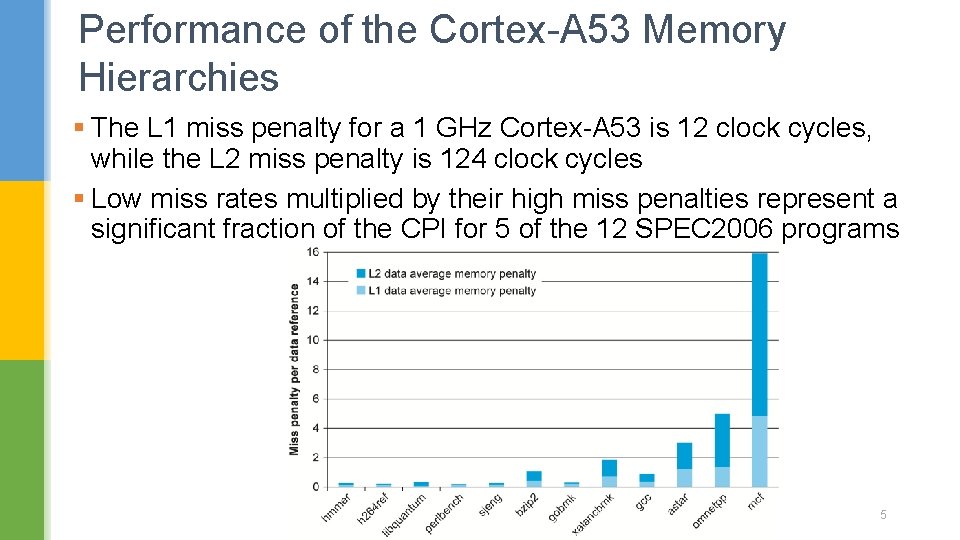

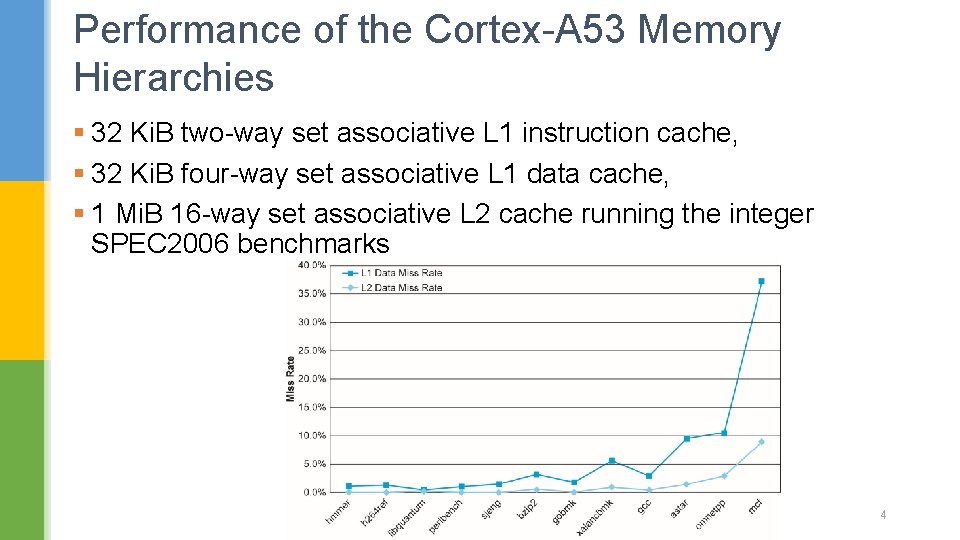

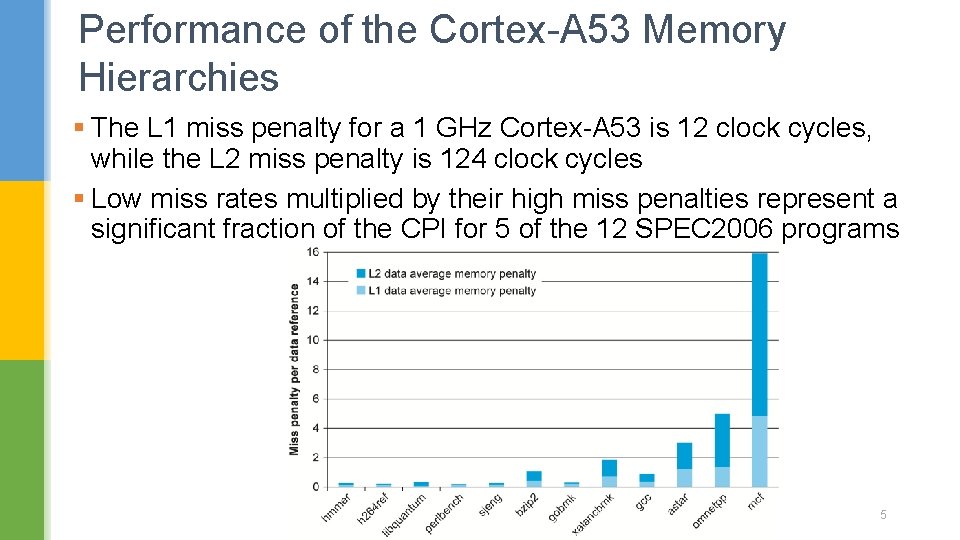

Performance of the Cortex-A53 Memory Hierarchies

- 32 KiB two-way set associative L1 instruction cache
- 32 KiB four-way set associative L1 data cache
- 1 MiB 16-way set associative L2 cache
- Running the integer SPEC2006 benchmarks

Performance of the Cortex-A53 Memory Hierarchies

- The L1 miss penalty for a 1 GHz Cortex-A53 is 12 clock cycles, while the L2 miss penalty is 124 clock cycles
- Low miss rates multiplied by these high miss penalties represent a significant fraction of the CPI for 5 of the 12 SPEC2006 programs
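To see how low miss rates can still add up, the following sketch multiplies misses per instruction by the two penalties quoted on the slide. The miss counts in the usage line are made-up illustrative inputs, not measured Cortex-A53 data, and the model assumes the two penalties simply add (each L2 miss pays 124 cycles on top of the 12-cycle L1 miss penalty).

```python
L1_MISS_PENALTY = 12    # clock cycles, from the slide (1 GHz Cortex-A53)
L2_MISS_PENALTY = 124   # clock cycles, from the slide


def memory_stall_cpi(l1_misses_per_1000: float, l2_misses_per_1000: float) -> float:
    """Memory stall cycles per instruction, given misses per 1000 instructions.

    Assumption: penalties are additive and no miss latency is overlapped.
    """
    l1_stalls = l1_misses_per_1000 / 1000 * L1_MISS_PENALTY
    l2_stalls = l2_misses_per_1000 / 1000 * L2_MISS_PENALTY
    return l1_stalls + l2_stalls


# Hypothetical program: 20 L1 misses and 5 L2 misses per 1000 instructions.
print(f"{memory_stall_cpi(20, 5):.2f}")  # 0.86
```

Under these assumed inputs, only 5 L2 misses per 1000 instructions contribute more stall cycles than 20 L1 misses, because the L2 penalty is roughly ten times larger.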

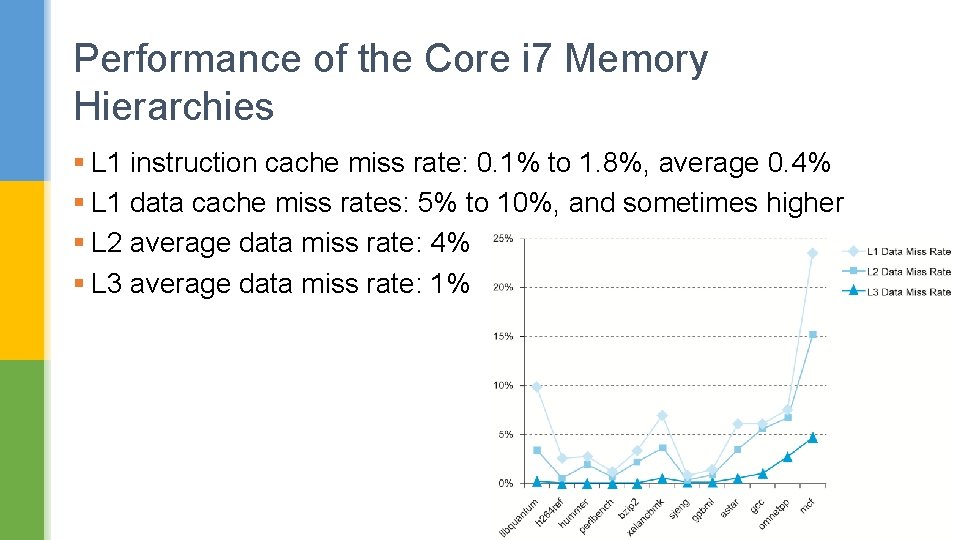

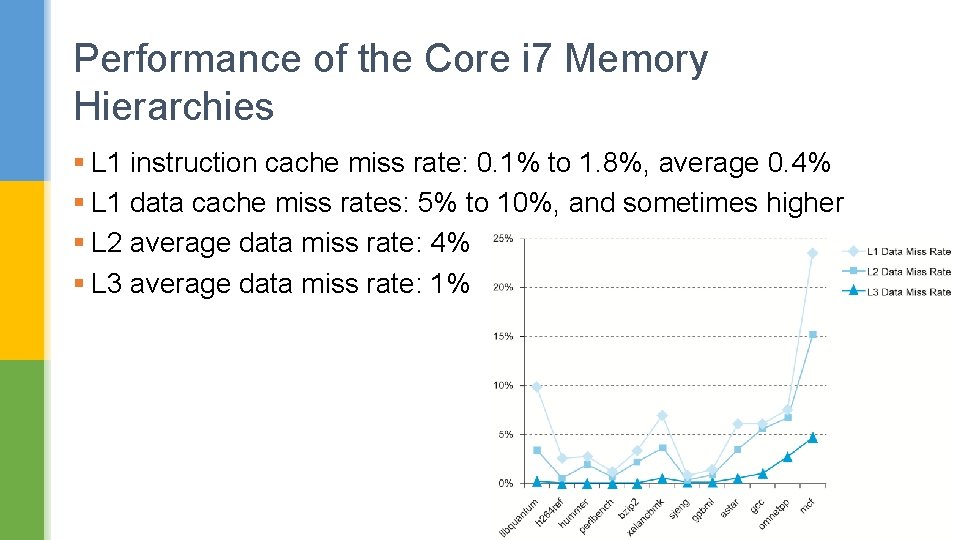

Performance of the Core i7 Memory Hierarchies

- L1 instruction cache miss rate: 0.1% to 1.8%, average 0.4%
- L1 data cache miss rate: 5% to 10%, and sometimes higher
- L2 average data miss rate: 4%
- L3 average data miss rate: 1%

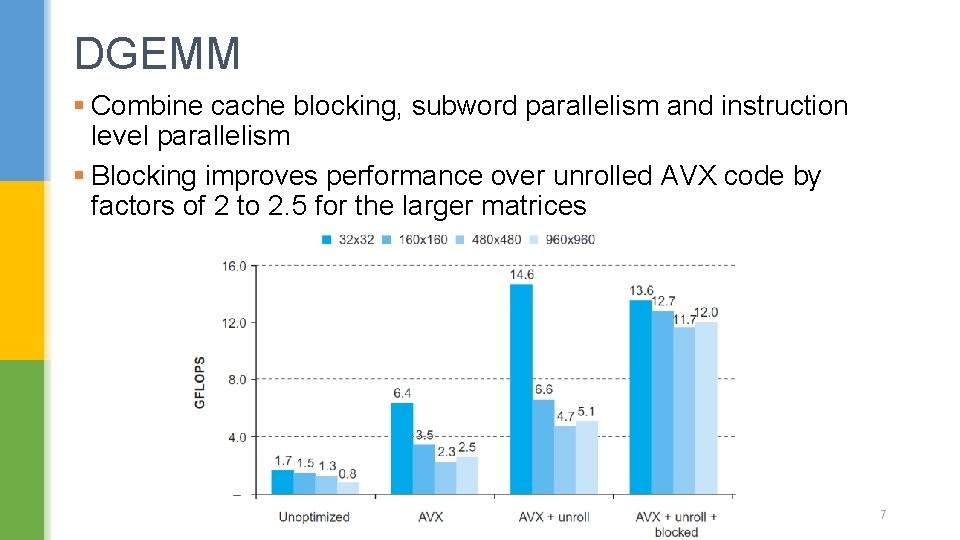

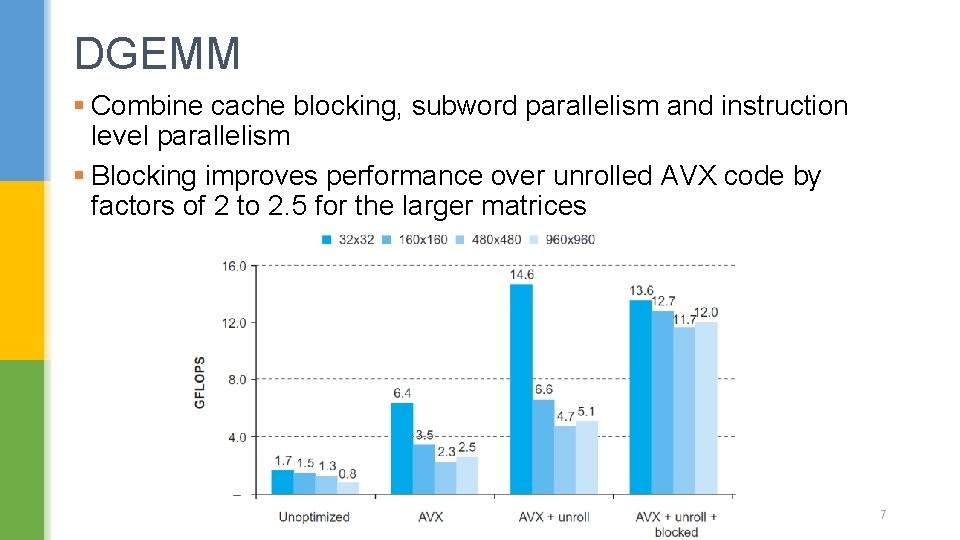

DGEMM

- Combines cache blocking, subword parallelism, and instruction-level parallelism
- Blocking improves performance over unrolled AVX code by factors of 2 to 2.5 for the larger matrices
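The loop structure of cache blocking can be sketched as follows. This is an illustrative Python version, not the AVX code the slide refers to: it shows only how the matrices are processed tile by tile, and it will not reproduce the quoted speedups. The names `dgemm_blocked` and `block`, and the flat row-major list layout, are assumptions made for the example.

```python
def dgemm_blocked(n, block, a, b, c):
    """Compute c += a * b for n x n matrices stored as flat row-major lists."""
    for si in range(0, n, block):
        for sj in range(0, n, block):
            for sk in range(0, n, block):
                # Multiply one block x block tile. On real hardware the tile
                # size is chosen so the working set stays in the cache.
                for i in range(si, min(si + block, n)):
                    for j in range(sj, min(sj + block, n)):
                        acc = c[i * n + j]
                        for k in range(sk, min(sk + block, n)):
                            acc += a[i * n + k] * b[k * n + j]
                        c[i * n + j] = acc


# Usage: multiplying by the identity matrix returns the original matrix.
n = 4
a = [float(x) for x in range(n * n)]
identity = [1.0 if i == j else 0.0 for i in range(n) for j in range(n)]
c = [0.0] * (n * n)
dgemm_blocked(n, 2, a, identity, c)
print(c == a)  # True
```

The three outer loops pick a tile and the three inner loops are an ordinary matrix multiply restricted to that tile, so each element brought into the cache is reused many times before it is evicted.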

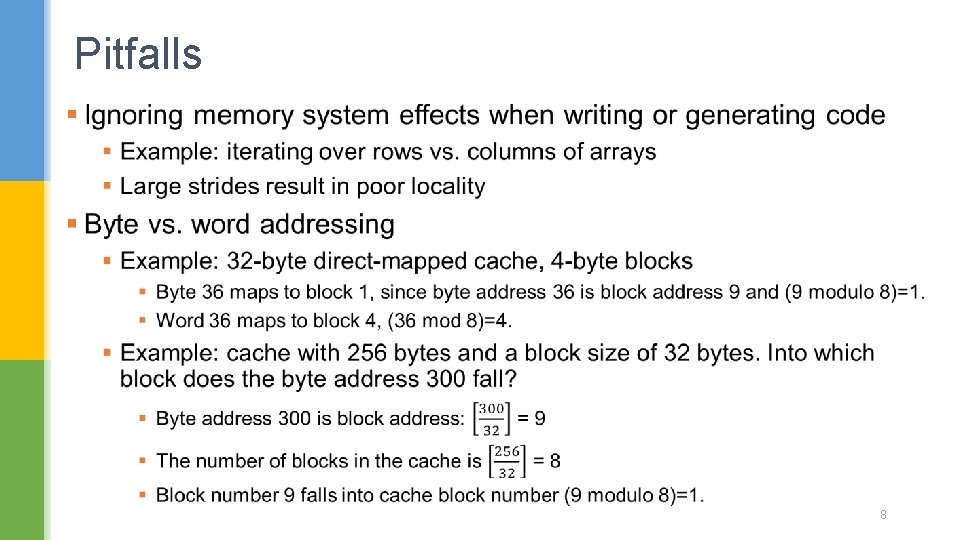

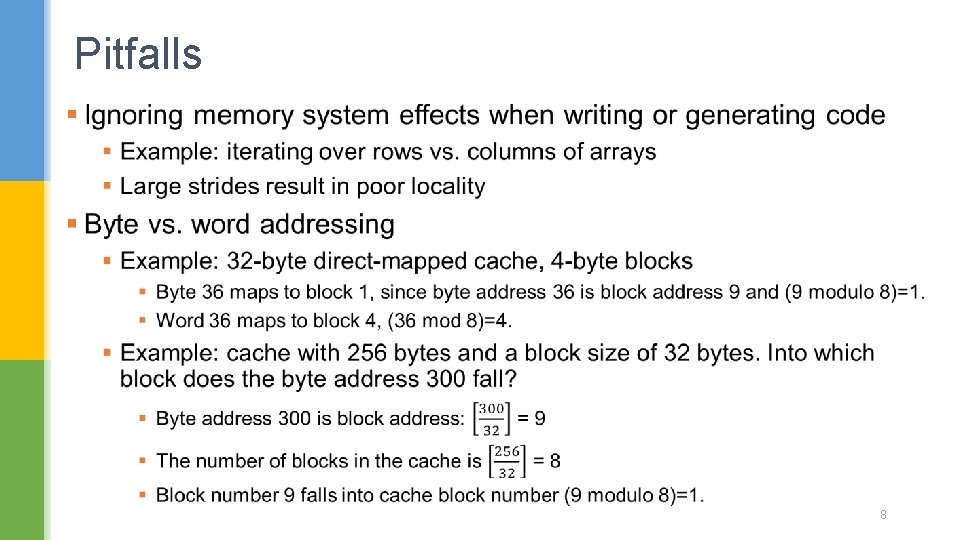

Pitfalls

- In a multiprocessor with a shared L2 or L3 cache
  - Less associativity than cores results in conflict misses
  - More cores need increased associativity
- Using AMAT (average memory access time) to evaluate performance of out-of-order processors
  - Ignores the effect of non-blocked accesses
  - Instead, evaluate performance by simulation
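The first pitfall can be made concrete with a small simulation of a single cache set under LRU replacement. It is a deliberately worst-case sketch: every core is assumed to touch one private block, all of those blocks are assumed to map to the same set, and cores take turns in round-robin order. The function name `shared_set_misses` and its parameters are hypothetical.

```python
from collections import OrderedDict


def shared_set_misses(ways: int, cores: int, rounds: int) -> int:
    """Count misses in one LRU cache set shared by `cores` cores.

    Each core repeatedly touches its own block, and all blocks are assumed
    to map to the same set (a worst case chosen for illustration).
    """
    cache_set = OrderedDict()  # keys are block tags, ordered oldest to newest
    misses = 0
    for _ in range(rounds):
        for core in range(cores):
            tag = core  # hypothetical: one distinct block per core
            if tag in cache_set:
                cache_set.move_to_end(tag)  # hit: mark as most recently used
            else:
                misses += 1
                if len(cache_set) >= ways:
                    cache_set.popitem(last=False)  # evict least recently used
                cache_set[tag] = True
    return misses


print(shared_set_misses(ways=2, cores=4, rounds=100))  # 400: every access misses
print(shared_set_misses(ways=4, cores=4, rounds=100))  # 4: only the first touches miss
```

With fewer ways than cores, each block is evicted before its owner returns to it, so the set thrashes. Once associativity matches the core count, only the compulsory misses remain.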

Pitfalls

- Extending address range using segments
  - E.g., Intel 80286
  - But a segment is not always big enough
  - Makes address arithmetic complicated
- Implementing a VMM on an ISA not designed for virtualization
  - E.g., non-privileged instructions accessing hardware resources
  - Either extend the ISA, or require the guest OS not to use problematic instructions

Fallacies

- Disk failure rates in the field match their specifications
  - A study of 100,000 disks with a quoted mean time to failure (MTTF) of 1,000,000 to 1,500,000 hours, or an annual failure rate (AFR) of 0.6% to 0.8%, found AFRs of 2% to 4%, often 3 to 5 times higher than the specified rates
  - A study of more than 100,000 disks at Google, with a quoted AFR of 1.5%, saw failure rates of 1.7% for drives in their first year rise to 8.6% for drives in their third year, about 5 to 6 times the declared rate
- Operating systems are the best place to schedule disk accesses
  - The OS sorts the logical block addresses (LBAs) into increasing order to improve performance
  - But the disk knows the actual mapping of logical addresses onto the physical geometry of sectors, tracks, and surfaces, so it can reduce rotational and seek latencies further by rescheduling
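The relationship between a quoted MTTF and an AFR can be checked with a short calculation. The sketch below uses the common approximation AFR ≈ hours per year / MTTF, which assumes a constant failure rate and continuous operation. The helper name `afr_from_mttf` is hypothetical, and the inputs are MTTF values of 1,000,000 and 1,500,000 hours.

```python
HOURS_PER_YEAR = 8760  # 24 hours * 365 days


def afr_from_mttf(mttf_hours: float) -> float:
    """Approximate annual failure rate (as a fraction) from MTTF in hours."""
    return HOURS_PER_YEAR / mttf_hours


for mttf in (1_000_000, 1_500_000):
    print(f"MTTF {mttf:>9,} h -> AFR {afr_from_mttf(mttf):.2%}")
# MTTF 1,000,000 h -> AFR 0.88%
# MTTF 1,500,000 h -> AFR 0.58%
```

These values land close to the quoted 0.6% to 0.8% range, which is why field AFRs of 2% to 4% amount to several times the specified rate.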

Concluding Remarks

- Fast memories are small, large memories are slow
  - We really want fast, large memories
  - Caching gives this illusion
- Principle of locality
  - Programs use a small part of their memory space frequently
- Memory hierarchy
  - L1 cache ↔ L2 cache ↔ … ↔ DRAM memory ↔ disk
- Multilevel caches make it possible to use more cache optimizations more easily
- Memory system design is critical for multiprocessors
- Compiler enhancements, such as restructuring the loops that access arrays, substantially improve locality and cache performance
- Prefetching: a block of data is brought into the cache before it is actually referenced
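The effect of restructuring loops can be illustrated by counting misses in a toy cache model for two traversal orders of the same array. Everything about the model is an assumption chosen for the example: a direct-mapped cache with 8 lines of 8 array elements each, a 64 x 64 row-major array, and the function name `traversal_misses`.

```python
def traversal_misses(n: int, row_order: bool,
                     num_blocks: int = 8, block_elems: int = 8) -> int:
    """Count misses when reading an n x n row-major array once.

    The cache is a hypothetical direct-mapped cache holding `num_blocks`
    blocks of `block_elems` array elements each.
    """
    tags = [None] * num_blocks  # block number currently held in each line
    misses = 0
    for outer in range(n):
        for inner in range(n):
            # Row order walks along a row; column order walks down a column.
            i, j = (outer, inner) if row_order else (inner, outer)
            block = (i * n + j) // block_elems  # element index -> block number
            line = block % num_blocks           # direct-mapped placement
            if tags[line] != block:
                tags[line] = block
                misses += 1
    return misses


print(traversal_misses(64, row_order=True))   # 512: one miss per block
print(traversal_misses(64, row_order=False))  # 4096: every access misses
```

Swapping the two loops changes nothing about the result of the computation, yet in this model it cuts the miss count by a factor of 8, because consecutive accesses then fall in the same block.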