CGS 3763 Operating Systems Concepts Spring 2013 Dan

- Slides: 25

CGS 3763 Operating Systems Concepts
Spring 2013
Dan C. Marinescu
Office: HEC 304
Office hours: M-Wd 11:30 - 12:30 AM

Lecture 37 – Wednesday, April 17, 2013
- Last time:
  - Page replacement algorithms
  - Implementation of paging
  - Hit and miss ratios
  - Performance penalties
- Today:
  - Performance penalties
  - Spatial and temporal locality of reference
  - Virtual memory
  - Page fault handling
  - Working set concept
- Next time:
  - Class review
- Reading assignments:
  - Chapter 9 of the textbook

Lecture 38 2

Two-level memory system
- To understand the effect of the hit/miss ratios we consider only a two-level memory system:
  - P: a faster and smaller primary memory
  - S: a slower and larger secondary memory
- The two levels could be:
  - P = L1 cache and S = main memory
  - P = main memory and S = disk

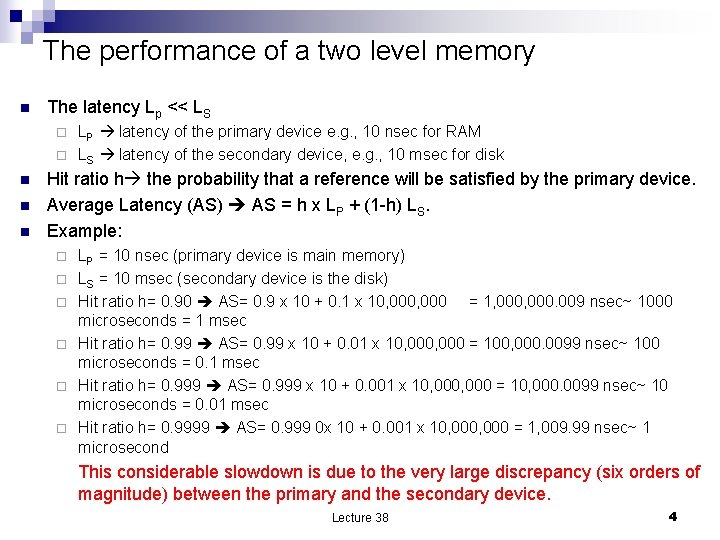

The performance of a two-level memory
- The latency: $L_P \ll L_S$
  - $L_P$: latency of the primary device, e.g., 10 nsec for RAM
  - $L_S$: latency of the secondary device, e.g., 10 msec for disk
- Hit ratio $h$: the probability that a reference will be satisfied by the primary device.
- Average latency: $AS = h \times L_P + (1 - h) \times L_S$
- Example:
  - $L_P = 10$ nsec (primary device is main memory)
  - $L_S = 10$ msec $= 10{,}000{,}000$ nsec (secondary device is the disk)
  - Hit ratio $h = 0.90$: $AS = 0.9 \times 10 + 0.1 \times 10{,}000{,}000 = 1{,}000{,}009$ nsec ≈ 1000 microseconds = 1 msec
  - Hit ratio $h = 0.99$: $AS = 0.99 \times 10 + 0.01 \times 10{,}000{,}000 = 100{,}009.9$ nsec ≈ 100 microseconds = 0.1 msec
  - Hit ratio $h = 0.999$: $AS = 0.999 \times 10 + 0.001 \times 10{,}000{,}000 = 10{,}009.99$ nsec ≈ 10 microseconds = 0.01 msec
  - Hit ratio $h = 0.9999$: $AS = 0.9999 \times 10 + 0.0001 \times 10{,}000{,}000 = 1{,}009.999$ nsec ≈ 1 microsecond
- This considerable slowdown is due to the very large discrepancy (six orders of magnitude) between the latencies of the primary and the secondary device.
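The arithmetic in the example can be checked with a few lines of Python. This is only a sketch: the function name `average_latency_ns` and the constant names are illustrative, and all latencies are expressed in nanoseconds.

```python
def average_latency_ns(hit_ratio, primary_ns, secondary_ns):
    """Average latency AS = h * LP + (1 - h) * LS, with all values in nsec."""
    return hit_ratio * primary_ns + (1.0 - hit_ratio) * secondary_ns

LP_NS = 10            # primary device (main memory): 10 nsec
LS_NS = 10_000_000    # secondary device (disk): 10 msec = 10,000,000 nsec

# Print the average latency for the four hit ratios used in the example.
for h in (0.90, 0.99, 0.999, 0.9999):
    print(f"h = {h}: AS = {average_latency_ns(h, LP_NS, LS_NS):,.3f} nsec")
```

Each extra "9" in the hit ratio cuts the average latency by roughly a factor of ten, because the secondary-device term dominates the sum.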

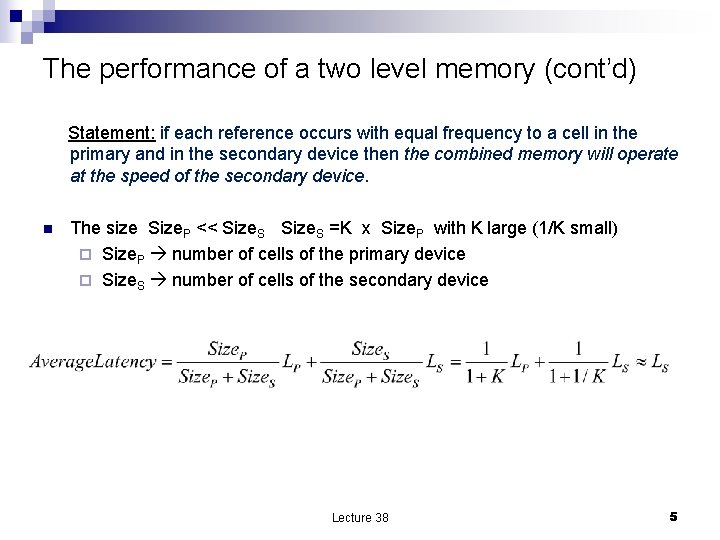

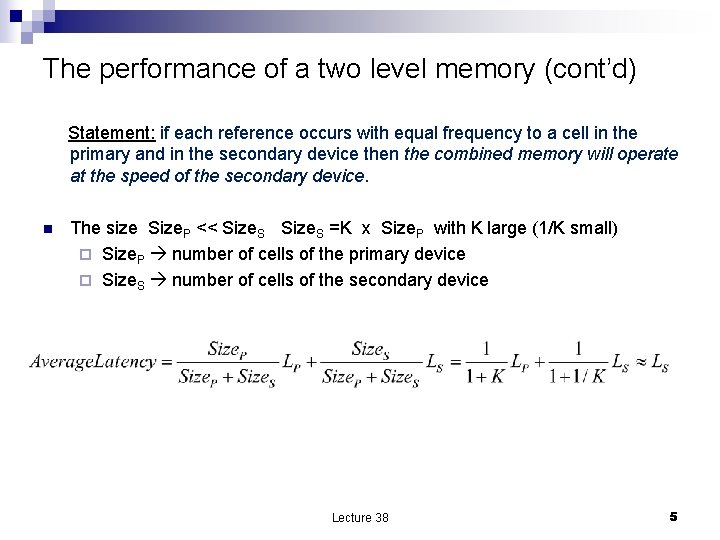

The performance of a two-level memory (cont’d)
- Statement: if each reference occurs with equal frequency to a cell in the primary and in the secondary device, then the combined memory will operate at the speed of the secondary device.
- The size: $Size_P \ll Size_S = K \times Size_P$ with $K$ large ($1/K$ small)
  - $Size_P$: number of cells of the primary device
  - $Size_S$: number of cells of the secondary device
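One way to read the statement, assuming that references are spread uniformly over all cells of both devices (an interpretation, not spelled out on the slide), is that the hit ratio equals the fraction of cells that live in the primary device. Substituting into the average latency formula gives:

```latex
h = \frac{Size_P}{Size_P + Size_S} = \frac{1}{1 + K},
\qquad
AS = \frac{1}{1 + K}\, L_P + \frac{K}{1 + K}\, L_S \approx L_S
\quad \text{for large } K .
```

Under that assumption almost every reference misses, so the primary device contributes very little and the combined memory runs at roughly the speed of the secondary device.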

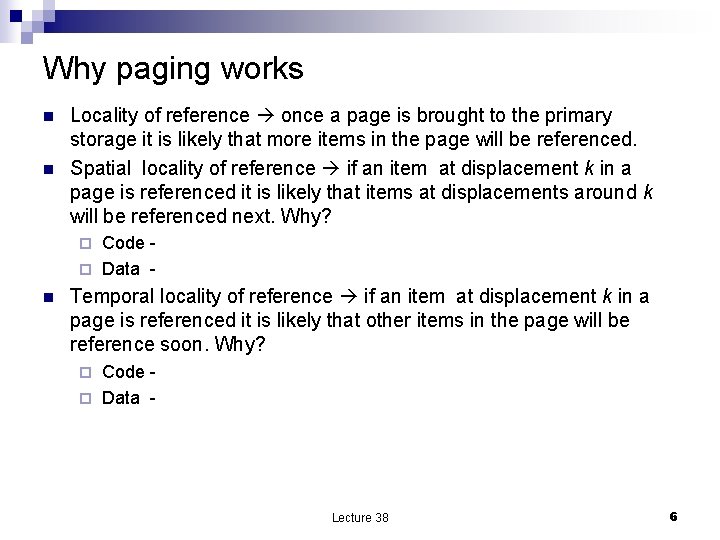

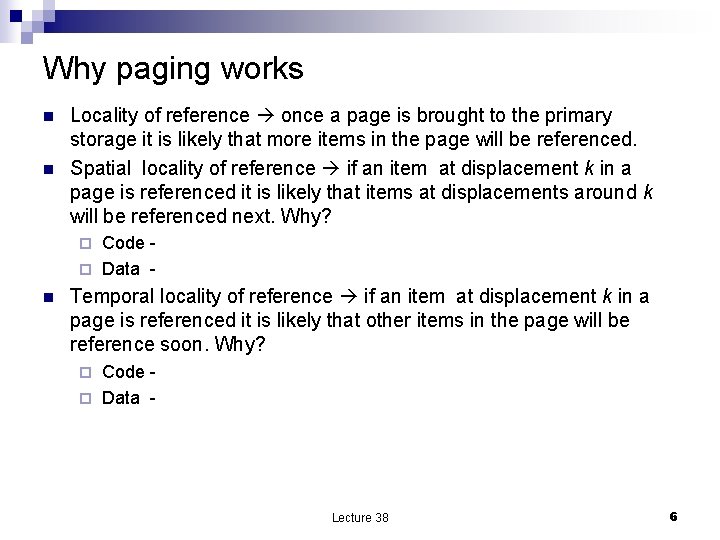

Why paging works
- Locality of reference: once a page is brought to the primary storage it is likely that more items in the page will be referenced.
- Spatial locality of reference: if an item at displacement k in a page is referenced it is likely that items at displacements around k will be referenced next. Why?
  - Code
  - Data
- Temporal locality of reference: if an item at displacement k in a page is referenced it is likely that other items in the page will be referenced soon. Why?
  - Code
  - Data
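A rough way to see spatial locality at work is to count how often consecutive references land on different pages. The sketch below assumes a 4096-byte page and 8-byte items (both values are illustrative, as is the helper name `page_switches`) and compares a sequential scan with the same addresses visited in shuffled order.

```python
import random

PAGE_SIZE = 4096   # bytes per page (assumed for illustration)
ITEM_SIZE = 8      # bytes per data item (assumed for illustration)

def page_switches(addresses, page_size=PAGE_SIZE):
    """Count how often two consecutive references fall on different pages."""
    pages = [addr // page_size for addr in addresses]
    return sum(1 for prev, cur in zip(pages, pages[1:]) if prev != cur)

n_items = 4096                                   # 4096 items * 8 bytes = 8 pages
sequential = [i * ITEM_SIZE for i in range(n_items)]
scattered = sequential[:]
random.Random(0).shuffle(scattered)              # same addresses, no locality

print("sequential scan:", page_switches(sequential))
print("shuffled order: ", page_switches(scattered))
```

The sequential scan changes page only when it crosses a page boundary, while the shuffled order changes page on most references. With limited primary memory, each such change is a potential page fault.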

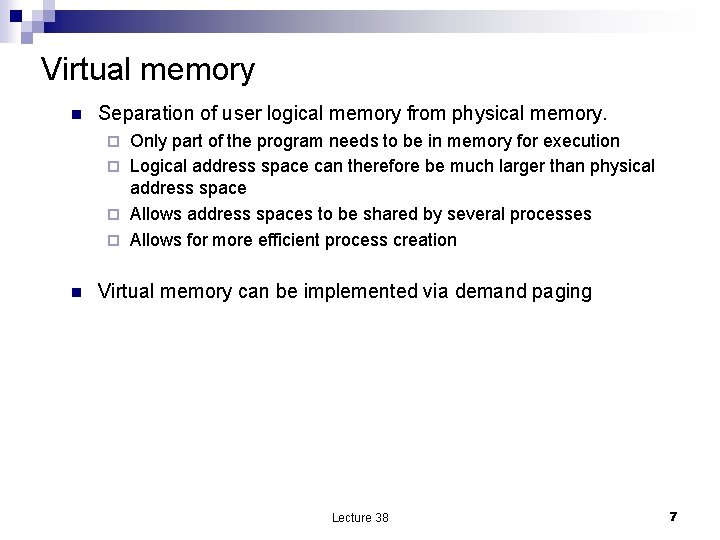

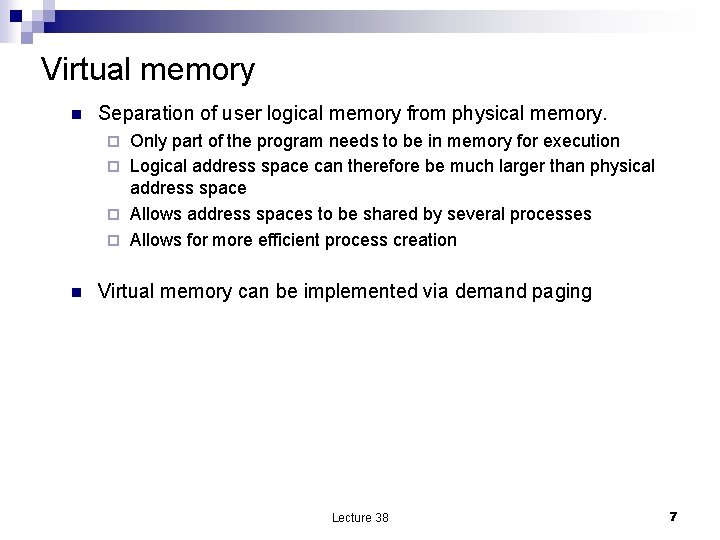

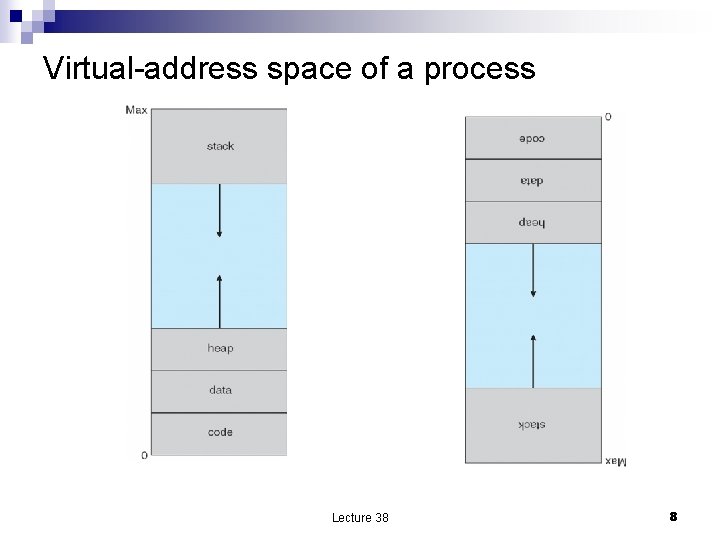

Virtual memory
- Separation of user logical memory from physical memory.
  - Only part of the program needs to be in memory for execution
  - Logical address space can therefore be much larger than physical address space
  - Allows address spaces to be shared by several processes
  - Allows for more efficient process creation
- Virtual memory can be implemented via demand paging

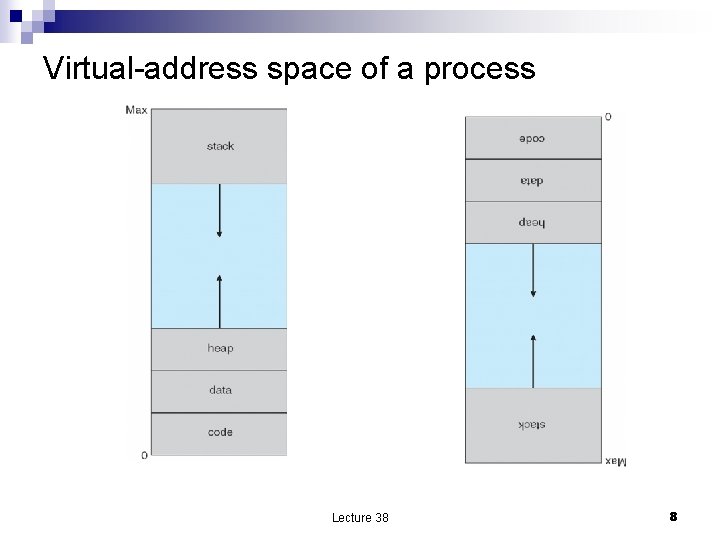

Virtual-address space of a process

Demand paging
- Bring a page into memory only when it is needed
  - Less I/O needed
  - Less memory needed
  - Faster response
  - More users
- Page is needed ⇒ reference to it
  - invalid reference ⇒ abort
  - not-in-memory ⇒ bring to memory

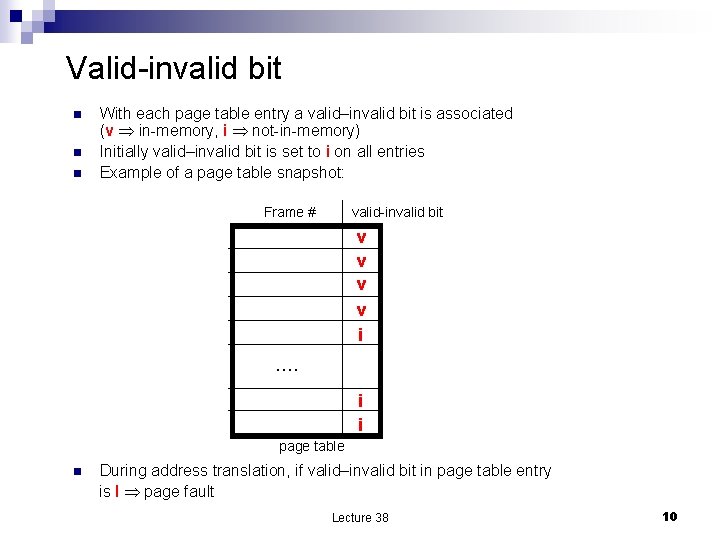

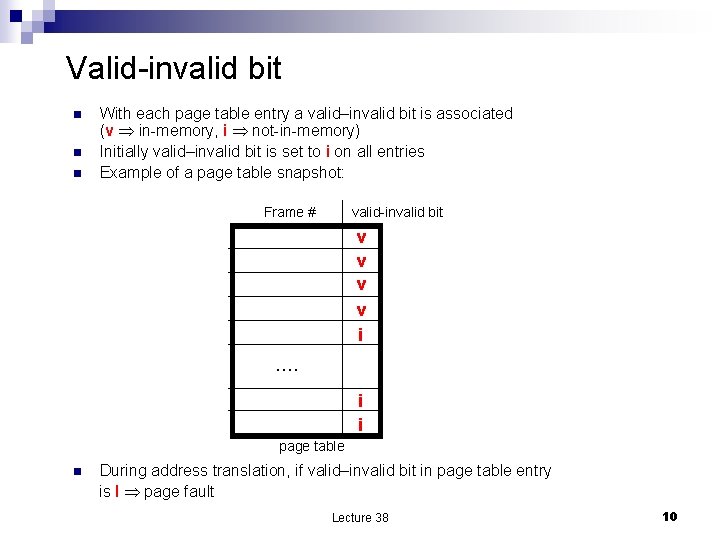

Valid-invalid bit
- With each page table entry a valid–invalid bit is associated (v: in-memory, i: not-in-memory)
- Initially the valid–invalid bit is set to i on all entries
- Example of a page table snapshot (each entry holds a frame # and a valid–invalid bit): v, v, i, …., i, i
- During address translation, if the valid–invalid bit in the page table entry is i ⇒ page fault
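The role of the valid–invalid bit during address translation can be sketched as follows. The names `PageTableEntry`, `PageFault` and `translate`, as well as the 4096-byte page size, are assumptions made for this illustration. Real translation is done in hardware.

```python
from dataclasses import dataclass
from typing import Optional

PAGE_SIZE = 4096  # assumed page size in bytes

class PageFault(Exception):
    """Raised when the referenced page is marked invalid (not in memory)."""

@dataclass
class PageTableEntry:
    frame: Optional[int] = None
    valid: bool = False      # False plays the role of "i", True of "v"

def translate(page_table, virtual_address):
    """Split the address into (page, offset) and map the page to its frame."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    entry = page_table[page]
    if not entry.valid:      # bit is "i": the page is not in main memory
        raise PageFault(page)
    return entry.frame * PAGE_SIZE + offset

page_table = [PageTableEntry() for _ in range(4)]    # initially all entries are "i"
page_table[0] = PageTableEntry(frame=5, valid=True)  # page 0 resides in frame 5

print(translate(page_table, 100))   # offset 100 within frame 5
```

A reference to any of pages 1 to 3 raises `PageFault` here, which stands in for the trap to the operating system.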

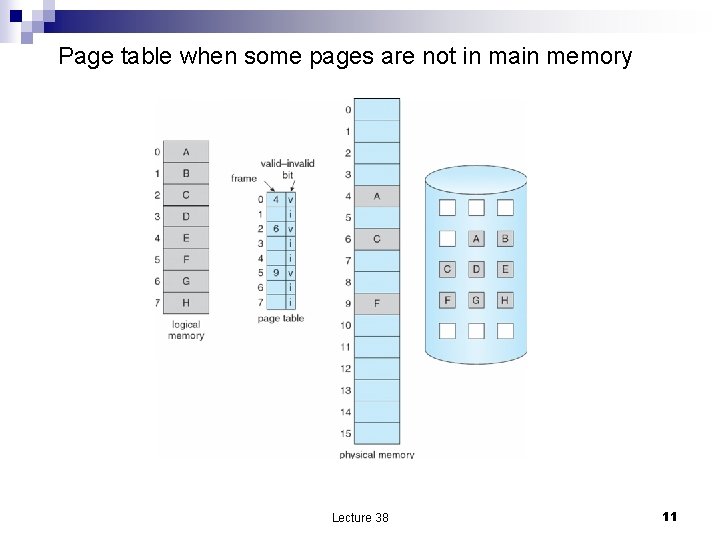

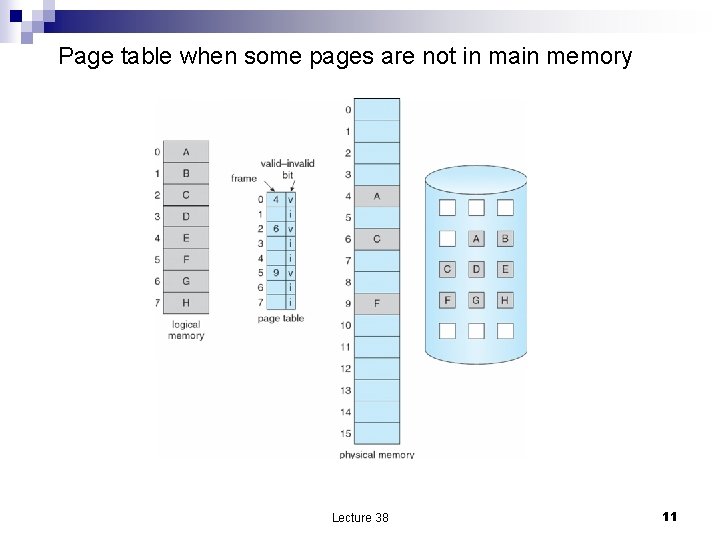

Page table when some pages are not in main memory

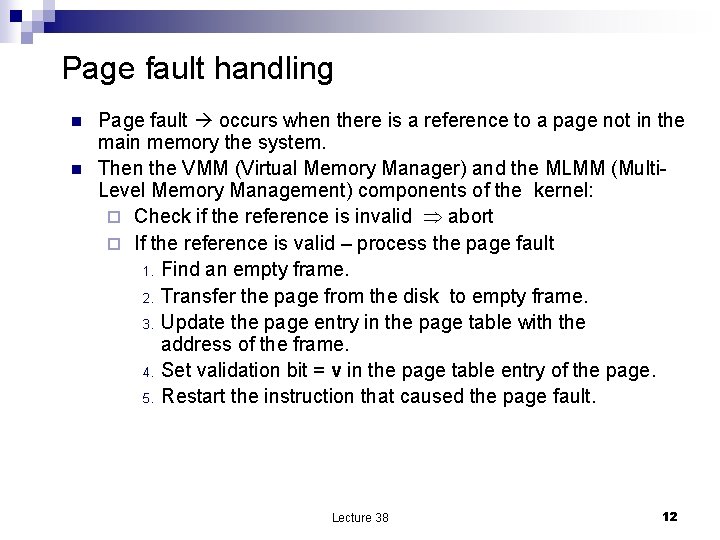

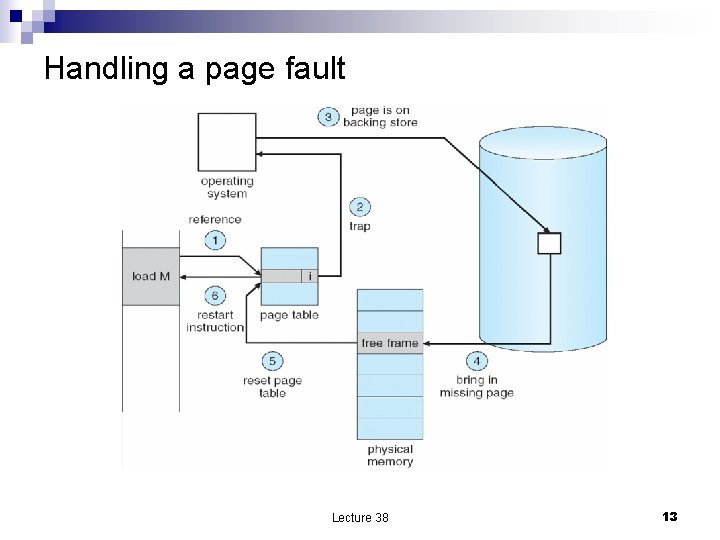

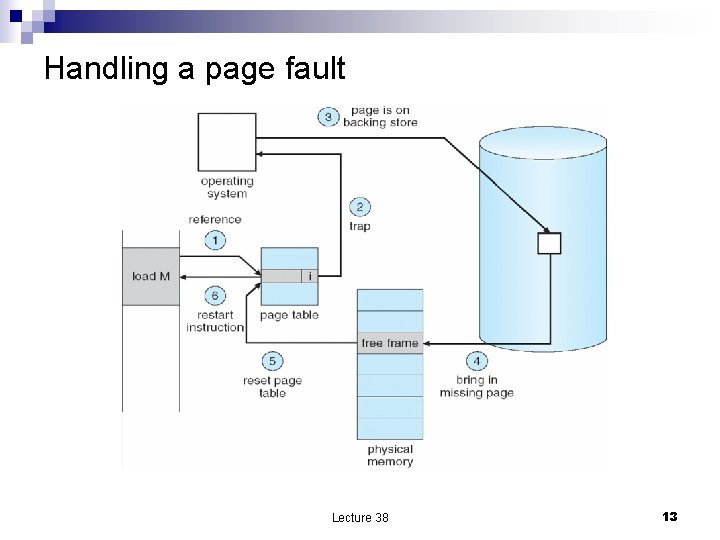

Page fault handling
- A page fault occurs when there is a reference to a page that is not in main memory.
- Then the VMM (Virtual Memory Manager) and the MLMM (Multi-Level Memory Management) components of the kernel:
  - Check whether the reference is invalid ⇒ abort
  - If the reference is valid, process the page fault:
    1. Find an empty frame.
    2. Transfer the page from the disk to the empty frame.
    3. Update the page entry in the page table with the address of the frame.
    4. Set the validation bit = v in the page table entry of the page.
    5. Restart the instruction that caused the page fault.
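The five steps can be sketched in a few lines of Python. This is a toy model under stated assumptions: the disk, main memory and page table are plain dictionaries, a free frame is assumed to exist (no page replacement), and the names `handle_page_fault`, `read` and `InvalidReference` are hypothetical.

```python
class InvalidReference(Exception):
    """The referenced page does not belong to the process: abort."""

def handle_page_fault(page, page_table, free_frames, disk, memory):
    """Process a fault on `page` following the five steps listed above."""
    if page not in disk:                      # invalid reference => abort
        raise InvalidReference(page)
    frame = free_frames.pop()                 # 1. find an empty frame
    memory[frame] = disk[page]                # 2. transfer the page from disk
    page_table[page] = {"frame": frame,       # 3. record the frame address
                        "valid": True}        # 4. set the valid bit to v
    return frame                              # 5. the caller restarts the access

def read(page, page_table, free_frames, disk, memory):
    """Read a page, servicing a page fault first if the page is not resident."""
    entry = page_table.get(page)
    if entry is None or not entry["valid"]:
        handle_page_fault(page, page_table, free_frames, disk, memory)
        entry = page_table[page]              # "restart" the faulting access
    return memory[entry["frame"]]

disk = {0: "page A", 1: "page B"}
memory, page_table, free_frames = {}, {}, [0, 1]
print(read(0, page_table, free_frames, disk, memory))   # faults, then reads
print(read(0, page_table, free_frames, disk, memory))   # now a plain hit
```

When no frame is free, step 1 needs a page replacement policy to choose a victim frame first.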

Handling a page fault

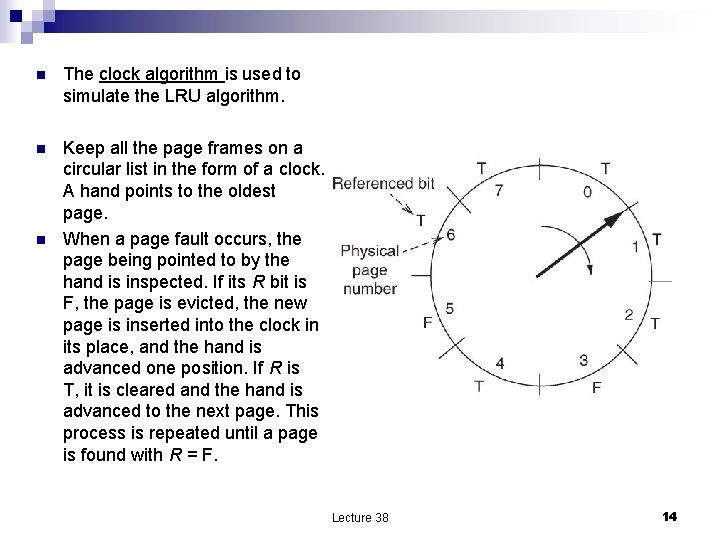

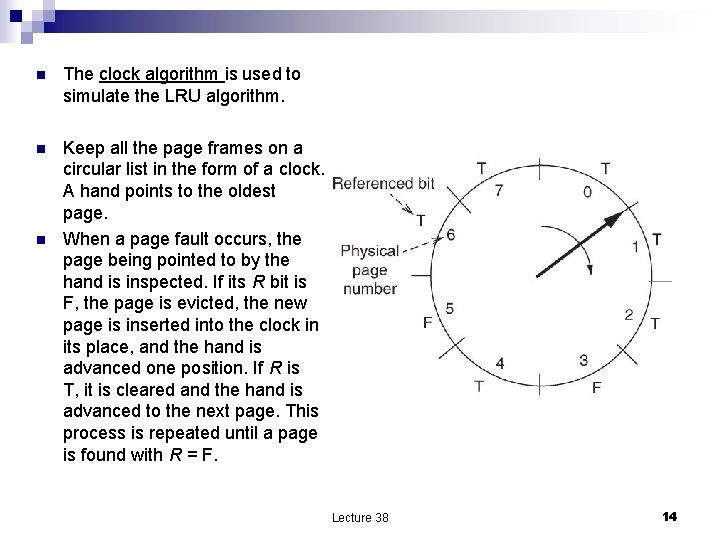

- The clock algorithm is used to approximate the LRU algorithm.
- Keep all the page frames on a circular list in the form of a clock. A hand points to the oldest page.
- When a page fault occurs, the page being pointed to by the hand is inspected.
  - If its R (reference) bit is F (false), the page is evicted, the new page is inserted into the clock in its place, and the hand is advanced one position.
  - If R is T (true), it is cleared and the hand is advanced to the next page.
- This process is repeated until a page is found with R = F.
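A minimal sketch of the clock algorithm as described above. The class name `Clock` is illustrative, empty frames are treated as pages with R = F, and setting R = T when a page is loaded is an assumption of this sketch (variants differ on that point).

```python
class Clock:
    def __init__(self, n_frames):
        self.pages = [None] * n_frames    # page held by each frame
        self.r_bit = [False] * n_frames   # reference (R) bit of each frame
        self.hand = 0                     # index of the frame the hand points to

    def access(self, page):
        """Reference `page`; return True if the reference caused a page fault."""
        if page in self.pages:
            self.r_bit[self.pages.index(page)] = True
            return False
        # Page fault: advance the hand, clearing R bits, until R = F is found.
        while self.r_bit[self.hand]:
            self.r_bit[self.hand] = False
            self.hand = (self.hand + 1) % len(self.pages)
        self.pages[self.hand] = page      # evict the victim, insert the new page
        self.r_bit[self.hand] = True      # assumption: loading counts as a reference
        self.hand = (self.hand + 1) % len(self.pages)
        return True

clock = Clock(3)
faults = [clock.access(p) for p in [1, 2, 3, 1, 4, 2, 5]]
print(faults, clock.pages)
```

A page whose R bit is set gets a second chance: the hand clears the bit and moves on, so the page is evicted only if it is not referenced again before the hand returns.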

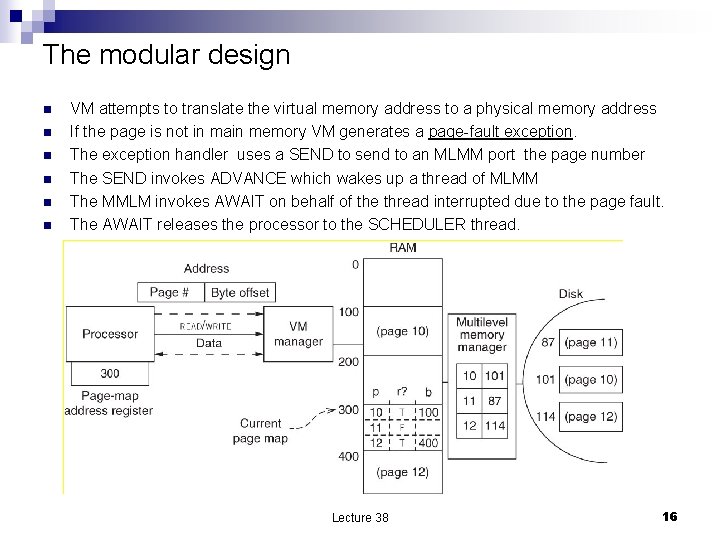

System components involved in memory management
- Virtual memory manager (VMM): dynamic address translation
- Multi-level memory management (MLMM)

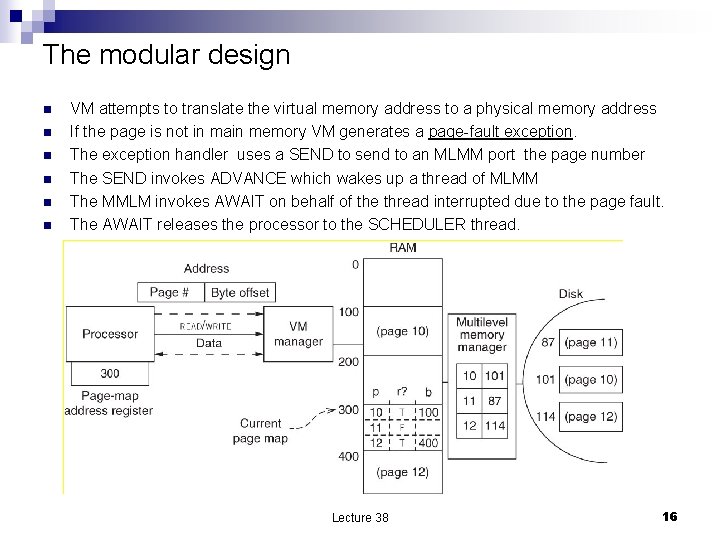

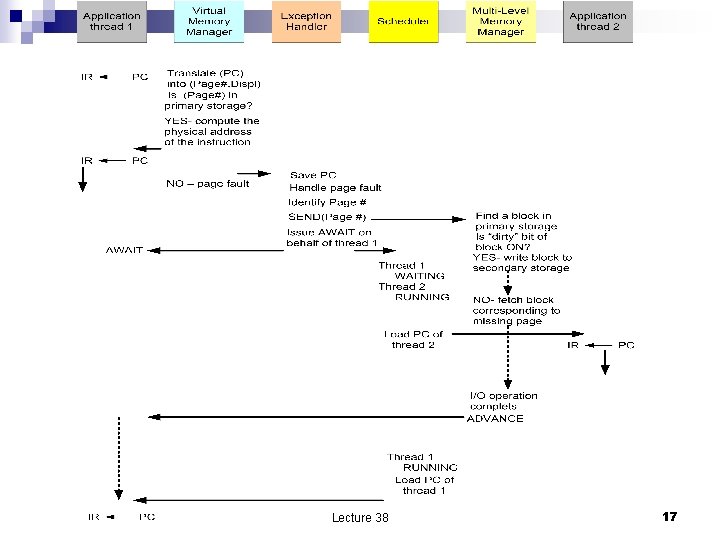

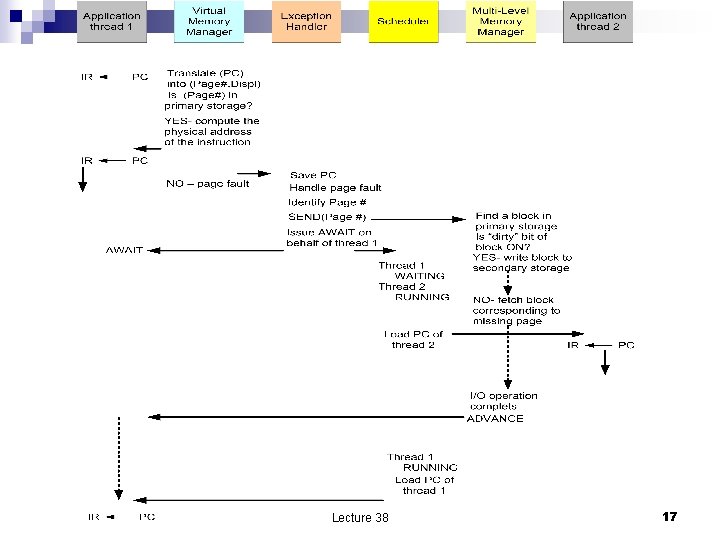

The modular design
- VM attempts to translate the virtual memory address to a physical memory address.
- If the page is not in main memory, VM generates a page-fault exception.
- The exception handler uses a SEND to send the page number to an MLMM port.
- The SEND invokes ADVANCE, which wakes up a thread of MLMM.
- The MLMM invokes AWAIT on behalf of the thread interrupted due to the page fault.
- The AWAIT releases the processor to the SCHEDULER thread.


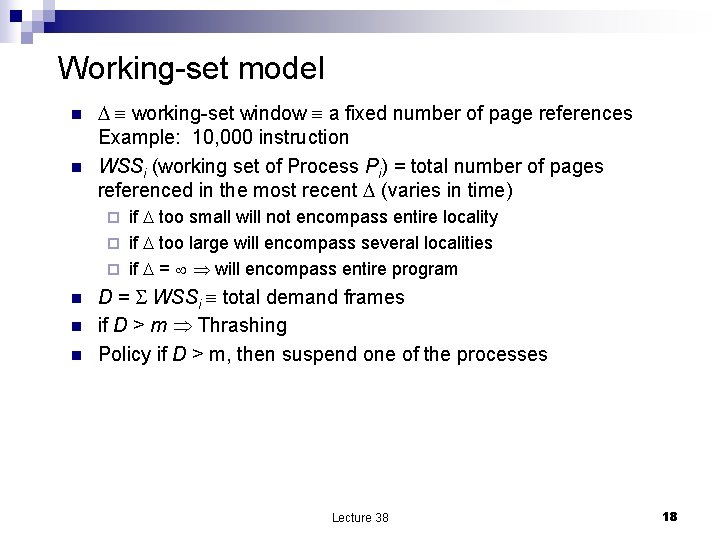

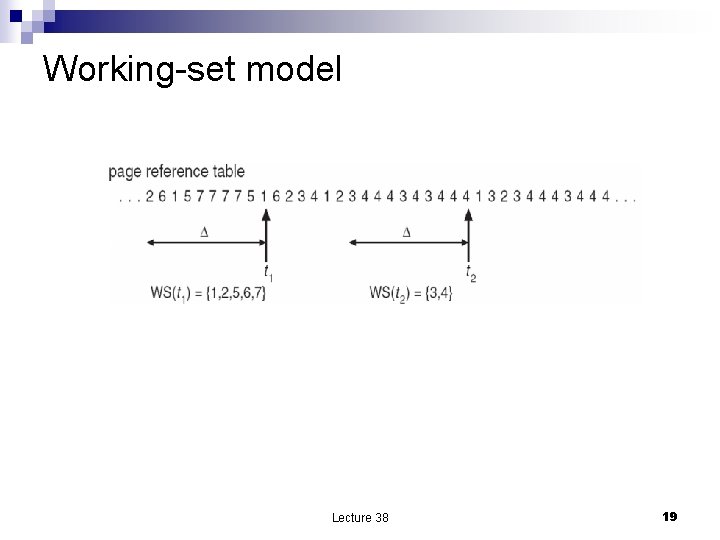

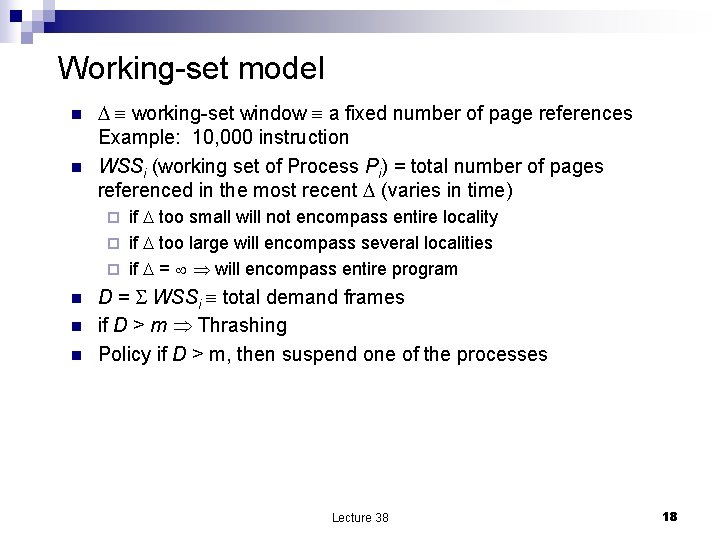

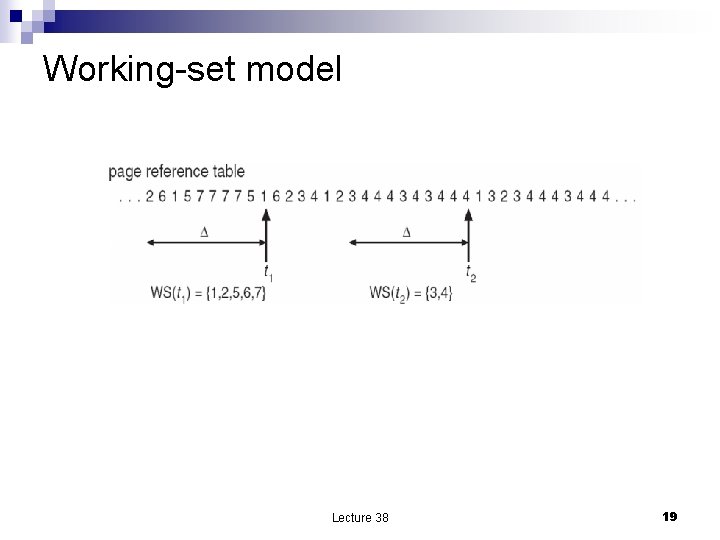

Working-set model
- Δ ≡ working-set window ≡ a fixed number of page references. Example: 10,000 instructions
- WSSi (working set of process Pi) = total number of pages referenced in the most recent Δ (varies in time)
  - if Δ is too small it will not encompass the entire locality
  - if Δ is too large it will encompass several localities
  - if Δ = ∞ it will encompass the entire program
- D = Σ WSSi ≡ total demand for frames
- if D > m (m being the number of available frames) ⇒ thrashing
- Policy: if D > m, then suspend one of the processes
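The definitions above can be tried out on short reference strings. In this sketch the window Δ is measured in page references, `m` is the number of available frames, and the function names and the two reference strings are made up for illustration.

```python
def working_set(references, t, delta):
    """Pages referenced in the window of the last `delta` references ending at index t."""
    start = max(0, t - delta + 1)
    return set(references[start:t + 1])

def is_thrashing(reference_strings, t, delta, m):
    """D = sum of WSS_i over all processes; thrashing is predicted when D > m."""
    demand = sum(len(working_set(refs, t, delta)) for refs in reference_strings)
    return demand > m

p1 = [1, 2, 1, 3, 1, 2, 4, 4, 4, 4]   # hypothetical reference string of process P1
p2 = [7, 8, 9, 7, 8, 9, 7, 8, 9, 7]   # hypothetical reference string of process P2

print(working_set(p1, t=5, delta=4))              # P1's pages in the window at t = 5
print(is_thrashing([p1, p2], t=5, delta=4, m=5))  # D = 3 + 3 = 6 > 5
print(is_thrashing([p1, p2], t=9, delta=4, m=5))  # D = 1 + 3 = 4 <= 5
```

P1's working set shrinks once it settles on page 4, which is why the same two processes fit in five frames at the later point in time.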

Working-set model

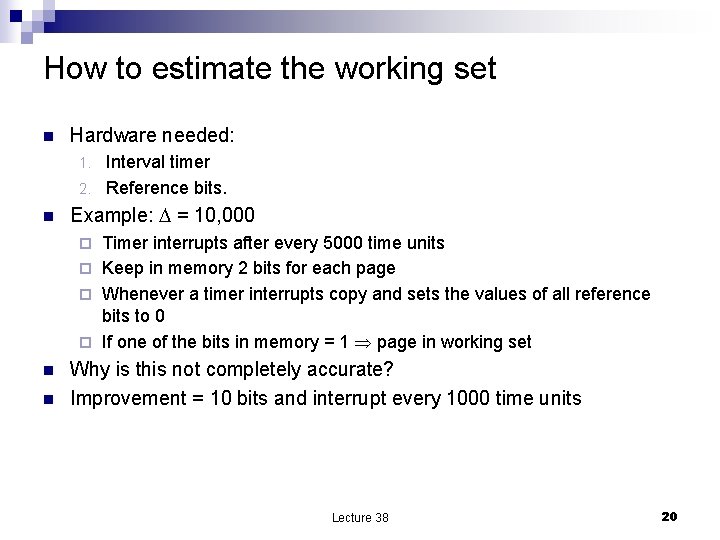

How to estimate the working set
- Hardware needed:
  1. Interval timer
  2. Reference bits
- Example: Δ = 10,000
  - Timer interrupts after every 5000 time units
  - Keep in memory 2 bits for each page
  - Whenever the timer interrupts, copy the reference bits into memory and then set the values of all reference bits to 0
  - If one of the bits in memory = 1 ⇒ page in working set
- Why is this not completely accurate?
- Improvement: 10 bits and interrupt every 1000 time units
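The timer-based estimate can be simulated in software. This sketch assumes one hardware reference bit per page and a short list of history bits kept in memory (two by default, as in the example). The class and method names are hypothetical.

```python
from collections import defaultdict

class WorkingSetEstimator:
    def __init__(self, history_bits=2):
        self.reference_bit = defaultdict(bool)   # set by "hardware" on each access
        self.history = defaultdict(lambda: [False] * history_bits)

    def reference(self, page):
        self.reference_bit[page] = True

    def timer_interrupt(self):
        """Copy every reference bit into the page's history, then clear it."""
        for page in set(self.reference_bit) | set(self.history):
            bits = self.history[page]
            bits.insert(0, self.reference_bit[page])  # newest interval first
            bits.pop()                                # forget the oldest interval
            self.reference_bit[page] = False

    def working_set(self):
        """A page is in the working set if any of its in-memory bits is 1."""
        return {page for page, bits in self.history.items() if any(bits)}

est = WorkingSetEstimator()
est.reference(1); est.reference(2)
est.timer_interrupt()
est.reference(3)
est.timer_interrupt()
print(est.working_set())    # pages seen in the last two intervals
```

The estimate is coarse because the bits only record whether a page was referenced during an interval, not when. More history bits and more frequent interrupts tighten the estimate at the cost of more interrupt handling.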

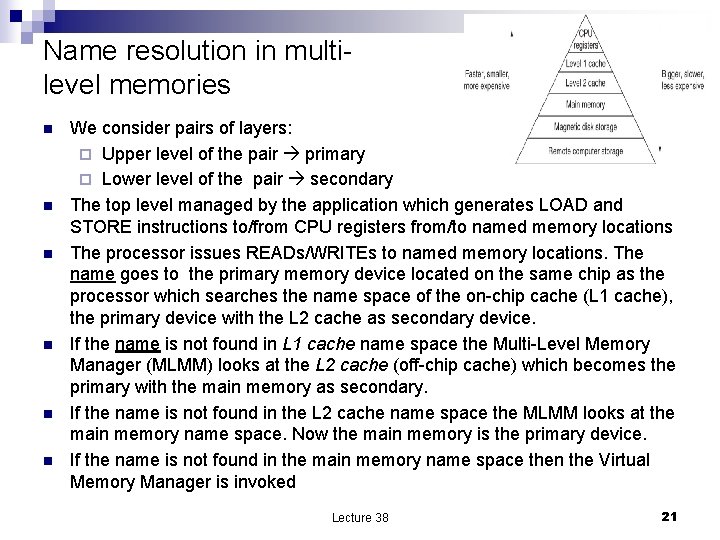

Name resolution in multilevel memories
- We consider pairs of layers:
  - Upper level of the pair: primary
  - Lower level of the pair: secondary
- The top level is managed by the application, which generates LOAD and STORE instructions to/from CPU registers from/to named memory locations.
- The processor issues READs/WRITEs to named memory locations. The name goes to the primary memory device located on the same chip as the processor, which searches the name space of the on-chip cache (L1 cache), the primary device, with the L2 cache as secondary device.
  - If the name is not found in the L1 cache name space, the Multi-Level Memory Manager (MLMM) looks at the L2 cache (off-chip cache), which becomes the primary, with the main memory as secondary.
  - If the name is not found in the L2 cache name space, the MLMM looks at the main memory name space. Now the main memory is the primary device.
  - If the name is not found in the main memory name space, then the Virtual Memory Manager is invoked.
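The search order described above amounts to trying each level's name space in turn. In this toy sketch each level is a dictionary from names to values, and the function `resolve` and the sample contents are assumptions for illustration only.

```python
def resolve(name, levels):
    """Search each level in order; return (level_name, value) for the first hit."""
    for level_name, contents in levels:
        if name in contents:
            return level_name, contents[name]
    # Not found in the main memory name space: the Virtual Memory Manager takes over.
    raise LookupError(f"{name!r} not in main memory; invoke the VMM")

levels = [
    ("L1 cache",    {"a": 1}),
    ("L2 cache",    {"a": 1, "b": 2}),
    ("main memory", {"a": 1, "b": 2, "c": 3}),
]

print(resolve("a", levels))   # found in the L1 cache
print(resolve("c", levels))   # found only in main memory
```

The sketch omits what a real system does after a miss: it copies the item into the faster level so that later references hit there.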

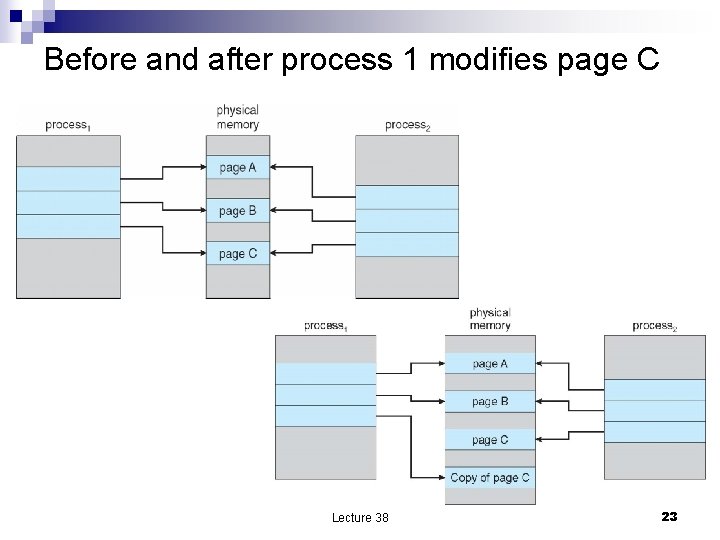

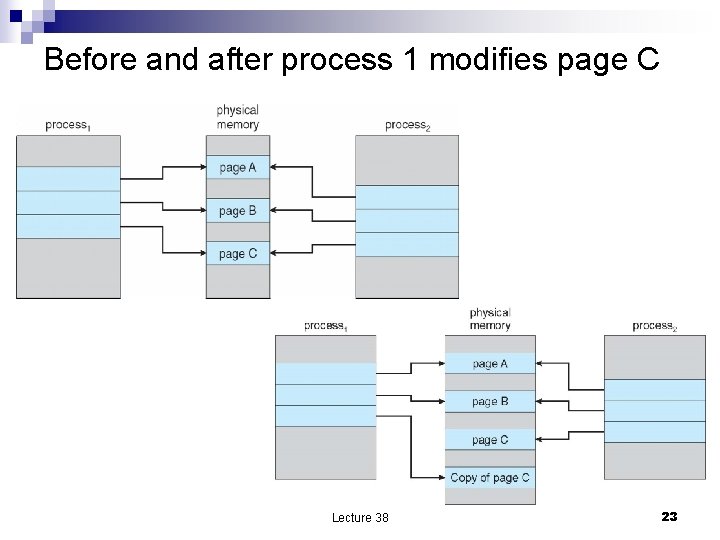

Process creation
- Virtual memory allows other benefits during process creation:
- Copy-on-Write (COW) allows both parent and child processes to initially share the same pages in memory.
  - If either process modifies a shared page, only then is the page copied.
  - COW allows more efficient process creation as only modified pages are copied
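Copy-on-write can be modeled with a reference count per page. This is a simplified sketch: `Page` and `AddressSpace` are hypothetical names, and a real kernel detects the first write through page protection faults rather than an explicit `write` method.

```python
class Page:
    def __init__(self, data):
        self.data = bytearray(data)
        self.refs = 1                 # number of address spaces mapping this page

class AddressSpace:
    def __init__(self, pages):
        self.pages = pages            # page name -> Page

    def fork(self):
        """The child shares every page with the parent; nothing is copied yet."""
        for page in self.pages.values():
            page.refs += 1
        return AddressSpace(dict(self.pages))

    def write(self, name, data):
        page = self.pages[name]
        if page.refs > 1:             # page is shared: copy it before modifying
            page.refs -= 1
            page = Page(page.data)
            self.pages[name] = page
        page.data[:len(data)] = data

parent = AddressSpace({"A": Page(b"aaaa"), "C": Page(b"cccc")})
child = parent.fork()
parent.write("C", b"XX")              # only page C is copied
print(bytes(parent.pages["C"].data), bytes(child.pages["C"].data))
```

After the write, page A is still shared by both address spaces. Only page C exists in two copies.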

Before and after process 1 modifies page C

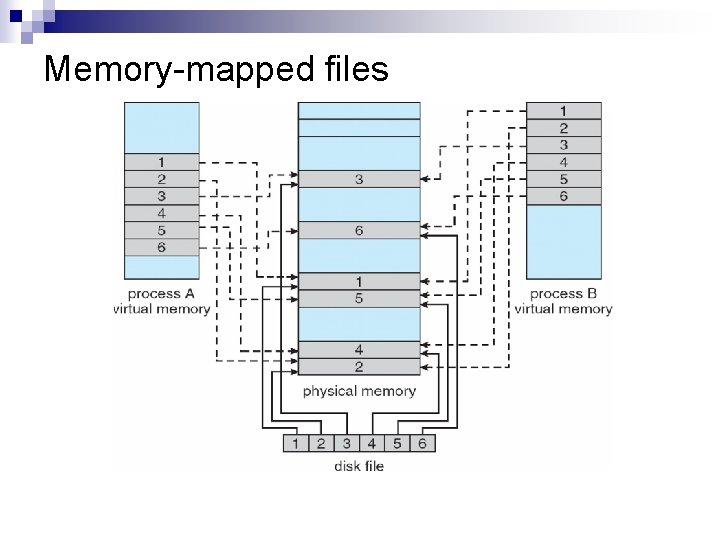

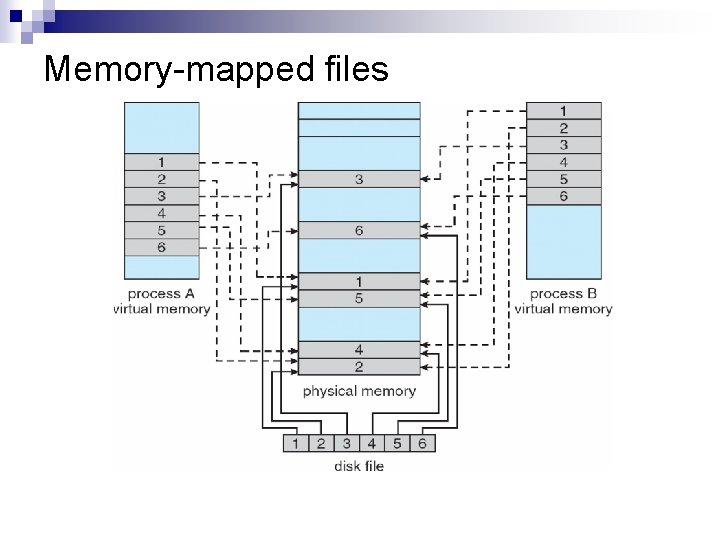

Memory-mapped files
- Memory-mapped file I/O allows file I/O to be treated as routine memory access by mapping a disk block to a page in memory.
- A file is initially read using demand paging. A page-sized portion of the file is read from the file system into a physical page. Subsequent reads/writes to/from the file are treated as ordinary memory accesses.
- Simplifies file access by treating file I/O through memory rather than through read() and write() system calls.
- Also allows several processes to map the same file, allowing the pages in memory to be shared.
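As a small illustration, Python's standard `mmap` module exposes memory-mapped file I/O. The sketch below maps a temporary file and reads and writes it through slicing. The function name `demo` and the file contents are made up for the example.

```python
import mmap
import os
import tempfile

def demo():
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, b"hello world")
        with mmap.mmap(fd, 0) as mapped:      # length 0 maps the whole file
            first = bytes(mapped[:5])         # read like ordinary memory
            mapped[:5] = b"HELLO"             # write like ordinary memory
            mapped.flush()                    # push the change back to the file
        os.lseek(fd, 0, os.SEEK_SET)
        return first, os.read(fd, 11)         # re-read through the file interface
    finally:
        os.close(fd)
        os.remove(path)

print(demo())
```

The write goes through the mapping, yet the later `os.read` call sees it, because the mapping and the file share the same pages.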

Memory-mapped files