CGS 3763 Operating Systems Concepts Spring 2013 Dan

- Slides: 43

CGS 3763 Operating Systems Concepts, Spring 2013
Dan C. Marinescu
Office: HEC 304
Office hours: M-Wd 11:30 - 12:30 AM

Lecture 22 – Friday, February 29, 2013
- Last time:
  - Answers to the midterm
- Today:
  - Answers from week 7 questions
  - CPU Scheduling
- Next time:
  - CPU scheduling
- Reading assignments:
  - Chapter 5 and 6 of the textbook

Student Review Form 7 Summary, BO KANG
Feb 18th, Monday
Key Points: Threads: single and multithreading processes.
Questions:
- How do threads, programs, and processes differ?
- What is the classification of user-level threads and kernel-level threads, and what makes each of them unique?
- Among the different threading APIs, is there one that is more effective than the others?
- What is meant by “race” conditions in relation to thread safety? How do you detect a race condition, and how is it handled?
- Can you explain why barrier synchronization is a challenge for multithreading?
- Are there any advantages to single threading over multithreading?
- Since threads share data, do the other threads have to wait to access the information if one thread is using the data?
- How does a programmer know which thread model to use when writing code?

Threads versus processes
- In the traditional view a process has two roles:
  - consumer of resources, e.g., address space, open files;
  - code executing on a CPU: in one address space, there is a single line of execution.
- In the multi-threaded view of processes and threads the two roles are distinct:
  - A process is a consumer of resources; it owns an address space and a set of open files.
  - A thread is an instance of code executing sequentially on a CPU.
- A thread needs an address space in which to execute, so we think of a thread as belonging to a process. A single process may own many threads, running concurrently within its address space.
- Threads are “lightweight processes”: less state information must be kept to maintain a thread than a process.

Threads versus processes
- Any application of threads can be implemented with separate processes.
- Advantages of using threads instead of processes:
  - Threads share data in memory and can communicate without IPC (Inter-Process Communication) mechanisms, which require expensive system calls.
  - Thread creation and context switching are much less time-consuming than the same operations for processes.
- Disadvantages:
  - Use of shared memory results in a need to synchronize access to shared data.
  - Libraries may need to be rewritten to make them “thread-safe”.
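The need to synchronize access to shared data can be illustrated with a minimal sketch. It assumes Python's standard `threading` module; the names `counter`, `lock`, and `worker` are hypothetical and chosen only for this example.

```python
import threading

counter = 0                  # shared data, visible to every thread of the process
lock = threading.Lock()      # guards the read-modify-write on counter


def worker(increments: int) -> None:
    """Increment the shared counter; the lock serializes each update."""
    global counter
    for _ in range(increments):
        with lock:           # only one thread at a time updates the variable
            counter += 1


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 40000
```

The threads communicate through an ordinary variable, with no IPC mechanism involved; the price is the explicit lock around every update.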

User and kernel threads
- User threads – run in user mode and are supported by a user-level thread library.
- Kernel threads – run in user mode when executing user functions or library calls; switch to kernel mode when executing system calls.
  - Provide privileged services to applications, e.g., system calls.
  - The entities handled by the system scheduler. Used by the kernel to keep track of all processes in the system and how many resources are allocated to each process.
  - When multiple user threads are mapped to a single kernel thread, this thread schedules the individual user threads.
- A kernel-only thread – executes only in the kernel mode environment.

User and kernel threads (cont’d)
- Library code decides the mapping of user threads to kernel threads.
- The use of system calls or other kernel services by an application matters:
  - If it uses system calls heavily, then the more user threads per kernel thread, the slower the application will run, because the kernel thread becomes a bottleneck: all system calls pass through it.
  - If it rarely uses system calls, a large number of user threads can be assigned to a kernel thread without much performance penalty, other than the overhead of the context switch.
- Increasing the number of kernel threads adds overhead to the kernel in general, so while individual threads will be more responsive with respect to system calls, the system as a whole will become slower.
- It is important to find a good balance between the number of kernel threads and the number of user threads per kernel thread.

Race condition
- A race condition occurs in the concurrent execution of multiple threads/processes when the output depends on the timing of uncontrollable events.
- Example: two threads T1 and T2 share a variable x.
  - In T1 there is a statement (SA): x = -2
  - In T2 there is a statement (SB): x = +17
- The result depends on the scheduler. If the order of execution is:
  - SA then SB, the final result is x = +17
  - SB then SA, the final result is x = -2
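The example above can be sketched in a few lines. This is an illustration only: a `threading.Event` stands in for the scheduler's decision so that each ordering can be reproduced on demand, and the helper name `run` is hypothetical.

```python
import threading


def run(order):
    """Execute SA (x = -2) and SB (x = +17) in two threads in the given order."""
    shared = {"x": 0}
    first_done = threading.Event()

    def sa():
        shared["x"] = -2

    def sb():
        shared["x"] = 17

    statements = {"SA": sa, "SB": sb}

    def first():
        statements[order[0]]()
        first_done.set()          # signal that the first statement has executed

    def second():
        first_done.wait()         # block until the other thread has gone first
        statements[order[1]]()

    t1 = threading.Thread(target=first)
    t2 = threading.Thread(target=second)
    t2.start()
    t1.start()
    t1.join()
    t2.join()
    return shared["x"]


print(run(("SA", "SB")))  # 17
print(run(("SB", "SA")))  # -2
```

Without the event, nothing in the program fixes the order, and the final value of x would depend on how the threads happen to be scheduled.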

Barrier synchronization
- A barrier for a group of n threads/processes means that each of them must stop at the barrier and cannot proceed until all other threads/processes have reached it.
- Barrier synchronization increases the execution time and should be avoided when possible.
- Example: 10 threads
  - 9 of them finish after 10 seconds;
  - one needs 100 seconds;
  - the first 9 threads have to wait 90 seconds.
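The cost in the example can be checked with a short calculation. This is a sketch under the assumption that every thread is released at the moment the slowest one arrives; `barrier_wait_times` is a hypothetical helper name.

```python
def barrier_wait_times(arrival_times):
    """Idle time of each thread at the barrier: everyone leaves when the last arrives."""
    release = max(arrival_times)
    return [release - t for t in arrival_times]


arrivals = [10] * 9 + [100]      # nine threads need 10 s, one needs 100 s
waits = barrier_wait_times(arrivals)
print(waits)       # [90, 90, 90, 90, 90, 90, 90, 90, 90, 0]
print(sum(waits))  # 810
```

In total the nine fast threads spend 810 thread-seconds idle, which is why a barrier is costly when arrival times are unbalanced.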

Feb 20th, Wednesday
Key Points: Pthreads, Java threads, CPU Scheduling, CPU burst, I/O burst
Questions:
- Why would you want to use a thread instead of a pthread? If pthreads take less time, why not just use pthreads instead of threads all the time?
- Are Pthreads just universally accepted threads used across different OS’s?
- Pthreads is a library that allows multiple threads to use the same code. Can multiple threads access the same code simultaneously?
- Is Pthreads a set of instructions that can be used in any programming language, or what is it specifically?
- What decides what type of scheduling is used (preemptive vs. nonpreemptive)?
- What are the advantages and disadvantages of preemptive vs. non-preemptive modes?
- CPU scheduling, and the two subtypes of scheduling that it also uses, nonpreemptive and preemptive. I don’t know what each of the subcategories specifically

Feb 22nd, Friday
Key Points: CPU scheduling metrics, Scheduling Objectives, Scheduling Policies – first-come, first-served (FCFS)
Questions:
- What are batch and interactive systems used for?
- Please further clarify the difference between interactive and batch processing policies. When should these different forms of scheduling be used?
- Are there rules for scheduling policies, such as one being faster than another, or when to use one over another?
- Can a higher priority thread prevent a lower priority thread from executing?
- Which example of process scheduling is most efficient: FCFS, SJF, RR, or priority?
- Where and when does marshaling take place? Is it required for all processes?

Scheduling policies
- First-Come First-Served (FCFS)
- Shortest Job First (SJF)
- Round Robin (RR)
- Priority scheduling

Desirable properties of scheduling policies
- Fairness
- Prevent starvation – ensure that a process/thread is able to run at some point in time.
- Efficiency – context switching takes some time, and if it occurs very often its overhead lowers the CPU utilization.

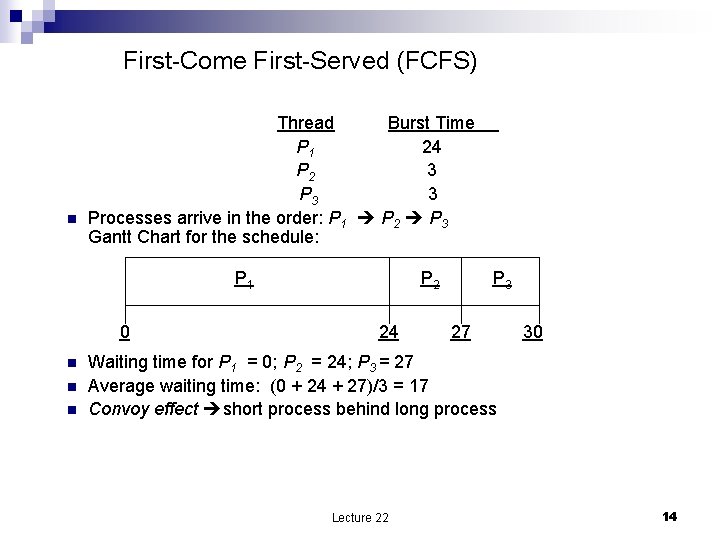

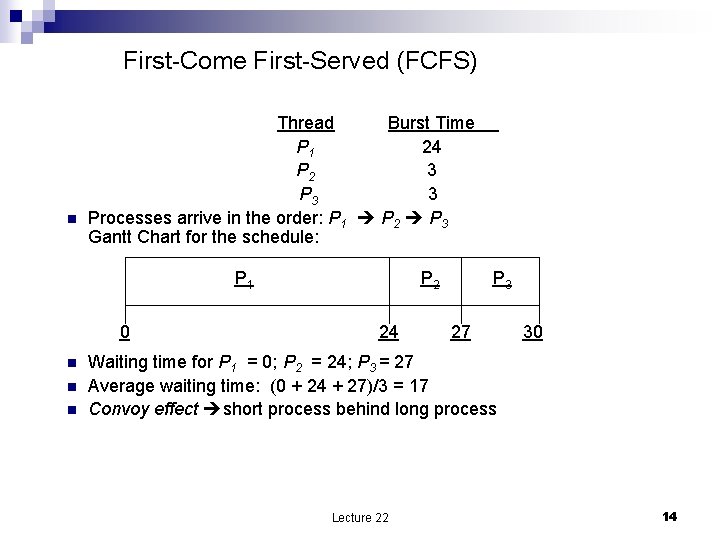

First-Come First-Served (FCFS)

| Thread | Burst Time |
|--------|------------|
| P1     | 24         |
| P2     | 3          |
| P3     | 3          |

- Processes arrive in the order: P1, P2, P3.
- Gantt chart for the schedule: P1 (0-24), P2 (24-27), P3 (27-30)
- Waiting time for P1 = 0; P2 = 24; P3 = 27
- Average waiting time: (0 + 24 + 27)/3 = 17
- Convoy effect: short processes wait behind a long process.

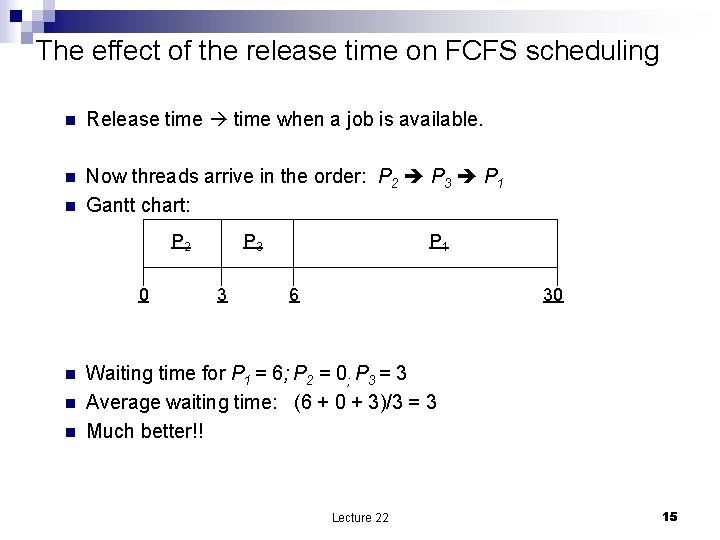

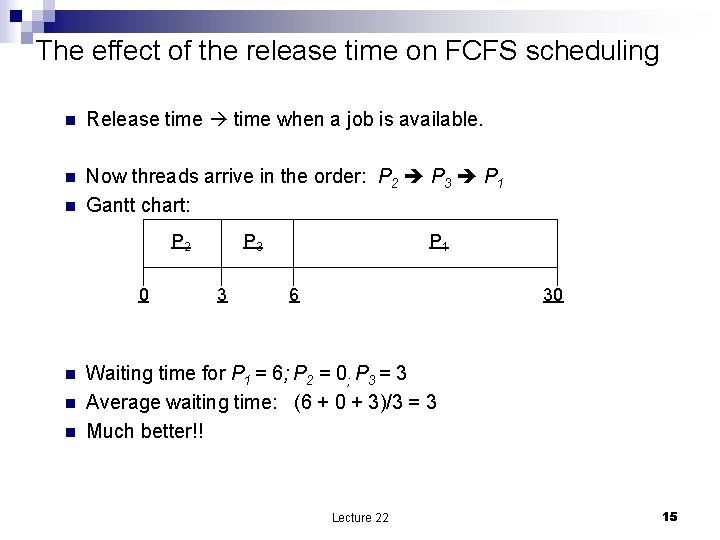

The effect of the release time on FCFS scheduling
- Release time: the time when a job becomes available.
- Now the threads arrive in the order: P2, P3, P1.
- Gantt chart: P2 (0-3), P3 (3-6), P1 (6-30)
- Waiting time for P1 = 6; P2 = 0; P3 = 3
- Average waiting time: (6 + 0 + 3)/3 = 3
- Much better!
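Both FCFS averages can be reproduced with a few lines. The sketch assumes that all jobs are ready at time 0 and run in list order, with the burst times 24, 3, and 3 of P1, P2, and P3; the function names are hypothetical.

```python
def fcfs_waiting_times(bursts):
    """Waiting time of each job when jobs run back to back in list order."""
    waits, clock = [], 0
    for burst in bursts:
        waits.append(clock)   # a job waits for everything scheduled before it
        clock += burst
    return waits


def average(values):
    return sum(values) / len(values)


long_first = fcfs_waiting_times([24, 3, 3])    # order P1, P2, P3
short_first = fcfs_waiting_times([3, 3, 24])   # order P2, P3, P1
print(long_first, average(long_first))    # [0, 24, 27] 17.0
print(short_first, average(short_first))  # [0, 3, 6] 3.0
```

Placing the long job last removes the convoy effect: the total work is the same, but the two short jobs no longer wait behind P1.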

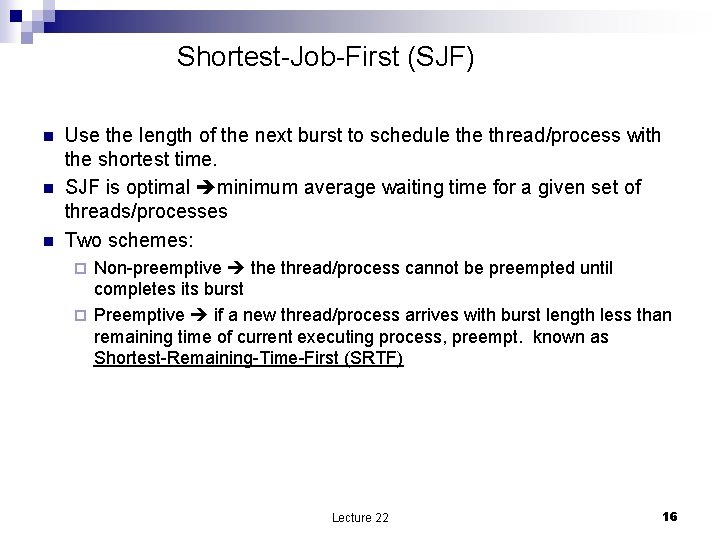

Shortest-Job-First (SJF)
- Use the length of the next CPU burst to schedule the thread/process with the shortest time.
- SJF is optimal: it gives the minimum average waiting time for a given set of threads/processes.
- Two schemes:
  - Non-preemptive: the thread/process cannot be preempted until it completes its burst.
  - Preemptive: if a new thread/process arrives with a burst length less than the remaining time of the currently executing process, preempt. Known as Shortest-Remaining-Time-First (SRTF).

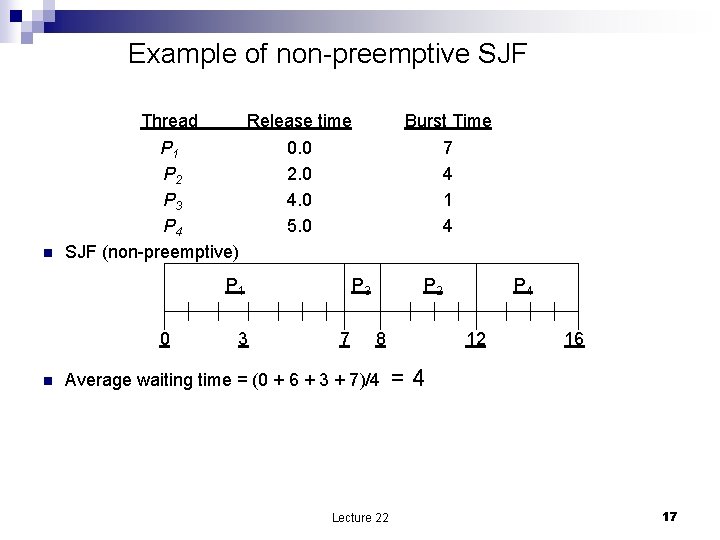

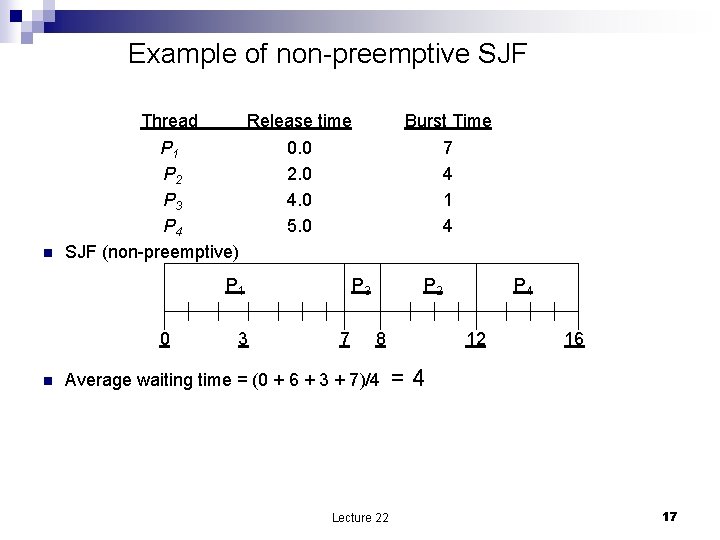

Example of non-preemptive SJF

| Thread | Release time | Burst Time |
|--------|--------------|------------|
| P1     | 0.0          | 7          |
| P2     | 2.0          | 4          |
| P3     | 4.0          | 1          |
| P4     | 5.0          | 4          |

- SJF (non-preemptive) Gantt chart: P1 (0-7), P3 (7-8), P2 (8-12), P4 (12-16)
- Average waiting time = (0 + 6 + 3 + 7)/4 = 4
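The schedule above can be reproduced with a small simulation. This is a minimal sketch, not a definitive implementation: it assumes ties are broken by earlier release time, and `sjf_nonpreemptive` is a hypothetical name.

```python
def sjf_nonpreemptive(jobs):
    """jobs: name -> (release_time, burst). Returns name -> start time."""
    pending = dict(jobs)
    clock, start = 0, {}
    while pending:
        ready = [n for n, (release, _) in pending.items() if release <= clock]
        if not ready:
            # CPU idle: jump to the next release time
            clock = min(release for release, _ in pending.values())
            continue
        # shortest burst first; ties broken by earlier release (assumption)
        name = min(ready, key=lambda n: (pending[n][1], pending[n][0]))
        start[name] = clock
        clock += pending.pop(name)[1]   # run the chosen job to completion
    return start


jobs = {"P1": (0, 7), "P2": (2, 4), "P3": (4, 1), "P4": (5, 4)}
start = sjf_nonpreemptive(jobs)
waits = {name: start[name] - jobs[name][0] for name in jobs}
print(start)                             # {'P1': 0, 'P3': 7, 'P2': 8, 'P4': 12}
print(sum(waits.values()) / len(waits))  # 4.0
```

P1 runs first only because it is the sole job available at time 0; once it finishes, the shortest waiting job (P3) is picked.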

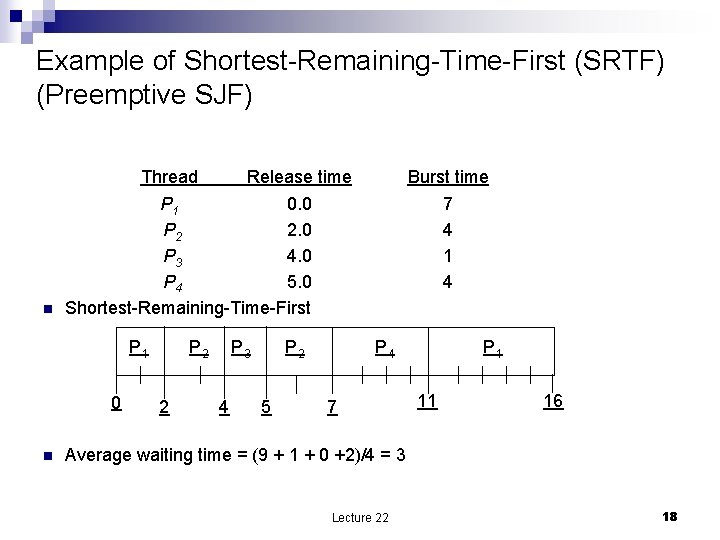

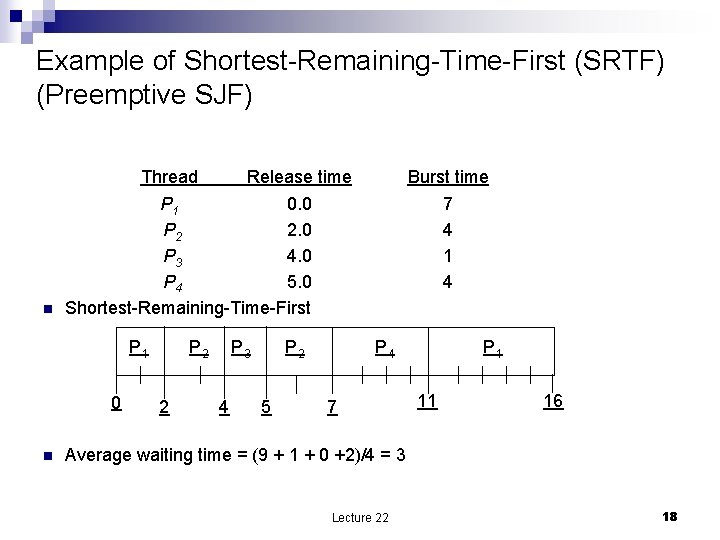

Example of Shortest-Remaining-Time-First (SRTF) (Preemptive SJF)

| Thread | Release time | Burst time |
|--------|--------------|------------|
| P1     | 0.0          | 7          |
| P2     | 2.0          | 4          |
| P3     | 4.0          | 1          |
| P4     | 5.0          | 4          |

- Shortest-Remaining-Time-First Gantt chart: P1 (0-2), P2 (2-4), P3 (4-5), P2 (5-7), P4 (7-11), P1 (11-16)
- Average waiting time = (9 + 1 + 0 + 2)/4 = 3
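The preemptive schedule can be checked with a unit-step simulation. The sketch assumes integer release and burst times and re-evaluates the choice at every time unit; the name `srtf` and the tie-breaking rule (earlier release wins) are assumptions made for illustration.

```python
def srtf(jobs):
    """jobs: name -> (release, burst), integer times. Returns (gantt, waits)."""
    remaining = {name: burst for name, (_, burst) in jobs.items()}
    finish, gantt, clock = {}, [], 0
    while remaining:
        ready = [n for n in remaining if jobs[n][0] <= clock]
        if not ready:
            clock += 1
            continue
        # pick the job with the shortest remaining time
        name = min(ready, key=lambda n: (remaining[n], jobs[n][0]))
        if gantt and gantt[-1][0] == name and gantt[-1][2] == clock:
            gantt[-1] = (name, gantt[-1][1], clock + 1)   # extend current slot
        else:
            gantt.append((name, clock, clock + 1))        # new slot after a switch
        remaining[name] -= 1
        clock += 1
        if remaining[name] == 0:
            del remaining[name]
            finish[name] = clock
    # waiting time = time in system minus time spent on the CPU
    waits = {n: finish[n] - release - burst for n, (release, burst) in jobs.items()}
    return gantt, waits


jobs = {"P1": (0, 7), "P2": (2, 4), "P3": (4, 1), "P4": (5, 4)}
gantt, waits = srtf(jobs)
print(gantt)
print(waits, sum(waits.values()) / len(waits))  # {'P1': 9, 'P2': 1, 'P3': 0, 'P4': 2} 3.0
```

Compared with the non-preemptive schedule for the same jobs, preemption lowers the average waiting time from 4 to 3.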

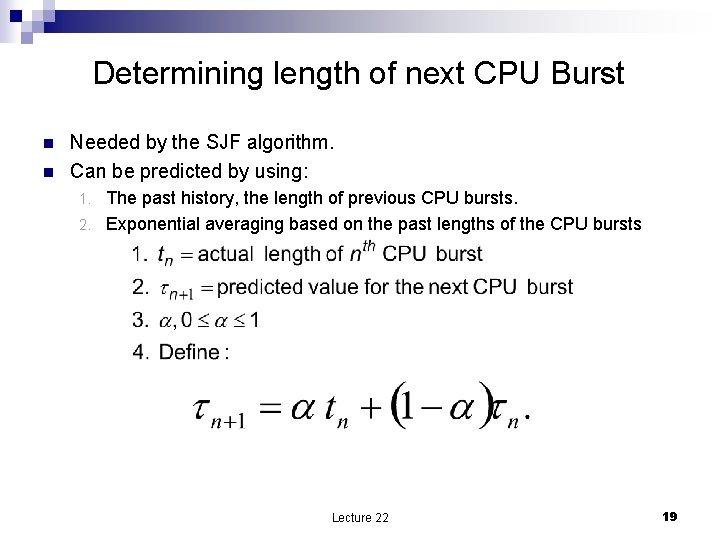

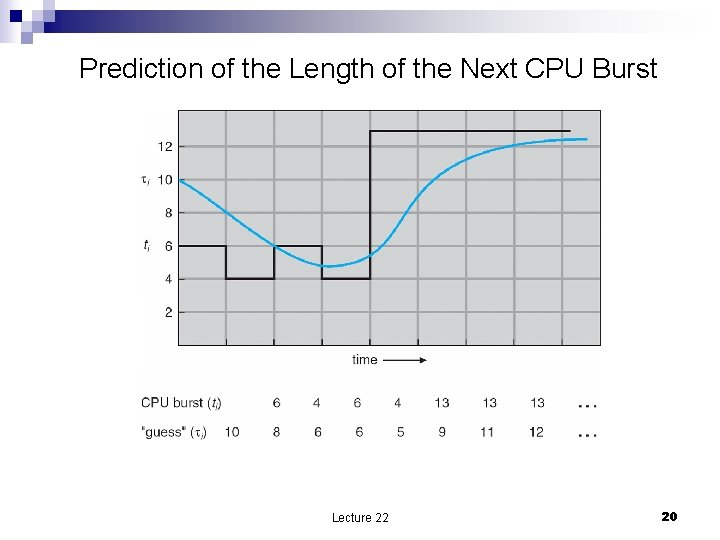

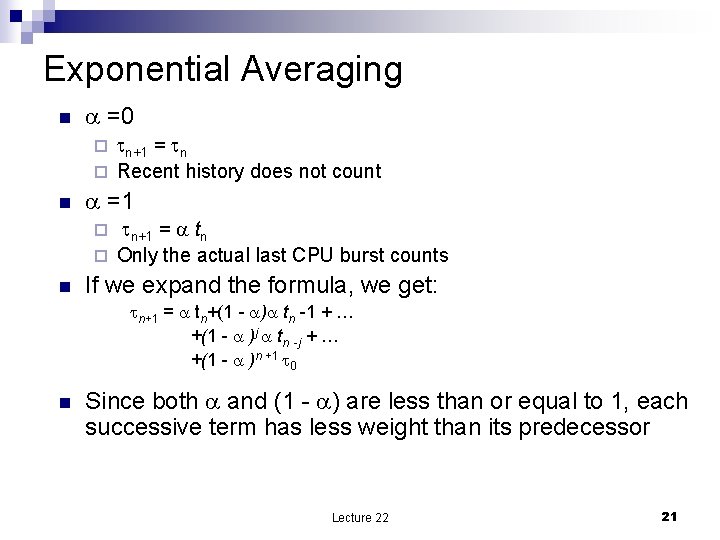

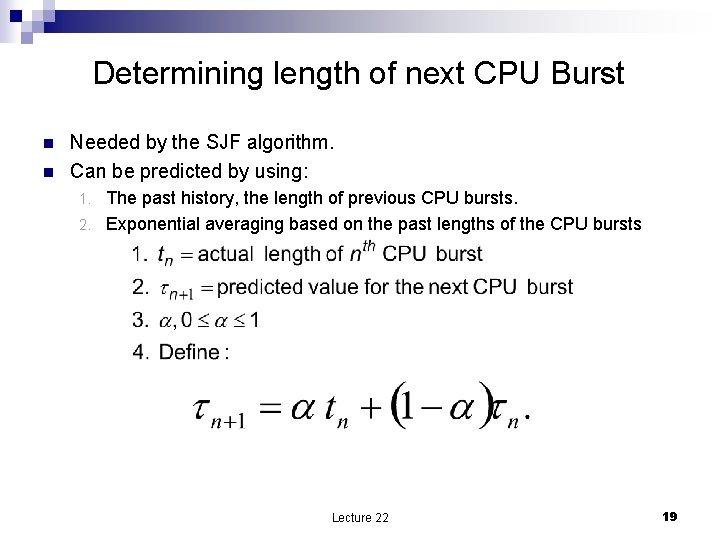

Determining length of next CPU Burst
- Needed by the SJF algorithm.
- Can be predicted by using:
  1. The past history: the length of previous CPU bursts.
  2. Exponential averaging based on the past lengths of the CPU bursts.

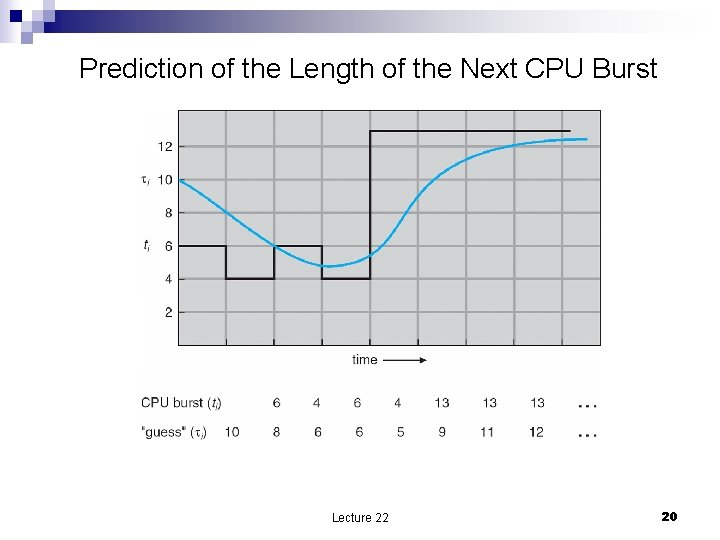

Prediction of the Length of the Next CPU Burst

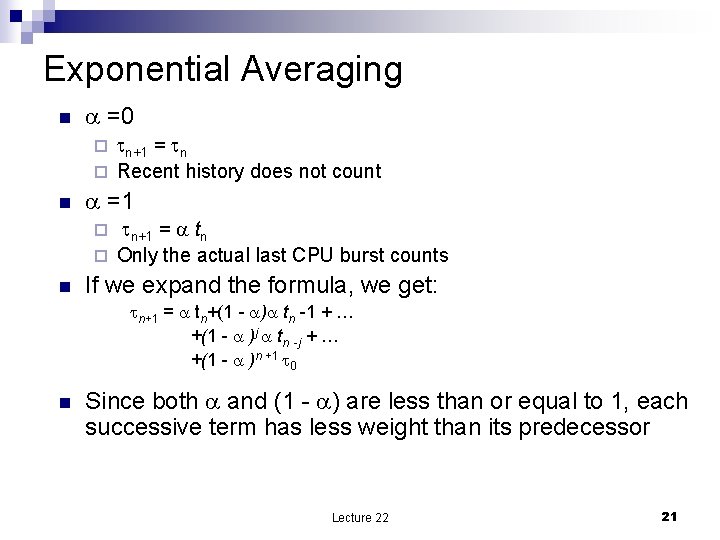

Exponential Averaging
- $\alpha = 0$: $\tau_{n+1} = \tau_n$
  - Recent history does not count.
- $\alpha = 1$: $\tau_{n+1} = t_n$
  - Only the actual last CPU burst counts.
- If we expand the formula, we get:
  $\tau_{n+1} = \alpha t_n + (1 - \alpha)\alpha t_{n-1} + \dots + (1 - \alpha)^j \alpha t_{n-j} + \dots + (1 - \alpha)^{n+1} \tau_0$
- Since both $\alpha$ and $(1 - \alpha)$ are less than or equal to 1, each successive term has less weight than its predecessor.
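The recurrence behind the expansion can be sketched in code. The sketch assumes the usual form of exponential averaging, tau_{n+1} = alpha * t_n + (1 - alpha) * tau_n, where t_n is the measured length of the n-th burst and tau_n its prediction; the burst lengths and the initial guess of 10 are hypothetical values chosen for illustration.

```python
def predict_bursts(bursts, alpha, tau0):
    """Return [tau_0, tau_1, ...] using tau_{n+1} = alpha*t_n + (1 - alpha)*tau_n."""
    predictions = [tau0]
    for t in bursts:
        predictions.append(alpha * t + (1 - alpha) * predictions[-1])
    return predictions


bursts = [6, 4, 6, 4, 13, 13, 13]         # hypothetical measured CPU bursts
print(predict_bursts(bursts, 0.5, 10))    # [10, 8.0, 6.0, 6.0, 5.0, 9.0, 11.0, 12.0]
print(predict_bursts(bursts, 0, 10))      # alpha = 0: the prediction never changes
print(predict_bursts(bursts, 1, 10))      # alpha = 1: prediction = last actual burst
```

With alpha = 0.5 the prediction follows the jump from short to long bursts with a lag of a few bursts, which is the trade-off alpha controls.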

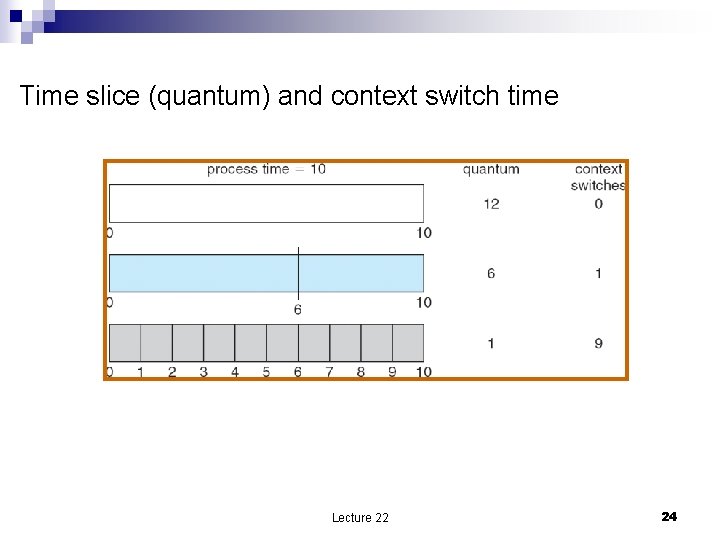

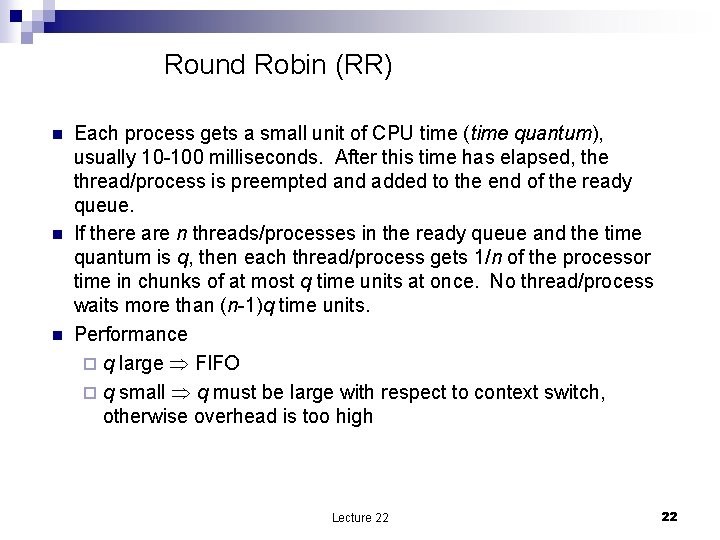

Round Robin (RR)
- Each process gets a small unit of CPU time (time quantum), usually 10-100 milliseconds. After this time has elapsed, the thread/process is preempted and added to the end of the ready queue.
- If there are n threads/processes in the ready queue and the time quantum is q, then each thread/process gets 1/n of the processor time in chunks of at most q time units at once. No thread/process waits more than (n-1)q time units.
- Performance:
  - q large: RR behaves like FIFO.
  - q small: q must still be large with respect to the context switch time; otherwise the overhead is too high.

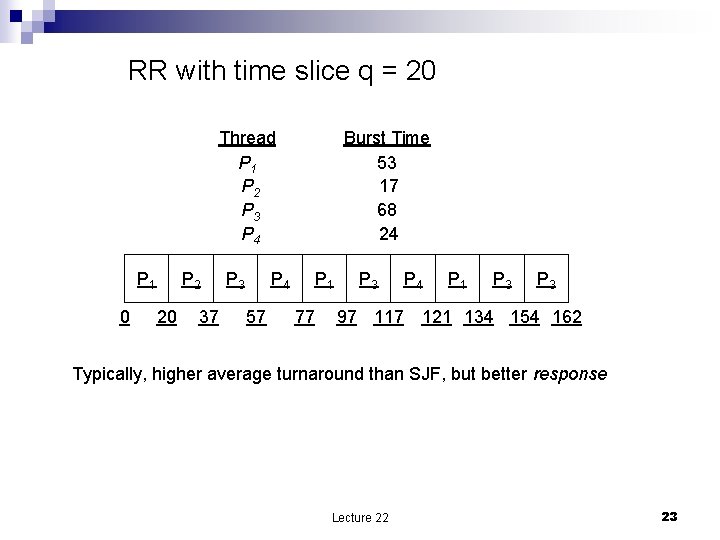

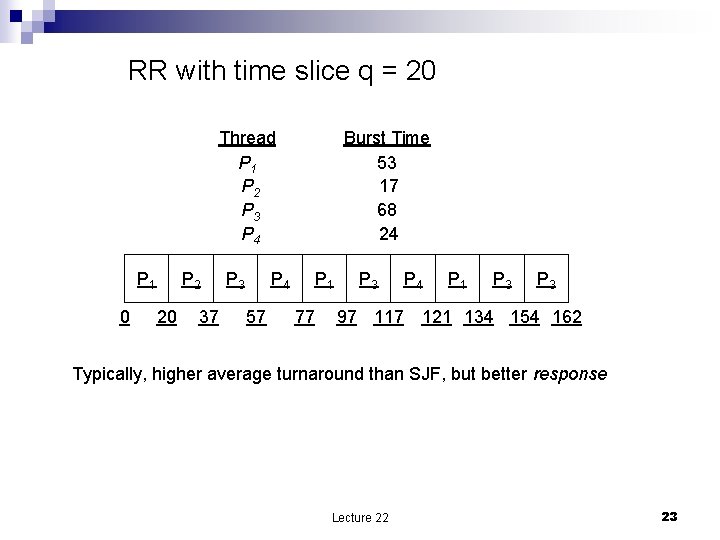

RR with time slice q = 20

| Thread | Burst Time |
|--------|------------|
| P1     | 53         |
| P2     | 17         |
| P3     | 68         |
| P4     | 24         |

- Gantt chart: P1 (0-20), P2 (20-37), P3 (37-57), P4 (57-77), P1 (77-97), P3 (97-117), P4 (117-121), P1 (121-134), P3 (134-154), P3 (154-162)
- Typically, higher average turnaround than SJF, but better response.
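The Gantt chart can be regenerated with a queue-based simulation. This is a minimal sketch that assumes all threads are ready at time 0 and ignores context switch time; `round_robin` is a hypothetical name.

```python
from collections import deque


def round_robin(bursts, quantum):
    """bursts: name -> burst time. Returns the Gantt chart as (name, start, end)."""
    queue = deque(bursts.items())          # ready queue in arrival order
    gantt, clock = [], 0
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)      # run one quantum or until done
        gantt.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))   # preempted: back of the queue
    return gantt


gantt = round_robin({"P1": 53, "P2": 17, "P3": 68, "P4": 24}, quantum=20)
for name, begin, end in gantt:
    print(name, begin, end)
```

The last two slots both belong to P3: it is the only thread left, so after each quantum it is immediately dispatched again.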

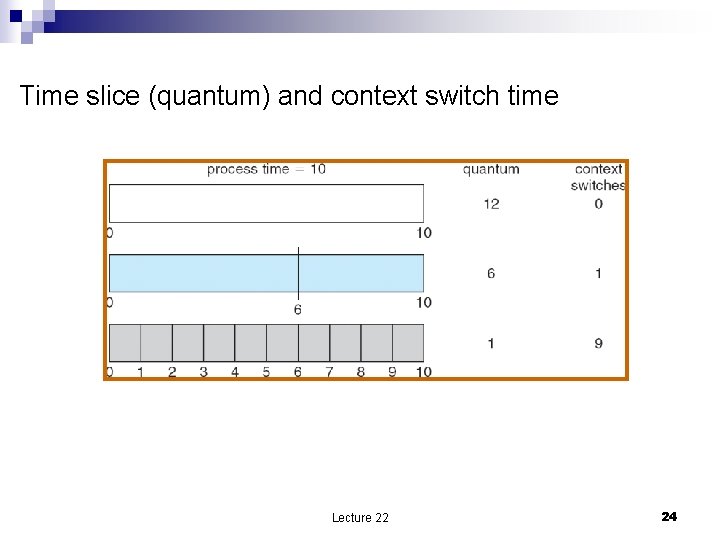

Time slice (quantum) and context switch time

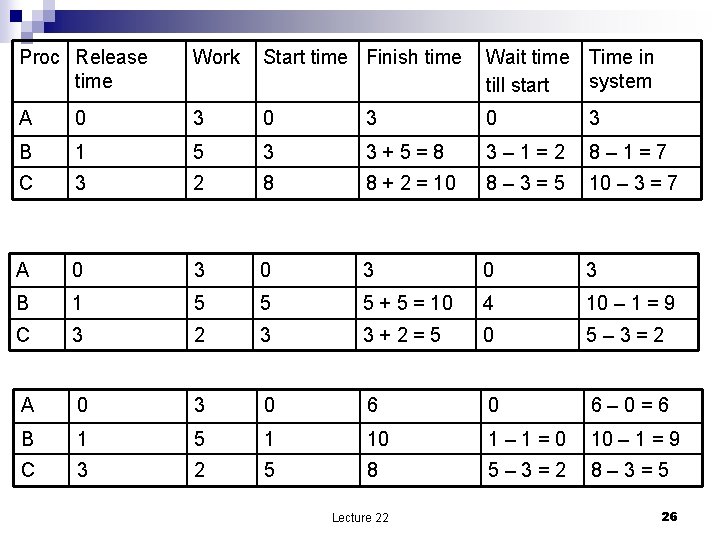

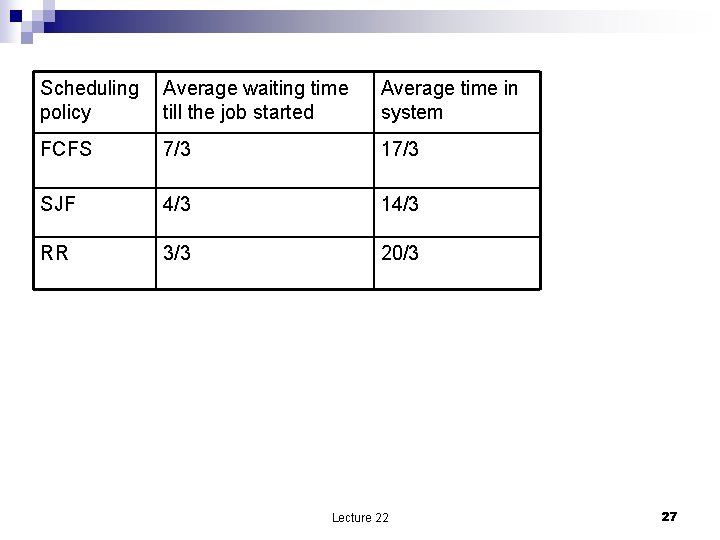

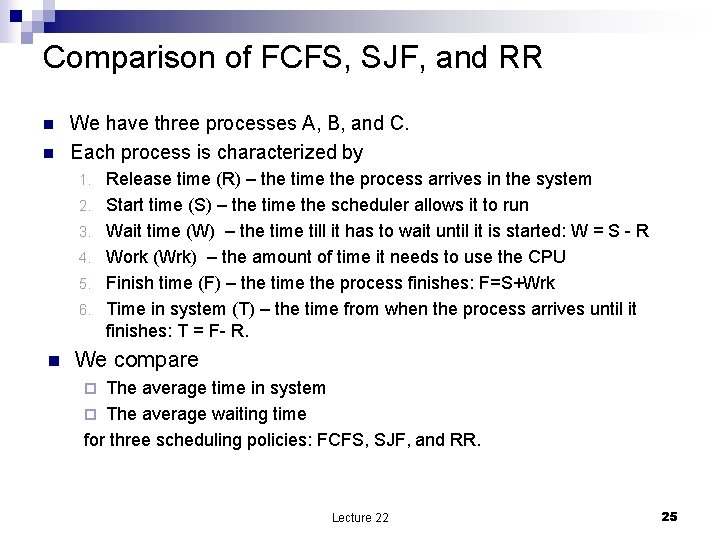

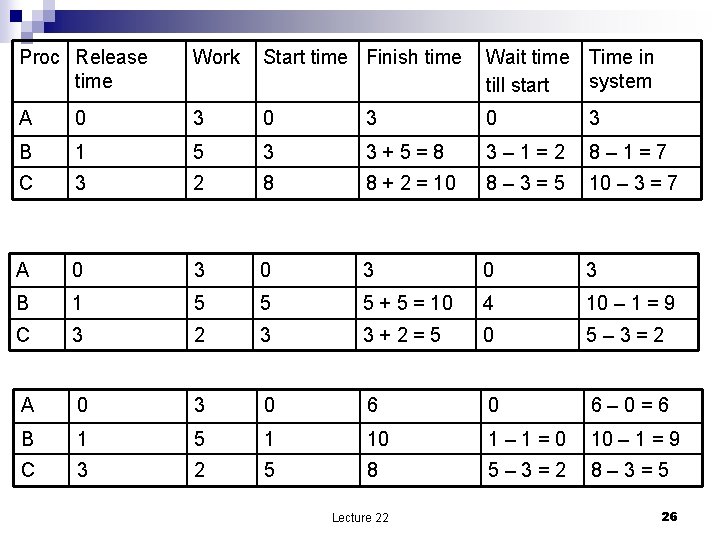

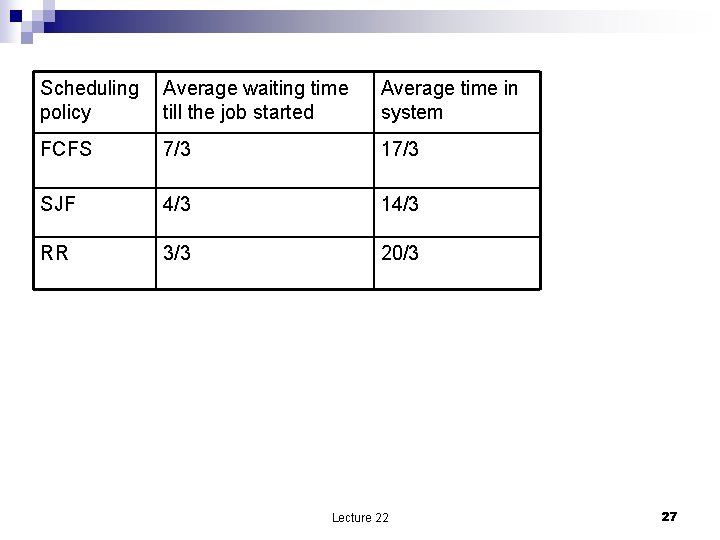

Comparison of FCFS, SJF, and RR
- We have three processes A, B, and C.
- Each process is characterized by:
  1. Release time (R) – the time the process arrives in the system
  2. Start time (S) – the time the scheduler allows it to run
  3. Wait time (W) – the time it has to wait until it is started: W = S - R
  4. Work (Wrk) – the amount of time it needs to use the CPU
  5. Finish time (F) – the time the process finishes: F = S + Wrk
  6. Time in system (T) – the time from when the process arrives until it finishes: T = F - R
- We compare, for three scheduling policies (FCFS, SJF, and RR):
  - the average time in system;
  - the average waiting time.

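| Policy | Proc | Release time | Work | Start time | Finish time | Wait time till start | Time in system |
|--------|------|--------------|------|------------|-------------|----------------------|----------------|
| FCFS   | A    | 0            | 3    | 0          | 3           | 0                    | 3              |
| FCFS   | B    | 1            | 5    | 3          | 3 + 5 = 8   | 3 – 1 = 2            | 8 – 1 = 7      |
| FCFS   | C    | 3            | 2    | 8          | 8 + 2 = 10  | 8 – 3 = 5            | 10 – 3 = 7     |
| SJF    | A    | 0            | 3    | 0          | 3           | 0                    | 3              |
| SJF    | B    | 1            | 5    | 5          | 5 + 5 = 10  | 4                    | 10 – 1 = 9     |
| SJF    | C    | 3            | 2    | 3          | 3 + 2 = 5   | 0                    | 5 – 3 = 2      |
| RR     | A    | 0            | 3    | 0          | 6           |                      | 6 – 0 = 6      |
| RR     | B    | 1            | 5    | 1          | 10          | 1 – 1 = 0            | 10 – 1 = 9     |
| RR     | C    | 3            | 2    | 5          | 8           | 5 – 3 = 2            | 8 – 3 = 5      |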

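| Scheduling policy | Average waiting time till the job started | Average time in system |
|-------------------|-------------------------------------------|------------------------|
| FCFS              | 7/3                                       | 17/3                   |
| SJF               | 4/3                                       | 14/3                   |
| RR                | 3/3                                       | 20/3                   |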
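The FCFS averages follow directly from the definitions W = S - R, F = S + Wrk, and T = F - R. The sketch below applies them to the FCFS start times (0, 3, 8) from the comparison; the function name `metrics` is hypothetical, and exact fractions are used so that the results print as 7/3 and 17/3.

```python
from fractions import Fraction


def metrics(release, start, work):
    """Apply W = S - R, F = S + Wrk, T = F - R to one process."""
    wait = start - release
    finish = start + work
    time_in_system = finish - release
    return wait, finish, time_in_system


# (release, start, work) for A, B, C under FCFS
fcfs = {"A": (0, 0, 3), "B": (1, 3, 5), "C": (3, 8, 2)}
rows = {name: metrics(*values) for name, values in fcfs.items()}

avg_wait = Fraction(sum(w for w, _, _ in rows.values()), len(rows))
avg_time = Fraction(sum(t for _, _, t in rows.values()), len(rows))
print(rows)                 # {'A': (0, 3, 3), 'B': (2, 8, 7), 'C': (5, 10, 7)}
print(avg_wait, avg_time)   # 7/3 17/3
```

The relation F = S + Wrk holds only when a process runs without interruption. Under RR a process is preempted, so its finish time has to be read from the schedule rather than computed from its start time.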

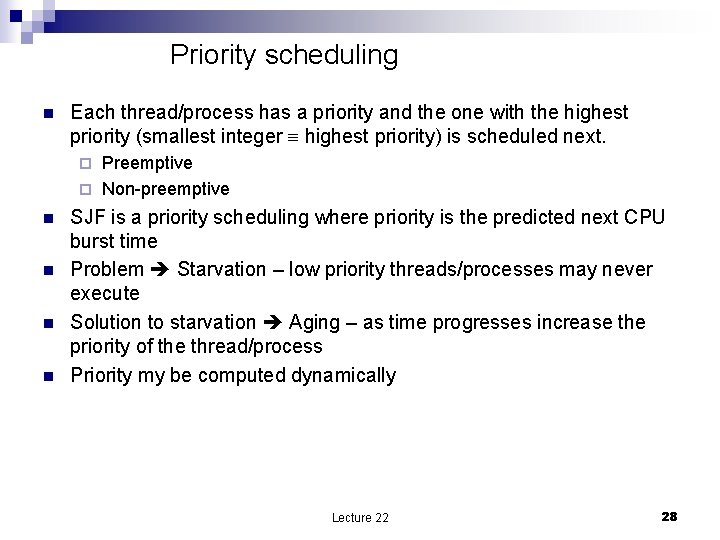

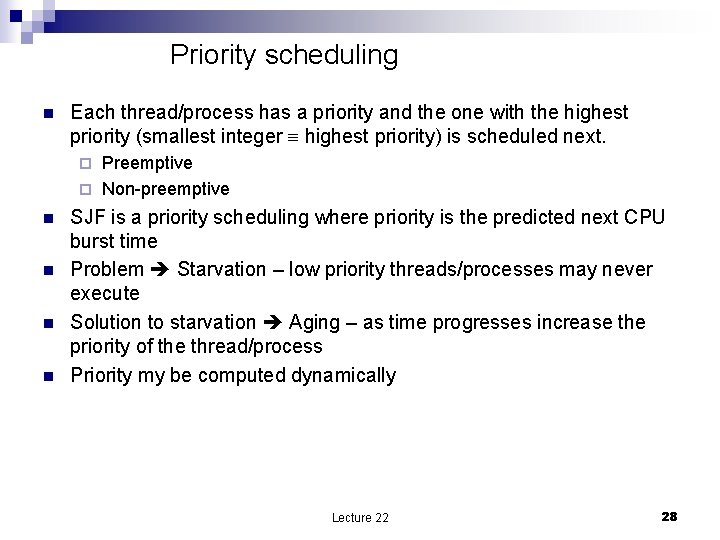

Priority scheduling
- Each thread/process has a priority, and the one with the highest priority (smallest integer = highest priority) is scheduled next. Two variants:
  - Preemptive
  - Non-preemptive
- SJF is a priority scheduling policy where the priority is the predicted next CPU burst time.
- Problem: starvation – low priority threads/processes may never execute.
- Solution to starvation: aging – as time progresses, increase the priority of the thread/process.
- Priority may be computed dynamically.
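Starvation and aging can be illustrated with a toy model. It is a sketch under strong assumptions: one low-priority job waits while a fresh high-priority job arrives at every tick, a tie goes to the newcomer, and aging lowers the waiting job's priority number by `aging_step` per tick. The name `ticks_until_low_runs` is hypothetical.

```python
def ticks_until_low_runs(low_priority, high_priority, aging_step, horizon=100):
    """Tick at which the waiting low-priority job is dispatched, or None if it starves.

    Smaller integer = higher priority. A new job with high_priority arrives each tick.
    """
    effective = low_priority
    for tick in range(horizon):
        if effective < high_priority:   # the aged job now beats the newcomer
            return tick
        effective -= aging_step         # aging: waiting raises the priority
    return None


print(ticks_until_low_runs(10, 5, aging_step=0))  # None
print(ticks_until_low_runs(10, 5, aging_step=1))  # 6
```

Without aging the low-priority job never runs within the horizon; with aging its wait is bounded by the initial priority gap.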

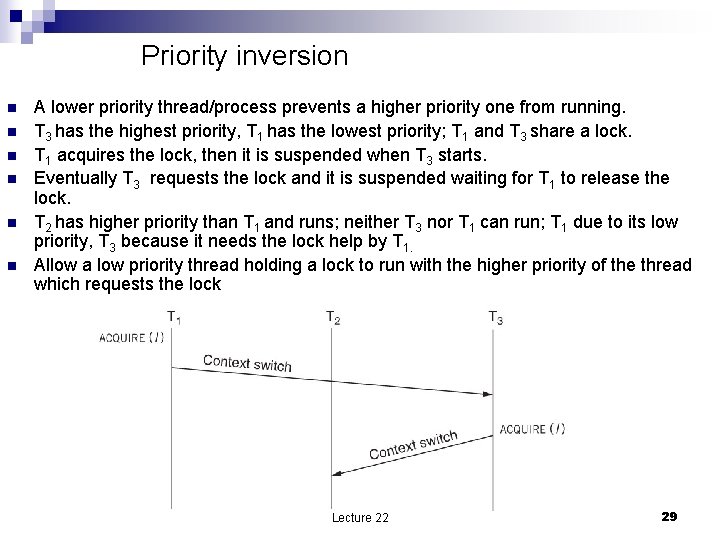

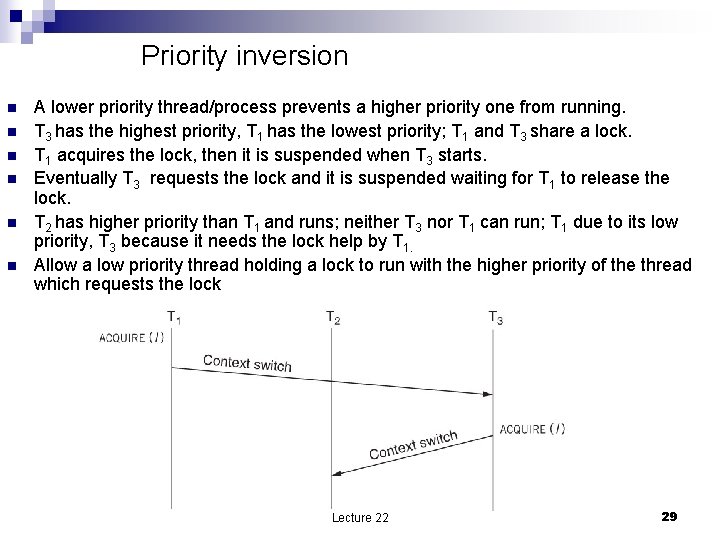

Priority inversion
- A lower priority thread/process prevents a higher priority one from running.
- T3 has the highest priority, T1 has the lowest priority; T1 and T3 share a lock.
- T1 acquires the lock, then it is suspended when T3 starts.
- Eventually T3 requests the lock and is suspended, waiting for T1 to release the lock.
- T2 has higher priority than T1 and runs; neither T3 nor T1 can run: T1 due to its low priority, T3 because it needs the lock held by T1.
- Solution: allow a low priority thread holding a lock to run with the higher priority of the thread which requests the lock.

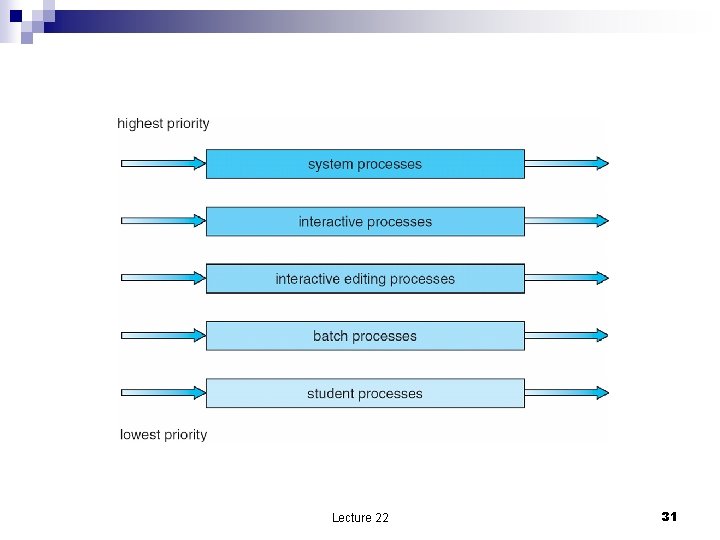

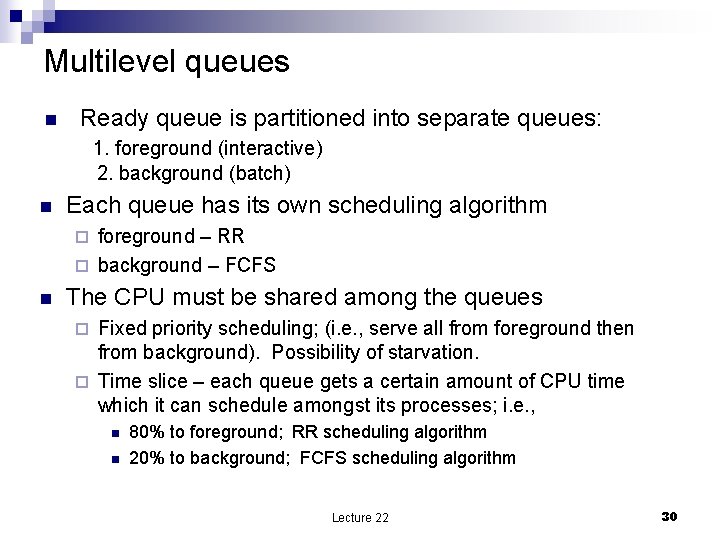

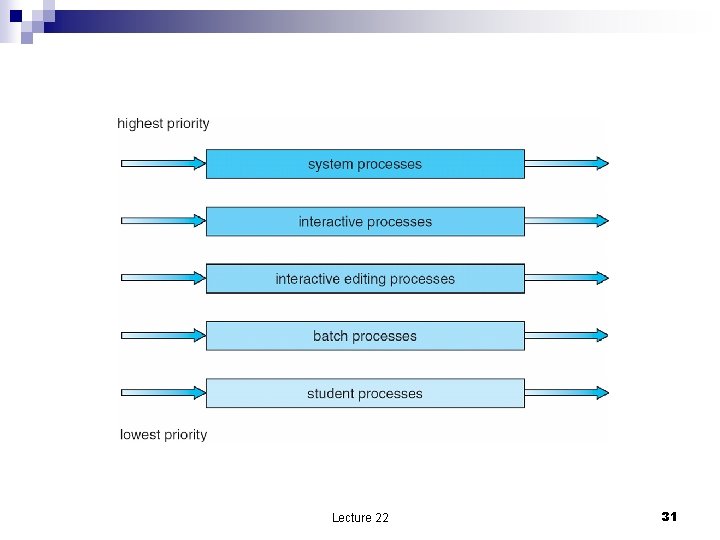

Multilevel queues
- The ready queue is partitioned into separate queues:
  1. foreground (interactive)
  2. background (batch)
- Each queue has its own scheduling algorithm:
  - foreground – RR
  - background – FCFS
- The CPU must be shared among the queues:
  - Fixed priority scheduling (i.e., serve all from foreground, then from background). Possibility of starvation.
  - Time slice – each queue gets a certain amount of CPU time which it can schedule amongst its processes; i.e.,
    - 80% to foreground; RR scheduling algorithm
    - 20% to background; FCFS scheduling algorithm


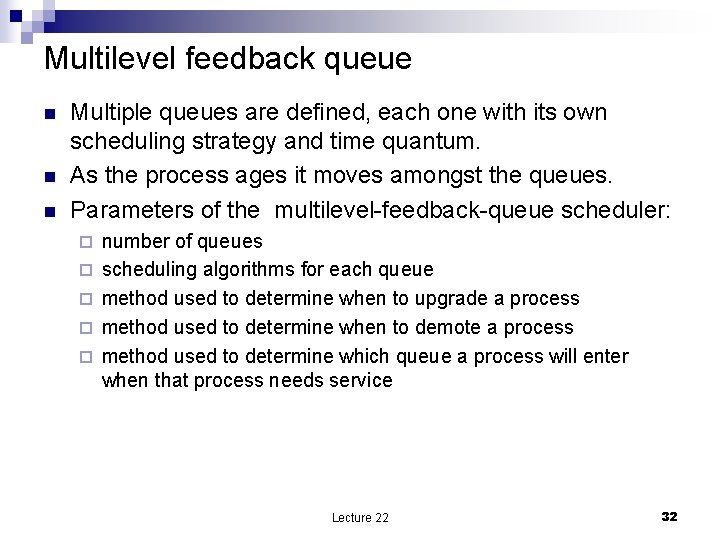

Multilevel feedback queue
- Multiple queues are defined, each one with its own scheduling strategy and time quantum.
- As the process ages it moves amongst the queues.
- Parameters of the multilevel-feedback-queue scheduler:
  - number of queues
  - scheduling algorithms for each queue
  - method used to determine when to upgrade a process
  - method used to determine when to demote a process
  - method used to determine which queue a process will enter when that process needs service

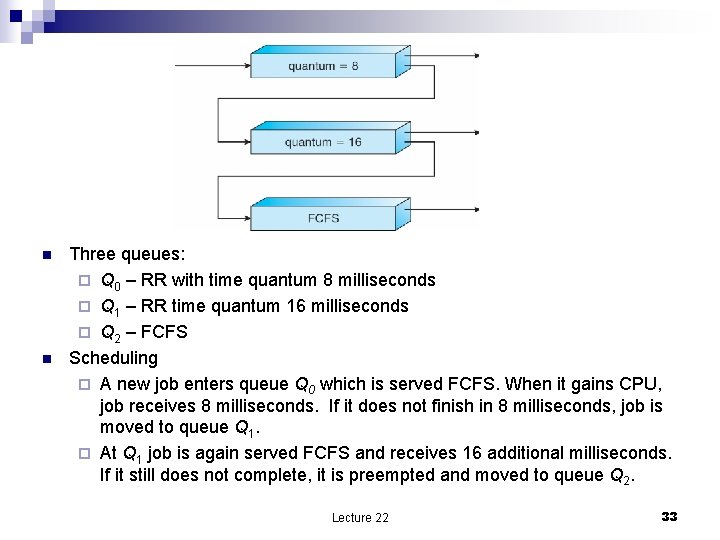

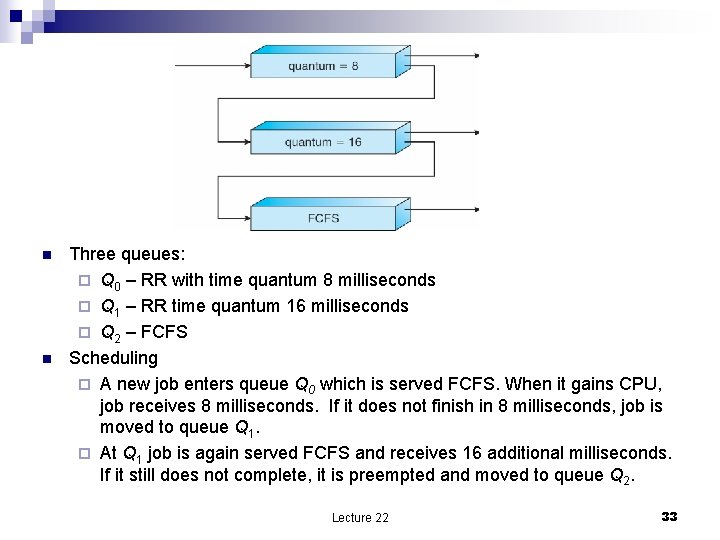

- Three queues:
  - Q0 – RR with time quantum 8 milliseconds
  - Q1 – RR with time quantum 16 milliseconds
  - Q2 – FCFS
- Scheduling:
  - A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds. If it does not finish in 8 milliseconds, the job is moved to queue Q1.
  - At Q1 the job is again served FCFS and receives 16 additional milliseconds. If it still does not complete, it is preempted and moved to queue Q2.
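The path of a single job through the three queues can be traced with a short sketch. It assumes the job is alone in the system (no competition from other jobs) and that its CPU burst is given in milliseconds; `mlfq_trace` is a hypothetical name.

```python
def mlfq_trace(burst, quanta=(8, 16)):
    """Return [(queue, ms_run), ...] for one job with the given CPU burst."""
    trace, remaining = [], burst
    for level, quantum in enumerate(quanta):
        run = min(quantum, remaining)
        trace.append((f"Q{level}", run))
        remaining -= run
        if remaining == 0:
            return trace                   # finished within this queue's quantum
    # the last queue is FCFS: the job runs to completion
    trace.append((f"Q{len(quanta)}", remaining))
    return trace


print(mlfq_trace(5))    # [('Q0', 5)]
print(mlfq_trace(20))   # [('Q0', 8), ('Q1', 12)]
print(mlfq_trace(30))   # [('Q0', 8), ('Q1', 16), ('Q2', 6)]
```

Short jobs complete in Q0, while long CPU-bound jobs sink to Q2, which is how the scheme favors interactive work without knowing burst lengths in advance.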

Thread scheduling
- Distinction between user-level and kernel-level threads.
- In the many-to-one and many-to-many models, the thread library schedules user-level threads to run on an LWP.
  - Known as process-contention scope (PCS), since scheduling competition is within the process.
- A kernel thread scheduled onto an available CPU uses system-contention scope (SCS): competition among all threads in the system.

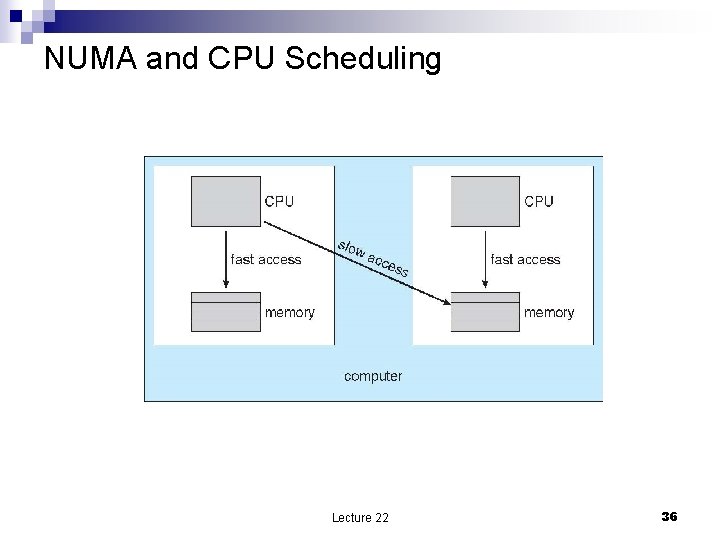

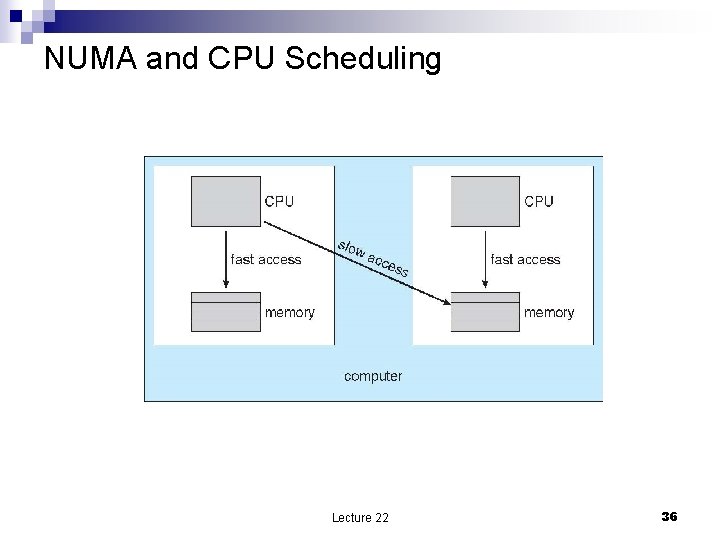

Multiple-Processor Scheduling
- CPU scheduling is more complex when multiple CPUs are available.
- Homogeneous processors within a multiprocessor.
- Asymmetric multiprocessing – only one processor accesses the system data structures, alleviating the need for data sharing.
- Symmetric multiprocessing (SMP) – each processor is self-scheduling; all processes are in a common ready queue, or each processor has its own private queue of ready processes.
- NUMA – Non-Uniform Memory Access.
- Processor affinity – a process has affinity for the processor on which it is currently running:
  - soft affinity
  - hard affinity

NUMA and CPU Scheduling

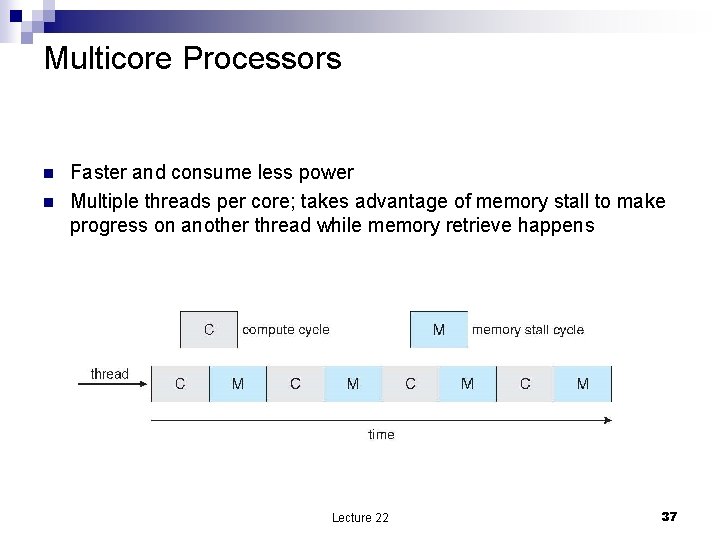

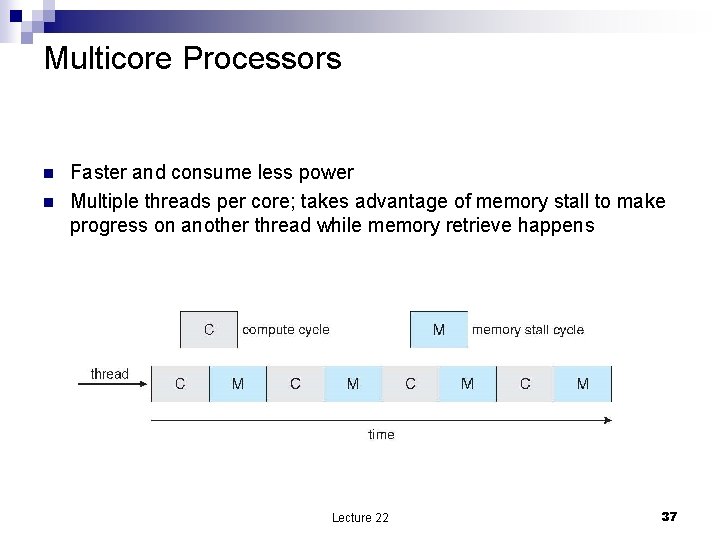

Multicore Processors
- Faster and consume less power.
- Multiple threads per core: takes advantage of a memory stall to make progress on another thread while the memory retrieval happens.

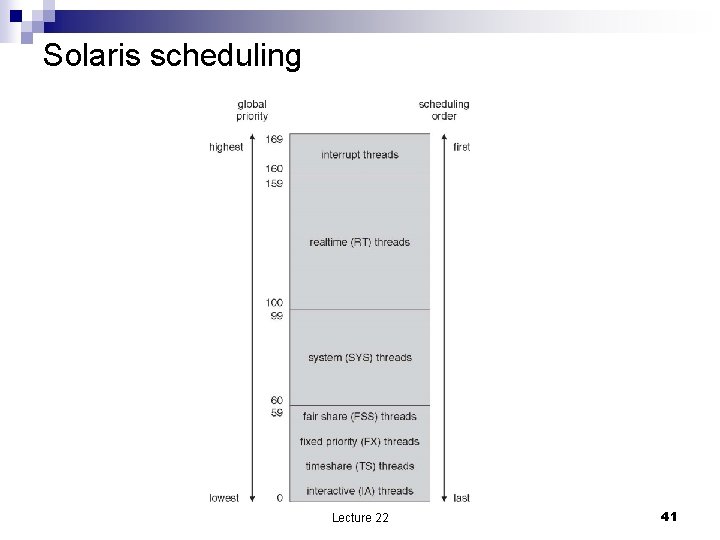

Solaris scheduling
- The Solaris 10 kernel threads model consists of the following objects:
  - kernel threads: this is what is scheduled/executed on a processor.
  - user threads: the user-level thread state within a process.
  - process: the object that tracks the execution environment of a program.
  - lightweight process (lwp): execution context for a user thread; associates a user thread with a kernel thread.
- Fair Share Scheduler (FSS) allows more flexible process priority management.
  - Each project is allocated a certain number of CPU shares via the project.cpu-shares resource control.
  - Each project is allocated CPU time based on its cpu-shares value divided by the sum of the cpu-shares values for all active projects.
  - Anything with a zero cpu-shares value will not be granted CPU time until all projects with non-zero cpu-shares are done with the CPU.
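The share arithmetic described for FSS can be sketched as follows. This only illustrates the ratio (a project's cpu-shares divided by the sum over all active projects) and is not Solaris code; the project names and share values are hypothetical.

```python
from fractions import Fraction


def cpu_fractions(shares):
    """shares: active project -> cpu-shares. Returns project -> fraction of CPU time."""
    total = sum(shares.values())
    if total == 0:
        # simplification: with no non-zero shares this sketch assigns nothing
        return {project: Fraction(0) for project in shares}
    return {project: Fraction(value, total) for project, value in shares.items()}


shares = {"web": 30, "db": 50, "batch": 20, "idle": 0}   # hypothetical projects
fractions = cpu_fractions(shares)
for project, fraction in fractions.items():
    print(project, fraction)
```

A project's entitlement depends on which other projects are active: if "db" becomes inactive, "web" is entitled to 30/50 of the CPU instead of 30/100.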

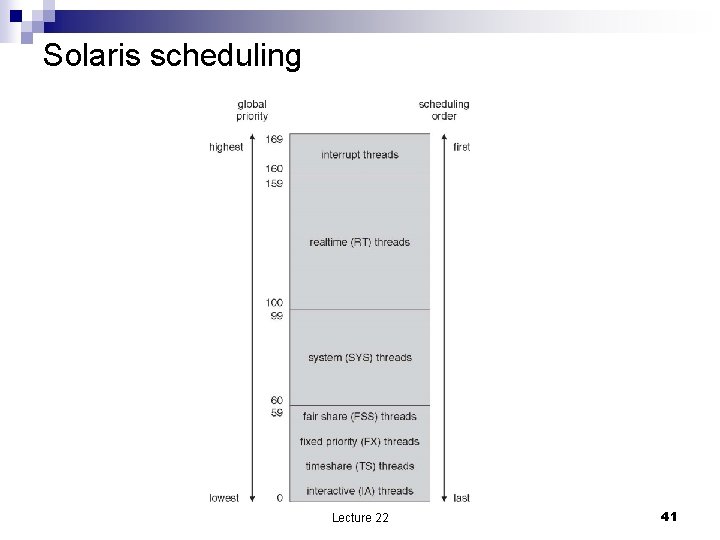

Scheduling classes
- TS (timeshare): default class for processes and their associated kernel threads. Priority range 0-59; priorities are dynamically adjusted (they vary during the lifetime of a process) to allocate processor resources evenly.
- IA (interactive): enhanced version of the TS class that applies to the in-focus window in the GUI. Its intent is to give extra resources to processes associated with that specific window. Like TS, IA's range is 0-59.
- FSS (fair-share scheduler): share-based rather than priority-based. Threads are scheduled based on their associated shares and the processor's utilization. FSS also has a range of 0-59.
- FX (fixed-priority): the priorities for threads associated with this class are fixed; they do not vary dynamically over the lifetime of the thread. Range 0-59.
- SYS (system): used to schedule kernel threads. Threads in this class are "bound" threads, which means that they run until they block or complete. Priorities for SYS threads are in the 60-99 range.
- RT (real-time): threads in the RT class are fixed-priority, with a fixed time quantum. Their priorities range from 100 to 159, so an RT thread will preempt a system thread.

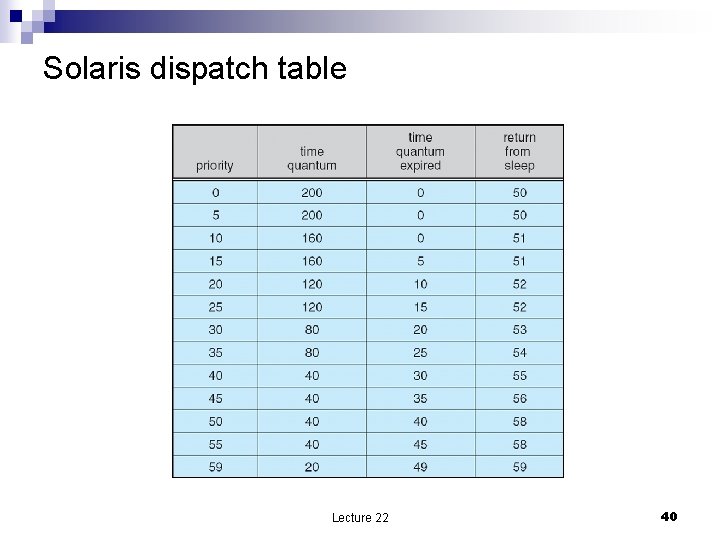

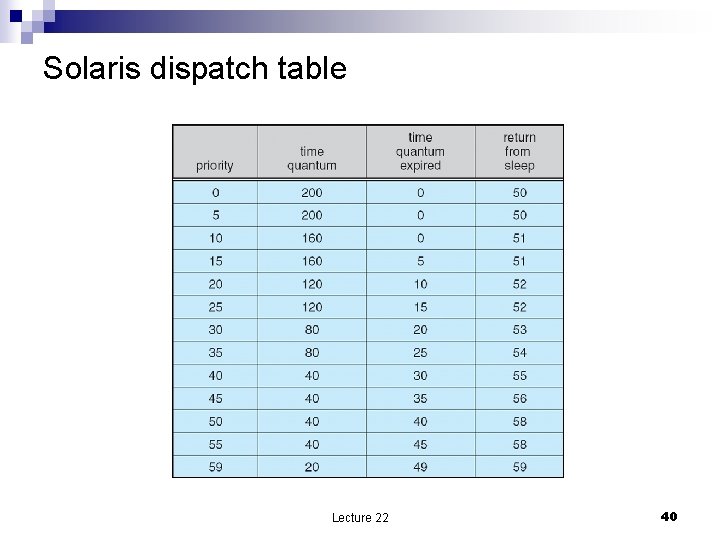

Solaris dispatch table

Solaris scheduling

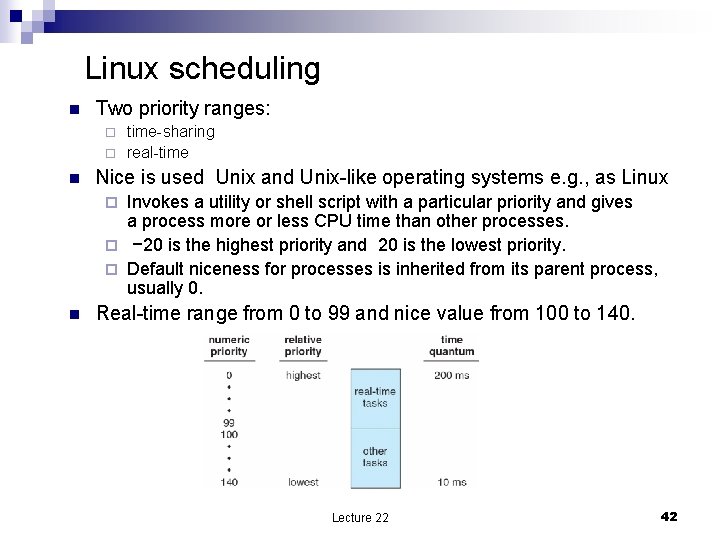

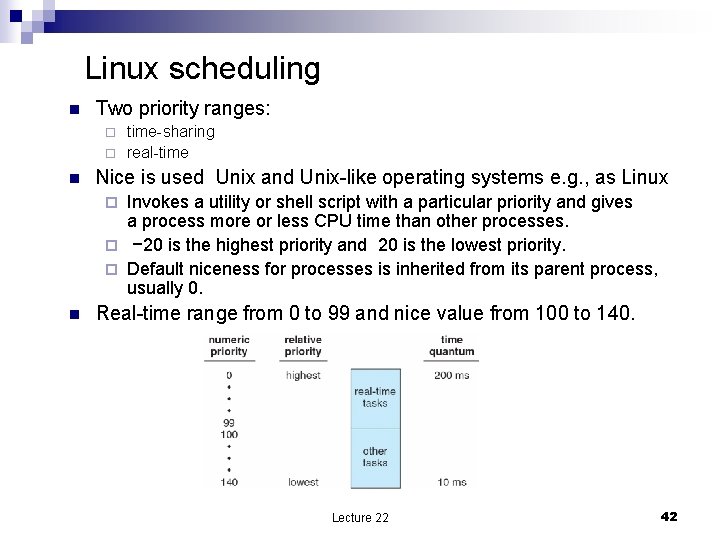

Linux scheduling
- Two priority ranges:
  - time-sharing
  - real-time
- nice is used on Unix and Unix-like operating systems, e.g., Linux:
  - Invokes a utility or shell script with a particular priority and gives a process more or less CPU time than other processes.
  - −20 is the highest priority and 20 is the lowest priority.
  - The default niceness of a process is inherited from its parent process, usually 0.
- Real-time priorities range from 0 to 99 and nice values range from 100 to 140.
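A process can also inspect and change its own niceness programmatically. The sketch below uses `os.nice` from Python's standard library, which is available on Unix-like systems only; it assumes the process is allowed to raise its niceness, which normally requires no privileges.

```python
import os

current = os.nice(0)    # an increment of 0 returns the niceness unchanged
print("current niceness:", current)

lowered = os.nice(1)    # raise niceness by 1, i.e. ask for a lower priority
print("after os.nice(1):", lowered)
```

An unprivileged process can typically only increase its niceness; decreasing it (asking for a higher priority) usually requires elevated privileges.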

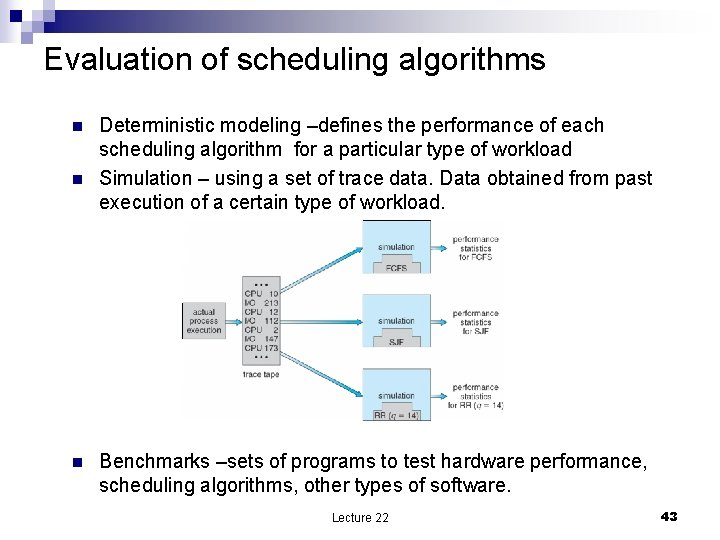

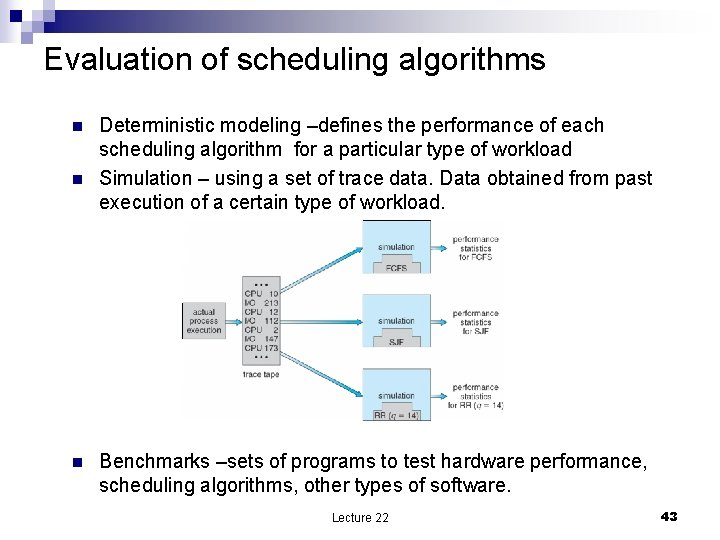

Evaluation of scheduling algorithms
- Deterministic modeling – defines the performance of each scheduling algorithm for a particular type of workload.
- Simulation – using a set of trace data, i.e., data obtained from past execution of a certain type of workload.
- Benchmarks – sets of programs to test hardware performance, scheduling algorithms, and other types of software.