Caption Generation with Attention Model (2015.11.17)


- Slides: 17

Jonghwan Mun, 2015.11.17

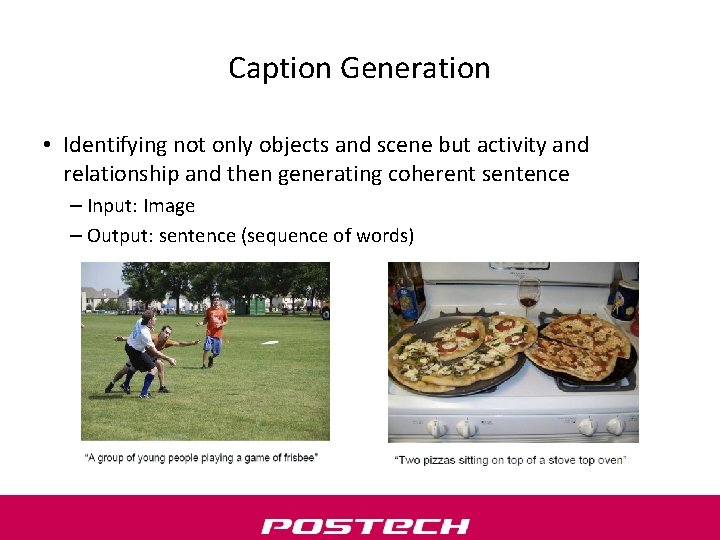

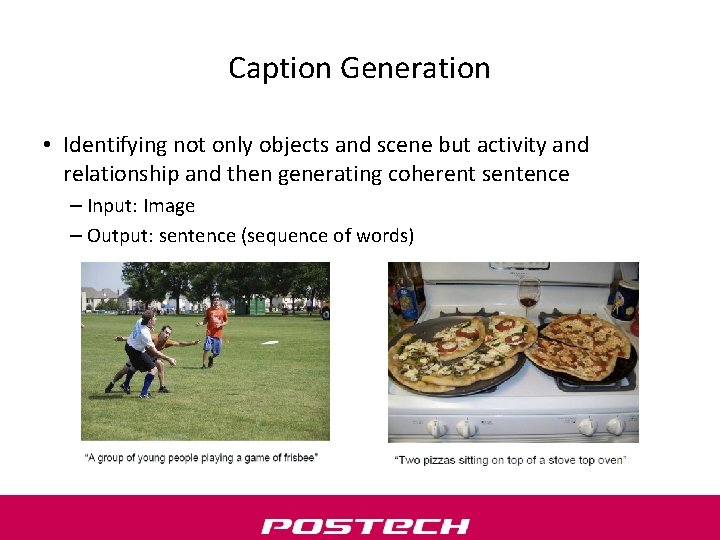

Caption Generation
- Identify not only the objects and the scene but also activities and relationships, and then generate a coherent sentence
  - Input: image
  - Output: sentence (sequence of words)

![Existing Methods](https://slidetodoc.com/presentation_image_h2/487e3c5487a4170007bc8784bd8fb7a2/image-3.jpg)

Existing Methods
- Using detectors
  - Embedding [Farhadi 10]
  - Filling a pre-defined template [Kulkarni 11]
- Using neural networks
  - Encoder-Decoder [Vinyals 15]
  - Attention model [Xu 15] (LSTM)

Attention Model
- At every step, select the features relevant to the next word

(Diagram: starting from <INIT>, the RNN takes <BOS>, man, is, standing as inputs and, with attention at each step, outputs man, is, standing, <EOS>.)
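The word-by-word generation in the diagram can be sketched as a greedy decoding loop. This is a minimal illustration, not the presented model: `step` is a hypothetical callable standing in for one RNN-plus-attention step, and `scripted_step` simply replays the caption from the slide so the loop can be run.

```python
from typing import Any, Callable, List, Sequence, Tuple

# Hypothetical step signature: (previous word, recurrent state, image features)
# -> (next word, new state). A real step would run the RNN, attend over the
# features to build a context, and pick the most likely next word.
StepFn = Callable[[str, Any, Sequence[Sequence[float]]], Tuple[str, Any]]


def greedy_decode(step: StepFn, init_state: Any,
                  features: Sequence[Sequence[float]], max_len: int = 20) -> List[str]:
    """Feed <BOS>, then each generated word back in, until <EOS> or max_len words."""
    words: List[str] = []
    prev, state = "<BOS>", init_state  # init_state plays the role of <INIT>
    for _ in range(max_len):
        word, state = step(prev, state, features)  # attention happens inside step
        if word == "<EOS>":
            break
        words.append(word)
        prev = word
    return words


def scripted_step(prev: str, state: Any,
                  features: Sequence[Sequence[float]]) -> Tuple[str, Any]:
    """Hypothetical stand-in for a trained model: replays the caption from the slide."""
    script = ["man", "is", "standing", "<EOS>"]
    return script[state], state + 1


print(greedy_decode(scripted_step, 0, [[0.0]]))  # ['man', 'is', 'standing']
```

Greedy decoding is used only to keep the sketch short; beam search is a common alternative.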

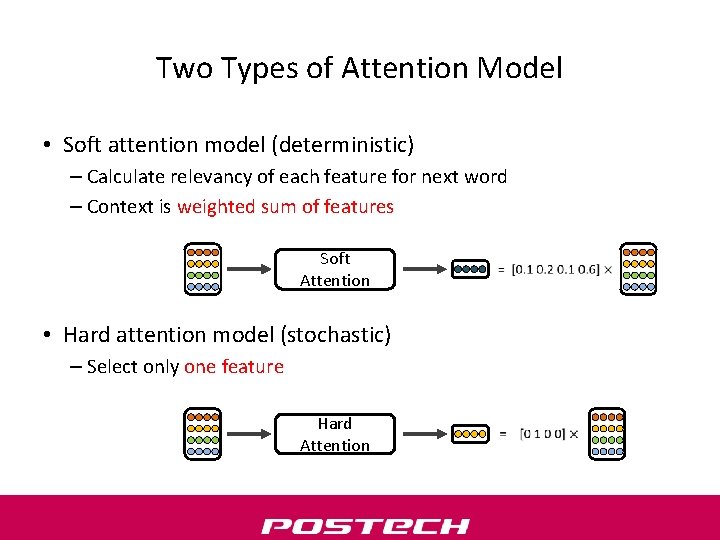

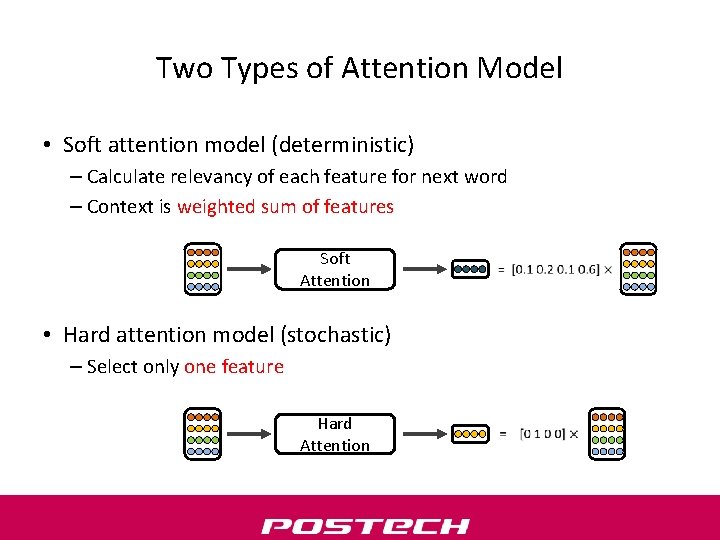

Two Types of Attention Model
- Soft attention model (deterministic)
  - Compute the relevance of each feature to the next word
  - The context is a weighted sum of the features
- Hard attention model (stochastic)
  - Select only one feature

(Figures: Soft Attention, Hard Attention)
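For reference, the two variants can be written as below, following the notation of Xu et al. (2015) as we recall it: $a_1, \dots, a_L$ are the image feature vectors, $h_{t-1}$ is the previous LSTM state, and $f_{\mathrm{att}}$ is a learned scoring function whose exact form the slides do not specify.

```latex
\begin{aligned}
e_{t,i} &= f_{\mathrm{att}}(a_i, h_{t-1}), \qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{k=1}^{L} \exp(e_{t,k})} \\
\text{soft:}\quad \hat{z}_t &= \sum_{i=1}^{L} \alpha_{t,i}\, a_i \\
\text{hard:}\quad s_t &\sim \mathrm{Multinoulli}_L(\{\alpha_{t,i}\}), \qquad \hat{z}_t = a_{s_t}
\end{aligned}
```

Both variants share the weights $\alpha_{t,i}$; they differ only in whether the context $\hat{z}_t$ averages over all locations or commits to one sampled location $s_t$.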

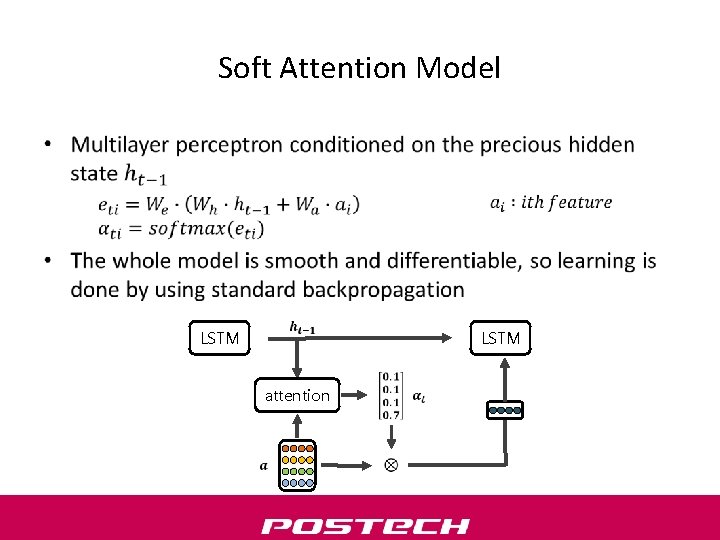

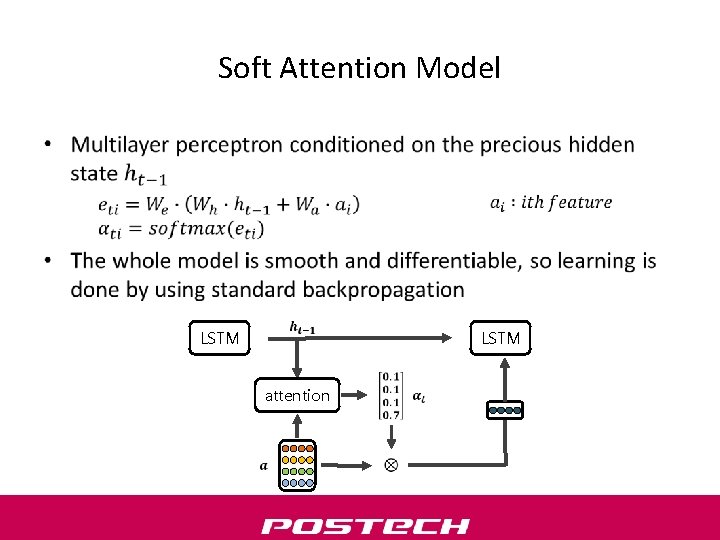

Soft Attention Model

(Diagram: LSTM with an attention module.)
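One soft-attention step can be sketched in plain Python as follows. The slides do not give the scoring function, so this assumes an additive (MLP) score $e_i = w^\top \tanh(W_a a_i + W_h h_{t-1})$; the parameter names `W_a`, `W_h` and `w` are hypothetical, and the toy values are for illustration only.

```python
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    """Multiply matrix m (rows x cols) by vector v (cols)."""
    return [sum(r * x for r, x in zip(row, v)) for row in m]


def softmax(xs: Sequence[float]) -> List[float]:
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(features: Matrix, h_prev: Sequence[float],
                   W_a: Matrix, W_h: Matrix,
                   w: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One soft-attention step (assumed additive scoring).

    Scores each feature a_i with e_i = w . tanh(W_a a_i + W_h h_prev), turns the
    scores into weights with a softmax, and returns (weights, context), where the
    context is the weighted sum of the features.
    """
    proj_h = matvec(W_h, h_prev)
    scores = []
    for a in features:
        hidden = [math.tanh(x + y) for x, y in zip(matvec(W_a, a), proj_h)]
        scores.append(sum(wi * hi for wi, hi in zip(w, hidden)))
    alpha = softmax(scores)
    dim = len(features[0])
    context = [sum(alpha[i] * features[i][d] for i in range(len(features)))
               for d in range(dim)]
    return alpha, context


# Toy usage: two 2-d features, a 1-d hidden state, and a 2-d attention space.
features = [[1.0, 0.0], [0.0, 1.0]]
alpha, context = soft_attention(features, h_prev=[0.5],
                                W_a=[[1.0, 0.0], [0.0, 1.0]],
                                W_h=[[1.0], [0.0]],
                                w=[1.0, -1.0])
print(alpha, context)
```

Because every operation is differentiable, the whole step can be trained end to end with ordinary backpropagation, which is what makes the soft variant deterministic and easy to train.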

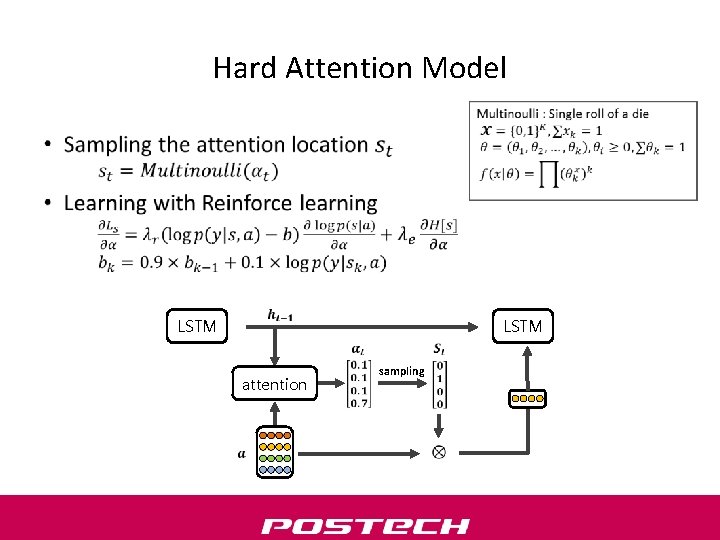

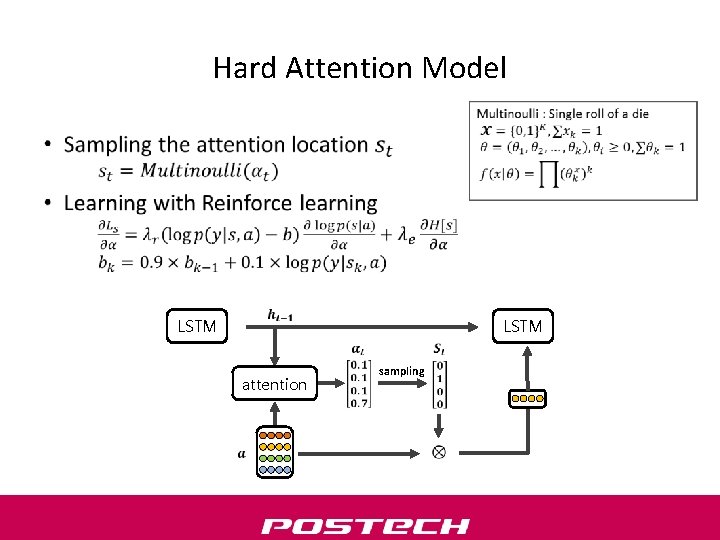

Hard Attention Model

(Diagram: LSTM with an attention module followed by sampling.)
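The sampling step of hard attention can be sketched as below: given attention weights (for example, the softmax output of a scoring function), one location is drawn at random and its feature becomes the context. The function name and toy values are illustrative assumptions.

```python
import random
from typing import List, Sequence, Tuple


def hard_attention(features: Sequence[Sequence[float]], alpha: Sequence[float],
                   rng: random.Random) -> Tuple[int, List[float]]:
    """Sample one location s ~ Categorical(alpha) and use feature a_s as the context."""
    s = rng.choices(range(len(features)), weights=alpha, k=1)[0]
    return s, list(features[s])


rng = random.Random(0)
features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
alpha = [0.1, 0.7, 0.2]  # e.g. the softmax output of the attention scores
s, context = hard_attention(features, alpha, rng)
print(s, context)  # the chosen index depends on the seed; context equals features[s]
```

The draw inside `rng.choices` has no gradient with respect to `alpha`, which is why hard attention needs a different training signal than soft attention.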

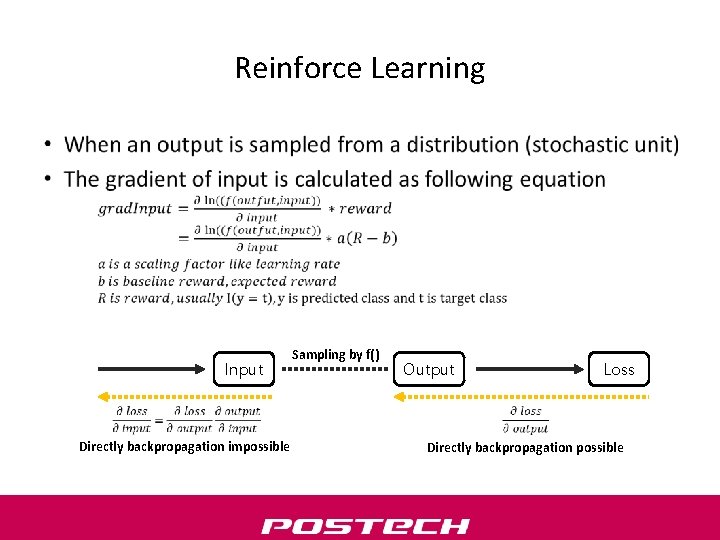

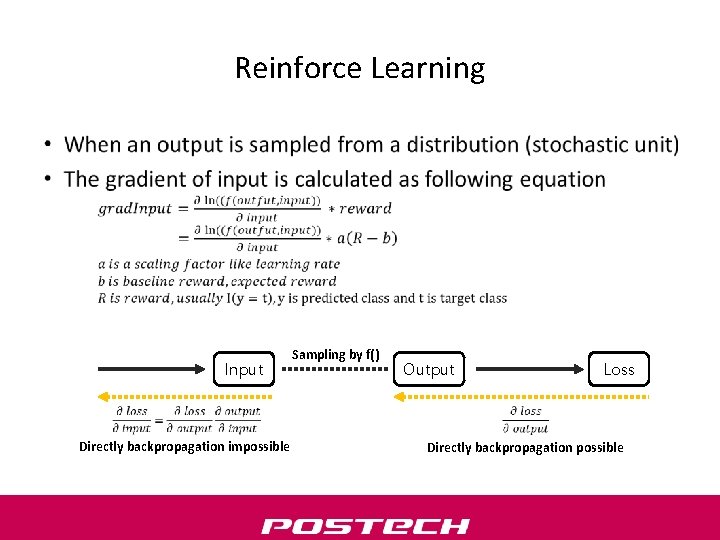

REINFORCE Learning
- Pipeline: input → sampling by f() → output → loss
- Backpropagating directly from the loss to the output is possible
- Backpropagating directly through the sampling step to the input is impossible
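REINFORCE sidesteps the non-differentiable sampling step by multiplying a reward by the gradient of the log-probability of the sampled choice. The sketch below applies this to a single categorical attention choice over logits; the reward function is a hypothetical stand-in (in Xu et al., roughly speaking, the log-likelihood of the caption plays this role), and the exact gradient is computed only to check that the Monte Carlo average agrees with it.

```python
import math
import random
from typing import Callable, List, Sequence


def softmax(xs: Sequence[float]) -> List[float]:
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_grad(logits: Sequence[float], reward_fn: Callable[[int], float],
                   rng: random.Random, baseline: float = 0.0) -> List[float]:
    """Single-sample REINFORCE estimate of d E[reward] / d logits for one categorical choice."""
    alpha = softmax(logits)
    s = rng.choices(range(len(alpha)), weights=alpha, k=1)[0]  # the non-differentiable step
    advantage = reward_fn(s) - baseline
    # d log alpha_s / d logit_j = 1[j == s] - alpha_j, scaled by the (baselined) reward
    return [advantage * ((1.0 if j == s else 0.0) - a) for j, a in enumerate(alpha)]


def exact_grad(logits: Sequence[float], rewards: Sequence[float]) -> List[float]:
    """Exact d E[reward] / d logits, available here only because the toy problem is tiny."""
    alpha = softmax(logits)
    expected = sum(a * r for a, r in zip(alpha, rewards))
    return [a * (r - expected) for a, r in zip(alpha, rewards)]


logits = [0.0, 0.0, 0.0]
rewards = [1.0, 0.0, 0.0]  # hypothetical reward: only location 0 yields the right word
rng = random.Random(0)
n = 20000
estimates = [reinforce_grad(logits, lambda s: rewards[s], rng, baseline=1 / 3)
             for _ in range(n)]
mean = [sum(g[j] for g in estimates) / n for j in range(3)]
print(mean, exact_grad(logits, rewards))  # the Monte Carlo mean approaches the exact gradient
```

Subtracting a baseline leaves the estimator unbiased but reduces its variance; here the baseline is set to the expected reward, 1/3.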

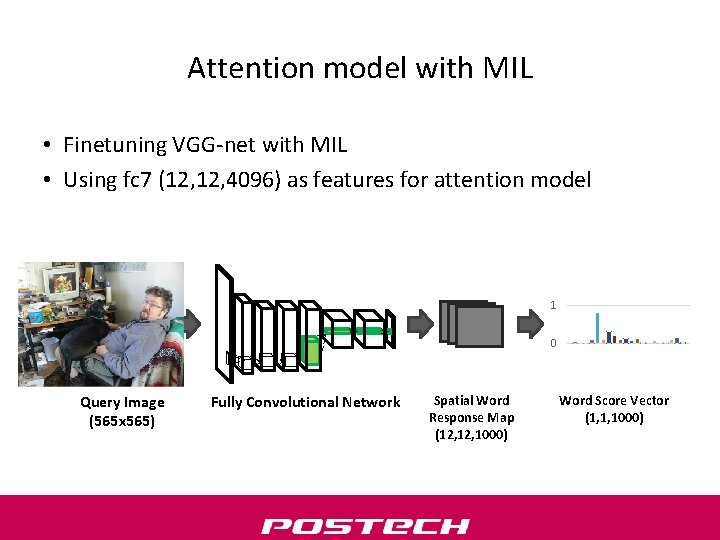

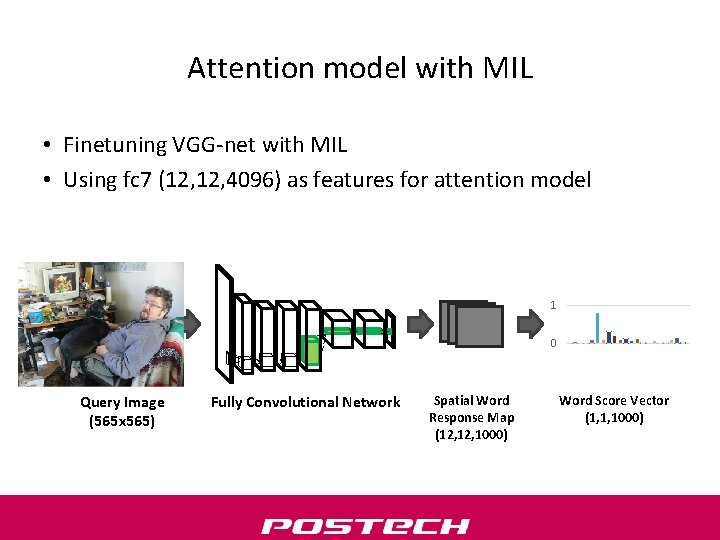

Attention Model with MIL
- Fine-tune VGG-net with MIL (multiple instance learning)
- Use fc7 (12, 4096) as the features for the attention model

(Diagram: query image (565 x 565) → fully convolutional network → spatial word response map (12, 1000) → word score vector (1, 1, 1000).)
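The slide does not say how the spatial word response map is pooled into a word score vector. The 565 x 565 input and spatial response map resemble the noisy-OR MIL setup of Fang et al. (CVPR 2015), so this sketch assumes noisy-OR pooling: a word is absent from the image only if every region misses it. The toy map is hypothetical and much smaller than the slide's.

```python
from typing import List, Sequence


def noisy_or(region_probs: Sequence[float]) -> float:
    """P(word in image) = 1 - prod_j (1 - p_j): the word is absent only if every region misses it."""
    p_absent = 1.0
    for p in region_probs:
        p_absent *= 1.0 - p
    return 1.0 - p_absent


def word_scores(response_map: Sequence[Sequence[float]]) -> List[float]:
    """Collapse a (regions, vocabulary) map of per-region word probabilities into one score per word."""
    vocab_size = len(response_map[0])
    return [noisy_or([row[w] for row in response_map]) for w in range(vocab_size)]


# Toy map: 3 regions x 2 words (the slide lists its map as (12, 1000)).
toy_map = [[0.5, 0.0],
           [0.5, 0.1],
           [0.0, 0.0]]
print(word_scores(toy_map))
```

Noisy-OR rewards a single confident region, which suits words that describe small objects; max pooling would be a simpler alternative.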

Experimental Results

| Features | BLEU-1 |
|---|---|
| Conv5 | 65.7 |
| Fc7-all | 67.5 |
| Fc7-topk-word | 68.5 |

- CIDEr: 81.9 and 85.9 (reported for two of the three models)
- Trained on the full training set plus 30,000 additional validation samples
- Tested on 5,000 validation samples
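BLEU-1, reported in both result tables, is clipped unigram precision multiplied by a brevity penalty. The sketch below is a sentence-level version for illustration; the reported scores are corpus-level and scaled by 100, and would normally come from the standard caption evaluation code rather than from a function like this one.

```python
import math
from collections import Counter
from typing import Sequence


def bleu1(candidate: Sequence[str], references: Sequence[Sequence[str]]) -> float:
    """Sentence-level BLEU-1: clipped unigram precision times the brevity penalty."""
    if not candidate:
        return 0.0
    # Clip each candidate word count by its maximum count in any single reference.
    max_ref_counts: Counter = Counter()
    for ref in references:
        for word, n in Counter(ref).items():
            max_ref_counts[word] = max(max_ref_counts[word], n)
    clipped = sum(min(n, max_ref_counts[w]) for w, n in Counter(candidate).items())
    c = len(candidate)
    precision = clipped / c
    # Effective reference length: the reference length closest to c (ties go to the shorter one).
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    bp = 1.0 if c > r else math.exp(1.0 - r / c)
    return bp * precision


print(bleu1("a man is standing".split(), ["a man is standing".split()]))  # 1.0
```

Clipping stops a degenerate caption such as "the the the the" from scoring well, and the brevity penalty stops very short captions from gaming precision.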

Current Research Direction
- Hierarchical attention model
  - First, select between the high-level and low-level layers (hard attention)
  - Second, attend to the regions related to the word (soft attention)

(Diagram: from <INIT> and <BOS>, at each step the RNN first selects the feature level, then attends to features within that level, generating man, is, standing, <EOS>.)
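The two-stage selection described above can be sketched as follows: a hard choice of feature level, then soft attention over the regions of the chosen level. The level names, the score dictionaries, and the idea that scores come precomputed from the RNN state are hypothetical simplifications; a real model would also project the levels to a common feature size.

```python
import math
import random
from typing import Dict, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: Sequence[float], vectors: Sequence[Sequence[float]]) -> List[float]:
    dim = len(vectors[0])
    return [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]


def hierarchical_attention(levels: Dict[str, List[List[float]]],
                           level_scores: Dict[str, float],
                           region_scores: Dict[str, List[float]],
                           rng: random.Random) -> Tuple[str, List[float]]:
    """Stage 1 (hard): sample one feature level. Stage 2 (soft): attend over that level's regions."""
    names = list(levels)
    level_probs = softmax([level_scores[n] for n in names])
    chosen = rng.choices(names, weights=level_probs, k=1)[0]
    alpha = softmax(region_scores[chosen])
    return chosen, weighted_sum(alpha, levels[chosen])


levels = {"low": [[1.0, 0.0], [0.0, 1.0]],   # e.g. several conv-level region features
          "high": [[2.0, 2.0]]}               # e.g. a single fc-level feature
level_scores = {"low": 3.0, "high": -3.0}     # hypothetical scores from the RNN state
region_scores = {"low": [0.0, 0.0], "high": [0.0]}
print(hierarchical_attention(levels, level_scores, region_scores, random.Random(0)))
```

Replacing the sampled level with a softmax-weighted mix of both levels' contexts would give a soft-soft variant instead.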

Experimental Results

| Model | BLEU-1 |
|---|---|
| Conv5 | 61.9 |
| Fc7-all | 65.4 |
| Fc7-sample | 64.6 |
| Hierarchical | 64.7 |

- Trained on 5,000 validation samples
- Tested on a different set of 5,000 validation samples
- "Hierarchical" here is a soft-soft attention model

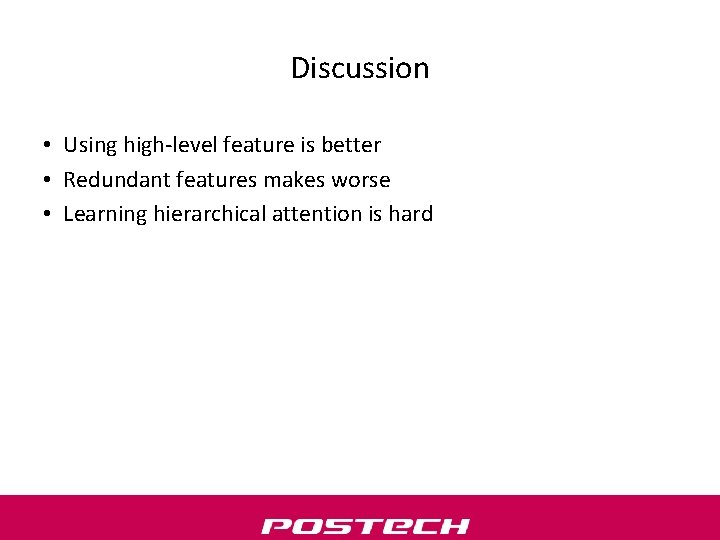

Discussion
- Using high-level features works better
- Redundant features make the results worse
- Learning hierarchical attention is hard

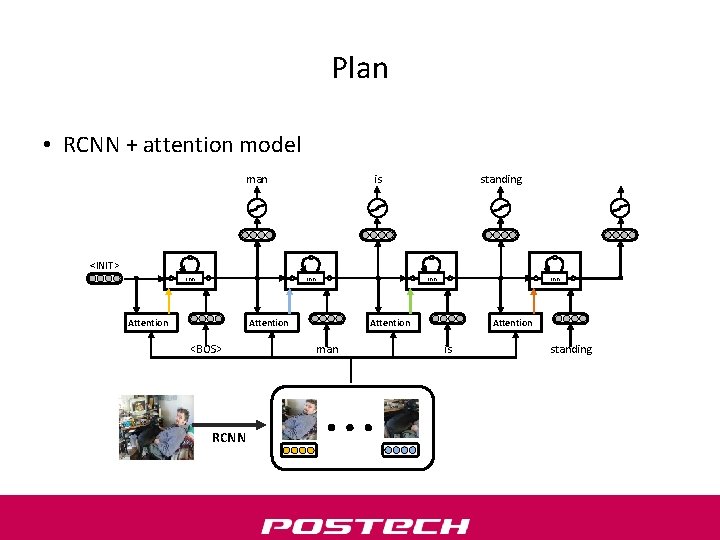

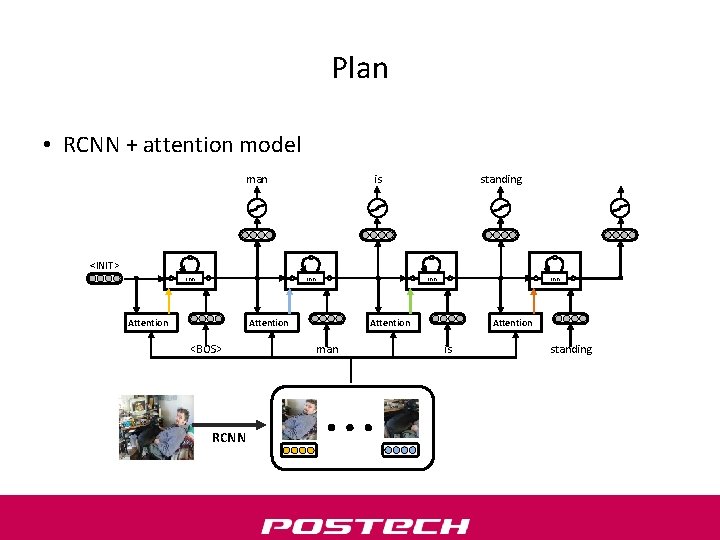

Plan
- RCNN + attention model

(Diagram: RCNN features feed the attention module of the RNN, which generates man, is, standing from <INIT> and <BOS>.)

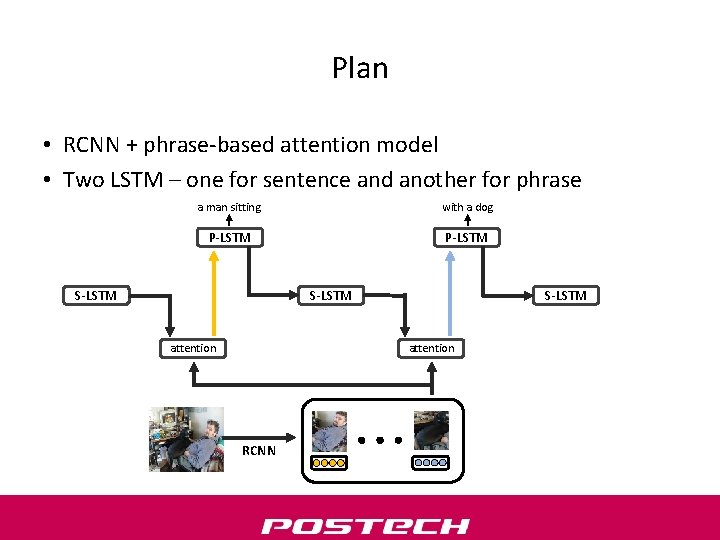

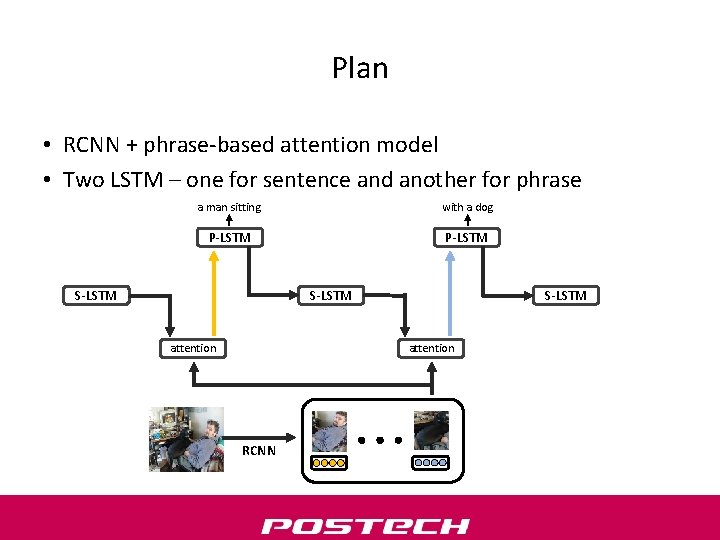

Plan
- RCNN + phrase-based attention model
- Two LSTMs: one for the sentence (S-LSTM) and another for phrases (P-LSTM)

(Diagram: RCNN, attention, P-LSTM and S-LSTM generating "a man sitting with a dog".)

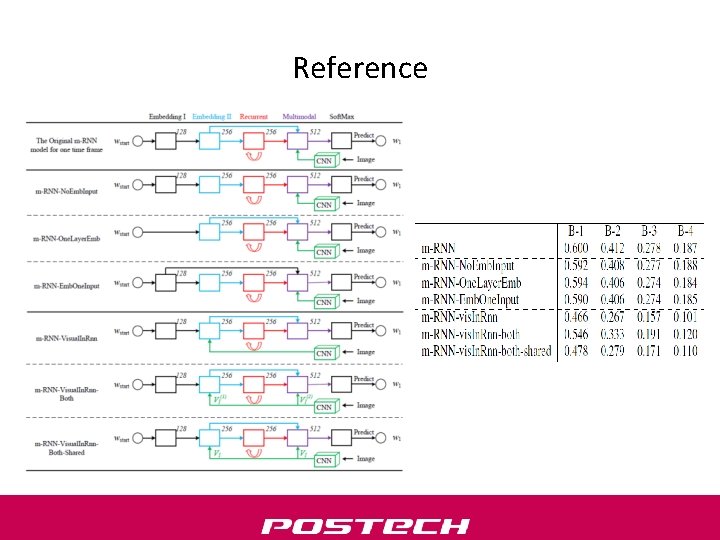

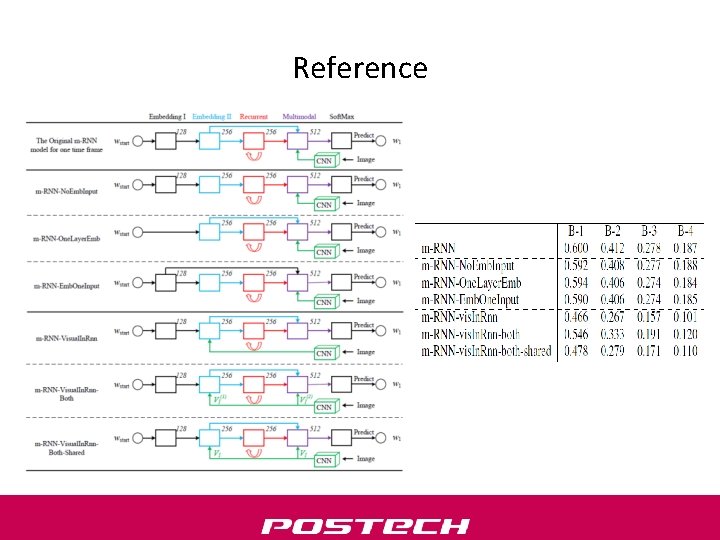

Reference
