Caption Description (2015. 6. 30, Mun Jonghwan)

- Slides: 26

Caption Description 2015. 6. 30 Mun Jonghwan

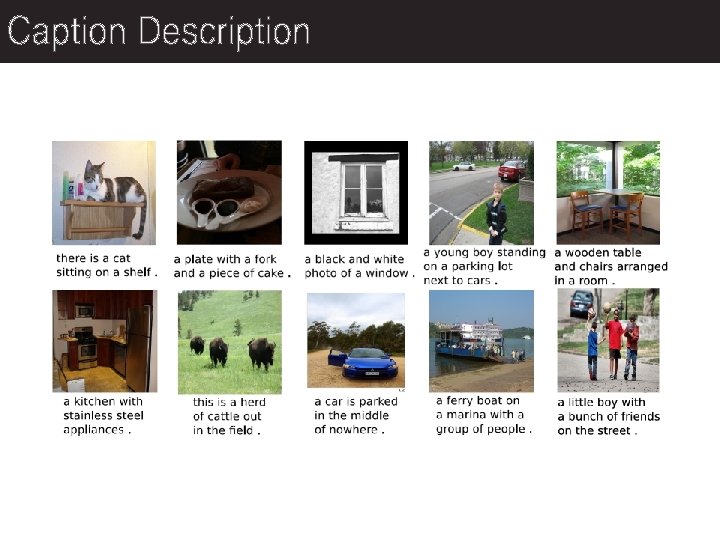

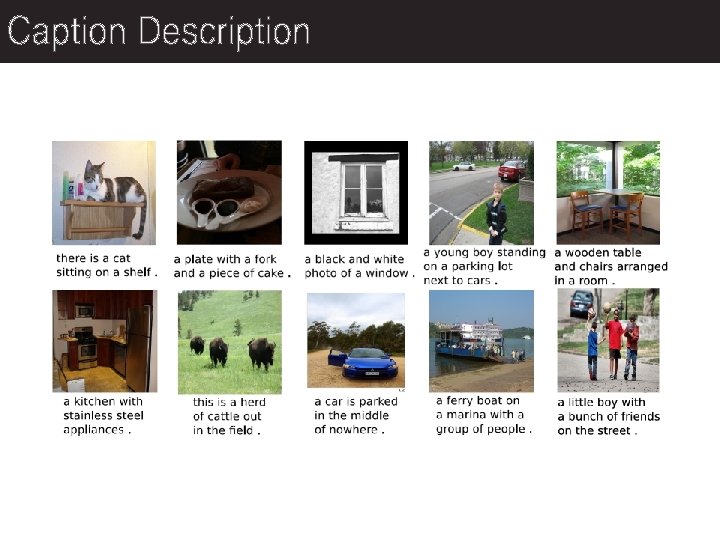

Caption Description

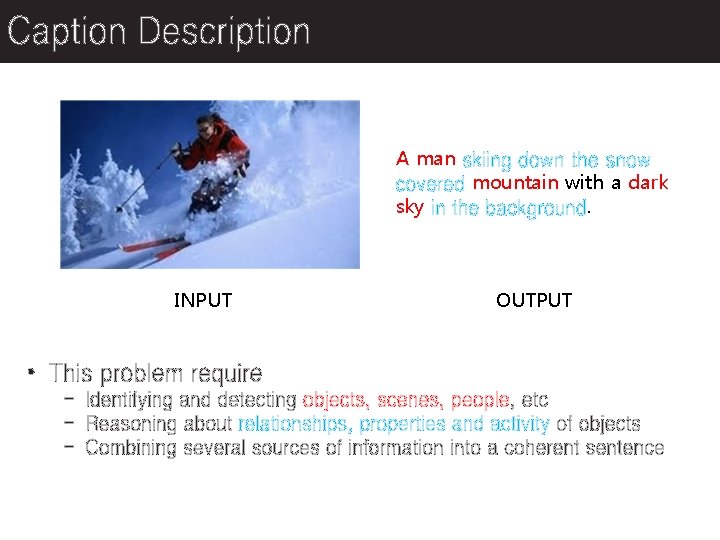

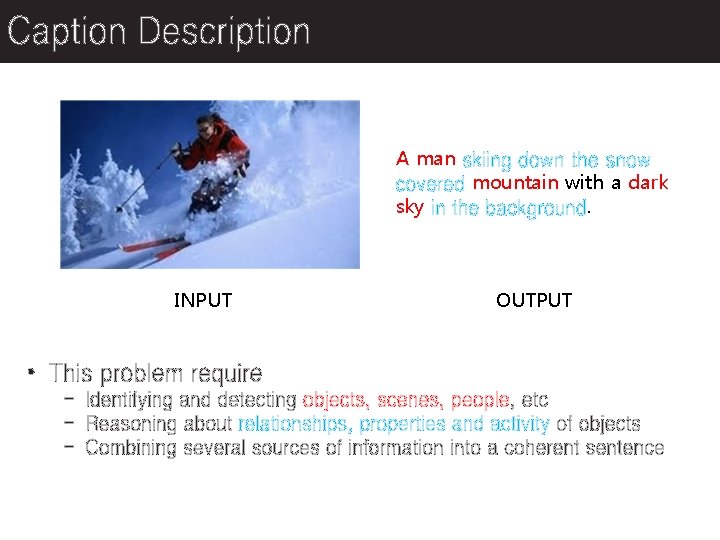

Caption Description

- INPUT: an image
- OUTPUT: "A man skiing down the snow covered mountain with a dark sky in the background."
- This problem requires
  - Identifying and detecting objects, scenes, people, etc.
  - Reasoning about the relationships, properties, and activities of objects
  - Combining several sources of information into a coherent sentence

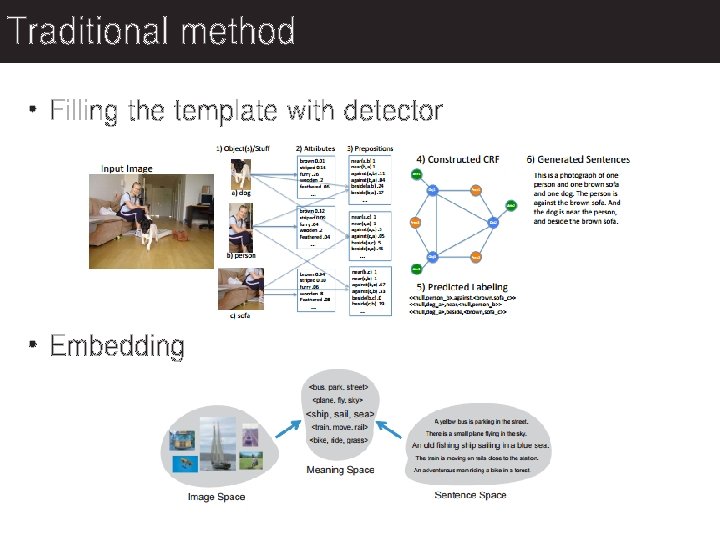

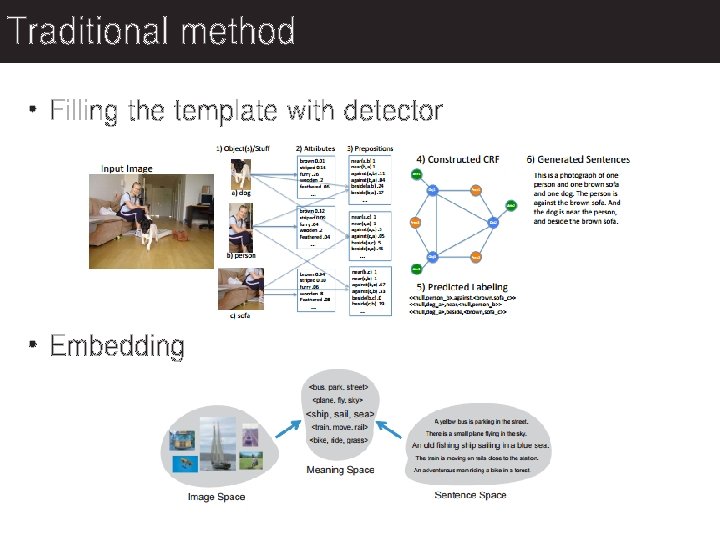

Traditional method

- Filling a template with detector outputs
- Embedding

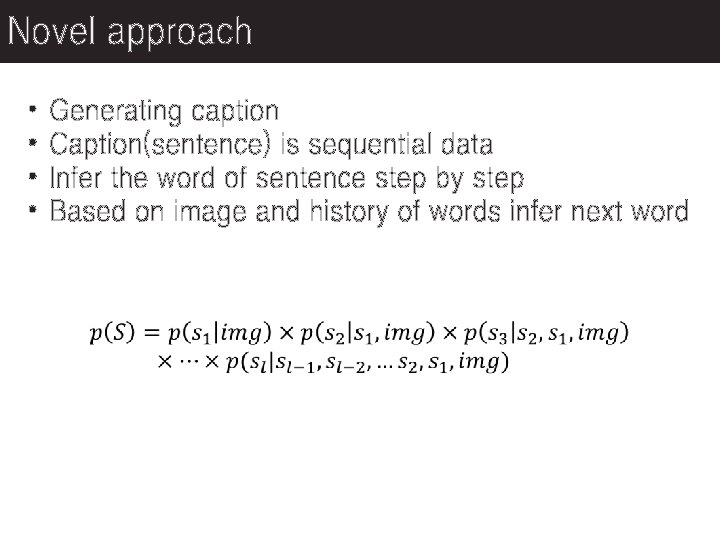

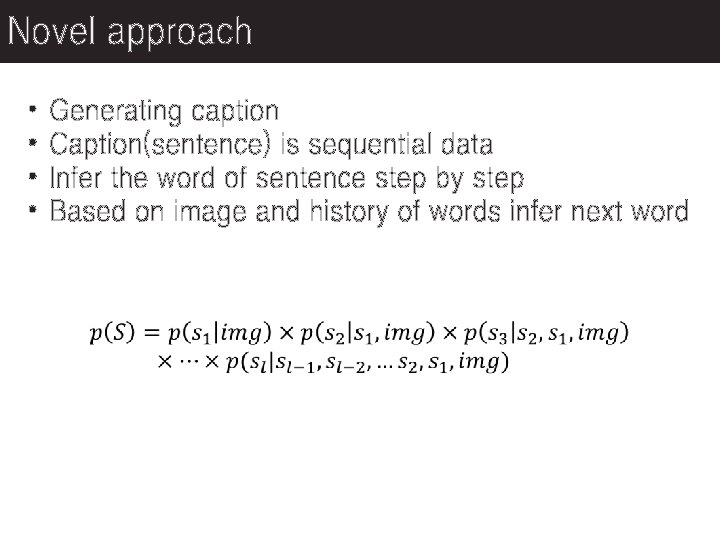

Novel approach

- Generating the caption
  - A caption (sentence) is sequential data
  - Infer the words of the sentence step by step
  - Infer the next word based on the image and the history of words
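The step-by-step inference described on this slide can be written as a chain-rule factorization of the caption probability. The notation is an assumption made here for illustration: $I$ is the image, $S = (S_0, \ldots, S_N)$ is the word sequence, and each factor is the probability of the next word given the image and the words so far.

```latex
\log p(S \mid I) = \sum_{t=0}^{N} \log p\left(S_t \mid I, S_0, \ldots, S_{t-1}\right)
```

Training maximizes this sum over image-caption pairs; generation evaluates one factor at a time to pick the next word.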

- Show and Tell: A Neural Image Caption Generator (CVPR 2015)
- Show, Attend and Tell: Neural Image Caption Generation with Visual Attention (ICML 2015)

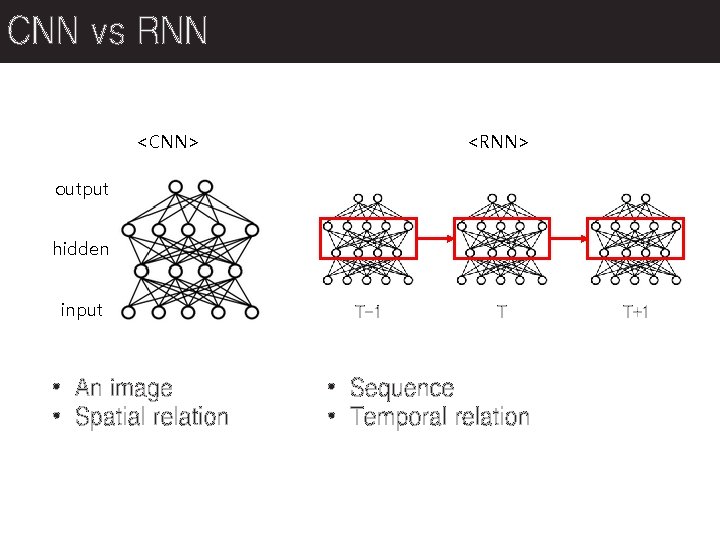

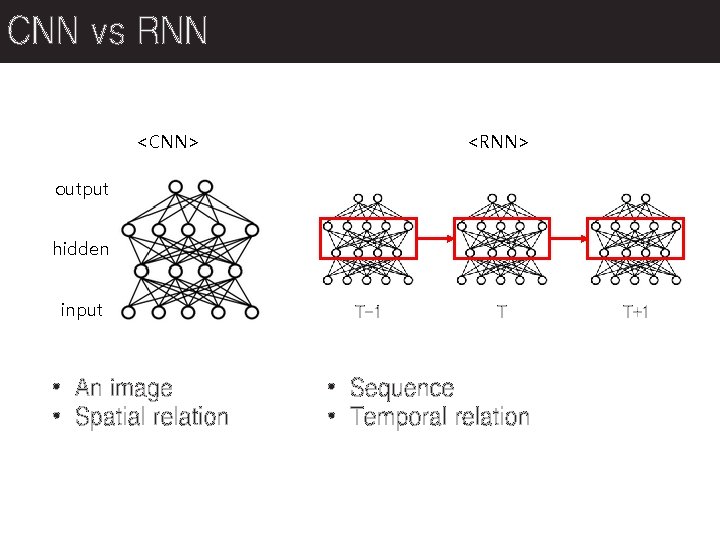

CNN vs RNN

- CNN: takes an image; models spatial relations
- RNN: takes a sequence; models temporal relations across time steps T-1, T, T+1 (input, hidden, and output layers at each step)

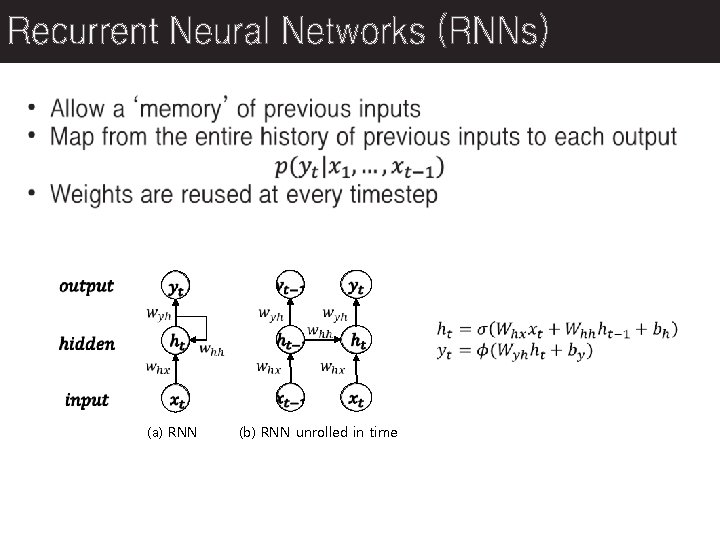

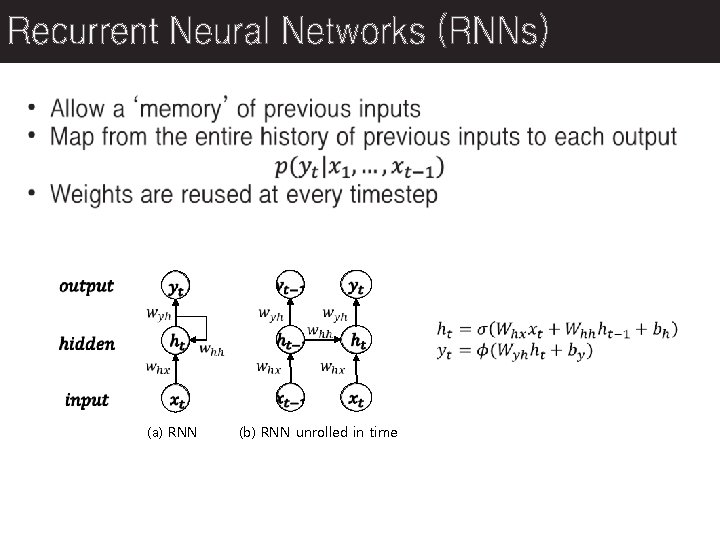

Recurrent Neural Networks (RNNs)

- (a) RNN
- (b) RNN unrolled in time
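As a rough illustration of what "unrolled in time" means, the sketch below applies the same weights at every time step of a plain tanh RNN. The function name `rnn_forward`, the weight names, and the toy sizes are assumptions made for this example, not something taken from the slides.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, W_hy, h0):
    """Unroll a vanilla RNN over a sequence xs of shape (T, input_dim)."""
    h = h0
    hs, ys = [], []
    for x in xs:                              # one loop iteration per time step
        h = np.tanh(W_xh @ x + W_hh @ h)      # hidden state mixes new input and history
        hs.append(h)
        ys.append(W_hy @ h)                   # output is read from the hidden state
    return np.stack(hs), np.stack(ys)

# Toy sizes and random weights, chosen only for illustration.
rng = np.random.default_rng(0)
T, input_dim, hidden_dim, output_dim = 5, 3, 4, 2
xs = rng.normal(size=(T, input_dim))
W_xh = rng.normal(size=(hidden_dim, input_dim)) * 0.1
W_hh = rng.normal(size=(hidden_dim, hidden_dim)) * 0.1
W_hy = rng.normal(size=(output_dim, hidden_dim)) * 0.1
hs, ys = rnn_forward(xs, W_xh, W_hh, W_hy, np.zeros(hidden_dim))
```

The loop body is panel (a); running it `T` times with shared weights is panel (b).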

Vanishing gradient problem

- The gradient decays over time as new inputs overwrite the activation of the hidden layer
- The network forgets the first inputs
- It is difficult to learn long-term dependencies
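The decay can be seen in a toy scalar RNN, where the gradient of the state at step t with respect to the initial state is a product of one factor per time step. This is a minimal sketch under assumed values (a recurrent weight of 0.5 and arbitrary inputs); `gradient_through_time` is a hypothetical helper name.

```python
import numpy as np

def gradient_through_time(w, xs, h0=0.0):
    """Return |d h_t / d h_0| for t = 1..T in the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad, mags = h0, 1.0, []
    for x in xs:
        h = np.tanh(w * h + x)
        grad *= w * (1.0 - h ** 2)   # chain rule: one multiplicative factor per step
        mags.append(abs(grad))
    return mags

xs = np.linspace(-1.0, 1.0, 30)      # arbitrary toy inputs
mags = gradient_through_time(w=0.5, xs=xs)
```

Because every factor has magnitude at most 0.5 here, the influence of the first input shrinks at least geometrically with the number of steps.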

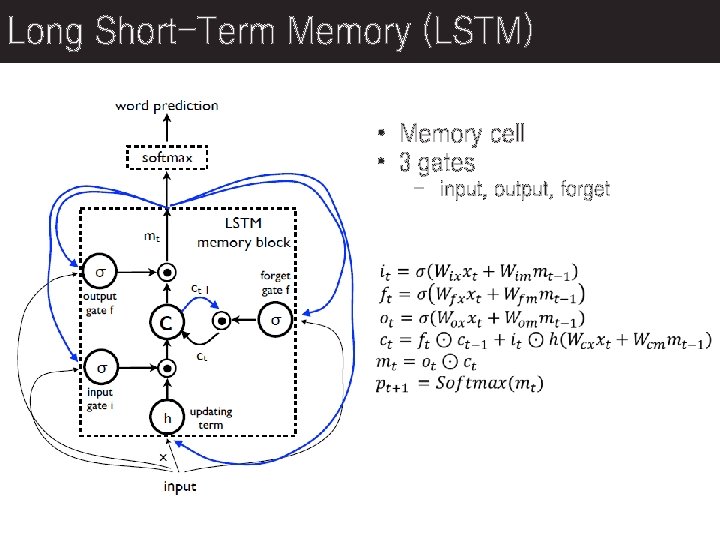

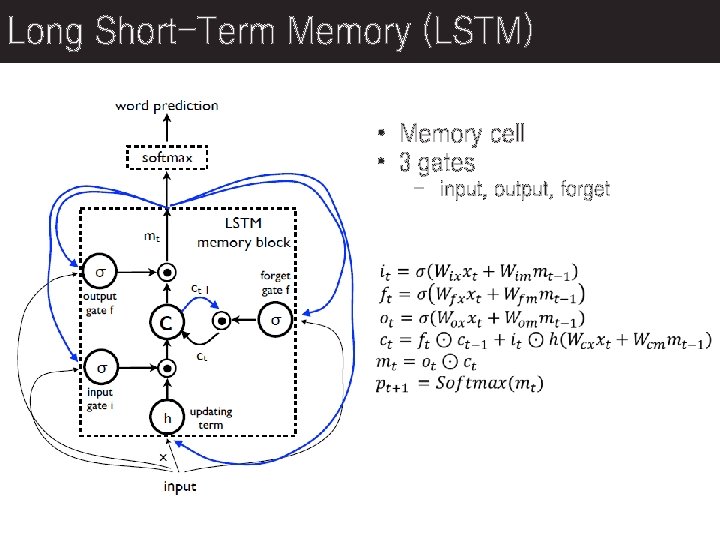

Long Short-Term Memory (LSTM)

- Memory cell
- 3 gates: input, output, forget
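A minimal sketch of one LSTM step, showing how the memory cell and the three gates interact. It uses the common formulation without peephole connections; packing all four transforms into a single matrix `W` and bias `b` is an assumption made for brevity, as are the function names.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, X+H) and b has shape (4*H,)."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:H])            # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])    # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])    # candidate content
    c = f * c_prev + i * g         # memory cell update
    h = o * np.tanh(c)             # hidden state passed to the next step
    return h, c

# Toy check: input gate closed, forget gate open, so the cell keeps its content.
X, H = 2, 3
W = np.zeros((4 * H, X + H))
b = np.concatenate([np.full(H, -20.0), np.full(H, 20.0), np.zeros(H), np.zeros(H)])
c_prev = np.array([1.0, -2.0, 0.5])
h, c = lstm_step(np.ones(X), np.zeros(H), c_prev, W, b)
```

With the forget gate open and the input gate closed, `c` stays essentially equal to `c_prev`, which is the mechanism that lets information survive across many steps.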

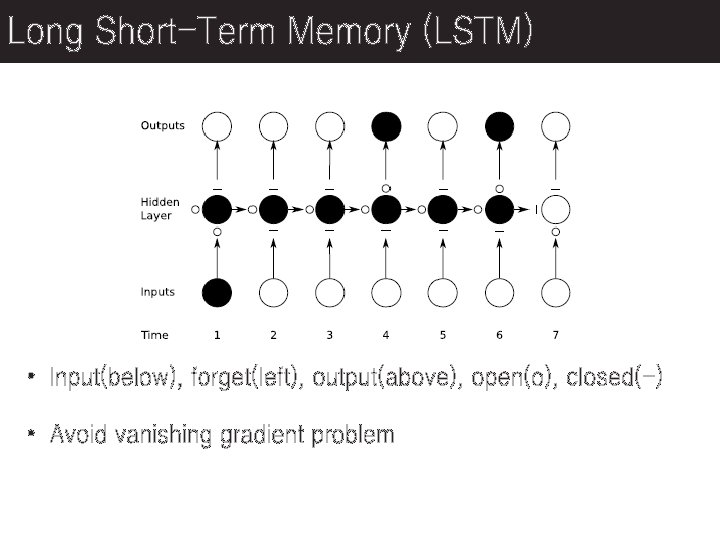

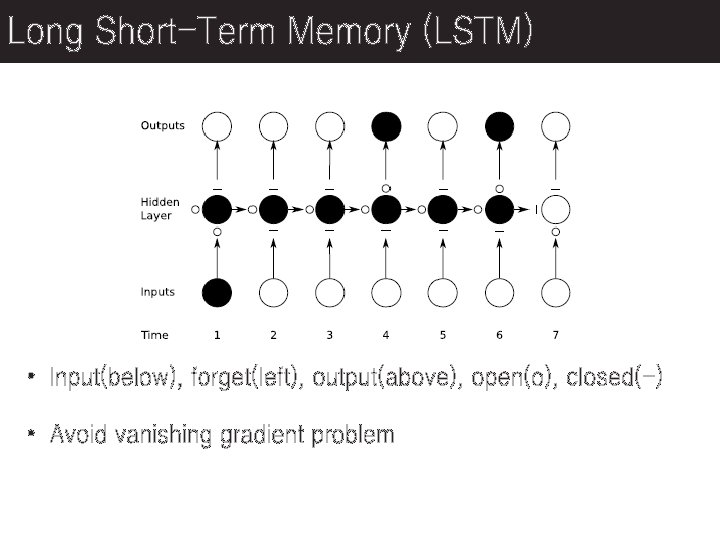

Long Short-Term Memory (LSTM)

- Figure legend: input gate (below), forget gate (left), output gate (above); open (o), closed (-)
- Helps avoid the vanishing gradient problem

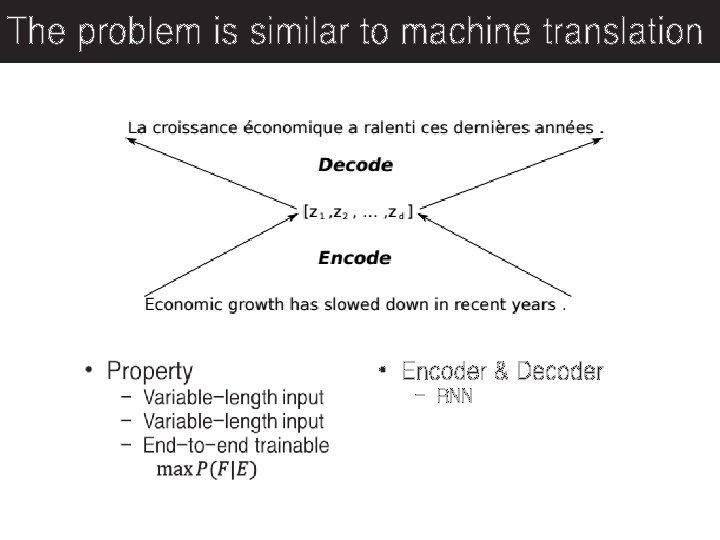

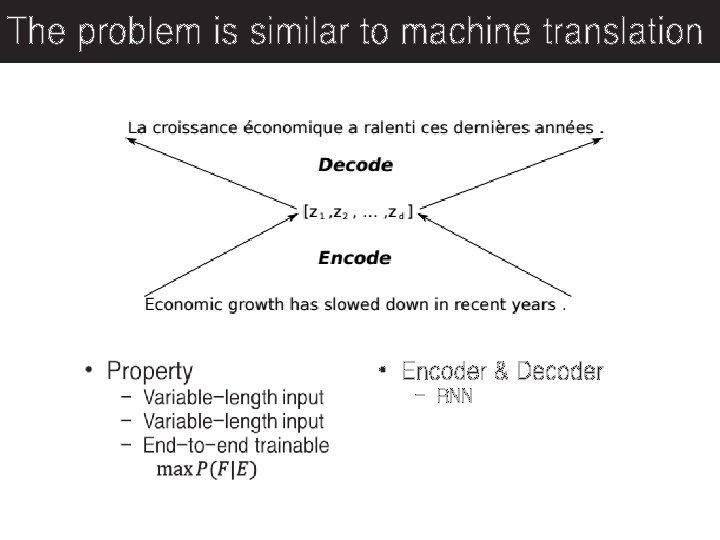

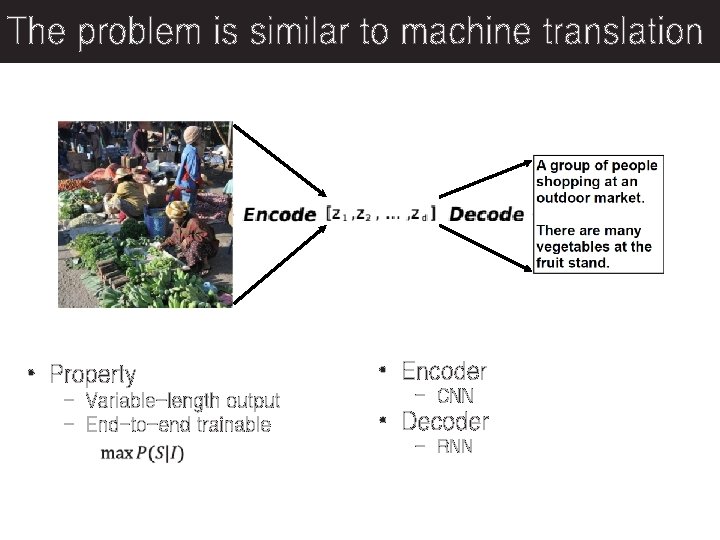

The problem is similar to machine translation

- Encoder & Decoder: RNN

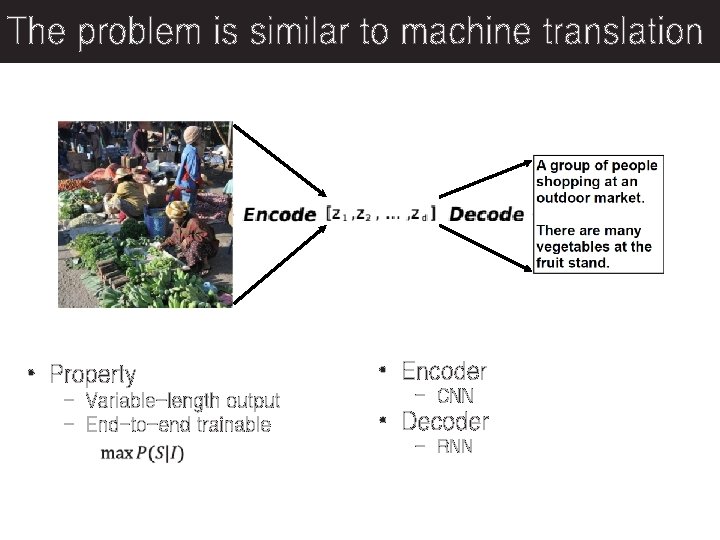

The problem is similar to machine translation

- Property
  - Variable-length output
  - End-to-end trainable
- Encoder: CNN
- Decoder: RNN

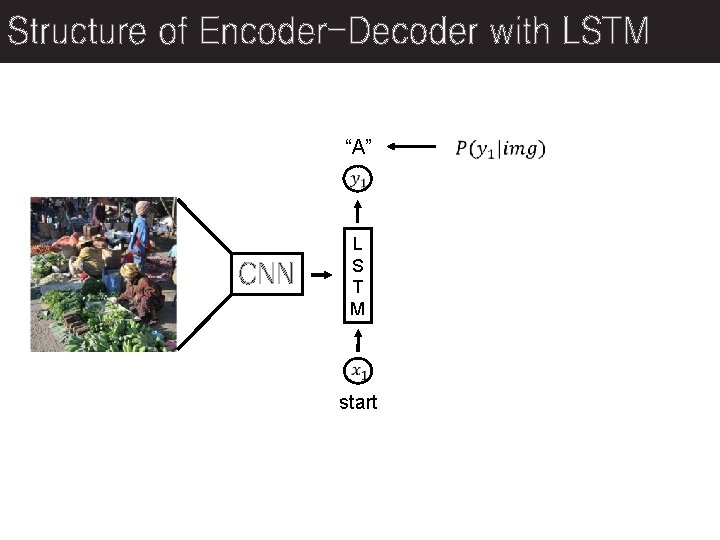

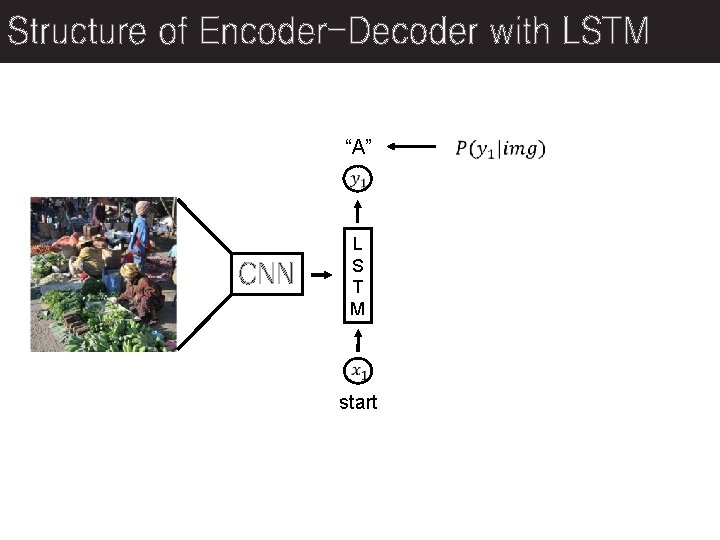

Structure of Encoder-Decoder with LSTM

- CNN, start → LSTM → “A”

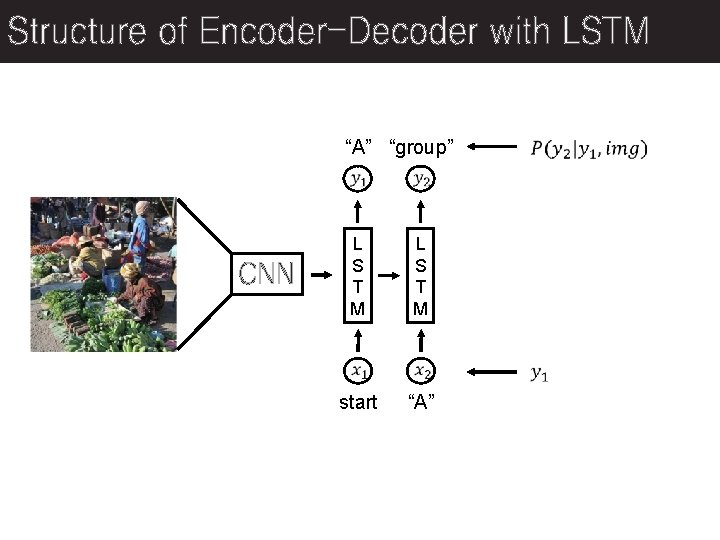

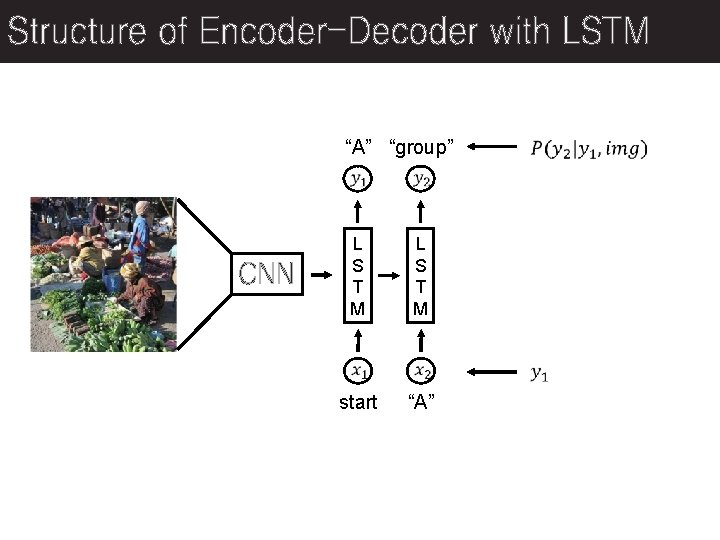

Structure of Encoder-Decoder with LSTM

- CNN, start → LSTM → “A”
- “A” → LSTM → “group”

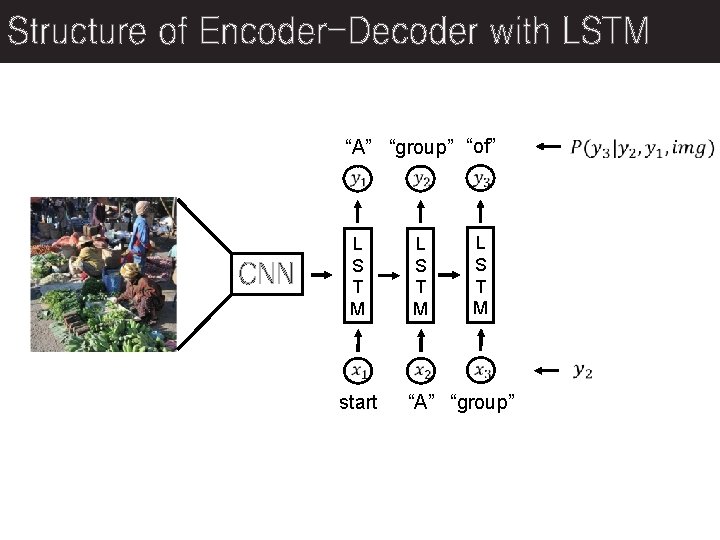

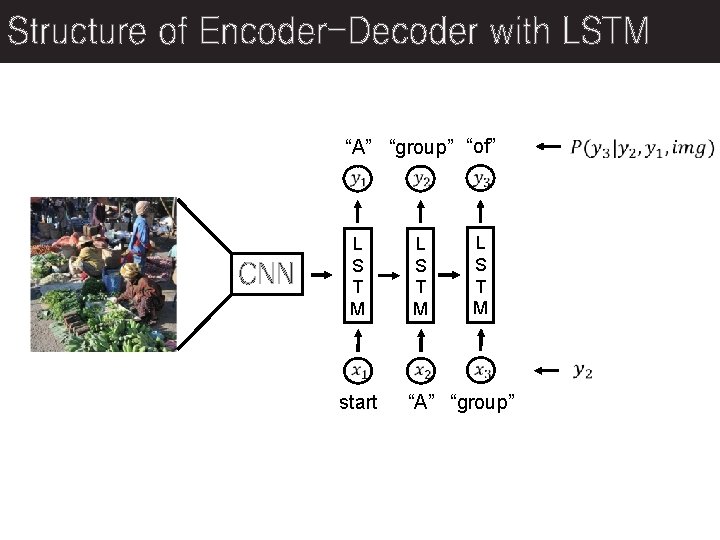

Structure of Encoder-Decoder with LSTM

- CNN, start → LSTM → “A”
- “A” → LSTM → “group”
- “group” → LSTM → “of”

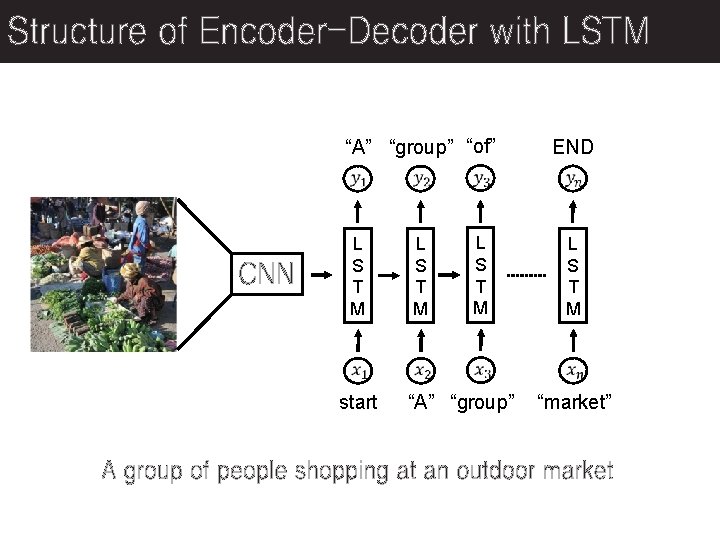

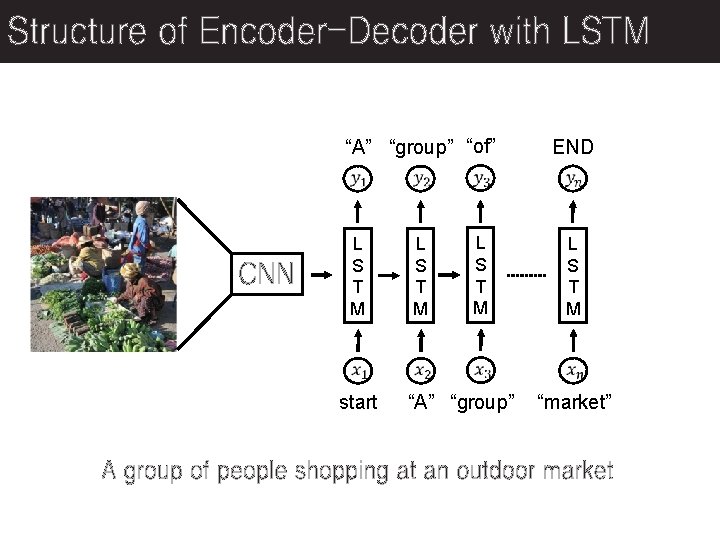

Structure of Encoder-Decoder with LSTM

- CNN, start → LSTM → “A”
- “A” → LSTM → “group”
- “group” → LSTM → “of”
- ...
- “market” → LSTM → END

Result: A group of people shopping at an outdoor market
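The word-by-word generation on these slides can be sketched as a greedy decoding loop: each predicted word is fed back as the next input until the end token appears. Everything here is illustrative. `step_fn` is a hypothetical stand-in for one LSTM step plus a softmax layer, `toy_step` is a hard-coded rule rather than a trained model, and initializing the state from the image feature is an assumption.

```python
import numpy as np

def greedy_decode(image_feature, step_fn, start_id, end_id, max_len=20):
    """Generate token ids one at a time until end_id is predicted or max_len is reached.

    step_fn(state, token_id) -> (new_state, scores over the vocabulary).
    """
    state = image_feature               # assumption: CNN feature initializes the decoder
    token = start_id
    caption = []
    for _ in range(max_len):
        state, scores = step_fn(state, token)
        token = int(np.argmax(scores))  # greedy choice: most likely next word
        if token == end_id:
            break
        caption.append(token)
    return caption

VOCAB = ["<start>", "A", "group", "of", "people", "<end>"]

def toy_step(state, token_id):
    """Toy rule: always predict the next entry in VOCAB (not a trained model)."""
    scores = np.zeros(len(VOCAB))
    scores[min(token_id + 1, len(VOCAB) - 1)] = 1.0
    return state, scores

ids = greedy_decode(np.zeros(4), toy_step, start_id=0, end_id=5)
words = [VOCAB[i] for i in ids]
```

Because the loop stops on the end token rather than after a fixed count, the output length varies with the input, which is the variable-length property the encoder-decoder structure provides.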

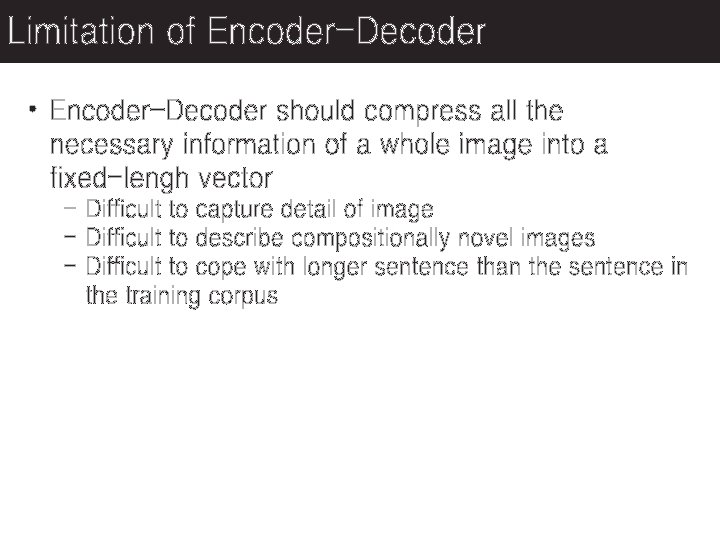

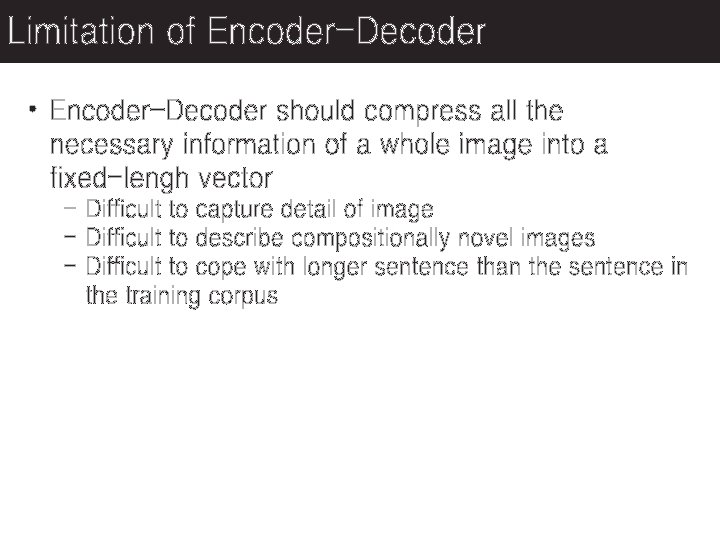

Limitation of Encoder-Decoder

- The Encoder-Decoder must compress all the necessary information of a whole image into a fixed-length vector
  - Difficult to capture the details of an image
  - Difficult to describe compositionally novel images
  - Difficult to cope with sentences longer than those in the training corpus

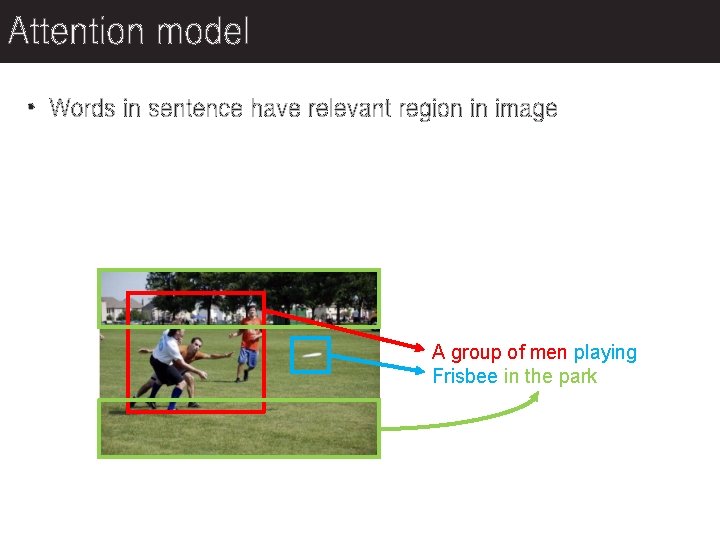

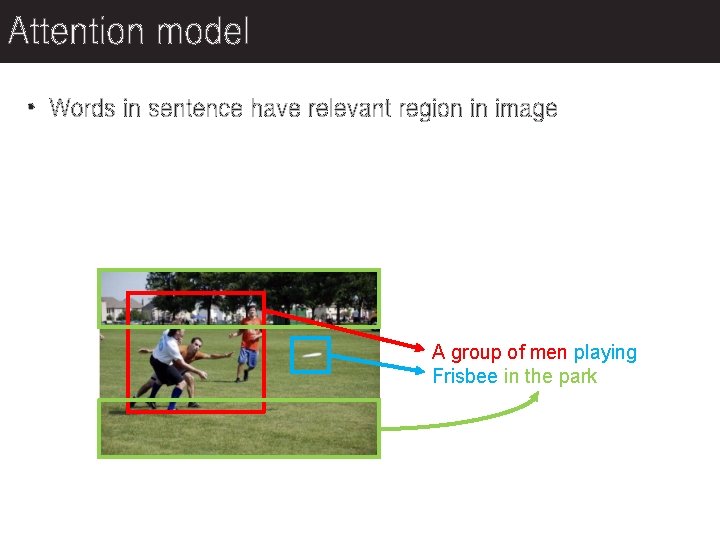

Attention model

- Words in the sentence have relevant regions in the image

Example: A group of men playing Frisbee in the park

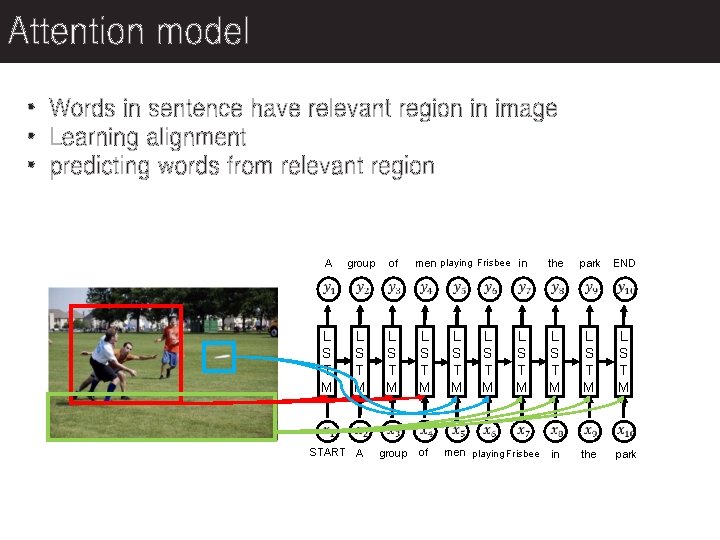

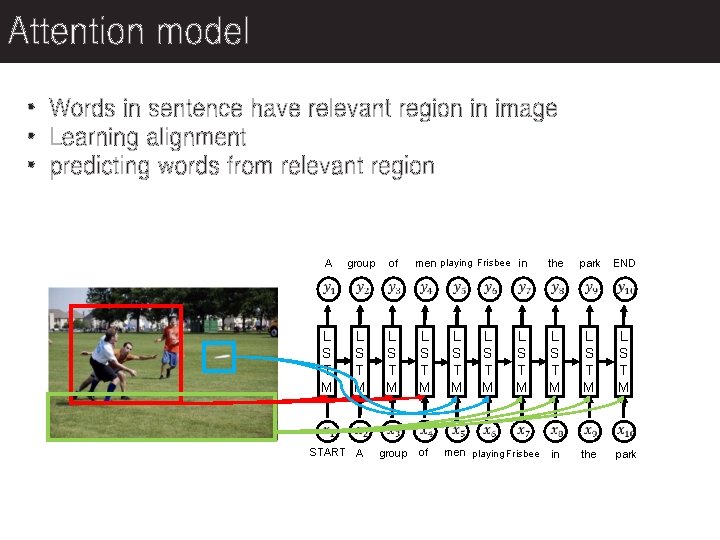

Attention model

- Words in the sentence have relevant regions in the image
- Learning the alignment
- Predicting words from the relevant region

Example: A group of men playing Frisbee in the park (generated by successive LSTM steps from START to END)
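One common way to realize "predicting words from the relevant region" is soft attention: score each image region against the current decoder state, normalize the scores with a softmax, and take the weighted average of the region features. The sketch below assumes an additive (tanh) scoring function; the parameter names `W_a`, `W_h`, `v` and all sizes are assumptions for illustration.

```python
import numpy as np

def soft_attention(regions, h, W_a, W_h, v):
    """regions: (L, D) features of L image regions; h: (H,) decoder hidden state."""
    scores = np.tanh(regions @ W_a + h @ W_h) @ v    # (L,) relevance of each region
    scores = scores - scores.max()                   # shift for numerical stability
    alpha = np.exp(scores) / np.exp(scores).sum()    # attention weights, sum to 1
    context = alpha @ regions                        # (D,) weighted average of regions
    return alpha, context

# Toy sizes and random parameters, chosen only for illustration.
rng = np.random.default_rng(0)
L, D, H, K = 6, 8, 5, 4
regions = rng.normal(size=(L, D))
h = rng.normal(size=H)
W_a = rng.normal(size=(D, K))
W_h = rng.normal(size=(H, K))
v = rng.normal(size=K)
alpha, context = soft_attention(regions, h, W_a, W_h, v)
```

The weights `alpha` play the role of the learned alignment between the word being generated and the image regions; `context` replaces the single fixed-length image vector as input to the next LSTM step.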

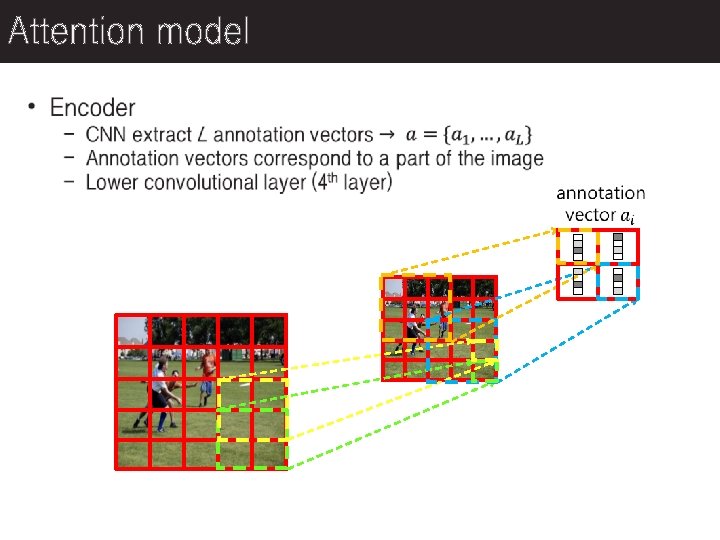

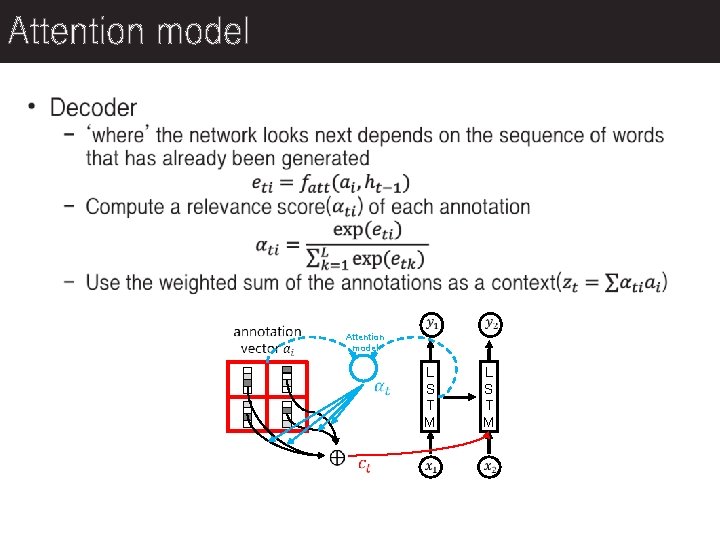

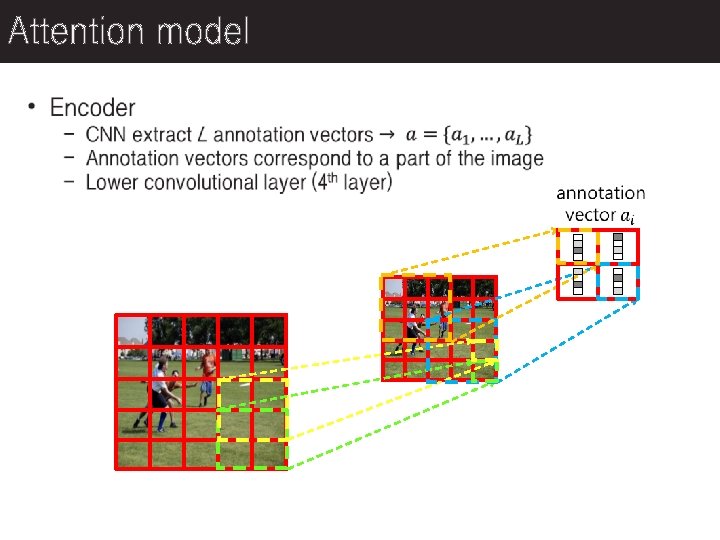

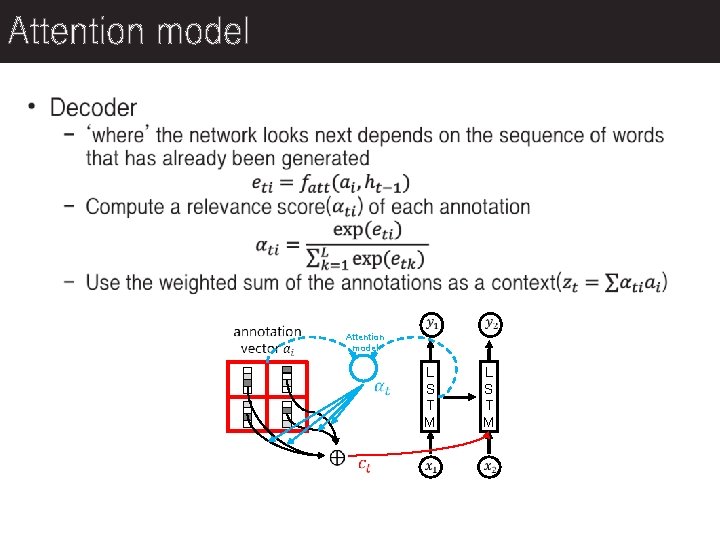

Attention model

Attention model (LSTM)

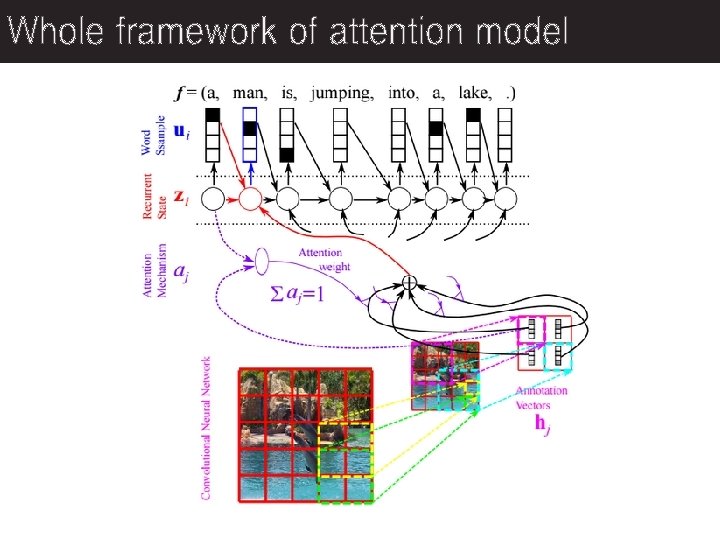

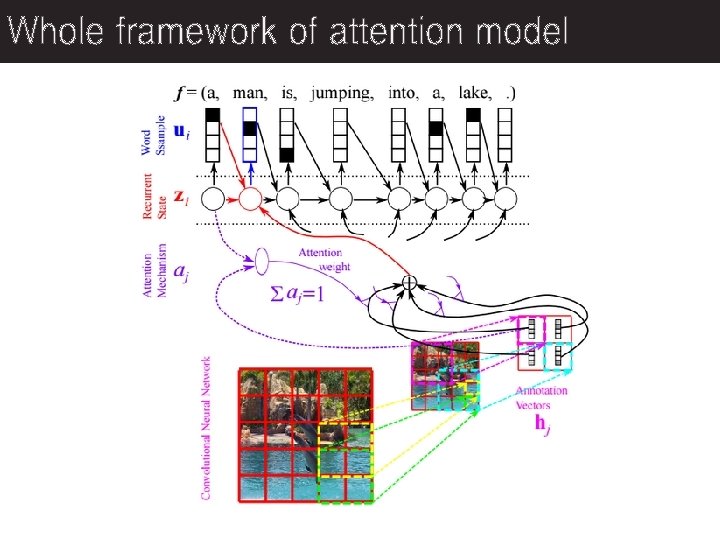

Whole framework of attention model

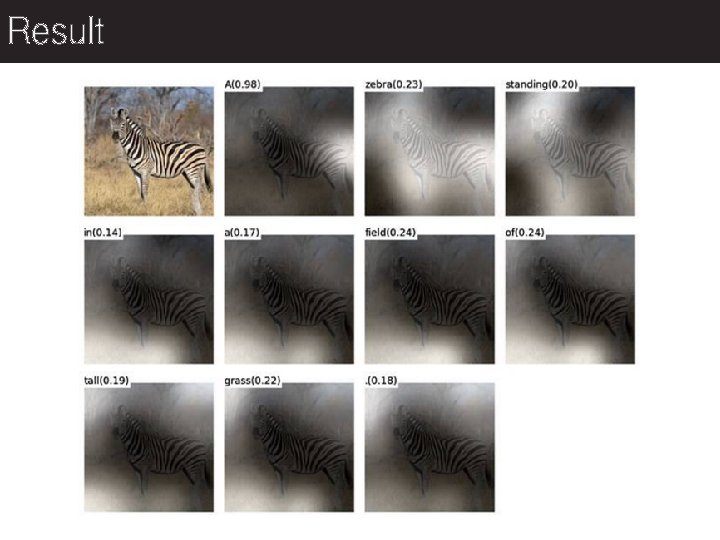

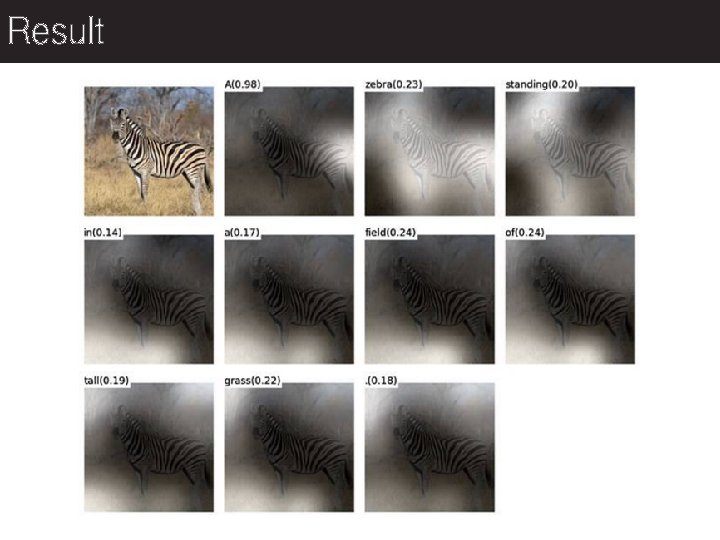

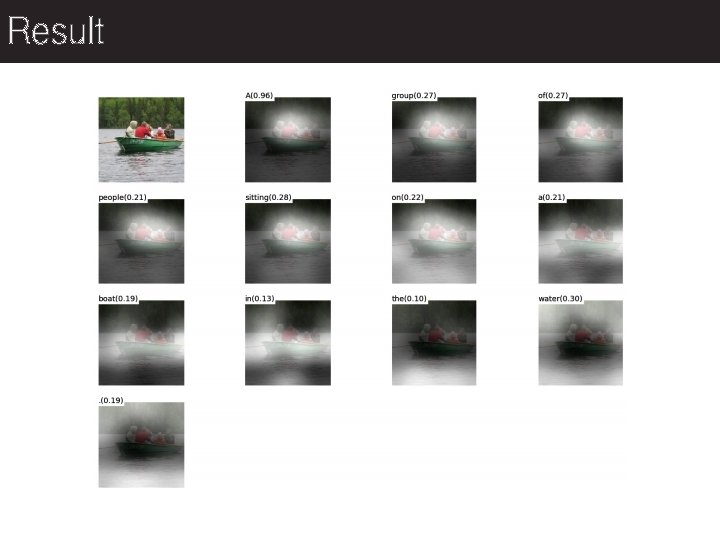

Result

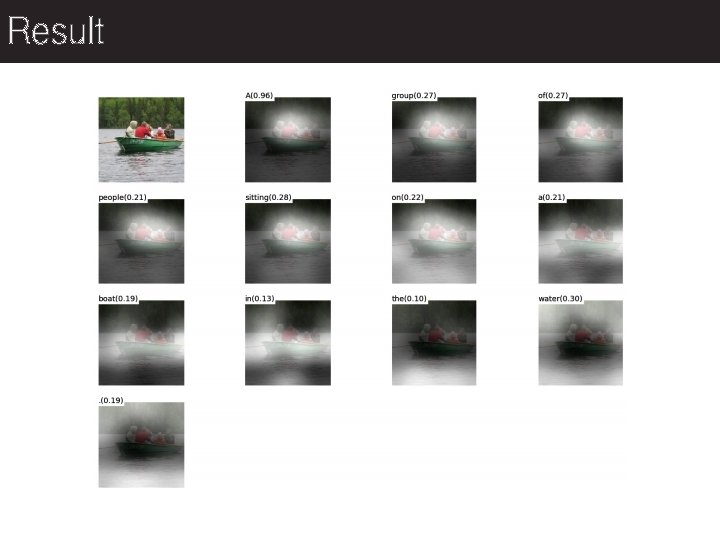

Result

Thank you