Automatic Performance Tuning Jeremy Johnson Dept of Computer

- Slides: 25

Automatic Performance Tuning Jeremy Johnson Dept. of Computer Science Drexel University

Outline • Scientific Computation Kernels – Matrix Multiplication – Fast Fourier Transform (FFT) – Integer Multiplication • Automated Performance Tuning (IEEE Proc. Vol. 93, No. 2, Feb. 2005) – – ATLAS FFTW SPIRAL GMP

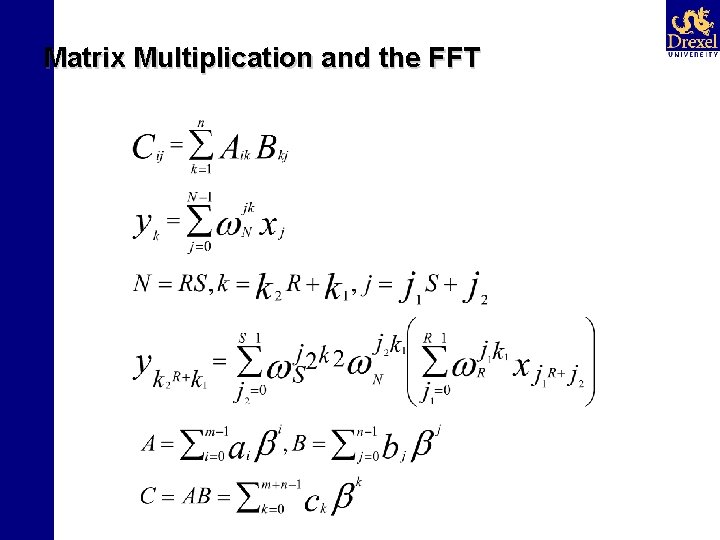

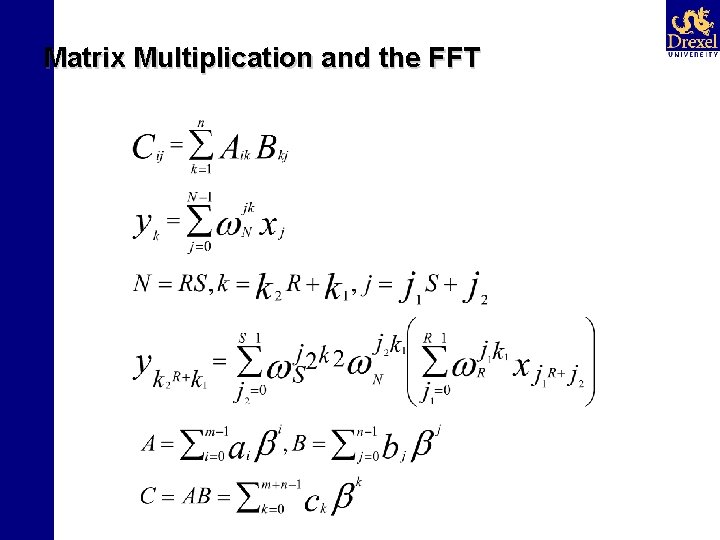

Matrix Multiplication and the FFT

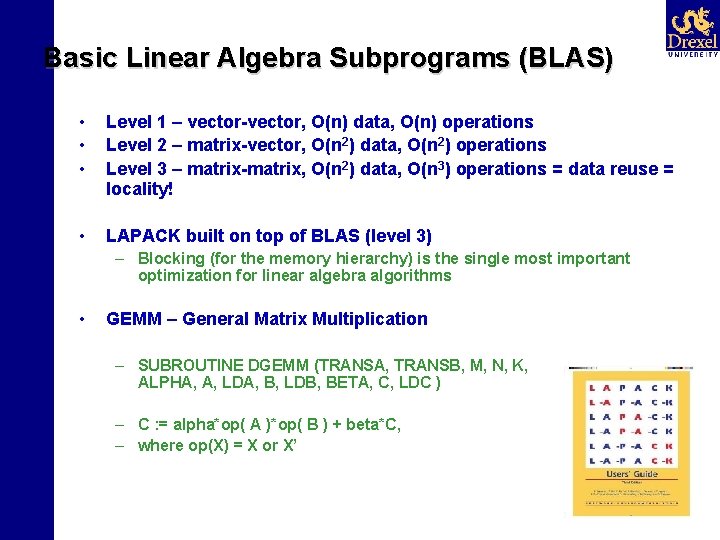

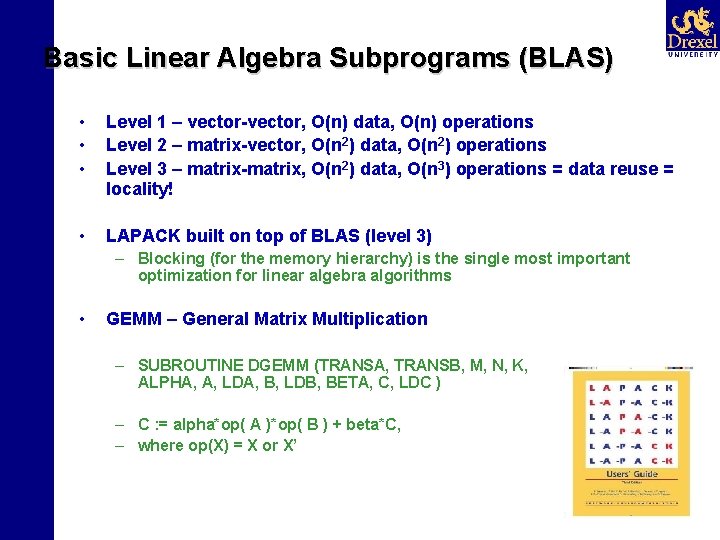

Basic Linear Algebra Subprograms (BLAS) • • • Level 1 – vector-vector, O(n) data, O(n) operations Level 2 – matrix-vector, O(n 2) data, O(n 2) operations Level 3 – matrix-matrix, O(n 2) data, O(n 3) operations = data reuse = locality! • LAPACK built on top of BLAS (level 3) – Blocking (for the memory hierarchy) is the single most important optimization for linear algebra algorithms • GEMM – General Matrix Multiplication – SUBROUTINE DGEMM (TRANSA, TRANSB, M, N, K, ALPHA, A, LDA, B, LDB, BETA, C, LDC ) – C : = alpha*op( A )*op( B ) + beta*C, – where op(X) = X or X’

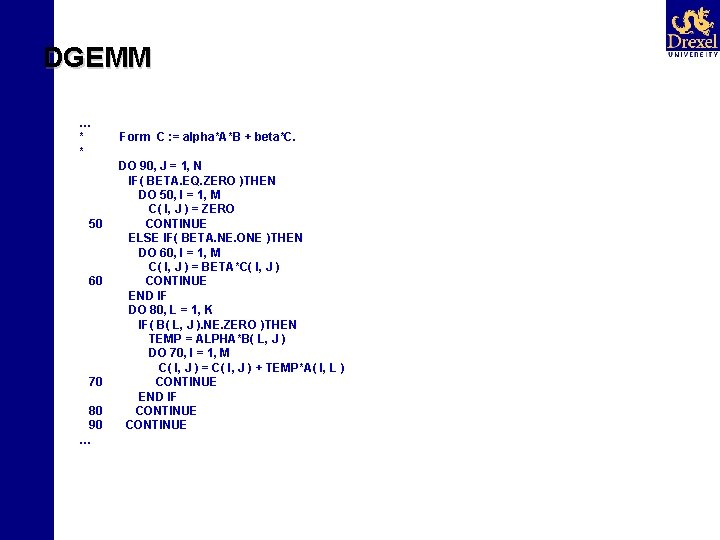

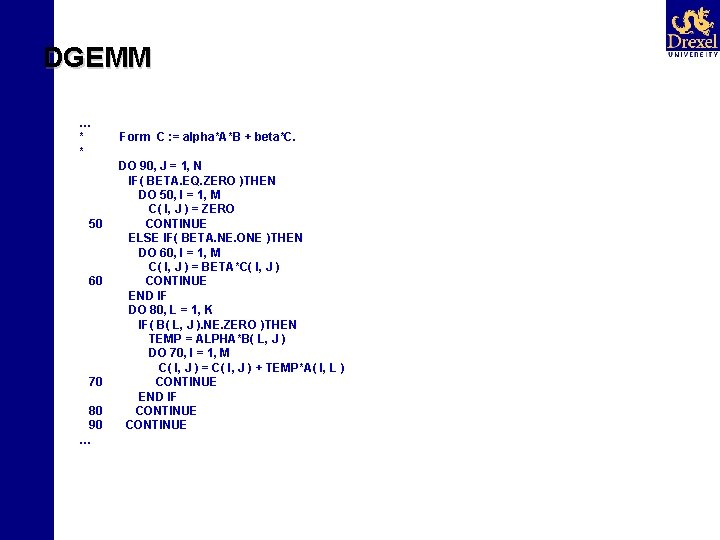

DGEMM … * * 50 60 70 80 90 … Form C : = alpha*A*B + beta*C. DO 90, J = 1, N IF( BETA. EQ. ZERO )THEN DO 50, I = 1, M C( I, J ) = ZERO CONTINUE ELSE IF( BETA. NE. ONE )THEN DO 60, I = 1, M C( I, J ) = BETA*C( I, J ) CONTINUE END IF DO 80, L = 1, K IF( B( L, J ). NE. ZERO )THEN TEMP = ALPHA*B( L, J ) DO 70, I = 1, M C( I, J ) = C( I, J ) + TEMP*A( I, L ) CONTINUE END IF CONTINUE

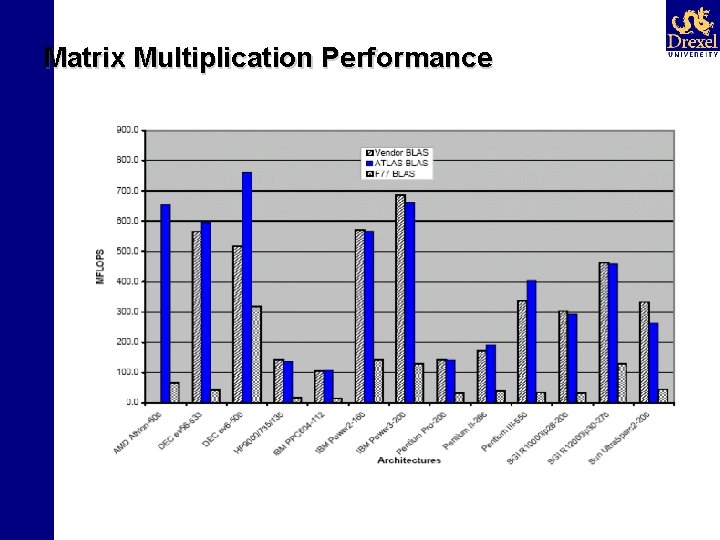

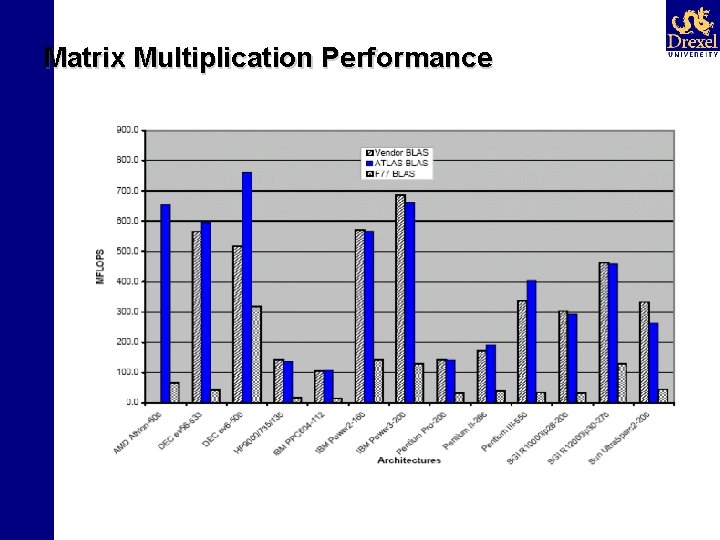

Matrix Multiplication Performance

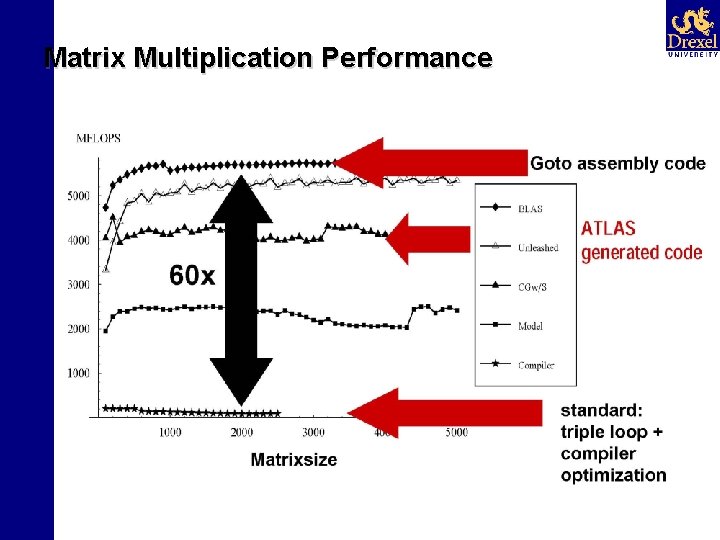

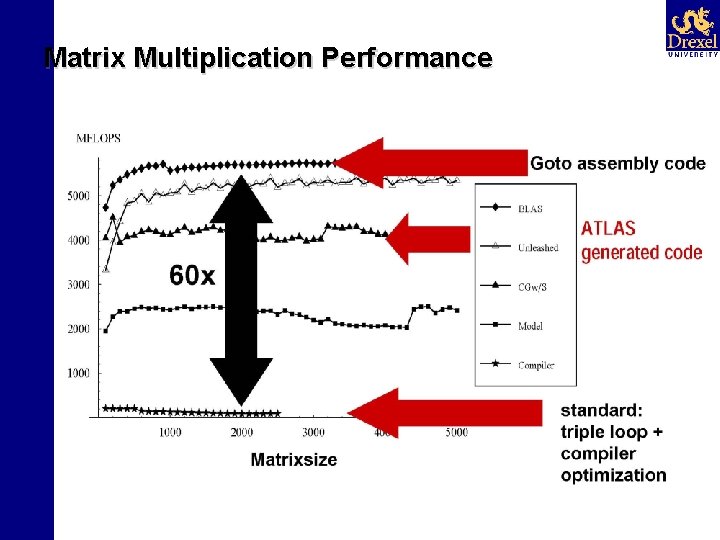

Matrix Multiplication Performance

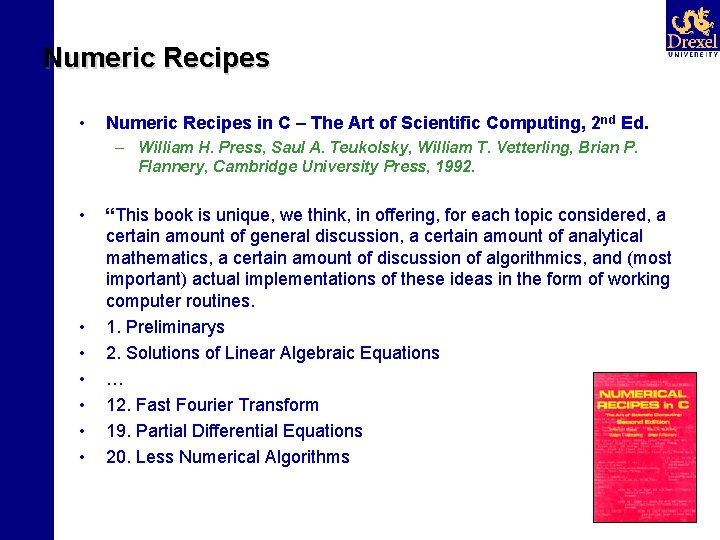

Numeric Recipes • Numeric Recipes in C – The Art of Scientific Computing, 2 nd Ed. – William H. Press, Saul A. Teukolsky, William T. Vetterling, Brian P. Flannery, Cambridge University Press, 1992. • • “This book is unique, we think, in offering, for each topic considered, a certain amount of general discussion, a certain amount of analytical mathematics, a certain amount of discussion of algorithmics, and (most important) actual implementations of these ideas in the form of working computer routines. 1. Preliminarys 2. Solutions of Linear Algebraic Equations … 12. Fast Fourier Transform 19. Partial Differential Equations 20. Less Numerical Algorithms

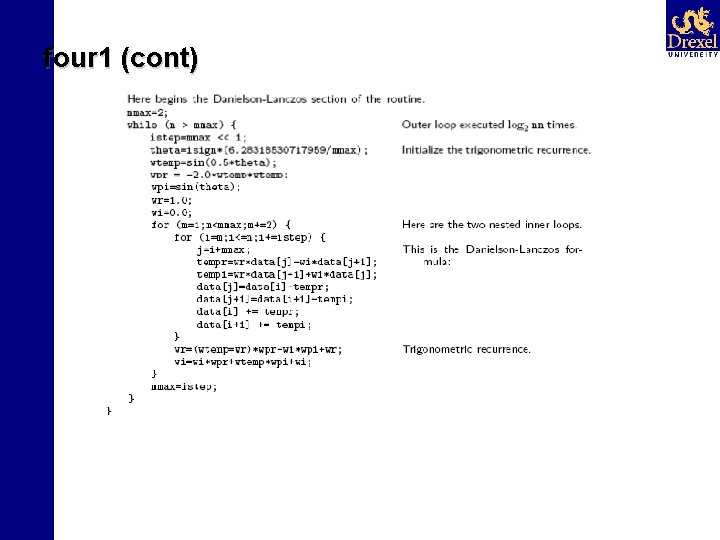

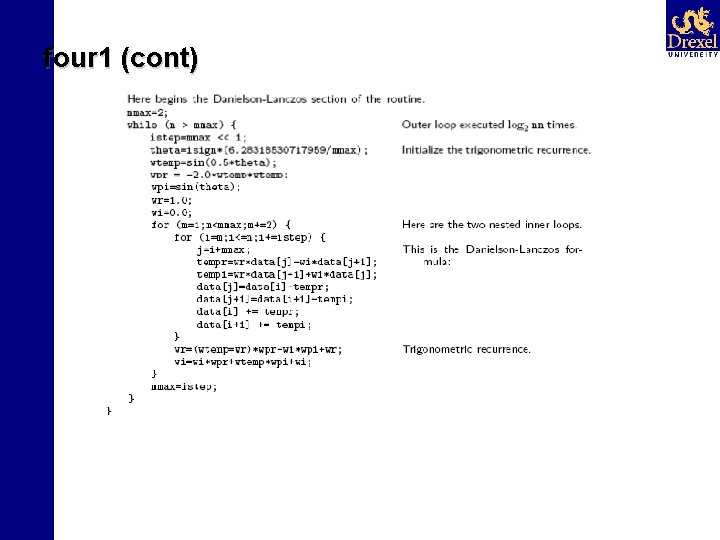

four 1

four 1 (cont)

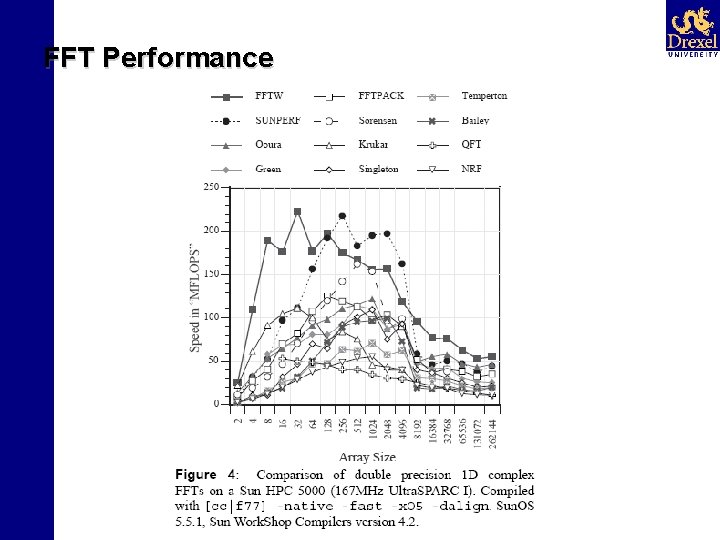

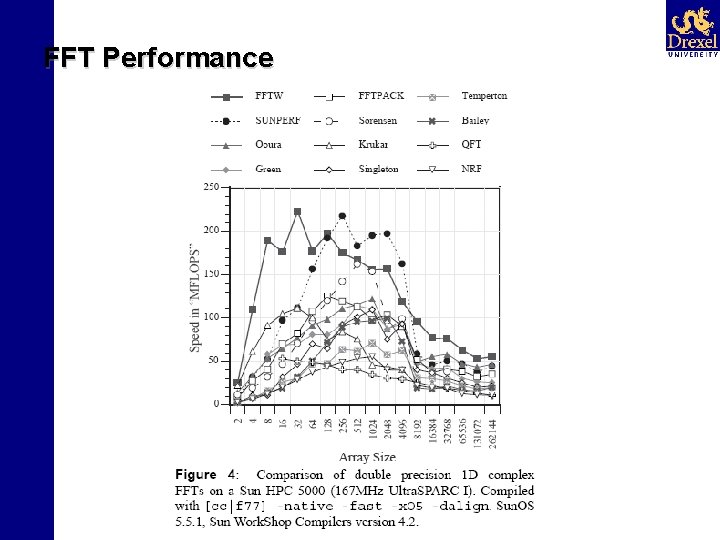

FFT Performance

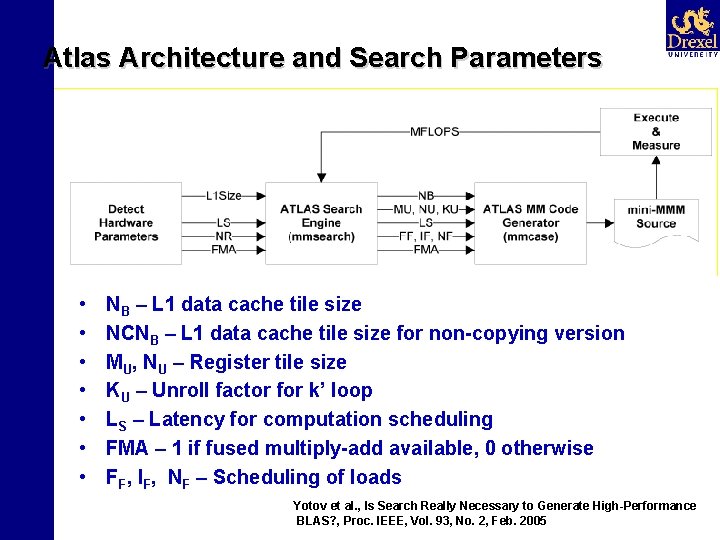

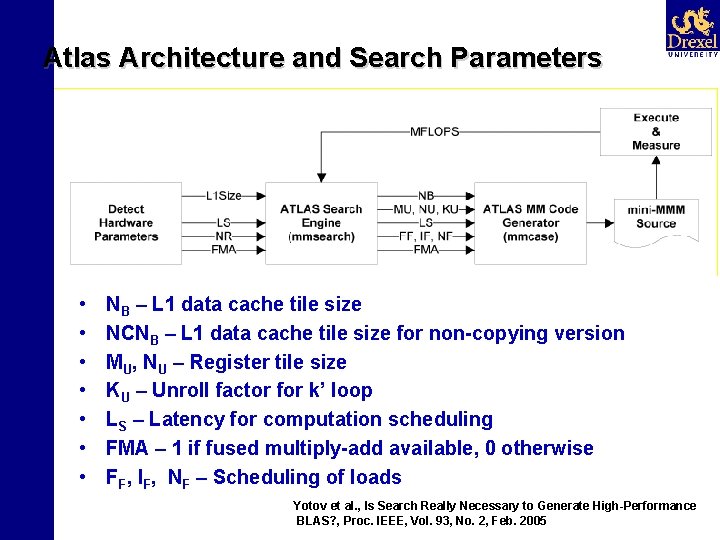

Atlas Architecture and Search Parameters • • NB – L 1 data cache tile size NCNB – L 1 data cache tile size for non-copying version MU, NU – Register tile size KU – Unroll factor for k’ loop LS – Latency for computation scheduling FMA – 1 if fused multiply-add available, 0 otherwise FF, IF, NF – Scheduling of loads Yotov et al. , Is Search Really Necessary to Generate High-Performance BLAS? , Proc. IEEE, Vol. 93, No. 2, Feb. 2005

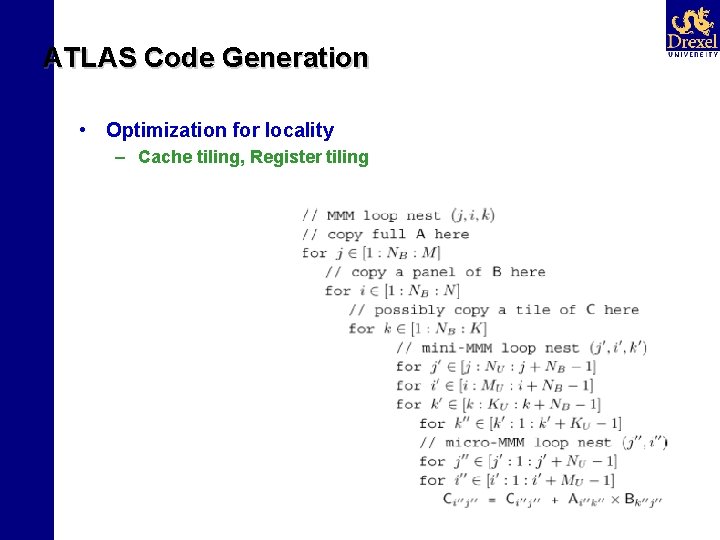

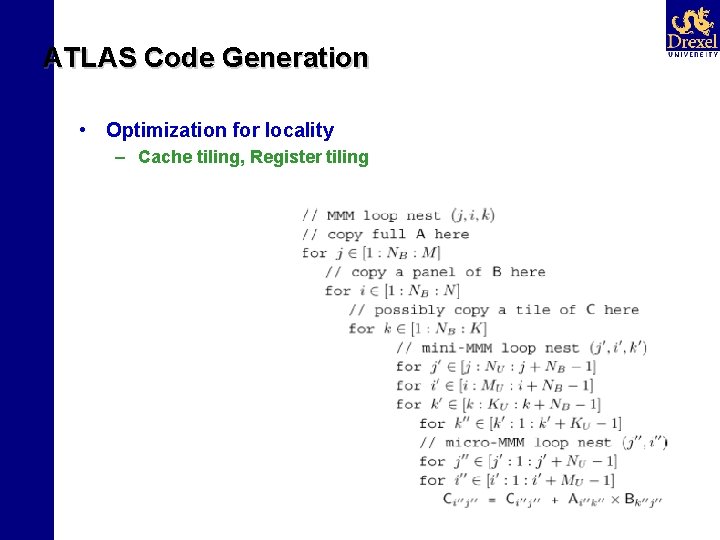

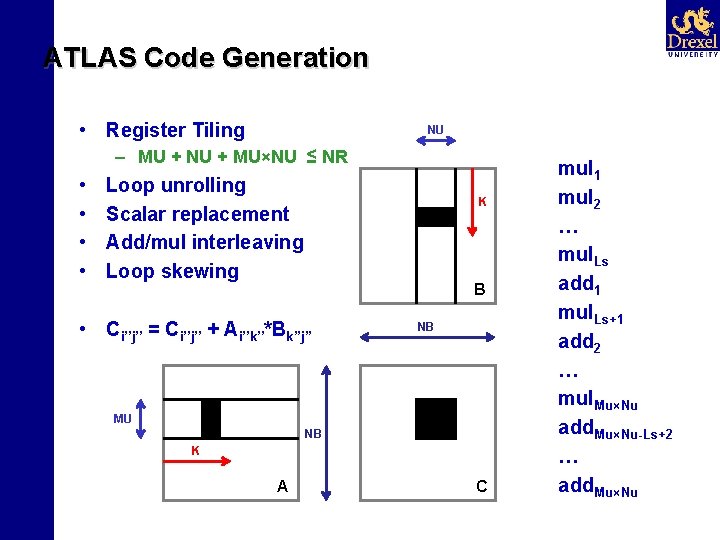

ATLAS Code Generation • Optimization for locality – Cache tiling, Register tiling

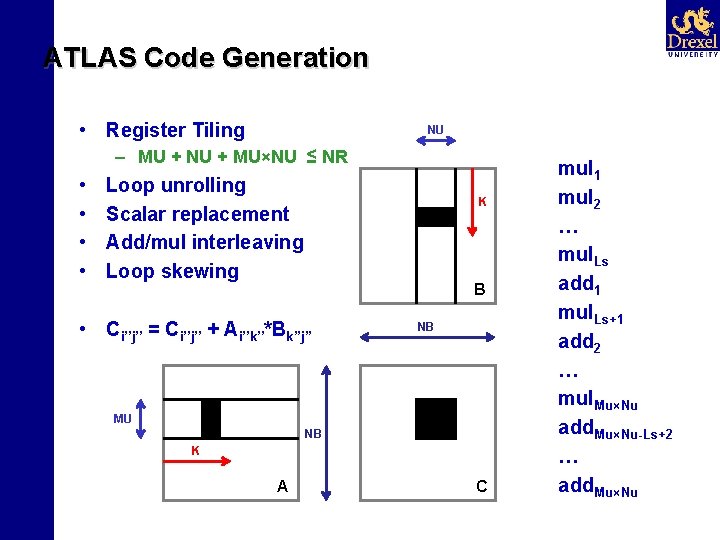

ATLAS Code Generation • Register Tiling NU – MU + NU + MU×NU ≤ NR • • Loop unrolling Scalar replacement Add/mul interleaving Loop skewing K B • Ci’’j’’ = Ci’’j’’ + Ai’’k’’*Bk’’j’’ NB MU NB K A C mul 1 mul 2 … mul. Ls add 1 mul. Ls+1 add 2 … mul. Mu×Nu add. Mu×Nu-Ls+2 … add. Mu×Nu

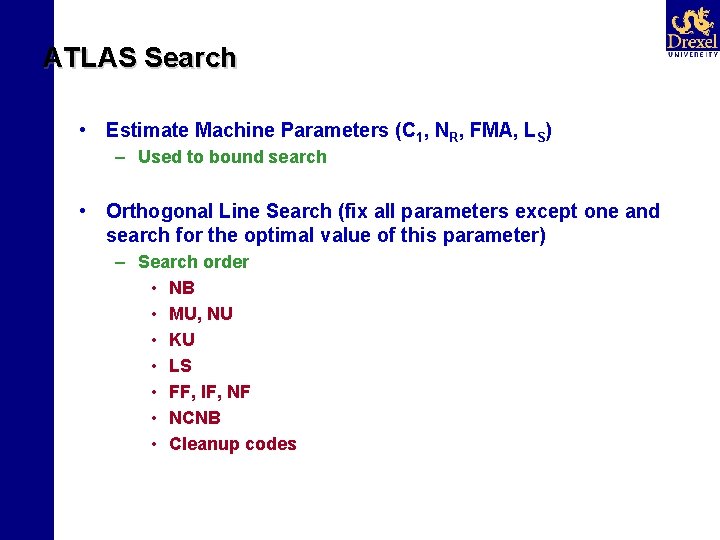

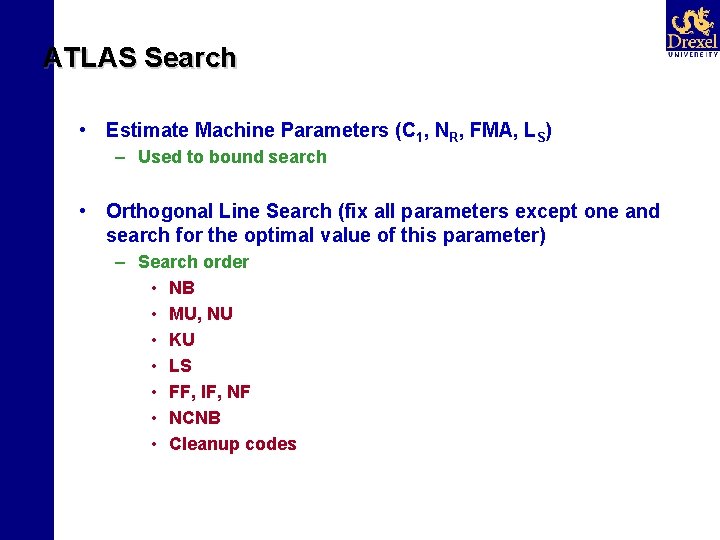

ATLAS Search • Estimate Machine Parameters (C 1, NR, FMA, LS) – Used to bound search • Orthogonal Line Search (fix all parameters except one and search for the optimal value of this parameter) – Search order • NB • MU, NU • KU • LS • FF, IF, NF • NCNB • Cleanup codes

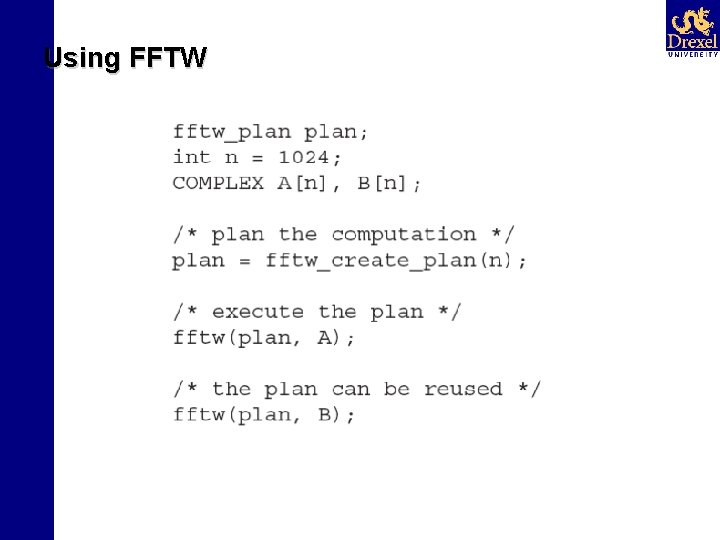

Using FFTW

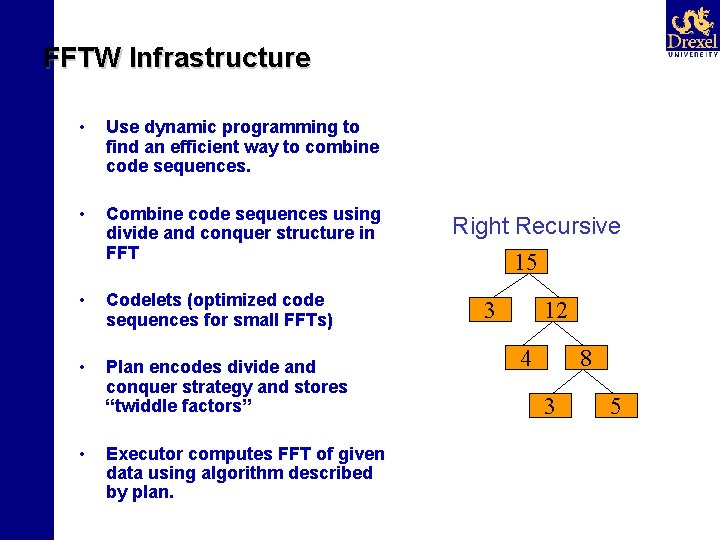

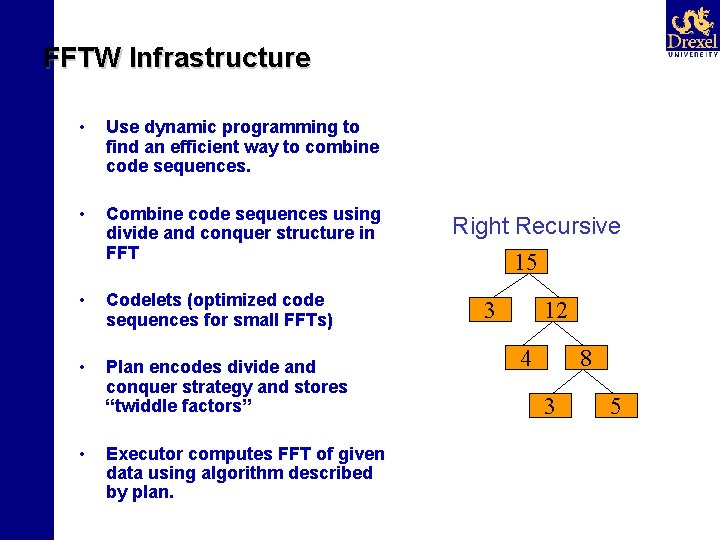

FFTW Infrastructure • Use dynamic programming to find an efficient way to combine code sequences. • Combine code sequences using divide and conquer structure in FFT • Codelets (optimized code sequences for small FFTs) • Plan encodes divide and conquer strategy and stores “twiddle factors” • Executor computes FFT of given data using algorithm described by plan. Right Recursive 15 3 12 4 8 3 5

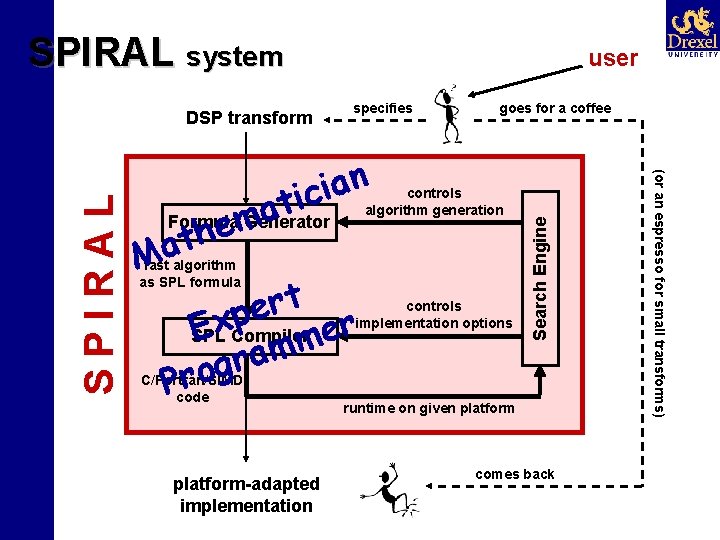

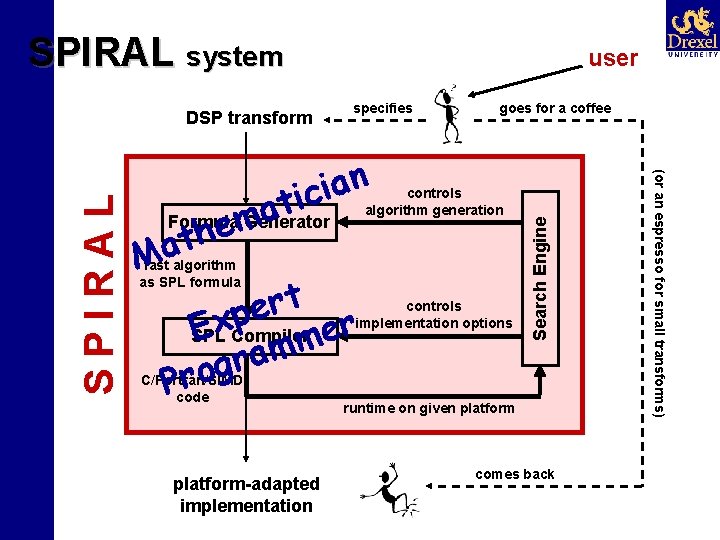

SPIRAL system n a i tic a m he controls algorithm generation Formula Generator t a M goes for a coffee fast algorithm as SPL formula t r e p x E Compilermer SPL m a r g o r P controls implementation options C/Fortran/SIMD code platform-adapted implementation Search Engine specifies runtime on given platform comes back (or an espresso for small transforms) SPIRAL DSP transform user

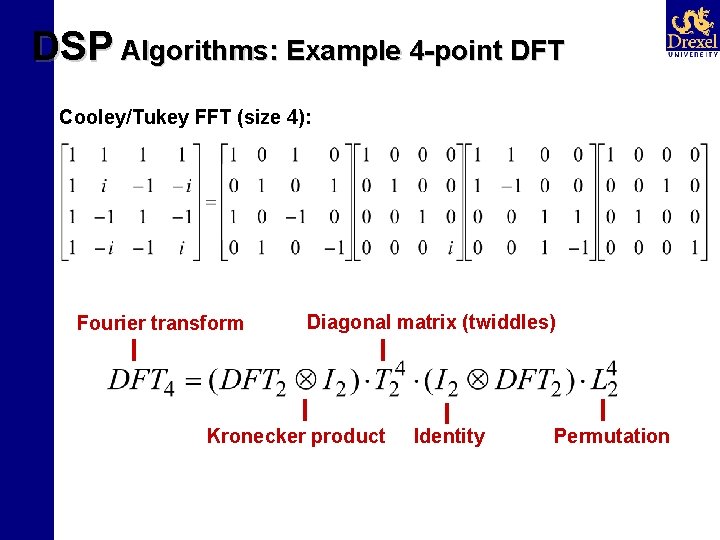

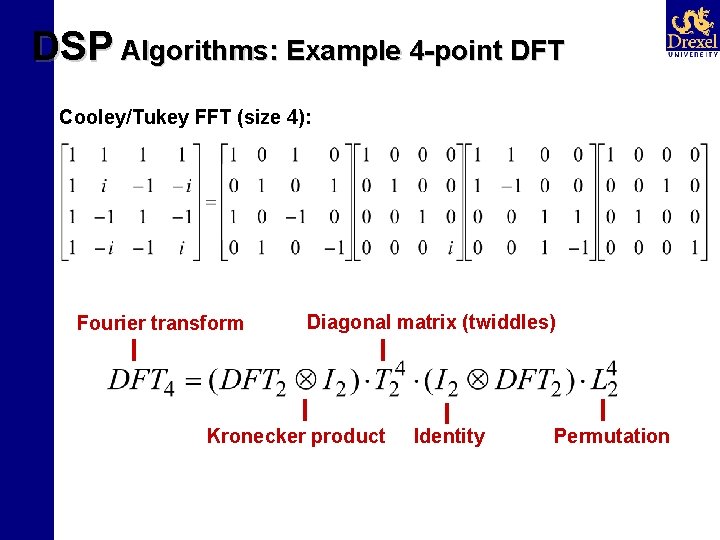

DSP Algorithms: Example 4 -point DFT Cooley/Tukey FFT (size 4): Fourier transform Diagonal matrix (twiddles) Kronecker product Identity Permutation

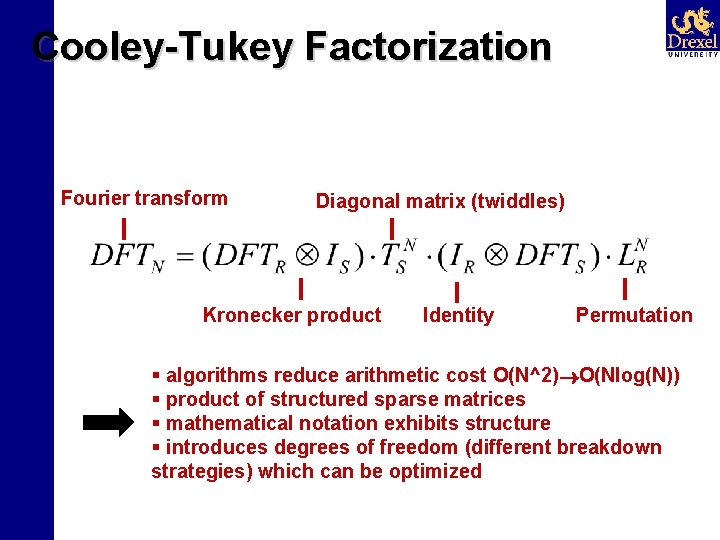

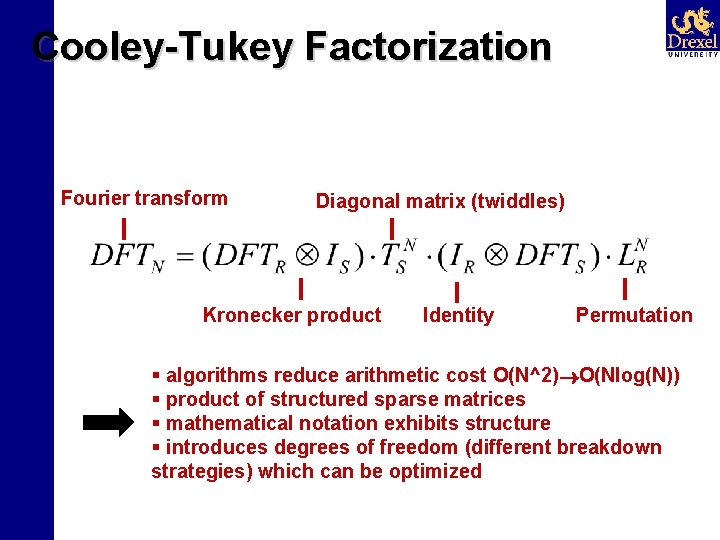

Cooley-Tukey Factorization Fourier transform Diagonal matrix (twiddles) Kronecker product Identity Permutation § algorithms reduce arithmetic cost O(N^2) O(Nlog(N)) § product of structured sparse matrices § mathematical notation exhibits structure § introduces degrees of freedom (different breakdown strategies) which can be optimized

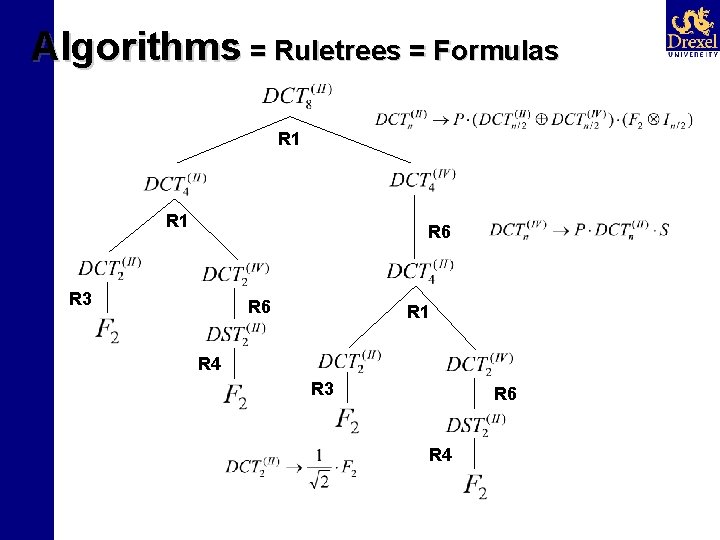

Algorithms = Ruletrees = Formulas R 1 R 6 R 3 R 6 R 1 R 4 R 3 R 6 R 4

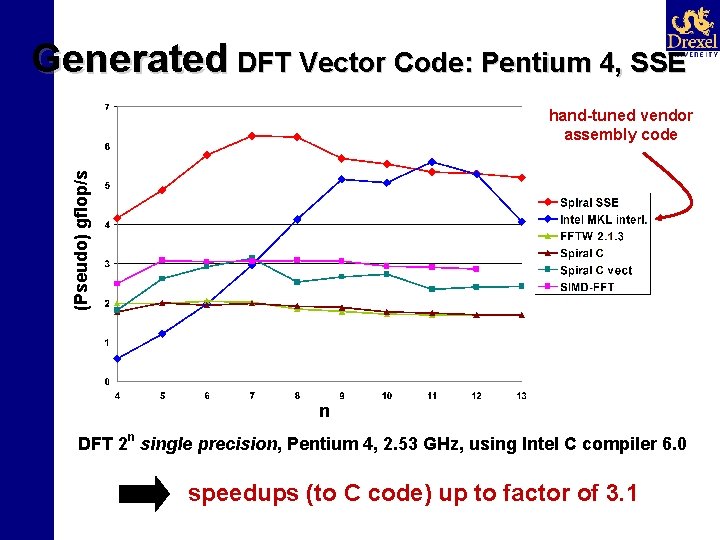

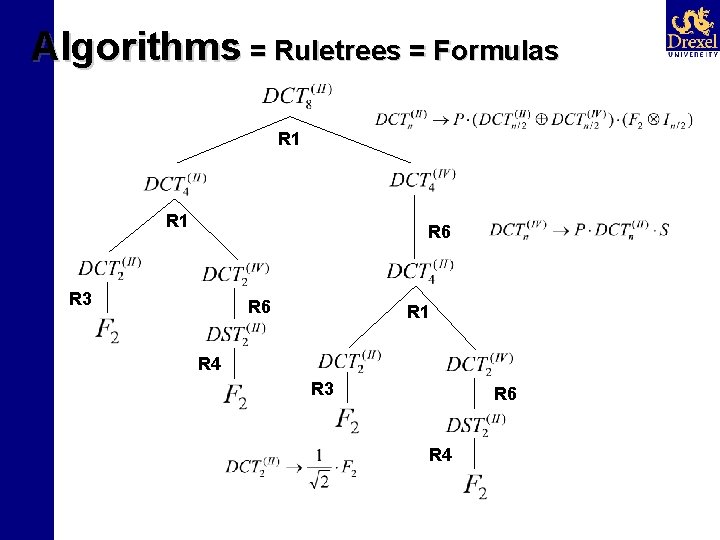

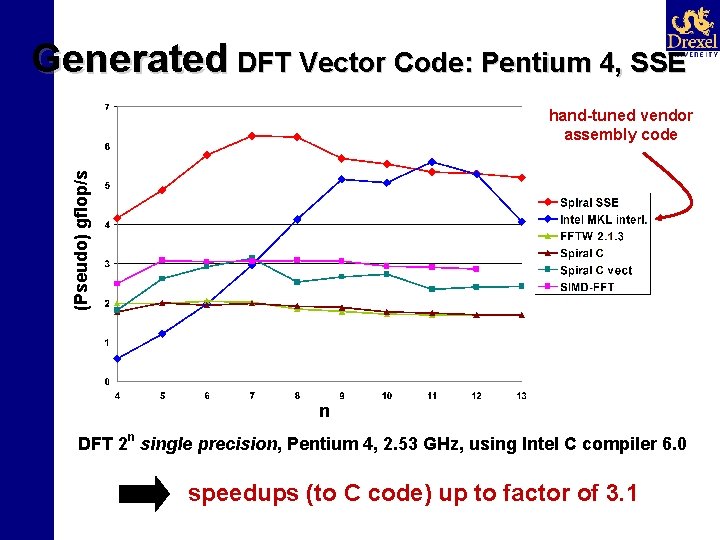

Generated DFT Vector Code: Pentium 4, SSE (Pseudo) gflop/s hand-tuned vendor assembly code n DFT 2 n single precision, Pentium 4, 2. 53 GHz, using Intel C compiler 6. 0 speedups (to C code) up to factor of 3. 1

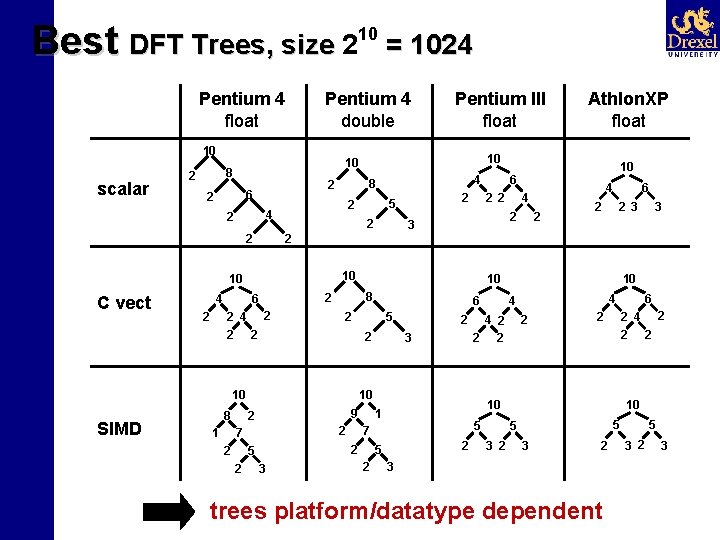

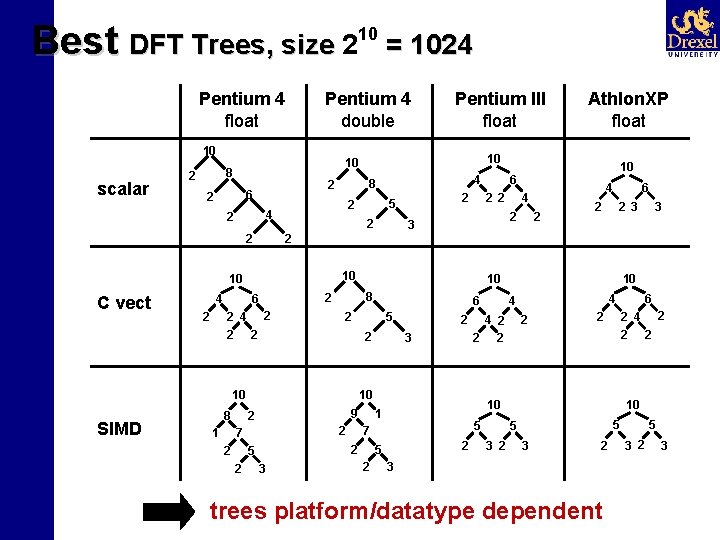

Best DFT Trees, size 2 Pentium 4 float scalar 8 2 2 1 2 5 2 2 2 3 3 3 9 2 3 5 7 2 5 2 4 4 2 2 2 10 5 5 3 2 6 2 4 10 1 2 5 10 4 2 2 2 10 7 2 6 2 2 6 10 2 10 SIMD 3 4 4 2 8 2 2 2 4 8 5 2 2 10 6 2 10 4 4 2 4 Athlon. XP float 10 8 2 6 2 2 Pentium III float 10 2 C vect = 1024 Pentium 4 double 10 2 10 3 2 3 trees platform/datatype dependent 5 3 2 3

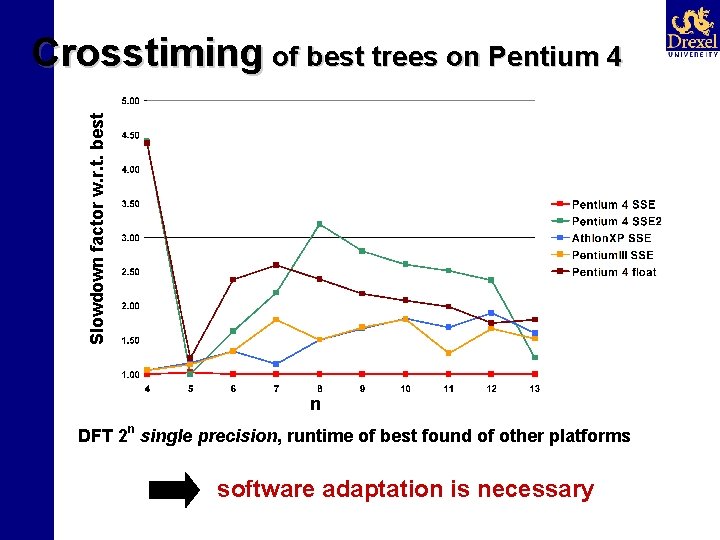

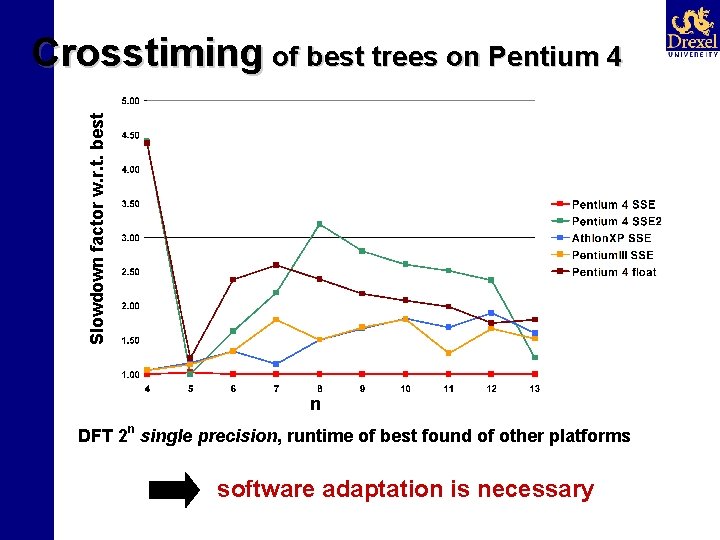

Slowdown factor w. r. t. best Crosstiming of best trees on Pentium 4 n DFT 2 n single precision, runtime of best found of other platforms software adaptation is necessary

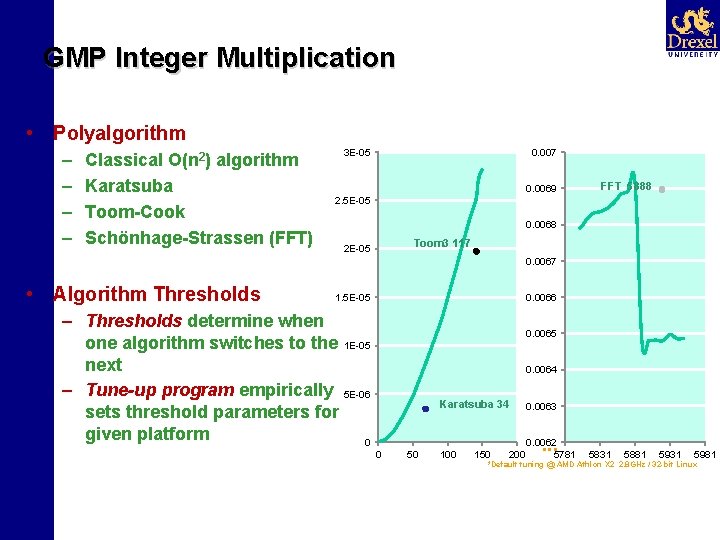

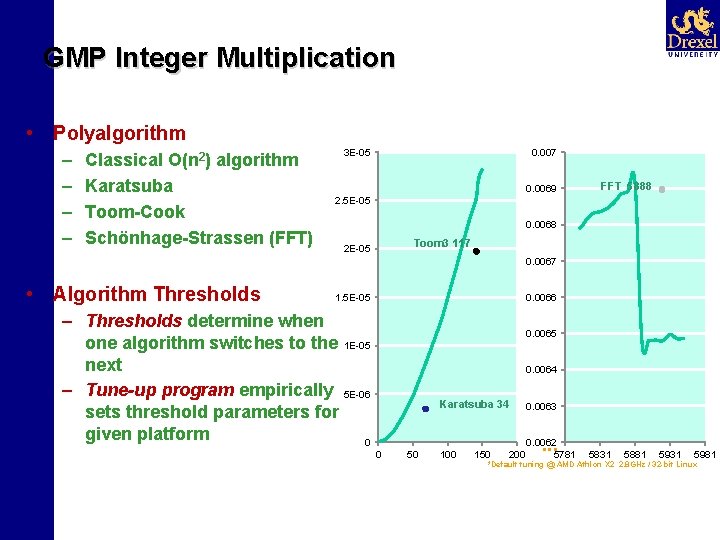

GMP Integer Multiplication • Polyalgorithm – – Classical O(n 2) algorithm Karatsuba Toom-Cook Schönhage-Strassen (FFT) 3 E-05 0. 007 0. 0069 FFT 5888 2. 5 E-05 0. 0068 Toom 3 117 2 E-05 0. 0067 • Algorithm Thresholds 1. 5 E-05 0. 0066 – Thresholds determine when one algorithm switches to the 1 E-05 next – Tune-up program empirically 5 E-06 sets threshold parameters for given platform 0 0. 0065 0. 0064 0 50 Karatsuba 34 0. 0063 100 0. 0062 5781 150 200 . . . 5831 5881 5931 5981 *Default tuning @ AMD Athlon X 2 2. 8 GHz / 32 -bit Linux