Assessing quality in systematic reviews of the effectiveness of health promotion and public health (HP/PH): Areas of consensus and dissension

- Slides: 17

Assessing quality in systematic reviews of the effectiveness of health promotion and public health (HP/PH): Areas of consensus and dissension

Dr Jonathan Shepherd, Principal Research Fellow
Southampton Health Technology Assessments Centre (SHTAC), School of Medicine, University of Southampton, UK

Objectives of today’s presentation
- Present results of a series of interviews with systematic reviewers in HP/PH:
  - Challenges faced by systematic reviewers
  - Barriers to, and facilitators of, assessing quality
  - Barriers to, and facilitators of, learning to do systematic reviews of HP/PH

Methods
- Stage 1: detailed methodological mapping of a random sample of HP/PH systematic reviews (n=30)
- Stage 2: semi-structured interviews with a sample of systematic reviewers in HP/PH (n=17)

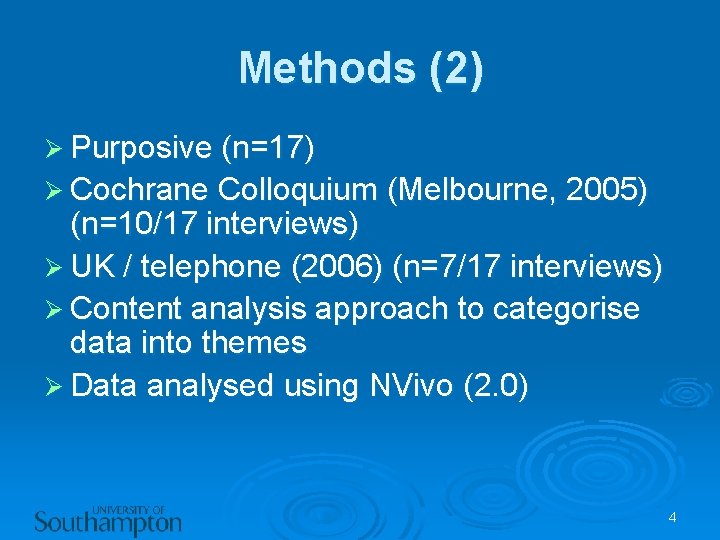

Methods (2)
- Purposive sample (n=17)
- Cochrane Colloquium (Melbourne, 2005): 10 of the 17 interviews
- UK, by telephone (2006): 7 of the 17 interviews
- Content analysis approach used to categorise the data into themes
- Data analysed using NVivo 2.0

Methods (3): Interviewees

| Interviewee’s country | Number (%) interviewed |
|---|---|
| Australia | 4 (24) |
| Canada | 2 (12) |
| Nigeria | 1 (6) |
| South Africa | 1 (6) |
| United Kingdom | 8 (47) |
| USA | 1 (6) |

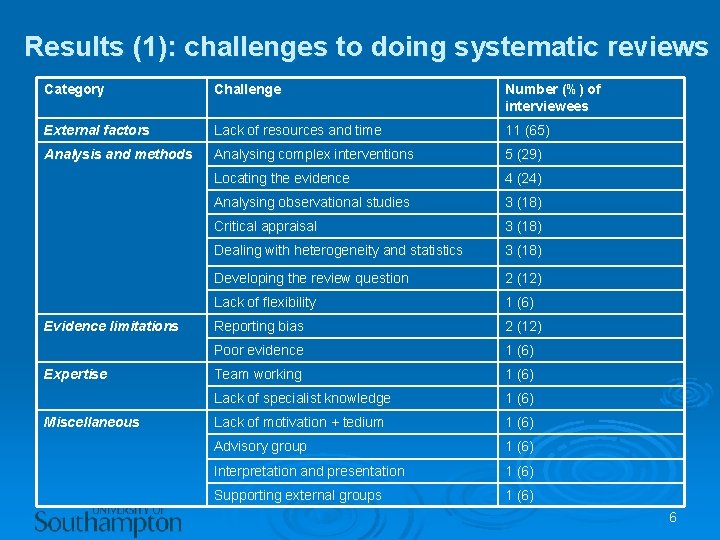

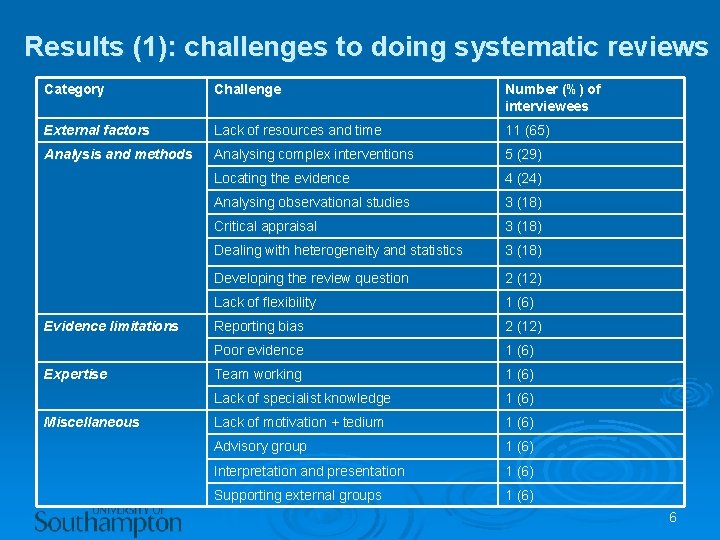

Results (1): Challenges to doing systematic reviews

Challenges were grouped into five categories: external factors; analysis and methods; evidence limitations; expertise; miscellaneous.

| Challenge | Number (%) of interviewees |
|---|---|
| Lack of resources and time | 11 (65) |
| Analysing complex interventions | 5 (29) |
| Locating the evidence | 4 (24) |
| Analysing observational studies | 3 (18) |
| Critical appraisal | 3 (18) |
| Dealing with heterogeneity and statistics | 3 (18) |
| Developing the review question | 2 (12) |
| Lack of flexibility | 1 (6) |
| Reporting bias | 2 (12) |
| Poor evidence | 1 (6) |
| Team working | 1 (6) |
| Lack of specialist knowledge | 1 (6) |
| Lack of motivation and tedium | 1 (6) |
| Advisory group | 1 (6) |
| Interpretation and presentation | 1 (6) |
| Supporting external groups | 1 (6) |

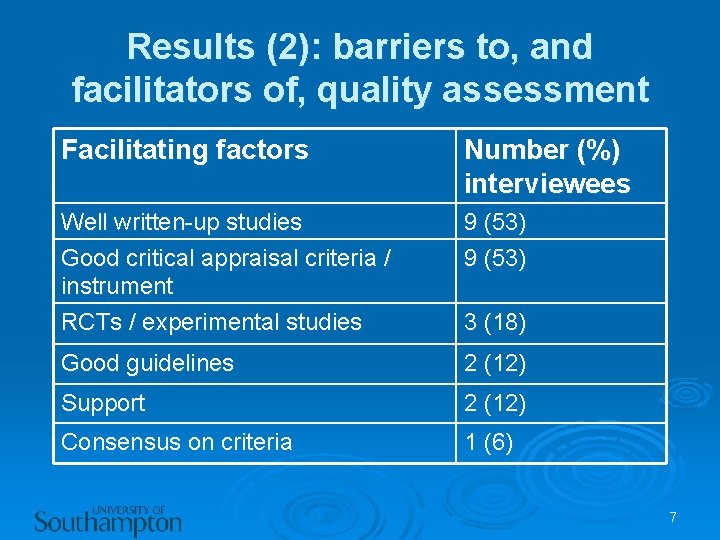

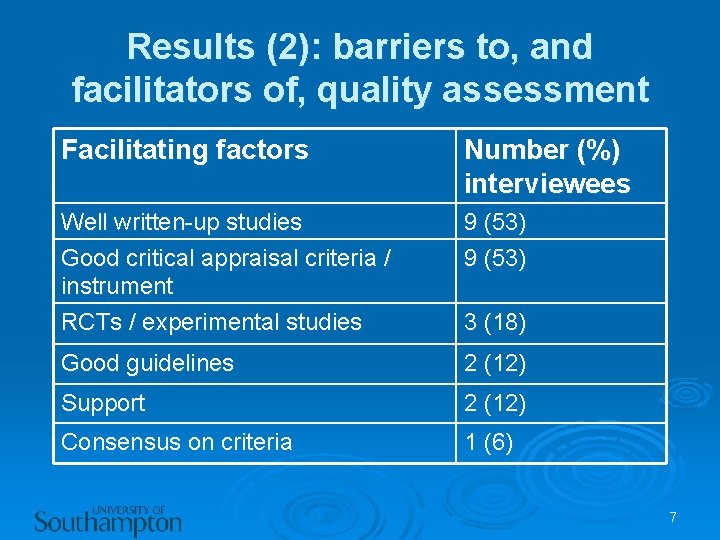

Results (2): Barriers to, and facilitators of, quality assessment

Facilitating factors, with number (%) of interviewees: Well written-up studies; Good critical appraisal criteria / instrument; RCTs / experimental studies 9 (53); Good guidelines 2 (12); Support 2 (12); Consensus on criteria 1 (6); 3 (18)

Results (3): Barriers to, and facilitators of, quality assessment (cont.)

“I mean it is difficult in, you know that there are judgement calls all the time, it isn’t you know it, none of these things are nice easy tick boxes and there’s so many judgements right the way through”

“I’ve never met a set of guidelines where they completely dispense with the idea that you make a new judgement”

Interviewee 14

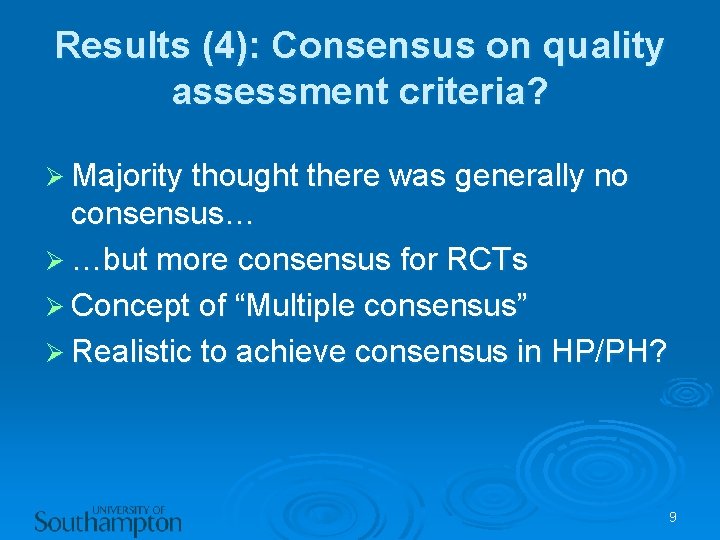

Results (4): Consensus on quality assessment criteria?
- The majority thought there was generally no consensus…
- …but more consensus for RCTs
- Concept of “multiple consensus”
- Is it realistic to achieve consensus in HP/PH?

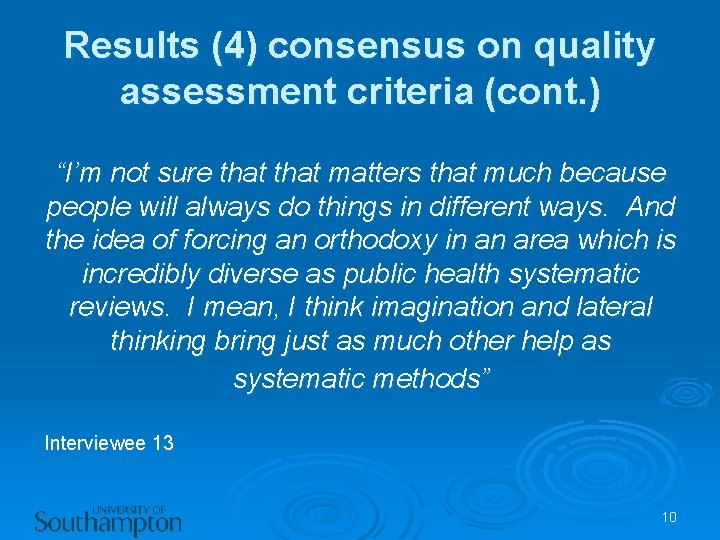

Results (4): Consensus on quality assessment criteria (cont.)

“I’m not sure that matters that much because people will always do things in different ways. And the idea of forcing an orthodoxy in an area which is incredibly diverse as public health systematic reviews. I mean, I think imagination and lateral thinking bring just as much other help as systematic methods”

Interviewee 13

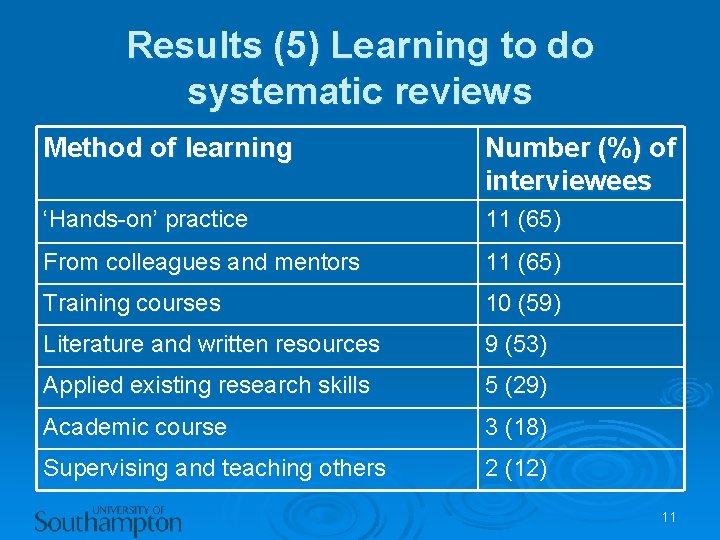

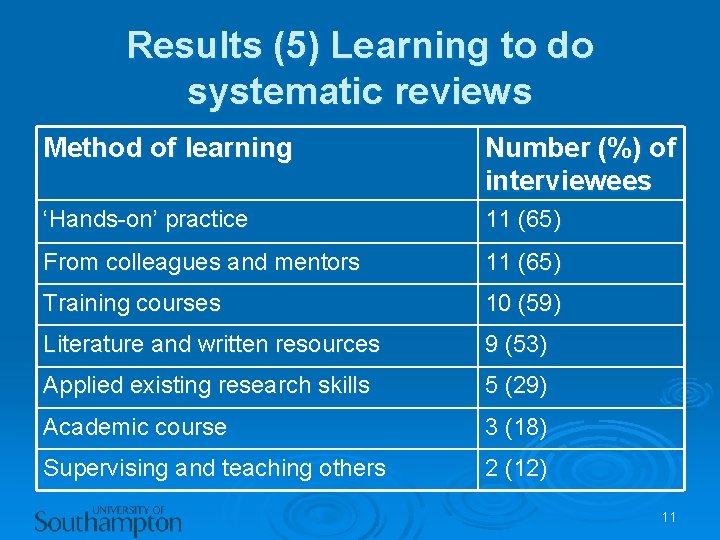

Results (5): Learning to do systematic reviews

| Method of learning | Number (%) of interviewees |
|---|---|
| ‘Hands-on’ practice | 11 (65) |
| From colleagues and mentors | 11 (65) |
| Training courses | 10 (59) |
| Literature and written resources | 9 (53) |
| Applied existing research skills | 5 (29) |
| Academic course | 3 (18) |
| Supervising and teaching others | 2 (12) |

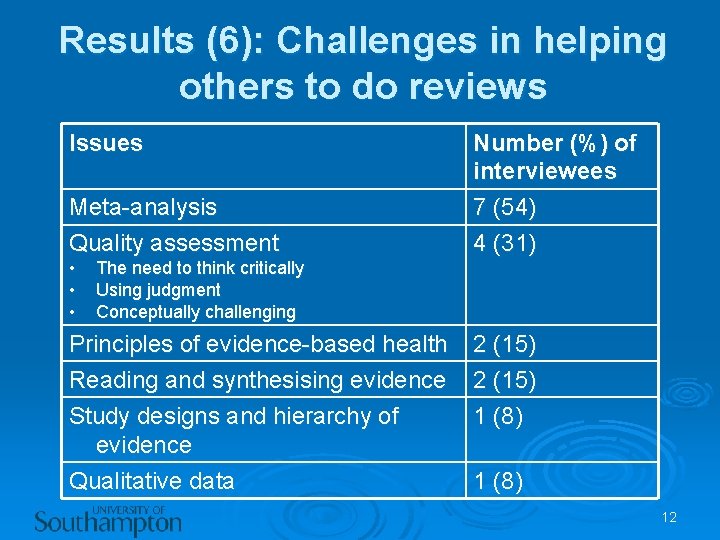

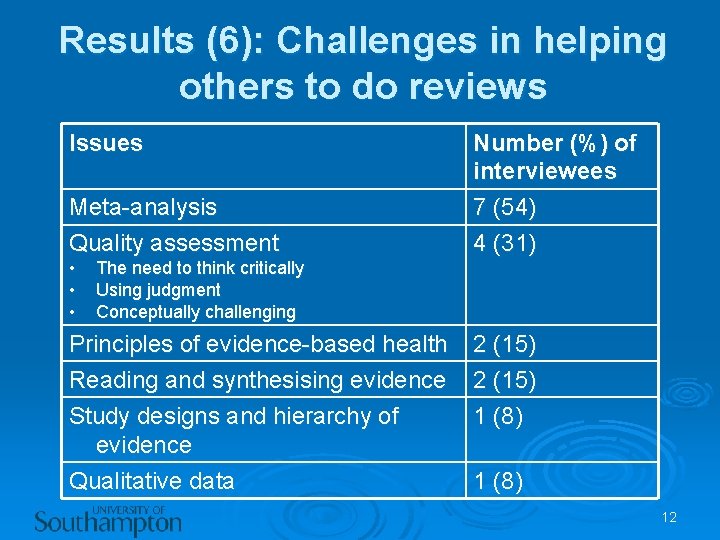

Results (6): Challenges in helping others to do reviews

(Percentages on this slide correspond to a base of 13 interviewees.)

| Issue | Number (%) of interviewees |
|---|---|
| Meta-analysis | 7 (54) |
| Quality assessment: the need to think critically; using judgment; conceptually challenging | 4 (31) |

Principles of evidence-based health; Reading and synthesising evidence; Study designs and hierarchy of evidence; Qualitative data: 2 (15), 1 (8)

Conclusions: key overarching themes
1. Complexity of many HP/PH reviews

Conclusions: key overarching themes (cont.)

“It’s big and diverse, that’s why it’s so much easy just to stick with randomised control trials and be done with it. Or even if you do community trials, cluster randomised, still it’s easier. I think once you move beyond it gets very difficult”

Interviewee 4

Conclusions: key overarching themes (cont.)
1. Complexity of many HP/PH reviews
2. Time and resources
3. Subjectivity

Recommendations
- Adequate planning and resourcing of complex HP/PH systematic reviews
- Better reporting of HP/PH studies
- Training for critical appraisal:
  - opportunities for ‘hands-on’ learning
  - acknowledging subjectivity

Acknowledgements
- University of Southampton
- Professor Katherine Weare
- Interviewees who took part in the research
- EPPI-Centre, London
- Cochrane Public Health Group

Email: jps@soton.ac.uk