Anatomy of a LargeScale Hypertextual Web Search Engine

Anatomy of a Large-Scale Hypertextual Web Search Engine (e. g. Google)

The Web Index sizes – 1994: World Wide Web Worm (Mc. Bryan) indexes 110, 000 pages/documents – 1997: search engines claim from 2 -100 million pages indexed – 2005: Google claims 8 billion – later claim of 25 billion was removed Queries – – 1994: WWWW had 1500 queries per day 1997: Alta. Vista had 20 million queries per day 2002: Google had 250 million queries a day 2004: 2. 5 billion queries per day Clearly Web search engines must scale to increased corpus size and increased use

Google Design Goals Scale up to work with much larger collections – In 1997, older search engines were breaking down due to manipulations by advertisers, etc. Exhibit high precision among top-ranked documents – Many documents are kind-of relevant – For the Web, “relevant” should be just the very best documents Provide data sets for academic research on search engines

Changes from Earlier Engines Page. Rank – – Rank importance of pages Use citation patterns like for academic research Normalize for number of links on page Variation on probability that a random surfer would view a page Anchor Text – Store text of anchors with the pages they link to – Incoming link anchors can be better index than page content – Allows uncrawled and nontextual content to be indexed – Not a new idea: used by WWWW in 1994

Related Work Information retrieval – Traditional IR metrics (TREC 96) called 20 GB a very large corpus – Term vectors assume all documents have some value • short documents (few words) that match query term get ranked highest • “bill clinton” returns page that only says “bill clinton sucks” Different from well-controlled collections – Content has abnormal vocabulary (e. g. product codes) – Content generated by systems (e. g. complex ids, binhexed data) – Content includes bugs/flakiness/disinformation

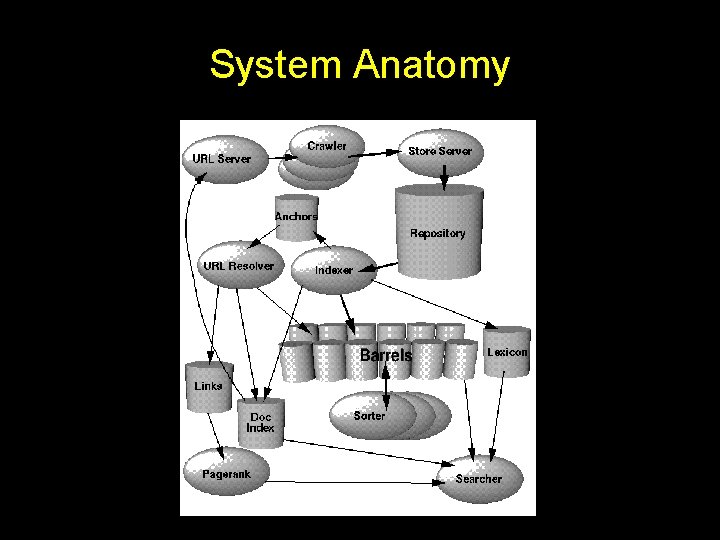

System Anatomy

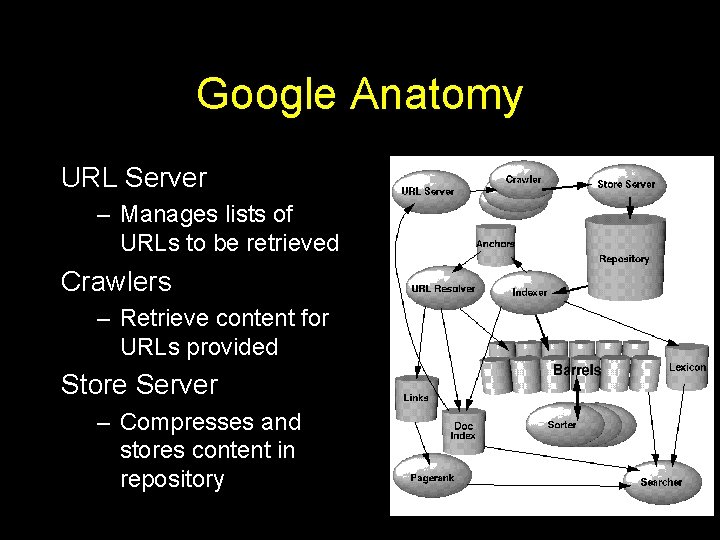

Google Anatomy URL Server – Manages lists of URLs to be retrieved Crawlers – Retrieve content for URLs provided Store Server – Compresses and stores content in repository

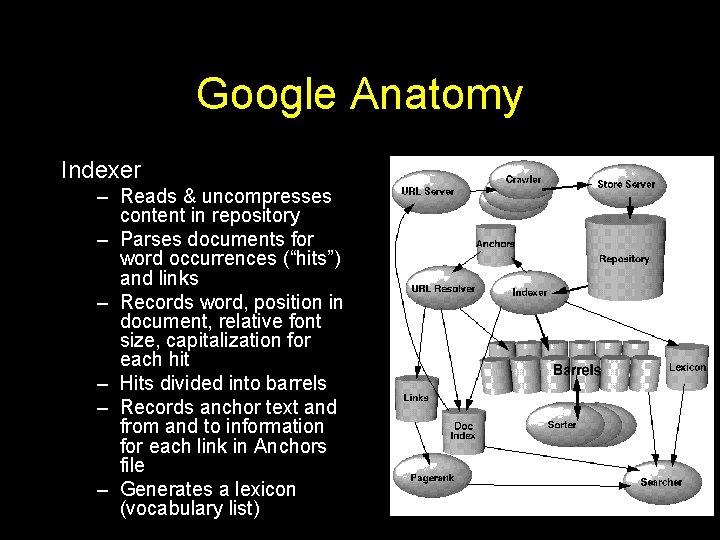

Google Anatomy Indexer – Reads & uncompresses content in repository – Parses documents for word occurrences (“hits”) and links – Records word, position in document, relative font size, capitalization for each hit – Hits divided into barrels – Records anchor text and from and to information for each link in Anchors file – Generates a lexicon (vocabulary list)

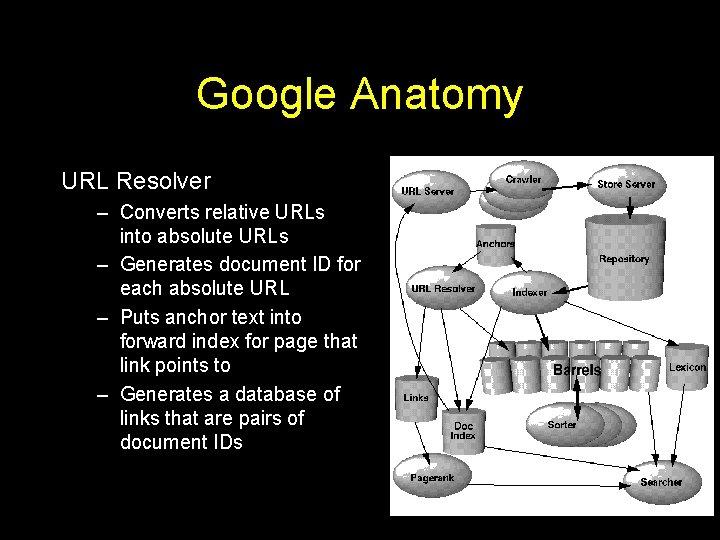

Google Anatomy URL Resolver – Converts relative URLs into absolute URLs – Generates document ID for each absolute URL – Puts anchor text into forward index for page that link points to – Generates a database of links that are pairs of document IDs

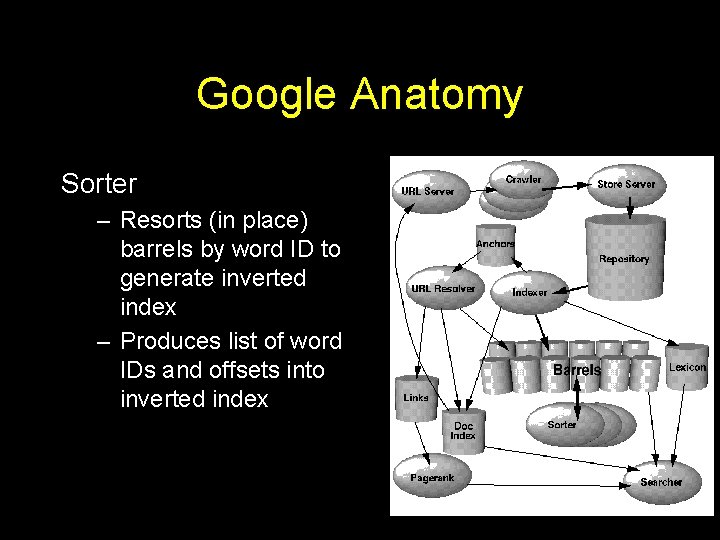

Google Anatomy Sorter – Resorts (in place) barrels by word ID to generate inverted index – Produces list of word IDs and offsets into inverted index

Google Anatomy Page. Rank – Generates page ranking based on links Dump. Lexicon – Takes lexicon and inverted index and generates lexicon used by Searcher

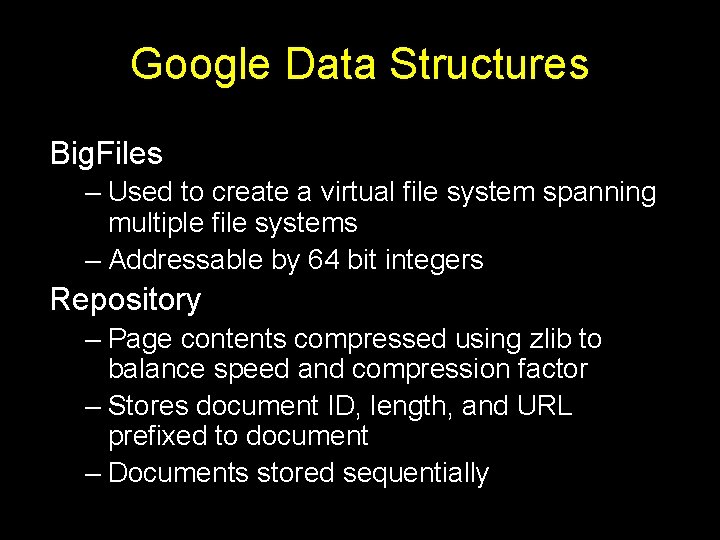

Google Data Structures Big. Files – Used to create a virtual file system spanning multiple file systems – Addressable by 64 bit integers Repository – Page contents compressed using zlib to balance speed and compression factor – Stores document ID, length, and URL prefixed to document – Documents stored sequentially

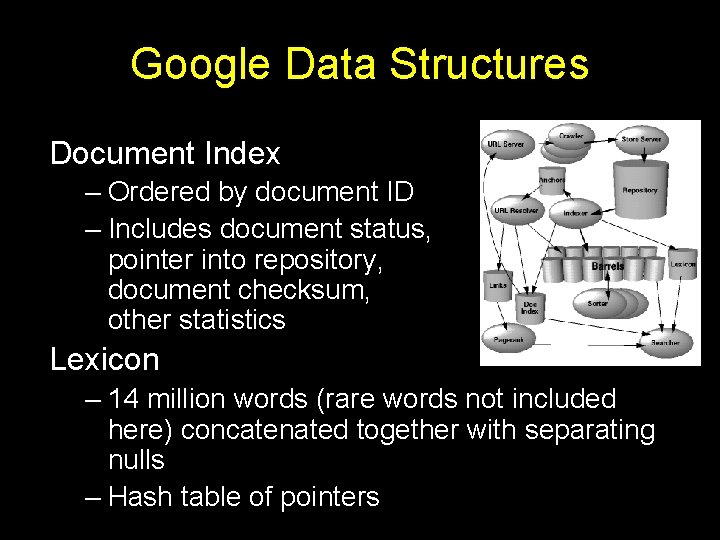

Google Data Structures Document Index – Ordered by document ID – Includes document status, pointer into repository, document checksum, other statistics Lexicon – 14 million words (rare words not included here) concatenated together with separating nulls – Hash table of pointers

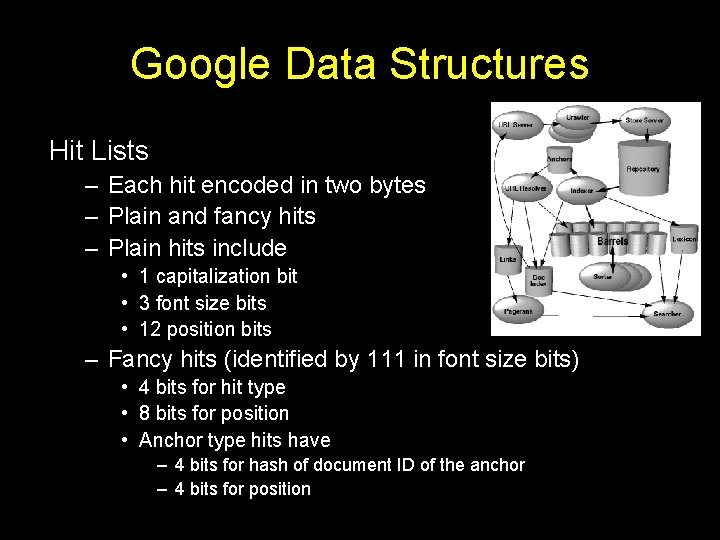

Google Data Structures Hit Lists – Each hit encoded in two bytes – Plain and fancy hits – Plain hits include • 1 capitalization bit • 3 font size bits • 12 position bits – Fancy hits (identified by 111 in font size bits) • 4 bits for hit type • 8 bits for position • Anchor type hits have – 4 bits for hash of document ID of the anchor – 4 bits for position

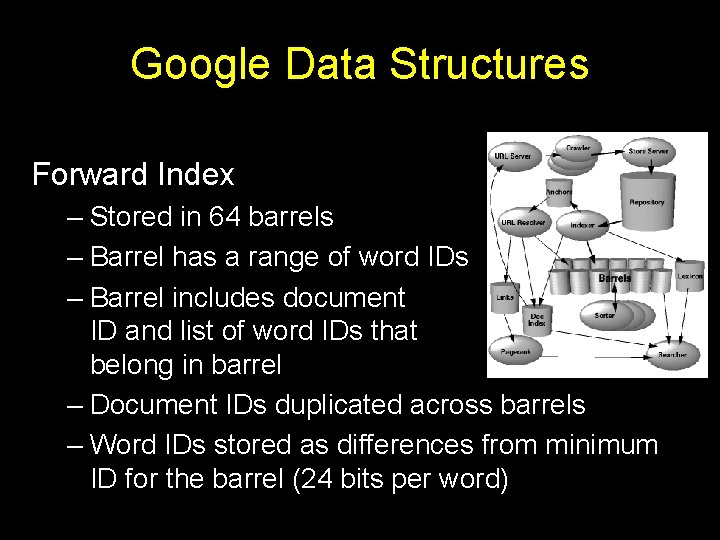

Google Data Structures Forward Index – Stored in 64 barrels – Barrel has a range of word IDs – Barrel includes document ID and list of word IDs that belong in barrel – Document IDs duplicated across barrels – Word IDs stored as differences from minimum ID for the barrel (24 bits per word)

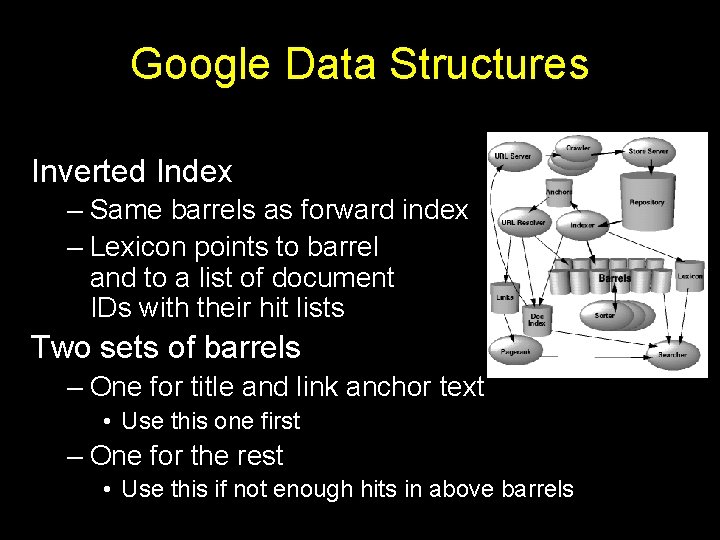

Google Data Structures Inverted Index – Same barrels as forward index – Lexicon points to barrel and to a list of document IDs with their hit lists Two sets of barrels – One for title and link anchor text • Use this one first – One for the rest • Use this if not enough hits in above barrels

Crawling, Indexing, Searching Web sites were surprised to be crawled in 1997 – Requires people to deal with email questions Parsing HTML is a challenge – Does not use YACC to generate CFG parser • Too much overhead (too slow) – Uses flex to generate a lexical analyzer Ranking – Use vector of count weights and vector of type weights – Bias towards proximity of search terms – Use Page. Rank to give a final rank

- Slides: 17