The Anatomy of a Large-Scale Hypertextual Web Search Engine

- Slides: 53

1) The Anatomy of a Large-Scale Hypertextual Web Search Engine, Sergey Brin and Lawrence Page, 7th WWW Conf.

2) Graph Structure in the Web, Andrei Broder et al., WWW9

Instructor: P. Krishna Reddy

E-mail: pkreddy@iiit.net

http://www.iiit.net/~pkreddy

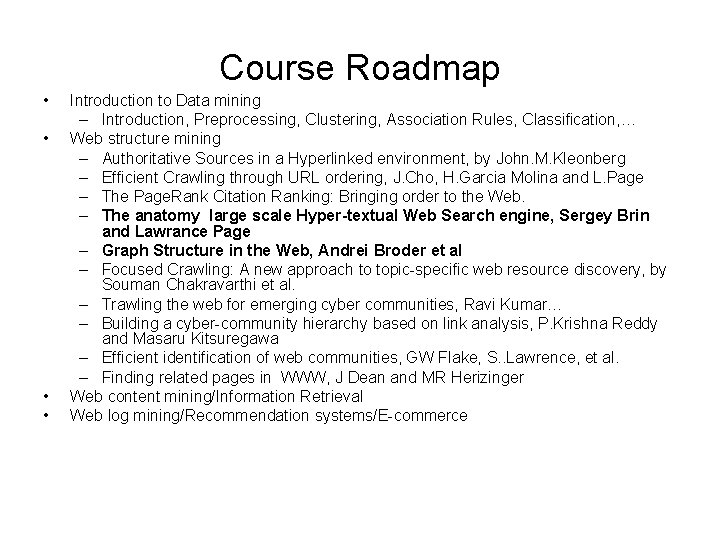

Course Roadmap

- Introduction to Data mining
  - Introduction, Preprocessing, Clustering, Association Rules, Classification, …
- Web structure mining
  - Authoritative Sources in a Hyperlinked Environment, by Jon M. Kleinberg
  - Efficient Crawling through URL Ordering, J. Cho, H. Garcia-Molina and L. Page
  - The PageRank Citation Ranking: Bringing Order to the Web
  - The Anatomy of a Large-Scale Hypertextual Web Search Engine, Sergey Brin and Lawrence Page
  - Graph Structure in the Web, Andrei Broder et al.
  - Focused Crawling: A New Approach to Topic-Specific Web Resource Discovery, by Soumen Chakrabarti et al.
  - Trawling the Web for Emerging Cyber-Communities, Ravi Kumar…
  - Building a Cyber-Community Hierarchy Based on Link Analysis, P. Krishna Reddy and Masaru Kitsuregawa
  - Efficient Identification of Web Communities, G. W. Flake, S. Lawrence, et al.
  - Finding Related Pages in the World Wide Web, J. Dean and M. R. Henzinger
- Web content mining/Information Retrieval
- Web log mining/Recommendation systems/E-commerce

The Anatomy of a Large-Scale Hypertextual Web Search Engine

By Sergey Brin and Lawrence Page, developers of Google (1997)

Instructor: P. Krishna Reddy

The Problem

- The Web continues to grow rapidly
- So does the number of inexperienced users
- Human-maintained lists:
  - Cannot keep up with the volume of changes
  - Are subjective
  - Do not cover all topics
- Automated search engines:
  - Return too many low-relevance matches
  - Are misled by advertisers for commercial purposes

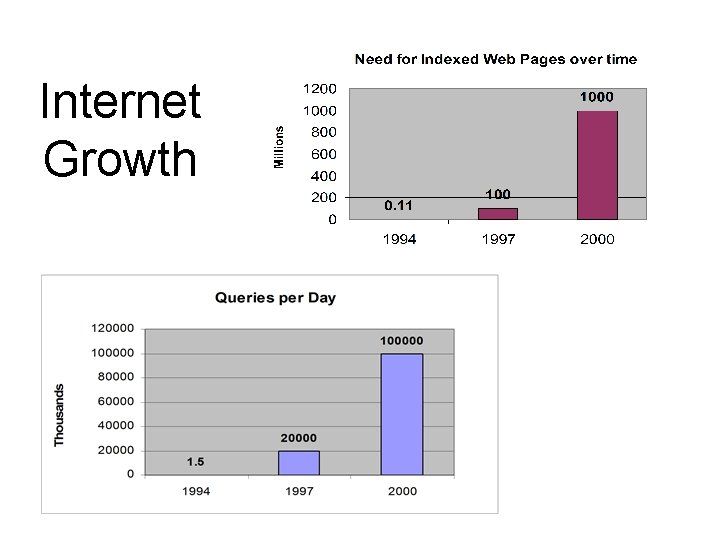

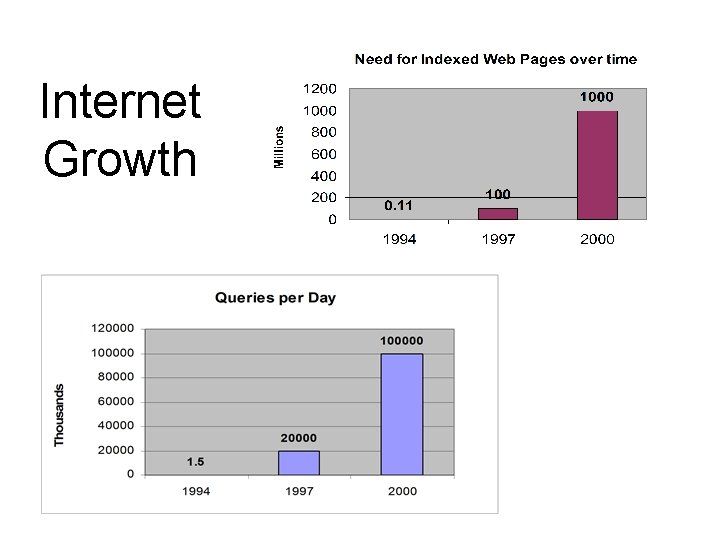

Internet Growth

Keeping up with the Web

Requirements (challenges):

- Fast crawling technology to keep the web documents up to date.
- Efficient use of storage (indices and possibly documents).
- Hundreds to thousands of index queries per second.

Mitigating factors:

- Technology performance improves (exceptions: disk seek time, OS stability) while its cost tends to decline.

Design Goals

Design Goal: Search Quality

- By 1994: the belief was that all that is needed is a complete index of the Web.
- By 1997: an index may be complete and still return many junk results that drown out the relevant ones.
  - Index sizes have increased, and so has the number of matches, but…
  - people are still willing to look at only a handful of results.
- Only the most relevant documents should be returned.
- The Web’s link structure and link text are expected to help find such relevant documents.

Design Goal: Academic Research

- The Web’s orientation shifted from academic to commercial.
- Search engine development had remained an obscure and proprietary area.
- Google wants to make it more understandable at the academic level and promote continuing research.
- By caching parts of the Web, Google itself serves as a research platform from which new results can be derived quickly.

Google Features

Prioritizing Pages: PageRank

- The Web can be described as a huge graph.
- This graph allows a fast calculation of the importance of each page, which is then used to prioritize the results of a keyword search.
- This resource had gone largely unused until Google.

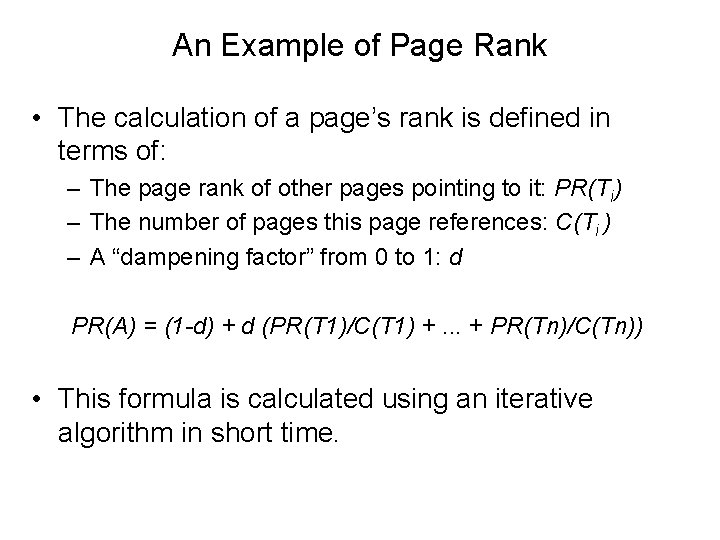

An Example of PageRank

- The rank of a page A is defined in terms of:
  - The PageRank of each page T_i pointing to it: PR(T_i)
  - The number of links going out of each such page: C(T_i)
  - A “damping factor” between 0 and 1: d

$$PR(A) = (1 - d) + d \left( \frac{PR(T_1)}{C(T_1)} + \cdots + \frac{PR(T_n)}{C(T_n)} \right)$$

- This formula is computed quickly with an iterative algorithm.
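The iterative calculation can be sketched in a few lines of Python. This is a minimal illustration of the formula on this slide, not the authors' implementation: the function name `pagerank`, the toy graph, the value d = 0.85 and the fixed iteration count are all assumptions made for the example.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], d: float = 0.85,
             iterations: int = 50) -> Dict[str, float]:
    """Repeatedly apply PR(A) = (1 - d) + d * sum(PR(T) / C(T))."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    rank = {page: 1.0 for page in pages}  # arbitrary starting values
    for _ in range(iterations):
        new_rank = {page: 1.0 - d for page in pages}
        for source, targets in links.items():
            if not targets:
                continue  # a page without out-links passes on no rank in this sketch
            share = d * rank[source] / len(targets)  # d * PR(T) / C(T)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical three-page web: A links to B and C, B links to C, C links to A.
toy_web = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = pagerank(toy_web)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

In this toy graph C ends up with the highest rank because both other pages link to it, and B the lowest because it only receives half of A's vote.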

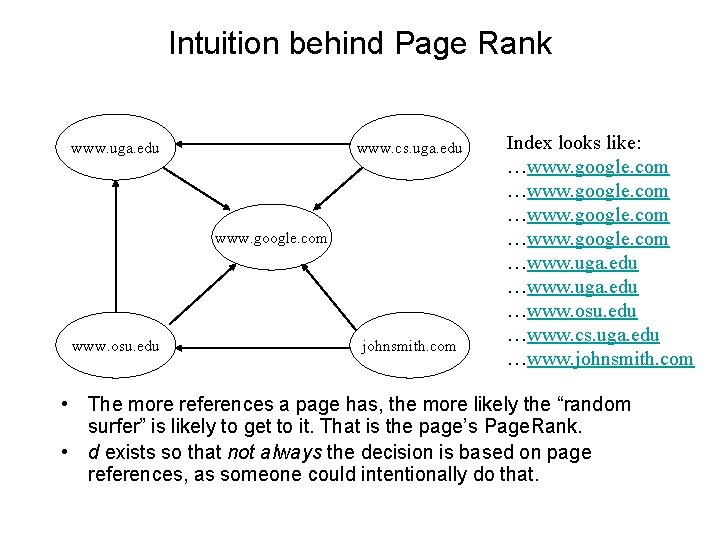

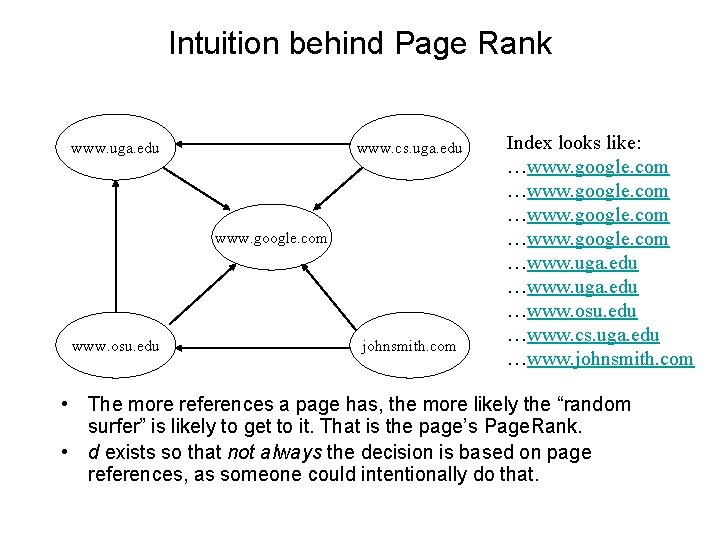

Intuition behind PageRank

1. A “random surfer” starts from a random page and keeps clicking random links.
   - The probability of him visiting page A is P(A) = PR(A).
   - http://www.bleb.org/random
2. At times he gets bored and starts again from another random page.
   - The damping factor d controls how often this happens: in the PageRank formula, following a link carries weight d and starting again carries weight 1 - d.
   - Random restarts make it harder to mislead the system intentionally.

Intuition behind PageRank

Example pages: www.uga.edu, www.cs.uga.edu, www.google.com, www.osu.edu, johnsmith.com

Index looks like: … www.google.com … www.uga.edu … www.osu.edu … www.cs.uga.edu … www.johnsmith.com

- The more references a page has, the more likely the “random surfer” is to reach it. That likelihood is the page’s PageRank.
- d exists so that the ranking does not depend on page references alone, since someone could create references deliberately to inflate a page’s rank.

<a>Anchor Text</a>

- Often describes a page better than the page itself does.
- Associated not only with the page where it is found, but also with the page it points to.
- Makes it possible to index non-text content.
- Downside: the destinations of these links are not verified, so they may not even exist.
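As a rough illustration of crediting anchor text to the page a link points to, the sketch below collects anchor text per target URL using Python's standard `html.parser`. The class name `AnchorCollector`, the sample HTML and the example URLs are hypothetical; the slides do not describe Google's parser at this level.

```python
from collections import defaultdict
from html.parser import HTMLParser
from urllib.parse import urljoin


class AnchorCollector(HTMLParser):
    """Collects anchor text keyed by the absolute URL each link points to."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.anchors = defaultdict(list)  # target URL -> list of anchor texts
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Relative links are resolved against the page they appear on.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            if text:
                self.anchors[self._href].append(text)
            self._href = None


collector = AnchorCollector("http://example.com/dir/page.html")
collector.feed('<p>See the <a href="../logo.png">university logo</a> and '
               '<a href="http://example.org/">Example home</a>.</p>')
print(dict(collector.anchors))
```

Note that the image `logo.png` contains no text of its own, yet it becomes searchable through the words "university logo" that point to it.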

Other Features

- Takes into account the position of hits within the page.
- Takes into account the presentation of words (large size, bold, etc.), weighting them accordingly.
- The HTML of pages is cached in a repository.

Related Research (1997)

Related Research (1997)

- World Wide Web Worm was one of the first search engines.
- Many earlier search engines turned into public companies. Details of such search engines are usually confidential.
- There is known work on post-processing the results of major search engines.

Research on Information Retrieval

- Produced results based on controlled sets of documents in a specific area.
- Even what works on the largest benchmark (TREC-96) would not scale well to a much bigger and more heterogeneous place like the Web.
- Given a popular topic, users should not need to supply many details in order to get relevant results.

The Web vs. Controlled Collections

- The Web is a completely uncontrolled collection of documents varying in their…
  - languages: both human and programming
  - vocabulary: from zip codes to product numbers
  - format: text, HTML, PDF, images, sounds
  - source: human or machine-generated
- External meta information (source reputation, update frequency, etc.) is valuable but hard to measure.
- Any type of content + influence of search engines + intentional for-profit manipulation ≠ a controlled collection!

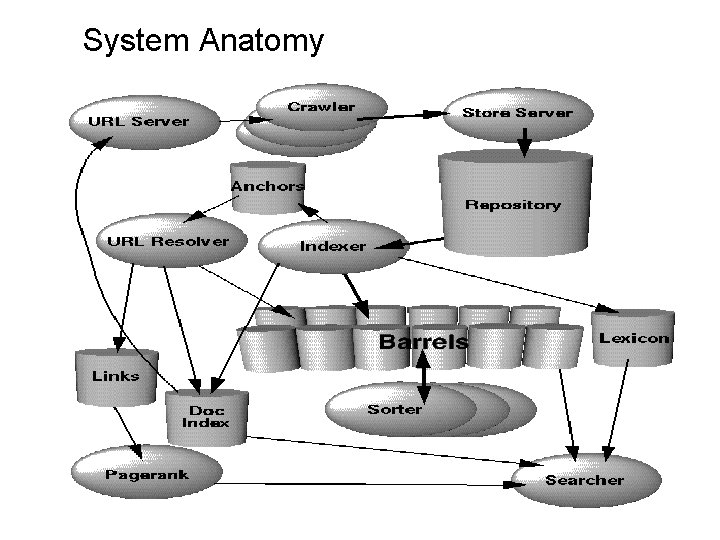

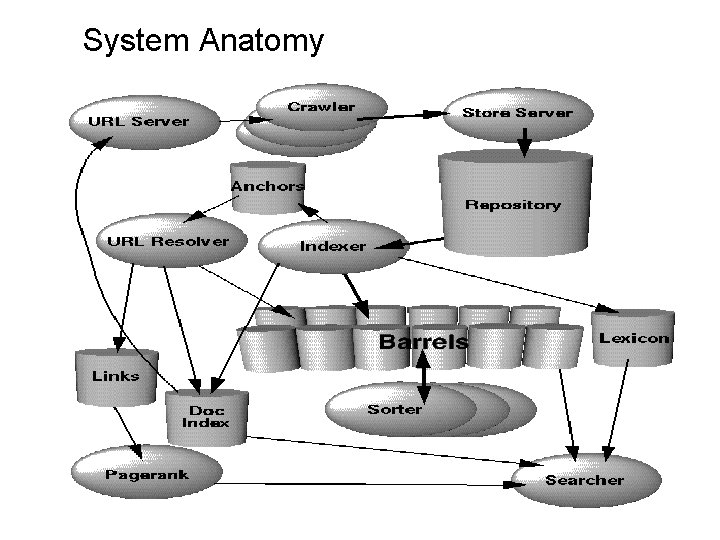

System Anatomy

Architecture Overview

Implemented in C/C++; runs on Solaris or Linux.

1. A URLServer sends lists of URLs to be fetched by a set of crawlers.
2. Fetched pages are given a docID and sent to a StoreServer, which compresses them and stores them in a repository.
3. The indexer reads pages from the repository and parses them, converting their words into hits.
   - Each hit records the word, its position in the document, and an approximation of font size and capitalization.
4. These hits are distributed into barrels, which are partially sorted indexes.
5. The indexer also builds the anchors file from the links in each page, recording where each link points from and to.

Architecture Overview

6. The URLResolver reads the anchors file, converts relative URLs into absolute URLs, and assigns docIDs.
7. The forward index is updated with the docIDs that the links point to.
8. The links database is also created as pairs of docIDs. It is used to calculate PageRank.
9. The sorter takes the barrels and re-sorts them by wordID to produce the inverted index. It also produces a list of wordIDs with their offsets into the inverted index.
10. This list is converted into a lexicon (vocabulary).
11. The searcher is run by a Web server and uses the lexicon, inverted index and PageRank to answer queries.

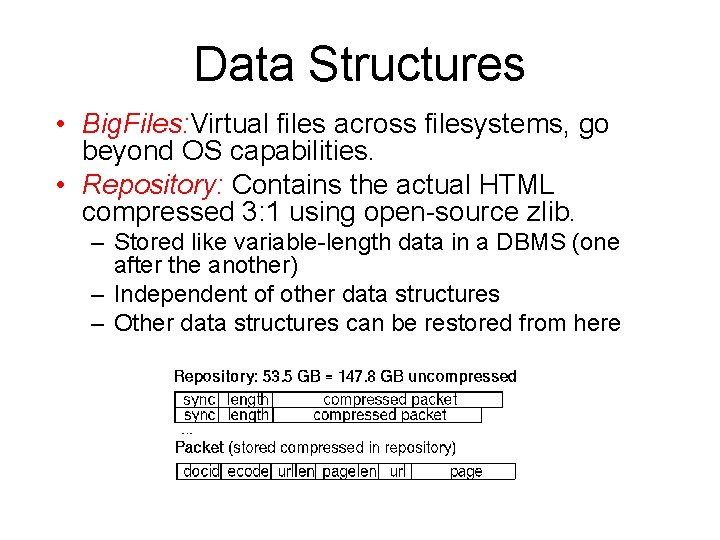

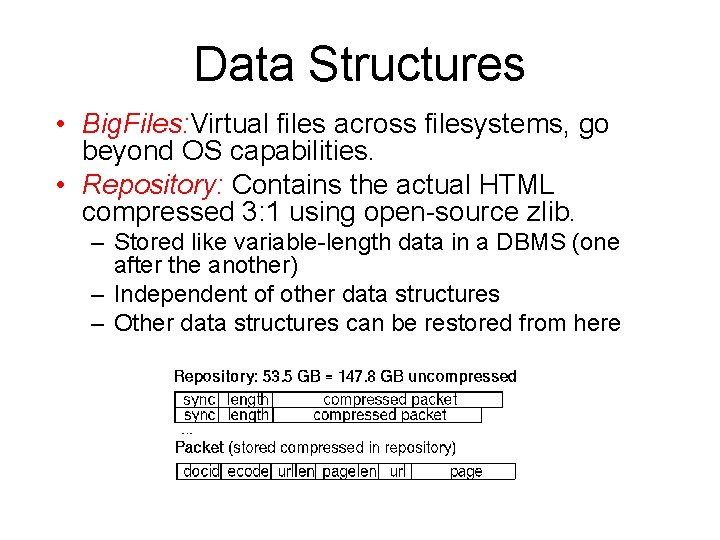

Data Structures

- BigFiles: virtual files that span multiple filesystems, going beyond what the OS offers.
- Repository: contains the actual HTML of every page, compressed about 3:1 using open-source zlib.
  - Documents are stored one after another, like variable-length records in a DBMS
  - Independent of other data structures
  - Other data structures can be rebuilt from it

Data Structures

- Document Index: an indexed sequential file (ISAM: index sequential access mode) with status information about each document.
  - Each entry includes the current document status, a pointer into the repository, a document checksum and various statistics.
- Additionally, there is a file used to convert URLs into docIDs.
  - It is a list of URL checksums with their corresponding docIDs, sorted by checksum.
  - URLs can be converted into docIDs in batch by doing a merge with this file.
  - To look up a single URL, its checksum is computed and a binary search is performed.
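The single-URL lookup can be sketched as follows. The slides do not say which checksum function is used, so `zlib.crc32` is only a stand-in, and the helper names `url_checksum`, `build_table` and `lookup_docid` as well as the sample URLs are assumptions for illustration.

```python
import bisect
import zlib


def url_checksum(url: str) -> int:
    # Stand-in checksum (assumption): the slides do not name the real function.
    return zlib.crc32(url.encode("utf-8"))


def build_table(url_to_docid):
    """Return a list of (checksum, docID) pairs sorted by checksum."""
    return sorted((url_checksum(url), doc_id)
                  for url, doc_id in url_to_docid.items())


def lookup_docid(table, url):
    """Binary-search the sorted table for the URL's checksum."""
    target = url_checksum(url)
    i = bisect.bisect_left(table, (target,))
    if i < len(table) and table[i][0] == target:
        return table[i][1]
    return None  # URL has no docID yet


table = build_table({
    "http://example.com/": 1,
    "http://example.com/about": 2,
    "http://example.org/": 3,
})
print(lookup_docid(table, "http://example.com/about"))
print(lookup_docid(table, "http://example.net/missing"))
```

Because the table is sorted by checksum, a batch of URLs can also be resolved in one pass by sorting their checksums and merging the two lists, which is the batch mode the slide mentions.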

Data Structures

- Lexicon: the list of words, kept in main memory on a machine with 256 MB; it holds 14 million words.
- The lexicon can fit in memory for a reasonable price.
- It consists of a list of words and a hash table of pointers.

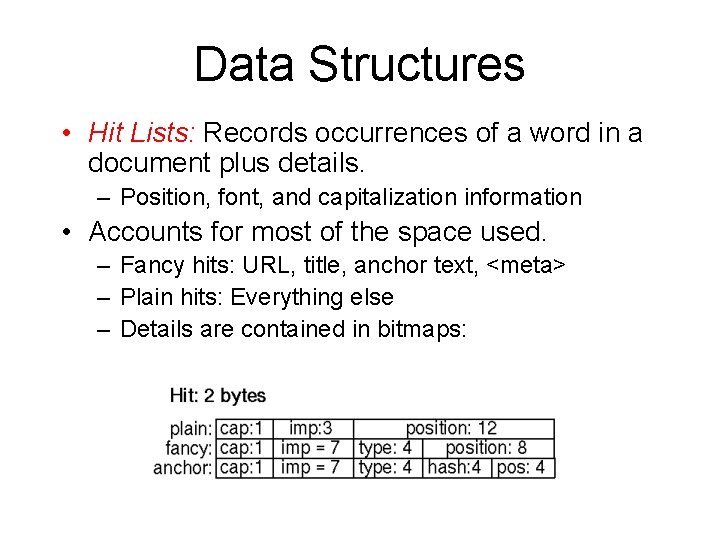

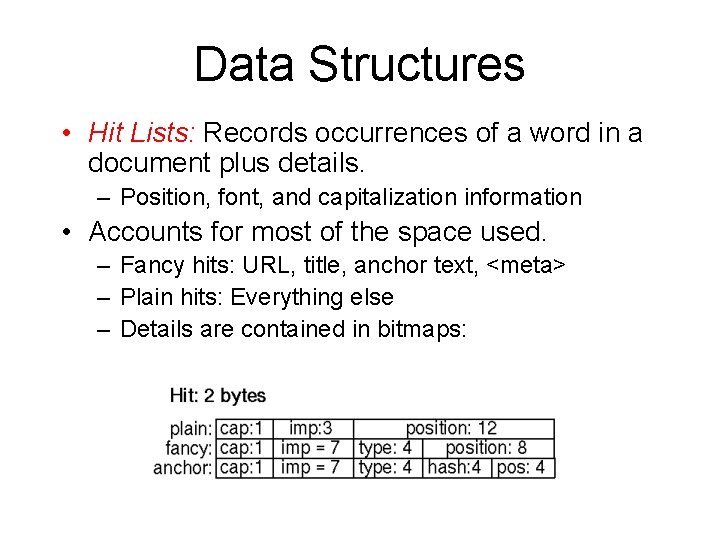

Data Structures

- Hit Lists: record the occurrences of a word in a document, plus details.
  - Position, font, and capitalization information
  - Account for most of the space used
  - Fancy hits: URL, title, anchor text, <meta>
  - Plain hits: everything else
  - Details are packed into bitmaps
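To make the idea of a bit-packed hit concrete, here is a small sketch of a 16-bit plain hit. The layout used (1 capitalization bit, 3 font-size bits, 12 position bits) follows the description in the Brin and Page paper rather than anything shown on this slide, and the function names and the clamping of large positions to 4095 are assumptions of this example.

```python
def pack_plain_hit(capitalized: bool, font_size: int, position: int) -> int:
    """Pack one plain hit into 16 bits: [cap:1][font:3][position:12]."""
    if not 0 <= font_size <= 6:
        # Value 7 (binary 111) is assumed to be reserved to flag a fancy hit.
        raise ValueError("font_size must be in 0..6 for a plain hit")
    if position < 0:
        raise ValueError("position must be non-negative")
    position = min(position, 4095)  # positions beyond 12 bits are clamped
    return (int(capitalized) << 15) | (font_size << 12) | position


def unpack_plain_hit(hit: int):
    """Return (capitalized, font_size, position) from a packed hit."""
    return bool(hit >> 15), (hit >> 12) & 0b111, hit & 0xFFF


hit = pack_plain_hit(capitalized=True, font_size=3, position=17)
print(hit, unpack_plain_hit(hit))
```

Two bytes per occurrence is what keeps the hit lists, the largest structure in the index, manageable.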

Data Structures

- Forward Index: stores, for each docID, the wordIDs of the words in that document together with their hits. It is kept in partially sorted indexes called “barrels”.
- Inverted Index: the same barrels after sorting by wordID. Each wordID points to the docIDs containing it, along with their hits.
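The relationship between the two indexes can be illustrated with a toy barrel. In this sketch a forward barrel is assumed to be a list of (docID, wordID, hit positions) records; the data and the `invert` helper are made up for the example, and an in-memory sort stands in for the real on-disk sorter.

```python
from collections import defaultdict

# Hypothetical forward barrel: records grouped by docID.
forward_barrel = [
    (1, 7, [0]),
    (1, 3, [2, 9]),
    (2, 3, [4]),
    (2, 5, [0]),
]


def invert(barrel):
    """Re-sort a forward barrel by wordID to obtain inverted postings."""
    inverted = defaultdict(list)
    for doc_id, word_id, hits in sorted(barrel, key=lambda rec: (rec[1], rec[0])):
        inverted[word_id].append((doc_id, hits))
    return dict(inverted)


inverted_barrel = invert(forward_barrel)
for word_id, postings in inverted_barrel.items():
    print(word_id, postings)
```

The content of each record is unchanged; only the sort key differs, which is why the inverted index can be produced from the forward barrels by sorting alone.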

A Simple Inverted Index

- Example: pages 1 to 4 contain the texts "i love you", "god is love,", "love is blind," and "blind justice." Each entry below is (page number, character offset of the word).
  - blind: (3, 8); (4, 0)
  - god: (2, 0)
  - i: (1, 0)
  - is: (2, 4); (3, 5)
  - justice: (4, 6)
  - love: (1, 2); (2, 7); (3, 0)
  - you: (1, 7)
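The example index on this slide can be reproduced with a short script. This is only a sketch for the four toy pages: the `build_index` name and the simple `[a-z]+` tokenizer are assumptions, and a real indexer would store wordIDs and packed hits rather than strings and tuples.

```python
import re
from collections import defaultdict

pages = {
    1: "i love you",
    2: "god is love,",
    3: "love is blind,",
    4: "blind justice.",
}


def build_index(pages):
    """Map each word to a list of (page number, character offset) pairs."""
    index = defaultdict(list)
    for page_id in sorted(pages):
        for match in re.finditer(r"[a-z]+", pages[page_id].lower()):
            index[match.group()].append((page_id, match.start()))
    return dict(sorted(index.items()))  # words in alphabetical order


index = build_index(pages)
for word, postings in index.items():
    print(word, postings)
```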

Crawling the Web

- Distributed crawling system: 3 crawlers, each with about 300 concurrent connections, peaking at about 100 pages per second or roughly 600 KB/sec.
  - A single URL server serves lists of URLs to a number of crawlers (implemented in Python).
  - Each crawler keeps roughly 300 connections open at once.
  - Each connection is in one of four states: looking up DNS, connecting to host, sending request, and receiving response.
  - At peak the system can crawl 100 web pages per second.
- Stress on DNS lookup is reduced by having a DNS cache in each crawler.
- Social consequences due to lack of knowledge: many site owners do not know what a crawler is (“This page is copyrighted and should not be indexed”).
- A crawler meets every kind of unexpected behavior on the net, so intensive testing is required.
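A per-crawler DNS cache can be as simple as a dictionary in front of the resolver. The sketch below is an assumption about how such a cache might look, not a description of Google's crawler; the `CachingResolver` class is hypothetical, and a fake resolver is injected so the example runs without network access (in a real crawler one might pass `socket.gethostbyname` instead).

```python
from typing import Callable, Dict


class CachingResolver:
    """Remembers host -> address answers so each host is resolved only once."""

    def __init__(self, resolve: Callable[[str], str]) -> None:
        self._resolve = resolve
        self._cache: Dict[str, str] = {}
        self.lookups = 0  # number of real (uncached) lookups performed

    def lookup(self, host: str) -> str:
        host = host.lower()  # host names are case-insensitive
        if host not in self._cache:
            self.lookups += 1
            self._cache[host] = self._resolve(host)
        return self._cache[host]


# Fake resolver with made-up addresses, used only for the demonstration.
fake_dns = {"example.com": "192.0.2.1", "example.org": "192.0.2.2"}
resolver = CachingResolver(lambda host: fake_dns[host])

for host in ["example.com", "EXAMPLE.com", "example.org", "example.com"]:
    print(host, resolver.lookup(host))
print("real lookups:", resolver.lookups)
```

With hundreds of connections open at once and many URLs per host, most lookups hit the cache, which is what takes the pressure off DNS.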

Indexing the Web

- Parser: must be robust enough to handle a huge number of special situations.
  - Typos in HTML tags, kilobytes of zeros, non-ASCII characters.
- Indexing into barrels: each word is converted to a wordID, new words are added to the lexicon, occurrences are turned into hit lists, and the hit lists are written to the forward barrels. There is high contention for the lexicon.
- Sorting: the inverted index is generated from the forward barrels by sorting each barrel individually, which avoids temporary storage, using TPMMS (two-phase multiway merge sort).

Searching

- The ranking system is better because more data (font, position and capitalization) is maintained about documents. This and PageRank help in finding better results.
- Feedback: selected users can grade search results, and the grades are used to evaluate changes to the ranking.

Results and Performance

- “The most important measure of a search engine is the quality of its search results.”
- Results are relevant even for non-commercial or rarely referenced sites.
- Cost-effective: a significant part of 1997’s Web was held in a 53 GB repository, and all other data could fit in an additional 55 GB.
- Google uses proximity search heavily.
  - For example, for the query “Bill Clinton”, heavy importance is given to the proximity of the word occurrences.
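One simple way to measure proximity for a two-word query is the smallest distance between any occurrence of the first word and any occurrence of the second. The sketch below illustrates only that measurement; the `min_distance` helper and the sample positions are assumptions, and the slides do not specify how Google turns proximity into a score.

```python
def min_distance(positions_a, positions_b):
    """Smallest gap between two sorted lists of word positions, or None."""
    i = j = 0
    best = None
    while i < len(positions_a) and j < len(positions_b):
        gap = abs(positions_a[i] - positions_b[j])
        if best is None or gap < best:
            best = gap
        # Advance whichever list currently has the smaller position.
        if positions_a[i] < positions_b[j]:
            i += 1
        else:
            j += 1
    return best


# Hypothetical positions of "bill" and "clinton" in one document.
bill = [3, 40, 90]
clinton = [41, 200]
print(min_distance(bill, clinton))
```

A document where the two words appear next to each other (distance 1) would then be weighted above one where they are hundreds of words apart.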

Performance

- Google is designed to scale cost-effectively to the size of the Web as it grows.
- Crawling and indexing are important.
  - Google’s major operations: crawling, indexing, sorting
- It took 9 days to download the 26 million pages.
- The indexer runs at roughly 54 pages per second.
- Most queries are answered in between 1 and 10 seconds.
  - The time is dominated by disk I/O.
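As a quick sanity check on the crawl figure, the average download rate implied by 26 million pages in 9 days works out as follows (a back-of-the-envelope calculation, assuming the crawl ran continuously):

$$\frac{26 \times 10^{6}\ \text{pages}}{9 \times 86{,}400\ \text{s}} = \frac{26 \times 10^{6}}{777{,}600} \approx 33\ \text{pages per second}$$

That average is about a third of the peak rate of 100 pages per second quoted on the crawling slide.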

Google’s Philosophy

- “…it is crucial to have a competitive search engine that is transparent and in the academic realm.”
- Google is probably the only leading IT company that is loved by everyone, and it remains attached to its principles despite its amazing profit potential.

Conclusions

- Google’s goal is to provide high-quality search.
- Google employs a number of techniques to improve search quality:
  - PageRank
  - Anchor text
  - Proximity information
- Google is a complete architecture for gathering web pages, indexing them and performing search queries over them.

Conclusions: Future work

- Immediate goal: improve search efficiency.
  - Query caching
- Research on updates
- Supporting user context and result summarization
- Link structure, link text, text surrounding links.
- A Web search engine is a rich environment for research ideas.

Conclusions: High quality search

- The users’ problem: the quality of search results
- Google makes heavy use of link information and anchor text.
- It also uses proximity and font information.
  - PageRank evaluates the quality of pages.
  - Use of link text helps to return relevant pages.
  - Proximity search helps to increase relevance further.

Conclusions: Scalable architecture

- Google: quality and scalability
- Efficient in both space and time.
- There are bottlenecks:
  - CPU, memory access, memory capacity, disk seeks, disk throughput, disk capacity and network I/O.
- Google’s major data structures make efficient use of the available space.
- Crawling, indexing and sorting operations are efficient enough to build an index of 100 million pages in less than a month.

Conclusions: Research tool

- Google is also a research tool.
- The data set can be used to build next-generation search engines.

Graph Structure in the Web

- Outline
- Reports experiments on local and global properties of the Web graph, using two AltaVista crawls with over 200 million pages and 1.5 billion links.
- The experiments indicate that the macroscopic structure of the Web is considerably more intricate than earlier, smaller experiments suggested.

Architecture of the web

- Earlier perception (until 1999)
  - The web is like a “ball of highly connected spaghetti”
- Most pairs of pages on the web are separated by a handful of links, almost always under 20
- The research done by Barabasi involved about 40 million pages
- Some people now view this as the “small world” phenomenon

Architecture of the web

- New research: Graph Structure in the Web (May 2000)
  - Conducted by researchers at AltaVista, IBM and Compaq
  - Presented at the May 2000 World Wide Web Conference in Amsterdam
  - The AltaVista crawler was used to gather information about pages and links

Architecture of the web

- New research: Graph Structure in the Web (continued)
  - A database of over 200 million pages and 1.5 billion links (5 times bigger than Barabasi’s research)
  - Compaq Connectivity Server 2 (CS2) with 12 GB of RAM was used to store and process the database

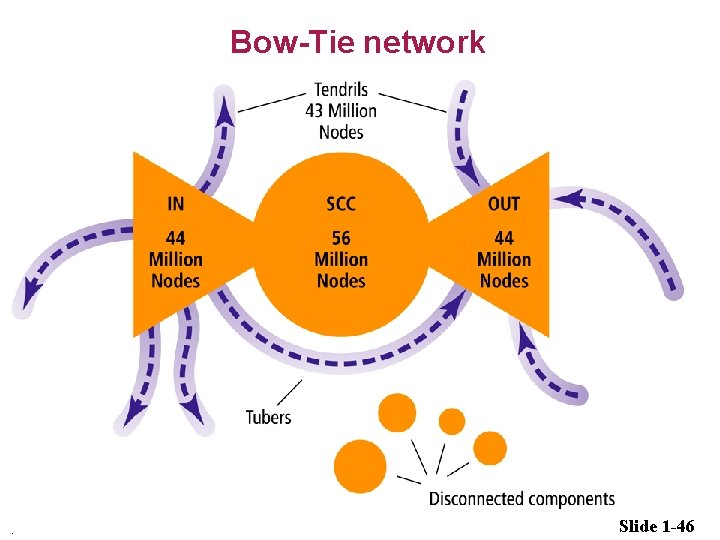

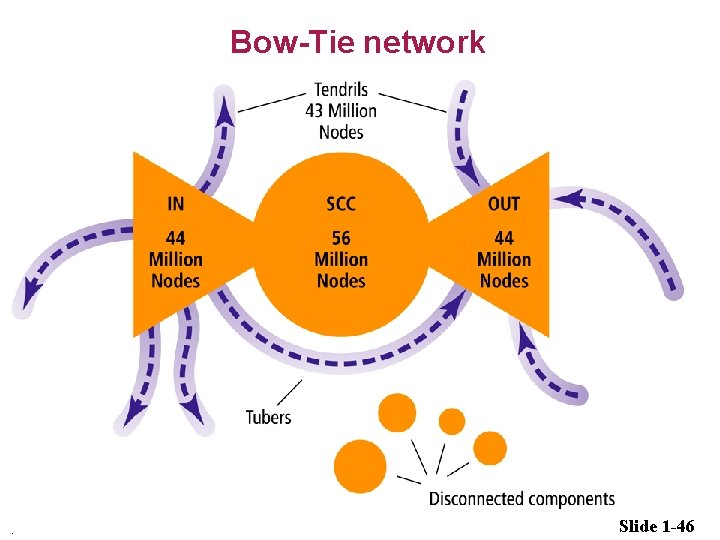

Bow-Tie network

Architecture of the web

- Five classes of nodes
  - Strongly Connected Component (SCC)
  - IN pages
  - OUT pages
  - Tendrils
  - Disconnected Components

Architecture of the web

- Strongly Connected Component (SCC)
  - Web pages in the core of the Web, which are strongly connected to each other
  - Any two pages in the SCC have a navigational path between them (on average 19 clicks are needed)
  - The size of the SCC is about 56 million pages
  - Examples: MSN, Yahoo, CNN, etc.

Architecture of the web

- IN Pages
  - These are pages that have links to pages in the SCC, but there is no navigational path from the SCC to them
  - Examples: possibly new sites that people have not yet discovered and linked to
  - The size of IN is about 44 million pages

Architecture of the web

- OUT Pages
  - These are pages that are accessible from the SCC, but do not link back to it
  - Examples: corporate sites that contain only internal links. These pages are intentionally built that way so that you spend more time on the corporate site
  - The size of OUT is also about 44 million pages

Architecture of the web

- Tendrils
  - These are pages that cannot reach the SCC and cannot be reached from the SCC
  - But they are either reachable from IN pages or can reach OUT pages
  - The size of Tendrils is about 43 million pages
- Disconnected Components
  - Pages which do not have any connection to the other types of pages
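The bow-tie classes can be computed with two breadth-first searches, one following links forward and one following them backward from a page known to be in the core. The sketch below assumes such a seed page is given; the `bow_tie` function, the `OTHER` bucket (tendrils and disconnected pages lumped together) and the toy graph are all illustrative assumptions.

```python
from collections import deque


def reachable(graph, start):
    """Return every node reachable from start by following edges in graph."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def bow_tie(links, core_page):
    """Classify pages relative to the strongly connected core of core_page."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    reverse = {}
    for source, targets in links.items():
        for target in targets:
            reverse.setdefault(target, []).append(source)
    forward = reachable(links, core_page)     # pages the core can reach
    backward = reachable(reverse, core_page)  # pages that can reach the core
    scc = forward & backward
    return {
        "SCC": scc,
        "IN": backward - scc,
        "OUT": forward - scc,
        "OTHER": nodes - forward - backward,  # tendrils and disconnected pages
    }


# Hypothetical miniature web: a, b, c form the core.
toy_links = {
    "in1": ["a", "t1"],
    "a": ["b"],
    "b": ["c"],
    "c": ["a", "out1"],
    "out1": ["out2"],
    "island": ["island2"],
}
parts = bow_tie(toy_links, "a")
for name, members in parts.items():
    print(name, sorted(members))
```

Here `t1` is a tendril hanging off an IN page, while `island` and `island2` form a disconnected component; separating the two would take one more pass over the undirected graph.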

Architecture of the web

- How this knowledge is being used
  - AltaVista and Google use the “link popularity” of a page to determine how useful the page is in the index
  - The location of the page in the graph structure is one of the factors in determining link popularity
  - To improve the link popularity of your page, create links to topic-relevant pages in the SCC and seek links from pages in the SCC to your page

Architecture of the web

- References
  - http://www9.org/w9cdrom/160.html
  - http://www.searchengineposition.com/info/articles/bowtie.asp
  - http://www9.org/w9cdrom/159.html
  - http://www9.org/w9cdrom/293.html

The anatomy of a large scale hypertextual web search engine

The anatomy of a large scale hypertextual web search engine The anatomy of a large scale hypertextual web search engine

The anatomy of a large scale hypertextual web search engine The anatomy of a large scale hypertextual web search engine

The anatomy of a large scale hypertextual web search engine The anatomy of a large-scale hypertextual web search engine

The anatomy of a large-scale hypertextual web search engine Thiếu nhi thế giới liên hoan

Thiếu nhi thế giới liên hoan Cong thức tính động năng

Cong thức tính động năng Fecboak

Fecboak điện thế nghỉ

điện thế nghỉ Tỉ lệ cơ thể trẻ em

Tỉ lệ cơ thể trẻ em Một số thể thơ truyền thống

Một số thể thơ truyền thống Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Hệ hô hấp

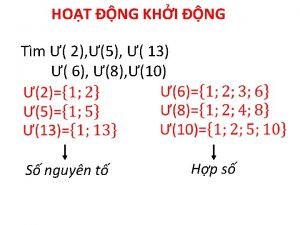

Hệ hô hấp Số nguyên tố là

Số nguyên tố là đặc điểm cơ thể của người tối cổ

đặc điểm cơ thể của người tối cổ Các môn thể thao bắt đầu bằng tiếng chạy

Các môn thể thao bắt đầu bằng tiếng chạy Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Hát kết hợp bộ gõ cơ thể

Hát kết hợp bộ gõ cơ thể ưu thế lai là gì

ưu thế lai là gì Tư thế ngồi viết

Tư thế ngồi viết Voi kéo gỗ như thế nào

Voi kéo gỗ như thế nào Cái miệng xinh xinh thế chỉ nói điều hay thôi

Cái miệng xinh xinh thế chỉ nói điều hay thôi Cách giải mật thư tọa độ

Cách giải mật thư tọa độ Từ ngữ thể hiện lòng nhân hậu

Từ ngữ thể hiện lòng nhân hậu Tư thế ngồi viết

Tư thế ngồi viết Thế nào là giọng cùng tên

Thế nào là giọng cùng tên Thẻ vin

Thẻ vin Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất Thơ thất ngôn tứ tuyệt đường luật

Thơ thất ngôn tứ tuyệt đường luật Lp html

Lp html Hổ đẻ mỗi lứa mấy con

Hổ đẻ mỗi lứa mấy con Diễn thế sinh thái là

Diễn thế sinh thái là Glasgow thang điểm

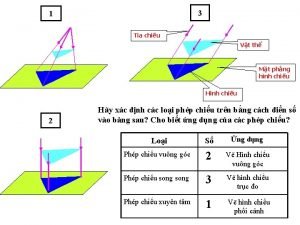

Glasgow thang điểm Vẽ hình chiếu vuông góc của vật thể sau

Vẽ hình chiếu vuông góc của vật thể sau Làm thế nào để 102-1=99

Làm thế nào để 102-1=99 Lời thề hippocrates

Lời thề hippocrates Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể đại từ thay thế

đại từ thay thế Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Bổ thể

Bổ thể Quá trình desamine hóa có thể tạo ra

Quá trình desamine hóa có thể tạo ra Khi nào hổ con có thể sống độc lập

Khi nào hổ con có thể sống độc lập Thế nào là mạng điện lắp đặt kiểu nổi

Thế nào là mạng điện lắp đặt kiểu nổi Các loại đột biến cấu trúc nhiễm sắc thể

Các loại đột biến cấu trúc nhiễm sắc thể Hát lên người ơi

Hát lên người ơi Biện pháp chống mỏi cơ

Biện pháp chống mỏi cơ Phản ứng thế ankan

Phản ứng thế ankan Podbřišek

Podbřišek Superior mouth fish

Superior mouth fish What is the function of the golgi apparatus

What is the function of the golgi apparatus Central groove of mandibular molar

Central groove of mandibular molar Composition of urine slideshare

Composition of urine slideshare Neck anatomical term

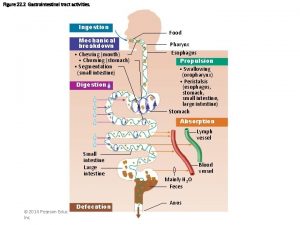

Neck anatomical term Diagram of peristalsis

Diagram of peristalsis Sustentaculum tali

Sustentaculum tali