AI: Neural Networks, lecture 7: Neural Networks

Tony Allen, School of Computing & Informatics, Nottingham Trent University

Unsupervised learning

• Self-organising neural nets group similar input vectors together without the use of target vectors. Only input vectors are given to the network during training. The learning algorithm then modifies the network weights so that similar input vectors produce the same output vector.

• Unsupervised training is good for finding hidden structure or features within a set of patterns. For example, the network is allowed to cluster without being biased towards looking for a pre-defined output or classification. What the network learns is entirely data driven. After learning, it is often necessary to examine what the clusters or output units have come to mean.

10/31/2021

Hebbian learning

One common way to calculate changes in connection strengths in an unsupervised neural network is the so-called Hebbian learning rule: “when neuron A repeatedly participates in firing neuron B, the strength of the action of A onto B increases”.

$$\Delta W_{ij}(t) = \eta \, X_i \, Y_j$$

• $X_i$ is the output of the input layer neuron

• $Y_j$ is the output of the output layer neuron

• $W_{ij}$ is the strength of the connection between them

• $\eta$ is the learning rate

A more general form of a Hebbian learning rule would be:

$$\Delta W_{ij}(t) = f(Y_j, X_i, \eta, t, \theta)$$

in which time and learning thresholds can be taken into account.
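As a rough illustration of the basic rule, the sketch below applies one Hebbian update to a whole weight matrix at once. The function name `hebbian_update`, the NumPy array layout (rows indexed by input neuron i, columns by output neuron j) and the example values are assumptions made for illustration; they do not come from the slides.

```python
import numpy as np

def hebbian_update(W, x, y, eta=0.1):
    """Return W plus the Hebbian increment eta * x[i] * y[j] for every connection.

    Hypothetical helper: W has shape (n_inputs, n_outputs).
    """
    return W + eta * np.outer(x, y)

x = np.array([1.0, 0.0, 1.0])   # input layer outputs X_i (made-up values)
y = np.array([0.0, 1.0])        # output layer outputs Y_j (made-up values)
W = np.zeros((3, 2))            # connection strengths W_ij
W = hebbian_update(W, x, y, eta=0.5)
print(W)
```

Only connections whose input and output neurons are both active are strengthened; all others are left unchanged.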

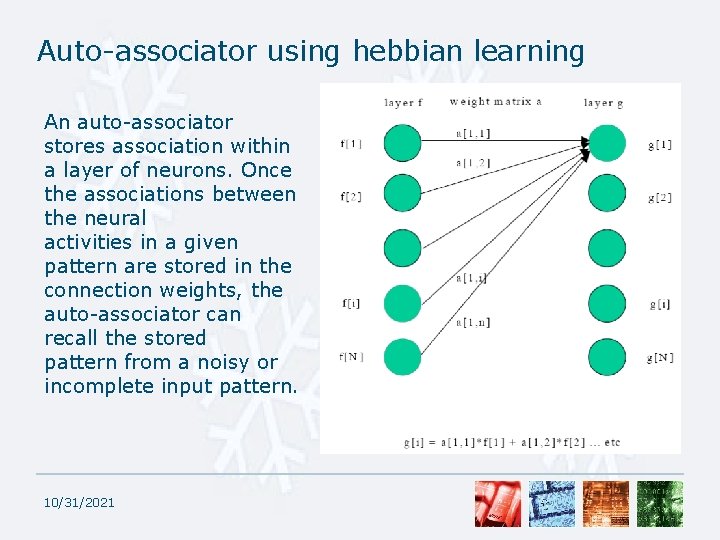

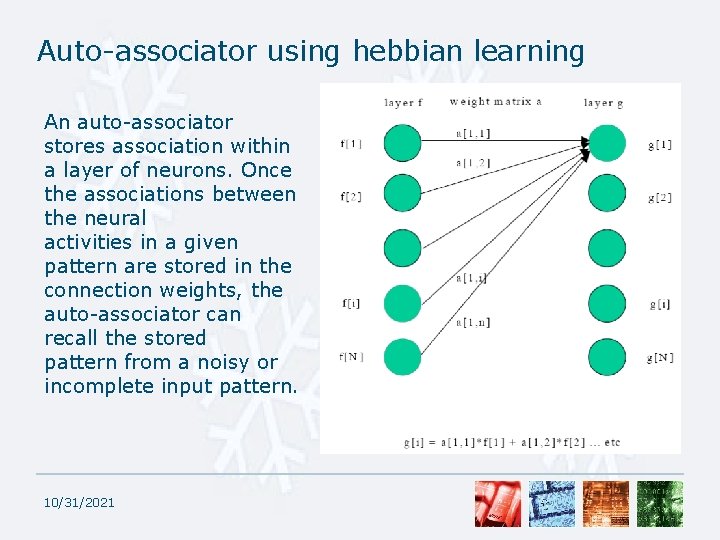

Auto-associator using Hebbian learning

An auto-associator stores associations within a layer of neurons. Once the associations between the neural activities in a given pattern are stored in the connection weights, the auto-associator can recall the stored pattern from a noisy or incomplete input pattern.
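The recall behaviour described above can be sketched with a very small example. Several details here are assumptions that the slide does not specify: patterns are bipolar (+1/-1), the learning rate is 1, self-connections are zeroed, and recall is a single synchronous step with a sign threshold. The helper names `store` and `recall` are hypothetical.

```python
import numpy as np

def store(patterns):
    """Build the weight matrix from Hebbian outer products of each pattern with itself."""
    n = patterns.shape[1]
    W = np.zeros((n, n))
    for p in patterns:
        W += np.outer(p, p)      # Hebbian increment with eta = 1 (assumed)
    np.fill_diagonal(W, 0.0)     # assumption: no self-connections
    return W

def recall(W, x):
    """One synchronous recall step with a sign threshold (ties map to +1)."""
    return np.where(W @ x >= 0, 1, -1)

stored = np.array([[1, -1, 1, -1, 1, -1, 1, -1]])   # one made-up pattern
W = store(stored)

noisy = stored[0].copy()
noisy[0] = -noisy[0]             # corrupt one element of the input
recovered = recall(W, noisy)
print(recovered)
```

With a single stored pattern and one flipped element, the weighted sum at every neuron still has the sign of the stored value, so the original pattern is recovered in one step.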

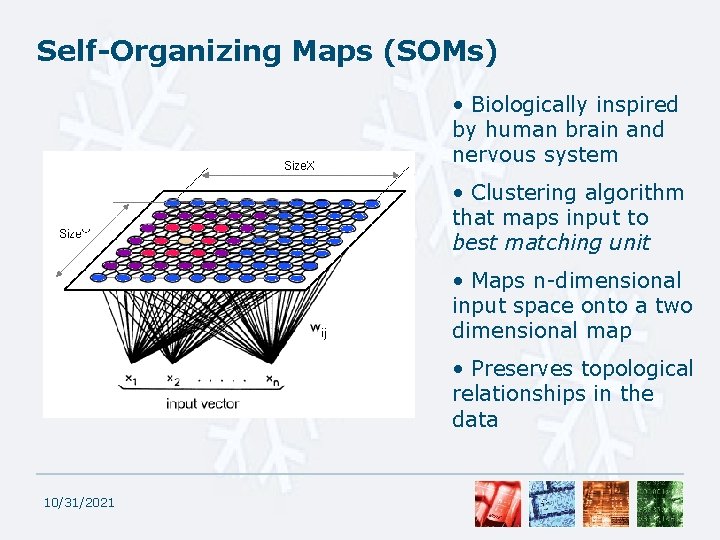

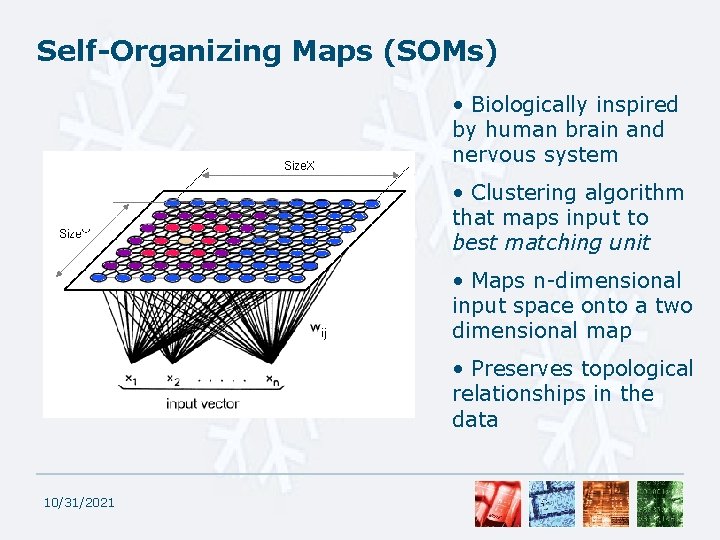

Self-Organizing Maps (SOMs)

• Biologically inspired by the human brain and nervous system

• Clustering algorithm that maps each input to its best matching unit

• Maps an n-dimensional input space onto a two-dimensional map

• Preserves topological relationships in the data

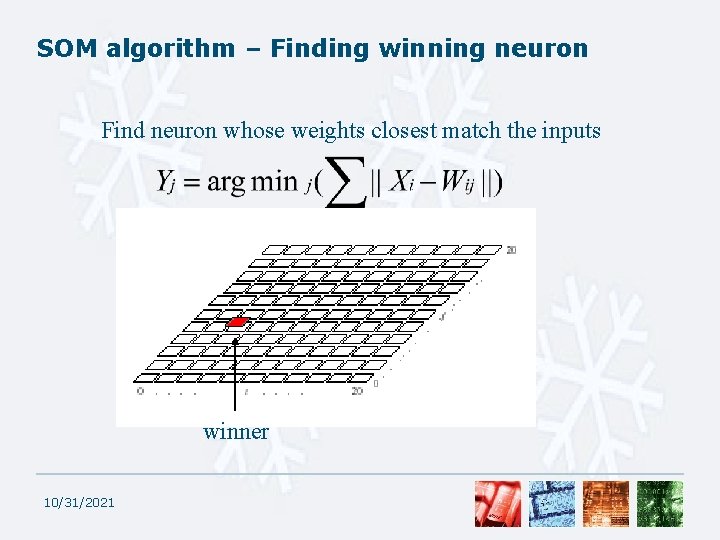

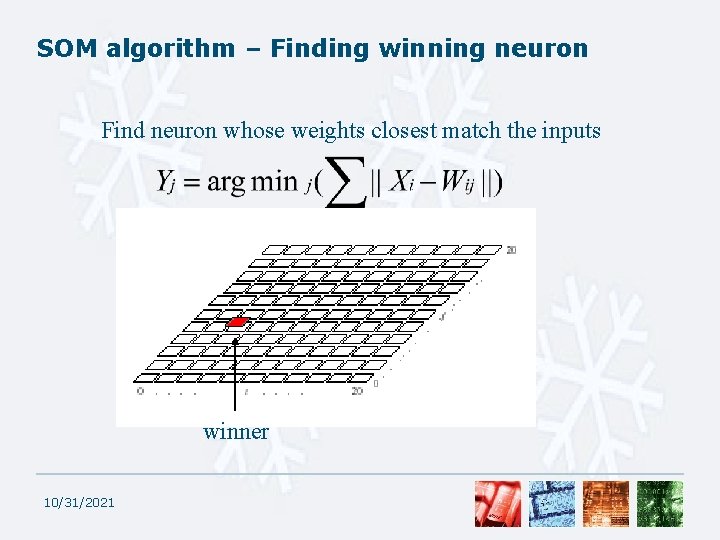

SOM algorithm – Finding winning neuron

Find the neuron whose weights most closely match the inputs; this neuron is the winner.
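A minimal sketch of the winner search on a two-dimensional map follows. It assumes that "closest match" means smallest Euclidean distance and that the map weights are held in a NumPy array of shape (rows, cols, n); the function name `find_winner` and the example values are hypothetical.

```python
import numpy as np

def find_winner(weights, x):
    """Return the (row, col) of the map unit whose weight vector is closest to x."""
    # weights: (rows, cols, n) array; x: (n,) input vector
    distances = np.linalg.norm(weights - x, axis=-1)   # Euclidean distance per unit
    row, col = np.unravel_index(np.argmin(distances), distances.shape)
    return int(row), int(col)

weights = np.zeros((2, 2, 3))            # 2x2 map, 3-dimensional inputs
weights[1, 0] = [0.9, 0.1, 0.0]          # one unit placed near the test input
winner = find_winner(weights, np.array([1.0, 0.0, 0.0]))
print(winner)
```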

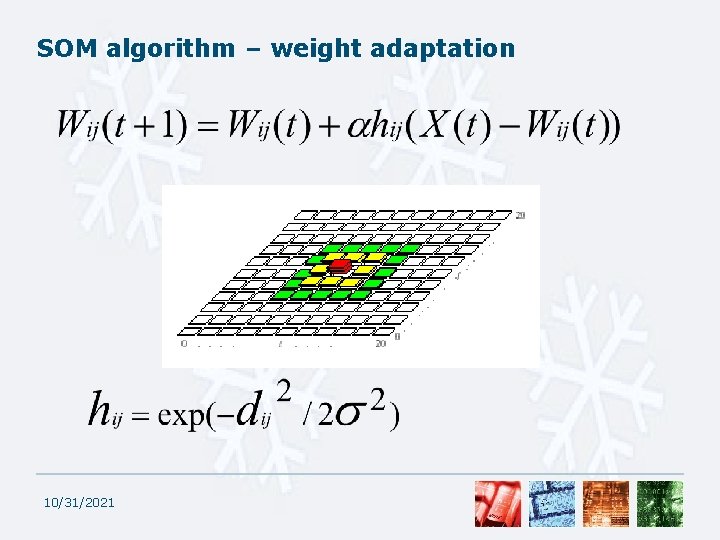

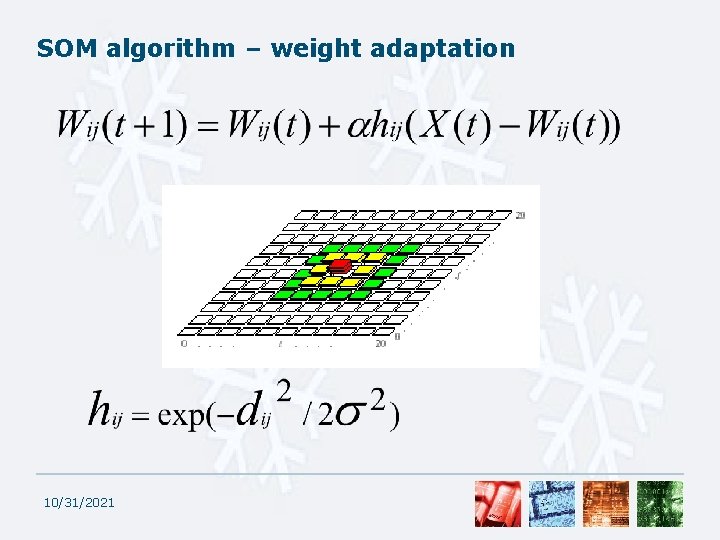

SOM algorithm – weight adaptation

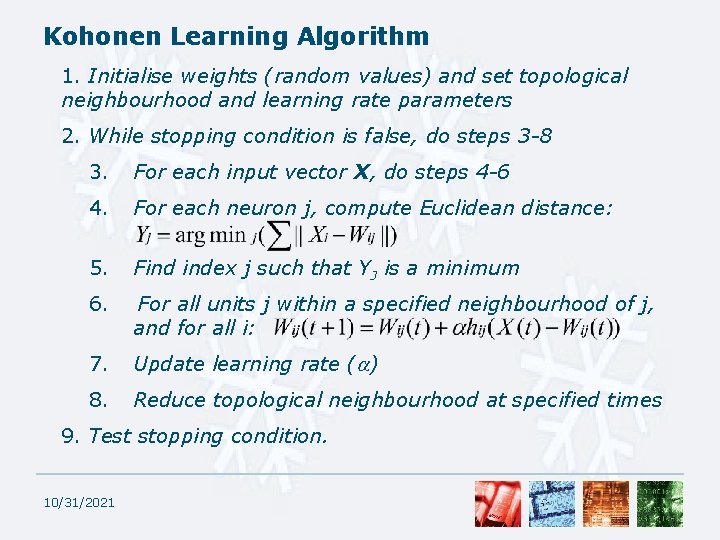

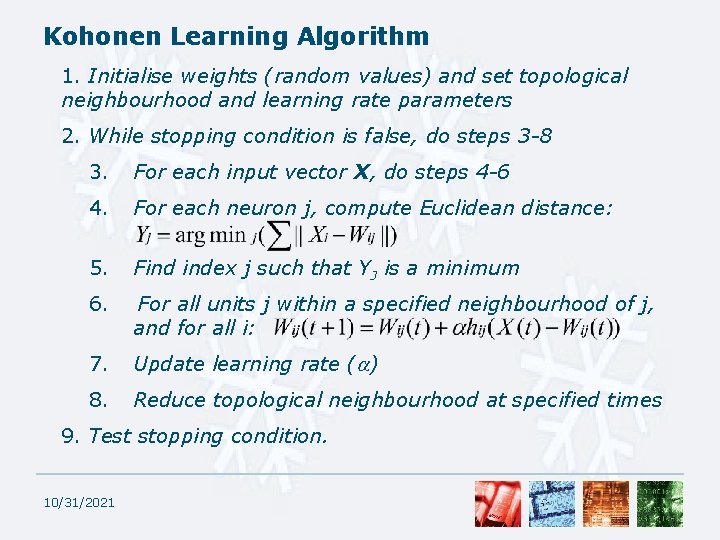

Kohonen Learning Algorithm

1. Initialise weights (random values) and set topological neighbourhood and learning rate parameters.
2. While stopping condition is false, do steps 3-8.
3. For each input vector X, do steps 4-6.
4. For each neuron j, compute the Euclidean distance $Y_j$ between X and the neuron's weight vector.
5. Find the index J such that $Y_J$ is a minimum.
6. For all units j within a specified neighbourhood of J, and for all i, update the weights $W_{ij}$.
7. Update the learning rate.
8. Reduce the topological neighbourhood at specified times.
9. Test stopping condition.
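The steps above can be sketched in a few lines of Python. The formulas for steps 4, 6 and 7 are not recoverable from the slide text, so this sketch fills them with common choices that should be read as assumptions: the update `w += alpha * (x - w)` (the usual Kohonen rule), a geometric learning rate decay of 0.9 per epoch, a one-dimensional line of units, a single neighbourhood reduction halfway through, and a fixed number of epochs as the stopping condition. The name `train_som` and all parameter values are hypothetical.

```python
import numpy as np

def train_som(data, n_units=4, epochs=20, alpha=0.5, radius=1, seed=0):
    """Train a 1-D Kohonen map; returns an (n_units, n_features) weight array."""
    rng = np.random.default_rng(seed)
    weights = rng.random((n_units, data.shape[1]))           # step 1: random weights
    for epoch in range(epochs):                              # step 2: fixed epoch count (assumed)
        for x in data:                                       # step 3
            dist = np.linalg.norm(weights - x, axis=1)       # step 4: Euclidean distances
            winner = int(np.argmin(dist))                    # step 5: winning unit
            lo = max(0, winner - radius)
            hi = min(n_units, winner + radius + 1)
            weights[lo:hi] += alpha * (x - weights[lo:hi])   # step 6: assumed update rule
        alpha *= 0.9                                         # step 7: assumed decay schedule
        if epoch == epochs // 2:                             # step 8: shrink neighbourhood once
            radius = max(0, radius - 1)
    return weights                                           # step 9 is the loop bound above

# Four made-up 2-D input vectors forming two loose groups
data = np.array([[0.0, 0.0], [0.1, 0.0], [1.0, 1.0], [0.9, 1.0]])
weights = train_som(data)
winners = [int(np.argmin(np.linalg.norm(weights - x, axis=1))) for x in data]
print(winners)
```

After training, the winning unit for each input can be inspected to see which inputs the map has grouped together.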

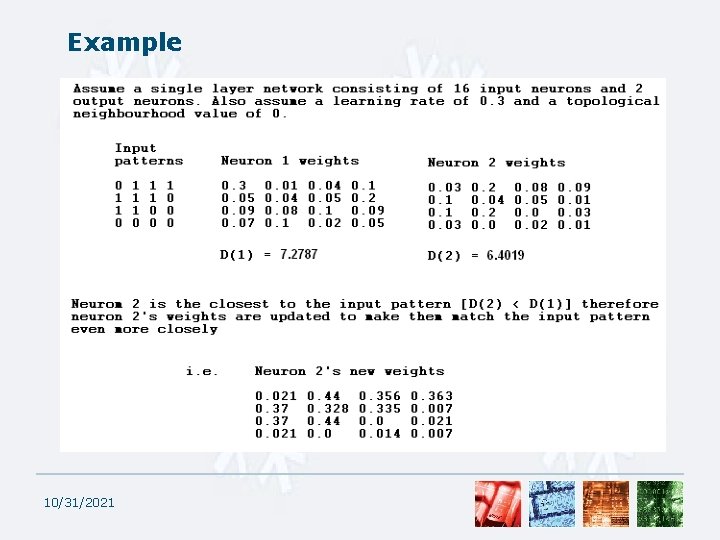

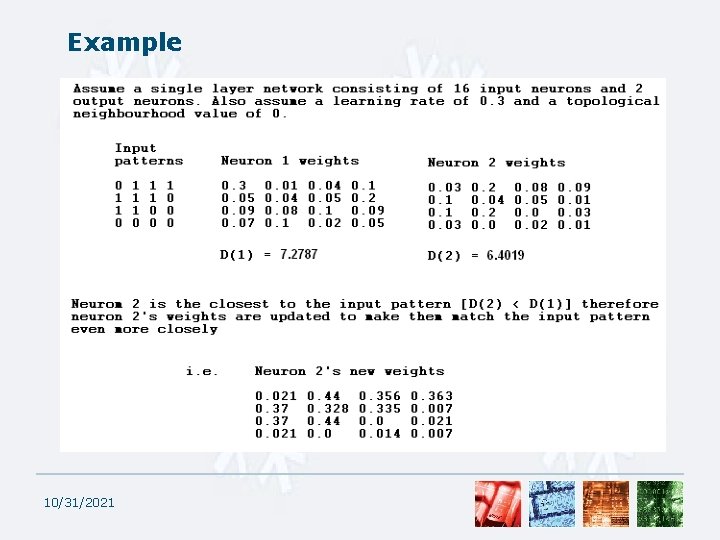

Example

SOM applications

• SOMs have been used in many applications, most often in vision systems for vector quantisation.

• Vision-based autonomous robot navigation systems historically use predetermined models of the environment.

• Kohonen SOMs have recently been investigated to allow such systems to develop their own internal representation of the external environment.

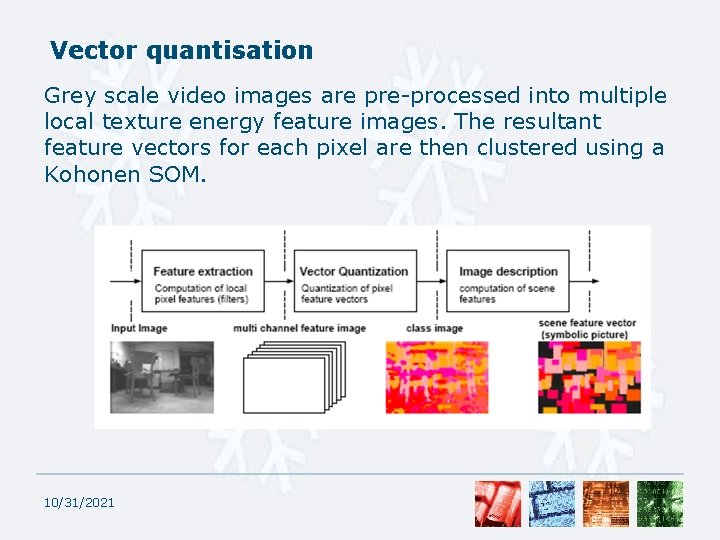

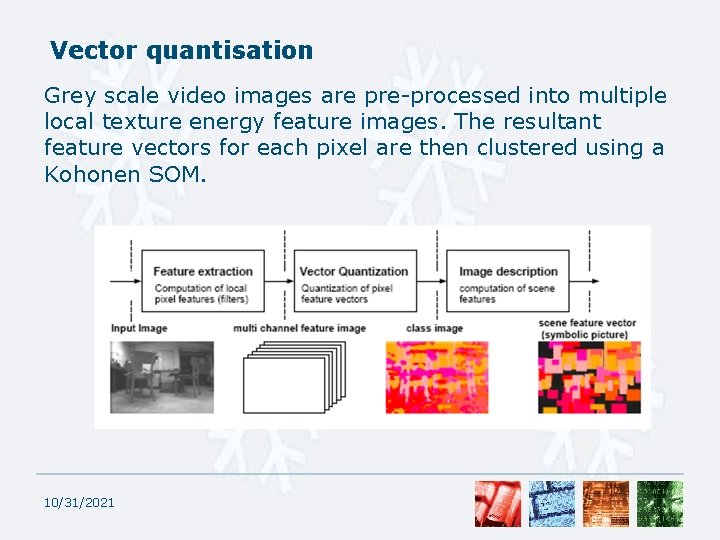

Vector quantisation

Grey-scale video images are pre-processed into multiple local texture energy feature images. The resultant feature vectors for each pixel are then clustered using a Kohonen SOM.

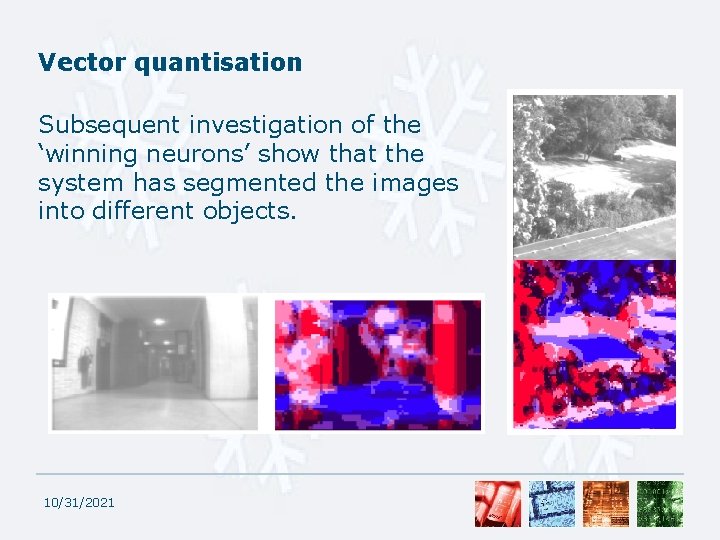

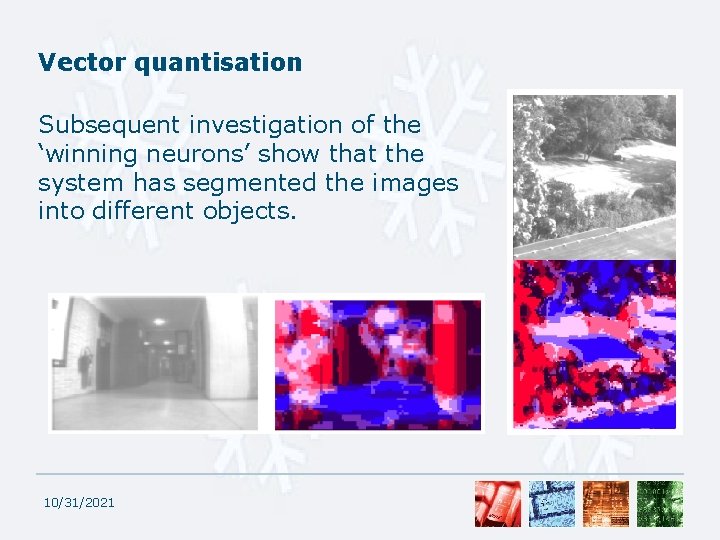

Vector quantisation

Subsequent investigation of the ‘winning neurons’ shows that the system has segmented the images into different objects.

References

Hebb, D. O. (1949). “The Organization of Behavior.” New York: Wiley.

Kohonen, T. (1989). “Self-Organizing and Associative Memory.” 3rd ed. Berlin: Springer-Verlag.

Kohonen, T. (1990). “The Self-Organizing Map.” Proceedings of the IEEE, 78(9), pp. 1464-1480.

Von Wichert, G. “Selforganizing Visual Perception for Mobile Robot Navigation”.
