Adding Value to the Value-Added Evaluation Approach: Linking to the Context of Educational Programs

- Slides: 22

Adding Value to the Value-Added Evaluation Approach: Linking to the Context of Educational Programs
Chi-Keung (Alex) Chan, Mary Pickart, David Heistad, Jon Peterson, & Colleen Sanders
Minneapolis Public Schools
Presentation at the 2009 American Evaluation Association Annual Conference, Orlando, Florida, November 2009

What Is “Value-Added”?
Value-added measures how much a program (or a teacher or school) adds to a student's performance beyond his or her expected growth, after controlling for other factors (e.g., demographic characteristics, prior achievement, and school characteristics).
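As a minimal sketch of the idea (not the MPS model, which adjusts for many more factors), the expected score below comes from a one-predictor least-squares regression of current on prior scores, and a program's value-added is the mean gap between actual and expected scores for its participants. The function names and data are hypothetical.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    b = sxy / sxx
    return my - b * mx, b


def value_added(prior, current, in_program):
    """Mean (actual - expected) score for students flagged as program participants.

    Expected scores come from regressing current on prior scores across all
    students; real value-added models add demographic and school covariates.
    """
    a, b = fit_line(prior, current)
    residuals = [c - (a + b * p) for p, c in zip(prior, current)]
    return mean(r for r, flag in zip(residuals, in_program) if flag)


# Hypothetical data: four students, the third one in the program.
prior = [10, 20, 30, 40]
current = [15, 25, 40, 45]
print(value_added(prior, current, [False, False, True, False]))
```

A positive result means participants scored above what their prior achievement predicted; because residuals from a least-squares fit sum to zero, averaging over all students returns roughly zero.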

References about Value-Added
AERA. (2004). Value-added assessment [Special issue]. Journal of Educational and Behavioral Statistics, 29(1), 1-144.
Lissitz, R. (2005). Value added models in education: Theory and application. Maple Grove, MN: JAM Press.
Lissitz, R. (2006). Longitudinal and value-added models of student performance. Maple Grove, MN: JAM Press.
McCaffrey, D., Lockwood, J. R., Koretz, D., & Hamilton, L. (2003). Evaluating value-added models for teacher accountability. Santa Monica, CA: RAND Corporation.
Meyer, R. (1997). Value-added indicators of school performance: A primer. Economics of Education Review, 16, 283-301.

Value-Added Applications
- Evaluate school effectiveness (Dallas)
- Evaluate teacher effectiveness (TVAAS)
- Evaluate federal programs (VARC, WCER)
- Accountability (NCLB)
Debate: Which models? How precise? How stable?

Limitations of Value-Added
Stufflebeam, Madaus, and Kellaghan (2000, Evaluation Models) commented on the value-added approach that “this approach does not provide indepth documentation of program inputs and processes”.
Koretz (Fall 2008, American Educator) commented that value-added models are not a silver bullet and are not an adequate measure of overall educational quality.

Objective
The objective of this presentation is to illustrate how to link the value-added evaluation results on student performance to the context of three educational program evaluations in Minneapolis Public Schools (MPS).
ADDING VALUE TO VALUE-ADDED

Background of Evaluations
Minneapolis Public Schools:
- 75% minority, 60% low-SES, 25% ELL, 8% HHM
- 51% proficient in reading, 43% proficient in math
(1) Beat the Odds Kindergarten Teachers
(2) Supplemental Educational Services
(3) 21st Century Community Learning Centers

Kindergarten Teachers Who Beat the Odds
- Identified empirically using value-added
- End-of-kindergarten assessment results as predicted from beginning-of-kindergarten results, poverty, ELL status, special education status, gender, age, racial/ethnic background, and classroom/teacher effects
- Ten top teachers were interviewed and videotaped
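The identification step can be approximated by a two-stage sketch: fit an ordinary least-squares model of the end-of-kindergarten score on student covariates, then rank teachers by the mean residual of their students. This is a simplifying assumption for illustration; the MPS analysis built classroom/teacher effects into the prediction model itself, which this version does not reproduce. The covariate columns, function names, and data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(rows, y):
    """Least-squares coefficients (intercept first) from the normal equations X'X b = X'y."""
    X = [[1.0] + list(r) for r in rows]
    k = len(X[0])
    xtx = [[sum(xi[i] * xi[j] for xi in X) for j in range(k)] for i in range(k)]
    xty = [sum(xi[i] * yi for xi, yi in zip(X, y)) for i in range(k)]
    return solve(xtx, xty)


def top_teachers(rows, y, teacher_ids, k):
    """Return the k teachers whose students have the highest mean residual."""
    beta = ols(rows, y)
    residuals = defaultdict(list)
    for r, yi, t in zip(rows, y, teacher_ids):
        predicted = beta[0] + sum(bj * v for bj, v in zip(beta[1:], r))
        residuals[t].append(yi - predicted)
    ranked = sorted(residuals, key=lambda t: mean(residuals[t]), reverse=True)
    return ranked[:k]


# Hypothetical balanced data: (fall score, poverty flag) rows for three teachers.
design = [(10, 0), (20, 0), (10, 1), (20, 1)]
effects = {"A": 2.0, "B": 0.0, "C": -2.0}
rows, y, teachers = [], [], []
for teacher, effect in effects.items():
    for fall, poverty in design:
        rows.append((fall, poverty))
        y.append(2 + fall - 3 * poverty + effect)
        teachers.append(teacher)
print(top_teachers(rows, y, teachers, 1))  # ['A']
```

With real data, calling top_teachers(rows, y, teachers, 10) would return the ten teachers selected for interviews under this simplified model.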

Behavioral Management
- Positive and consistent feedback
- High expectations for all students
- High percentage of student time on task
- Materials are organized and readily available
- Students know the daily routine
- Models appropriate classroom behavior
- Establishes a welcoming environment

Kindergarten Literacy Instruction
- Utilizes small, flexible group instruction based on progress monitoring data
- High expectations for academic achievement
- Structures time and materials to maximize student learning
- Makes connections to previous learning
- Uses a variety of instructional approaches
- Uses writing activities to support other literacy instruction

Teacher Videos
More videos: http://rea.mpls.k12.mn.us/BEAT_THE_ODDS_-_Kindergarten_Teachers.html

SES Evaluation
We adopted a mixed-method evaluation approach to examine the effectiveness and implementation of Supplemental Educational Services (SES):
(1) Value-added analyses on a probability-matched sample (propensity score matching);
(2) Field observations at the SES provider sites;
(3) Semi-structured interviews with the SES providers.
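The matching step in (1) can be sketched as follows, assuming standardized numeric covariates (for example, prior score and demographic indicators) and a 0/1 SES participation flag: estimate each student's propensity to participate with a logistic regression fitted by plain gradient ascent, then pair each participant with the nearest unused non-participant whose score lies within a caliper. The learning rate, epoch count, and 0.05 default caliper are illustrative assumptions, not values from the SES evaluation.

```python
import math


def _score(w, x):
    """Logistic propensity score sigmoid(w0 + w . x)."""
    z = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(rows, treated, lr=0.1, epochs=2000):
    """Fit P(treated = 1 | x) by batch gradient ascent on the mean log-likelihood."""
    w = [0.0] * (len(rows[0]) + 1)
    n = len(rows)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, t in zip(rows, treated):
            err = t - _score(w, x)  # this student's contribution to the gradient
            grad[0] += err
            for j, xj in enumerate(x, start=1):
                grad[j] += err * xj
        w = [wi + lr * g / n for wi, g in zip(w, grad)]
    return w


def match(rows, treated, caliper=0.05):
    """Greedy 1:1 nearest-neighbour matching on the propensity score, without replacement."""
    w = fit_logistic(rows, treated)
    scores = [_score(w, x) for x in rows]
    controls = sorted(i for i, t in enumerate(treated) if t == 0)
    pairs = []
    for i, t in enumerate(treated):
        if t != 1 or not controls:
            continue
        # Closest unused control; ties go to the lowest index.
        j = min(controls, key=lambda c: abs(scores[c] - scores[i]))
        if abs(scores[j] - scores[i]) <= caliper:
            pairs.append((i, j))
            controls.remove(j)
    return pairs


# Hypothetical standardized prior scores; 1 = received SES.
rows = [[-1.0], [-0.5], [0.0], [0.5], [1.0], [-0.9], [0.6], [1.2]]
treated = [0, 0, 0, 1, 1, 0, 1, 0]
print(match(rows, treated, caliper=1.0))
```

Value-added residuals are then compared within the matched sample only. Greedy matching is the simplest option; optimal or with-replacement matching are common alternatives.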

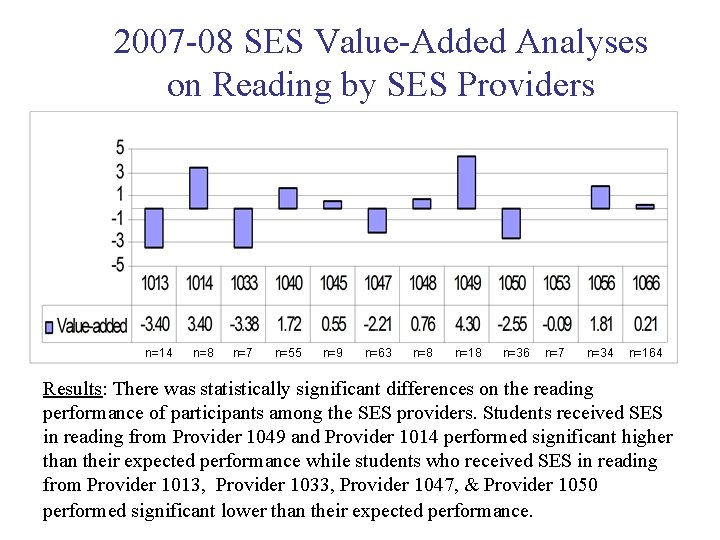

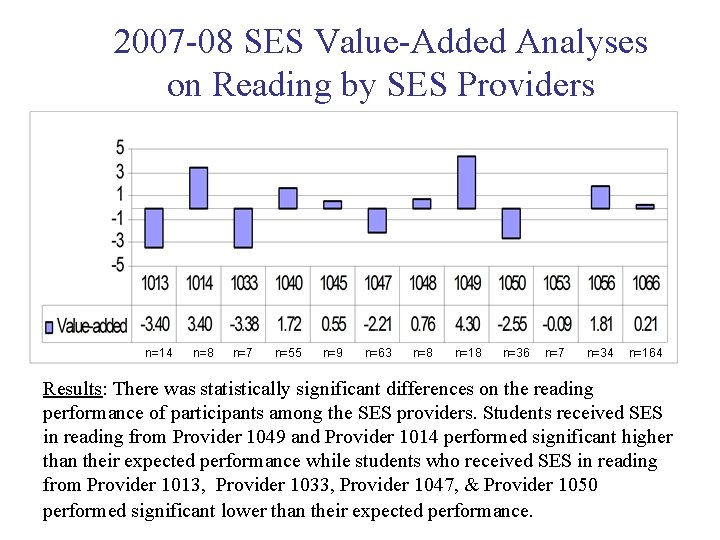

2007-08 SES Value-Added Analyses on Reading by SES Provider
(Group sizes shown in the chart: n = 14, 8, 7, 55, 9, 63, 8, 18, 36, 7, 34, 164)
Results: There were statistically significant differences in the reading performance of participants among the SES providers. Students who received SES in reading from Provider 1049 and Provider 1014 performed significantly higher than expected, while students who received SES in reading from Providers 1013, 1033, 1047, and 1050 performed significantly lower than expected.
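The two kinds of results reported above can be sketched as follows, assuming each provider's students already have value-added residuals (actual minus expected score): a one-way ANOVA F statistic tests whether mean value-added differs among providers, and a per-provider t statistic against zero flags providers above or below expectation. The 1.96 cutoff is a normal-approximation assumption; with groups as small as n = 7, a t-distribution critical value (or scipy.stats.ttest_1samp and scipy.stats.f_oneway for p-values) would be more appropriate.

```python
import math
from statistics import mean, stdev


def provider_summary(residuals_by_provider, z_crit=1.96):
    """Mean value-added, t statistic against 0, and an above/below flag per provider.

    Uses a normal-approximation cutoff; each provider needs at least two
    students with non-identical residuals so the standard error is nonzero.
    """
    summary = {}
    for provider, r in residuals_by_provider.items():
        m = mean(r)
        t = m / (stdev(r) / math.sqrt(len(r)))
        if t > z_crit:
            flag = "above expected"
        elif t < -z_crit:
            flag = "below expected"
        else:
            flag = "not significant"
        summary[provider] = (m, t, flag)
    return summary


def anova_f(groups):
    """One-way ANOVA F statistic for differences in mean value-added among groups."""
    values = [v for g in groups for v in g]
    grand = mean(values)
    means = [mean(g) for g in groups]
    k, n = len(groups), len(values)
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical residuals for three providers.
residuals = {"P1": [1, 2, 3], "P2": [-1, 0, 1], "P3": [-5, -4, -3]}
for provider, (m, t, flag) in provider_summary(residuals).items():
    print(provider, round(m, 2), round(t, 2), flag)
print(round(anova_f(list(residuals.values())), 2))
```

The omnibus F test and the per-provider tests answer different questions, which is why a non-significant overall difference can coexist with individual providers that differ from expectation.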

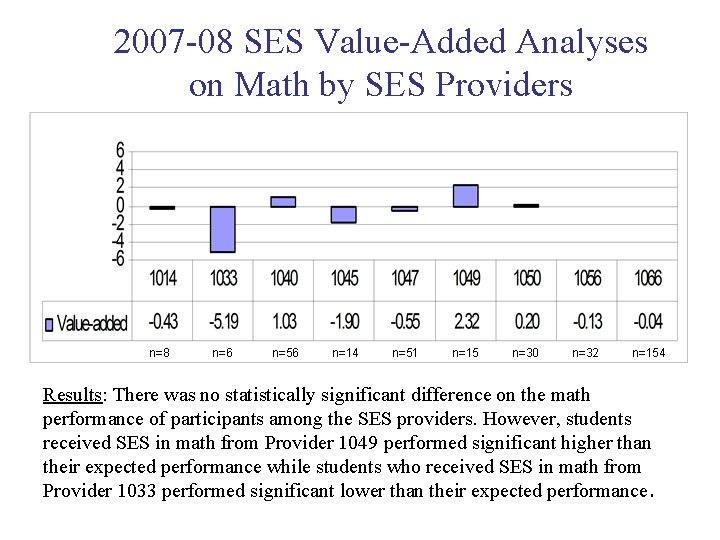

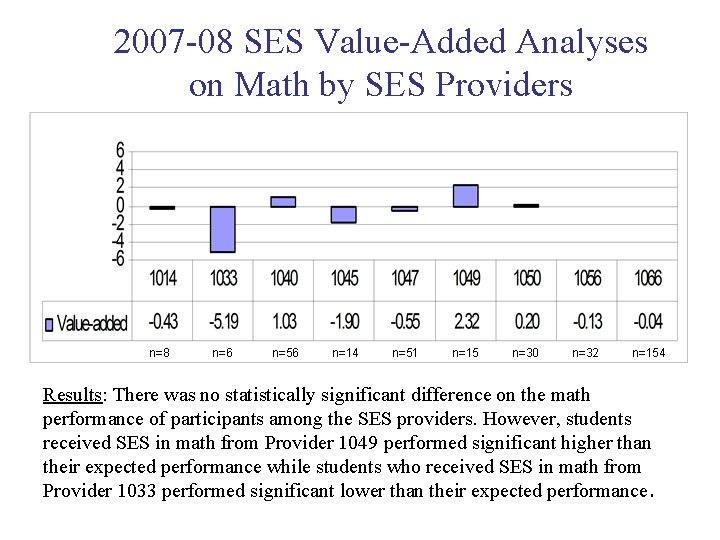

2007-08 SES Value-Added Analyses on Math by SES Provider
(Group sizes shown in the chart: n = 8, 6, 56, 14, 51, 15, 30, 32, 154)
Results: There was no statistically significant difference in the math performance of participants among the SES providers. However, students who received SES in math from Provider 1049 performed significantly higher than expected, while students who received SES in math from Provider 1033 performed significantly lower than expected.

Suggestions by SES Providers to Improve Implementation
1) Improving communication: improve communication among the various stakeholders; the District, schools, and SES providers need to give parents adequate information about how SES is beneficial.
2) Building authentic relationships: build authentic relationships between SES providers and stakeholders (including staff at the day-time school).
3) Organization for alignment: ensure that SES is aligned with the District's curriculum standards, professional development, quality of tutoring, and connection with day-time instruction and other after-school programs.

Best SES Practices from Field Observations
1) Small group learning
2) One-on-one tutoring
3) Computerized instruction
4) Use of frequent assessments to monitor student learning

21st Century Evaluation
We adopted a mixed-method evaluation approach to examine the effectiveness and implementation of the 21st Century Community Learning Centers:
(1) Value-added analyses (participants versus non-participants)
(2) Student surveys (Community Education)
(3) Staff surveys (Community Education)
(4) Parent surveys (Community Education)
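For the participant versus non-participant comparison in (1), one simple sketch, assuming value-added residuals are already computed for both groups, is Welch's two-sample t statistic, which does not assume equal variances. This is one reasonable test, not necessarily the one used in the MPS analysis; scipy.stats.ttest_ind(a, b, equal_var=False) computes the same statistic along with a p-value.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for mean(a) - mean(b)."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Hypothetical value-added residuals for participants and non-participants.
participants = [1.0, 2.0, 3.0]
non_participants = [-1.0, 0.0, 1.0]
t, df = welch_t(participants, non_participants)
print(round(t, 3), round(df, 1))
```

A positive t means participants gained more than expected relative to non-participants; |t| is then compared with a t-distribution critical value at df degrees of freedom.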

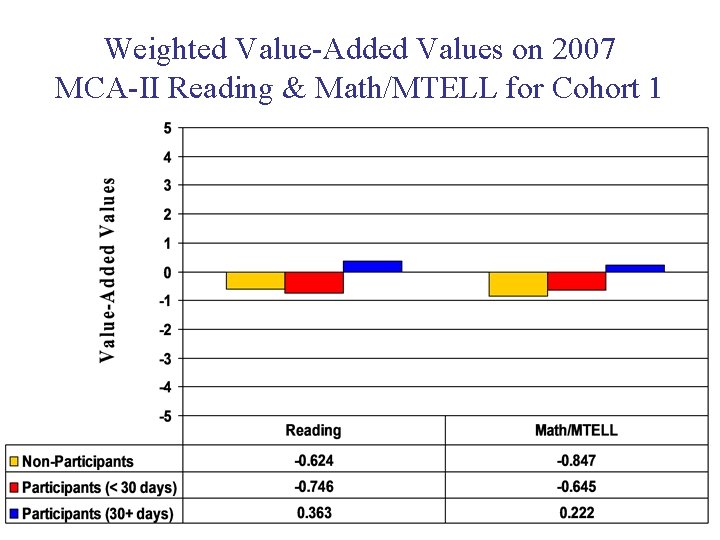

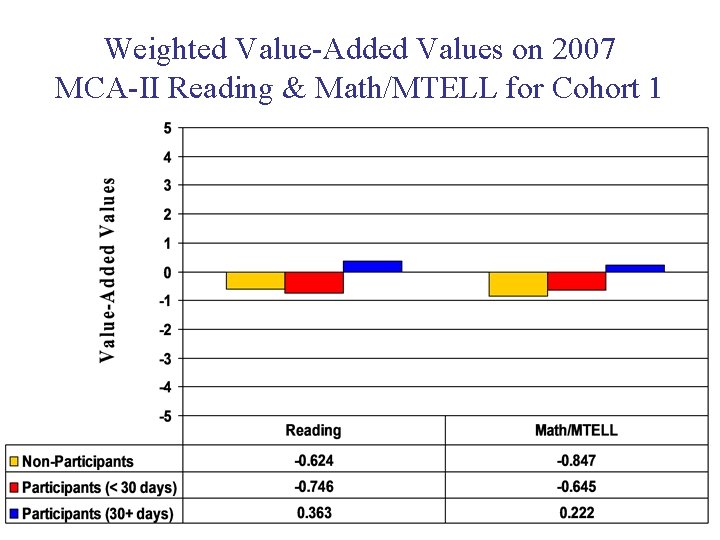

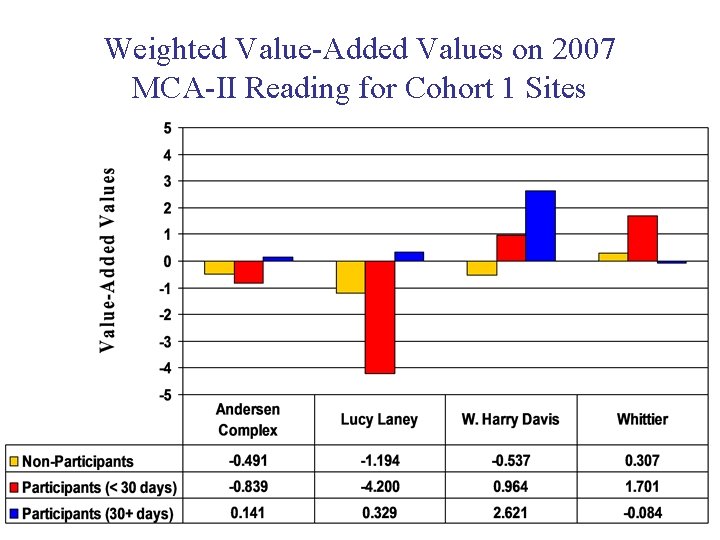

Weighted Value-Added Values on 2007 MCA-II Reading & Math/MTELL for Cohort 1

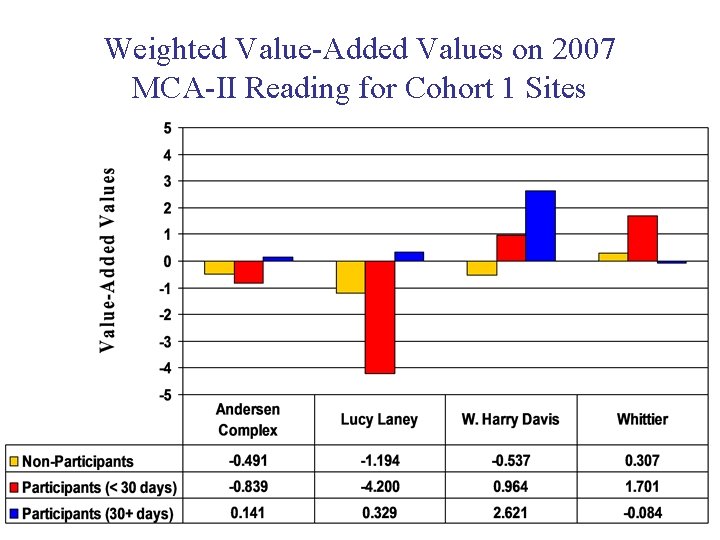

Weighted Value-Added Values on 2007 MCA-II Reading for Cohort 1 Sites

21st Century Student Survey Sample

21st Century Parent/Teacher Survey Sample

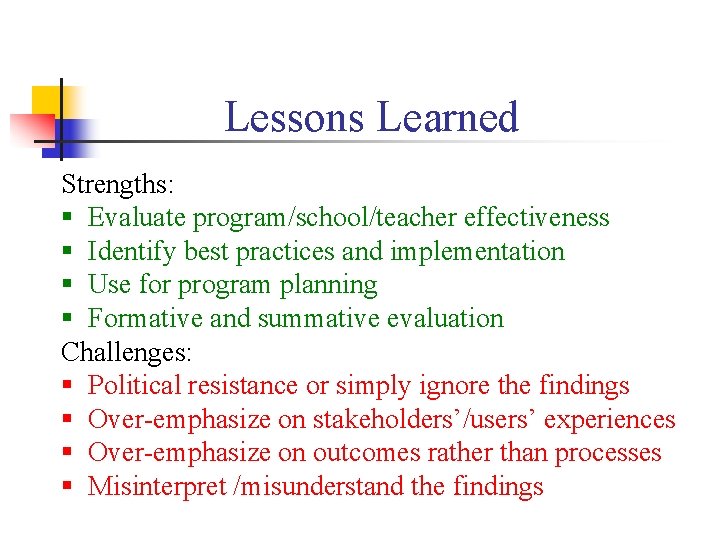

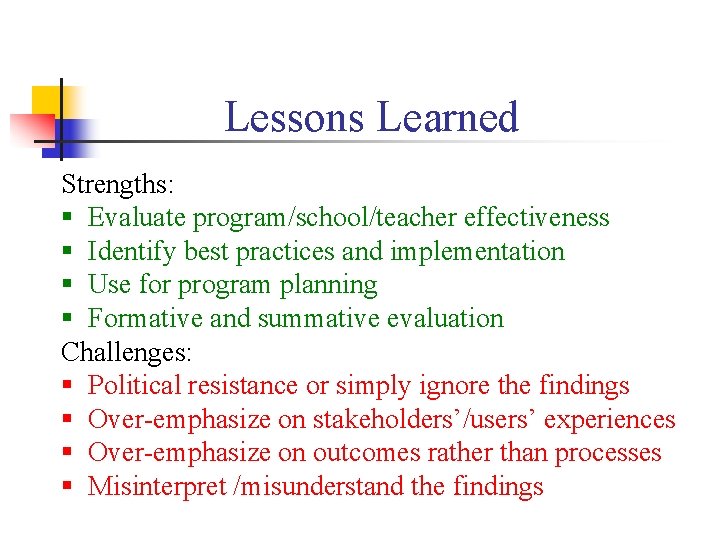

Lessons Learned
Strengths:
- Evaluate program/school/teacher effectiveness
- Identify best practices and implementation
- Use for program planning
- Formative and summative evaluation
Challenges:
- Political resistance, or the findings are simply ignored
- Over-emphasis on stakeholders'/users' experiences
- Over-emphasis on outcomes rather than processes
- Misinterpretation/misunderstanding of the findings