A Study of Smoothing Methods for Language Models

- Slides: 21

A Study of Smoothing Methods for Language Models Applied to Ad Hoc Information Retrieval

Chengxiang Zhai, John Lafferty

School of Computer Science, Carnegie Mellon University

Research Questions

- General: What role is smoothing playing in the language modeling approach?
- Specific:
  - Is the good performance due to smoothing?
  - How sensitive is retrieval performance to smoothing?
  - Which smoothing method is the best?
  - How do we set smoothing parameters?

Outline

- A General Smoothing Scheme and TF-IDF weighting
- Three Smoothing Methods
- Experiments and Results

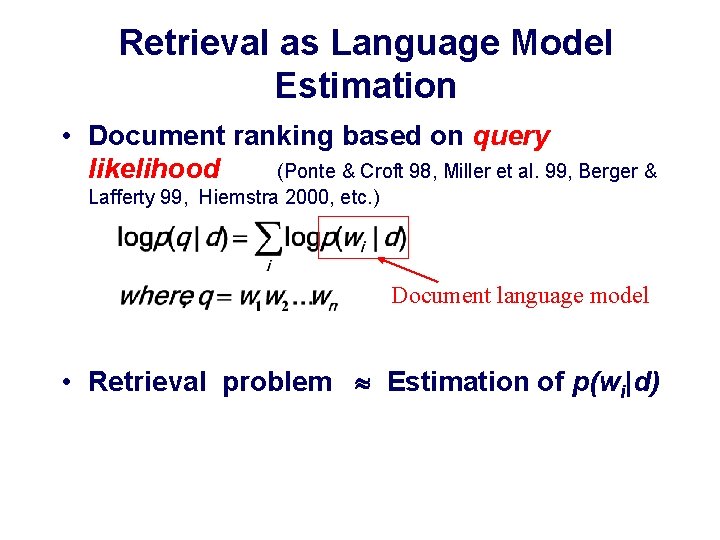

Retrieval as Language Model Estimation

- Document ranking based on query likelihood under a document language model (Ponte & Croft 98, Miller et al. 99, Berger & Lafferty 99, Hiemstra 2000, etc.)
- Retrieval problem ≈ estimation of p(w_i|d)

Why Smoothing?

- Zero probability
  - If w does not occur in d, then the maximum likelihood estimate gives p(w|d) = 0, and any query containing w will have zero probability.
- Estimation inaccuracy
  - A document is a very small sample of words, so the maximum likelihood estimate will be inaccurate.
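The zero-probability problem can be seen in a minimal sketch. The toy document, the queries, and the helper names `mle_model` and `query_likelihood` are illustrative assumptions, not part of the original slides.

```python
import math
from collections import Counter

def mle_model(doc_tokens):
    """Maximum likelihood estimate: p(w|d) = c(w, d) / |d|."""
    counts = Counter(doc_tokens)
    total = len(doc_tokens)
    return {w: c / total for w, c in counts.items()}

def query_likelihood(query_tokens, model):
    """p(q|d) as a product of p(w|d); unseen words contribute 0.0."""
    return math.prod(model.get(w, 0.0) for w in query_tokens)

# Toy document (hypothetical data for illustration only).
doc = "language model smoothing for retrieval".split()
model = mle_model(doc)

p_seen = query_likelihood(["language", "model"], model)
# A single query word missing from the document zeroes the whole product.
p_unseen = query_likelihood(["language", "model", "evaluation"], model)

print("all query words seen:", p_seen)
print("one query word unseen:", p_unseen)
```

Without smoothing, the second query cannot be ranked against this document at all, however well the other words match.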

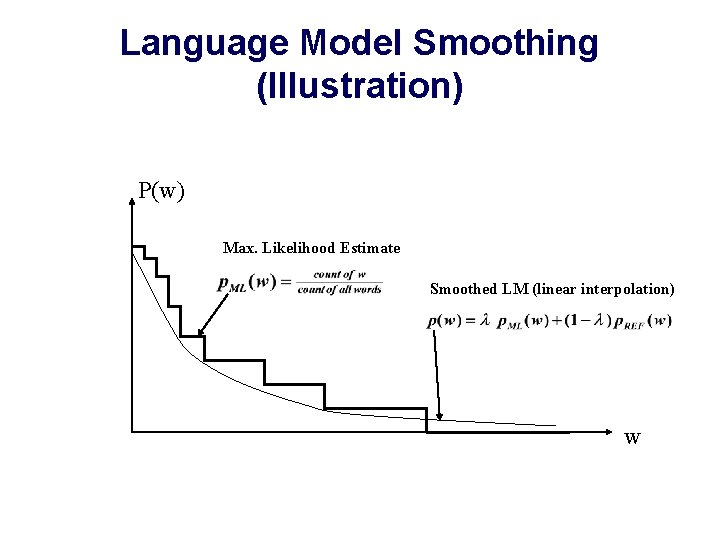

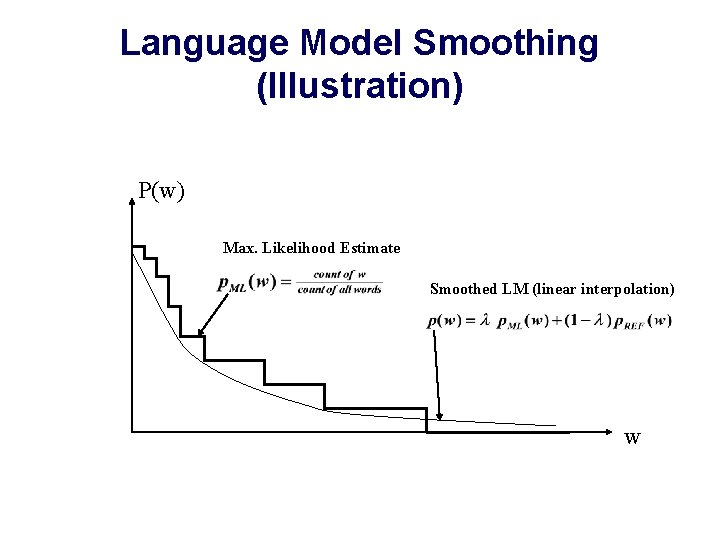

Language Model Smoothing (Illustration)

[Figure: P(w) plotted over words w, comparing the maximum likelihood estimate with the smoothed LM (linear interpolation).]

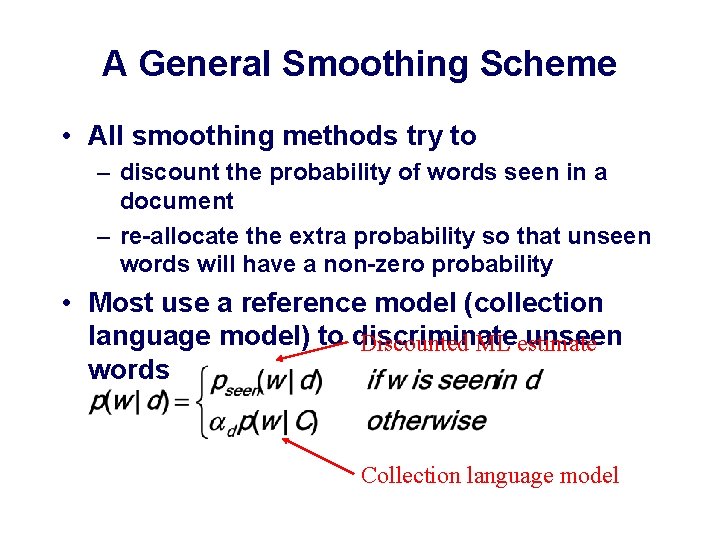

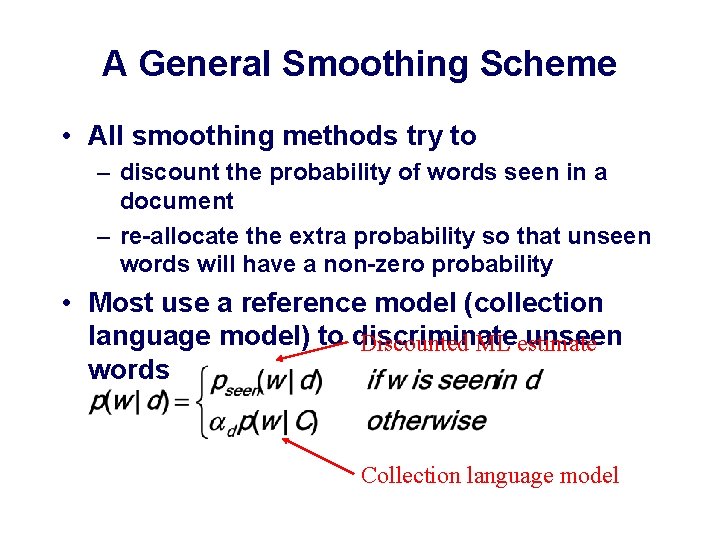

A General Smoothing Scheme

- All smoothing methods try to
  - discount the probability of words seen in a document
  - re-allocate the extra probability so that unseen words will have a non-zero probability
- Most use a reference model (collection language model) to discriminate unseen words
- In the scheme, seen words receive a discounted ML estimate, and unseen words receive a probability proportional to the collection language model
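Written out, the scheme is usually given in the form below. The slide's own formula did not survive extraction, so the symbols p_s (the discounted estimate for seen words) and α_d (the document-dependent coefficient on the collection model) are assumed notation rather than a transcription.

```latex
p(w \mid d) =
\begin{cases}
  p_s(w \mid d)          & \text{if } w \text{ is seen in } d \\
  \alpha_d \, p(w \mid C) & \text{otherwise}
\end{cases}
\qquad
\alpha_d = \frac{1 - \sum_{w \,\text{seen in}\, d} p_s(w \mid d)}
                {1 - \sum_{w \,\text{seen in}\, d} p(w \mid C)}
```

The value of α_d follows from requiring that the probabilities sum to one over the vocabulary.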

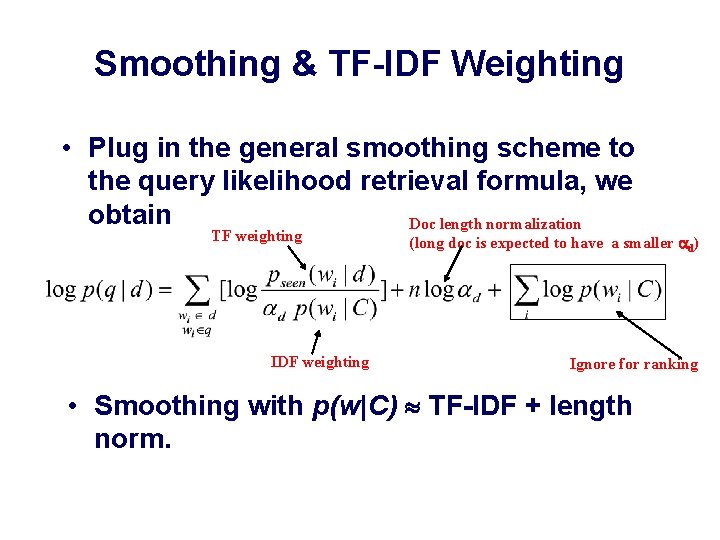

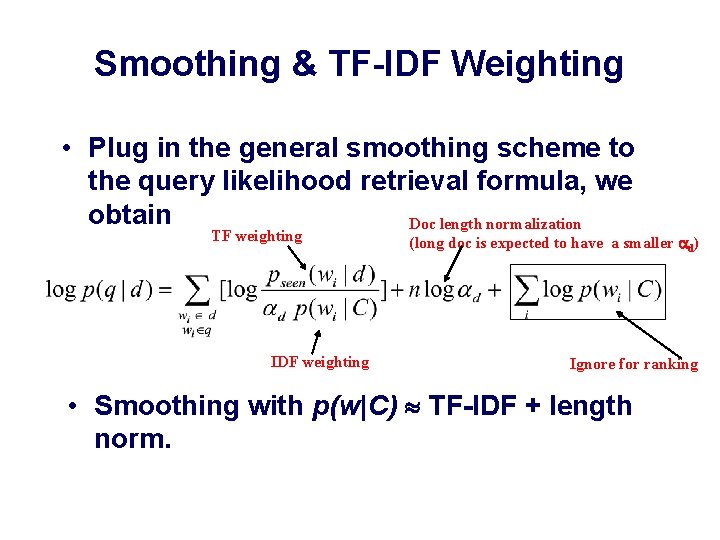

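Smoothing & TF-IDF Weighting

- Plugging the general smoothing scheme into the query likelihood retrieval formula, we obtain three terms:
  - a sum over the query words matched in the document, which provides TF weighting and IDF weighting
  - a doc length normalization term (a long doc is expected to have a smaller α_d, the coefficient that scales the collection model for unseen words)
  - a term that does not depend on the document, which can be ignored for ranking
- Smoothing with p(w|C) ≈ TF-IDF + length norm.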
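The three-term decomposition can be checked numerically. The sketch below uses Dirichlet prior smoothing as one assumed instance of the general scheme, with p_s(w|d) = (c(w,d) + μ p(w|C)) / (|d| + μ) and α_d = μ / (|d| + μ). The toy documents, the query, and the value of `MU` are hypothetical.

```python
import math
from collections import Counter

MU = 10.0  # hypothetical Dirichlet parameter for this toy example

docs = {
    "d1": "smoothing language model retrieval smoothing".split(),
    "d2": "the retrieval of the web pages and the ranking of pages".split(),
}
query = "smoothing retrieval the".split()

coll_counts = Counter(w for toks in docs.values() for w in toks)
coll_total = sum(coll_counts.values())

def p_coll(w):
    """Collection language model p(w|C)."""
    return coll_counts[w] / coll_total

def alpha(doc):
    """alpha_d for Dirichlet smoothing; shrinks as the document grows."""
    return MU / (len(doc) + MU)

def direct_score(query, doc):
    """log p(q|d) computed word by word with the smoothed model."""
    counts = Counter(doc)
    return sum(math.log((counts[w] + MU * p_coll(w)) / (len(doc) + MU))
               for w in query)

def decomposed_score(query, doc):
    """The same score split into the three terms named on the slide."""
    counts = Counter(doc)
    a = alpha(doc)
    # TF-IDF-like part: only query words that occur in the document.
    matched = sum(
        math.log(((counts[w] + MU * p_coll(w)) / (len(doc) + MU))
                 / (a * p_coll(w)))
        for w in query if counts[w] > 0)
    length_norm = len(query) * math.log(a)                      # doc length term
    doc_independent = sum(math.log(p_coll(w)) for w in query)   # same for all docs
    return matched, length_norm, doc_independent

for name, doc in docs.items():
    parts = decomposed_score(query, doc)
    print(name, direct_score(query, doc), sum(parts), parts)
```

The last term is identical for every document, which is why it can be dropped when ranking.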

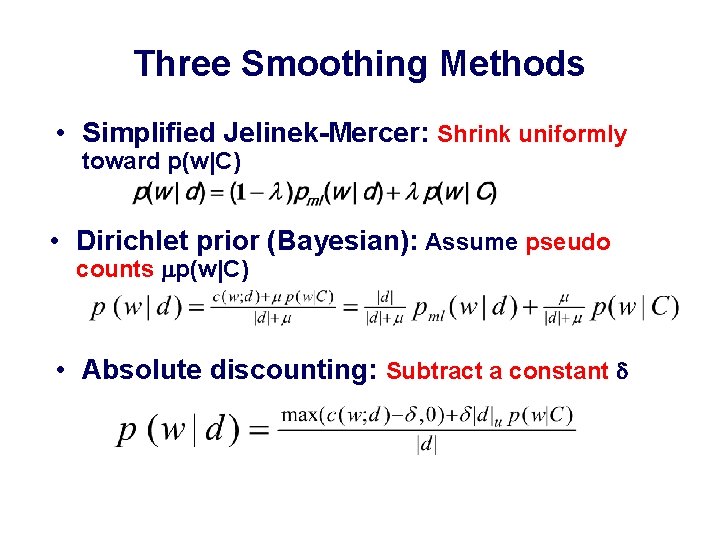

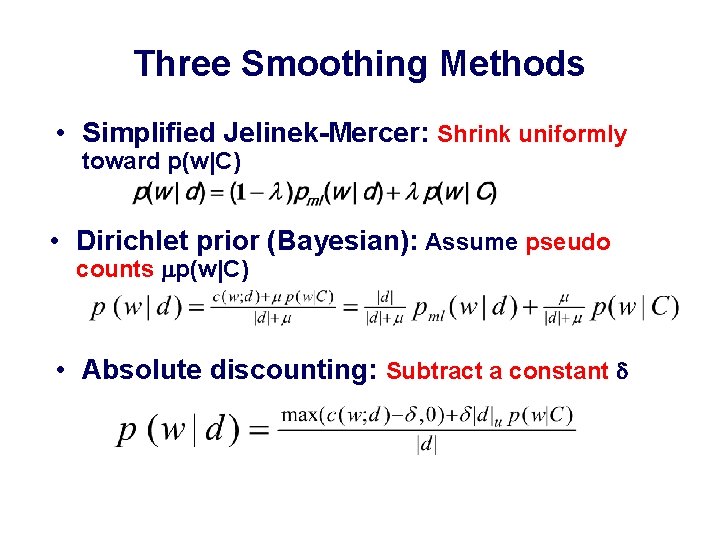

Three Smoothing Methods

- Simplified Jelinek-Mercer: Shrink uniformly toward p(w|C)
- Dirichlet prior (Bayesian): Assume pseudo counts μp(w|C)
- Absolute discounting: Subtract a constant δ
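The three methods can be sketched as follows, using the standard textbook forms of each estimator. The slide formulas were lost in extraction, so the function names, default parameter values, and toy collection are assumptions for illustration.

```python
from collections import Counter

def jelinek_mercer(w, doc_counts, doc_len, p_coll, lam=0.5):
    """Linear interpolation: shrink the ML estimate uniformly toward p(w|C)."""
    return (1 - lam) * doc_counts[w] / doc_len + lam * p_coll(w)

def dirichlet_prior(w, doc_counts, doc_len, p_coll, mu=1000.0):
    """Add mu * p(w|C) pseudo counts to the observed counts."""
    return (doc_counts[w] + mu * p_coll(w)) / (doc_len + mu)

def absolute_discounting(w, doc_counts, doc_len, p_coll, delta=0.7):
    """Subtract delta from each seen count; give the freed mass to p(w|C)."""
    unique_terms = len(doc_counts)  # number of distinct words in the document
    discounted = max(doc_counts[w] - delta, 0.0)
    return (discounted + delta * unique_terms * p_coll(w)) / doc_len

# Toy collection (hypothetical data for illustration only).
collection = [
    "smoothing language model retrieval smoothing".split(),
    "the retrieval of the web pages and the ranking of pages".split(),
]
coll_counts = Counter(w for doc in collection for w in doc)
coll_total = sum(coll_counts.values())

def p_coll(w):
    """Collection language model p(w|C)."""
    return coll_counts[w] / coll_total

doc = collection[0]
doc_counts = Counter(doc)
vocab = sorted(coll_counts)
methods = (jelinek_mercer, dirichlet_prior, absolute_discounting)

# Each smoothed model should still sum to one over the vocabulary.
totals = {m.__name__: sum(m(w, doc_counts, len(doc), p_coll) for w in vocab)
          for m in methods}
# "web" does not occur in the first document but gets non-zero probability.
unseen = {m.__name__: m("web", doc_counts, len(doc), p_coll) for m in methods}
print(totals)
print(unseen)
```

The amount of smoothing is fixed across documents in Jelinek-Mercer, while in the other two it depends on the document: on its length for the Dirichlet prior, and on its length and number of distinct words for absolute discounting.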

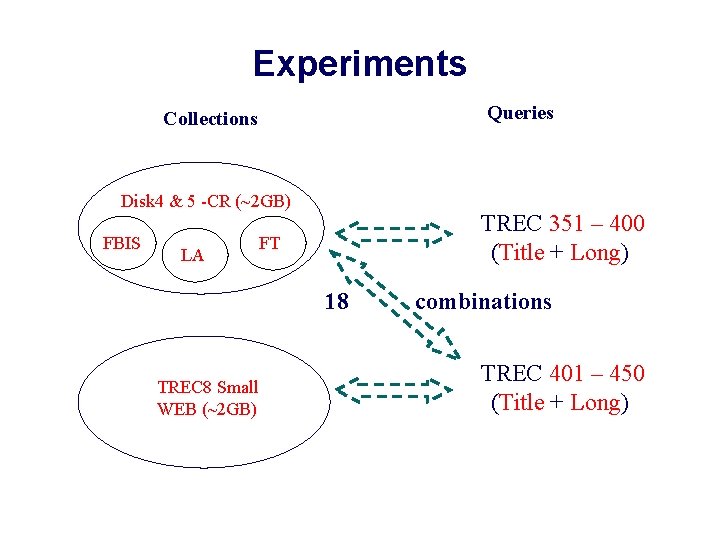

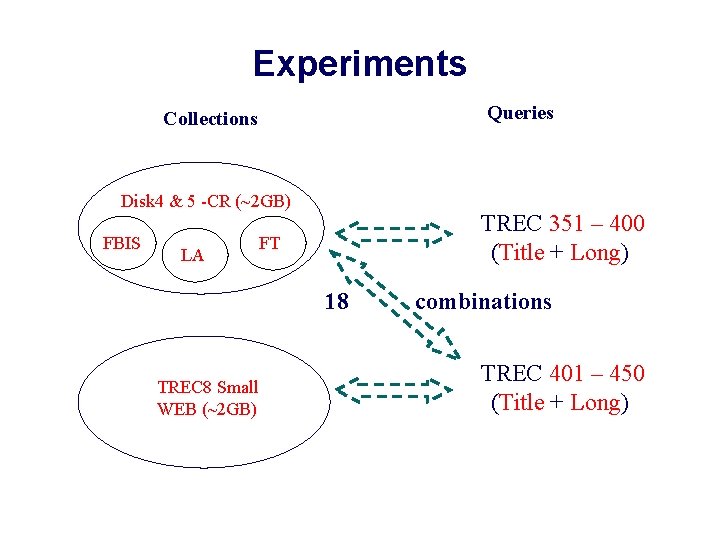

Experiments

- Collections:
  - Disk 4 & 5 - CR (~2 GB), with sub-collections FBIS, FT, and LA
  - TREC 8 Small WEB (~2 GB)
- Queries:
  - TREC 351–400 (Title + Long)
  - TREC 401–450 (Title + Long)
- 18 combinations of collection and query set

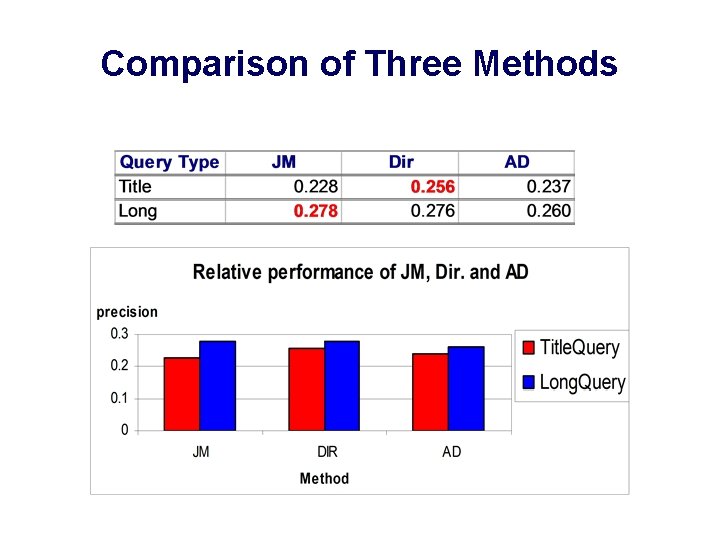

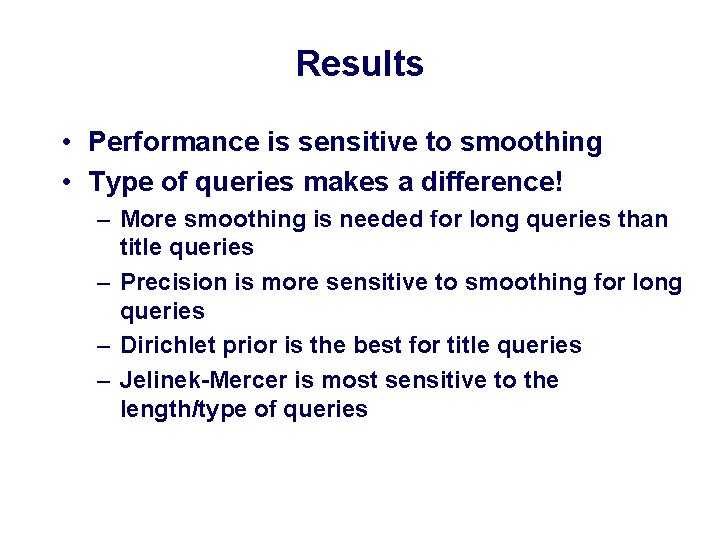

Results

- Performance is sensitive to smoothing
- Type of queries makes a difference!
  - More smoothing is needed for long queries than title queries
  - Precision is more sensitive to smoothing for long queries
  - Dirichlet prior is the best for title queries
  - Jelinek-Mercer is most sensitive to the length/type of queries

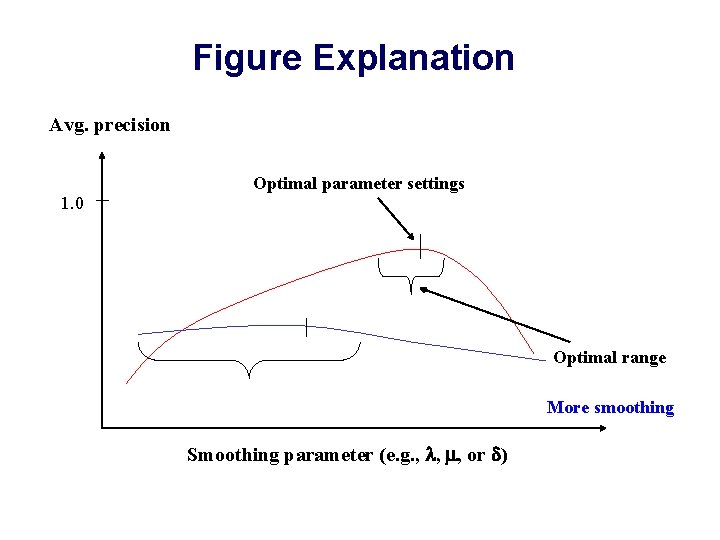

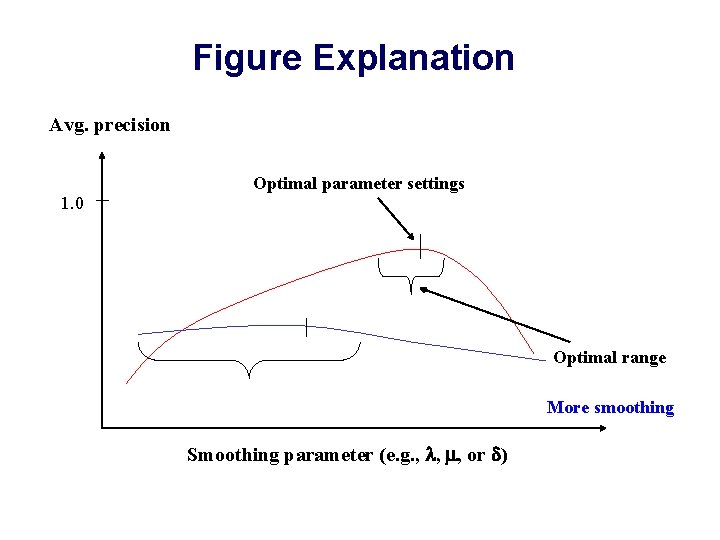

Figure Explanation

[Figure: average precision (y-axis, up to 1.0) plotted against the smoothing parameter (e.g., λ, μ, or δ), annotated with the optimal parameter setting, the optimal range, and the direction of more smoothing.]

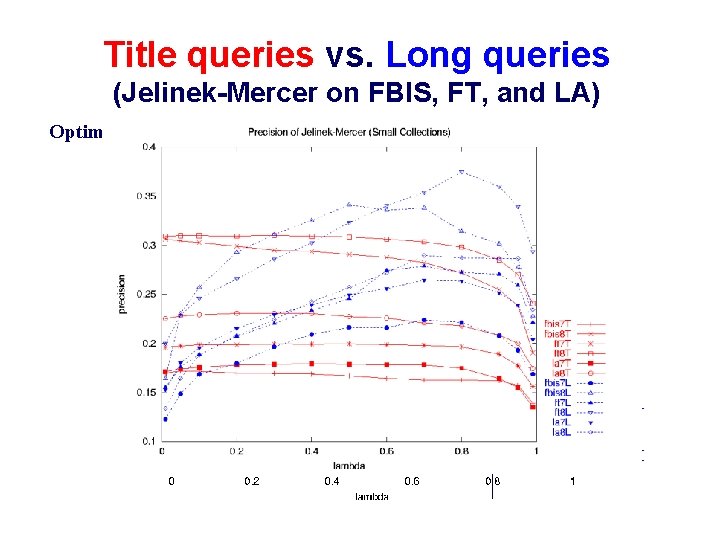

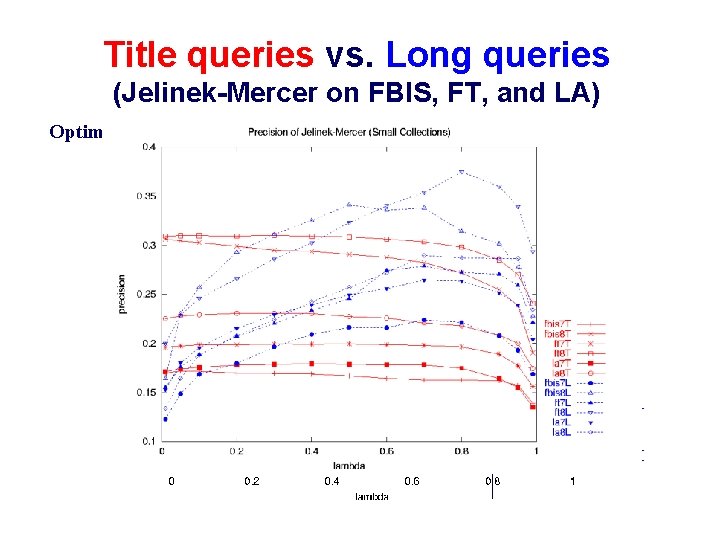

Title queries vs. Long queries (Jelinek-Mercer on FBIS, FT, and LA)

[Figure: precision curves for title queries and long queries, with the optimal setting marked on each.]

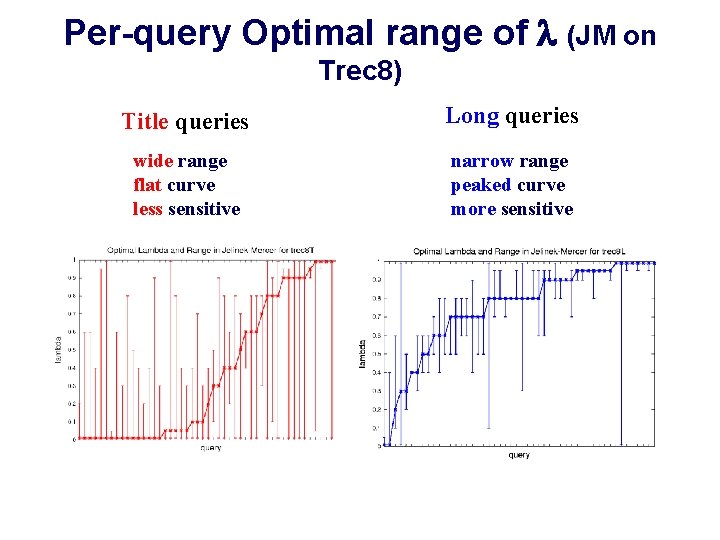

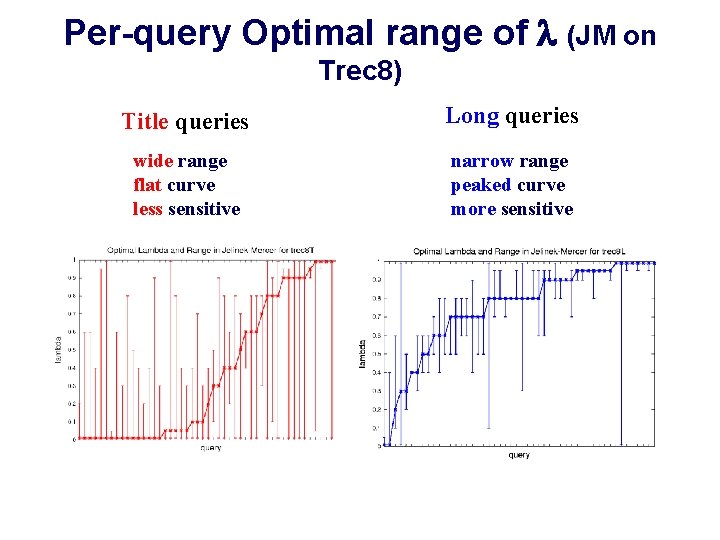

Per-query Optimal Range of λ (JM on TREC 8)

- Title queries: wide range, flat curve, less sensitive
- Long queries: narrow range, peaked curve, more sensitive

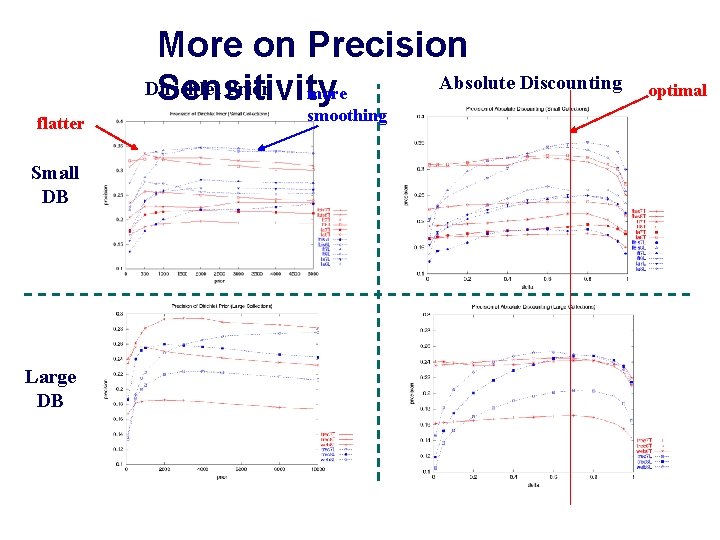

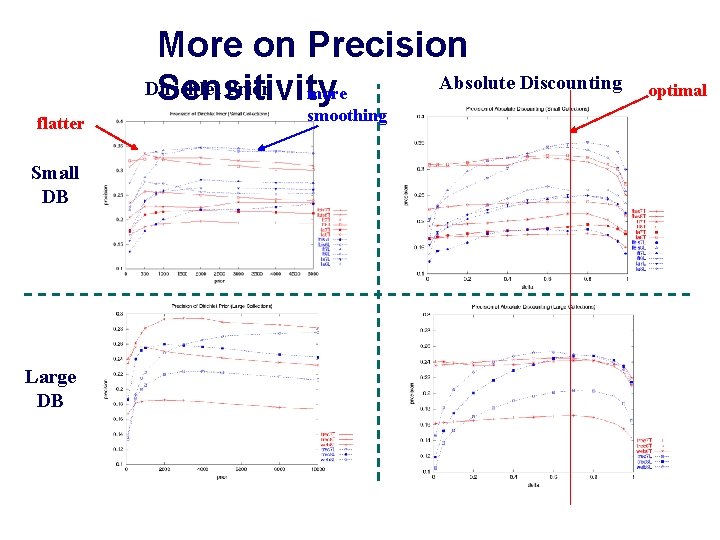

More on Precision Sensitivity

[Figure: two panels, Absolute Discounting and Dirichlet Prior, annotated with "Small DB", "Large DB", "flatter", "more smoothing", and the optimal setting.]

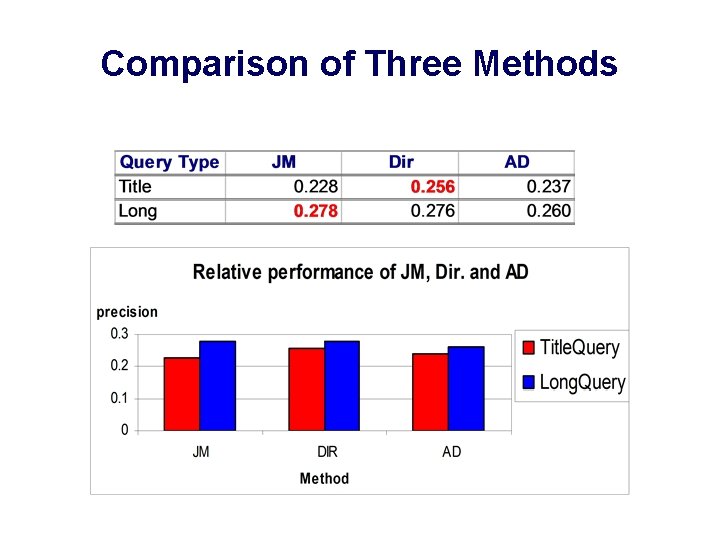

Comparison of Three Methods

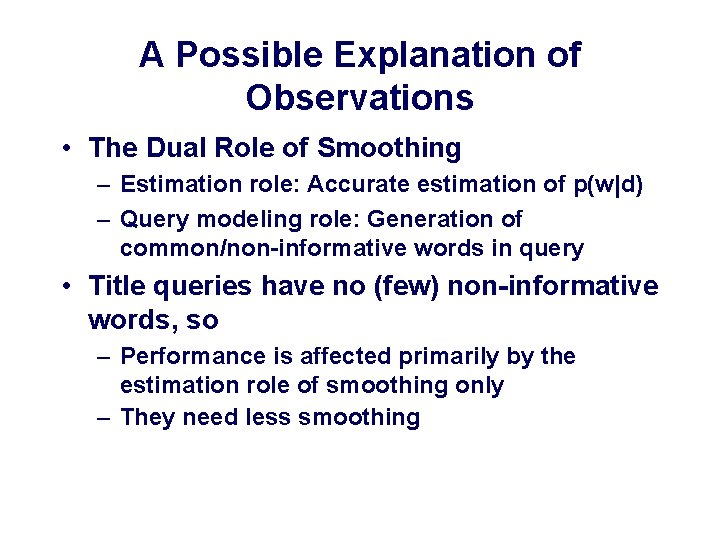

A Possible Explanation of Observations

- The Dual Role of Smoothing
  - Estimation role: Accurate estimation of p(w|d)
  - Query modeling role: Generation of common/non-informative words in query
- Title queries have few or no non-informative words, so
  - Performance is affected primarily by the estimation role of smoothing
  - They need less smoothing

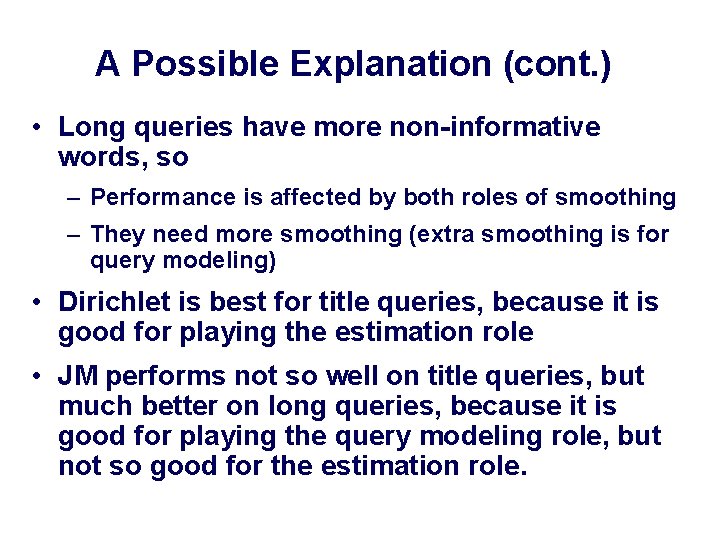

A Possible Explanation (cont.)

- Long queries have more non-informative words, so
  - Performance is affected by both roles of smoothing
  - They need more smoothing (the extra smoothing is for query modeling)
- Dirichlet is best for title queries, because it is good at the estimation role
- JM does not perform as well on title queries but performs much better on long queries, because it is good at the query modeling role but not as good at the estimation role

The Lemur Toolkit

- Language Modeling and Information Retrieval Toolkit
- Under development at CMU and UMass
- All experiments reported here were run using Lemur
- http://www.cs.cmu.edu/~lemur
- Contact us if you are interested in using it

Conclusions and Future Work

- Smoothing ≈ TF-IDF + doc length normalization
- Retrieval performance is sensitive to smoothing
- Sensitivity depends on query type
  - More sensitive for long queries than for title queries
  - More smoothing is needed for long queries
- All three methods can perform well when optimized
  - Dirichlet prior is especially good for title queries
  - Both Dirichlet prior and JM are good for long queries

Conclusions and Future Work (cont.)

- Smoothing plays two different roles
  - Better estimation of p(w|d)
  - Generation of common/non-informative words in query
- Future work
  - More evaluation (types of queries, smoothing methods)
  - De-couple the dual role of smoothing (e.g., two-stage smoothing strategy)
  - Train query-specific smoothing parameters with past relevance judgments and other data (e.g., position selection translation model)
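One way a two-stage strategy could de-couple the two roles is sketched below: a Dirichlet step for estimation, followed by an interpolation step for query modeling. The slides only name the idea, so this formulation, the function name `two_stage`, the parameter values, and the use of the collection model as the query background model are all assumptions.

```python
from collections import Counter

def two_stage(w, doc_counts, doc_len, p_coll, p_query_bg, mu=1000.0, lam=0.3):
    """Stage 1: Dirichlet smoothing (estimation role).
    Stage 2: interpolate with a query background model (query modeling role)."""
    stage_one = (doc_counts[w] + mu * p_coll(w)) / (doc_len + mu)
    return (1 - lam) * stage_one + lam * p_query_bg(w)

# Toy collection (hypothetical data for illustration only).
collection = [
    "smoothing language model retrieval smoothing".split(),
    "the retrieval of the web pages and the ranking of pages".split(),
]
coll_counts = Counter(w for doc in collection for w in doc)
coll_total = sum(coll_counts.values())

def p_coll(w):
    """Collection language model p(w|C)."""
    return coll_counts[w] / coll_total

doc = collection[0]
doc_counts = Counter(doc)
vocab = sorted(coll_counts)

# Assumption: the collection model stands in for the query background model.
total = sum(two_stage(w, doc_counts, len(doc), p_coll, p_coll) for w in vocab)
print("sums to:", total)
```

With `lam=0` the sketch reduces to plain Dirichlet prior smoothing, so the two parameters can be tuned separately for the two roles.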