Language Models

Hongning Wang, CS@UVa



![Two-stage smoothing [Zhai & Lafferty 02]](https://slidetodoc.com/presentation_image_h2/f4806bb120c528a4effe3ee65cf609e7/image-2.jpg)

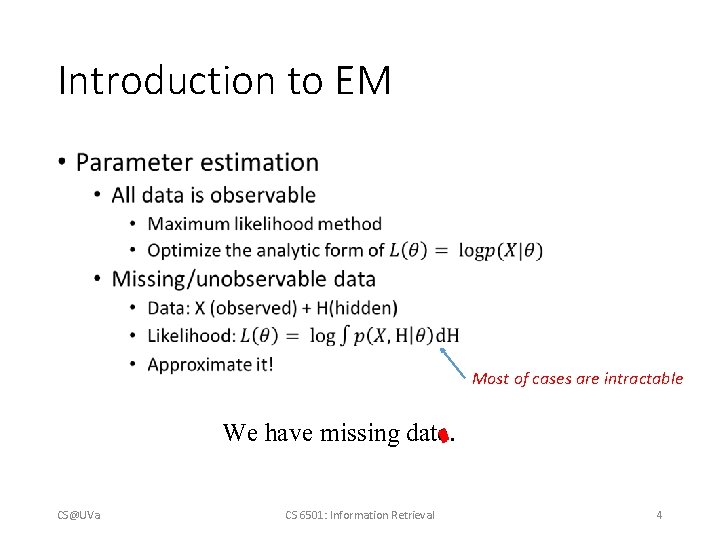

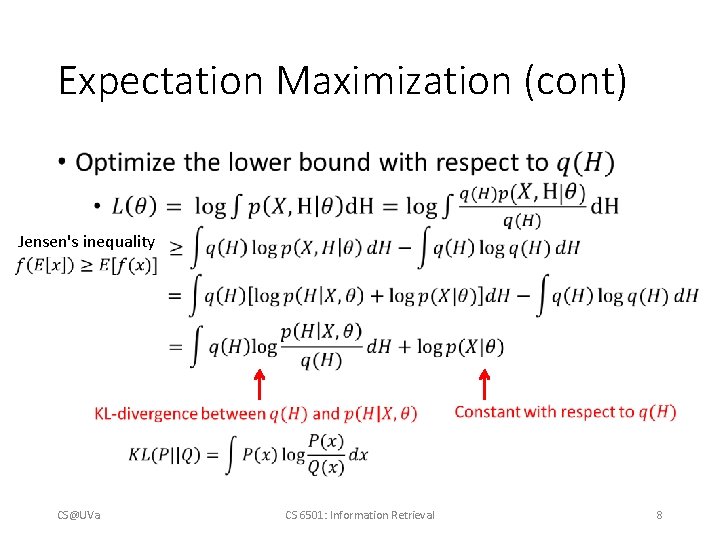

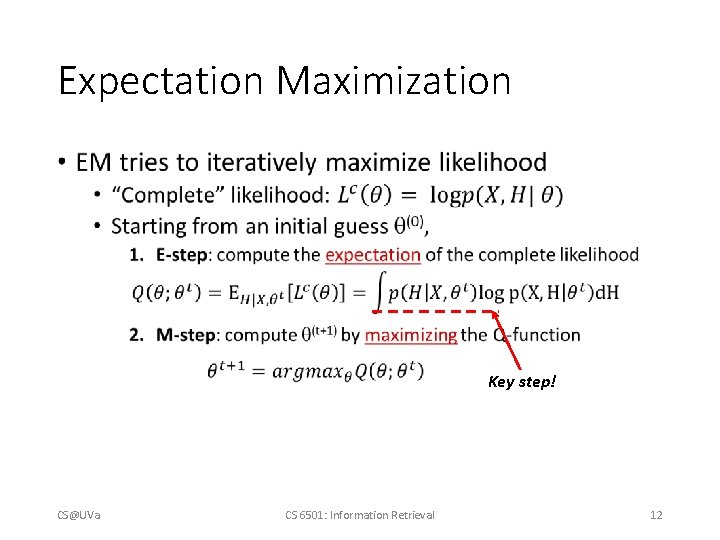

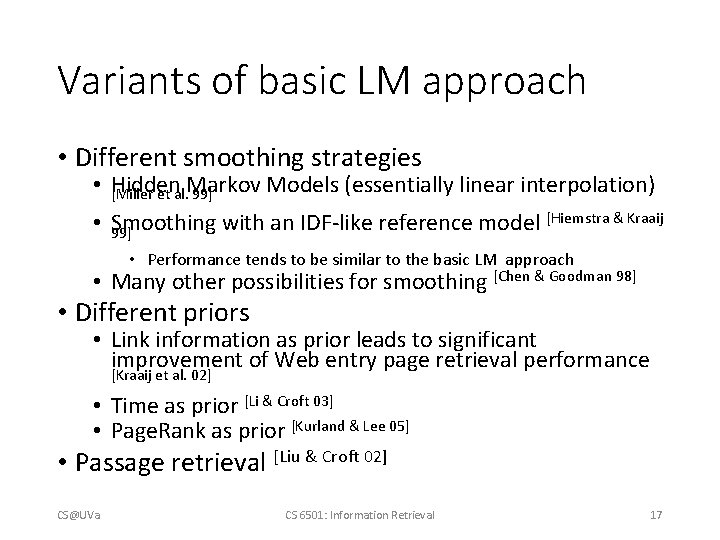

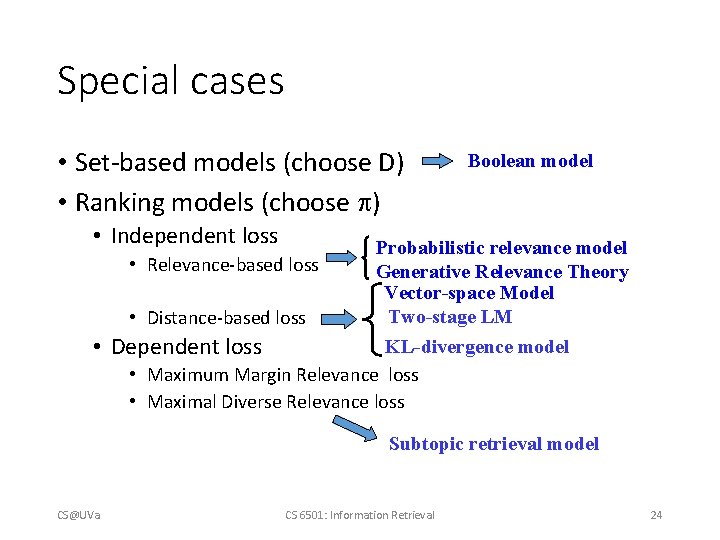

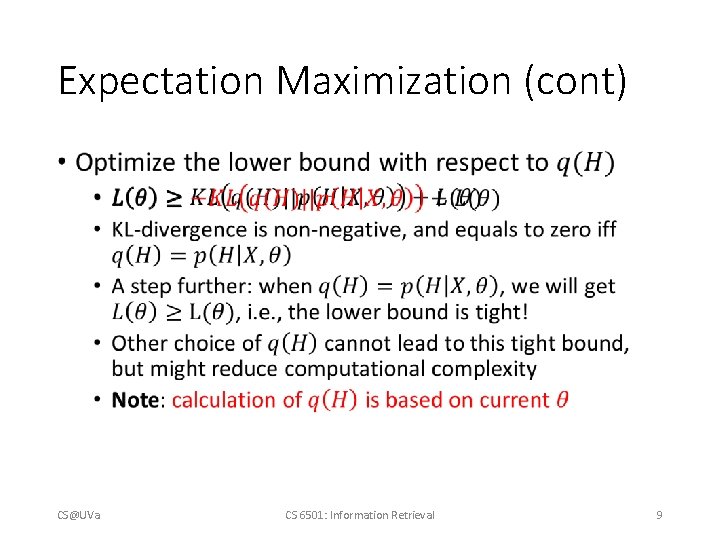

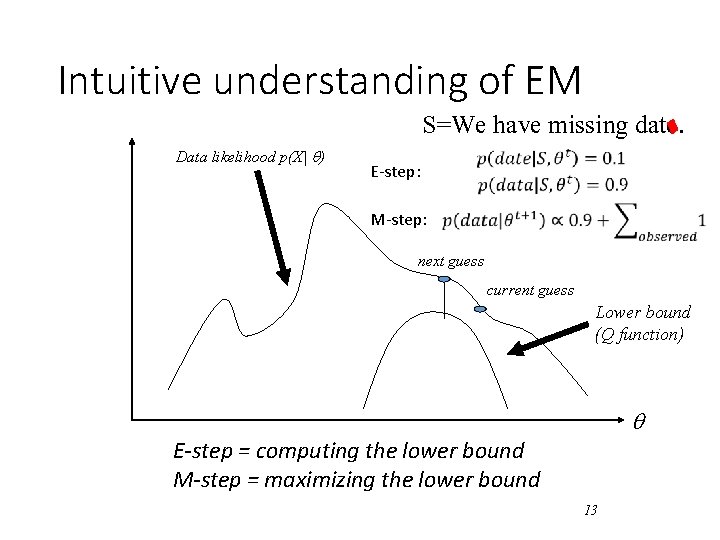

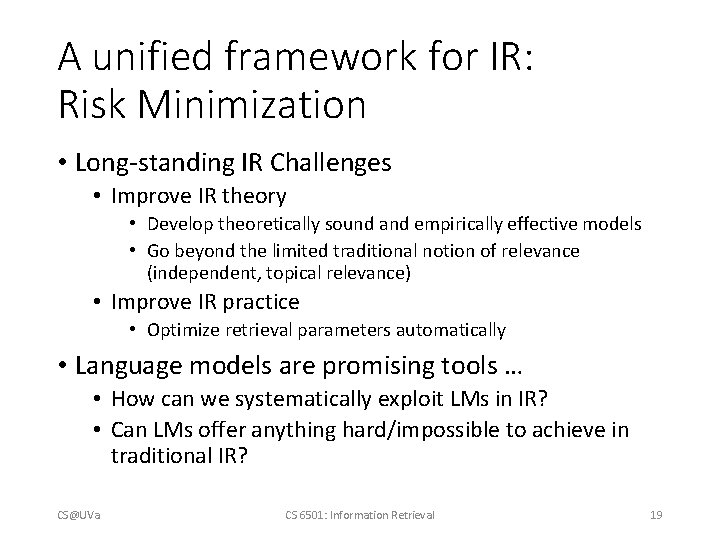

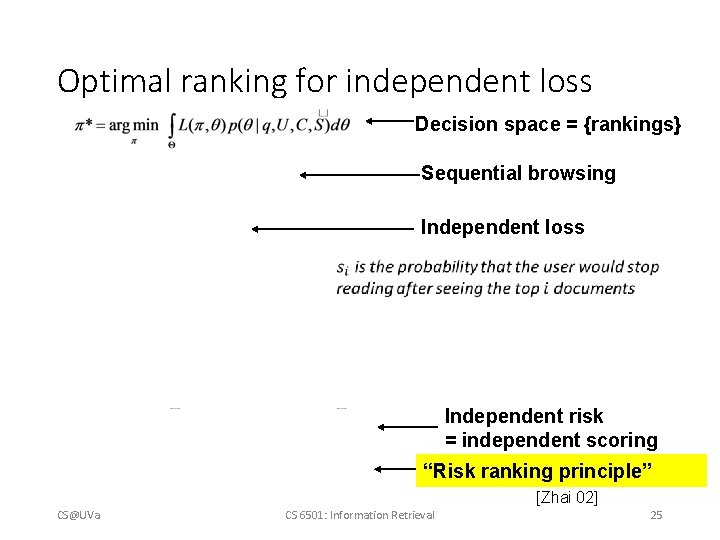

Two-stage smoothing [Zhai & Lafferty 02]

Stage-1 explains unseen words with a Dirichlet prior (Bayesian) built from the collection LM p(w|C). Stage-2 explains noise in the query with a 2-component mixture that adds a user background model p(w|U), which can be approximated by p(w|C):

$$P(w|d) = (1-\lambda)\,\frac{c(w,d) + \mu\, p(w|C)}{|d| + \mu} + \lambda\, p(w|U)$$

CS@UVa CS 6501: Information Retrieval
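A minimal sketch of the two-stage formula, assuming the document is a list of tokens and the collection LM is a precomputed dictionary. The function name `two_stage_prob` and the toy data are hypothetical; μ and λ are simply passed in here rather than estimated, and p(w|U) defaults to p(w|C) as the slide suggests.

```python
from collections import Counter

def two_stage_prob(word, doc_tokens, p_collection, mu, lam, p_user=None):
    """Two-stage smoothed P(w|d) for one word.

    Stage 1: Dirichlet-prior smoothing with the collection LM p(w|C).
    Stage 2: linear mixture with a user background model p(w|U),
    approximated by p(w|C) when p_user is None.
    """
    counts = Counter(doc_tokens)
    p_c = p_collection.get(word, 0.0)
    stage1 = (counts[word] + mu * p_c) / (len(doc_tokens) + mu)
    p_u = p_c if p_user is None else p_user.get(word, 0.0)
    return (1 - lam) * stage1 + lam * p_u


p_c = {"a": 0.5, "b": 0.25, "c": 0.25}  # hypothetical toy collection LM
doc = ["a", "b", "a"]
print(two_stage_prob("c", doc, p_c, mu=2.0, lam=0.5))
```

The unseen word "c" still receives probability mass from both stages, and the smoothed probabilities over the vocabulary sum to one.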

![Estimating λ using the EM algorithm [Zhai & Lafferty 02]](https://slidetodoc.com/presentation_image_h2/f4806bb120c528a4effe3ee65cf609e7/image-3.jpg)

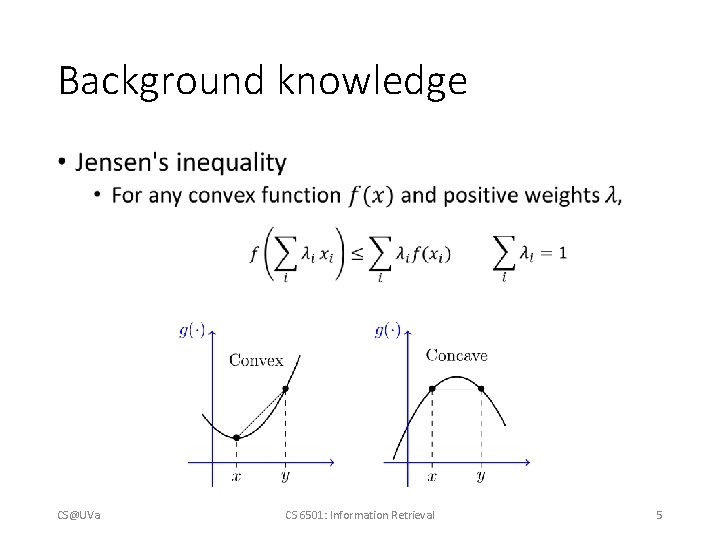

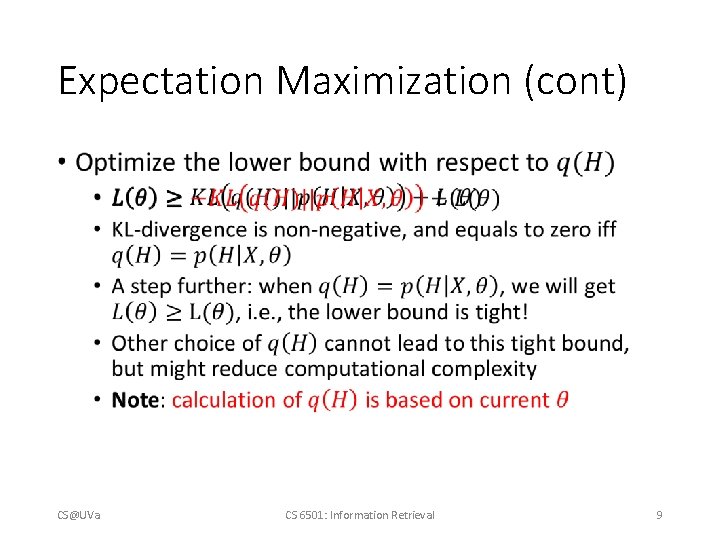

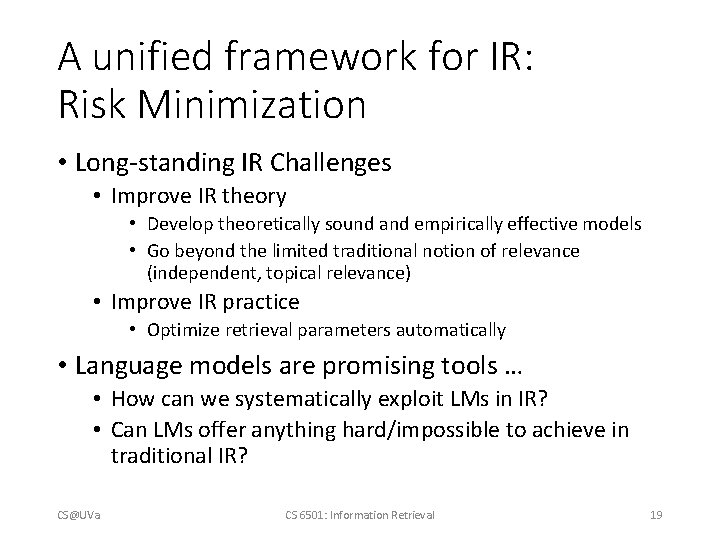

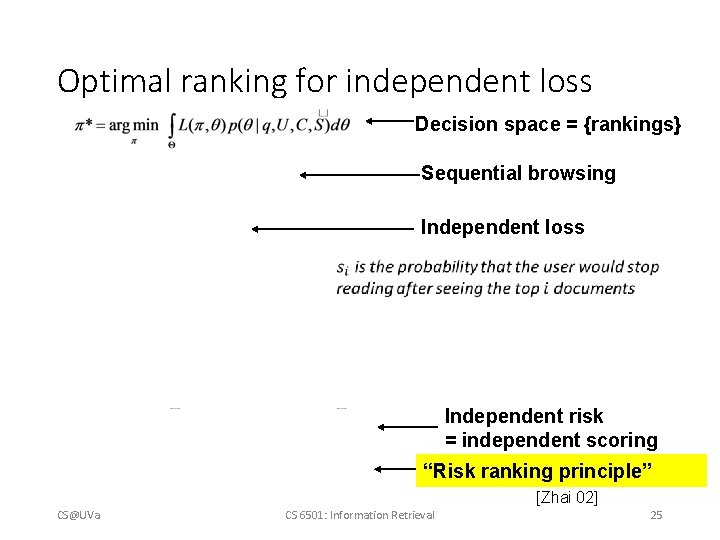

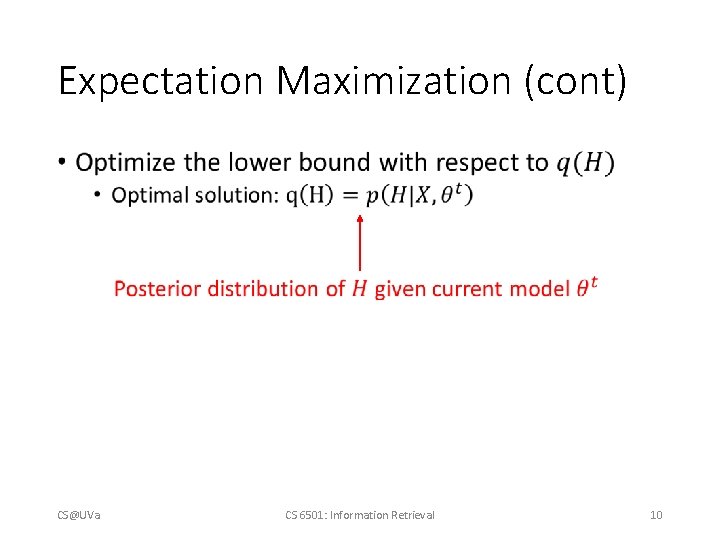

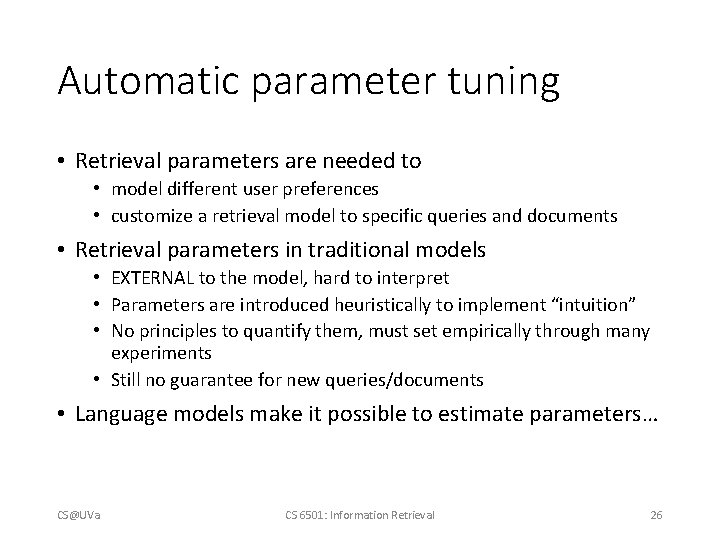

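Estimating λ using the EM algorithm [Zhai & Lafferty 02]

Consider query generation as a mixture over all the relevant documents $d_1, \dots, d_N$ with weights $\pi_1, \dots, \pi_N$. Each document model $p(w|d_i)$ is estimated in stage-1; in stage-2, each term of the query $Q = q_1 \dots q_m$ is drawn from $(1-\lambda)\,p(w|d_i) + \lambda\, p(w|U)$:

$$p(Q|\lambda, U) = \sum_{i=1}^{N} \pi_i \prod_{j=1}^{m} \left[(1-\lambda)\, p(q_j|d_i) + \lambda\, p(q_j|U)\right]$$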

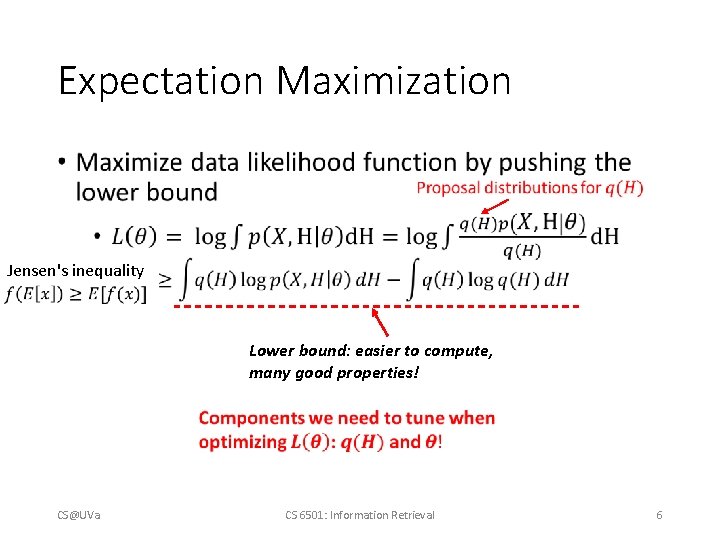

Introduction to EM • In most cases, the likelihood is intractable to maximize directly because we have missing data.

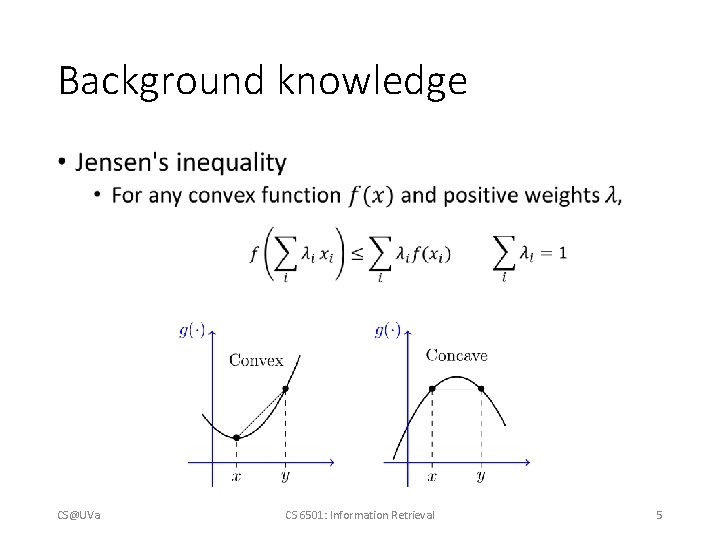

Background knowledge

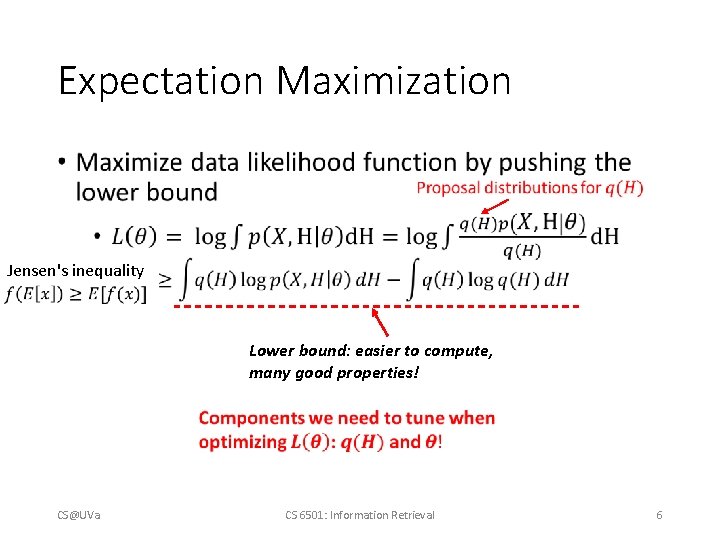

Expectation Maximization • Jensen's inequality gives a lower bound: easier to compute, with many good properties!
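For reference, the standard way Jensen's inequality produces this lower bound, written with observed data $X$, missing data $Z$, and an arbitrary distribution $q(Z)$ over the missing data (the symbol $q(Z)$ is our notation, not necessarily the slide's):

$$\log p(X|\theta) = \log \sum_Z q(Z)\,\frac{p(X,Z|\theta)}{q(Z)} \;\ge\; \sum_Z q(Z) \log \frac{p(X,Z|\theta)}{q(Z)}$$

The inequality holds because $\log$ is concave, and it becomes an equality when $q(Z) = p(Z|X,\theta)$, since the ratio inside the logarithm is then the constant $p(X|\theta)$.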

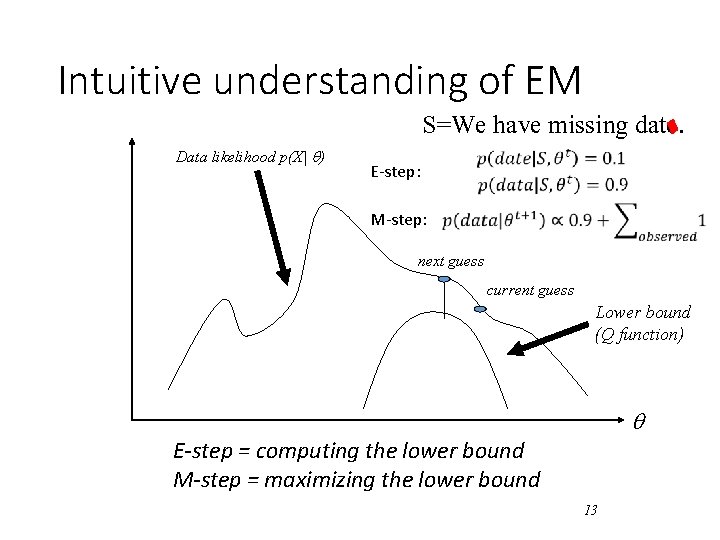

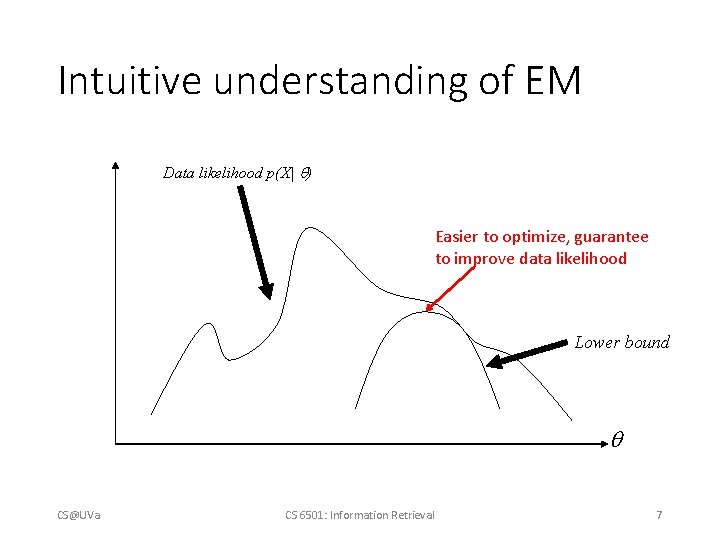

Intuitive understanding of EM: instead of the data likelihood p(X|θ) itself, EM works with a lower bound that is easier to optimize, and improving the bound is guaranteed to improve (never decrease) the data likelihood.

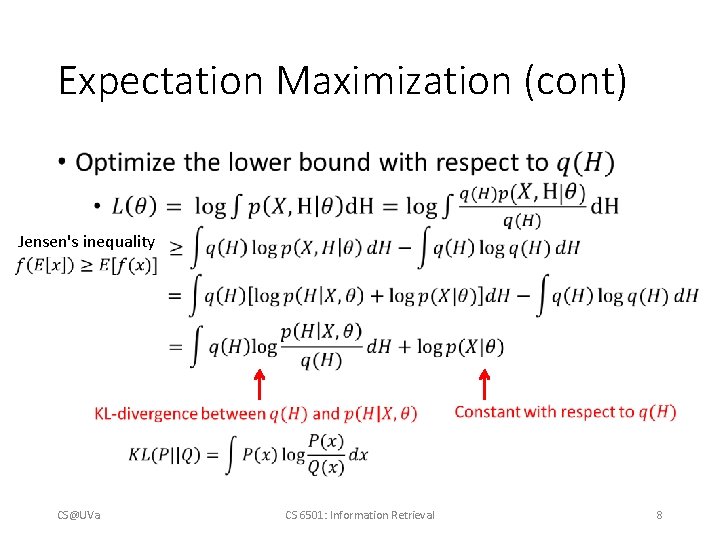

Expectation Maximization (cont) • Jensen's inequality CS@UVa CS 6501: Information Retrieval 8

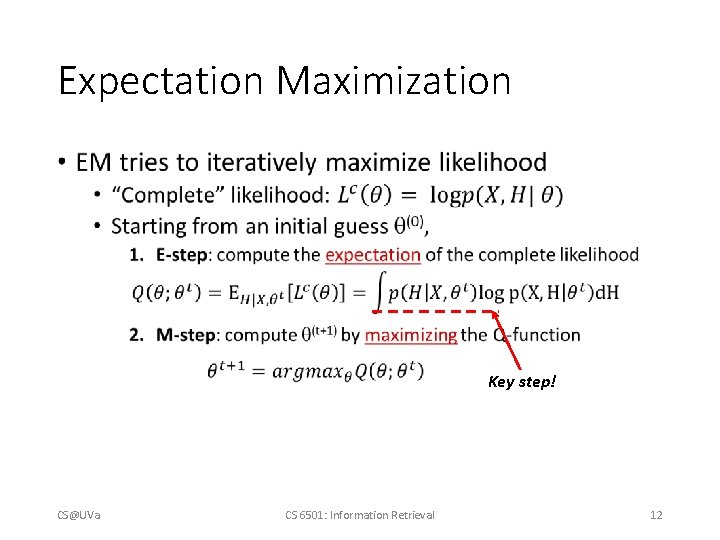

Expectation Maximization (cont) • CS@UVa CS 6501: Information Retrieval 9

Expectation Maximization (cont) • CS@UVa CS 6501: Information Retrieval 10

Expectation Maximization (cont) • Expectation of complete data likelihood CS@UVa CS 6501: Information Retrieval 11

Expectation Maximization • Key step! CS@UVa CS 6501: Information Retrieval 12

Intuitive understanding of EM: because we have missing data, EM iterates on a lower bound (the Q function) of the data likelihood p(X|θ). E-step = computing the lower bound at the current guess; M-step = maximizing the lower bound to obtain the next guess.
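In the standard notation (assumed here: missing data $Z$, current guess $\theta^{(t)}$), the two steps can be written as:

$$\text{E-step:}\quad Q(\theta;\theta^{(t)}) = \sum_Z p(Z|X,\theta^{(t)}) \log p(X,Z|\theta)$$

$$\text{M-step:}\quad \theta^{(t+1)} = \arg\max_{\theta} Q(\theta;\theta^{(t)})$$

The Q function equals the Jensen lower bound with the missing-data distribution set to $p(Z|X,\theta^{(t)})$, minus the entropy of that posterior; the entropy does not depend on $\theta$, so maximizing $Q$ maximizes the bound.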

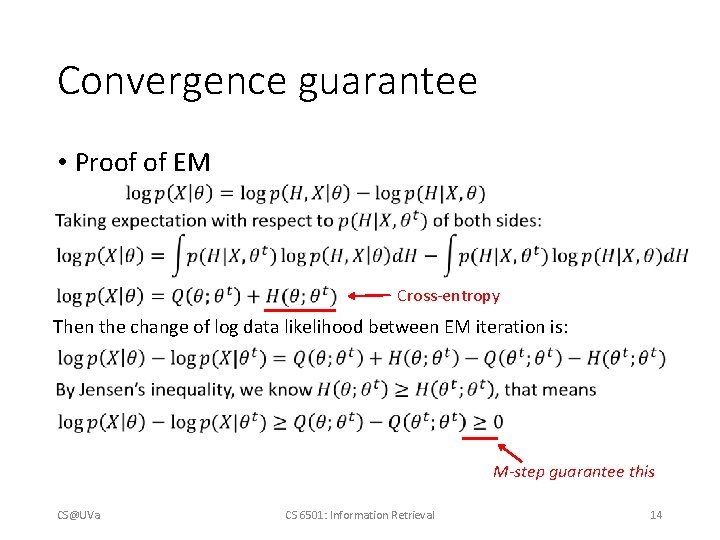

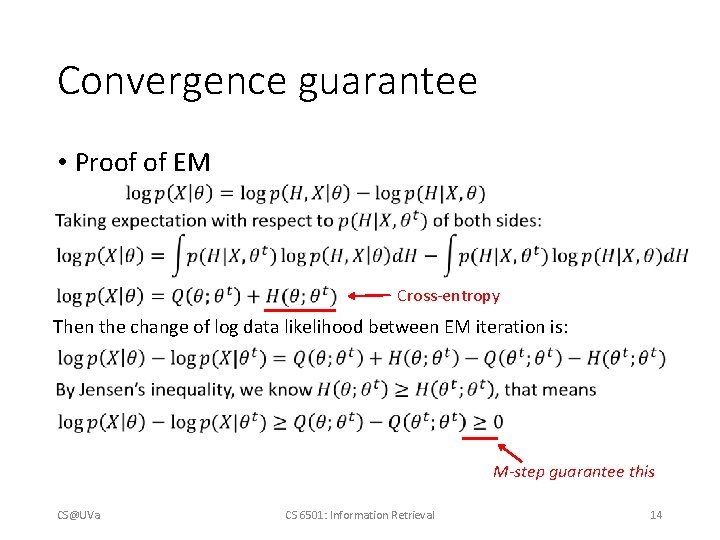

Convergence guarantee • Proof of EM: decompose the log data likelihood into the Q function plus a cross-entropy term. Then the change of log data likelihood between EM iterations is the sum of the two changes, and the M-step guarantees that the change in Q is non-negative.
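A sketch of the standard argument, in the notation assumed above ($Z$ missing data, $\theta^{(t)}$ current guess). Since $p(X|\theta) = p(X,Z|\theta)/p(Z|X,\theta)$ for every $Z$, taking the expectation under $p(Z|X,\theta^{(t)})$ gives:

$$\log p(X|\theta) = \underbrace{\sum_Z p(Z|X,\theta^{(t)}) \log p(X,Z|\theta)}_{Q(\theta;\theta^{(t)})} \; \underbrace{- \sum_Z p(Z|X,\theta^{(t)}) \log p(Z|X,\theta)}_{H(\theta;\theta^{(t)})\ \text{(cross-entropy)}}$$

$$\log p(X|\theta^{(t+1)}) - \log p(X|\theta^{(t)}) = \big[Q(\theta^{(t+1)};\theta^{(t)}) - Q(\theta^{(t)};\theta^{(t)})\big] + \big[H(\theta^{(t+1)};\theta^{(t)}) - H(\theta^{(t)};\theta^{(t)})\big]$$

The first bracket is non-negative because the M-step maximizes $Q$; the second is non-negative because the cross-entropy $H(\theta;\theta^{(t)})$ is minimized at $\theta = \theta^{(t)}$ (Gibbs' inequality). Hence the log data likelihood never decreases.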

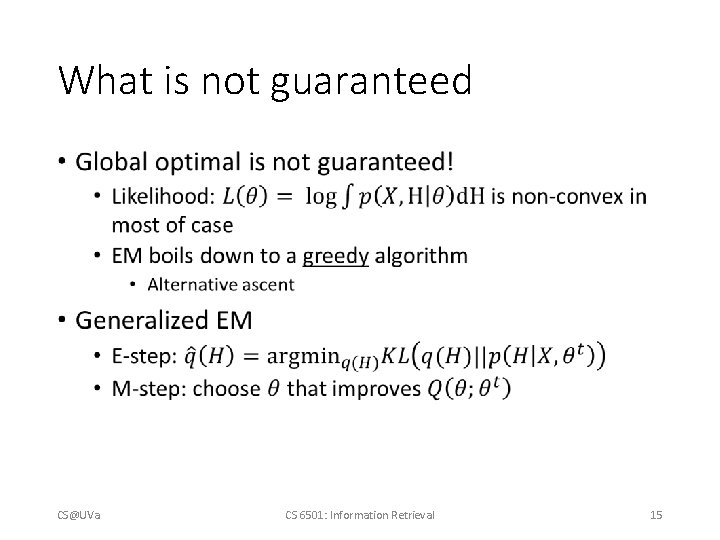

What is not guaranteed

![Estimating λ using a mixture model [Zhai & Lafferty 02]](https://slidetodoc.com/presentation_image_h2/f4806bb120c528a4effe3ee65cf609e7/image-16.jpg)

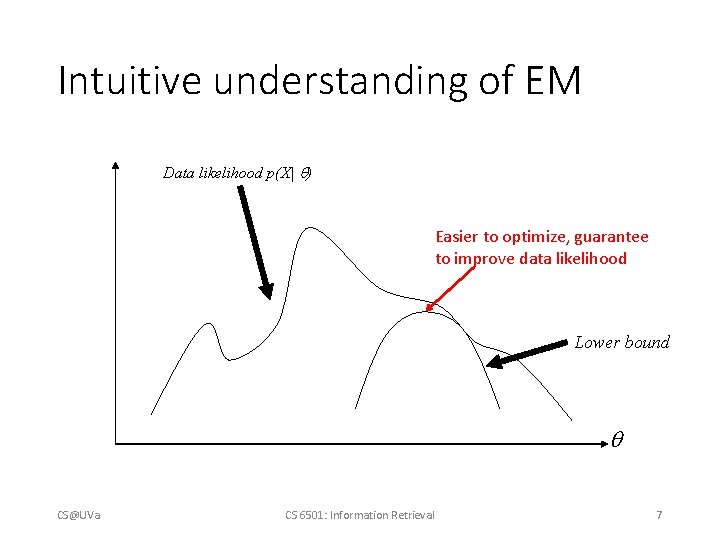

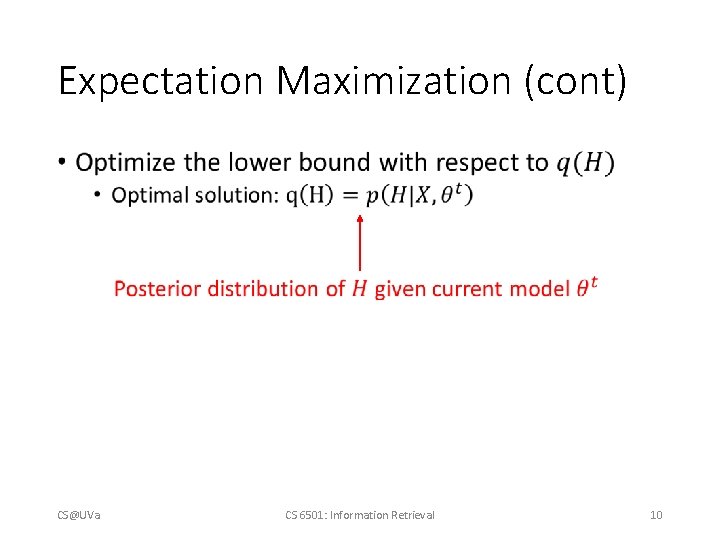

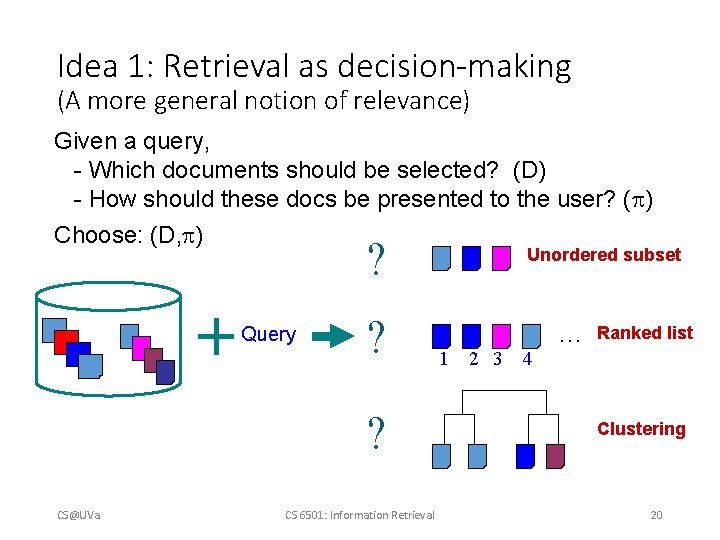

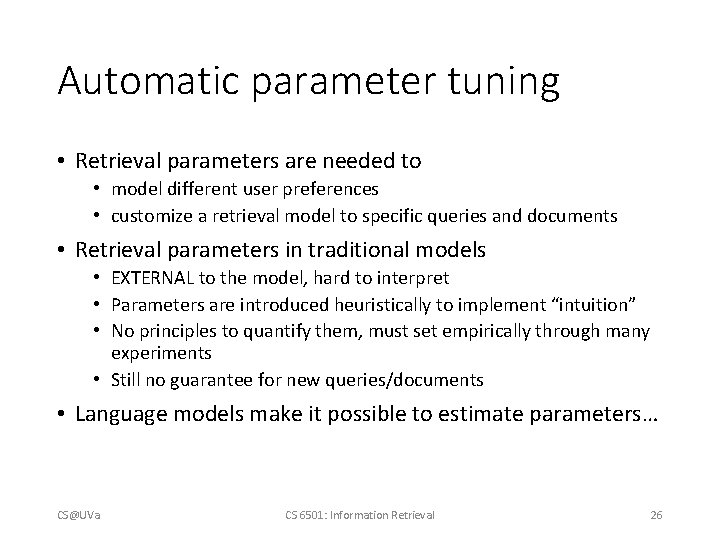

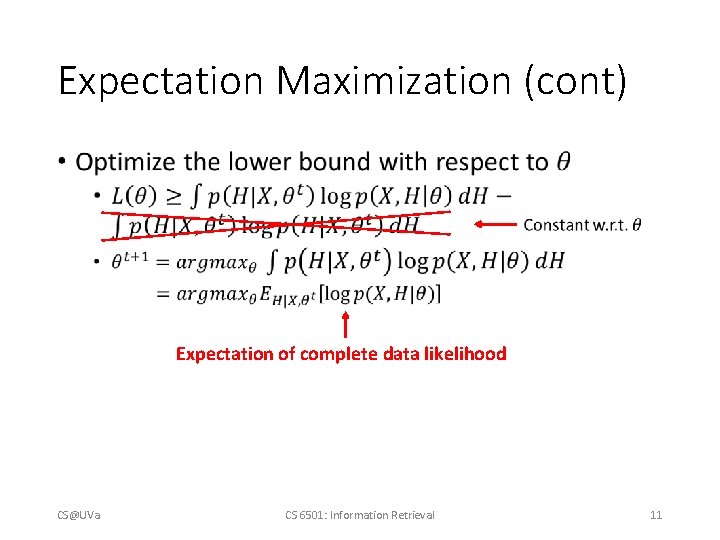

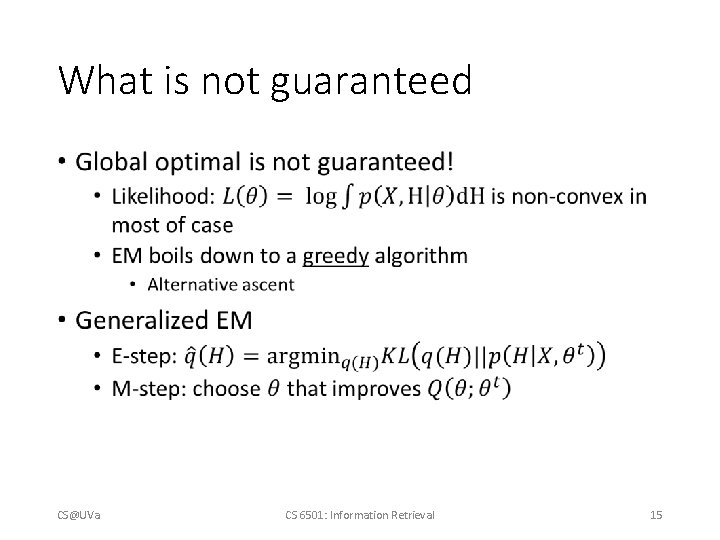

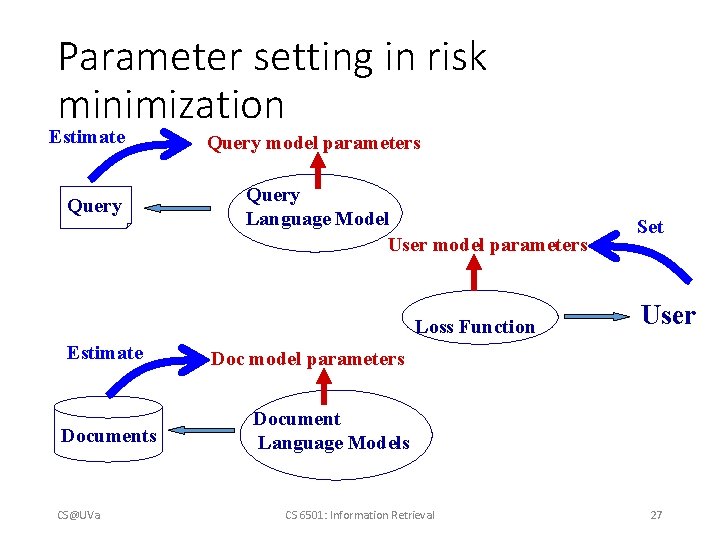

Estimating λ using a mixture model [Zhai & Lafferty 02]

The origin of each query term is unknown: was it generated by the user background model or by the topic (document) model? For each relevant document $d_i$ ($i = 1, \dots, N$, with $p(w|d_i)$ estimated in stage-1), a term of the query $Q = q_1 \dots q_m$ is drawn from $(1-\lambda)\,p(w|d_i) + \lambda\, p(w|U)$, so this hidden origin is the missing data that EM handles.
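A minimal EM sketch for this mixture, assuming the query likelihood is a π-weighted sum over documents of per-term mixtures $(1-\lambda)p(q_j|d_i) + \lambda p(q_j|U)$. The function name `estimate_lambda`, the uniform initialization of π, the fixed iteration count, and the toy data are all hypothetical choices; the stage-1 document models are assumed to be already smoothed so that every query term has non-zero probability.

```python
import math

def estimate_lambda(query, doc_models, p_user, lam=0.5, iters=50):
    """EM sketch for the stage-2 weight lambda and document weights pi.

    query:      list of query terms q_1..q_m
    doc_models: list of dicts p(w|d_i), stage-1 smoothed (> 0 on query terms)
    p_user:     dict p(w|U), user background model (> 0 on query terms)
    """
    n, m = len(doc_models), len(query)
    log_pi = [-math.log(n)] * n  # uniform initial document weights
    for _ in range(iters):
        # E-step, part 1: posterior over which document generated the query,
        # computed in log space so long queries do not underflow
        log_w = [
            lp + sum(math.log((1 - lam) * p_d[q] + lam * p_user[q]) for q in query)
            for lp, p_d in zip(log_pi, doc_models)
        ]
        top = max(log_w)
        log_norm = top + math.log(sum(math.exp(x - top) for x in log_w))
        log_pi = [x - log_norm for x in log_w]  # M-step for pi: the posterior
        pi = [math.exp(x) for x in log_pi]
        # E-step, part 2 + M-step for lambda: expected fraction of query terms
        # that came from the user background model (using the old lambda)
        bg = 0.0
        for w_i, p_d in zip(pi, doc_models):
            for q in query:
                mix = (1 - lam) * p_d[q] + lam * p_user[q]
                bg += w_i * lam * p_user[q] / mix
        lam = bg / m
    return lam, [math.exp(x) for x in log_pi]


query = ["the", "retrieval", "the"]                 # hypothetical toy query
docs = [{"the": 0.05, "retrieval": 0.3},            # hypothetical stage-1 models
        {"the": 0.05, "retrieval": 0.01}]
p_u = {"the": 0.6, "retrieval": 0.001}              # hypothetical p(w|U)
lam, pi = estimate_lambda(query, docs, p_u)
```

Because "the" is common in the background model, EM attributes most of its occurrences to p(w|U), and the estimated λ ends up well above its initial value of 0.5.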

Variants of basic LM approach

- Different smoothing strategies
  - Hidden Markov Models (essentially linear interpolation) [Miller et al. 99]
  - Smoothing with an IDF-like reference model [Hiemstra & Kraaij 99]
  - Performance tends to be similar to the basic LM approach
  - Many other possibilities for smoothing [Chen & Goodman 98]
- Different priors
  - Link information as prior leads to significant improvement of Web entry page retrieval performance [Kraaij et al. 02]
  - Time as prior [Li & Croft 03]
  - PageRank as prior [Kurland & Lee 05]
- Passage retrieval [Liu & Croft 02]
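Linear interpolation of the document's maximum-likelihood model with the collection LM (often called Jelinek-Mercer smoothing) can be sketched as follows. This is a generic illustration with a fixed weight λ; the name `jm_prob` and the toy data are hypothetical, and the cited HMM system may differ in its details.

```python
from collections import Counter

def jm_prob(word, doc_tokens, p_collection, lam):
    """Linear interpolation: (1 - lam) * p_ML(w|d) + lam * p(w|C)."""
    counts = Counter(doc_tokens)
    p_ml = counts[word] / len(doc_tokens)  # maximum-likelihood document model
    return (1 - lam) * p_ml + lam * p_collection.get(word, 0.0)


p_c = {"a": 0.5, "b": 0.25, "c": 0.25}  # hypothetical toy collection LM
doc = ["a", "b", "a"]
```

Dirichlet-prior smoothing is the same interpolation with a document-dependent weight λ = μ / (|d| + μ), which is one reason these smoothing variants tend to perform similarly.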

![Improving language models](https://slidetodoc.com/presentation_image_h2/f4806bb120c528a4effe3ee65cf609e7/image-18.jpg)

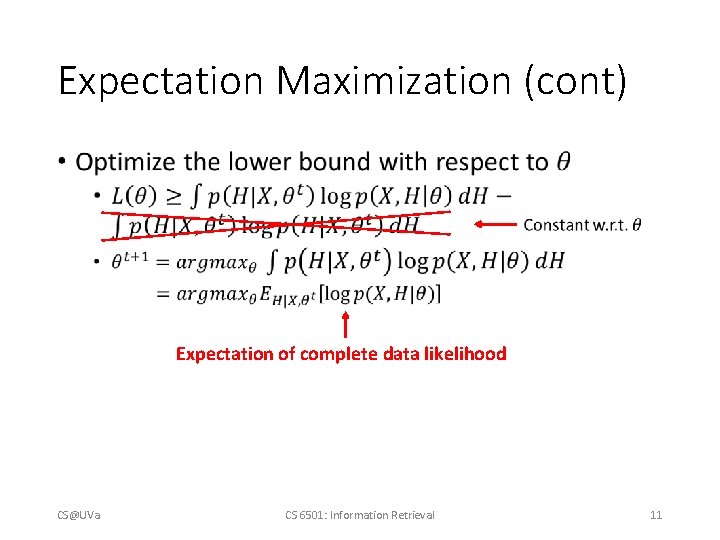

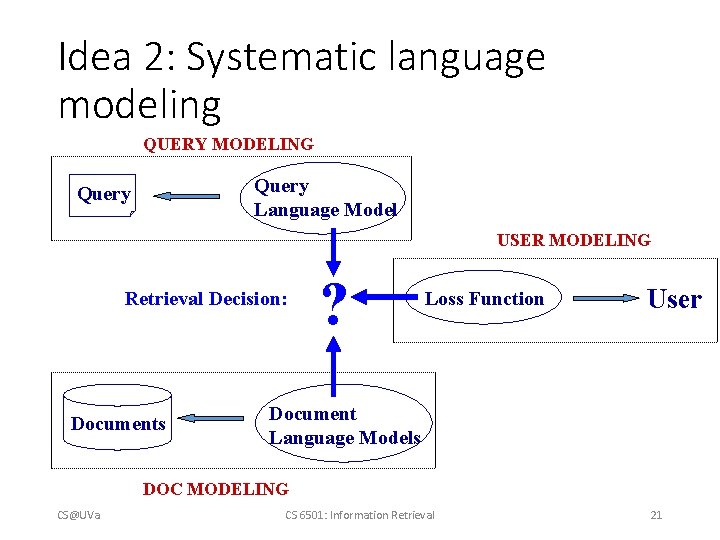

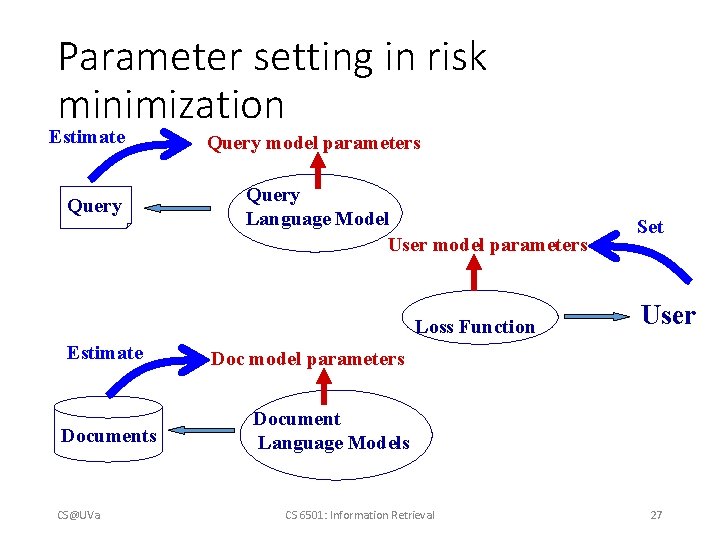

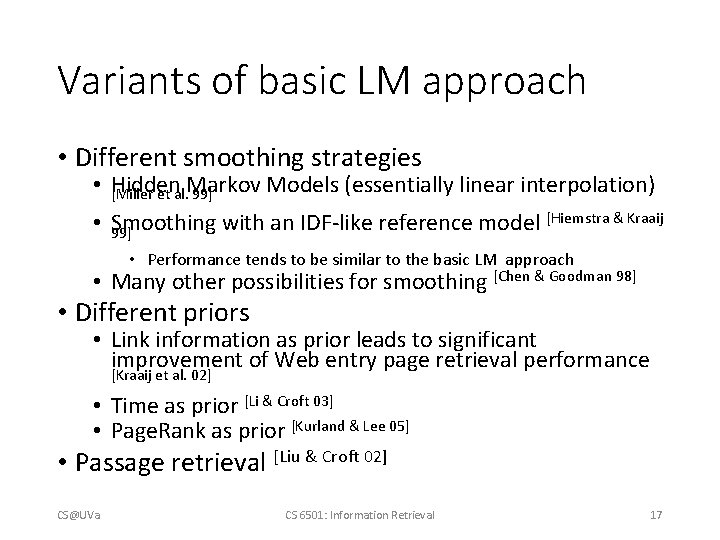

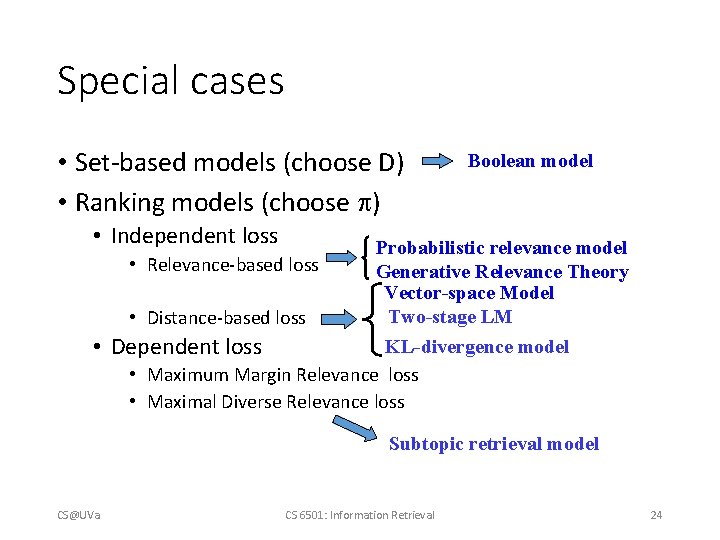

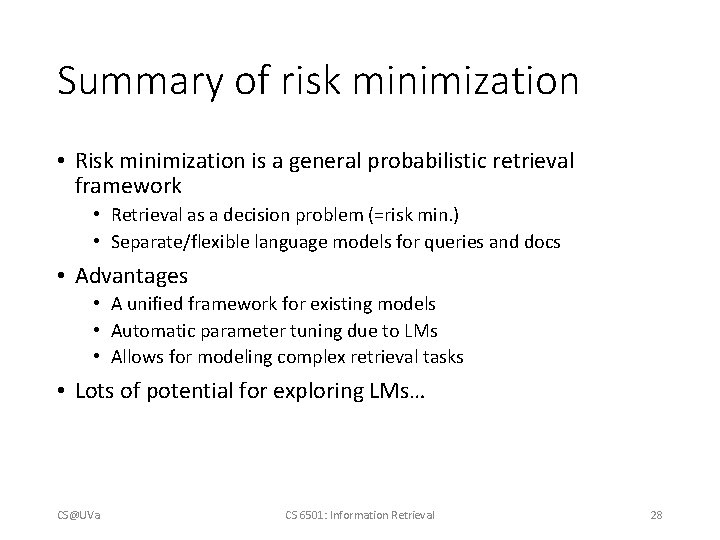

Improving language models

- Capturing limited dependencies
  - Bigrams/Trigrams [Song & Croft 99]
  - Grammatical dependency [Nallapati & Allan 02, Srikanth & Srihari 03, Gao et al. 04]
  - Generally insignificant improvement as compared with other extensions such as feedback
- Full Bayesian query likelihood [Zaragoza et al. 03]
  - Performance similar to the basic LM approach
- Translation model for p(Q|D, R) [Berger & Lafferty 99, Jin et al. 02, Cao et al. 05]
  - Addresses polysemy and synonyms
  - Improves over the basic LM methods, but computationally expensive
- Cluster-based smoothing/scoring [Liu & Croft 04, Kurland & Lee 04, Tao et al. 06]
  - Improves over the basic LM, but computationally expensive
- Parsimonious LMs [Hiemstra et al. 04]
  - Using a mixture model to "factor out" non-discriminative words
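One simple way to capture limited (bigram) dependencies is to interpolate a document bigram estimate with a unigram model. This is a generic sketch, not the exact model of Song & Croft; the name `bigram_prob`, the weight λ, the back-off rule for an unseen history, and the toy data are hypothetical.

```python
from collections import Counter

def bigram_prob(prev, word, doc_tokens, p_unigram, lam):
    """p(word | prev, d) = lam * p_ML(word | prev, d) + (1 - lam) * p_unigram(word)."""
    pairs = Counter(zip(doc_tokens, doc_tokens[1:]))  # adjacent word pairs
    prev_count = sum(c for (a, _), c in pairs.items() if a == prev)
    if prev_count == 0:
        # history never seen as a left context: fall back to the unigram model
        return p_unigram.get(word, 0.0)
    p_bi = pairs[(prev, word)] / prev_count
    return lam * p_bi + (1 - lam) * p_unigram.get(word, 0.0)


doc = ["a", "b", "a", "b"]       # hypothetical toy document
p_uni = {"a": 0.5, "b": 0.5}     # hypothetical smoothed unigram model
```

The unigram component keeps unseen word pairs from getting zero probability; a full retrieval model would also need the unigram model itself to be smoothed.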

A unified framework for IR: Risk Minimization

- Long-standing IR challenges
  - Improve IR theory
    - Develop theoretically sound and empirically effective models
    - Go beyond the limited traditional notion of relevance (independent, topical relevance)
  - Improve IR practice
    - Optimize retrieval parameters automatically
- Language models are promising tools …
  - How can we systematically exploit LMs in IR?
  - Can LMs offer anything hard/impossible to achieve in traditional IR?

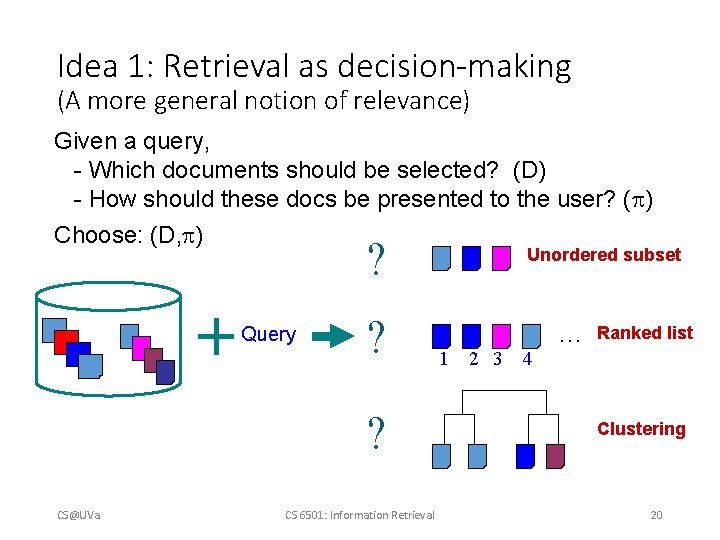

Idea 1: Retrieval as decision-making (a more general notion of relevance). Given a query:

- Which documents should be selected? (D)
- How should these docs be presented to the user? (π)

Choose: (D, π). Possible presentations include an unordered subset, a ranked list (1, 2, 3, 4, …), or a clustering.

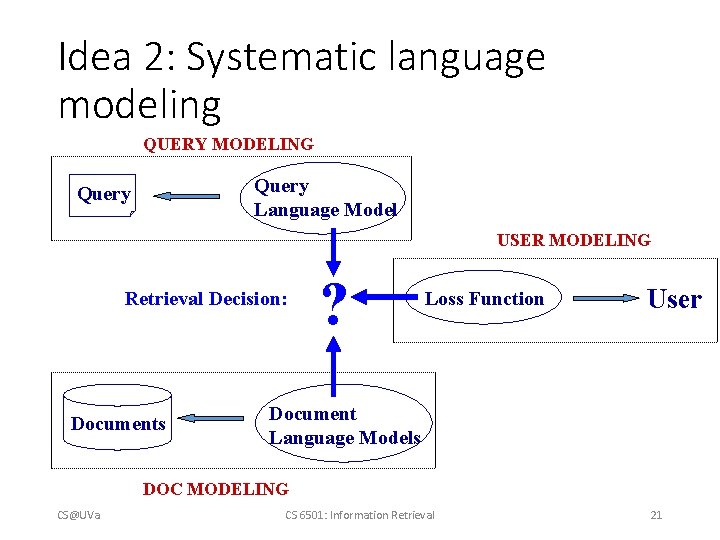

Idea 2: Systematic language modeling

[Figure: QUERY MODELING produces a query language model, USER MODELING produces a loss function, and DOC MODELING produces document language models; together they drive the retrieval decision over documents.]

![Generative model of document & query [Lafferty & Zhai 01b]](https://slidetodoc.com/presentation_image_h2/f4806bb120c528a4effe3ee65cf609e7/image-22.jpg)

Generative model of document & query [Lafferty & Zhai 01b]

[Figure: user U generates query q; source S generates document d; relevance R; nodes are marked as observed, partially observed, or inferred.]

Applying Bayesian Decision Theory [Lafferty & Zhai 01b, Zhai 02, Zhai & Lafferty 06]

[Figure: user U issues query q and source S provides doc set C, with hidden models θ_q, θ_1, …, θ_N; each choice (D_1, π_1), (D_2, π_2), …, (D_n, π_n) incurs a loss L.]

RISK MINIMIZATION: choose the (D, π) with the lowest Bayes risk.
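Written out in the usual risk-minimization form (notation assumed: $\theta$ collects the hidden query and document models, $L$ is the loss), the Bayes risk of a choice is its expected loss under the posterior, and the framework picks the minimizer:

$$(D^*, \pi^*) = \arg\min_{(D,\pi)} \int_{\Theta} L(D, \pi, \theta)\; p(\theta \mid q, U, C, S)\; d\theta$$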

Special cases

- Set-based models (choose D)
  - Boolean model
- Ranking models (choose π)
  - Independent loss (relevance-based loss, distance-based loss): probabilistic relevance model, Generative Relevance Theory, vector-space model, two-stage LM, KL-divergence model
  - Dependent loss (Maximal Marginal Relevance loss, Maximal Diverse Relevance loss): subtopic retrieval model

Optimal ranking for independent loss: the decision space is {rankings}. With sequential browsing and an independent loss, independent risk = independent scoring. This is the "risk ranking principle" [Zhai 02].
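Under independent loss each document is scored on its own and the list is sorted by score. A minimal sketch using the KL-divergence model listed among the special cases: the score is the negative cross-entropy of the query model against a smoothed document model, which ranks documents the same way as $-KL(\theta_Q \| \theta_D)$ because the query entropy is constant across documents. The names `kl_score` and `rank` and the toy models are hypothetical.

```python
import math

def kl_score(query_model, doc_model):
    """Negative cross-entropy; rank-equivalent to -KL(theta_Q || theta_D).

    doc_model must be smoothed (non-zero for every word in query_model)."""
    return sum(p_q * math.log(doc_model[w]) for w, p_q in query_model.items() if p_q > 0)

def rank(query_model, doc_models):
    """Independent loss: score each document separately, then sort by score."""
    scores = {d: kl_score(query_model, m) for d, m in doc_models.items()}
    return sorted(scores, key=scores.get, reverse=True)


q = {"a": 0.5, "b": 0.5}                     # hypothetical query model
docs = {"d1": {"a": 0.5, "b": 0.5},          # hypothetical smoothed doc models
        "d2": {"a": 0.9, "b": 0.1}}
```

Because no document's score depends on any other document, ranking reduces to a sort; dependent losses such as MMR break this property.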

Automatic parameter tuning

- Retrieval parameters are needed to
  - model different user preferences
  - customize a retrieval model to specific queries and documents
- Retrieval parameters in traditional models
  - EXTERNAL to the model, hard to interpret
  - Parameters are introduced heuristically to implement "intuition"
  - No principles to quantify them; they must be set empirically through many experiments
  - Still no guarantee for new queries/documents
- Language models make it possible to estimate parameters…

Parameter setting in risk minimization

[Figure: query model parameters are estimated for the query language model, user model parameters are set for the loss function, and doc model parameters are estimated for the document language models of the document set.]

Summary of risk minimization

- Risk minimization is a general probabilistic retrieval framework
  - Retrieval as a decision problem (= risk minimization)
  - Separate/flexible language models for queries and docs
- Advantages
  - A unified framework for existing models
  - Automatic parameter tuning due to LMs
  - Allows for modeling complex retrieval tasks
- Lots of potential for exploring LMs…

What you should know

- EM algorithm
- Risk minimization framework
