A quick introduction to SANs and Panasas ActivStor

- Slides: 19

A quick introduction to SANs and Panasas ActivStor

Storage Networking

Kevin Haines, e-Science Centre, STFC, RAL

Wikipedia defines a SAN: "A storage area network (SAN) is an architecture to attach remote computer storage devices (such as disk arrays, tape libraries, and optical jukeboxes) to servers in such a way that the devices appear as locally attached to the operating system."

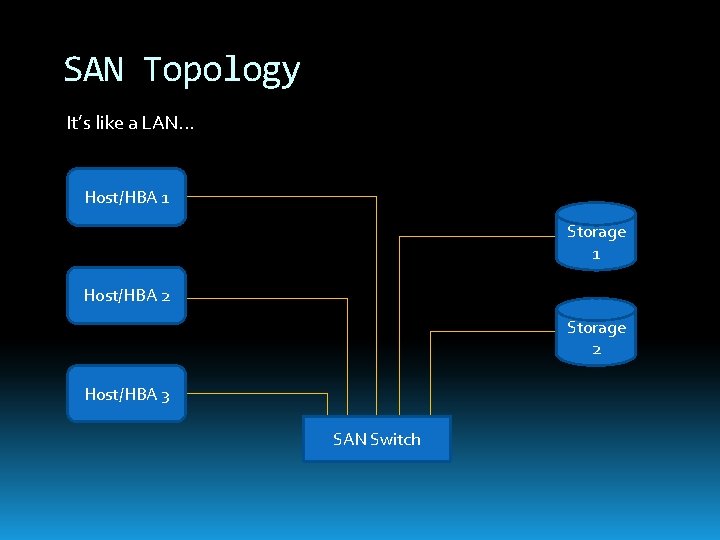

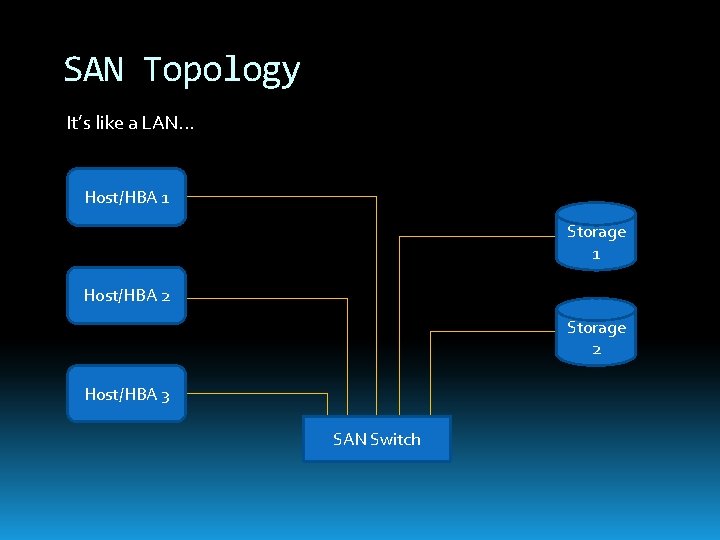

SAN Topology: It's like a LAN...

(Diagram: Host/HBA 1, Host/HBA 2, and Host/HBA 3 connect through a SAN switch to Storage 1 and Storage 2.)

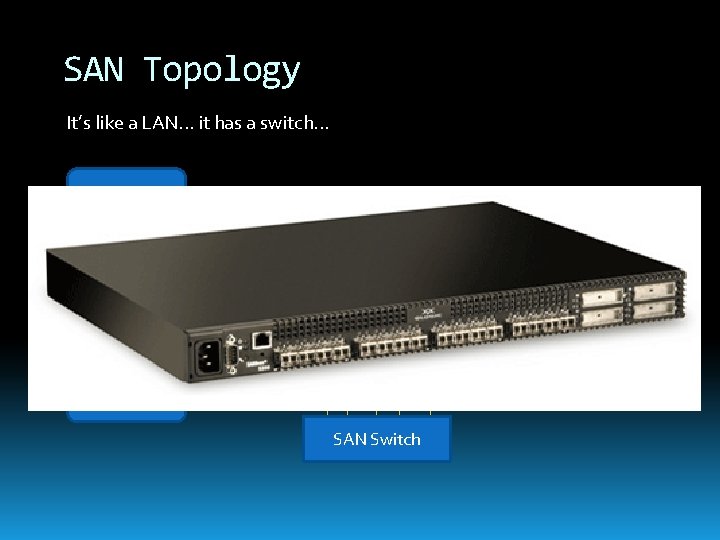

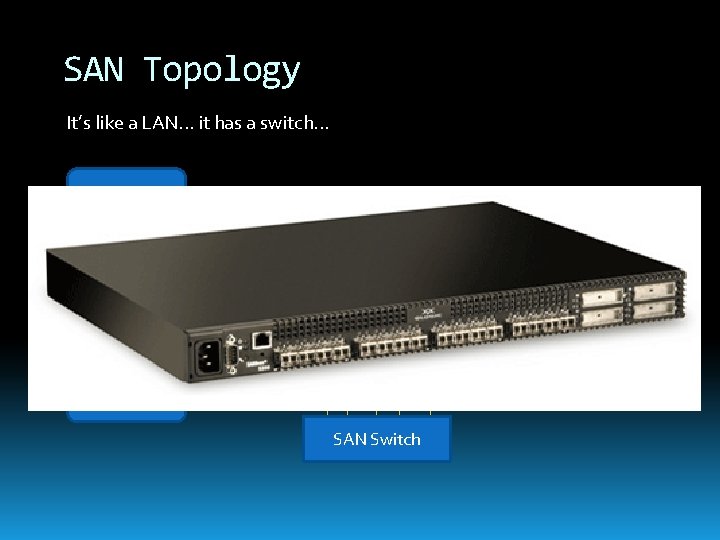

SAN Topology: It's like a LAN... it has a switch...

(Diagram: Host/HBA 1, Host/HBA 2, and Host/HBA 3 connect through a SAN switch to Storage 1 and Storage 2.)

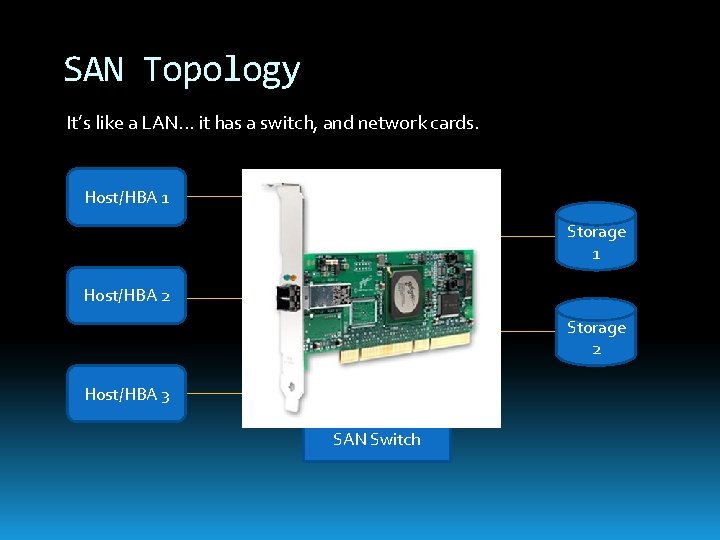

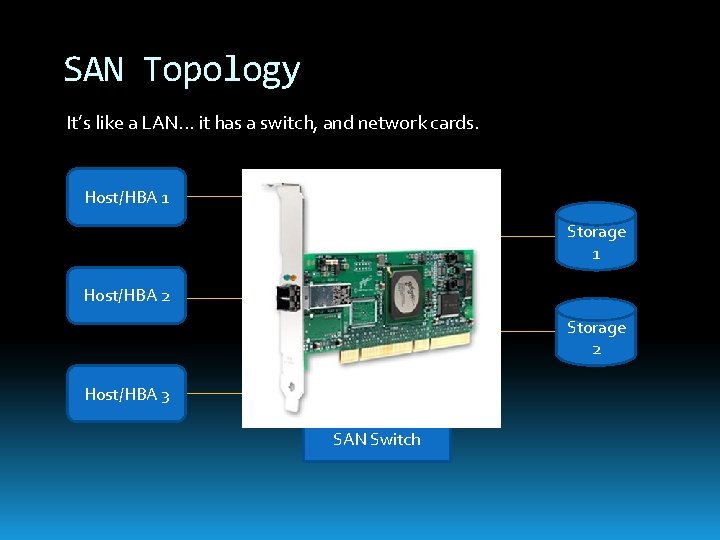

SAN Topology: It's like a LAN... it has a switch, and network cards (the HBAs).

(Diagram: Host/HBA 1, Host/HBA 2, and Host/HBA 3 connect through a SAN switch to Storage 1 and Storage 2.)

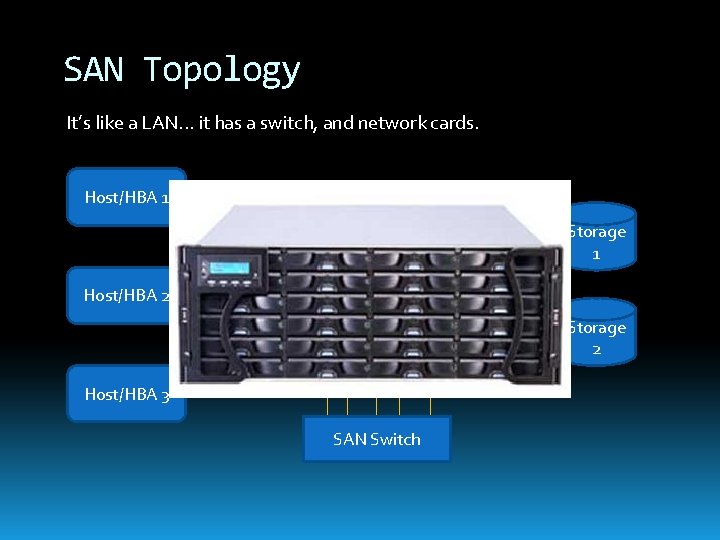

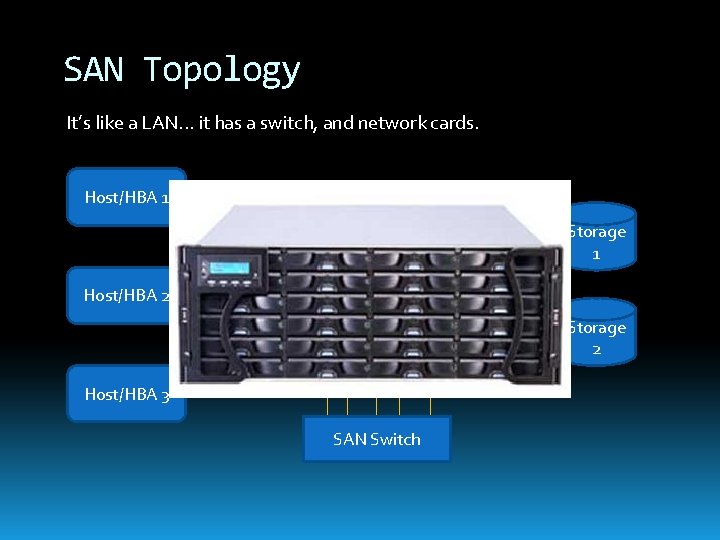


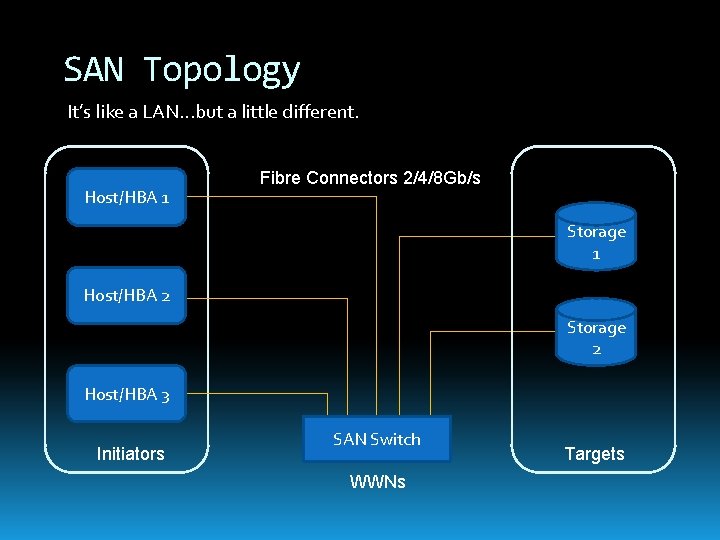

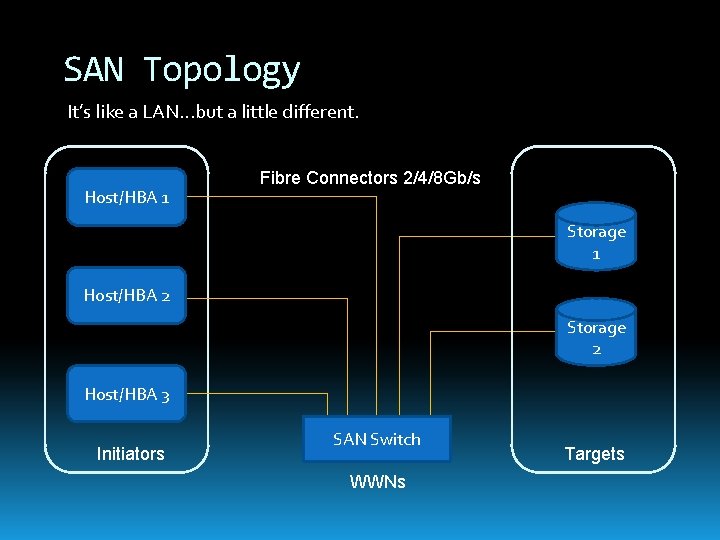

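SAN Topology: It's like a LAN... but a little different.

- Fibre connectors: 2/4/8 Gb/s
- The hosts' HBAs are the initiators
- The storage devices are the targets
- Devices are identified by WWNs (World Wide Names)

(Diagram: Host/HBA 1, Host/HBA 2, and Host/HBA 3 connect through a SAN switch to Storage 1 and Storage 2.)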
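WWNs play a role loosely comparable to MAC addresses on a LAN: a World Wide Name is a 64-bit identifier, conventionally written as eight colon-separated hex bytes. The following is a minimal Python sketch of normalising such a name. The function name `normalize_wwn` and the example value are hypothetical and used only for illustration.

```python
import re

def normalize_wwn(raw: str) -> str:
    """Return a WWN as eight lowercase, colon-separated hex bytes.

    Accepts input with or without ':' / '-' separators or whitespace.
    Raises ValueError if the input is not exactly 64 bits of hex.
    """
    digits = re.sub(r"[:\-\s]", "", raw).lower()
    if not re.fullmatch(r"[0-9a-f]{16}", digits):
        raise ValueError(f"not a 64-bit WWN: {raw!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))

# Made-up example value, not the WWN of any real device.
print(normalize_wwn("10000000C9ABCDEF"))
```

Normalising names this way makes it easier to compare the WWN reported by a host with the one entered in a switch or array configuration.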

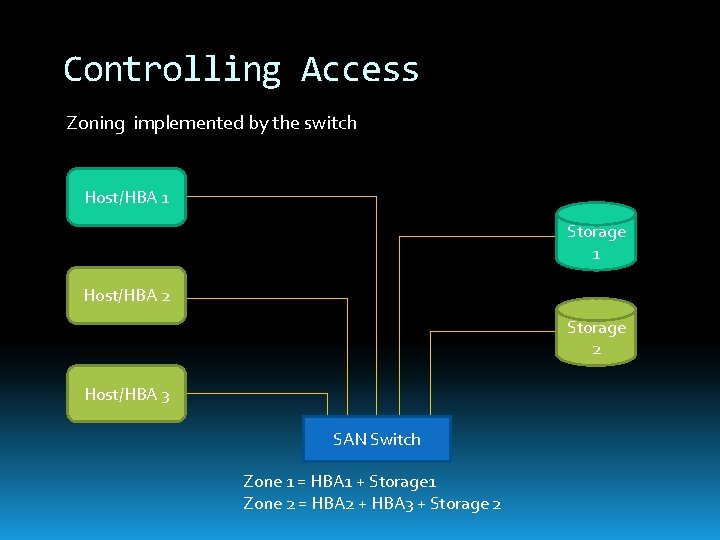

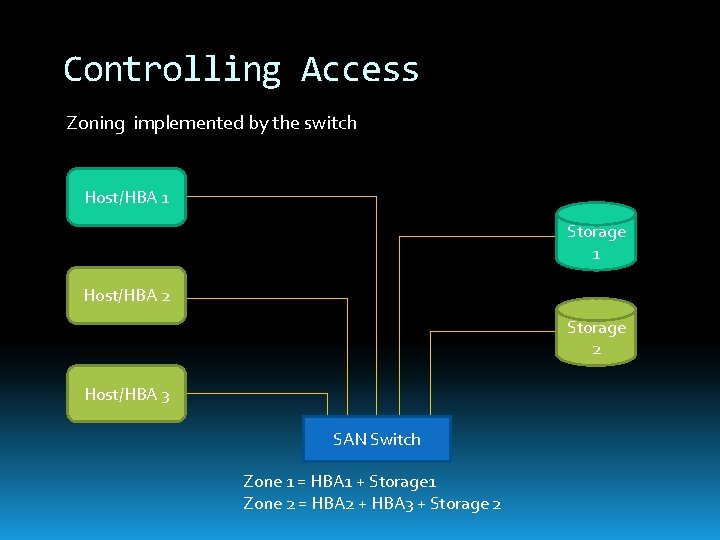

Controlling Access: Zoning, implemented by the switch

- Zone 1 = HBA 1 + Storage 1
- Zone 2 = HBA 2 + HBA 3 + Storage 2

(Diagram: Host/HBA 1, Host/HBA 2, and Host/HBA 3 connect through a SAN switch to Storage 1 and Storage 2.)
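The zoning rule can be sketched as a simple membership check: two devices may talk only if they share a zone. This is an illustrative Python model using the zone membership from the slide. The names `ZONES` and `can_communicate` are hypothetical; a real switch is configured through its own management interface and typically identifies members by WWN or switch port.

```python
# Zone membership as listed on the slide.
ZONES = {
    "Zone 1": {"HBA 1", "Storage 1"},
    "Zone 2": {"HBA 2", "HBA 3", "Storage 2"},
}

def can_communicate(a: str, b: str, zones=ZONES) -> bool:
    """Return True if devices a and b are members of at least one common zone."""
    return any(a in members and b in members for members in zones.values())

print(can_communicate("HBA 1", "Storage 1"))  # True: both are in Zone 1
print(can_communicate("HBA 1", "Storage 2"))  # False: no shared zone
print(can_communicate("HBA 3", "Storage 2"))  # True: both are in Zone 2
```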

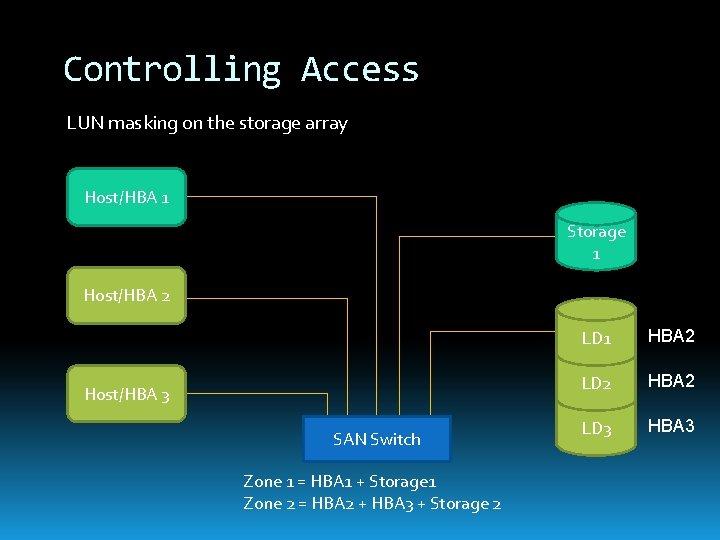

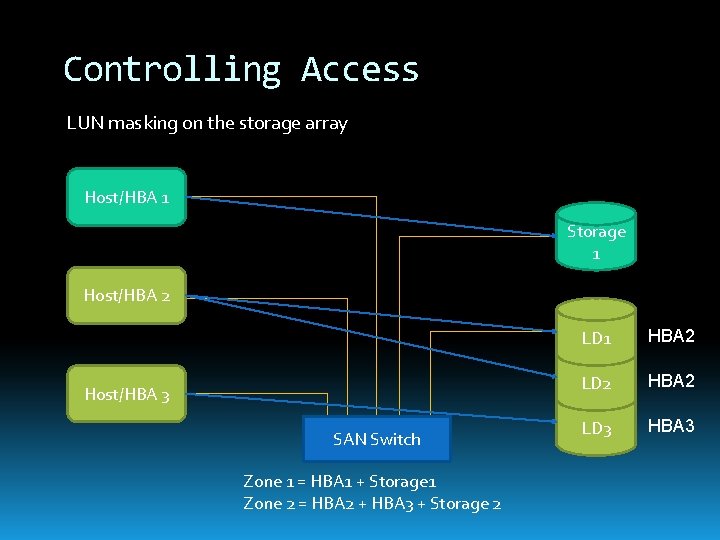

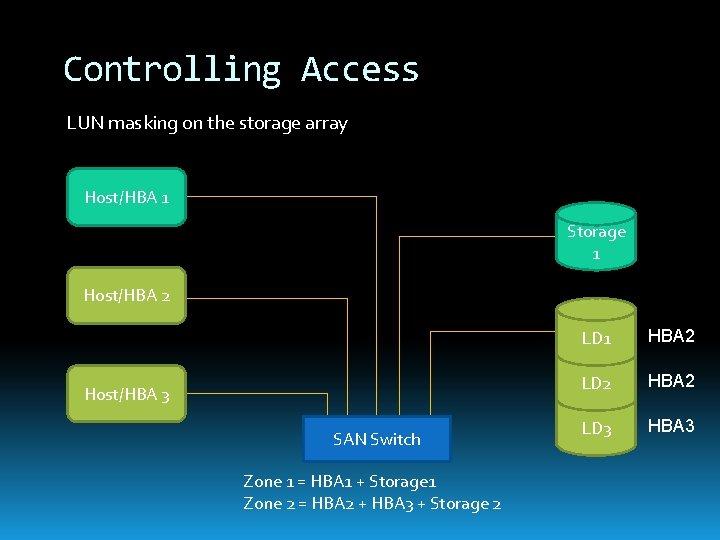

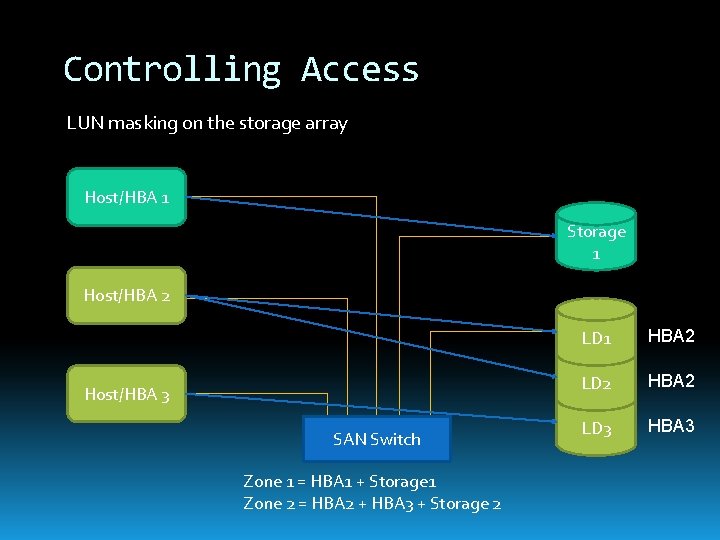

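Controlling Access: LUN masking, on the storage array

- Zone 1 = HBA 1 + Storage 1
- Zone 2 = HBA 2 + HBA 3 + Storage 2
- LD 1: HBA 2
- LD 2: HBA 2
- LD 3: HBA 3

(Diagram: Host/HBA 1, Host/HBA 2, and Host/HBA 3 connect through a SAN switch to the storage; the array presents logical disks LD 1 to LD 3.)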
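LUN masking narrows access further than zoning: even among HBAs that can reach the array, each logical disk (LD) is presented only to the HBAs listed for it. A minimal Python sketch of that lookup, using the LD-to-HBA assignments from the slide; `LUN_MASKS` and `visible_lds` are hypothetical names, and real arrays configure masking through vendor-specific tools.

```python
# Which HBAs each logical disk is presented to, as listed on the slide.
LUN_MASKS = {
    "LD 1": {"HBA 2"},
    "LD 2": {"HBA 2"},
    "LD 3": {"HBA 3"},
}

def visible_lds(hba: str, masks=LUN_MASKS) -> list:
    """Return the sorted list of logical disks the given HBA is allowed to see."""
    return sorted(ld for ld, allowed in masks.items() if hba in allowed)

print(visible_lds("HBA 2"))  # ['LD 1', 'LD 2']
print(visible_lds("HBA 3"))  # ['LD 3']
print(visible_lds("HBA 1"))  # []
```

So although HBA 2 and HBA 3 share a zone, each sees only its own logical disks.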


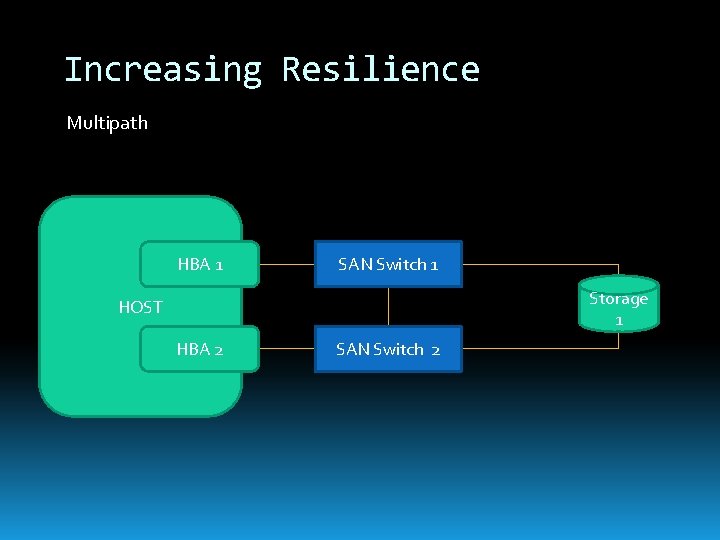

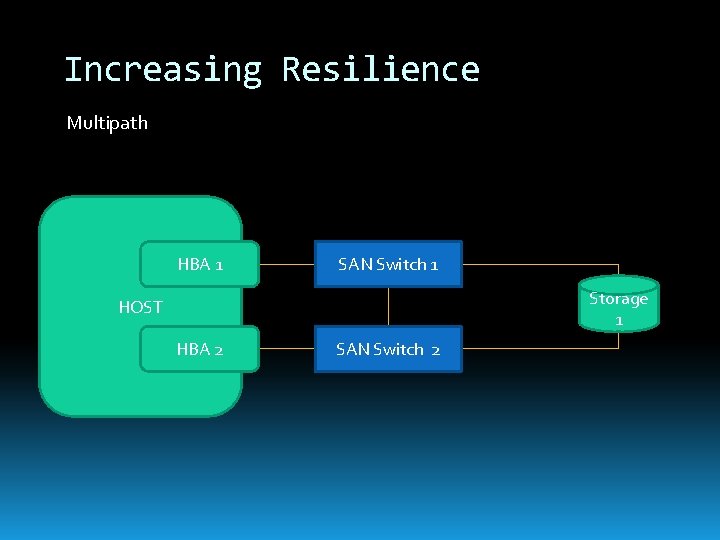

Increasing Resilience: Multipath

(Diagram: one host with two HBAs; HBA 1 connects through SAN Switch 1 and HBA 2 through SAN Switch 2, both reaching Storage 1.)
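The idea behind multipathing can be sketched as failover between two independent routes to the same storage. The Python model below is illustrative only: `Path` and `pick_path` are hypothetical names, and real multipath drivers also handle health probing, load balancing, and failback, none of which is shown here.

```python
from dataclasses import dataclass

@dataclass
class Path:
    hba: str
    switch: str
    healthy: bool = True

def pick_path(paths):
    """Return the first healthy path, or raise if no path is left."""
    for path in paths:
        if path.healthy:
            return path
    raise RuntimeError("no healthy path to storage")

# The two routes from the slide: each HBA goes through its own switch.
paths = [Path("HBA 1", "SAN Switch 1"), Path("HBA 2", "SAN Switch 2")]

print(pick_path(paths).switch)  # SAN Switch 1
paths[0].healthy = False        # simulate HBA 1 or SAN Switch 1 failing
print(pick_path(paths).switch)  # SAN Switch 2
```

Because the two routes share no HBA or switch, a single failure in either component leaves the storage reachable.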

Questions about SANs?

Panasas ActivStor


Panasas ActivStor

- Director Blades
  - Meta-data and volume management services
  - NFS access gateway

Panasas ActivStor

- Storage Blades
  - Two disks (up to 2 TB per blade)
  - 2 x Gb/s Ethernet (failover mode)
  - 11 per shelf (must be managed by a Director Blade)

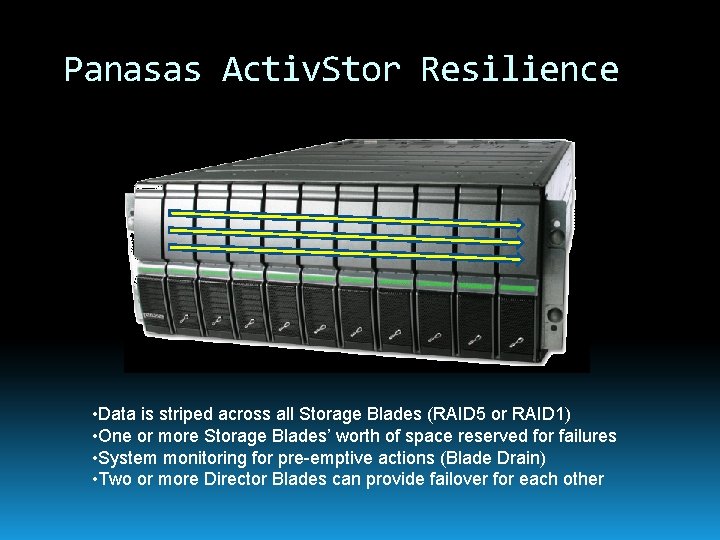

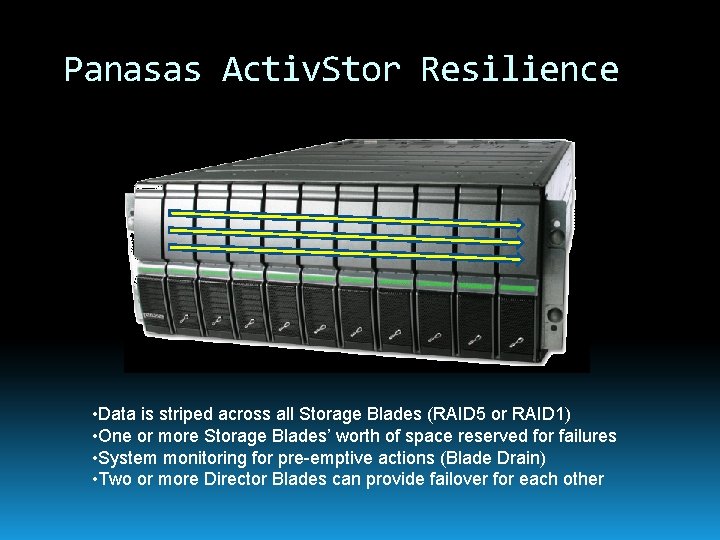

Panasas ActivStor Resilience

- Data is striped across all Storage Blades (RAID 5 or RAID 1)
- One or more Storage Blades' worth of space is reserved for failures
- System monitoring allows pre-emptive action (Blade Drain)
- Two or more Director Blades can provide failover for each other
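To illustrate why striping with RAID 5 survives the loss of a blade, here is a generic sketch of XOR parity in Python. It is not Panasas's implementation: the block contents, the three-data-blocks-plus-parity layout, and the helper `xor_blocks` are assumptions chosen only to show the principle.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of a list of equal-length byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# One stripe: three data blocks, each imagined on a different blade.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)  # parity block, stored on a fourth blade

# Simulate losing the blade holding data[1]: XOR the survivors with the parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data[1])  # True
```

The rebuilt block needs somewhere to go, which is why spare capacity is reserved for failures.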


Panasas ActivStor Performance

- DirectFLOW clients
  - Available for most major Linux distributions
  - Communicate directly with the Storage Blades
  - RAID computations are performed by the client
  - ~5000 supported (12000 option)
- Results support the scalability claim
  - RAL: 1.2 GB/s from 22 nodes (2 shelves)
  - RoadRunner: 60 GB/s (103 shelves)
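A quick way to check the scalability claim is to compare throughput per shelf at the two sites. The short Python calculation below uses only the figures quoted above. The per-node figure assumes 1 GB = 1000 MB, and the variable names are illustrative.

```python
# Figures quoted on the slide.
ral_gb_s, ral_nodes, ral_shelves = 1.2, 22, 2
rr_gb_s, rr_shelves = 60.0, 103

ral_per_shelf = ral_gb_s / ral_shelves           # GB/s per shelf at RAL
rr_per_shelf = rr_gb_s / rr_shelves              # GB/s per shelf at RoadRunner
ral_per_node_mb = ral_gb_s * 1000 / ral_nodes    # MB/s per client node (1 GB = 1000 MB assumed)

print(f"RAL: {ral_per_shelf:.3f} GB/s per shelf, {ral_per_node_mb:.1f} MB/s per node")
print(f"RoadRunner: {rr_per_shelf:.3f} GB/s per shelf")
```

Per-shelf throughput comes out at roughly 0.6 GB/s in both cases, which is what near-linear scaling with the number of shelves would look like.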