1/17: State Space and Plan-space Planning

- Slides: 37

1/17: State Space and Plan-space Planning

Office hours: 4:30 to 5:30 pm, T/Th

CSE 574: Planning & Learning, Subbarao Kambhampati

Do you know...

- Factored vs. explicit state models
- Plan vs. policy
- STRIPS assumption
- Conditional effects
  - Why is the conditional effect P=>Q allowed but the disjunction P V Q not allowed in deterministic planning?
  - And the connection to executability
- Multi-valued fluents
- Durative vs. non-durative actions
- Partial vs. complete state
- Useful analogies
  - “preconditions” are like “goals”
  - “effects” are like “init state literals”

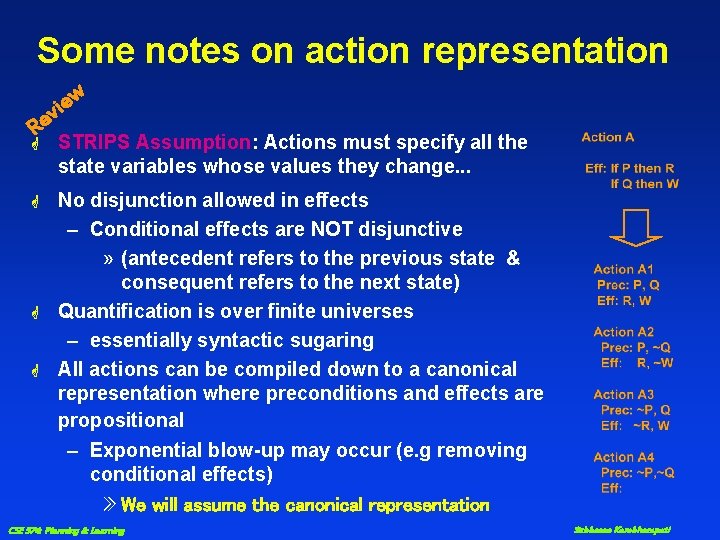

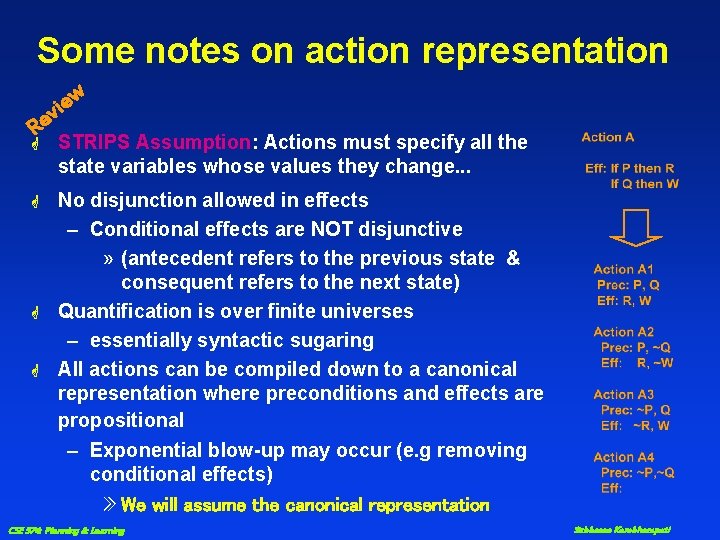

Some notes on action representation (Review)

- STRIPS assumption: actions must specify all the state variables whose values they change.
- No disjunction allowed in effects
  - Conditional effects are NOT disjunctive (the antecedent refers to the previous state and the consequent refers to the next state)
- Quantification is over finite universes
  - essentially syntactic sugaring
- All actions can be compiled down to a canonical representation where preconditions and effects are propositional
  - Exponential blow-up may occur (e.g., when removing conditional effects)
  - We will assume the canonical representation

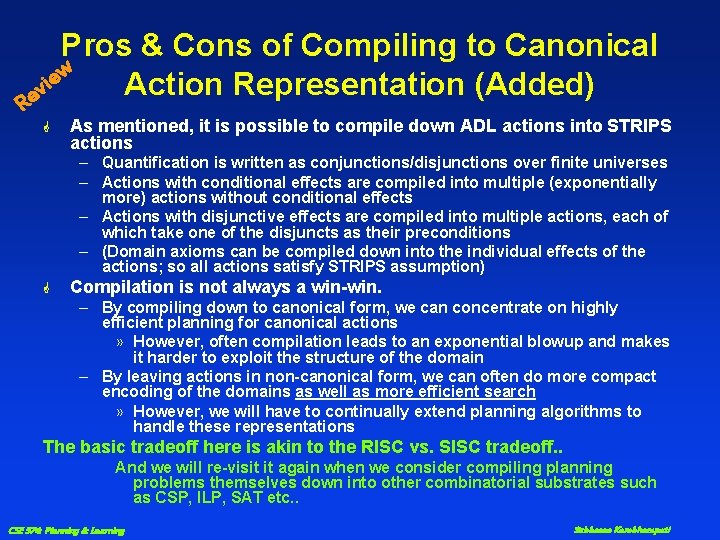

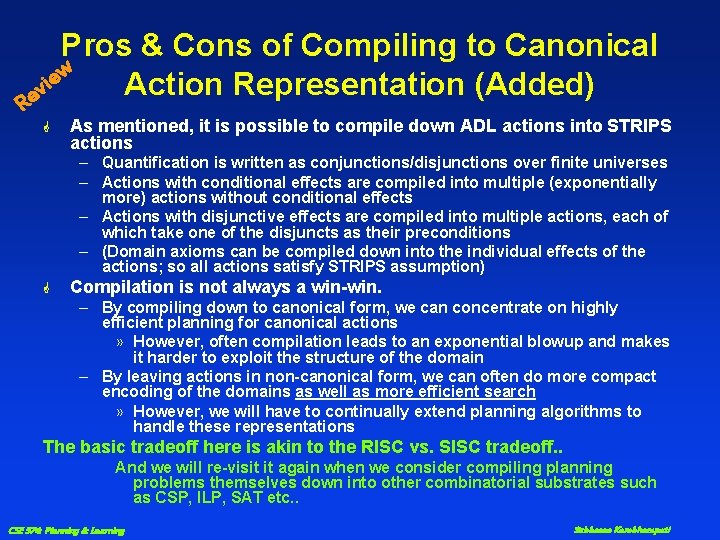

Pros & Cons of Compiling to Canonical Action Representation (Added) (Review)

- As mentioned, it is possible to compile down ADL actions into STRIPS actions
  - Quantification is written as conjunctions/disjunctions over finite universes
  - Actions with conditional effects are compiled into multiple (exponentially more) actions without conditional effects
  - Actions with disjunctive preconditions are compiled into multiple actions, each of which takes one of the disjuncts as its precondition
  - (Domain axioms can be compiled down into the individual effects of the actions, so all actions satisfy the STRIPS assumption)
- Compilation is not always a win-win.
  - By compiling down to canonical form, we can concentrate on highly efficient planning for canonical actions
    - However, compilation often leads to an exponential blowup and makes it harder to exploit the structure of the domain
  - By leaving actions in non-canonical form, we can often get a more compact encoding of the domains as well as more efficient search
    - However, we will have to continually extend planning algorithms to handle these representations

The basic tradeoff here is akin to the RISC vs. CISC tradeoff, and we will revisit it when we consider compiling planning problems themselves down into other combinatorial substrates such as CSP, ILP, SAT, etc.
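The exponential growth from compiling away conditional effects can be sketched in a few lines of Python. This is only an illustration under stated assumptions: literals are strings with a `~` prefix for negation, each conditional effect has a single-literal antecedent, and the names `negate` and `compile_conditional` are hypothetical helpers, not part of any planner. Inconsistent variants are not filtered out.

```python
from itertools import product

def negate(literal):
    """Flip a literal written as 'P' or '~P'."""
    return literal[1:] if literal.startswith("~") else "~" + literal

def compile_conditional(name, pre, effects, cond_effects):
    """Split one action with k conditional effects into 2**k plain actions.

    Each variant commits, through its preconditions, to which conditional
    effects fire (antecedent required) and which do not (antecedent negated).
    """
    compiled = []
    for fires in product([True, False], repeat=len(cond_effects)):
        new_pre, new_eff = set(pre), set(effects)
        for fire, (antecedent, consequent) in zip(fires, cond_effects):
            if fire:
                new_pre.add(antecedent)
                new_eff.add(consequent)
            else:
                new_pre.add(negate(antecedent))
        compiled.append((f"{name}_{len(compiled)}",
                         frozenset(new_pre), frozenset(new_eff)))
    return compiled

# Hypothetical action A: precondition W, effect X, plus R=>P and J=>~Q.
variants = compile_conditional("A", {"W"}, {"X"}, [("R", "P"), ("J", "~Q")])
print(len(variants))
```

With two conditional effects the single action becomes four canonical actions; each additional conditional effect doubles the count.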

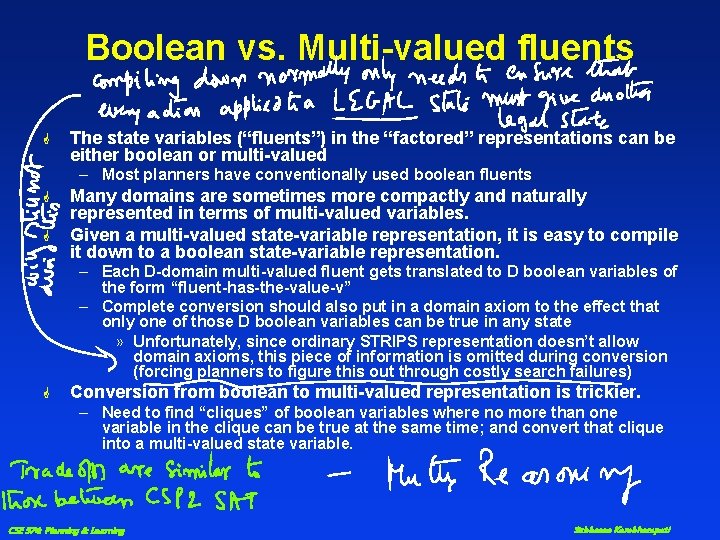

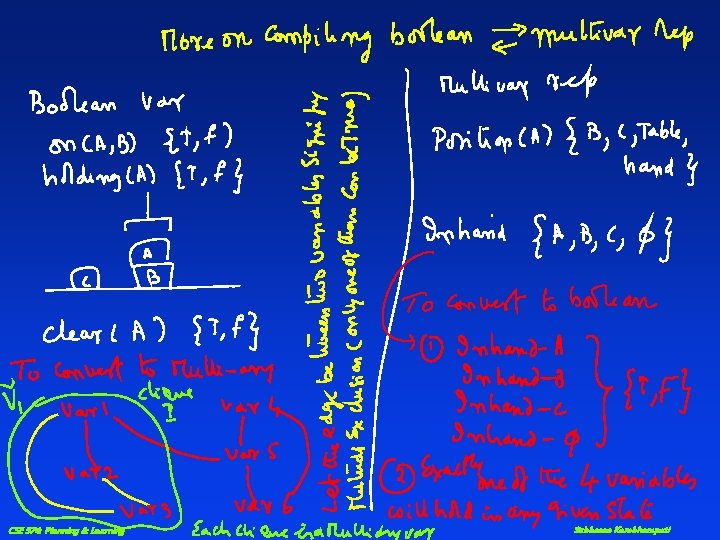

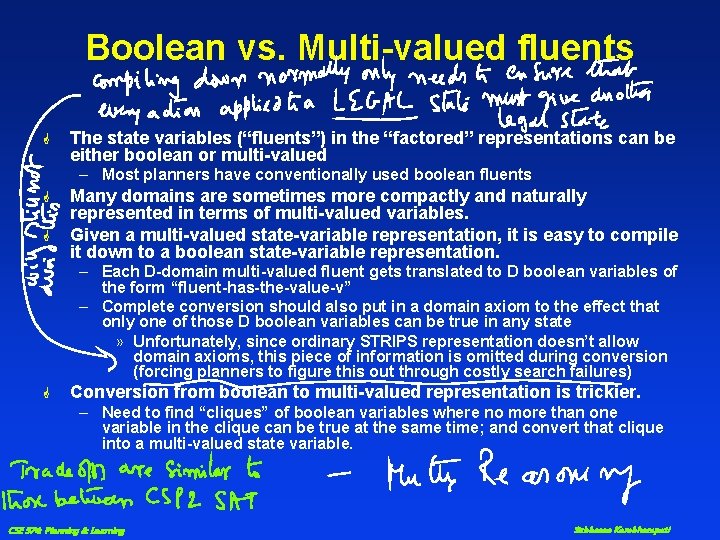

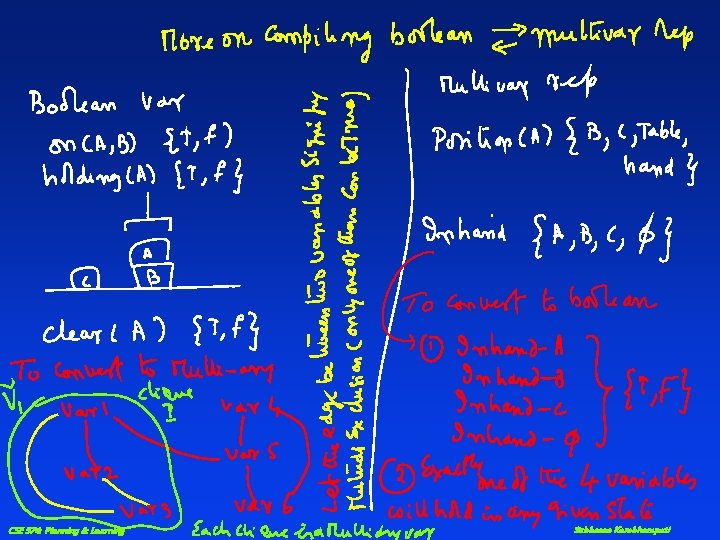

Boolean vs. Multi-valued fluents

- The state variables (“fluents”) in the “factored” representations can be either boolean or multi-valued
  - Most planners have conventionally used boolean fluents
- Many domains are more compactly and naturally represented in terms of multi-valued variables.
- Given a multi-valued state-variable representation, it is easy to compile it down to a boolean state-variable representation.
  - Each multi-valued fluent with a domain of D values gets translated to D boolean variables of the form “fluent-has-the-value-v”
  - A complete conversion should also add a domain axiom to the effect that only one of those D boolean variables can be true in any state
    - Unfortunately, since the ordinary STRIPS representation doesn’t allow domain axioms, this piece of information is omitted during conversion (forcing planners to figure this out through costly search failures)
- Conversion from boolean to multi-valued representation is trickier.
  - Need to find “cliques” of boolean variables where no more than one variable in the clique can be true at the same time, and convert each such clique into a multi-valued state variable.
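A minimal Python sketch of the multi-valued to boolean direction follows. The fluent name `loc-A` and its three values are made up for illustration, and the pairwise mutex list stands in for the "at most one is true" half of the domain axiom that an ordinary STRIPS encoding would drop.

```python
from itertools import combinations

def compile_fluent(fluent, domain):
    """Translate one multi-valued fluent into boolean propositions.

    Returns the D propositions 'fluent=value' plus the pairwise mutexes
    that a domain axiom would have to state explicitly.
    """
    props = [f"{fluent}={value}" for value in domain]
    mutexes = list(combinations(props, 2))
    return props, mutexes

def violates_axiom(true_props, mutexes):
    """True if a boolean state makes two mutually exclusive propositions true."""
    return any(a in true_props and b in true_props for a, b in mutexes)

# Hypothetical fluent: where block A is.
props, mutexes = compile_fluent("loc-A", ["table", "on-B", "hand"])
print(props)
print(violates_axiom({"loc-A=table", "loc-A=hand"}, mutexes))
```

A planner that only sees `props` can generate states such as the one checked in the last line; the mutex list is exactly the information it would otherwise have to rediscover through search failures.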


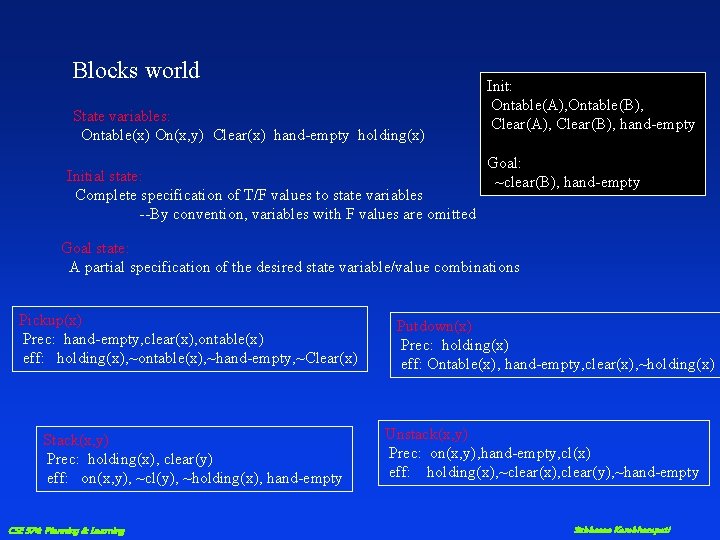

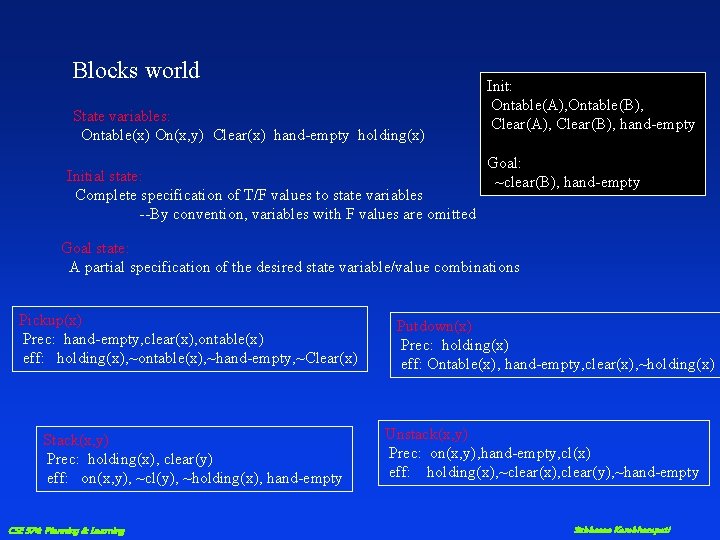

Blocks world

State variables: Ontable(x), On(x, y), Clear(x), hand-empty, holding(x)

Initial state: a complete specification of T/F values to state variables. By convention, variables with F values are omitted.

- Init: Ontable(A), Ontable(B), Clear(A), Clear(B), hand-empty

Goal state: a partial specification of the desired state variable/value combinations.

- Goal: ~Clear(B), hand-empty

Actions:

- Pickup(x)
  - Prec: hand-empty, Clear(x), Ontable(x)
  - Eff: holding(x), ~Ontable(x), ~hand-empty, ~Clear(x)
- Putdown(x)
  - Prec: holding(x)
  - Eff: Ontable(x), hand-empty, Clear(x), ~holding(x)
- Stack(x, y)
  - Prec: holding(x), Clear(y)
  - Eff: On(x, y), ~Clear(y), ~holding(x), hand-empty
- Unstack(x, y)
  - Prec: On(x, y), hand-empty, Clear(x)
  - Eff: holding(x), ~Clear(x), Clear(y), ~hand-empty

PDDL: a standard for representing actions

PDDL Domains

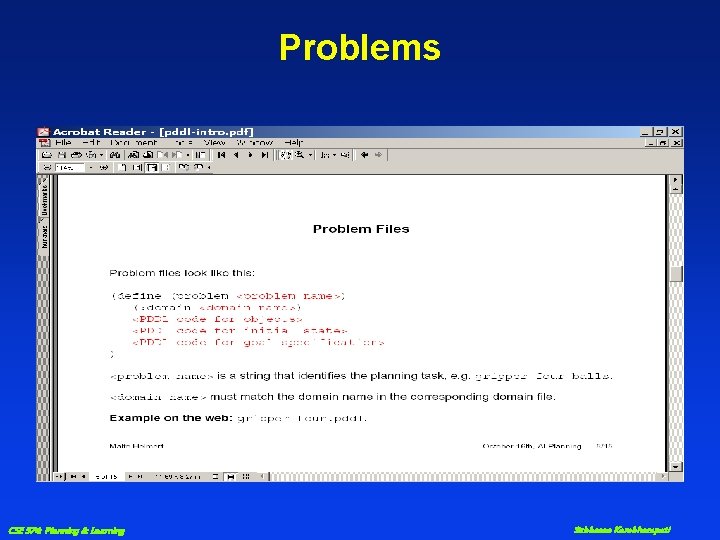

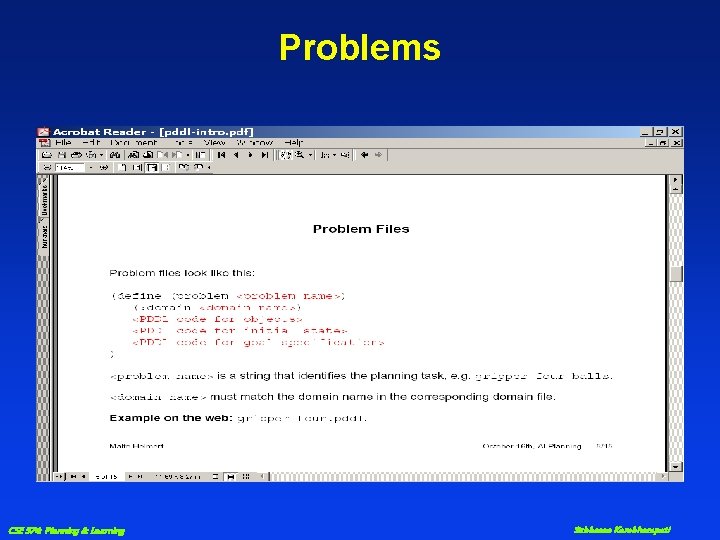

Problems

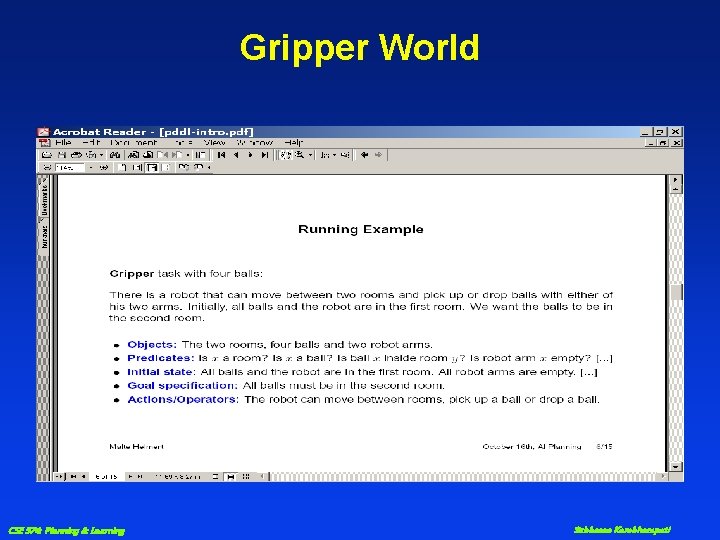

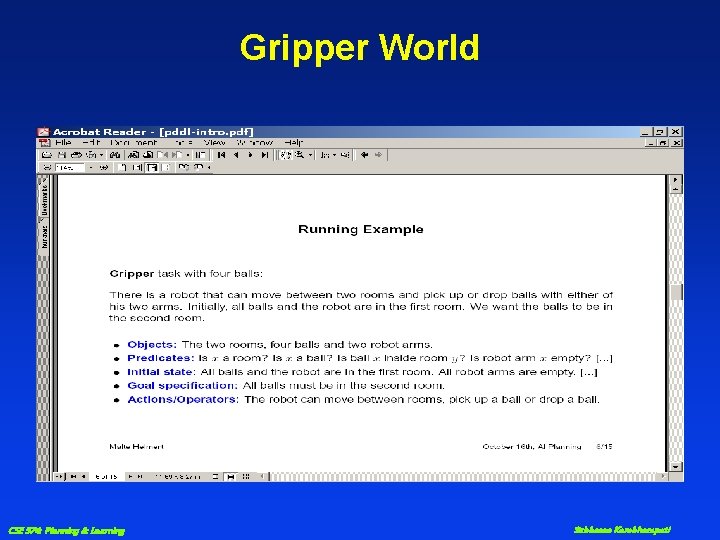

Gripper World

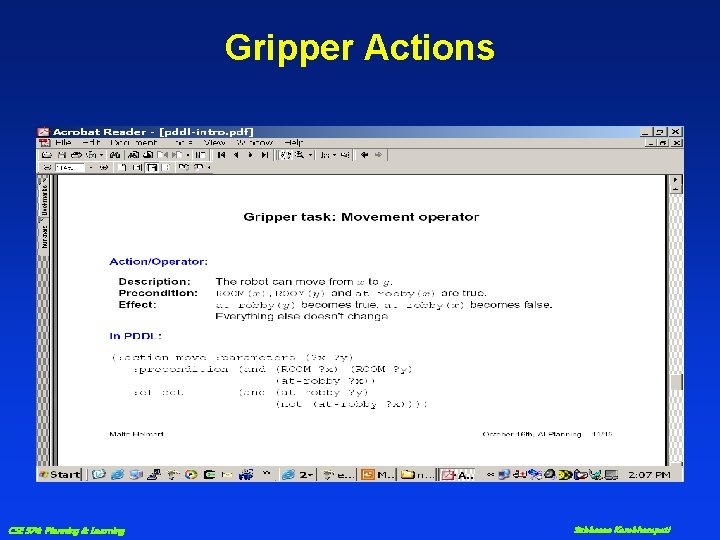

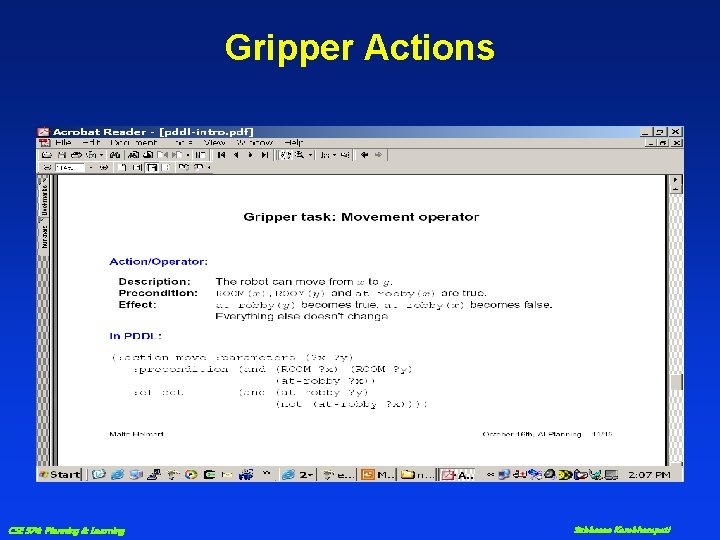

Gripper Actions

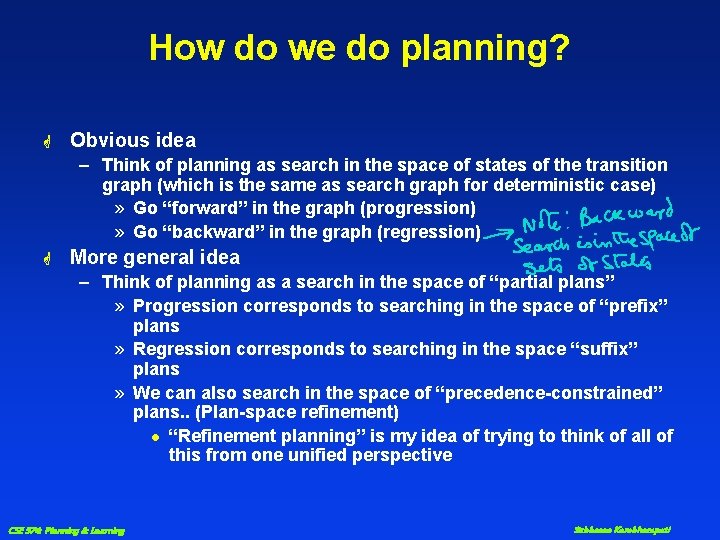

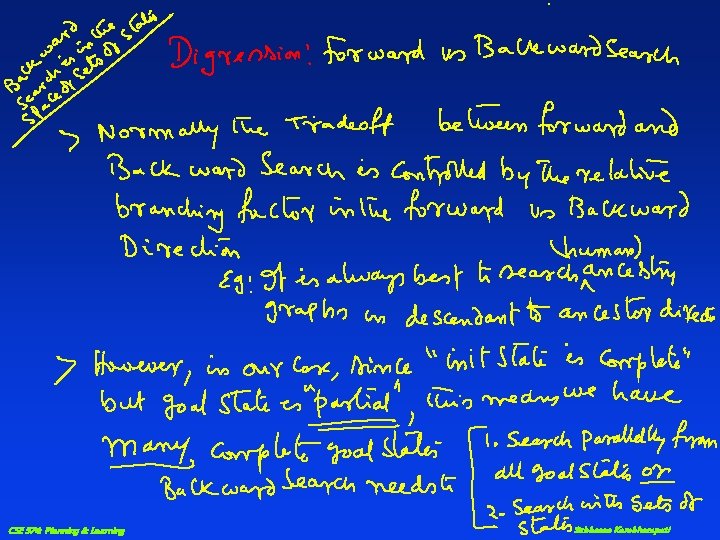

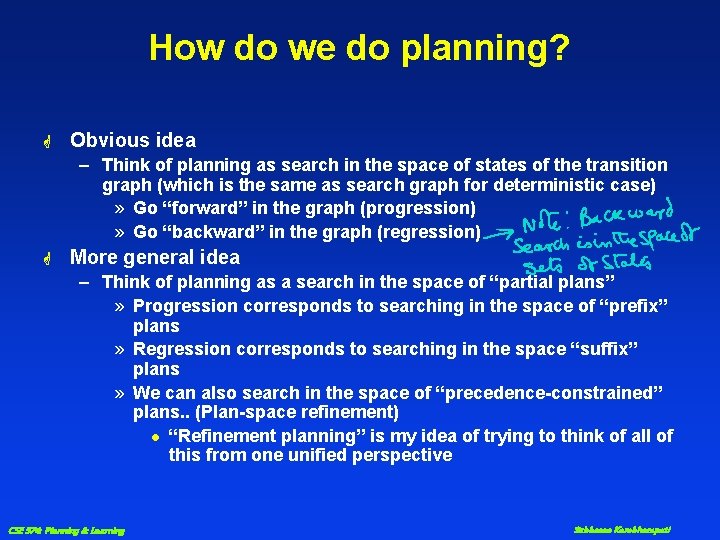

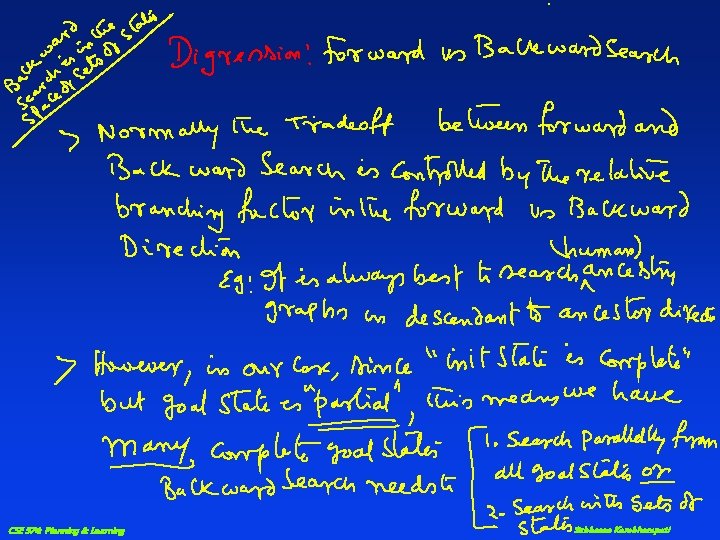

How do we do planning?

- Obvious idea
  - Think of planning as search in the space of states of the transition graph (which is the same as the search graph in the deterministic case)
    - Go “forward” in the graph (progression)
    - Go “backward” in the graph (regression)
- More general idea
  - Think of planning as a search in the space of “partial plans”
    - Progression corresponds to searching in the space of “prefix” plans
    - Regression corresponds to searching in the space of “suffix” plans
    - We can also search in the space of “precedence-constrained” plans (plan-space refinement)
      - “Refinement planning” is my idea of trying to think of all of this from one unified perspective

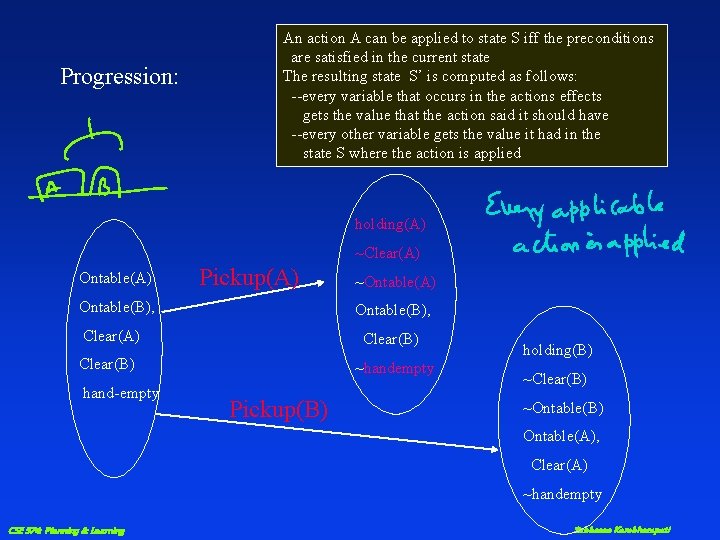

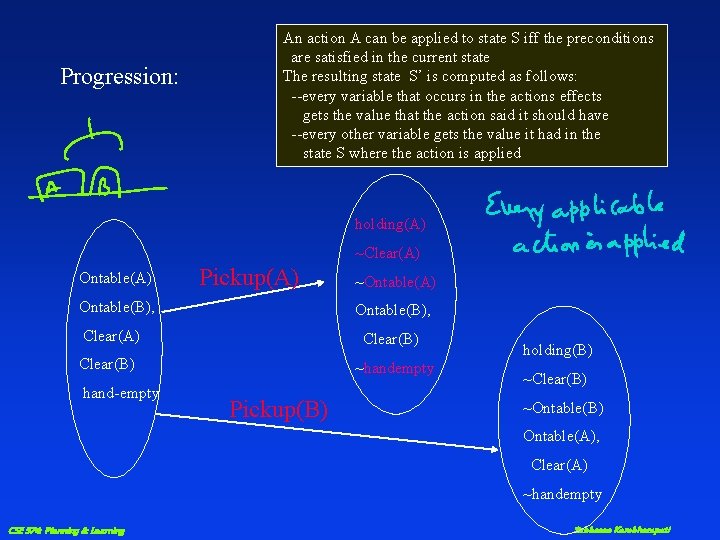

Progression

An action A can be applied to state S iff its preconditions are satisfied in S.

The resulting state S’ is computed as follows:

- every variable that occurs in the action’s effects gets the value that the action says it should have
- every other variable gets the value it had in the state S where the action is applied

Example (from the figure), starting from Ontable(A), Ontable(B), Clear(A), Clear(B), hand-empty:

- Pickup(A) gives holding(A), ~Clear(A), ~Ontable(A), Ontable(B), Clear(B), ~hand-empty
- Pickup(B) gives holding(B), ~Clear(B), ~Ontable(B), Ontable(A), Clear(A), ~hand-empty
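The progression rule can be sketched in Python as follows. The encoding is an assumption made for illustration: a complete state is the set of atoms that are true (everything else is false), and an action is a dictionary with `pre`, `add`, and `delete` sets, so positive effects go in `add` and negated effects in `delete`.

```python
def progress(state, action):
    """Apply an action to a complete state; return None if it is not applicable."""
    if not action["pre"] <= state:
        return None
    # Effects overwrite the variables they mention; everything else persists.
    return (state - action["delete"]) | action["add"]

# Pickup(A) from the blocks world, written in the add/delete encoding.
pickup_a = {
    "pre": frozenset({"hand-empty", "clear(A)", "ontable(A)"}),
    "add": frozenset({"holding(A)"}),
    "delete": frozenset({"hand-empty", "clear(A)", "ontable(A)"}),
}
init = frozenset({"ontable(A)", "ontable(B)", "clear(A)", "clear(B)", "hand-empty"})

after = progress(init, pickup_a)
print(sorted(after))
print(progress(after, pickup_a))  # the hand is no longer empty
```

Because the state is complete, applicability is a simple subset test; this is what makes progression cheap per step compared with reasoning over partial states.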

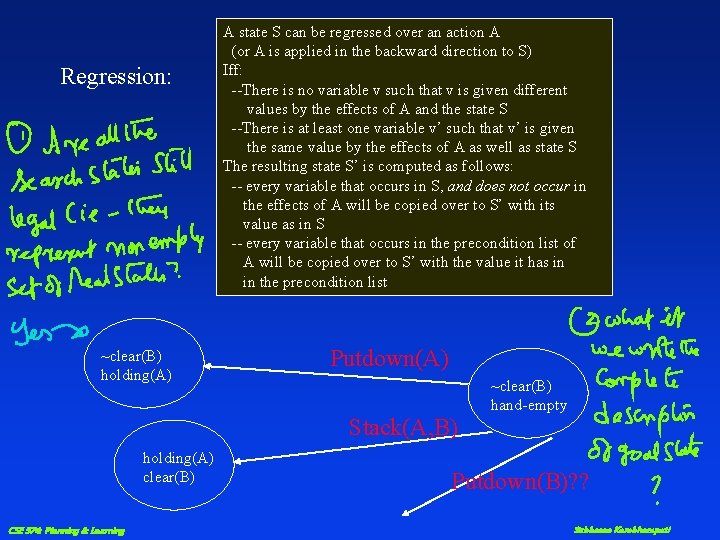

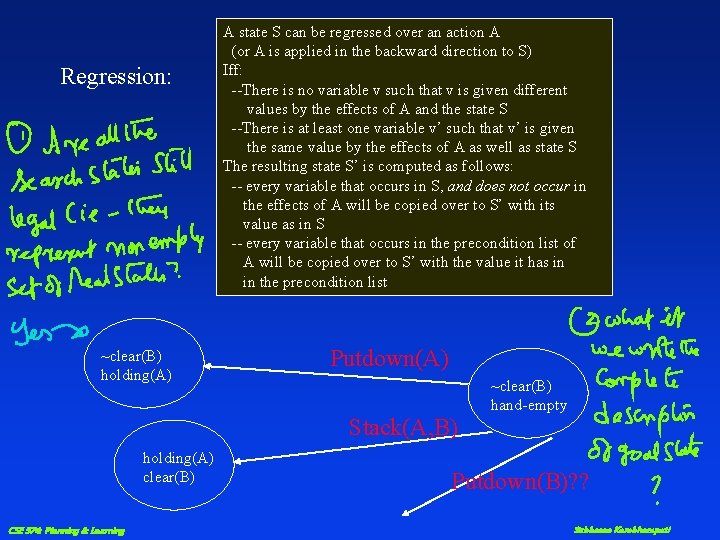

Regression

A state S can be regressed over an action A (or A is applied in the backward direction to S) iff:

- there is no variable v such that v is given different values by the effects of A and the state S
- there is at least one variable v’ such that v’ is given the same value by the effects of A as well as the state S

The resulting state S’ is computed as follows:

- every variable that occurs in S and does not occur in the effects of A is copied over to S’ with its value as in S
- every variable that occurs in the precondition list of A is copied over to S’ with the value it has in the precondition list

Example (from the figure), regressing the goal state ~clear(B), hand-empty:

- over Putdown(A): ~clear(B), holding(A)
- over Stack(A, B): holding(A), clear(B)
- over Putdown(B)??
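A small Python sketch of the regression rule, using the blocks-world actions defined earlier in the deck. The encoding is an assumption: partial states, preconditions, and effects are dictionaries from variable names to `True`/`False`. The final check, which rejects a result where a precondition contradicts a goal value that was carried over, is an addition of this sketch and is not stated on the slide.

```python
def regress(goal, action):
    """Regress a partial state over an action; return None if not possible."""
    pre, eff = action["pre"], action["eff"]
    # Consistency: no variable gets different values from the effects and the goal.
    if any(v in goal and goal[v] != val for v, val in eff.items()):
        return None
    # Relevance: at least one goal variable gets its value from the action.
    if not any(v in goal and goal[v] == val for v, val in eff.items()):
        return None
    result = {v: val for v, val in goal.items() if v not in eff}
    for v, val in pre.items():
        if v in result and result[v] != val:
            return None  # sketch-only check: precondition contradicts the remainder
        result[v] = val
    return result

goal = {"clear(B)": False, "hand-empty": True}
putdown_a = {"pre": {"holding(A)": True},
             "eff": {"ontable(A)": True, "hand-empty": True,
                     "clear(A)": True, "holding(A)": False}}
stack_a_b = {"pre": {"holding(A)": True, "clear(B)": True},
             "eff": {"on(A,B)": True, "clear(B)": False,
                     "holding(A)": False, "hand-empty": True}}
putdown_b = {"pre": {"holding(B)": True},
             "eff": {"ontable(B)": True, "hand-empty": True,
                     "clear(B)": True, "holding(B)": False}}

print(regress(goal, putdown_a))
print(regress(goal, stack_a_b))
print(regress(goal, putdown_b))
```

Under this encoding Putdown(B) is rejected by the consistency test, since it makes clear(B) true while the goal requires it to be false.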


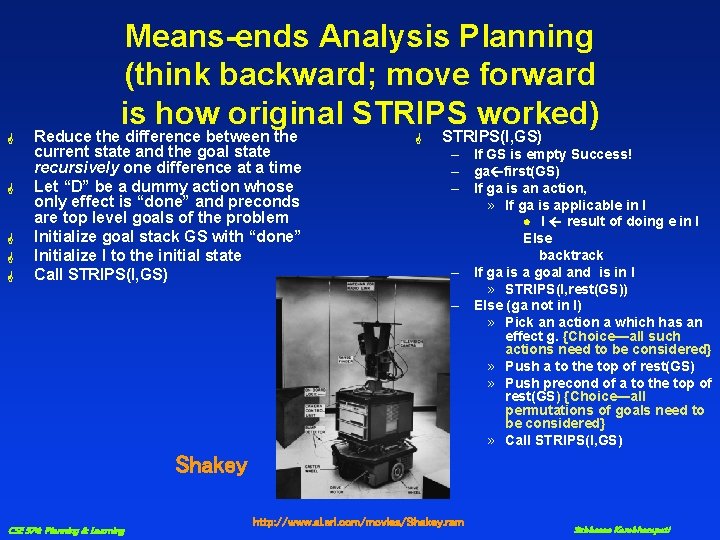

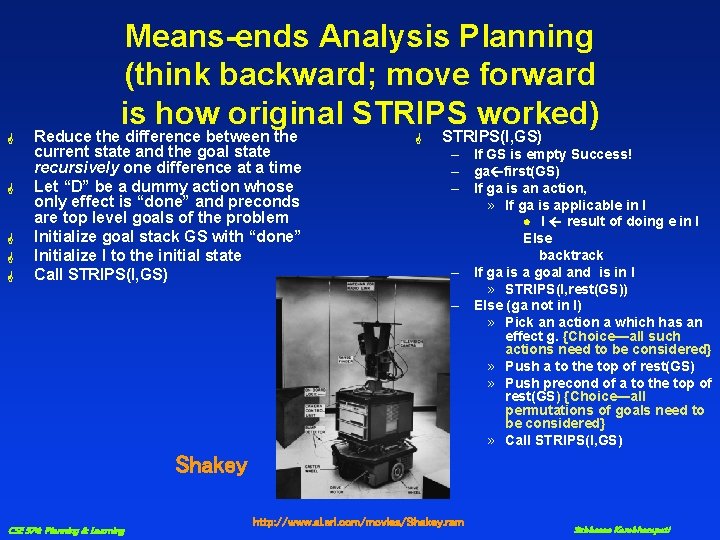

Means-ends Analysis Planning (“think backward; move forward” is how the original STRIPS worked)

- Reduce the difference between the current state and the goal state recursively, one difference at a time
- Let “D” be a dummy action whose only effect is “done” and whose preconditions are the top-level goals of the problem
- Initialize the goal stack GS with “done”; initialize I to the initial state; call STRIPS(I, GS)
- STRIPS(I, GS)
  - If GS is empty: Success!
  - ga ← first(GS)
  - If ga is an action
    - If ga is applicable in I: I ← result of doing ga in I, then continue with STRIPS(I, rest(GS))
    - Else backtrack
  - If ga is a goal and is in I
    - STRIPS(I, rest(GS))
  - Else (ga is a goal that is not in I)
    - Pick an action a which has ga as an effect {Choice: all such actions need to be considered}
    - Push a to the top of rest(GS)
    - Push the preconditions of a to the top of rest(GS) {Choice: all permutations of goals need to be considered}
    - Call STRIPS(I, GS)

Shakey: http://www.ai.sri.com/movies/Shakey.ram
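The goal-stack procedure can be sketched as a recursive Python function. This is a simplified illustration, not the original STRIPS system: goals are positive atoms only, actions are ground `(name, pre, add, delete)` tuples, the helper `act` and the `max_depth` cutoff (used here to stop runaway recursion) are assumptions of the sketch, and the dummy action is called `Done`.

```python
from itertools import permutations

def act(name, pre, add, delete=()):
    return (name, frozenset(pre), frozenset(add), frozenset(delete))

def strips(state, stack, actions, plan=(), depth=0, max_depth=50):
    """Goal-stack search; stack is a tuple of ('goal', atom) or ('action', a)."""
    if not stack:
        return list(plan)
    if depth > max_depth:
        return None
    (kind, item), rest = stack[0], stack[1:]
    if kind == "action":
        name, pre, add, delete = item
        if pre <= state:  # applicable: execute it and continue with the rest
            return strips((state - delete) | add, rest, actions,
                          plan + (name,), depth + 1, max_depth)
        return None       # not applicable: backtrack
    if item in state:     # goal already true
        return strips(state, rest, actions, plan, depth + 1, max_depth)
    for action in actions:                        # choice: achieving action
        if item in action[2]:
            for order in permutations(sorted(action[1])):  # choice: goal order
                new_stack = (tuple(("goal", p) for p in order)
                             + (("action", action),) + rest)
                result = strips(state, new_stack, actions, plan,
                                depth + 1, max_depth)
                if result is not None:
                    return result
    return None

actions = [
    act("Pickup(A)", ["hand-empty", "clear(A)", "ontable(A)"], ["holding(A)"],
        ["hand-empty", "clear(A)", "ontable(A)"]),
    act("Stack(A,B)", ["holding(A)", "clear(B)"], ["on(A,B)", "hand-empty"],
        ["holding(A)", "clear(B)"]),
    act("Done", ["on(A,B)"], ["done"]),  # dummy action for the top-level goal
]
init = frozenset(["ontable(A)", "ontable(B)", "clear(A)", "clear(B)", "hand-empty"])
plan = strips(init, (("goal", "done"),), actions)
print(plan)
```

Note that an action is executed as soon as it reaches the top of the stack, so the order in which subgoals are worked on is also the order in which their actions are executed.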

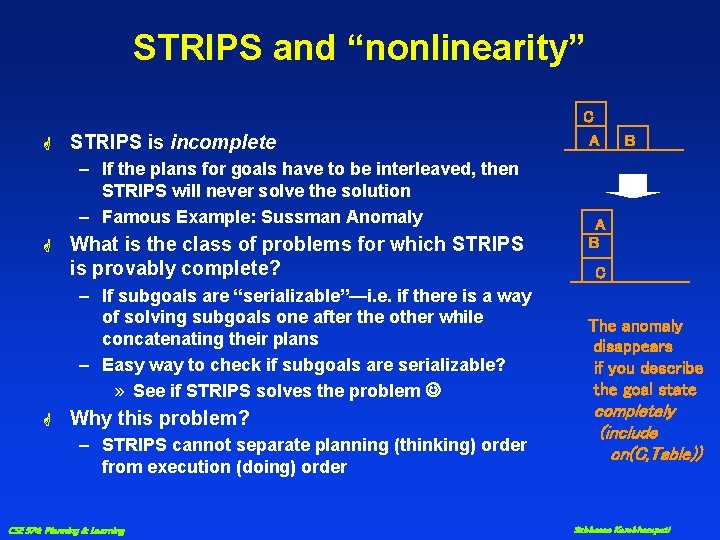

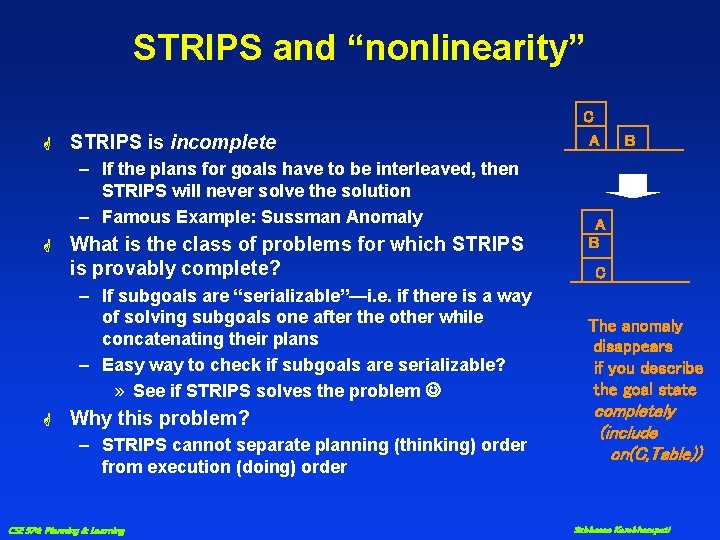

STRIPS and “nonlinearity”

- STRIPS is incomplete
  - If the plans for the goals have to be interleaved, then STRIPS will never find the solution
  - Famous example: Sussman Anomaly (figure: blocks A, B, C)
    - The anomaly disappears if you describe the goal state completely (include on(C, Table))
- What is the class of problems for which STRIPS is provably complete?
  - Those where the subgoals are “serializable”, i.e., there is a way of solving the subgoals one after the other while concatenating their plans
  - Easy way to check if subgoals are serializable?
    - See if STRIPS solves the problem
- Why this problem?
  - STRIPS cannot separate planning (thinking) order from execution (doing) order

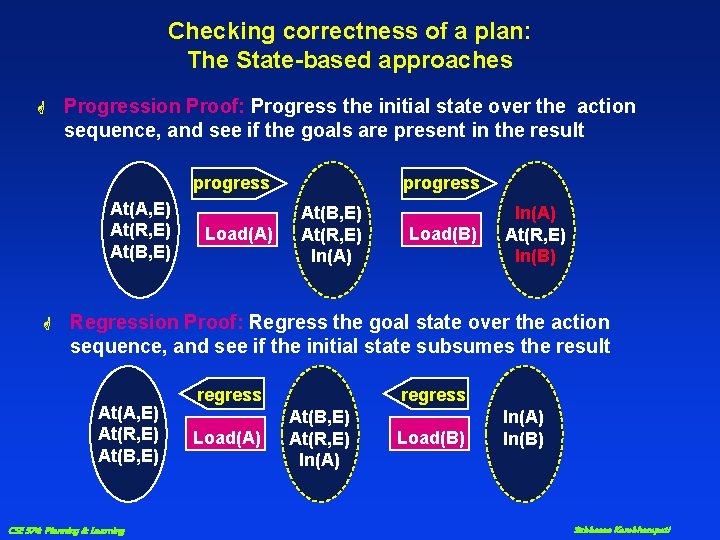

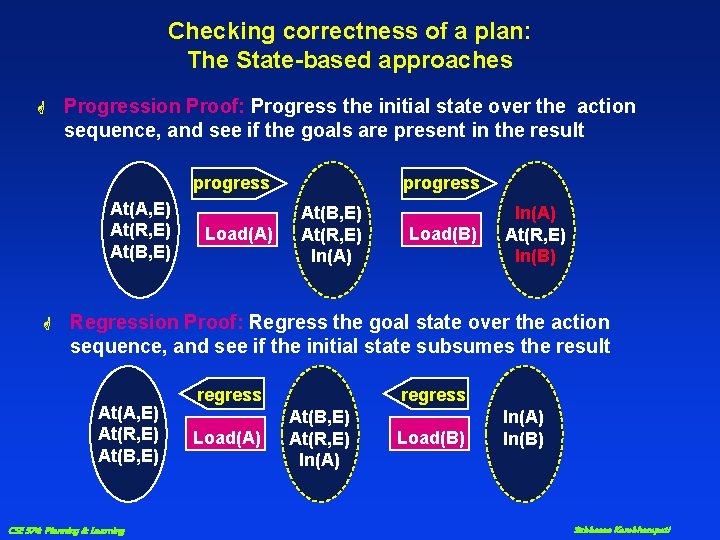

Checking correctness of a plan: The State-based approaches

- Progression Proof: progress the initial state over the action sequence, and see if the goals are present in the result
  - {At(A, E), At(R, E), At(B, E)}, progress over Load(A): {At(B, E), At(R, E), In(A)}, progress over Load(B): {In(A), At(R, E), In(B)}
- Regression Proof: regress the goal state over the action sequence, and see if the initial state subsumes the result
  - {In(A), In(B)}, regress over Load(B): {At(B, E), At(R, E), In(A)}, regress over Load(A): {At(A, E), At(R, E), At(B, E)}

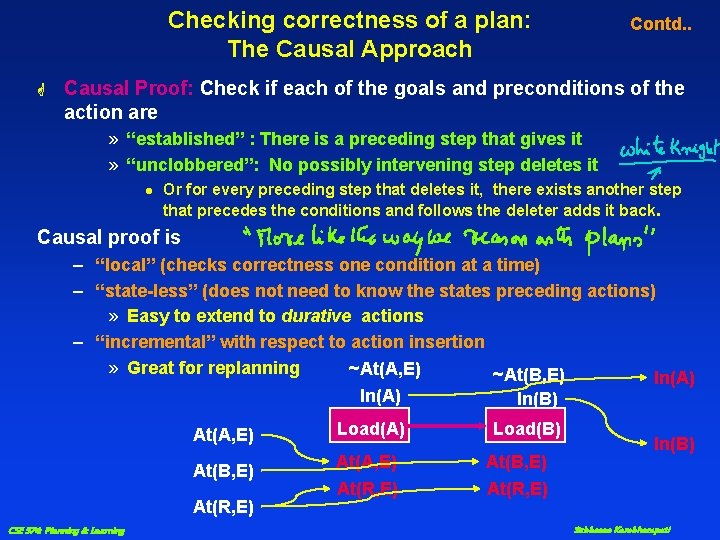

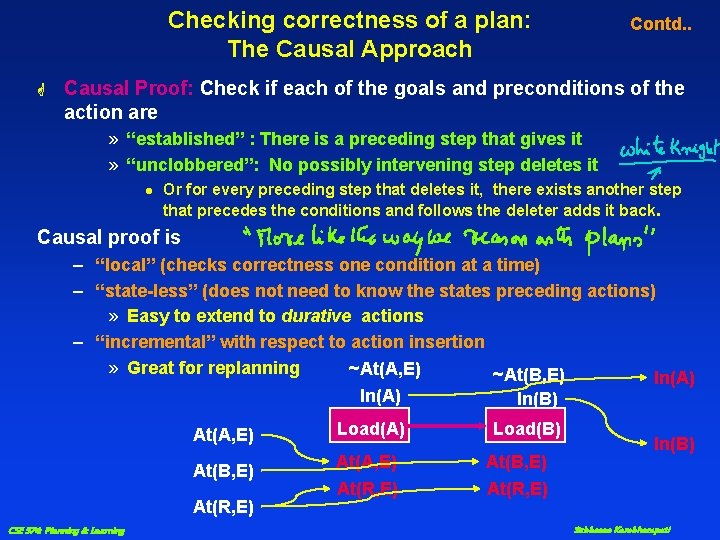

Checking correctness of a plan: The Causal Approach (contd.)

- Causal Proof: check if each of the goals and preconditions of the actions is
  - “established”: there is a preceding step that gives it
  - “unclobbered”: no possibly intervening step deletes it
    - Or, for every preceding step that deletes it, there exists another step that precedes the condition, follows the deleter, and adds it back.
- Causal proof is
  - “local” (checks correctness one condition at a time)
  - “state-less” (does not need to know the states preceding actions)
    - Easy to extend to durative actions
  - “incremental” with respect to action insertion
    - Great for replanning

Figure: the plan Load(A), Load(B) from the initial state At(A, E), At(B, E), At(R, E), annotated with the conditions In(A), In(B), ~At(A, E), ~At(B, E).
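For a totally ordered plan, the causal proof can be sketched in Python as below. The encoding is an assumption: the initial state is a first step that adds the initial atoms, the goals are the preconditions of a last step, and the preconditions listed for Load are read off the figure. `Drive(E,W)` is a made-up action used only to show a clobbered condition.

```python
def step(name, pre=(), add=(), delete=()):
    return (name, frozenset(pre), frozenset(add), frozenset(delete))

def causal_proof(steps):
    """Return the (step, condition) pairs that are not established and unclobbered."""
    failures = []
    for j, (name, pre, _, _) in enumerate(steps):
        for p in sorted(pre):
            supported = False
            # Walk backward: the closest earlier step that touches p decides.
            for i in range(j - 1, -1, -1):
                _, _, add, delete = steps[i]
                if p in add:
                    supported = True
                    break
                if p in delete:
                    break
            if not supported:
                failures.append((name, p))
    return failures

init = step("Init", add=["At(A,E)", "At(B,E)", "At(R,E)"])
load_a = step("Load(A)", pre=["At(A,E)", "At(R,E)"], add=["In(A)"], delete=["At(A,E)"])
load_b = step("Load(B)", pre=["At(B,E)", "At(R,E)"], add=["In(B)"], delete=["At(B,E)"])
goal = step("Goal", pre=["In(A)", "In(B)"])
drive = step("Drive(E,W)", pre=["At(R,E)"], add=["At(R,W)"], delete=["At(R,E)"])

print(causal_proof([init, load_a, load_b, goal]))
print(causal_proof([init, load_a, drive, load_b, goal]))
```

Each condition is checked on its own, without computing any intermediate state, which is the “local” and “state-less” character described above.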


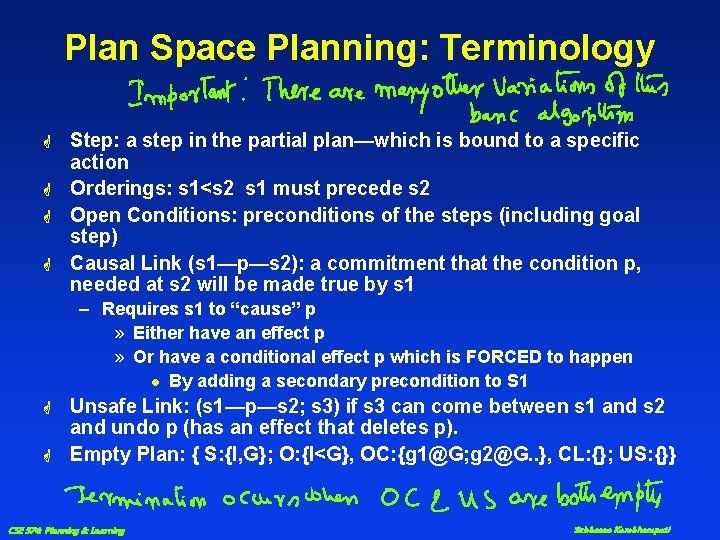

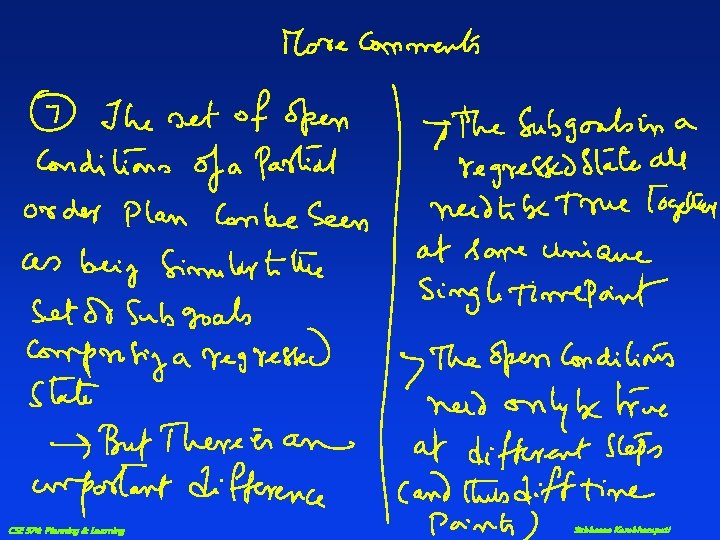

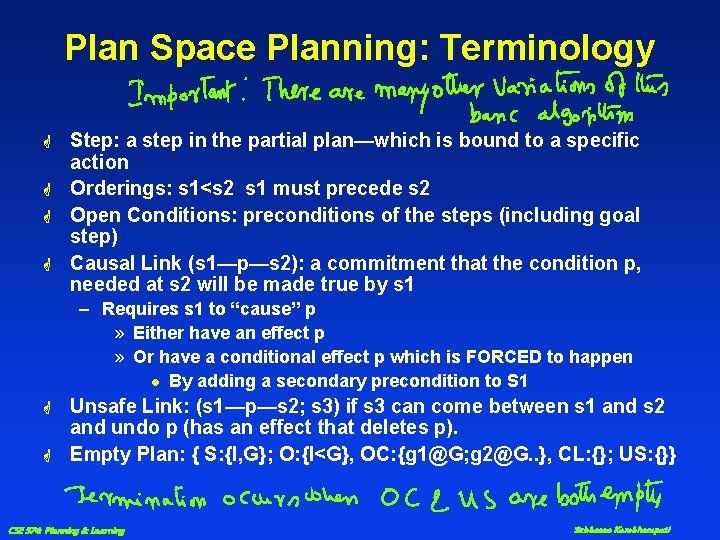

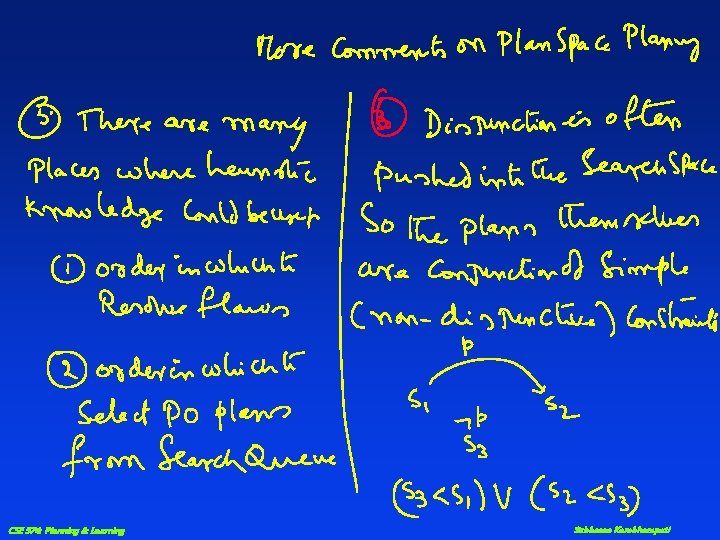

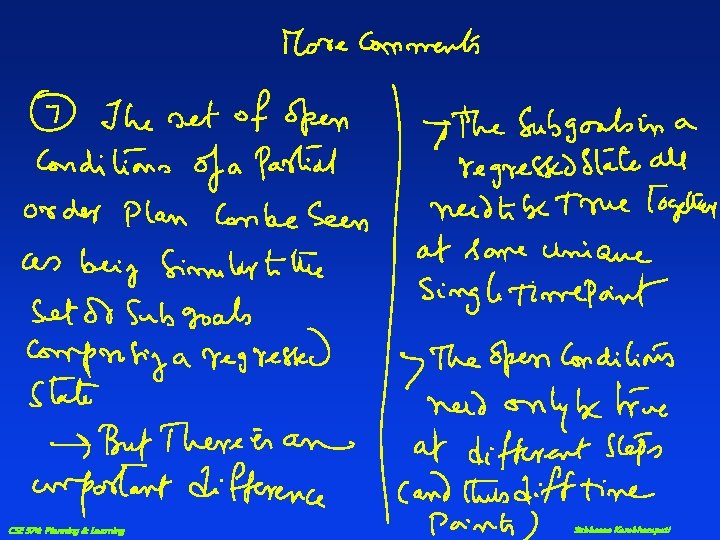

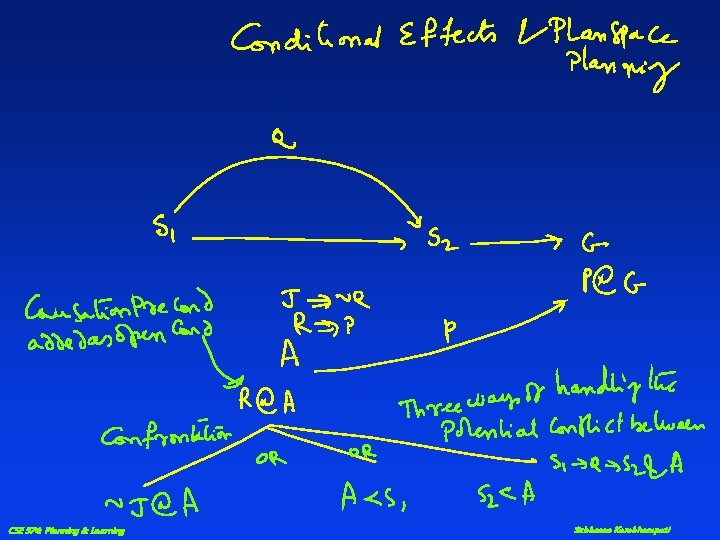

Plan Space Planning: Terminology

- Step: a step in the partial plan, which is bound to a specific action
- Orderings: s1 < s2 means s1 must precede s2
- Open Conditions: preconditions of the steps (including the goal step)
- Causal Link (s1 --p--> s2): a commitment that the condition p, needed at s2, will be made true by s1
  - Requires s1 to “cause” p
    - Either have an effect p
    - Or have a conditional effect p which is FORCED to happen, by adding a secondary precondition to s1
- Unsafe Link (s1 --p--> s2; s3): s3 can come between s1 and s2 and undo p (it has an effect that deletes p).
- Empty Plan: { S: {I, G}; O: {I < G}; OC: {g1@G; g2@G; ...}; CL: {}; US: {} }

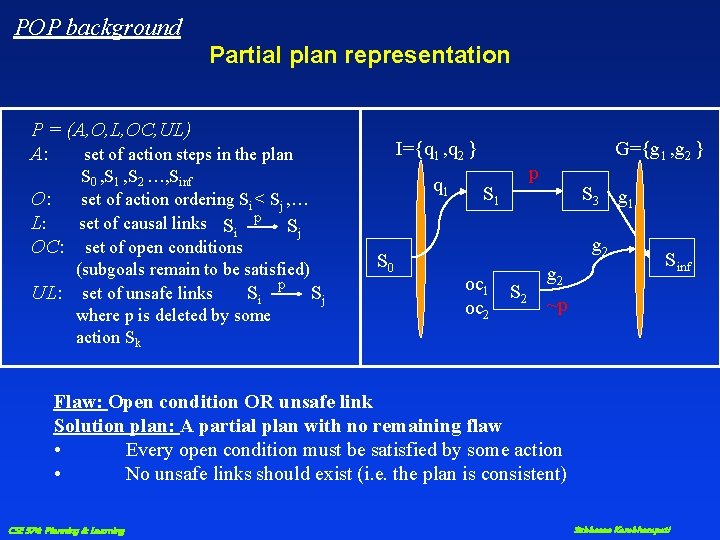

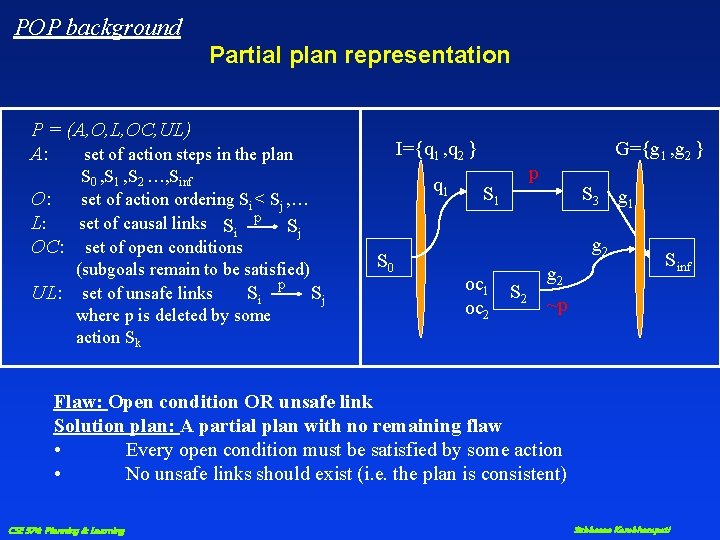

POP background: partial plan representation

P = (A, O, L, OC, UL)

- A: set of action steps in the plan: S0, S1, S2, ..., Sinf
- O: set of action orderings Si < Sj, ...
- L: set of causal links Si --p--> Sj
- OC: set of open conditions (subgoals that remain to be satisfied)
- UL: set of unsafe links Si --p--> Sj where p is deleted by some action Sk

Figure: a partial plan with I = {q1, q2} and G = {g1, g2}; steps S0, S1, S2, S3, Sinf; open conditions oc1, oc2; a causal link on p; and a step with effect ~p.

Flaw: open condition OR unsafe link

Solution plan: a partial plan with no remaining flaw

- Every open condition must be satisfied by some action
- No unsafe links should exist (i.e., the plan is consistent)

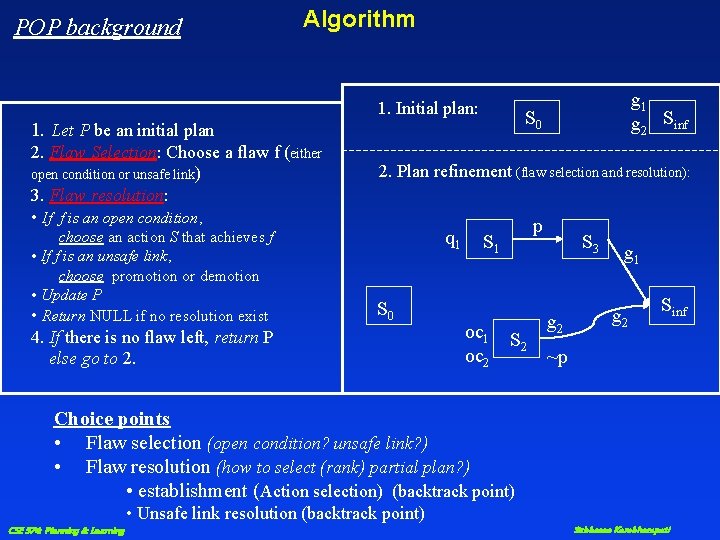

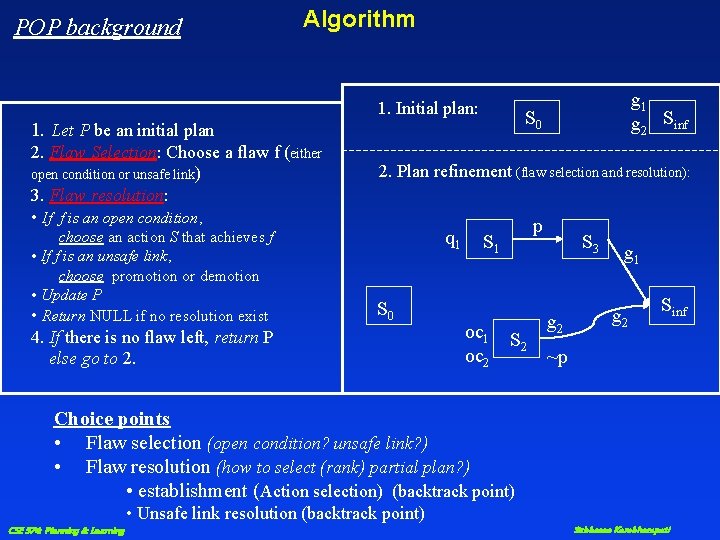

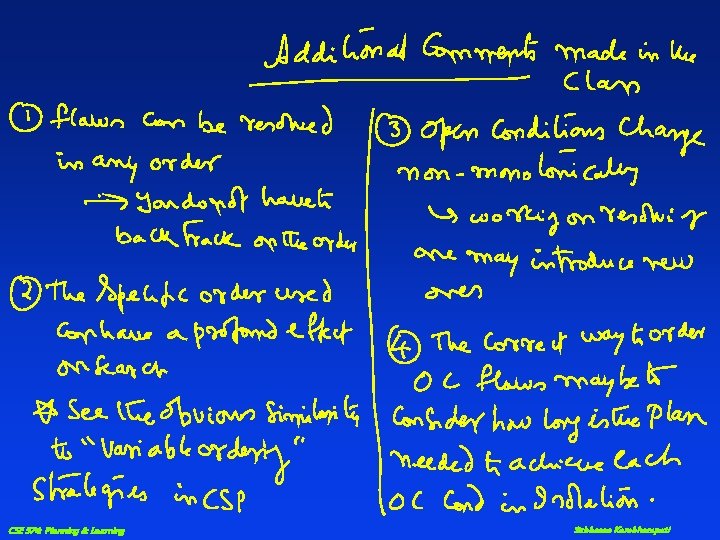

POP background: algorithm

1. Let P be an initial plan
2. Flaw selection: choose a flaw f (either an open condition or an unsafe link)
3. Flaw resolution:
   - If f is an open condition, choose an action S that achieves f
   - If f is an unsafe link, choose promotion or demotion
   - Update P
   - Return NULL if no resolution exists
4. If there is no flaw left, return P; else go to 2.

Figure: (1) the initial plan, with S0 and Sinf and the goals g1, g2; (2) plan refinement (flaw selection and resolution), with steps S1 and S3, open condition oc2, a causal link on p, and a step with effect ~p.

Choice points

- Flaw selection (open condition? unsafe link?)
- Flaw resolution (how to select (rank) partial plans?)
  - Establishment (action selection) (backtrack point)
  - Unsafe link resolution (backtrack point)

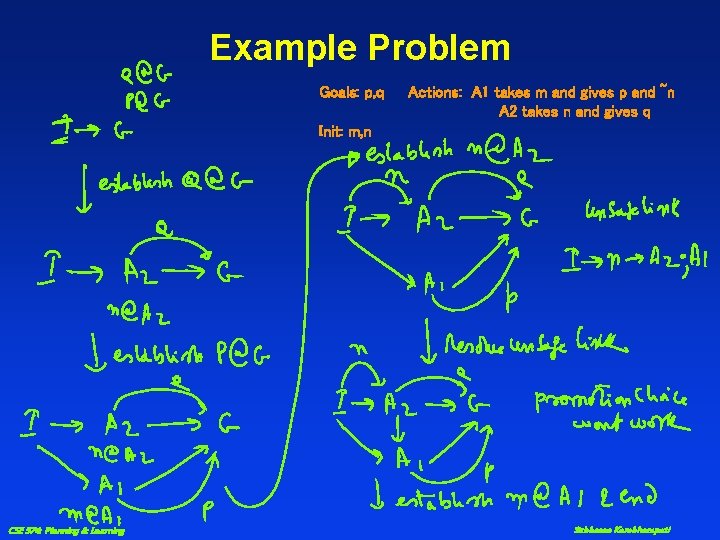

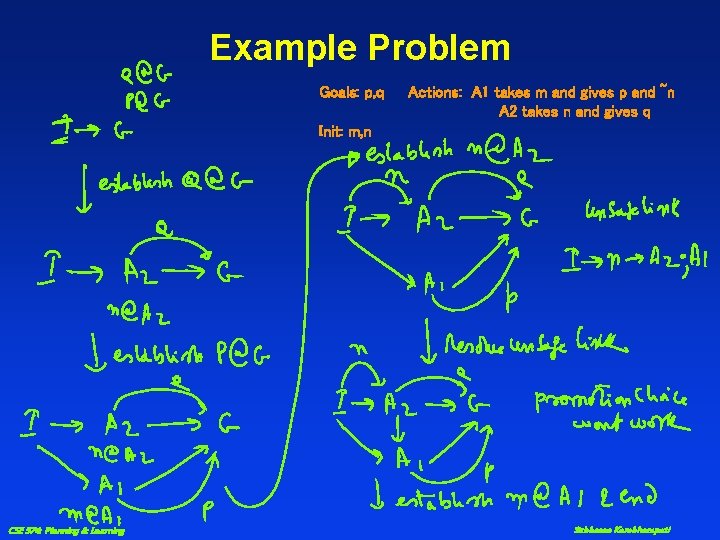

Example Problem

- Init: m, n
- Goals: p, q
- Actions:
  - A1 takes m and gives p and ~n
  - A2 takes n and gives q
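The example can be run through a very small propositional partial-order planner. The Python sketch below is a simplification made for illustration, not the algorithm as given in the course: it resolves unsafe links before open conditions, takes open conditions in a fixed order, handles only positive conditions, and limits the number of new steps with a `budget` parameter. Steps are `(name, pre, add, delete)` tuples, step 0 is S0, and step 1 is Sinf; all function names are hypothetical.

```python
def can_order(orderings, before, after):
    """True if 'before < after' can be added without creating a cycle."""
    frontier, seen = [after], set()
    while frontier:
        node = frontier.pop()
        if node == before:
            return False
        if node not in seen:
            seen.add(node)
            frontier.extend(b for (a, b) in orderings if a == node)
    return True

def refine(steps, orderings, links, open_conds, actions, budget):
    # Unsafe links: step k deletes p and can fall between producer i and consumer j.
    for (i, p, j) in links:
        for k, (_, _, _, delete) in steps.items():
            if k in (i, j) or p not in delete:
                continue
            if can_order(orderings, i, k) and can_order(orderings, k, j):
                for new in ((k, i), (j, k)):  # threat before producer, or after consumer
                    if can_order(orderings, *new):
                        result = refine(steps, orderings | {new}, links,
                                        open_conds, actions, budget)
                        if result is not None:
                            return result
                return None
    if not open_conds:
        return steps, orderings
    (p, j), rest = open_conds[0], open_conds[1:]
    for i, (_, _, add, _) in steps.items():       # establish with an existing step
        if i != j and p in add and can_order(orderings, i, j):
            result = refine(steps, orderings | {(i, j)}, links | {(i, p, j)},
                            rest, actions, budget)
            if result is not None:
                return result
    if budget > 0:                                # establish with a new step
        for action in actions:
            if p in action[2]:
                k = len(steps)
                result = refine({**steps, k: action},
                                orderings | {(0, k), (k, 1), (k, j)},
                                links | {(k, p, j)},
                                rest + tuple((q, k) for q in sorted(action[1])),
                                actions, budget - 1)
                if result is not None:
                    return result
    return None

def pop(init, goals, actions, budget=4):
    steps = {0: ("S0", frozenset(), frozenset(init), frozenset()),
             1: ("Sinf", frozenset(goals), frozenset(), frozenset())}
    open_conds = tuple((g, 1) for g in sorted(goals))
    return refine(steps, frozenset({(0, 1)}), frozenset(), open_conds, actions, budget)

a1 = ("A1", frozenset({"m"}), frozenset({"p"}), frozenset({"n"}))
a2 = ("A2", frozenset({"n"}), frozenset({"q"}), frozenset())
steps, orderings = pop({"m", "n"}, {"p", "q"}, [a1, a2])
print({i: s[0] for i, s in steps.items()})
print(sorted(orderings))
```

In this run A1 threatens the link that supplies n from S0 to A2. A1 cannot be ordered before S0, so the only consistent resolution is to order A2 before A1, which appears in the result as the pair (3, 2).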


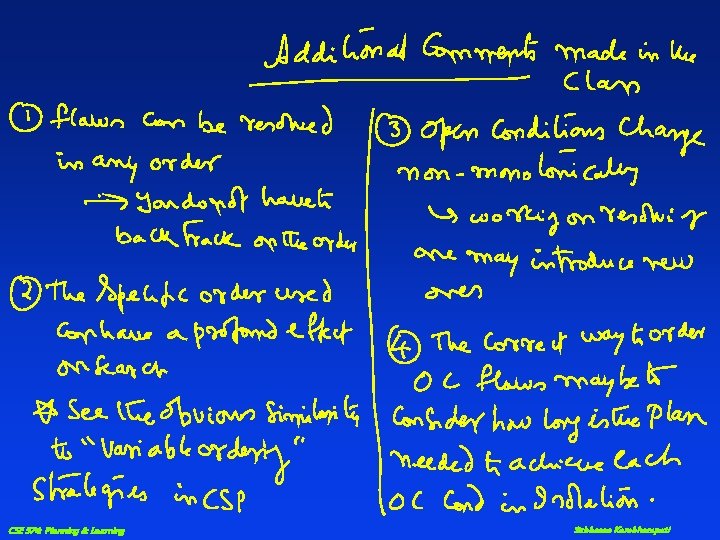

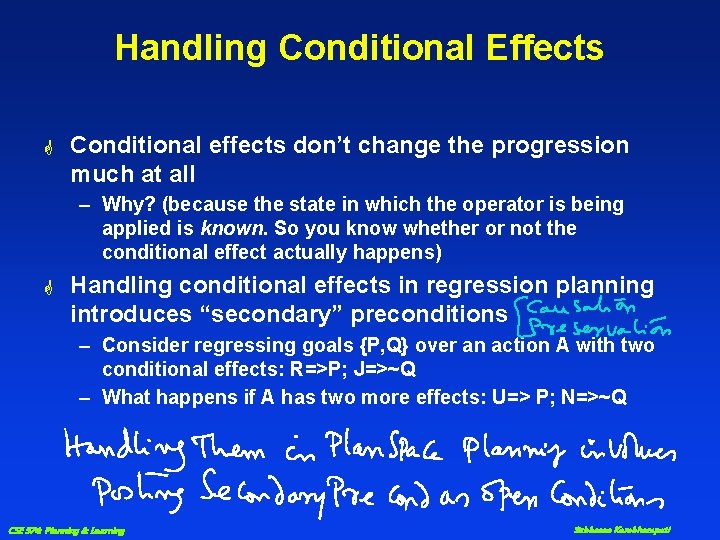

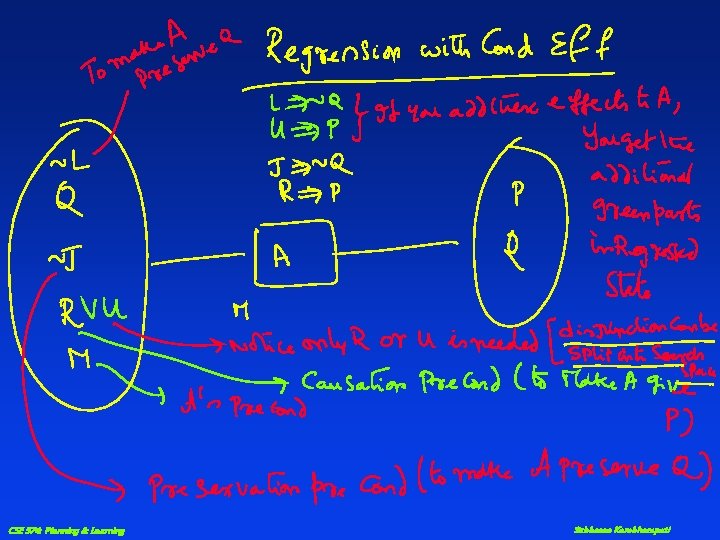

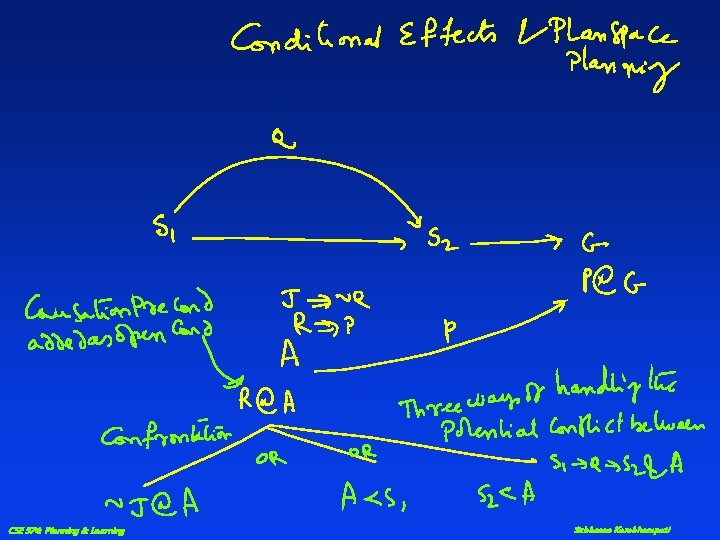

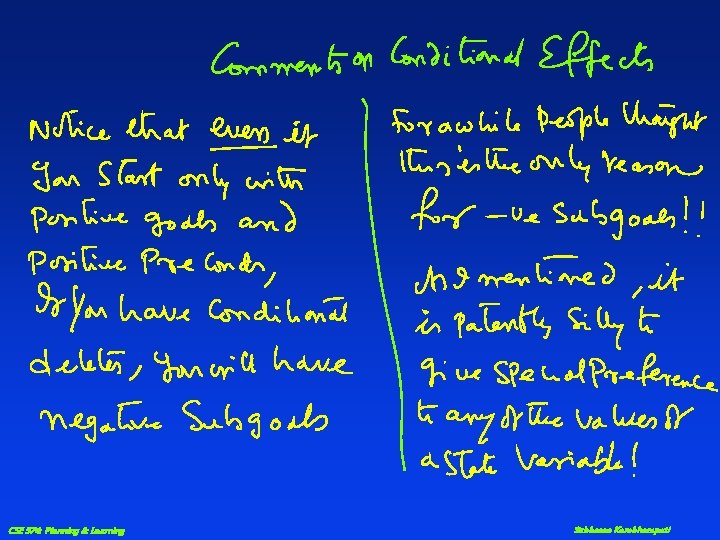

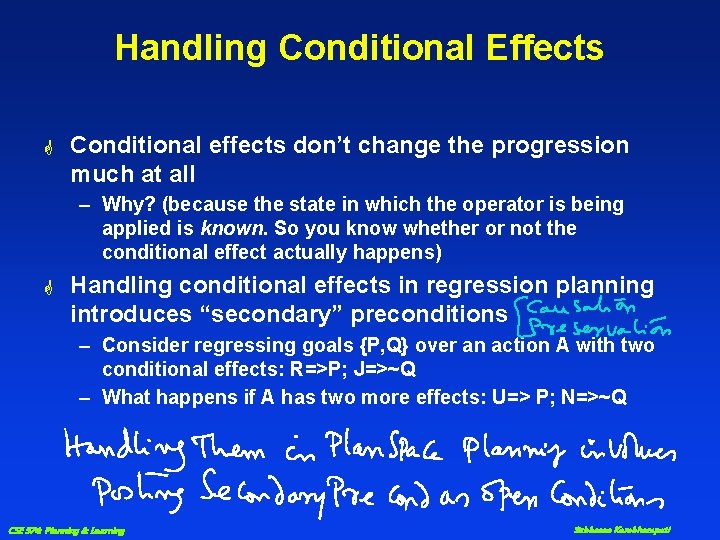

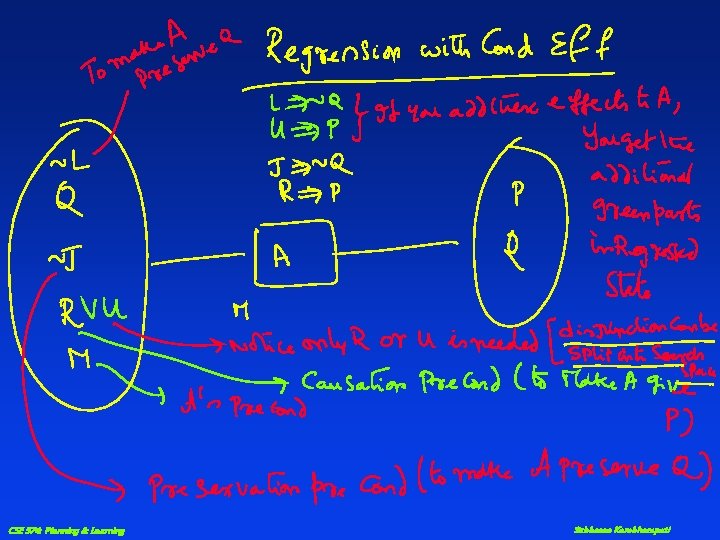

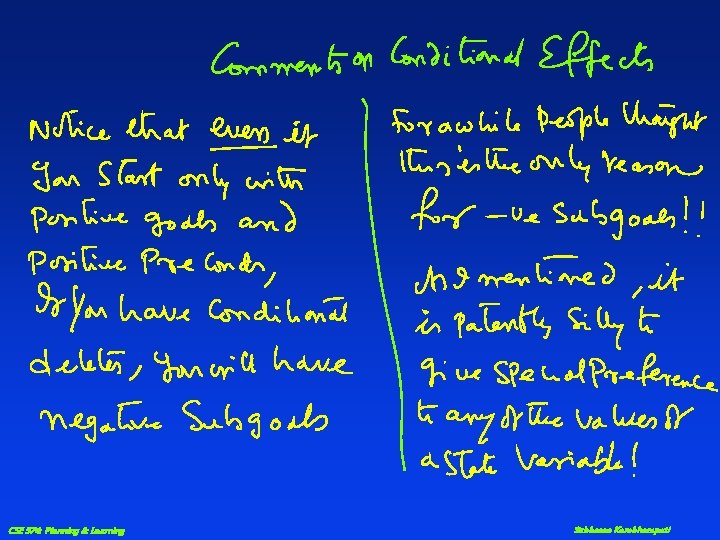

Handling Conditional Effects

- Conditional effects don’t change progression much at all
  - Why? Because the state in which the operator is being applied is known, so you know whether or not the conditional effect actually happens.
- Handling conditional effects in regression planning introduces “secondary” preconditions
  - Consider regressing goals {P, Q} over an action A with two conditional effects: R=>P; J=>~Q
  - What happens if A has two more effects: U=>P; N=>~Q?
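One way to work through the two regression questions is the Python sketch below. It is a simplified reading under stated assumptions: literals are strings with `~` for negation, each conditional effect has a single-literal antecedent, the action is used to supply one goal per branch while the other goals are carried over, and no consistency check is made on the resulting sets. To have A give a goal, the antecedent of the giving effect is added (a causation precondition); to keep A from deleting a goal, the negation of the antecedent of each deleting effect is added (a preservation precondition).

```python
def negate(literal):
    """Flip a literal written as 'P' or '~P'."""
    return literal[1:] if literal.startswith("~") else "~" + literal

def regress_conditional(goals, pre, cond_effects):
    """Return the alternative regressed states, one per way A can give a goal."""
    goals = set(goals)
    base = set(pre)
    for antecedent, consequent in cond_effects:
        if negate(consequent) in goals:
            base.add(negate(antecedent))      # preservation precondition
    branches = []
    for goal in sorted(goals):
        for antecedent, consequent in cond_effects:
            if consequent == goal:
                # causation precondition, plus the goals A does not supply
                branches.append(base | {antecedent} | (goals - {goal}))
    return branches

first = regress_conditional({"P", "Q"}, set(), [("R", "P"), ("J", "~Q")])
second = regress_conditional({"P", "Q"}, set(),
                             [("R", "P"), ("J", "~Q"), ("U", "P"), ("N", "~Q")])
print(first)
print(second)
```

With the two extra effects there are two ways for A to give P (through R or through U), so regression branches, and both ~J and ~N are needed to protect Q.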


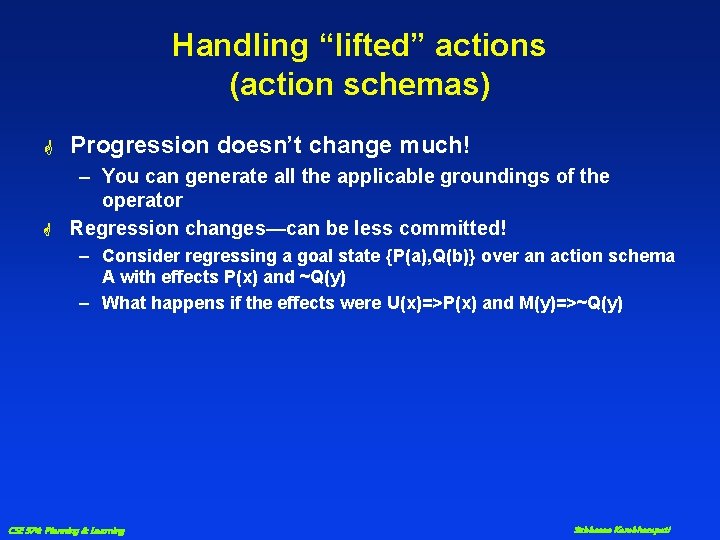

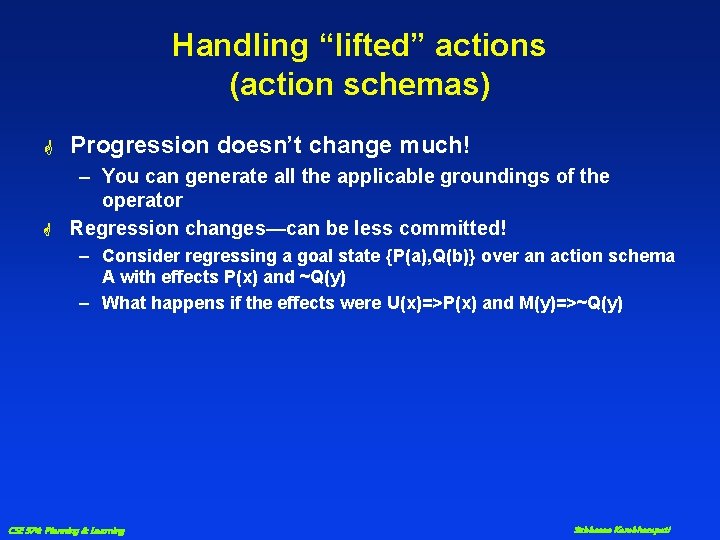

Handling “lifted” actions (action schemas)

- Progression doesn’t change much!
  - You can generate all the applicable groundings of the operator
- Regression changes: it can be less committed!
  - Consider regressing a goal state {P(a), Q(b)} over an action schema A with effects P(x) and ~Q(y)
  - What happens if the effects were U(x)=>P(x) and M(y)=>~Q(y)?

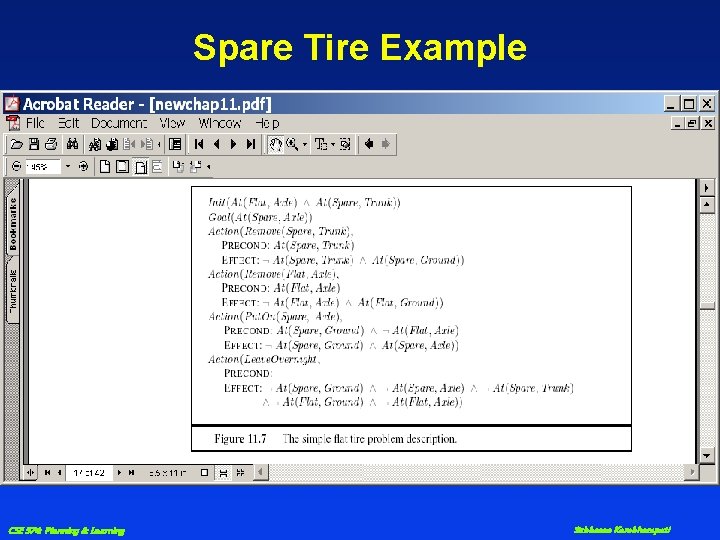

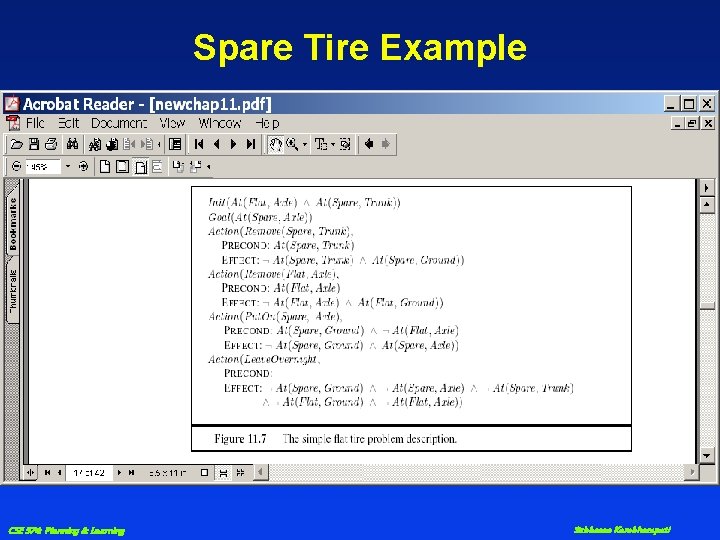

Spare Tire Example

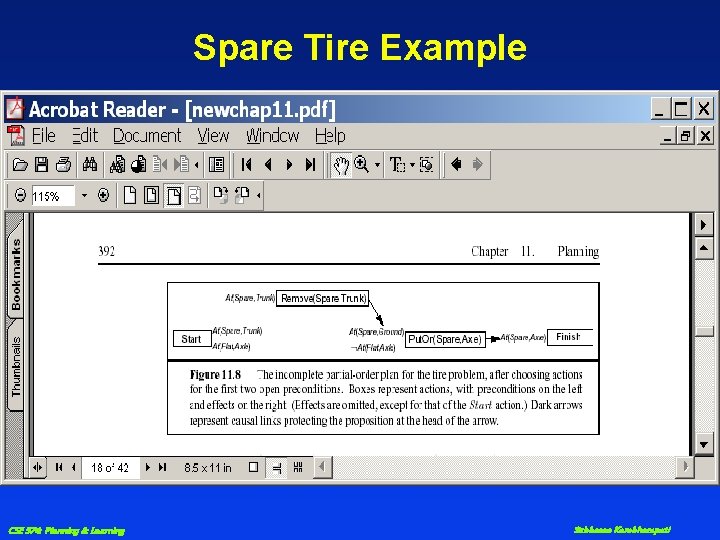

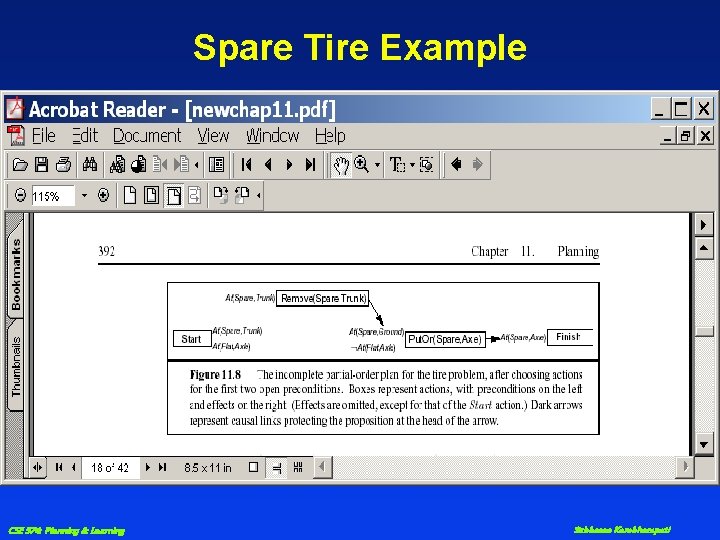

Spare Tire Example

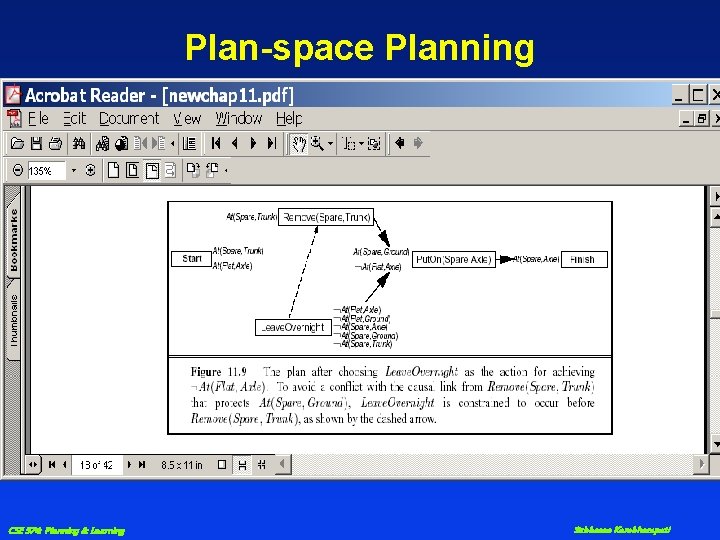

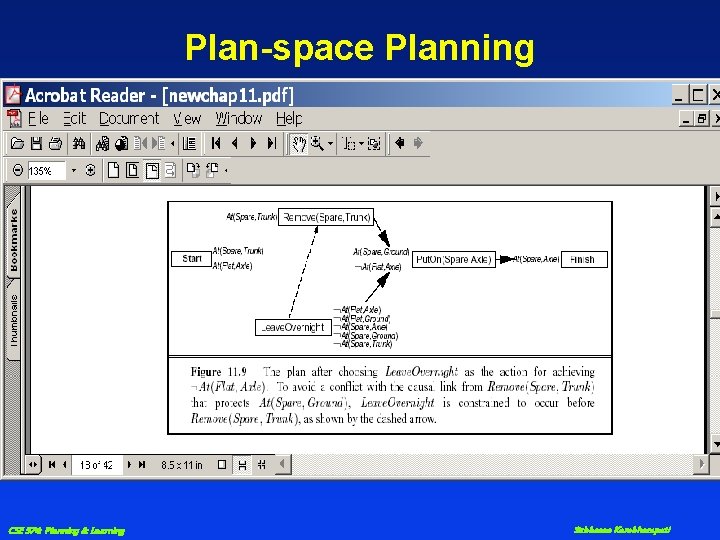

Plan-space Planning

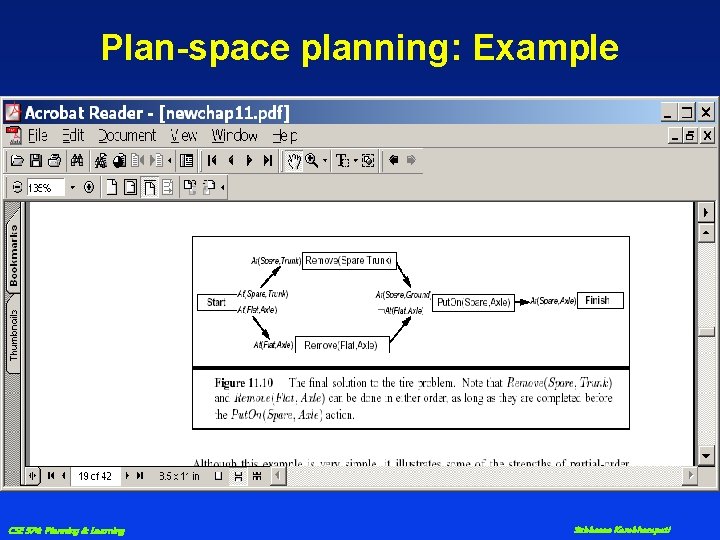

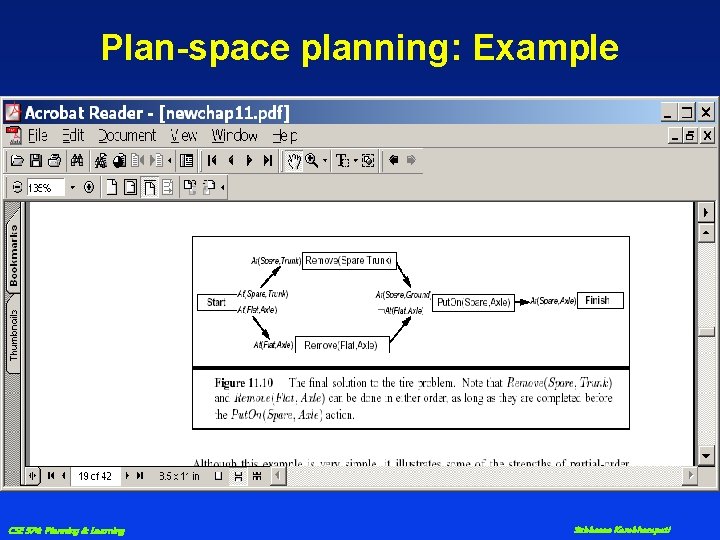

Plan-space planning: Example