XCache at GridPP diskless site, Teng LI

- Slides: 9

XCache at GridPP diskless site. Teng LI, University of Edinburgh

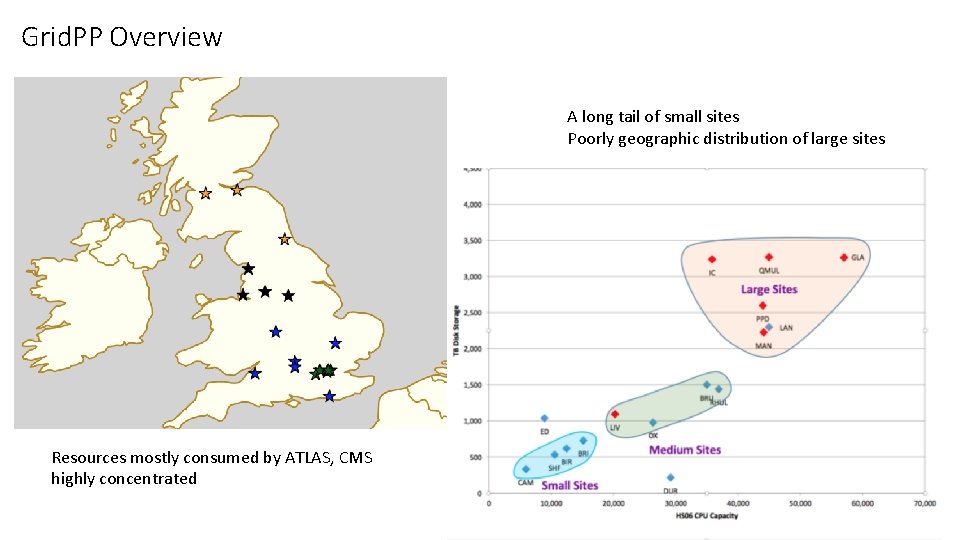

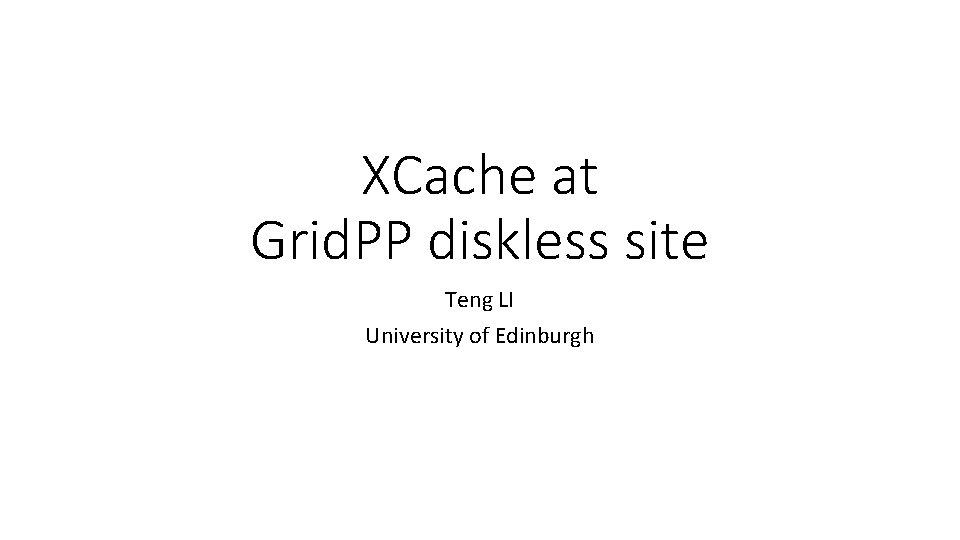

GridPP Overview

- A long tail of small sites
- Poor geographic distribution of large sites
- Resources mostly consumed by ATLAS, CMS highly concentrated

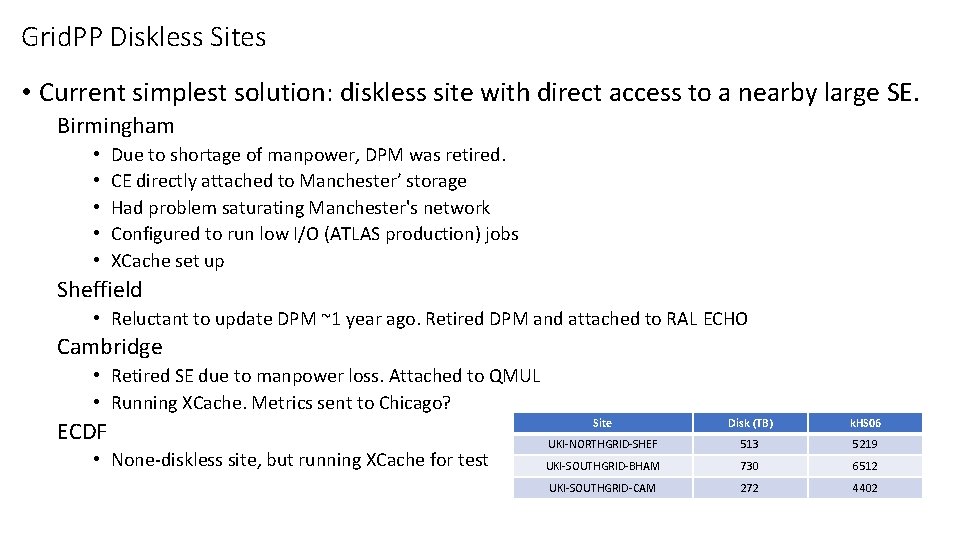

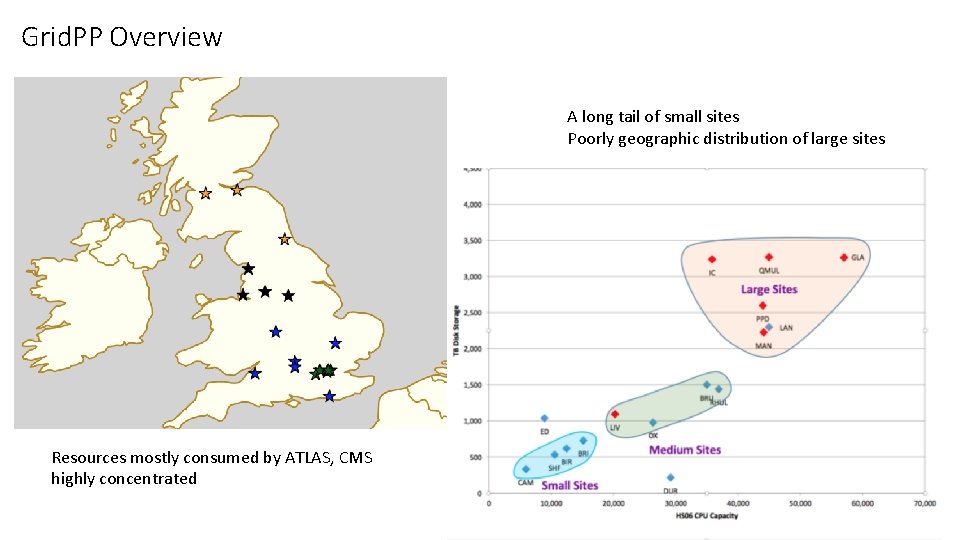

GridPP Diskless Sites

- Current simplest solution: a diskless site with direct access to a nearby large SE.
- Birmingham
  - DPM was retired due to a shortage of manpower; the CE is attached directly to Manchester's storage.
  - Had problems with saturating Manchester's network.
  - Configured to run low-I/O (ATLAS production) jobs.
  - XCache set up.
- Sheffield
  - Was reluctant to update DPM ~1 year ago; retired DPM and attached to RAL Echo.
- Cambridge
  - Retired its SE due to manpower loss; attached to QMUL.
  - Running XCache. Metrics sent to Chicago?
- ECDF
  - Not a diskless site, but running XCache for testing.

| Site | Disk (TB) | kHS06 |
| --- | --- | --- |
| UKI-NORTHGRID-SHEF | 513 | 5219 |
| UKI-SOUTHGRID-BHAM | 730 | 6512 |
| UKI-SOUTHGRID-CAM | 272 | 4402 |

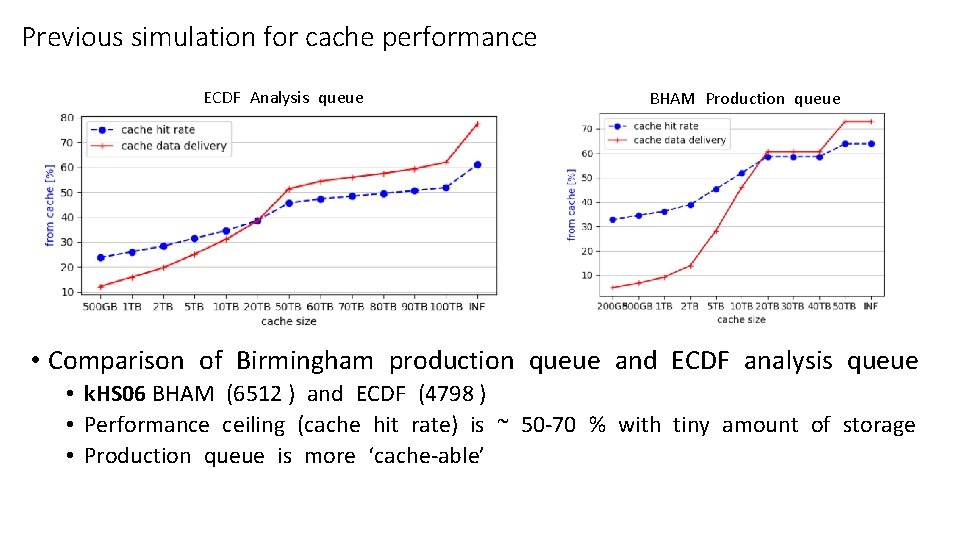

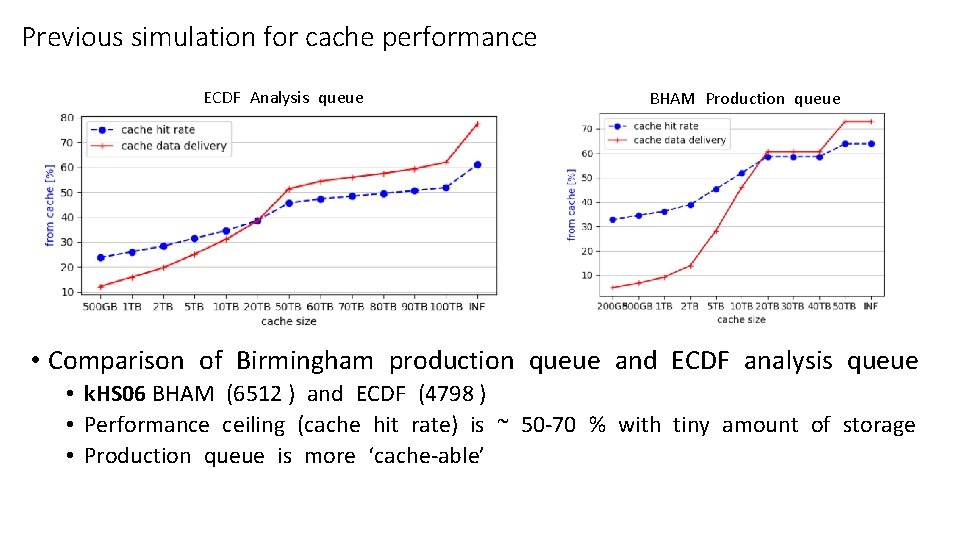

Previous simulation for cache performance

Figure panels: ECDF analysis queue; BHAM production queue.

- Comparison of the Birmingham production queue and the ECDF analysis queue
- kHS06: BHAM (6512) and ECDF (4798)
- Performance ceiling (cache hit rate) is ~50-70% with a small amount of storage
- The production queue is more 'cache-able'
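
The slides do not say how the simulation was implemented, so the following is only a minimal sketch of the general idea: replay a list of file accesses through a cache of fixed size and count the hits. It assumes least-recently-used (LRU) eviction, which is an assumption made here and not something stated in the talk; the function name `simulate_lru` and the toy trace are hypothetical.

```python
from collections import OrderedDict


def simulate_lru(trace, capacity_bytes):
    """Replay (file_name, size_bytes) accesses through an LRU cache.

    Returns (request_hit_rate, byte_hit_rate).
    LRU eviction is an assumption of this sketch, not taken from the slides.
    """
    cache = OrderedDict()  # file_name -> size_bytes, least recently used first
    used = 0
    hits = hit_bytes = total_bytes = 0

    for name, size in trace:
        total_bytes += size
        if name in cache:
            hits += 1
            hit_bytes += size
            cache.move_to_end(name)  # mark as most recently used
            continue
        if size > capacity_bytes:
            continue  # file can never fit; treat as a miss without caching
        # Evict least recently used files until the new file fits.
        while used + size > capacity_bytes:
            _, evicted_size = cache.popitem(last=False)
            used -= evicted_size
        cache[name] = size
        used += size

    n = len(trace)
    return (hits / n if n else 0.0,
            hit_bytes / total_bytes if total_bytes else 0.0)


# Hypothetical toy trace: file "a" is popular, the others are read once.
trace = [("a", 2), ("b", 2), ("a", 2), ("c", 2), ("a", 2), ("d", 2)]
for capacity in (2, 4, 8):
    req_rate, byte_rate = simulate_lru(trace, capacity)
    print(f"capacity={capacity}: request hit rate={req_rate:.2f}, "
          f"byte hit rate={byte_rate:.2f}")
```

Running this over a real job access trace for a range of capacities would give a hit-rate-versus-storage curve of the kind compared on the slide; a workload that re-reads a small set of files reaches its ceiling with little storage.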

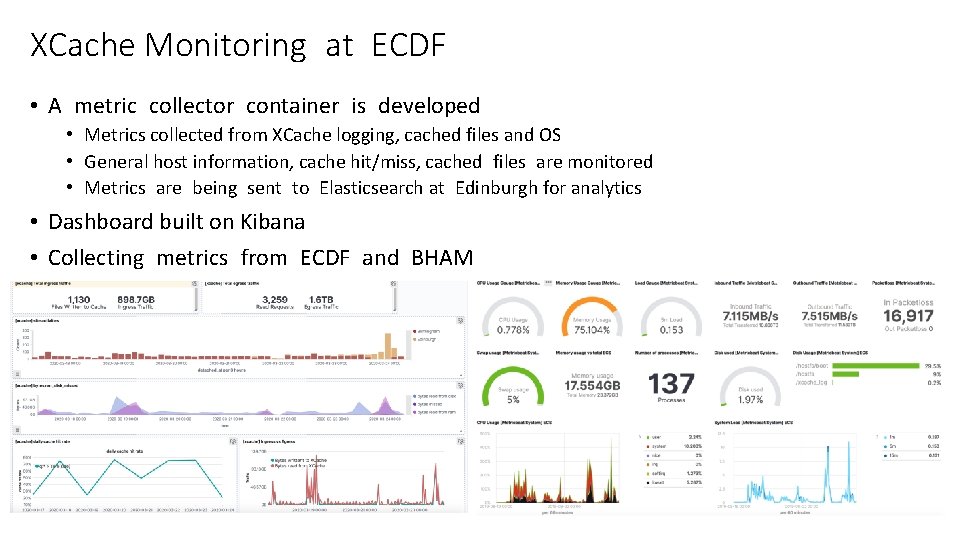

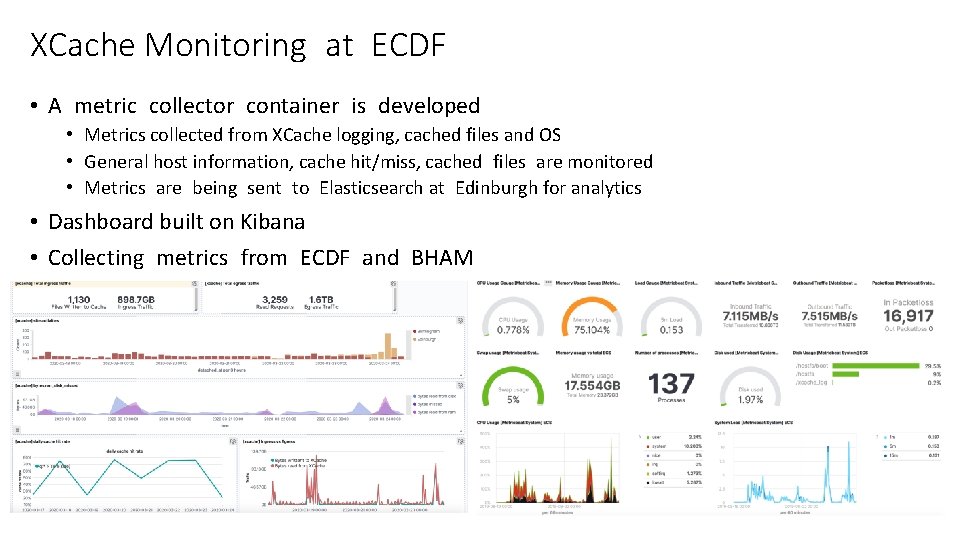

XCache Monitoring at ECDF

- A metric collector container has been developed
- Metrics are collected from XCache logging, cached files and the OS
- General host information, cache hits/misses and cached files are monitored
- Metrics are being sent to Elasticsearch at Edinburgh for analytics
- Dashboard built on Kibana
- Collecting metrics from ECDF and BHAM
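
As a rough illustration of the last step in this pipeline, the sketch below turns a few cache-access records into the newline-delimited JSON body that the Elasticsearch bulk API accepts. It is not the collector described in the talk: the record fields, the index name `xcache-metrics` and the function `build_bulk_body` are all hypothetical, and the sketch stops short of sending anything over the network.

```python
import json
from datetime import datetime, timezone


def build_bulk_body(records, index_name, site):
    """Serialise cache-access records as an Elasticsearch bulk (NDJSON) body.

    Each record becomes two lines: an "index" action line and the document.
    Field names are hypothetical, chosen only for this illustration.
    """
    lines = []
    for rec in records:
        doc = {
            "@timestamp": rec["time"].isoformat(),
            "site": site,
            "file": rec["file"],
            "bytes": rec["bytes"],
            "cache_hit": rec["hit"],
        }
        lines.append(json.dumps({"index": {"_index": index_name}}))
        lines.append(json.dumps(doc))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


# Hypothetical records, as a collector might derive them from cache logs.
now = datetime(2020, 3, 1, 12, 0, tzinfo=timezone.utc)
records = [
    {"time": now, "file": "/atlas/example/file1.root", "bytes": 1024, "hit": True},
    {"time": now, "file": "/atlas/example/file2.root", "bytes": 2048, "hit": False},
]
body = build_bulk_body(records, index_name="xcache-metrics", site="ECDF")
print(body, end="")
```

A body like this would be posted to the cluster's `_bulk` endpoint with the `application/x-ndjson` content type; a Kibana dashboard can then aggregate on fields such as `site` and `cache_hit`.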

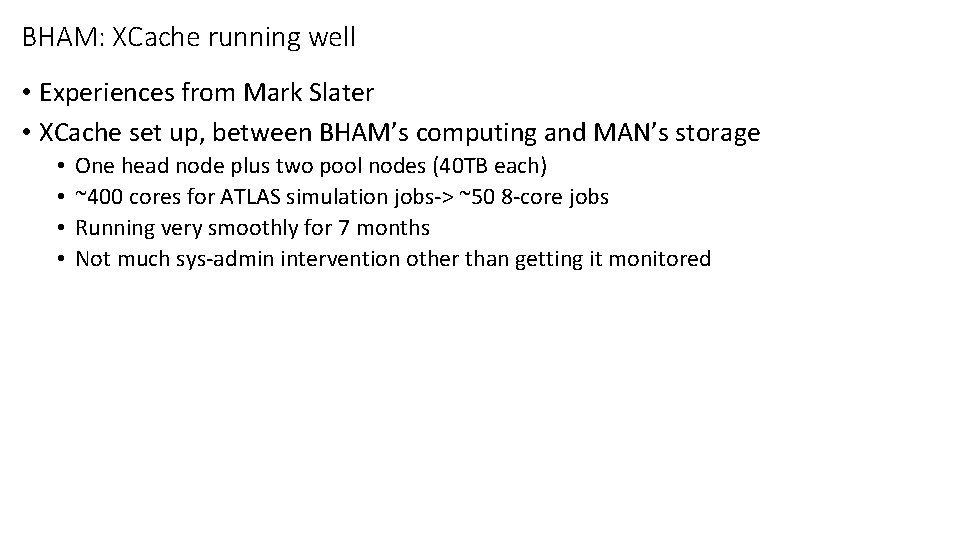

BHAM: XCache running well

- Experiences from Mark Slater
- XCache set up between BHAM's computing and MAN's storage
  - One head node plus two pool nodes (40 TB each)
  - ~400 cores for ATLAS simulation jobs -> ~50 8-core jobs
- Running very smoothly for 7 months
- Not much sysadmin intervention other than getting it monitored

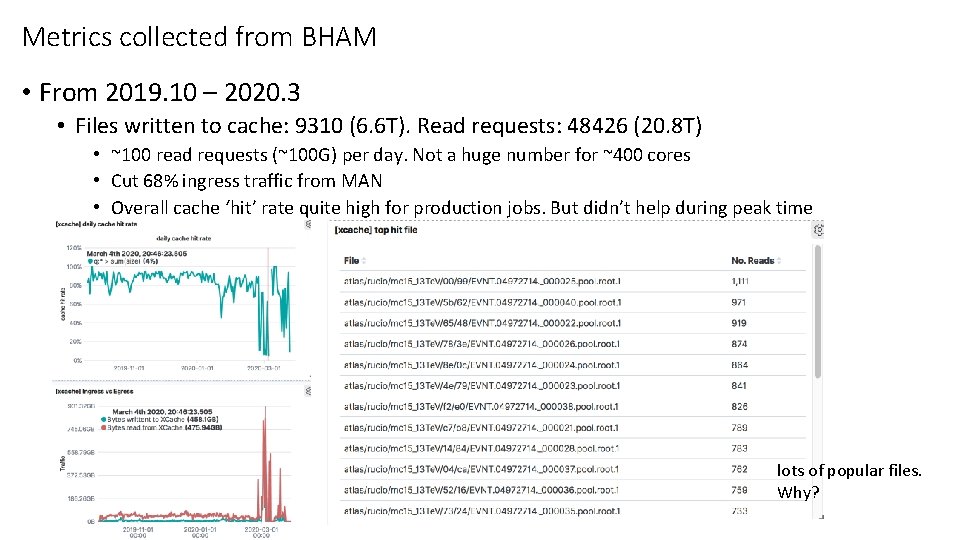

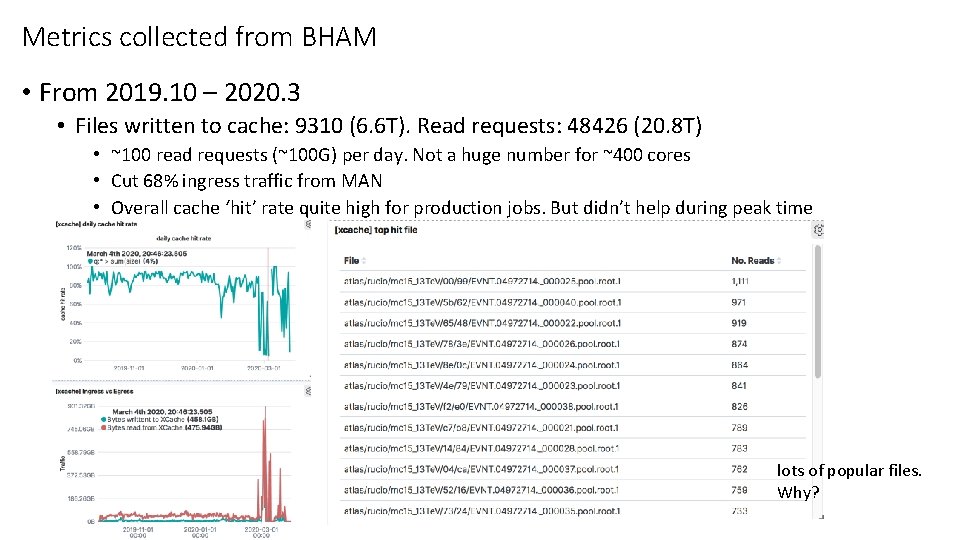

Metrics collected from BHAM

- From 2019.10 to 2020.3
- Files written to cache: 9310 (6.6 TB). Read requests: 48426 (20.8 TB)
- ~100 read requests (~100 GB) per day. Not a huge number for ~400 cores
- Cut ingress traffic from MAN by 68%
- Overall cache 'hit' rate is quite high for production jobs, but the cache did not help during peak time, when there were lots of popular files. Why?
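
The 68% figure is consistent with the two data volumes quoted above, as the short calculation below shows. It rests on an assumption that is not spelled out on the slide: every byte written to the cache was fetched from MAN once, and without the cache every byte read would have come from MAN.

```python
# Volumes quoted on the slide for 2019.10 to 2020.3, in TB.
bytes_read_tb = 20.8     # data read from the cache by jobs
bytes_written_tb = 6.6   # data written to the cache, i.e. fetched from MAN

# Assumption: without a cache, all 20.8 TB would have been read from MAN.
reduction = 1 - bytes_written_tb / bytes_read_tb
print(f"Ingress traffic reduction: {reduction:.0%}")
```

Under the same assumption, this ratio is also the byte-level cache hit rate over the period.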

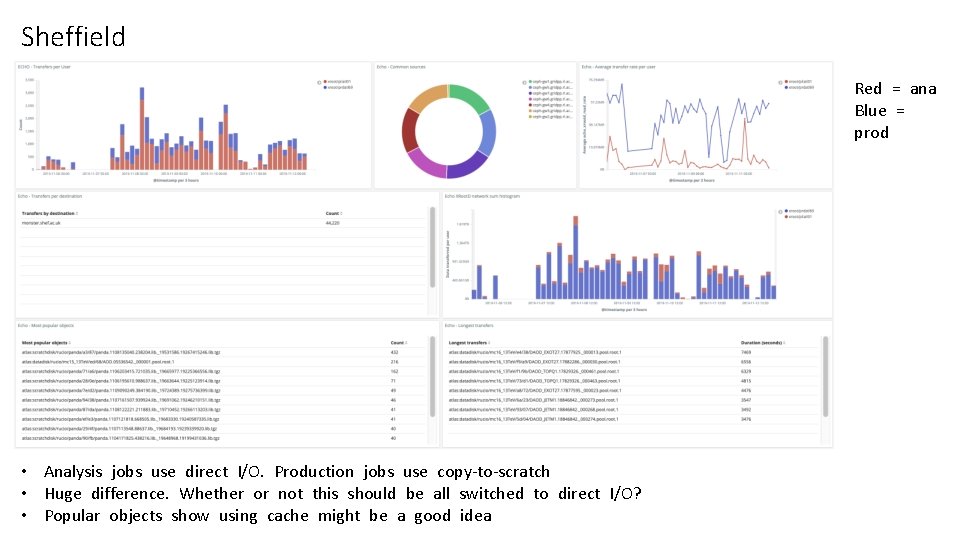

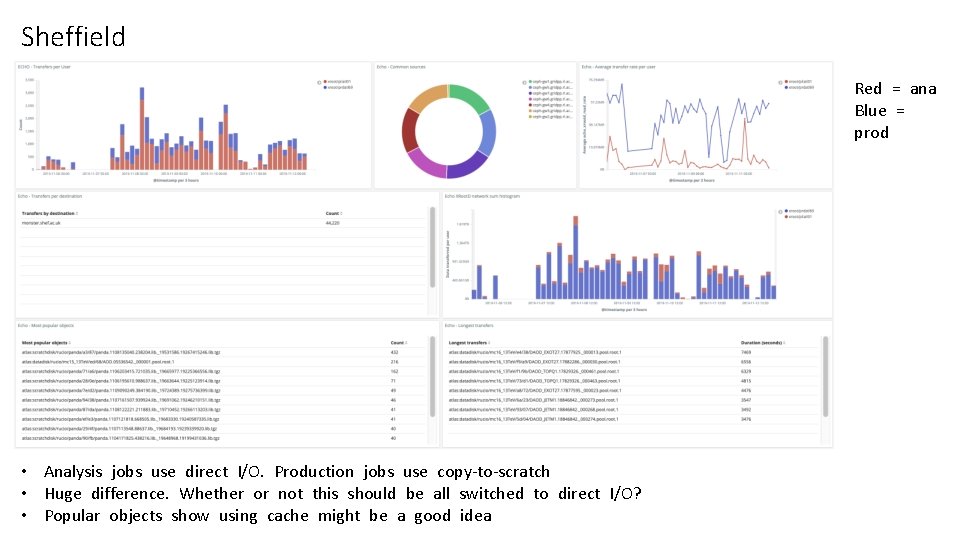

Sheffield

Plot legend: red = analysis, blue = production.

- Analysis jobs use direct I/O. Production jobs use copy-to-scratch
- Huge difference. Should everything be switched to direct I/O?
- The popular objects suggest that using a cache might be a good idea

Summary and future

- Currently three (afaik) diskless sites
  - All attached to nearby large sites
  - Cambridge and Birmingham running XCache
  - Experience from Birmingham is generally positive
  - Sheffield under investigation, but metrics from Echo show potential
- If more sites come in the future, the plan is to investigate cache federation.