Using Generative Adversarial Networks To Imitate HYDRA ALE


Using Generative Adversarial Networks To Imitate HYDRA ALE Simulations Toward the Goal of Automated Mesh Management

Sujay Kazi, Christopher Yang

Mentors: Chris Young, Jay Salmonson

August 12, 2019

LLNL-PRES-787824

This work was performed under the auspices of the U.S. Department of Energy by Lawrence Livermore National Laboratory under contract DE-AC52-07NA27344. Lawrence Livermore National Security, LLC

About Me

- MIT undergraduate, Class of 2021
- Double major in math and physics
- Other interests/hobbies:
  - Video games
  - Reading
  - Thinking about random things

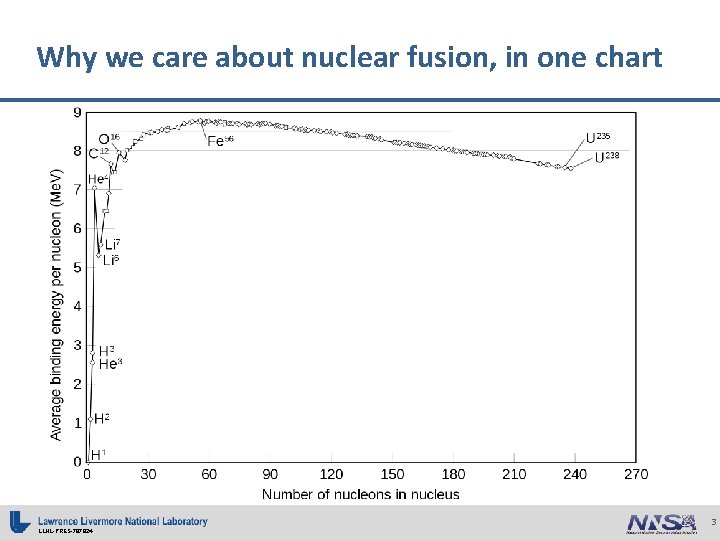

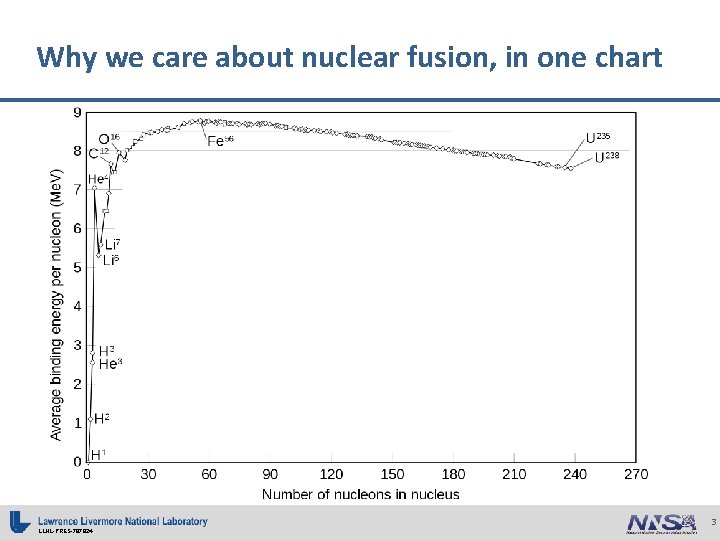

Why we care about nuclear fusion, in one chart

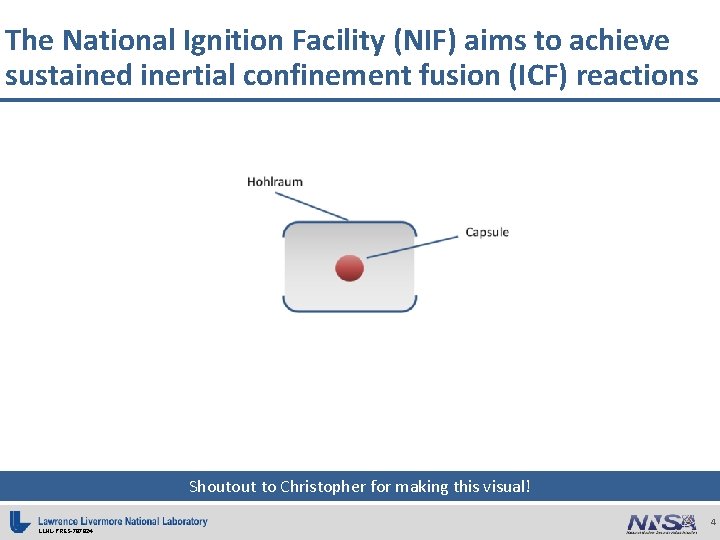

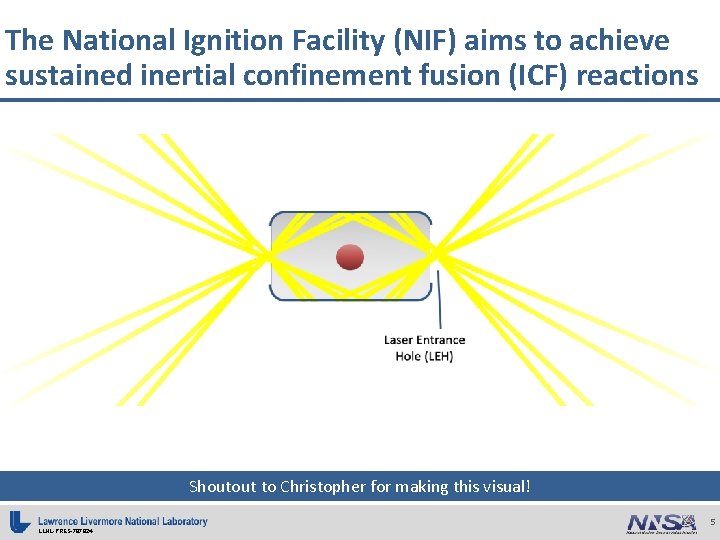

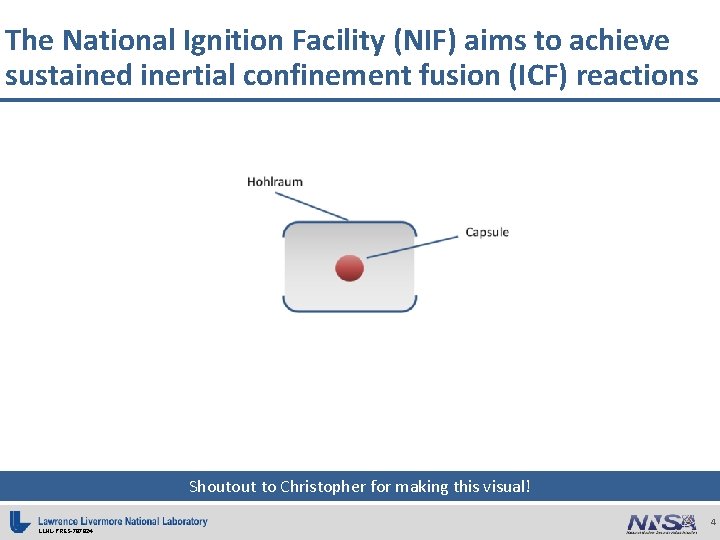

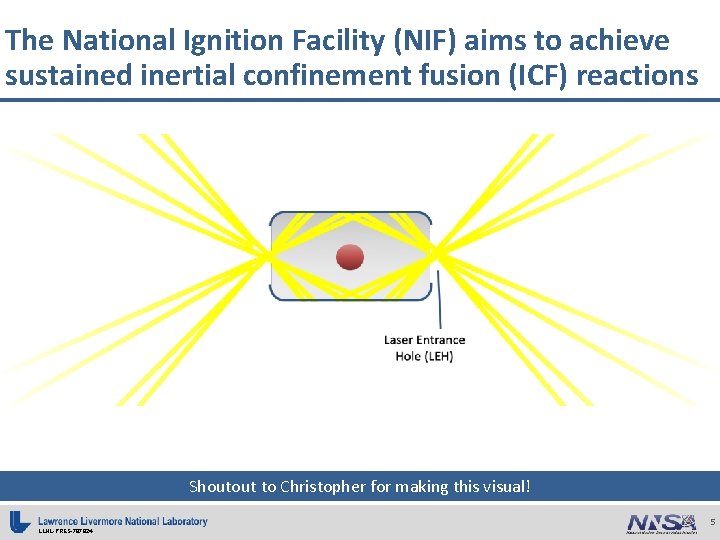

The National Ignition Facility (NIF) aims to achieve sustained inertial confinement fusion (ICF) reactions

Shoutout to Christopher for making this visual!


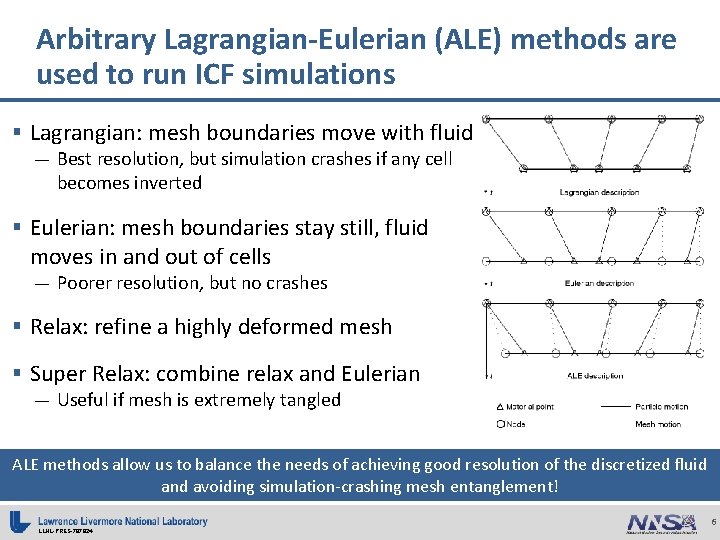

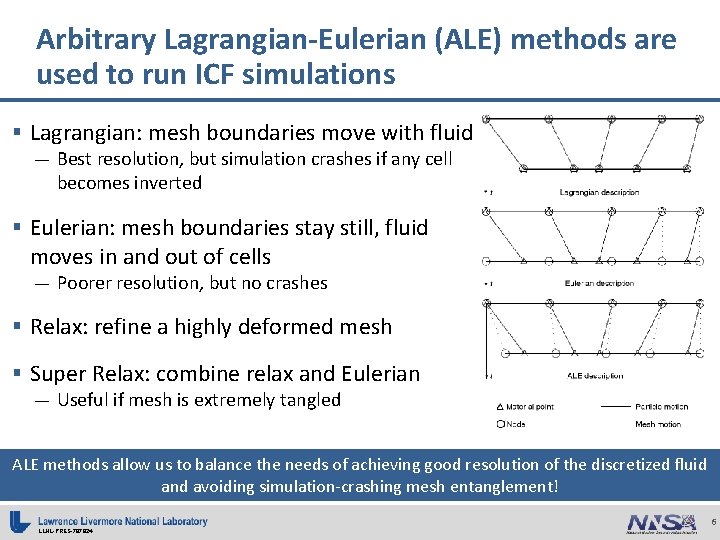

Arbitrary Lagrangian-Eulerian (ALE) methods are used to run ICF simulations

- Lagrangian: mesh boundaries move with the fluid
  - Best resolution, but the simulation crashes if any cell becomes inverted
- Eulerian: mesh boundaries stay fixed, and fluid moves in and out of cells
  - Poorer resolution, but no crashes
- Relax: smooth out a highly deformed mesh by repositioning its nodes
- Super Relax: combine relax and Eulerian
  - Useful if the mesh is extremely tangled

ALE methods allow us to balance the need for good resolution of the discretized fluid against the need to avoid simulation-crashing mesh entanglement!
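To make the Lagrangian failure mode and the relax step concrete, here is a minimal pure-Python sketch for a 2D quadrilateral mesh. It assumes counter-clockwise node ordering, flags a cell as inverted when its signed area (shoelace formula) is zero or negative, and uses simple Laplacian smoothing as a stand-in for a relax step. The function names are hypothetical, and HYDRA's actual relaxation scheme is not described in these slides.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def signed_area(cell: List[Point]) -> float:
    """Shoelace formula; positive when the nodes are listed counter-clockwise."""
    twice_area = 0.0
    for i, (x0, y0) in enumerate(cell):
        x1, y1 = cell[(i + 1) % len(cell)]
        twice_area += x0 * y1 - x1 * y0
    return 0.5 * twice_area


def is_inverted(cell: List[Point]) -> bool:
    """Zero or negative signed area means the cell has folded over (or collapsed).

    This is only a coarse test: a partly tangled cell can still have positive
    total area, so a production code would check each corner as well.
    """
    return signed_area(cell) <= 0.0


def laplacian_relax(node: Point, neighbors: List[Point], weight: float = 1.0) -> Point:
    """Move a node part of the way (weight in [0, 1]) toward its neighbors' average."""
    cx = sum(x for x, _ in neighbors) / len(neighbors)
    cy = sum(y for _, y in neighbors) / len(neighbors)
    return (node[0] + weight * (cx - node[0]), node[1] + weight * (cy - node[1]))


if __name__ == "__main__":
    square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    # The third node has been dragged far out of place, so the signed area turns negative.
    folded = [(0.0, 0.0), (1.0, 0.0), (-0.5, -1.5), (0.0, 1.0)]
    print(signed_area(square), is_inverted(square))  # 1.0 False
    print(signed_area(folded), is_inverted(folded))  # -1.0 True
    print(laplacian_relax((0.8, 0.3), [(1.0, 0.0), (2.0, 1.0), (1.0, 2.0), (0.0, 1.0)], weight=0.5))
```

A purely Lagrangian run never calls anything like `laplacian_relax`, so nothing stops a cell from reaching the `folded` state; relaxing nodes before that happens is what the ALE options trade resolution for.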

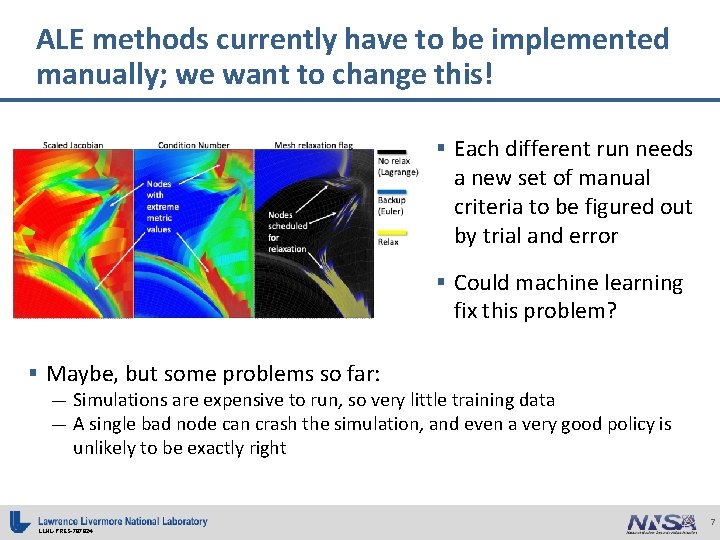

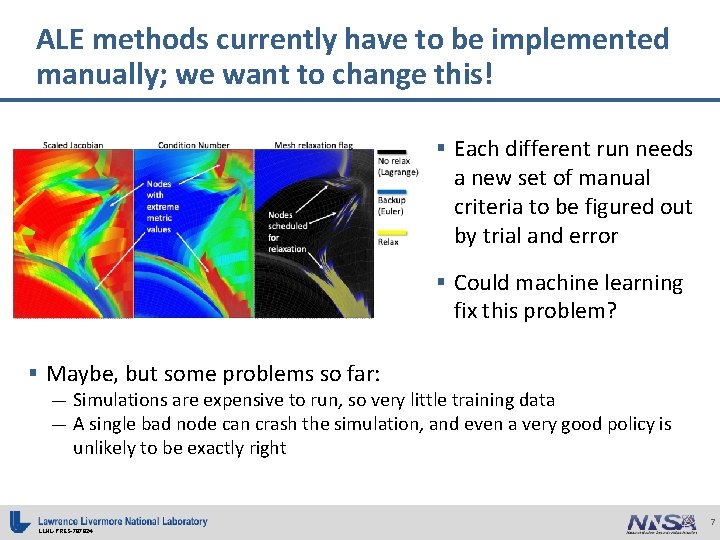

ALE mesh management currently has to be set up manually; we want to change this!

- Each new run needs its own set of manual criteria, found by trial and error
- Could machine learning fix this problem?
- Maybe, but there are some problems so far:
  - Simulations are expensive to run, so there is very little training data
  - A single bad node can crash the simulation, and even a very good policy is unlikely to be exactly right

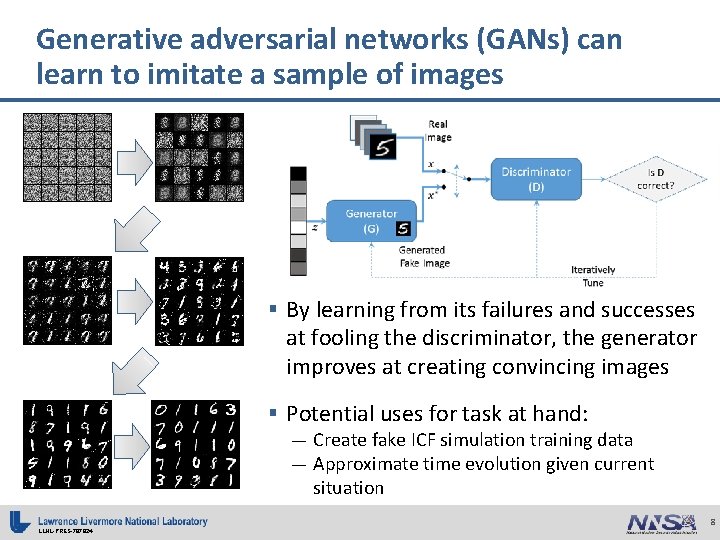

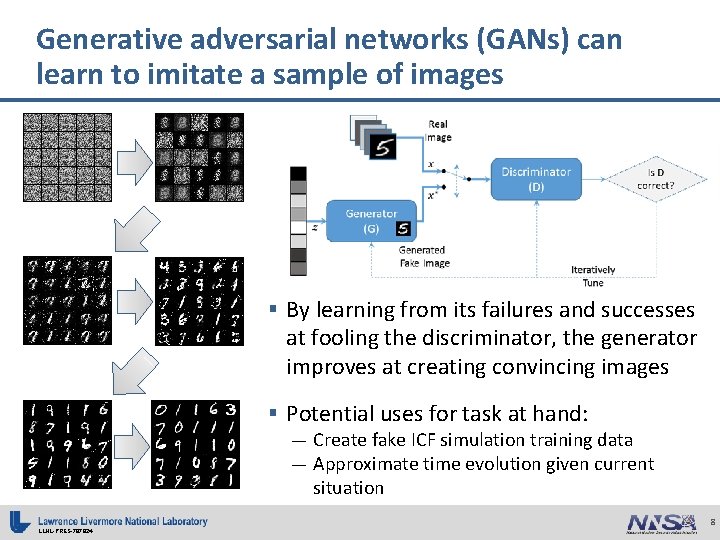

Generative adversarial networks (GANs) can learn to imitate a sample of images

- A discriminator learns to tell real images apart from generated ones, while a generator tries to fool it
- By learning from its failures and successes at fooling the discriminator, the generator gets better at creating convincing images
- Potential uses for the task at hand:
  - Create fake ICF simulation training data
  - Approximate the time evolution of a simulation given its current state
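The adversarial game above is usually written as a pair of losses. The sketch below computes them in plain Python, assuming the discriminator outputs the probability that its input is real and using the common "non-saturating" generator loss. It is an illustration of the standard formulation, not necessarily the exact loss used in this project.

```python
import math
from typing import Sequence

EPS = 1e-12  # keeps log() away from zero


def bce(p: float, target: float) -> float:
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """The discriminator wants D(real) -> 1 and D(fake) -> 0."""
    real = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return 0.5 * (real + fake)


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: the generator wants D(fake) -> 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)
```

When the discriminator confidently rejects the fakes (outputs near 0), `generator_loss` is large, and its gradient is what pushes the generator toward more convincing images; when the fakes fool the discriminator, the discriminator's loss rises instead.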

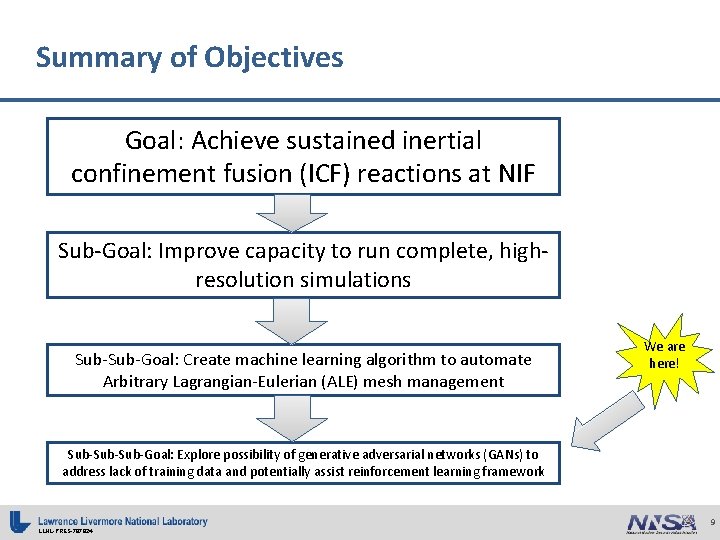

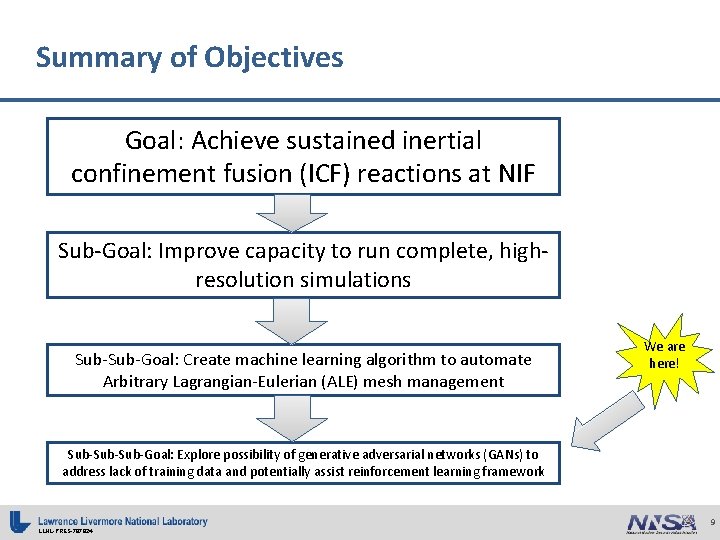

Summary of Objectives

- Goal: Achieve sustained inertial confinement fusion (ICF) reactions at NIF
- Sub-Goal: Improve the capacity to run complete, high-resolution simulations
- Sub-Goal: Create a machine learning algorithm to automate Arbitrary Lagrangian-Eulerian (ALE) mesh management
- Sub-Sub-Goal (we are here!): Explore the possibility of using generative adversarial networks (GANs) to address the lack of training data and potentially assist a reinforcement learning framework

Methodology

- We downloaded various GANs from a very useful GitHub repository (https://github.com/eriklindernoren/PyTorch-GAN) and modified them to accept ICF simulation data
- We experimented with various parameters to see what worked and what didn't
- Useful realizations:
  - Preconditioning the data is extremely important, because many of the features have very skewed distributions
  - Varying the basic network setup (e.g., the number of nodes per layer or the Adam optimizer hyperparameters) hardly matters
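The slides do not say which preconditioning was used. As one hedged example, a strongly skewed feature spanning several orders of magnitude can be compressed with a sign-preserving log transform and then standardized to zero mean and unit variance; keeping the mean and standard deviation lets generated values be mapped back to physical units. The helper names below are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import List, Tuple


def signed_log1p(x: float) -> float:
    """Compress large magnitudes while keeping the sign: sign(x) * log(1 + |x|)."""
    return math.copysign(math.log1p(abs(x)), x)


def inverse_signed_log1p(y: float) -> float:
    """Undo signed_log1p."""
    return math.copysign(math.expm1(abs(y)), y)


def precondition(values: List[float]) -> Tuple[List[float], float, float]:
    """Log-compress, then standardize.

    Returns the scaled values plus the mean and standard deviation
    needed to undo the scaling later.
    """
    logged = [signed_log1p(v) for v in values]
    mu = mean(logged)
    sigma = pstdev(logged) or 1.0  # avoid dividing by zero for a constant feature
    return [(v - mu) / sigma for v in logged], mu, sigma


def postcondition(scaled: List[float], mu: float, sigma: float) -> List[float]:
    """Map network outputs back to the original units."""
    return [inverse_signed_log1p(s * sigma + mu) for s in scaled]
```

Without the log step, a feature like `[0, 1, 10, 100, 1e4, 1e6]` would be standardized into one large outlier and five values crowded near the same point, which gives a network very little to learn from.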

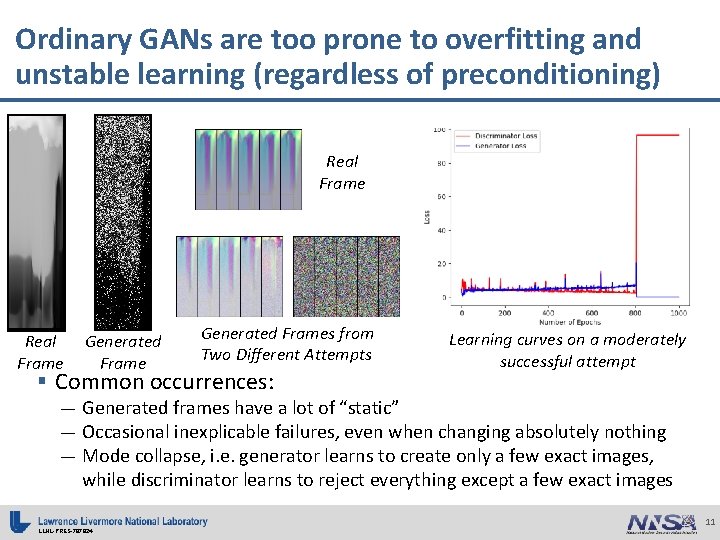

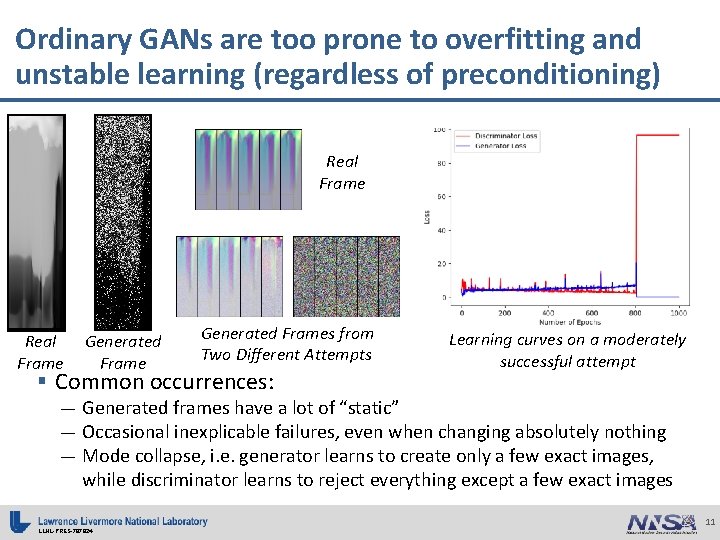

Ordinary GANs are too prone to overfitting and unstable learning (regardless of preconditioning)

(Figures: real frame; generated frames from two different attempts; learning curves on a moderately successful attempt)

- Common occurrences:
  - Generated frames have a lot of "static"
  - Occasional inexplicable failures, even when changing absolutely nothing
  - Mode collapse, i.e., the generator learns to create only a few exact images, while the discriminator learns to reject everything except those few exact images
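One rough way to notice mode collapse is to measure how different the generated frames are from one another: if the average pairwise distance within a batch of generated frames is far smaller than within a batch of real frames, the generator is probably producing only a few distinct outputs. The sketch below treats frames as flat lists of floats; the diagnostic and its function names are an illustration, not something these slides report using.

```python
import math
from itertools import combinations
from typing import List, Sequence

Frame = Sequence[float]


def l2_distance(a: Frame, b: Frame) -> float:
    """Euclidean distance between two flattened frames."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean_pairwise_distance(frames: List[Frame]) -> float:
    """Average L2 distance over all distinct pairs of frames (needs at least two)."""
    pairs = list(combinations(frames, 2))
    return sum(l2_distance(a, b) for a, b in pairs) / len(pairs)


def diversity_ratio(generated: List[Frame], real: List[Frame]) -> float:
    """Near 1: the generated batch is about as varied as the real data.
    Near 0: the generated frames are nearly identical, a symptom of mode collapse."""
    return mean_pairwise_distance(generated) / mean_pairwise_distance(real)
```

A low ratio does not prove collapse (a small real batch can be unrepresentative), but tracking it alongside the learning curves is cheap.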

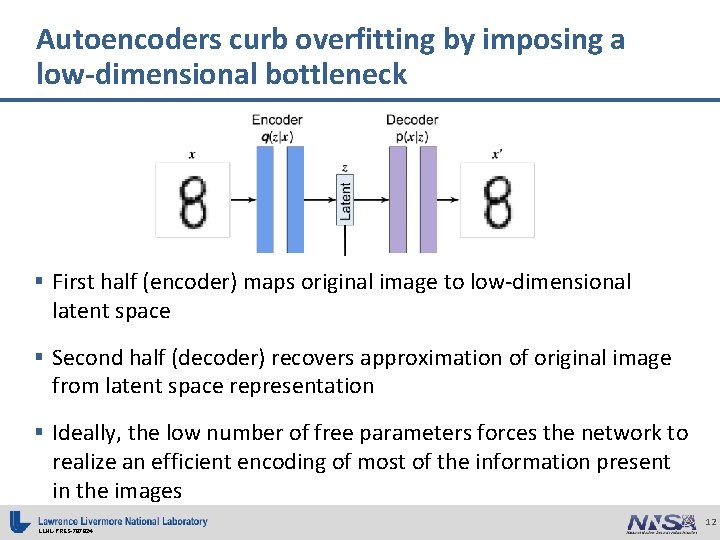

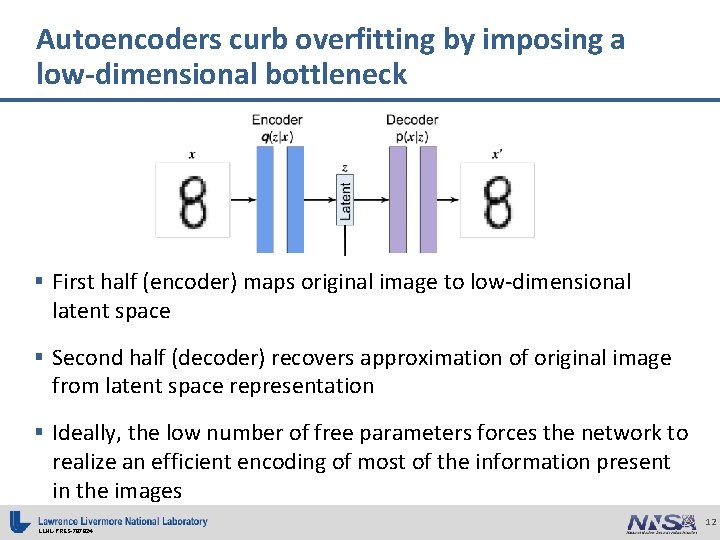

Autoencoders curb overfitting by imposing a low-dimensional bottleneck

- The first half (encoder) maps the original image to a low-dimensional latent space
- The second half (decoder) recovers an approximation of the original image from its latent-space representation
- Ideally, the small number of latent dimensions forces the network to find an efficient encoding of most of the information present in the images
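A linear autoencoder is the simplest way to see the bottleneck at work. In the hypothetical sketch below, 4-value "frames" are encoded into a 2-number latent vector by projecting onto two orthonormal directions and decoded by mapping back. Frames that lie in the span of those directions are recovered exactly, while anything outside it is lost. A real autoencoder learns nonlinear encoder and decoder networks instead of using hand-picked weights.

```python
import math
from typing import List

Vector = List[float]

# Two orthonormal directions in a 4-dimensional "image" space, chosen by hand here;
# a trained autoencoder would learn its encoder and decoder weights instead.
S = 1.0 / math.sqrt(2.0)
BASIS: List[Vector] = [
    [S, S, 0.0, 0.0],
    [0.0, 0.0, S, S],
]


def encode(x: Vector) -> Vector:
    """Encoder: 4 values -> 2 latent coordinates (dot product with each basis vector)."""
    return [sum(b_i * x_i for b_i, x_i in zip(b, x)) for b in BASIS]


def decode(z: Vector) -> Vector:
    """Decoder: 2 latent coordinates -> 4 values (weighted sum of the basis vectors)."""
    return [sum(z[k] * BASIS[k][i] for k in range(len(BASIS))) for i in range(len(BASIS[0]))]


def reconstruction_error(x: Vector) -> float:
    """Squared error between a frame and its round trip through the bottleneck."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))
```

For example, `[3.0, 3.0, -1.0, -1.0]` survives the round trip, but `[1.0, -1.0, 0.0, 0.0]` encodes to `[0.0, 0.0]` and comes back as all zeros: the bottleneck cannot simply copy every input.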

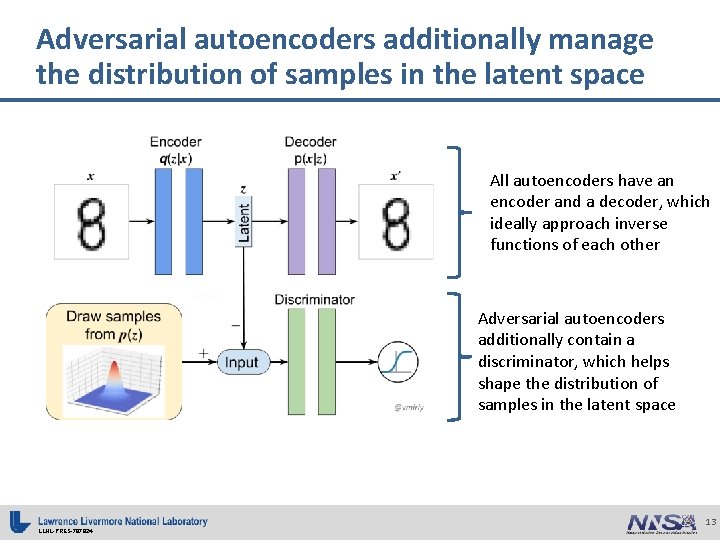

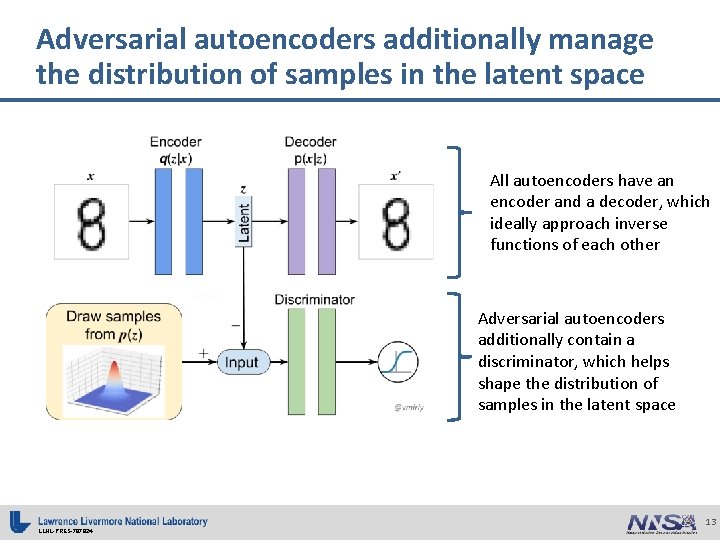

Adversarial autoencoders additionally manage the distribution of samples in the latent space

- All autoencoders have an encoder and a decoder, which ideally approach inverse functions of each other
- Adversarial autoencoders additionally contain a discriminator, which helps shape the distribution of samples in the latent space (for example, pushing it toward a chosen prior distribution)
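In an adversarial autoencoder, each training step combines a reconstruction objective with a small GAN played in the latent space: the discriminator treats latent codes drawn from a chosen prior (assumed here to be a standard normal) as "real" and codes produced by the encoder as "fake", and the encoder is rewarded for fooling it. The sketch below only assembles these losses from numbers the networks would produce; the function names are hypothetical and the networks themselves are left out.

```python
import math
import random
from typing import List, Sequence

EPS = 1e-12  # keeps log() away from zero


def bce(p: float, target: float) -> float:
    """Binary cross-entropy for one predicted probability p and a 0/1 target."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def sample_prior(latent_dim: int, rng: random.Random) -> List[float]:
    """Draw a latent code from the assumed prior, a standard normal."""
    return [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]


def reconstruction_loss(x: Sequence[float], x_hat: Sequence[float]) -> float:
    """Mean squared error between a frame and decoder(encoder(frame))."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def latent_discriminator_loss(d_prior: Sequence[float], d_encoded: Sequence[float]) -> float:
    """Discriminator: codes from the prior are 'real', codes from the encoder are 'fake'."""
    real = sum(bce(p, 1.0) for p in d_prior) / len(d_prior)
    fake = sum(bce(p, 0.0) for p in d_encoded) / len(d_encoded)
    return 0.5 * (real + fake)


def encoder_regularization_loss(d_encoded: Sequence[float]) -> float:
    """The encoder is rewarded when its codes are mistaken for prior samples."""
    return sum(bce(p, 1.0) for p in d_encoded) / len(d_encoded)
```

Once training succeeds, new frames are generated by feeding `sample_prior(...)` directly to the decoder; shaping the latent distribution is what makes those random codes land somewhere the decoder knows how to handle.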

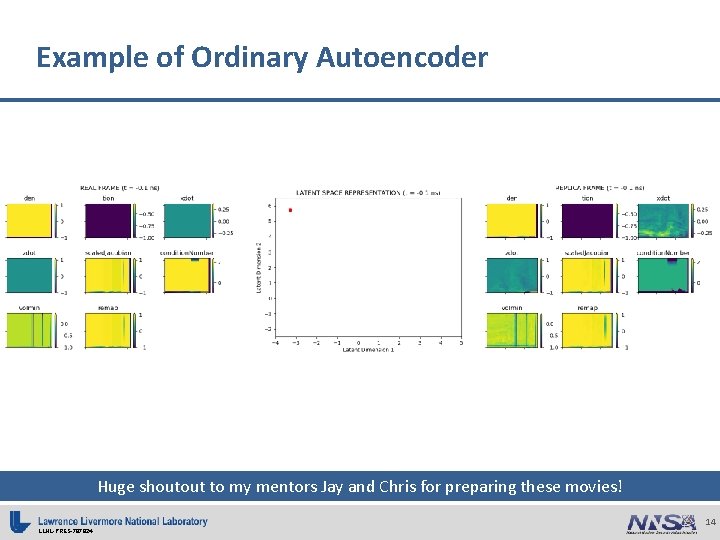

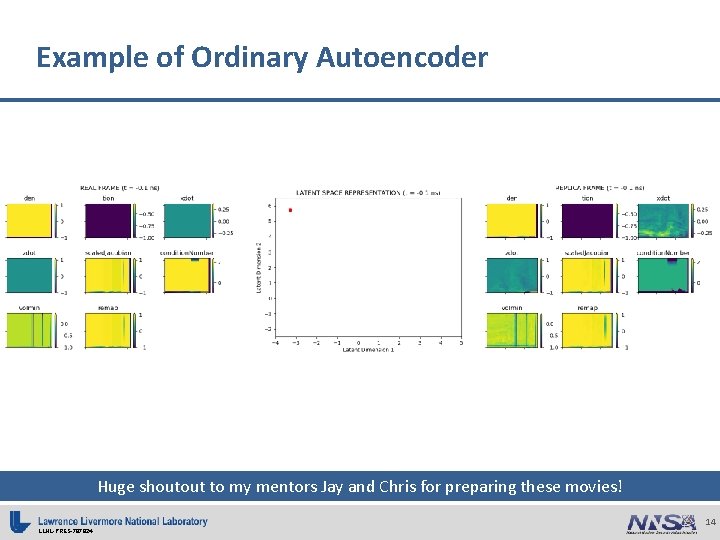

Example of an Ordinary Autoencoder

Huge shoutout to my mentors Jay and Chris for preparing these movies!

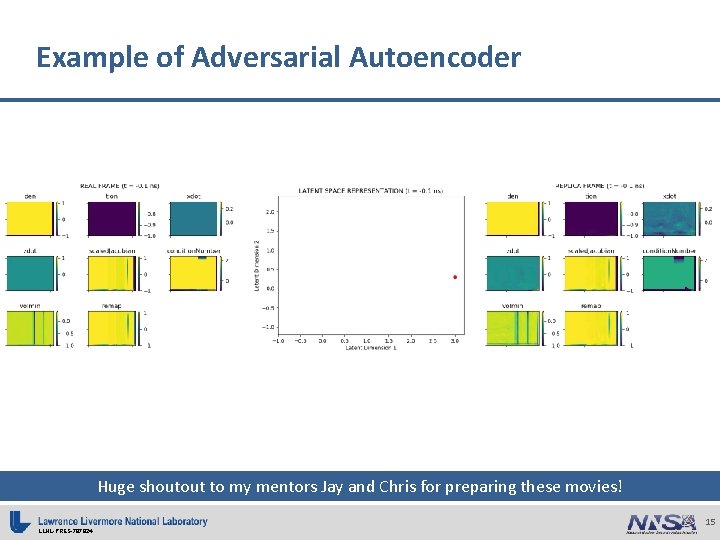

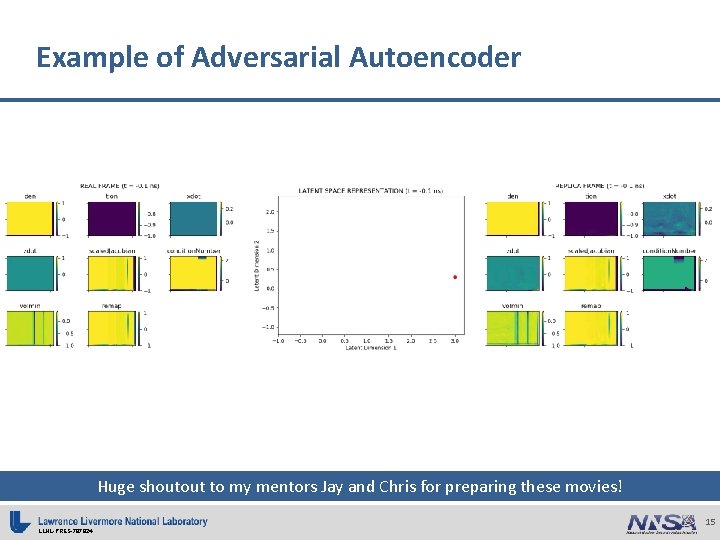

Example of an Adversarial Autoencoder

Huge shoutout to my mentors Jay and Chris for preparing these movies!

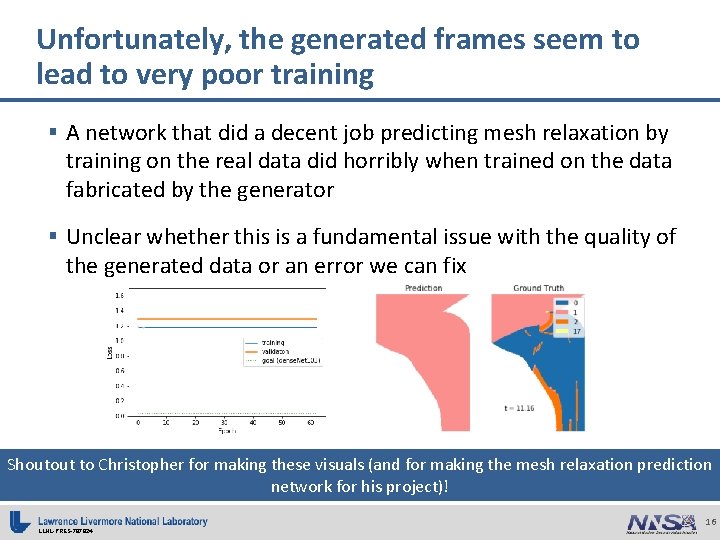

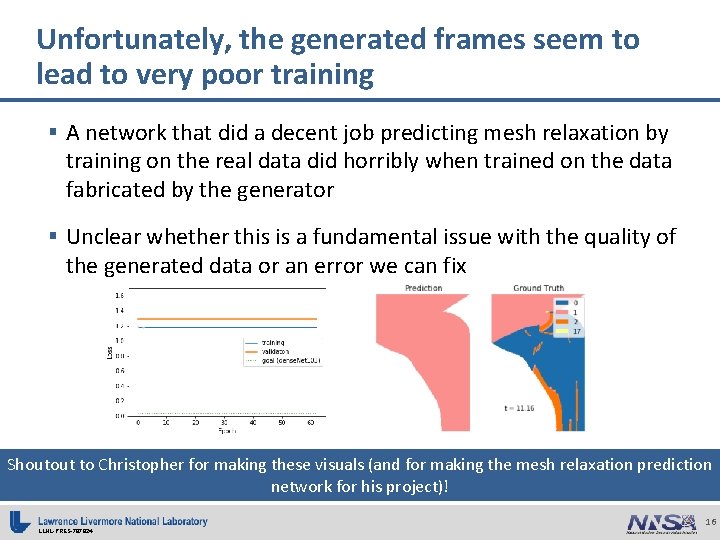

Unfortunately, the generated frames seem to lead to very poor training

- A network that did a decent job of predicting mesh relaxation when trained on the real data did horribly when trained on the data fabricated by the generator
- It is unclear whether this is a fundamental issue with the quality of the generated data or an error we can fix

Shoutout to Christopher for making these visuals (and for making the mesh relaxation prediction network for his project)!
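The comparison on this slide amounts to training the same model twice, once on real frames and once on generated frames, and scoring both on the same held-out real frames. The toy sketch below shows that protocol with a one-variable least-squares fit standing in for the mesh relaxation network; the data, and the "generated" set with plausible inputs but a slightly distorted input-output relationship, are made up purely for illustration.

```python
from typing import List, Tuple

Pairs = List[Tuple[float, float]]


def fit_line(data: Pairs) -> Tuple[float, float]:
    """Ordinary least squares for y = a * x + b."""
    n = len(data)
    mx = sum(x for x, _ in data) / n
    my = sum(y for _, y in data) / n
    sxx = sum((x - mx) ** 2 for x, _ in data)
    sxy = sum((x - mx) * (y - my) for x, y in data)
    a = sxy / sxx
    return a, my - a * mx


def mse(model: Tuple[float, float], data: Pairs) -> float:
    """Mean squared prediction error of a fitted line on (x, y) pairs."""
    a, b = model
    return sum((a * x + b - y) ** 2 for x, y in data) / len(data)


def compare_training_sources(real_train: Pairs, generated_train: Pairs, real_test: Pairs) -> Tuple[float, float]:
    """Train once on each source, then score both models on the same held-out real data."""
    return mse(fit_line(real_train), real_test), mse(fit_line(generated_train), real_test)


if __name__ == "__main__":
    real_train = [(float(x), 2.0 * x + 1.0) for x in range(10)]
    real_test = [(x + 0.5, 2.0 * (x + 0.5) + 1.0) for x in range(10)]
    # Hypothetical generated data: realistic-looking inputs, slightly wrong relationship.
    generated_train = [(float(x), 1.5 * x + 3.0) for x in range(10)]
    print(compare_training_sources(real_train, generated_train, real_test))
```

Evaluating on real held-out data is the key design choice: a generated set can look convincing on its own and still teach the model the wrong relationship.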

Conclusions

- Major risk of overfitting with standard GANs
- Autoencoders show some promise
  - Their low dimensionality prevents them from simply copying real frames exactly
  - Whether autoencoder-generated frames can serve as good training data remains to be seen
- Most applications of GANs require only visual similarity between generated frames and real frames
  - Here, we care very much about individual pixel values, and it may well be unrealistic to expect this level of precision
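The last point can be made concrete: two frames can contain exactly the same set of values, and so look statistically alike, while disagreeing badly pixel by pixel. The hypothetical sketch below compares a tiny 1D "frame" with a copy shifted by one cell, using sorted values as a crude distribution-level comparison and mean squared error as the pixel-level one.

```python
from typing import List


def distribution_gap(a: List[float], b: List[float]) -> float:
    """Compare sorted values: zero whenever the two frames contain the same values."""
    return max(abs(x - y) for x, y in zip(sorted(a), sorted(b)))


def pixel_mse(a: List[float], b: List[float]) -> float:
    """Compare values cell by cell, the way a downstream mesh model would see them."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


frame = [0.0, 0.0, 1.0, 5.0, 1.0, 0.0, 0.0, 0.0]
shifted = frame[-1:] + frame[:-1]  # the same feature, moved one cell to the right

print(distribution_gap(frame, shifted))  # 0.0
print(pixel_mse(frame, shifted))  # 4.25
```

A generator judged only on "does this look like a real frame" can be rewarded for `shifted`, even though a model reading individual cells would see a substantially different state.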

Future Avenues of Exploration

- Explore how to further improve the quality of generated frames so that they can serve as training data
- Continue working on the original mesh management ML algorithm to see whether fake training data is truly necessary
- Explore a reinforcement learning framework that starts with a fairly good policy and uses simulation runs to gradually refine it
  - Anticipating the approximate time evolution of a frame in order to compute reward values might be another application of GANs (although other methods may work as well)

Acknowledgements

- Chris Young, Jay Salmonson
  - Mentorship throughout the summer
  - Answering my dumb questions about command-line and coding issues
  - Assistance with data visualization and visuals for this presentation
- Christopher Yang
  - Many constructive conversations
  - Answering my dumb questions about command-line and coding issues
  - Assistance with data visualization and visuals for this presentation
- Rushil Anirudh, Jayaraman Thiagarajan, Brenden Petersen, Daniel Faissol
  - Machine learning expertise
- Everyone else in WCI (especially R1007)
  - Many fun times throughout the summer

Disclaimer This document was prepared as an account of work sponsored by an agency of the United States government. Neither the United States government nor Lawrence Livermore National Security, LLC, nor any of their employees makes any warranty, expressed or implied, or assumes any legal liability or responsibility for the accuracy, completeness, or usefulness of any information, apparatus, product, or process disclosed, or represents that its use would not infringe privately owned rights. Reference herein to any specific commercial product, process, or service by trade name, trademark, manufacturer, or otherwise does not necessarily constitute or imply its endorsement, recommendation, or favoring by the United States government or Lawrence Livermore National Security, LLC. The views and opinions of authors expressed herein do not necessarily state or reflect those of the United States government or Lawrence Livermore National Security, LLC, and shall not be used for advertising or product endorsement purposes.