Automatic Modulation Recognition Using Generative Adversarial Networks


Group 4: Jiawei Yin, Jinglong Du, Ziwen Li

Background
• In recent years, fixed spectrum allocation and the growing number of wireless devices have made spectrum resources increasingly scarce.
• Spectrum sensing is a technique that helps allocate these limited resources.
• A key enabler of spectrum sensing is automatic modulation recognition (AMR).

Background
• In the past, AMR relied on manual work. To extend AMR to unfamiliar tasks and signals, Generative Adversarial Networks (GANs) are introduced to help with modulation classification.
• A GAN plays a min-max game between a generator (G) and a discriminator (D).
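For reference, the min-max game of Goodfellow et al. [5] is usually written as the value function below. The first expectation is over real samples x, the second over noise vectors z fed to the generator, and D(·) is the discriminator's estimated probability that its input is real. D is trained to maximize V, while G is trained to minimize it.

```latex
\min_{G}\max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```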

Literature Review: AMR
• 1996, Azzouz et al., “Automatic Modulation Recognition of Communication Signals” [1].
• 2016, O’Shea et al. proposed applying a CNN to modulation recognition [2], using the time-domain in-phase and quadrature (IQ) signal as the network input.
• 2018, Li et al., “Robust Automated VHF Modulation Recognition Based on Deep Convolutional Neural Networks” [3].
• 2018, Tang et al. [4] successfully linked signal processing with computer vision by applying GANs for data augmentation.

![Literature Review: GAN](https://slidetodoc.com/presentation_image_h2/a569cfe37fa794f9b7ff5c56838f4c5d/image-5.jpg)

Literature Review: GAN
• 2014, Goodfellow et al., Generative Adversarial Network (GAN) [5]
• 2014, Mirza et al., Conditional Generative Adversarial Network (CGAN) [6]
• 2016, Radford et al., Deep Convolutional Generative Adversarial Network (DCGAN) [7]

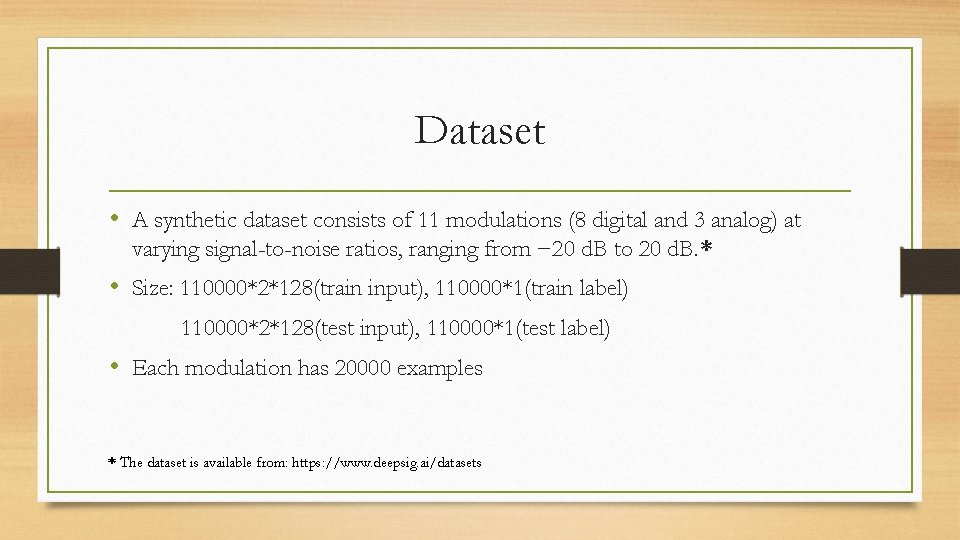

Dataset
• A synthetic dataset of 11 modulations (8 digital and 3 analog) at signal-to-noise ratios (SNRs) ranging from −20 dB to 20 dB.
• Size: 110000 × 2 × 128 (train input), 110000 × 1 (train label); 110000 × 2 × 128 (test input), 110000 × 1 (test label).
• Each modulation has 20000 examples.
• The dataset is available from: https://www.deepsig.ai/datasets
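Each example is a 2 × 128 array: one row of in-phase (I) samples and one row of quadrature (Q) samples. The sketch below illustrates that layout and what a given SNR means by adding white Gaussian noise to a hypothetical QPSK burst. It is a simplified illustration under the stated SNR definition, not the DeepSig generation pipeline; the function names are hypothetical.

```python
import cmath
import math
import random


def make_iq_frame(symbols, snr_db, rng):
    """Add complex white Gaussian noise at snr_db and return a 2 x N IQ frame.

    Row 0 holds the in-phase (I) samples and row 1 the quadrature (Q) samples.
    Assumption: SNR = average signal power / noise power, with the noise power
    split equally between I and Q.
    """
    signal_power = sum(abs(s) ** 2 for s in symbols) / len(symbols)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power / 2)  # standard deviation per component
    noisy = [s + complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)) for s in symbols]
    return [[x.real for x in noisy], [x.imag for x in noisy]]


def measured_snr_db(clean, frame):
    """Estimate the SNR (dB) of an IQ frame against the noiseless samples."""
    noise = [complex(i, q) - c for c, i, q in zip(clean, frame[0], frame[1])]
    p_signal = sum(abs(c) ** 2 for c in clean) / len(clean)
    p_noise = sum(abs(n) ** 2 for n in noise) / len(noise)
    return 10 * math.log10(p_signal / p_noise)


rng = random.Random(0)
# Hypothetical QPSK burst: 128 unit-power symbols at angles pi/4 + k*pi/2.
qpsk = [cmath.exp(1j * (math.pi / 4 + math.pi / 2 * rng.randrange(4))) for _ in range(128)]
frame = make_iq_frame(qpsk, snr_db=10, rng=rng)
print(len(frame), len(frame[0]))  # 2 128
```

Stacking 110000 such frames gives the 110000 × 2 × 128 input arrays listed above.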

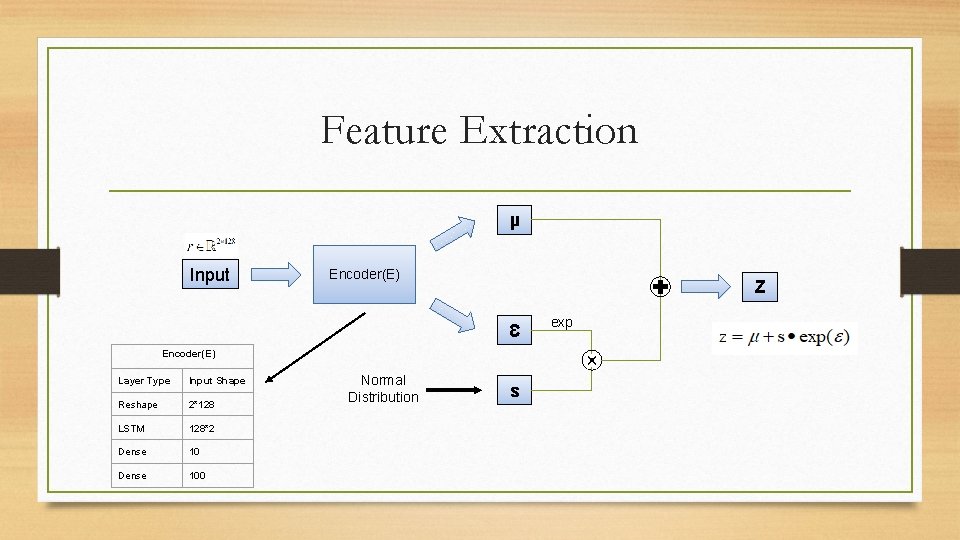

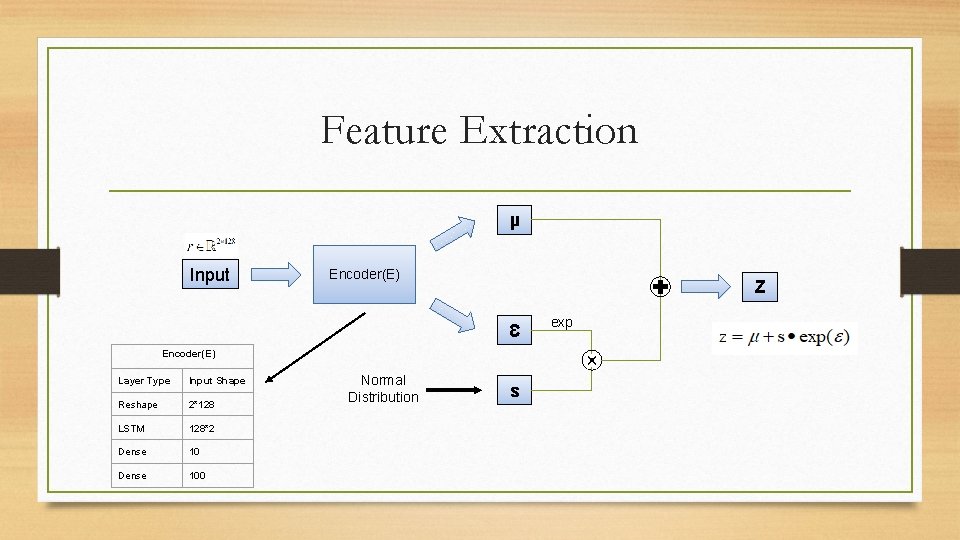

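Feature Extraction
The encoder diagram shows the input passing through Encoder (E), which outputs µ and s; the latent code Z is formed from µ, exp(s), and noise ε drawn from a normal distribution.

Encoder (E)

| Layer Type | Input Shape |
|---|---|
| Reshape | 2×128 |
| LSTM | 128×2 |
| Dense | 100 |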
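The µ, s, ε, exp and normal-distribution labels in the encoder diagram match the reparameterization trick used in variational autoencoders, where a latent sample is built as z = µ + σ·ε. The sketch below assumes that reading and further assumes s is a log-variance, so σ = exp(s/2); the slides confirm neither point, and the function name and input values are hypothetical.

```python
import math
import random


def sample_latent(mu, s, rng):
    """Reparameterized sample z = mu + exp(s / 2) * eps, with eps ~ N(0, 1) per element.

    Assumption: s is a log-variance, so exp(s / 2) is the standard deviation.
    If s were a log standard deviation instead, the scale would be exp(s).
    """
    return [m + math.exp(v / 2) * rng.gauss(0.0, 1.0) for m, v in zip(mu, s)]


rng = random.Random(42)
mu = [0.5] * 100   # hypothetical encoder mean (width 100, matching the Dense layer)
s = [-2.0] * 100   # hypothetical encoder log-variance
z = sample_latent(mu, s, rng)
print(len(z))  # 100
```

Drawing ε separately and then scaling and shifting it keeps z differentiable with respect to µ and s, which is why this form is used instead of sampling z directly.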

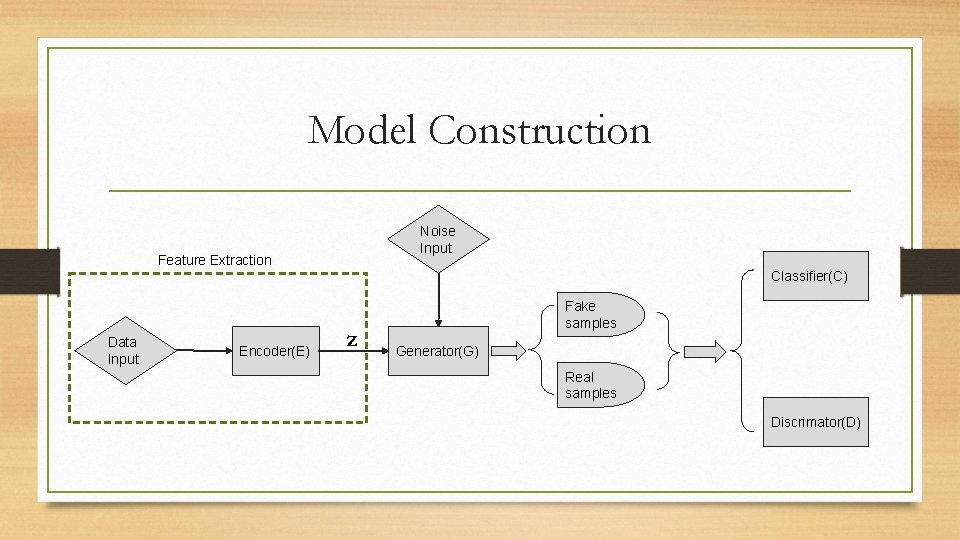

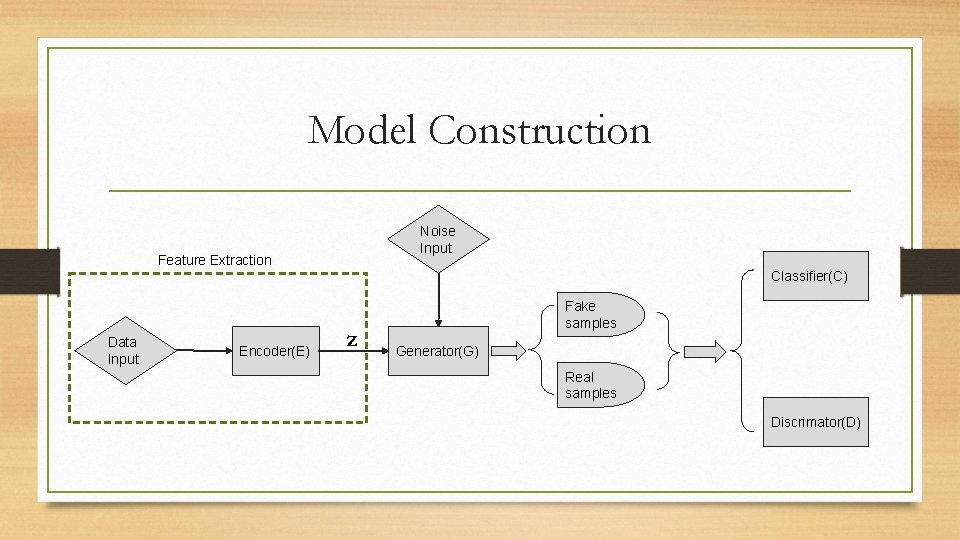

Model Construction
• Inputs: data input and noise input.
• Feature extraction: Encoder (E) → latent code z, and the Classifier (C).
• Generator (G): produces fake samples.
• Discriminator (D): receives real samples and fake samples.

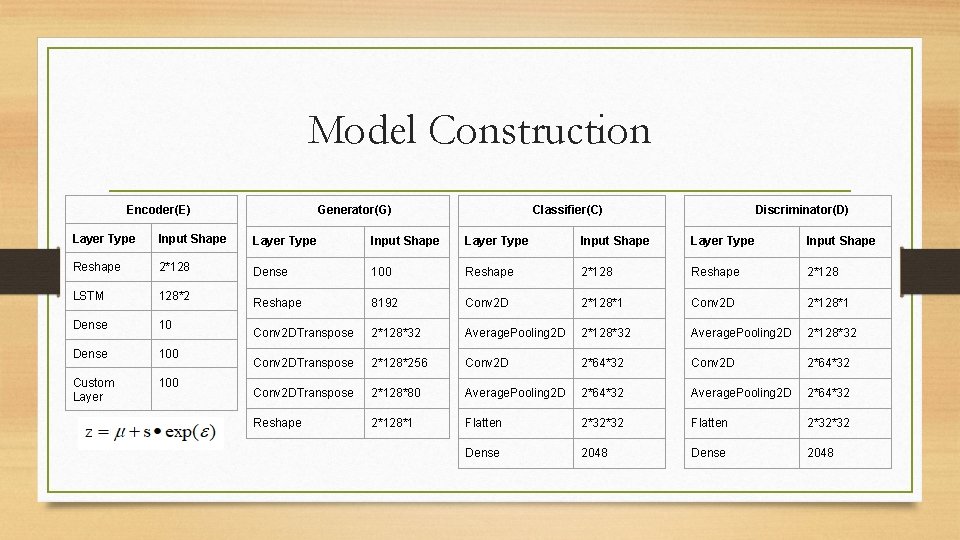

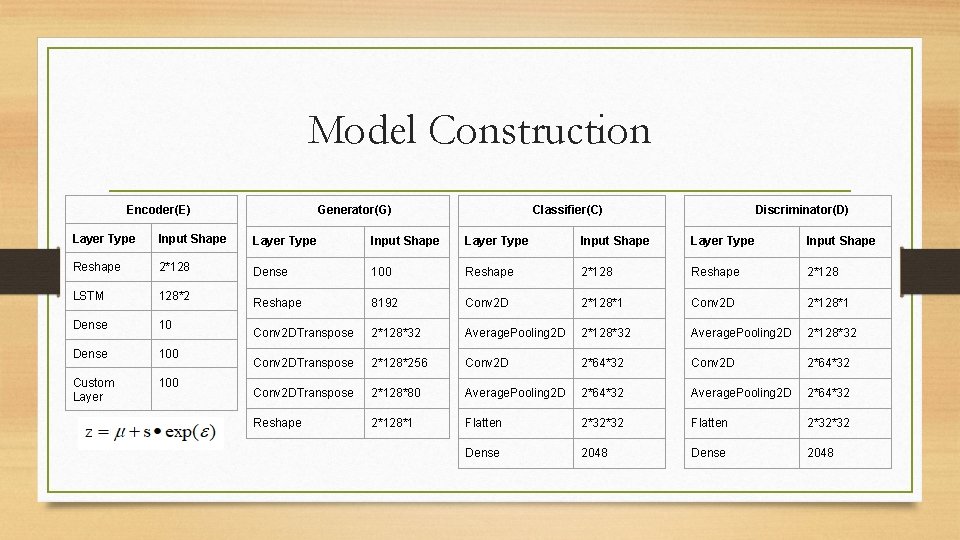

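Model Construction

Generator (G)

| Layer Type | Input Shape |
|---|---|
| Dense | 100 |
| Reshape | 8192 |
| Conv2DTranspose | 2×128×32 |
| Conv2DTranspose | 2×128×256 |
| Conv2DTranspose | 2×128×80 |
| Reshape | 2×128×1 |

Encoder (E)

| Layer Type | Input Shape |
|---|---|
| Reshape | 2×128 |
| LSTM | 128×2 |
| Dense | 100 |
| Custom Layer | 100 |

Classifier (C)

| Layer Type | Input Shape |
|---|---|
| Dense | 10 |

Discriminator (D)

| Layer Type | Input Shape |
|---|---|
| Reshape | 2×128 |
| Conv2D | 2×128×1 |
| AveragePooling2D | 2×128×32 |
| Conv2D | 2×64×32 |
| AveragePooling2D | 2×64×32 |
| Flatten | 2×32×32 |
| Dense | 2048 |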
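Each Input Shape entry for the generator and discriminator should equal the output shape of the layer before it. The sketch below propagates shapes through both stacks to check this, under assumptions the slides do not state: the generator starts from a 100-dimensional input that a Dense layer projects to 8192 = 2×128×32 values, convolutions use stride 1 with 'same' padding, average pooling uses a (1, 2) window, each discriminator Conv2D has 32 filters, and the final Dense outputs a single real/fake score. These values were chosen only because they reproduce the listed shapes; all helper names are hypothetical.

```python
import math


def conv_same(shape, filters):
    """Conv2D or Conv2DTranspose with stride 1 and 'same' padding: H and W unchanged."""
    h, w, _ = shape
    return (h, w, filters)


def avg_pool(shape, pool=(1, 2)):
    """AveragePooling2D with pool size = stride = pool (assumed (1, 2))."""
    h, w, c = shape
    return (h // pool[0], w // pool[1], c)


def reshape(shape, new_shape):
    """Reshape, checking that the element count is preserved."""
    assert math.prod(shape) == math.prod(new_shape), (shape, new_shape)
    return tuple(new_shape)


def trace_generator(noise_dim=100):
    shapes = [(noise_dim,)]                          # Dense input
    shapes.append((8192,))                           # Dense output: 8192 units (assumed)
    shapes.append(reshape(shapes[-1], (2, 128, 32)))
    for filters in (256, 80, 1):                     # three Conv2DTranspose layers
        shapes.append(conv_same(shapes[-1], filters))
    shapes.append(reshape(shapes[-1], (2, 128)))     # back to a 2 x 128 IQ frame
    return shapes


def trace_discriminator():
    shapes = [(2, 128)]                              # IQ frame
    shapes.append(reshape(shapes[-1], (2, 128, 1)))  # add a channel axis
    shapes.append(conv_same(shapes[-1], 32))         # Conv2D, 32 filters (assumed)
    shapes.append(avg_pool(shapes[-1]))              # -> 2 x 64 x 32
    shapes.append(conv_same(shapes[-1], 32))         # Conv2D, 32 filters (assumed)
    shapes.append(avg_pool(shapes[-1]))              # -> 2 x 32 x 32
    shapes.append((math.prod(shapes[-1]),))          # Flatten -> 2048
    shapes.append((1,))                              # Dense: one real/fake score (assumed)
    return shapes


for name, trace in (("G", trace_generator()), ("D", trace_discriminator())):
    print(name, " -> ".join("x".join(map(str, s)) for s in trace))
```

Under these assumptions every Input Shape entry in the G and D tables appears in the traces, including 8192 = 2×128×32 before the generator's first Conv2DTranspose and 2048 = 2×32×32 at the discriminator's Flatten.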

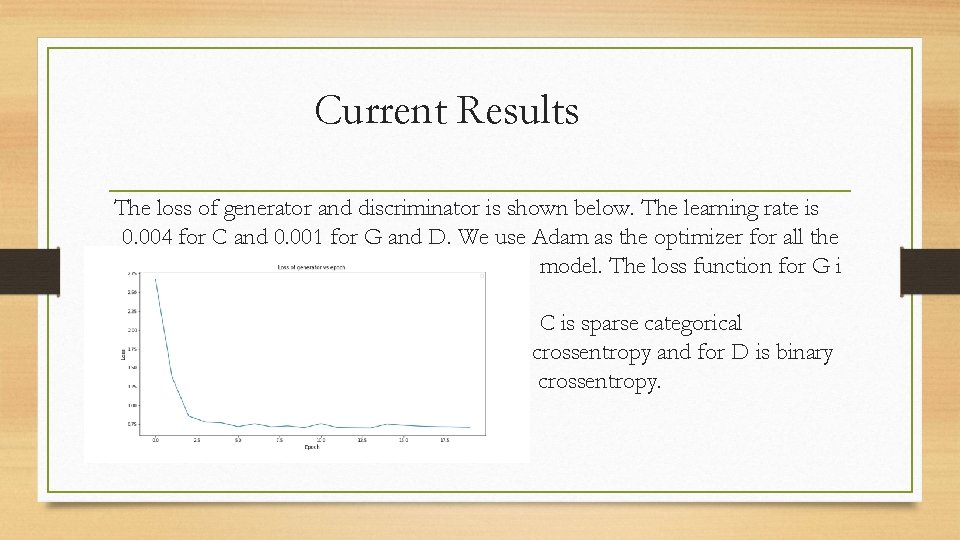

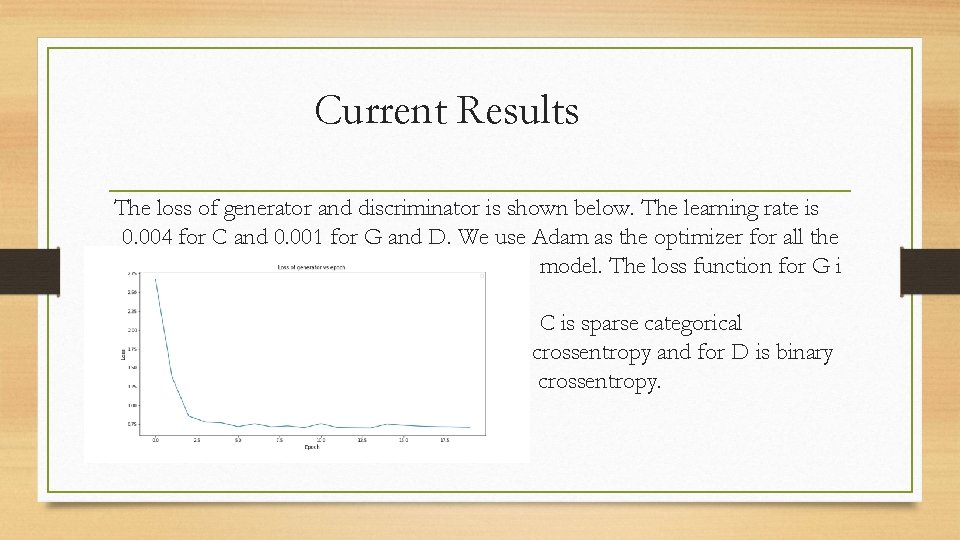

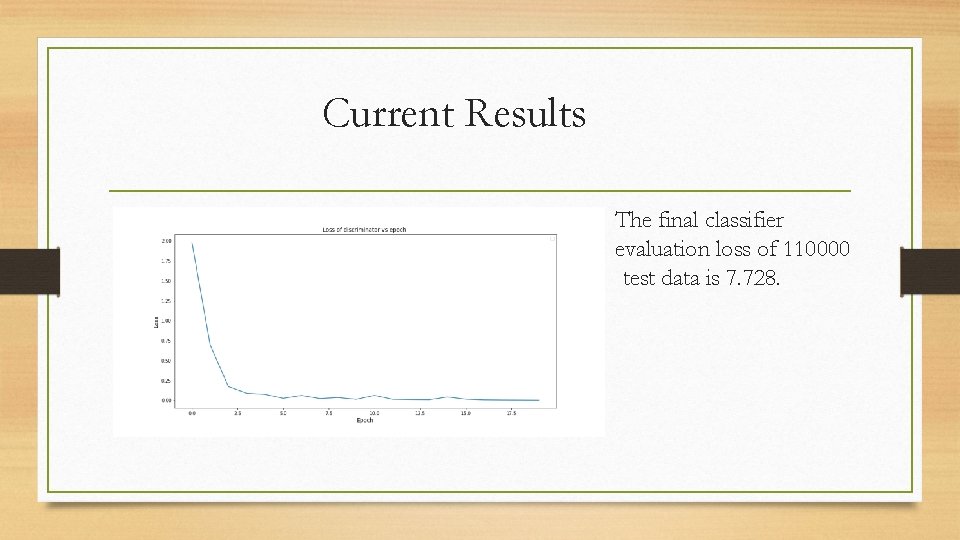

Current Results
The generator and discriminator losses are shown below. The learning rate is 0.004 for C and 0.001 for G and D, and we use Adam as the optimizer for all models. The loss function is mean squared error for G, sparse categorical cross-entropy for C, and binary cross-entropy for D.
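The three losses named above can be written out directly. The plain-Python versions below are a minimal sketch of their standard definitions, averaged over a batch; deep learning frameworks may differ in details such as clipping and numerical stabilization, and the function names are hypothetical.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error (the loss used for G)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_crossentropy(y_true, p_pred, eps=1e-7):
    """Mean binary cross-entropy (the loss used for D).

    y_true is 1 for real samples and 0 for fake ones; p_pred is D's
    probability that each sample is real.
    """
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean cross-entropy (the loss used for C) with integer class labels.

    Each row of probs holds the predicted probability of every class.
    """
    total = 0.0
    for label, row in zip(labels, probs):
        total += -math.log(max(row[label], eps))  # clip to avoid log(0)
    return total / len(labels)


# Uniform predictions over the 11 modulation classes:
print(round(sparse_categorical_crossentropy([0], [[1 / 11] * 11]), 3))  # 2.398
```

With uniform predictions over the 11 modulation classes, the sparse categorical cross-entropy equals ln 11 ≈ 2.398, which is a useful reference point when reading classifier loss values.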

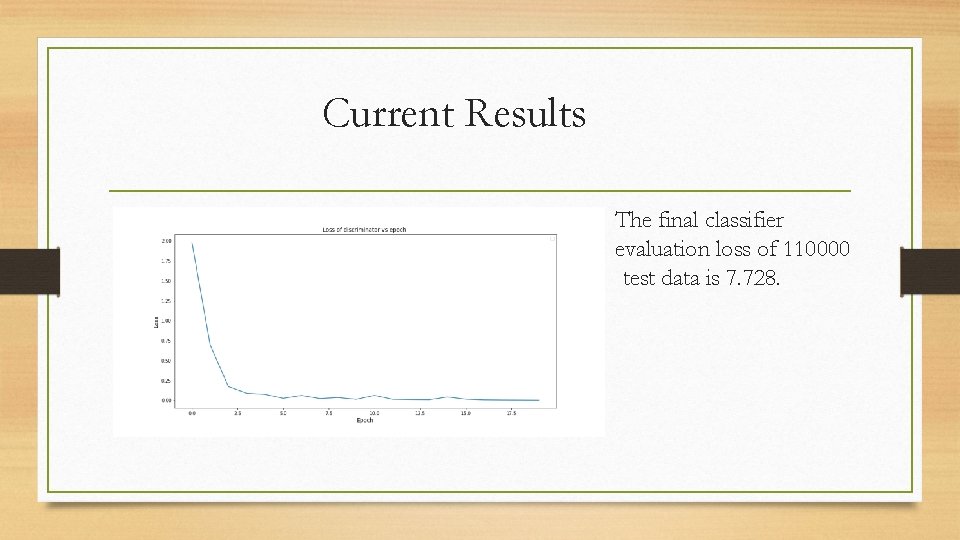

Current Results: Evaluation
The final evaluation loss of the classifier on the 110000 test examples is 7.728.

Further Work
● Preprocess the data to get better results.
● Solve the coding problem that currently prevents us from computing the accuracy of our trained model.
● Compare our approach with other deep learning (DL) methods.
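Regarding the accuracy item, classification accuracy can be computed from the classifier's predicted class probabilities, and AMR results are often also reported as accuracy per SNR, since the dataset spans −20 dB to 20 dB. A minimal plain-Python sketch, assuming one probability row per test example and integer labels as used with sparse categorical cross-entropy; the helper names and sample values are hypothetical.

```python
from collections import defaultdict


def argmax(row):
    """Index of the largest value (first one on ties)."""
    return max(range(len(row)), key=row.__getitem__)


def accuracy(labels, probs):
    """Fraction of examples whose highest-probability class equals the integer label."""
    correct = sum(1 for y, row in zip(labels, probs) if argmax(row) == y)
    return correct / len(labels)


def accuracy_by_snr(labels, probs, snrs):
    """Accuracy computed separately for each SNR value, keyed by SNR in ascending order."""
    groups = defaultdict(lambda: ([], []))
    for y, row, snr in zip(labels, probs, snrs):
        groups[snr][0].append(y)
        groups[snr][1].append(row)
    return {snr: accuracy(ys, rows) for snr, (ys, rows) in sorted(groups.items())}


labels = [0, 1, 2, 1]
probs = [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.6, 0.3, 0.1], [0.1, 0.2, 0.7]]
snrs = [-10, -10, 10, 10]
print(accuracy(labels, probs))               # 0.5
print(accuracy_by_snr(labels, probs, snrs))  # {-10: 1.0, 10: 0.0}
```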

![References](https://slidetodoc.com/presentation_image_h2/a569cfe37fa794f9b7ff5c56838f4c5d/image-13.jpg)

References
• [1]: Azzouz, E.E.; Nandi, A.K. Automatic Modulation Recognition of Communication Signals. IEEE Trans. Commun. 1996, 431–436.
• [2]: O’Shea, T.J.; Hoydis, J. An Introduction to Deep Learning for the Physical Layer. IEEE Trans. Cognit. Commun. Netw. 2017, 3, 563–575.
• [3]: Li, R.; Li, L.; Yang, S.; Li, S. Robust Automated VHF Modulation Recognition Based on Deep Convolutional Neural Networks. IEEE Commun. Lett. 2018, 22, 946–949.
• [4]: Tang, B.; Tu, Y.; Zhang, Z.; Lin, Y. Digital Signal Modulation Classification With Data Augmentation Using Generative Adversarial Nets in Cognitive Radio Networks. IEEE Access 2018, 6, 15713–15722.
• [5]: Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. 2014, 3, 2672–2680.
• [6]: Mirza, M.; Osindero, S. Conditional Generative Adversarial Nets. In Proceedings of the Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014.
• [7]: Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. In Proceedings of the International Conference on Learning Representations (ICLR), San Juan, PR, USA, 2–4 May 2016.