UltraGesture: Fine-Grained Gesture Sensing and Recognition

- Slides: 33

UltraGesture: Fine-Grained Gesture Sensing and Recognition. Kang Ling†, Haipeng Dai†, Yuntang Liu†, and Alex X. Liu†‡. Nanjing University†, Michigan State University‡. SECON '18, June 12th, 2018

Outline
- Motivation
- Doppler vs. FMCW vs. CIR
- Solution
- Evaluation


Motivation
- (a) AR/VR requires new UI technology
- (b) Smartwatches have tiny input screens
- (c) Scenarios where conventional input is inconvenient

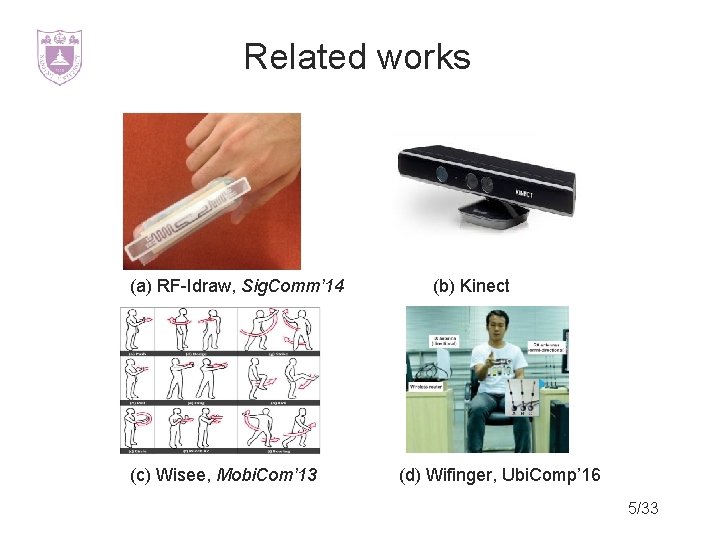

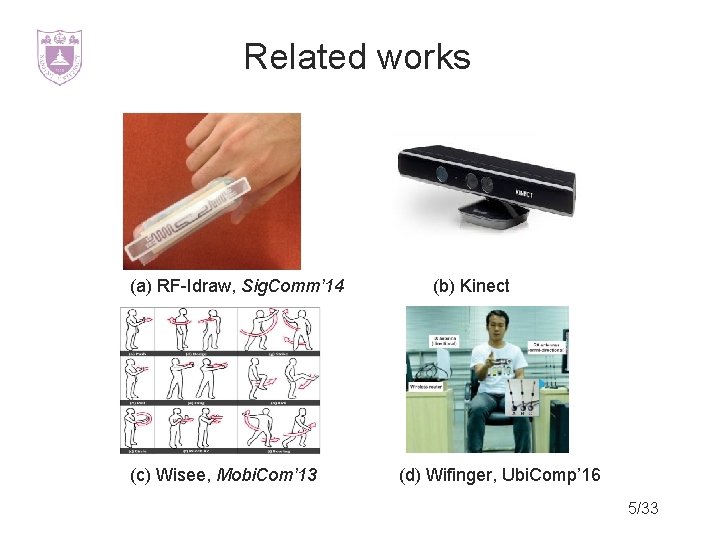

Related works
- (a) RF-IDraw, SIGCOMM '14
- (b) Kinect
- (c) WiSee, MobiCom '13
- (d) WiFinger, UbiComp '16

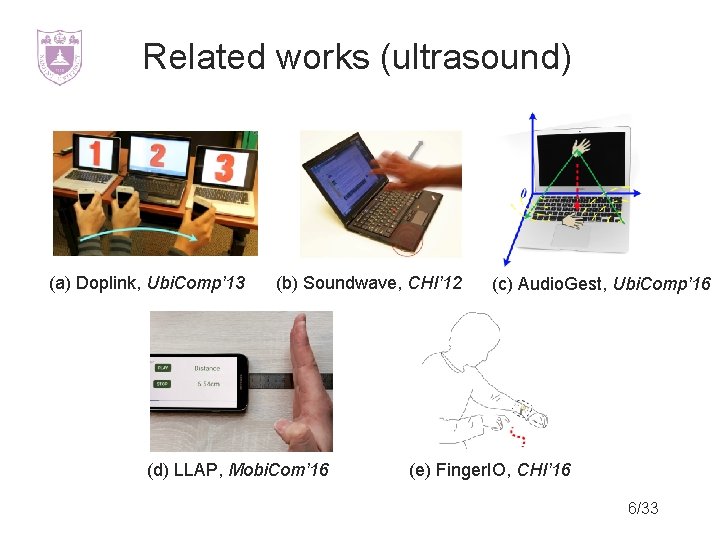

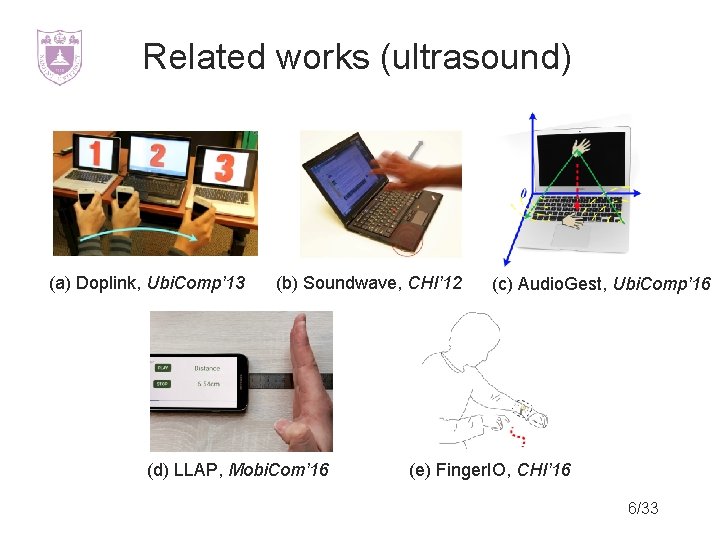

Related works (ultrasound)
- (a) DopLink, UbiComp '13
- (b) SoundWave, CHI '12
- (c) AudioGest, UbiComp '16
- (d) LLAP, MobiCom '16
- (e) FingerIO, CHI '16


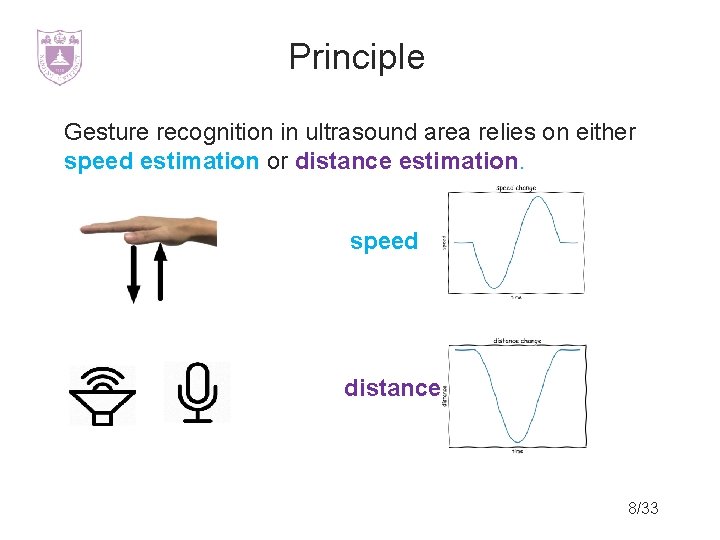

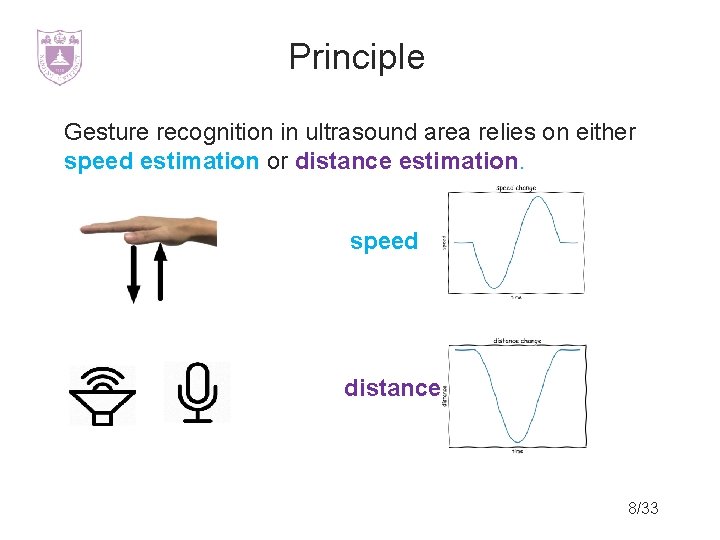

Principle: Ultrasound-based gesture recognition relies on either speed estimation or distance estimation.

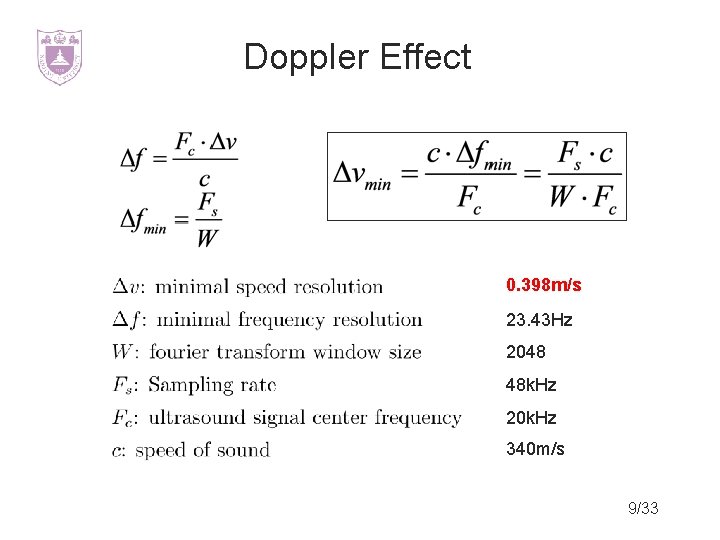

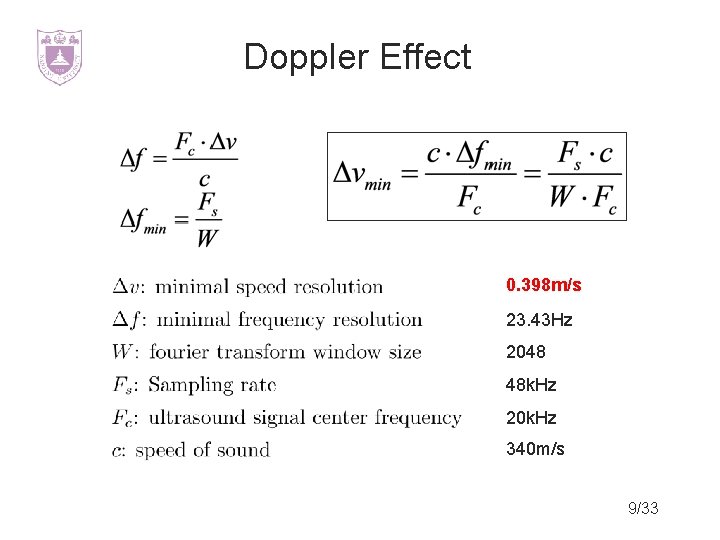

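Doppler Effect: With a 2048-point FFT at a 48 kHz sampling rate, the frequency resolution is 48000/2048 = 23.43 Hz. For a 20 kHz tone and a sound speed of 340 m/s, this corresponds to a speed resolution of 23.43 × 340/20000 = 0.398 m/s.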

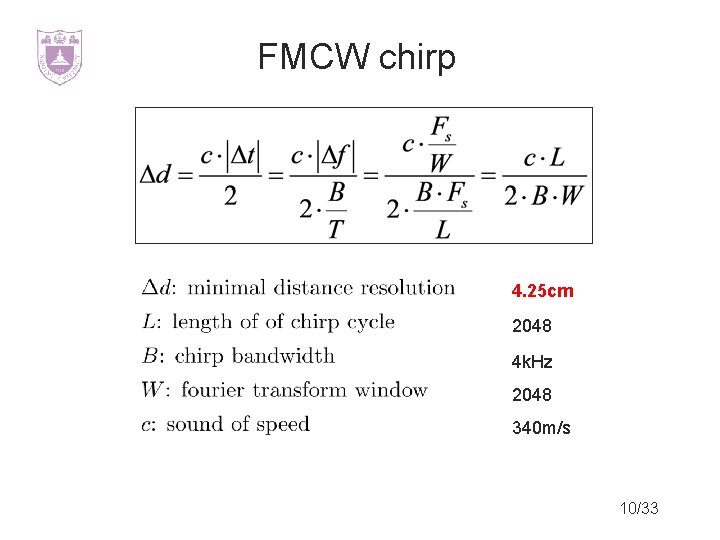

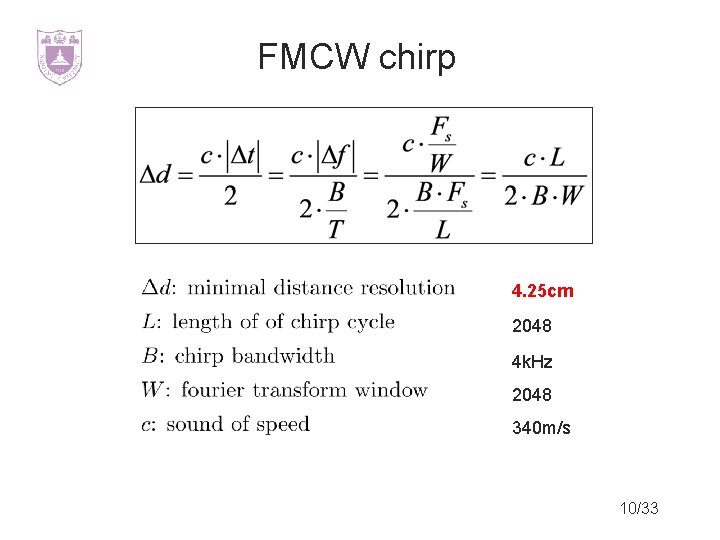

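FMCW chirp: With a 4 kHz sweep bandwidth and a sound speed of 340 m/s, the distance resolution is c/(2B) = 340/(2 × 4000) m = 4.25 cm. (The slide also lists the value 2048, presumably the FFT or chirp length in samples.)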

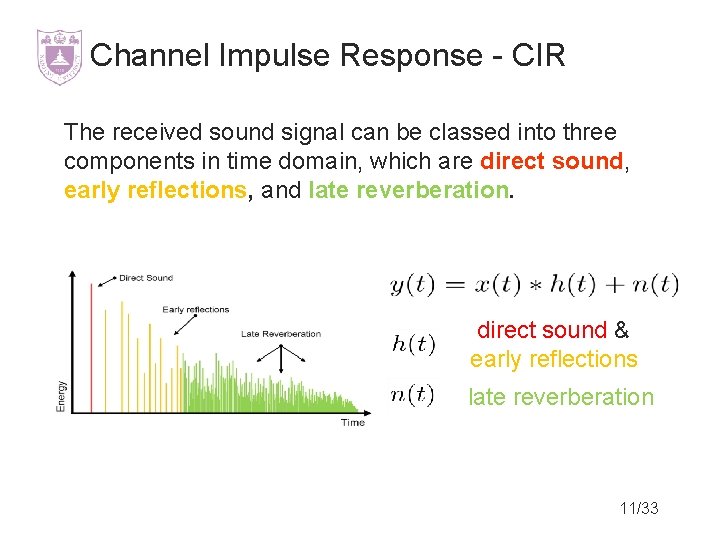

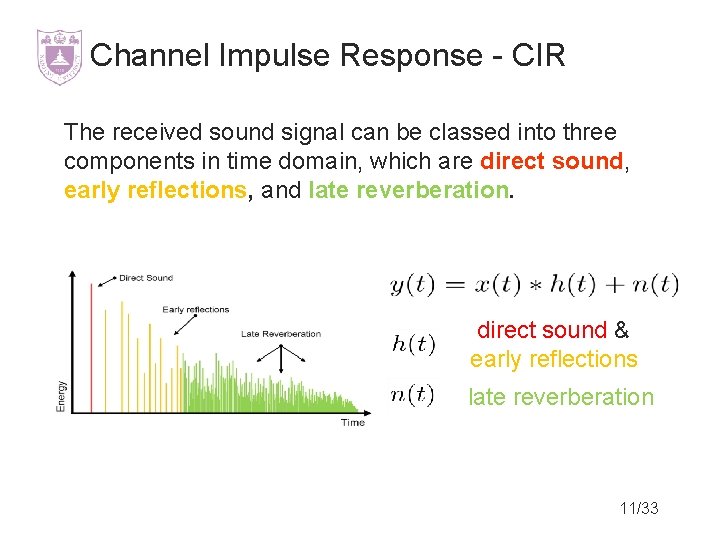

Channel Impulse Response (CIR): In the time domain, the received sound signal can be divided into three components: direct sound, early reflections, and late reverberation.

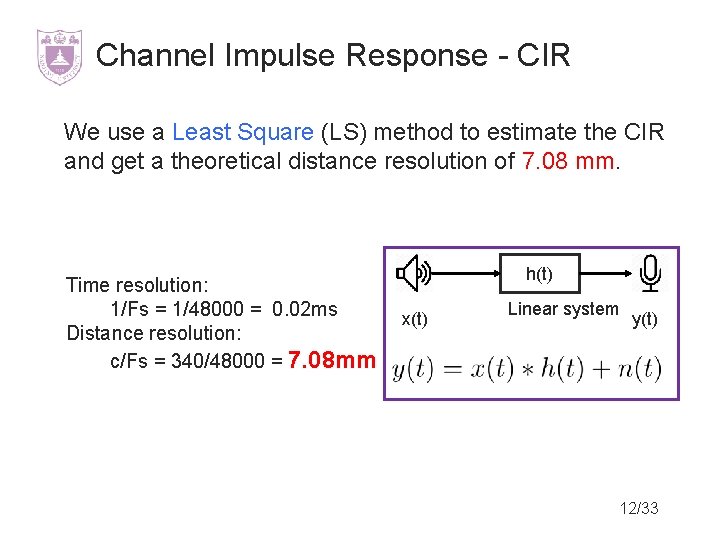

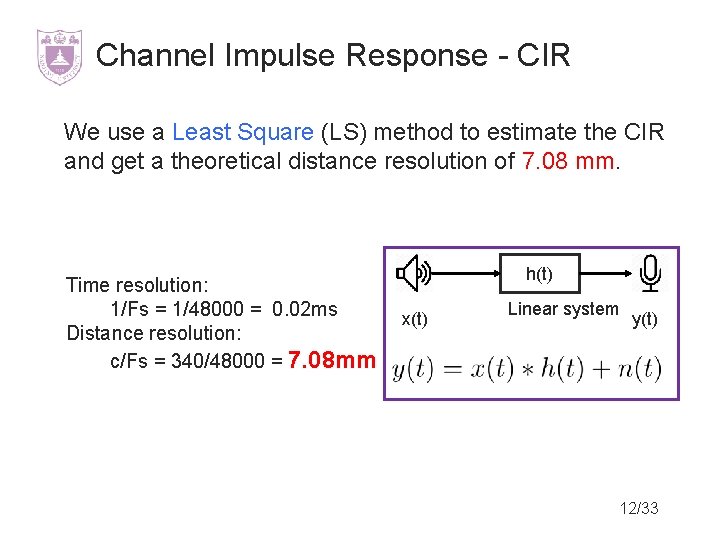

Channel Impulse Response (CIR): We use a Least-Squares (LS) method to estimate the CIR, which gives a theoretical distance resolution of 7.08 mm.
- Time resolution: 1/Fs = 1/48000 s ≈ 0.02 ms
- Distance resolution: c/Fs = 340/48000 m ≈ 7.08 mm
The channel is modeled as a linear system with impulse response h(t) that maps the sent signal x(t) to the received signal y(t).

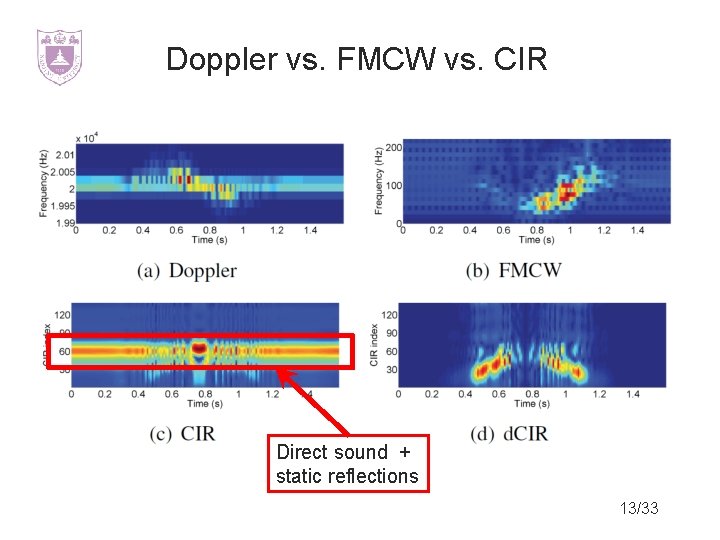

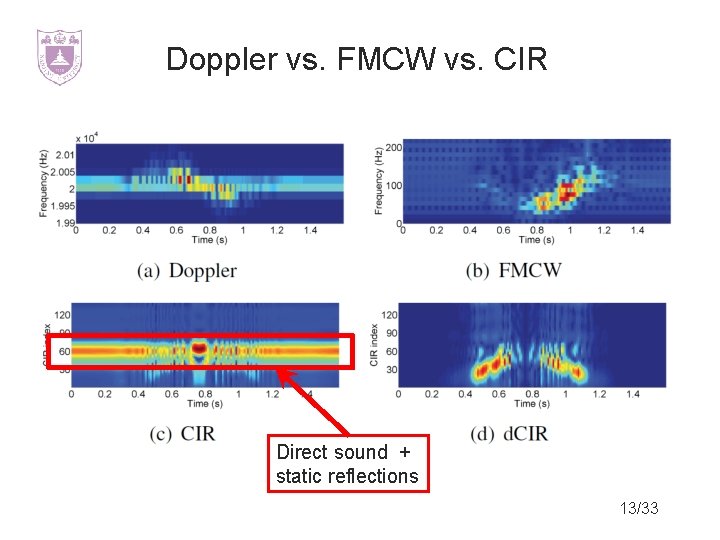

Doppler vs. FMCW vs. CIR (figure annotation: direct sound + static reflections)
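
The three resolution figures quoted on the preceding slides can be reproduced with a few lines of arithmetic. This is a minimal sketch: the formulas (one FFT bin mapped through Δf = f0·v/c, c/(2B) for the chirp, and c/Fs for the CIR) are inferred from the slide numbers, and the function names are hypothetical.

```python
# Parameters quoted on the slides.
SOUND_SPEED = 340.0        # m/s
SAMPLE_RATE = 48_000.0     # Hz
FFT_SIZE = 2048            # points
CARRIER_FREQ = 20_000.0    # Hz
CHIRP_BANDWIDTH = 4_000.0  # Hz


def doppler_speed_resolution():
    """Speed step for one FFT bin, assuming delta_f = f0 * v / c."""
    freq_resolution = SAMPLE_RATE / FFT_SIZE  # Hz per FFT bin
    return freq_resolution * SOUND_SPEED / CARRIER_FREQ


def fmcw_distance_resolution():
    """Range resolution of a chirp with sweep bandwidth B, assuming c / (2B)."""
    return SOUND_SPEED / (2.0 * CHIRP_BANDWIDTH)


def cir_distance_resolution():
    """Distance sound travels in one sample period: c / Fs."""
    return SOUND_SPEED / SAMPLE_RATE
```

Under these assumptions the CIR tap spacing (about 7 mm) is roughly six times finer than the FMCW range resolution (4.25 cm).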


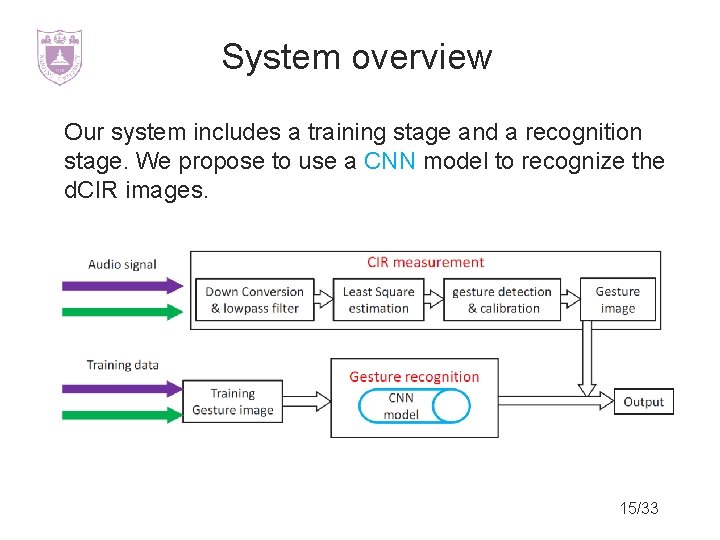

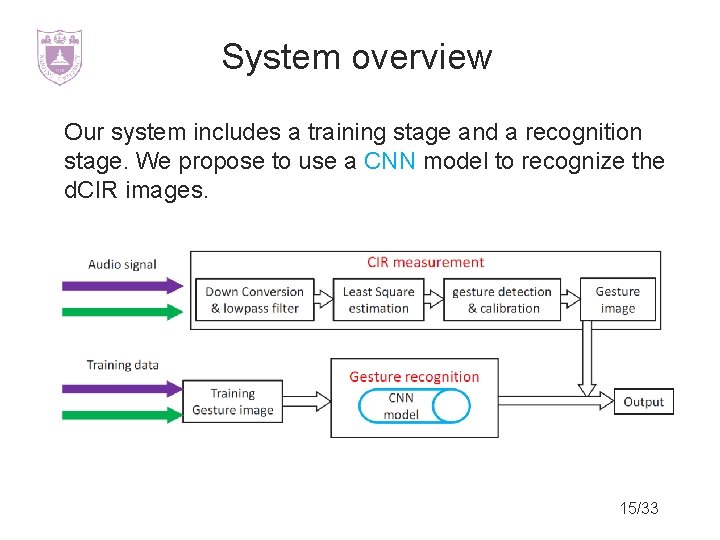

System overview: Our system includes a training stage and a recognition stage. We use a CNN model to recognize the dCIR images.

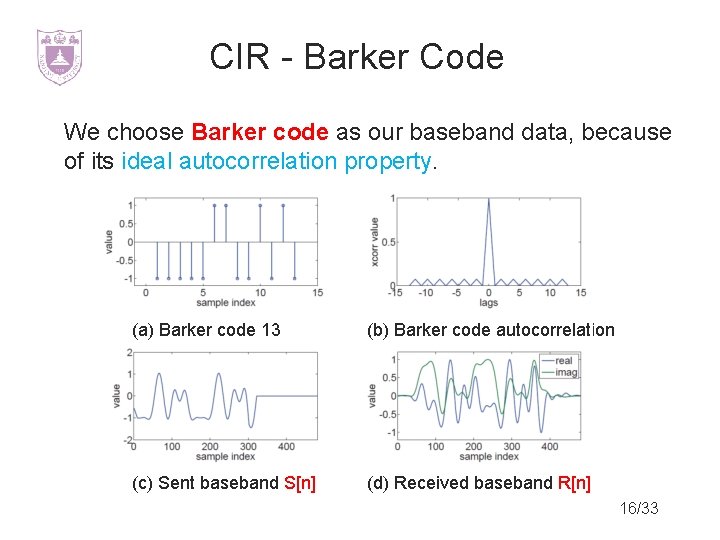

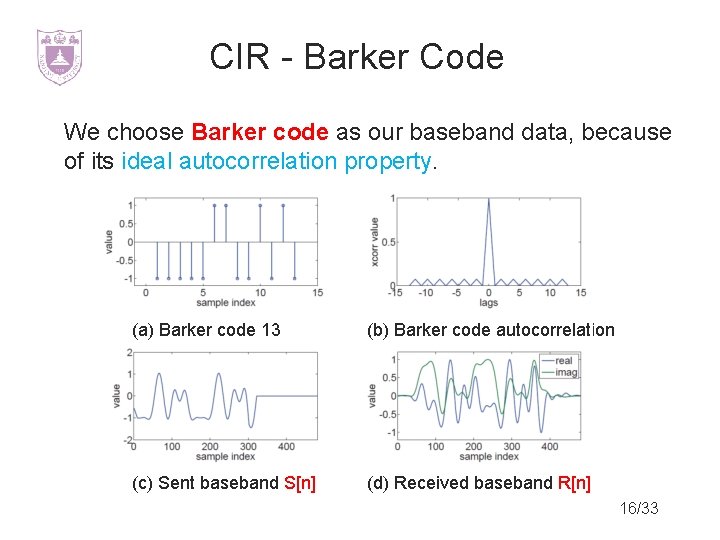

CIR - Barker code: We choose a Barker code as our baseband data because of its ideal autocorrelation property. Figure panels: (a) Barker code of length 13, (b) Barker code autocorrelation, (c) sent baseband S[n], (d) received baseband R[n].
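
The autocorrelation property can be checked directly. The sketch below uses the standard length-13 Barker sequence and computes its aperiodic autocorrelation; the helper name `autocorrelation` is only illustrative.

```python
# Standard Barker code of length 13.
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]


def autocorrelation(code):
    """Aperiodic autocorrelation for lags 0 .. len(code) - 1."""
    n = len(code)
    return [sum(code[i] * code[i + lag] for i in range(n - lag))
            for lag in range(n)]


acf = autocorrelation(BARKER_13)
peak = acf[0]        # energy of the code at zero lag
sidelobes = acf[1:]  # every non-zero lag
```

The peak equals the code length while no sidelobe exceeds 1 in magnitude, which is what makes the code attractive as a channel-sounding sequence.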

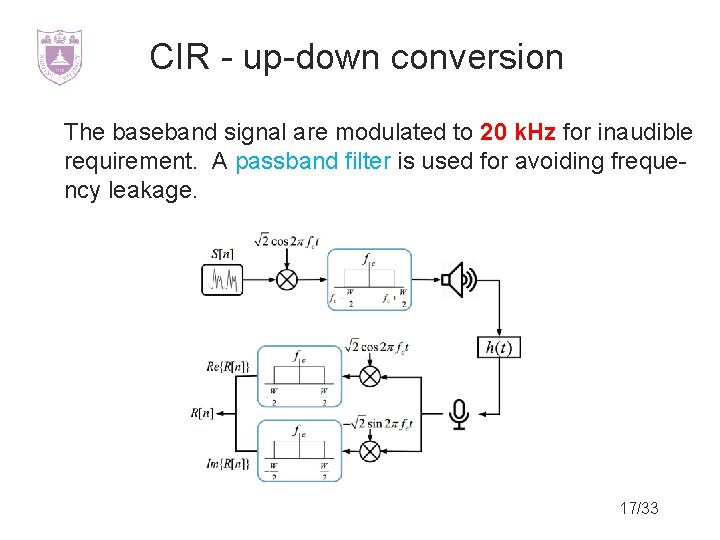

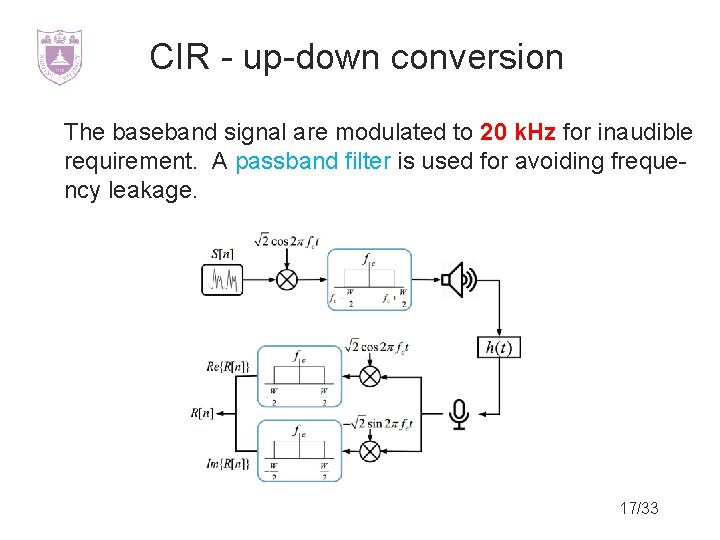

CIR - up-down conversion: The baseband signal is modulated onto a 20 kHz carrier so that it is inaudible. A band-pass filter is used to avoid frequency leakage.
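
A simplified, real-valued sketch of the conversion follows. It assumes a loopback with no channel delay or phase shift, omits the band-pass filter, and uses a hypothetical upsampling factor of 12 samples per chip. A practical receiver would more likely use I/Q demodulation.

```python
import math

SAMPLE_RATE = 48_000    # Hz, from the slides
CARRIER_FREQ = 20_000   # Hz, from the slides
SAMPLES_PER_CHIP = 12   # hypothetical upsampling factor


def upsample(chips, factor=SAMPLES_PER_CHIP):
    """Hold each baseband chip for `factor` samples."""
    return [float(c) for c in chips for _ in range(factor)]


def up_convert(baseband):
    """Shift the baseband signal to the carrier frequency."""
    w = 2.0 * math.pi * CARRIER_FREQ / SAMPLE_RATE
    return [s * math.cos(w * n) for n, s in enumerate(baseband)]


def down_convert(passband, window=6):
    """Mix back to baseband, then low-pass with a moving average.

    Mixing leaves a copy at 40 kHz, which aliases to 8 kHz at a 48 kHz
    sampling rate; a 6-sample average (one 8 kHz period) cancels it.
    """
    w = 2.0 * math.pi * CARRIER_FREQ / SAMPLE_RATE
    mixed = [2.0 * p * math.cos(w * n) for n, p in enumerate(passband)]
    out = []
    for n in range(len(mixed)):
        chunk = mixed[max(0, n - window + 1): n + 1]
        out.append(sum(chunk) / len(chunk))
    return out
```

For example, `down_convert(up_convert(upsample([1, -1, 1])))` recovers each chip value once the averaging window lies fully inside that chip.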

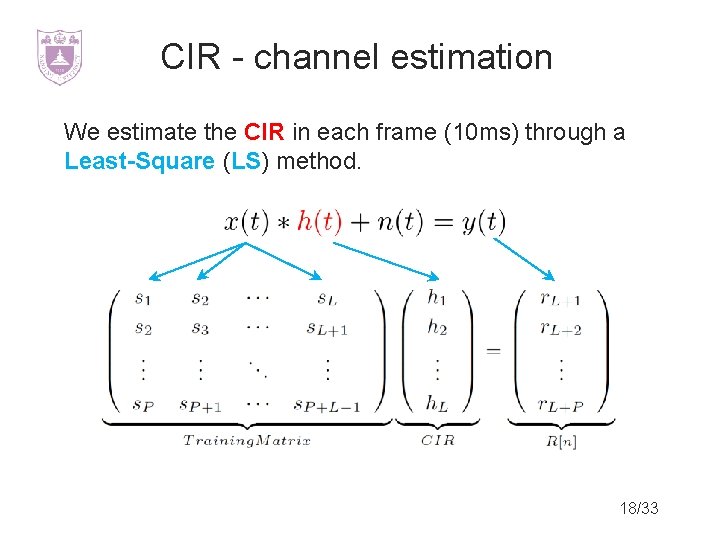

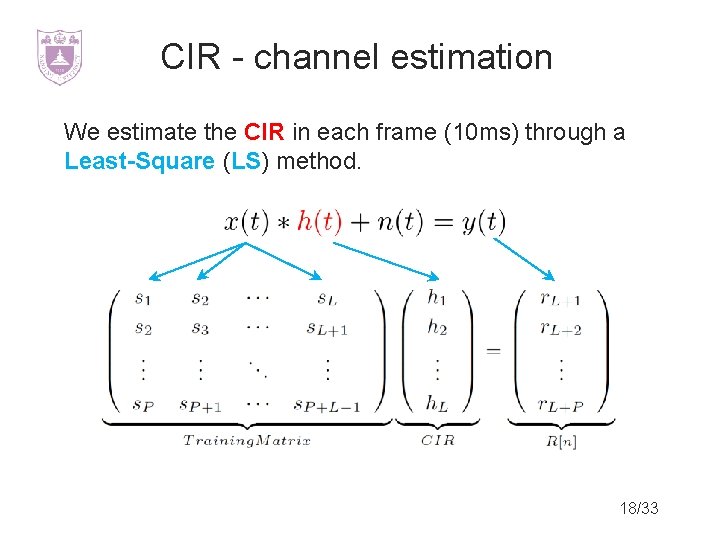

CIR - channel estimation: We estimate the CIR in each frame (10 ms) through a Least-Squares (LS) method.
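
One common way to set up such an LS estimate is to build a matrix from the known training sequence and solve for the taps. The sketch below does this with NumPy. The training layout (three repetitions of the length-13 Barker code), the tap count, and the function name are assumptions for illustration, not the authors' exact configuration.

```python
import numpy as np

BARKER_13 = np.array([1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1], dtype=float)


def ls_channel_estimate(sent, received, num_taps):
    """Least-squares CIR estimate from a known training sequence.

    Solves min_h ||M h - y||^2, where row i of M holds the sent samples
    that contribute to received sample i + num_taps - 1.
    """
    sent = np.asarray(sent, dtype=float)
    received = np.asarray(received, dtype=float)
    rows = len(sent) - num_taps + 1
    # M[i, k] = sent[i + num_taps - 1 - k]
    M = np.array([sent[i:i + num_taps][::-1] for i in range(rows)])
    y = received[num_taps - 1:len(sent)]
    h, *_ = np.linalg.lstsq(M, y, rcond=None)
    return h
```

With a noiseless received signal generated as `np.convolve(sent, true_h)[:len(sent)]`, the estimate matches `true_h` up to floating-point error.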

CIR - gesture image: Assembling the CIRs along time gives a CIR image. Subtracting the CIR values of the previous frame from each frame reveals the moving part; we call the result a dCIR image.
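
The differencing step can be sketched as follows. The input layout (`cir_frames[t][k]` for frame `t` and tap index `k`) and the function name are assumptions.

```python
def dcir_image(cir_frames):
    """Frame-to-frame difference of a CIR image.

    cir_frames[t][k] is the CIR value at frame t and tap index k.
    Row t of the result is cir_frames[t + 1] minus cir_frames[t], so
    paths that do not change between frames cancel out.
    """
    return [[cur - prev for cur, prev in zip(frame, last)]
            for last, frame in zip(cir_frames, cir_frames[1:])]


# A static path at tap 0 and a reflection that moves from tap 2 to tap 3.
cir = [
    [1.0, 0.0, 0.2, 0.0],
    [1.0, 0.0, 0.2, 0.0],
    [1.0, 0.0, 0.0, 0.2],
]
dcir = dcir_image(cir)
```

In this toy input the static path at tap 0 vanishes from every row of `dcir`, and only the frame in which the reflection moves is non-zero.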

dCIR samples

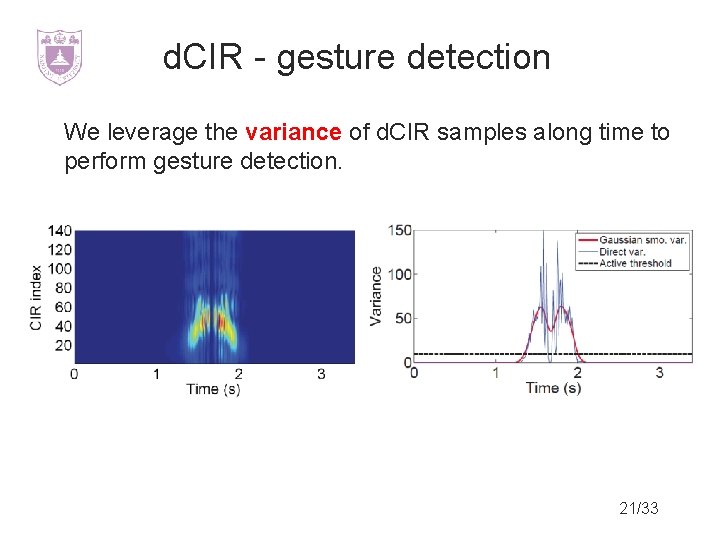

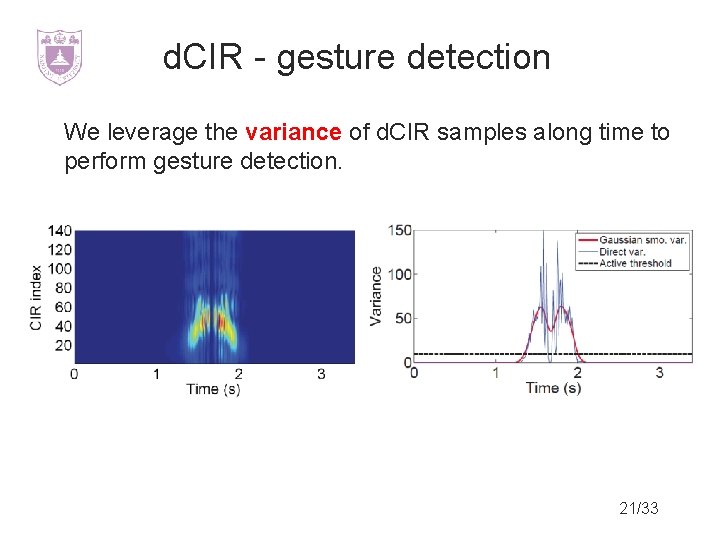

dCIR - gesture detection: We leverage the variance of dCIR samples along time to perform gesture detection.
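
The slide does not give the exact detection rule, so the following is only one plausible reading. It computes the variance of each tap over a short sliding window of frames, sums over taps, and compares against a threshold. The window length, threshold, and function names are hypothetical, and real-valued dCIR samples are assumed.

```python
from statistics import pvariance


def motion_score(dcir_rows, end, window):
    """Sum over taps of the variance along time in the window ending at `end`."""
    start = max(0, end - window + 1)
    block = dcir_rows[start:end + 1]
    if len(block) < 2:
        return 0.0
    return sum(pvariance(tap) for tap in zip(*block))


def detect_gesture_frames(dcir_rows, window=5, threshold=1e-3):
    """Flag each frame whose windowed motion score exceeds `threshold`."""
    return [motion_score(dcir_rows, t, window) > threshold
            for t in range(len(dcir_rows))]
```

Frames recorded while nothing moves give a score of zero, so only frames near a gesture are flagged.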

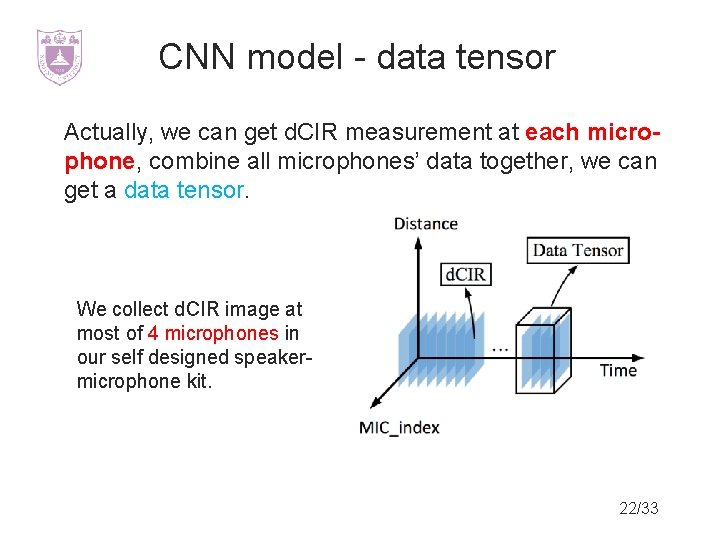

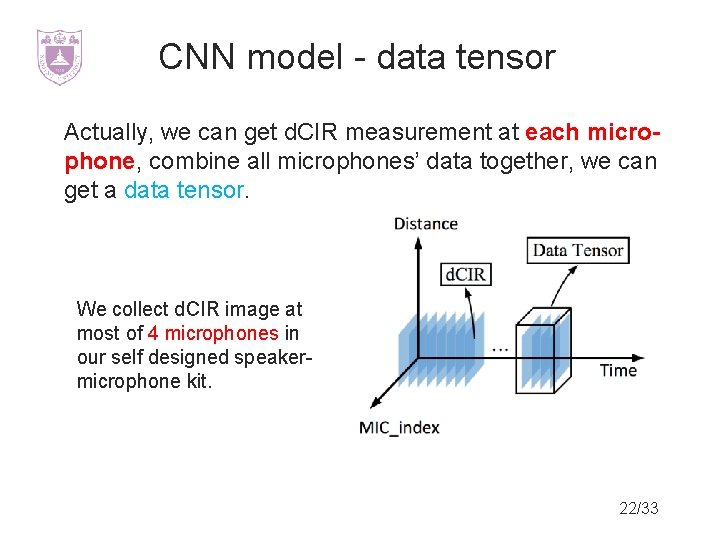

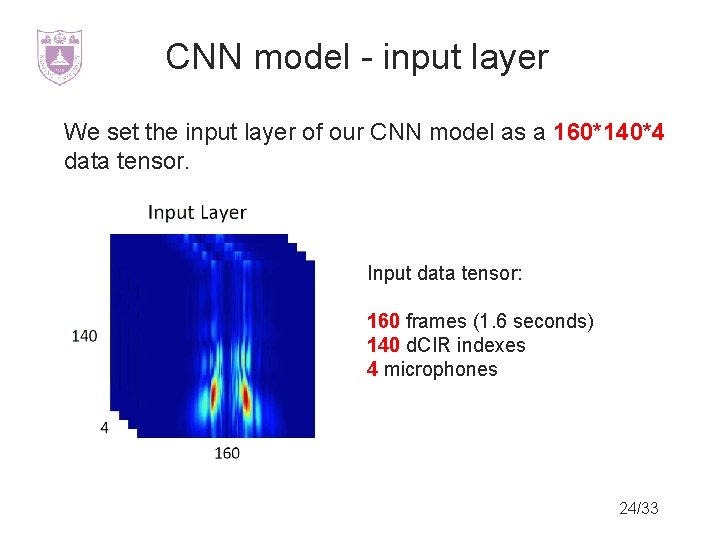

CNN model - data tensor: We obtain a dCIR measurement at each microphone; combining the data from all microphones gives a data tensor. We collect dCIR images from at most 4 microphones in our self-designed speaker-microphone kit.

CNN model: We choose a CNN (Convolutional Neural Network) as the classifier in our work.
- Classifiers such as SVM and KNN may miss valuable information in the feature extraction process.
- CNNs are good at classifying high-dimensional tensor data, e.g., in image classification.

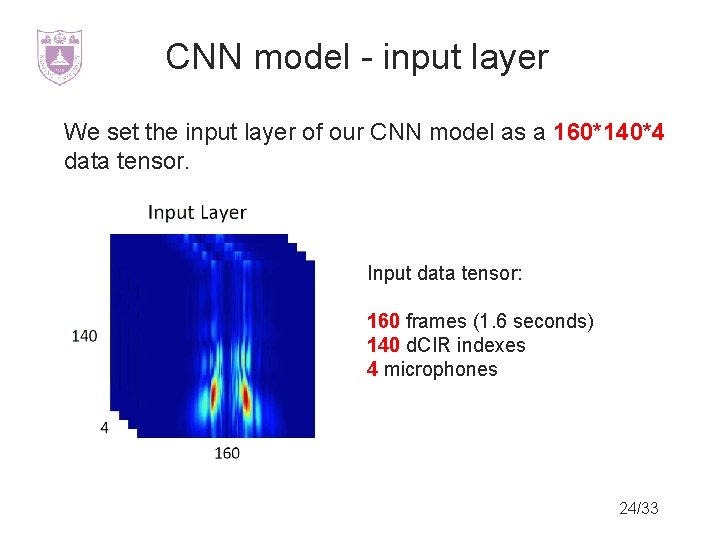

CNN model - input layer: We set the input layer of our CNN model to a 160 × 140 × 4 data tensor:
- 160 frames (1.6 seconds)
- 140 dCIR indexes
- 4 microphones
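
Assembling such a tensor amounts to stacking one dCIR image per microphone along a channel axis. A minimal NumPy sketch, assuming real-valued images (for example magnitudes) and a hypothetical helper name:

```python
import numpy as np

NUM_FRAMES, NUM_TAPS, NUM_MICS = 160, 140, 4  # sizes from the slide


def build_input_tensor(dcir_per_mic):
    """Stack one (frames x taps) dCIR image per microphone on the last axis."""
    images = [np.asarray(img, dtype=np.float32) for img in dcir_per_mic]
    for img in images:
        if img.shape != (NUM_FRAMES, NUM_TAPS):
            raise ValueError(
                f"expected {(NUM_FRAMES, NUM_TAPS)}, got {img.shape}")
    return np.stack(images, axis=-1)
```

Placing the microphones on the last axis treats them like the color channels of an image, which matches the usual channels-last layout for 2-D convolutions.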

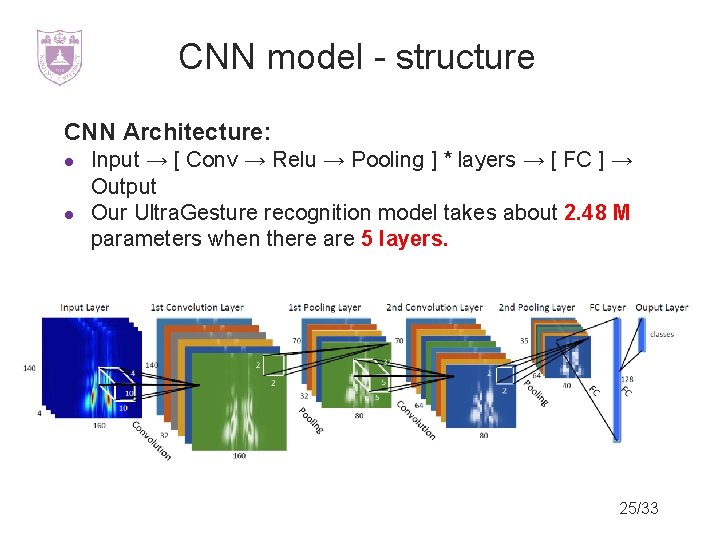

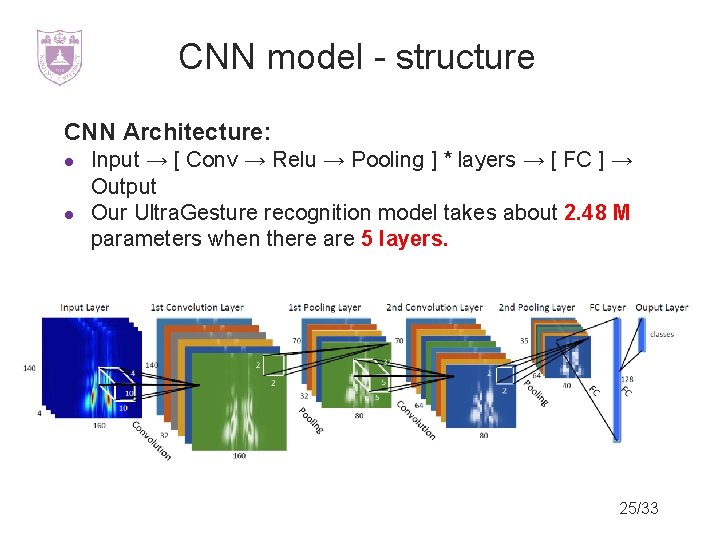

CNN model - structure
- CNN architecture: Input → [Conv → ReLU → Pooling] × layers → [FC] → Output
- Our UltraGesture recognition model has about 2.48 M parameters when there are 5 layers.
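
To see how the layer pattern shapes the data and the parameter count, the sketch below traces a stack of [Conv → ReLU → Pooling] blocks. The kernel size, pooling size, and channel widths are hypothetical, so the resulting count is illustrative and is not expected to match the 2.48 M figure.

```python
def conv_pool_stack(input_shape, channels, kernel=3, pool=2):
    """Trace shapes and count parameters for [Conv -> ReLU -> Pooling] * layers.

    Assumes 'same' padding for each convolution and non-overlapping
    pooling that floors odd sizes.
    """
    height, width, in_ch = input_shape
    params = 0
    for out_ch in channels:
        params += (kernel * kernel * in_ch + 1) * out_ch  # weights + biases
        height, width, in_ch = height // pool, width // pool, out_ch
    return (height, width, in_ch), params


def fc_params(feature_shape, num_classes):
    """Parameters of one fully connected layer on the flattened features."""
    height, width, ch = feature_shape
    return (height * width * ch + 1) * num_classes


# Hypothetical 5-layer configuration on the 160 x 140 x 4 input, 12 gestures.
features, conv_count = conv_pool_stack((160, 140, 4), [16, 32, 64, 128, 256])
total = conv_count + fc_params(features, num_classes=12)
```

With these assumed widths, five pooling stages reduce the 160 × 140 image to 5 × 4 before the fully connected layer.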


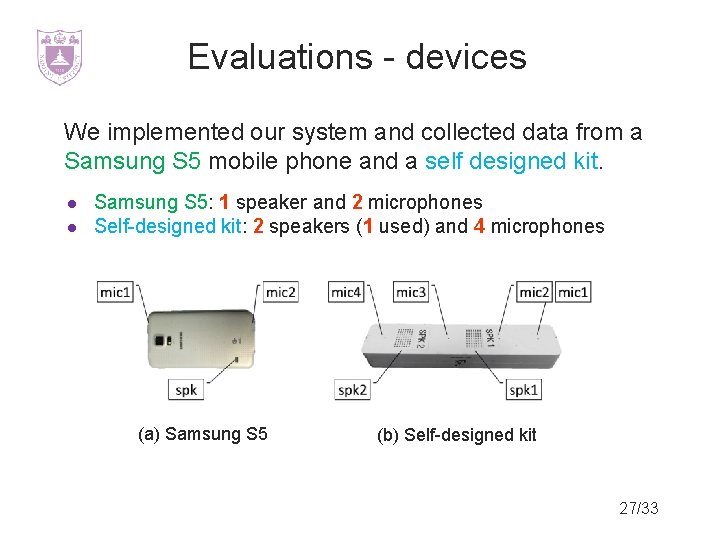

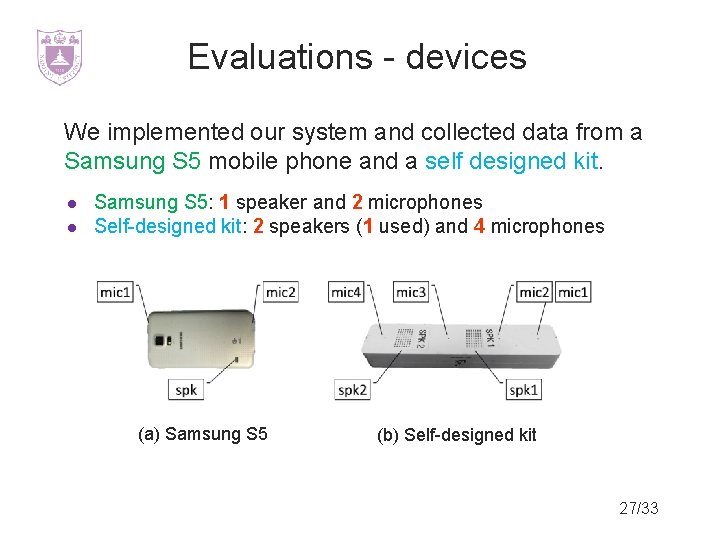

Evaluations - devices: We implemented our system and collected data from a Samsung S5 mobile phone and a self-designed kit.
- Samsung S5: 1 speaker and 2 microphones
- Self-designed kit: 2 speakers (1 used) and 4 microphones
Figure panels: (a) Samsung S5, (b) self-designed kit.

Evaluations - gestures: We collected data for 12 basic gestures performed by 10 volunteers (8 males and 2 females) over a time span of more than a month under different scenarios.

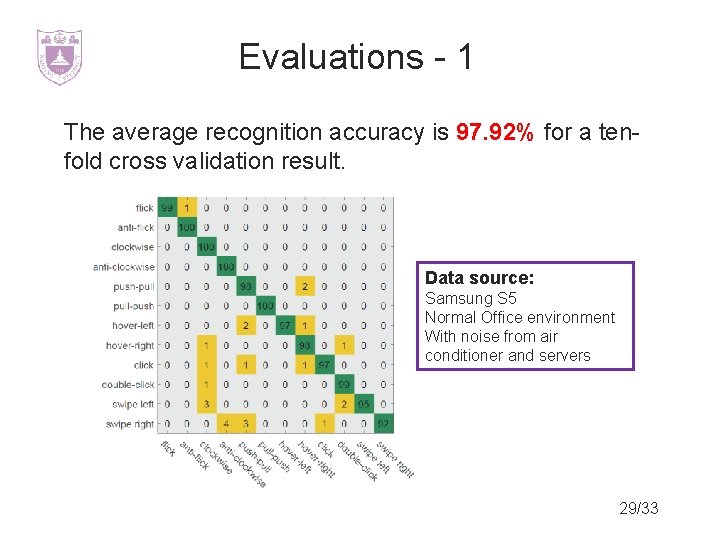

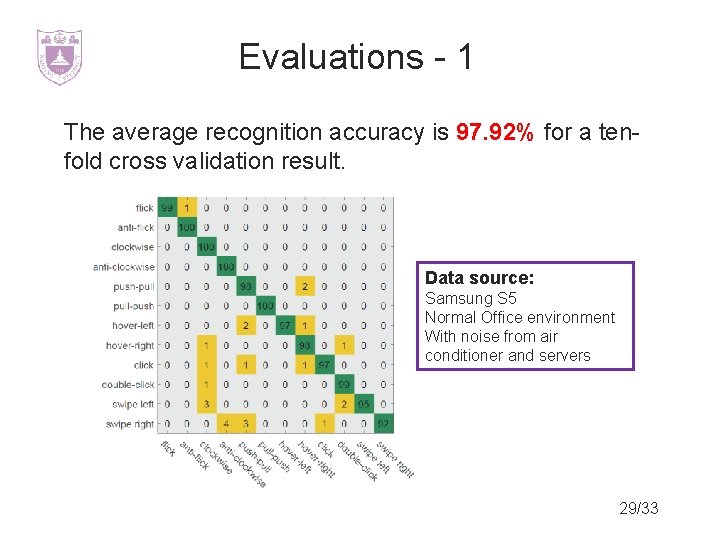

Evaluations - 1: The average recognition accuracy is 97.92% under tenfold cross-validation.
- Data source: Samsung S5
- Normal office environment
- With noise from an air conditioner and servers
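
For readers unfamiliar with the protocol, tenfold cross-validation splits the samples into ten folds and tests on each fold once while training on the other nine. A generic sketch follows; the shuffling seed and function name are assumptions, and the slides do not say how the folds were drawn.

```python
import random


def k_fold_splits(num_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) for k-fold cross-validation."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test
```

The reported accuracy would then be the mean of the ten per-fold test accuracies.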

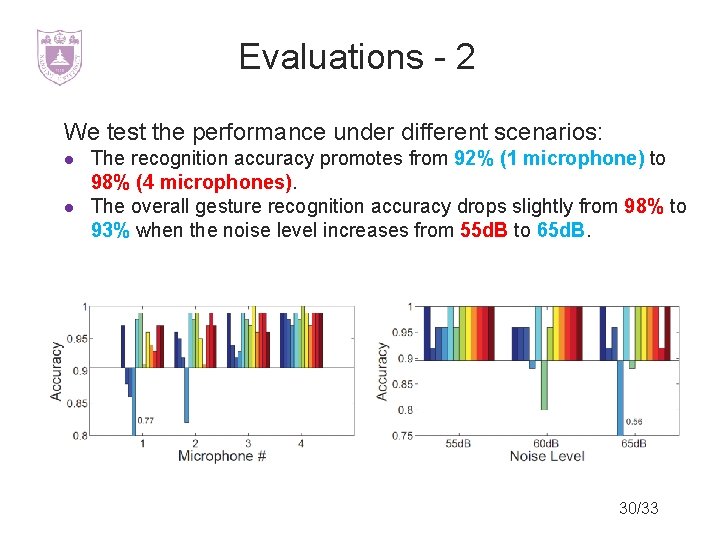

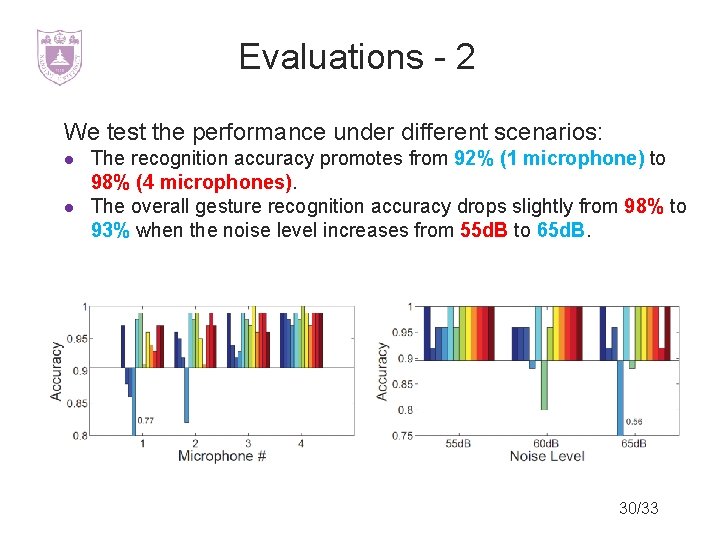

Evaluations - 2: We test the performance under different scenarios:
- The recognition accuracy improves from 92% (1 microphone) to 98% (4 microphones).
- The overall gesture recognition accuracy drops slightly from 98% to 93% when the noise level increases from 55 dB to 65 dB.

Evaluations - 3: We test the system performance under some typical usage scenarios:
- New users: 92.56%
- Left hand: 98.00%
- With gloves on: 97.33%
- Occlusion: 85.67%
- Using UltraGesture while playing music: 88.81%

Conclusion: The contributions of our work can be summarized as follows:
- We analyzed the inherent drawbacks of existing Doppler-based and FMCW-based methods.
- We proposed using CIR measurements to achieve higher distance resolution.
- We combined CIR measurements with a deep learning model (CNN) to achieve high recognition accuracy.

Q&A. Email: lingkang@smail.nju.edu.cn