Transporting High Energy Physics Experiment Data over High Speed Genkai/Hyeonhae


Transporting High Energy Physics Experiment Data over High Speed Genkai/Hyeonhae

Yukio.Karita@KEK.jp, on 4 October 2002 at Oita, Korea-Kyushu Gigabit Network meeting

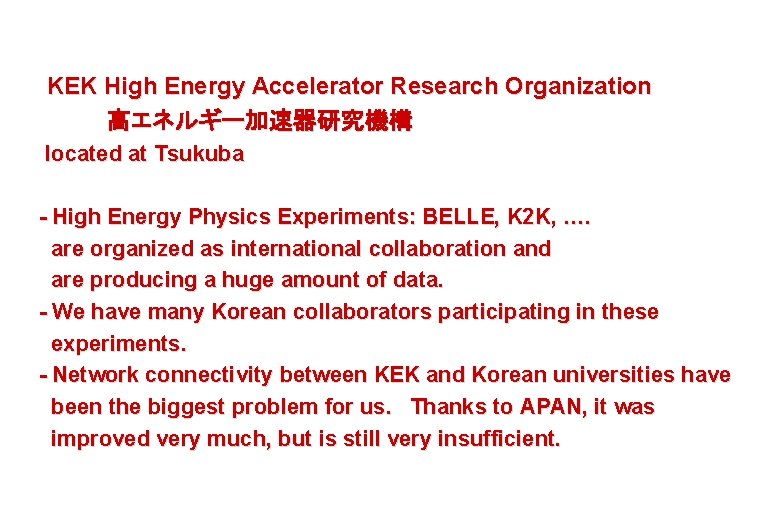

KEK: High Energy Accelerator Research Organization (高エネルギー加速器研究機構), located at Tsukuba

- High Energy Physics experiments such as BELLE and K2K are organized as international collaborations and are producing a huge amount of data.
- We have many Korean collaborators participating in these experiments.
- Network connectivity between KEK and Korean universities has been the biggest problem for us. Thanks to APAN it has improved very much, but it is still very insufficient.

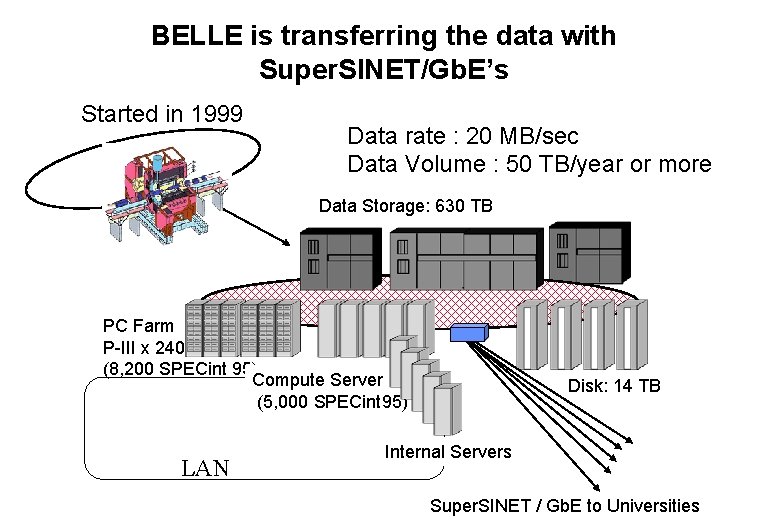

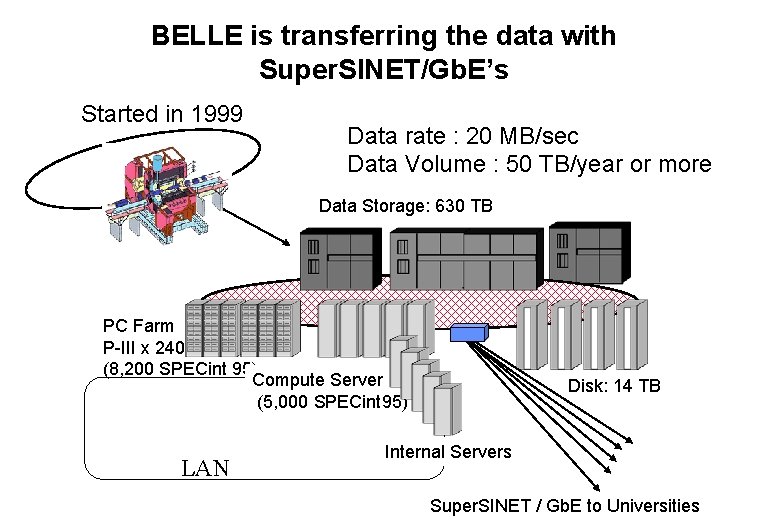

BELLE is transferring the data with SuperSINET/GbE's

- Started in 1999
- Data rate: 20 MB/sec
- Data volume: 50 TB/year or more
- Data storage: 630 TB
- PC farm: P-III x 240 (8,200 SPECint95)
- Compute server (5,000 SPECint95)
- LAN Disk: 14 TB
- Internal servers
- SuperSINET/GbE to universities
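To put the BELLE figures quoted above (20 MB/sec, 50 TB/year) next to a GbE circuit, the following is a minimal sketch of the unit conversions. It assumes decimal units (1 MB = 10^6 bytes, 1 TB = 10^12 bytes) and an idealized link running at full line rate with no protocol overhead; the function names are illustrative and not from the slides.

```python
SECONDS_PER_DAY = 86_400


def mbytes_per_sec_to_gbps(mb_per_s: float) -> float:
    """Convert a data rate in MB/sec (decimal) to Gbps."""
    return mb_per_s * 8 / 1000


def days_to_transfer(volume_tb: float, link_gbps: float) -> float:
    """Days needed to move volume_tb terabytes over an idealized link.

    Assumption: the link sustains its full nominal rate with no overhead.
    """
    bits = volume_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9)
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    # 20 MB/sec is 0.16 Gbps, which fits within a single GbE circuit.
    print(f"20 MB/sec = {mbytes_per_sec_to_gbps(20):.2f} Gbps")
    # One year of data (50 TB) over an idealized 1 Gbps link: about 4.6 days.
    print(f"50 TB at 1 Gbps = {days_to_transfer(50, 1.0):.1f} days")
```

Real transfers would take longer than this idealized estimate, since sustained end-to-end throughput is normally below the nominal link rate.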

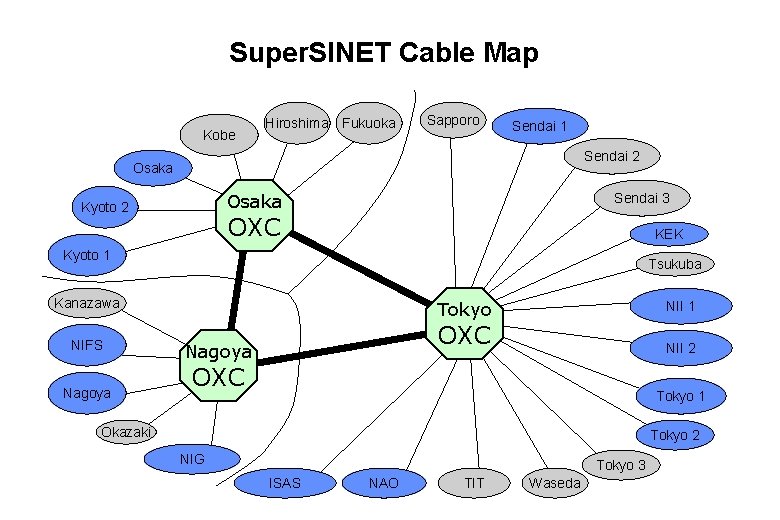

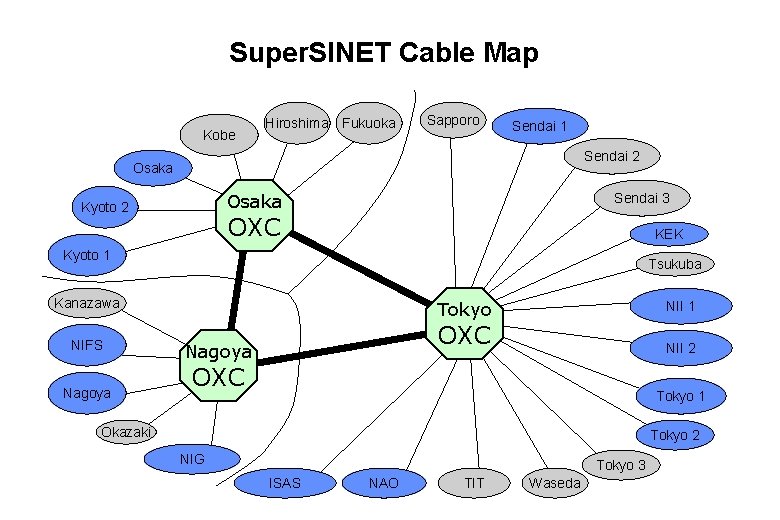

SuperSINET Cable Map (figure). Labeled sites include Sapporo, Sendai, Tsukuba, KEK, Tokyo, NII, Nagoya, NIFS, Okazaki, Kanazawa, Kyoto, Osaka, Kobe, Hiroshima, Fukuoka, NIG, ISAS, NAO, TIT and Waseda, with optical cross-connect (OXC) nodes marked.

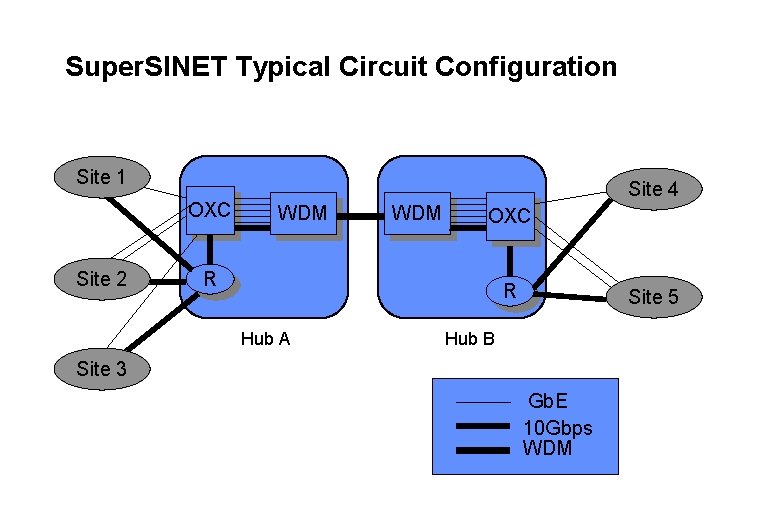

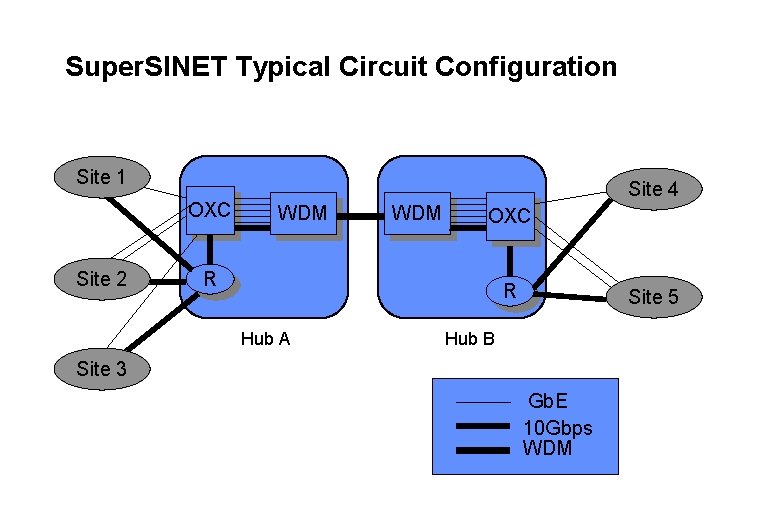

SuperSINET Typical Circuit Configuration (figure). Labels: Site 1 to Site 5, Hub A, Hub B, routers (R), OXC, WDM. Legend: GbE, 10 Gbps WDM.

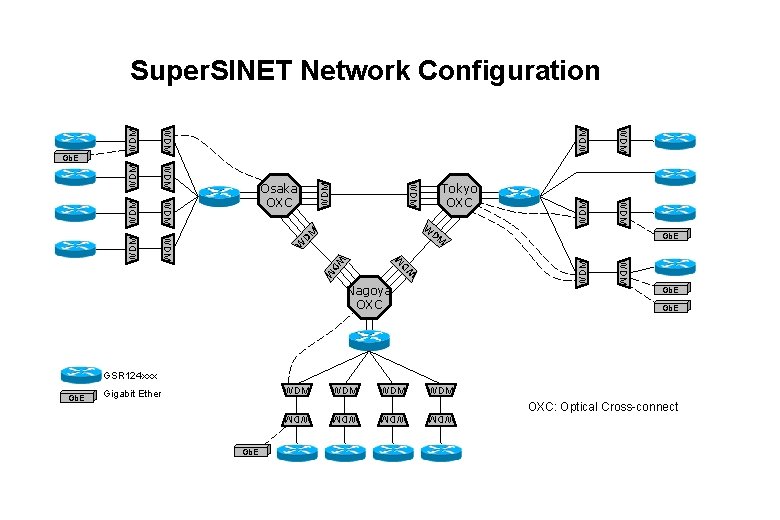

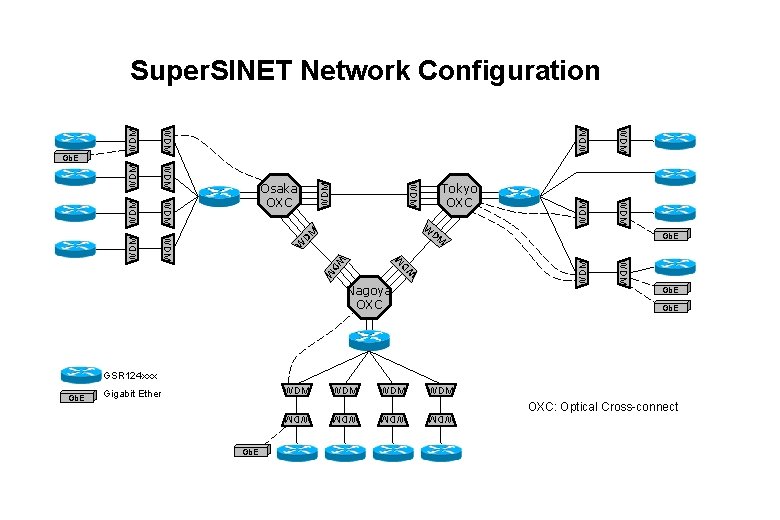

SuperSINET Network Configuration (figure). Labels: Nagoya OXC, Tokyo OXC and Osaka OXC, WDM links, GbE circuits, GSR 124 xxx. Legend: GbE = Gigabit Ether; OXC = Optical Cross-connect.

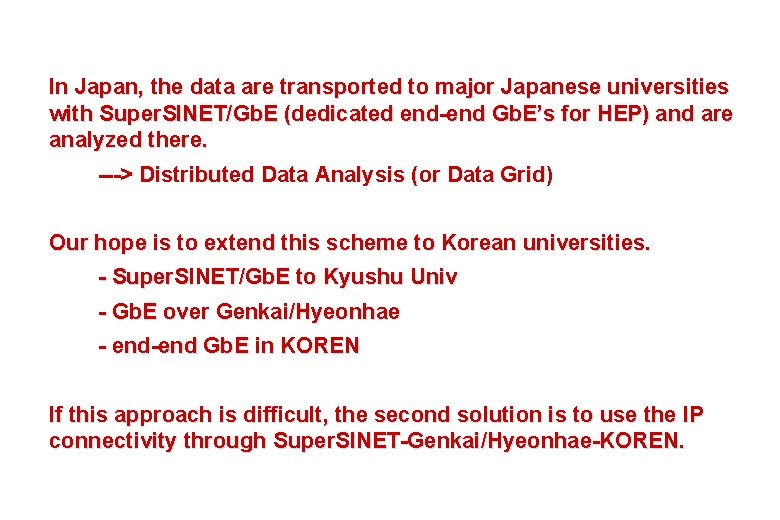

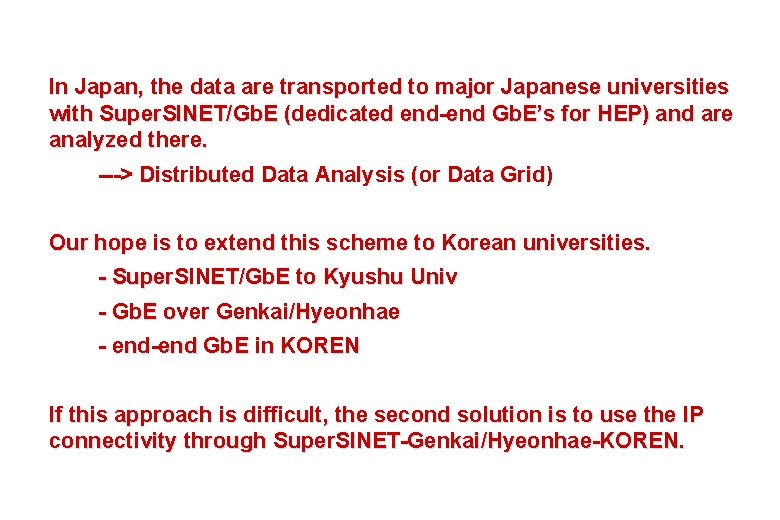

In Japan, the data are transported to major Japanese universities with SuperSINET/GbE (dedicated end-to-end GbE's for HEP) and are analyzed there: Distributed Data Analysis (or Data Grid).

Our hope is to extend this scheme to Korean universities:

- SuperSINET/GbE to Kyushu Univ
- GbE over Genkai/Hyeonhae
- end-to-end GbE in KOREN

If this approach is difficult, the second solution is to use the IP connectivity through SuperSINET-Genkai/Hyeonhae-KOREN.

ICFA and International Networking

- ICFA = International Committee for Future Accelerators
- ICFA Statement on "Communications in Int'l HEP Collaborations" of October 17, 1996. See http://www.fnal.gov/directorate/icfa_communicaes.html

"ICFA urges that all countries and institutions wishing to participate even more effectively and fully in international HEP Collaborations should:

- Review their operating methods to ensure they are fully adapted to remote participation
- Strive to provide the necessary communications facilities and adequate international bandwidth"

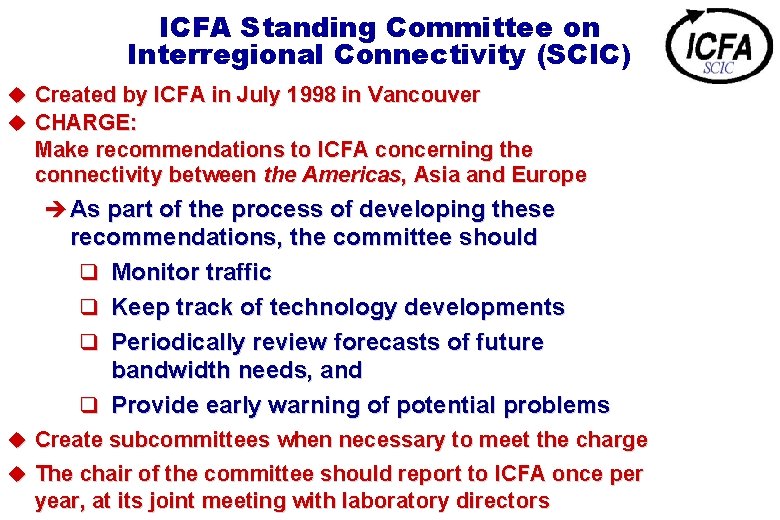

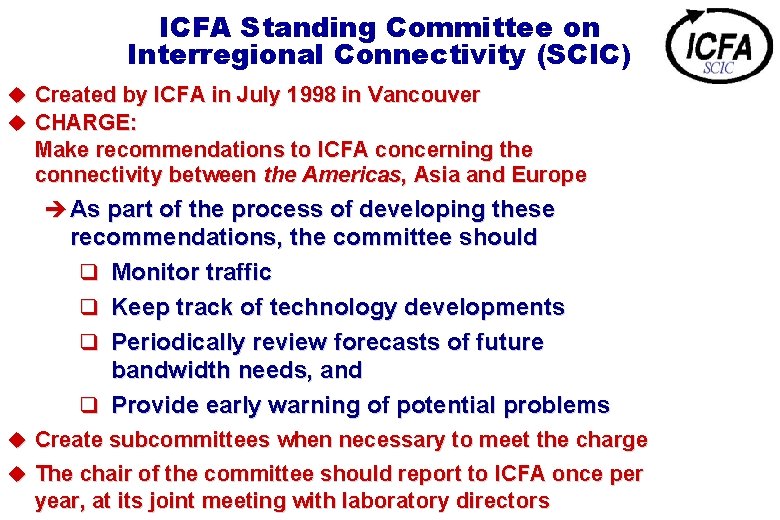

ICFA Standing Committee on Interregional Connectivity (SCIC)

- Created by ICFA in July 1998 in Vancouver
- CHARGE: Make recommendations to ICFA concerning the connectivity between the Americas, Asia and Europe. As part of the process of developing these recommendations, the committee should:
  - Monitor traffic
  - Keep track of technology developments
  - Periodically review forecasts of future bandwidth needs, and
  - Provide early warning of potential problems
- Create subcommittees when necessary to meet the charge
- The chair of the committee should report to ICFA once per year, at its joint meeting with laboratory directors

ICFA-SCIC Core Membership

- Representatives from major HEP laboratories: Manuel Delfino (CERN) (to W. Von Rueden), Michael Ernst (DESY), Matthias Kasemann (FNAL), Yukio Karita (KEK), Richard Mount (SLAC)
- User representatives: Richard Hughes-Jones (UK), Harvey Newman (USA), Dean Karlen (Canada)
- For Russia: Slava Ilyin (MSU)
- ECFA representatives: Frederico Ruggieri (INFN Frascati), Denis Linglin (IN2P3, Lyon)
- ACFA representatives: Rongsheng Xu (IHEP Beijing), HwanBae Park (Korea University)
- For South America: Sergio F. Novaes (University de S. Paulo)

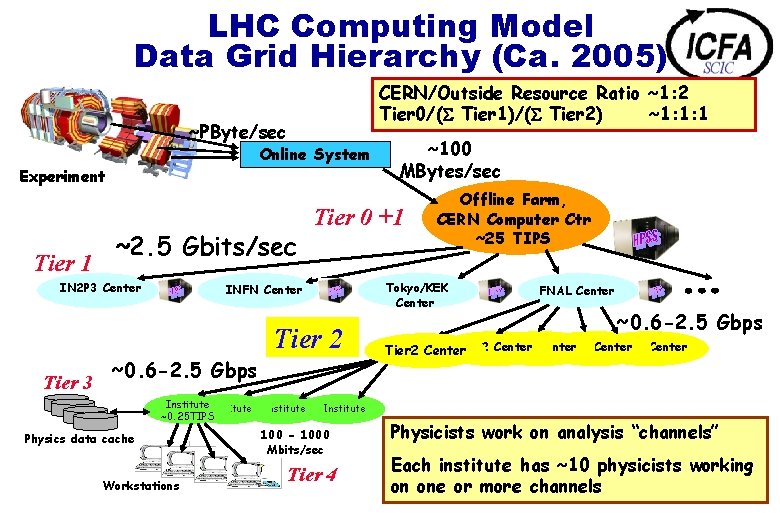

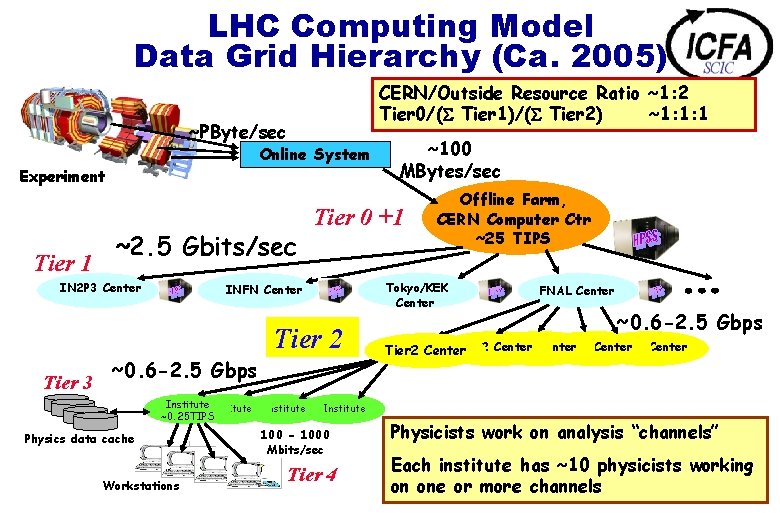

LHC Computing Model: Data Grid Hierarchy (ca. 2005) (figure)

- CERN/Outside resource ratio ~1:2; Tier 0/(Tier 1)/(Tier 2) ~1:1:1
- Experiment and Online System
- Tier 0+1: Offline Farm, CERN Computer Ctr, ~25 TIPS
- Tier 1: IN2P3 Center, INFN Center, FNAL Center, Tokyo/KEK Center
- Tier 2: Tier 2 Centers
- Tier 3: Institutes, ~0.25 TIPS, with physics data cache
- Tier 4: Workstations
- Link labels, from the top of the hierarchy down: ~PByte/sec, ~100 MBytes/sec, ~2.5 Gbits/sec, ~0.6-2.5 Gbps (shown twice), 100-1000 Mbits/sec

Physicists work on analysis "channels". Each institute has ~10 physicists working on one or more channels.

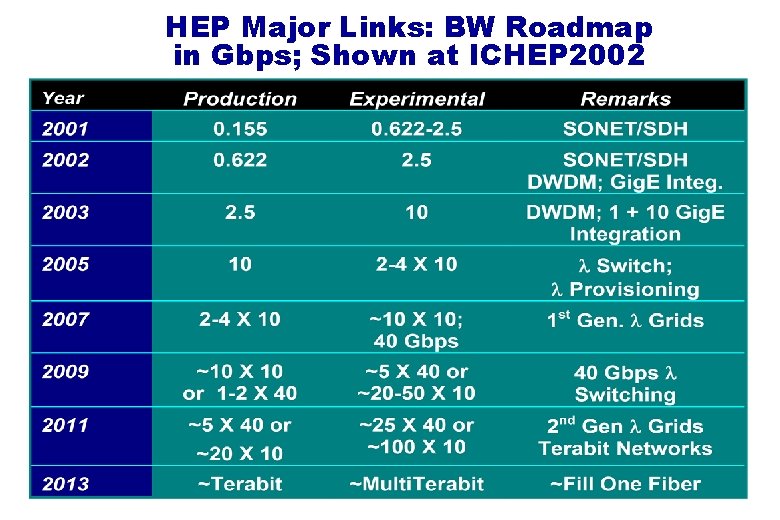

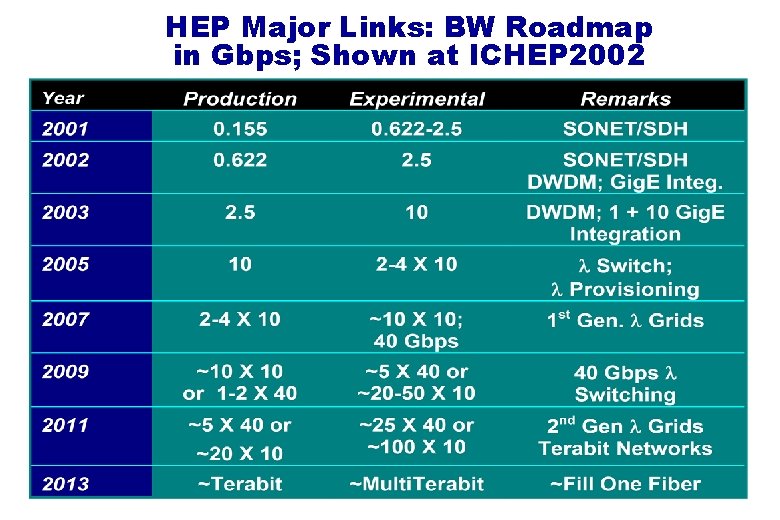

HEP Major Links: BW Roadmap in Gbps; Shown at ICHEP 2002

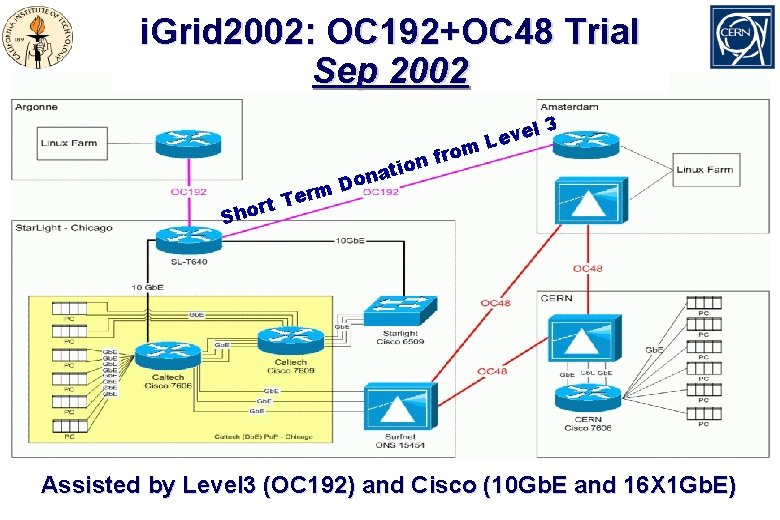

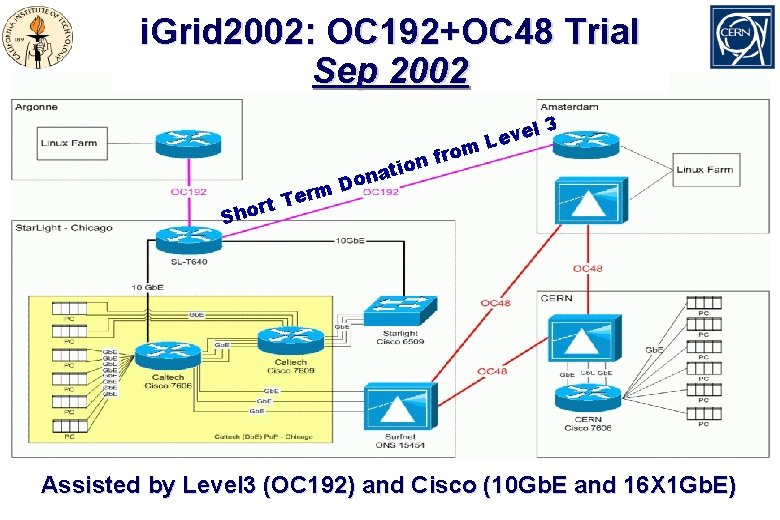

iGrid 2002: OC192 + OC48 Trial, Sep 2002. Short Term Donation from Level 3. Assisted by Level 3 (OC192) and Cisco (10 GbE and 16 x 1 GbE).

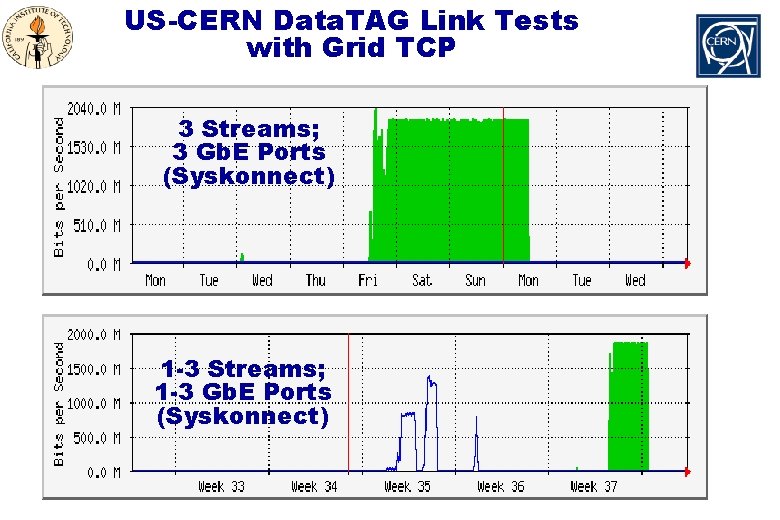

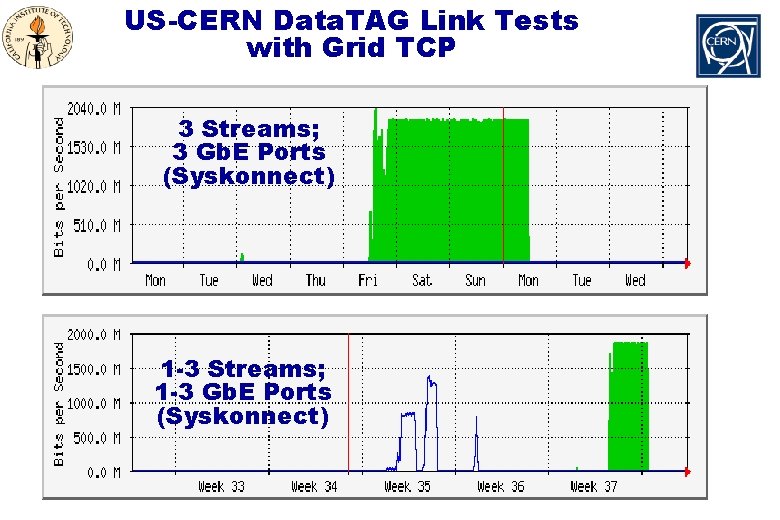

US-CERN DataTAG Link Tests with Grid TCP

- 3 Streams; 3 GbE Ports (Syskonnect)
- 1-3 Streams; 1-3 GbE Ports (Syskonnect)

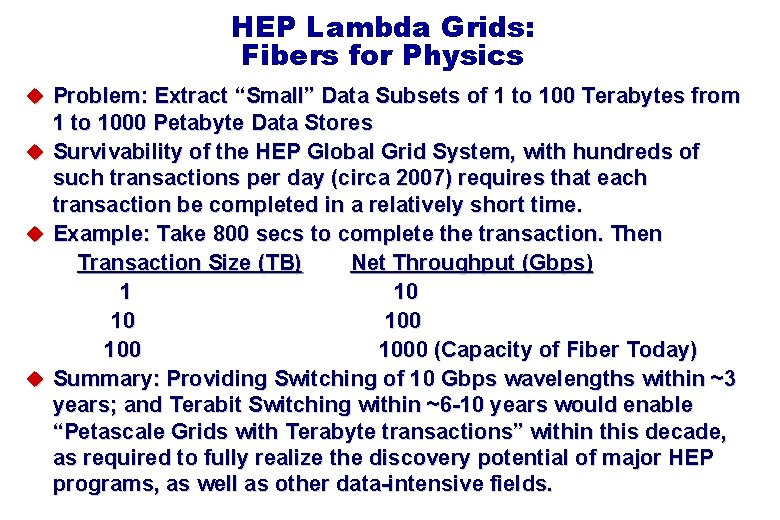

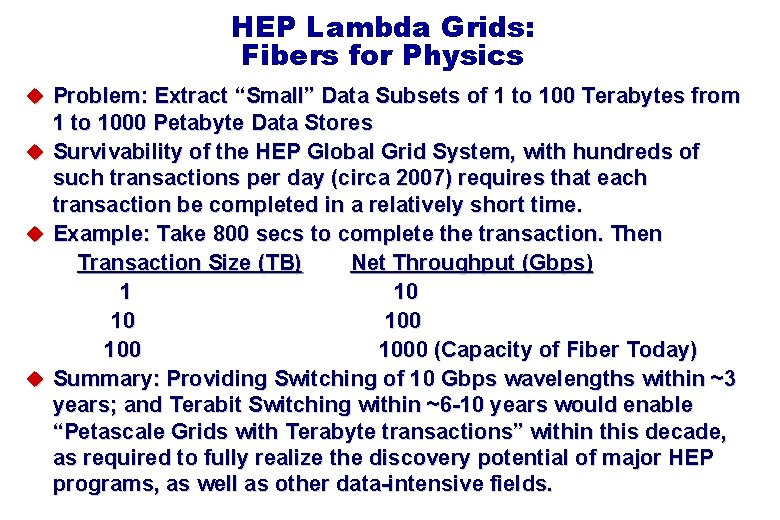

HEP Lambda Grids: Fibers for Physics

- Problem: Extract "Small" Data Subsets of 1 to 100 Terabytes from 1 to 1000 Petabyte Data Stores
- Survivability of the HEP Global Grid System, with hundreds of such transactions per day (circa 2007), requires that each transaction be completed in a relatively short time.
- Example: Take 800 secs to complete the transaction. Then:

| Transaction Size (TB) | Net Throughput (Gbps) |
|---|---|
| 1 | 10 |
| 10 | 100 |
| 100 | 1000 (Capacity of Fiber Today) |

- Summary: Providing Switching of 10 Gbps wavelengths within ~3 years, and Terabit Switching within ~6-10 years, would enable "Petascale Grids with Terabyte transactions" within this decade, as required to fully realize the discovery potential of major HEP programs, as well as other data-intensive fields.
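The throughput figures in the 800-second example follow from dividing the transaction size in bits by the transaction time. The sketch below reproduces that arithmetic; it assumes decimal terabytes (1 TB = 10^12 bytes), and the function name `required_gbps` is illustrative rather than taken from the slides.

```python
def required_gbps(size_tb: float, seconds: float = 800.0) -> float:
    """Net throughput in Gbps needed to move size_tb terabytes in `seconds`.

    Assumption: decimal units, 1 TB = 1e12 bytes and 1 Gbps = 1e9 bits/sec.
    """
    bits = size_tb * 1e12 * 8
    return bits / seconds / 1e9


if __name__ == "__main__":
    # Transaction sizes from the slide's example, each completed in 800 secs.
    for size_tb in (1, 10, 100):
        print(f"{size_tb:>3} TB in 800 s -> {required_gbps(size_tb):,.0f} Gbps")
```

With these assumptions a 1 TB transaction needs 10 Gbps, and each tenfold increase in transaction size raises the required throughput tenfold.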