TERAFLUX: OS Support for TeraFlux, a Prototype

- Slides: 13

TERAFLUX: OS Support for TeraFlux, a Prototype

Avi Mendelson, Doron Shamia

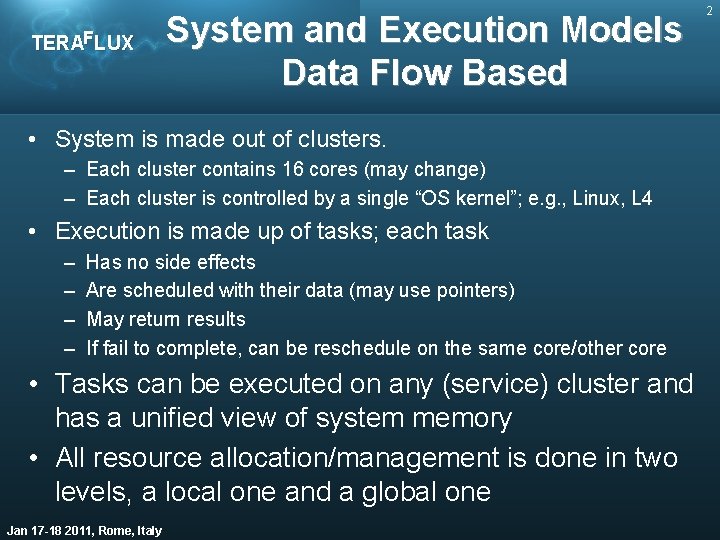

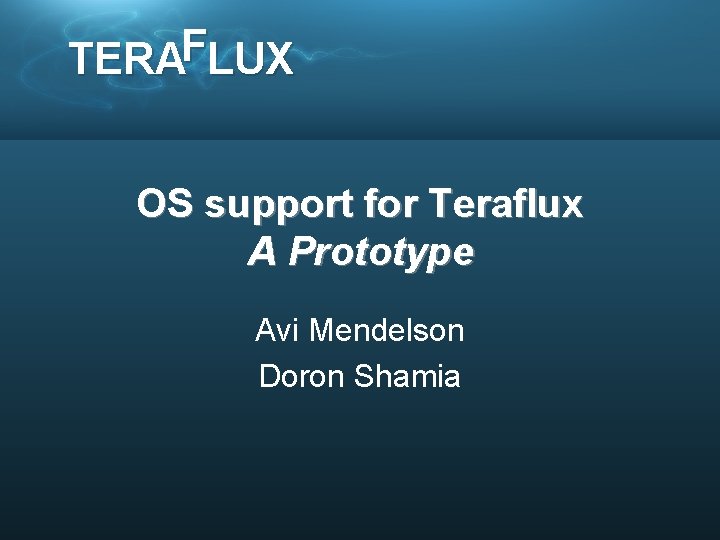

System and Execution Models (Data-Flow Based)

- The system is made up of clusters.
  - Each cluster contains 16 cores (this number may change).
  - Each cluster is controlled by a single "OS kernel", e.g., Linux or L4.
- Execution is made up of tasks. Each task:
  - has no side effects;
  - is scheduled together with its data (which may include pointers);
  - may return results;
  - can be rescheduled on the same core or on another core if it fails to complete.
- Tasks can be executed on any (service) cluster and have a unified view of system memory.
- All resource allocation and management is done at two levels: a local one and a global one.

Jan 17-18, 2011, Rome, Italy
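
The two-level resource management above can be pictured as a local, per-cluster allocator that is tried first, with a global allocator that escalates to other clusters when the local one is exhausted. This is a minimal sketch under assumptions: the class names, the whole-core granularity, and the "first free cluster" fallback policy are hypothetical, not the TeraFlux design.

```python
class LocalAllocator:
    """Hypothetical per-cluster (local-level) allocator handing out whole cores."""

    def __init__(self, cluster_id, cores=16):  # 16 cores per cluster, as on the slide
        self.cluster_id = cluster_id
        self.free_cores = cores

    def try_allocate(self):
        """Grant one core from this cluster, or return None if it is full."""
        if self.free_cores > 0:
            self.free_cores -= 1
            return self.cluster_id
        return None


class GlobalAllocator:
    """Hypothetical system-wide (global-level) allocator over all clusters."""

    def __init__(self, clusters):
        self.clusters = {c.cluster_id: c for c in clusters}

    def allocate(self, home):
        """Try the requesting cluster first; escalate to other clusters if it is full."""
        granted = self.clusters[home].try_allocate()       # local level
        if granted is not None:
            return granted
        for cluster_id, cluster in self.clusters.items():  # global level
            if cluster_id != home:
                granted = cluster.try_allocate()
                if granted is not None:
                    return granted
        return None                                         # no free core anywhere
```

For example, with cluster 0 holding one free core and cluster 1 holding two, four requests from cluster 0 are granted on clusters 0, 1, 1, and then refused. Keeping the common case local avoids touching shared global state on every allocation.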

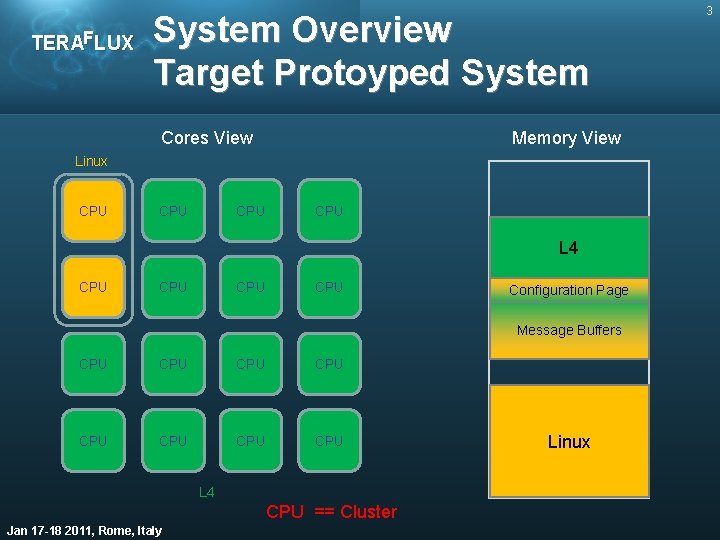

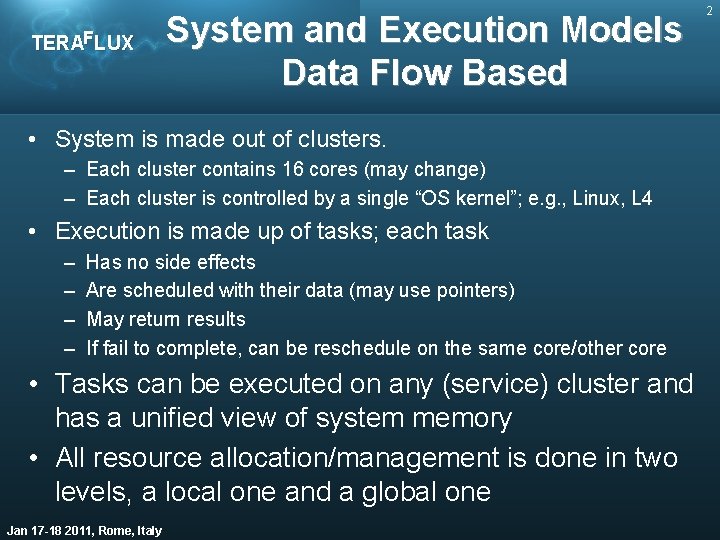

System Overview: Target Prototyped System

- Cores view: one cluster runs Linux and the other clusters run L4 (in the diagram, each "CPU" box stands for a cluster).
- Memory view: a configuration page and a set of message buffers.
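
The memory view can be modeled as one shared region that starts with the configuration page, which records the buffer layout so both sides agree on where each message buffer lives. A minimal sketch, assuming a 4 KiB configuration page at offset 0 followed by equal-sized buffers; the field list, the `TFLX` marker, and the function names are hypothetical, and a `bytearray` stands in for the real shared memory.

```python
import struct

CONFIG_PAGE_SIZE = 4096  # assumed size of the configuration page
CONFIG_FMT = "<4sII"     # assumed fields: magic, buffer count, buffer size
MAGIC = b"TFLX"          # hypothetical marker for an initialized page


def init_region(num_buffers, buffer_size):
    """Allocate a region and write the configuration page at offset 0."""
    region = bytearray(CONFIG_PAGE_SIZE + num_buffers * buffer_size)
    struct.pack_into(CONFIG_FMT, region, 0, MAGIC, num_buffers, buffer_size)
    return region


def buffer_view(region, index):
    """Return a writable view of message buffer `index`, located via the config page."""
    magic, num_buffers, buffer_size = struct.unpack_from(CONFIG_FMT, region, 0)
    if magic != MAGIC:
        raise ValueError("configuration page not initialized")
    if not 0 <= index < num_buffers:
        raise IndexError(f"buffer {index} out of range")
    start = CONFIG_PAGE_SIZE + index * buffer_size
    return memoryview(region)[start:start + buffer_size]
```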

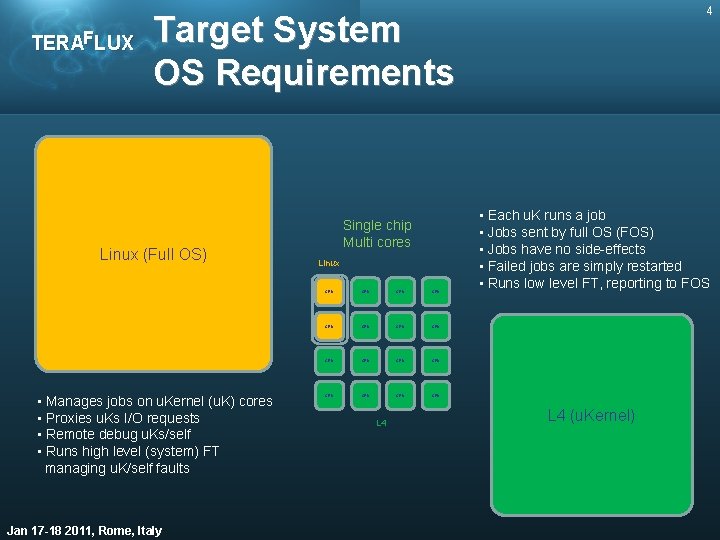

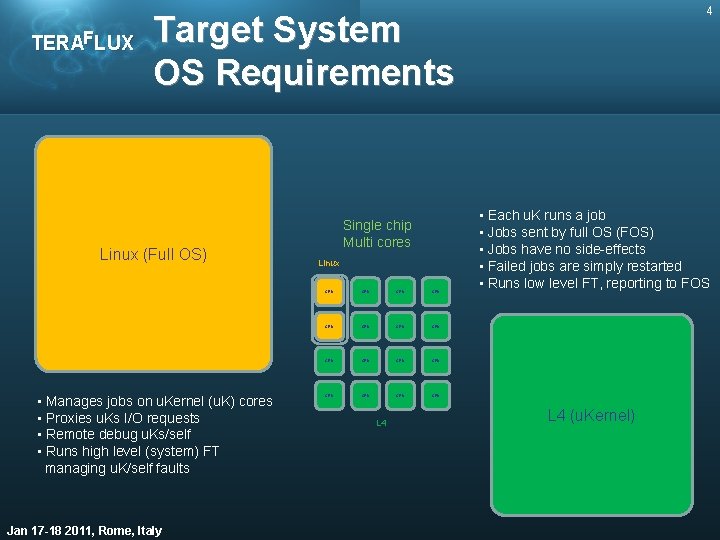

Target System OS Requirements

Diagram: a single multi-core chip, with Linux and L4 running on different cores.

Linux (full OS, FOS):
- Manages jobs on the microkernel (uK) cores.
- Proxies the uKs' I/O requests.
- Supports remote debugging of the uKs and of itself.
- Runs high-level (system) fault tolerance (FT), handling faults of the uKs and of itself.

L4 (microkernel, uK):
- Each uK runs a job.
- Jobs are sent by the full OS (FOS).
- Jobs have no side effects.
- Failed jobs are simply restarted.
- Runs low-level FT and reports to the FOS.
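
Because uK jobs have no side effects, the FOS can recover from a reported failure by simply resending the job, possibly to another core. A minimal sketch of that policy; `run_on_uk`, `JobFailed`, the round-robin core choice, and the retry limit are hypothetical stand-ins for the real dispatch and fault-reporting path.

```python
class JobFailed(Exception):
    """Raised when a (hypothetical) uK reports a job fault to the FOS."""


def dispatch(job, args, uk_cores, run_on_uk, max_attempts=3):
    """Send `job` to uK cores, restarting it on the next core after each failure.

    Restarting is safe only because jobs have no side effects: a failed
    attempt leaves no state behind that a retry could observe.
    """
    faults = []
    for attempt in range(max_attempts):
        core = uk_cores[attempt % len(uk_cores)]
        try:
            return run_on_uk(core, job, args)
        except JobFailed as err:
            faults.append((core, str(err)))  # low-level FT report reaching the FOS
    raise RuntimeError(f"job failed on every attempt: {faults}")
```

If jobs could modify shared state, this loop would be unsafe, since a partially completed attempt could leave effects that the retry then duplicates.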

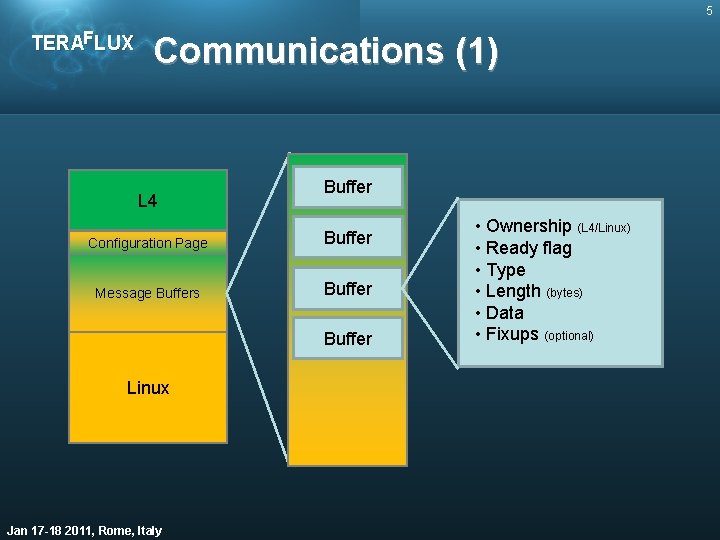

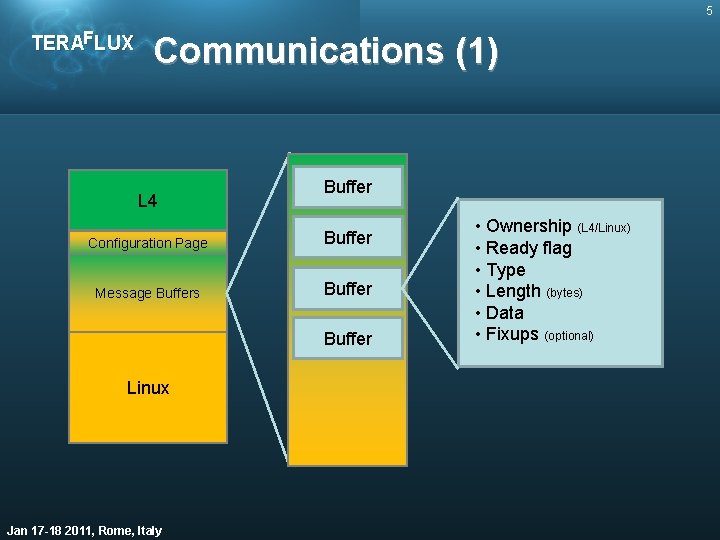

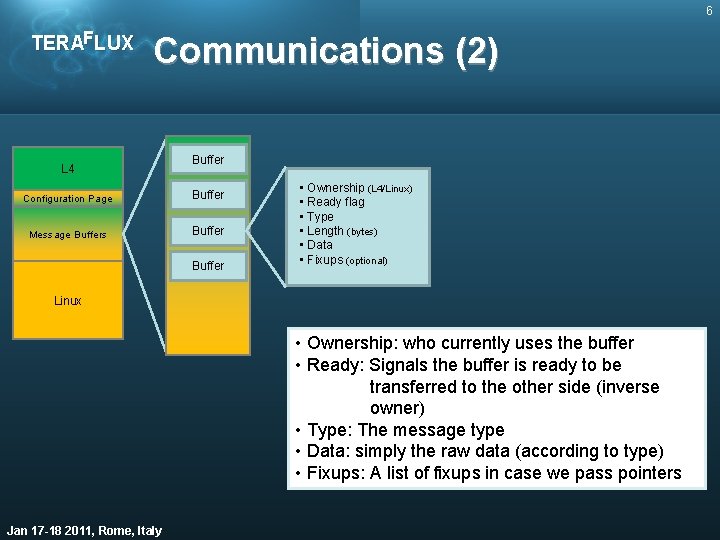

Communications (1)

Diagram: L4 and Linux communicate through a shared configuration page and a set of message buffers. Each buffer contains:

- Ownership (L4 or Linux)
- Ready flag
- Type
- Length (bytes)
- Data
- Fixups (optional)
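
The buffer fields listed above map naturally onto a fixed header followed by the payload. A minimal sketch using Python's `struct` module; the field widths, little-endian byte order, ownership codes, and the fixup encoding (a count in the header, then 32-bit offsets after the data) are all assumptions, not the TeraFlux wire format.

```python
import struct

OWNER_L4, OWNER_LINUX = 0, 1  # assumed ownership codes
HEADER_FMT = "<BBHII"         # owner, ready, type, length, fixup count (assumed)
HEADER_SIZE = struct.calcsize(HEADER_FMT)  # 12 bytes, no padding with "<"


def encode_buffer(owner, ready, msg_type, data, fixups=()):
    """Serialize one message buffer: header, data, then optional fixup offsets."""
    header = struct.pack(HEADER_FMT, owner, int(ready), msg_type, len(data), len(fixups))
    return header + data + struct.pack(f"<{len(fixups)}I", *fixups)


def decode_buffer(raw):
    """Parse a buffer produced by encode_buffer back into its fields."""
    owner, ready, msg_type, length, nfix = struct.unpack_from(HEADER_FMT, raw, 0)
    data = bytes(raw[HEADER_SIZE:HEADER_SIZE + length])
    fixups = struct.unpack_from(f"<{nfix}I", raw, HEADER_SIZE + length)
    return owner, bool(ready), msg_type, data, list(fixups)
```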

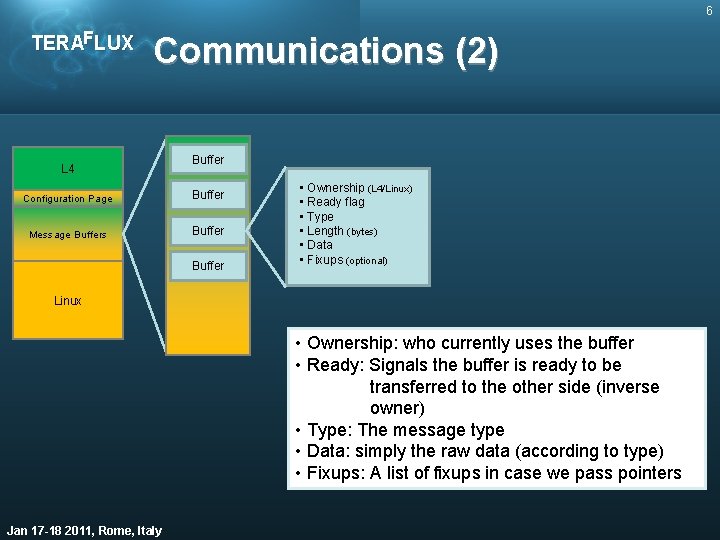

Communications (2)

The diagram repeats the previous slide (L4 and Linux sharing a configuration page and message buffers); this slide explains each buffer field:

- Ownership: which side (L4 or Linux) currently uses the buffer.
- Ready: signals that the buffer is ready to be handed over to the other side (the inverse owner).
- Type: the message type.
- Length: the size of the data, in bytes.
- Data: the raw data, interpreted according to the type.
- Fixups: a list of fixups, used when the message contains pointers.
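
Fixups are needed because a pointer valid in one address space is generally not valid in another: the shared buffer is mapped at different virtual addresses on each side. One common approach, sketched here as an assumption about how such a list could be used, is for the sender to rewrite each embedded pointer as an offset from the shared region's base and record its location; the receiver then adds its own base at each recorded location. The 64-bit little-endian pointer width and the function names are hypothetical.

```python
import struct

PTR_FMT = "<Q"  # assumed: 64-bit little-endian pointers


def to_offsets(data, fixups, sender_base):
    """Sender side: turn absolute pointers at the `fixups` positions into region offsets."""
    out = bytearray(data)
    for pos in fixups:
        (ptr,) = struct.unpack_from(PTR_FMT, out, pos)
        struct.pack_into(PTR_FMT, out, pos, ptr - sender_base)
    return bytes(out)


def apply_fixups(data, fixups, receiver_base):
    """Receiver side: turn region offsets at the `fixups` positions back into local pointers."""
    out = bytearray(data)
    for pos in fixups:
        (off,) = struct.unpack_from(PTR_FMT, out, pos)
        struct.pack_into(PTR_FMT, out, pos, receiver_base + off)
    return bytes(out)
```

Only the positions listed in the fixups are touched, so plain integers elsewhere in the payload pass through unchanged.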

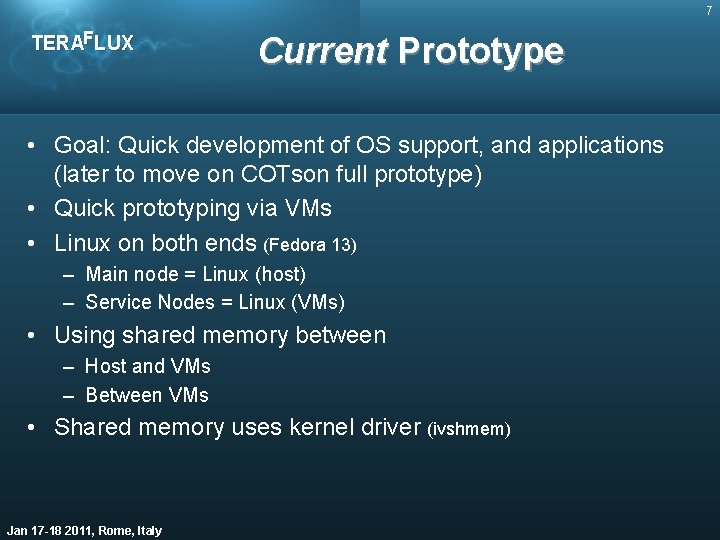

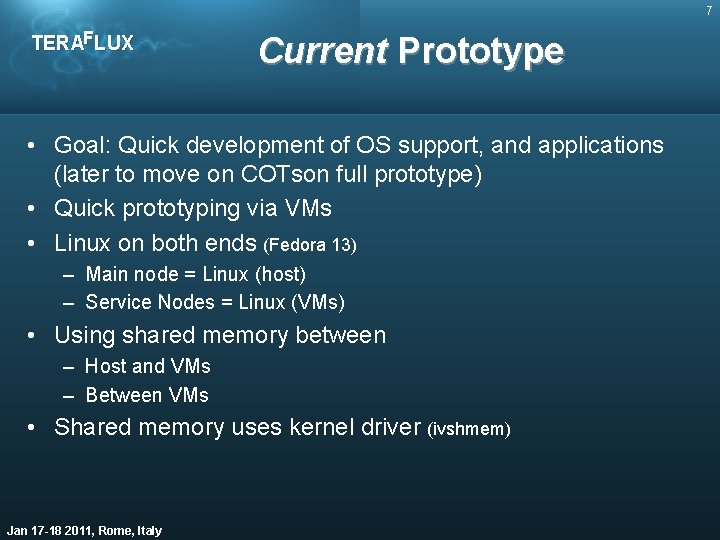

Current Prototype

- Goal: quick development of OS support and applications (later moving to the full COTSon prototype).
- Quick prototyping via VMs.
- Linux on both ends (Fedora 13):
  - Main node = Linux (host)
  - Service nodes = Linux (VMs)
- Shared memory is used:
  - between the host and the VMs;
  - between VMs.
- Shared memory uses a kernel driver (ivshmem).
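
On the host, an ivshmem device is typically backed by a POSIX shared-memory object (on Linux, a file under `/dev/shm`), so ordinary host processes can attach to the same pages the guests see. A minimal sketch using Python's `multiprocessing.shared_memory`; the segment name and size are hypothetical, and the QEMU side (pointing the ivshmem device at the same object) is not shown.

```python
from multiprocessing import shared_memory

SEGMENT_NAME = "teraflux_demo"  # hypothetical; appears as /dev/shm/teraflux_demo on Linux
SEGMENT_SIZE = 1 << 20          # 1 MiB, assumed


def create_segment(name=SEGMENT_NAME, size=SEGMENT_SIZE):
    """Host side: create the shared-memory object that backs the device."""
    return shared_memory.SharedMemory(name=name, create=True, size=size)


def attach_segment(name=SEGMENT_NAME):
    """Any other host process: attach to the existing object by name."""
    return shared_memory.SharedMemory(name=name)
```

The creating process is responsible for calling `unlink()` once no one needs the segment; `close()` only detaches the calling process.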

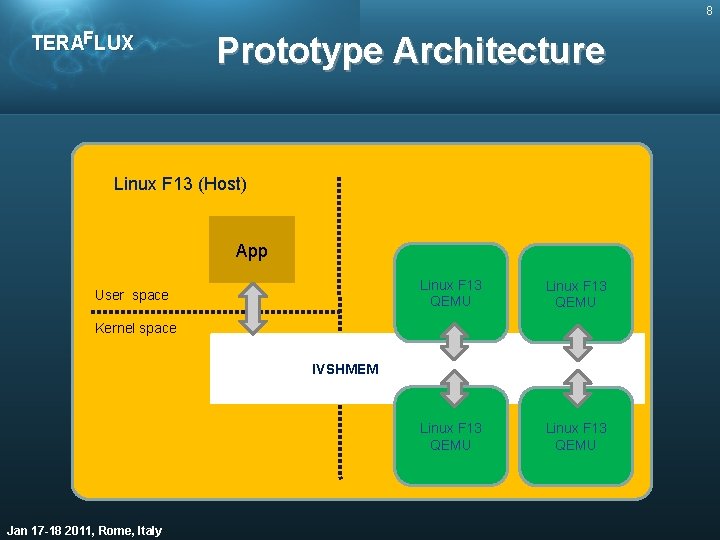

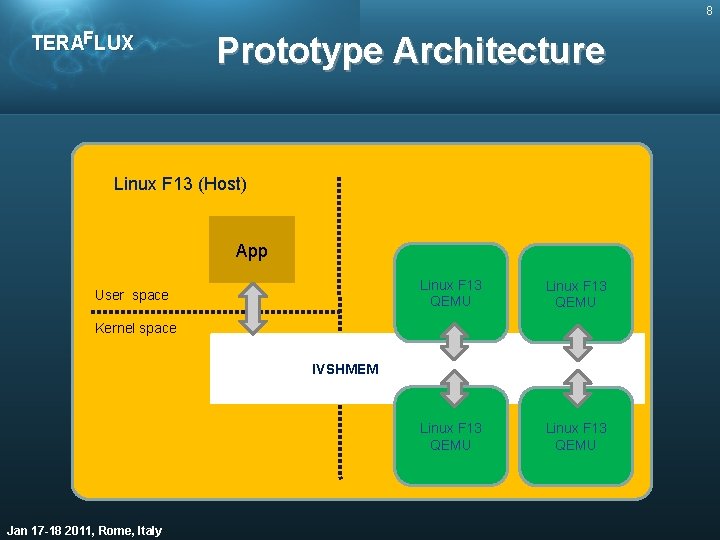

Prototype Architecture

Diagram labels: Linux F13 (host), App, user space, kernel space, Linux F13 guests under QEMU, IVSHMEM.

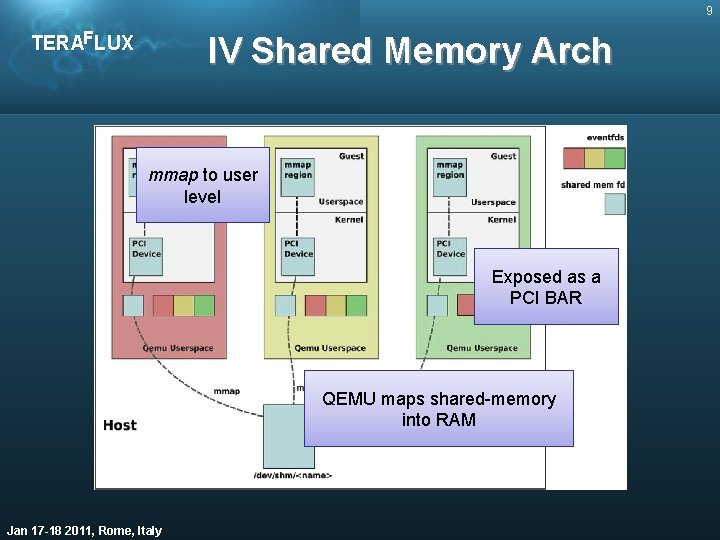

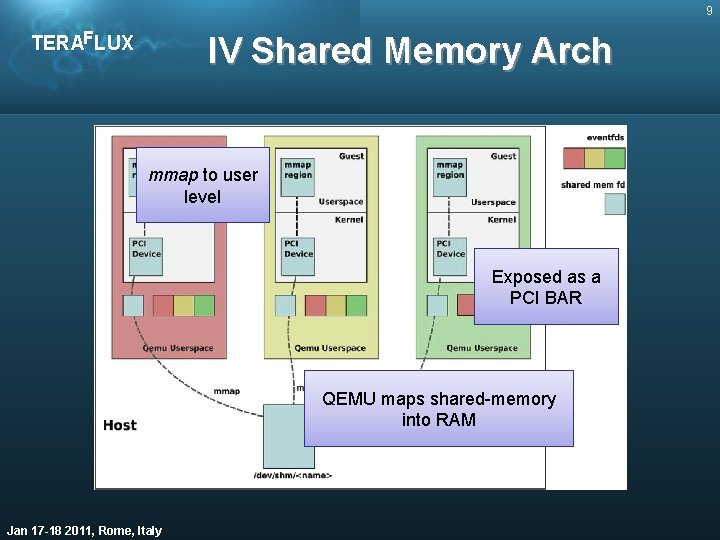

Inter-VM Shared Memory (IVSHMEM) Architecture

- QEMU maps the shared memory into the guest's RAM.
- The shared memory is exposed to the guest as a PCI BAR.
- The guest mmaps it to user level.
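
Inside a Linux guest, each PCI BAR is exposed through sysfs as `/sys/bus/pci/devices/<address>/resourceN`, which user space can mmap directly; for ivshmem, the shared memory is BAR 2. A minimal sketch, assuming a hypothetical device address; the path is a parameter, so the function can also be exercised against an ordinary file.

```python
import mmap
import os

# Hypothetical guest PCI address of the ivshmem device; BAR 2 holds the shared memory.
IVSHMEM_BAR2 = "/sys/bus/pci/devices/0000:00:04.0/resource2"


def map_shared_memory(path=IVSHMEM_BAR2):
    """mmap the whole file at `path` read/write (shared) and return the mapping."""
    fd = os.open(path, os.O_RDWR | os.O_SYNC)
    try:
        size = os.fstat(fd).st_size
        return mmap.mmap(fd, size, mmap.MAP_SHARED, mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed
```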

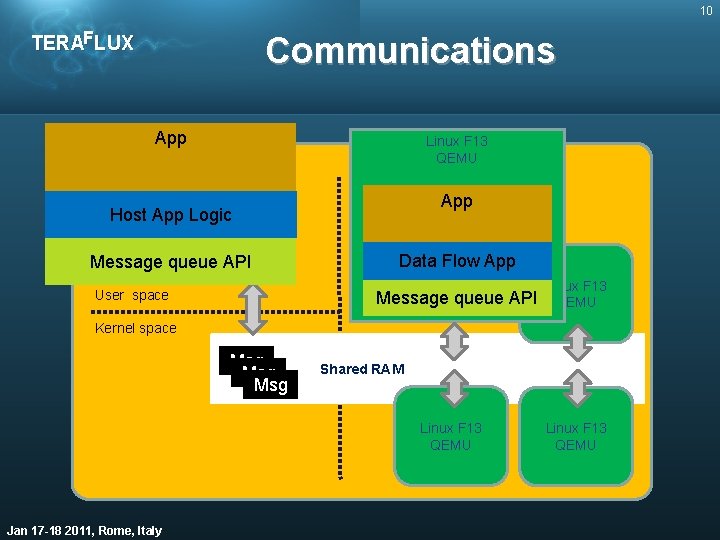

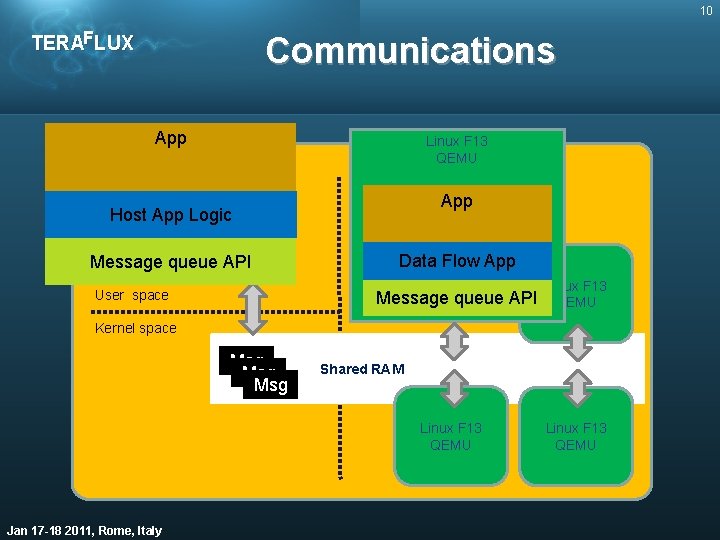

Communications

Diagram: on the Linux F13 host, the host app logic sits on a message queue API; in each Linux F13 guest running under QEMU, a data-flow app sits on the same message queue API. Messages (Msg) pass between them through shared RAM.
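
A message queue API like the one in the diagram can hide the ownership/ready handshake of each buffer from the applications. A minimal single-producer/single-consumer sketch, assuming shared RAM laid out as fixed-size slots, each starting with a one-byte ready flag and a 32-bit length; the class, slot layout, and method names are hypothetical. A `bytearray` stands in for the shared RAM and one object plays both sides; in a real deployment each side would keep only its own index, and cross-VM use would also need memory barriers, which Python cannot express.

```python
import struct

LEN_FMT = "<I"  # payload length stored right after the ready byte (assumed layout)
HDR_SIZE = 1 + struct.calcsize(LEN_FMT)


class MessageQueue:
    """Single-producer/single-consumer ring of fixed-size slots in shared memory."""

    def __init__(self, region, slot_size, num_slots):
        self.region = region
        self.slot_size = slot_size
        self.num_slots = num_slots
        self.head = 0  # next slot to write (producer-side index)
        self.tail = 0  # next slot to read (consumer-side index)

    def send(self, payload):
        """Producer: fill a free slot, then set its ready flag last. False if full."""
        if len(payload) > self.slot_size - HDR_SIZE:
            raise ValueError("message too large for slot")
        off = self.head * self.slot_size
        if self.region[off]:  # ready still set: the consumer owns this slot
            return False
        start = off + HDR_SIZE
        self.region[start:start + len(payload)] = payload
        struct.pack_into(LEN_FMT, self.region, off + 1, len(payload))
        self.region[off] = 1  # publish: ownership passes to the consumer
        self.head = (self.head + 1) % self.num_slots
        return True

    def receive(self):
        """Consumer: read a ready slot, then clear the flag. None if empty."""
        off = self.tail * self.slot_size
        if not self.region[off]:
            return None
        (length,) = struct.unpack_from(LEN_FMT, self.region, off + 1)
        start = off + HDR_SIZE
        payload = bytes(self.region[start:start + length])
        self.region[off] = 0  # hand the slot back to the producer
        self.tail = (self.tail + 1) % self.num_slots
        return payload
```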

Demo (Toy) Apps

- Distributed sum app:
  - a single work dispatcher (host);
  - multiple sum engines (VMs).
- Distributed Mandelbrot:
  - a single work dispatcher that hands out lines (host);
  - multiple compute engines that compute the pixels of each line (VMs).
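
The distributed sum demo follows a dispatcher/worker split: one dispatcher cuts the input into chunks, and each sum engine returns a partial result. A minimal sketch, with threads standing in for the VMs and `queue.Queue` standing in for the shared-memory message queue; the chunk size, engine count, and function names are hypothetical.

```python
import queue
import threading


def sum_engine(work_q, result_q):
    """Worker (one per VM in the demo): sum each chunk until a None sentinel arrives."""
    while True:
        chunk = work_q.get()
        if chunk is None:
            break
        result_q.put(sum(chunk))


def distributed_sum(numbers, num_engines=4, chunk_size=100):
    """Dispatcher (the host in the demo): hand out chunks, then combine partial sums."""
    work_q, result_q = queue.Queue(), queue.Queue()
    engines = [threading.Thread(target=sum_engine, args=(work_q, result_q))
               for _ in range(num_engines)]
    for t in engines:
        t.start()
    chunks = [numbers[i:i + chunk_size] for i in range(0, len(numbers), chunk_size)]
    for chunk in chunks:
        work_q.put(chunk)
    for _ in engines:
        work_q.put(None)  # one stop sentinel per engine, queued after all work
    for t in engines:
        t.join()
    return sum(result_q.get() for _ in chunks)
```

The Mandelbrot demo has the same shape, with a line index as the work item and a row of pixel values as the result.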

Futures

- Single boot:
  - A TeraFlux chip boots a FOS.
  - The FOS boots the uKs on the other cores.
  - It looks like a single boot process.
- Distributed fault tolerance:
  - Allow the uKs and the FOS to test each other's health.
  - One step beyond FOS-centric FT.
- Core repurposing:
  - If the FOS cores fail, uK cores reboot as the FOS.
  - The new FOS takes over using the last valid data snapshot.
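
Mutual health testing is often built on heartbeats: each node periodically bumps a counter in shared memory, and a peer whose counter stops advancing for several checks is declared failed. A minimal sketch of that technique (heartbeats are an assumption here, not a stated TeraFlux mechanism), including a repurposing step that picks a healthy uK to become the new FOS; the class, the lowest-id election rule, and the miss threshold are hypothetical.

```python
class HealthMonitor:
    """Tracks peers' heartbeat counters; in practice these would live in shared memory."""

    def __init__(self, node_ids, max_missed=3):
        self.max_missed = max_missed
        self.last_seen = {n: None for n in node_ids}  # last observed counter value
        self.missed = {n: 0 for n in node_ids}        # consecutive checks without progress

    def check(self, counters):
        """Compare current counters with the previous check; return the failed nodes."""
        failed = []
        for node, value in counters.items():
            if value == self.last_seen[node]:
                self.missed[node] += 1
            else:
                self.missed[node] = 0
                self.last_seen[node] = value
            if self.missed[node] >= self.max_missed:
                failed.append(node)
        return failed


def choose_new_fos(failed, fos, uk_nodes):
    """Core repurposing: keep the FOS if healthy, else pick the lowest-id healthy uK."""
    if fos not in failed:
        return fos
    healthy = sorted(n for n in uk_nodes if n not in failed)
    return healthy[0] if healthy else None
```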

References

- Inter-VM Shared Memory (ivshmem)