Synthesizing Effective Data Compression Algorithms for GPUs


Annie Yang and Martin Burtscher*
Department of Computer Science

Highlights
§ MPC compression algorithm
  § Brand-new lossless compression algorithm for single- and double-precision floating-point data
  § Systematically derived to work well on GPUs
§ MPC features
  § Compression ratio is similar to best CPU algorithms
  § Throughput is much higher
  § Requires little internal state (no tables or dictionaries)

Introduction
§ High-Performance Computing Systems
  § Depend increasingly on accelerators
  § Process large amounts of floating-point (FP) data
  § Moving this data is often the performance bottleneck
§ Data compression
  § Can increase transfer throughput
  § Can reduce storage requirement
  § But only if effective, fast (real-time), and lossless

Problem Statement
§ Existing FP compression algorithms for GPUs
  § Fast but compress poorly
§ Existing FP compression algorithms for CPUs
  § Compress much better but are slow
  § Parallel codes run serial algorithms on multiple chunks
  § Too much state per thread for a GPU implementation
  § Best serial algos may not be scalably parallelizable
§ Do effective FP compression algos for GPUs exist?
  § And if so, how can we create such an algorithm?

Our Approach
§ Need a brand-new massively-parallel algorithm
§ Study existing FP compression algorithms
  § Break them down into constituent parts
  § Only keep GPU-friendly parts
  § Generalize them as much as possible
§ Resulted in algorithmic components
  § CUDA implementation: each component takes sequence of values as input and outputs transformed sequence
  § Components operate on integer representation of data
Image credit: Charles Trevelyan for http://plus.maths.org/
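To illustrate what "integer representation of data" can mean, here is a minimal Python sketch (not the authors' CUDA code) that reinterprets IEEE 754 values as unsigned integers without changing any bit. The helper names `floats_to_words` and `words_to_floats` are hypothetical and chosen only for this example.

```python
import struct

def floats_to_words(values, double=True):
    """Reinterpret each float's bit pattern as an unsigned integer (no rounding)."""
    float_fmt, int_fmt = ("<d", "<Q") if double else ("<f", "<I")
    return [struct.unpack(int_fmt, struct.pack(float_fmt, v))[0] for v in values]

def words_to_floats(words, double=True):
    """Inverse reinterpretation: unsigned integers back to floats."""
    float_fmt, int_fmt = ("<d", "<Q") if double else ("<f", "<I")
    return [struct.unpack(float_fmt, struct.pack(int_fmt, w))[0] for w in words]

# Double-precision 1.0 has the bit pattern 0x3FF0000000000000.
words = floats_to_words([1.0, 1.5, -2.0])
```

Working on the integer view lets components use exact operations such as subtraction, XOR, and bit shuffling, which is what makes the overall scheme lossless.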

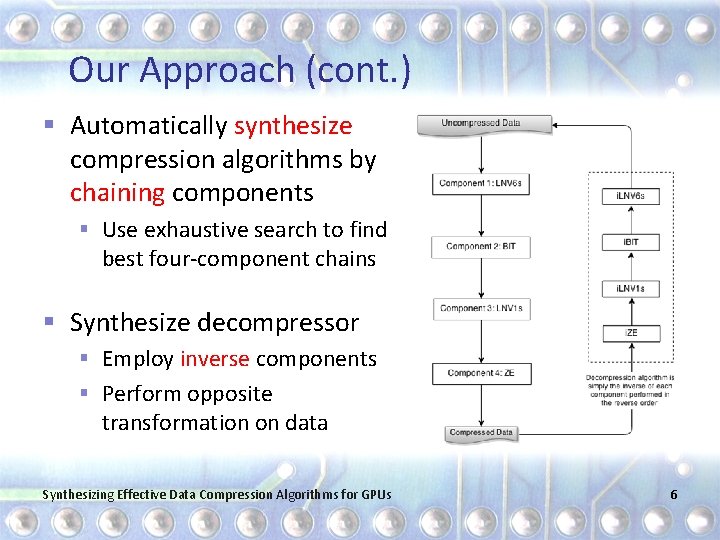

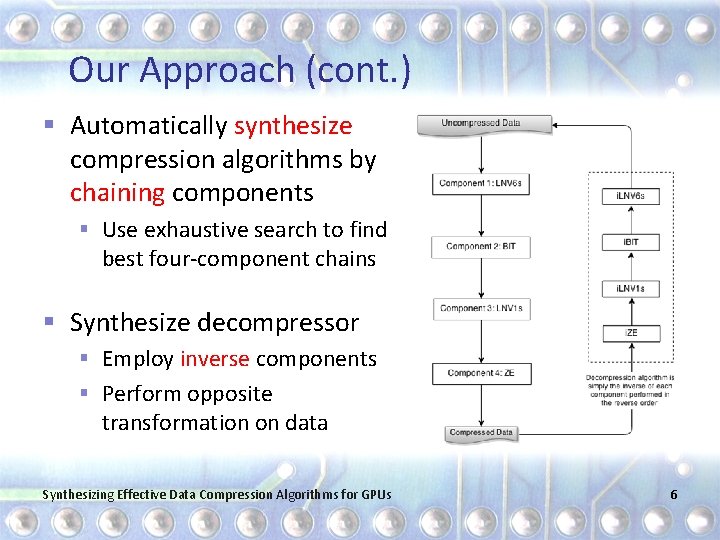

Our Approach (cont.)
§ Automatically synthesize compression algorithms by chaining components
  § Use exhaustive search to find best four-component chains
§ Synthesize decompressor
  § Employ inverse components
  § Perform opposite transformation on data
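The chaining idea can be sketched as follows, assuming each component is a pair of functions (forward, inverse). The decompressor then applies the inverses in reverse order. The registry `COMPONENTS` and the functions `compress` and `decompress` are hypothetical names for this illustration; only two simple components are included, and the prior value of the first element is assumed to be 0.

```python
MASK = 0xFFFFFFFF  # 32-bit words; an assumption for this sketch

def inv(words):
    """Flip all bits; this transformation is its own inverse."""
    return [w ^ MASK for w in words]

def lnv1s(words):
    """Subtract the previous value (taken as 0 for the first element)."""
    return [(w - (words[i - 1] if i >= 1 else 0)) & MASK for i, w in enumerate(words)]

def lnv1s_inverse(residuals):
    """Undo lnv1s with a running sum modulo 2**32."""
    out, prev = [], 0
    for r in residuals:
        prev = (prev + r) & MASK
        out.append(prev)
    return out

# Each entry maps a component name to (forward, inverse).
COMPONENTS = {"INV": (inv, inv), "LNV1s": (lnv1s, lnv1s_inverse)}

def compress(chain, words):
    for name in chain:
        words = COMPONENTS[name][0](words)
    return words

def decompress(chain, words):
    for name in reversed(chain):  # inverse components, opposite order
        words = COMPONENTS[name][1](words)
    return words

chain = ["LNV1s", "INV"]
data = [10, 12, 15, 15, 3]
restored = decompress(chain, compress(chain, data))
```

Because every component has an exact inverse, any chain built this way is lossless by construction, so the search only has to rank chains by compression ratio.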

Mutator Components
§ Mutators computationally transform each value
  § Do not use information about any other value
§ NUL outputs the input block (identity)
§ INV flips all the bits
§ │, called cut, is a singleton pseudo component that converts a block of words into a block of bytes
  § Merely a type cast, i.e., no computation or data copying
  § Byte granularity can be better for compression
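A small Python sketch of the mutators and the cut, under the assumption of 32-bit words. The cut is modeled with `memoryview.cast`, which reinterprets the same buffer as bytes without copying; the function names are hypothetical, and byte order within a word follows the host platform.

```python
from array import array

def nul(words):
    """NUL: identity, returns the block unchanged."""
    return list(words)

def inv(words, bits=32):
    """INV: flip every bit of every word (its own inverse)."""
    mask = (1 << bits) - 1
    return [w ^ mask for w in words]

def cut(block):
    """Cut: view the same buffer as bytes; the cast copies no data."""
    return memoryview(block).cast("B")

block = array("I", [0x11223344, 0xAABBCCDD])  # unsigned words (4 bytes on common platforms)
view = cut(block)
block[0] = 0  # a write through the word view is visible through the byte view
```

Components placed after the cut see a sequence of bytes rather than words, which is how a chain can switch granularity at no runtime cost.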

Shuffler Components
§ Shufflers reorder whole values or bits of values
  § Do not perform any computation
  § Each thread block operates on a chunk of values
§ BIT emits most significant bits of all values, followed by the second most significant bits, etc.
§ DIMn groups values by dimension n
  § Tested n = 2, 3, 4, 5, 8, 16, and 32
  § For example, DIM2 has the following effect: sequence A, B, C, D, E, F becomes A, C, E, B, D, F
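The two shufflers can be sketched sequentially in Python as below. This is an illustration of the reordering only, not the thread-block implementation; the function names and the `bits` parameter are assumptions made for the example.

```python
def dim(values, n):
    """DIMn: emit positions 0, n, 2n, ... first, then 1, n+1, ..., and so on."""
    return [v for k in range(n) for v in values[k::n]]

def dim_inverse(shuffled, n):
    """Undo DIMn by writing each group back to its strided positions."""
    out = [None] * len(shuffled)
    pos = 0
    for k in range(n):
        count = len(range(k, len(shuffled), n))
        out[k::n] = shuffled[pos:pos + count]
        pos += count
    return out

def bit(words, bits=32):
    """BIT: all most significant bits first, then the next plane, repacked into words."""
    stream = [(w >> b) & 1 for b in range(bits - 1, -1, -1) for w in words]
    out = []
    for i in range(0, len(stream), bits):
        word = 0
        for bit_value in stream[i:i + bits]:
            word = (word << 1) | bit_value
        out.append(word)
    return out

shuffled = dim(list("ABCDEF"), 2)  # the DIM2 example from the slide
```

BIT is effectively a transpose of the bit matrix formed by the chunk, so the output has the same number of words as the input.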

Predictor Components
§ Predictors guess values based on previous values and compute residuals (true minus guessed value)
  § Residuals tend to cluster around zero, making them easier to compress than the original sequence
  § Each thread block operates on a chunk of values
§ LNVns subtracts nth prior value from current value
  § Tested n = 1, 2, 3, 5, 6, and 8
§ LNVnx XORs current with nth prior value
  § Tested n = 1, 2, 3, 5, 6, and 8
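A sequential Python sketch of the two predictors follows. It assumes that values without an nth predecessor are predicted as 0 and that arithmetic wraps modulo 2^bits; both are assumptions of this example, and the function names are hypothetical.

```python
def lnv_s(words, n, bits=32):
    """LNVns: residual = current value minus the nth prior value (wrapping)."""
    mask = (1 << bits) - 1
    return [(w - (words[i - n] if i >= n else 0)) & mask for i, w in enumerate(words)]

def lnv_s_inverse(residuals, n, bits=32):
    """Rebuild the values by adding each residual to the nth prior output."""
    mask = (1 << bits) - 1
    out = []
    for i, r in enumerate(residuals):
        out.append((r + (out[i - n] if i >= n else 0)) & mask)
    return out

def lnv_x(words, n):
    """LNVnx: residual = current value XOR the nth prior value."""
    return [w ^ (words[i - n] if i >= n else 0) for i, w in enumerate(words)]

def lnv_x_inverse(residuals, n):
    out = []
    for i, r in enumerate(residuals):
        out.append(r ^ (out[i - n] if i >= n else 0))
    return out

# Two interleaved slowly changing series: n = 2 predicts within each series.
data = [100, 200, 101, 201, 102, 202]
residuals = lnv_s(data, 2)
```

With n = 2 the residuals after the first two entries are all 1, whereas n = 1 would alternate between large positive and negative differences.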

Reducer Components
§ Reducers eliminate redundancies in value sequence
  § All other components cannot change length of sequence, i.e., only reducers can compress sequence
  § Each thread block operates on a chunk of values
§ ZE emits bitmap of 0s followed by non-zero values
  § Effective if input sequence contains many zeros
§ RLE performs run-length encoding, i.e., replaces repeating values by count and a single value
  § Effective if input contains many repeating values
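The two reducers can be sketched as below. The slide does not specify the bitmap polarity or the exact output layout, so this example assumes a bitmap with 1 marking a non-zero value and returns the bitmap and the values as separate lists; the function names are hypothetical.

```python
def ze_encode(words):
    """ZE: one bitmap bit per value (1 = non-zero), then only the non-zero values."""
    bitmap = [1 if w != 0 else 0 for w in words]
    nonzero = [w for w in words if w != 0]
    return bitmap, nonzero

def ze_decode(bitmap, nonzero):
    """Re-insert zeros wherever the bitmap holds a 0."""
    remaining = iter(nonzero)
    return [next(remaining) if flag else 0 for flag in bitmap]

def rle_encode(words):
    """RLE: replace each run of equal values by a (count, value) pair."""
    runs = []
    for w in words:
        if runs and runs[-1][1] == w:
            runs[-1][0] += 1
        else:
            runs.append([1, w])
    return [(count, value) for count, value in runs]

def rle_decode(runs):
    return [value for count, value in runs for _ in range(count)]

bitmap, nonzero = ze_encode([0, 7, 0, 0, 9])
runs = rle_encode([4, 4, 4, 0, 0, 9])
```

ZE costs one bit per input value regardless of where the zeros are, while RLE only pays off when equal values are adjacent.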

Algorithm Synthesis
§ Determine best four-stage algorithms with a cut
  § Exhaustive search of all possible 138,240 combinations
  § 13 double-precision data sets (19–277 MB)
  § Observational data, simulation results, MPI messages
  § Single-precision data derived from double-precision data
§ Create general GPU-friendly compression algorithm
  § Analyze best algorithm for each data set and precision
  § Find commonalities and generalize into one algorithm
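The slides do not spell out how the figure of 138,240 arises. One accounting that reproduces it, offered here as an assumption rather than the authors' stated derivation, is: any of the 24 components in each of the first three stages, a reducer in the last stage, and five possible positions for the cut.

```python
# Component inventory as listed on the component slides.
components = {
    "mutators": ["NUL", "INV"],
    "shufflers": ["BIT"] + [f"DIM{n}" for n in (2, 3, 4, 5, 8, 16, 32)],
    "predictors": [f"LNV{n}{kind}" for n in (1, 2, 3, 5, 6, 8) for kind in "sx"],
    "reducers": ["ZE", "RLE"],
}
total = sum(len(names) for names in components.values())

# Assumption: stages 1-3 are unrestricted, stage 4 is a reducer,
# and the cut can sit before, between, or after the four stages.
cut_positions = 5
count = total ** 3 * len(components["reducers"]) * cut_positions
```

Under these assumptions `total` is 24 and `count` is 138,240, matching the numbers quoted in the slides.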

Best of 138,240 Algorithms

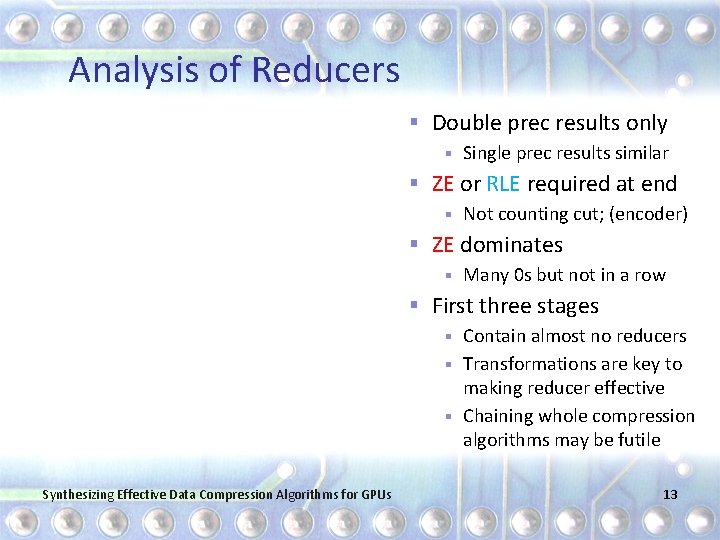

Analysis of Reducers
§ Double prec results only
  § Single prec results similar
§ ZE or RLE required at end
  § Not counting cut; (encoder)
§ ZE dominates
  § Many 0s but not in a row
§ First three stages contain almost no reducers
  § Transformations are key to making reducer effective
  § Chaining whole compression algorithms may be futile

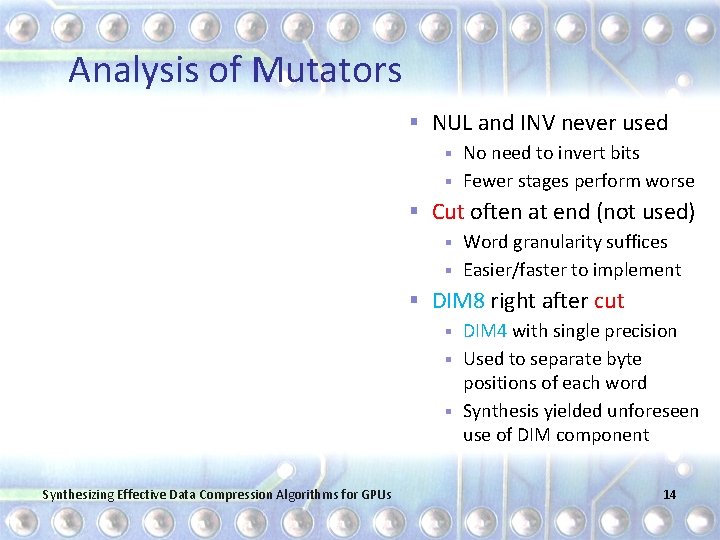

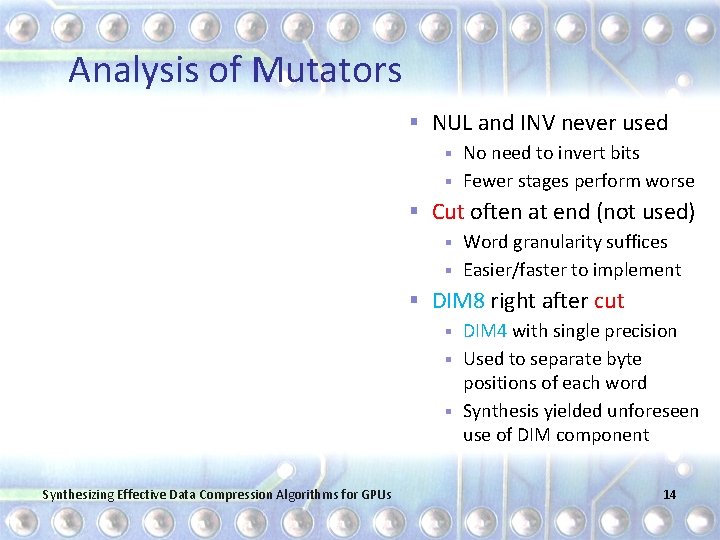

Analysis of Mutators
§ NUL and INV never used
  § No need to invert bits
  § Fewer stages perform worse
§ Cut often at end (not used)
  § Word granularity suffices
  § Easier/faster to implement
§ DIM8 right after cut
  § DIM4 with single precision
  § Used to separate byte positions of each word
  § Synthesis yielded unforeseen use of DIM component

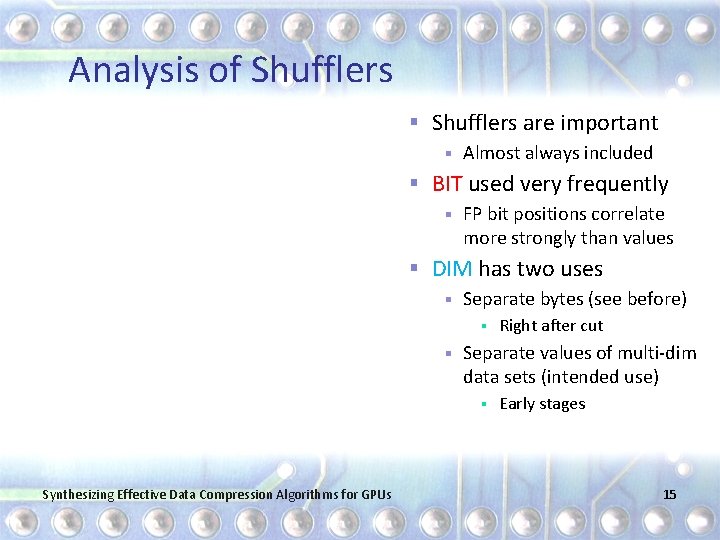

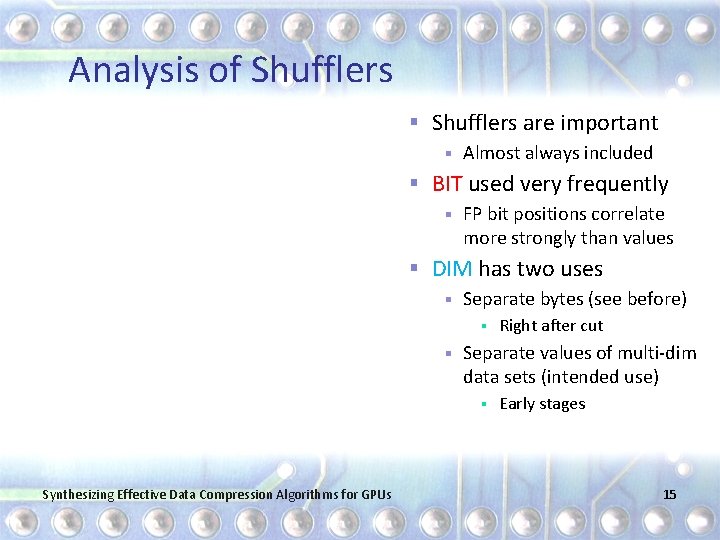

Analysis of Shufflers
§ Shufflers are important
  § Almost always included
§ BIT used very frequently
  § FP bit positions correlate more strongly than values
§ DIM has two uses
  § Separate bytes (see before): right after cut
  § Separate values of multi-dim data sets (intended use): early stages

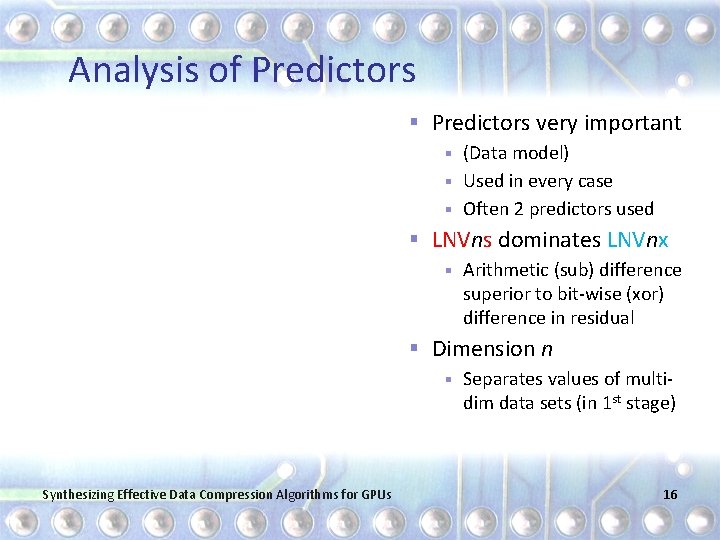

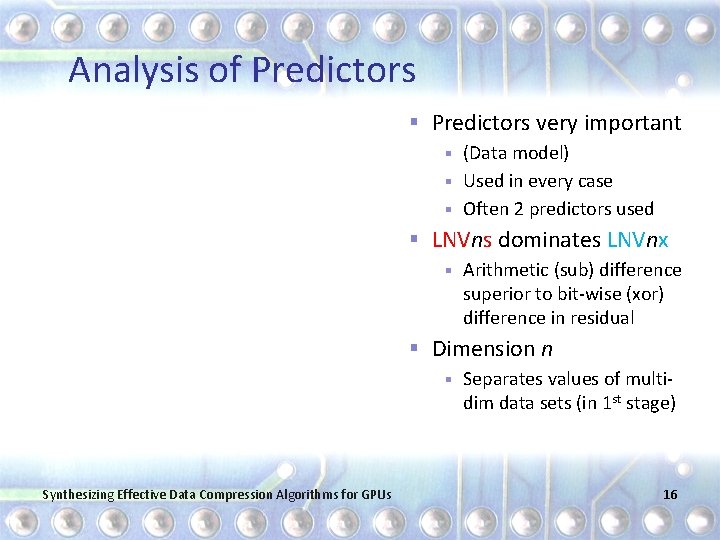

Analysis of Predictors
§ Predictors very important (data model)
  § Used in every case
  § Often 2 predictors used
§ LNVns dominates LNVnx
  § Arithmetic (sub) difference superior to bit-wise (xor) difference in residual
§ Dimension n
  § Separates values of multidim data sets (in 1st stage)

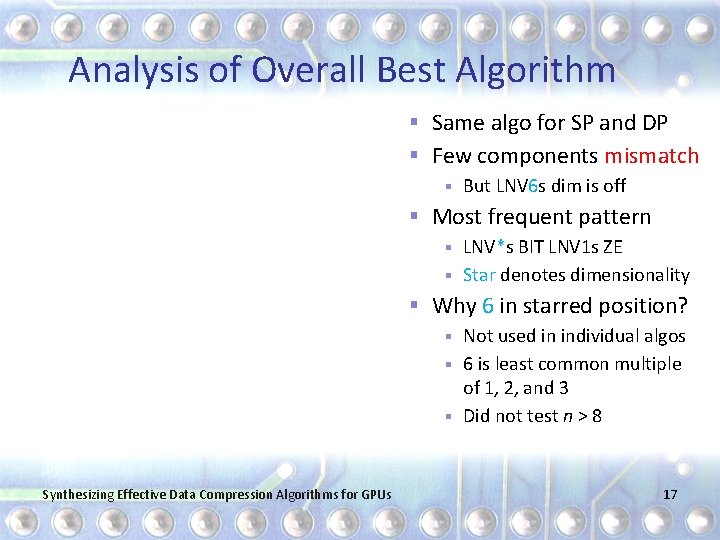

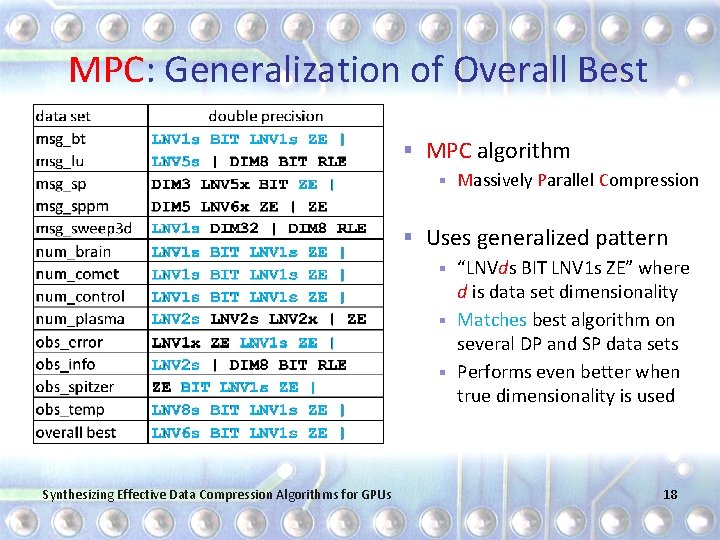

Analysis of Overall Best Algorithm
§ Same algo for SP and DP
  § Few components mismatch
  § But LNV6s dim is off
§ Most frequent pattern: LNV*s BIT LNV1s ZE
  § Star denotes dimensionality
§ Why 6 in starred position?
  § Not used in individual algos
  § 6 is least common multiple of 1, 2, and 3
  § Did not test n > 8

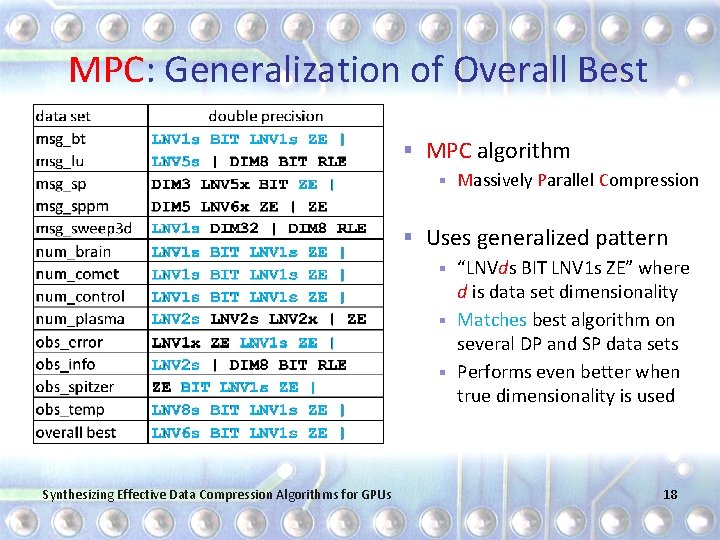

MPC: Generalization of Overall Best
§ MPC algorithm
  § Massively Parallel Compression
  § Uses generalized pattern “LNVds BIT LNV1s ZE” where d is data set dimensionality
  § Matches best algorithm on several DP and SP data sets
  § Performs even better when true dimensionality is used

Evaluation Methodology
§ System
  § Dual 10-core Xeon E5-2680 v2 CPU
  § K40 GPU with 15 SMs (2880 cores)
§ 13 DP and 13 SP real-world data sets
  § Same as before
§ Compression algorithms
  § CPU: bzip2, gzip, lzop, and pFPC
  § GPU: GFC and MPC (our algorithm)

Compression Ratio (Double Precision)
§ MPC delivers record compression on 5 data sets
  § In spite of “GPU-friendly components” constraint
§ MPC outperformed by bzip2 and pFPC on average
  § Due to msg_sppm and num_plasma
§ MPC superior to GFC (only other GPU compressor)

Compression Ratio (Single Precision)
§ MPC delivers record compression on 8 data sets
  § In spite of “GPU-friendly components” constraint
§ MPC is outperformed by bzip2 on average
  § Due to num_plasma
§ MPC is “superior” to GFC and pFPC
  § They do not support single-precision data, MPC does

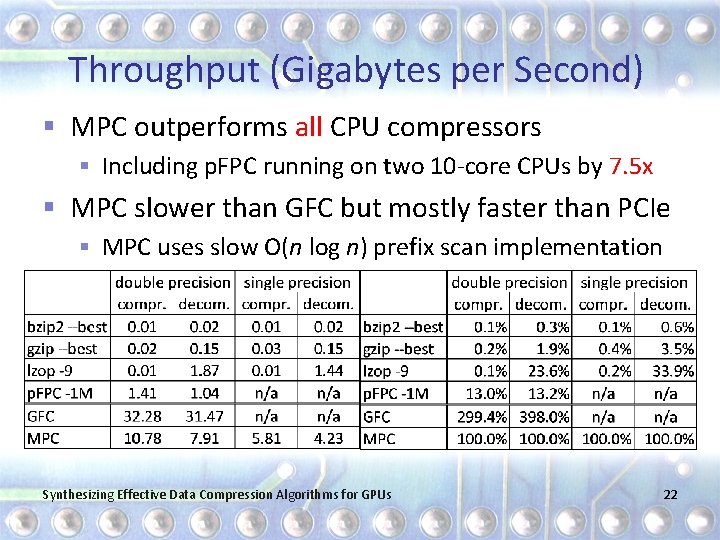

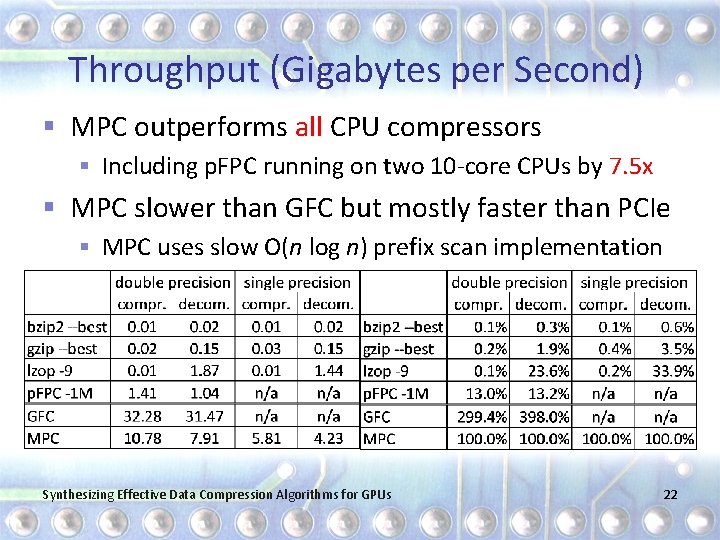

Throughput (Gigabytes per Second)
§ MPC outperforms all CPU compressors
  § Including pFPC running on two 10-core CPUs by 7.5x
§ MPC slower than GFC but mostly faster than PCIe
  § MPC uses slow O(n log n) prefix scan implementation
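The slide does not say which scan algorithm MPC uses. As an illustration of where an O(n log n) cost can come from, the sketch below shows the well-known Hillis-Steele inclusive scan, which needs only about log2(n) parallel steps but performs O(n log n) additions in total, compared with n - 1 for a sequential running sum. The function name and the returned addition count are choices made for this example.

```python
def hillis_steele_scan(values):
    """Inclusive prefix sum; returns (result, total number of additions performed)."""
    x = list(values)
    n = len(x)
    additions = 0
    offset = 1
    while offset < n:
        # Every element reads only the previous step's array, so on a GPU
        # all n updates of one step could run in parallel.
        x = [x[i] + x[i - offset] if i >= offset else x[i] for i in range(n)]
        additions += n - offset
        offset *= 2
    return x, additions

prefix, additions = hillis_steele_scan([1, 2, 3, 4, 5, 6, 7, 8])
```

For eight inputs this performs 17 additions in three steps, whereas a sequential running sum needs 7. A prefix sum of this kind is also what undoes a difference predictor such as LNV1s, since each original value is the sum of all residuals up to its position.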

Summary
§ Goal of research
  § Create an effective algorithm for FP data compression that is suitable for massively-parallel GPUs
§ Approach
  § Extracted 24 GPU-friendly components and evaluated 138,240 combinations to find best 4-stage algorithms
  § Generalized findings to derive MPC algorithm
§ Result
  § Brand-new compression algorithm for SP and DP data
  § Compresses about as well as CPU algos but much faster

Future Work and Acknowledgments
§ Future work
  § Faster implementation, more components, longer chains, and other inputs, data types, and constraints
§ Acknowledgments
  § National Science Foundation
  § NVIDIA Corporation
  § Texas Advanced Computing Center
§ Contact information
  § burtscher@txstate.edu

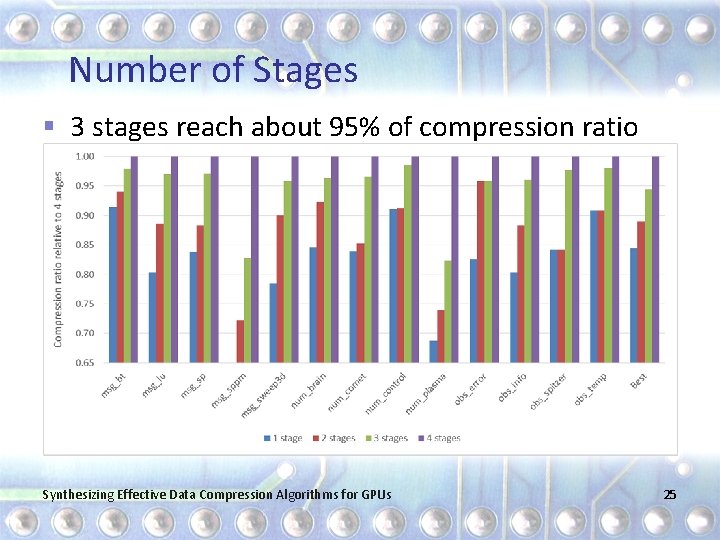

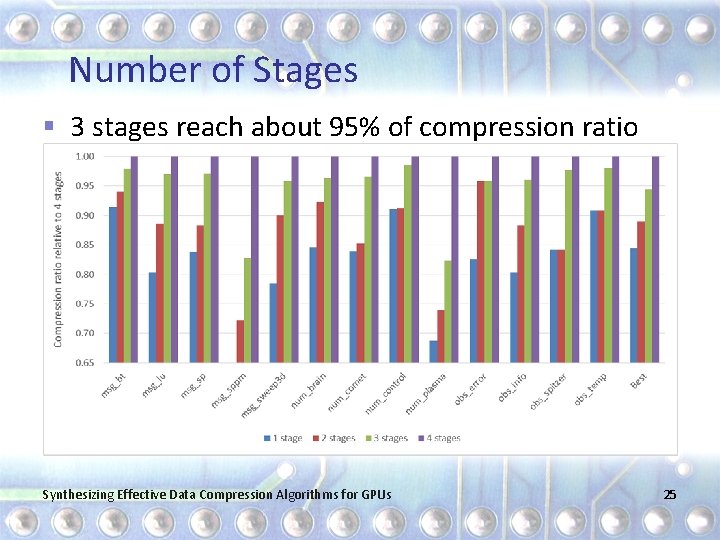

Number of Stages
§ 3 stages reach about 95% of compression ratio

Single- vs Double-Precision Algorithms

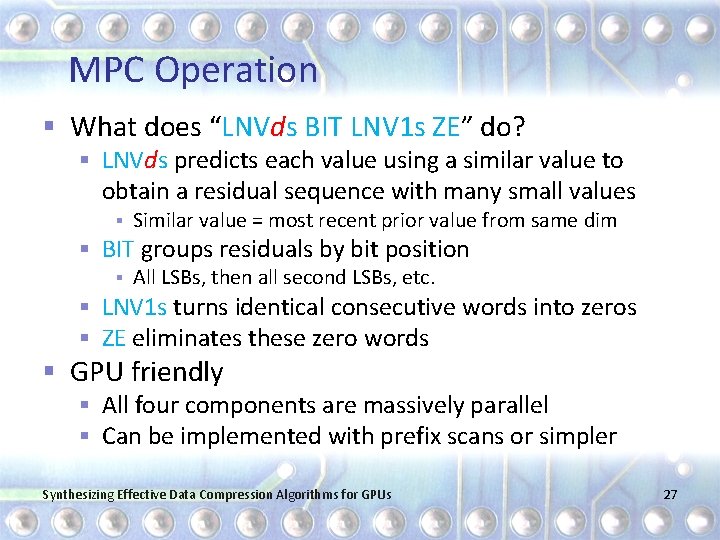

MPC Operation
§ What does “LNVds BIT LNV1s ZE” do?
  § LNVds predicts each value using a similar value to obtain a residual sequence with many small values
    § Similar value = most recent prior value from same dim
  § BIT groups residuals by bit position
    § All LSBs, then all second LSBs, etc.
  § LNV1s turns identical consecutive words into zeros
  § ZE eliminates these zero words
§ GPU friendly
  § All four components are massively parallel
  § Can be implemented with prefix scans or simpler
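The four stages can be put together in a sequential Python sketch. This is a reference illustration under several assumptions, not the CUDA implementation: 32-bit words, missing predecessors predicted as 0, bit planes emitted most significant first (the plane order does not affect the round trip), and ZE returning the bitmap and the non-zero words as separate lists. All function names are hypothetical.

```python
BITS = 32
MASK = (1 << BITS) - 1

def lnv_s(words, n):
    return [(w - (words[i - n] if i >= n else 0)) & MASK for i, w in enumerate(words)]

def lnv_s_inv(residuals, n):
    out = []
    for i, r in enumerate(residuals):
        out.append((r + (out[i - n] if i >= n else 0)) & MASK)
    return out

def pack(stream):
    """Pack a list of bits into BITS-wide words, first bit most significant."""
    out = []
    for i in range(0, len(stream), BITS):
        word = 0
        for b in stream[i:i + BITS]:
            word = (word << 1) | b
        out.append(word)
    return out

def bit(words):
    """Group bits by position: one plane per bit position across all words."""
    return pack([(w >> b) & 1 for b in range(BITS - 1, -1, -1) for w in words])

def bit_inv(words):
    n = len(words)
    stream = [(w >> b) & 1 for w in words for b in range(BITS - 1, -1, -1)]
    # stream[p * n + j] holds plane p of original word j
    return pack([stream[p * n + j] for j in range(n) for p in range(BITS)])

def ze(words):
    return [1 if w else 0 for w in words], [w for w in words if w]

def ze_inv(bitmap, nonzero):
    remaining = iter(nonzero)
    return [next(remaining) if flag else 0 for flag in bitmap]

def mpc_compress(words, d):
    """LNVds, then BIT, then LNV1s, then ZE."""
    return ze(lnv_s(bit(lnv_s(words, d)), 1))

def mpc_decompress(bitmap, nonzero, d):
    """Inverse components applied in the opposite order."""
    return lnv_s_inv(bit_inv(lnv_s_inv(ze_inv(bitmap, nonzero), 1)), d)

# Toy input: two interleaved, slowly increasing series (dimensionality d = 2).
words = [v for i in range(32) for v in (1000 + i, 500000 + 3 * i)]
bitmap, nonzero = mpc_compress(words, 2)
```

On this toy input the residuals after LNV2s are small, so the upper bit planes produced by BIT are all-zero words that ZE drops. In practice the input words would be the integer bit patterns of floating-point values rather than small integers.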