Summary of 2015 Mengyuan Zhao CSLT RIIT Tsinghua

- Slides: 13

Summary of 2015. Mengyuan Zhao, CSLT, RIIT, Tsinghua University, 2016-01-06

Contents

- Research
  - DAE/CDAE
  - Speaker-adapted ASR / language vector in ASR
  - Dark knowledge
- Project
  - Bi-lingual AM
  - Tag-LM
  - Phone number / car number recognition
  - LSTM AM (based on nnet1)
  - Parallel AM training (based on nnet3)
- Other
  - Server purchase / maintenance

Research

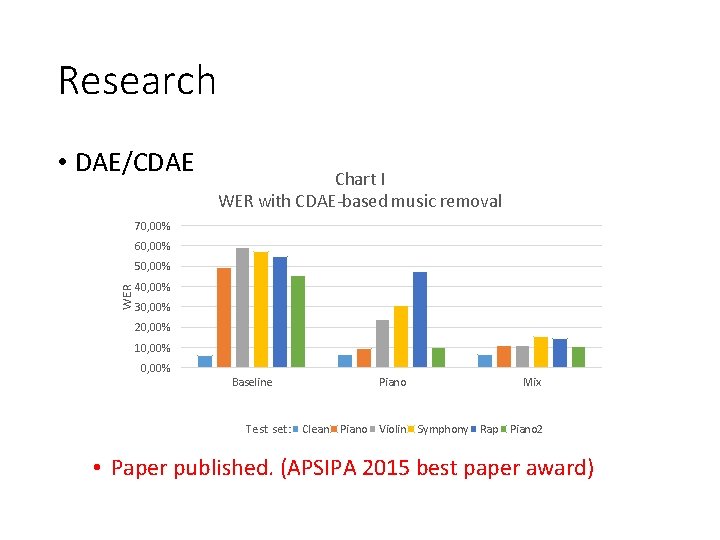

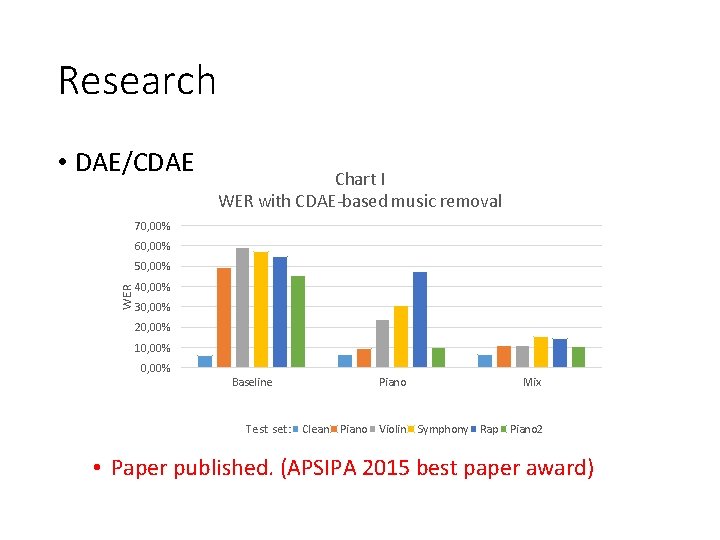

Research

- DAE/CDAE
  - Chart I: WER with CDAE-based music removal (y-axis: WER, 10% to 70%; legend: Baseline, Piano, Mix; test sets: Clean, Piano, Violin, Symphony, Rap, Piano 2).
  - Paper published (APSIPA 2015 best paper award).

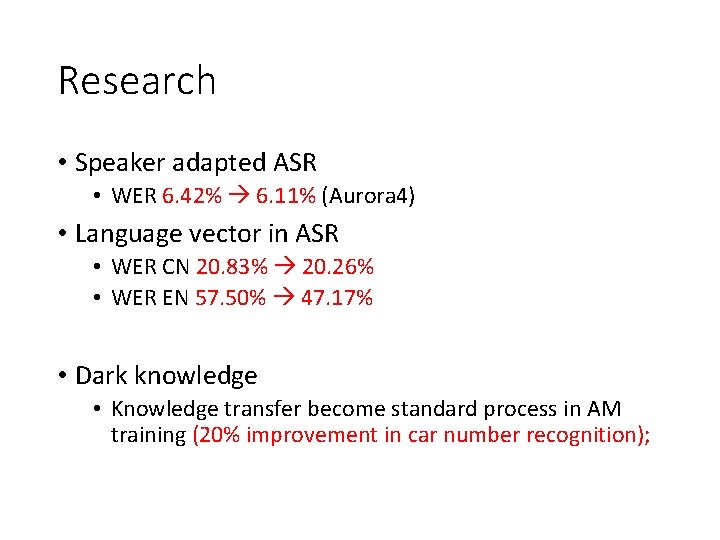

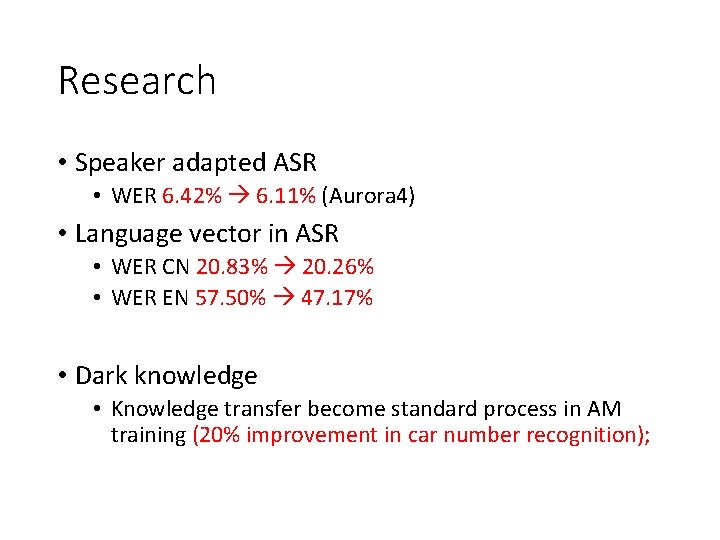

Research

- Speaker-adapted ASR
  - WER: 6.42% → 6.11% (Aurora 4)
- Language vector in ASR
  - WER (CN): 20.83% → 20.26%
  - WER (EN): 57.50% → 47.17%
- Dark knowledge
  - Knowledge transfer has become a standard step in AM training (20% improvement in car number recognition).
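As background for the dark knowledge item, here is a minimal sketch of soft-target knowledge transfer as it is commonly described: a student model is trained against a teacher's temperature-softened posteriors. The function names, the temperature value, and the logits are illustrative assumptions; the slides do not describe the actual training setup.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives softer outputs."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def soft_target_loss(student_logits, teacher_logits, temperature=2.0):
    """Cross-entropy of the student's soft outputs against the teacher's soft targets."""
    targets = softmax(teacher_logits, temperature)
    preds = softmax(student_logits, temperature)
    return -sum(t * math.log(p) for t, p in zip(targets, preds))

# Hypothetical logits over three output classes (not from the slides).
teacher = [4.0, 1.0, 0.5]
student_close = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
student_far = [0.5, 1.0, 4.0]    # disagrees with the teacher

loss_close = soft_target_loss(student_close, teacher)
loss_far = soft_target_loss(student_far, teacher)
```

A student whose outputs resemble the teacher's gets the lower loss, which is the signal used to transfer knowledge from the teacher to the student.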

Project

Project

- Bi-lingual AM
  - Chinese: OK
  - English: OK
  - Chinese with a few English words: OK
- Tag-LM
  - Handles new words that are not in the LM word list, for example actor names and movie names.

Project

- Phone number / car number recognition
  - Phone number recognition: SER < 10%
  - Car number recognition: SER 50% → 20% (better than Baidu)

Project

- LSTM AM (based on nnet1)
  - LSTM has strong learning ability.
  - LSTM performs very well with a small LM.
  - LSTM diverges and overfits easily.
  - It performs less well with a big LM.
  - Training is slow (7× slower than DNN).

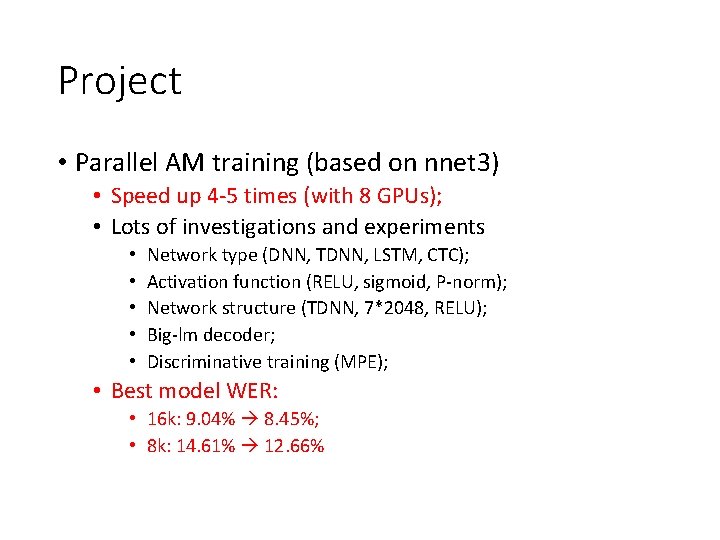

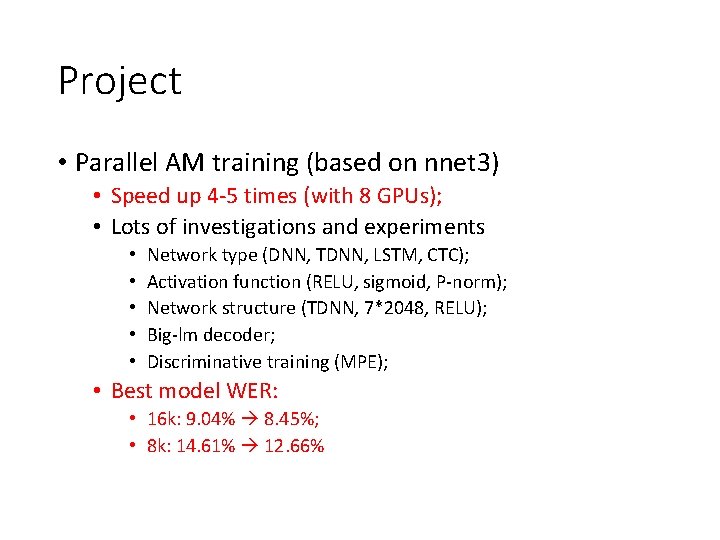

Project

- Parallel AM training (based on nnet3)
  - Speed-up of 4 to 5 times (with 8 GPUs).
  - Many investigations and experiments:
    - Network type (DNN, TDNN, LSTM, CTC)
    - Activation function (ReLU, sigmoid, p-norm)
    - Network structure (TDNN, 7*2048, ReLU)
    - Big-LM decoder
    - Discriminative training (MPE)
  - Best model WER:
    - 16k: 9.04% → 8.45%
    - 8k: 14.61% → 12.66%
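To put the best-model figures in relative terms, the following sketch computes the relative WER reduction. It assumes each pair of WER values above is a before/after pair, which the slide does not state explicitly; the function and variable names are illustrative.

```python
def relative_reduction(before, after):
    """Relative error reduction in percent: (before - after) / before * 100."""
    if before <= 0:
        raise ValueError("baseline error rate must be positive")
    return (before - after) / before * 100.0

# WER values (in percent) copied from the slide; the before/after reading is an assumption.
best_model_wer = {
    "16k": (9.04, 8.45),
    "8k": (14.61, 12.66),
}

reductions = {
    name: relative_reduction(before, after)
    for name, (before, after) in best_model_wer.items()
}

for name, value in reductions.items():
    print(f"{name}: {value:.1f}% relative WER reduction")
```

Under that reading, the reductions come to roughly 6.5% relative for the 16k model and 13.3% relative for the 8k model.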

Other

Other

- Purchase / install
  - Computers: 2 servers, 3 PCs
  - GPU: 2*GTX 970
  - Hard disk: /work3
  - n*CPU fans
- Maintenance
- Psychology lecture

Thanks